#Cloud Data

Text

Geneva-based Infomaniak has been recovering 100 per cent of the electricity it uses since November 2024.

The recycled power will be able to fuel the centralised heating network in the Canton of Geneva and benefit around 6,000 households.

The centre is currently operating at 25 per cent of its potential capacity. It aims to reach full capacity by 2028.

Swiss data centre leads the way for a greener cloud industry

The data centre hopes to point to a greener way of operating in the electricity-heavy cloud industry.

“In the real world, data centres convert electricity into heat. With the exponential growth of the cloud, this energy is currently being released into the atmosphere and wasted,” Boris Siegenthaler, Infomaniak's Founder and Chief Strategy Officer, told news site FinanzNachrichten.

“There is an urgent need to upgrade this way of doing things, to connect these infrastructures to heating networks and adapt building standards.”

Infomaniak has received several awards for the energy efficiency of its complexes, which operate without air conditioning - a rarity for hot data centres.

The company also builds infrastructure underground so that it doesn’t have an impact on the environment.

Swiss data centre recycles heat for homes

At Infomaniak, all the electricity that powers equipment like servers, inverters and ventilation is converted into heat at a temperature of 40 to 45°C.

This is then channelled to an air/water exchanger which filters it into a hot water circuit. Heat pumps are used to increase its temperature to 67°C in summer and 85°C in winter.

How many homes will be heated by the data centre?

When the centre is operating at full capacity, it will supply Geneva’s heating network with 1.7 megawatts, the amount needed for 6,000 households per year or for 20,000 people to take a 5-minute shower every day.

This means the Canton of Geneva can avoid burning natural gas equivalent to 3,600 tonnes of CO2 equivalent (tCO2eq) every year, or pellets equivalent to 5,500 tCO2eq annually.
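As a rough sanity check on the 1.7 megawatt figure, the sketch below converts it into energy per year and per household. It assumes the full 1.7 MW is delivered continuously all year, which the post does not state, so the result is only an order-of-magnitude estimate.

```python
# Back-of-the-envelope check of the figures quoted in the post.
# Assumption (not stated in the post): 1.7 MW is delivered continuously all year.
HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a non-leap year


def annual_energy_mwh(power_mw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in megawatt-hours delivered by a constant power output."""
    return power_mw * hours


total_mwh = annual_energy_mwh(1.7)
per_household_mwh = total_mwh / 6000  # 6,000 households, as quoted in the post

print(f"Total heat per year: {total_mwh:,.0f} MWh")
print(f"Per household:       {per_household_mwh:.2f} MWh")
```

Under that assumption, the output works out to about 14,900 MWh a year, or roughly 2.5 MWh per household.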

The system in place at Infomaniak’s data centre is free to be reproduced by other companies. There is a technical guide available explaining how to replicate the model and a summary for policymakers that advises how to improve design regulations and the sustainability of data centres.

#good news#environmentalism#science#environment#climate change#climate crisis#switzerland#geneva#Infomaniak#cloud storage#cloud data#carbon emissions#heat pumps

Text

Future-Ready Enterprises: The Crucial Role of Large Vision Models (LVMs)

New Post has been published on https://thedigitalinsider.com/future-ready-enterprises-the-crucial-role-of-large-vision-models-lvms/

What are Large Vision Models (LVMs)?

Over the last few decades, the field of Artificial Intelligence (AI) has experienced rapid growth, resulting in significant changes to various aspects of human society and business operations. AI has proven to be useful in task automation and process optimization, as well as in promoting creativity and innovation. However, as data complexity and diversity continue to increase, there is a growing need for more advanced AI models that can comprehend and handle these challenges effectively. This is where the emergence of Large Vision Models (LVMs) becomes crucial.

LVMs are a new category of AI models specifically designed for analyzing and interpreting visual information, such as images and videos, on a large scale, with impressive accuracy. Unlike traditional computer vision models that rely on manual feature crafting, LVMs leverage deep learning techniques, utilizing extensive datasets to generate authentic and diverse outputs. An outstanding feature of LVMs is their ability to seamlessly integrate visual information with other modalities, such as natural language and audio, enabling a comprehensive understanding and generation of multimodal outputs.

LVMs are defined by their key attributes and capabilities, including their proficiency in advanced image and video processing tasks related to natural language and visual information. This includes tasks like generating captions, descriptions, stories, code, and more. LVMs also exhibit multimodal learning by effectively processing information from various sources, such as text, images, videos, and audio, resulting in outputs across different modalities.

Additionally, LVMs possess adaptability through transfer learning, meaning they can apply knowledge gained from one domain or task to another, with the capability to adapt to new data or scenarios through minimal fine-tuning. Moreover, their real-time decision-making capabilities empower rapid and adaptive responses, supporting interactive applications in gaming, education, and entertainment.

How LVMs Can Boost Enterprise Performance and Innovation

Adopting LVMs can provide enterprises with powerful and promising technology to navigate the evolving AI discipline, making them more future-ready and competitive. LVMs have the potential to enhance productivity, efficiency, and innovation across various domains and applications. However, it is important to consider the ethical, security, and integration challenges associated with LVMs, which require responsible and careful management.

Moreover, LVMs enable insightful analytics by extracting and synthesizing information from diverse visual data sources, including images, videos, and text. Their capability to generate realistic outputs, such as captions, descriptions, stories, and code based on visual inputs, empowers enterprises to make informed decisions and optimize strategies. The creative potential of LVMs emerges in their ability to develop new business models and opportunities, particularly those using visual data and multimodal capabilities.

Prominent examples of enterprises adopting LVMs for these advantages include Landing AI, a computer vision cloud platform addressing diverse computer vision challenges, and Snowflake, a cloud data platform facilitating LVM deployment through Snowpark Container Services. Additionally, OpenAI contributes to LVM development with models like GPT-4, CLIP, DALL-E, and OpenAI Codex, capable of handling various tasks involving natural language and visual information.

In the post-pandemic landscape, LVMs offer additional benefits by assisting enterprises in adapting to remote work, online shopping trends, and digital transformation. Whether enabling remote collaboration, enhancing online marketing and sales through personalized recommendations, or contributing to digital health and wellness via telemedicine, LVMs emerge as powerful tools.

Challenges and Considerations for Enterprises in LVM Adoption

While the promises of LVMs are extensive, their adoption is not without challenges and considerations. Ethical implications are significant, covering issues related to bias, transparency, and accountability. Instances of bias in data or outputs can lead to unfair or inaccurate representations, potentially undermining the trust and fairness associated with LVMs. Thus, ensuring transparency in how LVMs operate and the accountability of developers and users for their consequences becomes essential.

Security concerns add another layer of complexity, requiring the protection of sensitive data processed by LVMs and precautions against adversarial attacks. Sensitive information, ranging from health records to financial transactions, demands robust security measures to preserve privacy, integrity, and reliability.

Integration and scalability hurdles pose additional challenges, especially for large enterprises. Ensuring compatibility with existing systems and processes becomes a crucial factor to consider. Enterprises need to explore tools and technologies that facilitate and optimize the integration of LVMs. Container services, cloud platforms, and specialized platforms for computer vision offer solutions to enhance the interoperability, performance, and accessibility of LVMs.

To tackle these challenges, enterprises must adopt best practices and frameworks for responsible LVM use. Prioritizing data quality, establishing governance policies, and complying with relevant regulations are important steps. These measures ensure the validity, consistency, and accountability of LVMs, enhancing their value, performance, and compliance within enterprise settings.

Future Trends and Possibilities for LVMs

With the adoption of digital transformation by enterprises, the domain of LVMs is poised for further evolution. Anticipated advancements in model architectures, training techniques, and application areas will drive LVMs to become more robust, efficient, and versatile. For example, self-supervised learning, which enables LVMs to learn from unlabeled data without human intervention, is expected to gain prominence.

Likewise, transformer models, renowned for their ability to process sequential data using attention mechanisms, are likely to contribute to state-of-the-art outcomes in various tasks. Similarly, zero-shot learning, allowing LVMs to perform tasks they have not been explicitly trained on, is set to expand their capabilities even further.
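For readers unfamiliar with the attention mechanism mentioned above, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only: the two-dimensional `tokens` are made-up inputs, and real transformer models add learned projections, multiple heads, and optimized tensor libraries.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: List[Vector], keys: List[Vector], values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention: each output is a weighted mix of the values."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


# Hypothetical toy sequence of three 2-dimensional token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)  # self-attention: Q, K and V are the same
for row in result:
    print([round(x, 3) for x in row])
```

Each output row blends information from every position in the sequence, which is what lets these models relate distant elements to one another.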

Simultaneously, the scope of LVM application areas is expected to widen, encompassing new industries and domains. Medical imaging, in particular, holds promise as an avenue where LVMs could assist in the diagnosis, monitoring, and treatment of various diseases and conditions, including cancer, COVID-19, and Alzheimer’s.

In the e-commerce sector, LVMs are expected to enhance personalization, optimize pricing strategies, and increase conversion rates by analyzing and generating images and videos of products and customers. The entertainment industry also stands to benefit as LVMs contribute to the creation and distribution of captivating and immersive content across movies, games, and music.

To fully utilize the potential of these future trends, enterprises must focus on acquiring and developing the necessary skills and competencies for the adoption and implementation of LVMs. In addition to technical challenges, successfully integrating LVMs into enterprise workflows requires a clear strategic vision, a robust organizational culture, and a capable team. Key skills and competencies include data literacy, which encompasses the ability to understand, analyze, and communicate data.

The Bottom Line

In conclusion, LVMs are effective tools for enterprises, promising transformative impacts on productivity, efficiency, and innovation. Despite challenges, embracing best practices and advanced technologies can overcome hurdles. LVMs are envisioned not just as tools but as pivotal contributors to the next technological era, requiring a thoughtful approach. A practical adoption of LVMs ensures future readiness, acknowledging their evolving role for responsible integration into business processes.

#Accessibility#ai#Alzheimer's#Analytics#applications#approach#Art#artificial#Artificial Intelligence#attention#audio#automation#Bias#Business#Cancer#Cloud#cloud data#cloud platform#code#codex#Collaboration#Commerce#complexity#compliance#comprehensive#computer#Computer vision#container#content#covid

Text

How Modern Data Engineering Powers Scalable, Real-Time Decision-Making

In today's technology-driven world, businesses are no longer content to analyze only historical data. From e-commerce websites providing real-time suggestions to banks verifying transactions in under a second, decisions are now made in a matter of seconds. What made this change possible? Modern data engineering, which combines software development, data architecture, and cloud infrastructure at scale. It empowers organizations to convert massive, fast-moving data streams into real-time insights.

From Batch to Real-Time: A Shift in Data Mindset

Traditional data systems relied on batch processing, in which data was collected and analyzed at fixed intervals. In a fast-paced environment this caused lag: by the time insights arrived, they were often outdated and their accuracy questionable. Streaming technologies such as Apache Kafka, Apache Flink, and Spark Streaming now enable engineers to build pipelines that ingest, clean, and deliver data with very low latency. This shift away from periodic processing is crucial for fast-moving companies in logistics, e-commerce, relevancy, and fintech.
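The difference between the two mindsets can be sketched in a few lines of plain Python. This is a simplified illustration, not how Kafka, Flink, or Spark Streaming are actually used: the `Event` shape and the in-memory `events` list are assumptions standing in for a real event stream.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, float]  # (customer_id, order_amount): a hypothetical event shape


def batch_totals(events: Iterable[Event]) -> Dict[str, float]:
    """Batch style: wait for the whole dataset, then compute totals once."""
    totals: Dict[str, float] = defaultdict(float)
    for customer, amount in events:
        totals[customer] += amount
    return dict(totals)


def streaming_totals(events: Iterable[Event]) -> Iterator[Tuple[str, float]]:
    """Streaming style: emit an updated total as soon as each event arrives."""
    totals: Dict[str, float] = defaultdict(float)
    for customer, amount in events:
        totals[customer] += amount
        yield customer, totals[customer]


events = [("alice", 20.0), ("bob", 5.0), ("alice", 12.5)]

print("batch:", batch_totals(events))
for update in streaming_totals(events):
    print("stream update:", update)
```

The batch function yields nothing until every event has been read, while the streaming generator produces a usable result after each one.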

Building Resilient, Scalable Data Pipelines

Modern data engineering focuses on building thoroughly monitored, fault-tolerant data pipelines. These pipelines scale to higher data volumes and are built to handle schema changes, data anomalies, and unexpected traffic spikes. Cloud-native tools such as AWS Glue, Google Cloud Dataflow, and Snowflake Data Sharing support data integration and sharing across platforms at scale. These tools make it possible to create unified data flows that power dashboards, alerts, and machine learning models in near real time.
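To make "fault-tolerant" and "schema changes" more concrete, here is a minimal sketch of three common ideas: tolerating a renamed field, retrying a transient failure with backoff, and setting bad records aside instead of crashing. The field names (`user_id`, `uid`, `amount`) and the `flaky_sink` function are hypothetical, and managed tools such as those named above provide far more than this.

```python
import time
from typing import Any, Callable, Dict, List, Optional

Record = Dict[str, Any]


def normalize(record: Record) -> Optional[Record]:
    """Tolerate schema drift: accept a renamed field, ignore unknown ones,
    and reject records that lack required data."""
    user = record.get("user_id", record.get("uid"))  # "uid" is a hypothetical older name
    amount = record.get("amount")
    if user is None or not isinstance(amount, (int, float)):
        return None
    return {"user_id": str(user), "amount": float(amount)}


def with_retries(action: Callable[[], Any], attempts: int = 3, base_delay: float = 0.01) -> Any:
    """Retry a transient failure with exponential backoff."""
    for attempt in range(attempts):
        try:
            return action()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # give up after the final attempt
            time.sleep(base_delay * 2 ** attempt)


def run_pipeline(records: List[Record], sink: Callable[[Record], None]) -> List[Record]:
    """Deliver valid records and return the rejected ones (a 'dead-letter' list)."""
    dead_letters: List[Record] = []
    for record in records:
        clean = normalize(record)
        if clean is None:
            dead_letters.append(record)
            continue
        with_retries(lambda: sink(clean))
    return dead_letters


# Demo with a sink that fails once to simulate a transient outage.
delivered: List[Record] = []
calls = {"count": 0}


def flaky_sink(row: Record) -> None:
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("simulated transient outage")
    delivered.append(row)


raw = [
    {"user_id": 1, "amount": 9.5},
    {"uid": "2", "amount": 3, "extra": "ignored"},
    {"amount": "oops"},
]
rejected = run_pipeline(raw, flaky_sink)
print("delivered:", delivered)
print("rejected:", rejected)
```

Keeping rejected records in a separate list means a single malformed row does not stop the flow, and the failures remain available for inspection.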

Role of Data Engineering in Real-Time Analytics

This is where Data Engineering Services make a difference. Companies that provide these services bring considerable technical expertise and can help an organization design modern data architectures aligned with its business objectives. From establishing real-time ETL pipelines to managing infrastructure, these services aim to keep your data stack efficient and cost-flexible. Companies can then direct their attention to new ideas rather than to the endless cycle of data management.

Data Quality, Observability, and Trust

Real-time decision-making depends on the quality of the data that powers it. Modern data engineering integrates practices like data observability, automated anomaly detection, and lineage tracking. These ensure that data within the systems is clean, consistent, and traceable. With tools like Great Expectations, Monte Carlo, and dbt, engineers can set up proactive alerts and validations to catch issues before they affect business outcomes. This trust in data quality enables timely, precise, and reliable decisions.
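The idea of declarative validations that raise alerts can be sketched without any particular tool. The example below is plain Python and deliberately does not use the APIs of Great Expectations, Monte Carlo, or dbt; the rule names and the `order_id`, `amount`, and `currency` fields are illustrative assumptions.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
Check = Callable[[Row], bool]

# Each rule is a named expectation about a single row (hypothetical fields).
CHECKS: Dict[str, Check] = {
    "order_id is present": lambda r: r.get("order_id") is not None,
    "amount is non-negative": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    "currency is a 3-letter code": lambda r: isinstance(r.get("currency"), str) and len(r["currency"]) == 3,
}


def validate(rows: List[Row]) -> List[str]:
    """Run every check against every row and collect human-readable alerts."""
    alerts: List[str] = []
    for index, row in enumerate(rows):
        for name, check in CHECKS.items():
            if not check(row):
                alerts.append(f"row {index}: failed '{name}'")
    return alerts


rows = [
    {"order_id": 1, "amount": 19.99, "currency": "CHF"},
    {"order_id": None, "amount": -5, "currency": "CHF"},
]
alerts = validate(rows)
for alert in alerts:
    print(alert)
```

In a real pipeline these alerts would typically be routed to a monitoring channel so that problems are noticed before they reach dashboards or models.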

The Power of Cloud-Native Architecture

Modern data engineering builds on cloud platforms such as AWS, Azure, and Google Cloud. They provide serverless processing, autoscaling, real-time analytics tools, and other services that reduce infrastructure expenditure. Cloud-native services allow companies to process and query exceptionally large datasets quickly. For example, AWS Lambda functions can transform data as it arrives, and BigQuery can analyze it in near real time. This allows rapid innovation, swift implementation, and significant long-term cost savings.
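As an illustration of the Lambda example, here is a minimal sketch of a Python handler that cleans incoming records. The `(event, context)` signature follows the usual AWS Lambda convention for Python, but the event shape (`records`, `sku`, `price`) is a hypothetical assumption; real events depend on the service that triggers the function.

```python
import json
from typing import Any, Dict


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Normalize each incoming record: tidy the SKU and convert price to cents.

    The event layout used here is hypothetical, chosen only for illustration.
    """
    records = event.get("records", [])
    transformed = [
        {
            "sku": str(r["sku"]).strip().upper(),
            "price_cents": round(float(r["price"]) * 100),
        }
        for r in records
    ]
    return {"statusCode": 200, "body": json.dumps(transformed)}


# Local invocation with a made-up event; no AWS account is needed to try it.
response = lambda_handler({"records": [{"sku": " ab-1 ", "price": "4.50"}]}, None)
print(response["body"])
```

Because the handler is an ordinary function, it can be exercised locally like this before being deployed.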

Strategic Impact: Driving Business Growth

Real-time data systems provide organizations with tangible benefits such as stronger customer engagement, greater operational efficiency, risk mitigation, and faster innovation cycles. To achieve these objectives, many enterprises now opt for data strategy consulting, which aligns their data initiatives with broader business objectives. These consulting firms help organizations define the right KPIs, select appropriate tools, and develop a long-term roadmap toward the desired level of data maturity. As a result, organizations can make smarter, faster, and more confident decisions.

Conclusion

Investing in modern data engineering is more than a technology upgrade; it is a strategic shift toward agility in business processes. With scalable architectures, stream processing, and expert services, organizations can realize the true value of their data. Whether the goal is customer behavior tracking, operational optimization, or trend prediction, data engineering helps you anticipate changes instead of just reacting to them.

Text

Get These 5 Ms of Cloud Data Migrations Right

Text

How the Demand for DevOps Professionals is Exponentially Increasing

In today’s fast-paced technology landscape, the demand for DevOps professionals is skyrocketing. As businesses strive for greater efficiency, faster deployment, and improved collaboration, the role of DevOps has become crucial. This blog explores why the demand for DevOps professionals is exponentially increasing and how enrolling in a DevOps course in Mumbai can be your ticket to a successful career in this field.

The Rise of DevOps

What is DevOps?

DevOps is a set of practices that combines software development (Dev) and IT operations (Ops). The goal is to shorten the development lifecycle and provide continuous delivery with high software quality. This approach fosters a culture of collaboration between development and operations teams, leading to more efficient workflows and quicker product releases.

Why is Demand Growing?

Increased Need for Speed: Businesses are under pressure to deliver updates and new features rapidly. DevOps methodologies enable faster software development and deployment, making organizations more competitive.

Enhanced Collaboration: DevOps breaks down silos between teams, promoting better communication and collaboration. Companies are realizing that this integrated approach improves overall productivity.

Adoption of Cloud Technologies: As organizations migrate to the cloud, the need for professionals who understand both development and operational aspects of cloud environments is rising. DevOps professionals are key in managing these transitions effectively.

Focus on Automation: Automation is a core component of DevOps. The demand for professionals skilled in automation tools and practices is increasing, as organizations seek to minimize manual errors and optimize processes.

The Skill Set of a DevOps Professional

To succeed in the field of DevOps, certain skills are essential:

Continuous Integration/Continuous Deployment (CI/CD): Proficiency in CI/CD tools is critical for automating the software release process.

Cloud Computing: Understanding cloud platforms (AWS, Azure, Google Cloud) is crucial for managing and deploying applications in cloud environments.

Scripting and Automation: Knowledge of scripting languages (Python, Bash) and automation tools (Ansible, Puppet) is important for streamlining processes.

Monitoring and Logging: Familiarity with monitoring tools (Nagios, Grafana) to track application performance and troubleshoot issues is vital.

Collaboration Tools: Proficiency in tools like Slack, Jira, and Trello facilitates effective communication among teams.

Why Enroll in a DevOps Course in Mumbai?

Advantages of Taking a DevOps Course in Mumbai

Structured Learning: A DevOps course in Mumbai provides a structured curriculum that covers essential concepts, tools, and practices in depth.

Hands-On Experience: Many courses offer practical labs and projects, allowing you to apply your knowledge in real-world scenarios.

Networking Opportunities: Studying in Mumbai gives you access to a vibrant tech community and potential networking opportunities with industry professionals.

Career Advancement: Completing a DevOps course enhances your resume and makes you a more attractive candidate for employers in this high-demand field.

Future Outlook for DevOps Professionals

The future looks bright for DevOps professionals. As more organizations adopt DevOps practices, the demand for skilled individuals will continue to grow, and this is where a DevOps course in Mumbai can play a major role. Companies are increasingly recognizing the value of DevOps in achieving business goals, leading to greater investment in training and hiring.

In addition, emerging technologies such as artificial intelligence and machine learning are starting to intersect with DevOps practices. Professionals who can bridge these areas will be even more sought after, creating new opportunities for career advancement.

Conclusion

The demand for DevOps professionals is increasing at an unprecedented rate as businesses strive for greater efficiency, speed, and collaboration. By enrolling in a DevOps course in Mumbai, you can acquire the skills and knowledge needed to excel in this thriving field.

#cloud computing#cloudcomputing#cloud storage#cloud data#cloudsecurity#devops#devops course#devops training#devops certification#cloud computing course#technology

Text

In today's digital era, data is the lifeblood of any business. The ability to efficiently collect, store, manage, and analyze data can make the difference between thriving and merely surviving in a competitive marketplace. Castle Interactive understands this dynamic and offers cutting-edge cloud data solutions designed to empower your business. Let's explore how these solutions can transform your operations and drive growth.

Text

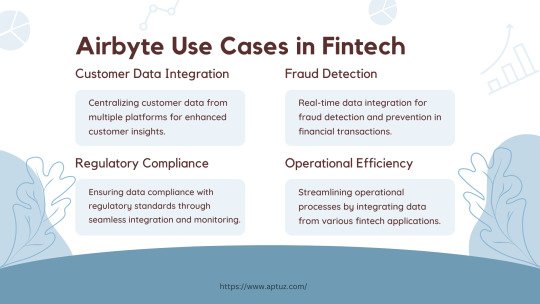

Explore the impactful use cases of Airbyte in the fintech industry, from centralizing customer data for enhanced insights to real-time fraud detection and ensuring regulatory compliance. Learn how Airbyte drives operational efficiency by streamlining data integration across various fintech applications, providing businesses with actionable insights and improved processes.

Know more at: https://bit.ly/3UbqGyT

#Fintech#data analytics#data engineering#technology#Airbyte#ETL#ELT#Cloud data#Data Integration#Data Transformation#Data management#Data extraction#Data Loading#Tech videos

Text

Data warehousing has become a popular way for enterprises to manage large volumes of data. Pairing it with the latest capabilities will help you stay relevant and neck and neck with the competition. Here are a few data warehousing trends to keep in mind for 2024.

#Data warehousing#cloud Data warehousing#cloud data#data warehouse#2024#trends 2024#data warehousing trends

Text

Data Quality Management in Master Data Management: Ensuring Accurate and Reliable Information

Introduction: In the digital age, data is often referred to as the new gold. However, just like gold, data needs to be refined and polished to extract its true value. This is where Data Quality Management (DQM), offered by master data management companies, steps in, playing a pivotal role in the success of Master Data Management (MDM) initiatives. In this blog post, we'll explore the critical importance of data quality within MDM and delve into the techniques that organizations can employ, with the assistance of master data management services, to achieve and maintain accurate and reliable master data.

The Crucial Role of Data Quality in MDM: Data quality is the foundation upon which effective MDM is built, facilitated by cloud data services. Accurate, consistent, and reliable master data ensures that business decisions are well-informed and reliable. Poor data quality can lead to erroneous insights, wasted resources, and missed opportunities. Therefore, implementing robust data quality management practices, with the support of cloud data management services, is essential.

Techniques for Data Quality Management in MDM:

Data Profiling: Data profiling involves analyzing data to gain insights into its structure, content, and quality, enabled by data analytics consulting services. By identifying anomalies, inconsistencies, and gaps, organizations can prioritize their data quality efforts effectively.

Actionable Tip: Utilize automated data profiling tools, including cloud data management services, to uncover hidden data quality issues across your master data.

Data Cleansing: Data cleansing is the process of identifying and rectifying errors, inconsistencies, and inaccuracies within master data, supported by cloud data management solutions. This involves standardizing formats, removing duplicates, and correcting inaccurate entries.

Actionable Tip: Establish data quality rules and automated processes, with the assistance of data analytics consulting services, to regularly cleanse and validate master data.

Data Enrichment: Data enrichment involves enhancing master data by adding valuable information from trusted external sources, facilitated by data analytics consulting services. This enhances data completeness and accuracy, leading to better decision-making.

Actionable Tip: Integrate third-party data sources and APIs, in collaboration with master data management solutions, to enrich your master data with up-to-date and relevant information.

Ongoing Monitoring: Data quality is not a one-time task but an ongoing process, supported by cloud data services. Regularly monitor and assess the quality of your master data, with the assistance of cloud data management services, to detect and address issues as they arise.

Actionable Tip: Implement data quality metrics and key performance indicators (KPIs), leveraging cloud data management solutions, to track the health of your master data over time.
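The profiling, cleansing, and monitoring techniques above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the `name` and `email` fields and the sample `customers` are made up, and deduplicating on a normalized email is just one possible matching rule.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]


def profile_completeness(records: List[Record], fields: List[str]) -> Dict[str, float]:
    """Data profiling: share of records with a non-empty value for each field.
    Tracked over time, the same ratio can serve as a simple data quality KPI."""
    total = len(records)
    if total == 0:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if (r.get(f) or "").strip()) / total
        for f in fields
    }


def cleanse(records: List[Record]) -> List[Record]:
    """Data cleansing: standardize formats, then drop duplicates."""
    seen = set()
    cleaned: List[Record] = []
    for r in records:
        email = (r.get("email") or "").strip().lower()
        name = " ".join((r.get("name") or "").split()).title()
        key = email or name  # fall back to the name when the email is missing
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "email": email or None})
    return cleaned


# Hypothetical customer master data with a duplicate and a missing email.
customers: List[Record] = [
    {"name": "ada  lovelace", "email": "Ada@Example.com "},
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "alan turing", "email": None},
]

before = profile_completeness(customers, ["name", "email"])
after_rows = cleanse(customers)
print("completeness:", before)
print("cleansed:", after_rows)
```

Running the profile before and after cleansing, on a schedule, is one simple way to turn these checks into the ongoing monitoring the post recommends.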

Real-World Examples:

Example 1: Data Profiling at Retail Innovators Inc. Retail Innovators Inc., in partnership with master data management services, implemented data profiling tools to analyze their customer master data. They discovered that approximately 15% of customer records had incomplete contact information. By addressing this issue, with the support of cloud data management services, they improved customer communication and reduced delivery failures.

Example 2: Data Cleansing at Healthcare Solutions Co. Healthcare Solutions Co. partnered with cloud data management services and embarked on a data cleansing initiative for their patient master data. They removed duplicate patient records and corrected inaccuracies in demographic details. This led to more accurate patient billing and streamlined appointment scheduling.

Example 3: Data Enrichment at Financial Services Group. Financial Services Group collaborated with data analytics consulting services and integrated external data sources to enrich their client master data. By adding socio-economic indicators, they gained deeper insights into their clients' financial needs and improved personalized financial advisory services.

Conclusion: In the world of Master Data Management, data quality is not an option; it's a necessity, facilitated by cloud data management services. Effective Data Quality Management, supported by master data management solutions, ensures that your master data remains accurate, consistent, and reliable, forming the bedrock of successful MDM initiatives. By leveraging techniques such as data profiling, cleansing, enrichment, and ongoing monitoring, in collaboration with data analytics consulting services, organizations can unlock the true potential of their master data. This enables them to make more informed decisions, enhance customer experiences, and gain a competitive edge in the marketplace. Remember, the journey to high-quality master data begins with a commitment to data excellence through diligent Data Quality Management.

Text

Elevating Cloud Data Encryption: Exploring Next-Generation Techniques

In an era where data breaches and cyber threats have become more sophisticated than ever, the need for robust data protection measures has intensified. Cloud computing, which offers immense flexibility and scalability, also introduces security concerns due to the decentralized nature of data storage. This is where data encryption emerges as a powerful solution, and as technology evolves, so do the techniques for safeguarding sensitive information in the cloud.

The Crucial Role of Cloud Data Encryption:

Cloud data encryption involves transforming data into an unreadable format using cryptographic algorithms. This encrypted data can only be deciphered with a specific key, which is held by authorized users. The importance of encryption lies in its ability to thwart unauthorized access, even if an attacker manages to breach the security perimeter. It ensures that even if data is intercepted, it remains indecipherable and thus useless to malicious actors.

The Evolution of Encryption Techniques:

As cyber threats grow more sophisticated, encryption techniques must also evolve to keep pace. Let's delve into some of the next-generation encryption methods that are enhancing cloud data security:

Homomorphic Encryption: This groundbreaking technique allows computations to be performed on encrypted data without decrypting it first. It ensures that sensitive data remains encrypted throughout processing, minimizing the risk of exposure.

Quantum Encryption: With the advent of quantum computers, traditional encryption methods could be compromised. Quantum encryption leverages the principles of quantum mechanics to create encryption keys that are virtually impossible to intercept or replicate.

Post-Quantum Encryption: This involves developing encryption algorithms that are resistant to attacks from both classical and quantum computers. As quantum computing power grows, post-quantum encryption will play a crucial role in maintaining data security.

Multi-Party Computation (MPC): MPC enables multiple parties to jointly compute a function over their individual inputs while keeping those inputs private. This technique adds an extra layer of security by distributing data and computations across different entities.

Attribute-Based Encryption (ABE): ABE allows data access based on attributes rather than specific keys. It's particularly useful in cloud environments where multiple users with varying levels of access might need to interact with encrypted data.

Tokenization: Tokenization involves replacing sensitive data with tokens that have no inherent meaning. These tokens can be used for processing and analysis without exposing the actual sensitive information.
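Three of the techniques above can be illustrated with toy Python sketches. None of this is production cryptography: the first part uses unpadded textbook RSA with a tiny teaching key, which is insecure and homomorphic only for multiplication; the second shows additive secret sharing as one simple building block of multi-party computation; the third is an in-memory token vault. All names (`TokenVault`, `share`, the sample values) are hypothetical.

```python
import secrets
from typing import Dict, List

# 1) Homomorphic property, shown with textbook RSA (toy key, insecure, no padding).
#    Multiplying two ciphertexts gives a ciphertext of the product of the plaintexts.
N, E, D = 3233, 17, 2753  # classic teaching key built from the primes 61 and 53


def rsa_encrypt(message: int) -> int:
    return pow(message, E, N)


def rsa_decrypt(cipher: int) -> int:
    return pow(cipher, D, N)


product_cipher = (rsa_encrypt(7) * rsa_encrypt(6)) % N  # computed on ciphertexts only
product_plain = rsa_decrypt(product_cipher)

# 2) Multi-party computation via additive secret sharing: each value is split
#    into random shares that reveal nothing individually but sum to the value.
PRIME = 2**61 - 1  # modulus for the shares


def share(value: int, parties: int = 3) -> List[int]:
    parts = [secrets.randbelow(PRIME) for _ in range(parties - 1)]
    parts.append((value - sum(parts)) % PRIME)
    return parts


def reconstruct(shares: List[int]) -> int:
    return sum(shares) % PRIME


salaries = [52000, 61000, 48000]  # hypothetical private inputs
all_shares = [share(s) for s in salaries]
# Each party adds up the one share it received from every participant.
partial_sums = [sum(column) % PRIME for column in zip(*all_shares)]
total_salary = reconstruct(partial_sums)


# 3) Tokenization: swap a sensitive value for a random token with no inherent meaning.
class TokenVault:
    def __init__(self) -> None:
        self._by_token: Dict[str, str] = {}
        self._by_value: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        if value not in self._by_value:
            token = "tok_" + secrets.token_hex(8)
            self._by_value[value] = token
            self._by_token[token] = value
        return self._by_value[value]

    def detokenize(self, token: str) -> str:
        return self._by_token[token]


vault = TokenVault()
card_token = vault.tokenize("4111 1111 1111 1111")
print(product_plain, total_salary, card_token)
```

In each case the useful result (a product, a total, a stable reference) is obtained without any single step handling the raw sensitive values in the clear, which is the common idea behind these techniques.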

Implementing Next-Generation Encryption Techniques

While next-generation encryption techniques offer enhanced security, they also bring challenges in terms of implementation and management:

Key Management: Robust encryption relies on effective key management. With new techniques, key generation, storage, and rotation become more complex and require meticulous attention.

Performance Overhead: Some advanced encryption methods can introduce computational overhead, potentially affecting the performance of applications and systems. Striking a balance between security and performance is crucial.

Integration with Existing Systems: Integrating next-generation encryption into existing cloud environments and applications can be intricate. It often requires careful planning and adaptation of current architecture.

User Experience: Encryption should not hinder user experience. Accessing encrypted data should be seamless for authorized users while maintaining stringent security measures.

Best Practices for Next-Generation Cloud Data Encryption

Assess Your Needs: Understand your data, its sensitivity, and regulatory requirements to determine which encryption techniques are most suitable.

End-to-End Encryption: Implement encryption throughout the data lifecycle: at rest, in transit, and during processing.

Thorough Key Management: Devote significant attention to key management, including generation, storage, rotation, and access control.

Regular Audits and Updates: Encryption is not a one-time task. Regular audits and updates ensure that your encryption practices stay effective against evolving threats.

Training and Awareness: Educatе your tеam about еncryption bеst practices to prеvеnt inadvеrtеnt data еxposure.

Vendor Evaluation: If you are using a cloud sеrvicе providеr, еvaluatе thеir еncryption practicеs and ensurе they align with your security requiremеnts.

The Path Forward

Next-generation encryption techniques are shaping the future of cloud data security. As data breaches become more sophisticated, the need for stronger encryption grows. While these techniques offer enhanced protection, they also require a comprehensive understanding of their intricacies and challenges. Implementing them effectively demands collaboration between security experts, cloud architects, and IT professionals.

Conclusion

In conclusion, as technology evolves, so do the threats to our data. Next-generation encryption techniques hold the key to safeguarding sensitive information in the cloud. By embracing these advanced methods and adhering to best practices, organizations can navigate the intricate landscape of cloud data security with confidence, ensuring that their data remains shielded from the ever-evolving realm of cyber threats.

Text

Arman Shehabi (Ph.D CEE’09) of Berkeley Lab spoke to The New York Times about the demand for energy efficiency amid the growing field of AI. “There has been a lot of growth, but a lot of opportunities for efficiency and incentives for efficiency,” he said.

Read the story.

Pictured: A cloud data center in blue lighting. (Courtesy Google)

#berkeley#engineering#science#uc berkeley#university#bay area#ai#artificial intelligence#data center#cloud data

Text

Soham Mazumdar, Co-Founder & CEO of WisdomAI – Interview Series

New Post has been published on https://thedigitalinsider.com/soham-mazumdar-co-founder-ceo-of-wisdomai-interview-series/

Soham Mazumdar is the Co-Founder and CEO of WisdomAI, a company at the forefront of AI-driven solutions. Prior to founding WisdomAI in 2023, he was Co-Founder and Chief Architect at Rubrik, where he played a key role in scaling the company over a 9-year period. Soham previously held engineering leadership roles at Facebook and Google, where he contributed to core search infrastructure and was recognized with the Google Founder’s Award. He also co-founded Tagtile, a mobile loyalty platform acquired by Facebook. With two decades of experience in software architecture and AI innovation, Soham is a seasoned entrepreneur and technologist based in the San Francisco Bay Area.

WisdomAI is an AI-native business intelligence platform that helps enterprises access real-time, accurate insights by integrating structured and unstructured data through its proprietary “Knowledge Fabric.” The platform powers specialized AI agents that curate data context, answer business questions in natural language, and proactively surface trends or risks—without generating hallucinated content. Unlike traditional BI tools, WisdomAI uses generative AI strictly for query generation, ensuring high accuracy and reliability. It integrates with existing data ecosystems and supports enterprise-grade security, with early adoption by major firms like Cisco and ConocoPhillips.

You co-founded Rubrik and helped scale it into a major enterprise success. What inspired you to leave in 2023 and build WisdomAI—and was there a particular moment that clarified this new direction?

The enterprise data inefficiency problem was staring me right in the face. During my time at Rubrik, I witnessed firsthand how Fortune 500 companies were drowning in data but starving for insights. Even with all the infrastructure we built, less than 20% of enterprise users actually had the right access and know-how to use data effectively in their daily work. It was a massive, systemic problem that no one was really solving.

I’m also a builder by nature – you can see it in my path from Google to Tagtile to Rubrik and now WisdomAI. I get energized by taking on fundamental challenges and building solutions from the ground up. After helping scale Rubrik to enterprise success, I felt that entrepreneurial pull again to tackle something equally ambitious.

Last but not least, the AI opportunity was impossible to ignore. By 2023, it became clear that AI could finally bridge that gap between data availability and data usability. The timing felt perfect to build something that could democratize data insights for every enterprise user, not just the technical few.

The moment of clarity came when I realized we could combine everything I’d learned about enterprise data infrastructure at Rubrik with the transformative potential of AI to solve this fundamental inefficiency problem.

WisdomAI introduces a “Knowledge Fabric” and a suite of AI agents. Can you break down how this system works together to move beyond traditional BI dashboards?

We’ve built an agentic data insights platform that works with data where it is – structured, unstructured, and even “dirty” data. Rather than asking analytics teams to run reports, business managers can directly ask questions and drill into details. Our platform can be trained on any data warehousing system by analyzing query logs.

We’re compatible with major cloud data services like Snowflake, Microsoft Fabric, Google’s BigQuery, Amazon’s Redshift, Databricks, and Postgres, as well as document formats such as Excel, PDF, and PowerPoint.

Unlike conventional tools designed primarily for analysts, our conversational interface empowers business users to get answers directly, while our multi-agent architecture enables complex queries across diverse data systems.

You’ve emphasized that WisdomAI avoids hallucinations by separating GenAI from answer generation. Can you explain how your system uses GenAI differently—and why that matters for enterprise trust?

Our AI-Ready Context Model trains on the organization’s data to create a universal context understanding that answers questions with high semantic accuracy while maintaining data privacy and governance. Furthermore, we use generative AI to formulate well-scoped queries that allow us to extract data from the different systems, as opposed to feeding raw data into the LLMs. This is crucial for addressing hallucination and safety concerns with LLMs.
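To make the general pattern concrete (using a model to write a well-scoped query rather than handing it raw rows), here is a minimal Python sketch. It is not WisdomAI's implementation: `generate_sql` is a hard-coded stand-in for an LLM call, and the `deals` table, its columns, and the `is_read_only` guard are assumptions invented for this example.

```python
import sqlite3


def generate_sql(question: str, schema: str) -> str:
    """Stand-in for an LLM call (hypothetical).

    A real system would send the question and the schema, not the table
    rows, to a model and receive SQL back. Here the result is hard-coded.
    """
    return (
        "SELECT region, SUM(amount) AS total "
        "FROM deals WHERE status = 'open' "
        "GROUP BY region ORDER BY total DESC"
    )


def is_read_only(sql: str) -> bool:
    """Very rough guard: accept a single SELECT statement only."""
    stripped = sql.strip().rstrip(";")
    return stripped.upper().startswith("SELECT") and ";" not in stripped


def answer(conn: sqlite3.Connection, question: str) -> list:
    """Generate a query, check it, and let the database compute the answer."""
    schema = "deals(region TEXT, amount REAL, status TEXT)"
    sql = generate_sql(question, schema)
    if not is_read_only(sql):
        raise ValueError("generated query is not a read-only SELECT")
    # The numbers come from the database, not from generated text.
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE deals (region TEXT, amount REAL, status TEXT)")
conn.executemany(
    "INSERT INTO deals VALUES (?, ?, ?)",
    [
        ("EMEA", 120.0, "open"),
        ("EMEA", 80.0, "won"),
        ("APAC", 200.0, "open"),
        ("AMER", 50.0, "open"),
        ("AMER", 75.0, "open"),
    ],
)
rows = answer(conn, "Which regions have the most open pipeline?")
# rows == [("APAC", 200.0), ("AMER", 125.0), ("EMEA", 120.0)]
```

In this arrangement the model only sees the question and the schema, and every figure in the answer is computed by the database, which is one way to limit the room for fabricated values.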

You coined the term “Agentic Data Insights Platform.” How is agentic intelligence different from traditional analytics tools or even standard LLM-based assistants?

Traditional BI stacks slow decision-making because every question has to fight its way through disconnected data silos and a relay team of specialists. When a chief revenue officer needs to know how to close the quarter, the answer typically passes through half a dozen hands—analysts wrangling CRM extracts, data engineers stitching files together, and dashboard builders refreshing reports—turning a simple query into a multi-day project.

Our platform breaks down those silos and puts the full depth of data one keystroke away, so the CRO can drill from headline metrics all the way to row-level detail in seconds.

No waiting in the analyst queue, no predefined dashboards that can’t keep up with new questions—just true self-service insights delivered at the speed the business moves.

How do you ensure WisdomAI adapts to the unique data vocabulary and structure of each enterprise? What role does human input play in refining the Knowledge Fabric?

Working with data where and how it is – that’s essentially the holy grail for enterprise business intelligence. Traditional systems aren’t built to handle unstructured data or “dirty” data with typos and errors. When information exists across varied sources – databases, documents, telemetry data – organizations struggle to integrate this information cohesively.

Without capabilities to handle these diverse data types, valuable context remains isolated in separate systems. Our platform can be trained on any data warehousing system by analyzing query logs, allowing it to adapt to each organization’s unique data vocabulary and structure.

You’ve described WisdomAI’s development process as ‘vibe coding’—building product experiences directly in code first, then iterating through real-world use. What advantages has this approach given you compared to traditional product design?

“Vibe coding” is a significant shift in how software is built where developers leverage the power of AI tools to generate code simply by describing the desired functionality in natural language. It’s like an intelligent assistant that does what you want the software to do, and it writes the code for you. This dramatically reduces the manual effort and time traditionally required for coding.

For years, the creation of digital products has largely followed a familiar script: meticulously plan the product and UX design, then execute the development, and iterate based on feedback. The logic was clear because investing in design upfront minimizes costly rework during the more expensive and time-consuming development phase. But what happens when the cost and time to execute that development drastically shrinks? This capability flips the traditional development sequence on its head. Suddenly, developers can start building functional software based on a high-level understanding of the requirements, even before detailed product and UX designs are finalized.

With the speed of AI code generation, the effort involved in creating exhaustive upfront designs can, in certain contexts, become relatively more time-consuming than getting a basic, functional version of the software up and running. The new paradigm in the world of vibe coding becomes: execute (code with AI), then adapt (design and refine).

This approach allows for incredibly early user validation of the core concepts. Imagine getting feedback on the actual functionality of a feature before investing heavily in detailed visual designs. This can lead to more user-centric designs, as the design process is directly informed by how users interact with a tangible product.

At WisdomAI, we actively embrace AI code generation. We’ve found that by embracing rapid initial development, we can quickly test core functionalities and gather invaluable user feedback early in the process, live on the product. This allows our design team to then focus on refining the user experience and visual design based on real-world usage, leading to more effective and user-loved products, faster.

From sales and marketing to manufacturing and customer success, WisdomAI targets a wide spectrum of business use cases. Which verticals have seen the fastest adoption—and what use cases have surprised you in their impact?

We’ve seen transformative results with multiple customers. At ConocoPhillips, a Fortune 500 oil and gas company, drilling engineers and operators now use our platform to query complex well data directly in natural language. Before WisdomAI, these engineers needed technical help for even basic operational questions about well status or job performance. Now they can instantly access this information while simultaneously comparing against best practices in their drilling manuals, all through the same conversational interface. They evaluated numerous AI vendors in a six-month process, and our solution delivered a 50% accuracy improvement over the closest competitor.

At Descope, a hypergrowth cybersecurity company, WisdomAI is used as a virtual data analyst for Sales and Finance. We reduced report creation time from 2-3 days to just 2-3 hours, a 90% decrease. This transformed their weekly sales meetings from data-gathering exercises to strategy sessions focused on actionable insights. As their CRO notes, “Wisdom AI brings data to my fingertips. It really democratizes the data, bringing me the power to go answer questions and move on with my day, rather than define your question, wait for somebody to build that answer, and then get it in 5 days.” This ability to make data-driven decisions with unprecedented speed has been particularly crucial for a fast-growing company in the competitive identity management market.

A practical example: A chief revenue officer asks, “How am I going to close my quarter?” Our platform immediately offers a list of pending deals to focus on, along with information on what’s delaying each one – such as specific questions customers are waiting to have answered. This happens with five keystrokes instead of five specialists and days of delay.

Many companies today are overloaded with dashboards, reports, and siloed tools. What are the most common misconceptions enterprises have about business intelligence today?

Organizations sit on troves of information yet struggle to leverage this data for quick decision-making. The challenge isn’t just about having data, but working with it in its natural state – which often includes “dirty” data not cleaned of typos or errors. Companies invest heavily in infrastructure but face bottlenecks with rigid dashboards, poor data hygiene, and siloed information. Most enterprises need specialized teams to run reports, creating significant delays when business leaders need answers quickly. The interface where people consume data remains outdated despite advancements in cloud data engines and data science.

Do you view WisdomAI as augmenting or eventually replacing existing BI tools like Tableau or Looker? How do you fit into the broader enterprise data stack?

We’re compatible with major cloud data services like Snowflake, Microsoft Fabric, Google’s BigQuery, Amazon’s Redshift, Databricks, and Postgres, as well as document formats such as Excel, PDF, and PowerPoint. Our approach transforms the interface where people consume data, which has remained outdated despite advancements in cloud data engines and data science.

Looking ahead, where do you see WisdomAI in five years—and how do you see the concept of “agentic intelligence” evolving across the enterprise landscape?

The future of analytics is moving from specialist-driven reports to self-service intelligence accessible to everyone. BI tools have been around for 20+ years, but adoption hasn’t even reached 20% of company employees. Meanwhile, in just twelve months, 60% of workplace users adopted ChatGPT, many using it for data analysis. This dramatic difference shows the potential for conversational interfaces to increase adoption.

We’re seeing a fundamental shift where all employees can directly interrogate data without technical skills. The future will combine the computational power of AI with natural human interaction, allowing insights to find users proactively rather than requiring them to hunt through dashboards.

Thank you for the great interview. Readers who wish to learn more should visit WisdomAI.

#2023#adoption#agent#agents#ai#AI AGENTS#ai code generation#AI innovation#ai tools#Amazon#Analysis#Analytics#approach#architecture#assistants#bi#bi tools#bigquery#bridge#Building#Business#Business Intelligence#CEO#challenge#chatGPT#Cisco#Cloud#cloud data#code#code generation

Text

Cloud Data Migration for MSPs: CloudFuze’s Reselling Benefits

https://www.cloudfuze.com/cloud-data-migration-for-msps-cloudfuzes-reselling-benefits/
