#Cloud based DevOps



#Cloud and DevOps#Cloud based DevOps#Cloud Native Development#Cloud Security#Cloud Consulting services#Cloud migration#Cloud infrastructure management#Application modernization services over cloud

0 notes


#Top Technology Services Company in India#Generative AI Development Company#AI Calling Software#AI Software Development Company#Best Chatbot Service Company#AI Calling Software Development#AI Automation Software#Best AI Chat Bot Development Company#AI Software Dev Company#Top Web Development Company in India#Top Software Services Company in India#Best Product Design Company in World#Best Cloud and Devops Company#Best Analytic Solutions Company#Best Blockchain Development Company in India#Best Tech Blogs in 2024#Creating an AI-Based Product#Custom Software Development for Healthcare#NexaCalling Best AI Calling Bot#Best ERP Management System#WhatsApp Bulk Sender#Neighborhue Frontend Vercel

0 notes


Invimatic is a leading DevOps company based in the USA, specializing in cloud-based Single Sign-On (SSO) solutions. With a focus on enhancing security and efficiency, Invimatic offers cutting-edge SSO technology to streamline access management for businesses. Their expertise in DevOps and commitment to innovation make them a trusted choice for organizations seeking robust SSO solutions in the United States.

0 notes


https://www.blogbangboom.com/blog/aws-cloud-services-empowering-businesses-with-scalability-and-flexibility

AWS Cloud Services: Empowering Businesses with Scalability and Flexibility

In today's digital age, businesses are increasingly relying on cloud services to meet their computing and storage needs. Amazon Web Services (AWS) has emerged as a leading cloud service provider, offering a wide range of services to help businesses scale, innovate, and stay competitive.

#aws cloud services#aws cloud server#server hosting aws#devops consulting services#aws hosting provider#aws cloud infrastructure#devops consulting companies#aws cloud technologies#cloud based cyber security#azure cloud computing services#cloud architecture design

0 notes


So, let me try and put everything together here, because I really do think it needs to be talked about.

Today, Unity announced that it intends to apply a fee to use its software. Then it got worse.

For those not in the know, Unity is the most popular free-to-use video game development tool. It offers a basic version for individuals who want to learn how to create games or create independently, alongside paid versions for corporations or people who want more features. It's decent enough at this job; it has issues, but for the price point I can't complain, and it is the ideal entry point into creating in this medium. It's a very important piece of software.

But speaking of tools, the CEO is a massive one. When he was the COO of EA, he advocated for using what out-and-out sounds like emotional manipulation to coerce players into microtransactions.

"A consumer gets engaged in a property, they might spend 10, 20, 30, 50 hours on the game and then when they're deep into the game they're well invested in it. We're not gouging, but we're charging and at that point in time the commitment can be pretty high."

He also called game developers who don't discuss monetization early in the planning stages of development, quote, "fucking idiots".

So that sets the stage for what might be one of the most bald-faced greediest moves I've seen from a corporation in a minute. Most at least have the sense of self-preservation to hide it.

A few hours ago, Unity posted this announcement on the official blog.

Effective January 1, 2024, we will introduce a new Unity Runtime Fee that’s based on game installs. We will also add cloud-based asset storage, Unity DevOps tools, and AI at runtime at no extra cost to Unity subscription plans this November. We are introducing a Unity Runtime Fee that is based upon each time a qualifying game is downloaded by an end user. We chose this because each time a game is downloaded, the Unity Runtime is also installed. Also we believe that an initial install-based fee allows creators to keep the ongoing financial gains from player engagement, unlike a revenue share.

Now there are a few red flags to note in this pitch immediately.

Unity is planning on charging a fee on all games which use its engine.

This is a flat fee per number of installs.

They are using an always online runtime function to determine whether a game is downloaded.

There are just so many things wrong with this that it's hard to know where to start, not helped by this FAQ, which doubled down on a lot of the major issues people had.

I guess let's start with what people noticed first. Because it's using a system baked into the software itself, Unity would not be differentiating between a "purchase" and a "download". If someone uninstalls and reinstalls a game, that's two downloads. If someone gets a new computer or a new console and downloads a game already purchased from their account, that's two downloads. If someone pirates the game, the studio will be asked to pay for that download.

Q: How are you going to collect installs?
A: We leverage our own proprietary data model. We believe it gives an accurate determination of the number of times the runtime is distributed for a given project.

Q: Is software made in unity going to be calling home to unity whenever it's ran, even for enterprice licenses?
A: We use a composite model for counting runtime installs that collects data from numerous sources. The Unity Runtime Fee will use data in compliance with GDPR and CCPA. The data being requested is aggregated and is being used for billing purposes.

Q: If a user reinstalls/redownloads a game / changes their hardware, will that count as multiple installs?
A: Yes. The creator will need to pay for all future installs. The reason is that Unity doesn’t receive end-player information, just aggregate data.

Q: What's going to stop us being charged for pirated copies of our games?
A: We do already have fraud detection practices in our Ads technology which is solving a similar problem, so we will leverage that know-how as a starting point. We recognize that users will have concerns about this and we will make available a process for them to submit their concerns to our fraud compliance team.

This is potentially related to a new system that will require Unity Personal developers to go online at least once every three days.

Starting in November, Unity Personal users will get a new sign-in and online user experience. Users will need to be signed into the Hub with their Unity ID and connect to the internet to use Unity. If the internet connection is lost, users can continue using Unity for up to 3 days while offline. More details to come, when this change takes effect.

It's unclear whether this requirement will be attached to any and all Unity games, though it would explain how they're theoretically able to track "the number of installs", and why the methodology for tracking these installs is so shit, as we'll discuss later.

Unity claims that it will only levy this fee on games which surpass a certain threshold of downloads and yearly revenue.

Only games that meet the following thresholds qualify for the Unity Runtime Fee:

Unity Personal and Unity Plus: Those that have made $200,000 USD or more in the last 12 months AND have at least 200,000 lifetime game installs.

Unity Pro and Unity Enterprise: Those that have made $1,000,000 USD or more in the last 12 months AND have at least 1,000,000 lifetime game installs.
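To make the AND in those thresholds concrete, here is a minimal sketch of the eligibility rule as quoted. The function name, the plan keys, and the idea that the check is a simple comparison are my own assumptions for illustration; Unity has not published how it actually evaluates this.

```python
# Thresholds as quoted in the announcement: (revenue in USD over the last
# 12 months, lifetime installs). Both must be met for the fee to apply.
THRESHOLDS = {
    "personal": (200_000, 200_000),
    "plus": (200_000, 200_000),
    "pro": (1_000_000, 1_000_000),
    "enterprise": (1_000_000, 1_000_000),
}


def qualifies_for_runtime_fee(plan, revenue_last_12_months, lifetime_installs):
    """Hypothetical helper: True only when BOTH thresholds for the plan are met."""
    min_revenue, min_installs = THRESHOLDS[plan]
    return revenue_last_12_months >= min_revenue and lifetime_installs >= min_installs


# Millions of installs but revenue under the bar: not eligible.
print(qualifies_for_runtime_fee("personal", 150_000, 2_000_000))  # False
# The same install count once revenue reaches $200,000: eligible.
print(qualifies_for_runtime_fee("personal", 200_000, 2_000_000))  # True
```

The second call is the case that worries people: the install count can already be far past its threshold, so revenue is the only bar left to cross.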

They don't say how they're going to collect information on a game's revenue. Likely this is just to say that they're only interested in squeezing larger products (games like Genshin Impact, Honkai: Star Rail, Fate/Grand Order, Among Us, and Fall Guys) and not every 2 dollar puzzle platformer that drops on Steam. But these larger products also have the easiest time porting off of Unity and the most incentive to do so, meaning that realistically those most heavily impacted are going to be the ones who just barely meet this threshold, most of them indie developers.

Aggro Crab Games, one of the first to properly break this story, points out that systems like Xbox Game Pass, which is already pretty predatory towards smaller developers, will quickly inflate their "lifetime game installs". That means a studio even skimming the threshold of that 200k revenue will be asked to pay a fee per install, not a percentage of said revenue.

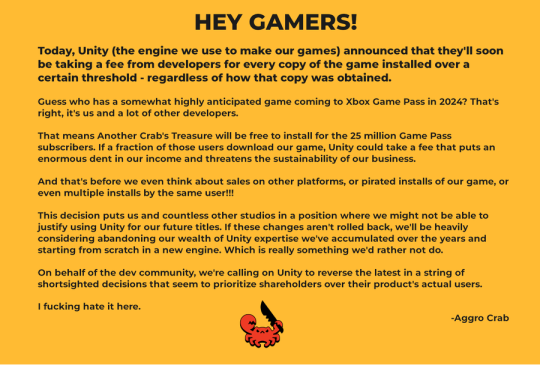

[IMAGE DESCRIPTION: Hey Gamers!

Today, Unity (the engine we use to make our games) announced that they'll soon be taking a fee from developers for every copy of the game installed over a certain threshold - regardless of how that copy was obtained.

Guess who has a somewhat highly anticipated game coming to Xbox Game Pass in 2024? That's right, it's us and a lot of other developers.

That means Another Crab's Treasure will be free to install for the 25 million Game Pass subscribers. If a fraction of those users download our game, Unity could take a fee that puts an enormous dent in our income and threatens the sustainability of our business.

And that's before we even think about sales on other platforms, or pirated installs of our game, or even multiple installs by the same user!!!

This decision puts us and countless other studios in a position where we might not be able to justify using Unity for our future titles. If these changes aren't rolled back, we'll be heavily considering abandoning our wealth of Unity expertise we've accumulated over the years and starting from scratch in a new engine. Which is really something we'd rather not do.

On behalf of the dev community, we're calling on Unity to reverse the latest in a string of shortsighted decisions that seem to prioritize shareholders over their product's actual users.

I fucking hate it here.

-Aggro Crab - END DESCRIPTION]

That fee, by the way, is a flat fee. Not a percentage, not a royalty. This means that any games made in Unity expecting any kind of success are heavily incentivized to cost as much as possible.

[IMAGE DESCRIPTION: A table listing the various fees by number of Installs over the Install Threshold vs. version of Unity used, ranging from $0.01 to $0.20 per install. END DESCRIPTION]

Basic elementary school math tells us that if a game comes out for $1.99, they will be paying, at maximum, 10% of their revenue to Unity, whereas jacking the price up to $59.99 lowers that percentage to something closer to 0.3%. Obviously any company, especially any company in financial desperation, which a sudden anchor on all your revenue is going to create, is going to choose the latter.
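The arithmetic can be checked in a couple of lines. This is a rough sketch under simplifying assumptions of mine: the top rate of $0.20 from the table, exactly one install per sale, and the developer keeping the full list price (no store cut, no thresholds).

```python
def fee_share_of_price(price_usd, fee_per_install_usd=0.20):
    """Per-install fee as a percentage of the sale price (one install per sale)."""
    return fee_per_install_usd / price_usd * 100


for price in (1.99, 59.99):
    print(f"${price:.2f} game: {fee_share_of_price(price):.2f}% of the price goes to the fee")
# $1.99 game: 10.05% of the price goes to the fee
# $59.99 game: 0.33% of the price goes to the fee
```

Reinstalls, second devices, and pirated copies only push the real share higher, since each one adds a fee without adding a sale.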

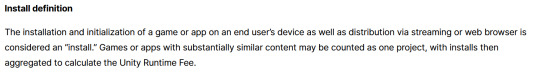

Furthermore, and following the trend of "fuck anyone who doesn't ask for money", Unity helpfully defines what an install is on their main site.

While I'm looking at this page as it exists now, it currently says

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

However, I saw a screenshot saying something different, and using the Wayback Machine we can see that this phrasing was changed at some point in the few hours since this announcement went up. The earlier version read:

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming or web browser is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

Screenshot for posterity:

That would mean web browser games made in Unity would count towards this install threshold. You could legitimately drive the count up simply by continuously refreshing the page. The FAQ, again, doubles down.

Q: Does this affect WebGL and streamed games? A: Games on all platforms are eligible for the fee but will only incur costs if both the install and revenue thresholds are crossed. Installs - which involves initialization of the runtime on a client device - are counted on all platforms the same way (WebGL and streaming included).

And, what I personally consider to be the most suspect claim in this entire debacle, they claim that "lifetime installs" includes installs prior to this change going into effect.

Will this fee apply to games using Unity Runtime that are already on the market on January 1, 2024? Yes, the fee applies to eligible games currently in market that continue to distribute the runtime. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

Again, again, doubled down in the FAQ.

Q: Are these fees going to apply to games which have been out for years already? If you met the threshold 2 years ago, you'll start owing for any installs monthly from January, no? (in theory). It says they'll use previous installs to determine threshold eligibility & then you'll start owing them for the new ones. A: Yes, assuming the game is eligible and distributing the Unity Runtime then runtime fees will apply. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

That would involve billing companies for using their software before telling them of the existence of a bill. Holding their actions to a contract that they performed before the contract existed!

Okay. I think that's everything. So far.

There is one thing that I want to mention before ending this post, unfortunately it's a little conspiratorial, but it's so hard to believe that anyone genuinely thought this was a good idea that it's stuck in my brain as a significant possibility.

A few days ago it was reported that Unity's CEO sold 2,000 shares of his own company.

On September 6, 2023, John Riccitiello, President and CEO of Unity Software Inc (NYSE:U), sold 2,000 shares of the company. This move is part of a larger trend for the insider, who over the past year has sold a total of 50,610 shares and purchased none.

I would not be surprised if this decision gets reversed tomorrow, that it was literally only made for the CEO to short his own goddamn company, because I would sooner believe that this whole thing is some idiotic attempt at committing fraud than a real monetization strategy, even knowing how unfathomably greedy these people can be.

So, with all that said, what do we do now?

Well, in all likelihood you won't need to do anything. As I said, some of the biggest names in the industry would be directly affected by this change, and you can bet your bottom dollar that they're not just going to take it lying down. After all, the only way to stop a greedy CEO is with a greedier CEO, right?

(I fucking hate it here.)

And that's not mentioning the indie devs who are already talking about abandoning the engine.

[Links display tweets from the lead developer of Among Us saying it'd be less costly to hire people to move the game off of Unity and Cult of the Lamb's official twitter saying the game won't be available after January 1st in response to the news.]

That being said, I'm still shaken by all this. The fact that Unity is openly willing to go back and punish its developers for ever having used the engine in the past makes me question my relationship to it.

The news has given rise to the visibility of free, open source alternative Godot, which, if you're interested, is likely a better option than Unity at this point. Mostly, though, I just hope we can get out of this whole, fucking, environment where creatives are treated as an endless mill of free profits that's going to be continuously ratcheted up and up to drive unsustainable infinite corporate growth that our entire economy is based on for some fuckin reason.

Anyways, that's that, I find having these big posts that break everything down to be helpful.

#Unity#Unity3D#Video Games#Game Development#Game Developers#fuckshit#I don't know what to tag news like this

6K notes


Build the Future of Tech: Enroll in the Leading DevOps Course Online Today

In a global economy where speed, security, and scalability determine success, DevOps has become the core of contemporary IT operations. Businesses are no longer hiring only developers or only sysadmins; employers need DevOps professionals who can bridge both worlds.

If you're ready to accelerate your career and become indispensable in the tech world, now is the ideal time to sign up for a DevOps course online. ReferMe Group's AWS DevOps Course is designed to get you there faster.

Why DevOps? Why Now?

The need for DevOps professionals is growing like crazy. As per current industry reports, job titles such as DevOps Engineer, Cloud Architect, and Site Reliability Engineer are among the best-paying and safest careers in technology today.

Why? Because DevOps helps businesses to:

Deploy faster using continuous integration and delivery (CI/CD)

Boost reliability and uptime

Automate everything, from infrastructure to testing

Scale apps with ease on cloud platforms like AWS

And individuals who develop these skills are rapidly becoming the pillars of today's tech teams.

Why Learn DevOps Online?

Learning DevOps online provides more than convenience—it provides liberation. As a full-time professional, student, or career changer, online learning allows you:

✅ To learn at your own pace

✅ To access world-class instructors anywhere

✅ To develop real-world, project-based skills

✅ To prepare for globally recognized certifications

✅ To join a growing network of DevOps learners and mentors

It’s professional-grade training—without the classroom limitations.

What Makes ReferMe Group’s DevOps Course Stand Out?

The AWS DevOps Course from ReferMe Group isn’t just a course—it’s a career accelerator. Here's what sets it apart:

Hands-On Labs & Projects: You’ll work on live AWS environments and build end-to-end DevOps pipelines using tools like Jenkins, Docker, Terraform, Git, Kubernetes, and more.

Training from Experts: Learn from experienced industry experts who have used DevOps at scale.

Resume-Reinforcing Certifications: Train to clear AWS and DevOps certification exams confidently.

Career Guidance: From resume creation to interview preparation, we prepare you for jobs, not course completion.

Lifetime Access: Come back to the content anytime with future upgrades covered.

Who Should Take This Course?

This DevOps course is ideal for:

Software Developers looking to move into deployment and automation

IT Professionals who want to upskill in cloud infrastructure

System Admins transitioning to new-age DevOps careers

Career changers entering the high-demand cloud and DevOps space

Students and recent graduates seeking a future-proof skill set

No experience in DevOps? No worries. We take you from the basics to advanced tools.

Final Thoughts: Your DevOps Journey Starts Here

As businesses continue to move to the cloud and automate their pipelines, DevOps engineers are no longer a nicety—they're a necessity. Investing in a high-quality DevOps course online provides you with the skills, certification, and confidence to compete and succeed in today's tech industry.

Start building your future today.

Join ReferMe Group's AWS DevOps Course today and become the architect of tomorrow's technology.

2 notes


How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation through Ansible (itself written in Python) and Boto3, and is commonly used to script around tools such as Terraform.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Does Python Enable Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.
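As a small illustration of SDK-driven automation, the sketch below lists stopped EC2 instances. It assumes a client object that behaves like `boto3.client("ec2")`; the `describe_instances` call and its `Filters` argument are part of Boto3, while the helper name and the fake client are hypothetical and exist only so the example runs without AWS credentials.

```python
def stopped_instance_ids(ec2_client):
    """Return the IDs of stopped EC2 instances visible to the given client.

    `ec2_client` is expected to behave like boto3.client("ec2"). Only the
    first page of results is read; a real script would paginate.
    """
    response = ec2_client.describe_instances(
        Filters=[{"Name": "instance-state-name", "Values": ["stopped"]}]
    )
    return [
        instance["InstanceId"]
        for reservation in response["Reservations"]
        for instance in reservation["Instances"]
    ]


class FakeEC2Client:
    """Hypothetical stand-in returning a response shaped like the real one."""

    def describe_instances(self, Filters):
        return {"Reservations": [{"Instances": [{"InstanceId": "i-0123456789abcdef0"}]}]}


print(stopped_instance_ids(FakeEC2Client()))  # ['i-0123456789abcdef0']
```

With Boto3 installed and credentials configured, the same function would be called as `stopped_instance_ids(boto3.client("ec2"))`.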

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
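For a sense of how little code a serverless function needs, here is a minimal sketch in the shape AWS Lambda uses for Python: a function taking `event` and `context`. The event layout (a `name` key) and the response body are assumptions for illustration; real triggers define their own payloads.

```python
import json


def handler(event, context):
    """Entry point in the shape AWS Lambda expects for Python functions."""
    # Assumed event shape: {"name": "..."}; not tied to any specific trigger.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation; the platform would supply `event` and `context` itself.
print(handler({"name": "cloud"}, None))
```

Scaling is handled by the platform, which runs more copies of the function as requests arrive, so the code itself contains no scaling logic.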

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is an interpreted language, which may not be as fast as compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and pyOpenSSL (a wrapper around OpenSSL), enabling encryption, authentication, and cloud monitoring for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (via PySpark), Pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

2 notes


Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.
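To make the data flow concrete, here is a minimal in-memory sketch of the ingestion, processing, storage, and API steps. It makes no Azure SDK calls: `EventStream`, `DocumentStore`, `process`, and `latest_reading` are hypothetical stand-ins for Event Hubs, Cosmos DB, an Azure Function, and an AKS-hosted API handler, and the sensor readings are invented.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Reading:
    device_id: str
    celsius: float


class EventStream:
    """Stand-in for an ingestion service such as Azure Event Hubs (in-memory only)."""

    def __init__(self):
        self._events = deque()

    def send(self, reading):
        self._events.append(reading)

    def drain(self):
        # Yield events in arrival order until the buffer is empty.
        while self._events:
            yield self._events.popleft()


class DocumentStore:
    """Stand-in for a document database such as Azure Cosmos DB (in-memory only)."""

    def __init__(self):
        self.items = {}

    def upsert(self, doc):
        self.items[doc["id"]] = doc


def process(reading):
    """Stand-in for a serverless function: turn one event into a stored document."""
    return {
        "id": reading.device_id,
        "celsius": reading.celsius,
        "fahrenheit": reading.celsius * 9 / 5 + 32,
        "alert": reading.celsius > 30.0,
    }


def latest_reading(store, device_id):
    """Stand-in for an API handler exposed by a containerized microservice."""
    return store.items.get(device_id)


stream, store = EventStream(), DocumentStore()
stream.send(Reading("sensor-1", 21.5))
stream.send(Reading("sensor-2", 35.0))
for event in stream.drain():
    store.upsert(process(event))

print(latest_reading(store, "sensor-2"))
```

Each stage only knows the interface of the next one, so in a real deployment any stand-in could be replaced by the managed service without touching the others.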

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai

2 notes

Text

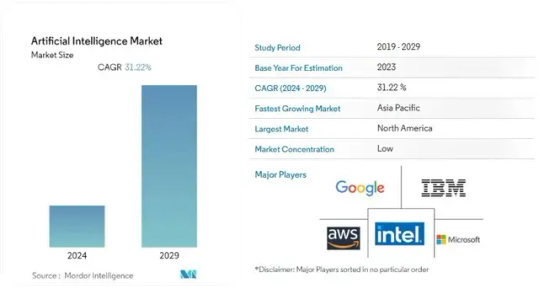

Embrace the Future with AI 🚀

The AI industry is set to skyrocket from USD 2.41 trillion in 2023 to a projected USD 30.13 trillion by 2032, growing at a phenomenal CAGR of 32.4%! The AI market continues to experience robust growth driven by advancements in machine learning, natural language processing, and cloud computing. Key industry players are investing heavily in AI to enhance their product offerings and gain competitive advantages.
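The growth rate quoted above can be checked against the two market figures with the standard compound annual growth rate formula. This is a small sketch that takes the 2023 and 2032 values at face value and assumes nine compounding years between them. The function name is illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures as quoted in the post (USD trillions), 2023 -> 2032.
rate = cagr(2.41, 30.13, 2032 - 2023)
print(f"{rate:.1%}")
```

The result comes out at roughly 32.4%, so the quoted rate is consistent with the start and end figures.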

Here is a brief analysis of why and how AI can transform businesses to stay ahead in the digital age.

Key Trends:

Predictive Analytics: There’s an increasing demand for predictive analytics solutions across various industries to leverage data-driven decision-making.

Data Generation: Massive growth in data generation due to technological advancements is pushing the demand for AI solutions.

Cloud Adoption: The adoption of cloud-based applications and services is accelerating AI implementation.

Consumer Experience: Companies are focusing on enhancing consumer experience through AI-driven personalized services.

Challenges:

Initial Costs: High initial costs and concerns over replacing the human workforce.

Skill Gap: A lack of skilled AI technicians and experts.

Data Privacy: Concerns regarding data privacy and security.

Vabro is excited to announce the launch of Vabro Genie, one of the most intelligent SaaS AI engines. Vabro Genie helps companies manage projects, DevOps, and workflows with unprecedented efficiency and intelligence. Don’t miss out on leveraging this game-changing tool!

Visit www.vabro.com

#ArtificialIntelligence#TechTrends#Innovation#Vabro#AI#VabroGenie#ProjectManagement#DevOps#Workflows#Scrum#Agile

3 notes

Text

Navigating the DevOps Landscape: Opportunities and Roles

DevOps has become a game-changer in the quick-moving world of technology. This dynamic process, whose name is a combination of "Development" and "Operations," is revolutionising the way software is created, tested, and deployed. DevOps is a cultural shift that encourages cooperation, automation, and integration between development and IT operations teams, not merely a set of practises. The outcome? Greater software delivery speed, dependability, and effectiveness.

In this comprehensive guide, we'll delve into the essence of DevOps, explore the key technologies that underpin its success, and uncover the vast array of job opportunities it offers. Whether you're an aspiring IT professional looking to enter the world of DevOps or an experienced practitioner seeking to enhance your skills, this blog will serve as your roadmap to mastering DevOps. So, let's embark on this enlightening journey into the realm of DevOps.

Key Technologies for DevOps:

Version Control Systems: DevOps teams rely heavily on robust version control systems such as Git and SVN. These systems are instrumental in managing and tracking changes in code and configurations, promoting collaboration and ensuring the integrity of the software development process.

Continuous Integration/Continuous Deployment (CI/CD): The heart of DevOps, CI/CD tools like Jenkins, Travis CI, and CircleCI drive the automation of critical processes. They orchestrate the building, testing, and deployment of code changes, enabling rapid, reliable, and consistent software releases.

Configuration Management: Tools like Ansible, Puppet, and Chef are the architects of automation in the DevOps landscape. They facilitate the automated provisioning and management of infrastructure and application configurations, ensuring consistency and efficiency.

Containerization: Docker and Kubernetes, the cornerstones of containerization, are pivotal in the DevOps toolkit. They empower the creation, deployment, and management of containers that encapsulate applications and their dependencies, simplifying deployment and scaling.

Orchestration: Docker Swarm and Amazon ECS take center stage in orchestrating and managing containerized applications at scale. They provide the control and coordination required to maintain the efficiency and reliability of containerized systems.

Monitoring and Logging: The observability of applications and systems is essential in the DevOps workflow. Monitoring and logging tools like the ELK Stack (Elasticsearch, Logstash, Kibana) and Prometheus are the eyes and ears of DevOps professionals, tracking performance, identifying issues, and optimizing system behavior.

Cloud Computing Platforms: AWS, Azure, and Google Cloud are the foundational pillars of cloud infrastructure in DevOps. They offer the infrastructure and services essential for creating and scaling cloud-based applications, facilitating the agility and flexibility required in modern software development.

Scripting and Coding: Proficiency in scripting languages such as Shell, Python, Ruby, and coding skills are invaluable assets for DevOps professionals. They empower the creation of automation scripts and tools, enabling customization and extensibility in the DevOps pipeline.

Collaboration and Communication Tools: Collaboration tools like Slack and Microsoft Teams enhance the communication and coordination among DevOps team members. They foster efficient collaboration and facilitate the exchange of ideas and information.

Infrastructure as Code (IaC): The concept of Infrastructure as Code, represented by tools like Terraform and AWS CloudFormation, is a pivotal practice in DevOps. It allows the definition and management of infrastructure using code, ensuring consistency and reproducibility, and enabling the rapid provisioning of resources.
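The idea behind Infrastructure as Code can be shown with a toy example: infrastructure is declared as data, compared with what currently exists, and converged. This is a simplified sketch of that declarative pattern, not how Terraform or AWS CloudFormation is actually implemented. The resource names and the `plan` and `apply` helpers are hypothetical.

```python
def plan(desired: dict, actual: dict) -> list:
    """List the actions needed to make `actual` match `desired`."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    # Anything that exists but is no longer declared gets removed.
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))
    return actions


def apply(desired: dict, actual: dict) -> dict:
    """Converge: the new actual state is a copy of the declared state."""
    return {name: dict(spec) for name, spec in desired.items()}


# Declared (desired) infrastructure versus what is currently running.
desired = {
    "web": {"size": "small", "count": 2},
    "db": {"size": "large", "count": 1},
}
actual = {
    "web": {"size": "small", "count": 1},
    "cache": {"size": "small", "count": 1},
}

print(plan(desired, actual))
actual = apply(desired, actual)
print(plan(desired, actual))  # nothing left to do once converged
```

Running the same definition twice produces no further changes, which is the consistency and reproducibility the practice is valued for.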

Job Opportunities in DevOps:

DevOps Engineer: DevOps engineers are the architects of continuous integration and continuous deployment (CI/CD) pipelines. They meticulously design and maintain these pipelines to automate the deployment process, ensuring the rapid, reliable, and consistent release of software. Their responsibilities extend to optimizing the system's reliability, making them the backbone of seamless software delivery.

Release Manager: Release managers play a pivotal role in orchestrating the software release process. They carefully plan and schedule software releases, coordinating activities between development and IT teams. Their keen oversight ensures the smooth transition of software from development to production, enabling timely and successful releases.

Automation Architect: Automation architects are the visionaries behind the design and development of automation frameworks. These frameworks streamline deployment and monitoring processes, leveraging automation to enhance efficiency and reliability. They are the engineers of innovation, transforming manual tasks into automated wonders.

Cloud Engineer: Cloud engineers are the custodians of cloud infrastructure. They adeptly manage cloud resources, optimizing their performance and ensuring scalability. Their expertise lies in harnessing the power of cloud platforms like AWS, Azure, or Google Cloud to provide robust, flexible, and cost-effective solutions.

Site Reliability Engineer (SRE): SREs are the sentinels of system reliability. They focus on maintaining the system's resilience through efficient practices, continuous monitoring, and rapid incident response. Their vigilance ensures that applications and systems remain stable and performant, even in the face of challenges.

Security Engineer: Security engineers are the guardians of the DevOps pipeline. They integrate security measures seamlessly into the software development process, safeguarding it from potential threats and vulnerabilities. Their role is crucial in an era where security is paramount, ensuring that DevOps practices are fortified against breaches.

As DevOps continues to redefine the landscape of software development and deployment, gaining expertise in its core principles and technologies is a strategic career move. ACTE Technologies offers comprehensive DevOps training programs, led by industry experts who provide invaluable insights, real-world examples, and hands-on guidance. ACTE Technologies' DevOps training covers a wide range of essential concepts, practical exercises, and real-world applications. With a strong focus on certification preparation, ACTE Technologies ensures that you're well-prepared to excel in the world of DevOps. With their guidance, you can gain mastery over DevOps practices, enhance your skill set, and propel your career to new heights.

11 notes

Text

As a top technology service provider, Tech Avtar specializes in AI Product Development, ensuring excellence and affordability. Our agile methodologies guarantee quick turnaround times without compromising quality. Visit our website for more details or contact us at +91-92341-29799.

#Top Technology Services Company in India#Generative AI Development Company#AI Calling Software#AI Software Development Company#Best Chatbot Service Company#AI Calling Software Development#AI Automation Software#Best AI Chat Bot Development Company#AI Software Dev Company#Top Web Development Company in India#Top Software Services Company in India#Best Product Design Company in World#Best Cloud and Devops Company#Best Analytic Solutions Company#Best Blockchain Development Company in India#Best Tech Blogs in 2024#Creating an AI-Based Product#Custom Software Development for Healthcare#Best ERP Management System#WhatsApp Bulk Sender#Neighborhue Frontend Vercel

0 notes

Text

Java Full Stack

A Java Full Stack Developer is proficient in both front-end and back-end development, using Java for server-side (backend) programming. Here's a comprehensive guide to becoming a Java Full Stack Developer:

1. Core Java

Fundamentals: Object-Oriented Programming, Data Types, Variables, Arrays, Operators, Control Statements.

Advanced Topics: Exception Handling, Collections Framework, Streams, Lambda Expressions, Multithreading.

2. Front-End Development

HTML: Structure of web pages, Semantic HTML.

CSS: Styling, Flexbox, Grid, Responsive Design.

JavaScript: ES6+, DOM Manipulation, Fetch API, Event Handling.

Frameworks/Libraries:

React: Components, State, Props, Hooks, Context API, Router.

Angular: Modules, Components, Services, Directives, Dependency Injection.

Vue.js: Directives, Components, Vue Router, Vuex for state management.

3. Back-End Development

Java Frameworks:

Spring: Core, Boot, MVC, Data JPA, Security, REST.

Hibernate: ORM (Object-Relational Mapping) framework.

Building REST APIs: Using Spring Boot to build scalable and maintainable REST APIs.

4. Database Management

SQL Databases: MySQL, PostgreSQL (CRUD operations, Joins, Indexing).

NoSQL Databases: MongoDB (CRUD operations, Aggregation).

5. Version Control/Git

Basic Git commands: clone, pull, push, commit, branch, merge.

Platforms: GitHub, GitLab, Bitbucket.

6. Build Tools

Maven: Dependency management, Project building.

Gradle: Advanced build tool with Groovy-based DSL.

7. Testing

Unit Testing: JUnit, Mockito.

Integration Testing: Using Spring Test.

8. DevOps (Optional but beneficial)

Containerization: Docker (Creating, managing containers).

CI/CD: Jenkins, GitHub Actions.

Cloud Services: AWS, Azure (Basics of deployment).

9. Soft Skills

Problem-Solving: Algorithms and Data Structures.

Communication: Working in teams, Agile/Scrum methodologies.

Project Management: Basic understanding of managing projects and tasks.

Learning Path

Start with Core Java: Master the basics before moving to advanced concepts.

Learn Front-End Basics: HTML, CSS, JavaScript.

Move to Frameworks: Choose one front-end framework (React/Angular/Vue.js).

Back-End Development: Dive into Spring and Hibernate.

Database Knowledge: Learn both SQL and NoSQL databases.

Version Control: Get comfortable with Git.

Testing and DevOps: Understand the basics of testing and deployment.

Resources

Books:

Effective Java by Joshua Bloch.

Java: The Complete Reference by Herbert Schildt.

Head First Java by Kathy Sierra & Bert Bates.

Online Courses:

Coursera, Udemy, Pluralsight (Java, Spring, React/Angular/Vue.js).

FreeCodeCamp, Codecademy (HTML, CSS, JavaScript).

Documentation:

Official documentation for Java, Spring, React, Angular, and Vue.js.

Community and Practice

GitHub: Explore open-source projects.

Stack Overflow: Participate in discussions and problem-solving.

Coding Challenges: LeetCode, HackerRank, CodeWars for practice.

By mastering these areas, you'll be well-equipped to handle the diverse responsibilities of a Java Full Stack Developer.

visit https://www.izeoninnovative.com/izeon/

2 notes

Text

Invimatic, a prominent DevOps company in the USA, specializes in cloud-based Single Sign-On (SSO) solutions. With a focus on enhancing security and streamlining user authentication, they offer cutting-edge SSO services that ensure seamless access to cloud applications and resources. Invimatic stands as a trusted partner for businesses seeking to optimize their IT operations, boost efficiency, and strengthen security through their innovative DevOps and SSO solutions.

0 notes

Text

Unity's Changes

On the 12th of September, Unity released a blog post announcing changes to its plan pricing and packaging. The intention behind the change is to generate more income for the company. From the Unity Blog...

"Effective January 1, 2024, we will introduce a new Unity Runtime Fee that’s based on game installs. We will also add cloud-based asset storage, Unity DevOps tools, and AI at runtime at no extra cost to Unity subscription plans this November."

Unity's services consist of two products: the Unity Engine, which is the game engine used to create projects, and the Unity Runtime, which is the code that executes on a player's device and allows games made with the engine to run.

Simply put, Unity will now be charging a fee "each time a qualifying game is downloaded by an end user." The reasoning given for this change is that "each time a game is downloaded, the Unity Runtime is also installed."

While many (basically all) developers have used their collective voices to reply with a unanimous "nope", many people do not understand the very important specifics of how this will be implemented.

These fees will only take effect when the preexisting thresholds have been met. They will only be applied once a game has reached both a set revenue figure and a set lifetime install count. From the blog:

Unity Personal and Unity Plus: Those that have made $200,000 USD or more in the last 12 months AND have at least 200,000 lifetime game installs.

Unity Pro and Unity Enterprise: Those that have made $1,000,000 USD or more in the last 12 months AND have at least 1,000,000 lifetime game installs.
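The two-part threshold is easy to misread, so here is a small sketch of the rule exactly as quoted above: both the revenue condition and the install condition must hold before any fee applies. The function and dictionary names are illustrative and are not part of any Unity tooling.

```python
# (minimum revenue in the last 12 months in USD, minimum lifetime installs) per plan,
# as quoted from the Unity blog post.
THRESHOLDS = {
    "personal": (200_000, 200_000),
    "plus": (200_000, 200_000),
    "pro": (1_000_000, 1_000_000),
    "enterprise": (1_000_000, 1_000_000),
}


def runtime_fee_applies(plan: str, revenue_last_12_months_usd: float,
                        lifetime_installs: int) -> bool:
    """True only when BOTH the revenue and the install thresholds are met."""
    min_revenue, min_installs = THRESHOLDS[plan.lower()]
    return (revenue_last_12_months_usd >= min_revenue
            and lifetime_installs >= min_installs)


# A popular free game: many installs, little revenue, so no fee yet.
print(runtime_fee_applies("personal", 50_000, 3_000_000))
# A modestly successful paid game on the Personal plan crosses both lines.
print(runtime_fee_applies("personal", 250_000, 220_000))
# The same numbers on Pro stay under the higher thresholds.
print(runtime_fee_applies("pro", 250_000, 220_000))
```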

This may not seem to be such a bad thing, especially since the reasoning behind the change (their Runtime product being distributed) is quite reasonable. However, there is a litany of issues this will pose for developers. The smallest-scale developers, such as indie devs and studios, are unlikely to feel any sort of pressure from this, and the large AAA studios also won't feel the brunt of the new pricing plan. The weight of this change falls directly onto the smaller-but-not-small studios. These studios, making games on more significant budgets, will essentially have those budgets put under more strain, because as soon as they begin to approach breaking even or perhaps making a profit on their projects, Unity will step in and start charging them from there on out. It is also unclear whether the developers alone will have to pay this new fee or whether it will be shared by publishers as well.

Developers are largely unhappy with this new plan because studios almost always make commercial games on very thin margins. Charging a couple of cents per install does not sound like much, but it can and will mean the difference between financial success and closure for many smaller studios who otherwise would have ended with their balance sheets in the green.

It is also important to be aware that, while these changes are scheduled to take effect from the start of 2024, the thresholds are retroactive, which means that if you already have reasonable install and revenue numbers (thus qualifying for the fees) you will be immediately forced to pay moving forward.

On a more informal note, there have also been some jokes made that this scheme will make it possible for players to actively harm developers if they wish. The fee is charged when an end-user (customer) downloads a game. Note that they did not say "purchases", but "downloads." Technically, this would mean that a person can buy a game (the developer gains one instance of revenue) and then repeatedly download, delete and re-download the game, incurring this fee for the developer each time they do this. Whether this was a poor choice of wording or a miscommunication is unclear at this time, but let's certainly hope this new plan doesn't open up this possibility.

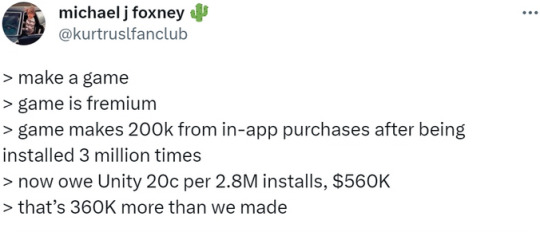

There are also numerous other important considerations Unity has not commented on. Do installs of pirated games count? How will these threshold figures be tracked? Also, as a massive concern, what about games that rely on in-app purchases for revenue? Below is a tweet that concisely highlights the problem.

There is also the problem of free games. This pricing plan does not take into account how much the game costs at all. Developers making a massively successful free game would end up having to pay Unity to sell a free product.

Many developers and studios are now seriously considering simply ditching Unity altogether. With Unreal's much more reasonable pricing plans and the release of UE5, unless either some very significant "miscommunications" are cleared up or the plan is scrapped entirely, this will likely be the beginning of the end for Unity. As a learning indie developer myself, having been a die-hard Unity supporter until this announcement, I do not know how to express my disappointment, and if Unity follows through with this scheme on 01/01/2024, even if they reverse it later, I will never open another one of this fucking greedy company's products ever again.

Sources: - https://blog.unity.com/news/plan-pricing-and-packaging-updates - https://www.youtube.com/watch?v=JQSDsjJAics

11 notes

Text

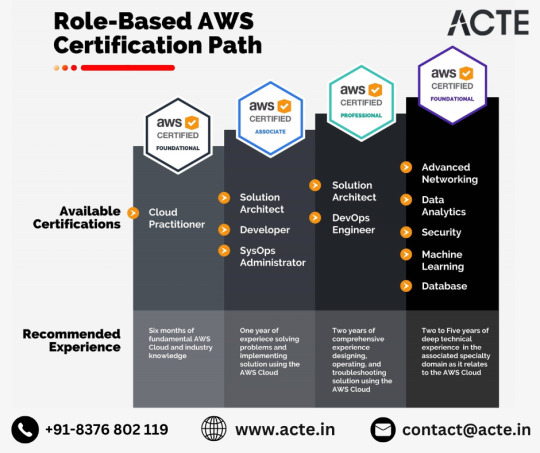

From Novice to Pro: Master the Cloud with AWS Training!

In today's rapidly evolving technology landscape, cloud computing has emerged as a game-changer, providing businesses with unparalleled flexibility, scalability, and cost-efficiency. Among the various cloud platforms available, Amazon Web Services (AWS) stands out as a leader, offering a comprehensive suite of services and solutions. Whether you are a fresh graduate eager to kickstart your career or a seasoned professional looking to upskill, AWS training can be the gateway to success in the cloud. This article explores the key components of AWS training, the reasons why it is a compelling choice, the promising placement opportunities it brings, and the numerous benefits it offers.

Key Components of AWS Training

1. Foundational Knowledge: Building a Strong Base

AWS training starts by laying a solid foundation of cloud computing concepts and AWS-specific terminology. It covers essential topics such as virtualization, storage types, networking, and security fundamentals. This groundwork ensures that even individuals with little to no prior knowledge of cloud computing can grasp the intricacies of AWS technology easily.

2. Core Services: Exploring the AWS Portfolio

Once the fundamentals are in place, AWS training delves into the vast array of core services offered by the platform. Participants learn about compute services like Amazon Elastic Compute Cloud (EC2), storage options such as Amazon Simple Storage Service (S3), and database solutions like Amazon Relational Database Service (RDS). Additionally, they gain insights into services that enhance performance, scalability, and security, such as Amazon Virtual Private Cloud (VPC), AWS Identity and Access Management (IAM), and AWS CloudTrail.

3. Specialized Domains: Nurturing Expertise

As participants progress through the training, they have the opportunity to explore advanced and specialized areas within AWS. These can include topics like machine learning, big data analytics, Internet of Things (IoT), serverless computing, and DevOps practices. By delving into these niches, individuals can gain expertise in specific domains and position themselves as sought-after professionals in the industry.

Reasons to Choose AWS Training

1. Industry Dominance: Aligning with the Market Leader

One of the significant reasons to choose AWS training is the platform's unrivaled market dominance. With a staggering market share, AWS is trusted and adopted by businesses across industries worldwide. By acquiring AWS skills, individuals become part of the ecosystem that powers the digital transformation of numerous organizations, enhancing their career prospects significantly.

2. Comprehensive Learning Resources: Abundance of Educational Tools

AWS training offers a wealth of comprehensive learning resources, ranging from extensive documentation, tutorials, and whitepapers to hands-on labs and interactive courses. These resources cater to different learning preferences, enabling individuals to choose their preferred mode of learning and acquire a deep understanding of AWS services and concepts.

3. Recognized Certifications: Validating Expertise

AWS certifications are globally recognized credentials that validate an individual's competence in using AWS services and solutions effectively. By completing AWS training and obtaining certifications like AWS Certified Solutions Architect or AWS Certified Developer, individuals can boost their professional credibility, open doors to new job opportunities, and command higher salaries in the job market.

Placement Opportunities

Upon completing AWS training, individuals can explore a multitude of placement opportunities. The demand for professionals skilled in AWS is soaring, as organizations increasingly migrate their infrastructure to the cloud or adopt hybrid cloud strategies. From startups to multinational corporations, industries spanning finance, healthcare, retail, and more seek talented individuals who can architect, develop, and manage cloud-based solutions using AWS. This robust demand translates into a plethora of rewarding career options and a higher likelihood of finding positions that align with one's interests and aspirations.

In conclusion, mastering the cloud with AWS training at ACTE institute provides individuals with a solid foundation, comprehensive knowledge, and specialized expertise in one of the most dominant cloud platforms available. The reasons to choose AWS training are compelling, ranging from the industry's unparalleled market position to the top ranking state.

9 notes

Text

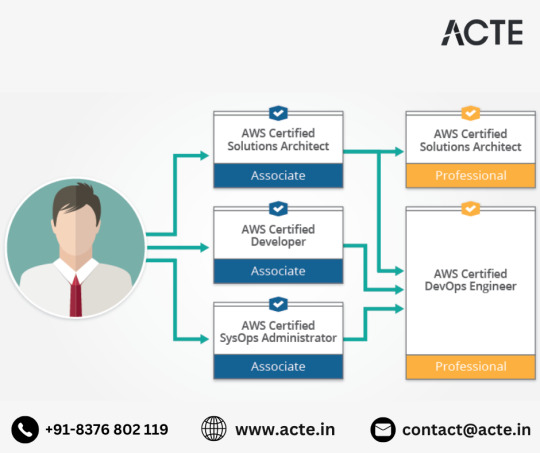

Crafting a Career Odyssey: AWS Certification Unveiled for Solution Architects

Embarking on the journey of AWS certification as a Solution Architect unveils a plethora of career avenues, transforming your professional trajectory in the dynamic landscape of cloud computing. Let's explore the myriad paths that unfold for certified AWS Solution Architects:

1. Architecting Excellence: Steering Digital Transformations

AWS certification catapults you into roles where you architect and implement cutting-edge solutions. As a linchpin in digital transformations, you play a pivotal role in creating scalable, secure, and cost-effective solutions aligned with organizational objectives.

2. Cloud Architect Mastery: Orchestrating Comprehensive Cloud Strategies

The journey doesn't stop at Solution Architect; it seamlessly transitions into broader Cloud Architect roles. Here, you orchestrate end-to-end cloud strategies, ensuring optimal performance, security, and efficiency in cloud-based environments.

3. Enterprise Architect Pinnacle: Shaping Holistic IT Strategies

With AWS certification, the pathway extends to Enterprise Architect roles. This involves shaping the overarching IT strategy, aligning technology solutions with business goals, and ensuring seamless integration across the enterprise.

4. Cloud Consulting Expertise: Guiding Clients on Cloud Journey

Organizations seek AWS-certified Solution Architects for Cloud Consultant positions, where you provide guidance on cloud strategies and migration plans, and optimize AWS infrastructure for enhanced performance.

5. Technical Leadership Zenith: Guiding Development Initiatives

Expertise gained through AWS certification positions you favorably for technical leadership roles. Leading teams, guiding development projects, and offering strategic input on technology initiatives become part of your purview.

6. DevOps Alchemy: Bridging Development and Operations

The fusion of AWS expertise and Solution Architect skills opens doors to DevOps Engineer opportunities. Your grasp of cloud infrastructure proves invaluable in optimizing continuous integration and deployment pipelines.

7. Pre-Sales Artistry: Crafting Compelling Solutions

Leverage AWS certification in Pre-Sales Solutions Architect positions. Engaging with clients during the pre-sales phase, you become instrumental in understanding their needs and crafting compelling solutions.

8. Specialized Architectural Prowess: Exploring Niche Opportunities

As technology evolves, specialized Solution Architect roles emerge. Depending on your interests and the evolving AWS service landscape, opportunities in areas like AI/ML architecture, IoT solutions, or serverless architectures beckon.

9. Entrepreneurial Odyssey: Beyond Conventional Paths

Armed with AWS certification, entrepreneurial pursuits become viable. Whether offering specialized AWS services or launching a tech startup, the certification serves as a foundation for innovative endeavors.

10. Lifelong Learning Odyssey: Staying Ahead in the Dynamic AWS Realm

The AWS ecosystem is dynamic, with constant updates and new services. Your certification journey becomes a springboard for continuous learning and professional development, ensuring you remain at the forefront of cloud technology.

In conclusion, AWS certification for Solution Architects is not just a validation; it's a compass guiding you through a rich tapestry of career possibilities. Whether crafting digital landscapes, steering enterprises through the cloud, or exploring niche opportunities, the certification becomes a catalyst for continuous growth, learning, and innovation in the ever-evolving cloud computing domain.

2 notes
