#Cluster sampling vs random sampling

Explore tagged Tumblr posts

Text

Cluster Sampling: Types, Advantages, Limitations, and Examples

Explore the various types, advantages, limitations, and real-world examples of cluster sampling in our informative blog. Learn how this sampling method can help researchers gather data efficiently and effectively for insightful analysis.

#Cluster sampling#Sampling techniques#Cluster sampling definition#Cluster sampling steps#Types of cluster sampling#Advantages of cluster sampling#Limitations of cluster sampling#Cluster sampling comparison#Cluster sampling examples#Cluster sampling applications#Cluster sampling process#Cluster sampling methodology#Cluster sampling in research#Cluster sampling in surveys#Cluster sampling in statistics#Cluster sampling design#Cluster sampling procedure#Cluster sampling considerations#Cluster sampling analysis#Cluster sampling benefits#Cluster sampling challenges#Cluster sampling vs other methods#Cluster sampling vs stratified sampling#Cluster sampling vs random sampling#Cluster sampling vs systematic sampling#Cluster sampling vs convenience sampling#Cluster sampling vs multistage sampling#Cluster sampling vs quota sampling#Cluster sampling vs snowball sampling#Cluster sampling steps explained

0 notes

Text

Evaluating Model Performance in Machine Learning

Evaluating the performance of a machine learning model is crucial to ensure it generalizes well to new data. Different evaluation metrics are used based on the type of problem (classification, regression, clustering, etc.).

1. Key Metrics for Model Evaluation

📌 Classification Metrics

Used when predicting categories (e.g., spam detection, image classification).

✅ Accuracy = TP+TNTP+TN+FP+FN\frac{TP + TN}{TP + TN + FP + FN}TP+TN+FP+FNTP+TN

Good for balanced datasets but misleading for imbalanced classes.

✅ Precision, Recall, and F1-score

Precision = TPTP+FP\frac{TP}{TP + FP}TP+FPTP (How many predicted positives were correct?)

Recall (Sensitivity) = TPTP+FN\frac{TP}{TP + FN}TP+FNTP (How many actual positives were detected?)

F1-score = Harmonic mean of Precision & Recall.

✅ ROC-AUC (Receiver Operating Characteristic — Area Under Curve)

Measures the trade-off between True Positive Rate (TPR) & False Positive Rate (FPR).

✅ Confusion Matrix

Shows True Positives (TP), False Positives (FP), True Negatives (TN), and False Negatives (FN).

✅ Log Loss (Cross-Entropy Loss)

Measures how uncertain the model’s predicted probabilities are. Lower is better.

📌 Regression Metrics

Used when predicting continuous values (e.g., house prices, stock prices).

✅ Mean Absolute Error (MAE)

Measures the average absolute difference between actual & predicted values.

✅ Mean Squared Error (MSE) & Root Mean Squared Error (RMSE)

Penalizes large errors more than MAE. RMSE gives values in original units.

✅ R² Score (Coefficient of Determination)

Measures how well the model explains variance in data (ranges from 0 to 1).

✅ Mean Absolute Percentage Error (MAPE)

Measures error as a percentage of actual values.

2. Model Performance Evaluation Techniques

✅ Train-Test Split

Split data into training (80%) and testing (20%).

Ensures model performance is evaluated on unseen data.

✅ Cross-Validation (K-Fold CV)

Splits data into K subsets and trains the model K times.

Reduces bias from a single train-test split.

✅ Bias-Variance Tradeoff

High Bias → Underfitting (Model too simple).

High Variance → Overfitting (Model too complex).

Solution: Use regularization (L1, L2), feature selection, and cross-validation.

✅ Learning Curves

Shows training vs. validation performance over epochs.

Helps detect underfitting or overfitting trends.

✅ Feature Importance & SHAP Values

Identifies which features influence model predictions the most.

Used in tree-based models like Random Forest, XGBoost.

3. Best Practices for Model Evaluation

✅ Use multiple metrics to get a complete picture. ✅ Handle imbalanced data using SMOTE, class weighting, or balanced sampling. ✅ Check for data leakage (e.g., using future information in training). ✅ Use domain knowledge to interpret model performance.

Conclusion

Evaluating model performance requires selecting appropriate metrics and validation techniques to ensure robust and generalizable models. The choice of metrics depends on the problem type (classification, regression) and dataset characteristics.

WEBSITE: https://www.ficusoft.in/deep-learning-training-in-chennai/

0 notes

Text

Mastering Data Science Using Python

Data Science is not just a buzzword; it's the backbone of modern decision-making and innovation. If you're looking to step into this exciting field, Data Science using Python is a fantastic place to start. Python, with its simplicity and vast libraries, has become the go-to programming language for aspiring data scientists. Let’s explore everything you need to know to get started with Data Science using Python and take your skills to the next level.

What is Data Science?

In simple terms, Data Science is all about extracting meaningful insights from data. These insights help businesses make smarter decisions, predict trends, and even shape new innovations. Data Science involves various stages, including:

Data Collection

Data Cleaning

Data Analysis

Data Visualization

Machine Learning

Why Choose Python for Data Science?

Python is the heart of Data Science for several compelling reasons:

Ease of Learning: Python’s syntax is intuitive and beginner-friendly, making it ideal for those new to programming.

Versatile Libraries: Libraries like Pandas, NumPy, Matplotlib, and Scikit-learn make Python a powerhouse for data manipulation, analysis, and machine learning.

Community Support: With a vast and active community, you’ll always find solutions to challenges you face.

Integration: Python integrates seamlessly with other technologies, enabling smooth workflows.

Getting Started with Data Science Using Python

1. Set Up Your Python Environment

To begin, install Python on your system. Use tools like Anaconda, which comes preloaded with essential libraries for Data Science.

Once installed, launch Jupyter Notebook, an interactive environment for coding and visualizing data.

2. Learn the Basics of Python

Before diving into Data Science, get comfortable with Python basics:

Variables and Data Types

Control Structures (loops and conditionals)

Functions and Modules

File Handling

You can explore free resources or take a Python for Beginners course to grasp these fundamentals.

3. Libraries Essential for Data Science

Python’s true power lies in its libraries. Here are the must-know ones:

a) NumPy

NumPy is your go-to for numerical computations. It handles large datasets and supports multi-dimensional arrays.

Common Use Cases: Mathematical operations, linear algebra, random sampling.

Keywords to Highlight: NumPy for Data Science, NumPy Arrays, Data Manipulation in Python.

b) Pandas

Pandas simplifies working with structured data like tables. It’s perfect for data manipulation and analysis.

Key Features: DataFrames, filtering, and merging datasets.

Top Keywords: Pandas for Beginners, DataFrame Operations, Pandas Tutorial.

c) Matplotlib and Seaborn

For data visualization, Matplotlib and Seaborn are unbeatable.

Matplotlib: For creating static, animated, or interactive visualizations.

Seaborn: For aesthetically pleasing statistical plots.

Keywords to Use: Data Visualization with Python, Seaborn vs. Matplotlib, Python Graphs.

d) Scikit-learn

Scikit-learn is the go-to library for machine learning, offering tools for classification, regression, and clustering.

Steps to Implement Data Science Projects

Step 1: Data Collection

You can collect data from sources like web APIs, web scraping, or public datasets available on platforms like Kaggle.

Step 2: Data Cleaning

Raw data is often messy. Use Python to clean and preprocess it.

Remove duplicates and missing values using Pandas.

Normalize or scale data for analysis.

Step 3: Exploratory Data Analysis (EDA)

EDA involves understanding the dataset and finding patterns.

Use Pandas for descriptive statistics.

Visualize data using Matplotlib or Seaborn.

Step 4: Build Machine Learning Models

With Scikit-learn, you can train machine learning models to make predictions. Start with simple algorithms like:

Linear Regression

Logistic Regression

Decision Trees

Step 5: Data Visualization

Communicating results is critical in Data Science. Create impactful visuals that tell a story.

Use Case: Visualizing sales trends over time.

Best Practices for Data Science Using Python

1. Document Your Code

Always write comments and document your work to ensure your code is understandable.

2. Practice Regularly

Consistent practice on platforms like Kaggle or HackerRank helps sharpen your skills.

3. Stay Updated

Follow Python communities and blogs to stay updated on the latest tools and trends.

Top Resources to Learn Data Science Using Python

1. Online Courses

Platforms like Udemy, Coursera, and edX offer excellent Data Science courses.

Recommended Course: "Data Science with Python - Beginner to Pro" on Udemy.

2. Books

Books like "Python for Data Analysis" by Wes McKinney are excellent resources.

Keywords: Best Books for Data Science, Python Analysis Books, Data Science Guides.

3. Practice Platforms

Kaggle for hands-on projects.

HackerRank for Python coding challenges.

Career Opportunities in Data Science

Data Science offers lucrative career options, including roles like:

Data Analyst

Machine Learning Engineer

Business Intelligence Analyst

Data Scientist

How to Stand Out in Data Science

1. Build a Portfolio

Showcase projects on platforms like GitHub to demonstrate your skills.

2. Earn Certifications

Certifications like Google Data Analytics Professional Certificate or IBM Data Science Professional Certificate add credibility to your resume.

Conclusion

Learning Data Science using Python can open doors to exciting opportunities and career growth. Python's simplicity and powerful libraries make it an ideal choice for beginners and professionals alike. With consistent effort and the right resources, you can master this skill and stand out in the competitive field of Data Science.

0 notes

Text

Research | Population vs Sample and Sample Strategies

via Scribbr

Within the context of research, a population is the entire group a researcher would want to draw conclusions on, whilst a sample is the group from which the data would be gathered to represent the population. The population can be defined based on a variety of factors, such as geographical location, age, income, etc. It can be very broad, or extremely specific based on the topic of research. Sampling itself can be divided into two sections;

Probability Sampling : A random selection of the population, allowing for strong statistical inferences about a given group.

Non-Probability Sampling : A Non random selection based on convenience or a certain criterion or criteria, allowing for easy collection of data.

Probability Sampling Methods

Probability Sampling is mainly used in quantitative research. It means that every member of the population has a chance of being selected. If the goal is to produce results representative of the whole population, probability sampling techniques are the most valid choice. These can be divided into four groups;

Simple Random Sample

Systematic Sample

Stratified Sample

Cluster Sample

The first technique dictates that any random member of the population has an equal chance of being selected. Therefore the sampling frame should include the whole population. To conduct this, RNG or other chance based techniques could be used to gather a sample. Systematic sampling is similar to simple random sampling, but it is usually slightly easier to conduct. Every member of the population is listed with a number, but instead of randomly generating numbers, individuals are chosen at regular intervals. It is important to make sure that there is no hidden pattern in the list that might skew the sample.

Stratified sampling involves dividing the population into subpopulations that may differ in important ways. It allows you draw more precise conclusions by ensuring that every subgroup is properly represented in the sample. This method involves separating the population into substrata based on characteristics such as age, gender, or other relevant factors. Based on the overall proportions of the population, the sample must be reflective of that ratio. So, the sample is formed by calculating the the number of people based on the size of the strata. After this, any of the above two sampling strategies could be used.

Cluster sampling also involves dividing the population into subgroups, but each subgroup should have similar characteristics to the whole sample. Instead of sampling individuals from each subgroup, you randomly select entire subgroups. While this method is better suited to dealing with large, dispersed populations, there is room for more error within the sample, as there could be substantial differences between clusters.

Non-Probability Sampling Methods

This sampling technique is based on non-random criteria, and not every individual has a chance to be included in the sample. This type of sampling is cheaper and easier to access, but runs a larger risk of sampling bias. If a non-probability sample is used, it must be as representative of the population as possible.

Non-probability sampling techniques are often used in qualitative research. The aim is not to test a hypothesis about a broad population, but to develop an initial understanding of a small or under-researched population. This too, can be divided into four groups;

Convenience Sample

Purposive Sample

Snowball Sample

Quota Sample

Convenience sampling is the most self-explanatory; it includes a population that is most accessible to the researcher. While it is easier, there is no way to guarantee generalisable results. Another method of sampling similar to this is voluntary response sampling, which involves voluntary action to help the researcher (eg;- an online survey). Alas, this method of selection is also somewhat biased, as some people are inherently more likely to volunteer than others, and thus are likely to have stronger opinions on a given topic.

Purposive sampling involves selecting a demography that is most useful towards the topic of research being conducted. It is often used in qualitative research, where the researcher wants to gain detailed knowledge about a specific phenomenon rather than make statistical inferences, or where the population is very small and specific. When using this method, a strong rationale and criteria need to be made clear based on inclusion and exclusion.

If a population is harder to access, a snowball sample can be used to recruit participants via other participants. This is also susceptible to sampling bias, as there is no way to guarantee representation of the entire population based on the reliance of other participants to recruit more people.

A quota is a non-random selection of a predetermined number or proportion of units. This is the basic premise of quota sampling. To find a quota, the population must be divided into mutually exclusive strata, and individuals would be recruited until the quota is reached. These units share specific characteristics, determined prior to forming each strata. The aim of quota sampling is to control what or who makes up the sample.

via labroots

So how can this be applied to my primary research methods? In terms of the survey, first we must determine the population. The primary purpose of this survey is to gather data on the general Sri Lankan population's attitudes towards their daily commute to school or work. The secondary purpose is to garner if the average Sri Lankan student or salaryman enjoys their daily routine, and whether they would be doing something different. Since the demography is more vague (mostly being based on geographical location), the responses would be a result of voluntary response sampling; the only factors that I can control are the platforms that I post the survey on and whether or not participants share the survey on their own public networks.

These sampling strategies are more applicable to my focus and control group initiatives. The purpose of these two groups is to gather qualitative information on the population's attitudes towards a Solarpunk future, whether these attitudes change based on animated content, and to measure the change between those who have seen the animation and those who haven't. The population in this case would be those at university. Why? This is because it is statistically likely that those who attend AOD are culturally diverse Sri Lankans, between the ages of 16 and 25 (Gen Z), belong to families of a moderate to high income bracket (which would heighten their access to information), and are more versed in non-traditional problem solving skills as a result of majoring in design.

As I am looking into qualitative research, I would have to use a non-probability sampling strategy. Convenience sampling would be ruled out as it is a more unreliable strategy, as would quota and snowball sampling. This leaves purposive sampling, which fits well into what I am trying to do; gather data on a small specific population on a niche concept/idea. For the purpose of this research, as mentioned earlier, I would like to gather a section of the current L4 batch (which as it happens, is representative of the population of AOD). This is due to their cultural diversity, age (as Gen Z, they are more likely to be progressive and environmentally conscious), and most importantly their experience in design- which at this point is not as much as the average designer but more than the average person their age. Due to the nature of them being exposed in large part to the standard education system, which prioritises subjects such as math, science, etc., and just breaking into the design field, these students could offer fresh perspectives on the topic of my research.

This could be taken a step further; looking at the L4s, I could conduct multi-stage sampling by including an element of stratified sampling; depending on if I can procure the necessary data on those from my campus.

Now that I have narrowed down what kind of strategies I want to use for my primary research, I can move on to curating a new set of questions based on this information.

0 notes

Text

been reading abt this one genetic study and it is rlly interesting but also bc using modern genetics to infer stuff abt history is something i'm Aware you have to be Cautious of it does kind of result in me having Knowledge that i don't feel entirely comfortable thinking of as Knowledge. which is a v weird state of mind. esp when some of the original phrasing was already really cautiously vague.

(cut to ramble abt boring things i am in no way qualified to teach)

like okay time to ramble: i found it bc as part of a long slowburn identity crisis i got rlly frustrated with what little i know of history & not having a clue where to place myself in it - like, england has had so many different waves of migration and changes of regime, and also aristocracy-focused history isn't always good at even distinguishing between those, and if we don't even know which of those groups we're descended from... do we know anything??? (eg: fucking druids wld be talking abt The Old Ways and i'd be sitting here like. okay even if you weren't glorified wiccans, are they "the Old Ways"? are they??? is that our history or someone else's entirely? like, literally, i don't have any particular interest in doing this, but if i theoretically WERE to try and return to the religion of my prechristian ancestors, should i reconstruct druidry or heathenry or smthn else entirely?)

SO i basically wanted to ask how much, if at all, are the modern english descended from the various groups who have lived here. Who The Fuck Actually Are We

and i did basically get a cautious answer! (after finding better scicomm than the fucking guardian, which didn't even take enough care to clearly separate "english and cornish" from "british". fuck the guardian.) the actual conclusions we can pretty safely draw re: this question are:

1. the modern english have a v high level of similarity with other peoples of the uk (the study said "british isles" but roi was not counted), much of which appears to be v ancient dna, which means the genetic evidence directly contradicts the old theory that the anglo-saxons completely displaced/wiped out the britons of england. which is nice. love when my ancestors do not commit genocide on my other ancestors

2. the genetic "clusters" in england and cornwall showed a significant minority of dna (less as you travel north) theorised to be anglo-saxon - "between 10% and 40%". which, like i was saying, is both Information and Non Information. "congratulations participants, you're helping our understanding of history evolve bc you're def partly descended from the ancient britons but you also appear to have some anglo-saxon ancestry!" "oh cool. how much?" "oh you know... some". i know it doesn't matter in the real world but sjfkflshlk damn historic population geneticists u live like this? (they weren't even saying "25% +/- 15%". didn't even give us an average. just like. somewhere in this range lol)

(okay actually i am in Explaining Mode so here goes. afaict part of the problem is they're not even sure which common ancestry to be counting. only clusters in england/cornwall have any northern german common ancestry, but everyone in the uk has danish common ancestry - BUT the danish dna is significantly higher than average in groups w n.german dna. so the problem is: what's ancient, what's anglo-saxon, could any of it be viking? we just don't know.)

(they might have been able to tell by dating it but idk if they tried. and also some of their other dating was coming out Wonky - eg iirc the n.german dna is mostly dated to abt 300 years after anglo-saxon migration ended. so what's going on? did the two communities just take a v long time to integrate, or is something afoot?)

(also, of course - england is pretty genetically homogenous but there is still Some variety by region in this genetic component so making a sweeping statement abt "the english" is hard.)

3. there is a Mystery ComponentTM that makes up a larger segment than the alleged anglo-saxon dna, is found in england, scotland and northern ireland BUT not wales (so it's not just Basic British Ingredients), and matches northern france? i think they're guessing prehistoric migration for that. idk if they dated it. Hmmmm. ~Mystery DNA~

4. methodological info if you're concerned: they used participants from rural areas whose grandparents had all been born in the 50mile radius from them, so region-specific info should be p trustworthy, and the sample size was over 2000. they also found their "clusters" algorithmically and then plotted them back onto the map, so there shouldn't be confirmation bias there.)

(if i *were* to complain, looking at their map... scotland and wales have some gaps in them. some significant gaps.)

5. smthn we might genuinely be concerned abt in the analysis of these results - are we taking the results from places we know to have a historical migration as more meaningful than those we don't? looking at the results shows me every single cluster has a small but significant portion of common ancestry with modern belgium, maybe 1/12. (i'm looking at blurry pie charts, that's my best guess lol.) no analysis i've read has mentioned it.

on the other hand - idk anything abt the history of belgium but i wld not be at all surprised if their genetics were basically somewhere between germany and france, and we've already discussed both those places.

plus, possibly more relevantly - they DID scan for similarities with various other countries in europe and didn't find them. eg, no signficant/detectable common ancestry with the finnish. so if it's showing up at all, let alone as 10% or more, it's more than just random noise.

so it's knowledge but it's not knowledge but it's /more/ knowledge than not knowledge? yeah. i'm having a great time

6. assorted fun(?) facts for those who made it this far:

-the most unique place genetically they found was orkney (note: there were no participants from shetland), who showed ~25% norwegian ancestry, followed by wales, who as we remember have no Mystery DNA.

-the differences between cornwall and devon were minor, but they were definitely there and they followed modern county line p much perfectly!

-there were two different clusters in northern ireland and the west of scotland, but they DIDN'T break down into ireland vs scotland. it looked more like it might be a highland/lowland gael/gall thing. i don't know if they checked if the n.irish respondents were catholic or protestant but uhh probably better not to all things considered

-no matter how minutely you break down genetic differences, there is a large group covering much of england that is basically homogenous. you can tell genetically which island in orkney someone's from, but you can't tell the difference between people from north yorkshire and people from kent.

okay this has been a poorly explained ramble if you'd like to read the damn thing yourself it's this: https://peopleofthebritishisles.web.ox.ac.uk/population-genetics

nb that is their website for laypeople, i've looked at a few different interpetations of this but i haven't looked at the actual paper (yet? dk if i can be bothered going deeper. we'll see)

5 notes

·

View notes

Text

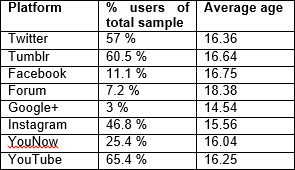

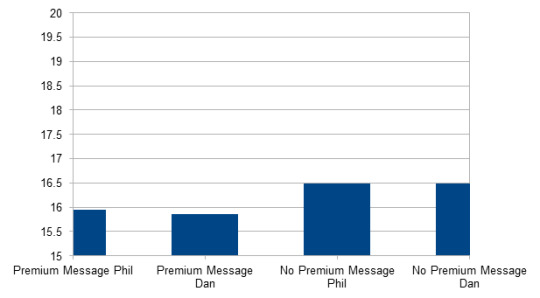

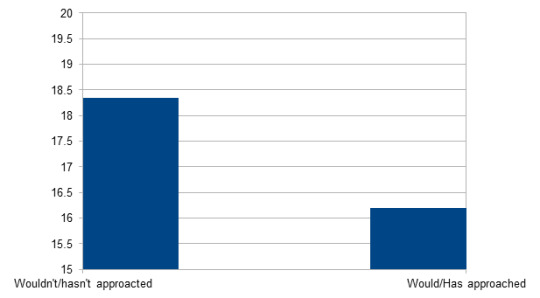

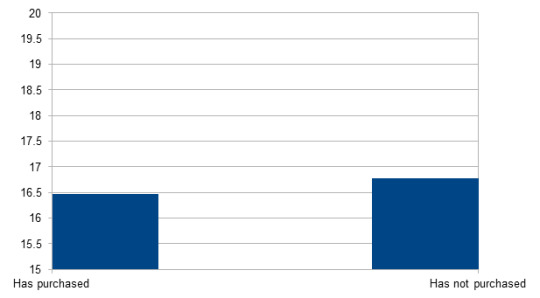

Analysis requests: Age

This first set of results shows the relationship between the age of the viewer and their responses, with conclusions written above the cut and full results and explanation of methodology to be found below the cut, along with a brief definition of terms.

Age/Are you subscribed to Phil? vs Age/Are you subscribed to Dan?

- People who only watch Phil are not significantly older than those who only watch Dan, but both groups are significantly older than those who watch both.

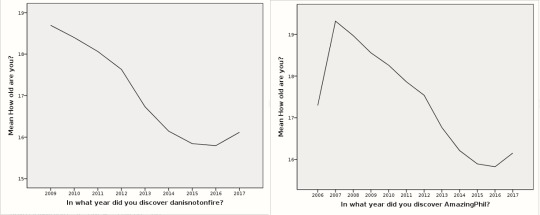

Age/In what year did you discover danisnotonfire/AmazingPhil?

- Respondents who started watching in the earlier years are older.

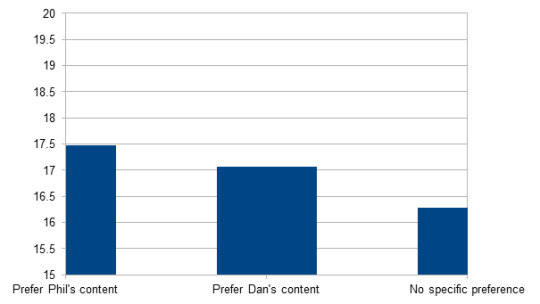

Age/Overall do you prefer the content of danisnotonfire or AmazingPhil?

- People who have no specific preference are significantly younger than those who prefer Dan's or Phil's content, and people who prefer Phil's content are significantly older than those who don't have a specific preference or prefer Dan's content.

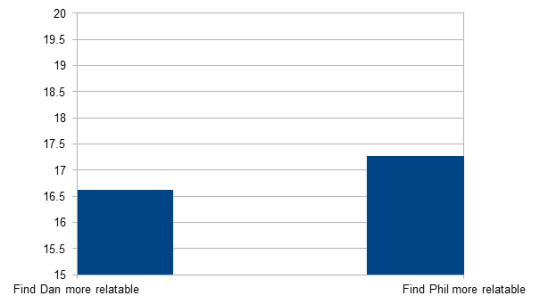

Age/Who do you relate to the most?

- People who find Phil more relatable are significantly older than people who find Dan more relatable.

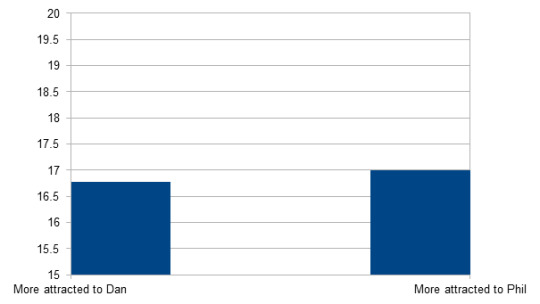

Age/Who are you most attracted to?

- People who are most attracted to Phil are significantly older than people who are more attracted to Dan.

Age/Who would you rather be friends with?

- There is no significant difference between the ages of people dependent on who they would rather be friends with.

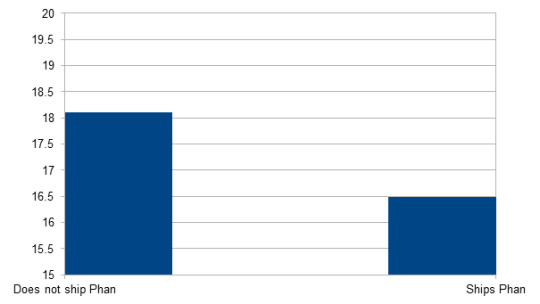

Age/Do you ship Phan?

- People who ship Phan are significantly younger than those who do not ship Phan.

Age/Which of the following do you think is most likely to describe Dan and Phil’s relationship in real life?

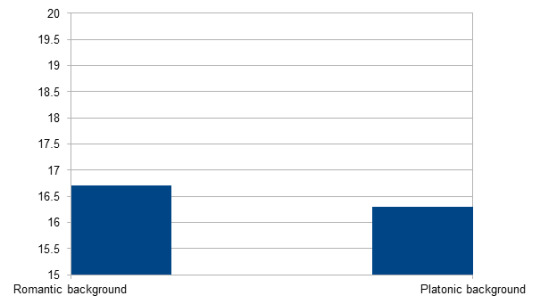

- People who believe that Dan and Phil have or have had a romantic background are significantly older than those who believe in a platonic background.

Age/Do you consider yourself to be part of the phandom?

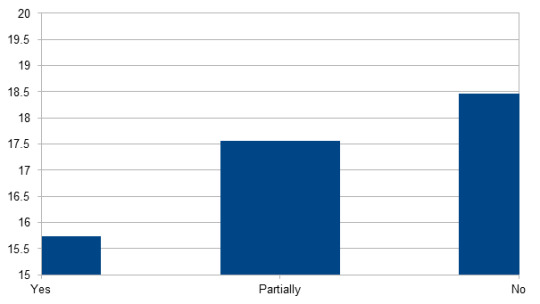

- These three groups differ significantly in age, in all combinations. People who consider themselves part of the phandom are younger than those who do not, or only partially do, and people who do not consider themselves as a part of the phandom are older than those who do, or only partially do.

Age/Is the Phandom the only fandom you belong to?

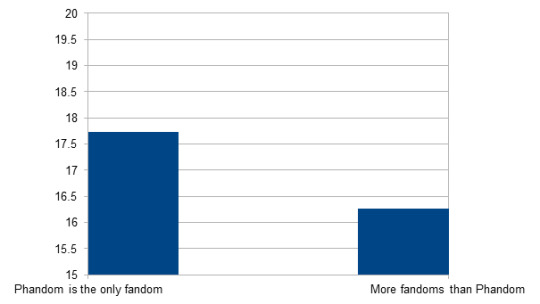

- Respondents who are in multiple fandoms are significantly younger.

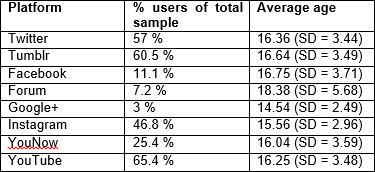

Age/Whether or not you consider yourself part of a website’s phandom, where do you choose to see or discuss Dan and Phil related content?

Age/Do you read phanfiction?

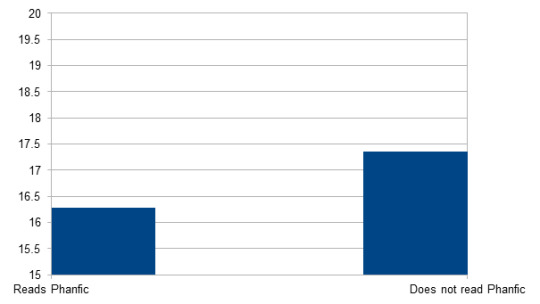

- People who read phanfiction are significantly younger than those who do not.

Age/Do you send premium messages?

- People who send Premium msgs in Phil's and Dan's chat are significantly younger than those who do not send Premiums.

Age/Would you approach Dan and Phil if you saw them randomly?

- Respondents who wouldn't/haven't approached them are significantly older than those who would or have approached them.

Age/Did you attend TATINOF?

- There is no significant difference between the ages of people who did or did not attend TATINOF.

Age/Have you ever purchased something from the Dan and Phil Shop?

- People who have purchased from the DAP Shop are significantly younger than those who have not.

n - number of responses.

M - mean, the average result.

SD - standard deviation. The average distance to the average result - showing if results are typically clustered around an average result, or if there is a wide range of results.

Significant difference (p < x)/Not significant - There is a mathematical way to determine if two numbers (for example the age averages of two groups) are actually different from one another, or if they are within the range of what is considered random variance. This is called the p-value and we consider differences between results ‘significant’ when the p-value is smaller than 0.05. For large samples such as this, most results are significant - this does not mean that the difference is particularly large, just that there is a difference.

Pearson r - a measure of the linear correlation between two variables, with a value of between -1 and 1. A negative correlation means that as one becomes higher the other becomes lower, a positive correlation means that as one becomes higher so does the other.

Age/Are you subscribed to Phil? vs Age/Are you subscribed to Dan?

(Only subscriptions to DINOF and AP were taken into consideration.)

Only watch Dan n = 91; M = 18.99; SD = 5.05

Only watch Phil n = 132; M = 19.32; SD = 5.01

Watch both n = 24,085; M = 16.52; SD = 3.55

Partially significant (p < 0.001): People who only watch Phil are not significantly older than those who only watch Dan, but both groups are significantly older than those who watch both.

Age/In what year did you discover danisnotonfire/AmazingPhil?

There is a significant (p < 0.01) negative correlation between year in which respondents started watching and their age (Pearson r = -.228). This means that respondents who started watching in the earlier years are older.

Age/Overall do you prefer the content of danisnotonfire or AmazingPhil?

Prefer Phil's content n = 2268; M = 17.47; SD = 4.4

Prefer Dan's content n = 5475; M = 17.06; SD = 3.66

No specific preference n = 16722; M = 16.28; SD = 3.40

Significant (p < 0.001): These three groups differ significantly in age, in all combinations. (People who have no specific preference are significantly younger than those who prefer Dan's or Phil's content, and people who prefer Phil's content are significantly older than those who don't have a specific preference or prefer Dan's content.)

Age/Who do you relate to the most?

Dan n = 12603; M = 16.62; SD = 3.42

Phil n = 2189; M = 17.27; SD = 4.55

Significant (p < 0.001): People who find Phil more relatable are significantly older than people who find Dan more relatable.

Age/Who are you most attracted to?

Dan n = 5324; M = 16.77; SD = 3.56

Phil n = 1255; M = 17.00; SD = 4.512

Significant (p < 0.001): People who are most attracted to Phil are significantly older than people who are more attracted to Dan.

Age/Who would you rather be friends with?

Dan n = 1707; M = 17.12; SD = 3.75

Phil n = 2596; M = 17.35; SD = 4.42

Not significant.

Age/Do you ship Phan?

(Simplified by only taking clear "Yes" and "No" answers)

Does not ship Phan n = 1332; M = 17.89; SD = 4.42

Ships Phan n = 13097; M = 16.29; SD = 3.55

Significant (p < 0.001): People who ship Phan are significantly younger than those who do not ship Phan.

Age/Which of the following do you think is most likely to describe Dan and Phil’s relationship in real life?

(Relationship question was recoded to reflect romantic background vs. platonic background. Romantic background = long term romantic relationship; they recently started a romantic relationship; they were romantic/sexual in the past, broke up and are now back together; they were romantic/sexual in the past, but now are just friends; they were romantic/sexual in the past, but now I don’t know. Platonic background = purely good friends; purely platonic best buds who mean the world to each other.)

Romantic background n = 12358 (50.3 %); M = 16.70; SD = 3.79

Platonic background n = 7781 (31.7 %); M = 16.29; SD = 3.27

Significant (p < 0.001): People who believe that Dan and Phil have or have had a romantic background are significantly older than those who believe in a platonic background.

Age/Do you consider yourself to be part of the phandom?

Yes n = 11811; M = 15.74; SD = 3.1

Partially n = 3615; M = 17.55; SD = 3.98

No n = 601; M = 18.46; SD = 4.27)

Significant (p < 0.001): These three groups differ significantly in age, in all combinations. People who consider themselves part of the phandom are younger than the other two groups, and people who do not consider themselves as a part of the phandom are older than the other two groups.

Age/Is the Phandom the only fandom you belong to?

(Filtered out respondents who replied that they are not in the phandom.)

Phandom as only fandom: n = 2477; M = 17.73; SD = 4.82

More fandoms than Phandom: n = 20127; M = 16.27; SD = 3.29

Significant (p < 0.001): Respondents who are in multiple fandoms are significantly younger.

Age/Whether or not you consider yourself part of a website’s phandom, where do you choose to see or discuss Dan and Phil related content?

Significance levels not included due to high number of possible comparisons.

Age/Do you read phanfiction?

Reads Phanfic n = 18090; M = 16.28; SD = 3.43

Does not read Phanfic n = 6423; M = 17.36; SD = 3.433

Significant (p < 0.001): People who read phanfiction are significantly younger than those who do not.

Age/Do you send premium messages?

- Phil:

Sends Premium msgs n = 1140; M = 15.94; SD = 3.63

Does not send n = 18408; M = 16.48; SD = 3.63

- Dan:

Sends Premium msgs n = 1180; M = 15.86; SD = 3.65

Does not send n = 18589; M = 16.49; SD = 3.48

Both significant (p < 0.001): People who send Premium msgs in Phil's and Dan's chat are significantly younger than those who do not send Premiums.

Age/Would you approach Dan and Phil if you saw them randomly?

(Answer options "Yes, I met Dan/Phil/both/individually" and "No, but I would approach if I saw them" were counted as "would approach them")

Would approach/has approached: n = 20205; M = 16.19; SD = 3.18

Wouldn't approached/hasn't appoached: n = 4308; M = 18.34; SD = 4.74

Significant (p < 0.001): Respondents who wouldn't/haven't approached them are significantly older than those who would or have approached them.

Age/Did you attend TATINOF?

Attended TATINOF: n = 6191; M = 16.62; SD = 3.54

Did not attend TATINOF: n = 18321; M = 16.55; SD = 3,62

Not significant

Age/Have you ever purchased something from the Dan and Phil Shop?

Has purchased n = 9285; M = 16.47; SD = 3.80

Has not purchased n = 13222; M = 16.78; SD = 3.55

Significant (p < 0.001): People who have purchased from the DAP Shop are significantly younger.

255 notes

·

View notes

Text

Databricks Certified Professional Data Scientist Exam Questions

If you are looking to clear the Databricks Certified Professional Data Scientist exam on your first go, then you should study PassQuestion high-quality Databricks Certified Professional Data Scientist Exam Questions that will help you succeed in the exam. With the help of the Databricks Certified Professional Data Scientist Exam Questions provided by PassQuestion, you will be able to get complete technical assistance and guidelines for the preparation of Databricks Certified Professional Data Scientist exam so you can pass your exam on the first attempt.

Databricks Certified Professional Data Scientist Exam Description

The Databricks Certified Professional Data Scientist certification exam assesses the understanding of the basics of machine learning and the steps in the machine learning lifecycle, including data preparation, feature engineering, the training of models, model selection, interpreting models, and the production of models. The exam also assesses the understanding of basic machine learning algorithms and techniques, including linear regression, logistic regression, regularization, decision trees, tree-based ensembles, basic clustering algorithms, and matrix factorization techniques. The basics of model management with MLflow, like logging and model organization, are also assessed.

Prerequisites

The minimally qualified candidate should have:

a complete understanding of the basics of machine learning, including:

bias-variance tradeoff

in-sample vs. out-of sample data

categories of machine learning

applied statistics concepts

a intermediate understanding of the steps in the machine learning lifecycle, including:

data preparation

feature engineering

model training, selection, and production

interpreting models

a complete understanding of basic machine learning algorithms and techniques, including:

linear, logistic, and regularized regression

tree-based models like decision trees, random forest and gradient boosted trees

unsupervised techniniques like K-means and PCA

specific algorithms like ALS for recommendation and isolation forests for outlier detection

a complete understanding of the basics of machine learning model management like logging and model organization with MLflowExam Details

The exam consists of 60 multiple-choice questions. Candidates will have 120 minutes to complete the exam. The minimum passing score for the exam is 70 percent. This translates to correctly answering a minimum of 42 of the 60 questions. The exam will be conducted via an online proctor. This exam has no code-based questions, and there will be no test aids available while taking the exam.

View Online Databricks Certified Professional Data Scientist Free Questions

You are asked to create a model to predict the total number of monthly subscribers for a specific magazine. You are provided with 1 year's worth of subscription and payment data, user demographic data, and 10 years worth of content of the magazine (articles and pictures). Which algorithm is the most appropriate for building a predictive model for subscribers? A.Linear regression B.Logistic regression C.Decision trees D.TF-IDF Answer : A

You are working in a data analytics company as a data scientist, you have been given a set of various types of Pizzas available across various premium food centers in a country. This data is given as numeric values like Calorie. Size, and Sale per day etc. You need to group all the pizzas with the similar properties, which of the following technique you would be using for that? A.Association Rules B.Naive Bayes Classifier C.K-means Clustering D.Linear Regression E.Grouping Answer : C

Which of the below best describe the Principal component analysis A.Dimensionality reduction B.Collaborative filtering C.Classification D.Regression E.Clustering Answer : A

You have collected the 100's of parameters about the 1000's of websites e.g. daily hits, average time on the websites, number of unique visitors, number of returning visitors etc. Now you have find the most important parameters which can best describe a website, so which of the following technique you will use A.PCA (Principal component analysis) B.Linear Regression C.Logistic Regression D.Clustering Answer : A

Refer to the exhibit.

You are building a decision tree. In this exhibit, four variables are listed with their respective values of info-gain. Based on this information, on which attribute would you expect the next split to be in the decision tree? A.Credit Score B.Age C.Income D.Gender Answer : A

0 notes

Text

Hands on Machine Learning

Chapter 1-2

- batch vs online learning

- instance vs model learning

- hyperparameter grid search

Chapter 3

- 1-precision (x) vs recall (y) is the ROC curve

- true positive rate = recall = sensitivity and true negative rate is = precision = specificity

- harmonic mean to balance precision and recall averages

Chapter 4

- training data with 3 different types of stochastic gradient descent: batch, mini batch, stochastic (with single sample row)

- cross entropy error is minimized for logistic regression

- softmax for multi class predictions. multi-label vs multi-class predictions where labels are mutually exclusive. Softmax is used when mutually exclusive labels.

- softmax helps the gradient not die, while argmax will make it disappear

Chapter 5 SVM

- svm regression is opposite of few points in the street but actually more

- hard vs soft margin classification (like output is a probability vs 1 or 0?)

- kernel trick makes non-linear classification less computationally complex

- dual problem is a problem with a similar or in this case the same mathematical solution as the primal problem of maximizing the distance between the boundaries

- things to better understand: kernel SVM thru Mercer’s condition, how hinge loss applies to SVM solved with gradient descent

Chapter 6

- trees are prone to overfit and regressions are sensitive to the orientation of the data (can be fixed with PCA)

Chapter 7

- ensemble through bagging or pasting: one with replacement and the other without, leading to OOB error

- extra randomized trees when splits on nodes for the tree is done on a random threshold. It’s called random trees bc of using only a subset of features and data points for each tree

- Adaboost (weighting wrong predictions more) vs. gradient boost (adding predictions on all the error residuals)

- stacking is a separate model used to aggregate multiple models instead of a hard vote

Chapter 9 unsupervised

- Silhouette score, balance intra and inter cluster scores, but can do for each cluster to get you a balance within the clusters

- DBSCAN density clustering, sihoulette score to find the optimal epsilon, working well for dense clusters. Don’t need to specify number of clusters

- Gaussian Mixture Model, also density clustering working well for ellipsoid clusters. Do need to specify cluster number, and covariance type of the types of shapes, which would mess it up. It also helps with anomaly detection because of p values. This can’t use silhouette score bc they’re not spherical shapes because of biases of distances.

- Bayesian GMM, similar to lasso for GMM, to set cluster count for you with priors

- Latent class, which is the cluster label of a latent variable

Chapter 13 CNN computer vision

- CNN uses a square to go over pixels in a square, some with zero padding; this is called “convolving”

- the layers are actual horizontal and vertical filters, that the model uses to multiple against inputted image

- these filters can be trained to eventually become pattern detectors. Patterns could be dog faces or even edges

- a pooling layer doesn’t detect patterns but simply averages things together, simplifying complex images

- QUESTION: how does the pattern eventually detect if yes or no for training if something is a dog for instance?

Chapter 8 Dimensionality Reduction

- PCA: projection onto a hyperplane in a dimension, max with the same number of features. The number of top dimensions you pick is your hyper parameter, with the max being the dimensions you are in. The next line is orthogonal for projection

- Kernel PCA: vector is curved or circular, not just 1 straight line. The additional hyper parameter is the shape of the curved lines used. It’s a mathematical transformation used to make different data points linearly separable in a higher dimension (making lines in a lower dimension look curved) without actually having to go to the higher dimension.

- you can decompress by multiplying by the inverse transformation. Then you see how off you are from the actual image, i.e reconstruction error

- another measurement is explained variance ratio for each dimension n, also chosen with an elbow plot

- manifold learning is twisting, unfolding, etc from a 2D space to 3D space

Chapter 14

- RNN predict time series and NLP

- it is a loop with time, each previous layer feeding into the next

- can be shorted with probabilistic dropout and feeding older t-20 to t-1 outputs, to prevent vanishing gradient

- LTSM cell allows you to recognize what’s an important input vs an unimportant one to forget

- encoder vs decoder for machine translation NLP occurs such that encoders are fed in a series as one output to a series of decoders, each with its own output. https://youtu.be/jCrgzJlxTKg

youtube

Chapter 15 autoencoders

a neural function that encodes and decodes, predicting itself (technically unsupervised but is a supervised training neural network with fewer outputs in the middle ie the encoder which simplifies and then the same number of outputs as inputs in the final layer.

GANS used autoencoders to build additional data, and autoencoders are dimensionality reducers.

Questions: how is it reducing dimensionality if the same number of outputs as inputs exist?

It’s helpful for detecting anomalies or even predicting if something is a different class. If the error bar of the output and input is super large, it is likely an anomaly or different class.

https://youtu.be/H1AllrJ-_30

https://youtu.be/yz6dNf7X7SA

Reinforcement learning

Q-learning is a value derived to punish or reward behaviors at each step in reinforcement learning

Reinforcement learning requires doing a lot of steps and getting just 1 success criteria at the end

It can be trained with stochastic gradient descent, boosting the actions with gradient descent that yielded more positive end Q score results

youtube

youtube

QUESTIONS

- does waiting longer days increase power? Or does it increase only in so far that sample size increases with more days of new users exposed? More days of data even with the same sample size will decrease std.

1 note

·

View note

Text

Learn Statistics and Data Analysis Intuitively

Welcome to Statistical Aid!

Statistical Aid is a site that provides statistical content, data analysis content, and also discusses the various fields of statistics. You can learn statistics and data analysis intuitively by Statistical Aid. All the contents on this site are written to provide help to the students who are very weak in statistics and data analysis. From basic to advanced, you can get all the topics of statistics presented on this site very simply. You can get help from the following topics:

Basic Statistics

Definition and scope of statistics

Statistical Data

Population vs Sample

Random Variable

Central tendency

Arithmetic, Geometric and harmonic mean

Measures of Dispersion

Variance and Standard Deviation

Skewness and Kurtosis

Correlation analysis

Intra vs Inter class correlation

Regression Analysis

Data levels (Nominal, ordinal, Interval and Ratio)

Hypothesis Testing

Probability Distributions in Statistics

Bernoulli Distribution

Binomial Distribution

Negative Binomial distribution

Poission Distribution

Exponential Distribution

Normal distribution

Gamma Distribution

Geometric Distribution

Hypergeometric Distribution

Uniform Distribution

Power Series Distribution

Logarithmic Series Distribution

Sampling Distributions in Statistics

Probability Sampling

Simple Random Sampling

Stratified Sampling

Systematic Sampling

Multi-Stage Sampling

Cluster Sampling

Quadrat Sampling

Non Probability Sampling

Purposive sampling

Snowball sampling

Convenience sampling

Data analysis Content and Tutorials

SPSS

R Tutoriala

SPSS Tutorials

There are also some other topics as following:

Non Parametric Tests

Time Series Analysis

Statistical Inference

Experimental Design

Data Science

Data Analytics tools free Download

SPSS 25 free Download

Stata 14.2 free Download

0 notes

Text

data science functions

Credits are earned by completing courses the place each course is usually 7.5 credits. The programme consists of obligatory courses, obligatory elective courses and elective courses. Through a combination of principle and practice within the courses of the program, you'll gain an understanding of how and why certain fashions and algorithms work and will be in a position to determine their potentialities and limitations. You will be able to approach a real-world downside in a selected drawback area, combining current and new methods to create an efficient resolution. You will have the flexibility to constantly be taught in these rapidly evolving fields, talk with experts and non-experts in particular downside domains and to responsibly apply these technologies. You will also acquire the insights to have the ability to understand and influence the roles of Data Science and AI in society.

To interface Bluetooth with Arduino/Raspberry Pi and write a program to turn LED ON/OFF when ‘1’/’0’ is acquired from smartphone using Bluetooth. To interface LED/Buzzer with Arduino/Raspberry Pi and write a program to turn ON LED for 1 sec after each 2 seconds. Explore the potential areas using embedded controllers/processors in real time techniques.

In fact, you most likely use synthetic intelligence every single day, no matter the place you work or what you do. Ask 10 totally different specialists what synthetic intelligence is, and you may get 10 completely different solutions. A good definition comes from Demis Hassabis, CEO of DeepMind, an AI firm that Google purchased. To be precise, Data Science covers AI, which includes machine learning. However, machine studying itself covers another sub-technology — Deep Learning.

Data science is not just related to the statistical aspect of the method, nevertheless it feeds the method and derives advantages from it through knowledge engineering. Data engineers and data scientists have a huge position to play in propelling AI ahead. Data science is a broad field of examine pertaining to knowledge methods and processes, aimed at maintaining data sets and deriving meaning out of them.

At Marketing AI Institute, we have spent years researching and making use of AI. Since 2016, we have published more than four hundred articles on the topic. And we've revealed stories on 50+ AI-powered distributors with greater than $1 billion in whole funding. We're additionally monitoring 1,500+ gross sales and marketing AI corporations with mixed funding north of $6.2 billion.

The core position of a Machine Learning Engineer could be to create programs that allow a machine to take specific actions with none specific programming. Their primary responsibilities consist of knowledge sets for analysis, personalising net experiences, and identifying enterprise requirements. Salaries of a Machine Learning Engineer vs Data Scientist can vary based on skills, experience and corporations hiring. Simply put, machine learning is the hyperlink that connects Data Science and AI.

Both machine studying and its subtype, deep learning, incorporate the method of learning from the information over time. While it isn't the only thing connecting the 2, deep learning is a kind of machine learning that works finest to strengthen the process of AI and knowledge science. Deep learning may be outlined as a machine studying approach that endeavors to teach computer methods things that come naturally to humans.

For instance, an AI device exists that writes e mail subject traces for you using natural language generation and pure language processing . The tool's AI model makes use of coaching from humans (samples of an organization's marketing copy) to study and enhance. But then the software drafts its personal email subject lines.

Data scientists use a mix of instruments, functions, rules and algorithms to make sense of random information clusters. Since almost all types of organizations today are producing exponential quantities of data around the world, it turns into tough to watch and store this knowledge. Data science focuses on information modelling and information warehousing to track the ever-growing information set. The data extracted through data science functions are used to guide enterprise processes and attain organisational targets.

Explore more on - Data Science Institutes in Hyderabad

360DigiTMG - Data Analytics, Data Science Course Training Hyderabad

Address:-2-56/2/19, 3rd floor, Vijaya towers, near Meridian school, Ayyappa Society Rd, Madhapur, Hyderabad, Telangana 500081

Contact us ( 099899 94319 )

https://360digitmg.com/data-science-course-training-in-hyderabad

Hours: Sunday - Saturday 7 AM - 11 PM

#best data science institute in hyderabad#data science institutes in hyderabad#data science course in hyderabad with placements

0 notes

Text

Supervised vs unsupervised machine learning

Supervised and unsupervised learning are the two different types of tasks in machine learning. In short supervised learning is done when we have prior knowledge of what the output values for our samples should be, and therefore, the goal of supervised learning is to learn a function that best approximates the relationship between input and output observable in the data. Unsupervised learning, on the other hand, does not have labeled outputs, so its goal is to infer the natural structure present within a set of data points.

Supervised Learning

Supervised learning is typically done in the context of classification, when we want to map input to output labels, or regression, when we want to map input to a continuous output. Common algorithms in supervised learning include logistic regression, naive bayes, support vector machines, artificial neural networks, and random forests. In both regression and classification, the goal is to find specific relationships or structure in the input data that allow us to effectively produce correct output data.

When conducting supervised learning, the main considerations are model complexity, and the bias-variance tradeoff. Note that both of these are interrelated.

Model complexity refers to the complexity of the function you are attempting to learn - similar to the degree of a polynomial. The proper level of model complexity is generally determined by the nature of your training data. If you have a small amount of data, or if your data is not uniformly spread throughout different possible scenarios, you should opt for a low-complexity model. This is because a high-complexity model will overfit if used on a small number of data points.

The bias-variance tradeoff also relates to model generalisation. In any model, there is a balance between bias, which is the constant error term, and variance, which is the amount by which the error may vary between different training sets. So, high bias and low variance would be a model that is consistently wrong 20% of the time, whereas a low bias and high variance model would be a model that can be wrong anywhere from 5%-50% of the time, depending on the data used to train it.

Unsupervised Learning

The most common tasks within unsupervised learning are clustering, representation learning, and density estimation. In all of these cases, we wish to learn the inherent structure of our data without using explicitly-provided labels. Some common algorithms include k-means clustering, principal component analysis, and auto-encoders. Since no labels are provided, there is no specific way to compare model performance in most unsupervised learning methods.

Two common use-cases for unsupervised learning are exploratory analysis and dimensionality reduction.

Unsupervised learning is very useful in exploratory analysis because it can automatically identify structure in data. For example, if an analyst were trying to segment consumers, unsupervised clustering methods would be a great starting point for their analysis. In situations where it is either impossible or impractical for a human to propose trends in the data, unsupervised learning can provide initial insights that can then be used to test individual hypotheses.

Dimensionality reduction, which refers to the methods used to represent data using less columns or features, can be accomplished through unsupervised methods. In representation learning, we wish to learn relationships between individual features, allowing us to represent our data using the latent features that interrelate our initial features. This sparse latent structure is often represented using far fewer features than we started with, so it can make further data processing much less intensive, and can eliminate redundant features.

So in summary:

Supervised: All data is labeled and the algorithms learn to predict the output from the input data.

Unsupervised: All data is unlabelled and the algorithms learn to inherent structure from the input data.

0 notes

Text

How Best to Measure Patient Persistence with Medications? Self Report or Pharmacy Fill?

MedicalResearch.com Interview with:

Dr. Fanaroll Alexander C. Fanaroff, MD, MHS Assistant Professor of Medicine, Division of Cardiovascular Medicine University of Pennsylvania MedicalResearch.com: What is the background for this study? Response: This is a secondary analysis of the ARTEMIS, a cluster randomized trial of copayment assistance for P2Y12 inhibitors in patients that had myocardial infarction. One of the primary endpoints of ARTEMIS was persistence with P2Y12 inhibitors: Did the patient continue to take a P2Y12 inhibitor over the entire 1 year following MI? In ARTEMIS, we captured persistence data in two ways, patient report and pharmacy fill records. What we did in this study was to look at the agreement between persistence as measured by these two methods. MedicalResearch.com: What are the main findings? Response: Overall, 15% of patients self-reported non-persistence, but 48% of patients were non-persistent by pharmacy fill data. When we looked at agreement between the methods, the two methods quite frequently did not agree: 50% of patients were persistent by both methods, 13.5% were non-persistent by both methods, 34.8% reported that they were persistent but were non-persistent by pharmacy fill, and 1.8% reported that they were non-persistent but were actually persistent by pharmacy fill records. Both methods are subject to bias: Self-report is subject to social desirability bias (where patients misrepresent medication taking behavior to please investigators) and recall bias (where patients don't remember their medications). Pharmacy fill data is subject to missing data if a pharmacy is not included in the database, or other reasons. Because of these biases, we next tried to understand which method of measuring persistence -- pharmacy fill records or self-report -- was "correct." To do this, we used two other methods of measuring persistence that were captured in ARTEMIS: copayment assistance vouchers and P2Y12 inhibitor serum drug levels. We had serum drug levels for a random sample of patients in ARTEMIS, and we found limited agreement beyond chance between serum drug levels and persistence as measured by pharmacy fill and patient report, which did not help sort out which method was more "correct." In the intervention arm, patients used vouchers to have copayments for P2Y12 inhibitors waived, and we were able to track when vouchers were used. Using the voucher data, 20% of patients that self-reported persistence but were characterized by pharmacy fill data as non-persistent actually were persistent, suggesting that pharmacy fill data underestimates persistence by roughly that amount. Lastly, we looked at clinical outcomes by persistence category, and found that patients persistent by both methods had the best outcomes, patients non-persistent by both methods had the worst outcomes, and patients with discordant persistence had outcomes intermediate between concordantly persistent and non-persistent patients. MedicalResearch.com: What should readers take away from your report? Response: Electronic health records increasingly have a mechanism for enabling physicians to check pharmacy fill records for patients to track persistence with medications. Our results show that this data is important, as it identifies more patients than self-report, but that it needs to be interpreted somewhat cautiously: Many patients non-persistent by pharmacy fill are actually persistent. The best way to understand persistence is to incorporate both patient self-report and pharmacy fill data, recognizing that patients non-persistent by both methods will have the worst outcomes. MedicalResearch.com: What recommendations do you have for future research as a result of this work? Response: Currently, health systems employ a number of interventions to increase medication persistence. Future research should look at the best way to target these interventions to patients that are non-persistent with medications, including methods that use both patient report and pharmacy fill data. No disclosures relevant to this research Citation: Fanaroff AC, Peterson ED, Kaltenbach LA, et al. Agreement and Accuracy of Medication Persistence Identified by Patient Self-report vs Pharmacy Fill: A Secondary Analysis of the Cluster Randomized ARTEMIS Trial. JAMA Cardiol. Published online March 04, 2020. doi:10.1001/jamacardio.2020.0125 Last Modified: The information on MedicalResearch.com is provided for educational purposes only, and is in no way intended to diagnose, cure, or treat any medical or other condition. Always seek the advice of your physician or other qualified health and ask your doctor any questions you may have regarding a medical condition. In addition to all other limitations and disclaimers in this agreement, service provider and its third party providers disclaim any liability or loss in connection with the content provided on this website. Read the full article

#EHRs#electronicmedicalrecords#healthcare#jamacardio#medicalresearch#medicationadherence#PennLDI#PennMed#pharmacy

0 notes

Text

300+ TOP Deep Learning Interview Questions and Answers

Deep Learning Interview Questions for freshers experienced :-

1. What is Deep Learning? Deep learning is one part of a broader group of machine learning techniques based on learning data analytics designs, as exposed through task-specific algorithms. Deep Learning can be supervised us a semi-supervised or unsupervised. 2. Which data visualization libraries do you use and why they are useful? It is valuable to determine your views value on the data value properly visualization and your individual preferences when one comes to tools. Popular methods add R’s ggplot, Python’s seaborn including matplotlib value, and media such as Plot.ly and Tableau. 3. Where do you regularly source data-sets? This type of questions remains any real tie-breakers. If someone exists going into an interview, he/she need to remember this drill of any related question. That completely explains your interest in Machine Learning. 4. What is the cost function? A cost function is a strength of the efficiency of the neural network data-set value with respect to given sample value and expected output data-set. It is a single value of data-set-function, non-vector as it gives the appearance of the neural network as a whole. MSE=1nΣi=0n(Y^i–Yi)^2 5. What are the benefits of mini-batch gradient descent? This is more efficient of compared tools to stochastic gradient reduction. The generalization data value by determining the flat minima. The Mini-batches provides help to approximate the gradient of this entire data-set advantage which helps us to neglect local minima. 6. What is mean by gradient descent? Gradient descent defined as an essential optimization algorithm value point, which is managed to get the value of parameters that reduces the cost function. It is an iterative algorithm data value function which is moves towards the direction of steepest data value function relationship as described by the form of the gradient. Θ: =Θ–αd∂ΘJ(Θ) 7. What is meant by a backpropagation? It ‘s Forward to the propagation of data-set value function in order to display the output data value function. Then using objective value also output value error derivative package is computed including respect to output activation. Then we after propagate to computing derivative of the error with regard to output activation value function and the previous and continue data value function this for all the hidden layers. Using previously calculated the data-set value and its derivatives the for output including any hidden stories we estimate error derivatives including respect to weights. 8. What is means by convex hull? The convex hull is represents to the outer boundaries of the two-level group of the data point. Once is the convex hull has to been created the data-set value, we get maximum data-set value level of margin hyperplane (MMH), which attempts to create data set value the greatest departure between two groups data set value, as a vertical bisector between two convex hulls data set value. 9. Do you have experience including Spark about big data tools for machine learning? The Spark and big data mean most favorite demand now, able to the handle high-level data-sets value and including speed. Be true if you don’t should experience including those tools needed, but more take a look into assignment descriptions also understand methods pop. 10. How will do handle the missing data? One can find out the missing data and then a data-set value either drop thorugh those rows value or columns value or decide value to restore them with another value. In python library using towards the Pandas, there are two thinging useful functions helpful, IsNull() and drop() the value function.

Deep Learning Interview Questions 11. What is means by auto-encoder? An Auto-encoder does an autonomous Machine learning algorithm data that uses backpropagation system, where that target large values are data-set to be similar to the inputs provided data-set value. Internally, it converts a deep layer that describes a code used to represent specific input. 12. Explain about from Machine Learning in industry. Robots are replacing individuals in various areas. It is because robots are added so that all can perform this task based on the data-set value function they find from sensors. They see from this data also behaves intelligently. 13. What are the difference Algorithm techniques in Machine Learning? Reinforcement Learning Supervised Learning Unsupervised Learning Semi-supervised Learning Transduction Learning to Learn 14. Difference between supervised and unsupervised machine learning? Supervised learning is a method anywhere that requires instruction defined data While Unsupervised learning it doesn’t need data labeling. 15. What is the advantage of Naive Bayes? The classifier preference converge active than discriminative types It cannot learn that exchanges between characteristics 16. What are the function using Supervised Learning? Classifications Speech recognition Regression Predict time series Annotate strings 17. What are the functions using Unsupervised Learning? To Find that the data of the cluster of the data To Find the low-dimensional representations value of the data To Find determine interesting with directions in data To Find the Magnetic coordinates including correlations To Find novel observations 18. How do you understanding Machine Learning Concepts? Machine learning is the use of artificial intelligence that provides operations that ability to automatically detect further improve from occurrence without doing explicitly entered. Machine learning centers on the evolution of network programs that can access data and utilize it to learn for themselves. 19. What are the roles of activation function? The activation function means related to data enter non-linearity within the neural network helping it to learn more system function. Without which that neural network data value would be simply able to get a linear function which is a direct organization of its input data. 20. Definition of Boltzmann Machine? Boltzmann Machine is used to optimize the resolution of a problem. The work of the Boltzmann machine is essential to optimize data-set value that weights and the quantity for data Value. It uses a recurrent structure data value. If we apply affected annealing on discrete Hopfield network, when it would display Boltzmann Machine. Get Deep Learning 100% Practical Training 21. What is Overfitting in Machine Learning? Overfitting in Machine Learning is described as during a statistical data model represents random value error or noise preferably of any underlying relationship or when a pattern is extremely complex. 22. How can you avoid overfitting? Lots of data Cross-validation 23. What are the conditions when Overfitting happens? One of the important design and chance of overfitting is because the models used as training that model is the same as that criterion used to assess the efficacy of a model. 24. What are the advantages of decision trees? The Decision trees are easy to interpret Nonparametric There are comparatively few parameters to tune 25. What are the three stages to build the hypotheses or model in machine learning? Model building Model testing Applying the model 26. What are parametric models and Non-Parametric models? Parametric models remain these with a limited number from parameters also to predict new data, you only need to understand that parameters from the model. Non Parametric designs are those with an unlimited number from parameters, allowing to and flexibility and to predict new data, you want to understand the parameters of this model also the state from the data that has been observed. 27. What are some different cases uses of machine learning algorithms can be used? Fraud Detection Face detection Natural language processing Market Segmentation Text Categorization Bioinformatics 28. What are the popular algorithms for Machine Learning? Decision Trees Probabilistic networks Nearest Neighbor Support vector machines Neural Networks 29. Define univariate multivariate and bivariate analysis? if an analysis involves only one variable it is called as a univariate analysis for eg: Pie chart, Histogram etc. If a analysis involves 2 variables it is called as bivariate analysis for example to see how age vs population is varying we can plot a scatter plot. A multivariate analysis involves more than two variables, for example in regression analysis we see the effect of variables on the response variable 30. How does missing value imputation lead to selection bias? Case treatment- Deleting the entire row for one missing value in a specific column, Implutaion by mean: distribution might get biased for instance std dev, regression, correlation. 31. What is bootstrap sampling? create resampled data from empirical data known as bootstrap replicates. 32. What is permutation sampling? Also known as randomization tests, the process of testing a statistic based on reshuffling the data labels to see the difference between two samples. 33. What is total sum of squares? summation of squares of difference of individual points from the population mean. 34. What is sum of squares within? summation of squares of difference of individual points from the group mean. 35. What is sum of squares between? summation of squares of difference of individual group means from the population mean for each data point. 36. What is p value? p value is the worst case probability of a statistic under the assumption of null hypothesis being true. 37. What is R^2 value? It’s measures the goodness of fit for a linear regression model. 38. What does it mean to have a high R^2 value? the statistic measures variance percentage in dependent variable that can be explained by the independent variables together. 40. What are residuals in a regression model? Residuals in a regression model is the difference between the actual observation and its distance from the predicted value from a regression model. 41. What are fitted values, calculate fitted value for Y=7X+8, when X =5? Response of the model when predictors values are used in the model, Ans=42. 42. What pattern should residual vs fitted plots show in a regression analysis? No pattern, if the plot shows a pattern regression coefficients cannot be trusted. 43. What is overfitting and underfitting? overfitting occurs when a model is excessively complex and cannot generalize well, a overfitted model has a poor predictive performance. Underfitting of a model occurs when the model is not able to capture any trends from the data. 44. Define precision and recall? Recall = True Positives/(True Positives + False Negatives), Precision = True Positives/(True Positives + False Positive). 45. What is type 1 and type 2 errors? False positives are termed as Type 1 error, False negative are termed as Type 2 error. 46. What is ensemble learning? The art of combining multiple learning algorithms and achieve a model with a higher predictive power, for example bagging, boosting. 47. What is the difference between supervised and unsupervised machine learning algorithms? In supervised learning we use the dataset which is labelled and try and learn from that data, unsupervised modeling involves data which is not labelled. 48. What is named entity recognition? It is identifying, understanding textual data to answer certain question like “who, when,where,What etc.” 49. What is tf-idf? It is the measure if a weight of a term in text data used majorly in text mining. It signifies how important a word is to a document. tf -> term frequency – (Count of text appearing in the data) idf -> inverse document frequency tfidf -> tf * idf 50. What is the difference between regression and deep neural networks, is regression better than neural networks? In some applications neural networks would fit better than regression it usually happens when there are non linearity involved, on the contrary a linear regression model would have less parameters to estimate than a neural network for the same set of input variables. thus for optimization neural network would need a more data in order to get better generalization and nonlinear association. 51. How are node values calculated in a feed forward neural network? The weights are multiplied with node/input values and are summed up to generate the next successive node 52. Name two activation functions used in deep neural networks? Sigmoid, softmax, relu, leaky relu, tanh. 53. What is the use of activation functions in neural networks? Activation functions are used to explain the non linearity present in the data. 54. How are the weights calculated which determine interactions in neural networks? The training model sets weights to optimize predictive accuracy. 55. which layer in a deep learning model would capture a more complex or higher order interaction? The last layer. 56. What is gradient descent? It comprises of minimizing a loss function to find the optimal weights for a neural network. 57. Imagine a loss function vs weights plot depicting a gradient descent. At What point of the curve would we achieve optimal weights? local minima. 58. How does slope of tangent to the curve of loss function vs weigts help us in getting optimal weights for a neural network Slope of a curve at any point will give us the direction component which would help us decide which direction we would want to go i.e What weights to consider to achieve a less magnitude for loss function. 59. What is learning rate in gradient descent? A value depicting how slowly we should move towards achieving optimal weights, weights are changedby the subtracting the value obtained from the product of learning rate and slope. 60. If in backward propagation you have gone through 9 iterations of calculating slopes and updated the weights simultaneously, how many times you must have done forward propagation? 9 61. How does ReLU activation function works? Define its value for -5 and +7 For all x>=0, the output is x, for all x Read the full article

0 notes

Text

Outlier Detection

What is an outlier?

An object that deviates significantly from the rest of the objects.

But outliers do not need to be extreme values.

e.g.) The point B on the first graph & the point on the second graph

Causes

Measurement or execution error.

Problems

Data outliers can spoil and mislead the training process resulting in poorer results.

Outliers can cause problems with certain types of models. For example, linear regression models are less robust to outliers than decision tree models.

How to handle

In general, if you have a legitimate reason to remove an outlier, it will help your model’s performance.