#Code coverage is a software testing


Text

I knew moving from a FAANG to a midsized company outside of the software field would be an adjustment. But I was not prepared for the sheer number of things that are just baffling design decisions.

The stuff that everyone recognizes needs fixing, but nobody has had time to knock out, is understandable. What perplexes me most is when people respond to the absolute wildest design choices with "and what about it?"

#codeblr#progblr#Code smells#The latest thing is releasing a library that cannot be manually tested outside of prod#I didnt even realize that was possible#If we were using a lisp then itd be a bit more forgivable#It also currently has no unit tests but at least they see the value of that#Theyre saying its a common industry practice#Is it?#Also is it a common industry practice amongst companies that have robust software?#When they hired me they told me they did TDD#That word I do not think it means what you think it means#I went in expecting to be hindered by a rigid push to have 100% code coverage but this is actually worse


Text

Test Case Generation: Accelerate Your API Testing Workflow

In modern development workflows, automated testing is essential for fast releases and robust APIs. But writing test cases manually can be time-consuming, error-prone, and difficult to maintain. That's where test case generation comes in: it automatically produces tests that save time and boost coverage.

In this article, we’ll explore test case generation, why it’s important, how it works, and the tools that can help you implement it effectively.

What Is Test Case Generation?

Test case generation is the process of automatically creating test cases for software applications based on various inputs like:

API specifications

Recorded traffic

Code analysis

User behavior patterns

Instead of writing test cases manually, developers can use automation tools to generate them, especially for repetitive and data-driven tests.

Why Is Test Case Generation Important?

Saves Time: No more hand-writing hundreds of test cases.

Increases Coverage: Uncovers edge cases you may miss manually.

Reduces Human Error: Automation ensures consistency and reliability.

Faster Feedback Loops: CI/CD pipelines benefit from ready-to-use tests.

Improves API Quality: Automatically tests against real-world scenarios.

Manual vs Automated Test Case Generation

Feature | Manual Test Cases | Automated Test Case Generation
Time-consuming | Yes | No
Prone to human error | Yes | Minimal
Scalability | Limited | Highly scalable
Maintenance | Needs frequent updates | Easier with auto-update features
Coverage | Often partial | Higher and more systematic

Types of Test Case Generation Techniques

1. Model-Based Testing

Generates tests based on UML diagrams, finite state machines, or workflows.

2. Specification-Based Testing

Uses API specs like Swagger/OpenAPI to auto-generate test scenarios.

3. Record and Replay Testing

Captures real API traffic and reuses it for test generation. Ideal for integration and regression testing.

4. Code-Based Testing

Generates tests by analyzing code structure, function paths, and logic branches.

5. AI-Powered Generation

Uses machine learning to analyze past user behavior and generate smart, context-aware tests.
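Model-based generation (technique 1) can be sketched briefly. First, describe the workflow as a finite state machine. Then derive one test per transition: drive the system to the transition's source state along a shortest path and fire the transition. The login model and its state and action names below are hypothetical. The sketch targets transition coverage only, not every possible path.

```python
from collections import deque

# Hypothetical workflow model: state -> {action: next_state}
LOGIN_MODEL = {
    "logged_out": {"submit_valid": "logged_in", "submit_invalid": "error"},
    "error": {"retry": "logged_out"},
    "logged_in": {"logout": "logged_out"},
}


def shortest_paths(model, start):
    """Breadth-first search: shortest action sequence from start to each reachable state."""
    paths = {start: []}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for action, nxt in model.get(state, {}).items():
            if nxt not in paths:
                paths[nxt] = paths[state] + [action]
                queue.append(nxt)
    return paths


def transition_tests(model, start):
    """One test per transition: reach its source state, fire it, expect its target."""
    paths = shortest_paths(model, start)
    tests = []
    for state, actions in model.items():
        if state not in paths:
            continue  # unreachable from start, so no test can exercise it
        for action, nxt in actions.items():
            tests.append({"steps": paths[state] + [action], "expected_state": nxt})
    return tests


for test in transition_tests(LOGIN_MODEL, "logged_out"):
    print(test)
```

Each generated test would then be replayed against the real system, checking that it ends in `expected_state`. That execution step is left out here.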

Real-World Example: API Test Case Generation

Let’s say you have an API endpoint /user/register that accepts a POST request with name, email, and password.

With automated test case generation:

Positive test: Valid input returns 200 OK

Negative test: Missing email returns 400 Bad Request

Edge test: Very long password returns 422 Unprocessable Entity (validation error)

A generator can produce all of these variations from the endpoint's specification or recorded traffic, without anyone hand-writing each test.
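Here is a minimal sketch of specification-based generation for this endpoint. It derives the three kinds of cases from a simplified, hypothetical spec. The 128-character password limit and the field values are assumptions for illustration. The expected statuses follow the example above, and actually sending the requests is out of scope.

```python
# Hypothetical, simplified spec for POST /user/register; a real generator
# would read this from an OpenAPI document instead.
REGISTER_SPEC = {
    "path": "/user/register",
    "required": ["name", "email", "password"],
    "max_length": {"password": 128},  # assumed limit, not from any real API
}

VALID_BODY = {"name": "Ada", "email": "ada@example.com", "password": "s3cret-pass"}


def generate_cases(spec, valid_body):
    """Derive positive, missing-field, and over-length cases from the spec."""
    cases = [("valid input", dict(valid_body), 200)]
    for field in spec["required"]:
        # Negative case: drop exactly one required field.
        body = {k: v for k, v in valid_body.items() if k != field}
        cases.append((f"missing {field}", body, 400))
    for field, limit in spec["max_length"].items():
        # Edge case: one character past the declared limit.
        body = dict(valid_body, **{field: "x" * (limit + 1)})
        cases.append((f"{field} too long", body, 422))
    return cases


for name, body, status in generate_cases(REGISTER_SPEC, VALID_BODY):
    print(f"POST {REGISTER_SPEC['path']} [{name}] -> expect {status}")
```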

Tools for Automated Test Case Generation

1. Keploy

Open-source

Generates test cases and mocks by recording real API traffic

Claims more than 90% coverage with no manual scripting

Integrates easily with CI/CD pipelines

2. Postman

Test generation from API schema

Good for basic request validation

Limited logic coverage

3. Swagger/OpenAPI Tools

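Dredd tests an API implementation against its API description (such as an OpenAPI spec), and Swagger Codegen generates client and server code from OpenAPI specs that can serve as a starting point for tests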

4. REST Assured / JUnit Generators

Code-based options for Java APIs: REST Assured is a Java DSL for writing REST API tests, typically run with JUnit

Benefits for API Testing

Faster onboarding for new developers

Regression testing with live traffic

Simplified test maintenance

Continuous testing in DevOps workflows

Best Practices for Effective Test Case Generation

Start with high-traffic endpoints

Use real data from staging or production (with masking)

Review and customize generated tests periodically

Use assertions to validate key business logic
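The masking step from the second practice might look like the following minimal sketch. It pseudonymizes sensitive fields in recorded JSON before the data is reused in tests. The set of field names is an assumption. Hashing keeps repeated values consistent across requests, but short or guessable values such as phone numbers can still be brute-forced, so a keyed hash or random tokens would be safer in practice.

```python
import hashlib

# Field names treated as sensitive; adjust for your own payloads (assumption).
SENSITIVE_KEYS = {"email", "password", "phone", "ssn"}


def mask(value):
    """Replace a value with a stable pseudonym so repeated values still match."""
    digest = hashlib.sha256(str(value).encode("utf-8")).hexdigest()[:12]
    return f"masked-{digest}"


def mask_payload(data):
    """Recursively mask sensitive fields in a JSON-like structure."""
    if isinstance(data, dict):
        return {
            k: mask(v) if k.lower() in SENSITIVE_KEYS else mask_payload(v)
            for k, v in data.items()
        }
    if isinstance(data, list):
        return [mask_payload(item) for item in data]
    return data  # scalars outside sensitive keys are kept as-is


recorded = {"name": "Ada", "email": "ada@example.com", "orders": [{"id": 7, "phone": "555-0100"}]}
print(mask_payload(recorded))
```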

Final Thoughts

Test case generation is a game-changer for teams looking to improve API quality without slowing down development. Whether you're building microservices or large platforms, automatically generating tests saves time, boosts coverage, and reduces bugs in production. If you want to accelerate your testing process, reduce developer toil, and integrate automation into your CI/CD pipelines, try a tool like Keploy, an AI-powered, open-source platform for smart test case and mock generation.

Text

Benefits of High Code Coverage - Edu-Art

Code coverage offers several significant benefits in software development. Firstly, it measures how much of your code is executed by your tests, enabling you to identify untested areas and work toward comprehensive testing (coverage shows which code ran, not whether its behavior was verified). This, in turn, can improve software quality, as more defects are detected and resolved early in the development process. High code coverage also tends to enhance software reliability, reduce the cost of bug fixing, and make code maintenance and updates easier. Moreover, it builds confidence in your software's behavior, can increase customer satisfaction, and helps meet quality standards that set coverage targets. In essence, code coverage is a fundamental practice that not only helps prevent issues but also fosters more efficient and reliable software development.
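To make "how much of your code is tested" concrete: line coverage is the fraction of a function's executable lines that ran during a test. The sketch below measures it for a single Python function using the standard library's `sys.settrace` hook. It is a toy under simplifying assumptions: it handles one function and does not count lines in the functions that one calls. Real projects would use a dedicated tool such as coverage.py.

```python
import dis
import sys


def line_coverage(func, *args, **kwargs):
    """Call func once and report which of its body lines executed.

    Returns (covered, total) as sets of line numbers. Only func's own frame
    is traced, so lines inside functions it calls are not counted.
    """
    code = func.__code__
    # Lines that start a bytecode instruction, excluding the `def` line itself.
    total = {
        line
        for _, line in dis.findlinestarts(code)
        if line is not None and line != code.co_firstlineno
    }
    executed = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # ignore frames belonging to other functions
        if event == "line":
            executed.add(frame.f_lineno)
        return tracer  # keep receiving line events for func's frame

    previous = sys.gettrace()  # restore any existing tracer (debugger, etc.)
    sys.settrace(tracer)
    try:
        func(*args, **kwargs)
    finally:
        sys.settrace(previous)
    return executed & total, total


def classify(n):
    if n < 0:
        return "negative"
    return "non-negative"


covered, total = line_coverage(classify, 5)
print(f"line coverage: {len(covered)}/{len(total)} = {len(covered) / len(total):.0%}")
```

Running `classify` with only a positive input leaves the `"negative"` branch uncovered. Adding a negative input closes the gap, which is exactly the "identify untested areas" benefit described above.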

#Benefits of code coverage#code coverage#high code coverage#software testing course#software testing#studying#teaching#software#success#course#institute#marketing#academia#software testing in mumbai


Text

How does AI contribute to the automation of software testing?

AI-Based Testing Services

In today's fast-moving, competitive software market, ensuring quality while keeping up with rapid release cycles is both challenging and vital. That's where AI-Based Testing comes into play. Artificial intelligence (AI) is changing the software testing process, making it faster, smarter, and more accurate.

Smart Test Case Generation:

AI can automatically analyze past test results, user behavior, and application logic to generate relevant test cases. This reduces the burden on QA teams, saves time, and helps ensure that key user scenarios are covered, something manual processes might overlook.

Faster Bug Detection and Resolution:

AI-Based Testing uses machine learning algorithms to detect defects more efficiently by identifying patterns and anomalies in code structure and behavior. This proactive approach helps testers catch bugs earlier in the development cycle, improving product quality and reducing the cost of fixes.

Improved Test Maintenance:

In traditional automation, even a minor UI change can break multiple test scripts. AI models can adapt to these changes, self-heal broken scripts, and update them automatically. This makes test maintenance less time-consuming and more reliable.
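One simple way self-healing can work is sketched below with hypothetical names. The idea is to keep several alternative locators per element and promote whichever one still matches after a UI change. The page is modelled as a list of attribute dictionaries rather than a real browser DOM. Commercial AI tools typically use richer similarity scoring than this ordered fallback.

```python
# A page is modelled here as a list of element attribute dicts; a real tool
# would query a browser DOM instead. All names below are hypothetical.
def find_element(page, locators):
    """Return (element, locator_used) for the first locator with a unique match."""
    for attribute, value in locators:
        matches = [el for el in page if el.get(attribute) == value]
        if len(matches) == 1:  # only accept unambiguous matches
            return matches[0], (attribute, value)
    raise LookupError(f"no unique match for any of {locators}")


def heal(locators, used):
    """Promote the locator that worked so the next run tries it first."""
    return [used] + [loc for loc in locators if loc != used]


submit_locators = [("id", "submit-btn"), ("name", "submit"), ("text", "Sign in")]
# After a UI change the button's id was renamed, but its visible text is unchanged.
new_page = [
    {"tag": "input", "id": "email"},
    {"tag": "button", "id": "login-button", "text": "Sign in"},
]
element, used = find_element(new_page, submit_locators)
submit_locators = heal(submit_locators, used)
print(element["id"], used)
```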

Enhanced Test Coverage:

AI broadens test coverage by simulating real-time user interactions and analyzing large datasets. It helps identify edge cases and potential issues that might not be obvious to human testers. As a result, AI-based testing can significantly reduce the risk of bugs in production.

Predictive Analytics for Risk Management:

AI tools can analyze historical testing data to predict which areas of an application are more likely to fail. This insight helps teams prioritize their testing efforts, optimize resources, and make better decisions throughout the development lifecycle.
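Predictive prioritization does not have to start with machine learning. A frequency baseline like the sketch below, which ranks application areas by their historical failure rate, is a reasonable stand-in. It is also a useful benchmark for any model. The area names and run history are made up for illustration.

```python
from collections import defaultdict


def rank_by_failure_rate(history):
    """Rank areas by historical failure rate, highest first.

    history: iterable of (area, passed) tuples from past test runs.
    """
    runs = defaultdict(int)
    failures = defaultdict(int)
    for area, passed in history:
        runs[area] += 1
        if not passed:
            failures[area] += 1
    rates = {area: failures[area] / runs[area] for area in runs}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


history = [
    ("checkout", False), ("checkout", True), ("checkout", False),
    ("search", True), ("search", True),
    ("profile", False), ("profile", True), ("profile", True), ("profile", True),
]
for area, rate in rank_by_failure_rate(history):
    print(f"{area}: {rate:.0%} of past runs failed")
```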

Seamless Integration with Agile and DevOps:

AI-powered testing tools are built to support continuous testing environments. They integrate seamlessly with CI/CD pipelines, enabling faster feedback, quick deployment, and improved collaboration between development and QA teams.

Top technology providers like Suma Soft, IBM, Cyntexa, and Cignex lead the way in AI-Based Testing solutions. They offer customized services that help businesses automate the testing process, improve software quality, and accelerate time to market with advanced AI-driven tools.

#it services#technology#software#saas#saas development company#saas technology#digital transformation#software testing


Text

Types of software testing

Beyond the different types of tests, software testing falls into two categories: manual and automated.

Manual testing

Manual tests are run by a human. Generally, a set of steps is listed with expected results, and someone runs through them, confirming whether each step passed or failed.

Manual testing is good for testing visual elements, giving fast and accurate visual feedback that is closer to how a user would experience the software. It's also good for projects with unplanned changes, since updating automated test scripts for projects that change a lot can be extremely costly.

The human element of manual testing means that testing can be dynamic: if an issue is picked up that wasn't part of the test, it can be raised accordingly. Manual testing is also needed to triage failed automation tests. A tester runs through the failing test by hand to find the point of failure, works out whether it's a bug or an issue with the automation test, and raises it accordingly.

Automation testing

Automation testing is when tests are written to be performed by a computer each time they're needed. For example, a test might send a certain set of inputs to an API and check that it returns what we expect. Automated tests still need to be written by a human. Some require a programmer to write each step of the test, while others use languages like Gherkin to write tests in human language that is linked to code in the background.
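To show how human-language steps get "linked to code in the background", here is a toy step-binding sketch in Python. It is not Cucumber's or any other framework's API. The `step` decorator, `run_scenario`, and the shopping-cart steps are hypothetical. Real frameworks also parse feature files, scenarios, and tables, and produce reports.

```python
import re

STEPS = []  # (compiled pattern, function) pairs


def step(pattern):
    """Register a function to handle step text matching pattern."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"the cart is empty")
def empty_cart(ctx):
    ctx["cart"] = []


@step(r'I add "(.+)" to the cart')
def add_item(ctx, item):
    ctx["cart"].append(item)


@step(r"the cart contains (\d+) items?")
def check_count(ctx, count):
    assert len(ctx["cart"]) == int(count), ctx["cart"]


def run_scenario(text):
    """Strip Given/When/Then/And/But keywords and dispatch each line to its step."""
    ctx = {}
    for line in filter(None, (raw.strip() for raw in text.splitlines())):
        body = re.sub(r"^(Given|When|Then|And|But)\s+", "", line)
        for pattern, func in STEPS:
            match = pattern.fullmatch(body)
            if match:
                func(ctx, *match.groups())  # captured groups become arguments
                break
        else:
            raise LookupError(f"no step matches: {line!r}")
    return ctx


scenario = """
Given the cart is empty
When I add "apple" to the cart
And I add "pear" to the cart
Then the cart contains 2 items
"""
print(run_scenario(scenario))
```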

Automation testing can be a lot quicker than manual testing, which also means test coverage can be increased. Once the test cases are written, they can be run whenever they're needed to make sure the software still behaves as expected. This matters most after changes, to ensure existing functionality wasn't broken unintentionally. Automation also allows tests to run constantly in the background to pick up possible faults, which can then be triaged.


Text

You know, it's weird how software meant to do unethical things costs so much.

It is weird how tests get written that test nothing but increase code coverage.

How hard-to-debug bugs keep showing up in the code WAY more than in ethical projects... almost like the programmers are making a point...

The software somehow becomes fragile, expensive and hard to maintain if it is being made for unethical purposes... and the people working on it keep getting jobs at other firms, so the software becomes worse and worse with no experts...

But it IS a neat side effect of that weird phenomenon that ethical projects work better and cost less, meaning they beat software that is written for doing bad things.

Let us together say the first line of the Scribe's oath: "I will not produce harmful code"


Text

Best Practices for Successful Automation Testing Implementation

Automation testing is an essential part of modern software development that accelerates delivery, reduces manual work, and improves software quality. But success in automation testing is not guaranteed. It has to be earned through proper planning, careful execution, and adherence to best practices.

In this blog, we will talk about key actionable strategies and best practices to ensure the successful implementation of automation testing in your projects.

1. Start with a Clear Strategy

Jumping straight into automation testing without a clear strategy will not always yield the desired results. Define the following:

Objectives: Define the goals of the automation, whether it is about shorter test cycles, improved test coverage or eliminating human error.

Scope: Decide which areas of your application to automate, focusing on high-impact areas like regression and functional testing.

Stakeholders: Get early involvement from the development, QA and product teams to avoid misalignment regarding expectations.

A well-formed strategy helps guide the way and make sure everyone involved is aligned.

2. Prioritize the Right Test Cases for Automation

One of the biggest mistakes in automation testing is trying to automate everything. Instead, prioritize test cases that:

Are monotonous and time-consuming.

Are critical to application functionality.

Have stable requirements.

Typical candidates include regression tests, smoke tests, and data-driven tests. Do not automate exploratory tests or highly dynamic tests that change often.

3. Choose the Right Automation Tools

The effectiveness of your automation testing initiative relies heavily on selecting appropriate tools. Look for tools that:

Support the technology stack of your application (e.g., web, mobile, APIs).

Can scale as your project grows.

Offer extensive reporting, script reusability, and cross-browser execution.

GhostQA is one example of a codeless platform that works well for teams with a range of skill levels. It lets you focus on what matters, and its Auto Healing feature reduces maintenance effort.

4. Build a Strong Automation Framework

An automation framework is the backbone of your automation testing process. It helps in standardization, reusability and scalability of test scripts. So, when you start designing your framework, make sure to leave some room for these features:

Modularity: Split test scripts into reusable components.

Data-Driven Testing: Separate test data from the scripts to provide flexibility.

Error Handling: Build in mechanisms that handle unexpected failures gracefully and record enough detail to diagnose them.

A good framework streamlines collaboration and makes it easier to maintain your tests.
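A data-driven test keeps the inputs and expected results in a table and runs the same logic over every row. The sketch below uses Python's standard unittest module with `subTest`. `apply_discount` is a hypothetical function under test.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)


# Test data kept separate from test logic; it could just as well be loaded
# from a CSV or JSON file maintained by testers.
DISCOUNT_CASES = [
    # (price, percent, expected)
    (100.0, 0, 100.0),
    (100.0, 25, 75.0),
    (19.99, 10, 17.99),
    (50.0, 100, 0.0),
]


class DiscountTests(unittest.TestCase):
    def test_discount_table(self):
        for price, percent, expected in DISCOUNT_CASES:
            # subTest reports each failing row separately instead of stopping at the first.
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_rejects_out_of_range_percent(self):
        for percent in (-1, 101):
            with self.subTest(percent=percent):
                with self.assertRaises(ValueError):
                    apply_discount(10.0, percent)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(DiscountTests)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Adding a new scenario means adding a row to `DISCOUNT_CASES`; the test logic itself does not change.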

5. Write High-Quality Test Scripts

The quality of your test scripts determines the reliability of your automation testing. To ensure script quality:

When naming scripts, variables, or methods, use meaningful and descriptive names.

For adaptability, parameterize values such as URLs, credentials, and test data instead of hardcoding them.

Set up appropriate error handling for unforeseen problems.

Avoid unnecessary additions: the more complex a script is, the harder it is to debug and maintain.

Tools such as GhostQA minimize scripting effort by providing no-code options that allow even non-technical users to write robust tests.

6. Regularly Maintain Your Automation Suite

Even though automation testing is a great way to ensure quality in applications, one of its biggest challenges is keeping the test scripts updated as the application changes. Regular maintenance keeps your test suite effective and up to date.

Best practices for maintenance include:

Frequent Reviews: Periodically audit the test scripts to make sure they are not outdated.

Version Control: Use version control systems to maintain a history of your script modifications.

Auto-Healing Features: GhostQA and similar tools can track UI updates and modify scripts to reflect changes with little to no human intervention, minimizing maintenance costs.

Take good care of your automation suite so that it doesn't become a liability.

7. Address Flaky Tests

Flaky tests—tests that pass or fail randomly—are a common issue in automation testing. They reduce trust in test results and take up time when debugging. To address flaky tests:

Investigate the underlying causes, such as timing problems or dynamic elements.

Use explicit waits instead of fixed sleeps so tests stay aligned with application behavior.

Consider tools with smart element detection, such as GhostQA, to reduce the chances of flaky tests.

Reducing flakiness is one of the most significant ways to strengthen confidence in your automation framework.
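The explicit-wait advice boils down to polling for a condition with a timeout instead of sleeping for a fixed time. Browser tools ship their own version of this (Selenium's WebDriverWait, for example). The generic helper below, with a simulated element, is a sketch of the idea rather than any tool's API.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.05):
    """Poll condition() until it returns a truthy value or timeout expires.

    Unlike a fixed sleep, this returns as soon as the condition holds and
    fails loudly (TimeoutError) instead of silently continuing.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


# Simulated element that becomes visible about 0.2 s after "page load".
shown_at = time.monotonic() + 0.2


def element_visible():
    return time.monotonic() >= shown_at


start = time.monotonic()
wait_until(element_visible, timeout=2.0)
print(f"element ready after {time.monotonic() - start:.2f}s")
```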

8. Ensure Cross-Browser and Cross-Platform Compatibility

Most modern applications work across many browsers and devices, so cross-compatibility testing is a necessity. Your automation testing suite must:

Include test cases for popular browsers like Chrome, Firefox, Edge, and Safari.

Cover different mobile operating systems (e.g., iOS and Android).

GhostQA abstracts cross-browser and cross-platform testing so you can verify functionality in several types of environments without repeating yourself.

9. Leverage AI and Smart Automation

AI is revolutionizing automation testing with better efficiency and lower maintenance costs. Next-generation AI-powered tools like GhostQA offer:

Auto-Healing: Automatically adjust to changes made to the app, such as modified UI elements.

Predictive Analysis: Highlight the highest-risk areas so tests can be prioritized.

Optimized Execution: Run only the tests that yield the most useful insights.

AI-powered tools like these can help you increase the efficiency and accuracy of your testing.
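Optimized execution does not require AI in its simplest form. If you know which source files each test exercises (for example, from an earlier coverage run), you can run only the tests affected by a change. The mapping below is hypothetical.

```python
# Hypothetical mapping from each test to the source files it exercises,
# e.g. collected from a previous coverage run.
TEST_DEPENDENCIES = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_search": {"search.py"},
}


def select_tests(changed_files, dependencies):
    """Pick only the tests whose dependencies intersect the changed files."""
    changed = set(changed_files)
    return sorted(test for test, files in dependencies.items() if files & changed)


print(select_tests(["payment.py", "README.md"], TEST_DEPENDENCIES))
```

This is only as good as the mapping. Files the mapping does not know about, such as configuration, are silently ignored, so many teams still run the full suite periodically.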

10. Monitor and Measure Performance

To measure the effectiveness of your automation testing, you should track key metrics that include:

Test Coverage: Proportion of application features covered by automated tests.

Execution Time: Time taken to execute automated test suites.

Defect Detection Rate: Number of bugs detected by automation testing.

Flaky Test Rate: Frequency of inconsistent test results.

Consistently assessing these metrics helps you find areas for improvement in your automation efforts while also demonstrating their ROI.
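Flakiness needs an operational definition before it can be measured. One common choice, assumed in the sketch below, flags a test as flaky when it both passed and failed on the same commit. The run records are invented for illustration.

```python
from collections import defaultdict


def flaky_tests(runs):
    """Return tests that both passed and failed on the same commit.

    runs: iterable of (test_name, commit, passed) records.
    """
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return sorted({test for (test, _), seen in outcomes.items() if len(seen) == 2})


def flaky_rate(runs):
    """Share of distinct tests that showed inconsistent results."""
    runs = list(runs)
    all_tests = {test for test, _, _ in runs}
    return len(flaky_tests(runs)) / len(all_tests) if all_tests else 0.0


runs = [
    ("test_login", "a1", True), ("test_login", "a1", False),
    ("test_search", "a1", True), ("test_search", "a1", True),
    # Failing on one commit and passing on a later one is a fix, not flakiness.
    ("test_checkout", "a1", False), ("test_checkout", "b2", True),
]
print(flaky_tests(runs), f"{flaky_rate(runs):.0%}")
```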

Conclusion

So, choosing the right tools and planning properly are what make an automation testing implementation successful. This can be achieved by adopting best practices like prioritizing test cases, maintaining test scripts, making use of AI-powered tools, and collaborating with other stakeholders in the process.

Tools like GhostQA, which come equipped with codeless testing, auto-healing features, and user-friendly interfaces, empower teams of both technical and non-technical backgrounds to streamline their automation processes and devote their attention to shipping quality software.

#automation testing#software testing#test automation#functional testing#automation tools#quality assurance


Text

UI Automation Testing: Reducing Manual Efforts and Boosting Accuracy

Introduction:

UI automation testing is a powerful method for reducing manual testing efforts while improving test accuracy and coverage. By automating repetitive tasks, QA teams can focus on more complex testing, leading to faster release cycles and higher software quality.

Automating Repetitive Tasks:

Manual UI testing can be time-consuming and prone to human error. Automating repetitive tasks—like form submissions, button clicks, and navigation—frees up testers to focus on more critical, exploratory testing. Tools like Selenium and Cypress allow you to automate these tasks, ensuring consistency across test runs.

Increasing Accuracy and Consistency:

Automation eliminates the variability introduced by human testers, ensuring the same steps are executed each time, thus improving the reliability of your tests. Automation also enables parallel testing across multiple browsers and devices, enhancing test coverage.

Faster Feedback with CI/CD Integration:

Integrating UI automation testing into CI/CD pipelines ensures that tests run automatically after each code change, providing faster feedback to developers. This helps catch bugs early in the development cycle, reducing the risk of costly errors.

Conclusion:

By automating UI testing, teams can significantly reduce manual efforts, increase accuracy, and accelerate development timelines. This leads to more reliable software and more efficient testing processes.

#codeless test automation#codeless testing platform#test automation software#automated qa testing#no code test automation tools


Text

AI Software Testing: Improving Quality Assurance with Artificial Intelligence

Software testing has undergone a remarkable transformation, evolving from manual processes to advanced AI-driven techniques. In the early days of software development, testing was a manual, labor-intensive activity requiring human testers to execute test cases and meticulously identify defects. As software complexity grew, the need for efficiency led to the creation of automated testing tools that accelerated processes and improved accuracy.

The introduction of artificial intelligence (AI) and machine learning (ML) has further revolutionized software testing. According to the 2024 Currents research report, which surveyed tech founders, executives, and employees, 80% believe that AI and ML tools enable them to focus on more critical tasks. This post explores AI software testing, its benefits, challenges, strategies, and the role of tools like GenQE.ai in enhancing testing outcomes.

What is AI Software Testing?

AI software testing integrates AI and machine learning algorithms into the software testing lifecycle. Unlike traditional methods relying heavily on manual effort and predefined scripts, AI testing leverages intelligent automation to optimize various aspects of the testing process.

AI tools like GenQE.ai analyze large volumes of test data, generate optimized test cases, and detect patterns indicating potential defects. These tools adapt dynamically to software changes, ensuring continuous testing with minimal manual intervention—a critical advantage in agile and DevOps environments, where rapid iterations and continuous integration are the norm.

Benefits of AI Software Testing

Extensive Test Coverage

AI-powered tools enhance test coverage by automatically generating diverse and comprehensive test scenarios. Tools like GenQE.ai analyze user behavior, historical defect data, and software requirements to produce test cases that cover a broad range of scenarios, including edge cases often missed by human testers.

For instance, using GenQE.ai, organizations can simulate various user interactions, device configurations, and network conditions. This comprehensive coverage reduces production risks and ensures a higher quality user experience.

Faster Test Execution

AI tools accelerate testing by automating repetitive tasks and optimizing processes. Tools like GenQE.ai execute tests across multiple environments, such as desktop and mobile browsers, enabling continuous testing and providing quick feedback.

For example, a development team using GenQE.ai can trigger instant test executions whenever new code is committed. This rapid feedback loop supports continuous integration/continuous deployment (CI/CD), maintaining high software quality with faster release cycles.

Improved Test Accuracy

AI-powered tools reduce human error, using predictive analytics to identify potential defects and analyze historical test data. GenQE.ai, for instance, can predict high-risk areas of the software and focus testing efforts accordingly, resulting in more accurate and reliable defect detection.

Cost Savings

Automating software testing reduces the need for extensive manual intervention, cutting costs significantly. GenQE.ai automates regression testing and executes complex test suites efficiently, minimizing resource requirements and delivering higher ROI.

Early Fault Detection

AI tools continuously monitor software for anomalies, enabling early fault detection. Self-healing frameworks like those in GenQE.ai adjust test scripts in response to software changes, ensuring that testing remains effective throughout the development lifecycle.

For instance, GenQE.ai can monitor e-commerce platforms, detecting real-time issues such as transaction anomalies or performance bottlenecks. Early detection helps resolve problems before they affect users, enhancing reliability and reducing costs.

Efficient Test Maintenance

Maintaining test scripts is labor-intensive in traditional testing, especially when software undergoes frequent updates. AI tools like GenQE.ai streamline this process by automatically updating test cases to reflect software changes, reducing manual effort and ensuring up-to-date testing frameworks.

Challenges of AI Software Testing

High Initial Setup Costs

Implementing AI testing tools like GenQE.ai requires an upfront investment in technology and training. Organizations can mitigate this by starting with high-priority areas where AI can deliver immediate benefits, gradually expanding as ROI becomes evident.

Complex Integration

Integrating AI tools into existing workflows can be complex. Compatibility issues with legacy systems may arise. Tools like GenQE.ai are designed to integrate seamlessly with popular frameworks, easing the transition. A phased approach—starting with non-critical applications—can minimize disruption.

Limited Expertise in AI

Teams unfamiliar with AI may face a steep learning curve. Training programs and partnerships with AI experts can accelerate the adoption of tools like GenQE.ai, ensuring smooth implementation and effective usage.

Quality Management of AI-Generated Test Cases

AI tools may generate test cases that require refinement. By incorporating feedback loops and manual reviews, tools like GenQE.ai improve the relevance and accuracy of test scenarios, balancing automation with human oversight.

Data Security and Privacy Concerns

AI testing involves handling large volumes of sensitive data, raising privacy concerns. GenQE.ai prioritizes data security, offering encryption, access controls, and compliance with industry standards to safeguard test data. Regular audits and adherence to best practices further mitigate risks.

Conclusion

AI software testing, driven by tools like GenQE.ai, represents the future of quality assurance. By integrating AI and machine learning, organizations can achieve extensive test coverage, faster execution, improved accuracy, and significant cost savings. Addressing challenges through strategic planning, phased implementation, and robust security measures ensures that the transition to AI-powered testing is successful. As software development continues to evolve, embracing AI in testing will be crucial for delivering high-quality, reliable applications at speed.


Text

This Week in Rust 518

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Project/Tooling Updates

Strobe Crate

System dependencies are hard (so we made them easier)

Observations/Thoughts

Trying to invent a better substring search algorithm

Improving Node.js with Rust-Wasm Library

Mixing C# and Rust - Interop

A fresh look on incremental zero copy serialization

Make the Rust compiler 5% faster with this one weird trick

Part 3: Rowing Afloat Datatype Boats

Recreating concurrent futures combinators in smol

Unpacking some Rust ergonomics: getting a single Result from an iterator of them

Idea: "Using Rust", a living document

Object Soup is Made of Indexes

Analyzing Data 180,000x Faster with Rust

Issue #10: Serving HTML

Rust vs C on an ATTiny85; an embedded war story

Rust Walkthroughs

Analyzing Data 180,000x Faster with Rust

Fully Automated Releases for Rust Projects

Make your Rust code unit testable with dependency inversion

Nine Rules to Formally Validate Rust Algorithms with Dafny (Part 2): Lessons from Verifying the range-set-blaze Crate

[video] Let's write a message broker using QUIC - Broke But Quick Episode 1

[video] Publishing Messages over QUIC Streams!! - Broke But Quick episode 2

Miscellaneous

[video] Associated types in Iterator bounds

[video] Rust and the Age of High-Integrity Languages

[video] Implementing (part of) a BitTorrent client in Rust

Crate of the Week

This week's crate is cargo-show-asm, a cargo subcommand to show the optimized assembly of any function.

Thanks to Kornel for the suggestion!

Please submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

* Hyperswitch (Hacktoberfest) - [FEATURE] separate payments_session from payments core
* Hyperswitch (Hacktoberfest) - [NMI] Use connector_response_reference_id as reference to merchant
* Hyperswitch (Hacktoberfest) - [Airwallex] Use connector_response_reference_id as reference to merchant
* Hyperswitch (Hacktoberfest) - [Worldline] Use connector_response_reference_id as reference to merchant
* Ockam - Make ockam project delete (no args) interactive by asking the user to choose from a list of space and project names to delete (tuify)
* Ockam - Validate CBOR structs according to the cddl schema for authenticator/direct/types
* Ockam - Slim down the NodeManagerWorker for node / node status

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from the Rust Project

397 pull requests were merged in the last week

rewrite gdb pretty-printer registration

add FileCheck annotations to mir-opt tests

add MonoItems and Instance to stable_mir

add a csky-unknown-linux-gnuabiv2hf target

add a test showing failing closure signature inference in new solver

add new simpler and more explicit syntax for check-cfg

add stable Instance::body() and RustcInternal trait

automatically enable cross-crate inlining for small functions

avoid a track_errors by bubbling up most errors from check_well_formed

avoid having rustc_smir depend on rustc_interface or rustc_driver

coverage: emit mappings for unused functions without generating stubs

coverage: emit the filenames section before encoding per-function mappings

coverage: fix inconsistent handling of function signature spans

coverage: move most per-function coverage info into mir::Body

coverage: simplify the injection of coverage statements

disable missing_copy_implementations lint on non_exhaustive types

do not bold main message in --error-format=short

don't ICE when encountering unresolved regions in fully_resolve

don't compare host param by name

don't crash on empty match in the nonexhaustive_omitted_patterns lint

duplicate ~const bounds with a non-const one in effects desugaring

eliminate rustc_attrs::builtin::handle_errors in favor of emitting errors directly

fix a performance regression in obligation deduplication

fix implied outlives check for GAT in RPITIT

fix spans for removing .await on for expressions

fix suggestion for renamed coroutines feature

implement an internal lint encouraging use of Span::eq_ctxt

implement jump threading MIR opt

implement rustc part of RFC 3127 trim-paths

improve display of parallel jobs in rustdoc-gui tester script

initiate the inner usage of cfg_match (Compiler)

lint non_exhaustive_omitted_patterns by columns

location-insensitive polonius: consider a loan escaping if an SCC has member constraints applied only

make #[repr(Rust)] incompatible with other (non-modifier) representation hints like C and simd

make rustc_onunimplemented export path agnostic

mention into_iter on borrow errors suggestions when appropriate

mention the syntax for use on mod foo; if foo doesn't exist

panic when the global allocator tries to register a TLS destructor

point at assoc fn definition on type param divergence

preserve unicode escapes in format string literals when pretty-printing AST

properly account for self ty in method disambiguation suggestion

report unused_import for empty reexports even it is pub

special case iterator chain checks for suggestion

strict provenance unwind

suggest ; after bare match expression E0308

suggest constraining assoc types in more cases

suggest relaxing implicit type Assoc: Sized; bound

suggest removing redundant arguments in format!()

uplift movability and mutability, the simple way

miri: avoid a linear scan over the entire int_to_ptr_map on each deallocation

miri: fix rounding mode check in SSE4.1 round functions

miri: intptrcast: remove information about dead allocations

disable effects in libcore again

add #[track_caller] to Option::unwrap_or_else

specialize Bytes<R>::next when R is a BufReader

make TCP connect handle EINTR correctly

on Windows make read_dir error on the empty path

hashbrown: add low-level HashTable API

codegen_gcc: add support for NonNull function attribute

codegen_gcc: fix #[inline(always)] attribute and support unsigned comparison for signed integers

codegen_gcc: fix endianness

codegen_gcc: fix int types alignment

codegen_gcc: optimize popcount implementation

codegen_gcc: optimize u128/i128 popcounts further

cargo add: Preserve more comments

cargo remove: Preserve feature comments

cargo replace: Partial-version spec support

cargo: Provide next steps for bad -Z flag

cargo: Suggest cargo-search on bad commands

cargo: adjust -Zcheck-cfg for new rustc syntax and behavior

cargo: if there's a version in the lock file only use that exact version

cargo: make the precise field of a source an Enum

cargo: print environment variables for build script executions with -vv

cargo: warn about crate name's format when creating new crate

rustdoc: align stability badge to baseline instead of bottom

rustdoc: avoid allocating strings primitive link printing

clippy: map_identity: allow closure with type annotations

clippy: map_identity: recognize tuple identity function

clippy: add lint for struct field names

clippy: don't emit needless_pass_by_ref_mut if the variable is used in an unsafe block or function

clippy: make multiple_unsafe_ops_per_block ignore await desugaring

clippy: needless pass by ref mut closure non async fn

clippy: now declare_interior_mutable_const and borrow_interior_mutable_const respect the ignore-interior-mutability configuration entry

clippy: skip if_not_else lint for '!= 0'-style checks

clippy: suggest passing function instead of calling it in closure for option_if_let_else

clippy: warn missing_enforced_import_renames by default

rust-analyzer: generate descriptors for all unstable features

rust-analyzer: add command for only opening external docs and attempt to fix vscode-remote issue

rust-analyzer: add incorrect case diagnostics for module names

rust-analyzer: fix VS Code detection for Insiders version

rust-analyzer: import trait if needed for unqualify_method_call assist

rust-analyzer: pick a better name for variables introduced by replace_is_some_with_if_let_some

rust-analyzer: store binding mode for each instance of a binding independently

perf: add NES emulation runtime benchmark

Rust Compiler Performance Triage

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Add f16 and f128 float types

Unicode and escape codes in literals

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs entered Final Comment Period this week.

Tracking Issues & PRs

[disposition: merge] Consider alias bounds when computing liveness in NLL (but this time sound hopefully)

[disposition: close] regression: parameter type may not live long enough

[disposition: merge] Remove support for compiler plugins.

[disposition: merge] rustdoc: Document lack of object safety on affected traits

[disposition: merge] Stabilize Ratified RISC-V Target Features

[disposition: merge] Tracking Issue for const mem::discriminant

New and Updated RFCs

[new] eRFC: #[should_move] attribute for per-function opting out of Copy semantics

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

No RFCs issued a call for testing this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Upcoming Events

Rusty Events between 2023-10-25 - 2023-11-22 🦀

Virtual

2023-10-30 | Virtual (Melbourne, VIC, AU) | Rust Melbourne

(Hybrid - online & in person) October 2023 Rust Melbourne Meetup

2023-10-31 | Virtual (Europe / Africa) | Rust for Lunch

Rust Meet-up

2023-11-01 | Virtual (Cardiff, UK)| Rust and C++ Cardiff

ECS with Bevy Game Engine

2023-11-01 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2023-11-02 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2023-11-07 | Virtual (Berlin, DE) | OpenTechSchool Berlin

Rust Hack and Learn | Mirror

2023-11-07 | Virtual (Buffalo, NY, US) | Buffalo Rust Meetup

Buffalo Rust User Group, First Tuesdays

2023-11-09 | Virtual (Nuremberg, DE) | Rust Nuremberg

Rust Nürnberg online

2023-11-14 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2023-11-15 | Virtual (Cardiff, UK)| Rust and C++ Cardiff

Building Our Own Locks (Atomics & Locks Chapter 9)

2023-11-15 | Virtual (Richmond, VA, US) | Linux Plumbers Conference

Rust Microconference in LPC 2023 (Nov 13-16)

2023-11-15 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2023-11-16 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2023-11-21 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

Europe

2023-10-25 | Dublin, IE | Rust Dublin

Biome, web development tooling with Rust

2023-10-25 | Paris, FR | Rust Paris

Rust for the web - Paris meetup #61

2023-10-25 | Zagreb, HR | impl Zagreb for Rust

Rust Meetup 2023/10: Lunatic

2023-10-26 | Augsburg, DE | Rust - Modern Systems Programming in Leipzig

Augsburg Rust Meetup #3

2023-10-26 | Copenhagen, DK | Copenhagen Rust Community

Rust meetup #41 sponsored by Factbird

2023-10-26 | Delft, NL | Rust Nederland

Rust at TU Delft

2023-10-26 | Lille, FR | Rust Lille

Rust Lille #4 at SFEIR

2023-10-30 | Stockholm, SE | Stockholm Rust

Rust Meetup @Aira + Netlight

2023-11-01 | Cologne, DE | Rust Cologne

Web-applications with axum: Hello CRUD!

2023-11-07 | Bratislava, SK | Bratislava Rust Meetup Group

Rust Meetup by Sonalake

2023-11-07 | Aarhus, DK | Rust Aarhus

Rust Aarhus - Rust and Talk beginners edition

2023-11-07 | Lyon, FR | Rust Lyon

Rust Lyon Meetup #7

2023-11-09 | Barcelona, ES | BcnRust

11th BcnRust Meetup

2023-11-09 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup at Browns

2023-11-21 | Augsburg, DE | Rust - Modern Systems Programming in Leipzig

GPU processing in Rust

2023-11-23 | Biel/Bienne, CH | Rust Bern

Rust Talks Bern @ Biel: Embedded Edition

North America

2023-10-25 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2023-10-25 | Chicago, IL, US | Deep Dish Rust

Rust Happy Hour

2023-11-01 | Brookline, MA, US | Boston Rust Meetup

Boston Common Rust Lunch

2023-11-08 | Boulder, CO, US | Boulder Rust Meetup

Let's make a Discord bot!

2023-11-14 | New York, NY, US | Rust NYC

Rust NYC Monthly Mixer: Share, Show, & Tell! 🦀

2023-11-14 | Seattle, WA, US | Cap Hill Rust Coding/Hacking/Learning

Rusty Coding/Hacking/Learning Night

2023-11-15 | Richmond, VA, US + Virtual | Linux Plumbers Conference

Rust Microconference in LPC 2023 (Nov 13-16)

2023-11-16 | Nashville, TN, US | Music City Rust Developers

Python loves Rust!

2023-11-16 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group Meetup

2023-11-21 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2023-11-22 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

Oceania

2023-10-26 | Brisbane, QLD, AU | Rust Brisbane

October Meetup

2023-10-30 | Melbourne, VIC, AU + Virtual | Rust Melbourne

(Hybrid - in person & online) October 2023 Rust Melbourne Meetup

2023-11-21 | Christchurch, NZ | Christchurch Rust Meetup Group

Christchurch Rust meetup meeting

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

When your Rust build times get slower after adding some procedural macros:

We call that the syn tax :ferris:

– Janet on Fosstodon

Thanks to Jacob Pratt for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust

What Does Enumerate Do in Python?

If you've worked with Python loops, you've probably used for loops to iterate over items. But when you need both the index and the value of items in a list, enumerate() is a Pythonic way to do it.

So, what does enumerate do in Python? Simply put, it adds a counter to an iterable and returns an enumerate object, an iterator that yields (index, item) pairs as you loop over it.
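You can check this yourself using only built-ins. enumerate() returns a lazy iterator rather than a list, so it produces items on demand. list() materializes whatever pairs remain.

```python
letters = ["a", "b", "c"]
pairs = enumerate(letters)

# enumerate() returns an iterator: pairs are produced one at a time.
first = next(pairs)   # (0, 'a')

# list() consumes whatever is left in the iterator.
rest = list(pairs)    # [(1, 'b'), (2, 'c')]

print(type(pairs).__name__, first, rest)
```

Because it is an iterator, an enumerate object can only be consumed once. After the list() call above, `pairs` is exhausted.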

Basic Syntax of enumerate()

```python
enumerate(iterable, start=0)
```

iterable: Any iterable, such as a list, tuple, string, or generator

start (optional): The starting index value (default is 0)
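For intuition, the official Python documentation describes enumerate() as roughly equivalent to the generator below. Here it is named my_enumerate, a hypothetical name, so it does not shadow the built-in. In CPython the real enumerate() is implemented in C.

```python
def my_enumerate(iterable, start=0):
    """Yield (count, item) pairs, counting up from `start`."""
    n = start
    for elem in iterable:
        yield n, elem
        n += 1


seasons = ["Spring", "Summer", "Fall", "Winter"]
print(list(my_enumerate(seasons, start=1)))
# [(1, 'Spring'), (2, 'Summer'), (3, 'Fall'), (4, 'Winter')]
```

The sketch also shows what `start` does. It only changes the first counter value, not which items are visited.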

Why Use enumerate()?

Let’s look at a regular loop without enumerate:

```python
fruits = ["apple", "banana", "cherry"]
index = 0
for fruit in fruits:
    print(index, fruit)
    index += 1
```

Using enumerate() simplifies this:

```python
for index, fruit in enumerate(fruits):
    print(index, fruit)
```

Cleaner and more readable—this is one reason Python developers love it.

Changing the Start Index

You can start the counter from any number:

```python
for index, fruit in enumerate(fruits, start=1):
    print(index, fruit)
```

Output:

```text
1 apple
2 banana
3 cherry
```

Use Cases of enumerate()

1. Tracking Index in Loops

Great for debugging or when you need to reference both the index and value.

```python
for idx, char in enumerate("hello"):
    print(f"Character {char} at index {idx}")
```

2. Looping Through a List with Conditions

```python
colors = ["red", "green", "blue"]
for i, color in enumerate(colors):
    if color == "green":
        print(f"'green' found at index {i}")
```
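When you only need the first match, enumerate() pairs well with next() and a default value. The -1 "not found" sentinel is an arbitrary choice for this example. The list also gets a second "green" to show the difference from collecting every match.

```python
colors = ["red", "green", "blue", "green"]

# Index of the first "green", or -1 if there is none.
first_green = next((i for i, color in enumerate(colors) if color == "green"), -1)

# Every index where "green" appears.
all_green = [i for i, color in enumerate(colors) if color == "green"]

print(first_green, all_green)  # 1 [1, 3]
```

The generator passed to next() stops at the first match, so the rest of the list is never scanned.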

3. Working with Nested Loops

```python
matrix = [[1, 2], [3, 4]]
for i, row in enumerate(matrix):
    for j, val in enumerate(row):
        print(f"matrix[{i}][{j}] = {val}")
```

enumerate() vs range(len())

You might’ve used range(len(list)) to loop with indices. Here’s why enumerate is better:

Using range(len()):

```python
for i in range(len(fruits)):
    print(i, fruits[i])
```

Using enumerate:

```python
for i, fruit in enumerate(fruits):
    print(i, fruit)
```

✅ More readable ✅ Less error-prone ✅ More "Pythonic"
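Here is the "less error-prone" point in concrete form. range(len(...)) only works on objects that support len(), while enumerate() accepts any iterable, including generators. The squares() generator below is just an illustrative helper.

```python
def squares(n):
    """A generator: it has no len(), so range(len(...)) cannot be used on it."""
    for k in range(n):
        yield k * k


# enumerate() works on any iterable, including generators.
indexed = list(enumerate(squares(4)))
print(indexed)  # [(0, 0), (1, 1), (2, 4), (3, 9)]

# range(len(...)) needs an object that supports len().
try:
    for i in range(len(squares(4))):
        pass
except TypeError as exc:
    print("TypeError:", exc)
```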

enumerate() with List Comprehension (Bonus Tip)

enumerate() also works inside list comprehensions:

```python
indexed = [(i, val) for i, val in enumerate(['a', 'b', 'c'])]
print(indexed)
# Output: [(0, 'a'), (1, 'b'), (2, 'c')]
```
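A related idiom puts enumerate() inside a dict comprehension to build a reverse lookup from value to index. The sketch assumes the values are unique. If a value repeats, its later index overwrites the earlier one.

```python
fruits = ["apple", "banana", "cherry"]

# Map each value to its position for constant-time (average) lookups later.
position = {fruit: i for i, fruit in enumerate(fruits)}
print(position)  # {'apple': 0, 'banana': 1, 'cherry': 2}

# For a plain list of (index, value) pairs, list(enumerate(...)) gives
# the same result as the comprehension shown above.
print(list(enumerate(fruits)))  # [(0, 'apple'), (1, 'banana'), (2, 'cherry')]
```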

Final Thoughts

Understanding what enumerate() does can level up your loop logic, especially when writing clean, concise code. Whether you're tracking indexes, debugging, or working with nested structures, enumerate() helps make your code simpler and more Pythonic. Looking to test and validate Python logic automatically? Tools like Keploy can help you generate test cases and mocks by capturing real behavior, which is useful for Python-based microservices and APIs.

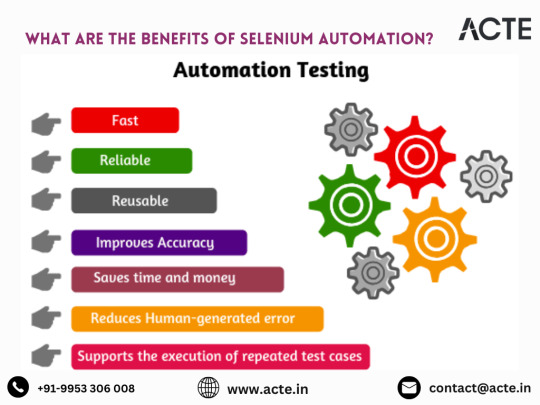

Unleashing the Potential of Selenium Automation: An In-Depth Exploration

Introduction: In the dynamic realm of software development, efficiency and reliability are non-negotiable. With the proliferation of web applications, the demand for robust testing solutions has reached new heights. Enter Selenium: a versatile open-source test automation framework that has transformed the landscape of software testing. In this comprehensive exploration, we'll look at the advantages Selenium automation offers and why it has become the preferred choice for testers and developers worldwide.

1. Seamless Cross-Browser Compatibility: Selenium automation stands out for its seamless cross-browser compatibility feature. Testers can effortlessly execute tests across various web browsers such as Chrome, Firefox, Safari, and Edge. This ensures uniform performance across different platforms, minimizing compatibility issues and enhancing user experience.

2. Platform Flexibility and Independence: A standout feature of Selenium automation is its platform flexibility and independence. Tests crafted with Selenium can be run on diverse operating systems including Windows, macOS, and Linux. This versatility empowers teams to conduct testing on their preferred platforms, fostering collaboration and efficiency.

3. Multilingual Support for Enhanced Productivity: Selenium offers support for multiple programming languages including Java, Python, C#, and JavaScript. This multilingual support enables testers to write automation scripts in their language of choice, maximizing productivity and leveraging existing programming expertise.

4. Promoting Reusability and Ease of Maintenance: Selenium promotes code reusability and ease of maintenance through modular test design and adoption of design patterns like the Page Object Model (POM). By breaking down tests into smaller, reusable components, testers can streamline maintenance efforts and enhance scalability.

5. Accelerating Regression Testing Processes: Automation with Selenium significantly accelerates regression testing, allowing testers to detect and rectify bugs early in the development cycle. Seamless integration with continuous integration (CI) pipelines enables automatic execution of test suites, ensuring software stability and quality.

6. Comprehensive Test Coverage for Robust Applications: Selenium enables testers to achieve comprehensive test coverage by automating repetitive scenarios, edge cases, and boundary conditions. This meticulous testing ensures thorough validation of application functionality and user interactions, resulting in more robust software products.

7. Scalability and Parallel Execution Efficiency: Selenium facilitates parallel execution of tests across multiple browsers and environments, enabling teams to scale automation efforts effectively. By distributing test execution across different machines or virtual environments, testers can expedite the testing process and achieve faster feedback cycles.

8. Streamlined Integration with CI/CD Pipelines: Seamless integration with continuous integration/continuous deployment (CI/CD) pipelines enables automated testing as an integral part of the software delivery process. Automated build verification tests (BVTs) and regression tests ensure software stability, facilitating faster and more frequent deployments.
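To make point 4 concrete, here is a minimal Page Object Model sketch in Python. The LoginPage class, its locators (username, password, submit) and the FakeDriver/FakeElement stubs are all hypothetical. The page object only calls find_element(by, value), send_keys() and click(). Selenium 4's WebDriver and WebElement provide these, and By.ID is the string "id". The stubs let the example run without a browser.

```python
class LoginPage:
    """Page object: the only place that knows how the login page is built."""

    # Hypothetical locators as (strategy, value) pairs; "id" equals Selenium's By.ID.
    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("id", "submit")

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, user, password):
        self.driver.find_element(*self.USERNAME).send_keys(user)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()


class FakeElement:
    """Stand-in for a WebElement that records the actions performed on it."""

    def __init__(self, log, name):
        self.log, self.name = log, name

    def send_keys(self, text):
        self.log.append((self.name, "type", text))

    def click(self):
        self.log.append((self.name, "click"))


class FakeDriver:
    """Stand-in for a WebDriver so the sketch runs without a browser."""

    def __init__(self):
        self.log = []

    def find_element(self, by, value):
        return FakeElement(self.log, f"{by}={value}")


driver = FakeDriver()
LoginPage(driver).log_in("alice", "s3cret")
print(driver.log)
```

In a real suite you would pass a Selenium WebDriver instance, for example webdriver.Chrome(), instead of FakeDriver. The payoff is maintenance: if the login form changes, only the locators in LoginPage need updating, not every test that logs in.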

Conclusion: In conclusion, Selenium automation offers a plethora of advantages that empower testers to streamline testing efforts, enhance software quality, and expedite the development lifecycle. From cross-browser compatibility and platform independence to scalability and CI/CD pipeline integration, Selenium has redefined software testing in the modern era. By harnessing the power of Selenium, organizations can achieve faster releases, superior software quality, and heightened customer satisfaction, gaining a competitive edge in the ever-evolving digital landscape.

What is the importance of AI-based testing?

AI-Based Testing Services

Artificial Intelligence (AI) is transforming the way software testing is done. AI-Based Testing is now a crucial part of modern software development. It brings speed, accuracy, and intelligence to testing processes that were once slow and repetitive. But why is it so important? Let's explore.

Faster Testing, Faster Releases -

Manual testing takes time. AI-Based Testing speeds up the process by automatically generating test cases and detecting issues without constant human input. This helps businesses deliver software faster, keeping up with tight deadlines and high customer expectations.

Smarter Test Coverage -

AI can scan large amounts of data and understand complex application behavior. This allows AI-Based Testing to create better test coverage, ensuring that common and rare user scenarios are checked. As a result, software is tested more thoroughly, and fewer bugs slip through.

Reduces Human Error -

Even experienced testers can make mistakes. AI tools run tests with consistent accuracy. AI-Based Testing reduces the number of errors by removing guesswork and repeating tasks the same way every time. This makes testing more reliable and builds trust in the results.

Adapts to Changes Automatically -

In traditional testing, when software changes, testers need to rewrite scripts. With AI-Based Testing, tests can adapt on their own: AI tools can detect changes in the code and update the affected tests accordingly, saving time and reducing maintenance work.
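Commercial tools don't publish exactly how their "self-healing" works, so what follows is a very simplified, non-AI sketch of the underlying idea. It keeps several candidate locators for an element and falls back to the next one when the preferred one stops matching. The find_with_fallback helper and the locator values are hypothetical. The page is modeled as a plain dict so the example runs on its own.

```python
def find_with_fallback(page, candidates):
    """Return (locator, element) for the first candidate locator present on the page.

    `page` is a dict mapping locator strings to elements (a stand-in for a real UI).
    """
    for locator in candidates:
        element = page.get(locator)
        if element is not None:
            if locator != candidates[0]:
                # A real tool might record this so the primary locator gets updated.
                print(f"healed: {candidates[0]!r} -> {locator!r}")
            return locator, element
    raise LookupError(f"none of {candidates} matched")


# Version 1 of the UI, and version 2 after the button's id was renamed.
page_v1 = {"#checkout-btn": "<button>", "text=Checkout": "<button>"}
page_v2 = {"#pay-now": "<button>", "text=Checkout": "<button>"}

locators = ["#checkout-btn", "text=Checkout"]
print(find_with_fallback(page_v1, locators))
print(find_with_fallback(page_v2, locators))
```

The test keeps working after the id change because a second, independent way of finding the button still matches. AI-based tools aim to choose and rank such alternatives automatically.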

Cost Efficiency -

Though setting up AI testing tools may take some investment, it saves money in the long run. Faster releases, fewer bugs, and reduced manual labor lower overall testing costs. This makes AI-Based Testing a wise financial decision for companies of all sizes.

Supports Agile and DevOps -

Modern development needs fast feedback and continuous delivery. AI-Based Testing fits perfectly into Agile and DevOps environments by offering quick and reliable testing that helps teams stay on track without delays.

AI-Based Testing is no longer just a trend—it's a must-have for businesses that want to build high-quality software quickly and stay ahead in a competitive digital world.

Trusted and expert service providers for AI-Based Testing, such as Suma Soft, IBM, Cyntexa, and Cignex, specialize in delivering innovative, automated testing solutions tailored to business needs. They offer a smooth integration process, ongoing support, and AI-powered testing tools that maintain accuracy and efficiency across the entire testing process. With their expertise, businesses can enhance software quality, accelerate development cycles, and reduce testing effort. Upgrade your QA process today and unlock the full potential of intelligent automation with this simple AI-Based Testing guide!

#it services#technology#saas#software#saas development company#saas technology#digital transformation

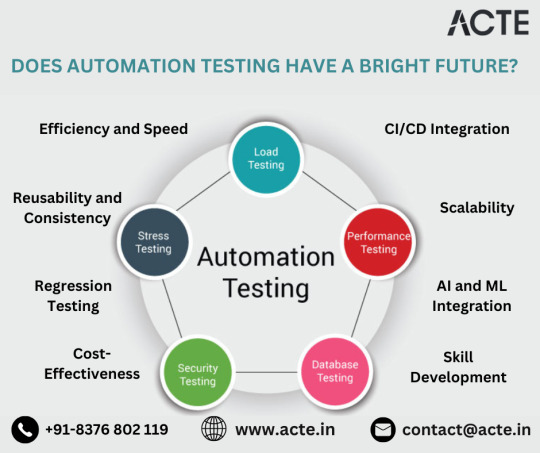

Tomorrow's Software Landscape: The Evolution of Automation Testing

In the ever-evolving tapestry of software development, automation testing emerges as a pivotal force, promising a future marked by heightened efficiency, unwavering reliability, and an uncharted realm of innovation. This exploration delves into the compelling factors that position automation testing on the trajectory towards a transformative and luminous future.

1. Catalyzing Development Cycles: Efficiency Unleashed

Automation testing stands as the driving force behind accelerated software development lifecycles. By executing repetitive tasks, handling voluminous datasets, and performing intricate calculations with precision, automated tests pave the way for expedited development cycles and swifter releases than their manual counterparts.

2. Pillars of Quality Assurance: Reusability and Consistency

The hallmark of automation lies in the reusability of test scripts across diverse developmental stages, guaranteeing uniform testing procedures. This not only mitigates the risk of human errors but also establishes a robust foundation for quality assurance, ensuring that applications adhere to the highest quality standards.

3. Guardian of Code Changes: Regression Testing Mastery

Automation testing shines brightest in the realm of regression testing. With every alteration in code, automated tests swiftly and effectively validate existing functionalities, acting as vigilant guardians against unintended consequences and upholding the integrity of the software.

4. Strategic Cost-Effectiveness: A Calculated Investment

The initial investment in setting up automation frameworks and scripts pales in comparison to the long-term benefits reaped. Automated tests operate ceaselessly, resulting in optimized resource utilization and substantial cost savings over time.

5. Parallel Prowess: Maximizing Testing Efficiency

The capability for parallel test execution across diverse environments and browsers elevates the efficiency of automation testing. Simultaneously addressing an array of scenarios ensures comprehensive coverage, aligning testing practices with the multifaceted nature of modern applications.

6. Collaborative CI/CD Integration: Orchestrating Continuous Improvement

The seamless integration of automation with Continuous Integration/Continuous Deployment (CI/CD) pipelines is a cornerstone. This integration not only fosters frequent and reliable testing but also nurtures a culture of continuous improvement and swift software delivery.

7. Scalability Dynamics: Adapting to Developmental Complexity

As applications burgeon in complexity, automation seamlessly provides scalability. Its ability to handle a myriad of test cases and scenarios ensures adaptability to the evolving demands of a project without compromising operational efficiency.

8. Advanced Testing Horizons: Addressing Varied Needs

Automation testing is not confined to the basics; it adeptly supports advanced practices such as performance testing, load testing, and stress testing. These practices are indispensable, ensuring applications stand resilient against varying levels of user loads without compromising performance.

9. Intelligent Automation: The Marriage of AI and ML

The integration of artificial intelligence (AI) and machine learning (ML) within automation testing is a testament to its evolutionary path. These technologies ease test script maintenance, introduce self-healing mechanisms, and contribute to the intelligent generation of test cases.

10. Skills as Currency: Meeting Industry Demand

The surge in automation adoption translates into a burgeoning demand for professionals skilled in automation testing. Beyond individual skill enhancement, learning automation tools and frameworks opens the gateway to a myriad of job opportunities within the dynamic tech industry.
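Point 3 describes regression testing. Here is a minimal sketch using Python's built-in unittest module. It re-checks a table of previously verified input/output pairs on every run, so a code change that breaks existing behavior fails immediately. The apply_discount function and its expected values are hypothetical.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test: price in cents after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) // 100


class DiscountRegressionTest(unittest.TestCase):
    # Behavior that was verified once and must keep holding after every change.
    CASES = [
        (1000, 0, 1000),
        (1000, 10, 900),
        (999, 50, 499),
        (1000, 100, 0),
    ]

    def test_known_results(self):
        for price, percent, expected in self.CASES:
            # subTest reports each failing row separately instead of stopping at the first.
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)
```

Saved as a module, it can be run with `python -m unittest` followed by the module name. Hooking that command into a CI/CD pipeline is what turns it into the automatic safety net described in point 6.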

In conclusion, automation testing is not merely a trend but an irreversible shift in the testing paradigm. Its unparalleled efficiency, steadfast reliability, and adaptive nature constitute a linchpin in guaranteeing the delivery of high-quality software. Embracing automation testing is not just a strategic choice for the present; it's an investment charting the course for the future of software development, where velocity, quality, and innovation converge for unparalleled success.

I work for insane people

So… I started work a few months ago and...

I keep being impressed with corporations lowering my expectations.

Like. EVERY time I think "Surely, this is as incompetent as it gets".

The boss is nice, the workers are nice, every PERSON is great so far. But the firm is just… fucked in ways that make it hard not to scream with laughter.

It is like working in the ministry of silly walks by Monty Python. Insane things are happening, and everyone just acts like it is normal.

A dude near me was telling someone else that, despite the customers saying they did not want it, his code that crashed the application once a day was NECESSARY, because writing code without memory leaks in C is basically impossible. Like… I just have all these small moments of insanity. Completely disconnected from each other.

My boss shows me and the other 3 new hires the coffee room, where a big screen proudly shows that not a single software product has 100% code coverage… as in, not a single person in this entire building filled with software people knows how code coverage works. He then points out an empty bowl and declares "Twice a week, there is a fruit event". By which he means fresh fruit is provided, and people can just grab some…. Said like an alien who is pretending to be human. Badly.

He then explains that the 2 coffee machines in here make bad coffee. He then takes us to the copy room, showing us that THIS is where the GOOD coffee machine is. Which only takes coffee beans from a SPECIFIC vendor (Is… is the coffee machine… sponsored????)

He briefly pets the Foosball table (again, in the copy room), which is jammed up against the wall so you can only reach the controls on one side (because, again, it is a copy room, and there is not enough space for it), and he exclaims "Ahhhh… Not enough people are using this"

Suggesting that he is trying to promote the little-known sport "Single-player Foosball".

I start setting up my work PC and... Whenever any of the developers in this place want to install things on their PCs, including compilers and testing frameworks, they have to use the "SOFTWARE CENTER" program, which installs it FOR you… or, in 10% of the cases, fails without giving you any context for why, and with no tools for fixing it. Is it missing a dependency? Not working with the OS? Who knows!

Some programs cannot be installed like this, though, because the SOFTWARE CENTER is not updated a lot. And when you want to install something the normal way… you get a popup where you must provide a written explanation of why you need temporary admin rights to your own dang PC… you then submit that, and your screen will be watched remotely by a worker from India for an amount of time you are not told…

Or at least it says so. Maybe the Indian dude watching me is just an empty threat. Who knows. But they get to see me running absolutely… BONKERS .bat files

Like, I CHECKED them, and a good 80% of them call a PowerShell script in the folder above, called "YES_OR_NO.ps1", which opens a Windows 95 window informing you that DURING INSTALLATION YOU MAY NOT USE THE KEYBOARD OR MOUSE, AS IT MAY DISTURB THE SCRIPT THAT WILL INSTALL THE PROGRAM. A normal installation wizard then runs, except the developers are not trusted to click the buttons, and instead the script does it for you by moving and clicking the mouse.

All of this is documented. In markdown like reasonable people? Of course not! It is in ENHANCED markdown. Which is markdown in the same way javascript is java.

ENHANCED markdown requires browser and Visual Studio Code extensions to be read, completely missing the point of markdown being readable both raw and rendered… And sometimes it's Word documents. And sometimes PowerPoint presentations, left next to another .bat file… this one calling the .exe file… right next to it…. I later found out this is because the idea USED to be that all documentation MUST be made with Microsoft Office tools.

I had to read the code of conduct today. And it was actually very well written.

I then watched an interactive animation about the code of conduct… which not only got a fact about it wrong, but also broke it once.

I repeat. The introductory course in the code of conduct… broke the code of conduct.

After I watched that, and read the safety material…. which literally just said "Wear safety boots in the production floor"… I was then shown the testing room.

I was led to a different building. We say hello to the Vice CEO, who is walking the other way, walk onto the production floor, ignore the fact that none of us have safety boots on, and walk into a room with a 3*2 meter machine, several meters tall.

We edge around it, quietly hoping no one turns it on, since we would get slammed by it if they did, and walk down some stairs into the basement. Casually walk over a small river in the floor from a pipe that is leaking… what I really hope is water, and over to a shelf rack FILLED with the most MacGyver shit you ever did see.

Including, but not limited to, the 3D printed plastic block with a piston that repeatedly smacks half an aluminum nameplate onto the device it is testing. You see, it is a capacitive button, and it is tested by simulating a human finger pressing it many thousands of times. Then there is a sawed-off antenna, which is the end of a cable attached to the device via a nice thick bolt, so it can send fake signals into it.

And of course the 24 volt, 5 amp system that is turning a circuit board on and off again until it cracks.

We walk back out, remembering to step over the small river, which never even got a comment, and walk back to my department. It is SO great. It is like working in the ministry of silly walks by Monty Python. Like… Do I think I can bring value to this company? Like, making it better and more efficient? Yes. It would be hard not to!

And this is the largest pump manufacturer in the world! A super serious company with 4 billion dollars of revenue a year. And it is just… a NUTHOUSE

Like… NEVER believe the myth that corporations are competent.

Pioneering the Future of QA: Genqe.ai's Intelligent Testing Revolution

In today's fast-paced digital world, delivering high-quality software is essential for staying competitive and achieving SEO success. Genqe.ai is at the forefront of this transformation, redefining quality assurance (QA) with its AI-powered testing platform. By streamlining workflows and enhancing software performance, Genqe.ai empowers businesses to excel in both user experience and search engine rankings. This article explores how Genqe.ai is shaping the future of intelligent testing and driving digital growth.

The Shift to Intelligent Testing

As software complexity increases, traditional testing methods fall short, often leading to delays and overlooked errors. Genqe.ai revolutionizes QA by leveraging generative AI to deliver automated, accurate, and scalable testing solutions. This ensures faster releases, superior application quality, and a stronger online presence, making Genqe.ai a game-changer for businesses aiming to optimize their SEO performance.

How Genqe.ai Redefines Quality Assurance

Genqe.ai’s innovative platform transforms the testing process, making it smarter and more efficient. Here’s how it stands out:

1. Smart Test Case Generation

Using advanced AI, Genqe.ai automatically creates detailed test cases by analyzing application requirements, code, and usage patterns. This ensures comprehensive coverage, catching potential issues early and saving valuable time.

2. Proactive Bug Detection

Genqe.ai’s real-time monitoring identifies bugs and performance bottlenecks instantly, providing developers with precise recommendations for resolution. This proactive approach enhances software reliability and user satisfaction.

3. Flexible and Scalable Testing

From startups to large enterprises, Genqe.ai’s cloud-based platform adapts to projects of any scale. Its versatility ensures seamless testing for both simple apps and complex systems.

4. Actionable Analytics

Genqe.ai delivers in-depth reports and analytics, empowering teams to optimize testing strategies and improve software quality. These insights drive continuous improvement and long-term success.

Elevating SEO Performance with Genqe.ai

High-performing software is a key driver of SEO success, as search engines prioritize fast, reliable, and user-friendly applications. Genqe.ai enhances SEO outcomes in the following ways:

Optimized User Experience: By ensuring applications are error-free and intuitive, Genqe.ai boosts user engagement, lowering bounce rates and improving search rankings.

Accelerated Time-to-Market: Genqe.ai’s automated testing speeds up development cycles, enabling businesses to roll out updates quickly and keep content fresh for better SEO performance.

Mobile-Friendly Performance: Genqe.ai ensures apps function flawlessly across devices, aligning with mobile-first indexing and enhancing mobile SEO rankings.

Consistent Reliability: With real-time bug detection, Genqe.ai minimizes downtime, ensuring websites remain accessible and performant—crucial for maintaining high search visibility.

Why Genqe.ai Is the QA Solution of Tomorrow

Genqe.ai leads the charge in intelligent testing by combining cutting-edge AI with unparalleled flexibility. Its ability to automate repetitive tasks, provide real-time insights, and scale effortlessly makes it an essential tool for modern QA teams. By integrating Genqe.ai, businesses can achieve exceptional software quality, streamline development, and dominate search engine results.

Conclusion

The future of QA is intelligent, and Genqe.ai is paving the way. By harnessing AI to enhance testing efficiency and software performance, Genqe.ai helps businesses deliver outstanding user experiences and achieve SEO excellence. Embrace the power of intelligent testing with Genqe.ai and unlock new opportunities for digital growth.

Ready to transform your QA and boost your SEO? Explore Genqe.ai's innovative solutions at Genqe.ai today!
