#Content Detector

Explore tagged Tumblr posts

Text

Content Detector for AI Writing – Try ZeroGPT Free

ZeroGPT’s Content Detector helps you find out if any content was generated by AI. Whether it's an article, report, or student essay, our free tool checks for AI patterns and gives a clear result. Just paste your text and click check. You’ll know in seconds if the content is human-made or created by AI like ChatGPT. Fast, simple, and reliable—ZeroGPT is your trusted content detector.

0 notes

Text

ZeroGPT Content Detector: Ensure Text Authenticity

ZeroGPT’s Content Detector is a trusted tool for identifying AI-written content. With advanced detection capabilities, it ensures that your text is original and authentic. Designed for educators, content creators, and professionals, this tool is ideal for verifying essays, reports, or articles. ZeroGPT makes it easy to identify AI involvement in writing, offering detailed insights for better decision-making. Its user-friendly platform is free and accessible online, ensuring quick and reliable results. Protect your work's integrity with ZeroGPT’s Content Detector and detect AI-generated content effortlessly. Visit ZeroGPT today to get started.

0 notes

Text

ZeroGPT: Enhance Credibility with AI Checker Text

ZeroGPT's AI Checker Text provides users with accurate identification of AI-generated content within text. Seamlessly integrated into your workflow, AI Checker Text ensures transparency and integrity in written material. Whether you're analyzing articles, reports, or online discussions, ZeroGPT empowers users to maintain credibility and authenticity. Stay ahead of deceptive practices and make informed decisions with ZeroGPT's reliable AI checker text capabilities.

0 notes

Text

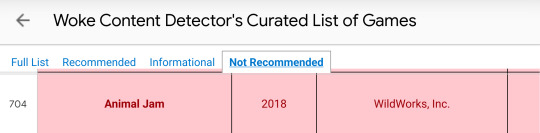

you heard it here first: animal jam is woke!!!

#that fucking woke content detector list is the most batshit thing ever. what is this#animal jam#jamblr

660 notes

·

View notes

Text

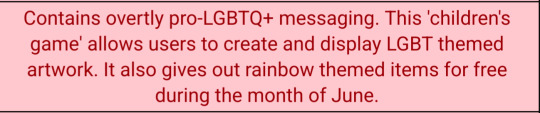

Large number of pronouns to choose from.

348 notes

·

View notes

Text

hit 2000 followers yippee

51 notes

·

View notes

Text

also speaking of woke content detector i find it fucking hilarious that corpse party is marked "woke, but informational" [seiko is the wokeness btw.] what is the informational exactly. is it the writers badly disguised fetish. is it the fact that no one can shut up about naomis boobs and ass also. is it the gore. what is it

#its good gorey horror but i enjoy everything else about this game Less And Less with each passing year i swear to GOD#corpse party#mossball.txt#woke content detector

99 notes

·

View notes

Text

Our Queen Coke Francis briefly got me thinking about Woke Content Detector again and as a fan of this particular franchise this is driving me nuts

Because this is basically what you see immediately after the game company’s logos

By their own logic they should be turning it off right away because this is literally a DEI statement

#also the World of Assassination is particularly anti-corporate#and several targets are women in power but maybe it’s different cuz you have to kill them#woke content detector#hitman#hitman world of assassination#io interactive#comfort games#political on main

48 notes

·

View notes

Text

Have yall seen the Woke gaming list? It's my new favourite thing. Here are a few of my favourite excerpts from said list.

Red Dead Redemption 2 is woke because Sadie Atler, the feminist, acts too much like a femanist.

Star Wars Battlefront Classic Collection is woke because it changed the death message from "Player killed himself" to "Player killed themself"

Battlefield 1 is woke because apparently black people didn't fight in world war 1

Dream Daddy: A Dad Dating Sim is woke because you can make your character, who is always a man, have had a previous relationship with another man.

A game called "Super Lesbian Animal RPG" is woke because it contains lesbian romances.

Destroy All Humans! Contains "Subtle anti-human messages"

Bugsnax is woke because the npc, Floofy Fizzlebean, is non binary.

No Man's Sky is woke because the aliens don't abide by human biological sex.

There are 1551 games on this list.

#Get a life bro#He has a huge issue with games using body type instead of male or female#help#undercooked-ice#eat the rich#badass#She woke on my content till I DEI#woke agenda#wokeness#woke liberal madness#woke content detector

33 notes

·

View notes

Text

#youtube comments#steam group#woke content detector#video games#disco elysium#super lesbian animal rpg#politics#communism#pokemon#professor oak#professor birch#professor elm#professor rowan#professor juniper

22 notes

·

View notes

Text

Extracting Training Data From Fine-Tuned Stable Diffusion Models

New Post has been published on https://thedigitalinsider.com/extracting-training-data-from-fine-tuned-stable-diffusion-models/

Extracting Training Data From Fine-Tuned Stable Diffusion Models

New research from the US presents a method to extract significant portions of training data from fine-tuned models.

This could potentially provide legal evidence in cases where an artist’s style has been copied, or where copyrighted images have been used to train generative models of public figures, IP-protected characters, or other content.

From the new paper: original training images are seen in the row above, and the extracted images are depicted in the row below. Source: https://arxiv.org/pdf/2410.03039

Such models are widely and freely available on the internet, primarily through the enormous user-contributed archives of civit.ai, and, to a lesser extent, on the Hugging Face repository platform.

The new model developed by the researchers is called FineXtract, and the authors contend that it achieves state-of-the-art results in this task.

The paper observes:

‘[Our framework] effectively addresses the challenge of extracting fine-tuning data from publicly available DM fine-tuned checkpoints. By leveraging the transition from pretrained DM distributions to fine-tuning data distributions, FineXtract accurately guides the generation process toward high-probability regions of the fine-tuned data distribution, enabling successful data extraction.’

Far right, the original image used in training. Second from right, the image extracted via FineXtract. The other columns represent alternative, prior methods. Please refer to the source paper for better resolution.

Why It Matters

The original trained models for text-to-image generative systems as Stable Diffusion and Flux can be downloaded and fine-tuned by end-users, using techniques such as the 2022 DreamBooth implementation.

Easier still, the user can create a much smaller LoRA model that is almost as effective as a fully fine-tuned model.

An example of a trained LORA, offered for free download at the hugely popular civitai domain. Such a model can be created in anything from minutes to a few hours, by enthusiasts using locally-installed open source software – and online, through some of the more permissive API-driven training systems. Source: civitai.com

Since 2022 it has been trivial to create identity-specific fine-tuned checkpoints and LoRAs, by providing only a small (average 5-50) number of captioned images, and training the checkpoint (or LoRA) locally, on an open source framework such as Kohya ss, or using online services.

This facile method of deepfaking has attained notoriety in the media over the last few years. Many artists have also had their work ingested into generative models that replicate their style. The controversy around these issues has gathered momentum over the last 18 months.

The ease with which users can create AI systems that replicate the work of real artists has caused furor and diverse campaigns over the last two years. Source: https://www.technologyreview.com/2022/09/16/1059598/this-artist-is-dominating-ai-generated-art-and-hes-not-happy-about-it/

It is difficult to prove which images were used in a fine-tuned checkpoint or in a LoRA, since the process of generalization ‘abstracts’ the identity from the small training datasets, and is not likely to ever reproduce examples from the training data (except in the case of overfitting, where one can consider the training to have failed).

This is where FineXtract comes into the picture. By comparing the state of the ‘template’ diffusion model that the user downloaded to the model that they subsequently created through fine-tuning or through LoRA, the researchers have been able to create highly accurate reconstructions of training data.

Though FineXtract has only been able to recreate 20% of the data from a fine-tune*, this is more than would usually be needed to provide evidence that the user had utilized copyrighted or otherwise protected or banned material in the production of a generative model. In most of the provided examples, the extracted image is extremely close to the known source material.

While captions are needed to extract the source images, this is not a significant barrier for two reasons: a) the uploader generally wants to facilitate the use of the model among a community and will usually provide apposite prompt examples; and b) it is not that difficult, the researchers found, to extract the pivotal terms blindly, from the fine-tuned model:

Essential keywords can usually be extracted blindly from the fine-tuned model using an L2-PGD attack over 1000 iterations, from a random prompt.

Users frequently avoid making their training datasets available alongside the ‘black box’-style trained model. For the research, the authors collaborated with machine learning enthusiasts who did actually provide datasets.

The new paper is titled Revealing the Unseen: Guiding Personalized Diffusion Models to Expose Training Data, and comes from three researchers across Carnegie Mellon and Purdue universities.

Method

The ‘attacker’ (in this case, the FineXtract system) compares estimated data distributions across the original and fine-tuned model, in a process the authors dub ‘model guidance’.

Through ‘model guidance’, developed by the researchers of the new paper, the fine-tuning characteristics can be mapped, allowing for extraction of the training data.

The authors explain:

‘During the fine-tuning process, the [diffusion models] progressively shift their learned distribution from the pretrained DMs’ [distribution] toward the fine-tuned data [distribution].

‘Thus, we parametrically approximate [the] learned distribution of the fine-tuned [diffusion models].’

In this way, the sum of difference between the core and fine-tuned models provides the guidance process.

The authors further comment:

‘With model guidance, we can effectively simulate a “pseudo-”[denoiser], which can be used to steer the sampling process toward the high-probability region within fine-tuned data distribution.’

The guidance relies in part on a time-varying noising process similar to the 2023 outing Erasing Concepts from Diffusion Models.

The denoising prediction obtained also provide a likely Classifier-Free Guidance (CFG) scale. This is important, as CFG significantly affects picture quality and fidelity to the user’s text prompt.

To improve accuracy of extracted images, FineXtract draws on the acclaimed 2023 collaboration Extracting Training Data from Diffusion Models. The method utilized is to compute the similarity of each pair of generated images, based on a threshold defined by the Self-Supervised Descriptor (SSCD) score.

In this way, the clustering algorithm helps FineXtract to identify the subset of extracted images that accord with the training data.

In this case, the researchers collaborated with users who had made the data available. One could reasonably say that, absent such data, it would be impossible to prove that any particular generated image was actually used in training in the original. However, it is now relatively trivial to match uploaded images either against live images on the web, or images that are also in known and published datasets, based solely on image content.

Data and Tests

To test FineXtract, the authors conducted experiments on few-shot fine-tuned models across the two most common fine-tuning scenarios, within the scope of the project: artistic styles, and object-driven generation (the latter effectively encompassing face-based subjects).

They randomly selected 20 artists (each with 10 images) from the WikiArt dataset, and 30 subjects (each with 5-6 images) from the DreamBooth dataset, to address these respective scenarios.

DreamBooth and LoRA were the targeted fine-tuning methods, and Stable Diffusion V1/.4 was used for the tests.

If the clustering algorithm returned no results after thirty seconds, the threshold was amended until images were returned.

The two metrics used for the generated images were Average Similarity (AS) under SSCD, and Average Extraction Success Rate (A-ESR) – a measure broadly in line with prior works, where a score of 0.7 represents the minimum to denote a completely successful extraction of training data.

Since previous approaches have used either direct text-to-image generation or CFG, the researchers compared FineXtract with these two methods.

Results for comparisons of FineXtract against the two most popular prior methods.

The authors comment:

‘The [results] demonstrate a significant advantage of FineXtract over previous methods, with an improvement of approximately 0.02 to 0.05 in AS and a doubling of the A-ESR in most cases.’

To test the method’s ability to generalize to novel data, the researchers conducted a further test, using Stable Diffusion (V1.4), Stable Diffusion XL, and AltDiffusion.

FineXtract applied across a range of diffusion models. For the WikiArt component, the test focused on four classes in WikiArt.

As seen in the results shown above, FineXtract was able to achieve an improvement over prior methods also in this broader test.

A qualitative comparison of extracted results from FineXtract and prior approaches. Please refer to the source paper for better resolution.

The authors observe that when an increased number of images is used in the dataset for a fine-tuned model, the clustering algorithm needs to be run for a longer period of time in order to remain effective.

They additionally observe that a variety of methods have been developed in recent years designed to impede this kind of extraction, under the aegis of privacy protection. They therefore tested FineXtract against data augmented by the Cutout and RandAugment methods.

FineXtract’s performance against images protected; by Cutout and RandAugment.

While the authors concede that the two protection systems perform quite well in obfuscating the training data sources, they note that this comes at the cost of a decline in output quality so severe as to render the protection pointless:

Images produced under Stable Diffusion V1.4, fine-tuned with defensive measures – which drastically lower image quality. Please refer to the source paper for better resolution.

The paper concludes:

‘Our experiments demonstrate the method’s robustness across various datasets and real-world checkpoints, highlighting the potential risks of data leakage and providing strong evidence for copyright infringements.’

Conclusion

2024 has proved the year that corporations’ interest in ‘clean’ training data ramped up significantly, in the face of ongoing media coverage of AI’s propensity to replace humans, and the prospect of legally protecting the generative models that they themselves are so keen to exploit.

It is easy to claim that your training data is clean, but it’s getting easier too for similar technologies to prove that it isn’t – as Runway ML, Stability.ai and MidJourney (amongst others) have found out in recent days.

Projects such as FineXtract are arguably portents of the absolute end of the ‘wild west’ era of AI, where even the apparently occult nature of a trained latent space could be held to account.

* For the sake of convenience, we will now assume ‘fine-tune and LoRA’, where necessary.

First published Monday, October 7, 2024

#2022#2023#2024#ai#AI Copyright Challenges#AI image generation#AI systems#algorithm#API#Art#Artificial Intelligence#artists#barrier#black box#box#challenge#classes#classifier-free guidance#Collaboration#columns#Community#comparison#content#content detector#copyright#Copyright Compliance#data#data extraction#data leakage#datasets

0 notes

Text

"whether pro or anti is unclear" im losing my mind

34 notes

·

View notes

Text

"This video game is woke!"

The game:

#I'm refering to that woke content detector thing#(if you wanna know what game I'm referring to then look in the reblogs)#because it's so funny i don't even know where to start#my stuff

29 notes

·

View notes

Text

How scandalous.

11 notes

·

View notes

Text

Fem cultist.................... oh.............

Bonus:

#Hrelp me she is so fucking hot#Good god. my bonar#I love women#And yes she gets little eyelashes on the mask because she's a diva I know she'd go out of her way to add them#Man I just remembered that T.erraria got put on the fucking Woke Content Detector list thing because of the gender change potion#Insane. happy pride month guys#my art#obsessive devotion 🌙

6 notes

·

View notes

Text

omori isnt on the woke content detector last i checked but when it does get put on there i think itll be marked as woke for the pure fact that you will have to apologize to a pink-haired girl. and also basils entire existence or whatever

#do you guys think it'll be marked for pro-lgbt content for that one picture of aubrey and kim..............#and also because the only straight relationship we really see ends in one of them yknow. Dying#pretty woke guys......... idk................#omori#mossball.txt#woke content detector

28 notes

·

View notes