#CrowdStrike Windows sensor


CrowdStrike Falcon sensor | CrowdStrike

CrowdStrike is a leading cybersecurity company known for its Falcon platform, which delivers threat prevention through cloud-based services. On July 19, 2024, however, a faulty update to the CrowdStrike Falcon sensor caused an IT outage that disrupted a number of industries, including banking, hospitals, and airlines. The event is a reminder of how much day-to-day operations depend on CrowdStrike's Falcon technology.

What Is CrowdStrike?

CrowdStrike is an American cybersecurity company based in Texas, known for its threat intelligence and endpoint protection products. Founded in 2011 by George Kurtz, Dmitri Alperovitch, and Gregg Marston, the company specializes in detecting and preventing cyber threats using its cloud-based Falcon platform. The platform's security features include CrowdStrike data protection, incident response, and antivirus support, and it caters primarily to businesses and large organizations. The company's emphasis on automation has established it as a trusted name in cybersecurity. Its clients span various sectors, including finance, healthcare, and government, all relying on CrowdStrike to safeguard their critical data and systems from sophisticated cyber threats.

What is the CrowdStrike Falcon sensor?

CrowdStrike Falcon is a cloud-based cybersecurity platform designed to provide comprehensive protection against cyber threats. It offers next-generation antivirus (NGAV), endpoint detection and response (EDR), and cyber threat intelligence through a single, lightweight CrowdStrike Falcon sensor. It is also known for its threat detection capabilities, which use machine learning and behavioural analytics to identify and mitigate attacks. The platform is fully cloud-managed, so it can scale across large environments without a performance impact. It integrates security and IT functions, aiming to reduce complexity and lower total costs while providing real-time protection against malware, ransomware, and other malicious activities.

What Happened in the IT Outage?

On July 19, 2024, CrowdStrike, a prominent enterprise security company, faced a major IT outage caused by a malformed update to its Falcon software. Falcon, a cloud-based Security as a Service (SaaS) platform, provides next-generation antivirus, endpoint detection and response (EDR), and other security features. The update, intended to enhance the product, contained a logic error that caused the CrowdStrike Windows sensor to crash each time it tried to process the update. The malfunction was particularly damaging because the Falcon sensor operates as part of the Windows operating system, loading as a kernel-mode driver rather than just running on top of it. As a result, when Falcon crashed, it took the entire Windows OS down with it, causing widespread disruptions.

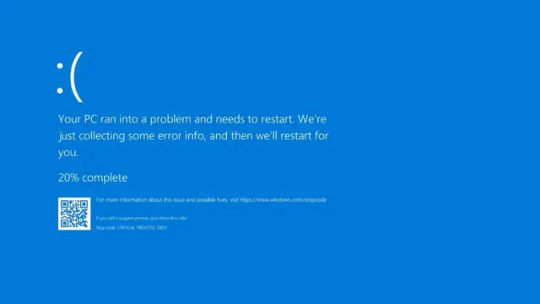

The incident resulted in a "Blue Screen of Death" for many Windows users and put their devices into a reboot loop. The outage affected multiple sectors, including transportation, media, and healthcare. Hospitals and health systems around the world experienced significant issues, with some facilities, such as Scheper Hospital in the Netherlands, closing their emergency departments. The problem was specific to Windows hosts, particularly those running Falcon sensor version 7.11 or above, while Mac and Linux users were not affected.

Impact of the crash on investors

The fall in CrowdStrike's stock on July 19, 2024, had significant implications for investors. Here are some key impacts:

Immediate Financial Losses

The crash led to a sharp decline in CrowdStrike's stock price, causing substantial immediate losses for investors holding significant positions in the company. The broader market also fell that day, with both the S&P 500 and the NASDAQ declining.

Market Sentiment and Tech Sector Impact

The crash contributed to a broader sell-off in the tech sector, affecting other major tech stocks like Apple, Microsoft, and Nvidia. This sector-wide decline was part of a larger rotation of investments as investors moved from large-cap tech stocks to small-cap stocks in anticipation of potential Federal Reserve rate cuts.

Investor Confidence

The crash likely eroded investor confidence, particularly among those heavily invested in tech stocks. This could lead to more conservative investment strategies moving forward as investors seek to mitigate risk.

Broader Economic Concerns

The event underscored existing concerns about the overall economic environment, including inflation and Federal Reserve policies. These macroeconomic factors played a role in the market's reaction and were significant in shaping investor behavior during this period.

Top CrowdStrike Alternatives

SentinelOne Singularity

SentinelOne Singularity is known for its threat detection and response capabilities, which are built on AI and automation. The platform identifies and neutralizes sophisticated cyber threats in real time, providing comprehensive protection for endpoints. Its standout features include:

Behavioral AI: Uses behavioral AI to detect and respond to threats without relying on signatures.

Automated Response: Capabilities for automated threat mitigation and remediation reduce the need for manual intervention.

Integration and Scalability: Integrates well with existing IT infrastructure and scales effectively to meet the needs of organizations of all sizes.

SentinelOne is particularly praised for its strong overall performance in various independent tests and real-world scenarios.

Microsoft Defender for Endpoint

Microsoft Defender for Endpoint is a robust option for organizations already invested in the Microsoft ecosystem. It offers seamless integration with other Microsoft products and services, ensuring a unified approach to cybersecurity. Key benefits include:

Deep Integration: Integrates deeply with Windows, Microsoft Azure, and Office 365, providing enhanced protection and streamlined management.

Advanced Threat Analytics: Utilizes advanced analytics and threat intelligence to identify and mitigate security threats.

User-Friendly Management: Provides a user-friendly interface for managing security across an organization’s endpoints.

Its ability to integrate with Microsoft services ensures efficient management and robust data protection, making it a natural fit for Microsoft-centric environments.

Palo Alto Networks Cortex XDR

Cortex XDR by Palo Alto Networks is designed for organizations looking to adopt an extended detection and response (XDR) approach. It aggregates data from multiple sources to provide a comprehensive view of security threats. Features include:

Holistic Threat Detection: Combines data from endpoints, network, and cloud to detect and respond to threats more effectively.

Advanced Analytics: Uses machine learning and advanced analytics to identify complex threats.

Integration Capabilities: Seamlessly integrates with other Palo Alto Networks products and third-party tools for enhanced security management.

Cortex XDR's sophisticated capabilities make it an excellent choice for organizations seeking an in-depth and integrated security solution.

Bitdefender GravityZone

Bitdefender GravityZone is known for its proactive endpoint protection and robust security features. It offers advanced threat prevention and responsive support, making it a reliable alternative for organizations seeking strong data protection. Key features include:

Advanced Threat Prevention: Utilizes machine learning, behavioral analysis, and heuristic methods to prevent advanced threats.

Centralized Management: Provides a centralized console for managing security across all endpoints.

Responsive Support: Known for its responsive customer support and comprehensive security coverage.

Bitdefender GravityZone’s focus on advanced threat prevention and responsive support makes it a dependable choice for maintaining high levels of security.

Conclusion

The CrowdStrike Falcon sensor, while designed to offer advanced endpoint protection and threat detection, encountered significant issues during the IT outage on July 19, 2024. The malformed update led to widespread disruptions, causing crashes across Windows systems and affecting various sectors globally. This incident underscores the critical need for robust testing and validation of cybersecurity updates to prevent extensive operational impacts.

Contact Blue Summit for solutions for your business. Blue Summit has collaborated with OdiTek Solutions, a frontline custom software development company trusted for its service quality and consistent delivery. Visit our partner's page today and get your business streamlined. If you want to know more about the CrowdStrike outage, feel free to visit the Blue Summit website, where you can also check our other services.


CrowdStrike Preliminary Incident Review

CrowdStrike released an explanation for what, exactly, happened that sent the world crashing down. Most of it is completely unnecessary background data, but this is the relevant passage:

What Happened on July 19, 2024? On July 19, 2024, two additional IPC Template Instances were deployed. Due to a bug in the Content Validator, one of the two Template Instances passed validation despite containing problematic content data.

(We released two updates on this date. They were checked with a program. There was a problem with the program, which said they were fine, even though one of them was not.)

Based on the testing performed before the initial deployment of the Template Type (on March 05, 2024), trust in the checks performed in the Content Validator, and previous successful IPC Template Instance deployments, these instances were deployed into production.

(We thought it would be fine because we tested the old stuff before, and we trusted our program.)

When received by the sensor and loaded into the Content Interpreter, problematic content in Channel File 291 resulted in an out-of-bounds memory read triggering an exception. This unexpected exception could not be gracefully handled, resulting in a Windows operating system crash (BSOD).

(It was not fine.)

Basically, they used a program to validate the updates they were sending out, and the program said the updates would work, so they sent them out. They did not actually try them on a computer to see if they would work before deploying them to every active computer on their network, thus sending all of them into an unrecoverable crash.
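The gap described here, a validator approving content that the interpreter then chokes on, can be illustrated with a toy model. This is only a sketch and not CrowdStrike's code: `TemplateInstance`, `EXPECTED_FIELDS`, `lenient_validator`, `interpret`, and `safe_to_deploy` are all hypothetical names, and a Python `IndexError` stands in for the out-of-bounds memory read.

```python
from dataclasses import dataclass

# Hypothetical: the number of fields the interpreter expects to read.
EXPECTED_FIELDS = 4


@dataclass(frozen=True)
class TemplateInstance:
    """Toy stand-in for a piece of content shipped to the sensor."""
    name: str
    fields: tuple


def lenient_validator(instance: TemplateInstance) -> bool:
    """A buggy check: it only confirms that some content is present."""
    return len(instance.fields) > 0


def interpret(instance: TemplateInstance) -> list:
    """Read every expected field by index, as the interpreter would."""
    # Raises IndexError when the instance has too few fields.
    return [instance.fields[i] for i in range(EXPECTED_FIELDS)]


def safe_to_deploy(instance: TemplateInstance) -> bool:
    """Trust the validator only after actually loading the content once."""
    if not lenient_validator(instance):
        return False
    try:
        interpret(instance)
    except IndexError:
        return False
    return True


good = TemplateInstance("instance-a", ("w", "x", "y", "z"))
bad = TemplateInstance("instance-b", ("w", "x", "y"))

for inst in (good, bad):
    print(inst.name, lenient_validator(inst), safe_to_deploy(inst))
```

Both instances pass the lenient validator, but only the first survives being loaded, which is the difference between checking an update with a program and trying it on a computer.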

Wow.


CrowdStrike'd and BitLocker'd

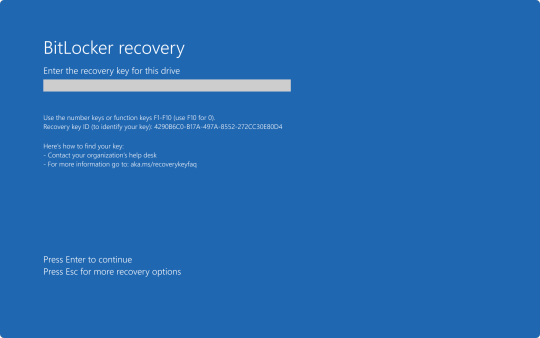

With yesterday's CrowdStrike outage, I'm sure a number of you are probably wondering "what does BitLocker have to do with any of this?" Well, it all has to do with an added layer of security many organizations use to keep data from being stolen if a computer ends up in the hands of an "unauthorized user."

To start, let me briefly explain what the CrowdStrike driver issue did and what the fix for it is.

After the update was automatically installed to computers running the CrowdStrike Falcon Sensor, a faulty driver file caused the Windows kernel on those computers to crash and display a Blue Screen of Death. Windows typically handles a crash like this by creating a crash log file and then rebooting. Since this driver would launch shortly after Windows finished booting, it would cause the operating system to crash and reboot again. When two crashes have occurred in sequence, Windows will automatically boot into Recovery Mode. That is why we saw several pictures of the Recovery Mode screen across social media yesterday.

Unfortunately, this update was automatically pushed out to around 8.5 million computers across several organizations, causing widespread chaos within a matter of hours. And the fix for this issue had to be performed by physically accessing each computer, which required those of us working in I.T. to run around several facilities, locate each affected computer, and apply the fix one by one.

The short and simple of the fix is one of two options. You can use the Recovery Mode that Windows has already booted into to navigate to Startup Settings and launch Safe Mode. Once Windows boots into Safe Mode, the technician can navigate to C:\Windows\System32\drivers\CrowdStrike and delete the file matching C-00000291*.sys. After that the computer can be rebooted as normal, and the crashing will stop.

Or the technician can open System Restore from Recovery Mode. And, assuming there is a recovery point, restore the computer back to a good known working state.
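For the file-deletion option, the matching step can be sketched in a few lines of Python. This is an illustration rather than an official tool: the function names are hypothetical, the pattern `C-00000291*.sys` is the one from CrowdStrike's published remediation steps, and the script assumes it is run with administrator rights from Safe Mode on a machine where Python happens to be available. It defaults to a dry run that only lists what it would delete.

```python
from pathlib import Path

# File name pattern from CrowdStrike's published remediation steps.
CHANNEL_FILE_PATTERN = "C-00000291*.sys"


def find_faulty_channel_files(driver_dir: Path) -> list:
    """Return the files in driver_dir that match the faulty channel file pattern."""
    return sorted(p for p in driver_dir.glob(CHANNEL_FILE_PATTERN) if p.is_file())


def remove_faulty_channel_files(driver_dir: Path, dry_run: bool = True) -> list:
    """List the matching files and delete them only when dry_run is False."""
    matches = find_faulty_channel_files(driver_dir)
    if not dry_run:
        for path in matches:
            path.unlink()
    return matches


if __name__ == "__main__":
    # Assumed default location; adjust if Windows is installed elsewhere.
    target = Path(r"C:\Windows\System32\drivers\CrowdStrike")
    for path in remove_faulty_channel_files(target, dry_run=True):
        print(f"would delete: {path}")
```

Passing `dry_run=False` performs the deletion; other channel files in the same directory are left alone because they do not match the pattern.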

Now, this may all seem simple enough. So why were so many organizations having trouble running this fix? That all has to do with BitLocker. As an added security measure, many organizations use BitLocker on their computers to perform full-disk encryption. This is done so that if a computer is lost or stolen and ends up in the hands of an "unauthorized user," they will not be able to access any of the data stored on the computer without a password to log into the computer, or the computer's BitLocker recovery key.

This presented a problem when trying to restore all of these affected computers, because when either trying to launch Safe Mode or System Restore, the user would be prompted for the BitLocker recovery key.

In my organization's case, we found we could save time by giving our end users the steps to perform one of the two fixes on their own. But the problem we continue to run into is the need for these BitLocker recovery keys. In my case, I've been fielding several calls where I've had end users walking from one computer to another while I provide them with the key as they walk through reverting to a previous restore point.
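Fielding those calls is essentially a lookup: the BitLocker recovery screen shows a key ID, the end user reads out the first few characters, and the technician reads back the matching recovery key. A minimal sketch of that lookup follows, assuming the organization's keys have already been exported into a mapping of key ID to recovery key. `RECOVERY_KEYS` and `lookup_recovery_key` are hypothetical, and every value below is a made-up placeholder.

```python
# Hypothetical export: key ID -> recovery key. All values are placeholders.
RECOVERY_KEYS = {
    "A1B2C3D4-0000-0000-0000-000000000001":
        "111111-222222-333333-444444-555555-666666-777777-888888",
    "A1B2FFFF-0000-0000-0000-000000000002":
        "123456-123456-123456-123456-123456-123456-123456-123456",
}


def lookup_recovery_key(key_id_prefix: str, keys: dict) -> str:
    """Return the recovery key whose key ID starts with the given prefix."""
    prefix = key_id_prefix.strip().upper()
    if not prefix:
        raise ValueError("key ID prefix must not be empty")
    matches = [key for key_id, key in keys.items()
               if key_id.upper().startswith(prefix)]
    # Refuse to guess: a missing or ambiguous prefix needs more characters.
    if len(matches) != 1:
        raise LookupError(
            f"expected exactly one match for {prefix!r}, found {len(matches)}"
        )
    return matches[0]


print(lookup_recovery_key("a1b2c3d4", RECOVERY_KEYS))
```

Requiring exactly one match means a short prefix such as `A1B2` is rejected instead of returning the wrong machine's key.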

Again, a pretty long story, but hopefully that can provide some context as to what's been happening over the past 48 hours.


CrowdStrike code update bricking Windows machines around the world

Source: https://www.theregister.com/2024/07/19/crowdstrike_falcon_sensor_bsod_incident/

More info:

https://isc.sans.edu/diary/rss/31094

https://therecord.media/crowdstrike-update-crashes-windows-devices-globally

https://www.crowdstrike.com/blog/statement-on-windows-sensor-update/


CrowdStruck

By Edward Zitron • 19 Jul 2024

Soundtrack: EL-P - Tasmanian Pain Coaster (feat. Omar Rodriguez-Lopez & Cedric Bixler-Zavala)

When I first began writing this newsletter, I didn't really have a goal, or a "theme," or anything that could neatly characterize what I was going to write about other than that I was on the computer and that I was typing words.

As it grew, I wrote the Rot Economy, and the Shareholder Supremacy, and many other pieces that speak to a larger problem in the tech industry — a complete misalignment in the incentives of most of the major tech companies, which have become less about building new technologies and selling them to people and more about capturing monopolies and gearing organizations to extract things through them.

Every problem you see is a result of a tech industry (from the people funding the earliest startups to the trillion-dollar juggernauts that dominate our lives) that is no longer focused on the creation of technology with a purpose, and organizations driven toward a purpose. Everything is about expressing growth, about showing how you will dominate an industry rather than serve it, about providing metrics that speak to the paradoxical notion that you'll grow forever without any consideration of how you'll live forever. Legacies are now subordinate to monopolies, current customers are subordinate to new customers, and "products" are considered a means to introduce a customer to a form of parasite designed to punish the user for even considering moving to a competitor.

What's happened today with Crowdstrike is completely unprecedented (and I'll get to why shortly), and on the scale of the much-feared Y2K bug that threatened to ground the entirety of the world's computer-based infrastructure once the Year 2000 began.

You'll note that I didn't write "over-hyped" or anything dismissive of Y2K's scale, because Y2K was a huge, society-threatening calamity waiting to happen, and said calamity was averted through a remarkable, $500 billion industrial effort that took a decade to manifest because the seriousness of such a significant single point of failure would have likely crippled governments, banks and airlines.

People laughed when nothing happened on January 1 2000, assuming that all that money and time had been wasted, rather than being grateful that an infrastructural weakness was taken seriously, that a single point of failure was identified, and that a crisis was averted by investing in stopping bad stuff happening before it does.

As we speak, millions — or even hundreds of millions — of different Windows-based computers are now stuck in a doom-loop, repeatedly showing users the famed "Blue Screen of Death" thanks to a single point of failure in a company called Crowdstrike, the developer of a globally-adopted cyber-security product designed, ironically, to prevent the kinds of disruption that we’ve witnessed today. And for reasons we’ll get to shortly, this nightmare is going to drag on for several days (if not weeks) to come.

The product, called Crowdstrike Falcon Sensor, is an EDR system (which stands for Endpoint Detection and Response). If you aren’t a security professional and your eyes have glazed over, I’ll keep this brief. An EDR system is designed to identify hacking attempts, remediate them, and prevent them. They’re big, sophisticated, and complicated products, and they do a lot of things that are hard to build with the standard tools available to Windows developers.

And so, to make Falcon Sensor work, Crowdstrike had to build its own kernel driver. Now, kernel drivers operate at the lowest level of the computer. They have the highest possible permissions, but they operate with the fewest guardrails. If you’ve ever built your own computer, or you remember what computers were like in the dark days of Windows 98, you know that a single faulty kernel driver can wreak havoc on the stability of your system.

The problem here is that Crowdstrike pushed out an evidently broken kernel driver that locked whatever system that installed it in a permanent boot loop. The system would start loading Windows, encounter a fatal error, and reboot. And reboot. Again and again. It, in essence, rendered those machines useless.

It's convenient to blame Crowdstrike here, and perhaps that's fair. This should not have happened. On a basic level, whenever you write (or update) a kernel driver, you need to know it’s actually robust and won’t shit the bed immediately. Regrettably, Crowdstrike seemingly borrowed Boeing’s approach to quality control, except instead of building planes where the doors fly off at the most inopportune times (specifically, when you’re cruising at 35,000ft), it released a piece of software that blew up the transportation and banking sectors, to name just a few.

It created a global IT outage that has grounded flights and broken banking services. It took down the BBC’s flagship kids TV channel, infuriating parents across the British Isles, as well as Sky News, which, when it was able to resume live broadcasts, was forced to do so without graphics. In essence, it was forced back to the 1950s — giving it an aesthetic that matches the politics of its owner, Rupert Murdoch. By no means is this an exhaustive list of those affected, either.

The scale and disruption caused by this incident is unlike anything we’ve ever seen before. Previous incidents — particularly rival ransomware outbreaks, like Wannacry — simply can’t compare to this, especially when we’re looking at the disruption and the sheer scale of the problem.

Still, if your day was ruined by this outage, at least spare a thought for those who’ll have to actually fix it. Because those machines affected are now locked in a perpetual boot loop, it’s not like Crowdstrike can release a software patch and call it a day. Undoing this update requires some users to have to individually go to each computer, loading up safe mode (a limited version of Windows with most non-essential software and drivers disabled), and manually removing the faulty code. And if you’ve encrypted your computer, that process gets a lot harder. Servers running on cloud services like Amazon Web Services and Microsoft Azure — you know, the way most of the internet's infrastructure works — require an entirely separate series of actions.

If you’re on a small IT team and you’re supporting hundreds of workstations across several far-flung locations — which isn’t unusual, especially in sectors like retail and social care — you’re especially fucked. Say goodbye to your weekend. Your evenings. Say goodbye to your spouse and kids. You won’t be seeing them for a while. Your life will be driving from site to site, applying the fix and moving on. Forget about sleeping in your own bed, or eating a meal that wasn’t bought from a fast food restaurant. Good luck, godspeed, and God bless. I do not envy you.

The significance of this failure — which isn't a breach, by the way, and in many respects is far worse, at least in the disruption caused — is not in its damage to individual users, but to the amount of technical infrastructure that runs on Windows, and that so much of our global infrastructure relies on automated enterprise software that, when it goes wrong, breaks everything.

It isn't about the number of computers, but the number of them that underpin things like security checkpoints or the systems that run airlines, banks, and hospitals, all running as much automated software as possible so that costs can be kept down.

The problem here is systemic — that there is a company that the majority of people affected by this outage had no idea existed until today that Microsoft trusted to the extent that they were able to push an update that broke the back of a huge chunk of the world's digital infrastructure.

Microsoft, as a company, instead of building the kind of rigorous security protocols that would, say, rigorously test something that connects to what seems to be a huge proportion of Windows computers. Microsoft, in particular, really screwed up here. As pointed out by Wired, the company vets and cryptographically signs all kernel drivers — which is sensible and good, because kernel drivers have an incredible amount of access, and thus can be used to inflict serious harm — with this testing process usually taking several weeks.

How then did this slip through its fingers? For this to have happened, two companies needed to screw up epically. And boy, they did.

What we're seeing today isn't just a major fuckup, but the first of what will be many systematic failures — some small, some potentially larger — that are the natural byproduct of the growth-at-all-costs ecosystem where any attempt to save money by outsourcing major systems is one that simply must be taken to please the shareholder.

The problem with the digitization of society — or, more specifically, the automation of once-manual tasks — is that it introduces a single point of failure. Or, rather, multiple single points of failure. Our world, our lifestyle and our economy, is dependent on automation and computerization, with these systems, in turn, dependent on other systems to work. And if one of those systems breaks, the effects ricochet outwards, like ripples when you cast a rock into a lake.

Today’s Crowdstrike cock-up is just the latest example of this, but it isn’t the only one. Remember the SolarWinds hack in 2020, when Russian state-linked hackers gained access to an estimated 18,000 companies and public sector organizations — including NATO, the European Parliament, the US Treasury Department, and the UK’s National Health Service — by compromising just one service — SolarWinds Orion?

Remember when Okta — a company that makes software that handles authentication for a bunch of websites, governments, and businesses — got hacked in 2023, and then lied about the scale of the breach? And then do you remember how those hackers leapfrogged from Okta to a bunch of other companies, most notably Cloudflare, which provides CDN and DDOS protection services for pretty much the entire internet?

That whole John Donne quote — “No man is an island” — is especially true when we’re talking about tech, because when you scratch beneath the surface, every system that looks like it’s independent is actually heavily, heavily dependent on services and software provided by a very small number of companies, many of whom are not particularly good.

This is as much a cultural failing as it is a technological one, the result of management geared toward value extraction — building systems that build monopolies by attaching themselves to other monopolies. Crowdstrike went public in 2019, and immediately popped on its first day of trading thanks to Wall Street's appreciation of Crowdstrike moving away from a focused approach to serving large enterprise clients, building products for small and medium-sized businesses by selling through channel partners — in effect outsourcing both product sales and the relationship with a client that would tailor a business' solution to a particular need.

Crowdstrike's culture also appears to fucking suck. A recent Glassdoor entry referred to Crowdstrike as "great tech [with] terrible culture" with no work life balance, with "leadership that does not care about employee well being." Another from June claimed that Crowdstrike was "changing culture for the street,” with KPIs (as in metrics related to your “success” at the company) “driving behavior more than building relationships” with a serious lack of experience in the public sector in senior management. Others complain of micromanagement, with one claiming that “management is the biggest issue,” with managers “ask[ing] way too much of you…and it doesn’t matter if you do what they ask since they’re not even around to check on you,” and another saying that “management are arrogant” and need to “stop lying to the market on product capability.”

While I can’t say for sure, I’d imagine an organization with such powerful signs of growth-at-all-costs thinking — a place where you “have to get used to the pressure” that’s a “clique that you’re not in” — likely isn’t giving its quality assurance teams the time and space to make sure that there aren’t any Kaiju-level security threats baked into an update. And that assumes it actually has a significant QA team in-house, and hasn’t just (as with many companies) outsourced the work to a “bodyshop” like Wipro or Infosys or Tata.

And don’t think I’m letting Microsoft off the hook, either. Assuming the kernel driver testing roles are still being done in-house, do you think that these testers (who have likely seen their friends laid off at a time when the company was highly profitable, and been denied raises when their well-fed CEO took home hundreds of millions of dollars for doing a job he’s eminently bad at) are motivated to do their best work?

And this is the culture that’s poisoned almost the entirety of Silicon Valley. What we’re seeing is the societal cost of moving fast and breaking things, of Marc Andreessen considering “risk management the enemy,” of hiring and firing tens of thousands of people to please Wall Street, of seeking as many possible ways to make as much money as possible to show shareholders that you’ll grow, even if doing so means growing at a pace that makes it impossible to sustain organizational and cultural stability. When you aren’t intentional in the people you hire, the people you fire, the things you build and the way that they’re deployed, you’re going to lose the people that understand the problems they’re solving, and thus lack the organizational ability to understand the ways that they might be solved in the future.

This is dangerous, and also a dark warning for the future. Do you think that Facebook, or Microsoft, or Google — all of whom have laid off over 10,000 people in the last year — have done so in a conscientious way that means that the people left understand how their systems run and their inherent issues? Do you think that the management-types obsessed with the unsustainable AI boom are investing heavily in making sure their organizations are rigorously protected against, say, one bad line of code? Do they even know who wrote the code of their current systems? Is that person still there? If not, is that person at least contracted to make sure that something nuanced about the system in question isn’t mistakenly removed?

They’re not. They’re not there anymore. Only a few months ago Google laid off 200 employees from the core of its organization, outsourcing their roles to Mexico and India in a cost-cutting measure the quarter after the company made over $23 billion in profit. Silicon Valley, and big tech writ large, is not built to protect against situations like the one we’re seeing today, because their culture is cancerous. It values growth at all costs, with no respect for the human capital that empowers organizations or the value of building rigorous, quality-focused products.

This is just the beginning. Big tech is in the throes of perdition, teetering over the edge of the abyss, finally paying the harsh cost of building systems as fast as possible. This isn’t simply moving fast or breaking things, but doing so without any regard for the speed at which you’re doing so and firing the people that broke them, the people who know what’s broken, and possibly the people that know how to fix them.

And it’s not just tech! Boeing, a company I’ve already shat on in this post and one I’ll likely return to in future newsletters (largely because it exemplifies the short-sightedness of today’s managerial class), has, over the past 20 years or so, spun off huge parts of the company (parts that, at one point, were vitally important) into separate companies, laid off thousands of employees at a time, and outsourced software dev work to $9-an-hour bodyshop engineers. It hollowed itself out until there was nothing left.

And tell me, knowing what you know about Boeing today, would you rather get into a 737 Max or an Airbus A320neo? Enough said.

As these organizations push their engineers harder, said engineers will turn to AI-generated code, poisoning codebases with insecure and buggy code as companies shed staff to keep up with Wall Street’s demands in ways that I’m not sure people are capable of understanding. The companies that run the critical parts of our digital lives do not invest in maintenance or infrastructure with the intentionality that’s required to prevent the kinds of massive systemic failures you see today, and I need you all to be ready for this to happen again.

This is the cost of the Rot Economy — systems used by billions of people held up by flimsy cultures and brittle infrastructure maintained with the diligence of an absentee parent. This is the cost of arrogance, of rewarding managerial malpractice, of promoting speed over safety and profit over people.

Every single major tech organization should see today as a wakeup call — a time to reevaluate the fundamental infrastructure behind every single tech stack.

What I fear is that they’ll simply see it as someone else’s problem - which is exactly how we got here in the first place.


Text

The 'Fake' CrowdStrike Worker Who Crippled Windows Users Worldwide

"What is CrowdStrike? Why is my Windows computer showing the Blue Screen of Death? Who is responsible for the biggest-ever IT outage?"

These are questions that have dominated conversations across the globe after an outage brought Windows computers to their knees. While it is now known that an update to 'Falcon Sensor', an anti-virus program by CrowdStrike, was responsible for the massive worldwide outage, people have still been wondering how such a defective update was allowed to be released and who was behind it.

Enter Vincent Flibustier, an X user posing as a CrowdStrike employee. Vincent broke the internet with an altered, AI-generated photo of him outside the CrowdStrike office along with the caption, "First day at Crowdstrike, pushed a little update and taking the afternoon off."

The photo went viral within minutes and already has nearly 400,000 likes and has been shared by over 36,000 users.

Two hours later, Flibustier posted another update - the company had fired him. He also shared a short video where he takes 'responsibility' for causing the global outage.

Vincent Flibustier also changed his X (formerly Twitter) bio to accompany the parody. His bio said, "Former Crowdstrike employee, fired for an unfair reason, only changed 1 line of code to optimize. Looking for a job as Sysadmin."

While he was trying to make a joke about it, thousands online bought his satire and thought he was the one responsible for the Blue Screen of Death (BSOD) on their system. Airlines, banks, TV channels, and several other industries were scrambling to deal with the issue, and people on social media went into overdrive after finding the 'culprit'.

While several users praised him for ensuring that they don't have to work on a Friday, some posted abusive messages about him.

The truth: Vincent is a satirical writer who runs Nordpresse, a Belgian parody news site. He appeared as a guest on France TV, where he remarked, "People are drawn to stories that confirm their preconceptions."

Explaining further why people on the internet immediately latched on to his joke, he said, "No culprit named yet, I bring it on a platter, people like to have a culprit. The culprit seems completely stupid, he is proud of his stupidity, he takes his afternoon off on the first day of work. This falls right into a huge buzz in which people absolutely need to have new information, and a fake is by nature new, you won't read it anywhere else."

He also said that the post was shared by those who knew it was a joke, but the amplification sent it into a zone where people took every word of the tweet literally.

Millions of users across the globe are still facing issues, with both Microsoft and CrowdStrike trying to resolve them as soon as possible.

The latest version of its Falcon Sensor software was meant to make CrowdStrike clients' systems more secure against hacking by updating the threats it defends against. However, faulty code in the update files resulted in one of the most widespread tech outages in recent years for companies using Microsoft's Windows operating system.

Problems came to light quickly after the update was rolled out on Friday, and users posted pictures on social media of computers with blue screens displaying error messages. These are known in the industry as "blue screens of death."


Text

Microsoft Outage: CrowdStrike and the 'Blue Screen of Death' Affecting Users Worldwide


Global Impact

Microsoft Windows users across the globe, including those in India, Australia, Germany, the United States, and the UK, are experiencing a critical issue leading to the infamous 'Blue Screen of Death' (BSOD). This problem causes systems to restart or shut down automatically. Notably, companies like Dell Technologies have attributed this crash to a recent update from CrowdStrike, although Microsoft has yet to confirm this as the root cause of the outage.

Affected Sectors

The outage, which began Thursday evening, primarily impacted Microsoft's Central US region. Essential systems for numerous airlines were crippled, affecting American Airlines, Frontier Airlines, Allegiant, and Sun Country in the US, as well as IndiGo and other airlines in India. Additionally, the disruption extended to banks, supermarkets, media outlets, and other businesses, highlighting the significant reliance on cloud services for critical infrastructure.

CrowdStrike: An Overview

CrowdStrike is a prominent cybersecurity platform that offers security solutions to both users and businesses. It employs a single sensor and a unified threat interface with attack correlation across endpoints, workloads, and identity. One of its key products, Falcon Identity Threat Protection, is designed to prevent identity-driven breaches in real time.

The Issue with CrowdStrike's Update

Reports indicate that a buggy update caused CrowdStrike’s Falcon Sensor to malfunction and conflict with the Windows operating system. This has led to widespread BSOD errors. CrowdStrike has acknowledged the problem, stating, “Our Engineers are actively working to resolve this issue and there is no need to open a support ticket.” The company has promised to update users once the issue is resolved.

Microsoft's Response

Microsoft confirmed that the Azure outage was resolved early Friday. However, this incident serves as a stark reminder of the potential consequences when critical infrastructure heavily relies on cloud services. The outage underscores the need for robust and reliable cybersecurity measures to prevent such widespread disruptions in the future.

Understanding the Blue Screen of Death

The Blue Screen of Death (BSOD) is a critical error screen on Windows operating systems that appears when the system crashes due to a severe issue, preventing it from operating safely. When a BSOD occurs, the computer restarts unexpectedly, often resulting in the loss of unsaved data. The error message typically states, “Your PC ran into a problem and needs to restart. We are just collecting some error info, then we will restart for you.”

This type of error is not exclusive to Windows; similar issues can be seen across Mac and Linux operating systems as well.

While the exact cause of the widespread BSOD errors remains unclear, the incident highlights the interconnectedness and vulnerability of modern digital infrastructure. Both Microsoft and CrowdStrike are working to resolve the issues and restore normalcy to affected users and businesses worldwide.


Text

except crowdstrike also uses kernel extensions in their linux sensors and the exact same thing could have happened to global linux infrastructure? the one company who bricked our global IT infra was not microsoft. it was crowdstrike, full stop.

really the lesson is don't install a fucking rootkit on your endpoints unless you have an out of band controller

inb4 i get called a windoze shill/enjoyer: my workplace has literally banned windows and i will die on that hill

it's honestly nuts to me that critical infrastructure literally everywhere went down because everyone is dependent on windows and instead of questioning whether we should be letting one single company handle literally the vast majority of global technological infrastructure, we're pointing and laughing at a subcontracted company for pushing a bad update and potentially ruining themselves

like yall linux has been here for decades. it's stable. the bank I used to work for is having zero outage on their critical systems because they had the foresight to migrate away from windows-only infrastructure years ago whereas some other institutions literally cannot process debit card transactions right now.

global windows dependence is a massive risk and this WILL happen again if something isn't done to address it. one company should not be able to brick our global infrastructure.


Text

CrowdStrike Failure

This week I wanted to talk about a recent event that likely affected all of us. I'm sure many of us heard about the event in July, when many Microsoft Windows devices were showing the “Blue Screen of Death.” Many thought it was just a widespread Microsoft Windows failure, but the problem actually lay with a third-party cybersecurity vendor, CrowdStrike. Because of a flawed update to CrowdStrike's Falcon application, many industries faced serious disruptions, including airports, banks, and healthcare systems.

Falcon is CrowdStrike’s endpoint detection and response platform, and when the update was sent out, it sent Windows machines running Falcon into an endless reboot cycle, leading to the dreaded “blue screen of death.” According to CrowdStrike, the fault that triggered the outage was in a channel file, which is “stored in C:\Windows\System32\drivers\CrowdStrike\ with a filename beginning ‘C-00000291-’ and ending ‘.sys’. Channel File 291 passes information to the Falcon sensor about how to evaluate ‘named pipe’ execution, which Windows systems use for intersystem or interprocess communication. These commands are not inherently malicious but can be misused.”

The endless cycle of rebooting was caused by a logic error: problematic content loaded from the channel file resulted in an out-of-bounds memory read, which triggered an exception. The exception could not be handled gracefully, so the Windows OS crashed and rebooted. Because this update was part of CrowdStrike’s rapid response content program, it went through less rigorous testing than other updates to Falcon’s software agents.

Following the initial incident, CrowdStrike reported on the testing lapse that allowed the flawed update to be pushed to customers. It blamed a gap in the company's testing software that caused its validator tool to miss a flaw in the defective channel file content update.
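To make the idea of a validator gap a bit more concrete, here is a minimal Python sketch of the general failure mode: content that asks for an input slot the consuming code never supplies. This is not CrowdStrike's code or file format. `Rule`, `validate_rule`, and `evaluate` are hypothetical names I made up for illustration, and the only point is that the same bounds check should run both before shipping the content and again when the content is read.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical content rule: lists the input slots it wants to read."""
    name: str
    field_indexes: tuple[int, ...]


def validate_rule(rule: Rule, supplied_inputs: int) -> list[str]:
    """Return a list of problems; an empty list means every index is in range."""
    problems = []
    for index in rule.field_indexes:
        if index < 0 or index >= supplied_inputs:
            problems.append(
                f"{rule.name}: field {index} is outside the "
                f"{supplied_inputs} inputs the consumer supplies"
            )
    return problems


def evaluate(rule: Rule, inputs: list[str]) -> list[str]:
    """Consumer side: re-check bounds at read time instead of trusting content."""
    problems = validate_rule(rule, len(inputs))
    if problems:
        # Fail in a controlled way rather than reading past the end.
        raise ValueError("; ".join(problems))
    return [inputs[i] for i in rule.field_indexes]
```

In a memory-safe language like Python, the bad read would surface as an exception either way. In kernel-mode native code, an unchecked read of this kind can take the whole operating system down, which is why the check matters so much there.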

Overall, CrowdStrike and Microsoft are not bad companies. They do follow good security practices and procedures. I do think this event was a good insight into how much we rely on the digital realm in our everyday lives: not only how easy it is to take it away, but also how much of our lives is affected by its absence.


Quote

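We're dealing with every single one of them, hundreds of machines. The ones that got stuck in a reboot loop can't even take the patch. We're going through them carefully, one at a time. To me and everyone like me: hang in there, all of us!

[B! windows] Windows devices around the world are hitting the blue screen (BSoD) one after another! Large-scale outage in progress [updated 17:10] / Driver included in "CrowdStrike Falcon Sensor" may be the cause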


Text

CrowdStrike reveals the root cause of the global system outages

Cybersecurity company CrowdStrike has published its root cause analysis detailing the failed Falcon Sensor software update that paralyzed millions of Windows devices worldwide. The “Channel File 291” incident, as originally outlined in its Preliminary Post Incident Review (PIR), was traced to a content validation issue that arose after the introduction…


Text

Source for "logic error": "On July 19, 2024 at 04:09 UTC, as part of ongoing operations, CrowdStrike released a sensor configuration update to Windows systems. Sensor configuration updates are an ongoing part of the protection mechanisms of the Falcon platform. This configuration update triggered a logic error resulting in a system crash and blue screen (BSOD) on impacted systems." https://www.crowdstrike.com/blog/technical-details-on-todays-outage/ (retrieved July 20th, 2024)

idk if people on tumblr know about this but a cybersecurity software called crowdstrike just did what is probably the single biggest fuck up in any sector in the past 10 years. it's monumentally bad. literally the most horror-inducing nightmare scenario for a tech company.

some info, crowdstrike is essentially an antivirus software for enterprises. which means normal laypeople cant really get it, they're for businesses and organisations and important stuff.

so, on a friday evening (it of course wasnt friday everywhere but it was friday evening in oceania which is where it first started causing damage due to europe and na being asleep), crowdstrike pushed out an update to their windows users that caused a bug.

before i get into what the bug is, know that friday evening is the worst possible time to do this because people are going home. the weekend is starting. offices dont have people in them. this is just one of many perfectly placed failures in the rube goldberg machine of crowdstrike. there's a reason friday is called 'dont push to live friday' or more to the point 'dont fuck it up friday'

so, at 3pm on friday, an update comes rolling into crowdstrike users which is automatically implemented. this update immediately causes the computer to blue screen of death. very very bad. but it's not simply a 'you need to restart' crash, because the computer then gets stuck in a boot loop.

this is the worst possible thing because, in a boot loop state, a computer is never really able to get to a point where it can do anything. like download a fix. so there is nothing crowdstrike can do to remedy this death update anymore. it is now left to the end users.

it was pretty quickly identified what the problem was. you had to boot it in safe mode, and a very small file needed to be deleted. or you could just rename crowdstrike to something else so windows never attempts to use it.
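for the curious, the 'find the very small file' part of that fix can be sketched in python. this is only an illustration of the matching logic, not a recovery tool: python wouldn't normally be available on a machine booted into safe mode, `find_channel_files` and `remove_channel_files` are names made up for this sketch, and the pattern is built from the widely reported file name for the bad channel file (beginning 'C-00000291-' and ending '.sys'). it defaults to a dry run so nothing gets deleted unless you ask for it.

```python
from pathlib import Path

# Pattern built from the reported file name of the faulty channel file:
# begins with "C-00000291-" and ends with ".sys".
CHANNEL_FILE_PATTERN = "C-00000291-*.sys"


def find_channel_files(driver_dir: Path) -> list[Path]:
    """Return matching files in driver_dir, sorted by name. Deletes nothing."""
    return sorted(p for p in driver_dir.glob(CHANNEL_FILE_PATTERN) if p.is_file())


def remove_channel_files(driver_dir: Path, dry_run: bool = True) -> list[Path]:
    """List the matching files and, only when dry_run is False, delete them."""
    matches = find_channel_files(driver_dir)
    if not dry_run:
        for path in matches:
            path.unlink()
    return matches
```

the hard part was never the matching. it was getting hands on every single affected machine to run the equivalent of this by hand.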

it's a fairly easy fix in the grand scheme of things, but the issue is that it is affecting enterprises. which can have a looooot of computers. in many different locations. so an IT person would need to manually fix hundreds of computers, sometimes in whole other cities and perhaps even other countries if theyre big enough.

another fuck up crowdstrike did was they did not stagger the update, so they could catch any mistakes before they wreaked havoc. (and also how how HOW do you not catch this before deploying it. this isn't a code oopsie this is a complete failure of quality assurance that probably permeates the whole company to not realise their update was an instant kill). they rolled it out to every one of their clients in the world at the same time.
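what staggering an update means, roughly, in python. this is a sketch under my own assumptions, not how crowdstrike or anyone else actually deploys: `staged_rollout`, the wave sizes (1%, 10%, 50%, 100%) and the zero-tolerance failure threshold are all invented for illustration, and `apply_update` stands in for 'push the update to a host and check it came back healthy'.

```python
from typing import Callable, Sequence


def staged_rollout(
    hosts: Sequence[str],
    apply_update: Callable[[str], bool],
    stage_fractions: Sequence[float] = (0.01, 0.10, 0.50, 1.0),
    max_failure_rate: float = 0.0,
) -> tuple[list[str], bool]:
    """Update hosts in growing waves; stop as soon as a wave fails too often.

    Returns (hosts that were attempted, whether the rollout completed).
    """
    attempted: list[str] = []
    done = 0
    for fraction in stage_fractions:
        # Each wave extends the cumulative total to `fraction` of the fleet,
        # and always includes at least one new host while any remain.
        target = max(done + 1, int(len(hosts) * fraction))
        target = min(target, len(hosts))
        wave = hosts[done:target]
        if not wave:
            continue
        failures = sum(0 if apply_update(host) else 1 for host in wave)
        attempted.extend(wave)
        done = target
        if failures / len(wave) > max_failure_rate:
            return attempted, False  # halt: later waves never get the update
    return attempted, done == len(hosts)
```

with an update that kills every machine it touches, a rollout like this stops after the first small wave instead of reaching the whole fleet. that first wave is still bricked, but it is a tiny fraction of the damage.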

and this seems pretty hilarious on the surface. i was havin a good chuckle as eftpos went down in the store i was working at, chaos was definitely ensuing lmao. im in aus, and banking was literally down nationwide.

but then you start hearing about the entire country's planes being grounded because the airport's computers are bricked. and hospitals having no computers anymore. emergency call centres crashing. and you realise that, wow. crowdstrike just killed people probably. this is literally the worst thing possible for a company like this to do.

crowdstrike was kinda on the come up too, they were starting to become a big name in the tech world as a new face. but that has definitely vanished now. to fuck up at this many places, is almost extremely impressive. its hard to even think of a comparable fuckup.

a friday evening simultaneous rollout boot loop is a phrase that haunts IT people in their darkest hours. it's the monster that drags people down into the swamp. it's the big bad in the horror movie. it's the end of the road. and for crowdstrike, that reaper of souls just knocked on their doorstep.


Text

The consequences of code

Above: Illustration by polygraphus/DepositPhotos. BitDepth#1470 for August 05, 2024. On July 19, cybersecurity firm CrowdStrike sent an automatic update to Microsoft Windows computers that was intended to upgrade the Falcon sensor security solution it sells to enterprises. The worst possible thing happened. A bug in the code sent the computers that received it into a death spiral of blue…


Text

CrowdStrike CEO says 97% of Windows sensors back online after outage http://dlvr.it/TB5G48


Text

CrowdStrike CEO Kurtz: 97 Percent Of Windows Sensors ‘Back Online’ After Outage http://dlvr.it/TB4Q0N


Text

CrowdStrike fiasco: more details

A faulty update (Channel File 291) to CrowdStrike's Falcon security software caused massive IT outages worldwide. According to Microsoft, only 1% of Windows machines were affected, but what would the consequences have been at 3 to 10%?

Last Friday, from 06:09 to 07:27 German time, CrowdStrike distributed a faulty update. Systems that were online during that window, and so received the update automatically, were affected. The fatal update was a so-called channel file for the Falcon Sensor component, which has to be installed on the clients being protected. The consequences were devastating: airports, banks, shops, hospitals, and many other institutions were affected, and some had to suspend operations.

Alongside the problems with CrowdStrike, there was at the same time a misconfiguration by Microsoft itself in its Azure system. As a result, many services such as Teams, OneDrive, Microsoft Defender, and SharePoint had no connection to the Azure servers. It will probably take a little while longer until all these difficulties are resolved. Numerous organizations have already gotten their systems back up and running, but deleting the CrowdStrike file, which often can only be done manually, is delaying repairs. Drives encrypted with BitLocker can also cause problems.

For the Linux user staring in disbelief: Microsoft has no "rollback" like the one available for a kernel update. Since this involved a system driver with privileged access, the error led to a complete system crash, and because the driver was loaded again on every boot, the affected computers were stuck in a reboot loop and first had to be repaired by hand, via safe mode.

Source https://www.0815-info.news/Web_Links-CrowdStrike-Fiasko-mehr-Details-visit-11503.html
