#Docker Desktop command line solution


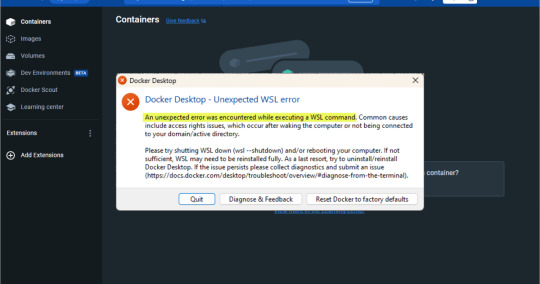

Docker Desktop Unexpected WSL Error Fix


Docker Desktop is a great tool for developers, DevOps pros, and home lab enthusiasts: it lets you work with Docker without having to install it and use it from the command line in Linux. However, an unexpected WSL error often appears after installing Docker Desktop, when a WSL command is executed. Several different issues, including access rights, can trigger this error. This post…


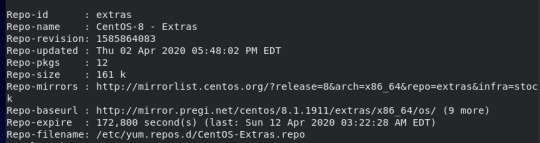

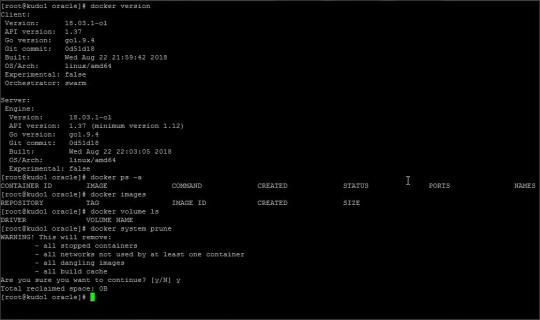

Rhel Docker


The Remote - Containers extension lets you use a Docker container as a full-featured development environment. Whether you deploy to containers or not, containers make a great development environment because you can:

Develop with a consistent, easily reproducible toolchain on the same operating system you deploy to.

Quickly swap between different, isolated development environments and safely make updates without worrying about impacting your local machine.

Make it easy for new team members / contributors to get up and running in a consistent development environment.

Try out new technologies or clone a copy of a code base without impacting your local setup.


The extension starts (or attaches to) a development container running a well defined tool and runtime stack. Workspace files can be mounted into the container from the local file system, or copied or cloned into it once the container is running. Extensions are installed and run inside the container where they have full access to the tools, platform, and file system.


Amazon Web Services (AWS) and Red Hat provide a complete, enterprise-class computing environment. Red Hat solutions on AWS give customers the ability to run enterprise traditional on-premises applications, such as SAP, Oracle databases, and custom applications in the cloud.


Docker volumes allow you to back up, restore, and migrate data easily. This tutorial explains what a Docker volume is and how to use it, as well as how to mount a volume in Docker.


You then work with VS Code as if everything were running locally on your machine, except that now it is isolated inside a container.

System Requirements

Local:

Windows: Docker Desktop 2.0+ on Windows 10 Pro/Enterprise. Windows 10 Home (2004+) requires Docker Desktop 2.2+ and the WSL2 back-end. (Docker Toolbox is not supported.)

macOS: Docker Desktop 2.0+.

Linux: Docker CE/EE 18.06+ and Docker Compose 1.21+. (The Ubuntu snap package is not supported.)

Containers:

x86_64 / ARMv7l (AArch32) / ARMv8l (AArch64) Debian 9+, Ubuntu 16.04+, CentOS / RHEL 7+

x86_64 Alpine Linux 3.9+

Other glibc based Linux containers may work if they have needed prerequisites.

While ARMv7l (AArch32), ARMv8l (AArch64), and musl based Alpine Linux support is available, some extensions installed on these devices may not work due to the use of glibc or x86 compiled native code in the extension. See the Remote Development with Linux article for details.

Note that while the Docker CLI is required, the Docker daemon/service does not need to be running locally if you are using a remote Docker host.

Installation

To get started, follow these steps:

Install VS Code or VS Code Insiders and this extension.

Install and configure Docker for your operating system.

Windows / macOS:

Install Docker Desktop for Mac/Windows.

If not using WSL2 on Windows, right-click on the Docker task bar item, select Settings / Preferences and update Resources > File Sharing with any locations your source code is kept. See tips and tricks for troubleshooting.

To enable the Windows WSL2 back-end: Right-click on the Docker taskbar item and select Settings. Check Use the WSL2 based engine and verify your distribution is enabled under Resources > WSL Integration.

Linux:

Follow the official install instructions for Docker CE/EE. If you use Docker Compose, follow the Docker Compose install directions.

Add your user to the docker group by using a terminal to run: sudo usermod -aG docker $USER

Sign out and back in again so this setting takes effect.


Working with Git? Here are two tips to consider:

If you are working with the same repository folder in a container and Windows, be sure to set up consistent line endings. See tips and tricks to learn how.

If you clone using a Git credential manager, your container should already have access to your credentials! If you use SSH keys, you can also opt-in to sharing them. See Sharing Git credentials with your container for details.

Getting started

Follow the step-by-step tutorial or if you are comfortable with Docker, follow these four steps:

Follow the installation steps above.

Clone https://github.com/Microsoft/vscode-remote-try-node locally.

Start VS Code

Run the Remote-Containers: Open Folder in Container... command and select the local folder.

Check out the repository README for things to try. Next, learn how you can:

Use a container as your full-time environment - Open an existing folder in a container for use as your full-time development environment in a few easy steps. Works with both container and non-container deployed projects.

Attach to a running container - Attach to a running container for quick edits, debugging, and triaging.

Advanced: Use a remote Docker host - Once you know the basics, learn how to use a remote Docker host if needed.

Available commands

Another way to learn what you can do with the extension is to browse the commands it provides. Press F1 to bring up the Command Palette and type in Remote-Containers for a full list of commands.

You can also click on the Remote 'Quick Access' status bar item to get a list of the most common commands.

For more information, please see the extension documentation.

Release Notes

While an optional install, this extension releases with VS Code. VS Code release notes include a summary of changes to all three Remote Development extensions with a link to detailed release notes.

As with VS Code itself, the extensions update during a development iteration with changes that are only available in VS Code Insiders Edition.

Questions, Feedback, Contributing

Have a question or feedback?

See the documentation or the troubleshooting guide.

Up-vote a feature or request a new one, search existing issues, or report a problem.

Contribute a development container definition for others to use

Contribute to our documentation

...and more. See our CONTRIBUTING guide for details.

Or connect with the community...

Telemetry

Visual Studio Code Remote - Containers and related extensions collect telemetry data to help us build a better experience working remotely from VS Code. We only collect data on which commands are executed. We do not collect any information about image names, paths, etc. The extension respects the telemetry.enableTelemetry setting which you can learn more about in the Visual Studio Code FAQ.

License

By downloading and using the Visual Studio Remote - Containers extension and its related components, you agree to the product license terms and privacy statement.


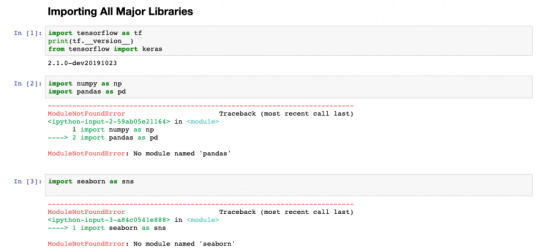

Introduction to the framework

Programming paradigms

Over time, different styles of writing code in computer languages have been introduced. A programming paradigm is a way to classify programming languages based on their features. For example:

Functional programming

Object-oriented programming

Some computer languages support multiple paradigms. Languages can be broadly divided into non-structured and structured programming languages. Structured programming languages fall into two categories: block-structured (functional) programming and event-driven programming. The characteristics of non-structured programming languages are:

They are the earliest programming languages.

A program is a single series of statements.

Flow control uses the GO TO statement.

Programs become complex as the number of lines increases. Examples are BASIC, FORTRAN, and COBOL.

Programs are often considered as theories of a formal logic, and computations as deductions in that logic space.

Non-structured programming may greatly simplify writing parallel programs. The characteristics of structured programming languages are:

It is a programming paradigm that uses statements that change a program's state.

Structured programming focuses on describing how a program operates.

Just as the imperative mood in natural language expresses commands, an imperative program consists of commands for the computer to perform.

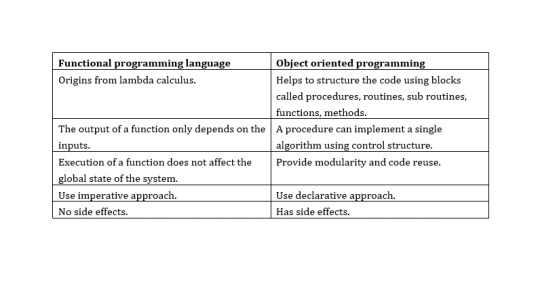

Functional programming and object-oriented programming differ in many ways.

Lambda calculus is a formal system in mathematical logic for expressing computation based on function abstraction and application, using variable binding and substitution. A lambda expression is an anonymous function that can be used to create delegates or expression tree types. With lambda expressions you can write local functions that can be passed as arguments or returned as the value of function calls. A lambda expression is the most convenient way to create such a delegate. Here is an example of a simple lambda expression that defines the "plus one" function:

λx.x+1

"No side effects" has a specific meaning. In computer science, an operation, function, or expression is said to have a side effect if it modifies some state variable outside its local environment, that is, if it has an observable effect besides returning a value to the invoker of the operation. Referential transparency is an oft-touted property of functional languages that makes it easier to reason about the behavior of programs.
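As a rough illustration of the ideas above, here is a minimal Python sketch. The names `plus_one`, `pure_total`, `logged_total`, and `call_log` are made up for this example; the lambda mirrors λx.x+1, and the two functions contrast a side-effect-free function with one that changes state outside its local environment.

```python
# "plus one" written as a lambda expression, mirroring λx.x+1
plus_one = lambda x: x + 1


def pure_total(prices):
    """Pure function: the result depends only on the argument."""
    return sum(prices)


call_log = []  # state that lives outside the function below


def logged_total(prices):
    """Same result as pure_total, but with a side effect on call_log."""
    call_log.append(len(prices))  # observable effect besides the return value
    return sum(prices)


# Lambdas can be passed as arguments like any other value
incremented = list(map(plus_one, [1, 2, 3]))
```

Because `pure_total` has no side effects, a call such as `pure_total([1, 2])` can be replaced by its value wherever it appears, which is what referential transparency means in practice. The same replacement is not safe for `logged_total`, since it would skip the update to `call_log`.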

Key features of object-oriented programming

Object-oriented programming languages have three major features:

Encapsulation - Encapsulation is one of the basic concepts in object-oriented programming. It describes the idea of bundling the data and the methods that work on that data within one entity, so that a program object contains all the resources it needs to do its job.

Inheritance - Inheritance is one of the basic concepts of object-oriented programming languages. It is a mechanism for deriving one class from another, so that the classes share a set of characteristics and resources.

Polymorphism - Polymorphism is an object-oriented programming concept that refers to the ability of a variable, function, or object to take several forms.

These features refer to the creation of self-contained modules that bind processing functions to the data. These user-defined data types are called "classes", and one instance of a class is an "object".
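The three features can be sketched in a few lines of Python. The `Account` and `SavingsAccount` classes below are hypothetical and exist only to illustrate the concepts; they are not taken from any library.

```python
class Account:
    """Encapsulation: the balance and the methods that change it live together."""

    def __init__(self, owner, balance=0):
        self.owner = owner
        self._balance = balance  # leading underscore: internal by convention

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self._balance += amount

    def balance(self):
        return self._balance

    def describe(self):
        return f"{self.owner}: {self._balance}"


class SavingsAccount(Account):
    """Inheritance: reuses everything from Account and adds an interest rate."""

    def __init__(self, owner, balance=0, rate=0.1):
        super().__init__(owner, balance)
        self.rate = rate

    def describe(self):
        # Polymorphism: same method name, different behaviour per class
        return f"{self.owner}: {self._balance} at {self.rate:.0%} interest"


accounts = [Account("Ann", 100), SavingsAccount("Bob", 200)]
descriptions = [account.describe() for account in accounts]
```

The loop at the end calls `describe()` without knowing which class each object belongs to, which is the practical benefit of polymorphism.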

How is event-driven programming different from other programming paradigms?

Event-driven programming focuses on events triggered outside the system:

User events

Schedulers/timers

Sensors, messages, hardware interrupts

It is mostly related to systems with a GUI, where users can interact with the GUI elements. Event listeners are used to act when events are triggered (fired). An internal event loop identifies the events and then calls the necessary handlers.
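The listener and event loop mechanism described above can be sketched as follows. This is a minimal, hypothetical `EventLoop` class written for illustration, not the loop of any real GUI toolkit; the event names and payloads are assumptions.

```python
from collections import deque


class EventLoop:
    def __init__(self):
        self._listeners = {}   # event name -> list of handler functions
        self._queue = deque()  # events waiting to be dispatched

    def on(self, event_name, handler):
        """Register a listener for an event."""
        self._listeners.setdefault(event_name, []).append(handler)

    def emit(self, event_name, payload=None):
        """Queue an event, as a user action or timer would."""
        self._queue.append((event_name, payload))

    def run(self):
        # Take events in arrival order and call every handler registered for them
        while self._queue:
            event_name, payload = self._queue.popleft()
            for handler in self._listeners.get(event_name, []):
                handler(payload)


seen = []
loop = EventLoop()
loop.on("click", lambda payload: seen.append(("click", payload)))
loop.on("timer", lambda payload: seen.append(("timer", payload)))

loop.emit("click", "OK button")
loop.emit("timer", 5)
loop.emit("keypress", "a")  # no listener registered, so it is ignored
loop.run()
```

The program never calls the handlers directly. It only registers them, and the loop decides when they run, which is the main difference from a program that executes its statements top to bottom.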

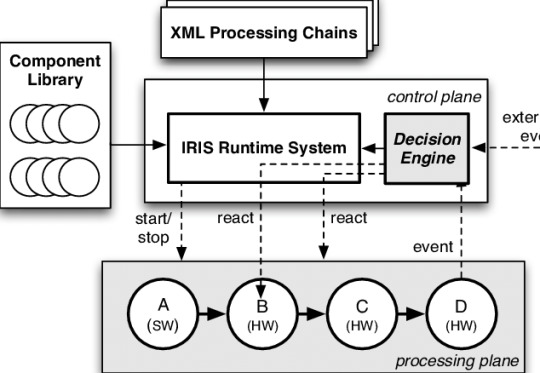

Software Run-time Architecture

A software architecture describes the design of a software system in terms of model components and connectors. Architectural models can also be used at run-time to enable architecture recovery and architecture adaptation. Languages can be classified according to the way they are processed and executed:

Compiled language

Scripting language

Markup language

Communication between an application and the OS needs additional components, and which ones are needed depends on the type of language used to develop the application components.

Compiled language

A compiled language is a programming language whose implementations are generally compilers rather than interpreters.

Some executables run directly on the OS, for example C programs on Windows. Other executables use virtual run-time machines, for example Java and .NET.

Scripting language

A scripting language is a programming language that supports scripts: programs written for a specific run-time environment that automate the execution of tasks that could otherwise be performed one by one by a human operator.

The source code is not compiled; it is executed directly. At the time of execution, the code is interpreted by a run-time machine. Examples are PHP and JavaScript.

Markup language

A markup language is a computer language that uses tags to define elements within a document.

There is no execution process for a markup language. A tool that understands the markup language renders the output. Examples are HTML and XML.

Some other tools are used to run the system at different levels:

Virtual machine

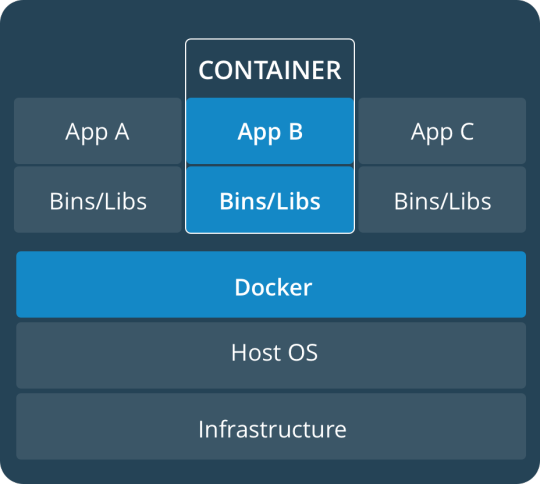

Containers/Dockers

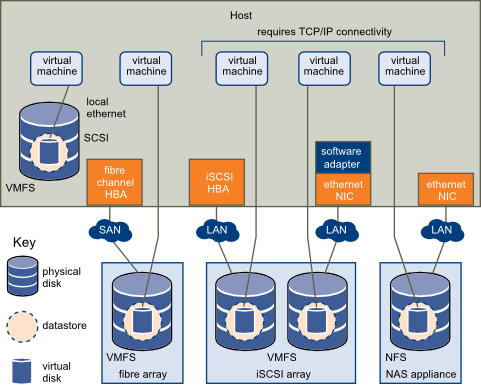

Virtual machine

Containers

A virtual machine function provides virtual machine environments. It enables the creation of several independent virtual machines on one physical machine by virtualizing resources of the physical machine such as CPU, memory, network, and disk.

Development Tools

A programming tool or software development tool is a computer program used by software developers to create, debug, maintain, or otherwise support other programs and applications. Computer-aided software engineering (CASE) tools are used in the engineering life cycle of the software system.

Requirement – surveying tools, analyzing tools.

Designing – modelling tools

Development – code editors, frameworks, libraries, plugins, compilers.

Testing – test automation tools, quality assurance tools.

Implementation – VMs, containers/Docker, servers.

Maintenance – bug trackers, analytical tools.

CASE software types

Individual tools – for specific task.

Workbenches – multiple tools are combined, focusing on specific part of SDLC.

Environment – combines many tools to support many activities throughout the SDLC.

Frameworks vs libraries vs plugins

Plugins

A plugin provides a specific tool for development. The plugin is placed in the project at development time and configured through code. At run-time it is plugged in through that configuration.

Libraries

A library provides an API that the coder can use to develop features when writing code. At development time:

Add the library to the project (source code files, modules, packages, executables, etc.).

Call the necessary functions/methods using the given packages/modules/classes.

At run-time the library is called by the code.

Framework

A framework is a collection of libraries, tools, rules, structures, and controls for the creation of software systems. At development time:

Create the structure of the application.

Place code in the necessary places.

Optionally use the given libraries to write code.

Include additional libraries and plugins.

At run-time the framework calls the code.
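The difference in who calls whom can be sketched in Python. Both `slugify` and `MiniWebFramework` below are hypothetical stand-ins invented for this illustration; they do not correspond to any real library or framework API.

```python
# Library style: our code is in control and calls the library function.
def slugify(title):
    """Hypothetical library function: turn a title into a URL-friendly slug."""
    return "-".join(title.lower().split())


article_slug = slugify("Hello Framework World")


# Framework style: we register code, and the framework decides when to call it.
class MiniWebFramework:
    """Hypothetical framework that owns the request-handling flow."""

    def __init__(self):
        self._routes = {}

    def route(self, path):
        def register(handler):
            self._routes[path] = handler
            return handler
        return register

    def handle(self, path):
        handler = self._routes.get(path)
        if handler is None:
            return "404 Not Found"
        return handler()  # the framework calls our code


app = MiniWebFramework()


@app.route("/hello")
def hello():
    # Application logic placed where the framework expects it
    return "Hello from " + slugify("My App")
```

Calling `app.handle("/hello")` shows both directions at once: the framework invokes `hello`, and `hello` in turn calls the library function `slugify`.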

A web application framework may provide

User session management.

Data storage.

A web template system.

A desktop application framework may provide

User interface functionality.

Widgets.

Frameworks are concrete

A framework consists of physical components, which are usable files during production. The Java and .NET frameworks are sets of concrete components such as JARs and DLLs.

A framework is incomplete

The framework is not usable in its own right. The framework alone will not work: the relevant application logic must be implemented and deployed along with it. Apart from that application logic, a framework leaves little else for its user to fill in. Using a framework is a trade-off between the learning curve and the time saved on coding.

Frameworks help solve recurring problems

Frameworks are highly reusable because they address many recurring problems. Building a framework around such a problem also makes commercial sense.

The framework drives the solution

The framework directs the overall architecture of a specific solution. For example, if the JEE framework is used for an enterprise application, the application has to follow the JEE rules.

Importance of frameworks in enterprise application development

Using code that is already built and tested by other programmers enhances reliability and reduces programming time. Framework code can take care of lower-level handling tasks. Frameworks often help enforce platform-specific best practices and rules.


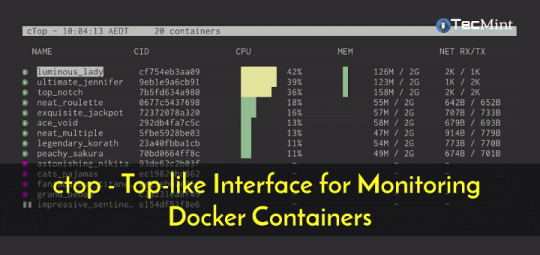

Web monitor linux


As mentioned, if you have an Ubuntu server, go for a web GUI management panel. Refrain from installing GUI desktop environments such as GNOME on the server, and prefer Ubuntu web GUI panels instead. These panels can be easily installed on the server from the command line and provide a minimal web management interface that you can access from any browser using the IP address of the server. They help manage databases, PHP, storage, monitoring, and more. cPanel, for example, is a paid server management solution. Here, however, we talk only about some of the best open source web GUI management panels that are free to use.

Cockpit Web GUI Management

Cockpit is open source and developed to provide exactly what a person needs to manage an Ubuntu Server, as long as the requirement is not to handle domains, web servers, database management, and the other things cPanel offers. It leans toward core Linux server management. The interface is very straightforward: one side holds all the options needed to manage the server, and the other shows their output. It provides management over a web GUI for Docker, firewall, storage, user accounts, network, SELinux policy, diagnostic reports, package updates, and virtual machine management using QEMU / Libvirt, plus a terminal to issue commands to the server directly from the web interface. It is a complete core management package for Linux server administrators. It supports the Mozilla Firefox, Google Chrome, Microsoft Edge, Apple Safari, and Opera browsers.

Sentora Hosting Web GUI for Ubuntu Server


For a moment you might consider a desktop graphical environment for your local server, but if you have a Linux cloud hosting server, never do it. I am saying this because Ubuntu and other Linux server operating systems are built to run on low hardware resources, so even old computer or server hardware can easily handle them. A GUI means more RAM and more hard disk storage space. Over and above that, if you want a GUI, Ubuntu already offers GUI server and desktop images. Simply download them to learn the Ubuntu curves on your local machine and later implement the same on your live production cloud server. Instead, think about free and open-source Ubuntu server web GUI management panels.


Ubuntu Server with its command-line interface might seem a little bit weird to newbies who have no previous familiarity with it. Still, if you are new to an Ubuntu Linux server running on your local hardware or on cloud hosting and are planning to install a Linux desktop graphical environment (GUI) on it, I would recommend that you don't, unless you have hardware that can support it.


KubeVirt technology enables you to manage virtual machines on Kubernetes. This solution addresses the need to run parts of an application that cannot be easily containerized in virtual machines on top of a Kubernetes cluster. This is helpful to developers who have adopted or want to adopt Kubernetes but still have components of the application that depend on virtual machines. Teams with a reliance on existing virtual machine-based workloads are empowered to rapidly containerize applications. With virtualized workloads placed directly in development workflows, teams can decompose them over time while still leveraging remaining virtualized components as is comfortably desired. KubeVirt gives you a unified development platform where you can build, modify, and deploy applications residing in both application containers and virtual machines in a common, shared environment.

As of today KubeVirt can be used to declaratively:

Create a predefined VM

Schedule a VM on a Kubernetes cluster

Launch a VM

Stop a VM

Delete a VM

In this tutorial we discuss the installation and use of KubeVirt in a Minikube environment. Minikube is a local Kubernetes that makes it easy to learn and develop for Kubernetes on your local machine, such as a personal laptop or home desktop. KubeVirt is a Cloud Native Computing Foundation sandbox project.

Step 1: Install Minikube

Start with the installation of Minikube using the commands below.

Linux:

    wget https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64
    chmod +x minikube-linux-amd64
    sudo mv minikube-linux-amd64 /usr/local/bin/minikube

macOS:

    brew install minikube

Windows:

    # Using winget
    $ winget install minikube
    # Using Chocolatey
    $ choco install minikube

Check the version of Minikube to confirm it is installed properly and working:

    $ minikube version
    minikube version: v1.19.0
    commit: 15cede53bdc5fe242228853e737333b09d4336b5

The version output may vary depending on when you run the commands.
Refer to the official minikube guide for installation on other systems.

Step 2: Install kubectl

Download the kubectl command line tool to your system:

    curl -LO https://storage.googleapis.com/kubernetes-release/release/`curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt`/bin/linux/amd64/kubectl

Make the kubectl binary executable.

    chmod +x ./kubectl

Move the binary into your PATH:

    sudo mv ./kubectl /usr/local/bin/kubectl

Check the version of kubectl installed.

    $ kubectl version -o json --client
    {
      "clientVersion": {
        "major": "1",
        "minor": "21",
        "gitVersion": "v1.21.0",
        "gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",
        "gitTreeState": "clean",
        "buildDate": "2021-04-08T16:31:21Z",
        "goVersion": "go1.16.1",
        "compiler": "gc",
        "platform": "linux/amd64"
      }
    }

Step 3: Install Virtualization Platform of choice

Depending on your system there are multiple options. The commonly used hypervisor is VirtualBox.

Install VirtualBox on Debian

Install VirtualBox on Ubuntu / Debian

Install VirtualBox on Fedora

Install VirtualBox on CentOS / RHEL 8

For KVM check below:

How To run Minikube on KVM

With everything set, start the Minikube instance:

    $ minikube start

If minikube fails to start, see the drivers page for help setting up a compatible container or virtual-machine manager.

Example output for macOS:

    😄 minikube v1.19.0 on Darwin 11.2.3
    ✨ Automatically selected the hyperkit driver. Other choices: vmware, parallels, virtualbox, ssh
    💾 Downloading driver docker-machine-driver-hyperkit:
        > docker-machine-driver-hyper...: 65 B / 65 B [----------] 100.00% ? p/s 0s
        > docker-machine-driver-hyper...: 10.52 MiB / 10.52 MiB 100.00% 4.31 MiB p
    🔑 The 'hyperkit' driver requires elevated permissions. The following commands will be executed:
        $ sudo chown root:wheel /Users/jkmutai/.minikube/bin/docker-machine-driver-hyperkit
        $ sudo chmod u+s /Users/jkmutai/.minikube/bin/docker-machine-driver-hyperkit
    Password:
    💿 Downloading VM boot image ...
        > minikube-v1.19.0.iso.sha256: 65 B / 65 B [-------------] 100.00% ? p/s 0s
        > minikube-v1.19.0.iso: 244.49 MiB / 244.49 MiB 100.00% 4.92 MiB p/s 49.90
    👍 Starting control plane node minikube in cluster minikube
    💾 Downloading Kubernetes v1.20.2 preload ...
        > preloaded-images-k8s-v10-v1...: 491.71 MiB / 491.71 MiB 100.00% 4.86 MiB
    🔥 Creating hyperkit VM (CPUs=2, Memory=4000MB, Disk=20000MB) ...
    🐳 Preparing Kubernetes v1.20.2 on Docker 20.10.4 ...
        ▪ Generating certificates and keys ...
        ▪ Booting up control plane ...
        ▪ Configuring RBAC rules ...
    🔎 Verifying Kubernetes components...
        ▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5
    🌟 Enabled addons: default-storageclass, storage-provisioner
    🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

Step 4: Deploy KubeVirt

KubeVirt can be installed using the KubeVirt operator, which manages the lifecycle of all the KubeVirt core components. The easiest installation method is the minikube addon:

    $ minikube addons enable kubevirt
        ▪ Using image bitnami/kubectl:1.17
    🌟 The 'kubevirt' addon is enabled

Check the logs of the kubevirt-install-manager pod:

    $ kubectl logs pod/kubevirt-install-manager -n kube-system
    v0.40.0
    namespace/kubevirt created
    customresourcedefinition.apiextensions.k8s.io/kubevirts.kubevirt.io created
    priorityclass.scheduling.k8s.io/kubevirt-cluster-critical created
    clusterrole.rbac.authorization.k8s.io/kubevirt.io:operator created
    serviceaccount/kubevirt-operator created
    role.rbac.authorization.k8s.io/kubevirt-operator created
    rolebinding.rbac.authorization.k8s.io/kubevirt-operator-rolebinding created
    clusterrole.rbac.authorization.k8s.io/kubevirt-operator created
    clusterrolebinding.rbac.authorization.k8s.io/kubevirt-operator created
    deployment.apps/virt-operator created
    use emulation
    configmap/kubevirt-config created
    kubevirt.kubevirt.io/kubevirt created

Check the deployment status:

    $ kubectl get kubevirt.kubevirt.io/kubevirt -n kubevirt -o=jsonpath="{.status.phase}"
    Deployed%

By default KubeVirt will deploy 7 pods, 3 services, 1 daemonset, 3 deployment apps, and 3 replica sets.

    $ kubectl get all -n kubevirt

See below screenshot with actual command output.

Install virtctl. KubeVirt provides this additional binary for quick access to the serial and graphical ports of a VM; it also handles start/stop operations.

    VERSION=$(kubectl get kubevirt.kubevirt.io/kubevirt -n kubevirt -o=jsonpath="{.status.observedKubeVirtVersion}")
    ARCH=$(uname -s | tr A-Z a-z)-$(uname -m | sed 's/x86_64/amd64/') || windows-amd64.exe
    echo $ARCH
    curl -L -o virtctl https://github.com/kubevirt/kubevirt/releases/download/$VERSION/virtctl-$VERSION-$ARCH
    chmod +x virtctl
    sudo install virtctl /usr/local/bin

Validate the installation:

    $ virtctl version
    Client Version: version.Info{GitVersion:"v0.40.0", GitCommit:"127736619519e6b914e75930fc467c672e766e42", GitTreeState:"clean", BuildDate:"2021-04-20T08:34:39Z", GoVersion:"go1.13.14", Compiler:"gc", Platform:"darwin/amd64"}
    Server Version: version.Info{GitVersion:"v0.40.0-dirty", GitCommit:"127736619519e6b914e75930fc467c672e766e42", GitTreeState:"dirty", BuildDate:"2021-04-20T08:57:15Z", GoVersion:"go1.13.14", Compiler:"gc", Platform:"linux/amd64"}

Step 5: Using KubeVirt

Now that you've installed KubeVirt in your Kubernetes cluster powered by Minikube, you can work through the labs to get acquainted with KubeVirt and how it can be used to create and deploy VMs with Kubernetes.

Deploy a test VM instance.

    wget https://raw.githubusercontent.com/kubevirt/kubevirt.github.io/master/labs/manifests/vm.yaml

Apply the manifest file:

    $ kubectl apply -f vm.yaml
    virtualmachine.kubevirt.io/testvm created

Run the command below to get a list of existing Virtual Machines and their status:

    $ kubectl get vms
    NAME     AGE   VOLUME
    testvm   76s

Output in YAML format:

    $ kubectl get vms -o yaml testvm

To start a Virtual Machine you can use:

    $ virtctl start testvm
    VM testvm was scheduled to start

Check the Virtual Machine status:

    $ kubectl get vmis
    NAME     AGE     PHASE     IP            NODENAME
    testvm   2m20s   Running   172.17.0.11   minikube
    $ kubectl get vmis -o yaml testvm

Connect to the serial console of the Cirros VM. Hit return / enter a few times and log in with the displayed username and password.

    $ virtctl console testvm
    Successfully connected to testvm console. The escape sequence is ^]
    login as 'cirros' user. default password: 'gocubsgo'. use 'sudo' for root.
    testvm login:

Disconnect from the virtual machine console by typing: ctrl+].

Shut down the VM:

    $ virtctl stop testvm
    VM testvm was scheduled to stop

Delete the Virtual Machine:

    $ kubectl delete vm testvm
    virtualmachine.kubevirt.io "testvm" deleted

Further readings:

The second lab, "Experiment with CDI", shows how to use the Containerized Data Importer (CDI) to import a VM image into a Persistent Volume Claim (PVC) and then how to attach the PVC to a VM as a block device.

The third lab is "KubeVirt upgrades". This lab shows how easy and safe it is to upgrade the KubeVirt installation with zero down-time.


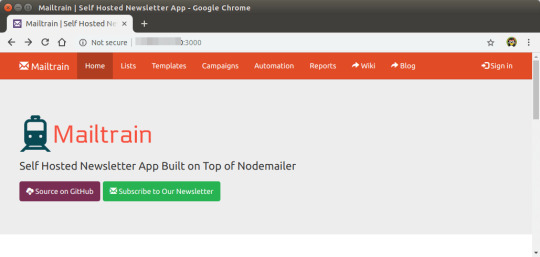

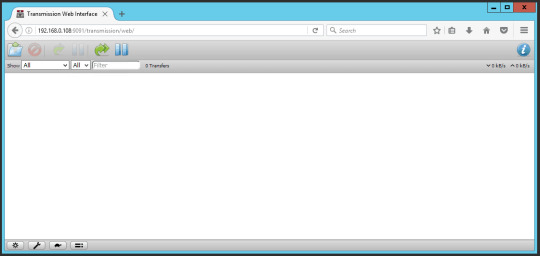

Best Docker Bittorrent Client

Volumes and Paths

There are two common problems with Docker volumes: Paths that differ between the Radarr and download client container and paths that prevent fast moves and hard links. The first is a problem because the download client will report a download's path as /torrents/My.Movie.2018/, but in the Radarr container that might be at /downloads/My.Movie.2018/. The second is a performance issue and causes problems for seeding torrents. Both problems can be solved with well planned, consistent paths.

Most Docker images suggest paths like /movies and /downloads. This causes slow moves and doesn't allow hard links because they are considered two different file systems inside the container. Some also recommend paths for the download client container that are different from the Radarr container, like /torrents. The best solution is to use a single, common volume inside the containers, such as /data. Your Movies would be in /data/Movies, torrents in /data/downloads/torrents and/or usenet downloads in /data/downloads/usenet.


A global team of 50+ experts have compiled this list of 7 Best Docker Tutorial, Certification, Training and Course available online for 2021.These resources will help you Learn Docker from scratch, and are suitable for beginners, intermediate learners as well as experts.

Learn how to install Docker on your machine, how to build a Dockerfile, how to use the command line, how to use Docker with ASP.NET Core, and more in this comprehensive introduction.

If this advice is not followed, you may have to configure a Remote Path Mapping in the Radarr web UI (Settings › Download Clients).
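As a rough illustration of what a remote path mapping does, the following Python sketch rewrites a path reported by the download client into the path seen by Radarr. The function name is hypothetical and this is not Radarr's own implementation; it only shows the prefix substitution involved, using the example paths from above.

```python
from pathlib import PurePosixPath


def map_remote_path(reported: str, remote_root: str, local_root: str) -> str:
    """Replace the download client's root with the root used inside Radarr."""
    reported_path = PurePosixPath(reported)
    try:
        relative = reported_path.relative_to(PurePosixPath(remote_root))
    except ValueError:
        # The path is outside the mapped root, so leave it unchanged.
        return reported
    return str(PurePosixPath(local_root) / relative)


print(map_remote_path("/torrents/My.Movie.2018/", "/torrents/", "/downloads/"))
```

When both containers share the same /data volume, no such translation is needed because the reported path is already valid in both.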

Ownership and Permissions

Permissions and ownership of files are among the most common problems for Radarr users, both inside and outside Docker. Most images have environment variables that can be used to override the default user, group and umask; decide on these values before setting up your containers. The recommendation is to use a common group for all related containers so that each container can use the shared group permissions to read and write files on the mounted volumes. Keep in mind that Radarr needs read and write access to the download folders as well as the final folders.
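The effect of the umask on a shared group can be worked out by hand. The Python sketch below assumes the usual POSIX behaviour, where new files are requested with mode 666 and new directories with 777 before the umask is applied; the helper names are illustrative only.

```python
import stat


def mode_after_umask(requested: int, umask: int) -> int:
    """Apply a umask to the permission bits a program asks for."""
    return requested & ~umask & 0o777


def describe_file(mode: int) -> str:
    """Render permission bits in ls style for a regular file."""
    return stat.filemode(stat.S_IFREG | mode)


# A umask of 002 keeps group write access, so containers that share a
# group can modify each other's files.
print(describe_file(mode_after_umask(0o666, 0o002)))

# A umask of 022 removes group write access, so a second container in the
# same group could read the files but not move or rename them.
print(describe_file(mode_after_umask(0o666, 0o022)))
```

This is why a shared group on its own is not enough: the umask used by every container also has to leave the group write bit in place.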

For a more detailed explanation of these issues, see The Best Docker Setup and Docker Guide wiki article.


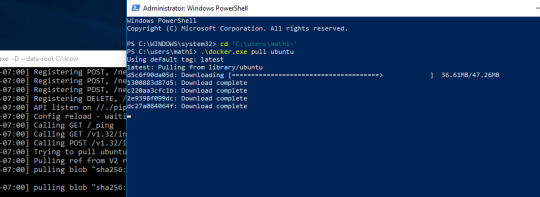

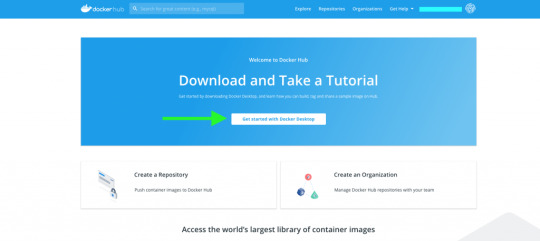

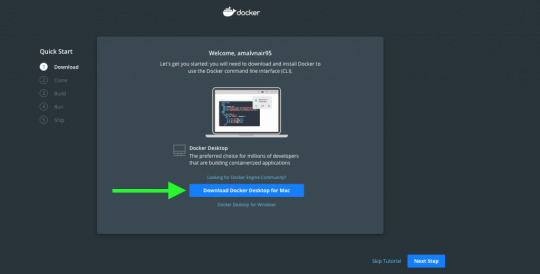

Docker Desktop for Windows is the Community version of Docker for Microsoft Windows. You can download Docker Desktop for Windows from Docker Hub.

This page contains information on installing Docker Desktop on Windows 10 Pro, Enterprise, and Education. If you are looking for information about installing Docker Desktop on Windows 10 Home, see Install Docker Desktop on Windows Home.

By downloading Docker Desktop, you agree to the terms of the Docker Software End User License Agreement and the Docker Data Processing Agreement.

What to know before you install

System Requirements


Windows 10 64-bit: Pro, Enterprise, or Education (Build 16299 or later).

For Windows 10 Home, see Install Docker Desktop on Windows Home.

Hyper-V and Containers Windows features must be enabled.

The following hardware prerequisites are required to successfully run Client Hyper-V on Windows 10:

64 bit processor with Second Level Address Translation (SLAT)

4GB system RAM

BIOS-level hardware virtualization support must be enabled in the BIOS settings. For more information, see Virtualization.

Note: Docker supports Docker Desktop on Windows based on Microsoft’s support lifecycle for Windows 10 operating system. For more information, see the Windows lifecycle fact sheet.
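The requirements above can be summarised as a simple checklist. The following Python sketch encodes them as data; the Machine class and its field names are hypothetical, and the values would have to be gathered by hand or by a tool of your choice.

```python
from dataclasses import dataclass
from typing import List

SUPPORTED_EDITIONS = {"Pro", "Enterprise", "Education"}
MINIMUM_BUILD = 16299
MINIMUM_RAM_GB = 4


@dataclass
class Machine:
    edition: str
    build: int
    is_64_bit: bool
    ram_gb: float
    has_slat: bool
    virtualization_enabled: bool


def unmet_requirements(machine: Machine) -> List[str]:
    """Return a list of requirements from this page that the machine fails."""
    problems = []
    if machine.edition not in SUPPORTED_EDITIONS:
        problems.append("edition must be Pro, Enterprise, or Education")
    if machine.build < MINIMUM_BUILD:
        problems.append("build must be 16299 or later")
    if not machine.is_64_bit:
        problems.append("a 64-bit processor is required")
    if not machine.has_slat:
        problems.append("the processor must support SLAT")
    if machine.ram_gb < MINIMUM_RAM_GB:
        problems.append("at least 4 GB of RAM is required")
    if not machine.virtualization_enabled:
        problems.append("hardware virtualization must be enabled in the BIOS")
    return problems


# Example: a Windows 10 Home machine fails only the edition check.
example = Machine("Home", 19041, True, 8, True, True)
print(unmet_requirements(example))
```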

What’s included in the installer

The Docker Desktop installation includes Docker Engine, Docker CLI client, Docker Compose, Notary, Kubernetes, and Credential Helper.

Containers and images created with Docker Desktop are shared between all user accounts on machines where it is installed. This is because all Windows accounts use the same VM to build and run containers. Note that it is not possible to share containers and images between user accounts when using the Docker Desktop WSL 2 backend.

Nested virtualization scenarios, such as running Docker Desktop on a VMware or Parallels instance, might work, but there are no guarantees. For more information, see Running Docker Desktop in nested virtualization scenarios.

About Windows containers

Looking for information on using Windows containers?

Switch between Windows and Linux containers describes how you can toggle between Linux and Windows containers in Docker Desktop and points you to the tutorial listed below.

Getting Started with Windows Containers (Lab) provides a tutorial on how to set up and run Windows containers on Windows 10, Windows Server 2016 and Windows Server 2019. It shows you how to use a MusicStore application with Windows containers.

Docker Container Platform for Windows: articles and blog posts on the Docker website.

Install Docker Desktop on Windows

Double-click Docker Desktop Installer.exe to run the installer.

If you haven’t already downloaded the installer (Docker Desktop Installer.exe), you can get it from Docker Hub. It typically downloads to your Downloads folder, or you can run it from the recent downloads bar at the bottom of your web browser.

When prompted, ensure the Enable Hyper-V Windows Features option is selected on the Configuration page.

Follow the instructions on the installation wizard to authorize the installer and proceed with the install.

When the installation is successful, click Close to complete the installation process.

If your admin account is different from your user account, you must add the user to the docker-users group. Run Computer Management as an administrator and navigate to Local Users and Groups > Groups > docker-users. Right-click to add the user to the group. Log out and log back in for the changes to take effect.

Start Docker Desktop

Docker Desktop does not start automatically after installation. To start Docker Desktop, search for Docker, and select Docker Desktop in the search results.

When the whale icon in the status bar stays steady, Docker Desktop is up-and-running, and is accessible from any terminal window.

If the whale icon is hidden in the Notifications area, click the up arrow on the taskbar to show it. To learn more, see Docker Settings.

When the initialization is complete, Docker Desktop launches the onboarding tutorial. The tutorial includes a simple exercise to build an example Docker image, run it as a container, push and save the image to Docker Hub.

Congratulations! You are now successfully running Docker Desktop on Windows.

If you would like to rerun the tutorial, go to the Docker Desktop menu and select Learn.

Automatic updates


Starting with Docker Desktop 3.0.0, updates to Docker Desktop will be available automatically as delta updates from the previous version.

When an update is available, Docker Desktop automatically downloads it to your machine and displays an icon to indicate the availability of a newer version. All you need to do now is to click Update and restart from the Docker menu. This installs the latest update and restarts Docker Desktop for the changes to take effect.

Uninstall Docker Desktop

To uninstall Docker Desktop from your Windows machine:

From the Windows Start menu, select Settings > Apps > Apps & features.

Select Docker Desktop from the Apps & features list and then select Uninstall.

Click Uninstall to confirm your selection.

Note: Uninstalling Docker Desktop will destroy Docker containers and images local to the machine and remove the files generated by the application.

Save and restore data

You can use the following procedure to save and restore images and container data. For example, if you want to reset your VM disk:


Use docker save -o images.tar image1 [image2 ...] to save any images you want to keep. See save in the Docker Engine command line reference.

Use docker export -o myContainer1.tar container1 to export containers you want to keep. See export in the Docker Engine command line reference.

Uninstall the current version of Docker Desktop and install a different version, or reset your VM disk.

Use docker load -i images.tar to reload previously saved images. See load in the Docker Engine command line reference.

Use docker import myContainer1.tar to create a file system image corresponding to the previously exported containers. See import in the Docker Engine command line reference.

For information on how to back up and restore data volumes, see Backup, restore, or migrate data volumes.
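The save and restore procedure above can be scripted. The Python sketch below only builds the command lines as argument lists and prints them, so it can be read and checked without a Docker installation; the function names and the restored-image:latest tag are hypothetical. Pass a list to subprocess.run(command, check=True) to actually execute it.

```python
import shlex
from typing import List


def save_images(archive: str, images: List[str]) -> List[str]:
    """docker save writes one or more images to a tar archive."""
    return ["docker", "save", "-o", archive, *images]


def export_container(archive: str, container: str) -> List[str]:
    """docker export writes a container's file system to a tar archive."""
    return ["docker", "export", "-o", archive, container]


def load_images(archive: str) -> List[str]:
    """docker load restores images written by docker save."""
    return ["docker", "load", "-i", archive]


def import_container(archive: str, image_name: str) -> List[str]:
    """docker import takes the archive as a positional argument."""
    return ["docker", "import", archive, image_name]


steps = [
    save_images("images.tar", ["image1", "image2"]),
    export_container("myContainer1.tar", "container1"),
    load_images("images.tar"),
    import_container("myContainer1.tar", "restored-image:latest"),
]
for command in steps:
    print(shlex.join(command))
```

Note that an imported archive becomes a new image rather than a running container, so the container has to be created again from that image.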


Where to go next


Getting started introduces Docker Desktop for Windows.

Get started with Docker is a tutorial that teaches you how to deploy a multi-service stack.

Troubleshooting describes common problems, workarounds, and how to get support.

FAQs provides answers to frequently asked questions.

Release notes lists component updates, new features, and improvements associated with Docker Desktop releases.


0 notes

Text

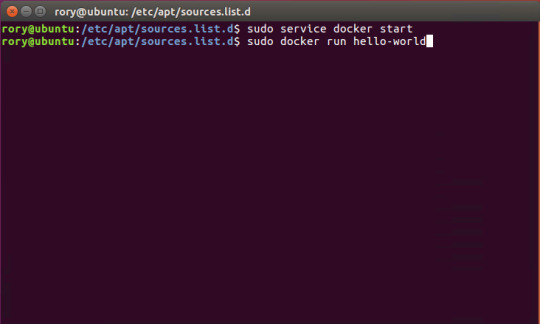

Start Docker In Ubuntu

For a Linux development environment on Windows, combine WSL 2 with the Docker Desktop WSL 2 backend, as described in the Docker documentation. The following applies to WSL 1 only: Docker cannot run inside WSL 1, so the proposed approach is to connect WSL to Docker Desktop running on Windows (see Setting Up Docker for Windows and WSL). By removing /etc/docker you will lose all images and data. You can check the logs with journalctl -u docker.service, and reload, enable and start the daemon with systemctl daemon-reload && systemctl enable docker && systemctl start docker.

The docker images command lists local images by repository, tag and ID, for example ubuntu with tag 12.10 (ID b750fe78269d) and me/myapp with tag latest (ID 7b2431a8d968). Use docker-compose start and docker-compose stop to start and stop the services of a Compose project.

Complete Docker CLI

Container Management CLIs

Inspecting The Container

Interacting with Container

Image Management Commands

Image Transfer Commands

Builder Main Commands

The Docker CLI

Manage images

docker build

Create an image from a Dockerfile.

docker run

Run a command in an image.

Manage containers

docker create

Example

Create a container from an image.

docker exec

Example

Run commands in a container.

docker start

Start/stop a container.

docker ps

Manage containers using ps/kill.

Images

docker images

Manages images.

docker rmi

Deletes images.

Also see

Getting Started (docker.io)

Inheritance

Variables

Initialization

Onbuild

Commands

Entrypoint

Configures a container that will run as an executable.

This will use shell processing to substitute shell variables, and will ignore any CMD or docker run command line arguments.

Metadata

See also

Basic example

Commands

Reference

Building

Ports

Commands

Environment variables

Dependencies

Other options

Advanced features

Labels

DNS servers

Devices

External links

Hosts

Services

To view a list of all the services running in the swarm

To see all running services

To see the logs of all services

To scale services quickly across qualified nodes

Clean up

To clean or prune unused (dangling) images

To remove all images that are not used by any container, add -a

To prune your entire system

To leave the swarm

To remove the swarm (deletes all volume data and database info)

To kill all running containers
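The commands that accompanied the headings above are missing from this copy. As a hedged sketch, the Python snippet below lists standard Docker CLI invocations that fit several of those descriptions; they are assumptions about what the cheat sheet showed, not a recovery of the original text, and the service names and IDs are placeholders.

```python
from typing import Dict, List

# Assumed commands: standard Docker CLI invocations matching the descriptions.
SWARM_AND_CLEANUP: Dict[str, List[str]] = {
    "list services in the swarm": ["docker", "service", "ls"],
    "prune dangling images": ["docker", "image", "prune"],
    "prune all unused images": ["docker", "image", "prune", "-a"],
    "prune the entire system": ["docker", "system", "prune"],
    "leave the swarm": ["docker", "swarm", "leave"],
}


def service_logs(service: str) -> List[str]:
    """Show the logs of one swarm service."""
    return ["docker", "service", "logs", service]


def scale_service(service: str, replicas: int) -> List[str]:
    """Scale a swarm service to the requested number of replicas."""
    if replicas < 0:
        raise ValueError("replicas must not be negative")
    return ["docker", "service", "scale", f"{service}={replicas}"]


def kill_containers(container_ids: List[str]) -> List[str]:
    """Kill the given containers, e.g. the IDs printed by `docker ps -q`."""
    return ["docker", "kill", *container_ids]


for task, command in SWARM_AND_CLEANUP.items():
    print(f"{task}: {' '.join(command)}")
print(" ".join(scale_service("web", 3)))
```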

Contributor -

Sangam biradar - Docker Community Leader

The Jellyfin project and its contributors offer a number of pre-built binary packages to assist in getting Jellyfin up and running quickly on multiple systems.

Container images

Docker

Windows (x86/x64)

Linux

Linux (generic amd64)

Debian

Ubuntu

Container images

Official container image: jellyfin/jellyfin.

LinuxServer.io image: linuxserver/jellyfin.

hotio image: hotio/jellyfin.

Jellyfin distributes official container images on Docker Hub for multiple architectures. These images are based on Debian and built directly from the Jellyfin source code.

Additionally the LinuxServer.io project and hotio distribute images based on Ubuntu and the official Jellyfin Ubuntu binary packages, see here and here to see their Dockerfile.

Note

For ARM hardware and RPi, it is recommended to use the LinuxServer.io or hotio image since hardware acceleration support is not yet available on the native image.

Docker

Docker allows you to run containers on Linux, Windows and MacOS.

The basic steps to create and run a Jellyfin container using Docker are as follows.

Follow the official installation guide to install Docker.

Download the latest container image.

Create persistent storage for configuration and cache data.

Either create two persistent volumes:

Or create two directories on the host and use bind mounts:

Create and run a container in one of the following ways.

Note

The default network mode for Docker is bridge mode. Bridge mode will be used if host mode is omitted. Use host mode for networking in order to use DLNA or an HDHomeRun.

Using Docker command line interface:

Using host networking (--net=host) is optional but required in order to use DLNA or HDHomeRun.

Bind mounts are needed to pass folders from the host OS to the container OS, whereas volumes are maintained by Docker and can be considered easier to back up and control by external programs. For a simple setup, it's considered easier to use bind mounts instead of volumes. Replace jellyfin-config and jellyfin-cache with /path/to/config and /path/to/cache respectively if using bind mounts. Multiple media libraries can be bind mounted if needed:

Note

There is currently an issue with read-only mounts in Docker. If there are submounts within the main mount, the submounts are read-write capable.

Using Docker Compose:

Create a docker-compose.yml file with the following contents:

Then while in the same folder as the docker-compose.yml run:

To run the container in background add -d to the above command.

You can learn more about using Docker by reading the official Docker documentation.
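The command listings for the steps above are not present in this copy. As a hedged sketch, the following Python function assembles a docker run command for the jellyfin/jellyfin image from the pieces the text describes: two storage locations, a media mount, and optional host networking. The container name and the in-container paths /config, /cache and /media are assumptions, as is publishing port 8096 when host networking is not used.

```python
from typing import List


def jellyfin_run_command(config: str, cache: str, media: str,
                         host_network: bool = True) -> List[str]:
    """Build a docker run command; config and cache may be volume names or host paths."""
    command = ["docker", "run", "-d", "--name", "jellyfin"]
    if host_network:
        # Host networking is needed for DLNA or an HDHomeRun.
        command.append("--net=host")
    else:
        # In bridge mode the web port has to be published explicitly.
        command += ["-p", "8096:8096"]
    command += [
        "--volume", f"{config}:/config",  # assumed container path
        "--volume", f"{cache}:/cache",    # assumed container path
        "--mount", f"type=bind,source={media},target=/media",
        "jellyfin/jellyfin",
    ]
    return command


# Named volumes (created beforehand with docker volume create):
print(" ".join(jellyfin_run_command("jellyfin-config", "jellyfin-cache", "/path/to/media")))

# Bind mounts in bridge mode:
print(" ".join(jellyfin_run_command("/path/to/config", "/path/to/cache",
                                    "/path/to/media", host_network=False)))
```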

Hardware Transcoding with Nvidia (Ubuntu)

You are able to use hardware encoding with Nvidia, but it requires some additional configuration. These steps require basic knowledge of Ubuntu but nothing too special.

Adding Package Repositories

First off you'll need to add the Nvidia package repositories to your Ubuntu installation. This can be done by running the following commands:

Installing Nvidia container toolkit

Next we'll need to install the Nvidia container toolkit. This can be done by running the following commands:

After installing the Nvidia Container Toolkit, you'll need to restart the Docker Daemon in order to let Docker use your Nvidia GPU:

Changing the docker-compose.yml

Now that all the packages are in order, let's change the docker-compose.yml to let the Jellyfin container make use of the Nvidia GPU. The following lines need to be added to the file:

Your completed docker-compose.yml file should look something like this:

Note

For Nvidia hardware encoding, the version declared in the docker-compose.yml file needs to be at least 2. However, we recommend sticking with version 2.3, as it has proven to work with nvenc encoding.

Unraid Docker

An Unraid Docker template is available in the repository.

Open the Unraid GUI (at least Unraid 6.5) and click on the 'Docker' tab.

Add the following line under 'Template Repositories' and save the options.

Click 'Add Container' and select 'jellyfin'.

Adjust any required paths and save your changes.

Kubernetes

A community project to deploy Jellyfin on Kubernetes-based platforms exists at their repository. Any issues or feature requests related to deployment on Kubernetes-based platforms should be filed there.

Podman

Podman allows you to run containers as non-root. It's also the officially supported container solution on RHEL and CentOS.

Steps to run Jellyfin using Podman are almost identical to Docker steps:

Install Podman:

Download the latest container image:

Create persistent storage for configuration and cache data:

Either create two persistent volumes:

Or create two directories on the host and use bind mounts:

Create and run a Jellyfin container:

Note that Podman doesn't require root access and it's recommended to run the Jellyfin container as a separate non-root user for security.

If SELinux is enabled, you need to either use --privileged or supply the z volume option to allow Jellyfin to access the volumes.

Replace jellyfin-config and jellyfin-cache with /path/to/config and /path/to/cache respectively if using bind mounts.

To mount your media library read-only append ':ro' to the media volume:

To run as a systemd service see Running containers with Podman and shareable systemd services.
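The SELinux and read-only volume options mentioned above can be combined in a single volume specification. The Python helper below is a small sketch (the function name is hypothetical) that builds the value passed to the --volume option, with the options separated by commas after the target path.

```python
def volume_option(source: str, target: str,
                  read_only: bool = False, selinux_shared: bool = False) -> str:
    """Build a source:target[:options] string for the --volume flag."""
    flags = []
    if read_only:
        flags.append("ro")
    if selinux_shared:
        # z relabels the content so that containers may share it under SELinux.
        flags.append("z")
    spec = f"{source}:{target}"
    if flags:
        spec += ":" + ",".join(flags)
    return spec


print(volume_option("jellyfin-config", "/config", selinux_shared=True))
print(volume_option("/path/to/media", "/media", read_only=True, selinux_shared=True))
```

The container paths /config and /media are assumptions here; use whatever targets your run command mounts.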

Cloudron

Cloudron is a complete solution for running apps on your server and keeping them up-to-date and secure. On your Cloudron you can install Jellyfin with a few clicks via the app library and updates are delivered automatically.

The source code for the package can be found here. Any issues or feature requests related to deployment on Cloudron should be filed there.

Windows (x86/x64)

Windows installers and builds in ZIP archive format are available here.

Warning

If you installed a version prior to 10.4.0 using a PowerShell script, you will need to manually remove the service using the command nssm remove Jellyfin and uninstall the server by removing all the files manually. You might also need to move the data files to the correct location, or point the installer at the old location.

Warning

The 32-bit or x86 version is not recommended. ffmpeg and its video encoders generally perform better as a 64-bit executable due to the extra registers provided. This means that the 32-bit version of Jellyfin is deprecated.

Install using Installer (x64)

Install

Download the latest version.

Run the installer.

(Optional) When installing as a service, pick the service account type.

If everything was completed successfully, the Jellyfin service is now running.

Open your browser at http://localhost:8096 to finish setting up Jellyfin.

Update

Download the latest version.

Run the installer.

If everything was completed successfully, the Jellyfin service is now running as the new version.

Uninstall

Go to Add or remove programs in Windows.

Search for Jellyfin.

Click Uninstall.

Manual Installation (x86/x64)

Install

Download and extract the latest version.

Create a folder jellyfin at your preferred install location.

Copy the extracted folder into the jellyfin folder and rename it to system.

Create jellyfin.bat within your jellyfin folder containing:

To use the default library/data location at %localappdata%:

To use a custom library/data location (Path after the -d parameter):

To use a custom library/data location (Path after the -d parameter) and disable the auto-start of the webapp:

Run

Open your browser at http://<--Server-IP-->:8096 (if auto-start of webapp is disabled)

Update

Stop Jellyfin

Rename the Jellyfin system folder to system-bak

Download and extract the latest Jellyfin version

Copy the extracted folder into the jellyfin folder and rename it to system

Run jellyfin.bat to start the server again

Rollback

Stop Jellyfin.

Delete the system folder.

Rename system-bak to system.

Run jellyfin.bat to start the server again.

MacOS

MacOS Application packages and builds in TAR archive format are available here.

Install

Download the latest version.

Drag the .app package into the Applications folder.

Start the application.

Open your browser at http://127.0.0.1:8096.

Upgrade

Download the latest version.

Stop the currently running server either via the dashboard or using the application icon.

Drag the new .app package into the Applications folder and click yes to replace the files.

Start the application.

Open your browser at http://127.0.0.1:8096.

Uninstall


Stop the currently running server either via the dashboard or using the application icon.

Move the .app package to the trash.

Deleting Configuration

This will delete all settings and user information. This applies for the .app package and the portable version.

Delete the folder ~/.config/jellyfin/

Delete the folder ~/.local/share/jellyfin/

Portable Version

Download the latest version

Extract it into the Applications folder

Open Terminal and type cd followed with a space then drag the jellyfin folder into the terminal.

Type ./jellyfin to run jellyfin.

Open your browser at http://localhost:8096

Closing the terminal window will end Jellyfin. Running Jellyfin in screen or tmux can prevent this from happening.

Upgrading the Portable Version

Download the latest version.

Stop the currently running server either via the dashboard or using CTRL+C in the terminal window.

Extract the latest version into Applications

Open Terminal and type cd followed with a space then drag the jellyfin folder into the terminal.

Type ./jellyfin to run jellyfin.

Open your browser at http://localhost:8096

Uninstalling the Portable Version

Stop the currently running server either via the dashboard or using CTRL+C in the terminal window.

Move the /Applications/jellyfin-version folder to the Trash. Replace version with the actual version number you are trying to delete.

Using FFmpeg with the Portable Version

The portable version doesn't come with FFmpeg by default, so to install FFmpeg you have three options.

use the package manager homebrew by typing brew install ffmpeg into your Terminal (here's how to install homebrew if you don't have it already),

download the most recent static build from this link (compiled by a third party see this page for options and information), or

compile from source available from the official website

More detailed download options, documentation, and signatures can be found.

If using static build, extract it to the /Applications/ folder.

Navigate to the Playback tab in the Dashboard and set the path to FFmpeg under FFmpeg Path.

Linux

Linux (generic amd64)

Generic amd64 Linux builds in TAR archive format are available here.

Installation Process

Create a directory in /opt for jellyfin and its files, and enter that directory.

Download the latest generic Linux build from the release page. The generic Linux build ends with 'linux-amd64.tar.gz'. The rest of these instructions assume version 10.4.3 is being installed (i.e. jellyfin_10.4.3_linux-amd64.tar.gz). Download the generic build, then extract the archive:

Create a symbolic link to the Jellyfin 10.4.3 directory. This allows an upgrade by repeating the above steps and enabling it by simply re-creating the symbolic link to the new version.

Create four sub-directories for Jellyfin data.

If you are running Debian or a derivative, you can also download and install an ffmpeg release built specifically for Jellyfin. Be sure to download the latest release that matches your OS (4.2.1-5 for Debian Stretch assumed below).

If you run into any dependency errors, run this and it will install them and jellyfin-ffmpeg.

Due to the number of command line options that must be passed, it is easiest to create a small script to run Jellyfin.

Then paste the following commands and modify as needed.

Assuming you want Jellyfin to run as a non-root user, chown all files and directories to your normal login user and group. Also make the startup script above executable.

Finally you can run it. You will see lots of log information when it runs; this is normal. Setup is as usual in the web browser.
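The symbolic link step in the process above is what makes upgrades cheap. The Python sketch below shows the idea in a temporary directory standing in for /opt/jellyfin: each version lives in its own directory and a link named jellyfin is re-pointed at the new one. The directory names and the 10.5.0 version are hypothetical, and the sketch assumes a POSIX system.

```python
import os
import tempfile


def point_symlink(link_path: str, target: str) -> None:
    """Create link_path, or replace it, so that it points at target."""
    temporary = link_path + ".new"
    if os.path.lexists(temporary):
        os.remove(temporary)
    os.symlink(target, temporary)
    # Renaming over the old link swaps it in a single step.
    os.replace(temporary, link_path)


install_root = tempfile.mkdtemp(prefix="opt-jellyfin-")
for version_dir in ("jellyfin_10.4.3", "jellyfin_10.5.0"):
    os.makedirs(os.path.join(install_root, version_dir))

link = os.path.join(install_root, "jellyfin")
point_symlink(link, "jellyfin_10.4.3")  # initial install
point_symlink(link, "jellyfin_10.5.0")  # upgrade: only the link changes

print(os.readlink(link))
```

Because the startup script refers to the link rather than to a versioned directory, rolling back is a matter of pointing the link at the previous directory again.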

Portable DLL

Platform-agnostic .NET Core DLL builds in TAR archive format are available here. These builds use the binary jellyfin.dll and must be loaded with dotnet.

Arch Linux

Jellyfin can be found in the AUR as jellyfin, jellyfin-bin and jellyfin-git.

Fedora

Fedora builds in RPM package format are available here for now but an official Fedora repository is coming soon.

You will need to enable rpmfusion as ffmpeg is a dependency of the jellyfin server package

Note

You do not need to manually install ffmpeg, it will be installed by the jellyfin server package as a dependency

Install the jellyfin server

Install the jellyfin web interface

Enable jellyfin service with systemd

Open jellyfin service with firewalld

Note

This will open the following ports:

8096 TCP, used by default for HTTP traffic; you can change this in the dashboard.

8920 TCP, used by default for HTTPS traffic; you can change this in the dashboard.

1900 UDP, used for service auto-discovery; this is not configurable.

7359 UDP, used for auto-discovery; this is not configurable.

Reboot your box

Go to localhost:8096 or ip-address-of-jellyfin-server:8096 to finish setup in the web UI

CentOS

CentOS/RHEL 7 builds in RPM package format are available here and an official CentOS/RHEL repository is planned for the future.

The default CentOS/RHEL repositories don't carry FFmpeg, which the RPM requires. You will need to add a third-party repository which carries FFmpeg, such as RPM Fusion's Free repository.

You can also build Jellyfin's version of FFmpeg on your own. This includes gathering the dependencies and compiling and installing them. Instructions can be found at the FFmpeg wiki.


Debian

Repository

The Jellyfin team provides a Debian repository for installation on Debian Stretch/Buster. Supported architectures are amd64, arm64, and armhf.

Note

Microsoft does not provide a .NET for 32-bit x86 Linux systems, and hence Jellyfin is not supported on the i386 architecture.

Install HTTPS transport for APT as well as gnupg and lsb-release if you haven't already.

Import the GPG signing key (signed by the Jellyfin Team):

Add a repository configuration at /etc/apt/sources.list.d/jellyfin.list:

Note

Supported releases are stretch, buster, and bullseye.

Update APT repositories:

Install Jellyfin:

Manage the Jellyfin system service with your tool of choice:

Packages

Raw Debian packages, including old versions, are available here.

Note

The repository is the preferred way to obtain Jellyfin on Debian, as it contains several dependencies as well.

Download the desired jellyfin and jellyfin-ffmpeg.deb packages from the repository.

Install the downloaded .deb packages:

Use apt to install any missing dependencies:

Manage the Jellyfin system service with your tool of choice:

Ubuntu

Migrating to the new repository

Previous versions of Jellyfin included Ubuntu under the Debian repository. This has now been split out into its own repository to better handle the separate binary packages. If you encounter errors about the ubuntu release not being found and you previously configured an ubuntu jellyfin.list file, please follow these steps.


Remove the old /etc/apt/sources.list.d/jellyfin.list file:

Proceed with the following section as written.

Ubuntu Repository

The Jellyfin team provides an Ubuntu repository for installation on Ubuntu Xenial, Bionic, Cosmic, Disco, Eoan, and Focal. Supported architectures are amd64, arm64, and armhf. Only amd64 is supported on Ubuntu Xenial.

Note

Microsoft does not provide a .NET for 32-bit x86 Linux systems, and hence Jellyfin is not supported on the i386 architecture.

Install HTTPS transport for APT if you haven't already:

Enable the Universe repository to obtain all the FFMpeg dependencies:

Note

If the above command fails, you will need to install the software-properties-common package. This can be achieved with the following command: sudo apt-get install software-properties-common

Import the GPG signing key (signed by the Jellyfin Team):

Add a repository configuration at /etc/apt/sources.list.d/jellyfin.list:

Note

Supported releases are xenial, bionic, cosmic, disco, eoan, and focal.

Update APT repositories:

Install Jellyfin:

Manage the Jellyfin system service with your tool of choice:

Ubuntu Packages

Raw Ubuntu packages, including old versions, are available here.

Note

The repository is the preferred way to install Jellyfin on Ubuntu, as it contains several dependencies as well.


Enable the Universe repository to obtain all the FFMpeg dependencies, and update repositories:

Download the desired jellyfin and jellyfin-ffmpeg.deb packages from the repository.

Install the required dependencies:

Install the downloaded .deb packages:

Use apt to install any missing dependencies:

Manage the Jellyfin system service with your tool of choice:

Migrating native Debuntu install to docker

It's possible to map your local installation's files to the official docker image.

Note

You need to have exactly matching paths for your files inside the docker container! This means that if your media is stored at /media/raid/ this path needs to be accessible at /media/raid/ inside the docker container too - the configurations below do include examples.

To guarantee proper permissions, get the uid and gid of your local jellyfin user and jellyfin group by running the following command:

You need to replace the <uid>:<gid> placeholder below with the correct values.
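The two requirements in this section, identical paths inside and outside the container and a matching user and group, can be expressed as a few docker run arguments. The Python sketch below builds them; the function name and the example IDs are hypothetical, so substitute the uid and gid reported for your local jellyfin user.

```python
from typing import List


def migration_run_args(uid: int, gid: int, media_paths: List[str]) -> List[str]:
    """Build --user and --volume arguments that keep host paths unchanged."""
    args = ["--user", f"{uid}:{gid}"]
    for path in media_paths:
        # Mount each path at the same location inside the container.
        args += ["--volume", f"{path}:{path}"]
    return args


# Hypothetical values: uid 110, gid 117, media stored under /media/raid/.
print(" ".join(migration_run_args(110, 117, ["/media/raid/"])))
```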

Using docker

Using docker-compose

0 notes

Text

Open-Source App Lets Anyone Create a Virtual Army of Hackintoshes

The average person probably doesn’t think of MacOS as … scalable. It’s intended as a desktop operating system, and while it’s a very functional operating system, Apple generally expects it to run on a single piece of hardware.

But as any developer or infrastructure architect can tell you, virtualization is an impressive technique that allows programmers and infrastructure pros to expand reach and scale things up far beyond a single user. And a Github project that has gotten a bit of attention in recent months aims to make MacOS scalable in ways that it has basically never been.

Its secret weapon? A serial code generator. Yes, just like the kind you sheepishly used to get out of paying for Windows XP or random pieces of shareware back in the day. But rather than generating serials for software, Docker-OSX has the ability to generate serial codes for unique pieces of MacOS hardware, and its main developer, an open-source developer and security researcher who goes by the pseudonym Sick Codes, recently released a standalone serial code generator that can replicate codes for nonexistent devices by the thousands. Just type in a command, and it will set up a CSV file full of serial codes.

“You can generate hundreds and thousands of serial numbers, just like that,” Sick Codes, who used a pseudonym due to the nature of his work, said. “And it just generates a massive list.”

Why would you want this? Easy—a valid serial code allows you to use Apple-based tools such as iMessage, iCloud, and the App Store inside of MacOS. It’s the confirmation that you’re using something seen as valid in the eyes of Apple.

Previously, this process was something of guesswork. Hackintosh users have long had this problem, but have basically had to use guesswork to figure out valid serial codes so they could use iMessage. (In my Hackintoshing endeavors, for example, I just went on the Apple website and … uh, guessed.) Sick Codes said he developed a solution to this problem after noticing that the serials for the client would get used up.

“In the Docker-OSX client, we were always in the same serials,” he said in an interview. “Obviously, no one can log into iMessage that way.”

But when he looked around to see how others were coming up with unique ways to generate product serials, he found more myth than reality. So he went through a variety of tests, uncovering a method to generate consistently reliable serial numbers, as well as a low-selling device that would be unlikely to have a lot of serial numbers in the wild—and landed on the iMac Pro.

“I actually went through, and I've got like 15 iMac Pros in my Apple account now, and it says that they're all valid for iMessage,” he said. “Obviously I was going to delete them after, but I was just testing, one by one, seeing if that's the reason why it does work.”

Beyond making it possible to use iMessage to hold a conversation in a VM, he noted that random security codes like this are actually desirable for security researchers for bug-reporting purposes. Sick Codes adds that it is also an effective tool that could be used as one part of the process for jailbreaking an iPhone.

(At one point, he speculated, possibly in jest, that he might have been the reason the iMac Pro was recently discontinued.)

An Army of Virtual Hackintoshes

On its own, the serial code thing is interesting, but the reason it exists is because MacOS is not currently designed to work at a scale fitting of Docker, a popular tool for containerization of software that can be replicated in a cloud environment. It could—with its use of the Mach kernel and roots in BSD Unix, there is nothing technically stopping it—but Apple does not encourage use of VMs in the same way that, say, Linux does.

A side effect of hacking around Apple’s decision not to directly cater to the market is that it could help make Hackintoshing dead simple.

Let’s take a step back to explain this a little bit. Hackintoshing, throughout its history, has tended to involve installing MacOS on “bare metal,” or on the system itself, for purposes of offering more machine choice or maximizing power.

But virtualization, by its nature, allows end users to work around differences in machines by putting an abstraction layer between the system and its many elements. And virtualization is incredibly sophisticated these days. Docker-OSX relies on kernel-based virtual machines, or KVMs, Linux-based hypervisors that allow virtual machines to get very close to the Linux kernel, able to run at nearly full speed through a common open-source emulator, QEMU.

Comparable to things like Oracle’s VirtualBox or the Parallels virtualization tool on MacOS, KVMs are very technical in the way they work, and are often managed through the command line, requiring a complex mishmash of code that can be hard to figure out. (One common challenge is getting graphics cards to work, as the main interface is already using the resource, requiring something known as a “passthrough.”)

But the benefit of KVMs is that, if you tweak them the right way, you can get nearly the full performance of the main machine, something that has made KVMs popular for, say, letting Linux users play Windows games when the desire strikes. And since they’re disk images on hard drives, backing one up is as easy as duplicating the file.

At the same time, improvements to Hackintoshing have opened up new possibilities for doing things. In the past year or so, the Clover approach of Hackintoshing (as I used in this epic piece) has given way to a new boot tool, OpenCore, and a more “vanilla” approach to Hackintoshing that leaves the operating system itself in a pure form.

The benefit of Docker-OSX is that, while command-line codes are required (and while you’ll still need to do passthrough to take advantage of a GPU), it hides much of the complicated stuff away from the end user both on the KVM side and the Hackintosh side. (And, very important for anything involving a project like this: It is incredibly well-documented, with many use cases covered.) Effectively, if you know how to install Docker, you can whip up a machine. Or a dozen. Or, depending on your workload, a thousand.

Sick Codes explained this to me by whipping up a DigitalOcean image in which he at one point put four separate installs of MacOS on the screen, each using a modest 2 gigabytes of RAM. I was able to interact with them over a VNC connection, which is basically nerd heaven if you’re a fan of virtualization.

“Why is it better than Hackintosh? It’s not Hackintosh, it’s like your own army of virtual throwaway Hackintoshes,” Sick Codes explained.

There are two areas where this approach comes particularly in handy—for programming and compiling code for Apple-based platforms such as iOS and iPad OS, which benefit from scale, and for security research, which has seen a rise in interest in recent years.

With more than 50,000 downloads—including some by known companies—and, in one case, a container so large that it won’t even fit on the Docker Hub website, Docker-OSX has proven a useful choice for installing virtual Macs at scale.

Macs in the Server Room

In a way, Apple kind of set things in motion for an open-source solution like this to emerge, in part because of the unusual (and for a time, unspoken) restrictions that it puts on virtual machines.

Over the years, a niche of Apple-specific cloud providers, most notably MacStadium, has emerged to help serve the market for development use cases. Rather than chopping up single machines into small chunks, as providers like DigitalOcean do, these providers have users rent machines for days or weeks at a time, leading to unusual situations like the company buying thousands of 2013 Mac Pros for customers six years after their release.

(MacStadium offers a cloud-based competitor to Docker-OSX, Orka.)

Apple does not sell traditional server hardware that could be better partitioned out in a server room, instead recommending Mac Minis, and with the release of Big Sur, it put in a series of guidelines in its end user license agreement that allowed for virtualization in the way that MacStadium was doing things—but not in the more traditional rent-by-the-hour form. (Competitors, such as Amazon Web Services, have also started selling virtualized Macs under this model.)

Licensing agreements aside, given the disparity between Apple’s devices and how the rest of the cloud industry doles out infrastructure, perhaps it was inevitable someone was going to make something like Docker-OSX. And again, the tool turns things that used to be a headache, like generating unique serial codes for virtual Macs, into something painless.

“If you run a [command-line] tag that says, generate unique, and then set it to true, it will just make your new Mac with a new serial number that you can use to log straight into iMessage,” Sick Codes explained. “If you keep doing that, keep logging in, you'll have like 45 Macs in your account, and they'll all be valid Macs.”
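As a rough sketch of what that looks like at scale, the Python snippet below assembles (but does not run) one `docker run` command per virtual Mac. The `GENERATE_UNIQUE` variable mirrors the “generate unique” setting Sick Codes describes; its exact spelling, the image tag, the port numbers, and the helper name `build_docker_osx_command` are assumptions for illustration and should be checked against the Docker-OSX documentation.

```python
import shlex


def build_docker_osx_command(index, image="sickcodes/docker-osx:auto", base_ssh_port=50922):
    """Return the argument list for one throwaway macOS container.

    Assumptions (not confirmed by the article): the image tag, the SSH port
    mapping, and the exact spelling of the GENERATE_UNIQUE variable.
    """
    host_port = base_ssh_port + index
    return [
        "docker", "run", "-it",
        "--device", "/dev/kvm",        # expose the host's KVM device to the container
        "-p", f"{host_port}:10022",    # give each container its own host port
        "-e", "GENERATE_UNIQUE=true",  # request a fresh serial number per machine
        image,
    ]


# Build commands for three virtual Macs and print them for review.
commands = [build_docker_osx_command(i) for i in range(3)]
for cmd in commands:
    print(shlex.join(cmd))
```

Printing the commands rather than executing them keeps the sketch safe to run on a machine without Docker or KVM; each printed line can be pasted into a shell once the flags have been verified.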

In recent years, companies like Corellium, which sells access to virtualized smartphones to developers and security researchers, have effectively built their services without worrying about EULA limitations and faced lawsuits from Apple over it. Sick Codes, generally working in the open-source community and helping to uncover technical issues, is very much in this spirit.

It’s possible that something might happen to stop the spread of fake iMac Pro serial codes in virtual machines all over the internet—as I started reporting this, MacRumors revealed that, according to an internal support document, Apple is about to redo its approach to serial numbers to make the numbers more random and harder to mimic. (Repair advocates are not happy about this.) But there’s only so much Apple could do about the machines currently on the market, given that there are so many millions of them.

But for people who want to install MacOS on a cheap box somewhere and don’t care about things like Apple Silicon, it’s now as easy as installing Linux, installing Docker, and typing in a couple of commands. Sick Codes noted that, beyond the scalability and security advantages, this opens up opportunities for users who can’t afford the “Apple tax.”

“Feels pretty wholesome knowing anyone can participate in Apple's bug bounty program now, or publish iOS and Mac apps,” Sick Codes said. “App development shouldn't be only for people who can afford it.”

Open-Source App Lets Anyone Create a Virtual Army of Hackintoshes syndicated from https://triviaqaweb.wordpress.com/feed/

Why Enterprises Use ASP.NET Core for Cutting-Edge Software Development?

There was a time when developing a web application for a single platform was enough. But as markets and technology evolve, solutions now need to reach a mass audience. Large companies and enterprises are constantly looking for technology that blends cutting-edge functionality with performance, so they can deploy modern, innovative solutions that millions of people can access.

While millions of developers still rely on ASP.NET MVC to build their applications, the newer ASP.NET Core framework offers a range of features sufficient to build modern-day apps. Though ASP.NET 4.x has been a huge success with developers, ASP.NET Core is an upgraded version with a leaner, more modular architecture.

In this post, we’ll discuss why developers should use ASP.NET Core and how it helps businesses and enterprises build modern applications.

Why has ASP.NET Core gained immense popularity among developers?

Bundled with practical features, ASP.NET Core is widely popular among developers building customized enterprise applications. This high-performing, cross-platform framework can be used to build modern cloud-based applications. With it, you can:

Develop progressive web apps, services, IoT applications, and mobile back-ends.

Run web apps on the .NET Framework or .NET Core.

Develop on multiple platforms, including macOS, Windows, and Linux.

Deploy the application in the cloud or on-premises.

How does ASP.NET Core empower businesses and enterprises to build modern, scalable applications?

Below are a few reasons that compel businesses and enterprises to invest in ASP.NET Core.

1. High performance

With ASP.NET Core, you can count on strong performance, scalability, and flexibility, which make code easy to write, maintain, and reuse. Its automatic code optimization helps improve performance, and the Kestrel web server makes .NET Core one of the fastest and most robust frameworks for building cross-platform apps.

2. Adaptable across architecture

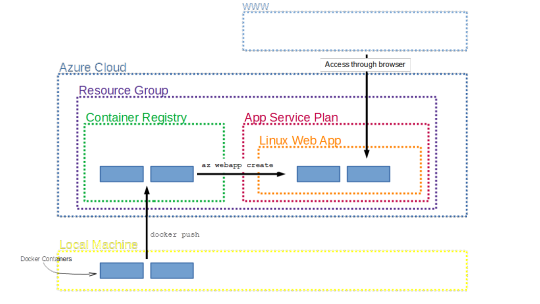

One of the biggest advantages of ASP.NET Core is that it runs across multiple architectures, including x64, x86, and ARM, without any change in behavior. Earlier versions of ASP.NET worked only on Windows. Now ASP.NET Core offers a standard library for Linux, Windows, macOS, and Xamarin, and developers can also deploy their applications with Docker.

3. Model Binding

With the model binding feature, developers can extract information from form fields, route data, and query strings. It supplies that information to controllers and Razor Pages through method parameters and public properties, and it converts the incoming strings to .NET types.
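To make the idea concrete, here is a minimal, framework-free sketch in Python of what a model binder does: it gathers string values from form fields, route data, and the query string, then converts them to the types the handler declares. This is not ASP.NET Core's implementation; the function `bind_model`, the `ProductQuery` model, and the lookup order are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class ProductQuery:
    # Hypothetical model: the typed values a handler wants to receive.
    id: int
    category: str
    in_stock: bool


def _convert(raw, target_type):
    """Convert one incoming string to the declared type."""
    if target_type is bool:
        return raw.strip().lower() in ("true", "1", "on")
    return target_type(raw)


def bind_model(model_cls, form, route, query):
    """Build model_cls from string values found in the request sources."""
    values = {}
    for field in fields(model_cls):
        for source in (form, route, query):  # illustrative lookup order
            if field.name in source:
                values[field.name] = _convert(source[field.name], field.type)
                break
        else:
            # No source supplied this field.
            raise ValueError(f"no value supplied for '{field.name}'")
    return model_cls(**values)


bound = bind_model(
    ProductQuery,
    form={},
    route={"id": "42"},
    query={"category": "books", "in_stock": "true"},
)
print(bound)
```

The handler never touches raw strings: it receives a `ProductQuery` whose `id` is already an integer and whose `in_stock` is already a boolean, which is the convenience model binding provides.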

4. Interactive Web UI

With the help of Blazor, developers can build interactive client-side web UI. Apart from writing server-side logic in .NET, you can build the UI in the form of HTML and CSS for web and mobile support.

With Blazor, developers can share the same code, such as model classes, between the client and the server.

5. Quicker Application Deployment

It’s quite easy to include ASP.NET Core in your app, whether you install it side-by-side, machine-wide, or user-wide. So, if you’re running an ASP.NET development company, your development team can apply its knowledge of containers and microservices for quick app deployment.

6. Cross-platform compatible

Cross-platform compatibility is one of the first things that comes to mind when you’re building an application. The “write once, run anywhere” approach makes ASP.NET Core an ideal choice for businesses and enterprises building an app.

.NET Core is a free, open-source framework that enables apps to run on all major operating systems, including Linux, Windows, and macOS.

The framework can run apps on devices, in the cloud, and on IoT hardware. It also supports four cross-platform scenarios: libraries, command-line apps, web APIs, and .NET Core web apps.

A glance at top ASP.NET Core-based open-source projects

1. nopCommerce

nopCommerce is a real-time, open-source eCommerce shopping cart app that is highly customizable to suit your business needs. GitHub: https://github.com/nopSolutions/nopCommerce Stars: 5.2k

2. Squidex

Squidex is a headless CMS and content management hub built using ASP.NET Core with OData and CQRS patterns. GitHub: https://github.com/Squidex/squidex Stars: 1.2k

3. Miniblog.Core

Miniblog.Core is a blog engine developed using ASP.NET Core. GitHub: https://github.com/madskristensen/Miniblog.Core Stars: 985

4. StarWars

StarWars is a popular GraphQL-based ASP.NET Core application. GitHub: https://github.com/JacekKosciesza/StarWars Stars: 527

Is your business looking to build its next project on ASP.NET Core?

Microsoft’s .NET Core development framework is a huge step up from the .NET Framework, giving developers a great degree of flexibility in how they build on and use the platform. Consequently, ASP.NET and other components of the .NET family are used to build secure, managed, and robust web applications, desktop software, IoT apps, and cloud services. It not only reduces development costs but also lets you target the desired platform and operating system to reach enterprise goals while remaining efficient and scalable. So, if your business is looking to build cutting-edge software, hire an ASP.NET Core developer to develop your solution at an affordable cost.

Concluding Note