#Docker container development

Docker Guide: Introduction, Benefits, and Basic Example

This comprehensive guide offers an accessible introduction to Docker, a powerful platform for developing, shipping, and running applications in isolated environments. We'll demystify what Docker is, explore its key benefits such as portability, scalability, and efficiency, and walk you through a practical, basic example to get you started with containerization.

What Docker is and how it works

Explain the benefits of containerization in Java development.

Containerization offers several benefits in Java development, as it does in many other programming languages and application environments. Here are the key advantages of using containers in Java development: Consistency: Containers encapsulate an application and all its dependencies, including Java Runtime Environment (JRE) versions, libraries, and configurations. This ensures that the…

Docker Development Environment: Test your Containers with Docker Desktop

One of the benefits of Docker containers is that they give you quick, easy-to-set-up test/dev environments on your local machine. Let’s see how we can set up a Docker development environment with Docker Desktop. Table of contents: Quick overview of Docker Development Environment; Setting Up Your Docker Development Environment with Docker Desktop; 1. Install Docker Desktop; 2. Create…

Is the belief at all valid that ultimately there is nothing much we in the imperial core can do for the global south (i.e. Palestine) and that liberation is largely in their hands only? Was there any time historically where that wasn't the case?

Maybe I am just doom and glooming but it really doesn't feel like there is much we can affect (though I still attend protests and do whatever my party tells me to, I don't air out these thoughts because I don't think they are productive)

Primarily I feel like building a base here for when shit goes south is the only thing we can do

My friend, we can't forget that, while imperialism is committed outside of our reach, it is fueled, supported, and justified in our countries. National liberation movements fight in their own frontlines, and we fight in the rearguard. If you have the impression that any real progress is impossible from our position, that is a product of the very limited development of the subjective conditions in your country. You and I have seen a myriad of protests and encampments this last year, which have had overwhelmingly no material effects on the genocide, but this is not inescapable.

In Greece, where the KKE is a legitimate communist party in the eyes of a significant portion of the Greek working class, their organization in and out of the workplace is very capable. On the 17th of October they, co-organizing with the relevant union and other entities (small note because when this happened some Tumblr users seemed to misspeak, this action would have been impossible without the help and involvement of the KKE, take a look at the US to see what trade unions do without communist influence), blocked a shipment of bullets to Israel:

And merely a week ago, they blocked another shipment of ammunition meant to further fuel the imperialist war in Ukraine:

The differentiating factor in Greece, one arguably not present anywhere else in Europe and North America, is its strong and established communist party; even its mere presence exerts an indirect influence on the broader working class, communist or not.

So are the rest of us meant to sit in our milquetoast protests and watch on with envy at the Greeks? No, because these are subjective conditions, and we have control over them. Even if most actions we do don't achieve anything materially, we gain experience, and the base for a proper organization of our class is built up. It's not just building that base for when something goes wrong in our countries, it's building a better base for the very next mobilization, the next action, the next imperialist aggression. The student movement of the imperial core is better off now in terms of lessons to be learned after the encampments than if they hadn't done anything (and the utility of the encampments wasn't completely null anyway, some unis in Spain have ceased all economic and academic relations with Israel).

BRB... just upgrading Python

CW: nerdy, technical details.

Originally, MLTSHP (well, MLKSHK back then) was developed for Python 2. That was fine in 2010, but 15 years later, Python 2 is pretty ancient and unsupported. January 1st, 2020 was the official sunset for Python 2, and 5 years later, we’re still running things with it. It’s served us well, but we have to transition to Python 3.

Well, I bit the bullet and started working on that in earnest in 2023. That work resulted in a working version of MLTSHP on Python 3. So, just ship it, right? Well, the upgrade process basically required upgrading all Python dependencies as well. And some (flyingcow and torndb, in particular) were never really official, public packages, so those had to be adopted into MLTSHP and upgraded as well. All those changes called for some special handling: namely, setting up an additional web server that could be tested against the production database (unit tests can only go so far).

Here’s what that change comprised: 148 files changed, 1923 insertions, 1725 deletions. Most of those changes were part of the first commit for this branch, made on July 9, 2023 (118 files changed).

But by the end of that July, I took a break from this task - I could tell it wasn’t something I could tackle in my spare time at that time.

Time passes…

Fast forward to late 2024, and I take some time to revisit the Python 3 release work. Making a production web server for the new Python 3 instance was another big update, since I wanted the Docker container OS to be on the latest LTS edition of Ubuntu. For 2023, that was 20.04, but in 2025, it’s 24.04. I also wanted others to be able to test the server, which means the CDN layer would have to be updated to direct traffic to the test server (without affecting general traffic); I went with a client-side cookie that could target the Python 3 canary instance.
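In the real setup this routing decision lives in the CDN layer. Purely as an illustration of the logic, here is a minimal Python sketch of cookie-based canary routing: only requests that carry an opt-in cookie reach the Python 3 instance, so general traffic is unaffected. The cookie name `py3_canary` and the backend names are hypothetical; the post doesn't give the real ones.

```python
from http.cookies import CookieError, SimpleCookie
from typing import Optional

# Hypothetical names: the post does not say what the cookie or backends are called.
CANARY_COOKIE = "py3_canary"
DEFAULT_BACKEND = "production"
CANARY_BACKEND = "python3-canary"


def choose_backend(cookie_header: Optional[str]) -> str:
    """Pick a backend for one request based on its Cookie header.

    Only requests that explicitly carry CANARY_COOKIE=1 go to the canary;
    everything else (general traffic) keeps hitting the default backend.
    """
    if not cookie_header:
        return DEFAULT_BACKEND
    cookies = SimpleCookie()
    try:
        cookies.load(cookie_header)
    except CookieError:
        # A malformed header should never break routing; fall back safely.
        return DEFAULT_BACKEND
    morsel = cookies.get(CANARY_COOKIE)
    if morsel is not None and morsel.value == "1":
        return CANARY_BACKEND
    return DEFAULT_BACKEND


if __name__ == "__main__":
    print(choose_backend("session=abc; py3_canary=1"))  # python3-canary
    print(choose_backend("session=abc"))  # production
```

Defaulting to the production backend on anything unexpected is the safe design choice here: a bug in the canary check can only keep testers off the canary, never push the public onto it.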

In addition to these upgrades, there were others to consider — MySQL, for one. We’ve been running MySQL 5, but version 9 is out. We settled on version 8.0 for now (it’s just the version you get with Ubuntu 24.04), but could also upgrade to 8.4. RabbitMQ was another server component that was falling behind (3.5.7), so upgrading it to 3.12.1 (the latest version for Ubuntu 24.04) seemed proper.

One more thing - our datacenter. We’ve been using Linode’s Fremont region since 2017. It’s been fine, but there are some emerging Linode features that I’ve been wanting. VPC support, for one. And object storage (basically the same as Amazon’s S3, but local, so no egress cost to and from Linode servers). Neither was available in Fremont, so I decided to go with their Chicago region for the upgrade.

Now we’re talking… this is now not just a “push a button” release, but a full-fledged, build-everything-up-and-tear-everything-down kind of release that might actually have some downtime (while trying to keep it short)!

I built a release plan document and worked through it. The key to the smooth upgrade I wanted was to make the cutover as seamless as possible. Picture it: once everything is set up for the new service in Chicago - new database host, new web servers and all, what do we need to do to make the switch almost instant? The answer is Fastly, our CDN service.

All traffic to our service runs through Fastly. A request to the site comes in, Fastly routes it to the appropriate host, which in turn speaks to the appropriate database. So, to transition from one datacenter to the other, we basically need to change the hosts Fastly speaks to. Those hosts will already be set to talk to the new database. But that’s a key wrinkle - the new database…

The new database needs the data from the old database. And to make for a seamless transition, it needs to be up to the second in step with the old database. To do that, we have to take a copy of the production data and get it up and running on the new database. Then, we need some process that will copy any new data to it since the last sync. This sounded a lot like replication to me, but the more I looked at doing it that way, the less confident I was that I could set it up without bringing the production server down. That’s because any replica needs to start in a synchronized state, and you can’t really achieve that with a live database. So, instead, I created my own sync process that would copy new data on a periodic basis as it came in.
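The post doesn’t describe how the custom sync process works. One common approach, assumed here purely for illustration, is a high-water-mark copy: look up the largest primary key already present in the new database and periodically copy any newer rows from the old one. Here is a minimal Python sketch using in-memory SQLite databases as stand-ins for the two MySQL servers; the `posts` table is hypothetical. Note that this only picks up inserts; updates and deletes would need something extra, such as an `updated_at` column.

```python
import sqlite3


def sync_new_rows(source: sqlite3.Connection, target: sqlite3.Connection, table: str) -> int:
    """Copy rows from source to target whose integer `id` exceeds target's max id.

    Assumes both databases share the same schema and the table is append-only
    with an increasing integer primary key `id`. `table` is interpolated into
    the SQL, so it must be a trusted, hard-coded name. Returns rows copied.
    """
    (last_id,) = target.execute(f"SELECT COALESCE(MAX(id), 0) FROM {table}").fetchone()
    rows = source.execute(
        f"SELECT * FROM {table} WHERE id > ? ORDER BY id", (last_id,)
    ).fetchall()
    if rows:
        placeholders = ", ".join("?" for _ in rows[0])
        target.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
        target.commit()
    return len(rows)


if __name__ == "__main__":
    old_db = sqlite3.connect(":memory:")
    new_db = sqlite3.connect(":memory:")
    for db in (old_db, new_db):
        db.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
    old_db.executemany("INSERT INTO posts VALUES (?, ?)", [(1, "first"), (2, "second")])
    old_db.commit()
    print(sync_new_rows(old_db, new_db, "posts"))  # 2 (initial copy)
    old_db.execute("INSERT INTO posts VALUES (3, 'third')")
    old_db.commit()
    print(sync_new_rows(old_db, new_db, "posts"))  # 1 (only the new row)
```

Running this on a short timer keeps the new database within one interval of the old one; a final run just before the cutover closes the remaining gap.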

Beyond this, we need proper replication running in the new datacenter. In case the database server goes away unexpectedly, a replica of it allows for faster recovery and some peace of mind. Logical backups can be made from the replica and stored in Linode’s object storage if something really disastrous happens (like tables getting deleted by some intruder or a bad data migration).

I wanted better monitoring, too. We’ve been using Linode’s Longview service, and that’s okay and free, but it doesn’t act on anything that might be going wrong. I decided to license M/Monit for this. M/Monit is lightweight and nice, and it works alongside Monit, which runs on each server to keep track of each service needed to operate things. Monit can be given instructions on how to self-heal certain things, but it also provides alerts if something needs manual attention.
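Monit expresses this behavior with rules in its own configuration language. To show the idea rather than Monit’s syntax, here is a toy Python model of one common pattern: restart a failing service automatically, but escalate to an alert once it has failed too often within a window of check cycles. The thresholds (3 failures within 5 cycles) are assumptions chosen for the example, not MLTSHP’s settings.

```python
from collections import deque


class SelfHealPolicy:
    """Toy model of a Monit-style rule: restart a failing service, but alert a
    human once it has failed `max_failures` times within the last `window`
    check cycles. Illustrative only; Monit itself is configured, not coded."""

    def __init__(self, max_failures: int = 3, window: int = 5) -> None:
        self.max_failures = max_failures
        # One entry per check cycle: True if the health check failed that cycle.
        self.failures = deque(maxlen=window)

    def on_check(self, healthy: bool) -> str:
        failed = not healthy
        self.failures.append(failed)
        if not failed:
            return "ok"
        if sum(self.failures) >= self.max_failures:
            return "alert"  # self-healing isn't sticking; needs manual attention
        return "restart"  # try to self-heal first


if __name__ == "__main__":
    policy = SelfHealPolicy()
    print([policy.on_check(h) for h in [True, False, False, False, True]])
    # ['ok', 'restart', 'restart', 'alert', 'ok']
```

The sliding window matters: an occasional crash is handled silently, while a service that keeps falling over gets a human’s attention instead of an endless restart loop.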

And finally, Linode’s Chicago region supports a proper VPC setup, which allows for all the connectivity between our servers to be totally private to their own subnet. It also means that I was able to set up an additional small Linode instance to serve as a bastion host - a server that can be used for a secure connection to reach the other servers on the private subnet. This is a lot more secure than before… we’ve never had a breach (at least, not to my knowledge), and this makes that even less likely going forward. Remote access via SSH is now unavailable without using the bastion server, so we don’t have to expose our servers to potential future SSH vulnerabilities.

So, to summarize: the MLTSHP Python 3 upgrade grew from a code release to a full stack upgrade, involving touching just about every layer of the backend of MLTSHP.

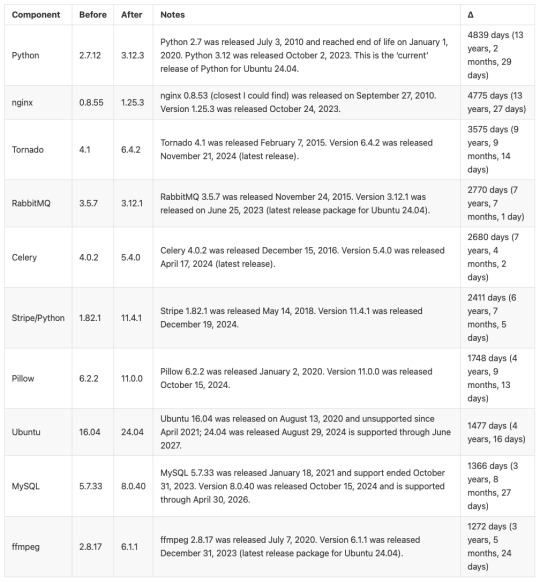

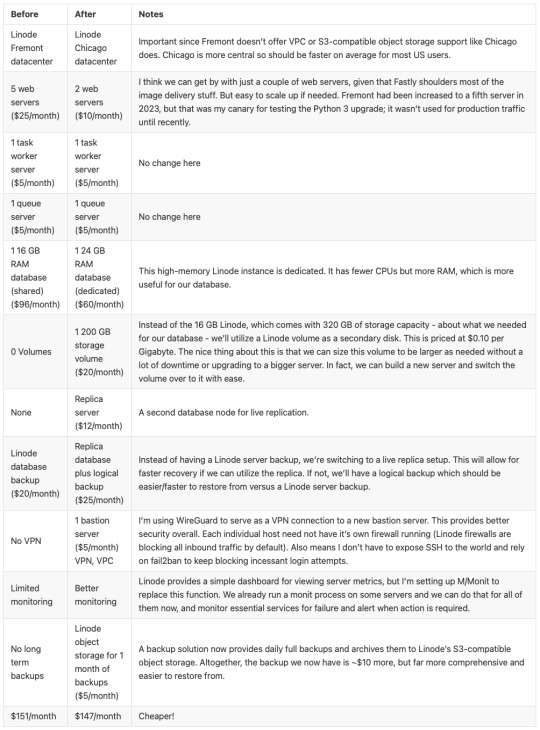

Here’s a before / after picture of some of the bigger software updates applied (apologies for using images for these tables, but Tumblr doesn’t do tables):

And a summary of infrastructure updates:

I’m pretty happy with how this has turned out. And I learned a lot. I’m a full-stack developer, so I’m familiar with a lot of devops concepts, but actually doing that role is newish to me. I got to learn how to set up a proper secure subnet for our set of hosts, making them more secure than before. I learned more about Fastly configuration, about WireGuard, about MySQL replication, and about deploying a large update to a live site with little to no downtime. A lot of that is due to meticulous release planning and careful execution. The secret for that is to think through each and every step - no matter how small. Document it, and consider the side effects of each. And with each step that could affect the public service, consider the rollback process, just in case it’s needed.

At this time, the server migration is complete and things are running smoothly. Hopefully we won’t need to do everything at once again, but we have a recipe if it comes to that.

June 2025

I promote myself to Senior Developer

I maintain a website. As far as I know, I’m part of the third generation of maintainers. And at least between the first group and the second, there was practically no handover. In other words: the website’s code is a big mess.

Now I was tasked with implementing a larger new feature that is supposed to reuse almost all of the website’s more complex systems. Just the thought of it was no fun. The reality turned out to be even worse.

At the start, when I was implementing the feature, I did a large part of the changes and the explanatory work with Gemini 2.5 Flash. I copied files or sections of the code directly into the LLM and then asked questions about them, or tried to understand how all the components fit together. That only worked moderately well.

Earlier this year (February 2025), I heard about a trend called vibe coding and the development environment that goes with it, Cursor. The idea was that you no longer touch a single line of code and simply tell the AI what to do. Out of low motivation and sheer spite, I then had the idea to just try it out on the website too. And good thing I did.

Cursor is a development environment that lets a large language model make changes to a codebase locally on your machine. Then, in its Agent Mode, where the AI is allowed to carry out several actions in a row, I implemented one feature after another.

The feature that I had previously implemented painstakingly by hand in about 9 hours of work, it was able to replicate in about 10 minutes without major assistance. Although “without assistance” is a bit of a lie, because by that point I already knew which files you have to touch to implement the feature. That was very impressive. What topped it, though, was the ability to give the LLM access to the console.

The website has a build script that you have to run to build the Docker container that then runs the website. I explained to it how to use the script and then gave it permission to run things on the command line without asking. As a result, the LLM runs the build script on its own whenever it believes it has implemented everything.

The workflow then looked like this: I assigned a task, and the LLM tried to implement the feature, trigger the build, notice that what it had written threw errors, fix the errors, and trigger the build again, until either the soft limit of 25 consecutive actions was reached or the build succeeded. I then only looked at the result in the browser, described the next change, and kicked the whole thing off again.
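Cursor’s agent internals aren’t described here, so the following is only a minimal Python model of the workflow above. `apply_change` and `run_build` are hypothetical stand-ins for the LLM’s edit step and the website’s build script, and counting every edit and every build as one action against the 25-action soft limit is an assumption.

```python
from typing import Callable, Optional, Tuple

# (build succeeded?, error output) as reported by the build step.
BuildResult = Tuple[bool, str]


def build_fix_loop(
    apply_change: Callable[[Optional[str]], None],
    run_build: Callable[[], BuildResult],
    max_actions: int = 25,
) -> Tuple[bool, int]:
    """Edit, build, read the errors, fix, rebuild, until the build passes or
    the action budget runs out. Each edit and each build counts as one action.

    Returns (build_succeeded, actions_used).
    """
    actions = 0
    errors: Optional[str] = None  # None on the first pass: implement the task
    while actions + 2 <= max_actions:  # need room for one edit plus one build
        apply_change(errors)
        ok, output = run_build()
        actions += 2
        if ok:
            return True, actions
        errors = output  # feed the build errors into the next edit
    return False, actions


if __name__ == "__main__":
    outcomes = iter([(False, "TypeError in page.ts"), (True, "")])
    print(build_fix_loop(lambda errs: None, lambda: next(outcomes)))  # (True, 4)
```

The human stays outside the loop: once it returns, you check the result in the browser and start a new loop with the next change.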

What I found most interesting about all this, though, is that I suddenly no longer had the role of a junior developer, but rather the one that falls to senior developers: namely, reading code, understanding it, and then critiquing it.

(Konstantin Passig)

if my goal with this project was just "make a website" I would just slap together some html, css, and maybe a little bit of javascript for flair and call it a day. I'd probably be done in 2-3 days tops. but instead I have to practice and make myself "employable" and that means smashing together as many languages and frameworks and technologies as possible to show employers that I'm capable of everything they want and more. so I'm developing apis in java that fetch data from a postgres database using spring boot with authentication from spring security, while coding the front end in typescript via an angular project served by nginx with https support and cloudflare protection, with all of these microservices running in their own docker containers.

basically what that means is I get to spend very little time actually programming and a whole lot of time figuring out how the hell to make all these things play nice together - and let me tell you, they do NOT fucking want to.

but on the bright side, I do actually feel like I'm learning a lot by doing this, and hopefully by the time I'm done, I'll have something really cool that I can show off

Are you struggling with deployment issues for your Node.js applications? 😫 Tired of hearing "but it works on my machine"? 🤯 Docker is the game-changer you need!

With Docker, you can containerize your Node.js app, ensuring a smooth, consistent, and scalable deployment across all environments. 🌍

🔥 Why Use Docker for Node.js Deployment?

✅ Eliminates Environment Issues – Package dependencies, runtime, and configurations into a single container for a "works everywhere" experience!
✅ Faster & Seamless Deployment – Reduce deployment time with pre-configured images and lightweight containers!
✅ Improved Scalability – Easily scale your app using Docker Swarm or Kubernetes!
✅ CI/CD Integration – Automate and streamline your deployment pipeline with Docker + Jenkins/GitHub Actions!
✅ Better Resource Utilization – Containers typically use less memory and start faster than traditional virtual machines!

💡 Whether you're a DevOps engineer, developer, or tech enthusiast, understanding Docker for Node.js deployment is a must!

📌 Want to master seamless deployment? Read the full article now!

Windows Server 2016: Revolutionizing Enterprise Computing

In the ever-evolving landscape of enterprise computing, Windows Server 2016 emerges as a beacon of innovation and efficiency, heralding a new era of productivity and scalability for businesses worldwide. Launched by Microsoft at its Ignite conference in September 2016 and made generally available in October 2016, Windows Server 2016 represents a significant leap forward in terms of security, performance, and versatility, empowering organizations to embrace the challenges of the digital age with confidence. In this in-depth exploration, we delve into the transformative capabilities of Windows Server 2016 and its profound impact on the fabric of enterprise IT.

Introduction to Windows Server 2016

Windows Server 2016 stands as the cornerstone of Microsoft's server operating systems, offering a comprehensive suite of features and functionalities tailored to meet the diverse needs of modern businesses. From enhanced security measures to advanced virtualization capabilities, Windows Server 2016 is designed to provide organizations with the tools they need to thrive in today's dynamic business environment.

Key Features of Windows Server 2016

Enhanced Security: Security is paramount in Windows Server 2016, with features such as Credential Guard, Device Guard, and Just Enough Administration (JEA) providing robust protection against cyber threats. Shielded Virtual Machines (VMs) further bolster security by encrypting VMs to prevent unauthorized access.

Software-Defined Storage: Windows Server 2016 introduces Storage Spaces Direct, a revolutionary software-defined storage solution that enables organizations to create highly available and scalable storage pools using commodity hardware. With Storage Spaces Direct, businesses can achieve greater flexibility and efficiency in managing their storage infrastructure.

Improved Hyper-V: Hyper-V in Windows Server 2016 undergoes significant enhancements, including support for nested virtualization, Shielded VMs, and rolling upgrades. These features enable organizations to optimize resource utilization, improve scalability, and enhance security in virtualized environments.

Nano Server: Nano Server represents a lightweight and minimalistic installation option in Windows Server 2016, designed for cloud-native and containerized workloads. With reduced footprint and overhead, Nano Server enables organizations to achieve greater agility and efficiency in deploying modern applications.

Container Support: Windows Server 2016 embraces the trend of containerization with native support for Docker and Windows containers. By enabling organizations to build, deploy, and manage containerized applications seamlessly, Windows Server 2016 empowers developers to innovate faster and IT operations teams to achieve greater flexibility and scalability.

Benefits of Windows Server 2016

Windows Server 2016 offers a myriad of benefits that position it as the platform of choice for modern enterprise computing:

Enhanced Security: With advanced security features like Credential Guard and Shielded VMs, Windows Server 2016 helps organizations protect their data and infrastructure from a wide range of cyber threats, ensuring peace of mind and regulatory compliance.

Improved Performance: Windows Server 2016 delivers enhanced performance and scalability, enabling organizations to handle the demands of modern workloads with ease and efficiency.

Flexibility and Agility: With support for Nano Server and containers, Windows Server 2016 provides organizations with unparalleled flexibility and agility in deploying and managing their IT infrastructure, facilitating rapid innovation and adaptation to changing business needs.

Cost Savings: By leveraging features such as Storage Spaces Direct and Hyper-V, organizations can achieve significant cost savings through improved resource utilization, reduced hardware requirements, and streamlined management.

Future-Proofing: Windows Server 2016 is designed to support emerging technologies and trends, ensuring that organizations can stay ahead of the curve and adapt to new challenges and opportunities in the digital landscape.

Conclusion: Embracing the Future with Windows Server 2016

In conclusion, Windows Server 2016 stands as a testament to Microsoft's commitment to innovation and excellence in enterprise computing. With its advanced security, enhanced performance, and unparalleled flexibility, Windows Server 2016 empowers organizations to unlock new levels of efficiency, productivity, and resilience. Whether deployed on-premises, in the cloud, or in hybrid environments, Windows Server 2016 serves as the foundation for digital transformation, enabling organizations to embrace the future with confidence and achieve their full potential in the ever-evolving world of enterprise IT.

Website: https://microsoftlicense.com

Virtual machine vs container: Which is best for home lab?

No doubt, if you have worked with technology for any length of time, you have heard the terms “virtual machines” and “containers” more than once. Both virtual machines and containers are core technologies in today’s technology world. However, many people running home lab environments may wonder which they should run: a virtual machine or a container. This post will dive deep into virtual machines vs.…

Docker vs. Podman: Exploring Container Technologies for Modern Web Development

http://securitytc.com/TBd4Qw

Critical Vulnerability (CVE-2024-37032) in Ollama

Researchers have discovered a critical vulnerability in Ollama, a widely used open-source project for running Large Language Models (LLMs). The flaw, dubbed "Probllama" and tracked as CVE-2024-37032, could potentially lead to remote code execution, putting thousands of users at risk.

What is Ollama?

Ollama has gained popularity among AI enthusiasts and developers for its ability to perform inference with compatible neural networks, including Meta's Llama family, Microsoft's Phi clan, and models from Mistral. The software can be used via a command line or through a REST API, making it versatile for various applications. With hundreds of thousands of monthly pulls on Docker Hub, Ollama's widespread adoption underscores the potential impact of this vulnerability.

The Nature of the Vulnerability

The Wiz Research team, led by Sagi Tzadik, uncovered the flaw, which stems from insufficient validation on the server side of Ollama's REST API. An attacker could exploit this vulnerability by sending a specially crafted HTTP request to the Ollama API server. The risk is particularly high in Docker installations, where the API server is often publicly exposed.

Technical Details of the Exploit

The vulnerability specifically affects the `/api/pull` endpoint, which allows users to download models from the Ollama registry and private registries. Researchers found that when pulling a model from a private registry, it's possible to supply a malicious manifest file containing a path traversal payload in the digest field. This payload can be used to:

- Corrupt files on the system
- Achieve arbitrary file read
- Execute remote code, potentially hijacking the system

The issue is particularly severe in Docker installations, where the server runs with root privileges and listens on 0.0.0.0 by default, enabling remote exploitation. As of June 10, despite a patched version being available for over a month, more than 1,000 vulnerable Ollama server instances remained exposed to the internet.
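Ollama itself is written in Go, and its actual patch is not reproduced here. As an illustration of this class of fix only, here is a minimal Python sketch that validates a manifest digest before turning it into a file path, so a payload like `../../etc/passwd` is rejected. The `sha256:<64 lowercase hex>` format and the `blobs_dir` layout are assumptions for the example.

```python
import re
from pathlib import Path

# Accept only "sha256:" followed by exactly 64 lowercase hex characters.
DIGEST_RE = re.compile(r"sha256:[0-9a-f]{64}")


def blob_path(blobs_dir: Path, digest: str) -> Path:
    """Map a manifest digest to a file inside blobs_dir, refusing anything else."""
    # 1. Strict allow-list: no slashes or dots can survive this check.
    if not DIGEST_RE.fullmatch(digest):
        raise ValueError(f"invalid digest: {digest!r}")
    path = (blobs_dir / digest.replace(":", "-")).resolve()
    # 2. Defense in depth: the resolved path must still live under blobs_dir.
    if blobs_dir.resolve() not in path.parents:
        raise ValueError("digest escapes the blob directory")
    return path


if __name__ == "__main__":
    base = Path("/tmp/models/blobs")
    print(blob_path(base, "sha256:" + "a" * 64).name)
    try:
        blob_path(base, "../../../etc/passwd")
    except ValueError as exc:
        print("rejected:", exc)
```

Validating against an allow-list of what a digest looks like, rather than trying to strip out `..`, is the more robust choice: anything that isn’t a well-formed hash never reaches the filesystem.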

Mitigation Strategies

To protect AI applications using Ollama, users should:

- Update instances to version 0.1.34 or newer immediately
- Implement authentication measures, such as using a reverse proxy, as Ollama doesn't inherently support authentication
- Avoid exposing installations to the internet
- Place servers behind firewalls and only allow authorized internal applications and users to access them
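When auditing several instances, the "0.1.34 or newer" check is easy to get wrong with plain string comparison. Here is a minimal Python sketch that compares versions numerically; how you collect each instance’s version string is left open, and ignoring pre-release suffixes such as `-rc1` is a simplifying assumption.

```python
# Versions before 0.1.34 are affected by CVE-2024-37032 (per the article above).
PATCHED_VERSION = (0, 1, 34)


def parse_version(text: str) -> tuple:
    """Turn a string like "0.1.33" or "v0.1.34" into a tuple of ints.

    Any suffix after "-" (e.g. "-rc1") is ignored, which is a simplification.
    """
    core = text.strip().lstrip("v").split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def is_patched(version: str) -> bool:
    # Tuples compare element-wise, so (0, 1, 100) > (0, 1, 34) as intended,
    # unlike plain strings, where "0.1.100" < "0.1.34".
    return parse_version(version) >= PATCHED_VERSION


if __name__ == "__main__":
    for v in ["0.1.33", "0.1.34", "0.1.100", "0.2.0"]:
        print(v, "patched" if is_patched(v) else "VULNERABLE")
```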

Broader Implications for AI and Cybersecurity

This vulnerability highlights ongoing challenges in the rapidly evolving field of AI tools and infrastructure. Tzadik noted that the critical issue extends beyond individual vulnerabilities to the inherent lack of authentication support in many new AI tools. He referenced similar remote code execution vulnerabilities found in other LLM deployment tools like TorchServe and Ray Anyscale. Moreover, despite these tools often being written in modern, safety-first programming languages, classic vulnerabilities such as path traversal remain a persistent threat. This underscores the need for continued vigilance and robust security practices in the development and deployment of AI technologies. Read the full article

Navigating the DevOps Landscape: Opportunities and Roles

DevOps has become a game-changer in the quick-moving world of technology. This dynamic process, whose name is a combination of "Development" and "Operations," is revolutionising the way software is created, tested, and deployed. DevOps is a cultural shift that encourages cooperation, automation, and integration between development and IT operations teams, not merely a set of practices. The outcome? Greater software delivery speed, dependability, and effectiveness.

In this comprehensive guide, we'll delve into the essence of DevOps, explore the key technologies that underpin its success, and uncover the vast array of job opportunities it offers. Whether you're an aspiring IT professional looking to enter the world of DevOps or an experienced practitioner seeking to enhance your skills, this blog will serve as your roadmap to mastering DevOps. So, let's embark on this enlightening journey into the realm of DevOps.

Key Technologies for DevOps:

Version Control Systems: DevOps teams rely heavily on robust version control systems such as Git and SVN. These systems are instrumental in managing and tracking changes in code and configurations, promoting collaboration and ensuring the integrity of the software development process.

Continuous Integration/Continuous Deployment (CI/CD): The heart of DevOps, CI/CD tools like Jenkins, Travis CI, and CircleCI drive the automation of critical processes. They orchestrate the building, testing, and deployment of code changes, enabling rapid, reliable, and consistent software releases.

Configuration Management: Tools like Ansible, Puppet, and Chef are the architects of automation in the DevOps landscape. They facilitate the automated provisioning and management of infrastructure and application configurations, ensuring consistency and efficiency.

Containerization: Docker and Kubernetes, the cornerstones of containerization, are pivotal in the DevOps toolkit. They empower the creation, deployment, and management of containers that encapsulate applications and their dependencies, simplifying deployment and scaling.

Orchestration: Docker Swarm and Amazon ECS take center stage in orchestrating and managing containerized applications at scale. They provide the control and coordination required to maintain the efficiency and reliability of containerized systems.

Monitoring and Logging: The observability of applications and systems is essential in the DevOps workflow. Monitoring and logging tools like the ELK Stack (Elasticsearch, Logstash, Kibana) and Prometheus are the eyes and ears of DevOps professionals, tracking performance, identifying issues, and optimizing system behavior.

Cloud Computing Platforms: AWS, Azure, and Google Cloud are the foundational pillars of cloud infrastructure in DevOps. They offer the infrastructure and services essential for creating and scaling cloud-based applications, facilitating the agility and flexibility required in modern software development.

Scripting and Coding: Proficiency in scripting languages such as Shell, Python, and Ruby, along with general coding skills, is an invaluable asset for DevOps professionals. It enables the creation of automation scripts and tools, allowing customization and extensibility in the DevOps pipeline.

Collaboration and Communication Tools: Collaboration tools like Slack and Microsoft Teams enhance the communication and coordination among DevOps team members. They foster efficient collaboration and facilitate the exchange of ideas and information.

Infrastructure as Code (IaC): The concept of Infrastructure as Code, represented by tools like Terraform and AWS CloudFormation, is a pivotal practice in DevOps. It allows the definition and management of infrastructure using code, ensuring consistency and reproducibility, and enabling the rapid provisioning of resources.

Job Opportunities in DevOps:

DevOps Engineer: DevOps engineers are the architects of continuous integration and continuous deployment (CI/CD) pipelines. They meticulously design and maintain these pipelines to automate the deployment process, ensuring the rapid, reliable, and consistent release of software. Their responsibilities extend to optimizing the system's reliability, making them the backbone of seamless software delivery.

Release Manager: Release managers play a pivotal role in orchestrating the software release process. They carefully plan and schedule software releases, coordinating activities between development and IT teams. Their keen oversight ensures the smooth transition of software from development to production, enabling timely and successful releases.

Automation Architect: Automation architects are the visionaries behind the design and development of automation frameworks. These frameworks streamline deployment and monitoring processes, leveraging automation to enhance efficiency and reliability. They are the engineers of innovation, transforming manual tasks into automated wonders.

Cloud Engineer: Cloud engineers are the custodians of cloud infrastructure. They adeptly manage cloud resources, optimizing their performance and ensuring scalability. Their expertise lies in harnessing the power of cloud platforms like AWS, Azure, or Google Cloud to provide robust, flexible, and cost-effective solutions.

Site Reliability Engineer (SRE): SREs are the sentinels of system reliability. They focus on maintaining the system's resilience through efficient practices, continuous monitoring, and rapid incident response. Their vigilance ensures that applications and systems remain stable and performant, even in the face of challenges.

Security Engineer: Security engineers are the guardians of the DevOps pipeline. They integrate security measures seamlessly into the software development process, safeguarding it from potential threats and vulnerabilities. Their role is crucial in an era where security is paramount, ensuring that DevOps practices are fortified against breaches.

As DevOps continues to redefine the landscape of software development and deployment, gaining expertise in its core principles and technologies is a strategic career move. ACTE Technologies offers comprehensive DevOps training programs, led by industry experts who provide invaluable insights, real-world examples, and hands-on guidance. ACTE Technologies' DevOps training covers a wide range of essential concepts, practical exercises, and real-world applications. With a strong focus on certification preparation, ACTE Technologies ensures that you're well-prepared to excel in the world of DevOps. With their guidance, you can gain mastery over DevOps practices, enhance your skill set, and propel your career to new heights.
