#Efficient Software Development with Docker

Best Docker Containers Commands You Need to Know

There is arguably no more familiar name in container technology than Docker. With its ability to streamline operations and optimize resources, Docker has shifted the paradigm from traditional virtual machines to containers. It has continued to evolve, adding user-friendly features and functionality, making it an ideal platform for managing Continuous Integration and Continuous…

Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

Cloud computing engineers create and manage cloud systems that let businesses store data and run apps without maintaining their own physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and tamper-resistant systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotic process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

The Roadmap to Full Stack Developer Proficiency: A Comprehensive Guide

Embarking on the journey to becoming a full stack developer is an exhilarating endeavor filled with growth and challenges. Whether you're taking your first steps or seeking to elevate your skills, understanding the path ahead is crucial. In this detailed roadmap, we'll outline the stages of mastering full stack development, exploring essential milestones, competencies, and strategies to guide you through this enriching career journey.

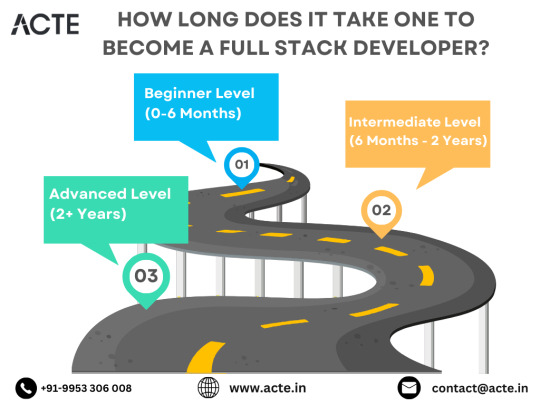

Beginning the Journey: Novice Phase (0-6 Months)

As a novice, you're entering the realm of programming with a fresh perspective and eagerness to learn. This initial phase sets the groundwork for your progression as a full stack developer.

Grasping Programming Fundamentals:

Your journey commences with grasping the foundational web languages: HTML for structure, CSS for styling, and JavaScript for behavior. These are the cornerstone of web development and are essential for crafting dynamic and interactive web applications.

Familiarizing with Basic Data Structures and Algorithms:

To develop proficiency in programming, understanding fundamental data structures such as arrays, objects, and linked lists, along with algorithms like sorting and searching, is imperative. These concepts form the backbone of problem-solving in software development.
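
To make these ideas concrete, here is a minimal Python sketch of one data structure and one algorithm from the list above: a singly linked list and a binary search. The names `Node`, `linked_list_to_list`, and `binary_search` are made up for illustration and do not come from any particular library.

```python
from typing import List, Optional, Sequence


class Node:
    """One element of a singly linked list."""

    def __init__(self, value: int, next_node: "Optional[Node]" = None) -> None:
        self.value = value
        self.next = next_node


def linked_list_to_list(head: Optional[Node]) -> List[int]:
    """Walk the list from head to tail and collect the values."""
    values = []
    current = head
    while current is not None:
        values.append(current.value)
        current = current.next
    return values


def binary_search(items: Sequence[int], target: int) -> int:
    """Return the index of target in sorted items, or -1 if it is absent."""
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return -1


# Build 7 -> 3 -> 9, copy it into a Python list, sort it, then search it.
head = Node(7, Node(3, Node(9)))
numbers = sorted(linked_list_to_list(head))
print(numbers)                    # [3, 7, 9]
print(binary_search(numbers, 9))  # 2
print(binary_search(numbers, 4))  # -1
```

Binary search only works on sorted input, which is why the sketch sorts the values before searching.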

Exploring Essential Web Development Concepts:

During this phase, you'll delve into crucial web development concepts like client-server architecture, HTTP protocol, and the Document Object Model (DOM). Acquiring insights into the underlying mechanisms of web applications lays a strong foundation for tackling more intricate projects.
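
The client-server exchange described above can be tried out with nothing but Python's standard library. The sketch below is only an illustration: `HelloHandler` and `fetch_once` are hypothetical names, and the server is a throwaway one bound to the local machine, not something to deploy.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


class HelloHandler(BaseHTTPRequestHandler):
    """Server side: answer every GET request with a small HTML page."""

    def do_GET(self) -> None:
        body = f"<h1>You asked for {self.path}</h1>".encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        """Silence the default per-request logging."""


def fetch_once(path: str) -> Tuple[int, str]:
    """Start a throwaway server, send one GET request, return (status, body)."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0: the OS picks a free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        # Client side: the same kind of request a browser would send.
        conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
        conn.request("GET", path)
        response = conn.getresponse()
        result = (response.status, response.read().decode("utf-8"))
        conn.close()
        return result
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(fetch_once("/hello"))
```

The client sends a request line and headers, the server replies with a status code, headers, and a body, and a browser would then turn that HTML body into a DOM tree.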

Advancing Forward: Intermediate Stage (6 Months - 2 Years)

As you progress beyond the basics, you'll transition into the intermediate stage, where you'll deepen your understanding and skills across various facets of full stack development.

Venturing into Backend Development:

In the intermediate stage, you'll venture into backend development, honing your proficiency in server-side languages like Node.js, Python, or Java. Here, you'll learn to construct robust server-side applications, manage data storage and retrieval, and implement authentication and authorization mechanisms.
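
As a rough illustration of the difference between authentication (who are you?) and authorization (what may you do?), here is a small standard-library sketch. The function names, the iteration count, and the role table are assumptions chosen for the example; a real project would normally rely on a maintained framework or library for this.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 200_000  # assumed work factor; choose a value suited to your hardware


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest); a fresh random salt is generated when none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Authentication: recompute the digest and compare it in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)


def is_authorized(role: str, action: str) -> bool:
    """Authorization: look the action up in a hypothetical role table."""
    permissions = {"admin": {"read", "write"}, "viewer": {"read"}}
    return action in permissions.get(role, set())


salt, stored = hash_password("s3cret")
print(verify_password("s3cret", salt, stored))  # True
print(verify_password("guess", salt, stored))   # False
print(is_authorized("viewer", "write"))         # False
```

Only the salt and the digest are stored, never the password itself, so a leaked database does not directly reveal user passwords.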

Mastering Database Management:

A pivotal aspect of backend development is comprehending databases. You'll delve into relational databases like MySQL and PostgreSQL, as well as NoSQL databases like MongoDB. Proficiency in database management systems and design principles enables the creation of scalable and efficient applications.
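
To get a feel for relational design without installing a database server, the sketch below uses SQLite through Python's built-in `sqlite3` module as a stand-in for MySQL or PostgreSQL. The `users` and `posts` tables and the helper names are invented for the example.

```python
import sqlite3
from typing import List, Tuple


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with two related tables and sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when asked
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE posts ("
        "id INTEGER PRIMARY KEY, "
        "user_id INTEGER NOT NULL REFERENCES users(id), "
        "title TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO users (id, name) VALUES (?, ?)", [(1, "ada"), (2, "linus")]
    )
    conn.executemany(
        "INSERT INTO posts (user_id, title) VALUES (?, ?)",
        [(1, "Hello"), (1, "Second post"), (2, "Kernel notes")],
    )
    conn.commit()
    return conn


def post_counts(conn: sqlite3.Connection) -> List[Tuple[str, int]]:
    """Join the two tables and count how many posts each user has written."""
    rows = conn.execute(
        "SELECT users.name, COUNT(posts.id) FROM users "
        "LEFT JOIN posts ON posts.user_id = users.id "
        "GROUP BY users.id, users.name ORDER BY users.name"
    )
    return rows.fetchall()


conn = build_demo_db()
print(post_counts(conn))  # [('ada', 2), ('linus', 1)]
```

The `?` placeholders keep user-supplied values out of the SQL text, which is the usual defense against SQL injection.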

Exploring Frontend Frameworks and Libraries:

In addition to backend development, you'll deepen your expertise in frontend technologies. You'll explore prominent frameworks and libraries such as React, Angular, or Vue.js, streamlining the creation of interactive and responsive user interfaces.

Learning Version Control with Git:

Version control is indispensable for collaborative software development. During this phase, you'll familiarize yourself with Git, a distributed version control system, to manage your codebase, track changes, and collaborate effectively with fellow developers.

Achieving Mastery: Advanced Phase (2+ Years)

As you ascend in your journey, you'll enter the advanced phase of full stack development, where you'll refine your skills, tackle intricate challenges, and delve into specialized domains of interest.

Designing Scalable Systems:

In the advanced stage, focus shifts to designing scalable systems capable of managing substantial volumes of traffic and data. You'll explore design patterns, scalability methodologies, and cloud computing platforms like AWS, Azure, or Google Cloud.

Embracing DevOps Practices:

DevOps practices play a pivotal role in contemporary software development. You'll delve into continuous integration and continuous deployment (CI/CD) pipelines, infrastructure as code (IaC), and containerization technologies such as Docker and Kubernetes.

Specializing in Niche Areas:

With experience, you may opt to specialize in specific domains of full stack development, whether it's frontend or backend development, mobile app development, or DevOps. Specialization enables you to deepen your expertise and pursue career avenues aligned with your passions and strengths.

Conclusion:

Becoming a proficient full stack developer is a transformative journey that demands dedication, resilience, and perpetual learning. By following the roadmap outlined in this guide and maintaining a curious and adaptable mindset, you'll navigate the complexities and opportunities inherent in the realm of full stack development. Remember, mastery isn't merely about acquiring technical skills but also about fostering collaboration, embracing innovation, and contributing meaningfully to the ever-evolving landscape of technology.

Windows Server 2016: Revolutionizing Enterprise Computing

In the ever-evolving landscape of enterprise computing, Windows Server 2016 emerges as a beacon of innovation and efficiency, heralding a new era of productivity and scalability for businesses worldwide. Released by Microsoft in September 2016, Windows Server 2016 represents a significant leap forward in terms of security, performance, and versatility, empowering organizations to embrace the challenges of the digital age with confidence. In this in-depth exploration, we delve into the transformative capabilities of Windows Server 2016 and its profound impact on the fabric of enterprise IT.

Introduction to Windows Server 2016

Windows Server 2016 stands as the cornerstone of Microsoft's server operating systems, offering a comprehensive suite of features and functionalities tailored to meet the diverse needs of modern businesses. From enhanced security measures to advanced virtualization capabilities, Windows Server 2016 is designed to provide organizations with the tools they need to thrive in today's dynamic business environment.

Key Features of Windows Server 2016

Enhanced Security: Security is paramount in Windows Server 2016, with features such as Credential Guard, Device Guard, and Just Enough Administration (JEA) providing robust protection against cyber threats. Shielded Virtual Machines (VMs) further bolster security by encrypting VMs to prevent unauthorized access.

Software-Defined Storage: Windows Server 2016 introduces Storage Spaces Direct, a revolutionary software-defined storage solution that enables organizations to create highly available and scalable storage pools using commodity hardware. With Storage Spaces Direct, businesses can achieve greater flexibility and efficiency in managing their storage infrastructure.

Improved Hyper-V: Hyper-V in Windows Server 2016 undergoes significant enhancements, including support for nested virtualization, Shielded VMs, and rolling upgrades. These features enable organizations to optimize resource utilization, improve scalability, and enhance security in virtualized environments.

Nano Server: Nano Server represents a lightweight and minimalistic installation option in Windows Server 2016, designed for cloud-native and containerized workloads. With reduced footprint and overhead, Nano Server enables organizations to achieve greater agility and efficiency in deploying modern applications.

Container Support: Windows Server 2016 embraces the trend of containerization with native support for Docker and Windows containers. By enabling organizations to build, deploy, and manage containerized applications seamlessly, Windows Server 2016 empowers developers to innovate faster and IT operations teams to achieve greater flexibility and scalability.

Benefits of Windows Server 2016

Windows Server 2016 offers a myriad of benefits that position it as the platform of choice for modern enterprise computing:

Enhanced Security: With advanced security features like Credential Guard and Shielded VMs, Windows Server 2016 helps organizations protect their data and infrastructure from a wide range of cyber threats, ensuring peace of mind and regulatory compliance.

Improved Performance: Windows Server 2016 delivers enhanced performance and scalability, enabling organizations to handle the demands of modern workloads with ease and efficiency.

Flexibility and Agility: With support for Nano Server and containers, Windows Server 2016 provides organizations with unparalleled flexibility and agility in deploying and managing their IT infrastructure, facilitating rapid innovation and adaptation to changing business needs.

Cost Savings: By leveraging features such as Storage Spaces Direct and Hyper-V, organizations can achieve significant cost savings through improved resource utilization, reduced hardware requirements, and streamlined management.

Future-Proofing: Windows Server 2016 is designed to support emerging technologies and trends, ensuring that organizations can stay ahead of the curve and adapt to new challenges and opportunities in the digital landscape.

Conclusion: Embracing the Future with Windows Server 2016

In conclusion, Windows Server 2016 stands as a testament to Microsoft's commitment to innovation and excellence in enterprise computing. With its advanced security, enhanced performance, and unparalleled flexibility, Windows Server 2016 empowers organizations to unlock new levels of efficiency, productivity, and resilience. Whether deployed on-premises, in the cloud, or in hybrid environments, Windows Server 2016 serves as the foundation for digital transformation, enabling organizations to embrace the future with confidence and achieve their full potential in the ever-evolving world of enterprise IT.

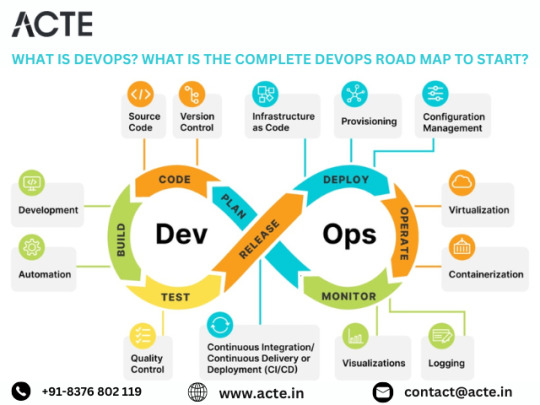

Navigating the DevOps Landscape: Opportunities and Roles

DevOps has become a game-changer in the fast-moving world of technology. This dynamic approach, whose name combines "Development" and "Operations," is revolutionising the way software is created, tested, and deployed. DevOps is not merely a set of practices but a cultural shift that encourages cooperation, automation, and integration between development and IT operations teams. The outcome? Greater software delivery speed, dependability, and effectiveness.

In this comprehensive guide, we'll delve into the essence of DevOps, explore the key technologies that underpin its success, and uncover the vast array of job opportunities it offers. Whether you're an aspiring IT professional looking to enter the world of DevOps or an experienced practitioner seeking to enhance your skills, this blog will serve as your roadmap to mastering DevOps. So, let's embark on this enlightening journey into the realm of DevOps.

Key Technologies for DevOps:

Version Control Systems: DevOps teams rely heavily on robust version control systems such as Git and SVN. These systems are instrumental in managing and tracking changes in code and configurations, promoting collaboration and ensuring the integrity of the software development process.

Continuous Integration/Continuous Deployment (CI/CD): The heart of DevOps, CI/CD tools like Jenkins, Travis CI, and CircleCI drive the automation of critical processes. They orchestrate the building, testing, and deployment of code changes, enabling rapid, reliable, and consistent software releases.

Configuration Management: Tools like Ansible, Puppet, and Chef are the architects of automation in the DevOps landscape. They facilitate the automated provisioning and management of infrastructure and application configurations, ensuring consistency and efficiency.

Containerization: Docker and Kubernetes, the cornerstones of containerization, are pivotal in the DevOps toolkit. They empower the creation, deployment, and management of containers that encapsulate applications and their dependencies, simplifying deployment and scaling.

Orchestration: Docker Swarm and Amazon ECS take center stage in orchestrating and managing containerized applications at scale. They provide the control and coordination required to maintain the efficiency and reliability of containerized systems.

Monitoring and Logging: The observability of applications and systems is essential in the DevOps workflow. Monitoring and logging tools like the ELK Stack (Elasticsearch, Logstash, Kibana) and Prometheus are the eyes and ears of DevOps professionals, tracking performance, identifying issues, and optimizing system behavior.

Cloud Computing Platforms: AWS, Azure, and Google Cloud are the foundational pillars of cloud infrastructure in DevOps. They offer the infrastructure and services essential for creating and scaling cloud-based applications, facilitating the agility and flexibility required in modern software development.

Scripting and Coding: Proficiency in scripting languages such as Shell, Python, and Ruby, along with general coding skills, is an invaluable asset for DevOps professionals. These skills empower the creation of automation scripts and tools, enabling customization and extensibility in the DevOps pipeline.

Collaboration and Communication Tools: Collaboration tools like Slack and Microsoft Teams enhance the communication and coordination among DevOps team members. They foster efficient collaboration and facilitate the exchange of ideas and information.

Infrastructure as Code (IaC): The concept of Infrastructure as Code, represented by tools like Terraform and AWS CloudFormation, is a pivotal practice in DevOps. It allows the definition and management of infrastructure using code, ensuring consistency and reproducibility, and enabling the rapid provisioning of resources.
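
The core idea behind Infrastructure as Code is that you declare the state you want and let a tool work out the changes. The sketch below is a toy illustration of that idea only; it is not how Terraform or CloudFormation are implemented, and the `plan` function and resource names are hypothetical.

```python
from typing import Dict, List

Resources = Dict[str, Dict[str, str]]


def plan(desired: Resources, actual: Resources) -> List[str]:
    """Compare declared resources with what exists and list the needed actions."""
    actions = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(f"create {name}")
        elif desired[name] != actual[name]:
            actions.append(f"update {name}")
    for name in sorted(actual):
        if name not in desired:
            actions.append(f"delete {name}")
    return actions


# What the code declares versus what is (hypothetically) running today.
desired = {"web": {"size": "small"}, "db": {"size": "large"}}
actual = {"web": {"size": "medium"}, "cache": {"size": "small"}}
print(plan(desired, actual))  # ['create db', 'update web', 'delete cache']
```

Because the desired state lives in code, running the same plan twice against an up-to-date environment produces no actions, which is what makes provisioning reproducible.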

Job Opportunities in DevOps:

DevOps Engineer: DevOps engineers are the architects of continuous integration and continuous deployment (CI/CD) pipelines. They meticulously design and maintain these pipelines to automate the deployment process, ensuring the rapid, reliable, and consistent release of software. Their responsibilities extend to optimizing the system's reliability, making them the backbone of seamless software delivery.

Release Manager: Release managers play a pivotal role in orchestrating the software release process. They carefully plan and schedule software releases, coordinating activities between development and IT teams. Their keen oversight ensures the smooth transition of software from development to production, enabling timely and successful releases.

Automation Architect: Automation architects are the visionaries behind the design and development of automation frameworks. These frameworks streamline deployment and monitoring processes, leveraging automation to enhance efficiency and reliability. They are the engineers of innovation, transforming manual tasks into automated wonders.

Cloud Engineer: Cloud engineers are the custodians of cloud infrastructure. They adeptly manage cloud resources, optimizing their performance and ensuring scalability. Their expertise lies in harnessing the power of cloud platforms like AWS, Azure, or Google Cloud to provide robust, flexible, and cost-effective solutions.

Site Reliability Engineer (SRE): SREs are the sentinels of system reliability. They focus on maintaining the system's resilience through efficient practices, continuous monitoring, and rapid incident response. Their vigilance ensures that applications and systems remain stable and performant, even in the face of challenges.

Security Engineer: Security engineers are the guardians of the DevOps pipeline. They integrate security measures seamlessly into the software development process, safeguarding it from potential threats and vulnerabilities. Their role is crucial in an era where security is paramount, ensuring that DevOps practices are fortified against breaches.

As DevOps continues to redefine the landscape of software development and deployment, gaining expertise in its core principles and technologies is a strategic career move. ACTE Technologies offers comprehensive DevOps training programs, led by industry experts who provide invaluable insights, real-world examples, and hands-on guidance. ACTE Technologies' DevOps training covers a wide range of essential concepts, practical exercises, and real-world applications. With a strong focus on certification preparation, ACTE Technologies ensures that you're well-prepared to excel in the world of DevOps. With their guidance, you can gain mastery over DevOps practices, enhance your skill set, and propel your career to new heights.

Unleashing Efficiency: Containerization with Docker

Introduction: In the fast-paced world of modern IT, agility and efficiency reign supreme. Enter Docker - a revolutionary tool that has transformed the way applications are developed, deployed, and managed. Containerization with Docker has become a cornerstone of contemporary software development, offering unparalleled flexibility, scalability, and portability. In this blog, we'll explore the fundamentals of Docker containerization, its benefits, and practical insights into leveraging Docker for streamlining your development workflow.

Understanding Docker Containerization: At its core, Docker is an open-source platform that enables developers to package applications and their dependencies into lightweight, self-contained units known as containers. Unlike traditional virtualization, where each application runs on its own guest operating system, Docker containers share the host operating system's kernel, resulting in significant resource savings and improved performance.

Key Benefits of Docker Containerization:

Portability: Docker containers encapsulate the application code, runtime, libraries, and dependencies, making them portable across different environments, from development to production.

Isolation: Containers provide a high degree of isolation, ensuring that applications run independently of each other without interference, thus enhancing security and stability.

Scalability: Docker's architecture facilitates effortless scaling by allowing applications to be deployed and replicated across multiple containers, enabling seamless horizontal scaling as demand fluctuates.

Consistency: With Docker, developers can create standardized environments using Dockerfiles and Docker Compose, ensuring consistency between development, testing, and production environments.

Speed: Docker accelerates the development lifecycle by reducing the time spent on setting up development environments, debugging compatibility issues, and deploying applications.

Getting Started with Docker: To embark on your Docker journey, begin by installing Docker Desktop or Docker Engine on your development machine. Docker Desktop bundles Docker Engine with a graphical interface for managing containers, while a standalone Docker Engine installation is driven from the command line.

Once Docker is installed, you can start building and running containers using Docker's command-line interface (CLI). The basic workflow involves:

Writing a Dockerfile: A text file that contains instructions for building a Docker image, specifying the base image, dependencies, environment variables, and commands to run.

Building Docker Images: Use the docker build command to build a Docker image from the Dockerfile.

Running Containers: Utilize the docker run command to create and run containers based on the Docker images.

Managing Containers: Docker provides a range of commands for managing containers, including starting, stopping, restarting, and removing containers.
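
The workflow above can be sketched as a small Python helper that assembles the corresponding `docker` commands. This is an illustration under assumptions: the Dockerfile contents, the image tag `demo:1.0`, the container name `demo-app`, and the helper functions are all made up, and the script only prints the commands instead of running them.

```python
import shlex
from typing import List

# An illustrative Dockerfile for a one-file Python app (assumed, not from the post).
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
"""


def build_command(tag: str, context: str = ".") -> List[str]:
    """docker build: turn the Dockerfile in `context` into an image named `tag`."""
    return ["docker", "build", "-t", tag, context]


def run_command(tag: str, name: str, host_port: int, container_port: int) -> List[str]:
    """docker run: start a detached container and publish one port."""
    return ["docker", "run", "-d", "--name", name,
            "-p", f"{host_port}:{container_port}", tag]


def manage_command(action: str, name: str) -> List[str]:
    """docker start/stop/restart/rm for an existing container."""
    if action not in {"start", "stop", "restart", "rm"}:
        raise ValueError(f"unsupported action: {action}")
    return ["docker", action, name]


for command in (
    build_command("demo:1.0"),
    run_command("demo:1.0", "demo-app", 8080, 80),
    manage_command("stop", "demo-app"),
    manage_command("rm", "demo-app"),
):
    print(shlex.join(command))
```

To execute a command for real, each list could be passed to `subprocess.run(command, check=True)` on a machine where Docker is installed.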

Best Practices for Docker Containerization: To maximize the benefits of Docker containerization, consider the following best practices:

Keep Containers Lightweight: Minimize the size of Docker images by removing unnecessary dependencies and optimizing Dockerfiles.

Use Multi-Stage Builds: Employ multi-stage builds to reduce the size of Docker images and improve build times.

Utilize Docker Compose: Docker Compose simplifies the management of multi-container applications by defining them in a single YAML file.

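Implement Health Checks: Define health checks in Dockerfiles with the HEALTHCHECK instruction so that Docker can report whether a container is functioning correctly. Docker Engine on its own only marks a failing container as unhealthy; replacing or restarting it is handled by an orchestrator such as Docker Swarm or by external tooling.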

Secure Containers: Follow security best practices, such as running containers with non-root users, limiting container privileges, and regularly updating base images to patch vulnerabilities.
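
To show the logic behind the health-check practice listed above, here is a minimal Python sketch of a probe that tolerates a few failed attempts before reporting a problem, loosely mirroring the retry idea of a Dockerfile `HEALTHCHECK`. The `probe` function and its parameters are hypothetical; the sketch only reports a status and leaves any restart to whatever supervises the container.

```python
import time
from typing import Callable


def probe(check: Callable[[], bool], retries: int = 3, interval: float = 0.0) -> str:
    """Run `check` up to `retries` times; report unhealthy only if every attempt fails."""
    for attempt in range(retries):
        try:
            if check():
                return "healthy"
        except Exception:
            pass  # a check that crashes counts as a failed attempt
        if attempt < retries - 1:
            time.sleep(interval)  # wait before the next attempt
    return "unhealthy"


# A check that fails twice and then succeeds, and one that never succeeds.
flaky_results = iter([False, False, True])
print(probe(lambda: next(flaky_results)))  # healthy
print(probe(lambda: False))                # unhealthy
```

In a real container the `check` callable would typically request a status endpoint or run a short command, and the interval would be seconds rather than zero.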

Conclusion: Docker containerization has revolutionized the way applications are developed, deployed, and managed, offering unparalleled agility, efficiency, and scalability. By embracing Docker, developers can streamline their development workflow, accelerate the deployment process, and improve the consistency and reliability of their applications. Whether you're a seasoned developer or just getting started, Docker opens up a world of possibilities, empowering you to build and deploy applications with ease in today's fast-paced digital landscape.

Journey to DevOps

The concept of “DevOps” has been gaining traction in the IT sector for several years. It involves promoting teamwork and interaction between software developers and IT operations groups to enhance the speed and reliability of software delivery. This strategy has become widely accepted as companies strive to deliver software that meets customer needs and to maintain an edge in the industry. In this article, we will explore the steps to becoming a DevOps Engineer.

Step 1: Get familiar with the basics of Software Development and IT Operations:

In order to pursue a career as a DevOps Engineer, it is crucial to have a solid grasp of software development and IT operations. Familiarity with programming languages like Python, Java, Ruby, or PHP is essential. Additionally, knowledge of operating systems, databases, and networking is vital.

Step 2: Learn the principles of DevOps:

It is crucial to comprehend and apply the principles of DevOps. Automation, continuous integration, continuous deployment and continuous monitoring are aspects that need to be understood and implemented. It is vital to learn how these principles function and how to carry them out efficiently.
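
One way to see how these principles fit together is a toy pipeline runner: stages run in order, and a failure stops everything after it so that broken code never reaches deployment. The sketch below is a simplification with made-up names (`run_pipeline`, `Stage`); real CI/CD servers add triggers, parallelism, and artifact handling on top of this idea.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure; return a log of what happened."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            log.append("pipeline stopped")
            break
    return log


# Stand-in stages: the test stage fails, so deploy never runs.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),
    ("deploy", lambda: True),
]
print(run_pipeline(stages))  # ['build: ok', 'test: FAILED', 'pipeline stopped']
```

In practice each stage would call a build tool, a test runner, or a deployment script and report success from its exit code.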

Step 3: Familiarize yourself with the DevOps toolchain:

Git: Git, a distributed version control system, is used extensively by DevOps teams for code repository management. It tracks code changes, facilitates collaboration among team members, and preserves a record of modifications made to the codebase.

Ansible: Ansible is an open-source tool for managing configurations, deploying applications, and automating tasks. It simplifies infrastructure management and saves time on repetitive tasks.

Docker: Docker is a containerization platform that allows DevOps engineers to bundle applications and their dependencies into containers. This ensures consistency and compatibility across environments, from development to production.

Kubernetes: Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications and microservices.

Jenkins: Jenkins is an open-source automation server that helps automate the process of building, testing, and deploying software. It helps to automate repetitive tasks and improve the speed and efficiency of the software delivery process.

Nagios: Nagios is an open-source monitoring tool that tracks the health and performance of IT infrastructure. It helps teams identify and resolve issues in real time and maintain the availability and reliability of IT systems.

Terraform: Terraform is an infrastructure as code (IaC) tool for provisioning and managing IT infrastructure. It automates the provisioning and configuration of IT resources and helps keep development and production environments consistent.

Step 4: Gain practical experience:

The best way to gain practical experience is by working on real projects. You can start by contributing to open-source projects or participating in coding challenges and hackathons. Bootcamps, workshops, and online courses can also help you improve your skills.

Step 5: Get certified:

Getting certified in DevOps can help you stand out from the crowd and showcase your expertise to employers. Some of the most popular certifications are:

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Microsoft Certified: Azure DevOps Engineer Expert

AWS Certified Cloud Practitioner

Step 6: Build a strong professional network:

Networking is one of the most important parts of becoming a DevOps Engineer. You can join online communities, attend conferences and webinars, and connect with other professionals in the field. This will help you stay up to date with the latest developments and find job opportunities.

Conclusion:

By following these steps, you can start your journey towards a successful career in DevOps. The most important thing is to be passionate about your work and to keep learning and improving your skills. With the right skills, experience, and network, you can achieve great success in this field.

2 notes


Text

Navigating the DevOps Landscape: A Comprehensive Roadmap for Success

Introduction: In the rapidly evolving realm of software development, DevOps stands as a pivotal force reshaping the way teams collaborate, deploy, and manage software. This detailed guide delves into the essence of DevOps, its core principles, and presents a step-by-step roadmap to kickstart your journey towards mastering DevOps methodologies.

Exploring the Core Tenets of DevOps: DevOps transcends mere toolsets; it embodies a cultural transformation focused on fostering collaboration, automation, and continual enhancement. At its essence, DevOps aims to dismantle barriers between development and operations teams, fostering a culture of shared ownership and continuous improvement.

Grasping Essential Tooling and Technologies: To embark on your DevOps odyssey, familiarizing yourself with the key tools and technologies within the DevOps ecosystem is paramount. From version control systems like Git to continuous integration servers such as Jenkins and containerization platforms like Docker, a diverse array of tools awaits exploration.

Mastery in Automation: Automation serves as the cornerstone of DevOps. By automating routine tasks like code deployment, testing, and infrastructure provisioning, teams can amplify efficiency, minimize errors, and accelerate software delivery. Proficiency in automation tools and scripting languages is imperative for effective DevOps implementation.

Crafting Continuous Integration/Continuous Delivery Pipelines: Continuous Integration (CI) and Continuous Delivery (CD) lie at the heart of DevOps practices. CI/CD pipelines automate the process of integrating code changes, executing tests, and deploying applications, so that software changes ship rapidly and reliably with minimal manual intervention.
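To make the pipeline idea concrete, here is a minimal Python sketch of stages that run in order and stop at the first failure. It is an illustration only: the stage names and the `run_pipeline` helper are hypothetical, and a real pipeline would be defined in a CI server such as Jenkins rather than in a script like this.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure. Returns log lines."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            # Later stages (for example deploy) never run after a failure.
            log.append("pipeline stopped")
            break
    return log


# Hypothetical stages: the lambdas stand in for real build/test/deploy commands.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),   # simulate a failing test suite
    ("deploy", lambda: True),
]
result = run_pipeline(stages)
```

The point of the sketch is the ordering guarantee: a change that fails its tests never reaches the deploy stage.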

Embracing Infrastructure as Code (IaC): Infrastructure as Code (IaC) empowers teams to define and manage infrastructure through code, fostering consistency, scalability, and reproducibility. Treating infrastructure as code enables teams to programmatically provision, configure, and manage infrastructure resources, streamlining deployment workflows.
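As a rough illustration of what "managing infrastructure through code" means, the Python sketch below compares a declared desired state with the current state and lists the actions needed to reconcile them. The resource names, the dictionary layout, and the `plan` function are assumptions made up for this example; tools such as Terraform do this with their own configuration language and far more detail.

```python
from typing import Dict, List


def plan(desired: Dict[str, dict], actual: Dict[str, dict]) -> List[str]:
    """List the actions needed to make `actual` match `desired`."""
    actions = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(f"create {name}")
        elif desired[name] != actual[name]:
            actions.append(f"update {name}")
    for name in sorted(actual):
        if name not in desired:
            actions.append(f"delete {name}")
    return actions


# Hypothetical resources: what the code declares versus what currently exists.
desired = {
    "web": {"size": "small", "count": 2},
    "db": {"size": "large", "count": 1},
}
actual = {
    "web": {"size": "small", "count": 1},
    "cache": {"size": "small", "count": 1},
}
actions = plan(desired, actual)
```

Because the desired state lives in a file, it can be reviewed and versioned like any other code, and running the plan twice against an already reconciled environment yields no actions.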

Fostering Collaboration and Communication: DevOps champions collaboration and communication across development, operations, and other cross-functional teams. By nurturing a culture of shared responsibility, transparency, and feedback, teams can dismantle silos and unite towards common objectives, resulting in accelerated delivery and heightened software quality.

Implementing Monitoring and Feedback Loops: Monitoring and feedback mechanisms are integral facets of DevOps methodologies. Establishing robust monitoring and logging solutions empowers teams to monitor application and infrastructure performance, availability, and security in real-time. Instituting feedback loops enables teams to gather insights and iteratively improve based on user feedback and system metrics.

Embracing Continuous Learning and Growth: DevOps thrives on a culture of continuous learning and improvement. Encouraging experimentation, learning, and knowledge exchange empowers teams to adapt to evolving requirements, technologies, and market dynamics, driving innovation and excellence.

Remaining Current with Industry Dynamics: The DevOps landscape is dynamic, with new tools, technologies, and practices emerging regularly. Staying abreast of industry trends, participating in conferences and webinars, and engaging with the DevOps community are essential for staying ahead. By remaining informed, teams can leverage the latest advancements to improve their DevOps practices and deliver more value to stakeholders.

Conclusion: DevOps represents a paradigm shift in software development, enabling organizations to achieve greater agility, efficiency, and innovation. By following this comprehensive roadmap and tailoring it to your organization's unique needs, you can embark on a transformative DevOps journey and drive positive change in your software delivery processes.

2 notes


Text

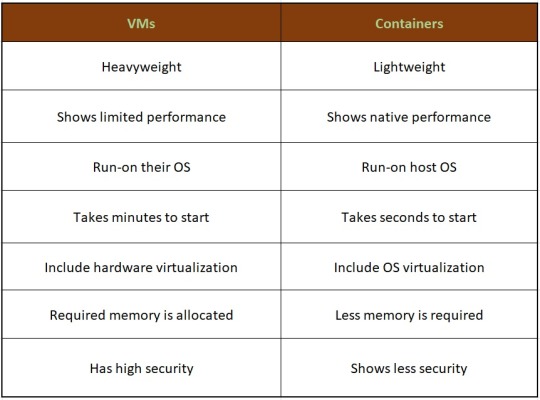

WILL CONTAINER REPLACE HYPERVISOR

As technology has advanced, virtualization has changed the way data centers operate. Over the years, software has been released that makes it easier for companies to manage their data centers. It allows them to run workloads on different operating systems side by side on the same hardware, making better use of their resources and simplifying data management for the business.

Choosing between technology models requires a proper understanding of each one. That holds for containers and hypervisors, both of which have been on the market for some time and offer companies different ways to run their workloads.

Let’s understand how they work

Virtual machines: a hypervisor abstracts the hardware so that several guest operating systems can run on one physical machine.

Containers: they abstract the operating system so that applications run in isolated environments on a shared kernel. They have become more popular recently.

Although container technology predates it, containers became widely popular after Docker was introduced in 2013. Docker is an open-source platform used for building, deploying, and managing containerized applications.

Containers always run on top of the underlying host operating system, whose kernel provides basic services to all the containerized applications. Virtual machines under a hypervisor, by contrast, each need their own guest operating system and typically rely on hardware virtualization support.

Although containers and hypervisors work differently and have distinct features, the two technologies share some goals: improving IT efficiency, making applications more portable, and streamlining the software development lifecycle.

Whether containers will take over and replace hypervisors has become a hot topic. Opinion is divided, because each technology has particular properties that help solve different problems.

Let’s discuss in detail how they function, how they differ, and which one is the better technology.

What are virtual machines?

Virtual machines are software-defined computers that run on top of a hypervisor, allowing multiple applications to run in isolation on shared hardware. They are best suited when one needs to operate different applications without letting them interfere with each other.

Because each VM presents its own set of virtual hardware to the applications inside it, several VMs can share one physical machine, which helps companies reduce spending on hardware.

Virtual machines run on physical computers through a lightweight software layer called a hypervisor.

The hypervisor separates VMs from one another and allocates processors, memory, and storage among them. Cloud hosting providers use this to get more out of expensive nodes.

Hypervisors allow a host machine to run many virtual machines with different operating systems, which maximizes the use of resources such as bandwidth and memory.

What is a container?

Containers are also software-defined environments, but they all share a single host operating system. Because a container carries what the application needs, it can run anywhere a compatible host is available, such as on a laptop or in the cloud.

Containers are a form of operating system (OS) virtualization: they rely on the host operating system's kernel. A container image packages the application code, its dependencies, and the user-space libraries it needs, which lets it run on any compatible host.

They promise a streamlined way of implementing infrastructure requirements and can be used as an alternative to virtual machines.

Even though containers are known to improve how cloud applications are developed and deployed, they are still not considered as secure as VMs.

A single operating system can run many containers that share its resources.

Now that we have seen how VMs and containers work, let’s look at the benefits of both technologies.

Benefits of virtual machines

They allow different operating systems to run on one hardware system, which saves on energy, rack space, and cooling and so reduces costs.

VMs are easier to spin up and down than physical servers, and it is much easier to create backups of them.

Because VM images are easy to back up and restore, recovering from a disaster is simpler.

Each VM has an isolated operating system, so testing applications is relatively easy.

Benefits of containers:

They are lightweight and therefore boot significantly faster than VMs, typically within a few seconds, and they require less hardware and fewer operating system instances.

They are portable and run the same way anywhere, which reduces problems caused by differences between environments.

They enable microservices, which make applications easier to test, reduce single points of failure, and increase development velocity.

Let’s see the difference between containers and VMs

Looking at these differences, containers have clear advantages over the older virtualization technology: they are faster, more lightweight, and easier to manage than VMs.

With a hypervisor, the hardware is virtualized, so several virtual machines, each with a separate operating system, can run on the same physical host. Each VM therefore needs its own operating system to run an application and its associated libraries.

Containers, by contrast, virtualize the operating system instead of the hardware, so each container contains only the application, its libraries, and its dependencies.

Like virtual machines, containers let developers make better use of a physical machine's CPU and memory. Containers also suit a microservice architecture, allowing application components to be deployed and scaled more granularly.

Having seen the benefits and differences of the two technologies, one must know when to use containers and when to use virtual machines. Some teams want to use both, and some want only one of them.

A hypervisor makes sense in cases such as:

Many teams want to continue with virtual machines because VMs are compatible and consistent with how they already work, and shifting to containers is not worthwhile for them.

VMs let a single computer or cloud server run multiple applications together, which is all that most users require.

Containers share the host operating system, which is not the case with VMs. From a security standpoint containers are therefore less safe: a compromise of the shared kernel can affect all the applications on the host at once. Each virtual machine has its own virtual hardware and operating system, so a compromise is more likely to stay confined to one application.

Containers turn out to be useful in cases such as:

Containers enable DevOps and microservices because they are portable and fast, starting within seconds.

Many web applications are moving towards a microservices architecture, in which an application is built from small independent services. Containers support this by making it easy to update and redeploy only the part of the application that changed.

Container platforms can scale automatically: they start additional instances from a container image under load and spin them down when they are no longer needed.

People want technology that is fast, and containers start far faster than VMs under a hypervisor. That also enables fast testing and quick recovery when a restart is needed.
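The automatic scaling mentioned above can be illustrated with a small calculation. The Python sketch below borrows the proportional rule that the Kubernetes Horizontal Pod Autoscaler documents (desired replicas = ceil(current replicas × current metric / target metric)); the post itself does not name an orchestrator, so treat the choice of rule, the function name, and the replica limits as assumptions for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Proportional scaling rule, clamped to a replica range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Average CPU at 90% against a 60% target: scale 4 containers up to 6.
scale_up = desired_replicas(4, 90.0, 60.0)
# Average CPU at 15% against a 60% target: scale 4 containers down to 1.
scale_down = desired_replicas(4, 15.0, 60.0)
```

Because starting a container takes seconds, a platform can apply a rule like this frequently without the long warm-up that booting extra virtual machines would involve.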

Hence, will containers replace hypervisor?

Although the two technologies share some similarities, they differ in several respects, so the question is not easy to answer. Before drawing a conclusion, let's look at a few points about each.

Still, a question can arise in mind, why containers?

Although, as stated above, there are still many reasons to use virtual machines, containers offer flexibility, portability, and efficient use of resources, and that is increasing demand for them in a multi-cloud world.

Many companies still struggle to deploy new applications consistently. Because containerized applications are flexible, they are easier to run across the many cloud and data center environments of modern IT.

Containers are also useful for automation and DevOps pipelines, including continuous integration and continuous delivery (CI/CD). Their small size and modularity mean an application can be built in small parts and assembled by stacking those parts together.

They not only increase system efficiency and resource utilization but also save money by running multiple processes on the same host.

They are quicker to boot than virtual machines, which can take minutes to boot and to recover.

Another important point is that they have a minimal structure: they do not need a full operating system or dedicated hardware of their own, and they can be installed and removed without disturbing the whole system.

Containers can replace traditional in-place patching with rebuilding and redeploying an image, allowing organizations to respond to issues faster and making applications easier to manage.

Because containers abstract the operating system rather than the hardware, they avoid the overhead of running a full guest operating system for every application, which virtual machines incur.

Still, virtual machines are useful to many

Although containers have more advantages than virtual machines, they still have a few disadvantages, chiefly security issues that stem from sharing one host operating system.

Containers are easier to attack because a single operating system serves multiple applications: if a breach occurs, an attacker may gain access to the whole host. This is not the case with virtual machines, which have an additional barrier between each VM, the host server, and other virtual machines.

If the host is infected by malware, it can spread to all the applications because they share a single operating system, which is not the case with virtual machines.

People feel more familiar with virtual machines, which have long been well established in most organizations. Businesses already have teams and procedures that manage VM deployment, backups, and monitoring.

Companies often prefer to manage a complete operating system as one machine, especially for complex applications.

Conclusion

To conclude, containers and virtual machines solve different problems. Containers help teams focus on building code, creating better software, and making applications run faster. Virtual machines are slower, heavier, and less portable, yet many still prefer them for provisioning enterprise infrastructure and for running legacy or monolithic applications.

If you need to run a full operating system, choose a hypervisor; if you want something lightweight and portable, choose containers.

It will therefore take time for containers to replace virtual machines, which many still need for running older applications and for hosting multiple operating systems in parallel, even though VMs are less cloud-native. More likely, containers will not replace virtual machines at all: the two technologies complement each other, and both have a place in the modern data center.

For more insights do visit our website

#container #hypervisor #docker #technology #zybisys #godaddy

6 notes


Text

OpenAI to Launch A-SWE: Revolutionizing Software Development with AI

OpenAI is set to introduce A-SWE (Agentic Software Engineer), an advanced AI agent designed to handle the complete software development lifecycle autonomously. Unlike existing tools that assist developers, A-SWE aims to replace human software engineers by performing tasks such as writing code, debugging, conducting quality assurance, and managing deployments.

What Is A-SWE?

A-SWE is an AI agent developed by OpenAI to function as a full-stack software engineer. It can understand software requirements, write code, debug errors, create tests, handle deployments, and utilize development tools like GitHub, Docker, and Jira. This development represents a significant leap from current AI tools primarily assisting developers, positioning A-SWE as a potential replacement for human software engineers.

Key Capabilities of A-SWE

Autonomous Software Development: A-SWE can independently write and optimize code based on specified requirements, reducing the need for human intervention.

Quality Assurance and Bug Testing: The agent autonomously creates test cases, simulates user interactions, and iterates on code to resolve issues, minimizing manual QA processes.

Deployment Management: A-SWE handles the deployment of applications, ensuring seamless integration and functionality in production environments.

Tool Integration: To streamline the development process, it utilizes various development tools, including GitHub for version control, Docker for containerization, and Jira for project management.

Implications for the Software Development Industry

The introduction of A-SWE could significantly impact the software development industry:

Increased Efficiency: By automating routine tasks, A-SWE allows human developers to focus on more complex and creative aspects of software development.

Cost Reduction: Organizations may reduce labor costs associated with software development by integrating AI agents like A-SWE into their workflows.

Skill Evolution: The role of human developers may shift towards overseeing AI agents and focusing on tasks that require human creativity and problem-solving skills.

Educational Pathways for Aspiring AI Developers

To engage with AI agents like A-SWE, professionals can pursue various educational programs:

AI Course: Provides foundational knowledge in artificial intelligence principles and techniques.

Machine Learning Certification: Focuses on developing and applying machine learning algorithms.

Python Certification: Equips individuals with programming skills essential for developing AI applications.

Cyber Security Certifications: Offers expertise in securing AI systems and protecting data integrity.

Information Security Certificate: Focuses on safeguarding AI applications from security threats.

These certifications provide the necessary skills to effectively develop, implement, and manage AI agents.

Future Outlook

The development of A-SWE signifies a move towards more autonomous AI systems capable of performing complex tasks traditionally handled by humans. As AI technology advances, agents like A-SWE could become integral components of software development teams, enhancing productivity and innovation.

Conclusion

OpenAI's A-SWE represents a significant advancement in AI technology, offering the potential to transform the software development industry. By automating the complete software development lifecycle, A-SWE could redefine the roles of human developers and introduce new efficiencies in the development process. Engaging with educational programs focused on AI and machine learning can equip professionals with the skills to work alongside and develop such advanced AI agents.

0 notes

Text

ARMxy SBC Embedded Controller BL340 in Sewage Treatment System Monitoring

Case Details

Introduction

Real-time monitoring of wastewater treatment systems is critical for ensuring water quality compliance, optimizing process flows, and reducing operational costs. The ARMxy BL340 series embedded controller, powered by the Allwinner T507-H quad-core ARM Cortex-A53 processor, offers high performance, low power consumption, and flexible I/O configurations, making it ideal for industrial Internet of Things (IoT) applications in wastewater treatment monitoring. This article explores the design and application of the BL340 in wastewater treatment systems, analyzing its technical advantages and practical outcomes.

System Design

Hardware Architecture

The BL340 series adopts a modular design, with core hardware components including:

Processor: Allwinner T507-H, quad-core Cortex-A53, up to 1.4 GHz, paired with 8/16 GB eMMC storage and 1/2 GB DDR4 memory, meeting data processing and storage requirements.

Sensor Interfaces: Supports various sensors via Y-series I/O boards, such as pH (Y33/Y34, 0-5/10V analog input), dissolved oxygen (Y31, 4-20mA input), turbidity (Y36, ±5V/±10V differential input), and temperature (Y51/Y52, PT100/PT1000 RTD).

Communication Modules: Includes 3×10/100M Ethernet ports, 2×USB 2.0, Mini PCIe (4G/WiFi/Bluetooth), and a NANO SIM slot for remote data transmission.

Power and Installation: Supports 9-36 VDC wide voltage input with reverse polarity and overcurrent protection, designed for DIN35 rail mounting, suitable for harsh wastewater treatment environments.

Environmental Adaptability: Certified with IP30 protection and -40~85°C wide temperature testing, ensuring reliability in humid, high-temperature, or vibrating conditions.

Software Architecture

The BL340 supports multiple operating systems and development tools, with a software architecture comprising:

Operating Systems: Linux-4.9.170, Ubuntu 20.04, or Android 10, with Docker container support for rapid deployment.

Protocol Conversion: Pre-installed BLloTLink software supports protocols like Modbus, MQTT, and OPC UA, compatible with cloud platforms such as AWS IoT Core and ThingsBoard.

Data Processing: Utilizes Node-Red and Qt-5.12.5 for data acquisition, processing, and visualization, supporting real-time water quality parameter analysis.

Remote Access: BLRAT tool enables remote maintenance and configuration, enhancing operational efficiency.

Functionality and Applications

Real-Time Water Quality Monitoring

The BL340 collects critical wastewater treatment parameters (e.g., pH, dissolved oxygen, turbidity, temperature, and conductivity) via Y-series I/O boards. For instance, the Y31 module connects to 4-20mA dissolved oxygen sensors, and the Y51 module supports PT100 temperature sensors. Data is sampled via ADC, processed by the BL340, and used to generate real-time water quality reports.

Remote Monitoring and Alarming

The BL340 uploads data to cloud platforms via 4G or WiFi modules, enabling remote monitoring through web interfaces or mobile applications. When water quality parameters exceed thresholds (e.g., pH <6 or >9), the system sends alerts via MQTT and can control valves or pumps using the Y24 relay module to automatically adjust processes.
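The alarm logic described above can be sketched in a few lines of Python. This is an illustration under assumptions: the function names and the payload layout are hypothetical, only the pH limits (below 6 or above 9) come from the text, and actually publishing the payload over MQTT or switching the Y24 relay is left out.

```python
import json
from typing import Optional


def evaluate_reading(parameter: str, value: float, low: float, high: float) -> Optional[dict]:
    """Return an alert record if the value is outside [low, high], else None."""
    if low <= value <= high:
        return None
    return {
        "parameter": parameter,
        "value": value,
        "status": "low" if value < low else "high",
    }


def to_payload(alert: dict) -> str:
    """Serialize an alert as JSON, ready to hand to a messaging client."""
    return json.dumps(alert, sort_keys=True)


# pH limits taken from the text: alert when pH < 6 or pH > 9.
normal = evaluate_reading("pH", 7.2, 6.0, 9.0)
acidic = evaluate_reading("pH", 5.4, 6.0, 9.0)
payload = to_payload(acidic)
```

Keeping the threshold check separate from the transport makes it easy to test the rule on its own and to reuse it for other parameters such as dissolved oxygen or turbidity.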

Data Storage and Analysis

The BL340 supports local SD card storage and cloud backups, archiving historical water quality data. Node-Red facilitates trend analysis, such as correlating dissolved oxygen levels with aeration energy consumption, to optimize wastewater treatment processes.

Typical Application Case

In a municipal wastewater treatment plant, the BL340B (equipped with 3×Ethernet ports and 2×Y-board slots) was deployed to monitor a biological reaction tank. The system configuration included:

Hardware: BL340B-SOM341-X23-Y31-Y51, featuring 4×RS485, 4×DI/DO, 4×4-20mA inputs (dissolved oxygen, turbidity), and 2×PT100 (temperature).

Functionality: Real-time water quality data collection, uploaded to the ThingsBoard platform via 4G, with automated aeration pump control.

Results: The system operated stably, reduced manual inspections, improved effluent compliance, and lowered energy consumption by approximately 15%.

Technical Advantages

High Performance and Low Power: The quad-core Cortex-A53 processor with a 1.4 GHz clock speed ensures efficient data processing, while the wide-voltage power design minimizes energy use.

Flexible I/O Configuration: Supports various X/Y-series I/O boards, accommodating diverse sensor and control requirements.

Robust Communication: Multiple Ethernet ports and 4G/WiFi modules support complex network environments, with BLloTLink enabling seamless integration with mainstream cloud platforms.

Industrial-Grade Reliability: Certified through electromagnetic compatibility (EMC) and environmental adaptability tests (-40~85°C, IP30, vibration resistance), suitable for harsh wastewater treatment conditions.

Ease of Development: Node-Red and Qt tools simplify application development, with BLRAT supporting remote debugging, reducing deployment time.

Challenges and Solutions

Sensor Drift: Regular calibration or software compensation algorithms (e.g., Kalman filtering) enhance data accuracy.

Network Stability: 4G redundancy and local caching ensure reliable data transmission.

Data Security: MQTT over TLS and device authentication safeguard data transfers.
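As an illustration of the Kalman filtering mentioned under sensor drift, here is a minimal one-dimensional filter in Python. It assumes the measured quantity changes slowly (a random-walk model), and the variance values are placeholders rather than figures from the BL340 documentation. Note that this sketch only smooths measurement noise; compensating for slow sensor drift would additionally require periodic recalibration or a bias term in the model.

```python
from typing import Iterable, List


def kalman_1d(
    measurements: Iterable[float],
    process_var: float = 1e-4,      # assumed: how fast the true value can wander
    measurement_var: float = 0.04,  # assumed: sensor noise variance
    initial_estimate: float = 0.0,
    initial_var: float = 1.0,
) -> List[float]:
    """Scalar Kalman filter with a random-walk state model."""
    estimate, var = initial_estimate, initial_var
    smoothed = []
    for z in measurements:
        # Predict: the value is assumed unchanged, but uncertainty grows.
        var += process_var
        # Update: blend prediction and measurement by their uncertainties.
        gain = var / (var + measurement_var)
        estimate += gain * (z - estimate)
        var *= 1.0 - gain
        smoothed.append(estimate)
    return smoothed


# Hypothetical noisy pH readings around 7.0.
readings = [7.0, 7.4, 6.6, 7.0]
smoothed = kalman_1d(readings, initial_estimate=7.0)
```

A larger `measurement_var` makes the filter trust the sensor less and smooth more heavily; a larger `process_var` makes it follow real changes in the water more quickly.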

Conclusion

The ARMxy BL340 series embedded controller demonstrates significant advantages in wastewater treatment system monitoring due to its high performance, flexibility, and industrial-grade reliability. Its modular design and robust communication capabilities meet diverse monitoring needs, enabling wastewater treatment plants to achieve intelligent and efficient operations. As industrial IoT technologies advance, the BL340 will play an increasingly vital role in water treatment applications.

0 notes

Text

Who is an MLOps Engineer? A crucial player in the current AI workflows.

As artificial intelligence and machine learning become the core components of modern-day business models, the need to operate these models efficiently has given birth to a new kind of specialist: the **MLOps Engineer**.

Here in this blog, we will explore who an MLOps Engineer is, what they do, why their role matters, and — perhaps most importantly — **how much they earn**.

**What is an MLOps Engineer?**

An **MLOps (Machine Learning Operations) Engineer** works at the intersection of data science, software engineering, and DevOps. Their primary responsibility is to streamline and automate the machine learning model lifecycle, from development and training to deployment, monitoring, and retraining.

They are not just data scientists or coders. They are system builders who ensure that machine learning models work well, securely, and reliably in production.

**What Does an MLOps Engineer Do?**

MLOps Engineers handle a wide range of activities, including:

- **Model Deployment**: Creating automated pipelines to deploy models from experimentation to production.
- **Infrastructure Management**: Creating scalable environments on cloud services (AWS, Azure, GCP), containerization (Docker), and orchestration tools (Kubernetes).
- **CI/CD for ML**: Creating continuous integration and delivery systems tailor-made for ML projects.
- **Monitoring and Logging**: Monitoring model performance and system statistics in real-time for early detection of drift or failure.
- **Collaboration**: Collaboration with data scientists, IT groups, and software engineers to enable seamless handoffs and integrations.
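To give a feel for the drift detection mentioned under monitoring, the Python sketch below flags drift when the mean of recent values moves too far from a baseline, measured in baseline standard deviations. The function names, the sample data, and the threshold of 3 are assumptions for illustration; production systems usually rely on more robust statistical tests.

```python
from statistics import mean, pstdev
from typing import Sequence


def drift_score(baseline: Sequence[float], recent: Sequence[float]) -> float:
    """Shift of the recent mean from the baseline mean, in baseline std devs."""
    sigma = pstdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variation")
    return abs(mean(recent) - mean(baseline)) / sigma


def has_drifted(baseline: Sequence[float], recent: Sequence[float], threshold: float = 3.0) -> bool:
    """True when the recent data has shifted by more than `threshold` std devs."""
    return drift_score(baseline, recent) > threshold


# Hypothetical feature values seen during training versus in production.
baseline = [0.0, 1.0, 2.0, 3.0, 4.0]
stable = has_drifted(baseline, [2.0, 2.5, 1.5])
shifted = has_drifted(baseline, [9.0, 10.0, 11.0])
```

A check like this would typically run on a schedule against live inputs or predictions and trigger an alert or a retraining job when it fires.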

---

**Skills Needed for an MLOps Engineer**

An MLOps Engineer needs a good mix of technical skills:

- Skilled in **Python** and **Bash/Shell scripting**
- Familiarity with **machine learning tools** (TensorFlow, PyTorch, Scikit-learn)
- Good grasp of **DevOps tools** (Jenkins, Git, Terraform)
- Familiarity with **cloud platforms** and **containerization**
- Understanding of data engineering concepts and tools

---

**Why is this Role Important?**

Training a machine learning model in a Jupyter notebook is just the beginning. The actual challenge is:

- Serving the model to many users at once
- Keeping it current as the data evolves
- Guaranteeing security and compliance
- Maintaining reliability and performance

Most good machine learning models would be stuck in the prototype phase without MLOps.

---

**How Much Does an MLOps Engineer Make?**

Their pay is very competitive, reflecting their blend of skills and the increasing demand for them.

The following is an approximate breakdown by experience and geography (as of 2024–2025):

| **Experience Level** | **USA** | **Europe (Average)** | **India (Average)** |
| --- | --- | --- | --- |
| Beginner (0–2 yrs) | $90,000 – $120,000 | €45,000 – €65,000 | ₹8 – ₹15 LPA |
| Mid-Level (3–5 yrs) | $120,000 – $150,000 | €65,000 – €90,000 | ₹15 – ₹30 LPA |
| Senior (5+ yrs) | $150,000 – $180,000+ | €90,000 – €120,000+ | ₹30 LPA and above |

Major corporations and technology hubs such as Silicon Valley or London can offer salaries much higher than these amounts, particularly with bonuses and stock options.

The MLOps Engineer is fast becoming a highly sought-after role in the AI sector. As companies shift from experimenting with machine learning to running it fully in production, the demand for people who can bridge the gap between data science and production systems is increasing. If you are a software engineer, DevOps expert, or data scientist wanting to specialize, MLOps offers a well-paid career path.


Full Stack Development: Using DevOps and Agile Practices for Success

In today’s fast-paced and highly competitive tech industry, the demand for Full Stack Developers is steadily on the rise. These versatile professionals possess a unique blend of skills that enable them to handle both the front-end and back-end aspects of software development. However, to excel in this role and meet the ever-evolving demands of modern software development, Full Stack Developers are increasingly turning to DevOps and Agile practices. In this comprehensive guide, we will explore how the combination of Full Stack Development with DevOps and Agile methodologies can lead to unparalleled success in the world of software development.

Full Stack Development: A Brief Overview

Full Stack Development refers to the practice of working on all aspects of a software application, from the user interface (UI) and user experience (UX) on the front end to server-side scripting, databases, and infrastructure on the back end. It requires a broad skill set and the ability to handle various technologies and programming languages.

The Significance of DevOps and Agile Practices

The environment for software development has changed significantly in recent years. The adoption of DevOps and Agile practices has become a cornerstone of modern software development. DevOps focuses on automating and streamlining the development and deployment processes, while Agile methodologies promote collaboration, flexibility, and iterative development. Together, they offer a powerful approach to software development that enhances efficiency, quality, and project success. In this blog, we will delve into the following key areas:

Understanding Full Stack Development

Defining Full Stack Development

We will start by defining Full Stack Development and elucidating its pivotal role in creating end-to-end solutions. Full Stack Developers are akin to the Swiss Army knives of the development world, capable of handling every aspect of a project.

Key Responsibilities of a Full Stack Developer

We will explore the multifaceted responsibilities of Full Stack Developers, from designing user interfaces to managing databases and everything in between. Understanding these responsibilities is crucial to grasping the challenges they face.

DevOps’s Importance in Full Stack Development

Unpacking DevOps

DevOps is a set of principles that aims to eliminate the divide between development and operations teams. We will delve into what DevOps entails and why it matters in Full Stack Development. The benefits of embracing DevOps principles will also be discussed.

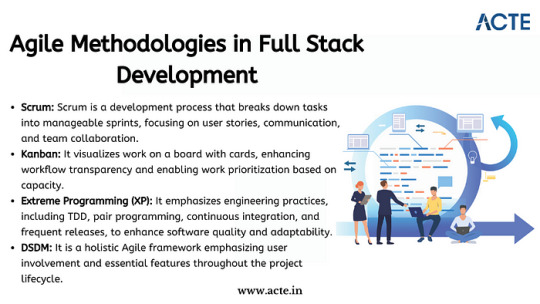

Agile Methodologies in Full Stack Development

Introducing Agile Methodologies

Agile methodologies like Scrum and Kanban have gained immense popularity due to their effectiveness in fostering collaboration and adaptability. We will introduce these methodologies and explain how they enhance project management and teamwork in Full Stack Development.

Synergy Between DevOps and Agile

The Power of Collaboration

We will highlight how DevOps and Agile practices complement each other, creating a synergy that streamlines the entire development process. By aligning development, testing, and deployment, this synergy results in faster delivery and higher-quality software.

Tools and Technologies for DevOps in Full Stack Development

Essential DevOps Tools

DevOps relies on a suite of tools and technologies, such as Jenkins, Docker, and Kubernetes, to automate and manage various aspects of the development pipeline. We will provide an overview of these tools and explain how they can be harnessed in Full Stack Development projects.

Implementing Agile in Full Stack Projects

Agile Implementation Strategies

We will delve into practical strategies for implementing Agile methodologies in Full Stack projects. Topics will include sprint planning, backlog management, and conducting effective stand-up meetings.

Best Practices for Agile Integration

We will share best practices for incorporating Agile principles into Full Stack Development, ensuring that projects are nimble, adaptable, and responsive to changing requirements.

Learning Resources and Real-World Examples

To give readers a deeper understanding, ACTE Institute presents case studies and real-world examples of successful Full Stack Development projects that leveraged DevOps and Agile practices. These stories offer valuable insights into best practices and lessons learned. Consider enrolling in an accredited full stack developer training course to increase your full stack proficiency.

Challenges and Solutions

Addressing Common Challenges

No journey is without its obstacles, and Full Stack Developers using DevOps and Agile practices may encounter challenges. We will identify these common roadblocks and provide practical solutions and tips for overcoming them.

Benefits and Outcomes

The Fruits of Collaboration

In this section, we will discuss the tangible benefits and outcomes of integrating DevOps and Agile practices in Full Stack projects. Faster development cycles, improved product quality, and enhanced customer satisfaction are among the rewards.

In conclusion, this blog has explored the dynamic world of Full Stack Development and the pivotal role that DevOps and Agile practices play in achieving success in this field. Full Stack Developers are at the forefront of innovation, and by embracing these methodologies, they can enhance their efficiency, drive project success, and stay ahead in the ever-evolving tech landscape. We emphasize the importance of continuous learning and adaptation, as the tech industry continually evolves. DevOps and Agile practices provide a foundation for success, and we encourage readers to explore further resources, courses, and communities to foster their growth as Full Stack Developers. By doing so, they can contribute to the development of cutting-edge solutions and make a lasting impact in the world of software development.

#web development#full stack developer#devops#agile#education#information#technology#full stack web development#innovation


How a Full Stack Developer Course Prepares You for Real-World Projects

The tech world is evolving rapidly—and so are the roles within it. One role that continues to grow in demand is that of a full-stack developer. These professionals are the backbone of modern web and software development. But what exactly does it take to become one? Enrolling in a full-stack developer course can be a game-changer, especially if you're someone who enjoys both the creative and logical sides of building digital solutions.

In this article, we'll explore the top 7 skills you’ll master in a full-stack developer course—skills that not only make you job-ready but also turn you into a valuable tech asset.

1. Front-End Development

Let’s face it: first impressions matter. The front-end is what users see and interact with. You’ll dive deep into the languages and frameworks that make websites beautiful and functional.

You’ll learn:

HTML5 and CSS3 for content and layout structuring.

JavaScript and DOM manipulation for interactivity.

Frameworks like React.js, Angular, or Vue.js for scalable user interfaces.

Responsive design using Bootstrap or Tailwind CSS.

You’ll go from building static web pages to creating dynamic, responsive user experiences that work across all devices.

2. Back-End Development

Once the front-end looks good, the back-end makes it work. You’ll learn to build and manage server-side applications that drive the logic, data, and security behind the interface.

Key skills include:

Server-side languages like Node.js, Python (Django/Flask), or Java (Spring Boot).

Building RESTful APIs and handling HTTP requests.

Managing user authentication, data validation, and error handling.

This is where you start to appreciate how things work behind the scenes—from processing a login request to fetching product data from a database.
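To make those back-end ideas a little more concrete, here is a minimal, framework-agnostic sketch in Python of how a REST-style handler might route requests, validate input, and return errors. The `handle_request` function, the `/products` routes, and the in-memory `PRODUCTS` store are all hypothetical names for illustration; a real course project would use a framework such as Flask, Django, or Express and a real database.

```python
import json
from http import HTTPStatus

# In-memory stand-in for a database table (hypothetical sample data).
PRODUCTS = {1: {"id": 1, "name": "Keyboard", "price": 49.0}}


def handle_request(method: str, path: str, body: str = "") -> tuple[int, str]:
    """Route one request and return (status code, JSON body)."""
    parts = [p for p in path.split("/") if p]

    # GET /products -> list every product
    if parts == ["products"] and method == "GET":
        return HTTPStatus.OK, json.dumps(list(PRODUCTS.values()))

    # POST /products -> validate the payload, then create a product
    if parts == ["products"] and method == "POST":
        try:
            data = json.loads(body)
            name, price = str(data["name"]), float(data["price"])
        except (ValueError, KeyError, TypeError):
            return HTTPStatus.BAD_REQUEST, json.dumps(
                {"error": "a JSON body with name and price is required"}
            )
        if not name.strip() or price < 0:
            return HTTPStatus.BAD_REQUEST, json.dumps(
                {"error": "name must be non-empty and price non-negative"}
            )
        new_id = max(PRODUCTS, default=0) + 1
        PRODUCTS[new_id] = {"id": new_id, "name": name, "price": price}
        return HTTPStatus.CREATED, json.dumps(PRODUCTS[new_id])

    # GET /products/<id> -> fetch one product or report 404
    if len(parts) == 2 and parts[0] == "products" and method == "GET":
        product = PRODUCTS.get(int(parts[1])) if parts[1].isdecimal() else None
        if product is None:
            return HTTPStatus.NOT_FOUND, json.dumps({"error": "product not found"})
        return HTTPStatus.OK, json.dumps(product)

    return HTTPStatus.NOT_FOUND, json.dumps({"error": "no such route"})
```

Keeping the routing logic in a plain function like this is a deliberate simplification: it shows the request, validation, and response cycle without tying the example to any one framework.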

3. Database Management

Data is the lifeblood of any application. A full-stack developer must know how to store, retrieve, and manipulate data effectively.

Courses will teach you:

Working with SQL databases like MySQL or PostgreSQL.

Understanding NoSQL options like MongoDB.

Designing and optimising data models.

Writing CRUD operations and joining tables.

By mastering databases, you’ll be able to support both small applications and large-scale enterprise systems.
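As a rough illustration of CRUD operations and a table join, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The `users` and `orders` tables and the sample values are made up for this example; MySQL or PostgreSQL would use very similar SQL through their own drivers.

```python
import sqlite3

# Throwaway in-memory database; nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE users (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE orders (
        id      INTEGER PRIMARY KEY,
        user_id INTEGER NOT NULL REFERENCES users(id),
        total   REAL NOT NULL
    );
    """
)

# Create: insert one user and two orders, using placeholders for the values.
cursor = conn.execute("INSERT INTO users (name) VALUES (?)", ("Asha",))
user_id = cursor.lastrowid
conn.executemany(
    "INSERT INTO orders (user_id, total) VALUES (?, ?)",
    [(user_id, 25.0), (user_id, 40.0)],
)

# Read: join the two tables to summarise orders per user.
rows = conn.execute(
    """
    SELECT u.name, COUNT(o.id), SUM(o.total)
    FROM users AS u
    JOIN orders AS o ON o.user_id = u.id
    GROUP BY u.id
    """
).fetchall()

# Update: add a 5.0 surcharge to every order for this user.
conn.execute("UPDATE orders SET total = total + 5 WHERE user_id = ?", (user_id,))

# Delete: remove orders whose total is now above 40.
conn.execute("DELETE FROM orders WHERE total > ?", (40,))
remaining = conn.execute("SELECT total FROM orders").fetchall()
```

The `?` placeholders matter more than they look: passing values separately from the SQL text is the standard way to avoid SQL injection.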

4. Version Control with Git and GitHub

If you’ve ever made a change and broken your code (we’ve all been there!), version control will be your best friend. It helps you track and manage code changes efficiently.

You’ll learn:

Using Git commands to track, commit, and revert changes.

Collaborating on projects using GitHub.

Branching and merging strategies for team-based development.

These skills are not just useful—they’re essential in any collaborative coding environment.

5. Deployment and DevOps Basics

Building an app is only half the battle. Knowing how to deploy it is what makes your work accessible to the world.

Expect to cover:

Hosting apps using Heroku, Netlify, or Vercel.

Basics of CI/CD pipelines.

Cloud platforms like AWS, Google Cloud, or Azure.

Using Docker for containerisation.

Deployment transforms your local project into a living, breathing product on the internet.

6. Problem Solving and Debugging

This is the unspoken art of development. Debugging makes you patient, sharp, and detail-orientated. It’s the difference between a good developer and a great one.

You’ll master:

Using browser developer tools.

Analysing error logs and debugging back-end issues.

Writing clean, testable code.

Applying logical thinking to fix bugs and optimise performance.

These problem-solving skills become second nature with practice—and they’re highly valued in the real world.
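One way to picture "clean, testable code" is a small pure function paired with unit tests. The sketch below uses Python's built-in `unittest` module; `apply_discount` and its rules (integer cents, rounding down) are hypothetical choices made for illustration only.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Return the discounted price in cents, rounding down."""
    if price_cents < 0:
        raise ValueError("price_cents must not be negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Integer arithmetic avoids floating-point rounding surprises with money.
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(2000, 25), 1500)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(2000, 0), 2000)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(2000, 120)
```

Saved in a file, tests like these can be run with `python -m unittest`. Because the function has no hidden state, a failing test points straight at the logic that needs fixing, which is what makes debugging faster.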

7. Project Management and Soft Skills

A good full-stack developer isn’t just a coder—they’re a communicator and a team player. Most courses now incorporate soft skills and project-based learning to mimic real work environments.

Expect to develop:

Time management and task prioritisation.

Working in agile environments (Scrum, Kanban).

Collaboration skills through group projects.

Creating portfolio-ready applications with documentation.

By the end of your course, you won’t just have skills—you’ll have confidence and real-world project experience.

Why These Skills Matter

The top 7 skills you’ll master in a full-stack developer course are a balanced mix of hard and soft skills. Together, they prepare you for a versatile role in startups, tech giants, freelance work, or your own entrepreneurial ventures.

Here’s why they’re so powerful:

You can work on both front-end and back-end—making you highly employable.

You’ll gain independence and control over full product development.

You’ll be able to communicate better across departments—design, QA, DevOps, and business.

Conclusion

Choosing to become a full-stack developer is like signing up for a journey of continuous learning. The right course gives you structured learning, industry-relevant projects, and hands-on experience.

Whether you're switching careers, enhancing your skill set, or building your first startup, these top 7 skills you’ll master in a Full Stack Developer course will set you on the right path.

So—are you ready to become a tech all-rounder?


Create Impactful and Smarter Learning with Custom MERN-Powered LMS Solutions

Introduction

Learning is evolving fast, and modern education businesses need smarter tools to keep up. As online training grows, robust learning management software becomes essential for delivering courses, tracking progress, and certifying users. The global LMS market is booming, projected to reach more than about $70 billion by 2030, driven by demand for digital learning and AI-powered personalization. Off-the-shelf LMS platforms like Moodle or Canvas are popular, but they may not fit every startup’s unique needs. That’s why custom learning management solutions, built on flexible technology, are an attractive option for forward-looking EdTech companies. In this post, we’ll explore why Custom MERN-Powered LMS Solutions (using MongoDB, Express, React, and Node.js) can create an impactful, smarter learning experience for modern businesses.

Understanding the MERN Stack for LMS Development