#Expert Elasticsearch Developers

Hire Expert Elasticsearch Developers to Accelerate Search Performance & Business Insights

Looking to enhance your application’s search capabilities and scale with confidence? Hire expert Elasticsearch developers from Prosperasoft to power smarter, faster, and more secure search solutions across your digital platforms.

Our seasoned Elasticsearch team brings deep technical expertise across E-commerce, Fintech, Healthcare, SaaS, and more. From seamless cloud migrations to performance optimization, we ensure your search engine is fast, scalable, and secure.

Why Prosperasoft?

✅ 100+ customized Elasticsearch solutions delivered

✅ Trusted by 50+ global startups & enterprises

✅ Lightning-fast onboarding & flexible contracts

✅ 24/7 production support and emergency response

✅ Enterprise-grade consulting with strict NDA protection

We go beyond basic implementation. Our experts help you unlock real-time business insights and enhance search relevance with advanced ELK stack integration, cloud migration, and version upgrades.

Get started today and transform your user search experience with confidence. 🔎 Hire expert Elasticsearch developers now

#Hire Expert Elasticsearch Developers#Expert Elasticsearch Developers#Elasticsearch Developers#elasticsearch consulting#hire elasticsearch developer#elasticsearch developer for hire

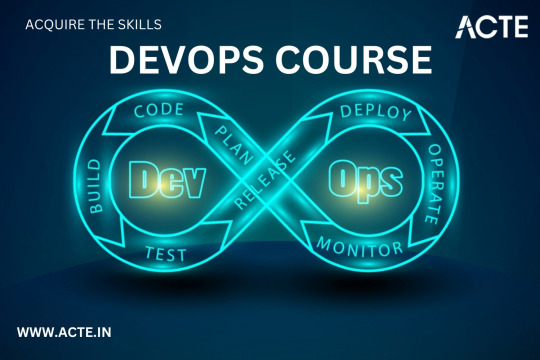

Level Up Your Software Development Skills: Join Our Unique DevOps Course

Would you like to increase your knowledge of software development? Look no further! Our unique DevOps course is the perfect opportunity to upgrade your skillset and pave the way for accelerated career growth in the tech industry. In this article, we will explore the key components of our course, reasons why you should choose it, the remarkable placement opportunities it offers, and the numerous benefits you can expect to gain from joining us.

Key Components of Our DevOps Course

Our DevOps course is meticulously designed to provide you with a comprehensive understanding of the DevOps methodology and equip you with the necessary tools and techniques to excel in the field. Here are the key components you can expect to delve into during the course:

1. Understanding DevOps Fundamentals

Learn the core principles and concepts of DevOps, including continuous integration, continuous delivery, infrastructure automation, and collaboration techniques. Gain insights into how DevOps practices can enhance software development efficiency and communication within cross-functional teams.

2. Mastering Cloud Computing Technologies

Immerse yourself in cloud computing platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform. Acquire hands-on experience in deploying applications, managing serverless architectures, and leveraging containerization technologies such as Docker and Kubernetes for scalable and efficient deployment.

3. Automating Infrastructure as Code

Discover the power of infrastructure automation through tools like Ansible, Terraform, and Puppet. Automate the provisioning, configuration, and management of infrastructure resources, enabling rapid scalability, agility, and error-free deployments.

4. Monitoring and Performance Optimization

Explore various monitoring and observability tools, including Elasticsearch, Grafana, and Prometheus, to ensure your applications are running smoothly and performing optimally. Learn how to diagnose and resolve performance bottlenecks, conduct efficient log analysis, and implement effective alerting mechanisms.

5. Embracing Continuous Integration and Delivery

Dive into the world of continuous integration and delivery (CI/CD) pipelines using popular tools like Jenkins, GitLab CI/CD, and CircleCI. Gain a deep understanding of how to automate build processes, run tests, and deploy applications seamlessly to achieve faster and more reliable software releases.

Reasons to Choose Our DevOps Course

There are numerous reasons why our DevOps course stands out from the rest. Here are some compelling factors that make it the ideal choice for aspiring software developers:

Expert Instructors: Learn from industry professionals who possess extensive experience in the field of DevOps and have a genuine passion for teaching. Benefit from their wealth of knowledge and practical insights gained from working on real-world projects.

Hands-On Approach: Our course emphasizes hands-on learning to ensure you develop the practical skills necessary to thrive in a DevOps environment. Through immersive lab sessions, you will have opportunities to apply the concepts learned and gain valuable experience working with industry-standard tools and technologies.

Tailored Curriculum: We understand that every learner is unique, so our curriculum is strategically designed to cater to individuals of varying proficiency levels. Whether you are a beginner or an experienced professional, our course will be tailored to suit your needs and help you achieve your desired goals.

Industry-Relevant Projects: Gain practical exposure to real-world scenarios by working on industry-relevant projects. Apply your newly acquired skills to solve complex problems and build innovative solutions that mirror the challenges faced by DevOps practitioners in the industry today.

Benefits of Joining Our DevOps Course

By joining our DevOps course, you open up a world of benefits that will enhance your software development career. Here are some notable advantages you can expect to gain:

Enhanced Employability: Acquire sought-after skills that are in high demand in the software development industry. Stand out from the crowd and increase your employability prospects by showcasing your proficiency in DevOps methodologies and tools.

Higher Earning Potential: With the rise of DevOps practices, organizations are willing to offer competitive remuneration packages to skilled professionals. By mastering DevOps through our course, you can significantly increase your earning potential in the tech industry.

Streamlined Software Development Processes: Gain the ability to streamline software development workflows by effectively integrating development and operations. With DevOps expertise, you will be capable of accelerating software deployment, reducing errors, and improving the overall efficiency of the development lifecycle.

Continuous Learning and Growth: DevOps is a rapidly evolving field, and by joining our course, you become a part of a community committed to continuous learning and growth. Stay updated with the latest industry trends, technologies, and best practices to ensure your skills remain relevant in an ever-changing tech landscape.

In conclusion, our unique DevOps course at ACTE institute offers unparalleled opportunities for software developers to level up their skills and propel their careers forward. With a comprehensive curriculum, remarkable placement opportunities, and a host of benefits, joining our course is undoubtedly a wise investment in your future success. Don't miss out on this incredible chance to become a proficient DevOps practitioner and unlock new horizons in the world of software development. Enroll today and embark on an exciting journey towards professional growth and achievement!

How to Build a YouTube Clone App: Tech Stack, Features & Cost Explained

Ever scrolled through YouTube and thought, “I could build this—but better”? You’re not alone. With the explosive growth of content creators and the non-stop demand for video content, building your own YouTube clone isn’t just a dream—it’s a solid business move. Whether you're targeting niche creators, regional content, or building the next big video sharing and streaming platform, there’s room in the market for innovation.

But before you dive into code or hire a dev team, let’s talk about the how. What tech stack powers a platform like YouTube? What features are must-haves? And how much does it actually cost to build something this ambitious?

In this post, we’re breaking it all down—no fluff, no filler. Just a clear roadmap to building a killer YouTube-style platform with insights from the clone app experts at Miracuves.

Core Features of a YouTube Clone App

Before picking servers or coding frameworks, you need a feature checklist. Here’s what every modern YouTube clone needs to include:

1. User Registration & Profiles

Users must be able to sign up via email or social logins. Profiles should allow for customization, channel creation, and subscriber tracking.

2. Video Upload & Encoding

Users upload video files that are auto-encoded to multiple resolutions (360p, 720p, 1080p). You’ll need a powerful media processor and cloud storage to handle this.

3. Streaming & Playback

The heart of any video platform. Adaptive bitrate streaming ensures smooth playback regardless of network speed.

4. Content Feed & Recommendations

Dynamic feeds based on trending videos, subscriptions, or AI-driven interests. The better your feed, the longer users stay.

5. Like, Comment, Share & Subscribe

Engagement drives reach. Build these features in early and make them seamless.

6. Search & Filters

Let users find content via keywords, categories, uploaders, and tags.

7. Monetization Features

Allow ads, tipping (like Super Chat), or paid content access. This is where the money lives.

8. Admin Dashboard

Moderation tools, user management, analytics, and content flagging are essential for long-term growth.
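To make the adaptive bitrate idea from the Streaming & Playback feature more concrete, here is a minimal sketch of how a player might pick a rendition. The resolutions match the encoding ladder mentioned above; the bitrates, the safety margin, and the function name are assumptions for illustration, not values from any particular player or protocol.

```python
# Hypothetical rendition ladder: (label, bandwidth needed in kbit/s).
# Resolutions come from the feature list above; the bitrates are assumed.
LADDER = [("360p", 800), ("720p", 2500), ("1080p", 5000)]


def pick_rendition(measured_kbps: float, safety: float = 0.8) -> str:
    """Return the highest rendition that fits inside a safety margin."""
    budget = measured_kbps * safety
    chosen = LADDER[0][0]  # always fall back to the lowest rendition
    for label, needed_kbps in LADDER:
        if needed_kbps <= budget:
            chosen = label
    return chosen


for bandwidth in (500, 3500, 10000):
    print(bandwidth, "kbit/s ->", pick_rendition(bandwidth))
```

A real HLS or DASH player re-runs a decision like this for every segment, which is why playback can step down in quality instead of stalling when the network slows.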

Optional Features:

Live Streaming

Playlists

Stories or Shorts

Video Premiere Countdown

Multilingual Subtitles

Media Suggestion: Feature comparison table between YouTube and your envisioned clone

Recommended Tech Stack

The tech behind YouTube is serious business, but you don’t need Google’s budget to launch a lean, high-performance YouTube clone. Here’s what we recommend at Miracuves:

Frontend (User Interface)

React.js or Vue.js – Fast rendering and reusable components

Tailwind CSS or Bootstrap – For modern, responsive UI

Next.js – Great for server-side rendering and SEO

Backend (Server-side)

Node.js with Express – Lightweight and scalable

Python/Django – Excellent for content recommendation algorithms

Laravel (PHP) – If you're going for quick setup and simplicity

Video Processing & Streaming

FFmpeg – Open-source video encoding and processing

HLS/DASH Protocols – For adaptive streaming

AWS MediaConvert or Mux – For advanced media workflows

Cloudflare Stream – Built-in CDN and encoding, fast global delivery

Storage & Database

Amazon S3 or Google Cloud Storage – For storing video content

MongoDB or PostgreSQL – For structured user and video data

Authentication & Security

JWT (JSON Web Tokens) for secure session management

OAuth 2.0 for social logins

Two-Factor Authentication (2FA) for creators and admins
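For a sense of what JWT-based sessions involve, the sketch below signs and verifies an HS256 token using only the Python standard library. The function names and claim values are hypothetical, and a production system would more likely rely on a maintained JWT library; this only illustrates the mechanics.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    # JWTs use URL-safe base64 without "=" padding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    # Restore the padding that was stripped during encoding.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str, secret: bytes) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(claims: dict, secret: bytes) -> str:
    """Build header.claims.signature for the given claims."""
    header = {"alg": "HS256", "typ": "JWT"}
    parts = [
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    ]
    signing_input = ".".join(parts)
    return signing_input + "." + _b64url_encode(_sign(signing_input, secret))


def verify_token(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the claims if the signature and expiry check out, else raise ValueError."""
    header_b64, claims_b64, signature_b64 = token.split(".")  # ValueError if malformed
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = _sign(header_b64 + "." + claims_b64, secret)
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("signature mismatch")
    claims = json.loads(_b64url_decode(claims_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims


# Hypothetical usage: a one-hour session token for a creator account.
secret = b"replace-with-a-long-random-secret"
token = issue_token({"sub": "creator-42", "exp": int(time.time()) + 3600}, secret)
print(verify_token(token, secret)["sub"])
```

Checking the `alg` header and comparing signatures with `hmac.compare_digest` are the two details that are easiest to get wrong in hand-rolled code.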

Analytics & Search

Elasticsearch – Fast, scalable search

Mixpanel / Google Analytics – Track video watch time, drop-offs, engagement

AI-based recommendation engine – Python + TensorFlow or third-party API

Media Suggestion: Architecture diagram showing tech stack components and flow

Development Timeline & Team Composition

Depending on complexity, here’s a typical development breakdown:

MVP Build: 3–4 months

Full Product with Monetization: 6–8 months

Team Needed:

1–2 Frontend Developers

1 Backend Developer

1 DevOps/Cloud Engineer

1 UI/UX Designer

1 QA Tester

1 Project Manager

Want to move faster? Miracuves offers pre-built YouTube clone app solutions that can cut launch time in half.

Estimated Cost Breakdown

Here’s a rough ballpark for custom development:

| Phase | Estimated Cost |
| --- | --- |
| UI/UX Design | $3,000 – $5,000 |
| Frontend Development | $6,000 – $10,000 |
| Backend Development | $8,000 – $12,000 |
| Video Processing Setup | $4,000 – $6,000 |
| QA & Testing | $2,000 – $4,000 |
| Cloud Infrastructure | $500 – $2,000/month (post-launch) |

Total Estimated Cost: $25,000 – $40,000+ depending on features and scale
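As a quick sanity check on those numbers, the short script below adds up the one-time phases and then layers on the monthly cloud bill. The figures are the ones listed above; the three-month horizon in the usage line is an assumption chosen only for illustration.

```python
# One-time phase estimates in USD (low, high), taken from the breakdown above.
PHASES = {
    "UI/UX Design": (3_000, 5_000),
    "Frontend Development": (6_000, 10_000),
    "Backend Development": (8_000, 12_000),
    "Video Processing Setup": (4_000, 6_000),
    "QA & Testing": (2_000, 4_000),
}
# Recurring cloud infrastructure cost per month after launch (low, high).
CLOUD_MONTHLY = (500, 2_000)


def one_time_total():
    """Sum the low and high ends of every one-time phase."""
    low = sum(low_end for low_end, _ in PHASES.values())
    high = sum(high_end for _, high_end in PHASES.values())
    return low, high


def total_with_cloud(months: int):
    """Add the recurring cloud cost for the given number of months."""
    low, high = one_time_total()
    return low + CLOUD_MONTHLY[0] * months, high + CLOUD_MONTHLY[1] * months


print(one_time_total())     # (23000, 37000)
print(total_with_cloud(3))  # (24500, 43000)
```

The listed phases alone come to $23,000 – $37,000, so the quoted total works as a rounded ballpark that leaves a little room for infrastructure and scope changes.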

Need it cheaper? Go the smart way with a customizable YouTube clone from Miracuves—less risk, faster time-to-market, and scalable from day one.

Final Thoughts

Building a YouTube clone isn’t just about copying features—it’s about creating a platform that gives creators and viewers something fresh, intuitive, and monetizable. With the right tech stack, must-have features, and a clear plan, you’re not just chasing YouTube—you’re building your own lane in the massive video sharing and streaming platform space.

At Miracuves, we help startups launch video platforms that are secure, scalable, and streaming-ready from day one. Want to build a revenue-generating video app that users love? Let’s talk.

FAQs

How much does it cost to build a YouTube clone?

Expect $25,000–$40,000 for a custom build. Ready-made solutions from Miracuves can reduce costs significantly.

Can I monetize my YouTube clone?

Absolutely. Use ads, subscriptions, tipping, pay-per-view, or affiliate integrations.

What’s the hardest part of building a video streaming app?

Video encoding, storage costs, and scaling playback across geographies. You’ll need a solid cloud setup.

Do I need to build everything from scratch?

No. Using a YouTube clone script from Miracuves saves time and still offers full customization.

How long does it take to launch?

A simple MVP may take 3–4 months. A full-feature platform can take 6–8 months. Miracuves can cut that timeline in half.

Is it legal to build a YouTube clone?

Yes, as long as you’re not copying YouTube’s trademark or copyrighted content. The tech and business model are fair game.

7 Benefits of Using Search Engine Tools for Data Analysis

We often think of search engines as tools for finding cat videos or answering trivia. But beneath the surface, they possess powerful capabilities that can significantly benefit data science workflows. Let's explore seven often-overlooked advantages of using search engine tools for data analysis.

1. Instant Data Exploration and Ingestion:

Imagine receiving a new, unfamiliar dataset. Instead of wrestling with complex data pipelines, you can load it directly into a search engine. These tools are remarkably flexible, handling a wide range of file formats (JSON, CSV, XML, PDF, images, etc.) and accommodating diverse data structures. This allows for rapid initial analysis, even with noisy or inconsistent data.

2. Efficient Training/Test/Validation Data Generation:

Search engines can act as a cost-effective and efficient data storage and retrieval system for deep learning projects. They excel at complex joins, row/column selection, and providing Google-like access to your data, experiments, and logs, making it easy to generate the necessary data splits for model training.

3. Streamlined Data Reduction and Feature Engineering:

Modern search engines come equipped with tools for transforming diverse data types (text, numeric, categorical, spatial) into vector spaces. They also provide features for weight construction, metadata capture, value imputation, and null handling, simplifying the feature engineering process. Furthermore, their support for natural language processing, including tokenization, stemming, and word embeddings, is invaluable for text-heavy datasets.

4. Powerful Search-Driven Analytics:

Search engines are not just about retrieval; they're also about analysis. They can perform real-time scoring, aggregation, and even regression analysis on retrieved data. This enables you to quickly extract meaningful insights, identify trends, and detect anomalies, moving beyond simple data retrieval.

5. Seamless Integration with Existing Tools:

Whether you prefer the command line, Jupyter notebooks, or languages like Python, R, or Scala, search engines seamlessly integrate with your existing data science toolkit. They can output data in various formats, including CSV and JSON, ensuring compatibility with your preferred workflows.

6. Rapid Prototyping and "Good Enough" Solutions:

Search engines simplify the implementation of algorithms like k-nearest neighbors, classifiers, and recommendation engines. While they may not always provide state-of-the-art results, they offer a quick and efficient way to build "good enough" solutions for prototyping and testing, especially at scale.

7. Versatile Data Storage and Handling:

Modern search engines, particularly those powered by Lucene (like Solr and Elasticsearch), are adept at handling key-value, columnar, and mixed data storage. This versatility allows them to efficiently manage diverse data types within a single platform, eliminating the need for multiple specialized tools.
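To illustrate the "good enough" k-nearest-neighbors idea from point 6, here is a brute-force sketch in plain Python. It is not how a Lucene-based engine indexes or searches vectors internally; the document IDs, vectors, and function names are made up for the example.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def knn(query, docs, k=2):
    """Return the ids of the k documents most similar to the query vector."""
    ranked = sorted(docs, key=lambda doc_id: cosine(query, docs[doc_id]), reverse=True)
    return ranked[:k]


# Hypothetical two-dimensional embeddings keyed by document id.
docs = {"a": [1.0, 0.0], "b": [0.9, 0.1], "c": [0.0, 1.0]}
print(knn([1.0, 0.0], docs, k=2))
```

A search engine performs the same kind of ranking but with indexing structures that avoid scoring every document, which is what makes the approach usable at scale.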

Elevate Your Data Science Skills with Xaltius Academy's Data Science and AI Program:

While search engine tools offer valuable benefits, they are just one component of a comprehensive data science skillset. Xaltius Academy's Data Science and AI program provides a robust foundation in data analysis, machine learning, and AI, empowering you to leverage these tools effectively and tackle complex data challenges.

Key benefits of the program:

Comprehensive Curriculum: Covers essential data science concepts, including data analysis, machine learning, and AI.

Hands-on Projects: Gain practical experience through real-world projects and case studies.

Expert Instruction: Learn from experienced data scientists and AI practitioners.

Focus on Applied Skills: Develop the skills needed to apply data science and AI techniques to solve real-world problems.

Career Support: Receive guidance and resources to help you launch your career in data science and AI.

Conclusion:

Search engine tools offer a surprising array of benefits for data science, from rapid data exploration to efficient model development. By incorporating these tools into your workflow and complementing them with a strong foundation in data science principles, you can unlock new levels of efficiency and insight.

Understanding the Role of Observability in Microservices Environments

In a world reliant on microservices, maintaining distributed system health and performance is critical. Observability, rooted in systems control theory, ensures smooth operations by providing deeper insights than traditional monitoring, enabling teams to accurately identify, diagnose, and resolve issues.

What Sets Observability Apart from Monitoring?

Monitoring tracks predefined metrics and alerts for known issues, while observability focuses on understanding the "why" behind them. It enables developers to analyze telemetry data—logs, metrics, and traces—to uncover and resolve unknown problems. In complex microservices, where failures can cascade unpredictably, observability helps trace issues across services and provides actionable insights to pinpoint root causes efficiently.

Core Pillars of Observability

Logs:

Logs provide detailed, event-specific information that helps developers understand what happened within a system. Structured and centralized logging ensures that teams can easily search and analyze log data from multiple microservices.

Metrics:

Metrics quantify system behavior over time, such as request rates, error counts, or CPU usage. They provide a high-level view of system health, enabling teams to detect anomalies at a glance.

Traces:

Traces map the lifecycle of a request as it traverses through different microservices. This is particularly valuable in identifying bottlenecks or inefficiencies in complex service architectures.
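As a rough illustration of how logs and traces fit together, the sketch below emits structured JSON log lines that carry a shared trace ID across two services. The service names, field names, and helper functions are assumptions; real systems typically use a tracing library rather than passing IDs by hand.

```python
import io
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        entry = {
            "level": record.levelname,
            "service": getattr(record, "service", "unknown"),
            "trace_id": getattr(record, "trace_id", None),
            "message": record.getMessage(),
        }
        return json.dumps(entry)


def build_logger(stream, name="observability-demo"):
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


def handle_request(logger, service, trace_id=None):
    """Log a request, reusing the caller's trace id or starting a new one."""
    trace_id = trace_id or uuid.uuid4().hex
    logger.info("request received", extra={"service": service, "trace_id": trace_id})
    return trace_id


# Hypothetical flow: "checkout" calls "payments" and forwards its trace id.
buffer = io.StringIO()
demo_logger = build_logger(buffer)
trace = handle_request(demo_logger, "checkout")
handle_request(demo_logger, "payments", trace_id=trace)
print(buffer.getvalue())
```

Because both lines share a `trace_id`, a centralized log store can reassemble the path of a single request across services.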

The Importance of Observability in Microservices

Microservices architectures provide scalability and flexibility but also add complexity. With services distributed across environments, traditional monitoring tools often fall short in effective troubleshooting. Observability helps to:

Reduce Mean Time to Resolution (MTTR): By quickly identifying the root cause of an issue, teams can resolve it faster, minimizing downtime.

Improve System Reliability: Proactive insights enable teams to address potential failures before they impact users.

Enhance Development Velocity: Developers can identify inefficiencies and optimize code without waiting for user feedback or production incidents.

Key Tools for Observability

A robust observability strategy requires the right set of tools. Some of the most popular ones include:

Prometheus: A leading open-source solution for metrics collection and alerting.

Grafana: An analytics and visualization platform that integrates seamlessly with Prometheus.

Jaeger: A tool for distributed tracing, designed to help map dependencies and identify bottlenecks.

Elastic Stack: Combines Elasticsearch, Logstash, and Kibana for centralized logging and analysis.

Best Practices for Implementing Observability

Adopt a Holistic Approach: Ensure that logs, metrics, and traces are integrated into a single observability pipeline for a unified view of system health.

Automate Wherever Possible: Use tools to automate data collection, alerting, and incident responses to save time and reduce human error.

Focus on High-Value Metrics: Avoid metric overload by prioritizing KPIs that align with business objectives, such as latency, uptime, and error rates.
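Two of the high-value metrics named above, error rate and latency, can be computed from raw request data in a few lines. This is a sketch under simple assumptions: errors are counted as HTTP 5xx responses, and the percentile uses the nearest-rank method; the sample values are invented.

```python
import math


def error_rate(status_codes):
    """Fraction of requests that ended in a server error (HTTP 5xx)."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if code >= 500)
    return errors / len(status_codes)


def percentile(values, pct):
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical samples: status codes and latencies in milliseconds.
codes = [200, 200, 500, 503]
latencies_ms = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
print(error_rate(codes), percentile(latencies_ms, 95))
```

Tracking a tail percentile rather than the average keeps slow outliers visible, which is usually what users notice first.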

Achieving Efficiency with Observability in Distributed Systems

Observability is crucial for maintaining the health and performance of microservices architectures. It provides detailed insights into system behavior, enabling teams to quickly detect, diagnose, and resolve issues. Microservices training equips professionals with the skills to implement observability effectively, ensuring robust, scalable systems. Ascendient Learning offers expert training programs to help teams master microservices for success in modern development.

For more information visit: https://www.ascendientlearning.com/it-training/topics/microservices

Unlock Elasticsearch Speed: Expert Query Tuning Techniques for Superior Performance

Introduction

Optimizing Elasticsearch performance with query tuning is a crucial aspect of building a scalable and efficient search engine. Elasticsearch is a distributed, RESTful search and analytics engine that allows developers to store, search, and analyze large volumes of data. However, as the volume and complexity of data increase, the performance of Elasticsearch queries can become a…

Custom AI Development Services - Grow Your Business Potential

AI Development Company

As a reputable Artificial Intelligence Development Company, Bizvertex provides creative AI Development Solutions for organizations using our experience in AI app development. Our expert AI developers provide customized solutions to meet the specific needs of various sectors, such as intelligent chatbots, predictive analytics, and machine learning algorithms. Our custom AI development services are intended to empower your organization and produce meaningful results as it embarks on its digital transformation path.

AI Development Services That We Offer

Our AI development services unlock the potential of vast amounts of data to drive tangible business results. As a well-established AI solution provider, we specialize in leveraging the power of AI to transform raw data into actionable insights, paving the way for operational efficiency and enhanced decision-making. Here are the AI services through which we turn your vision into reality.

Generative AI

Smart AI Assistants and Chatbots

AI/ML Strategy Consulting

AI Chatbot Development

PoC and MVP Development

Recommendation Engines

AI Security

AI Design

AIOps

AI-as-a-Service

Automation Solutions

Predictive Modeling

Data Science Consulting

Unlock Strategic Growth for Your Business With Our AI Know-how

Machine Learning

We use machine learning methods to enable sophisticated data analysis and prediction capabilities. This enables us to create solutions such as recommendation engines and predictive maintenance tools.

Deep Learning

We use deep learning techniques to develop effective solutions for complex data analysis tasks like sentiment analysis and language translation.

Predictive Analytics

We use statistical algorithms and machine learning approaches to create solutions that predict future trends and behaviours, allowing organisations to make informed strategic decisions.

Natural Language Processing

Our NLP knowledge enables us to create sentiment analysis, language translation, and other systems that efficiently process and analyse human language data.

Data Science

Bizvertex's data science skills include data cleansing, analysis, and interpretation, resulting in significant insights that drive informed decision-making and corporate strategy.

Computer Vision

Our computer vision expertise enables the extraction, analysis, and comprehension of visual information from photos or videos, which powers a wide range of applications across industries.

Industries Where Our AI Development Services Excel

Healthcare

Banking and Finance

Restaurant

eCommerce

Supply Chain and Logistics

Insurance

Social Networking

Games and Sports

Travel

Aviation

Real Estate

Education

On-Demand

Entertainment

Government

Agriculture

Manufacturing

Automotive

AI Models We Have Expertise In

GPT-4o

Llama-3

PaLM-2

Claude

DALL·E 2

Whisper

Stable Diffusion

Phi-2

Google Gemini

Vicuna

Mistral

Bloom-560m

Custom Artificial Intelligence Solutions That We Offer

We specialise in designing innovative artificial intelligence (AI) solutions that are tailored to your specific business objectives. We provide the following solutions.

Personalization

Enhanced Security

Optimized Operations

Decision Support Systems

Product Development

Tech Stack That We Use for AI Development

Languages

Scala

Java

Golang

Python

C++

Mobility

Android

iOS

Cross Platform

Python

Windows

Frameworks

Node JS

Angular JS

Vue.JS

React JS

Cloud

AWS

Microsoft Azure

Google Cloud

ThingWorx

C++

SDK

Kotlin

Ionic

Xamarin

React Native

Hardware

Raspberry Pi

Arduino

BeagleBone

OCR

Tesseract

TensorFlow

Copyfish

ABBYY Finereader

OCR.Space

Go

Data

Apache Hadoop

Apache Kafka

OpenTSDB

Elasticsearch

NLP

Wit.ai

Dialogflow

Amazon Lex

Luis

Watson Assistant

Why Choose Bizvertex for AI Development?

Bizvertex is a leading AI development company that provides unique AI solutions to help businesses increase their performance and efficiency by automating business processes. We provide future-proof AI solutions and fine-tuned AI models that are tailored to your specific business objectives, allowing you to accelerate AI adoption while lowering ongoing tuning expenses.

As a leading AI solutions provider, our major objective is to fulfill our customers' business visions through cutting-edge AI services tailored to a variety of business specializations. Hire AI developers from Bizvertex, which provides turnkey AI solutions and better ideas for your business challenges.

#AI Development#AI Development Services#Custom AI Development Services#AI Development Company#AI Development Service Provider#AI Development Solutions

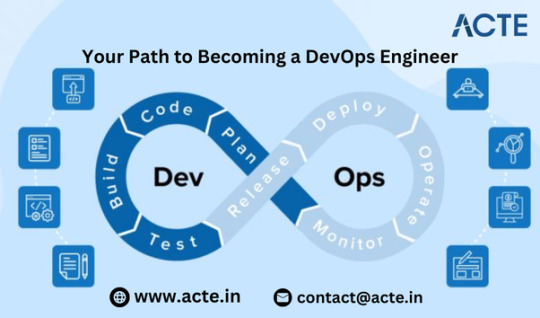

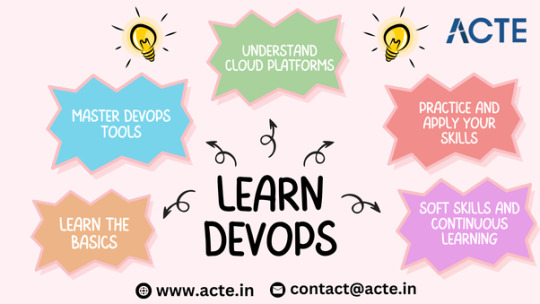

Your Path to Becoming a DevOps Engineer

Thinking about a career as a DevOps engineer? Great choice! DevOps engineers are pivotal in the tech world, automating processes and ensuring smooth collaboration between development and operations teams. Here’s a comprehensive guide to kick-starting your journey with the best DevOps course.

Grasping the Concept of DevOps

Before you dive in, it’s essential to understand what DevOps entails. DevOps merges "Development" and "Operations" to boost collaboration and efficiency by automating infrastructure, workflows, and continuously monitoring application performance.

Step 1: Build a Strong Foundation

Start with the Essentials:

Programming and Scripting: Learn languages like Python, Ruby, or Java. Master scripting languages such as Bash and PowerShell for automation tasks.

Linux/Unix Basics: Many DevOps tools operate on Linux. Get comfortable with Linux command-line basics and system administration.

Grasp Key Concepts:

Version Control: Familiarize yourself with Git to track code changes and collaborate effectively.

Networking Basics: Understand networking principles, including TCP/IP, DNS, and HTTP/HTTPS.

If you want to learn more about DevOps, consider enrolling in a DevOps online course. These courses often offer certifications, mentorship, and job placement opportunities to support your learning journey.

Step 2: Get Proficient with DevOps Tools

Automation Tools:

Jenkins: Learn to set up and manage continuous integration/continuous deployment (CI/CD) pipelines.

Docker: Grasp containerization and how Docker packages applications with their dependencies.

Configuration Management:

Ansible, Puppet, and Chef: Use these tools to automate the setup and management of servers and environments.

Infrastructure as Code (IaC):

Terraform: Master Terraform for managing and provisioning infrastructure via code.

Monitoring and Logging:

Prometheus and Grafana: Get acquainted with monitoring tools to track system performance.

ELK Stack (Elasticsearch, Logstash, Kibana): Learn to set up and visualize log data.

Consider enrolling in a DevOps online course to delve deeper into these tools. These courses often provide certifications, mentorship, and job placement opportunities to support your learning journey.

Step 3: Master Cloud Platforms

Cloud Services:

AWS, Azure, and Google Cloud: Gain expertise in one or more major cloud providers. Learn about their services, such as compute, storage, databases, and networking.

Cloud Management:

Kubernetes: Understand how to manage containerized applications with Kubernetes.

Step 4: Apply Your Skills Practically

Hands-On Projects:

Personal Projects: Develop your own projects to practice setting up CI/CD pipelines, automating tasks, and deploying applications.

Open Source Contributions: Engage with open-source projects to gain real-world experience and collaborate with other developers.

Certifications:

Earn Certifications: Consider certifications like AWS Certified DevOps Engineer, Google Cloud Professional DevOps Engineer, or Azure DevOps Engineer Expert to validate your skills and enhance your resume.

Step 5: Develop Soft Skills and Commit to Continuous Learning

Collaboration:

Communication: As a bridge between development and operations teams, effective communication is vital.

Teamwork: Work efficiently within a team, understanding and accommodating diverse viewpoints and expertise.

Adaptability:

Stay Current: Technology evolves rapidly. Keep learning and stay updated with the latest trends and tools in the DevOps field.

Problem-Solving: Cultivate strong analytical skills to troubleshoot and resolve issues efficiently.

Conclusion

Begin Your Journey Today: Becoming a DevOps engineer requires a blend of technical skills, hands-on experience, and continuous learning. By building a strong foundation, mastering essential tools, gaining cloud expertise, and applying your skills through projects and certifications, you can pave your way to a successful DevOps career. Persistence and a passion for technology will be your best allies on this journey.

Mastering OpenShift Clusters: A Comprehensive Guide for Streamlined Containerized Application Management

As organizations increasingly adopt containerization to enhance their application development and deployment processes, mastering tools like OpenShift becomes crucial. OpenShift, a Kubernetes-based platform, provides powerful capabilities for managing containerized applications. In this blog, we'll walk you through essential steps and best practices to effectively manage OpenShift clusters.

Introduction to OpenShift

OpenShift is a robust container application platform developed by Red Hat. It leverages Kubernetes for orchestration and adds developer-centric and enterprise-ready features. Understanding OpenShift’s architecture, including its components like the master node, worker nodes, and its integrated CI/CD pipeline, is foundational to mastering this platform.

Step-by-Step Tutorial

1. Setting Up Your OpenShift Cluster

Step 1: Prerequisites

Ensure you have a Red Hat OpenShift subscription.

Install oc, the OpenShift CLI tool.

Prepare your infrastructure (on-premise servers, cloud instances, etc.).

Step 2: Install OpenShift

Use the OpenShift Installer to deploy the cluster:

    openshift-install create cluster --dir=mycluster

Step 3: Configure Access

Log in to your cluster using the oc CLI:

    oc login -u kubeadmin -p $(cat mycluster/auth/kubeadmin-password) https://api.mycluster.example.com:6443

2. Deploying Applications on OpenShift

Step 1: Create a New Project

A project in OpenShift is similar to a namespace in Kubernetes:

    oc new-project myproject

Step 2: Deploy an Application

Deploy a sample application, such as an Nginx server:

    oc new-app nginx

Step 3: Expose the Application

Create a route to expose the application to external traffic:

    oc expose svc/nginx

3. Managing Resources and Scaling

Step 1: Resource Quotas and Limits

Define resource quotas to control resource consumption within a project. Save the following as quota.yaml:

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: mem-cpu-quota
    spec:
      hard:
        requests.cpu: "4"
        requests.memory: 8Gi

Apply the quota:

    oc create -f quota.yaml
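To get a feel for what a quota like this enforces, here is a minimal Python sketch of the arithmetic. It is only an illustration, not how OpenShift implements quota admission: the helper names parse_quantity and fits_quota are hypothetical, the usage figures are made up, and only a handful of quantity suffixes are handled.

```python
from decimal import Decimal

# A few of the suffixes used in Kubernetes-style quantities (not exhaustive).
_SUFFIXES = {
    "Ki": Decimal(2) ** 10,
    "Mi": Decimal(2) ** 20,
    "Gi": Decimal(2) ** 30,
    "m": Decimal("0.001"),  # millicores, e.g. "500m" = half a CPU
}


def parse_quantity(text: str) -> Decimal:
    """Convert a quantity such as "500m", "4" or "8Gi" to a plain number."""
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if text.endswith(suffix):
            return Decimal(text[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(text)


def fits_quota(hard: dict, used: dict, new_request: dict) -> bool:
    """Return True if current usage plus the new request stays within every hard limit."""
    for key, limit in hard.items():
        total = parse_quantity(used.get(key, "0")) + parse_quantity(new_request.get(key, "0"))
        if total > parse_quantity(limit):
            return False
    return True


# Hard limits taken from the quota above; the usage numbers are invented.
hard = {"requests.cpu": "4", "requests.memory": "8Gi"}
used = {"requests.cpu": "3500m", "requests.memory": "6Gi"}

print(fits_quota(hard, used, {"requests.cpu": "500m", "requests.memory": "1Gi"}))  # True
print(fits_quota(hard, used, {"requests.cpu": "1", "requests.memory": "1Gi"}))     # False
```

The second request is rejected because 3.5 CPUs already in use plus 1 more would exceed the limit of 4, even though memory would still fit.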

Step 2: Scaling Applications

Scale your deployment to handle increased load:

    oc scale deployment/nginx --replicas=3

Expert Best Practices

1. Security and Compliance

Role-Based Access Control (RBAC): Define roles and bind them to users or groups to enforce the principle of least privilege. Save the following as role.yaml:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: myproject
      name: developer
    rules:
    - apiGroups: [""]
      resources: ["pods", "services"]
      verbs: ["get", "list", "watch", "create", "update", "delete"]

Create the role and bind it to a user (replace <user-email> with the user's login):

    oc create -f role.yaml
    oc create rolebinding developer-binding --role=developer --user=<user-email> -n myproject
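How such a role is evaluated can be sketched in a few lines of Python. This is a simplified model and not the Kubernetes authorizer itself: the function names rule_matches and is_allowed are hypothetical, and details such as resourceNames and subresources are ignored. The key idea it shows is that RBAC rules are purely additive, so a request is allowed only if at least one rule covers its API group, resource, and verb.

```python
def rule_matches(rule: dict, api_group: str, resource: str, verb: str) -> bool:
    """Check whether one rule covers the requested group, resource and verb."""

    def covers(allowed: list, value: str) -> bool:
        # "*" is the wildcard that matches any value.
        return "*" in allowed or value in allowed

    return (
        covers(rule.get("apiGroups", []), api_group)
        and covers(rule.get("resources", []), resource)
        and covers(rule.get("verbs", []), verb)
    )


def is_allowed(rules: list, api_group: str, resource: str, verb: str) -> bool:
    """A request is allowed if any rule matches; there are no deny rules."""
    return any(rule_matches(rule, api_group, resource, verb) for rule in rules)


# The same rule as in the developer role above ("" is the core API group).
developer_rules = [
    {
        "apiGroups": [""],
        "resources": ["pods", "services"],
        "verbs": ["get", "list", "watch", "create", "update", "delete"],
    }
]

print(is_allowed(developer_rules, "", "pods", "delete"))          # True
print(is_allowed(developer_rules, "", "secrets", "get"))          # False
print(is_allowed(developer_rules, "apps", "deployments", "get"))  # False
```

Anything not explicitly listed, such as secrets or deployments here, stays off limits, which is what least privilege looks like in practice.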

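Network Policies: Implement network policies to control traffic flow between pods. Save the following as networkpolicy.yaml:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-same-namespace
      namespace: myproject
    spec:
      podSelector:
        matchLabels: {}
      policyTypes:
      - Ingress
      - Egress
      ingress:
      - from:
        - podSelector: {}

Apply the policy:

    oc create -f networkpolicy.yaml

Note that because Egress is listed under policyTypes without any egress rules, this policy also blocks all outbound traffic from the selected pods. Remove Egress from policyTypes if you only want to restrict inbound traffic.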
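The selector semantics in this policy are easy to misread, so here is a small Python sketch of them. It is a toy model under simplifying assumptions (only matchLabels, no namespaceSelector or ipBlock), and the function names selector_matches and ingress_allowed are hypothetical.

```python
def selector_matches(match_labels: dict, pod_labels: dict) -> bool:
    """An empty selector matches every pod; otherwise every key/value pair must match."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


def ingress_allowed(policy_namespace: str, from_selector: dict,
                    source_namespace: str, source_labels: dict) -> bool:
    """A bare podSelector in a `from` clause only considers pods in the policy's own namespace."""
    return source_namespace == policy_namespace and selector_matches(from_selector, source_labels)


# `from: - podSelector: {}` in namespace myproject, as in the policy above.
print(ingress_allowed("myproject", {}, "myproject", {"app": "nginx"}))  # True
print(ingress_allowed("myproject", {}, "other", {"app": "nginx"}))      # False

# A stricter variant that would only admit pods labeled role=frontend.
print(ingress_allowed("myproject", {"role": "frontend"}, "myproject", {"app": "nginx"}))  # False
```

In other words, the empty selector is what turns the rule into "any pod, but only from this project".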

2. Monitoring and Logging

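Prometheus and Grafana: Use Prometheus for monitoring and Grafana for visualizing metrics. OpenShift 4 already ships a Prometheus-based monitoring stack in the openshift-monitoring project. If you want to deploy the community kube-prometheus stack instead, clone the repository and apply its manifests (oc apply cannot read a directory from a raw GitHub URL):

    oc new-project monitoring
    oc adm policy add-cluster-role-to-user cluster-monitoring-view -z default -n monitoring
    git clone https://github.com/prometheus-operator/kube-prometheus.git
    cd kube-prometheus
    oc apply --server-side -f manifests/setup
    oc apply -f manifests/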

ELK Stack: Deploy Elasticsearch, Logstash, and Kibana for centralized logging.

    oc new-project logging
    oc new-app elasticsearch
    oc new-app logstash
    oc new-app kibana

3. Automation and CI/CD

Jenkins Pipeline: Integrate Jenkins for CI/CD to automate the build, test, and deployment processes.

    oc new-app jenkins-ephemeral
    oc create -f jenkins-pipeline.yaml

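OpenShift Pipelines: Use OpenShift Pipelines, which is based on Tekton, for advanced CI/CD capabilities. On OpenShift it is normally installed through the Red Hat OpenShift Pipelines Operator from OperatorHub. Upstream Tekton Pipelines can be installed from its published release manifest:

    oc apply -f https://storage.googleapis.com/tekton-releases/pipeline/latest/release.yaml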

Conclusion

Mastering OpenShift clusters involves understanding the platform's architecture, deploying and managing applications, and implementing best practices for security, monitoring, and automation. By following this comprehensive guide, you'll be well on your way to efficiently managing containerized applications with OpenShift.

For more details click www.qcsdclabs.com

#redhatcourses#information technology#docker#container#linux#kubernetes#containerorchestration#containersecurity#dockerswarm#aws

0 notes

Text

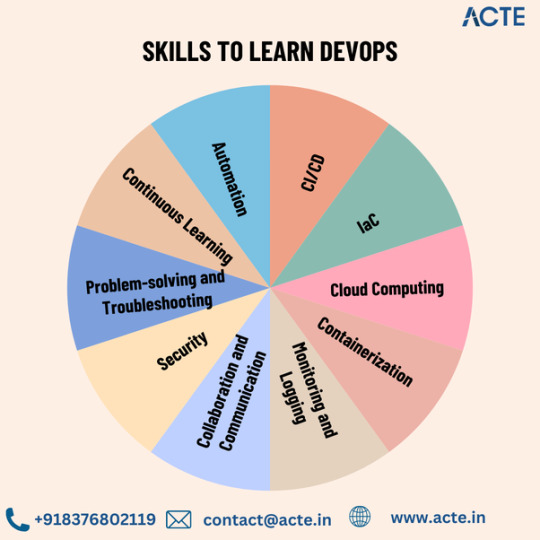

Unlocking the Key Skills for Becoming a DevOps Expert

In the ever-evolving landscape of software development and IT operations, the role of a DevOps expert has become increasingly crucial. DevOps, a combination of development and operations, focuses on fostering collaboration, communication, and integration between software developers and IT operations professionals. To excel in this dynamic field, acquiring specific skills is essential. In this blog post, we will explore the key skills that can unlock the path to becoming a DevOps expert.

Explore top-notch DevOps training in Hyderabad to enhance your skills and accelerate your career. Gain hands-on experience with cutting-edge tools and methodologies, guided by industry experts. #ITTraining #DevOpsSkills #CareerBoost #HandsOnLearning

1. Automation: DevOps heavily relies on automation to streamline processes and eliminate manual tasks. It is crucial to have a strong understanding of automation tools like Jenkins, Ansible, or Puppet to automate build, deployment, and testing processes.

2. Continuous Integration and Continuous Deployment (CI/CD): CI/CD practices are at the core of DevOps. Understanding how to implement, configure, and manage CI/CD pipelines using tools like Git, Jenkins, and Docker is essential for efficient software delivery.

3. Infrastructure as Code (IaC): IaC involves managing infrastructure resources programmatically using tools like Terraform or CloudFormation. It is important to learn how to define and provision infrastructure resources using code, enabling scalability, consistency, and version control.

4. Cloud Computing: Familiarity with cloud platforms like AWS, Azure, or GCP is essential for modern DevOps practices. Understanding cloud concepts, provisioning resources, and managing cloud services will enable efficient infrastructure management and scalability.

5. Containerization: Containerization technologies like Docker and Kubernetes have become central to DevOps practices. Learning how to build, manage, and orchestrate containers will allow for easy deployment, scaling, and portability of applications.

Elevate your tech journey with DevOps online courses – unlocking doors to innovation, one lesson at a time. 🚀🖥️ #DevOpsMastery #TechInnovation #OnlineLearningAdventure

6. Monitoring and Logging: DevOps emphasizes continuous monitoring and feedback. Familiarity with monitoring tools like Prometheus, Grafana, or ELK Stack (Elasticsearch, Logstash, Kibana) is important to track application performance, detect issues, and analyze logs.

7. Collaboration and Communication: DevOps requires strong collaboration and communication skills. Being able to work effectively in cross-functional teams, adopt Agile methodologies, and effectively communicate with developers, operations, and stakeholders is crucial for successful DevOps implementation.

8. Security: Understanding security principles and best practices is essential for DevOps. Knowledge of secure coding practices, vulnerability scanning, and implementing security controls in CI/CD pipelines will ensure the integrity and safety of applications.

9. Problem-solving and Troubleshooting: DevOps professionals need to be skilled in problem-solving and troubleshooting. The ability to identify, analyze, and resolve issues efficiently is crucial for maintaining smooth operations and minimizing downtime.

10. Continuous Learning: Lastly, DevOps is a rapidly evolving field. Being open to continuous learning and staying updated with new tools, technologies, and industry trends is essential for a successful career in DevOps.

Becoming a DevOps expert requires a multifaceted skill set that goes beyond traditional development or operations roles. By mastering automation, containerization, CI/CD, collaboration, monitoring, and security, and by maintaining a commitment to continuous learning, individuals can unlock the key skills needed to thrive in the dynamic world of DevOps. As organizations increasingly embrace DevOps practices, those with these essential skills will play a pivotal role in driving innovation and ensuring the success of software development initiatives.

0 notes

Text

NextBrick's Elasticsearch Consulting: Elevate Your Search and Data Capabilities

In today's data-driven world, Elasticsearch is a critical tool for businesses of all sizes. This powerful open-source search and analytics engine can help you explore, analyze, and visualize your data in ways that were never before possible. However, with its vast capabilities and complex architecture, Elasticsearch can be daunting to implement and manage.

That's where NextBrick's Elasticsearch consulting services come in. Our team of experienced consultants has a deep understanding of Elasticsearch and its capabilities. We can help you with everything from designing and implementing efficient data models to optimizing search queries and troubleshooting performance bottlenecks.

Why Choose NextBrick for Elasticsearch Consulting?

Tailored solutions: We understand that every business is unique. That's why we take the time to understand your specific needs and goals before developing a tailored Elasticsearch solution.

Performance optimization: Our consultants are experts in Elasticsearch performance tuning. We can help you ensure that your Elasticsearch cluster is running smoothly and efficiently, even as your data volume and traffic grow.

Expert support: We offer a wide range of Elasticsearch support services, including 24/7 monitoring, issue resolution, and ongoing performance optimization.

How NextBrick's Elasticsearch Consulting Can Help You

Here are just a few ways that NextBrick's Elasticsearch consulting services can help you:

Improve search relevance: Our consultants can help you design and implement efficient data models and search queries that deliver more relevant results to your users.

Optimize performance: We can help you identify and address performance bottlenecks, ensuring that your Elasticsearch cluster is running smoothly and efficiently.

Troubleshoot issues: Our consultants can help you troubleshoot any Elasticsearch issues that you may encounter, including indexing errors, query performance problems, and security vulnerabilities.

Migrate from other search solutions: If you're currently using a different search solution, we can help you migrate to Elasticsearch with minimal disruption to your business.

Develop custom Elasticsearch applications: Our consultants can help you develop custom Elasticsearch applications to meet your specific needs, such as product search, real-time analytics, and log management.

Elevate Your Search and Data Capabilities with NextBrick

If you're serious about using Elasticsearch to elevate your search and data capabilities, then NextBrick is the partner you need. Our team of experienced consultants can help you with every aspect of Elasticsearch implementation and management, from design to deployment to support.

Contact us today to learn more about our Elasticsearch consulting services and how we can help you achieve your business goals.

0 notes

Text

How do I start learning DevOps?

A Beginner’s Guide to Entering the World of DevOps

In today’s fast-paced tech world, the lines between development and operations are becoming increasingly blurred. Companies are constantly seeking professionals who can bridge the gap between writing code and deploying it smoothly into production. This is where DevOps comes into play. But if you’re new to it, you might wonder: “How do I start learning DevOps?”

This guide is designed for absolute beginners who want to enter the DevOps space with clarity, structure, and confidence. No fluff—just a clear roadmap to get you started.

What is DevOps, Really?

Before jumping into tools and techniques, it’s important to understand what DevOps actually is.

DevOps is not a tool or a programming language. It’s a culture, a mindset, and a set of practices that aim to bridge the gap between software development (Dev) and IT operations (Ops). The goal is to enable faster delivery of software with fewer bugs, more reliability, and continuous improvement.

In short, DevOps is about collaboration, automation, and continuous feedback.

Step 1: Understand the Basics of Software Development and Operations

Before learning DevOps itself, you need a foundational understanding of the environments DevOps operates in.

Learn the Basics of:

Operating Systems: Start with Linux. It’s the backbone of most DevOps tools.

Networking Concepts: Understand IPs, DNS, ports, firewalls, and how servers communicate.

Programming/Scripting: Python, Bash, or even simple shell scripting will go a long way.

If you're a complete beginner, you can spend a month brushing up on these essentials. You don’t have to be an expert—but you should feel comfortable navigating a terminal and writing simple scripts.

Step 2: Learn Version Control Systems (Git)

Git is the first hands-on DevOps tool you should learn. It's used by developers and operations teams alike to manage code changes, collaborate on projects, and track revisions.

Key Concepts to Learn:

Git repositories

Branching and merging

GitHub/GitLab/Bitbucket basics

Pull requests and code reviews

There are plenty of interactive Git tutorials available online where you can experiment in a sandbox environment.

Step 3: Dive Into Continuous Integration/Continuous Deployment (CI/CD)

Once you’ve learned Git, it’s time to explore CI/CD, the heart of DevOps automation.

Start with tools like:

Jenkins (most popular for beginners)

GitHub Actions

GitLab CI/CD

Understand how code can automatically be tested, built, and deployed after each commit. Even a simple pipeline (e.g., compiling code → running tests → deploying to a test server) will give you real-world context.
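If it helps to see the idea before touching a real CI tool, the simple pipeline described above can be modeled in a few lines of Python. This is only a toy sketch: run_pipeline and the three stage functions are hypothetical stand-ins, and a real pipeline in Jenkins, GitHub Actions, or GitLab CI/CD is defined in that tool's own configuration format.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure, like a CI server does."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later stages (such as deploy) never run after a failure
    return log


# Stand-in stages; real ones would call a compiler, a test runner, a deploy script.
def compile_code() -> bool:
    return True


def run_tests() -> bool:
    return 2 + 2 == 4


def failing_tests() -> bool:
    return 2 + 2 == 5


def deploy_to_test_server() -> bool:
    return True


good = [("build", compile_code), ("test", run_tests), ("deploy", deploy_to_test_server)]
bad = [("build", compile_code), ("test", failing_tests), ("deploy", deploy_to_test_server)]

print(run_pipeline(good))  # ['build: ok', 'test: ok', 'deploy: ok']
print(run_pipeline(bad))   # ['build: ok', 'test: FAILED']
```

The important behavior is the early stop: a broken test means the deploy stage is never reached, which is exactly the safety net CI/CD gives you.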

Step 4: Learn Infrastructure as Code (IaC)

DevOps isn’t just about pushing code—it’s also about managing infrastructure through code.

Popular IaC Tools:

Terraform: Used to provision servers and networks on cloud providers.

Ansible: Used for configuration management and automation.

These tools allow you to automate server provisioning, install software, and manage configuration using code, rather than manual setup.
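The core idea behind these tools is declarative: you describe the state you want, and the tool works out what has to change. Here is a minimal Python sketch of that comparison. It is not how Terraform or Ansible are implemented; the plan function and the resource names are hypothetical and only illustrate the concept.

```python
def plan(desired: dict, current: dict) -> dict:
    """Compare desired and current state and list what to create, update and delete."""
    return {
        "create": sorted(desired.keys() - current.keys()),
        "update": sorted(
            name for name in desired.keys() & current.keys()
            if desired[name] != current[name]
        ),
        "delete": sorted(current.keys() - desired.keys()),
    }


# Desired state, as it might be written in code and kept in Git.
desired = {
    "web-1": {"size": "small"},
    "web-2": {"size": "small"},
    "db-1": {"size": "large"},
}

# What currently exists in the (imaginary) cloud account.
current = {
    "web-1": {"size": "small"},
    "db-1": {"size": "medium"},
    "old-cache": {"size": "small"},
}

print(plan(desired, current))
# {'create': ['web-2'], 'update': ['db-1'], 'delete': ['old-cache']}
```

Running the same plan again after the changes are applied would produce three empty lists, which is why this style of tooling is safe to run repeatedly.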

Step 5: Get Comfortable With Containers and Orchestration

Containers are a huge part of modern DevOps workflows.

Start With:

Docker: Learn how to containerize applications and run them consistently on any environment.

Docker Compose: Manage multi-container setups.

Kubernetes: When you’re comfortable with Docker, move on to Kubernetes, which is used to manage and scale containerized applications.

Step 6: Learn About Monitoring and Logging

DevOps is not just about automation; it’s also about ensuring that systems are reliable and observable.

Get Familiar With:

Prometheus + Grafana: For monitoring system metrics and visualizing data.

ELK Stack (Elasticsearch, Logstash, Kibana): For centralized logging and log analysis.

Step 7: Practice With Real Projects

Theory and tutorials are great—but DevOps is best learned by doing.

Practical Ideas:

Build a CI/CD pipeline for a sample application

Containerize a web app with Docker

Deploy your app to AWS or any cloud provider using Terraform

Monitor your app’s health using Grafana

Don’t aim for perfection; aim for experience. The more problems you face, the better you'll get.

Step 8: Learn About Cloud Platforms

Almost all DevOps jobs require familiarity with cloud services.

Popular Cloud Providers:

AWS (most recommended for beginners)

Google Cloud Platform

Microsoft Azure

You don’t need to learn every service—just focus on compute (like EC2), storage (S3), networking (VPC), and managed Kubernetes (EKS, GKE).

Final Thoughts: DevOps Is a Journey, Not a Sprint

DevOps isn’t a destination—it’s an evolving practice that gets better the more you experiment and adapt. Whether you're from a development background, system administration, or starting from scratch, you can learn DevOps by taking consistent steps.

Don’t worry about mastering every tool at once. Instead, focus on building a strong foundation, gaining hands-on experience, and gradually expanding your skills. With time, you’ll not only learn DevOps—you’ll live it.

And remember: Start small, stay curious, and keep building.

0 notes

Text

DevOps Engineer CI/CD - Remote(Poland)

Company: LivePerson

LivePerson (NASDAQ:LPSN) is a leading customer engagement company, creating digital experiences powered by Curiously Human AI. Every person is unique, and our technology makes it possible for companies, including leading brands like HSBC, Orange, and GM Financial, to treat their audiences that way at scale. Nearly a billion conversational interactions are powered by our Conversational Cloud each month.

You'll be successful at LivePerson if you are excited to build something from the ground up. You excel by finding daily opportunities to grow at the same pace as the technology we're building, and you build partnerships that improve our business. Likewise, you're someone who sees feedback as a chance to learn and grow and believe decisions powered by data are the norm. You care about the wellbeing of others and yourself.

Overview:

LivePerson transforms customer care from voice calls to mobile messaging. Our cloud-based software platform, LiveEngage, allows brands with millions of customers and tens of thousands of care agents to deliver digital at scale. The market leader in real-time intelligent customer engagement. As a B2B SaaS company with 20 years of experience and the heart of a startup, we work day in and day out to help our customers live out our mission of creating lasting, meaningful connections with their customers.

The Data Operations team at LivePerson is looking for an experienced DevOps engineer: a candidate that takes on challenges, continuously expands his area of expertise and naturally takes ownership of his work. The ideal candidate has a strong engineering background, an eagerness to follow good practices and a desire to apply his skills in the field of DevOps. We will provide you the grounds to succeed, an embracing working environment and very complex and interesting technical challenges to solve.

You will:

- Work with the latest and greatest data technologies including Relational/NoSQL databases and real-time data messaging and processing.
- Responsible for engineering core big data platforms, both real-time analytics, stream & batch
- Engage in solving real business problems by exploring and building an efficient and scalable data infrastructure.
- Responsible for uptime, stability, redundancy, capacity, optimization, tuning, automation and visibility of our data technologies.
- Participate in design reviews of our SaaS services and provide guidance for interoperability with layers such as Elasticsearch, Hadoop, Kafka, Storm and more
- Learn new technologies and become an expert to the extent of being the last escalation tier in the company for these technologies
- The team will also integrate these technologies into our private cloud adding auto-scaling, auto-healing, and advanced monitoring

You have:

- 2+ years of experience in DevOps
- In-depth understanding of CI/CD concepts - MUST
- Experience with GitLab - MUST
- Experience with Docker and Kubernetes
- Experience with Cloud Platforms (AWS / GCP) - GCP advantage.
- Experience in building CI/CD pipelines with Jenkins.
- Development background is a major advantage
- Terraform, Ansible - advantage

Benefits:

- Health: medical, dental, and vision
- Development: Native AI learning

Why you'll love working here:

Your entrepreneurial spirit will be supported. We love team members who chase down their big ideas, become experts, help colleagues, and own their work. These four company values guide our continued, holistic growth as individuals, as teams, and as a global organization. And to further make our point, let's just say we're very proud to be on Fast Company's list of Most Innovative Companies and Newsweek's list of most-loved workplaces.

Belonging at LivePerson:

At LivePerson, people from diverse backgrounds come together to make an impact and be their authentic selves. One way we share and connect is through our employee resource groups such as: Live In Color, LP Proud, and Women In Tech. We are proud to be an equal opportunity employer. All qualified applicants will receive consideration for employment without regard to age, ancestry, color, family or medical care leave, gender identity or expression, genetic information, marital status, medical condition, national origin, physical or mental disability, protected veteran status, race, religion, sex (including pregnancy), sexual orientation, or any other characteristic protected by applicable laws, regulations and ordinances. We also consider qualified applicants with criminal histories, consistent with applicable federal, state, and local law. We are committed to the accessibility needs of applicants and employees. We provide reasonable accommodations to job applicants with physical or mental disabilities. Applicants with a disability who require a reasonable accommodation for any part of the application or hiring process should inform their recruiting contact upon initial connection.

APPLY ON THE COMPANY WEBSITE

To get free remote job alerts, please join our telegram channel “Global Job Alerts” or follow us on Twitter for latest job updates.

Disclaimer:

- This job opening is available on the respective company website as of 9th July 2023. The job openings may get expired by the time you check the post.
- Candidates are requested to study and verify all the job details before applying and contact the respective company representative in case they have any queries.
- The owner of this site has provided all the available information regarding the location of the job i.e. work from anywhere, work from home, fully remote, remote, etc. However, if you would like to have any clarification regarding the location of the job or have any further queries or doubts; please contact the respective company representative. Viewers are advised to do full requisite enquiries regarding job location before applying for each job.
- Authentic companies never ask for payments for any job-related processes. Please carry out financial transactions (if any) at your own risk.
- All the information and logos are taken from the respective company website.

0 notes

Text

New Magento 2.3.1 Features Every Merchant and Developer Should Know

While the buzz created by Magento 2.3 is not over yet, Magento has released Magento 2.3.1 with great features, critical bug fixes, 30 security enhancements, 200 core functional fixes and 500 pull requests contributed by the community.

Before we dive into Magento 2.3.1

Before we jump into the exciting features of Magento 2.3.1, every Magento store owner and developer must be aware of the critical problems in Magento which should be taken care of immediately.

1. SQL vulnerability

There is a critical SQL injection vulnerability in pre-2.3.1 Magento code.

SQL injection is an attack in which malicious SQL is slipped into a database query through user-supplied input, letting the attacker read or modify data. In this case, hackers can gain access to sensitive banking information of customers.

To protect your site from this vulnerability, download and apply the patch available here.
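For readers who have not seen SQL injection up close, here is a generic illustration in Python with an in-memory SQLite database. It is not the Magento vulnerability itself, and the table and values are made up; it only shows why building queries by string concatenation is dangerous and how parameterized queries avoid the problem.

```python
import sqlite3

# Throwaway in-memory database with two example customers.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany(
    "INSERT INTO customers (email) VALUES (?)",
    [("alice@example.com",), ("bob@example.com",)],
)

# Attacker-controlled input that closes the quote and adds an always-true condition.
malicious = "nobody@example.com' OR '1'='1"

# Unsafe: the input becomes part of the SQL text, so the WHERE clause is rewritten.
unsafe_sql = f"SELECT email FROM customers WHERE email = '{malicious}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safe: the input is passed as a parameter and treated purely as a value.
safe_rows = conn.execute(
    "SELECT email FROM customers WHERE email = ?", (malicious,)
).fetchall()

print(len(unsafe_rows))  # 2 (every customer is returned)
print(len(safe_rows))    # 0 (no customer has that literal email)
```

The patch matters for the same reason: once input can change the shape of a query, the attacker decides which rows come back.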

2. PayPal Payflow Pro active carding

The PayPal Payflow integration in Magento is being targeted by hackers for carding activity, which means these hackers check the validity of the stolen cards by making $0 transactions.

Magento has recommended using Google reCAPTCHA on the Payflow Pro checkout. For more details click here.

3. Authorize.Net support end for MD5 hash

Also, if your Magento site is using Authorize.Net MD5 hash and if you don’t plan to update to 2.3.1, then you have to follow these steps to fix Authorize.Net payment method. Otherwise, your site won’t be able to process payments via Authorize.Net from June 28, 2019.

If you need any help in any of the problems mentioned above you can get help from our Magento experts.

Now let’s focus on Magento 2.3.1 features and advantages

What merchants should know about Magento 2.3.1?

1. Creating orders in the back-end is now easy

The delays in back-end for making changes to billing and shipping addresses are eliminated. This helps to achieve a faster order creation workflow.

2. PDP images can be uploaded without downsizing and compressing

Merchants can directly upload PDP (Product Detail Page) images larger than 1920 x 1200 without Magento downsizing and compressing them. In older Magento versions, when a merchant uploaded a product image larger than 1920 x 1200, Magento resized and compressed it.

3. Inventory management 1.1.0

3.1 Distance-priority algorithm (SSA)

This source selection algorithm (SSA) compares the shipping destination with the available source locations to find the nearest fulfillment location. The best part is that the nearest location can be determined based on either distance or travel time. In addition, a Pick In Store option is added.
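To illustrate what "nearest by distance" can mean, here is a small Python sketch that ranks sources by great-circle distance using the haversine formula. It is an assumption-laden illustration, not Magento's implementation: the function names, the example cities, and the use of straight-line distance (rather than travel time or a mapping service) are all choices made for this example.

```python
import math


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def rank_sources(destination: tuple, sources: list) -> list:
    """Return source names ordered from nearest to farthest from the destination."""
    ordered = sorted(
        sources,
        key=lambda source: haversine_km(destination[0], destination[1], source[1][0], source[1][1]),
    )
    return [name for name, _ in ordered]


# Hypothetical warehouses as (name, (latitude, longitude)).
sources = [
    ("Berlin", (52.5200, 13.4050)),
    ("London", (51.5074, -0.1278)),
    ("Madrid", (40.4168, -3.7038)),
]
paris = (48.8566, 2.3522)  # shipping destination

print(rank_sources(paris, sources))  # ['London', 'Berlin', 'Madrid']
```

A time-based ranking would swap the key function for estimated travel time, which can produce a different order when roads, borders, or sea crossings are involved.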

3.2 Elasticsearch for custom stocks

Previously, Elasticsearch was supported only in Single Source mode with the Default Source. With 2.3.1 it is also supported for custom stocks. In addition, filtering of search results is added.

Apart from these, Amazon sales channel and support for DHL are also added.

What developers should know about Magento 2.3.1?

1. Upgrade process dependency assessment automation

A new Composer plugin, magento/composer-root-update-plugin, is introduced. It can automatically update all dependencies in composer.json during a Magento 2.x upgrade.

2. Enhancements

Significant improvements have been added in Progressive Web Apps (PWA) studio and GraphQL.

3. Performance improvements

The admin order creation page can now handle 3000 addresses. This is made possible by rewriting customer address handling with UI components.

The additional customer addresses in the storefront customer address book are now displayed in a grid.

Billing and shipping data will not be cleared if the customer interrupts the checkout process. Earlier, if the cart was updated by the customer, the checkout data would be deleted.

4. Advancements in infrastructure

Elasticsearch 6.0 is now supported.

Redis 5.0 is now supported.

Magento 2.3.1 is now compatible with PHP 7.2.x.

For Authorize.Net payment, Accept.js library is used.

5. Security improvements

30 security enhancements.

Protection against SQL injection.

New Authorize.Net extension is added to replace Authorize.Net Direct Post Module.

Other Enhancements

1. Amazon Pay

Multi-currency support was added for merchants in EU and U.K region. Almost 12 currencies have been added.

2. Magento Shipping

Merchants can cancel the shipment that has not been dispatched yet by accessing the shipment and clicking on Cancel Shipment.

The Magento Shipping portal can be accessed from Magento using the credentials saved in the Magento instance.

3. Cart and checkout

The special price error is now fixed. Earlier, Magento displayed the regular price when a product's special price was 0.00.

The infinite loading indicator that used to appear when an error occurred during checkout is fixed in this Magento version.

The Clear Shopping Cart button used to only reload the page without clearing the shopping cart. This problem is now solved.

Another fixed issue is the forced logout of the customer when an item was added to the cart and the mini-cart icon was clicked multiple times.

Configuring a product after adding it to the cart is now possible; earlier, this caused errors in Magento.

4. Our Contributions to Magento 2.3.1

We at Codilar are a team of Magento experts, but how can we be experts if we haven’t contributed to making Magento better? Almost all Magento releases include fixes from our Magento developers.

This time there are two Magento 2.3.1 fixes from Codilar:

Fixed an issue with \Magento\Catalog\Model\Product::getQty() where float/double was returned instead of a string in most cases in pull request 18149.

Fixed an issue with inaccurate floating point calculations during checkout in pull request 18185.
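The second fix is a good reminder of why money should not be handled as binary floating point. Magento itself is written in PHP, so the following Python sketch is only a language-neutral illustration of the problem and one common remedy (exact decimal arithmetic); the line_total helper is hypothetical and not part of the pull request.

```python
from decimal import Decimal, ROUND_HALF_UP

# Binary floats cannot represent most decimal fractions exactly.
float_total = 0.1 + 0.2
print(float_total == 0.3)  # False

# Decimal values built from strings keep the amounts exact.
decimal_total = Decimal("0.10") + Decimal("0.20")
print(decimal_total == Decimal("0.30"))  # True


def line_total(unit_price: str, quantity: int) -> Decimal:
    """Multiply a price by a quantity and round to whole cents."""
    return (Decimal(unit_price) * quantity).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(line_total("19.99", 3))  # 59.97
```

Small errors like the first one are invisible on a single item but can surface as one-cent mismatches once taxes, discounts, and quantities are combined at checkout.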

Should I upgrade my Magento store to Magento 2.3.1?

Unlike the previous version, Magento 2.3, which came with major features like Magento PWA, Magento 2.3.1 is mainly about performance, security, and bug fixes. One compelling reason to upgrade to Magento 2.3.1 is the SQL injection vulnerability. If exploited, it can allow hackers to access sensitive data, including credit card details. Magento has recommended going straight to version 2.3.1 for all merchants on versions below 2.0 who are planning an upgrade:

“Merchants who have not previously downloaded a Magento 2 release should go straight to Magento Commerce or Open Source 2.3.1.” – Magento Security Team

Let us know what you think about Magento 2.3.1 in the comment section below!

Author

Venugopal

0 notes

Text

5 DevOps Tools You Must Use In 2023

As businesses continue to shift towards a DevOps approach, there are several tools that can help streamline the development and deployment process. Here are five DevOps tools that you should consider using in 2023:

Kubernetes - Kubernetes is an open-source container orchestration tool that automates the deployment, scaling, and management of containerized applications. It is highly scalable and can be used across multiple cloud providers.

Jenkins - Jenkins is a popular open-source automation server that is used to automate various parts of the software development process. It can be used for building, testing, and deploying applications.

Terraform - Terraform is an infrastructure as code (IaC) tool that allows you to define and manage your infrastructure in a declarative manner. It supports multiple cloud providers and can be used to provision and manage resources such as virtual machines, containers, and networking.

Git - Git is a version control system that allows developers to collaborate on code and keep track of changes over time. It is essential for DevOps teams that are working on large codebases and need to keep track of multiple versions.

ELK Stack - The ELK Stack is a collection of open-source tools (Elasticsearch, Logstash, and Kibana) that are used to collect, parse, and analyze log data. It can be used to monitor application performance and troubleshoot issues.

If you need help implementing these tools, CloudZenix can provide expert guidance and support to ensure that your DevOps processes are running smoothly. https://cloudzenix.com/devops/devops-solutions-services/

0 notes