#Extract Google Maps Data


A complete guide on extracting restaurant data from Google Maps includes using web scraping tools like BeautifulSoup or Scrapy in Python, leveraging the Google Places API for structured data access, and ensuring compliance with Google's terms of service. It covers steps from setup to data extraction and storage.
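
As a rough illustration of the extraction-and-storage step, the sketch below flattens a response shaped like the JSON returned by a Places API Text Search and writes it to CSV. The sample payload, the `flatten_places` and `to_csv` helpers, and the chosen columns are assumptions made for this example, not part of the guide; a real run would fetch the payload with an API key and within Google's terms of service.

```python
import csv
import io

# Hypothetical sample payload: the field names mirror a Places API
# Text Search response, but every value here is made up.
SAMPLE_RESPONSE = {
    "status": "OK",
    "results": [
        {
            "name": "Example Bistro",
            "formatted_address": "1 Example St, Springfield",
            "rating": 4.5,
            "geometry": {"location": {"lat": 40.0, "lng": -75.0}},
        },
        {
            "name": "Sample Diner",
            "formatted_address": "2 Sample Ave, Springfield",
            "geometry": {"location": {"lat": 40.1, "lng": -75.1}},
        },
    ],
}

FIELDS = ["name", "address", "rating", "lat", "lng"]


def flatten_places(payload):
    """Turn a Places-style response into a list of flat dicts."""
    rows = []
    for place in payload.get("results", []):
        location = place.get("geometry", {}).get("location", {})
        rows.append({
            "name": place.get("name", ""),
            "address": place.get("formatted_address", ""),
            "rating": place.get("rating"),  # None when the place is unrated
            "lat": location.get("lat"),
            "lng": location.get("lng"),
        })
    return rows


def to_csv(rows):
    """Serialize the flat rows to CSV text, ready to be written to a file."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = flatten_places(SAMPLE_RESPONSE)
print(to_csv(rows))
```

Missing fields such as an absent rating are kept as empty cells rather than dropped, so every row has the same columns.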

0 notes


Discover the techniques of extracting location data from Google Maps. Learn to harness the power of this resource to gather accurate geographic information.

Read More: https://www.locationscloud.com/location-data-extraction-from-google-maps/

#Extract Google Maps Data#LocationsCloud#Store Location Data#Location Intelligence#Location Data Provider

0 notes


Dragon Age: The Veilguard

▶ Extracted Asset Drive Folder

Recently finished my first DAV playthrough and wanted to get my hands into the files 🤚 so just like I did with CP77, I put up a little Google Drive folder with extracted assets! Made possible thanks to the Frostbite Modding Tool ◀

OBVIOUS Spoiler warning - I don't recommend looking at the files until you're done with the game's main story!

I wasn't able to grab everything just yet as the majority of assets aren't fully accessible yet (corrupted/missing data). Expect some extracted assets to have some artifacts as well!

But you can already find:

HUD elements

Codex entries' full art

CC, Map, Journal Icons

...and more!

Every element has been sorted into folders for (hopefully) easy browsing - I'll try to keep this drive updated :3

▶ THIS IS FOR PERSONAL USE ONLY!

You can use the assets for your videos, thumbnails, character templates, art, mods... but do NOT use these assets for any commercial purposes! All assets and files are the property of BioWare and their artists

This is from a fan for fans, let's keep it fair and fun! 🙏

If you appreciate my work consider supporting me on Ko-Fi 💜

1K notes


Data Visualization: Transforming Data into Insight

In an era in which information is produced at a remarkable pace, the ability to extract meaningful insights is more vital than ever. Data visualization plays a central role in this process, enabling individuals and organizations to understand complex data sets, identify trends, and communicate findings effectively. By converting abstract numbers into intuitive visuals, data visualization bridges the gap between raw data and human cognition, turning complexity into clarity.


The Importance of Data Visualization

Data visualization is the graphical representation of information and data. By using visual elements like charts, graphs, and maps, data visualization tools make it easier to see and understand patterns, trends, and outliers in data. Its importance lies in several key areas:

Improved Understanding: An often-repeated claim is that the human brain processes visuals 60,000 times faster than text. Graphs and charts can reveal insights that would go unnoticed in spreadsheets.

Enhanced Communication: Well-crafted visualizations allow data to be shared in a way that's accessible to a broader audience, not just data analysts or statisticians.

Data-Driven Decision Making: In business, government, and scientific research, visualizations support decision-making by clearly showing the implications of various data trends.

Pattern and Anomaly Detection: They help users quickly identify deviations, spikes, or drops in data, which could indicate opportunities or threats.

Types of Data Visualization

Data visualization encompasses a wide array of techniques, each suited to particular types of data and analytical needs. Some of the most commonly used types include:

1. Bar Charts

Bar charts are ideal for comparing quantities across categories. They are simple but effective for displaying differences among groups.

2. Line Graphs

Often used to track changes over time, line graphs display trends and fluctuations, making them a favorite for time-series data.

3. Pie Charts

They're best for simple, clear percentage data.

4. Histograms

Histograms display the distribution of a dataset, making them useful for understanding data spread, central tendency, and frequency.

5. Heat Maps

Heat maps use color gradients to indicate value intensity across two dimensions.

6. Scatter Plots

Scatter plots are used to identify relationships between variables, often revealing correlations or clusters in data.

7. Box Plots

Box plots show the distribution of a dataset through its quartiles, highlighting medians, variability, and potential outliers.

8. Geospatial Maps

These visualizations display data associated with geographic regions and are widely used in demographic research, environmental monitoring, and logistics.

9. Dashboards

Dashboards combine multiple visualizations into one interface, providing a real-time overview of key metrics and performance indicators.
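
To make the box plot description a little more concrete, here is a minimal sketch of the numbers a box plot is drawn from. The `box_plot_summary` helper and the sample data are invented for illustration, and the 1.5 × IQR outlier rule is a common convention assumed here, not something the list above specifies.

```python
import statistics


def box_plot_summary(values):
    """Compute the quartiles and flag outliers the way a box plot would."""
    # "inclusive" treats the data as the whole population, so the
    # minimum and maximum are the 0th and 100th percentiles.
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1  # interquartile range: the height of the box

    # Assumed convention: points beyond 1.5 * IQR from the box are outliers.
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    outliers = [v for v in values if v < lower_fence or v > upper_fence]

    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "iqr": iqr,
        "outliers": outliers,
    }


data = [2, 4, 4, 5, 6, 7, 8, 9, 30]
summary = box_plot_summary(data)
print(summary)
```

A plotting library would draw the box from `q1` to `q3`, a line at `median`, and mark each value in `outliers` as an individual point.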

Tools for Data Visualization

A wide range of tools is available for creating effective data visualizations. Popular options include:

Tableau: A leading platform for interactive, shareable dashboards with drag-and-drop features.

Power BI: Microsoft's business analytics tool with strong integration into the Office ecosystem.

Google Data Studio (now Looker Studio): A free tool for creating customizable reports using Google data sources.

ggplot2: A powerful R package for building sophisticated plots using the grammar of graphics.

Each tool offers different capabilities depending on the user's technical expertise, data complexity, and desired outcomes.

Best Practices in Data Visualization

Creating effective data visualizations requires more than just technical skill. It involves an understanding of design principles, cognitive psychology, and storytelling. Here are key best practices:

1. Know Your Audience

Tailor the visualization to the knowledge level and interests of your audience. What a data scientist finds intuitive might be confusing to a business executive.

2. Choose the Right Chart

Using an inappropriate chart type can mislead or confuse the viewer. For instance, a line chart should not be used for categorical data.

3. Simplify and Clarify

Avoid clutter. Focus on essential data and remove unnecessary elements like excessive gridlines, decorative graphics, or redundant labels.

4. Use Color Thoughtfully

Color can enhance understanding but can also mislead if used improperly. Stick to a consistent color scheme and use contrast to highlight key points.

5. Tell a Story

Effective data visualizations guide the viewer through a narrative. Highlight trends, anomalies, or correlations that support your message.

6. Maintain Integrity

Never manipulate axes or distort scales to exaggerate findings. Ethical visualization ensures accurate representation of data.

Real-World Applications

Data visualization is applied in nearly every sector, transforming industries through improved insight and communication.

1. Business Analytics

In business, visualization tools help in tracking sales, customer behavior, supply chain efficiency, and more.

2. Healthcare

In medicine and public health, visualizations are crucial for tracking disease outbreaks, patient data, and treatment outcomes. For example, COVID-19 dashboards played a major role in understanding the pandemic's spread.

3. Finance

Financial analysts use data visualization to understand market trends, evaluate investment performance, and assess risk.

4. Education

Educators and researchers use visualization to track student performance, identify learning gaps, and present research findings.

5. Government and Policy

Policymakers use visual data to understand social trends, resource allocation, and economic performance.

6. Journalism

Data journalism is growing rapidly. Visual stories on topics like climate change, election results, or social inequality use charts and infographics to inform and engage readers.

Challenges and Limitations

Despite its power, data visualization is not without challenges:

Data Quality: Inaccurate or incomplete data can lead to misleading visuals.

Over-Simplification: Trying to make data too simple can lead to loss of nuance or important details.

Misinterpretation: Poor design choices or biased presentations can cause audiences to draw wrong conclusions.

Tool Limitations: Not all tools support the level of customization or interactivity needed for specific projects.

Overcoming these challenges requires a blend of technical skill, domain knowledge, and ethical responsibility.

The Future of Data Visualization

The future of data visualization is increasingly interactive, real-time, and AI-assisted. Emerging trends include:

Augmented and Virtual Reality (AR/VR): Immersive visualizations allow users to explore data in three-dimensional environments.

Machine Learning Integration: Algorithms can now suggest or even auto-generate visualizations based on the data provided.

Collaborative Platforms: Teams can now work together in real time on visualization dashboards, improving communication and agility.

These advancements will continue to make data more accessible and insightful across all domains.


2 notes


Data Science: The Career Catalyst for a Smarter Tomorrow

Ever wondered how Netflix knows just what to recommend or how Google Maps reroutes you around traffic in real time? Behind these everyday conveniences lies a powerful force—data science. It’s the art and science of extracting meaningful insights from data, and it's changing the way we work, shop, heal, and even think.

Whether you're a student, a working professional, or simply curious, understanding data science is more than a tech trend—it’s a career-smart move. Let’s explore this exciting field in a way that’s easy to understand, human-centric, and packed with real-world relevance.

What is Data Science, Really?

At its core, data science blends mathematics, statistics, computer science, and domain knowledge to analyze data and solve complex problems. Think of it as storytelling powered by numbers.

Key Elements:

Data Collection: Gathering data from various sources

Data Cleaning: Removing errors and organizing it

Data Analysis: Understanding patterns and trends

Predictive Modeling: Using past data to forecast future outcomes
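
The four elements above can be sketched end to end on a toy dataset. Everything in this example is an assumption made for illustration: the hard-coded `raw_records`, the helper names, and the choice of a straight-line least-squares fit as the "predictive model".

```python
from statistics import mean

# Data collection: in practice this would come from files, APIs, or sensors.
# Here a few hypothetical (x, y) readings arrive as raw text.
raw_records = [("1", "2"), ("2", "4"), ("3", ""), ("four", "8"), ("5", "10")]


def clean(records):
    """Data cleaning: keep only rows where both fields parse as numbers."""
    cleaned = []
    for x_text, y_text in records:
        try:
            cleaned.append((float(x_text), float(y_text)))
        except ValueError:
            continue  # drop rows with missing or malformed values
    return cleaned


def fit_line(points):
    """Data analysis: least-squares slope and intercept of y against x."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (
        sum((x - x_bar) * (y - y_bar) for x, y in points)
        / sum((x - x_bar) ** 2 for x in xs)
    )
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict(model, x):
    """Predictive modeling: use the fitted line to forecast a new value."""
    slope, intercept = model
    return slope * x + intercept


points = clean(raw_records)
model = fit_line(points)
print(points)
print(predict(model, 6))
```

Real projects swap each stage for something heavier (databases, validation rules, richer models), but the order of the stages stays the same.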

2 notes


Top 5 Selling Odoo Modules.

In the dynamic world of business, having the right tools can make all the difference. For Odoo users, certain modules stand out for their ability to enhance data management and operations, helping you optimize your Odoo implementation and leverage its full potential.

That's where Odoo ERP can be a lifesaver for your business. This comprehensive solution integrates various functions into one centralized platform, tailor-made for the digital economy.

Let's dive into the 5 top-selling modules that can revolutionize your Odoo experience:

Dashboard Ninja with AI, Odoo Power BI connector, Looker studio connector, Google sheets connector, and Odoo data model.

1. Dashboard Ninja with AI:

Using this module, you can create amazing reports with the powerful and smart Dashboard Ninja app for Odoo. See your business from a 360-degree angle with an interactive and beautiful dashboard.

Some Key Features:

Real-time streaming Dashboard

Advanced data filter

Create charts from Excel and CSV files

Fluid and flexible layout

Download dashboard items

This module gives you AI suggestions for improving your operational efficiencies.

2. Odoo Power BI Connector:

This module provides a direct connection between Odoo and Power BI Desktop, a powerful data visualization tool.

Some Key features:

Secure token-based connection.

Proper schema and data type handling.

Fetch custom tables from Odoo.

Real-time data updates.

With Power BI, you can make informed decisions based on real-time data analysis and visualization.

3. Odoo Data Model:

The Odoo Data Model is the backbone of the entire system. It defines how your data is stored, structured, and related within the application.

Key Features:

Relations & fields: Developers can easily find relations (one-to-many, many-to-many, and many-to-one) and define fields (columns) between data tables.

Object Relational mapping: Odoo ORM allows developers to define models (classes) that map to database tables.

The module allows you to use SQL query extensions and download data in Excel Sheets.

4. Google Sheet Connector:

This connector bridges the gap between Odoo and Google Sheets.

Some Key features:

Real-time data synchronization and transfer between Odoo and Spreadsheet.

One-time setup; no need to wrestle with APIs.

Transfer multiple tables swiftly.

Helps your team's workflow by making Odoo data accessible in a sheet format.

5. Odoo Looker Studio Connector:

The Looker Studio connector by Techfinna easily integrates Odoo data with Looker Studio, a powerful data analytics and visualization platform.

Some Key Features:

Directly integrate Odoo data to Looker Studio with just a few clicks.

The connector automatically retrieves and maps Odoo table schemas in their native data types.

Manual and scheduled data refresh.

Execute custom SQL queries for selective data fetching.

The module helps you build detailed reports and provides deeper business intelligence.

These Modules will improve analytics, customization, and reporting. Module setup can significantly enhance your operational efficiency. Let’s embrace these modules and take your Odoo experience to the next level.

Need Help?

I hope you find the blog helpful. Please share your feedback and suggestions.

For flawless Odoo Connectors, implementation, and services contact us at

[email protected] Or www.techneith.com

#odoo#powerbi#connector#looker#studio#google#microsoft#techfinna#ksolves#odooerp#developer#web developers#integration#odooimplementation#crm#odoointegration#odooconnector

4 notes


Submitted via Google Form:

I made a rough map, then worked out how much time it takes people to get around with various transports, along with prices and alternatives, while trying to make it all make sense and not just have transport because. However, I've found lots of plot holes and started editing things, but one edit makes the rest of the map go awry. So I made a more detailed map so sometimes I don't need to edit the entire thing. But now things are spiralling and I have a massive map with hundreds of points and extreme details that get even more screwed up as I make changes. Uhh. What do I do? Timing and optimising ideal times/budgets to get to places, etc. is an important part of the character's actions and plot points.

Tex: The fantastic thing about maps is that you can have more than one of them, each suited to a different subject regarding the same area. A master map with all of the data points that you want is very useful, and will allow you to extract specific information for maps about more focused subjects (i.e. geographical features vs settlements vs train lines vs climate zones vs botanical). This is helpful in that on individual, subject-specific maps, you can coordinate colour keys in order to generate an overall scheme, such as which colours you prefer for greater vs lower densities, or background vs foreground information.

The Wikipedia page on maps contains a lot of useful information in this regard, and I would also recommend making a written outline of the information that you have on your map, in order to organize the information displayed there, keep track of changes, and plan how to group your information.

Licorice: It sounds as if you’re having difficulty deciding whether your map should dictate your plot, or vice versa. If your story were set on Earth, your map would be fixed, and the means of transport would be, to some extent, dictated to you; you’d only have to decide where your characters should go next - Beijing, or New York?

I’m getting the impression that with this story, you’re creating the map and devising the plot simultaneously, and what you’ve ended up with is a map that’s so detailed it has become inconsistent and difficult even for its own creator to make sense of. It sounds as if the map is becoming an obstacle to writing the story rather than an aid to it. At the same time, though, it sounds like you have a much clearer idea of your plot points and where this story is going than you did when you started.

Maybe it’s time to put the map aside and focus on writing the story? Then when you’re done, update the map so it’s fully in line with the story you’ve written.

Alternatively, put the story aside for a moment, draft a new simplified map using everything you’ve learnt about your story so far, and then treat it like something as fixed as a map of Earth - something to which additional details can be added, but nothing can be changed.

I found on tumblr this chart of the daily distances a person can travel using different modes of transport, which may be useful to you:

Realistic Travel Chart

Good luck! Your project sounds fascinating.

Mod Note: I’ll toss in our two previous map masterposts here for reader reference as well

Mapmaking Part 1

Mapmaking Part 2 Mapping Cities and Towns

18 notes


How Google Maps, Spotify, Shazam and More Work

"How does Google Maps use satellites, GPS and more to get you from point A to point B? What is the tech that powers Spotify’s recommendation algorithm?

From the unique tech that works in seconds to power tap-to-pay to how Shazam identifies 23,000 songs each minute, WSJ explores the engineering and science of technology that catches our eye.

Chapters:

0:00 Google Maps

9:07 LED wristbands

14:30 Spotify’s algorithm

21:30 Tap-to-Pay

28:18 Noise-canceling headphones

34:33 MSG Sphere

41:30 Shazam "

Source: The Wall Street Journal

#Tech#Algorithm#WSJ

Additional information:

" How Does Google Maps Work?

Google Maps is a unique web-based mapping service brought to you by the tech giant, Google. It offers satellite imagery, aerial photography, street maps, 360° panoramic views of streets, real-time traffic conditions, and route planning for traveling by foot, car, bicycle, or public transportation.

A short history of Google maps:

Google Maps was first launched in February 2005, as a desktop web mapping service. It was developed by a team at Google led by Lars and Jens Rasmussen, with the goal of creating a more user-friendly and accurate alternative to existing mapping services. In 2007, Google released the first version of Google Maps for mobile, which was available for the Apple iPhone. This version of the app was a huge success and quickly became the most popular mapping app on the market. As time has passed, Google Maps has consistently developed and enhanced its capabilities, including the addition of new forms of map data like satellite and aerial imagery and integration with other Google platforms like Google Earth and Google Street View.

In 2013, Google released a new version of Google Maps for the web, which included a redesigned interface and new features like enhanced search and integration with Google+ for sharing and reviewing places.

Today, Google Maps is available on desktop computers and as a mobile app for Android and iOS devices. It is used by millions of people around the world to get directions, find places, and explore new areas.

How does google maps work?

Google Maps works by using satellite and aerial imagery to create detailed maps of the world. These maps are then made available to users through a web-based interface or a mobile app.

When you open Google Maps, you can search for a specific location or browse the map to explore an area. You can also use the app to get directions to a specific place or find points of interest, such as businesses, landmarks, and other points of interest. Google Maps uses a combination of GPS data, user input, and real-time traffic data to provide accurate and up-to-date information about locations and directions. The app also integrates with other Google services, such as Google Earth and Google Street View, to provide additional information and features.

Overall, Google Maps is a powerful tool that makes it easy to find and explore locations around the world. It’s available on desktop computers and as a mobile app for Android and iOS devices.

Google uses a variety of algorithms in the backend of Google Maps to provide accurate and up-to-date information about locations and directions. Some of the main algorithms used by Google Maps include:

Image recognition: Google Maps uses image recognition algorithms to extract useful information from the satellite and street view images used to create the map. These algorithms can recognize specific objects and features in the images, such as roads, buildings, and landmarks, and use this information to create a detailed map of the area.

Machine learning: Google Maps uses machine learning algorithms to analyze and interpret data from a variety of sources, including satellite imagery, street view images, and user data. These algorithms can identify patterns and trends in the data, allowing Google Maps to provide more accurate and up-to-date information about locations and directions.

Geospatial data analysis: Google Maps uses geospatial data analysis algorithms to analyze and interpret data about the earth’s surface and features. This includes techniques like geographic information systems (GIS) and geospatial data mining, which are used to extract useful information from large datasets of geospatial data.

Overall, these algorithms are an essential part of the backend of Google Maps, helping the service to provide accurate and up-to-date information to users around the world.

Google Maps uses a variety of algorithms to determine the shortest path between two points:

Here are some of the algorithms that may be used:

Dijkstra’s algorithm: This is a classic algorithm for finding the shortest path between two nodes in a graph. It works by starting at the source node and progressively exploring the graph, adding nodes to the shortest path as it goes.

A* search algorithm: This is another popular algorithm for finding the shortest path between two points. It works by combining the benefits of Dijkstra’s algorithm with a heuristic function that helps guide the search toward the destination node.

It’s worth noting that Google Maps may use a combination of these algorithms, as well as other specialized algorithms, to determine the shortest path between two points. The specific algorithms used may vary depending on the specifics of the route, such as the distance, the number of turns, and the type of terrain. "

Source: geeksforgeeks.org - -> You can read the full article at geeksforgeeks.org
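
To make the two algorithms named in the quoted article concrete, here is a minimal sketch on a toy road graph. The graph, its edge weights, the coordinates, and the function names are all invented for this example; nothing here reflects how Google Maps actually implements routing. The single `shortest_path` function behaves as Dijkstra's algorithm when no heuristic is given and as A* when one is supplied.

```python
import heapq
import math

# Hypothetical road network: node -> {neighbor: travel cost}.
GRAPH = {
    "A": {"B": 4, "C": 2},
    "B": {"D": 5},
    "C": {"B": 1, "D": 8},
    "D": {},
}

# Made-up coordinates, chosen so straight-line distance never
# exceeds the real travel cost (which keeps the A* heuristic admissible).
COORDS = {"A": (0, 0), "B": (2, 1), "C": (1, 1), "D": (5, 1)}


def shortest_path(graph, start, goal, heuristic=None):
    """Return (cost, path). Dijkstra if heuristic is None, otherwise A*."""
    if heuristic is None:
        heuristic = lambda node: 0  # no guidance: plain Dijkstra

    # Priority queue of (estimated total cost, cost so far, node).
    frontier = [(heuristic(start), 0, start)]
    best_cost = {start: 0}
    came_from = {}

    while frontier:
        _, cost, node = heapq.heappop(frontier)
        if node == goal:
            # Walk the predecessor links back to the start.
            path = [node]
            while node in came_from:
                node = came_from[node]
                path.append(node)
            return cost, path[::-1]
        if cost > best_cost.get(node, math.inf):
            continue  # stale queue entry; a cheaper route was already found
        for neighbor, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < best_cost.get(neighbor, math.inf):
                best_cost[neighbor] = new_cost
                came_from[neighbor] = node
                priority = new_cost + heuristic(neighbor)
                heapq.heappush(frontier, (priority, new_cost, neighbor))
    return None  # goal is unreachable


def straight_line_to_d(node):
    """A* heuristic: straight-line distance from node to the goal D."""
    return math.dist(COORDS[node], COORDS["D"])


dijkstra_result = shortest_path(GRAPH, "A", "D")
a_star_result = shortest_path(GRAPH, "A", "D", heuristic=straight_line_to_d)
print(dijkstra_result)
print(a_star_result)
```

Both calls find the same route here. The difference is in how the search is ordered: the heuristic lets A* prefer nodes that look closer to the destination, which on large maps usually means exploring fewer nodes.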

#mktmarketing4you#corporatestrategy#marketing#M4Y#lovemarketing#IPAM#ipammarketingschool#ContingencyPlanning#virtual#volunteering#project#Management#Economy#ConsumptionBehavior#BrandManagement#ProductManagement#Logistics#Lifecycle

#Brand#Neuromarketing#McKinseyMatrix#Viralmarketing#Facebook#Marketingmetrics#icebergmodel#EdgarScheinsCultureModel#GuerrillaMarketing #STARMethod #7SFramework #gapanalysis #AIDAModel #SixLeadershipStyles #MintoPyramidPrinciple #StrategyDiamond #InternalRateofReturn #irr #BrandManagement #dripmodel #HoshinPlanning #XMatrix #backtobasics #BalancedScorecard #Product #ProductManagement #Logistics #Branding #freemium #businessmodel #business #4P #3C #BCG #SWOT #TOWS #EisenhowerMatrix #Study #marketingresearch #marketer #marketing manager #Painpoints #Pestel #ValueChain # VRIO #marketingmix

Thank you for following All about Marketing 4 You


2 notes


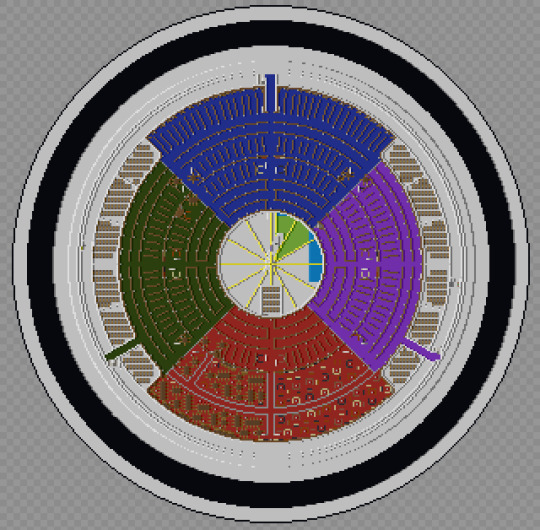

The Scholomance in Minecraft: Final Tour

The build is, for all intents and purposes, done. Now for the final tour.

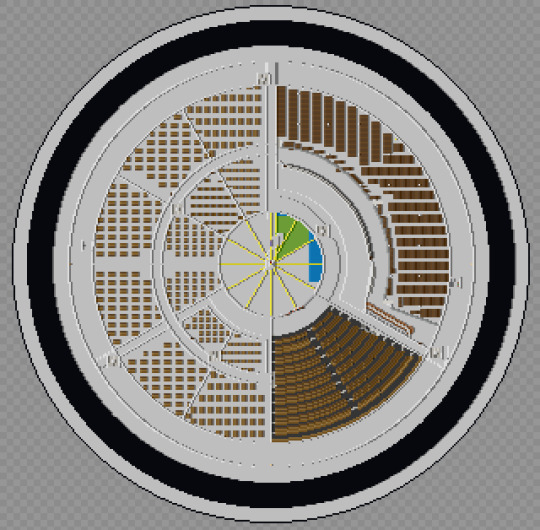

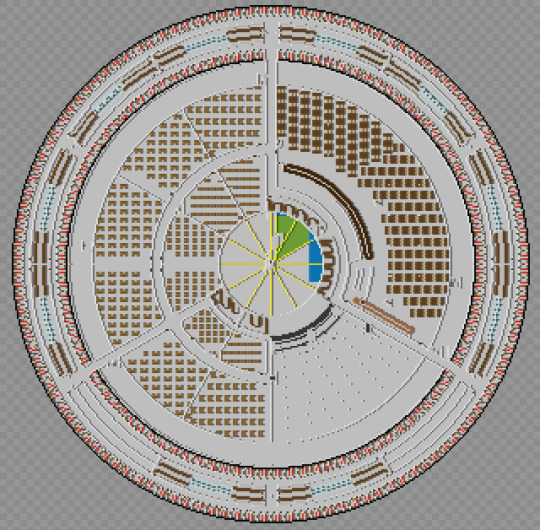

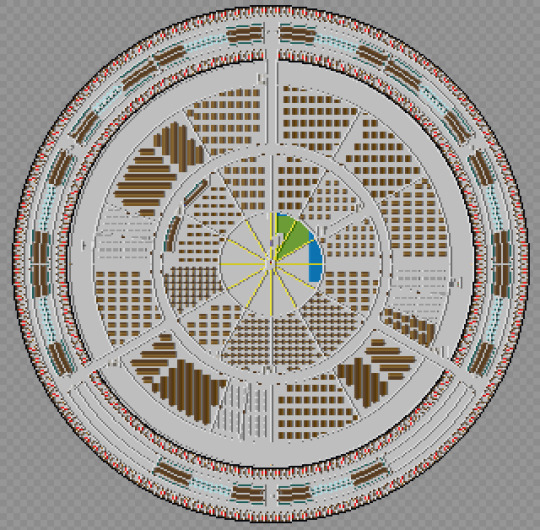

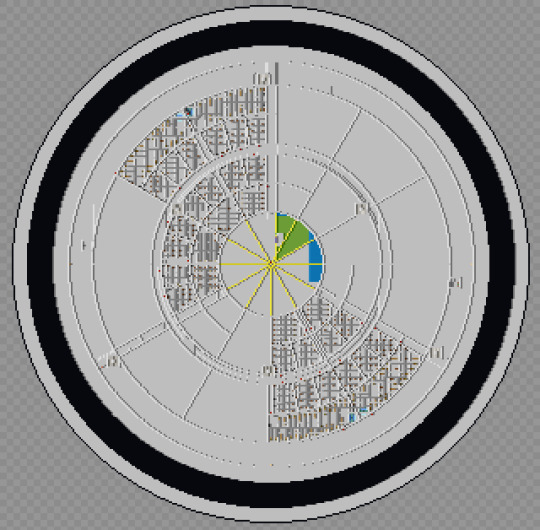

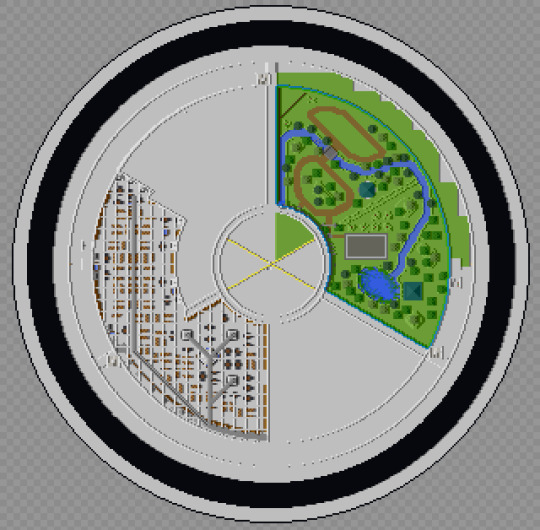

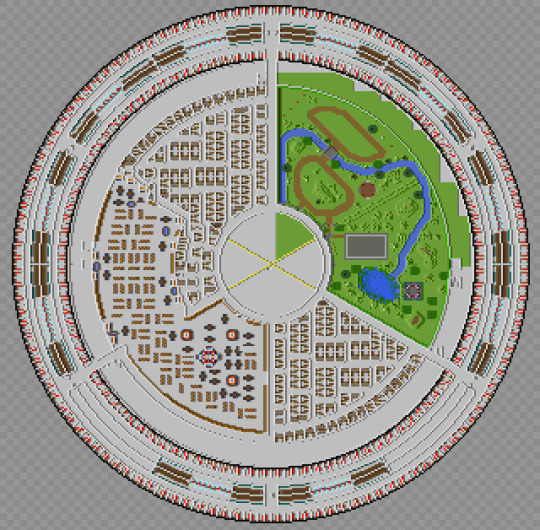

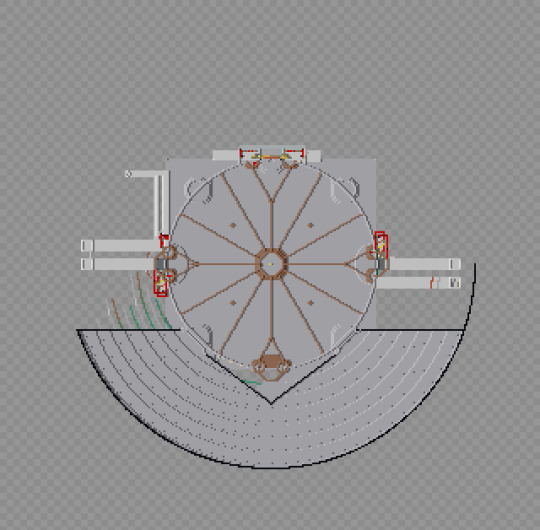

Okay, let me be blunt, I can't post this here. That tour is 147 images long, not counting the one I'm reusing from the book. So I really insist if you want to see the whole thing, go visit the Imgur post. That said, I did figure out how to do something quite interesting, and I will be sharing it only here, along with some random thoughts and notes. So, who wants to see a map of the Scholomance in Minecraft?

The program is called Mineways, and it lets you extract the data from any Minecraft world and do all sorts of things, like import it into 3D modeling software, 3D print it, or just generate some maps. We'll start at the top and work our way down.

This view was almost standard for much of the build and still how I think of the school as a whole. I never removed much of the access stuff I used for building, so you'll see things like that inside the core throughout.

So the school is roughly 120 blocks tall, which puts it at about 40 stories tall. Minus the library and graduation hall, that means climbing from the Senior dorms to the library is climbing about the equivalent of 27 stories. The seniors had to have one hell of a work out just to get lunch, let alone the gym runs. The only thing holding them back from being full on Olympic athletes is the fact that they were malnourished!

I did some math at one point trying to figure out how many books the library could contain, and surprisingly they have figures for how many books fit in a cubic meter. My math (possibly wrong) came up with 116 million. Google claims there's only 156 million books total. Now this is possibly wrong (I may have messed up my math), but it's still crazy how big the library actually is. I still can't get over it honestly.

Maleficaria Studies got me wondering something: Who made the mural? The only ones who would ever see the Graduation Hall and its inhabitants would be graduating seniors, and I doubt many took the time to sketch the scene. And even then, how did it get back into the school? And then up on the walls in a way that could be torn down and turned into wire? More than that, what was the original purpose? When they planned for it to be for 800 male enclavers, did they need it to be what it became? Or was it just an auditorium/theater and at one point they had bands or were performing Shakespeare or something?

Speaking of those early days, I think it's pretty clear there had to be some communication from outside the school to inside, if only to the artifice itself. After all, they had to have some way to tell the school to halve the room sizes so it could support more students.

BTW, while writing this: do you notice how there are a couple of blank spaces for the bathrooms along the bottom? Yeah, I missed two sets of bathrooms. I did go back and fill those in, I just didn't redo these maps.

Speaking of bathrooms, I decided to make the interior restrooms unisex while the dorm ones were gendered. Why? My theory is that because the dorms were designed to be redone on a regular basis, it wasn't hard to fix, but the interior ones couldn't be so easily changed, so instead of separating by gender, they just left them unisex as they couldn't do anything else. So why no urinals? After all, it was originally an all-boys school. Mostly it was easier to build, but also because I really couldn't find a ratio between urinals and toilets, and I only got annoyed when the one reference I found said that all-male locations should have more toilets/urinals in total than all-female or even mixed ones. That might explain a lot if that's considered the standard in the building industry.

This image actually shows the grid and vent work in the alchemy labs as it's difficult to see when you're in the school. I really just wanted to point it out. I used this floor to figure out the numbering system for the rooms in the school. As shown here:

I did make an effort to stick to this map, but I'm sure I messed it up somewhere.

I have a theory on the construction of the school. We're told that the doors were meant to be the thing to get people to invest in the larger project, but was it all in one go? I think maybe not. Sure, the grad hall and gates came first, then they suggested maybe we could build another floor, and then another, and then why not just build the whole thing, and before the enclaves knew what was happening they were building a world wonder. Feature creep at its finest.

The vent work in the Workshop is almost impossible to see from inside the school. I managed to find one spot, right over the power hammer for the final tour post and it's only barely visible. Here though, you can see the whole thing. It's the grey line that runs through it.

The labyrinth isn't quite as wild as the images in the books displayed, in fact it's quite regular from this angle, but when you're inside, getting lost is probably the stupidly easiest thing that can happen. It was mostly intentional, of course, but I'm surprised how well it worked and how easily I got turned around.

I will also say I'm so happy with how the gym ultimately turned out. Oh it could be better, but the stark difference between it and the rest of the school is something I really wanted to make clear. Also if you look close to the edges of the gym and workshop, you can see the current and old shafts, I never did remove the old ones.

The Grad Hall has the most leftover bits in the school. The multiple extra shafts, the remains of the lines for the levels of the landing, and square base of the whole thing. I would love to say I left it as an easter egg or something, but really it was one part laziness and one part really freaking dark down there. If I couldn't see it and know it's there, no one else can see it at all.

Of all the parts of the school, we know both a lot about the Graduation Hall, and almost nothing. Its shape is only hinted at, the general arrangement (entry doors on one side, exit on the other) is vague, and we really don't know where the shafts come in at all. My original build actually had the shafts going up toward the middle of the school, making the whole thing quite narrow indeed, but this felt much more likely to be true. This version feels more right, even if it still remains a bit too small. But only a bit.

And now a gif from the top to bottom. No it doesn't have every layer, but it has a lot of them.

Well that's the end. Link to final file below. Please feel free to download and explore it, modify or just play inside. And if someone gets a server of this running let me know. Hell let me know whatever you do with it, I'm straight up curious.

And since there's a chance she's reading this, thank you for the wonderful series, and I hope my build came at least pretty close to what you imagined, even if it was made in Minecraft.

#the scholomance#the golden enclaves#a deadly education#naomi novik#scholomance#the last graduate#minecraft

7 notes


What Are the Key Steps in AI Chatbot Development?

In the era of instant digital interaction, AI chatbots have become indispensable tools for businesses seeking to enhance customer experience, streamline operations, and drive engagement. From handling customer queries to automating repetitive tasks, chatbots are revolutionizing how companies communicate. But how exactly is an AI chatbot created? Let’s walk through the key steps in AI chatbot development, from concept to deployment and optimization.

1. Define the Chatbot's Purpose and Goals

Before jumping into development, it's crucial to define what the chatbot is supposed to do. Consider the following questions:

Will it answer customer service queries?

Will it guide users through a purchase journey?

Will it be used for internal tasks like IT support?

Setting a clear purpose allows for focused development and helps determine the required features, platforms, and AI sophistication. This phase also includes identifying the target audience and expected interactions.

2. Choose the Right Chatbot Type

There are mainly two types of chatbots:

Rule-based chatbots: Follow a predefined flow and can handle simple queries.

AI-powered chatbots: Use machine learning (ML) and natural language processing (NLP) to understand context, intent, and user sentiment.

For businesses looking to provide more personalized and adaptive interactions, AI chatbots are the go-to solution.

3. Select Development Tools and Platforms

Once you’ve determined the type, select the appropriate tools and platforms. Popular options include:

Development Frameworks: Microsoft Bot Framework, Google Dialogflow, Rasa, IBM Watson Assistant

Languages: Python, Node.js, Java

Hosting Platforms: AWS, Google Cloud, Azure

You also need to decide where the chatbot will be deployed—web, mobile apps, messaging platforms (like WhatsApp or Facebook Messenger), or all.

4. Design the Conversation Flow

Conversation design is one of the most creative and strategic parts of chatbot development. It includes:

Mapping out various user scenarios

Designing dialog trees for rule-based responses

Creating intents, entities, and responses for AI-based models

Considering fallback responses when the bot doesn’t understand

For AI-powered bots, the design must also factor in context retention and multi-turn conversations.
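The dialog-tree and fallback ideas above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the node names, prompts, and keywords are hypothetical, and a real bot would use a framework rather than plain keyword matching.

```python
from dataclasses import dataclass, field

FALLBACK = "Sorry, I didn't catch that. Could you rephrase?"

@dataclass
class Node:
    prompt: str
    # Maps a keyword in the user's reply to the name of the next node.
    transitions: dict = field(default_factory=dict)

# Hypothetical two-level dialog tree for an order-support bot.
TREE = {
    "start": Node("Do you need help with an order or a refund?",
                  {"order": "order", "refund": "refund"}),
    "order": Node("Please share your order number."),
    "refund": Node("I can help with a refund. What is your order number?"),
}

def step(state: str, user_text: str) -> tuple:
    """Return (next_state, bot_reply); stay in place on unknown input."""
    words = user_text.lower().split()
    for keyword, target in TREE[state].transitions.items():
        if keyword in words:
            return target, TREE[target].prompt
    # Fallback response: the bot did not understand, so the state is unchanged.
    return state, FALLBACK
```

Because `step` returns the next state along with the reply, the caller can carry that state between turns, which is the simplest form of context retention in a multi-turn conversation.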

5. Train the NLP Model

If you're building an AI chatbot, you must train it to understand human language. This includes:

Defining intents (what the user wants)

Setting up entities (important data in user inputs)

Feeding sample utterances for each intent

Training the model with diverse input scenarios

The more varied the training data, the better your chatbot will perform. Using pre-trained language models like GPT or BERT can give you a strong starting point.
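To make intents and sample utterances concrete, here is a toy matcher that picks the intent whose sample utterance shares the most words with the user's message. It is a stand-in for a trained NLP model, not one: the intent names, utterances, and the 0.2 threshold are all assumptions made for illustration.

```python
import re

# Hypothetical training data: intent name -> sample utterances.
SAMPLES = {
    "track_order": ["where is my order", "track my package", "order status"],
    "reset_password": ["i forgot my password", "reset my password"],
}

def tokens(text: str) -> set:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def classify(text: str, threshold: float = 0.2) -> str:
    """Pick the intent whose sample utterance has the highest Jaccard overlap."""
    query = tokens(text)
    best_intent, best_score = "fallback", 0.0
    for intent, utterances in SAMPLES.items():
        for utterance in utterances:
            sample = tokens(utterance)
            union = query | sample
            score = len(query & sample) / len(union) if union else 0.0
            if score > best_score:
                best_intent, best_score = intent, score
    # Below the threshold, hand over to the fallback response.
    return best_intent if best_score >= threshold else "fallback"
```

Adding more varied utterances per intent widens what the matcher recognizes, which mirrors on a small scale why diverse training data helps a real model.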

6. Integrate with Backend Systems

For the chatbot to be truly useful, it must integrate with databases, CRMs, ERPs, and APIs. For example:

An e-commerce chatbot might connect to inventory and order tracking systems.

A customer service bot may pull user data from a CRM like Salesforce.

An internal HR bot might integrate with employee databases and leave management tools.

This enables real-time, dynamic responses and allows the bot to perform complex tasks.

7. Test Extensively

Before deployment, thorough testing is essential:

Unit Testing: Each component (intent recognition, entity extraction, etc.)

Integration Testing: Interactions with external systems

User Testing: Real users interacting with the bot to check for errors, confusion, and gaps

Testing should focus on usability, accuracy, fallback behavior, and performance under load.

8. Deploy the Chatbot

Once the chatbot passes all tests, it’s ready for deployment. Ensure it’s launched on the desired platforms and integrated with necessary monitoring tools. Set up analytics dashboards to track:

Number of users

Engagement rate

Drop-off points

Intent success rate

Cloud-based services make deployment scalable and manageable.

9. Monitor, Analyze, and Optimize

Post-launch, the real work begins. Continuous improvement is key to chatbot success:

Collect conversation logs and analyze them for improvement areas

Use analytics to track usage trends and performance metrics

Regularly retrain the AI model with new data

Add new intents, flows, or languages based on user feedback

Optimization should be a regular process to keep the chatbot relevant and effective.

10. Ensure Security and Compliance

AI chatbots often handle sensitive data. It’s critical to:

Implement end-to-end encryption

Follow GDPR, HIPAA, or other industry-specific regulations

Use secure APIs and authentication mechanisms

Security measures must be baked in from the design phase, not added later.

Conclusion

AI chatbot development is a multi-stage journey that combines strategic planning, technical expertise, and ongoing refinement. By following these key steps—from goal setting and tool selection to testing and optimization—you can build a chatbot that not only automates tasks but also enhances user experience and delivers business value.

As businesses increasingly embrace automation, AI chatbot development is no longer optional—it’s a competitive necessity. Whether you're a startup or an enterprise, investing in a well-planned chatbot strategy can lead to smarter interactions, happier customers, and a more efficient organization.

The New Search Era: AI Prioritises Data, Not Destination

Here’s what no one tells you about the future of search: it’s no longer about sending traffic to your website. It’s about serving answers directly before a user ever clicks. Google’s AI Overviews are making that reality loud and clear.

If you’ve noticed your organic clicks dropping, your carefully crafted blog content being skimmed past, or your product pages buried under zero-click results, you’re not alone.

The game has changed. And in this new AI-first landscape, the brands that win are the ones who show up with data, not fluff. Insights, not intros. Clarity, not clutter.

Let’s break down what’s really happening and what smart B2B startups need to do about it.

Forget Rankings. Focus on Responses.

Traditional SEO taught us to chase traffic. Rank high, get the clicks, nurture the lead. Simple, right?

But AI Overviews? They’re flipping that logic.

Search engines are no longer prioritising where to send users. Instead, they’re trying to eliminate the need for a click at all.

Google’s AI now scrapes and compiles information from multiple sources to deliver “answers” directly on the results page. And while that might be great for users, for brands it means one thing:

If your content isn’t structured to feed the AI’s understanding, you’re invisible.

Your Website Isn’t the Destination, Your Data Is.

If you’re still writing content to “rank for keywords,” it’s time to recalibrate.

AI is no longer ranking pages based on old-school SEO metrics. It’s evaluating how well your content answers intent-rich queries, fits schema markup, and integrates into knowledge graphs.

In other words, AI isn’t looking for pretty blog posts. It’s looking for credible data.

That means your marketing team needs to start thinking like a product team:

What structured content can we surface?

Are we using a schema to tag it?

Can our data be easily parsed and pulled into AI summaries?

Because in this world, being source material beats being page one.

The Rise of the “Answer Engine”

Let’s call this what it is: we’ve entered the Answer Engine era.

And your goal isn’t just visibility anymore, it’s extractability. If AI can’t easily extract meaning from your site, you don’t get featured. Period.

That’s where Answer Engine Optimization (AEO) comes in. It’s not just the evolution of SEO. It’s what connects your brand to the way search works now.

AEO helps you:

Make your site machine-readable

Align content to high-intent search patterns

Build topic authority across your niche

Show up in AI Overviews and voice search queries

So, What Should You Be Doing Now?

If you’re a startup with limited marketing resources, here’s the real talk:

You don’t need more content. You need better signals.

That means:

✔ Structuring your existing content with schema

✔ Prioritising entity mapping and topic clusters

✔ Using clear, concise language that aligns with user intent

✔ Thinking beyond blogs: use case pages, product documentation, FAQs, and glossaries all matter

This is the kind of strategy you won’t get from a junior marketer or your old SEO playbook.

AI’s Not the Enemy. But It’s Not Waiting for You

Most bootstrapped founders I talk to are still wondering why their content isn’t performing. The truth?

You’re not behind because your product isn’t good. You’re behind because your data isn’t optimised for the new rules of search.

This isn’t just a content shift. It’s a mindset shift.

And if your internal team isn’t set up to think strategically about AEO, structured content, and AI-readiness, then it’s time to bring in leadership that can.

Final Thoughts: Don’t Just Show Up — Be Found

We’re moving into a world where search engines don’t just recommend. They respond. And the brands that get seen will be the ones built for extraction, not just exploration.

If you’re ready to align your SEO and marketing with where search is going, not where it’s been, start here: AEO isn’t optional. It’s urgent.

Need Help Becoming AI-Search Ready?

At JJ Creative Media Co., we help B2B startups and lean tech teams rethink their SEO from the ground up, through structured strategy, smart content, and executive-level marketing leadership.

Want to check if your site is AEO-ready?

[Download the 7-Point AEO Readiness Checklist] Or let’s talk about how a Fractional CMO can help realign your marketing for the AI era.

Contact me at JJ Creative Media Co.

How Can I Use Programmatic SEO to Launch a Niche Content Site?

Launching a niche content site can be both exciting and rewarding, especially when it's done with a smart strategy like programmatic SEO. Whether you're targeting a hyper-specific audience or aiming to dominate long-tail keywords, programmatic SEO can give you an edge by scaling your content without sacrificing quality. If you're looking to build a site that ranks fast and drives passive traffic, this is a strategy worth exploring. And if you're unsure where to start, a professional SEO agency in Markham can help bring your vision to life.

What Is Programmatic SEO?

Programmatic SEO involves using automated tools and data to create large volumes of optimized pages—typically targeting long-tail keyword variations. Instead of manually writing each piece of content, programmatic SEO leverages templates, databases, and keyword patterns to scale content creation efficiently.

For example, a niche site about hiking trails might use programmatic SEO to create individual pages for every trail in Canada, each optimized for keywords like “best trail in [location]” or “hiking tips for [terrain].”

Steps to Launch a Niche Site Using Programmatic SEO

1. Identify Your Niche and Content Angle

Choose a niche that:

Has clear search demand

Allows for structured data (e.g., locations, products, how-to guides)

Has low to medium competition

Examples: electric bike comparisons, gluten-free restaurants by city, AI tools for writers.

2. Build a Keyword Dataset

Use SEO tools (like Ahrefs, Semrush, or Google Keyword Planner) to extract long-tail keyword variations. Focus on "X in Y" or "best [type] for [audience]" formats. If you're working with an SEO agency in Markham, they can help with in-depth keyword clustering and search intent mapping.

3. Create Content Templates

Build templates that can dynamically populate content with variables like location, product type, or use case. A content template typically includes:

Intro paragraph

Keyword-rich headers

Dynamic tables or comparisons

FAQs

Internal links to related pages
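As a rough sketch of how such a template gets populated, the snippet below fills one page per data row using Python's built-in `string.Template`. The template text, field names (`category`, `city`, `count`), and URL pattern are hypothetical choices for illustration.

```python
from string import Template

# Hypothetical page template; $-placeholders are filled per data row.
PAGE = Template(
    "Best $category in $city\n"
    "Looking for $category in $city? We compared $count options.\n"
)

def slugify(text: str) -> str:
    """Lowercase and join words with hyphens for clean URLs."""
    return "-".join(text.lower().split())

def build_pages(rows: list) -> dict:
    """Return {url_path: page_text} for every row in the dataset."""
    pages = {}
    for row in rows:
        path = f"/{slugify(row['category'])}-in-{slugify(row['city'])}/"
        pages[path] = PAGE.substitute(row)
    return pages
```

`substitute` raises a `KeyError` when a row is missing a field, which is useful here: a page with an unfilled placeholder never gets published silently.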

4. Source and Structure Your Data

Use public datasets, APIs, or custom scraping to populate your content. Clean, accurate data is the backbone of programmatic SEO.

5. Automate Page Generation

Use platforms like Webflow (with CMS collections), WordPress (with custom post types), or even a headless CMS like Strapi to automate publishing. If you’re unsure about implementation, a skilled SEO agency in Markham can develop a custom solution that integrates data, content, and SEO seamlessly.

6. Optimize for On-Page SEO

Every programmatically created page should include:

Title tags and meta descriptions with dynamic variables

Clean URL structures (e.g., /tools-for-freelancers/)

Internal linking between related pages

Schema markup (FAQ, Review, Product)
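For the schema markup item, FAQ markup is usually emitted as a JSON-LD block in the page. A minimal sketch of generating it from question-and-answer pairs follows; the function name `faq_jsonld` is made up for this example, while the `FAQPage`, `Question`, and `Answer` types come from the schema.org vocabulary.

```python
import json

def faq_jsonld(faqs: list) -> str:
    """Serialize (question, answer) pairs as schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(data, indent=2)
```

The returned string would be placed inside a `script` tag of type `application/ld+json` in each generated page.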

7. Track, Test, and Improve

Once live, monitor your pages via Google Search Console. Use A/B testing to refine titles, layouts, and content. Focus on improving pages with impressions but low click-through rates (CTR).
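Finding pages with impressions but a low CTR is simple arithmetic once the data is exported. The sketch below assumes rows with `page`, `clicks`, and `impressions` fields (similar to a Search Console performance export); the thresholds of 100 impressions and a 2% CTR are arbitrary assumptions to tune for your site.

```python
def low_ctr_pages(rows, min_impressions=100, max_ctr=0.02):
    """Return (page, ctr) pairs with enough impressions but a weak CTR."""
    flagged = []
    for row in rows:
        if row["impressions"] < min_impressions:
            continue  # too little data to judge this page
        ctr = row["clicks"] / row["impressions"]
        if ctr <= max_ctr:
            flagged.append((row["page"], ctr))
    # Worst performers first.
    return sorted(flagged, key=lambda item: item[1])
```

The pages it returns are the ones already being shown in search, so a better title or meta description there tends to pay off sooner than publishing new pages.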

Why Work with an SEO Agency in Markham?

Executing programmatic SEO at scale requires a mix of SEO strategy, web development, content structuring, and data management. A professional SEO agency in Markham brings all these capabilities together, helping you:

Build a robust keyword strategy

Design efficient, scalable page templates

Ensure proper indexing and crawlability

Avoid duplication and thin content penalties

With local expertise and technical know-how, they help you launch faster, rank better, and grow sustainably.

Final Thoughts

Programmatic SEO is a powerful method to launch and scale a niche content site, if you do it right. By combining automation with strategic keyword targeting, you can dominate long-tail search and generate massive organic traffic. To streamline the process and avoid costly mistakes, partner with an experienced SEO agency in Markham that understands both the technical and content sides of SEO.

Ready to build your niche empire? Programmatic SEO could be your best-kept secret to success.

Information Cloud Data Integration: Unifying Data for Smarter Business Decisions

Why Information Cloud Data Integration Matters

Today’s businesses run on data—but that data is often scattered across systems, platforms, and cloud environments. Without integration, information remains siloed, reducing the value organizations can extract from their digital assets.

Information Cloud Data Integration bridges this gap by enabling seamless data flow across cloud-based applications, databases, and on-premise environments. With the right integration strategy, enterprises can unlock real-time insights, improve collaboration, and accelerate data-driven decision-making.

What is Information Cloud Data Integration?

Information Cloud Data Integration refers to the process of combining data from various cloud-based and hybrid sources into a centralized, cohesive system for analysis, reporting, or operational use. This includes integration tools and platforms that allow:

Real-time data synchronization

Batch and ETL (Extract, Transform, Load) processing

API-driven connections

AI-powered data mapping

It is especially critical in multi-cloud and hybrid infrastructures where consistent, reliable data access is key to operational success.

Core Components of a Cloud Data Integration Strategy

Unlike traditional integration methods, cloud data integration must account for agility, scalability, and security. Key components include:

1. Data Connectors and APIs

Allowing integration between SaaS apps (e.g., Salesforce, SAP, ServiceNow) and cloud storage (e.g., AWS S3, Google Cloud Storage).

2. Data Transformation and Cleansing Tools

Ensuring consistency and accuracy across formats and sources.

3. Secure Transfer Protocols

Encrypting data in transit and at rest, ensuring compliance with regulations like GDPR or HIPAA.

4. Automation and Orchestration Engines

Automating workflows, scheduling updates, and triggering data-based actions.
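To illustrate how the transformation and cleansing component fits into an ETL flow, here is a small sketch that merges records from two sources with different field names and date formats. The sources, field names, and sample rows are invented for the example; a real pipeline would read from connectors and write to a warehouse.

```python
from datetime import datetime

# Hypothetical records from two sources with different field names and formats.
CRM_ROWS = [{"Email": " Ana@Example.com ", "signup": "2024-03-01"}]
BILLING_ROWS = [{"email": "ana@example.com", "signup_date": "01/03/2024"}]

def normalize(email: str, date_text: str, date_format: str) -> dict:
    """Transform one record into a shared schema with ISO dates."""
    return {
        "email": email.strip().lower(),
        "signup": datetime.strptime(date_text, date_format).date().isoformat(),
    }

def integrate() -> list:
    """Extract from both sources, transform, and load de-duplicated rows."""
    rows = [normalize(r["Email"], r["signup"], "%Y-%m-%d") for r in CRM_ROWS]
    rows += [normalize(r["email"], r["signup_date"], "%d/%m/%Y") for r in BILLING_ROWS]
    # Records that become identical after cleansing collapse into one.
    unique = {(r["email"], r["signup"]): r for r in rows}
    return list(unique.values())
```

Here the two source rows describe the same customer, and only after cleansing (trimmed, lowercased email and a common date format) does that become visible to the de-duplication step.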

Real-World Use Cases

Supply Chain Visibility

Manufacturers integrate supplier, logistics, and inventory data into a central analytics dashboard for improved forecasting and reduced delays.

Marketing & Sales Alignment

B2B enterprises synchronize CRM, email automation, and customer data platforms to create unified customer profiles and enhance targeting.

Healthcare Data Exchange

Hospitals use cloud integration to combine EMRs, lab systems, and patient engagement platforms for a 360° view of patient history.

Financial Reporting

Banks merge data from loan management, customer transactions, and compliance systems for real-time financial analysis and risk management.

Benefits of Information Cloud Data Integration

✔ Centralized and accurate business intelligence

✔ Real-time data access across departments

✔ Reduced manual data handling and errors

✔ Better customer and employee experiences

✔ Scalable architecture for future growth

Common Challenges and Solutions

Challenge | How to Overcome

Data silos in legacy systems | Use hybrid integration platforms that support both cloud and on-premise

Inconsistent data formats | Apply automated data cleansing and transformation rules

Security and compliance risks | Implement access control, encryption, and audit logs

Trends Shaping Cloud Data Integration in 2025

AI-Driven Integration: Automating data mapping and anomaly detection.

iPaaS Growth: Integration Platform as a Service is becoming the standard for enterprise-grade integration.

Self-Service Tools: Empowering business users to build data flows without IT dependency.

Edge-to-Cloud Data Pipelines: Enabling insights from IoT and remote devices in real time.

How Enterprises Can Maximize Cloud Data Integration

Unifying cloud ecosystems requires a well-planned data integration strategy—one that balances performance, security, and scalability. Success begins with selecting the right tools, building flexible architectures, and ensuring seamless data flow between systems like Salesforce, Azure, SAP, and various APIs.

A strategic approach to integration empowers organizations to automate operations, eliminate data silos, and gain real-time insights that drive innovation and faster decision-making.

Conclusion

Information Cloud Data Integration is vital for organizations aiming to stay competitive in a data-driven world. As your integration needs grow, aligning with the right expertise ensures long-term success. At Prophecy Technologies, we specialize in delivering customized cloud integration solutions that are secure, scalable, and aligned with your business goals—empowering you to turn information into action.

#CloudDataIntegration#DataUnification#RealTimeInsights#DataDrivenDecisions#DigitalTransformation#ProphecyTechs

Behind the Scenes of Google Maps – The Data Science Powering Real-Time Navigation

Whether you're finding the fastest route to your office or avoiding a traffic jam on your way to dinner, Google Maps is likely your trusted co-pilot. But have you ever stopped to wonder how this app always seems to know the best way to get you where you’re going?

Behind this everyday convenience lies a powerful blend of data science, artificial intelligence, machine learning, and geospatial analysis. In this blog, we’ll take a journey under the hood of Google Maps to explore the technologies that make real-time navigation possible.

The Core Data Pillars of Google Maps

At its heart, Google Maps relies on multiple sources of data:

Satellite Imagery

Street View Data

User-Generated Data (Crowdsourcing)

GPS and Location Data

Third-Party Data Providers (like traffic and transit systems)

All of this data is processed, cleaned, and integrated through complex data pipelines and algorithms to provide real-time insights.

Machine Learning in Route Optimization

One of the most impressive aspects of Google Maps is how it predicts the fastest and most efficient route for your journey. This is achieved using machine learning models trained on:

Historical Traffic Data: How traffic typically behaves at different times of the day.

Real-Time Traffic Conditions: Collected from users currently on the road.

Road Types and Speed Limits: Major highways vs local streets.

Events and Accidents: Derived from user reports and partner data.

These models use regression algorithms and probabilistic forecasting to estimate travel time and suggest alternative routes if necessary. The more people use Maps, the more accurate it becomes—thanks to continuous model retraining.

Real-Time Traffic Predictions: How Does It Work?

Google Maps uses real-time GPS data from millions of devices (anonymized) to monitor how fast vehicles are moving on specific road segments.

If a route that normally takes 10 minutes is suddenly showing delays, the system can:

Update traffic status dynamically (e.g., show red for congestion).

Reroute users automatically if a faster path is available.

Alert users with estimated delays or arrival times.

This process is powered by stream processing systems that analyze data on the fly, updating the app’s traffic layer in real time.
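The idea of analyzing speed data on the fly can be sketched with a sliding window per road segment. To be clear, this is not Google's system: the class name, window size, and the 0.4 and 0.75 thresholds are assumptions chosen only to show the technique.

```python
from collections import defaultdict, deque

class SegmentMonitor:
    """Keep a sliding window of recent speeds per road segment."""

    def __init__(self, window: int = 5):
        # Each segment keeps only its most recent `window` speed readings.
        self.speeds = defaultdict(lambda: deque(maxlen=window))

    def add_ping(self, segment_id: str, speed_kmh: float) -> None:
        self.speeds[segment_id].append(speed_kmh)

    def status(self, segment_id: str, free_flow_kmh: float) -> str:
        """Classify congestion from the ratio of recent to free-flow speed."""
        window = self.speeds[segment_id]
        if not window:
            return "unknown"
        ratio = (sum(window) / len(window)) / free_flow_kmh
        if ratio < 0.4:
            return "red"
        if ratio < 0.75:
            return "orange"
        return "green"
```

Because old readings fall out of the window automatically, a segment turns red shortly after speeds drop and recovers just as quickly when traffic clears.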

Crowdsourced Data – Powered by You

A big part of Google Maps' accuracy comes from you—the users. Here's how crowdsourcing contributes:

Waze Integration: Google owns Waze, and integrates its crowdsourced traffic reports.

User Reports: You can report accidents, road closures, or speed traps.

Map Edits: Users can suggest edits to business names, locations, or road changes.

All this data is vetted using AI and manual review before being pushed live, creating a community-driven map that evolves constantly.

Street View and Computer Vision

Google Maps' Street View isn’t just for virtual sightseeing. It plays a major role in:

Detecting road signs, lane directions, and building numbers.

Updating maps with the latest visuals.

Powering features like AR navigation (“Live View”) on mobile.

These images are processed using computer vision algorithms that extract information from photos. For example, identifying a “One Way” sign and updating traffic flow logic in the map's backend.

Dynamic Rerouting and ETA Calculation

One of the app’s most helpful features is dynamic rerouting—recalculating your route if traffic builds up unexpectedly.

Behind the scenes, this involves:

Continuous location tracking

Comparing alternative paths using current traffic models

Balancing distance, speed, and risk of delay

ETA (Estimated Time of Arrival) is not just based on distance—it incorporates live conditions, driver behavior, and historical delay trends.
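Comparing alternative paths under current traffic is, at its core, a shortest-path problem over travel times. The sketch below uses Dijkstra's algorithm on a tiny made-up road graph; production routing engines use far more elaborate methods, so treat this as an illustration of the principle only.

```python
import heapq

def fastest_route(graph, start, goal):
    """Dijkstra over travel times; graph[node] = {neighbor: minutes}."""
    queue = [(0.0, start, [start])]
    settled = set()
    while queue:
        minutes, node, path = heapq.heappop(queue)
        if node == goal:
            return minutes, path
        if node in settled:
            continue
        settled.add(node)
        for neighbor, cost in graph.get(node, {}).items():
            if neighbor not in settled:
                heapq.heappush(queue, (minutes + cost, neighbor, path + [neighbor]))
    return float("inf"), []

# Hypothetical road graph with current travel times in minutes.
roads = {"A": {"B": 5, "C": 4}, "B": {"D": 5}, "C": {"D": 3}, "D": {}}
before = fastest_route(roads, "A", "D")   # goes via C (7 minutes)
roads["C"]["D"] = 15                      # congestion reported on C -> D
after = fastest_route(roads, "A", "D")    # reroutes via B (10 minutes)
```

Rerouting is just running the same search again after the edge weights change, and the returned total doubles as the ETA for the chosen path.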

Mapping the World – At Scale

To maintain global accuracy, Google Maps uses:

Satellite Data Refreshes every 1–3 years

Local Contributor Programs in remote regions

AI-Powered Map Generation, where algorithms stitch together raw imagery into usable maps

In fact, Google uses deep learning models to automatically detect new roads and buildings from satellite photos. This accelerates map updates, especially in developing areas where manual updates are slow.

Voice and Search – NLP in Maps

Search functionality in Google Maps is driven by natural language processing (NLP) and contextual awareness.

For example:

Searching “best coffee near me” understands your location and intent.

Voice queries like “navigate to home” trigger saved locations and route planning.

Google Maps uses entity recognition and semantic analysis to interpret your input and return the most relevant results.

Privacy and Anonymization

With so much data collected, privacy is a major concern. Google uses techniques like:

Location anonymization

Data aggregation

Opt-in location sharing

This ensures that while Google can learn traffic patterns, it doesn’t store identifiable travel histories for individual users (unless they opt into Location History features).
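Data aggregation for privacy can be illustrated with a minimum-group-size rule: report an average only when enough distinct devices contributed to it. This is a generic sketch, not a description of Google's actual pipeline, and the threshold of three devices is an arbitrary assumption.

```python
from collections import defaultdict

def aggregate_speeds(pings, min_devices=3):
    """Average speed per segment, dropping segments with too few devices."""
    by_segment = defaultdict(dict)
    for device_id, segment_id, speed in pings:
        by_segment[segment_id][device_id] = speed  # keep latest ping per device
    return {
        segment: sum(speeds.values()) / len(speeds)
        for segment, speeds in by_segment.items()
        if len(speeds) >= min_devices  # suppress small, re-identifiable groups
    }
```

The output contains segment averages only, with no device identifiers, so traffic patterns survive while individual trips do not.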

The Future: Predictive Navigation and AR

Google Maps is evolving beyond just directions. Here's what's coming next:

Predictive Navigation: Anticipating where you’re going before you enter the destination.

AR Overlays: Augmented reality directions that appear on your camera screen.

Crowd Density Estimates: Helping you avoid crowded buses or busy places.

These features combine AI, IoT, and real-time data science for smarter, more helpful navigation.

Conclusion:

From finding your favorite restaurant to getting you home faster during rush hour, Google Maps is a masterpiece of data science in action. It uses a seamless combination of:

Geospatial data

Machine learning

Real-time analytics

User feedback

…all delivered in seconds through a simple, user-friendly interface.

Next time you reach your destination effortlessly, remember—it’s not just GPS. It’s algorithms, predictions, and billions of data points working together in the background.

#nschool academy#datascience#googlemaps#machinelearning#realtimedata#navigationtech#bigdata#artificialintelligence#geospatialanalysis#maptechnology#crowdsourceddata#predictiveanalytics#techblog#smartnavigation#locationintelligence#aiapplications#trafficprediction#datadriven#dataengineering#digitalmapping#computerVision#coimbatore

Efficient Naver Map Data Extraction for Business Listings

Introduction

In today's competitive business landscape, having access to accurate and comprehensive business data is crucial for strategic decision-making and targeted marketing campaigns. Naver Map Data Extraction presents a valuable opportunity to gather insights about local businesses, consumer preferences, and market trends for companies looking to expand their operations or customer base in South Korea.

Understanding the Value of Naver Map Business Data

Naver is often called "South Korea's Google," dominating the local search market with over 70% market share. The platform's mapping service contains extensive information about businesses across South Korea, including contact details, operating hours, customer reviews, and location data. Naver Map Business Data provides international and local businesses with rich insights to inform market entry strategies, competitive analysis, and targeted outreach campaigns.

However, manually collecting this information would be prohibitively time-consuming and inefficient. This is where strategic Business Listings Scraping comes into play, allowing organizations to systematically collect and analyze business information at scale.

The Challenges of Accessing Naver Map Data

Unlike some other platforms, Naver presents unique challenges for data collection:

Language barriers: Naver's interface and content are primarily Korean, creating obstacles for international businesses.

Complex website structure: Naver's dynamic content loading makes straightforward scraping difficult.

Strict rate limiting: Aggressive anti-scraping measures can block IP addresses that send too many requests.

CAPTCHA systems: Automated verification challenges to prevent bot activity.

Terms of service considerations: Understanding the Legal Ways To Scrape Data From Naver Map is essential.

Ethical and Legal Considerations

Before diving into the technical aspects of Naver Map API Scraping, it's crucial to understand the legal and ethical framework. While data on the web is publicly accessible, how you access it matters from legal and ethical perspectives.

To scrape Naver Map data without violating its terms of service, consider these principles:

Review Naver's terms of service and robots.txt file to understand access restrictions.

Implement respectful scraping practices with reasonable request rates.

Consider using official APIs where available.

Store only the data you need and ensure compliance with privacy regulations, such as GDPR and Korea's Personal Information Protection Act.

Use the data for legitimate business purposes without attempting to replicate Naver's services.
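Checking robots.txt before fetching a URL can be automated with Python's standard `urllib.robotparser` module. The sketch below parses a made-up robots.txt (not Naver's actual file) so that it runs without any network access; the bot name and URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, not Naver's actual file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

def make_checker(robots_text: str) -> RobotFileParser:
    """Build a parser from robots.txt text that was already downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser

checker = make_checker(ROBOTS_TXT)
allowed = checker.can_fetch("my-bot", "https://example.com/listings/1")
blocked = checker.can_fetch("my-bot", "https://example.com/private/data")
delay = checker.crawl_delay("my-bot")  # seconds to wait between requests, or None
```

Keep in mind that robots.txt only covers crawler access rules. The platform's terms of service and privacy regulations still need a separate review.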

Effective Methods For Scraping Naver Map Business Data

There are several approaches to gathering business listing data from Naver Maps, each with advantages and limitations.

Here are the most practical methods:

1. Official Naver Maps API

Naver provides official APIs that allow developers to access map data programmatically. While these APIs have usage limitations and costs, they represent the most straightforward and compliant Naver Map Business Data Extraction method.

The official API offers:

Geocoding and reverse geocoding capabilities.

Local search functionality.

Directions and routing services.

Address verification features.

Using the official API requires registering a developer account and adhering to Naver's usage quotas and pricing structure. However, it provides reliable, sanctioned access to the data without risking account blocks or legal issues.

2. Web Scraping Solutions

When API limitations prove too restrictive for your business needs, web scraping becomes a viable alternative. Naver Map Scraping Tools range from simple script-based solutions to sophisticated frameworks that can handle dynamic content and bypass basic anti-scraping measures.

Key components of an effective scraping solution include:

Proxy Rotation: Rotating between multiple proxy servers is essential to prevent IP bans when accessing large volumes of data. This spreads requests across different IP addresses, making the scraping activity appear more like regular user traffic than automated collection. Commercial proxy services offer:

Residential proxies that use real devices and ISPs.

Datacenter proxies that provide cost-effective rotation options.

Geographically targeted proxies that can access region-specific content.

Request Throttling: Implementing delays between requests helps mimic human browsing patterns and reduces server load. Adaptive throttling that adjusts based on server response times can optimize the balance between collection speed and avoiding detection.

Browser Automation: Tools like Selenium and Playwright can control real browsers to render JavaScript-heavy pages and interact with elements just as a human user would. This approach is effective for navigating Naver's dynamic content loading system.
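Adaptive request throttling can be reduced to one small decision function: lengthen the pause when the server signals strain and shorten it slowly when responses are healthy. The sketch below is one possible policy, with every number in it (the multipliers, the 2-second slowness cutoff, the 1 to 60 second bounds) chosen as an assumption rather than taken from any particular tool.

```python
import random

def next_delay(current: float, status_code: int, response_seconds: float,
               base: float = 1.0, ceiling: float = 60.0) -> float:
    """Return the pause (in seconds) to take before the next request."""
    if status_code in (429, 503):
        delay = current * 2              # back off hard when the server pushes back
    elif response_seconds > 2.0:
        delay = current * 1.5            # server is slow, ease off
    else:
        delay = current * 0.9            # healthy response, recover gradually
    # Never go below the base pause or above the ceiling.
    return max(base, min(delay, ceiling))

def with_jitter(delay: float, spread: float = 0.25) -> float:
    """Randomize the pause so requests are not perfectly periodic."""
    return delay * random.uniform(1 - spread, 1 + spread)
```

A collection loop would call `next_delay` after each response and sleep for `with_jitter(delay)` seconds, so the request rate follows what the server can comfortably handle.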

3. Specialized Web Scraping API Services

For businesses lacking technical resources to build and maintain scraping infrastructure, a specialized Web Scraping API service offers a middle-ground solution. These services handle the complexities of proxy rotation, browser rendering, and CAPTCHA solving while providing a simple API interface to request data.

Benefits of using specialized scraping APIs include:

Reduced development and maintenance overhead.

Built-in compliance with best practices.

Scalable infrastructure that adapts to project needs.

Regular updates to counter anti-scraping measures.

Structuring Your Naver Map Data Collection Process

Regardless of the method chosen, a systematic approach to Naver Map Data Extraction will yield the best results. Here's a framework to guide your collection process:

1. Define Clear Data Requirements

Before beginning any extraction project, clearly define what specific business data points you need and why.

This might include:

Business names and categories.

Physical addresses and contact information.

Operating hours and service offerings.

Customer ratings and review content.

Geographic coordinates for spatial analysis.

Precise requirements prevent scope creep and ensure you collect only what's necessary for your business objectives.

2. Develop a Staged Collection Strategy

Rather than attempting to gather all data at once, consider a multi-stage approach:

Initial broad collection of business identifiers and basic information.

Categorization and prioritization of listings based on business relevance.

Detailed collection focusing on high-priority targets.

Periodic updates to maintain data freshness.

This approach optimizes resource usage and allows for refinement of collection parameters based on initial results.

3. Implement Data Validation and Cleaning

Raw data from Naver Maps often requires preprocessing before it becomes business-ready.

Common data quality issues include:

Inconsistent formatting of addresses and phone numbers.

Mixed language entries (Korean and English).

Duplicate listings with slight variations.

Outdated or incomplete information.

Implementing automated validation rules and manual spot-checking ensures the data meets quality standards before analysis or integration with business systems.
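Two of the issues above, inconsistent phone formats and near-duplicate listings, lend themselves to simple automated rules. The sketch below is a simplified assumption about Korean numbers (a leading 0 domestically, +82 internationally, 9 to 11 digits), so special formats such as short service numbers would need extra rules; the field names are hypothetical.

```python
import re
from typing import Optional

def normalize_phone(raw: str) -> Optional[str]:
    """Return digits only, turning a +82 prefix into a leading 0; None if implausible."""
    digits = re.sub(r"\D", "", raw)
    if digits.startswith("82"):
        digits = "0" + digits[2:]
    return digits if 9 <= len(digits) <= 11 and digits.startswith("0") else None

def dedupe(listings: list) -> list:
    """Keep the first listing per (normalized name, phone) pair."""
    seen, unique = set(), []
    for item in listings:
        # Ignore case and whitespace differences in the business name.
        key = ("".join(item["name"].lower().split()), normalize_phone(item["phone"]))
        if key not in seen:
            seen.add(key)
            unique.append(item)
    return unique
```

Listings that fail validation (a `None` phone) are good candidates for the manual spot-check rather than silent deletion.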

Specialized Use Cases for Naver Product Data Scraping

Beyond basic business information, Naver's ecosystem includes product listings and pricing data that can provide valuable competitive intelligence.

Naver Product Data Scraping enables businesses to:

Monitor competitor pricing strategies.

Identify emerging product trends.

Analyze consumer preferences through review sentiment.

Track promotional activities across the Korean market.

This specialized data collection requires targeted approaches that navigate Naver's shopping sections and product detail pages, often necessitating more sophisticated parsing logic than standard business listings.

Data Analysis and Utilization

The actual value of Naver Map Business Data emerges during analysis and application. Consider these strategic applications:

Market Penetration Analysis

By mapping collected business density data, companies can identify underserved areas or regions with high competitive saturation. This spatial analysis helps optimize expansion strategies and resource allocation.
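As a rough illustration of density mapping, the sketch below buckets listing coordinates into a fixed latitude/longitude grid and counts listings per cell. The cell size of 0.01 degrees is an arbitrary assumption, and a real analysis would likely use administrative boundaries or a proper spatial index instead.

```python
import math
from collections import Counter


def grid_density(points, cell_deg=0.01):
    """Count (lat, lon) points per grid cell of cell_deg degrees on each side."""
    counts = Counter()
    for lat, lon in points:
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        counts[cell] += 1
    return counts
```

Cells with high counts suggest saturation; cells with few or no listings in a populated area are candidates for a closer look.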

Competitive Benchmarking

Aggregated ratings and review data provide insights into competitor performance and customer satisfaction. This benchmarking helps identify service gaps and opportunities for differentiation.
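One simple aggregate is a review-weighted average rating per competitor, so that a branch with 500 reviews counts for more than one with 5. The sketch assumes each listing carries hypothetical `brand`, `rating`, and `review_count` keys.

```python
from collections import defaultdict


def weighted_ratings(listings):
    """Return the review-weighted mean rating per brand.

    Brands whose listings have no reviews at all are left out.
    """
    totals = defaultdict(lambda: [0.0, 0])  # brand -> [rating sum, review count]
    for item in listings:
        entry = totals[item["brand"]]
        entry[0] += item["rating"] * item["review_count"]
        entry[1] += item["review_count"]
    return {brand: s / n for brand, (s, n) in totals.items() if n > 0}
```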

Lead Generation and Outreach

Filtered business contact information enables targeted B2B marketing campaigns, partnership initiatives, and sales outreach programs tailored to specific business categories or regions.

How Can Retail Scrape Help You?

We understand the complexities involved in Naver Map API Scraping and the strategic importance of accurate Korean market data. Our specialized team combines technical expertise with deep knowledge of Korean digital ecosystems to deliver reliable, compliance-focused data solutions.

Our approach to Naver Map Business Data Extraction is built on three core principles:

Compliance-First Approach: We strictly adhere to Korean data regulations, ensuring all activities align with platform guidelines for ethical, legal scraping.

Korea-Optimized Infrastructure: Our tools are designed for Korean platforms, offering native language support and precise parsing for Naver’s unique data structure.

Insight-Driven Delivery: Beyond raw data, we offer value-added intelligence—market insights, tailored reports, and strategic recommendations to support your business in Korea.

Conclusion

Harnessing the information available through Naver Map Data Extraction offers significant competitive advantages for businesses targeting the Korean market. By applying effective methods for scraping Naver Map business data, with attention to legal compliance, technical best practices, and strategic application, organizations can develop a deeper market understanding and more targeted business strategies.

Whether you want to conduct market research, generate sales leads, or analyze competitive landscapes, the rich business data available through Naver Maps can transform your Korean market operations. However, the technical complexities and compliance considerations make this a specialized undertaking requiring careful planning and execution.

Need expert assistance with your Korean market data needs? Contact Retail Scrape today to discuss how our specialized Naver Map Scraping Tools and analytical expertise can support your business objectives.

Source: https://www.retailscrape.com/efficient-naver-map-data-extraction-business-listings.php

Originally Published By https://www.retailscrape.com/

#NaverMapDataExtraction#BusinessListingsScraping#NaverBusinessData#SouthKoreaMarketAnalysis#WebScrapingServices#NaverMapAPIScraping#CompetitorAnalysis#MarketIntelligence#DataExtractionSolutions#RetailDataScraping#NaverMapBusinessListings#KoreanBusinessDataExtraction#LocationDataScraping#NaverMapsScraper#DataMiningServices#NaverLocalSearchData#BusinessIntelligenceServices#NaverMapCrawling#GeolocationDataExtraction#NaverDirectoryScraping


Text

Mapping Borewell Locations Near Rivers and Lakes with GIS-Based Tools

Ever Wonder Where Borewells Are Too Close to Rivers or Lakes?

Or how that affects groundwater recharge? Location matters, and so does how you see it. That’s where GIS (Geographic Information Systems) tools come in.

Who’s Using These Tools?

Planners. Environmentalists. Students. NGOs. Basically anyone who cares about smart water use.

With tools like MAPOG, you can upload borewell data and instantly map:

Which borewells are too close to rivers/lakes

Where recharge zones should be protected

How surface water may influence groundwater

No tech wizardry needed. Just your Excel file and curiosity.

Simple Tools → Smart Maps

Not a GIS pro? No worries. MAPOG lets you turn boring spreadsheets into interactive maps with:

Color-coded borewells by depth or type

Buffer zones around rivers/lakes (like a 300m safe zone)

Recharge hotspots clearly marked

Shareable maps for your team, community, or project page

It’s like Google Maps… but smarter and for groundwater.
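To see what a buffer check does under the hood, here is a minimal sketch that flags borewells lying within 300 m of a water body. It is not how MAPOG works internally; it assumes the river or lake has been sampled as a list of (latitude, longitude) points, and it uses the haversine great-circle distance with a mean Earth radius of 6,371 km.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius, an approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def within_buffer(borewells, water_points, buffer_m=300):
    """Return names of borewells within buffer_m of any sampled water point.

    borewells: iterable of (name, lat, lon); water_points: list of (lat, lon).
    """
    flagged = []
    for name, lat, lon in borewells:
        if any(haversine_m(lat, lon, wlat, wlon) <= buffer_m
               for wlat, wlon in water_points):
            flagged.append(name)
    return flagged
```

Because the water body is represented by sample points rather than its full outline, the result is only as good as the sampling; denser points along the bank give a tighter check.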

Why It Matters

Badly placed borewells can cause over-extraction or contamination.

GIS helps fix that by showing what’s happening and where.

Smart mapping = smarter decisions.

Better planning = sustainable groundwater use.

So… Have You Mapped Your Borewells Yet?

Start now. Find the risk zones. Protect the recharge zones. Because every borewell, and every decision, makes a difference.
