#Hamiltonian Paths


Text

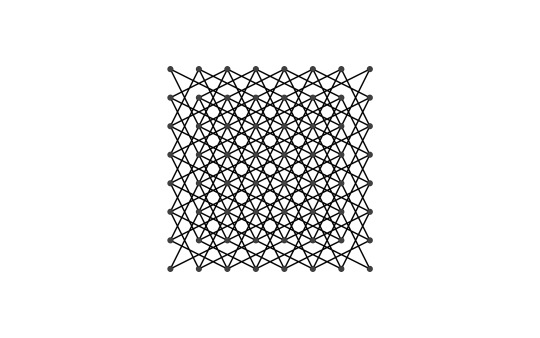

This maze has only one long passage which hits every grid cell without branching. The math term for this is a Hamiltonian path. The algorithm I used works by first finding a boring Hamiltonian path by just squiggling up and down like a lawnmower, then randomly squargling the path until it is sufficiently squiggly.
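The post does not say how the path is randomised. One common way to do it, used here purely as an assumption, is the "backbite" move: pick an end of the path, pick a random grid neighbour of that end, and reverse the stretch of path between them. A minimal sketch (all function names are hypothetical):

```python
import random


def lawnmower_path(width, height):
    """Boring Hamiltonian path: up one column, down the next."""
    path = []
    for x in range(width):
        ys = range(height) if x % 2 == 0 else range(height - 1, -1, -1)
        path.extend((x, y) for y in ys)
    return path


def backbite(path, width, height, rng):
    """Apply one random backbite move in place; the path stays Hamiltonian."""
    if rng.random() < 0.5:
        path.reverse()  # choose which end of the path to rewire
    x, y = path[-1]
    neighbours = [
        (x + dx, y + dy)
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))
        if 0 <= x + dx < width and 0 <= y + dy < height
    ]
    i = path.index(rng.choice(neighbours))
    # Link path[i] to the old end by reversing everything after index i.
    path[i + 1:] = path[i + 1:][::-1]


def is_hamiltonian_path(path, width, height):
    """True if the path visits every grid cell once using unit steps."""
    in_grid = all(0 <= x < width and 0 <= y < height for x, y in path)
    covers = len(path) == width * height == len(set(path))
    unit_steps = all(
        abs(ax - bx) + abs(ay - by) == 1
        for (ax, ay), (bx, by) in zip(path, path[1:])
    )
    return in_grid and covers and unit_steps


def squiggly_path(width, height, moves=1000, seed=0):
    """Start from the lawnmower path and scramble it with backbite moves."""
    rng = random.Random(seed)
    path = lawnmower_path(width, height)
    for _ in range(moves):
        backbite(path, width, height, rng)
    return path
```

Calling `squiggly_path(6, 5)` returns a list of 30 cells; drawing walls everywhere except between consecutive cells gives a one-passage maze like the one pictured.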

47 notes


Text

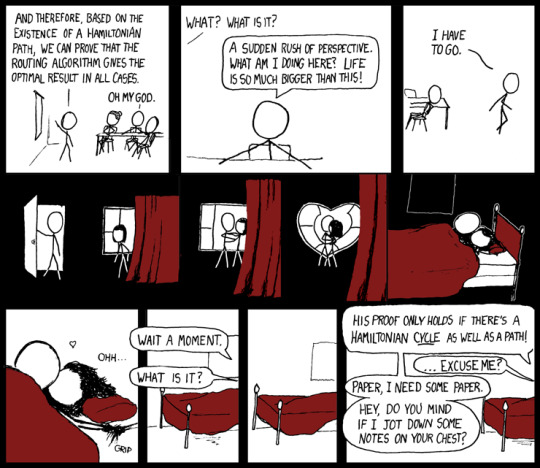

The problem with perspective is that it's bidirectional.

Hamiltonian [Explained]

Transcript Under the Cut

Lecturer: And therefore, based on the existence of a Hamiltonian path, we can prove that the routing algorithm gives the optimal result in all cases.

Cueball: Oh my God.

[Close-up of Cueball.]

Offscreen: What? What is it?

Cueball: A sudden rush of perspective. What am I doing here? Life is so much bigger than this!

[Cueball running out of room.]

Cueball: I have to go.

[Cueball enters darkened room, where Megan waits by window.]

[Cueball and Megan embrace...]

[...and get into bed.]

[A heart appears over the supine bodies.]

Megan: Ohh... grip

Cueball (out of frame): Wait a moment.

Megan (out of frame): What is it?

[Silence.]

Cueball (out of frame): His proof only holds if there's a Hamiltonian cycle as well as a path!

Megan (out of frame): ...excuse me?

Cueball (out of frame): Paper, I need some paper. Hey, do you mind if I jot down some notes on your chest?

29 notes


Text

I step up to the podium

"Hamiltonian paths are space-filling curves" I say

I step down. The crowd boos me.

"No, he's right!" a voice calls out.

I look to the crowd. Alexander Hamilton is standing in the fifth row.

2 notes


Text

Sonic the Hedgehog is stuck in the Zelda Classic quest "Remembrance of Shadows." Luckily, Frontiers got him used to Hamiltonian Path puzzles already.

The last character you drew/wrote about is now stuck in the last game you played. How screwed are they?

66K notes


Text

Quantum Recurrent Embedding Neural Networks Approach

Quantum Recurrent Embedding Neural Network

Trainability issues as network depth increases are a common challenge in finding scalable machine learning models for complex physical systems. Researchers have developed a novel approach dubbed the Quantum Recurrent Embedding Neural Network (QRENN) to overcome these limitations with its unique architecture and strong theoretical foundations.

This result comes from Mingrui Jing, Erdong Huang, Xiao Shi, and Xin Wang of the Thrust of Artificial Intelligence, Information Hub, at the Hong Kong University of Science and Technology (Guangzhou), and Shengyu Zhang of Tencent Quantum Laboratory. As detailed in the article “Quantum Recurrent Embedding Neural Network,” the QRENN can avoid “barren plateaus,” a common and critical difficulty in deep quantum neural network training in which gradients vanish rapidly. Additionally, the QRENN resists classical simulation.

The QRENN combines universal quantum circuit designs with the fast-track paths (skip connections) that ResNet uses in deep learning. Trainability is enabled by maintaining a sufficient “joint eigenspace overlap,” which measures the closeness between the input quantum state and the network's internal feature representations. The persistence of this overlap is proven using dynamical Lie algebras.

Applying the QRENN to Hamiltonian classification, namely identifying symmetry-protected topological (SPT) phases of matter, has validated its theoretical design. SPT phases are distinct states of matter that are hard to identify in condensed matter physics. The QRENN's ability to categorise Hamiltonians and recognise topological phases shows its utility in supervised learning.

Numerical tests demonstrate that the QRENN remains trainable as the quantum system grows. This is crucial for tackling complex real-world challenges. In simulations with a one-dimensional cluster-Ising Hamiltonian, the overlap decreased polynomially, rather than exponentially, as system size increased. This suggests that the network can maintain usable gradients during training, avoiding the vanishing-gradient issue of many QNN architectures.
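To see why polynomial rather than exponential decay matters, here is a small illustration. The two decay laws and their constants are assumptions chosen only to show the shape of the curves; they are not the figures reported for the QRENN.

```python
def polynomial_overlap(n, degree=2):
    """Hypothetical overlap that shrinks like 1 / n**degree."""
    return 1.0 / n ** degree


def exponential_overlap(n):
    """Hypothetical overlap that shrinks like 2**-n (barren-plateau-like)."""
    return 2.0 ** -n


# Print both curves for a few system sizes n (illustrative values only).
for n in (4, 8, 16, 32):
    print(n, polynomial_overlap(n), exponential_overlap(n))
```

At small sizes the two curves are comparable, but the exponential one quickly falls below any precision that a realistic number of measurements could resolve, which is the practical meaning of a barren plateau.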

This paper addresses a significant limitation in quantum machine learning by establishing the trainability of a specific QRENN architecture, which opens the way to more powerful and scalable quantum machine learning models. Future work will examine QRENN applications in financial modelling, drug development, and materials science. The researchers also want to improve training algorithms and to study unsupervised and reinforcement learning with hybrid quantum-classical algorithms that take advantage of both computing paradigms.

More detail on the Quantum Recurrent Embedding Neural Network (QRENN) follows.

Quantum machine learning (QML) has advanced with the Quantum Recurrent Embedding Neural Network (QRENN), which solves the trainability problem that plagues deep quantum neural networks.

Challenge: Barren Plateaus. Conventional quantum neural networks (QNNs) often suffer from “barren plateaus”. As system size or network depth increases, the gradients needed for network training shrink exponentially. Vanishing gradients stop learning, making it difficult to train large, complex QNNs for real-world applications.

The Solution and QRENN Foundations: Two main design elements of the QRENN aim to improve trainability and prevent barren plateaus:

Architecture Inspiration: The design draws on general quantum circuit designs and on well-known deep learning architectures, especially ResNet's fast-track pathways (residual networks). ResNets are notable for training effectively in classical deep learning because they use “skip connections” that let the signal bypass layers.

Joint Eigenspace Overlap: QRENN's trainability relies on its large “joint eigenspace overlap”. Overlap refers to the degree of similarity between the input quantum state and the network's internal feature representations. By preserving this overlap, QRENN ensures gradients remain large. This preservation is rigorously shown using dynamical Lie algebras, which are fundamental for analysing quantum circuit behaviour and characterising physical system symmetries.
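The skip-connection idea borrowed from ResNet can be sketched classically in a few lines. This shows only the classical residual pattern, output = input + layer(input); it is not the QRENN's quantum circuit, and `residual_block` is a hypothetical name.

```python
def residual_block(x, layer):
    """Return x + layer(x): the input 'skips' around the layer and is added back."""
    return [xi + fi for xi, fi in zip(x, layer(x))]


def doubling_layer(x):
    """Stand-in for a trained layer; here it simply doubles each entry."""
    return [2.0 * v for v in x]


print(residual_block([1.0, 2.0], doubling_layer))
```

Because the input is always added back, the block still passes the signal through even when the layer itself contributes almost nothing, which is what keeps very deep classical networks trainable.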

Architectural Details of CV-QRNN: The Continuous-Variable Quantum Recurrent Neural Network (CV-QRNN) operates on information represented in continuous variables (qumodes) instead of discrete qubits.

Inspired by Vanilla RNN: The CV-QRNN design is based on the vanilla RNN architecture, which processes data sequences recurrently. The no-cloning theorem prevents classical RNN variants like LSTM and GRU from being implemented on a quantum computer, so the CV-QRNN instead adapts the basic RNN idea.

A single quantum layer (L) acts on n qumodes in the CV-QRNN. The qumodes are first prepared in the vacuum state.

Important Quantum Gates: The network processes data via quantum gates:

Displacement Gates (D): Acting on a subset of qumodes, they encode classical input data into the quantum network.

Squeezing Gates (S): They apply complex-valued squeezing parameters to the qumodes.

Multiport Interferometers (I): They perform complex linear transformations on several qumodes using beam splitters and phase shifters.

Nonlinearity by Measurement: CV-QRNN provides machine learning nonlinearity using measurements and a quantum system's tensor product structure. After processing, some qumodes (register modes) are transferred to the next iteration, while a subset (input modes) undergo a homodyne measurement and are reset to vacuum. After scaling by a trainable parameter, this measurement's result is input for the next cycle.
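The encode, squeeze, measure, rescale loop described above can be illustrated with a heavily simplified toy. It tracks only the mean and variance of the x quadrature of a single Gaussian mode (with ħ = 1, so the vacuum variance is 1/2), uses real-valued parameters, and leaves out the interferometer and the register modes. All names are hypothetical, and this is not the network from the paper.

```python
import math
import random

VACUUM = (0.0, 0.5)  # (mean, variance) of the x quadrature, hbar = 1


def displace(state, alpha_real):
    """Displacement by a real amplitude shifts the x mean by sqrt(2) * alpha."""
    mean, var = state
    return (mean + math.sqrt(2.0) * alpha_real, var)


def squeeze(state, r):
    """Squeezing with real r rescales x by exp(-r), so the variance by exp(-2r)."""
    mean, var = state
    return (math.exp(-r) * mean, math.exp(-2.0 * r) * var)


def homodyne_x(state, rng):
    """Homodyne measurement of x: draw one Gaussian-distributed outcome."""
    mean, var = state
    return rng.gauss(mean, math.sqrt(var))


def recurrent_step(x_in, weight, r, rng):
    """Encode the input, squeeze, measure, then rescale by a trainable weight."""
    state = squeeze(displace(VACUUM, x_in), r)
    return weight * homodyne_x(state, rng)


rng = random.Random(0)
signal = 0.3
for _ in range(3):
    # The rescaled measurement result becomes the input of the next cycle.
    signal = recurrent_step(signal, weight=0.8, r=0.5, rng=rng)
```

In the toy, the mode is implicitly reset to vacuum at the start of every step, mirroring the reset of the input modes described above.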

Performance and Advantages

According to computer simulations, the CV-QRNN trained about twice as fast as a traditional LSTM network: the former reached its optimal parameters (cost function ≤ 10⁻⁵) in 100 epochs, while the latter took 200. Because large classical machine learning models demand massive processing power and energy, faster training is valuable.

Scalability: The QRENN can be trained as the quantum system grows, which is crucial for practical use. As system size increases, joint eigenspace overlap reduces polynomially, not exponentially.

Task Execution:

Hamiltonian Classification: The QRENN classifies Hamiltonians and detects symmetry-protected topological phases, demonstrating its utility in supervised learning.

Time Series Prediction and Forecasting: After 100 epochs, the CV-QRNN predicted and forecast quasi-periodic functions such as the Bessel function, sine, triangle wave, and damped cosine.

MNIST Image Classification: The CV-QRNN classified handwritten digits such as “3” and “6” with 85% accuracy. This shows that the quantum network learnt the task, although a classical LSTM reached 93% accuracy in fewer epochs.

CV-QRNN can be implemented using commercially available room-temperature quantum-photonic hardware, including powerful homodyne detectors, lasers, beam splitters, phase shifters, and squeezers. Strong Kerr-type interactions are difficult to generate, but obtaining nonlinearity through measurement removes the need for them.

Future research will study how the QRENN can be applied to more complex problems, such as financial modelling, drug development, and materials science. It will also investigate the network's potential for unsupervised and reinforcement learning and develop more efficient and scalable training algorithms.

Research on hybrid quantum-classical algorithms is also vital. A next step is to test these models on quantum hardware instead of simulators. Researchers also seek to evaluate CV-QRNN performance on complex real-world data, such as hurricane strength, and to establish fairer frameworks for comparing classical and quantum networks, such as the effective dimension based on quantum Fisher information.

#QRENN#ArtificialIntelligence#deeplearning#quantumcircuitdesigns#quantummachinelearning#quantumneuralnetworks#News#Technews#Technology#TechnologyNews#Technologytrends#Govindhtech

0 notes

Text

IEEE Transactions on Artificial Intelligence, Volume 6, Issue 5, May 2025

1) A Comparative Review of Deep Learning Techniques on the Classification of Irony and Sarcasm in Text

Author(s): Leonidas Boutsikaris, Spyros Polykalas

Pages: 1052 - 1066

2) Approaching Principles of XAI: A Systematization

Author(s): Raphael Ronge, Bernhard Bauer, Benjamin Rathgeber

Pages: 1067 - 1079

3) Brain-Conditional Multimodal Synthesis: A Survey and Taxonomy

Author(s): Weijian Mai, Jian Zhang, Pengfei Fang, Zhijun Zhang

Pages: 1080 - 1099

4) Analysis of An Intellectual Mechanism of a Novel Crop Recommendation System Using Improved Heuristic Algorithm-Based Attention and Cascaded Deep Learning Network

Author(s): Yaganteeswarudu Akkem, Saroj Kumar Biswas

Pages: 1100 - 1113

5) Improving String Stability in Cooperative Adaptive Cruise Control Through Multiagent Reinforcement Learning With Potential-Driven Motivation

Author(s): Kun Jiang, Min Hua, Xu He, Lu Dong, Quan Zhou, Hongming Xu, Changyin Sun

Pages: 1114 - 1127

6) A Quantum Multimodal Neural Network Model for Sentiment Analysis on Quantum Circuits

Author(s): Jin Zheng, Qing Gao, Daoyi Dong, Jinhu Lü, Yue Deng

Pages: 1128 - 1142

7) Decoupling Dark Knowledge via Block-Wise Logit Distillation for Feature-Level Alignment

Author(s): Chengting Yu, Fengzhao Zhang, Ruizhe Chen, Aili Wang, Zuozhu Liu, Shurun Tan, Er-Ping Li

Pages: 1143 - 1155

8) CauseTerML: Causal Learning via Term Mining for Assessing Review Discrepancies

Author(s): Wenjie Sun, Chengke Wu, Qinge Xiao, Junjie Jiang, Yuanjun Guo, Ying Bi, Xinyu Wu, Zhile Yang

Pages: 1156 - 1170

9) Unsupervised Learning of Unbiased Visual Representations

Author(s): Carlo Alberto Barbano, Enzo Tartaglione, Marco Grangetto

Pages: 1171 - 1183

10) Herb-Target Interaction Prediction by Multiinstance Learning

Author(s): Yongzheng Zhu, Liangrui Ren, Rong Sun, Jun Wang, Guoxian Yu

Pages: 1184 - 1193

11) Periodic Hamiltonian Neural Networks

Author(s): Zi-Yu Khoo, Dawen Wu, Jonathan Sze Choong Low, Stéphane Bressan

Pages: 1194 - 1202

12) Unsigned Road Incidents Detection Using Improved RESNET From Driver-View Images

Author(s): Changping Li, Bingshu Wang, Jiangbin Zheng, Yongjun Zhang, C.L. Philip Chen

Pages: 1203 - 1216

13) Deep Reinforcement Learning Data Collection for Bayesian Inference of Hidden Markov Models

Author(s): Mohammad Alali, Mahdi Imani

Pages: 1217 - 1232

14) NVMS-Net: A Novel Constrained Noise-View Multiscale Network for Detecting General Image Processing Based Manipulations

Author(s): Gurinder Singh, Kapil Rana, Puneet Goyal, Sathish Kumar

Pages: 1233 - 1247

15) Improved Supervised Machine Learning for Predicting Auto Insurance Purchase Patterns

Author(s): Mourad Nachaoui, Fatma Manlaikhaf, Soufiane Lyaqini

Pages: 1248 - 1258

16) An Intelligent Chatbot Assistant for Comprehensive Troubleshooting Guidelines and Knowledge Repository in Printed Circuit Board Production

Author(s): Supparesk Rittikulsittichai, Thitirat Siriborvornratanakul

Pages: 1259 - 1268

17) Learning to Communicate Among Agents for Large-Scale Dynamic Path Planning With Genetic Programming Hyperheuristic

Author(s): Xiao-Cheng Liao, Xiao-Min Hu, Xiang-Ling Chen, Yi Mei, Ya-Hui Jia, Wei-Neng Chen

Pages: 1269 - 1283

18) Multilabel Black-Box Adversarial Attacks Only With Predicted Labels

Author(s): Linghao Kong, Wenjian Luo, Zipeng Ye, Qi Zhou, Yan Jia

Pages: 1284 - 1297

19) VODACBD: Vehicle Object Detection Based on Adaptive Convolution and Bifurcation Decoupling

Author(s): Yunfei Yin, Zheng Yuan, Yu He, Xianjian Bao

Pages: 1298 - 1308

20) Seeking Secure Synchronous Tracking of Networked Agent Systems Subject to Antagonistic Interactions and Denial-of-Service Attacks

Author(s): Weihao Li, Lei Shi, Mengji Shi, Jiangfeng Yue, Boxian Lin, Kaiyu Qin

Pages: 1309 - 1320

21) Revisiting LARS for Large Batch Training Generalization of Neural Networks

Author(s): Khoi Do, Minh-Duong Nguyen, Nguyen Tien Hoa, Long Tran-Thanh, Nguyen H. Tran, Quoc-Viet Pham

Pages: 1321 - 1333

22) A Stratified Seed Selection Algorithm for K-Means Clustering on Big Data

Author(s): Namita Bajpai, Jiaul H. Paik, Sudeshna Sarkar

Pages: 1334 - 1344

23) Visual–Semantic Fuzzy Interaction Network for Zero-Shot Learning

Author(s): Xuemeng Hui, Zhunga Liu, Jiaxiang Liu, Zuowei Zhang, Longfei Wang

Pages: 1345 - 1359

24) Weakly Correlated Multimodal Domain Adaptation for Pattern Classification

Author(s): Shuyue Wang, Zhunga Liu, Zuowei Zhang, Mohammed Bennamoun

Pages: 1360 - 1372

25) Prompt Customization for Continual Learning

Author(s): Yong Dai, Xiaopeng Hong, Yabin Wang, Zhiheng Ma, Dongmei Jiang, Yaowei Wang

Pages: 1373 - 1385

26) Monocular 3-D Reconstruction of Blast Furnace Burden Surface Based on Cross-Domain Generative Self-Supervised Network

Author(s): Zhipeng Chen, Xinyi Wang, Ling Shen, Jinshi Liu, Jianjun He, Jilin Zhu, Weihua Gui

Pages: 1386 - 1400

27) Energy-Efficient Hybrid Impulsive Model for Joint Classification and Segmentation on CT Images

Author(s): Bin Hu, Zhi-Hong Guan, Guanrong Chen, Jürgen Kurths

Pages: 1401 - 1413

28) Deep Temporally Recursive Differencing Network for Anomaly Detection in Videos

Author(s): Gargi V. Pillai, Debashis Sen

Pages: 1414 - 1428

29) A Hierarchical Cross-Modal Spatial Fusion Network for Multimodal Emotion Recognition

Author(s): Ming Xu, Tuo Shi, Hao Zhang, Zeyi Liu, Xiao He

Pages: 1429 - 1438

30) On the Role of Priors in Bayesian Causal Learning

Author(s): Bernhard C. Geiger, Roman Kern

Pages: 1439 - 1445

0 notes

Text

Physics-inspired Idealist Speculations

Imagine a classical many-particle system, described within the framework of Hamiltonian mechanics. Each particle has a position and a momentum, and together, these define a point in a vast phase space — the space of all possible configurations the system could occupy. The system's total energy is given by a Hamiltonian function, which is conserved over time. The dynamics of the system unfold as a continuous path through phase space, but always constrained to lie on a hypersurface of constant energy.
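As a concrete toy version of this picture, the sketch below integrates a single pendulum (one degree of freedom, not a many-particle system) with the leapfrog scheme and checks that the Hamiltonian stays nearly constant along the trajectory. Units with mass, length, and gravity all equal to 1 are an assumption made for simplicity, and the function names are hypothetical.

```python
import math


def hamiltonian(q, p):
    """Pendulum energy: kinetic p^2/2 plus potential 1 - cos(q)."""
    return 0.5 * p * p + (1.0 - math.cos(q))


def leapfrog(q, p, dt, steps):
    """Integrate dq/dt = p, dp/dt = -sin(q) with the leapfrog scheme."""
    trajectory = [(q, p)]
    for _ in range(steps):
        p -= 0.5 * dt * math.sin(q)  # half kick
        q += dt * p                  # drift
        p -= 0.5 * dt * math.sin(q)  # half kick
        trajectory.append((q, p))
    return trajectory


trajectory = leapfrog(q=1.0, p=0.0, dt=0.01, steps=10_000)
energies = [hamiltonian(q, p) for q, p in trajectory]
drift = max(energies) - min(energies)
print(f"energy spread along the trajectory: {drift:.2e}")
```

The (q, p) pairs trace a closed curve in the two-dimensional phase space, which is the constant-energy "hypersurface" for this one-degree-of-freedom case.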

If the system starts with a high total energy, this constraint is still relatively permissive: there are many regions in phase space the system can explore, and the resulting trajectory may be extremely complex, possibly even chaotic. Many degrees of freedom are active, and their interactions lead to intricate, unpredictable evolutions.

Now imagine we remove energy from the system. As we do so, we are effectively limiting its ability to explore phase space. The system is confined to smaller and smaller subregions of the total space, and its motion begins to change character. Instead of erratic wandering or complex collective oscillations, we begin to see regularity. Certain degrees of freedom become "frozen," unable to participate due to insufficient energy. The system might fall into a local minimum of the potential landscape, where only small harmonic oscillations are possible. Instead of chaotic dynamics, we observe simple, structured motion. At the very lowest energies, the system may reach a state of total rest: a perfectly ordered configuration that represents a ground state.

In this way, we see a clear tendency — as energy decreases, the system’s behavior becomes simpler, more ordered, more predictable. High energy enables complexity and multiplicity, while low energy selects regularity and form.

And note that the energy landscape is simply the direct logical result of the properties of the interacting physical particles. The landscape need not be specified in an extra step; it comes for free with the particle and interaction properties. It therefore exists outside of space and time, in a kind of Platonic possibility space. Some parts of this landscape may never be visited by the system, remaining mere latent possibilities.

What follows now is just meant as a vague analogy:

Let us suppose, as idealism does, that consciousness — not matter — is the fundamental substance of reality. What we perceive as a physical system moving in phase space becomes, in this reinterpretation, a mental dynamic: a motion not of particles, but of conscious experience. The vast phase space of particle positions and momenta now represents the space of possible experiential configurations. The energy of the system no longer measures kinetic or potential activity in matter, but instead corresponds to a degree of dynamical freedom — the extent to which consciousness is engaged in generating variety, complexity, novelty.

A high-energy state, with its chaotic, multidimensional motion, corresponds to a consciousness flooded with options — a restless awareness flitting through countless possible forms without settling. As this mental “energy” is reduced — whether through internal focus, simplification, or exhaustion of distraction — the motion becomes more coherent. Consciousness begins to stabilize into regular patterns: repeated perceptions, stable categories, enduring experiences. These stable forms, in this analogy, are attractors of consciousness — preferred or habitual modes of experience, patterns of awareness into which consciousness tends to fall and remain.

We can take this further by imagining that these attractors exist within a shared, structured space — an attractor landscape defined not by mechanical forces, but by tendencies within universal consciousness itself. Valleys in this landscape represent modes of experience that are particularly coherent, meaningful, or self-reinforcing. Most individual minds — understood as specific trajectories within this larger field — tend to fall into the same valleys. This explains why human beings, across time and culture, generally perceive the same sky, walk on the same ground, and interact with similarly structured objects. These are not fixed external realities, but stable attractors within the mental landscape of universal consciousness — persistent, collectively rendered experiences.

But we have to extend our simple analogy here: The individual minds - fictitious particles exploring the universal landscape - have agency, memory, and a degree of creative freedom. They can reinforce existing valleys by revisiting them, or — in rare cases — help to carve out new ones through sustained novelty and attention. They are guided by the shape of consciousness, and they also participate in shaping it.

Consensus reality is the deepest and broadest basin — the attractor that most fictitious particles fall into, simply because it has been visited and reinforced for millennia. It represents the most widely shared patterns of perception and thought. However, some individuals — whether through trauma, cultural separation, psychedelic experiences, meditative training, or simply through nonconformist temperament — escape this dominant valley and enter into alternative basins. They may experience different laws, different presences, different logics. These are not delusions in a pathological sense, but excursions into less-traveled attractors of the universal field. If these excursions are repeated and shared by others, they can grow deeper — forming the foundations of new cultural realities, new religions, new sciences, or new ways of being.

1 note


Text

VE477 Homework 3

Questions preceded by a * are optional. Although they can be skipped without any deduction, it is important to know and understand the results they contain. Ex. 1 — Hamiltonian path 1. Explain and present Depth-First Search (DFS). 2. Explain and present topological sorting. Write the pseudo-code of a polynomial time algorithm which decides if a directed acyclic graph contains a Hamiltonian…
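The exercise text is cut off, but assuming it asks about a Hamiltonian path, as the exercise title suggests, one standard polynomial-time approach rests on this fact: a directed acyclic graph has a Hamiltonian path exactly when every pair of consecutive vertices in a topological order is joined by an edge. A sketch using Kahn's algorithm (function names and the edge-list input format are assumptions):

```python
from collections import deque


def topological_order(n, edges):
    """Kahn's algorithm on vertices 0..n-1; raises ValueError on a cycle."""
    adjacent = [[] for _ in range(n)]
    indegree = [0] * n
    for u, v in edges:
        adjacent[u].append(v)
        indegree[v] += 1
    queue = deque(v for v in range(n) if indegree[v] == 0)
    order = []
    while queue:
        u = queue.popleft()
        order.append(u)
        for v in adjacent[u]:
            indegree[v] -= 1
            if indegree[v] == 0:
                queue.append(v)
    if len(order) != n:
        raise ValueError("graph contains a cycle")
    return order


def dag_has_hamiltonian_path(n, edges):
    """True if consecutive vertices of a topological order are all adjacent."""
    order = topological_order(n, edges)
    edge_set = set(edges)
    return all((u, v) in edge_set for u, v in zip(order, order[1:]))


print(dag_has_hamiltonian_path(4, [(0, 1), (1, 2), (2, 3), (0, 2)]))
print(dag_has_hamiltonian_path(4, [(0, 1), (0, 2), (1, 3), (2, 3)]))
```

The first graph contains the path 0, 1, 2, 3; in the second, vertices 1 and 2 are not connected in either direction, so no path can visit both consecutively. The whole check runs in time linear in the number of vertices and edges.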

0 notes

Text

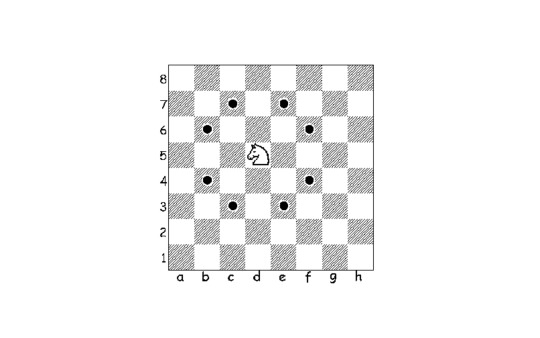

The “Bishop or Knight” debate can go either way depending on the situation and strategy. But when it comes to which piece is more charming, the answer is definitely the knight. Unlike the bishop, which stays on squares of one colour and moves only diagonally, in an X-shape, throughout the game, the knight is much more complicated. The knight follows a hard-to-describe pattern: one square in one direction, then one square diagonally onward to either side. As a result, the knight is destined to land on a square of the opposite colour every time it moves. Besides constantly alternating between black and white, it can jump over pieces in its way, which makes it a unique variable in chess.

Additionally, a knight’s tour is a sequence of moves of a knight on a chessboard such that the knight visits every square exactly once. This problem is an instance of the more general Hamiltonian path problem in graph theory. The problem of finding a closed knight’s tour is similarly an instance of the Hamiltonian cycle problem. Unlike the general Hamiltonian path problem, the knight’s tour problem can be solved in linear time.
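A small sketch of finding an open knight's tour follows. It is not the linear-time construction mentioned above; it is plain backtracking that tries moves in Warnsdorff order (squares with the fewest onward moves first), which tends to be fast on small boards. The function name and the (row, column) cell format are assumptions.

```python
KNIGHT_MOVES = ((1, 2), (2, 1), (2, -1), (1, -2),
                (-1, -2), (-2, -1), (-2, 1), (-1, 2))


def knights_tour(size, start=(0, 0)):
    """Return a list of cells forming an open knight's tour, or None."""
    visited = {start}
    tour = [start]

    def unvisited_moves(cell):
        row, col = cell
        return [
            (row + dr, col + dc)
            for dr, dc in KNIGHT_MOVES
            if 0 <= row + dr < size and 0 <= col + dc < size
            and (row + dr, col + dc) not in visited
        ]

    def extend():
        if len(tour) == size * size:
            return True
        # Warnsdorff ordering: try the most constrained squares first.
        candidates = sorted(unvisited_moves(tour[-1]),
                            key=lambda cell: len(unvisited_moves(cell)))
        for cell in candidates:
            visited.add(cell)
            tour.append(cell)
            if extend():
                return True
            visited.remove(cell)  # dead end: undo and try the next move
            tour.pop()
        return False

    return tour if extend() else None


tour = knights_tour(5)
```

Each consecutive pair of cells in `tour` is one knight move apart, so the tour is a Hamiltonian path in the graph whose vertices are squares and whose edges are legal knight moves.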

1 note


Text

Living Cellular Computers: A New Frontier in AI and Computation Beyond Silicon

New Post has been published on https://thedigitalinsider.com/living-cellular-computers-a-new-frontier-in-ai-and-computation-beyond-silicon/


Biological systems have fascinated computer scientists for decades with their remarkable ability to process complex information, adapt, learn, and make sophisticated decisions in real time. These natural systems have inspired the development of powerful models like neural networks and evolutionary algorithms, which have transformed fields such as medicine, finance, artificial intelligence and robotics. However, despite these impressive advancements, replicating the efficiency, scalability, and robustness of biological systems on silicon-based machines remains a significant challenge.

But what if, instead of merely imitating these natural systems, we could use their power directly? Imagine a computing system where living cells — the building block of biological systems — are programmed to perform complex computations, from Boolean logic to distributed computations. This concept has led to a new era of computation: cellular computers. Researchers are investigating how we can program living cells to handle complex calculations. By employing the natural capabilities of biological cells, we may overcome some of the limitations of traditional computing. This article explores the emerging paradigm of cellular computers, examining their potential for artificial intelligence, and the challenges they present.

The Genesis of Living Cellular Computers

The concept of living cellular computers is rooted in the interdisciplinary field of synthetic biology, which combines principles from biology, engineering, and computer science. At its core, this innovative approach uses the inherent capabilities of living cells to perform computational tasks. Unlike traditional computers that rely on silicon chips and binary code, living cellular computers utilize biochemical processes within cells to process information.

One of the pioneering efforts in this domain is the genetic engineering of bacteria. By manipulating the genetic circuits within these microorganisms, scientists can program them to execute specific computational functions. For instance, researchers have successfully engineered bacteria to solve complex mathematical problems, such as the Hamiltonian path problem, by exploiting their natural behaviors and interactions.

Decoding Components of Living Cellular Computers

To understand the potential of cellular computers, it’s useful to explore the core principles that make them work. Imagine DNA as the software of this biological computing system. Just like traditional computers use binary code, cellular computers utilize the genetic code found in DNA. By modifying this genetic code, scientists can instruct cells to perform specific tasks. Proteins, in this analogy, serve as the hardware. They are engineered to respond to various inputs and produce outputs, much like the components of a traditional computer. The complex web of cellular signaling pathways acts as the information processing system, allowing for massively parallel computations within the cell. Additionally, unlike silicon-based computers that need external power sources, cellular computers use the cell’s own metabolic processes to generate energy. This combination of DNA programming, protein functionality, signaling pathways, and self-sustained energy creates a unique computing system that leverages the natural abilities of living cells.

How Living Cellular Computers Work

To understand how living cellular computers work, it’s helpful to think of them like a special kind of computer, where DNA is the “tape” that holds information. Instead of using silicon chips like regular computers, these systems use the natural processes in cells to perform tasks.

In this analogy, DNA has four “symbols”—A, C, G, and T—that store instructions. Enzymes, which are like tiny machines in the cell, read and modify this DNA just as a computer reads and writes data. But unlike regular computers, these enzymes can move freely within the cell, doing their work and then reattaching to the DNA to continue.

For example, one enzyme, called a polymerase, reads DNA and makes RNA, a kind of temporary copy of the instructions. Another enzyme, helicase, helps to copy the DNA itself. Special proteins called transcription factors can turn genes on or off, acting like switches.
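The "polymerase reads DNA and makes RNA" step can be mimicked in a few lines. This is only a string-level cartoon that pairs each template base with its RNA complement; it ignores strand direction, promoters, and everything else a real cell does, and `transcribe` is a hypothetical name.

```python
# RNA base paired with each DNA template base (RNA uses U instead of T).
RNA_COMPLEMENT = {"A": "U", "T": "A", "G": "C", "C": "G"}


def transcribe(template_strand):
    """Build the RNA copy of a DNA template strand, one symbol at a time."""
    return "".join(RNA_COMPLEMENT[base] for base in template_strand)


print(transcribe("TACGGT"))  # prints AUGCCA
```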

What makes living cellular computers exciting is that we can program them. We can change the DNA “tape” and control how these enzymes behave, allowing for complex tasks that regular computers can’t easily do.

Advantages of Living Cellular Computers

Living cellular computers offer several compelling advantages over traditional silicon-based systems. They excel at massive parallel processing, meaning they can handle multiple computations simultaneously. This capability has the potential to greatly enhance both speed and efficiency of the computations. Additionally, biological systems are naturally energy-efficient, operating with minimal energy compared to silicon-based machines, which could make cellular computing more sustainable.

Another key benefit is the self-replication and repair abilities of living cells. This feature could lead to computer systems that are capable of self-healing, a significant leap from current technology. Cellular computers also have a high degree of adaptability, allowing them to adjust to changing environments and inputs with ease—something traditional systems struggle with. Finally, their compatibility with biological systems makes them particularly well-suited for applications in fields like medicine and environmental sensing, where a natural interface is beneficial.

The Potential of Living Cellular Computers for Artificial Intelligence

Living cellular computers hold intriguing potential for overcoming some of the major hurdles faced by today’s artificial intelligence (AI) systems. Although current AI relies on biologically inspired neural networks, executing these models on silicon-based hardware presents challenges. Silicon processors, designed for centralized tasks, are less effective at parallel processing, a problem partially addressed by using multiple computational units such as graphics processing units (GPUs). Training neural networks on large datasets is also resource-intensive, driving up costs and increasing the environmental impact due to high energy consumption.

In contrast, living cellular computers excel in parallel processing, making them potentially more efficient for complex tasks, with the promise of faster and more scalable solutions. They also use energy more efficiently than traditional systems, which could make them a greener alternative.

Additionally, the self-repair and replication abilities of living cells could lead to more resilient AI systems, capable of self-healing and adapting with minimal intervention. This adaptability might enhance AI’s performance in dynamic environments.

Recognizing these advantages, researchers are trying to implement perceptrons and neural networks using cellular computers. While there’s been progress with theoretical models, practical applications are still in the works.

Challenges and Ethical Considerations

While the potential of living cellular computers is immense, several challenges and ethical considerations must be addressed. One of the primary technical challenges is the complexity of designing and controlling genetic circuits. Unlike traditional computer programs, which can be precisely coded and debugged, genetic circuits operate within the dynamic and often unpredictable environment of living cells. Ensuring the reliability and stability of these circuits is a significant hurdle that researchers must overcome.

Another critical challenge is the scalability of cellular computation. While proof-of-concept experiments have demonstrated the feasibility of living cellular computers, scaling up these systems for practical applications remains a daunting task. Researchers must develop robust methods for mass-producing and maintaining engineered cells, as well as integrating them with existing technologies.

Ethical considerations also play a crucial role in the development and deployment of living cellular computers. The manipulation of genetic material raises concerns about unintended consequences and potential risks to human health and the environment. It is essential to establish stringent regulatory frameworks and ethical guidelines to ensure the safe and responsible use of this technology.

The Bottom Line

Living cellular computers are setting the stage for a new era in computation, employing the natural abilities of biological cells to tackle tasks that silicon-based systems handle today. By using DNA as the basis for programming and proteins as the functional components, these systems promise remarkable benefits in terms of parallel processing, energy efficiency, and adaptability. They could offer significant improvements for AI, enhancing speed and scalability while reducing power consumption. Despite the potential, there are still hurdles to overcome, such as designing reliable genetic circuits, scaling up for practical use, and addressing ethical concerns related to genetic manipulation. As this field evolves, finding solutions to these challenges will be key to unlocking the true potential of cellular computing.

#ai#AI systems#Algorithms#applications#approach#Article#artificial#Artificial Intelligence#Bacteria#binary#Biochemical Processing in AI#Biocomputation#Biocomputers#Biocomputing#Biological Computing Systems#Biology#Building#cell#Cells#Cellular Computers#Cellular Computing for AI#challenge#change#chips#code#complexity#computation#computer#Computer Science#computers

0 notes

Text

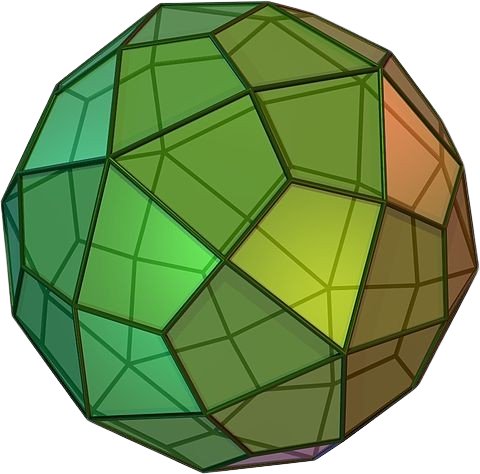

Polyhedron of the Day #30: Deltoidal hexecontahedron

The deltoidal hexecontahedron is a Catalan solid. It has 60 faces, 120 edges, and 62 vertices, and each face is a kite. It is one of the few Catalan solids that does not have a Hamiltonian path among its vertices. It is topologically identical to the rhombic hexecontahedron, and its dual polyhedron is the rhombicosidodecahedron.

Deltoidal hexecontahedron GIF and image created by Cyp, distributed under a CC BY-SA 3.0 license.

1 note

Text

VE477 Homework 3

Questions preceded by a * are optional. Although they can be skipped without any deduction, it is important to know and understand the results they contain.

Ex. 1 - Hamiltonian path

1. Explain and present Depth-First Search (DFS).

2. Explain and present topological sorting. Write the pseudo-code of a polynomial time algorithm which decides if a directed acyclic graph contains a Hamiltonian…
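The excerpt is cut off, but the task it names appears to be deciding whether a directed acyclic graph (DAG) contains a Hamiltonian path. One standard approach, sketched below under that assumption, is to compute a topological order and check that every pair of consecutive vertices in it is joined by an edge: a DAG has a Hamiltonian path exactly when this holds. The function name `dag_hamiltonian_path` and the vertex numbering `0..n-1` are illustrative choices, not part of the homework.

```python
from collections import deque


def dag_hamiltonian_path(n, edges):
    """Return a Hamiltonian path of a DAG on vertices 0..n-1, or None.

    Raises ValueError if the input graph contains a cycle.
    """
    adj = [set() for _ in range(n)]
    indeg = [0] * n
    for u, v in edges:
        if v not in adj[u]:  # ignore duplicate edges
            adj[u].add(v)
            indeg[v] += 1

    # Kahn's algorithm: repeatedly remove a vertex with no incoming edges.
    queue = deque(v for v in range(n) if indeg[v] == 0)
    order = []
    while queue:
        u = queue.popleft()
        order.append(u)
        for v in adj[u]:
            indeg[v] -= 1
            if indeg[v] == 0:
                queue.append(v)

    if len(order) != n:
        raise ValueError("graph has a cycle, so it is not a DAG")

    # A Hamiltonian path must itself be a topological order, so it exists
    # iff consecutive vertices of the order are joined by an edge.
    if all(b in adj[a] for a, b in zip(order, order[1:])):
        return order
    return None
```

The whole procedure runs in O(V + E) time, which satisfies the polynomial-time requirement. For example, `dag_hamiltonian_path(4, [(0, 1), (1, 2), (2, 3), (0, 2)])` finds the path through all four vertices, while a graph with edges `(0, 1)` and `(0, 2)` only has no such path.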

0 notes

Text

Neutron decay cosmology

A homeostatic universe via the reciprocal processes of electron capture and neutron decay.

The path of least action, physical process solution to dark energy, dark matter, and critical density maintenance.

All matter is forced by gravity to recapture its electron and become a neutron eventually.

Electron capture.

A mass is accelerated to c by the gravity of an event horizon.

This kinetic energy, mc^2, is dropped off at the event horizon, contributing to the mass of the event horizon.

The Hamiltonian so to speak, the neutron itself, passes through the event horizon.

It takes an Einstein-Rosen bridge from the highest energy density conditions to the lowest energy density points of space, exiting where the quantum basement is minimal: deep voids.

There in a deep void, this single free neutron soon decays.

Into an antineutrino, a proton and an electron. Amorphous atomic hydrogen.

This proton/electron soup has not stabilized yet and so is totally transparent, with a cross section around 10^-31 barn.

The decay from a neutron, 0.6 fm^3, to hydrogen gas taking up about 1 m^3 is a volume increase of 10^45 or so. Expansion. Dark energy.

Dark energy is the volume increase caused by that decay.

And since PV=nRT it’s no wonder space is still so cold.

In time the hydrogen stabilizes. First into monatomic hydrogen, very transparent, and then the H2 we know and love which slowly flows down the gravity hill going through stellar evolutions until in the distant future it is again about to contact an event horizon.

A homeostatic universe via the reciprocal phase change of neutrons to hydrogen.

Neutron to hydrogen takes a femtosecond

Hydrogen back to event horizon takes 13.8 billion years?

Neutron decay cosmology.

0 notes

Text

Had my car fixed by a Hamiltonian mechanic. Now it takes all paths from source to destination.

Had my car fixed by a quantum mechanic. Now whenever I look at my speedometer my GPS stops working.

7K notes

Text

FlexQAOA Launches Aqarios Luna v1.0 Quantum Optimization

Quantum Optimisation with LUNA v1.0

With LUNA v1.0, Aqarios GmbH positions itself as a leader in the fast-changing quantum computing sector. The release introduces FlexQAOA, which rethinks how difficult optimisation problems are solved under practical constraints.

LUNA v1.0 is presented as a fundamental change, not just another quantum software update. FlexQAOA lets developers and researchers model constraints natively, aiming for greater precision and scalability in quantum optimisation tasks in materials science, finance, and logistics.

Definition: FlexQAOA

Traditional QAOA has often been inefficient and unreliable because real-world constraints are hard to encode. FlexQAOA, the core engine of LUNA v1.0, uses adaptive, modular optimisation. Instead of relying on elaborate post-processing or penalty terms, which reduce accuracy, it builds the constraints into the quantum model itself.
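To make the contrast concrete, here is a minimal classical sketch of the penalty-term approach that the post says FlexQAOA avoids. It is not LUNA or FlexQAOA code and uses no quantum library: the constraint "exactly one bit is set", the function names, and the cost values are all assumptions chosen for illustration. The constraint is folded into the objective as a weighted quadratic penalty, and the minimiser is found by brute force.

```python
from itertools import product


def penalized_cost(x, costs, penalty):
    """Objective plus a quadratic penalty for violating 'exactly one bit set'."""
    objective = sum(c * b for c, b in zip(costs, x))
    violation = (sum(x) - 1) ** 2  # zero only when the constraint holds
    return objective + penalty * violation


def best_bitstring(costs, penalty):
    """Brute-force minimiser over all bitstrings (only viable for tiny inputs)."""
    return min(
        product((0, 1), repeat=len(costs)),
        key=lambda x: penalized_cost(x, costs, penalty),
    )


costs = [3, 2, 5]
weak = best_bitstring(costs, penalty=1)     # penalty too small
strong = best_bitstring(costs, penalty=10)  # penalty large enough
```

With the weak penalty the minimiser is the all-zero string, which violates the constraint; with the stronger penalty it is the feasible choice `(0, 1, 0)`. The need to tune that weight by hand is the kind of fragility a constraint-native formulation is meant to remove.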

FlexQAOA lets users:

Define hard constraints explicitly in the Hamiltonian.

Optimise multi-objective, combinatorial, and non-linear problems.

Dynamically adapt to changing problem characteristics, using high-fidelity simulators and quantum hardware.

This method targets heavily constrained problems that were previously unsolvable or suffered from high error rates.

Key LUNA v1.0 Features

Native constraint embedding

The constraint-native design underpins LUNA v1.0. FlexQAOA treats constraints as an intrinsic part of the quantum problem space, unlike earlier techniques that treated them as external. This is intended to maintain high fidelity even on noisy intermediate-scale quantum (NISQ) systems.

Hardware-Independent Execution

LUNA v1.0 is compatible with major quantum computing platforms such as:

IBM Qiskit

Amazon Braket

Google Cirq

D-Wave Leap

This lets users pick the best hardware for their use case without being tied to one ecosystem. LUNA's abstraction layer provides portability across quantum backends and classical simulators.

Python SDK/API Integration

Developers can construct and implement optimisation models with LUNA's sophisticated Python SDK and a few lines of code. It provides full API access for business apps, enabling real-time logistics orchestration, energy grid balancing, and auto route planning.

Visualization/Debugging Tools

LUNA provides visual diagnostics for convergence, constraint graphs, and quantum circuits. These help quantum engineers and scientists to:

Find optimisation bottlenecks,

Keep track of convergence,

Investigate quantum circuit paths.

Expandable Constraint Framework

Users can import mathematical formulations or domain-specific logic as plug-in constraints. LUNA can handle time windows for delivery routes, regulatory compliance in financial modelling, and chemical structure constraints without much reconfiguration.

LUNA v1.0 Use Cases

Supply Chain Optimisation

Modern supply chains face regulatory compliance requirements, route inefficiencies, and changing demand. LUNA v1.0 lets businesses:

Include time constraints,

Account for changes in supplier availability,

Optimise for multiple objectives, such as reducing carbon emissions.

Results include faster deliveries, lower costs, and greener logistics.

Financial Portfolio Optimisation

FlexQAOA is well suited to portfolios subject to regulatory, diversification, and risk-tolerance constraints. LUNA's constraint-aware modelling is aimed at scalable, compliant, and optimal investment strategies for hedge funds and asset managers exploring quantum financial tools.

Energy Grid Management

Energy grid management includes load balancing, peak forecasting, and capacity, demand, and pricing controls. The design of LUNA supports large-scale, real-time energy system modelling, helping utilities plan for:

Power interruptions,

Renewables integration,

Smart grid scalability.

Drug Development and Materials Design

Quantum optimisation is crucial for compound matching and molecular structure design. LUNA can include chemical constraints to help researchers focus their search and speed up R&D. FlexQAOA detects invalid compounds at the circuit level, saving time and computing resources.

LUNA v1.0 Sets a New Benchmark

Accuracy, scalability, and hardware adaptability are essential for quantum advantage. The adjustable, constraint-aware optimisation in LUNA v1.0 offers:

Better solution quality with fewer iterations,

Lower sensitivity to quantum noise by avoiding post-processing,

Scalability across domains and problem sizes,

Future-proof development for NISQ and fault-tolerant devices.

Unlike earlier systems that needed extensive rewriting or brute-force workarounds to satisfy constraints, LUNA integrates easily into existing workflows, which translates into real commercial benefit.

Future plans for LUNA and Aqarios

Aqarios has announced plans for:

A cloud-native Kubernetes deployment of LUNA,

Constraint modelling partnerships with European research institutes,

Integration with machine learning toolkits for hybrid classical-quantum workflows.

Munich-based Aqarios aims to make quantum optimisation scalable, reliable, and accessible for future developers and data scientists.

In conclusion

LUNA v1.0 with FlexQAOA is a quantum computing revolution, not just an update. Cross-domain usefulness, enterprise-level adaptability, and deep constraint integration reinvent quantum optimisation.

As industries seek quantum-ready solutions, LUNA is the platform of choice for solving today's most difficult optimisation challenges.

#LUNAv1#FlexQAOA#AmazonBraket#QAOA#PythonSDK#quantumcomputing#machinelearning#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

0 notes

Text

Soft Computing, Volume 29, Issue 1, January 2025

1) KMSBOT: enhancing educational institutions with an AI-powered semantic search engine and graph database

Author(s): D. Venkata Subramanian, J. Chandra, V. Rohini

Pages: 1 - 15

2) Stabilization of impulsive fuzzy dynamic systems involving Caputo short-memory fractional derivative

Author(s): Truong Vinh An, Ngo Van Hoa, Nguyen Trang Thao

Pages: 17 - 36

3) Application of SaRT–SVM algorithm for leakage pattern recognition of hydraulic check valve

Author(s): Chengbiao Tong, Nariman Sepehri

Pages: 37 - 51

4) Construction of a novel five-dimensional Hamiltonian conservative hyperchaotic system and its application in image encryption

Author(s): Minxiu Yan, Shuyan Li

Pages: 53 - 67

5) European option pricing under a generalized fractional Brownian motion Heston exponential Hull–White model with transaction costs by the Deep Galerkin Method

Author(s): Mahsa Motameni, Farshid Mehrdoust, Ali Reza Najafi

Pages: 69 - 88

6) A lightweight and efficient model for botnet detection in IoT using stacked ensemble learning

Author(s): Rasool Esmaeilyfard, Zohre Shoaei, Reza Javidan

Pages: 89 - 101

7) Leader-follower green traffic assignment problem with online supervised machine learning solution approach

Author(s): M. Sadra, M. Zaferanieh, J. Yazdimoghaddam

Pages: 103 - 116

8) Enhancing Stock Prediction ability through News Perspective and Deep Learning with attention mechanisms

Author(s): Mei Yang, Fanjie Fu, Zhi Xiao

Pages: 117 - 126

9) Cooperative enhancement method of train operation planning featuring express and local modes for urban rail transit lines

Author(s): Wenliang Zhou, Mehdi Oldache, Guangming Xu

Pages: 127 - 155

10) Quadratic and Lagrange interpolation-based butterfly optimization algorithm for numerical optimization and engineering design problem

Author(s): Sushmita Sharma, Apu Kumar Saha, Saroj Kumar Sahoo

Pages: 157 - 194

11) Benders decomposition for the multi-agent location and scheduling problem on unrelated parallel machines

Author(s): Jun Liu, Yongjian Yang, Feng Yang

Pages: 195 - 212

12) A multi-objective Fuzzy Robust Optimization model for open-pit mine planning under uncertainty

Author(s): Sayed Abolghasem Soleimani Bafghi, Hasan Hosseini Nasab, Ali reza Yarahmadi Bafghi

Pages: 213 - 235

13) A game theoretic approach for pricing of red blood cells under supply and demand uncertainty and government role

Author(s): Minoo Kamrantabar, Saeed Yaghoubi, Atieh Fander

Pages: 237 - 260

14) The location problem of emergency materials in uncertain environment

Author(s): Jihe Xiao, Yuhong Sheng

Pages: 261 - 273

15) RCS: a fast path planning algorithm for unmanned aerial vehicles

Author(s): Mohammad Reza Ranjbar Divkoti, Mostafa Nouri-Baygi

Pages: 275 - 298

16) Exploring the selected strategies and multiple selected paths for digital music subscription services using the DSA-NRM approach consideration of various stakeholders

Author(s): Kuo-Pao Tsai, Feng-Chao Yang, Chia-Li Lin

Pages: 299 - 320

17) A genomic signal processing approach for identification and classification of coronavirus sequences

Author(s): Amin Khodaei, Behzad Mozaffari-Tazehkand, Hadi Sharifi

Pages: 321 - 338

18) Secure signal and image transmissions using chaotic synchronization scheme under cyber-attack in the communication channel

Author(s): Shaghayegh Nobakht, Ali-Akbar Ahmadi

Pages: 339 - 353

19) ASAQ—Ant-Miner: optimized rule-based classifier

Author(s): Umair Ayub, Bushra Almas

Pages: 355 - 364

20) Representations of binary relations and object reduction of attribute-oriented concept lattices

Author(s): Wei Yao, Chang-Jie Zhou

Pages: 365 - 373

21) Short-term time series prediction based on evolutionary interpolation of Chebyshev polynomials with internal smoothing

Author(s): Loreta Saunoriene, Jinde Cao, Minvydas Ragulskis

Pages: 375 - 389

22) Application of machine learning and deep learning techniques on reverse vaccinology – a systematic literature review

Author(s): Hany Alashwal, Nishi Palakkal Kochunni, Kadhim Hayawi

Pages: 391 - 403

23) CoverGAN: cover photo generation from text story using layout guided GAN

Author(s): Adeel Cheema, M. Asif Naeem

Pages: 405 - 423

0 notes