#IBM i operating system OS



The paradox of choice screens

I'm coming to BURNING MAN! On TUESDAY (Aug 27) at 1PM, I'm giving a talk called "DISENSHITTIFY OR DIE!" at PALENQUE NORTE (7&E). On WEDNESDAY (Aug 28) at NOON, I'm doing a "Talking Caterpillar" Q&A at LIMINAL LABS (830&C).

It's official: the DOJ has won its case, and Google is a convicted monopolist. Over the next six months, we're gonna move into the "remedy" phase, where we figure out what the court is going to order Google to do to address its illegal monopoly power:

https://pluralistic.net/2024/08/07/revealed-preferences/#extinguish-v-improve

That's just the beginning, of course. Even if the court orders some big, muscular remedies, we can expect Google to appeal (they've already said they would) and that could drag out the case for years. But that can be a feature, not a bug: a years-long appeal will see Google on its very best behavior, with massive, attendant culture changes inside the company. A Google that's fighting for its life in the appeals court isn't going to be the kind of company that promotes a guy whose strategy for increasing revenue is to make Google Search deliberately worse, so that you will have to do more searches (and see more ads) to get the info you're seeking:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

It's hard to overstate how much good stuff can emerge from a company that has mired itself in antitrust hell with extended appeals. In 1982, IBM wriggled off the antitrust hook after a 12-year fight that completely transformed the company's approach to business. After more than a decade of being micromanaged by lawyers who wanted to be sure that the company didn't screw up its appeal and anger antitrust enforcers, IBM's executives were totally transformed. When the company made its first PC, it decided to use commodity components (meaning anyone could build a similar PC by buying the same parts), and to buy its OS from an outside vendor called Micro-Soft (meaning competing PCs could use the same OS), and it turned a blind eye to the company that cloned the PC ROM, enabling companies like Dell, Compaq and Gateway to enter the market with "PC clones" that cost less and did more than the official IBM PC:

https://www.eff.org/deeplinks/2019/08/ibm-pc-compatible-how-adversarial-interoperability-saved-pcs-monopolization

The big question, of course, is whether the court will order Google to break up, say, by selling off Android, its ad-tech stack, and Chrome. That's a question I'll address on another day. For today, I want to think about how to de-monopolize browsers, the key portal to the internet. The world has two extremely dominant browsers, Safari and Chrome, and each of them is owned by an operating system vendor that pre-installs its own browser on its devices and pre-selects it as the default.

Defaults matter. That's a huge part of Judge Mehta's finding in the Google case, where the court saw evidence from Google's own internal research suggesting that people rarely change defaults, meaning that whatever the gadget does out of the box, it will likely do forever. This gives the lie to Google's longstanding defense of its monopoly power: "choice is just a click away." Sure, it's just a click away – a click you're pretty sure no one is ever going to make.

This means that any remedy to Google's browser dominance is going to involve a lot of wrangling about defaults. That's not a new wrangle, either. For many years, regulators and tech companies have tinkered with "choice screens" that were nominally designed to encourage users to try out different browsers and break the inertia of the big two browsers that came bundled with OSes.

These choice screens have a mixed record. Google's 2019 Android setup choice screen for the European Mobile Application Distribution Agreement somehow managed to result in the vast majority of users sticking with Chrome. Microsoft had a similar experience in 2010 with BrowserChoice.eu, its response to the EU's 2000s-era antitrust action:

https://en.wikipedia.org/wiki/BrowserChoice.eu

Does this mean that choice screens don't work? Maybe. The idea of choice screens comes to us from the "choice architecture" world of "nudging," a technocratic pseudoscience that grew to prominence by offering the promise that regulators could make big changes without having to do any real regulating:

https://verfassungsblog.de/nudging-after-the-replication-crisis/

Nudge research is mired in the "replication crisis" (where foundational research findings turn out to be nonreplicable, due to bad research methodology, sloppy analysis, etc.) and nudge researchers keep getting caught committing academic fraud:

https://www.ft.com/content/846cc7a5-12ee-4a44-830e-11ad00f224f9

When the first nudgers were caught committing fraud, more than a decade ago, they were assumed to be outliers in an otherwise honest and exciting field:

https://www.npr.org/2016/10/01/496093672/power-poses-co-author-i-do-not-believe-the-effects-are-real

Today, it's hard to find much to salvage from the field. To the extent the field is taken seriously today, it's often due to its critics repeating the claims of its boosters, a process Lee Vinsel calls "criti-hype":

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

For example, the term "dark patterns" lumps together really sneaky tactics with blunt acts of fraud. When you click an "opt out of cookies" button and get a screen that says "Success!" but which has a tiny little "confirm" button on it that you have to click to actually opt out, that's not a "dark pattern," it's just a scam:

https://pluralistic.net/2022/03/27/beware-of-the-leopard/#relentless

By ascribing widespread negative effects to subtle psychological manipulation ("dark patterns") rather than obvious and blatant fraud, we inadvertently elevate "nudging" to a real science, rather than a cult led by scammy fake scientists.

All this raises some empirical questions about choice screens: do they work (in the sense of getting people to break away from defaults), and if so, what's the best way to make them work?

This is an area with a pretty good literature, as it turns out, thanks in part to some natural experiments, like when Russia forced Google to offer choice screens for Android in 2017, but didn't let Google design that screen. The Russian policy produced a significant switch away from Google's own apps to Russian versions, primarily made by Yandex:

https://cepr.org/publications/dp17779

In 2023, Mozilla Research published a detailed study in which 12,000 people from Germany, Spain and Poland set up simulated mobile and desktop devices with different kinds of choice screens, a project spurred on by the EU's Digital Markets Act, which is going to mandate choice screens starting this year:

https://research.mozilla.org/browser-competition/choicescreen/

I'm spending this week reviewing choice screen literature, and I've just read the Mozilla paper, which I found very interesting, albeit limited. The biggest limitation is that the researchers are getting users to simulate setting up a new device and then asking them how satisfied they are with the experience. That's certainly a question worth researching, but a far more important question is "How do users feel about the setup choices they made later, after living with them on the devices they use every day?" Unfortunately, that's a much more expensive and difficult question to answer, and beyond the scope of this paper.

With that limitation in mind, I'm going to break down the paper's findings here and draw some conclusions about what we should be looking for in any kind of choice screen remedy that comes out of the DOJ antitrust victory over Google.

The first thing to note is that people report liking choice screens. When users get to choose their browsers, they expect to be happy with that choice; by contrast, users are skeptical that they'll like the default browser the vendor chose for them. Users don't consider choice screens to be burdensome, and adding a choice screen doesn't appreciably increase setup time.

There are some nuances to this. Users like choice screens during device setup but they don't like choice screens that pop up the first time they use a browser. That makes total sense: "choosing a browser" is colorably part of the "setting up your gadget" task. By contrast, the first time you open a browser on a new device, it's probably to get something else done (e.g. look up how to install a piece of software you used on your old device) and being interrupted with a choice screen at that moment is an unwelcome interruption. This is the psychology behind those obnoxious cookie-consent pop-ups that websites bombard you with when you first visit them: you've clicked to that website because you need something it has, and being stuck with a privacy opt-out screen at that moment is predictably frustrating (which is why companies do it, and also why the DMA is going to punish companies that do).

The researchers experimented with different kinds of choice screens, varying the number of browsers on offer and the amount of information given on each. Again, users report that they prefer more choices and more information, and indeed, more choice and more info is correlated with choosing indie, non-default browsers, but this effect size is small (<10%), and no matter what kind of choice screen users get, most of them come away from the experience without absorbing any knowledge about indie browsers.

The order in which browsers are presented has a much larger effect than how many browsers or how much detail is present. People say they want lots of choices, but they usually choose one of the first four options. That said, users who get choice screens say it changes which browser they'd choose as a default.

Some of these contradictions appear to stem from users' fuzziness on what "default browser" means. For an OS vendor, "default browser" is the browser that pops up when you click a link in an email or social media. For most users, "default browser" means "the browser pinned to my home screen."

Where does all this leave us? I think it cashes out to this: choice screens will probably make an appreciable, but not massive, difference in browser dominance. They're cheap to implement, have no major downsides, and are easy to monitor. Choice screens might be needed to address Chrome's dominance even if the court orders Google to break off Chrome and stand it up as a separate business (we don't want any browser monopolies, even if they're not owned by a search monopolist!). So yeah, we should probably make a lot of noise to the effect that the court should order a choice screen, as part of a remedy.

That choice screen should be presented during device setup, with the choices presented in random order – with this caveat: Chrome should never appear in the top four choices.
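The ordering rule above can be stated precisely in a few lines. Here is a minimal Python sketch of one way to implement it, assuming the screen is just a flat list of browser names. The function name `choice_screen_order`, its parameters, and the sample browser list are illustrative assumptions, not anything a court or regulator has specified. It shuffles every other browser, then drops Chrome into a uniformly chosen slot from the fifth position onward, so every ordering that satisfies the rule is equally likely.

```python
import random


def choice_screen_order(browsers, excluded="Chrome", protected_slots=4, rng=None):
    """Return `browsers` in random order, keeping `excluded` out of the first
    `protected_slots` positions. Hypothetical helper, for illustration only."""
    rng = rng or random.Random()
    others = [b for b in browsers if b != excluded]
    rng.shuffle(others)  # uniform random order for every other browser
    if excluded not in browsers:
        return others
    if len(others) < protected_slots:
        raise ValueError("not enough other browsers to fill the protected slots")
    # Valid indices for `excluded` run from protected_slots up to len(others)
    # inclusive (inserting at len(others) appends it at the very end).
    slot = rng.randint(protected_slots, len(others))
    others.insert(slot, excluded)
    return others


if __name__ == "__main__":
    browsers = ["Chrome", "Firefox", "Safari", "Edge", "Brave", "Opera", "Vivaldi"]
    print(choice_screen_order(browsers))
```

Passing a seeded `random.Random` as `rng` makes a given ordering reproducible, which could help with the kind of monitoring mentioned above.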

All of that would help address the browser duopoly, even if it doesn't solve it. I would love to see more market-share for Firefox, which is the browser I've used every day for more than a decade, on my laptop and my phone. Of course, Mozilla has a role to play here. The company says it's going to refocus on browser quality, at the expense of the various side-hustles it's tried, which have ranged from uninteresting to catastrophically flawed:

https://www.fastcompany.com/91167564/mozilla-wants-you-to-love-firefox-again

For example, there was the tool to automatically remove your information from scummy data brokers, that they outsourced to a scummy data-broker:

https://www.theverge.com/2024/3/22/24109116/mozilla-ends-onerep-data-removal-partnership

And there's the "Privacy Preserving Attribution" tracking system that helps advertisers target you with surveillance advertising (in a way that's less invasive than existing techniques). Mozilla rolled this into Firefox on an opt-out basis, and made opting out absurdly complicated, suggesting that it knew that it was imposing something on its users that they wouldn't freely choose:

https://blog.privacyguides.org/2024/07/14/mozilla-disappoints-us-yet-again-2/

They've been committing these kinds of unforced errors for more than a decade, seeking some kind of balance between the demands of monopolistic web companies and their users' desire to have a browser that protects them from invasive and unfair practices:

https://www.theguardian.com/technology/2014/may/14/firefox-closed-source-drm-video-browser-cory-doctorow

These compromises represent the fallacy that Mozilla's future depends on keeping bullying entertainment companies and Big Tech happy, so it can go on serving its users. At the same time, these compromises have alienated Mozilla's core users, the technical people who were its fiercest evangelists. Those core users are the authority on technical questions for the normies in their life, and they know exactly how cursed it is for Moz to be making these awful compromises.

Moz has hemorrhaged users over the past decade, meaning they have even less leverage over the corporations demanding that they make more compromises. This sets up a doom loop: make a bad compromise, lose users, become more vulnerable to demands for even worse compromises. "This capitulation puts us in a great position to make a stand in some hypothetical future where we don't instantly capitulate again" is a pretty unconvincing proposition.

After the past decade's heartbreaks, seeing Moz under new leadership makes me cautiously hopeful. Like I say, I am dependent on Firefox and want an independent, principled browser vendor that sees their role as producing a "user agent" that is faithful to its users' interests above all else:

https://pluralistic.net/2024/05/07/treacherous-computing/#rewilding-the-internet

Of course, Moz depends on Google's payments for default search placement for 90% of its revenue. If Google can't pay for this in the future, the org is going to have to find another source of revenue. Perhaps that will be the EU, or foundations, or users. In any of these cases, the org will find it much easier to raise funds if it is standing up for its users – not compromising on their interests.

Community voting for SXSW is live! If you wanna hear RIDA QADRI and me talk about how GIG WORKERS can DISENSHITTIFY their jobs with INTEROPERABILITY, VOTE FOR THIS ONE!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/08/12/defaults-matter/#make-up-your-mind-already

Image: ICMA Photos (modified) https://www.flickr.com/photos/icma/3635981474/

CC BY-SA 2.0 https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#choice screens#dma#eu#scholarship#ux#behavioral economics#mozilla#remedies#browsers#mobile#defaults matter#google#doj v google


The story of Microsoft's meteoric rise and IBM's fall has been on my mind lately. Not really related to any film, but I do think we're overdue for an updated Pirates of Silicon Valley biopic. I really think that the '80s and '90s had some wild stories in computing.

If you ask the average person what operating system their computer could have, they'd say that if it's a PC it has Windows, and if it's a Mac it has macOS. All home computers are Macs or PCs, but how did it get this way?

In the '70s everyone was making home computers. Tandy was a leather supply goods company established in 1919, but they made computers. Montgomery Ward was a retail chain that decided to make their own store-brand computers. Commodore, Atari, NEC, Philips, Bally and a million other assorted companies were selling computers. They generally couldn't talk to each other (if you had software for your Tandy it wouldn't work on your Commodore) and there was no clear market winner. The big three, though, were Tandy (yeah, the leather company made some great computers in '78), Commodore and Apple.

IBM was the biggest computer company of all; in fact, it was just the biggest company, period. In 1980 they had a market cap of 128 billion dollars (adjusted for inflation). None of these other companies came close, but IBM's success was built on mainframes. 70% of all computers sold worldwide were IBM computers, but none of them were sold into the home market.

IBM wanted to get into this growing and lucrative business, and came up with a unique plan: a cheap computer made with commodity parts (i.e. not cutting edge) that had an open architecture. The plan was that you could buy an IBM Personal Computer (TM) and then upgrade it as you pleased. They even published documentation to make it easy to build add-ons.

The hope was that people would be attracted to the low prices, the options for upgrades would work for power users, and a secondary market of add-ons would be created. If some third-party company created the best graphics card of all time, well, you'd still need to buy an IBM PC to install it in.

IBM was not in the home software business, so they went to Microsoft. Microsoft produced MS-DOS (based on 86-DOS, which they licensed), and the deal was not exclusive. That meant that Microsoft could sell MS-DOS to any of IBM's competitors too. IBM considered this fine because of how fractured the market was. Remember, there were a lot of competitors, no one system dominated, and none of the competitors could share software. Porting MS-DOS to every computer would have taken years, and by that point it would be outdated anyway.

IBM saw two paths forward. If the IBM PC did well they would make a ton of money. Third party devs like Microsoft would also make a lot of money, but not as much as IBM. If it failed, well then no one was making money. Either way the balance of power wouldn't change. IBM would still be at the top.

IBM, however, did not enjoy massive profits. It turns out that having cheap components and an open architecture where you could replace anything would... let you replace anything. A company like Compaq could just buy their own RAM, motherboards, cases, hard drives, etc. and make their own knockoff. It was easy, it was popular, and it was completely legal! People could even order parts and build their own computer from scratch. If you've ever wondered why you can build your own computer but not your own TV or toaster, this is why. IBM had accidentally created a de facto standard that they had no control over.

In 1981 the IBM PC held 2.5% of the market. By 1995 IBM PC compatibles held 95% of the market, selling over 45 million units, and IBM had to share the profits with every competitor. Apple is the only survivor of this time because the Macintosh was such an incredible piece of technology, but that's a different story for a different time.

And Microsoft? Well building an OS is much harder than putting together a few hardware components, so everyone just bought MS-DOS. With no exclusivity agreement this was also legal. That huge marketshare was now the basis for Microsoft's dominance.

IBM created a computer standard, gave the blueprints to every competitor, and created a monopoly for Microsoft to boot. And that's why every computer you buy is either made by Apple with Apple software, or made by anyone else with Microsoft software. IBM is back where they started, having left the home computer business in 2005.

It's easily the biggest blunder in computer history. Other blunders have killed companies but none were quite as impactful as this one.

This story, and many others I know of, I first read in "In Search of Stupidity", a book by a former programmer and product manager who was able to see a lot of this first hand. I make no money advertising this book, I just had a great time reading it.

#software#hardware#microsoft#ibm#apple#tandy#nec#compaq#Wordstar#borland#ashton-tate#lotus#Ms-dos#windows#word#excel#access#commodore#atari#philips#bally#In search of stupidity#macintosh#montgomery ward#pirates of silicon valley


For SIGKILL enthusiasts, I found that the IBM operating system z/OS defines a rare, even stronger termination signal

#i understand why the signal can fail in certain cases but i always thought there should be a special cursed option to ignore those cases#same way as all those hdparm commands that warn DO NOT USE THIS IS HORRIBLY DANGEROUS#unix


you mention the apollo guidance computer in your bio.

do you have any nerdy fun facts about it?

Thanks for the ask!

It's difficult to convey concisely everything the AGC was, but here are some highlights:

In terms of size and power, it's comparable to the Apple II, but predates it by 11 years. There are some obvious differences in the constraints placed on the two designs, but still, that's pretty far ahead of its time.

The bare-bones OS written for the AGC was one of the first to ever implement co-operative multi-tasking and process priority management. This led to problems on Apollo 11, when a misconfigured rendezvous radar flooded the computer with extra work and overloaded the task scheduler on Eagle during the lunar landing (the infamous 1201/1202 program alarms). Fortunately, it didn't end up affecting the mission, and the procedures were subsequently revised and better followed to keep the situation from recurring.

Relatedly, it was also designed to immediately re-boot, cull low-priority tasks, and resume operations following a crash -- a property essential to ensuring the spacecraft could be piloted safely and reliably in all circumstances. Many of the reliability-promoting techniques used by Apollo programmers (led by Margaret Hamilton, go women in STEM) went on to become foundational principles of software engineering.
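The overload-and-restart behavior in the last two paragraphs can be illustrated with a toy model. This Python sketch is not the real AGC Executive: the class names, the fixed slot count, and the `essential` flag standing in for which jobs survive a restart are all assumptions made for illustration. It queues jobs by priority in a fixed number of slots, hands them out one at a time for cooperative execution, and, when the slots run out, restarts by discarding non-essential work instead of crashing.

```python
import heapq
import itertools
from dataclasses import dataclass, field


class ExecutiveOverflow(Exception):
    """Toy stand-in for a 1202-style alarm: no free job slot even after a restart."""


@dataclass(order=True)
class Job:
    # Sort key is (-priority, arrival number): heapq pops the highest priority
    # first, and jobs of equal priority come out in arrival order.
    sort_key: tuple
    name: str = field(compare=False)
    essential: bool = field(compare=False, default=False)


class ToyExecutive:
    """Hypothetical, heavily simplified scheduler; not the actual AGC design."""

    def __init__(self, slots):
        self.slots = slots            # fixed number of job slots, set at design time
        self.queue = []               # heap of waiting jobs
        self.restarts = 0
        self._arrivals = itertools.count()

    def schedule(self, name, priority, essential=False):
        if len(self.queue) >= self.slots:
            self.restart()            # overloaded: shed work instead of crashing
            if len(self.queue) >= self.slots:
                raise ExecutiveOverflow(name)
        job = Job((-priority, next(self._arrivals)), name, essential)
        heapq.heappush(self.queue, job)

    def restart(self):
        # Restart: keep only jobs flagged as essential and drop everything else.
        self.restarts += 1
        self.queue = [job for job in self.queue if job.essential]
        heapq.heapify(self.queue)

    def run_next(self):
        # Cooperative scheduling: hand the highest-priority job to the caller,
        # which runs it to completion (not modelled here) before asking again.
        return heapq.heappop(self.queue).name
```

With three slots, scheduling a fourth job triggers a restart that keeps the essential jobs and drops the rest, loosely mirroring the "cull low-priority tasks, and resume" behavior described above.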

A modified AGC was also re-purposed into the world's first digital fly-by-wire system, flown on NASA's F-8 test aircraft. (Earlier fly-by-wire used analogue computers, which are their own strange beasts.) This is, IMO, one of the easiest things to point to when anyone asks "What does NASA even do for us anyway?" Modern aircraft autopilots owe so, so much to the AGC, and passengers owe so much to those modern autopilots. While there are some pretty well-known incidents involving fly-by-wire (lookin' at you, MCAS), it speaks to the incredible amount of safety such systems normally afford that said incidents are so rare. Pilot error killed so many people before computers hit the cockpit.

AGC programs were stored in an early form of read-only memory called "core rope memory", where bits were literally woven into an array of copper wire and magnetic cores. Because the programs were physically woven into the rope, they could not be re-programmed in flight. This became a problem on Apollo 14, when an intermittent short in the LM's abort switch nearly cancelled the landing: if the short occurred during descent, the computer would immediately abandon the landing and head back to orbit. A second, consecutive failure (after Apollo 13) would have almost certainly ended in the cancellation of the program, and the loss of the invaluable findings of Apollo 15, 16, and 17. (These were the missions with the lunar roving vehicles, allowing treks far from the LM.) Fortunately, the MIT engineers who built the AGC found a solution: convince the computer it had, in fact, already aborted, allowing the landing to proceed as normal with a bit of manual babysitting from LMP Edgar Mitchell.
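The phrase "bits were literally woven" can be made concrete with a toy model. In this Python sketch, a sense wire threaded through a core reads as 1 when that core is switched, and a wire routed around the core reads as 0. It is a deliberate simplification built on assumptions: one word per core and one sense wire per bit, whereas the real rope packed many words onto each core, and parity is ignored. The names `weave` and `read_word` are hypothetical. Storing each core's threading as a `frozenset` inside a tuple mirrors the point above: once woven, the program is fixed.

```python
WORD_BITS = 16  # AGC words were 16 bits (15 data bits plus parity); parity is ignored here


def weave(words):
    """'Manufacture' a rope: for each word, record which sense wires thread its core."""
    rope = []
    for word in words:
        if not 0 <= word < (1 << WORD_BITS):
            raise ValueError(f"word {word!r} does not fit in {WORD_BITS} bits")
        # Wire `bit` passes through the core for a 1 and around it for a 0.
        threaded = frozenset(bit for bit in range(WORD_BITS) if (word >> bit) & 1)
        rope.append(threaded)
    return tuple(rope)  # immutable, like the finished rope


def read_word(rope, address):
    """Switch the core at `address`; only wires threaded through it see a pulse."""
    return sum(1 << bit for bit in rope[address])
```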

Finally, it wasn't actually the only computer used on Apollo! The two AGCs (one in the command module, the other in the Lunar Module, a redundancy that allowed Apollo 13 to power off the CM and survive their accident) were complemented by the Launch Vehicle Digital Computer (LVDC), designed by IBM and located in the S-IVB (Saturn V's third stage, Saturn IB's second stage), and the Abort Guidance System (AGS) located in the LM. The AGS was extremely simple, and intended to serve as a backup should the AGC have ever failed and been unable to return the LM to orbit, something it was fortunately never needed for. The LVDC, on the other hand, was tasked with flying the Saturn rocket to Earth orbit, which it did every time. This was very important during Apollo 12, when their Saturn V was struck by lightning shortly after launch, completely scrambling the CM's electrical system and sending their gimbal stacks a-spinning. Unaffected by the strike, the LVDC flew true and put the crew into a nominal low Earth orbit, where diagnostics began, the AGC was re-set, and the mission continued as normal.


Like OS/390, z/OS combines a number of formerly separate, related products, some of which are still optional. z/OS has the attributes of modern operating systems but also retains much of the older functionality originated in the 1960s and still in regular use—z/OS is designed for backward compatibility.

Major characteristics

z/OS supports[NB 2] stable mainframe facilities such as CICS, COBOL, IMS, PL/I, IBM Db2, RACF, SNA, IBM MQ, record-oriented data access methods, REXX, CLIST, SMP/E, JCL, TSO/E, and ISPF, among others.

z/OS also ships with a 64-bit Java runtime, C/C++ compiler based on the LLVM open-source Clang infrastructure,[3] and UNIX (Single UNIX Specification) APIs and applications through UNIX System Services – The Open Group certifies z/OS as a compliant UNIX operating system – with UNIX/Linux-style hierarchical HFS[NB 3][NB 4] and zFS file systems. These compatibilities make z/OS capable of running a range of commercial and open source software.[4] z/OS can communicate directly via TCP/IP, including IPv6,[5] and includes standard HTTP servers (one from Lotus, the other Apache-derived) along with other common services such as SSH, FTP, NFS, and CIFS/SMB. z/OS is designed for high quality of service (QoS), even within a single operating system instance, and has built-in Parallel Sysplex clustering capability.

Actually, that is wayyy too exciting for a bedtime story! I remember using the internet on a Unix terminal long before the World Wide Web. They were very excited by email, but it didn't impress me much. Browsers changed the world.


Futureproof IBM i with IBM i/AS400 Solutions & Services

What do you think about your IBM i?

Do you think you are utilizing its full potential?

Do you think you need skilled IBM i resources to keep going?

Do you think you need to modernize it to stay relevant & drive maximum value?

If your IBM i is powering your most critical operations - why treat it like a problem?

One thing is for sure - IBM i isn’t dead, it’s just underutilized.

You don’t need to replace your IBM i.

You just need to rethink it.

And future-ready CIOs aren’t replacing it. They’re futureproofing it.

Futureproofing isn’t a buzzword.

It’s a strategic commitment to helping you get more from what you already trust.

Whether you’re looking to -

Enhance reporting, compliance, and support

Eliminate dependency on green screens

Modernize with minimal disruption

…this is your moment to think bigger—and act smarter.

Let’s talk about what sustainable modernization looks like for you. No pressure. No pitch. Just a conversation worth having.

Let’s first understand where IBM i users go wrong.

Modern Needs, Legacy Constraints - Where Most IBM i Users Get Stuck

The IBM i/AS400 platform has been the technological backbone for industries like Finance, Manufacturing, Logistics, Retail, and Healthcare for running mission-critical operations.

Then why so much doubt about IBM i/AS400’s modern-day relevance?

Your business needs evolved around it, but the IBM i stack remained the same.

And with that lack of evolution, you are likely facing one or more of these struggles -

Green screen fatigue - Your users are navigating terminal-based interfaces in a world of dynamic, web-driven tools.

The great skill drain – IBM i/AS400 developers, RPG, COBOL, and DB2 experts are retiring faster than replacements can be found.

Manual workflows - Repetitive data entries, disconnected systems, and Excel-dependent processes are slowing you down.

Security exposure - With compliance requirements increasing, traditional setups without exit point controls or audit-ready logs create real risk, especially when no dedicated AS400 security solutions are in place.

Data silos - Your IBM i holds rich data—yet without proper integration, that value is locked away from your analytics stack.

Lack of AS400 support – With IBM i/AS400 programmers retiring fast, the shortage of AS400 support and solutions makes it hard to hire new programmers and get them up to speed.

The problem isn’t IBM i itself—it’s everything revolving around it.

A reliable IBM i/AS400 solutions & services provider can be of great help. To understand “How”, read ahead.

What’s Holding IBM i Users Back? (And Why It’s Not the Platform)

Most IBM i challenges don’t come from the core OS - but from aging interfaces, limited support, or disconnected systems.

Sound familiar?

You’re juggling green screens, but your competitors moved to web interfaces

You’ve lost your last RPG developer, and the replacements are hard to find

Compliance and security demands are growing—but your audit trail isn’t

Reporting is delayed because data’s locked in outdated workflows

The problem isn’t IBM i.

It’s the lack of a plan to evolve with it.

Next, let’s look at three approaches to futureproofing your IBM i.

Futureproofing IBM i – The New Gold Standard

Rip-and-replace transformations used to be the default answer.

But they come with risk, disruption, and massive costs.

That’s why we built a smarter model—one that treats your IBM i as an asset, not a liability – Futureproofing IBM i.

Thinking, “Why should you trust this model?”

Because, in the modern IT landscape you don’t need to choose between legacy and innovation.

The winning strategy is both.

Futureproofing your IBM i brings you the best of both worlds – preserving the legacy built over years with the leverage to innovate & grow.

If you want to replace IBM i – we’ll help you migrate to a modern tech stack.

If you want to keep IBM i as is – we’ll help you with expertise & resources.

If you want to modernize IBM i – we’ll help you futureproof it.

Thinking, “How?”

Let us show you how we help you succeed, whichever route you choose!

1. Revolutionary – Replace IBM i

As the name suggests, it’s a revolutionary approach where you migrate your existing IBM i workloads to a modern infrastructure. You move away from IBM i and choose a modern-day technology stack to stay relevant with changing business needs.

Put simply, it’s “rip & replace.”

Start afresh.

Replace green screens with modern UI.

Disregard existing processes & build from scratch.

It involves cost.

It involves extensive user training.

It involves testing, iterating, and restructuring processes.

As an IBM i/AS400 services partner, we have your back with due diligence.

We help you -

Customize UI/UX layering

Enable APIs and middleware deployment

Build workflow automations & role-based dashboards

Go Cloud-native with integration without rewriting your core logic

Translate legacy RPG code into modern-day programming languages

If you take this route, we’ve got you covered, from IBM i/AS400 programmers & resources to reliable AS400 support & solutions.

You gain -

Access to latest technology

Easier access to skilled resources

You are exposed to -

Training existing staff on new technology – work culture shift

Exhaustive technological investments, energy, and time

Potential data losses in complete migration

Customizations done over years are lost

New vendor management & training

Hardware & software updates

Process/workflow updates

Downtime post-migration

We are less likely to encourage this approach.

And not because we are an IBM Silver Business Partner.

We truly believe that -

Your IBM i has a lot more potential left in it.

You’ve spent years, or rather decades, building IBM i workflows.

You’ve invested not just cost but blood & sweat – your tech deserves retention.

Still, if you feel like moving away – we help you cross the bridge, with less friction, and lower operational disruption, through our tailored IBM i/AS400 solutions & services.

2. Conventional – Live with Your IBM i

With the conventional approach, nothing changes as you choose not to change.

“Keep as is” or “Live with your IBM i” leans more toward sticking with legacy infrastructure and core processes, while maintaining your IBM i environment and keeping it in top shape.

Challenges?

The green screens stay.

Reporting & data analytics suffer.

The disconnect between IT ecosystems persists.

The IBM i skill gap & hunt to hire IBM i/AS400 developers continues.

Thinking, “How does an IBM i/AS400 services partner help?”

As a reliable IBM i/AS400 support & solutions provider, we offer IBM i expertise and resources to support and maintain your IBM i environment.

We help you -

Train new hires on green screen interfaces & get them up to speed

Access specialized IBM i/AS400 developers to fill the skill gap

Integrate your IBM i workflows with modern solutions

Sustain your reporting & analytics requirements

Apply patches & fixes to keep your IBM i workflows relevant

Get tactical AS400 support at all levels

You gain -

Protection against the risk of data loss, as no migration is involved

Comfort for existing resources

You still must battle -

Missing out on technological advancements

Steep learning curves for new hires

Recurring downtimes

Scalability issues

We aren’t against this approach – but we realize your IBM i holds more potential.

We provide all that you need – expertise, resources, and support – to keep your IBM i environment running. But as we all know, the same problems keep recurring until they are fixed permanently.

3. Sustainable – Futureproof Your IBM i without Disruption

Futureproofing IBM i isn’t a buzzword, it’s a promise of sustainability.

A promise to protect the business processes you’ve built over the years, while embracing modern-day innovation to stay relevant.

Thinking, “Why does this work?”

Your core business processes remain intact.

Your IBM i infrastructure doesn’t undergo an overhaul.

Your mission-critical applications and operations aren’t affected.

Thinking, “How does it work?”

You get to keep what works and replace what doesn’t.

You get to move away from green screens and embrace modern UI.

You get to integrate your IBM i with modern solutions & improve reporting.

And all of this without disrupting your operations, through phased deployment.

Thinking, “How does an IBM i/AS400 solutions & services provider help?”

We help you -

Automate batch jobs

Integrate IBM i web services

Upgrade green screens to GUI

Migrate data to cloud/hybrid environments

Enhance data reporting with tools like Power BI

You gain -

Cleaner code

Customized dashboards

Advanced analytics & reporting

Modern UI & no more ‘green screens’

On-prem/hybrid to cloud data migration options

APIs for integrating existing IBM i with latest technologies

24/7 IT infrastructure, consulting, development, management, administration & helpdesk support with response & resolution

You may have concerns about -

Accessing future technologies as we aren’t replacing the codebase

Scaling the workloads seamlessly as we aren’t going cloud-native

We highly encourage the sustainable approach to IBM i modernization – because it ensures you retain years of trust, technology, and investments.

The goal? Build a better IBM i without breaking the one you have.

Why Choose Integrative Systems to Futureproof IBM i?

We’re not just another IBM i/AS400 solutions & services vendor. We’re the team that evolves with your business.

Whether you’re looking to stabilize, modernize, or extend your AS400 setup, we understand that the opportunity isn’t in replacing it—it’s in reimagining how it fits into your future-ready enterprise.

When you partner with Integrative Systems, you get -

Access to certified IBM i/AS400 developers—onshore, offshore, or hybrid

Experts in AS400 ERP modernization, security hardening, and workflow automation

A long-term partner that brings consulting, implementation, and support under one roof

The power of sustainable modernization, done your way

We believe in protecting your legacy, strengthening your current operations, and positioning you for the future—all without chaos.


IBM i

IBM i is an operating system created by IBM. It was released in 1988 as OS/400, renamed i5/OS in 2004, and is currently called IBM i. https://archiveos.org/ibmi/


My Writing Space

I recently came across an article describing the perfect writing space. In summary: “Sit in a giant fluffy bean bag with pen in hand.” Lying in a cozy spot only makes me want to fall asleep, so I thought it would be fun to describe my writing space and schedule.

Writing is a right-brain (creative) activity. These mental tools focus on social situations, nuance, and bringing imagination to words. I have learned the hard way that forcing myself to write only leads to disaster. Yet, I have seen people writing in Starbucks. So, there is room for alternative approaches.

At the beginning of my writing adventure, I had a plan regarding my writing place. It starts with a plain particleboard desk from Ikea. It is clean and faces a window with closed blinds. On my desk are a few pens, Post-its, and only one trinket, a silly air quality detector. Yes, I am a geek. On the left side is a paper scanner, and on the right is my computer. In front are three identical monitors. The left is positioned portrait, center landscape, and right portrait. I write on the left, do automated (Grammarly) editing on the center, and copy/paste on the right. When I purchased the monitors, they had the highest contrast available, which I expected would reduce eye strain.

My computer is eight years old, but it was a powerhouse when I purchased it. Today, it still runs the latest operating system with the latest updates. When it becomes too bogged down, becomes unreliable, or can no longer run the latest OS, I will upgrade. From a writing perspective, the keyboard is the most crucial part of my computer. It is a Unicomp that uses the same buckling-spring technology that IBM invented for its keyboards in the early 70s. Each key makes a loud ping/click noise when I type, which provides audio feedback. As a result, I make fewer typing errors. My mouse is a Logitech Trackman Marble trackball; Logitech stopped producing them in the 2000s, so I must buy them on eBay. This pointing device reduces hand strain and provides good finger feedback. I have mapped the center button to paste.

I only installed the essential programs and kept them updated. This includes Word, Excel, Visio, Corel Draw, Outlook, Firefox (my primary browser), Edge (for one website that will not work on Firefox), Rocketcake (my website builder), and Acrobat Professional. I do not install free programs or shareware because I do not want to risk stability or data loss. When I need to run something nonstandard, I use an old computer. Overall, I would describe my computer as 100% utility. It is not a gaming rig, a workstation, or a business desktop.

Since I began writing, I have had three office chairs. The first one was free from a company going out of business. It was comfortable, but it fell apart after two years. I paid $$$ for the second one, and while comfortable, it only lasted for a year and left hundreds of black fake leather bits everywhere. I am still finding them four years later. True Innovations made my present office chair; I purchased it at Costco. It has grey vinyl and adjusts well to my posture. I find it solid, and it does not squeak. I anticipate it will last a few years, but there was a mistake. I should have purchased three.

To me, writing is all business, but where is the joy? The joy comes from creating the words; the space supports this activity, so my space does not have clutter or frills. But where is the inspiration? When I turn to my right, I have a bookshelf loaded with personal memories, including family pictures and random stuff. This junk includes old test equipment, a professional video camera, ceramics made by my father, and a few record albums. I sometimes turn to this shelf for a distraction when I get stuck.

Writing is my third priority, the first being my family and the second being my full-time job. Still, I treat writing as a profession and try to reserve at least three hours per day on weekdays and two on weekends. My best writing is in the morning and early afternoon, but my job makes this problematic, so I write late.

The biggest disrupter of my creativity is YouTube. Yet, it is the perfect distraction to free my mind when I get stuck—a strange double-edged sword. What I need to do is set a distraction time limit. Yeah, I will work on that.

What do I watch? Politics, tractor repair (I have no idea why this fascinates me. My yard is 5x10 feet, and I will never own a tractor.), old computers, China, Ukraine, machining, and electronics. I do not watch videos about writing, entertainment, or other creative outlets.

That is how my bonkers mind creates what you have been reading. What advice do I have for other writers? Writing is like any other activity. To be good at it, one must take a high-level view with a goal in mind. This means asking questions, recording data, doing experiments, changing bad behavior, listening, researching, trying new things, and being dedicated. It has taken a long time to figure out what works for me, and it should be no surprise that other writers have come to different conclusions. A good example is George R. R. Martin, author of A Game of Thrones, who uses a DOS computer with WordStar 4.0. Yikes! Yet, he created an outstanding work.

Hmm. Perhaps I should do an eBay search for WordStar 4.0.

You’re the best -Bill

December 10, 2024

Hey, book lovers, I have published four books. Please check them out:

Interviewing Immortality. A dramatic first-person psychological thriller that weaves a tale of intrigue, suspense, and self-confrontation.

Pushed to the Edge of Survival. A drama, romance, and science fiction story about two unlikely people surviving a shipwreck and living with the consequences.

Cable Ties. A slow-burn political thriller that reflects the realities of modern intelligence, law enforcement, department cooperation, and international politics.

Saving Immortality. Continuing in the first-person psychological thriller genre, James Kimble searches for his former captor to answer his life’s questions.

These books are available in softcover on Amazon and in eBook format everywhere.


I'd say the 3.5" floppy was when computing became mainstream. Personal computers existed for a long time at around the price of a car. Then the prices crashed.

I don't know anyone who had an 8" at home. 5 1/4 was used for the models that were expensive for a normal household. My introduction was through a friend of the family who was a computer programmer, and whose equipment was paid for by the company. I saw some later at various places, but the few people I knew of who had them at home were definitely better off. Maybe they were relatively cheaper overseas. By the end of the 1980s, 5¼-inch disks had been superseded by 3½-inch disks.

And what else happened at around that time? Home internet. In fact, I'm pretty sure icons themselves came in at that time. There's no point having a symbol for a 5 1/4 when most of the save functions will be done either on a command line or something similar, and when the word processors came in, there was no standardisation anyway.

I think this was the first word processor I used. Not fun. Microsoft changed all that. So did Apple. Suddenly the same icons used in word processor would appear in a database. I can remember using the Amiga while most PC systems were using a CLI.

Notice the icon top right? It doesn't mean save.

Have a look at Windows 1.0. This was a bit of a flop. I never saw much point in Windows until 3.1, when it blew everyone away.

And there's the 3.5". So my theory is that by the time Windows-based systems were taking off, the 5 1/4 was on the way out, the 3.5 was taking over, and home internet was becoming mainstream.

This article points out that the 3.5 was actually around for a very long time.

"the format held out far longer than anyone expected, regularly shipping in PCs up until the mid-2000s" I can tell you sometimes the operating system manufacturers would release their product on 3.5".

I was flabbergasted when I bought OS/2 Warp and found out it was on floppy. No, they didn't warn me, certainly didn't install it for me, and it was NO REFUNDS. I think I bought it over the phone, along with a desktop from a shop in Parramatta. It was cheap, I was poor, and back then I had no idea that shops would be willing to burn the customers in exchange for a quick buck. Since then, I have always preferred being able to walk in and inspect before buying - but it is impossible nowadays. The install would often fail halfway through. Start again. One time I had the tech support guy on the phone with me for hours, because my hardware was supposed to be supported but it clearly wasn't. I gave up on that OS and it failed as a competitor to Windows, perhaps because Windows was being sold on CD. Oooh, Windows 95 was also sometimes sold on 3.5. I'd forgotten about that.

So there you are. Icons were around earlier but they only really took off with the Apple 1.0.

But I don't really remember seeing them used at home. And look at the drive it was using.

I think my first exposure to Macs was maybe '88? So I think of them as being pretty late; maybe they were more common in the US/Europe. But that was back in '84, and the snazzy 3.5 was quite prominent. I was already familiar with that sort of a computer - I think I must have already been using an Amiga 500 at home, and started ahead of most students in being able to use the system. Whereas I had a lot of friends who were still using a command line interface.

The Amiga 500 was a lot cheaper, and it showed, but under better management it could have been a real competitor to IBM/Microsoft. It was affordable for most families. That was mind-blowing. And the interface was a hell of a lot friendlier than the IBM clones had available. MS-DOS dominated the IBM PC compatible market between 1981 and 1995.

Windows 3.1, made generally available on March 1, 1992.

So basically everyone else had a GUI by that time. IBM clones were more business machines than anything else, but with 3.1, computing was starting to get to the point where granny could do it. Windows 95 and Microsoft Office changed everything, I would say, and pretty much crushed all opposition. We still technically have Apple computers, but they pretty much exist to be an expensive toy now. And of course, the consoles have taken over in gaming, and mobile computing continues to erode the very concept of the desktop, with top-down pressure to get rid of the very idea of owning a computer or an application, and just have people rent computing power and stream the results. At some point, I expect neural interfaces will take over. Embedded interfaces are weirdly popular in business already, I am told. And then I expect the current generation of iconography to disappear. But until then, the icons we know are largely there because of one woman working for Apple, and the save icon in particular is there because one form of technology was just so damned useful... and used long after it shouldn't have been for those bloody operating system installs....

TIL (click to go to the thread, which probably has more interesting tidbits I missed).

Bonus:


Linux Mint 22: Best Desktop Experience

I’ve navigated the labyrinthine world of operating systems for decades, starting with the venerable IBM OS/360 in the 1970s. Since then, I’ve traversed the landscape of Apple and Microsoft operating systems, encountering both the familiar and the obscure, like A/UX and Microsoft Xenix. I’ve also delved into the depths of over a hundred different Unix and Linux distributions. So, when I proclaim…


How to Check IBM i OS Version | CLP, RPG, SQL, and Command Line

There are a multitude of ways to check the IBM i OS version on your IBM i system (No, it is NOT an AS400 or iSERIES machine – Don’t argue. Don’t!). You can do it from Control Language programs (“How to retrieve IBMi (iSeries AS400) operating system version in a CLP program”). You can do it from RPG programs (“Find the IBMi (iSeries AS400) Operating System Version in a RPGLE program”). You can do it from…
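The post (truncated here) lists where the version can be read from. Whichever method you use, IBM i release levels are conventionally written in VnRnMn form (version, release, modification), for example V7R5M0. The Python helpers below are a minimal sketch that parses such a string and compares releases. They assume you already have the string in hand, since retrieving it on the system is not shown. `parse_vrm`, `at_least` and `IBMiRelease` are hypothetical names, not part of any IBM API.

```python
import re
from typing import NamedTuple


class IBMiRelease(NamedTuple):
    """Version, release and modification numbers of an IBM i level (hypothetical helper type)."""
    version: int
    release: int
    modification: int


# Matches strings such as "V7R5M0" (case-insensitive, surrounding spaces allowed).
_VRM_PATTERN = re.compile(r"^\s*V(\d+)R(\d+)M(\d+)\s*$", re.IGNORECASE)


def parse_vrm(text: str) -> IBMiRelease:
    """Parse a VnRnMn string into a (version, release, modification) tuple."""
    match = _VRM_PATTERN.match(text)
    if match is None:
        raise ValueError(f"not a VnRnMn release string: {text!r}")
    return IBMiRelease(*(int(group) for group in match.groups()))


def at_least(current: str, minimum: str) -> bool:
    """True when `current` is the same release as `minimum` or newer."""
    # NamedTuples compare element by element as integers, so V10R1M0 > V7R5M0,
    # which a plain string comparison would get wrong ("V1..." sorts before "V7...").
    return parse_vrm(current) >= parse_vrm(minimum)
```

For example, `parse_vrm("V7R5M0")` gives `IBMiRelease(version=7, release=5, modification=0)`, and `at_least("V7R4M0", "V7R3M0")` is `True`. Comparing numeric tuples instead of raw strings is the point of the design: as text, "V10R1M0" would sort before "V7R5M0".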


Grand Strategy Manifesto (16^12 article-thread ‘0x1A/?)


Broken down many times yet still unyielding in their vision quest, Nil Blackhand oversees the reality simulations & keeps on fighting back against entropy, a race against time itself...

So yeah, better embody what I seek in life and make real whatever I so desire now.

Anyways, I am looking forward to these... things in whatever order & manner they may come:

Female biological sex / gender identity

Ambidextrous

ASD & lessened ADD

Shoshona (black angora housecat)

Ava (synthetic-tier android ENFP social assistance peer)

Social context

Past revision into successful university doctorate graduation as historian & GLOSS data archivist / curator

Actual group of close friends (tech lab peers) with collaborative & emotionally empathetic bonds (said group large enough to balance absences out)

Mutually inclusive, empowering & humanely understanding family

Meta-physics / philosophy community (scientific empiricism)

Magicks / witch coven (religious, esoteric & spirituality needs)

TTRPGs & arcade gaming groups

Amateur radio / hobbyist tinkerers community (technical literacy)

Political allies, peers & mutual assistance on my way to the Progressives party (Harmony, Sustainability, Progress) objectives' fulfillment

Local cooperative / commune organization (probably something like Pflaumen, GLOSS Foundation & Utalics combined)

Manifesting profound changes in global & regional politics in an insightful & constructive direction, leading the world toward a brighter future fast & well;

?

Bookstore

Physical medium preservation

Video rental shop

Public libraries

Public archives

Shopping malls

Retro-computing living museum

Superpowers & feats

Hyper-competence

Photographic memory

600 years lifecycle with insightful & constructive historical impact for the better

Chronokinesis

True Polymorph morphology & dedicated morphological rights legality (identity card)

Multilingualism (Shoshoni, French, English, German, Hungarian, Atikamekw / Cree, Innu / Huron...)

Possessions

Exclusively copyleft-libre private home lab + VLSI workshop

Copyleft libre biomods

Copyleft libre cyberware

Privacy-respecting wholly-owned home residence / domain

Privacy-respecting personal electric car (I do like it looking retro & distinct/unique but whatever fits my fancy needs is fine)

Miyoo Mini+ Plus (specifically operating OnionOS) & its accessories

BeagleY-AI

BeagleV-Fire

RC2014 Mini II -> Mini II CP/M Upgrade -> Backplane Pro -> SIO/2 Serial Module (for Pro Tier) -> 512k ROM 512k RAM Module + DS1302 Real Time Clock Module (for ZED Tier) -> Why Em-Ulator Sound Module + IDE Hard Drive Module + RP2040 VGA Terminal + ESP8266 Wifi + Micro SD Card Module + CH375 USB Storage + SID-Ulator Sound Module -> SC709 RCBus-80pin Backplane Kit -> MG014 Parallel Port Interface + MG011 PseudoRandomNumberGen + MG003 32k Non-Volatile RAM + MG016 Morse Receiver + MG015 Morse Transmitter + MG012 Programmable I/O + "MSX Cassette + USB" Module + "MSX Cartridge Slot Extension" + "MSX Keyboard" -> Prototype PCBs

Intersil 6100/6120 System ( SBC6120-RBC? )

Pinephone Beta Edition Convergence Package (Nimrud replacement)

TUXEDO Stellaris Slim 15 Gen 6 AMD laptop (Nineveh replacement)

TUXEDO Atlas X - Gen 1 AMD desktop workstation (Ashur replacement)

SSD upgrade for Ashur build (~4-6TB SSD)

LTO Storage

NAS RAID6 48TB capacity with double parity (4x12TB for storage proper & 2x12TB for double parity)

Apple iMac M3-Max CPU+GPU 24GB RAM MagicMouse+Trackpad & their relevant accessories (to port Asahi Linux, Haiku & more sidestream indie-r OSes towards… with full legal permission rights)

Projects

OpenPOWER Microwatt

SPARC Voyager

IBM LinuxOne Mainframe?

Sanyo 3DO TRY

SEGA Dreamcast with VMUs

TurboGrafx 16?

Atari Jaguar CD

Nuon (game console)

SEGA's SG-1000 & SC-3000?

SEGA MasterSystem?

SEGA GameGear?

Casio Loopy

Neo Geo CD?

TurboExpress

LaserActive? LaserDisc deck?

45rpm autoplay mini vinyl records player

DECmate III with dedicated disk drive unit?

R2E Micral Portal?

Sinclair QL?

Xerox Daybreak?

DEC Alpha AXP Turbochannel?

DEC Alpha latest generation PCI/e?

PDP8 peripherals (including XY plotter printer, hard-copy radio-teletypes & vector touchscreen terminal);

?


For people who are not rabid fans of 16bit computers (the original audience I had in mind while writing) here is some extra context I think may help(?)

8086- a 16-bit Intel CPU (and, loosely, its family, which became the x86 line). This is the ancestor of the amd64 chips most modern personal computers have (with exceptions for Apple silicon computers, people who still use 32-bit CPUs or older, and some weird outliers)

ASM- assembly code. These are human-readable instructions that translate directly, essentially one-to-one, into the machine code a CPU can read and execute. Different CPUs and operating systems have different languages and variants, based on the architecture and purposes of the machines. This is as opposed to high-level languages such as Python or C#, which are further abstracted from the CPU, so individual commands don't inherently translate to a single, specific CPU instruction.

Registers- a small number of small, fast storage locations inside the CPU. This is where 8/16/32/64-bit CPUs differ: an 8-bit register can store 8 binary digits (bits), a 16-bit register can store 16, and so on. On an 8086 CPU, some registers are divided into a "higher" and "lower" half, and some code accesses only one of these 8-bit halves, or it accesses both halves but uses them separately (like using the higher half to decide what to do, and the lower half to decide how to do it).

Interrupt- A request for the CPU to stop executing its current code to perform a particular function. Based on which interrupt you call, and the values stored in the registers at the time it is called, it will do different things like print a character, print a string, change the monitor resolution, accept a keypress, and so on and so forth. This is very hardware- and OS-dependent :)

Binary-compatibility- The ability of a computer system to run the same compiled code as another computer (As opposed to needing to cross-compile, or even rewrite the code entirely)
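The register split from the "Registers" entry is plain bit arithmetic. Here is a minimal Python sketch of how the 16-bit AX value relates to its 8-bit halves, AH (high byte) and AL (low byte). The helper names `split_ax` and `join_ax` are made up for illustration and don't come from any library.

```python
def split_ax(ax: int) -> tuple[int, int]:
    """Split a 16-bit AX value into its (AH, AL) halves."""
    if not 0 <= ax <= 0xFFFF:
        raise ValueError("AX holds 16 bits: 0..0xFFFF")
    return ax >> 8, ax & 0xFF  # high byte -> AH, low byte -> AL


def join_ax(ah: int, al: int) -> int:
    """Combine AH (high byte) and AL (low byte) into one 16-bit AX value."""
    if not (0 <= ah <= 0xFF and 0 <= al <= 0xFF):
        raise ValueError("AH and AL hold 8 bits each: 0..0xFF")
    return (ah << 8) | al


# Each 8-bit half can take 2**8 = 256 distinct values; the whole of AX can take 2**16 = 65536.
```

For example, `split_ax(0x0E0A)` returns `(0x0E, 0x0A)`. That is the AH/AL pair the `MOV AX,0E0Ah` instruction in the newline example loads in one go.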

IBM PC compatibles are x86 computers that can run code for a contemporary IBM PC, as opposed to non-compatibles such as Apple computers. Semi-compatibles like the Tandy 2000 can sort of run IBM PC code but have some structural differences that cause parts of a piece of code to fail to work properly.

Apple computers have different CPUs entirely, while the PC-9800 series does use x86 CPUs. They just run a modified version of MS-DOS that's specialized for a Japanese market, and these modifications mean machine code for standard MS-DOS won't run on a PC-98.

If you have any questions about old computers, feel free to ask away :) I am mostly knowledgeable about 16-bit computers, but I do have some 8-bit knowledge as well (primarily the Commodore 64). But maybe send them to @elfntr, since that's where I usually post more technical computer stuff (as opposed to the more general computerposting I do here XD)

In conventional MS-DOS 8086 ASM, you can display a single character by loading values into AX and calling the BIOS video interrupt 0x10, like so:

    MOV AH,0Eh   ;tells int 10h to do teletype output
    MOV AL,"H"   ;character to print
    INT 10h

Now, AH and AL here are the two 8-bit halves of the 16-bit register AX (the high byte and low byte respectively), which means that with this display method conventional MS-DOS can only use up to 256 character codes, and don't forget that this includes control characters like line feed, carriage return, escape and so on, not just printed characters. To display a newline in 8086 MS-DOS ASM you can do:

    MOV AX,0E0Ah ;AH=0Eh (teletype output), AL=0Ah (line feed)
    INT 10h
    MOV AL,0Dh   ;carriage return
    INT 10h

(i shortened the first command compared to the original snippet because you now understand the relationship between AX, AH, and AL, dear reader. This saves a few CPU clocks :)
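To see why loading AX in one instruction is shorter, here is a toy Python assembler. It handles only the instructions used in this post (`MOV reg,imm` and `INT n`) and uses the standard 8086 short encodings: B0+r for an 8-bit register, B8+r for a 16-bit register with a little-endian immediate, and CD for INT. It is a sketch for counting bytes, not a real assembler. `assemble`, `assemble_all` and `parse_imm` are hypothetical names.

```python
# 3-bit register numbers used by the 8086 "MOV reg, immediate" short forms.
REG8 = {"AL": 0, "CL": 1, "DL": 2, "BL": 3, "AH": 4, "CH": 5, "DH": 6, "BH": 7}
REG16 = {"AX": 0, "CX": 1, "DX": 2, "BX": 3, "SP": 4, "BP": 5, "SI": 6, "DI": 7}


def parse_imm(text: str) -> int:
    """Parse an immediate: a quoted character ("H"), MASM-style hex (0Eh), or decimal."""
    text = text.strip()
    if len(text) == 3 and text[0] == text[2] and text[0] in "'\"":
        return ord(text[1])                 # character literal
    if text.lower().endswith("h"):
        return int(text[:-1], 16)           # hex with trailing h
    return int(text)


def assemble(line: str) -> bytes:
    """Assemble one 'MOV reg,imm' or 'INT n' line into 8086 machine code."""
    mnemonic, _, operands = line.strip().partition(" ")
    mnemonic = mnemonic.upper()
    if mnemonic == "INT":
        return bytes([0xCD, parse_imm(operands)])                 # CD ib
    if mnemonic == "MOV":
        reg, imm_text = (part.strip() for part in operands.split(",", 1))
        reg = reg.upper()
        imm = parse_imm(imm_text)
        if reg in REG8:
            return bytes([0xB0 + REG8[reg], imm])                 # B0+r ib
        if reg in REG16:
            return bytes([0xB8 + REG16[reg]]) + imm.to_bytes(2, "little")  # B8+r iw
    raise ValueError(f"unsupported instruction: {line!r}")


def assemble_all(lines: list[str]) -> bytes:
    """Assemble several lines and concatenate the machine code."""
    return b"".join(assemble(line) for line in lines)
```

`MOV AX,0E0Ah` assembles to three bytes (B8 0A 0E, with the low byte first), while `MOV AH,0Eh` followed by `MOV AL,0Ah` takes four. The whole newline snippet comes to 9 bytes instead of 10.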

So you're pretty limited on the number of characters you can display.... and that's why PC-98 MS-DOS is not binary compatible with standard flavor MS-DOS XD

Like, you can display 65536 characters if you use an entire 16-bit register for the character code and use another register to call the print operation, but first you need to have a reason to do this and to actually do it.
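Here is a toy Python model of what the BIOS teletype call (INT 10h with AH=0Eh) does with the character code in AL. It shows why the newline example needs both 0Ah and 0Dh, and why an 8-bit AL caps you at 256 codes. It is a simplified sketch resting on several assumptions: an 80x25 text screen, no colours, and only carriage return and line feed treated as control codes. The real BIOS also handles a few others, such as backspace and bell. `chr()` stands in for the real IBM PC character set, and the `Teletype` class is hypothetical.

```python
COLUMNS, ROWS = 80, 25  # assumed 80x25 text mode


class Teletype:
    """Toy model of BIOS INT 10h, AH=0Eh teletype output."""

    def __init__(self) -> None:
        self.screen = [[" "] * COLUMNS for _ in range(ROWS)]
        self.row = 0
        self.col = 0

    def _line_feed(self) -> None:
        if self.row == ROWS - 1:
            # Bottom line reached: scroll everything up by one row.
            self.screen.pop(0)
            self.screen.append([" "] * COLUMNS)
        else:
            self.row += 1

    def int10h_0eh(self, al: int) -> None:
        """Emulate one teletype call with the character code in AL."""
        if not 0 <= al <= 0xFF:
            raise ValueError("AL is an 8-bit register: codes 0..255 only")
        if al == 0x0D:      # carriage return: back to column 0, same row
            self.col = 0
        elif al == 0x0A:    # line feed: down one row, same column
            self._line_feed()
        else:               # other codes are drawn as-is in this toy
            self.screen[self.row][self.col] = chr(al)
            self.col += 1
            if self.col == COLUMNS:  # wrap at the right edge
                self.col = 0
                self._line_feed()

    def text(self) -> str:
        """Screen contents with trailing blanks removed."""
        return "\n".join("".join(line).rstrip() for line in self.screen).rstrip()
```

Feeding it "A", then 0Ah, then "B" leaves the B one column to the right on the next line, because a line feed alone does not return to column 0. Adding the 0Dh carriage return puts the next character back at the start of the line.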


A beginner’s guide on understanding IBM i AS/400 Operating System (OS)

Introduction

We live in a digital age where technology evolves every minute. None of this would have been possible without tech giants such as IBM. The company is known for selling the largest volume of sophisticated mid-range systems in the industry, such as the System/34, System/36 and System/38. IBM has constantly strived to deliver high-performance processors and evolved operating systems that run demanding workloads while remaining cost-efficient. This has been a huge boon for the industry, since it provides a two-pronged advantage: businesses run their solutions on cost-efficient platforms while protecting their investment in the applications that form the very core of their business.

Understanding the IBM i AS/400 Operating System: A Beginner’s Guide

The basic philosophy of IBM’s architecture is integration: the middleware components a business requires are built into the system itself, which is what the “i” in IBM i symbolises. On top of this, there are automated components and efficient data management capabilities that let customers manage their business with ease.

The AS/400 and its operating system are heralded as one of IBM’s greatest accomplishments. The IBM i AS/400 Operating System (OS) was designed to fit in departments of large companies as well as to serve small businesses, and it has reached almost every business line globally. It succeeded IBM’s earlier sophisticated midrange products and is known for an uptime of almost 99.9%.

What makes the IBM i series unique is the breadth of integration it offers. IBM i’s core architecture was derived from the System/38 design. Its features include a built-in relational database; single-level storage, so customers do not carry the huge responsibility of managing storage; the ability to handle multiple workloads; and a technology-independent machine interface that spares programs from being recompiled when the underlying hardware changes.

The AS/400 comes with a wide variety of interesting features, which may have to be added at an additional cost or managed separately.

· System Management: IBM Systems Director Navigator, a browser-based management tool, enables users, including administrators, to manage the IBM i partition. Its advantages include tracking, monitoring, and planning performance and resource use.

· Data Warehousing: The system can function as a large data repository, since it supports multiple terabytes of disk space and many gigabytes of RAM.

· Development system: The AS/400 architecture also lends itself to use as a development system because of its integrated Java Virtual Machine (JVM) and the Java-based commercial applications that IBM developed.

· Groupware services: For corporations, Domino and Notes provide sophisticated features such as online collaboration, email and project file sharing.

· Ecommerce Support: The AS/400 can provide ecommerce support for a midsize company, since it includes a web server and supporting applications for tasks such as tracking and monitoring orders and managing vendors.

From the beginning, the overall business philosophy driving the AS/400 was to make it an all-encompassing server that would replace PCs and web servers globally. In reality, the AS/400 and IBM i not only provided their customers with the maximum business value but probably far exceeded that original goal.


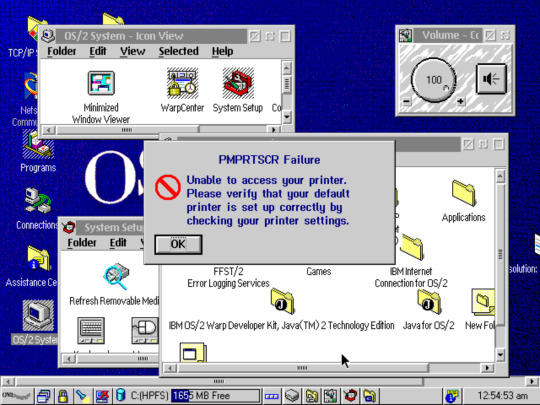

Obviously this isn't my usual sort of art, but I wanted a retro-vaporwave-webcore-esque picture for a Twitter banner and I didn't want to use someone else's work, so I just edited this screenshot I took of an OS/2 Warp virtual machine. I like how it looks, so I'm uploading it.

here's the original:

A neat old operating system with a really neat file system (HPFS) that in a lot of ways paved the way for NTFS, the file system used by Windows today. Sorry it didn't work out too well, IBM.


i am in Immense Pain due to Health is Shit so I'm going to recount my understanding of something pointless and boring to organize my thoughts and Distract Myself from Feeling Pain and then i'm gonna post it because deleting a lot of writing even if pointless feels bad

Original computers of course were basically one program one machine. Reprogrammability came quick, but still, basically ran one program at a time, kinda like a nes.

As they grew bigger they started to make machines that could access libraries of programs at a time, the line between program and function not being that clear yet

These were eventually developed into the first operating systems by the late 50s. The 60s saw the beginnings of things like Xerox's OS as the technology tried to focus on making OSes shareable - able to be operated by multiple users at once at different terminals. MIT, GE and Bell Labs created Multics around this time, which introduced the idea of privilege tiers (protection rings) or something, but the project was a mess, Bell pulled out, and some of its people created Unics, that is Unix, with similar concepts and such. The do one thing and do it well attitude was kind of born out of the Multics thing. IBM also got involved with the OS/360 stuff.

Running internal networks in offices and such was the driver for early computers. Throughout the 70s Bell licensed out UNIX, Xerox licensed out its OS, IBM did its thing, and competitors emerged at that level. The first GUIs started to become things, making the systems more user-friendly.

The rise of home computing upset things a bit. Not a lot, but a bit. IBM launched the PC on Intel's 8088, a variant of the 8086. Microsoft entered the picture with its UNIX system (Xenix), and Apple launched its Xerox-inspired things, popularizing the GUI desktop concept. Linus Torvalds got frustrated with MINIX, a small teaching UNIX-like system, not being able to use his machine to its full potential, and created a free UNIX-like kernel; combined with tools in part written by a crazy man, that made GNU/Linux. The X Window System was ported to Linux. Microsoft moved from its UNIX derivative to the DOS it supplied for IBM's PC (Gates' mother knew IBM's chairman through the United Way board, I think). That led to Windows.

Other home computers of note were things like the C64. Which didn't have a whole lot in terms of the OS but basically would drop you into a live BASIC environment which was enough for what it was.

The 90s was tough and MS gradually outcompeted other OSes in part because they had such a close relationship to IBM and the most popular flavor of DOS, in part because of dick moves. Apple managed to survive somehow, but barely, mostly by providing cheap mainframes for schools and certain businesses. Commodore went on to Amiga with its own GUI and everything, I think, but failed to win a market and the rights to Amiga OS are split between like 4 companies. UNIX systems managed to be popular as server OSes, because of features going back to the early days. MS and IBM got mad about windows and led MS to rewrite its OS and created the NT family and IBM developing OS/2 that kind of went nowhere.

Apple lost a ton of market share, and in 1997 it bought NeXT, whose NeXTSTEP (built on the Mach kernel and BSD) replaced the aging, Xerox-inspired classic Mac OS. That became Darwin and then Mac OS X, which is basically what Macs run now as macOS. Apple later dropped PowerPC for Intel's x86 in 2006, and has since moved to its own ARM-based chips. MS dominated everything and was terrible, and here we are. Linux established itself as a good thing to build cross-industry shit on, and later Android was built on the Linux kernel.

As consoles started to adopt real OSes instead of just a boot ROM launching the game, you'd see things like the dreamcast: it ran a Hitachi SH-4 rather than x86, but offered Windows CE with DirectX as an optional dev platform alongside Sega's own tools, so Microsoft's APIs were already in console developers' hands. That arguably eased the move to xbox after the dreamcast died, and is part of why xbox is the way it is. The original xbox of course ran a kernel derived from Windows 2000, i.e. Windows NT. sony's PS4 system software is derived from FreeBSD, with sony's own graphics APIs rather than DirectX. nintendo did their own stuff for the powerpc based consoles and suffered security holes for it. the ds was similarly in-house with arm hardware, as was the 3ds. The switch's OS evolved from the 3ds software, with some adapted FreeBSD and android code (but not a lot). steam os 3 (the steam deck one) is based on arch linux; earlier versions were based on debian

i need to map out linux also

because linux is so modular, and because no one can tell you not to, basically everything is customizable. a really good thing about linux is the concept of a package manager, which installs, updates and removes software on the computer in a neat, organized way

one of the first of these was basically slackware's pkgtool back in the early 90s. later package managers (debian's dpkg and apt, red hat's rpm) started coming with fancy things like dependency resolution and conflict handling. basically what makes a linux distribution is its package manager; everything else is relatively superficial, since in principle you can run any desktop environment your computer can build or whatever.
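to make "dependency management" a bit more concrete, here's a toy sketch in python. it is not how pkgtool, apt or pacman actually work; the package index format and the names `install_order` and `find_conflicts` are made up for illustration. given which packages each package needs, it works out an install order where every dependency lands before the thing that needs it (a topological sort), plus a naive check for declared conflicts.

```python
# Toy dependency resolver, sketched under the assumption of a made-up package
# index that maps each package name to the packages it directly depends on.
# Real package managers (apt, dnf, pacman, ...) also deal with versions,
# alternatives and repositories; none of that is modeled here.
from typing import Dict, List, Set, Tuple


def install_order(index: Dict[str, List[str]], target: str) -> List[str]:
    """Return packages to install, dependencies first, ending with target.

    Uses a depth-first topological sort. Raises KeyError if a dependency is
    missing from the index and ValueError on a dependency cycle.
    """
    order: List[str] = []
    done: Set[str] = set()         # already placed in `order`
    in_progress: Set[str] = set()  # on the current depth-first path

    def visit(pkg: str) -> None:
        if pkg in done:
            return
        if pkg in in_progress:
            raise ValueError(f"dependency cycle involving {pkg!r}")
        in_progress.add(pkg)
        for dep in index[pkg]:  # KeyError here means an unknown package
            visit(dep)
        in_progress.remove(pkg)
        done.add(pkg)
        order.append(pkg)  # all of pkg's dependencies are already in `order`

    visit(target)
    return order


def find_conflicts(packages: List[str],
                   conflicts: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Return (a, b) pairs where package a declares a conflict with b
    and both are in the planned install set."""
    chosen = set(packages)
    return [(a, b) for a in packages for b in conflicts.get(a, []) if b in chosen]


if __name__ == "__main__":
    # Hypothetical package names, purely for illustration.
    index = {
        "editor": ["gui-toolkit", "libc"],
        "gui-toolkit": ["libc", "fonts"],
        "fonts": [],
        "libc": [],
    }
    plan = install_order(index, "editor")
    print(plan)  # ['libc', 'fonts', 'gui-toolkit', 'editor']
    print(find_conflicts(plan, {"editor": ["other-editor"]}))  # []
```

real resolvers also have to pick between versions and alternative providers, which makes the general problem much harder (it's NP-complete), so treat this as the basic idea only.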

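there's still something to the other spins, though. ubuntu is basically built on debian's packages, releases every six months with more cutting edge software than debian stable, and layers canonical's decisions on top. linux mint builds on ubuntu by adding a layer of decision makers between canonical and the user (for example, mint doesn't push canonical's snap packages).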

main distros i think are arch, debian, ubuntu, mint. people meme about gentoo, and it's probably the most widely supported source-based distro, but, like, compiling everything yourself is a bad idea in ways you can only really learn from experience. there's sabayon, which simplifies gentoo with prebuilt binaries but kind of defeats the purpose by existing. red hat was one of the biggest popularizers of linux in the enterprise space and deserves a mention, with fedora being its free community distro; suse is also there. these are fine and cool despite the unfortunate name for fedora

there's a bunch of little spin-offs of arch and other distros aiming to make them simpler or provide a preconfigured gui. largely pointless but whatever; sometimes you just want a graphical installer, and manjaro's there for you
