#Internet data centers

Text

Internet data centers are fueling drive to old power source: Coal. (Washington Post)

A helicopter hovers over the Gee family farm, the noisy rattle echoing inside their home in this rural part of West Virginia. It’s holding surveyors who are eyeing space for yet another power line next to the property — a line that will take electricity generated from coal plants in the state to address a drain on power driven by the world’s internet hub in Northern Virginia 35 miles away.

There, massive data centers with computers processing nearly 70 percent of global digital traffic are gobbling up electricity at a rate officials overseeing the power grid say is unsustainable unless two things happen: Several hundred miles of new transmission lines must be built, slicing through neighborhoods and farms in Virginia and three neighboring states. And antiquated coal-powered electricity plants that had been scheduled to go offline will need to keep running to fuel the increasing need for more power, undermining clean energy goals.

“It’s not right,” said Mary Gee, whose property already abuts two power lines that serve as conduits for electricity flowing toward the biggest concentration of data centers, in Loudoun County, home to what’s known as Data Center Alley. “These power lines? They’re not for me and my family. I didn’t vote on this. And the data centers? That’s not in West Virginia. That’s a whole different state.”

The $5.2 billion effort has fueled a backlash against data centers through the region, prompting officials in Virginia to begin studying the deeper impacts of an industry they’ve long cultivated for the hundreds of millions of dollars in tax revenue it brings to their communities.

Critics say it will force residents near the coal plants to continue living with toxic pollution, ironically to help a state — Virginia — that has fully embraced clean energy. And utility ratepayers in the affected areas will be forced to pay for the plan in the form of higher bills, those critics say.

But PJM Interconnection, the regional grid operator, says the plan is necessary to maintain grid reliability amid a wave of fossil fuel plant closures in recent years, prompted by the nation’s transition to cleaner power.



Power lines will be built across four states in a $5.2 billion effort that, relying on coal plants that were meant to be shuttered, is designed to keep the electric grid from failing amid spiking energy demands.

Cutting through farms and neighborhoods, the plan converges on Northern Virginia, where a growing data center industry will need enough extra energy to power 6 million homes by 2030.

With not enough green energy facilities connected to the grid yet, enough coal and natural gas energy to power 32 million homes is expected to be lost by 2030 at a time when the demand from the growing data center industry, electric vehicles and other new technology is on the rise, PJM says.

“The system is in a major transition right now, and it’s going to continue to evolve,” Ken Seiler, PJM’s senior vice president in charge of planning, said in a December stakeholders’ meeting about the effort to buy time for green energy to catch up. “And we’ll look for opportunities to do everything we can to keep the lights on as it goes through this transition.”

A need for power

Data centers that house thousands of computer servers and the cooling equipment needed for them to run have been multiplying in Northern Virginia since the late 1990s, spreading from the industry’s historic base in Loudoun County to neighboring Prince William County and, recently, across the Potomac River into Maryland. There are nearly 300 data centers now in Virginia.

With Amazon Web Services pursuing a $35 billion data center expansion in Virginia, rural portions of the state are the industry’s newest target for development.

But data centers also consume massive amounts of energy.

One data center can require 50 times the electricity of a typical office building, according to the U.S. Department of Energy. Multiple-building data center complexes, which have become the norm, require as much as 14 to 20 times that amount.

The demand has strained utility companies, to the point where Dominion Energy in Virginia briefly warned in 2022 that it may not be able to keep up with the pace of the industry’s growth.

The utility — which has since accelerated plans for new power lines and substations to boost its electrical output — predicts that by 2035 the industry in Virginia will require 11,000 megawatts, nearly quadruple what it needed in 2022, or enough to power 8.8 million homes.

The smaller Northern Virginia Electric Cooperative recently told PJM that the more than 50 data centers it serves account for 59 percent of its energy demand. It expects to need to serve about 110 more data centers by July 2028.

Meanwhile, the amount of energy available is not growing quickly enough to meet that future demand. Coal plants have scaled down production or shut down altogether as the market transitions to green energy, hastened by laws in Maryland and Virginia mandating net-zero greenhouse gas emissions by 2045 and, for several other states in the region, by 2050.

Dominion is developing a 2,600-megawatt wind farm off Virginia Beach — the largest such project in U.S. waters — and the company recently gained state approval to build four solar projects.

But those projects won’t be ready in time to absorb the projected gap in available energy. Opponents of PJM’s plan say it wouldn’t be necessary if more green energy had been connected to the grid faster, pointing to projects that were caught up in bureaucratic delays for five years or longer before they were connected.

A PJM spokesperson said the organization has recently sped up its approval process and is encouraging utility companies and federal and state officials to better incorporate renewable energy.

About 40,000 megawatts of green energy projects have been cleared for construction but are not being built because of issues related to financing or siting, the PJM spokesperson said.

Once more renewable energy is available, some of the power lines being built to address the energy gap may no longer be needed as the coal plants ultimately shut down, clean energy advocates say — though utility companies contend the extra capacity brought by the lines will always be useful.

“Their planning is just about maintaining the status quo,” Tom Rutigliano, a senior advocate for clean energy at the Natural Resources Defense Council, said about PJM. “They do nothing proactive about really trying to get a handle on the future and get ready for it.”

‘Holding on tight’ to coal

The smoke from two coal plants near West Virginia’s border with Pennsylvania billows over the city of Morgantown, adding a brownish tint to the air.

The owner of one of the Morgantown-area plants, Longview LLC, recently emerged from bankruptcy. After a restructuring, the facility is fully functioning, utilizing a solar farm to supplement its coal energy output.

The other two plants belong to the Ohio-based FirstEnergy Corp. utility, which had plans to significantly scale down operations there to meet a company goal of reducing its greenhouse gas emissions by nearly a third over the next six years.

The FirstEnergy plants are among the state’s worst polluters, said Jim Kotcon, a West Virginia University plant pathology professor who oversees conservation efforts at the Sierra Club’s West Virginia chapter.

The Harrison plant pumped out a combined 12 million tons of coal pollutants like sulfur and nitrous oxides in 2023, more than any other fossil fuel plant in the state, according to Environmental Protection Agency data. The Fort Martin plant, which has been operating since the late 1960s, emitted the state’s highest levels of nitrous oxides in 2023, at 5,240 tons.

After PJM tapped the company to build a 36-mile-long portion of the planned power lines for $392 million, FirstEnergy announced in February that the company is abandoning a 2030 goal to significantly cut greenhouse gas emissions because the two plants are crucial to maintaining grid reliability.

The news has sent FirstEnergy’s stock price up by 4 percent, to about $37 a share this week, and was greeted with jubilation by West Virginia’s coal industry.

“We welcome this, without question, because it will increase the life of these plants and hundreds of thousands of mining jobs,” said Chris Hamilton, president of the West Virginia Coal Association. “We’re holding on tight to our coal plants.”

Since 2008, annual coal production in West Virginia has dipped by nearly half, to about 82 million tons, though the industry — which contributes about $5.5 billion to the state’s economy — has rebounded some due to an export market to Europe and Asia, Hamilton said.

Hamilton said his association will lobby hard for FirstEnergy’s portion of the PJM plan to gain state approval. The company said it will submit its application for its power line routes in mid-2025.

PJM asked the plants’ owner, Texas-based Talen Energy Corp., to keep them running through 2028 — with the yet-to-be determined cost of doing so passed on to ratepayers.

That would mean amending a 2018 federal court consent decree, in which Talen agreed to stop burning coal to settle a lawsuit brought by the Sierra Club over Clean Water Act violations. The Sierra Club has rejected PJM’s calls to do so.

“We need a proactive plan that is consistent with the state’s clean energy goals,” said Josh Tulkin, director of the Sierra Club’s Maryland chapter, which has proposed an alternative plan to build a battery storage facility at the Brandon Shores site that would cut the time needed for the plants to operate.

A PJM spokesperson said the organization believes that such a facility wouldn’t provide enough reliable power and is not ruling out seeking a federal emergency order to keep the coal plants running.

With the matter still unresolved, nearby residents say they are anxious to see them closed.

“It’s been really challenging,” said John Garofolo, who lives in the Stoney Beach neighborhood community of townhouses and condominiums, where coal dust drifts into the neighborhood pool when the facilities are running. “We’re concerned about the air we’re breathing here.”

Sounding alarms

Keryn Newman, a Charles Town activist, has been sounding alarms in the small neighborhoods and farm communities along the path of the proposed power lines in West Virginia.

Because FirstEnergy prohibits any structure from interfering with a power line, building a new line along the right of way — which would be expanded to make room for the third line — would mean altering the character of residents’ properties, Newman said.

“It gobbles up space for play equipment for your kid, a pool or a barn,” she said. “And a well or septic system can’t be in the right of way.”

A FirstEnergy spokesperson said the company would compensate property owners for any land needed, with eminent domain proceedings a last resort if those property owners are unwilling to sell.

Pam and Gary Gearhart fought alongside Newman against the defeated 765-kilovolt line, which would have forced them to move a septic system near FirstEnergy’s easement. But when Newman showed up recently to their Harpers Ferry-area neighborhood to discuss the new PJM plan, the couple appeared unwilling to fight again.

Next door, another family had already decided to leave, the couple said, and was in the midst of loading furniture into a truck when Newman showed up.

“They’re just going to keep okaying data centers; there’s money in those things,” Pam Gearhart said about local governments in Virginia benefiting from the tax revenue. “Until they run out of land down there.”

In Loudoun County, where the data center industry’s encroachment into neighborhoods has fostered resentment, community groups are fighting a portion of the PJM plan that would build power lines through the mostly rural communities of western Loudoun.

The lines would damage the views offered by surrounding wineries and farms that contribute to Loudoun’s $4 billion tourism industry, those groups say.

Bill Hatch owns a winery that sits near the path of where PJM suggested one high-voltage line could go, though that route is still under review.

“This is going to be a scar for a long time,” Hatch said.

Reconsidering the benefits

Amid the backlash, local and state officials are reconsidering the data center industry’s benefits.

The Virginia General Assembly has launched a study that, among other things, will look at how the industry’s growth may affect energy resources and utility rates for state residents.

But that study has held up efforts to regulate the industry sooner, frustrating activists.

“We should not be subsidizing this industry for another minute, let alone another year,” Julie Bolthouse, director of land use at the Piedmont Environmental Council, chided a Senate committee that voted in February to table a bill that would force data center companies to pay more for new transmission lines.

Loudoun is moving to restrict where in the county data centers can be built. Up until recently, data centers have been allowed to be built without special approvals wherever office buildings are allowed.

But such action will do little to stem the worries of people like Mary and Richard Gee.

As it is, the two lines near their property produce an electromagnetic field strong enough to charge a garden fence with a light current of electricity, the couple said. When helicopters show up to survey the land for a third line, the family’s dog, Peaches, who is prone to seizures, goes into a barking frenzy.

An artist who focuses on natural landscapes, Mary Gee planned to convert the barn that sits in the shadow of a power line tower to a studio. That now seems unlikely, she said.

Lately, her paintings have reflected her frustration. One picture shows birds with beaks wrapped shut by transmission line. Another has a colorful scene of the rural Charles Town area severed by a smoky black and gray landscape of steel towers and a coal plant.

CORRECTION

A previous version of this article incorrectly reported that Prince William County receives $400 million annually in taxes on the computer equipment inside data centers. It receives $100 million annually. In addition, the article incorrectly stated that two FirstEnergy plants in West Virginia have been equipped with carbon-capturing technology. They do not have such technology in place. The article has been corrected.


Text

SIGNAL BASED TIME TRAVEL: IF YOU HAVE MILITARY COMPUTERS THAT ENABLE YOU TO TRAVEL TO A FOREIGN PLANET AND DISGUISE YOURSELF AS A MEMBER OF THE CIVILIAN POPULATION, AN ILLEGAL SPY IN TERMS OF LAWS RELATED TO WAR, YOU CAN USE THOSE COMPUTERS TO SEARCH OUR COMPUTER NETWORK, WHICH WE CALL THE INTERNET. CAN YOU SAY IT WITH ME? IN TER NET. LET ME REALLY SLOW IT DOWN BECAUSE YOU SEEM TO BE HARD OF HEARING OR FUNCTIONALLY DEFICIENT IN TERMS OF COGNITION OR COMPREHENSION

In tur net

Iiiiiiiiiiiiin teeeeeeeer neeet

#internet#google data centers have their own copies of publicly available electronic information sources#google indexes all the data it has so it can provide search results#a duplicate copy#in each of the Google data centers#and that's just one search engine#what exactly are you scanning (if anything) before you decide to invade our species' home planet again#what are you using for your military intelligence#you're just one time of many#sona versus baku in the film star trek insurrection#you can join the queue to bafti otherwise because ignoring all this proves you're really deliberate time traveling criminals#square military rank insignia militaries#davis california and william windsor and william atreides and shran bew william of andor and terra#nazi attacks are happening on the planet Earth all the time#gomez y merovingian et romanov y sobieski y atreides y terra y andor y shran y bew y william y selena y anastasia square military rank unit#celebrities#artists on tumblr#beauty#star wars#taylor swift#star wars: rogue one#square military rank insignia militaries showing up to finish or repeat the davis mind control rape for their own good to rescue them#rape for own good to rescue all that come here - even though they never left#you truly are following your raping women in arenas while they're strapped to a giant X masters#i already said it#square military rank insignia militaries are free to bafti if they come here#now i know my fully codeds#domo arrigato roboto san#close#audible words - that took forever - heard at night while apparently asleep - Bradley Carl Geiger - 8774 Williamson - Sacramento California


Text

Search Engines:

Search engines are independent computer systems that read, or crawl, webpages, documents, information sources, and links of all types accessible on the internet, the global network of computers on the planet Earth. At their most basic level, search engines read every word in every document they know of and record which documents each word appears in, so that by searching for a word or set of words you can locate the addresses of documents containing those words. More advanced search engines use more sophisticated algorithms to sort the pages or documents returned as search results in order of likely relevance to the search terms. Some search engines now also incorporate machine learning, including large language models (LLMs). Machine learning, artificial intelligence, or large language models can be run in a virtual machine or shell on a computer and allowed to access all or part of the accessible data, as needs dictate.
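The word-to-document bookkeeping described above is usually called an inverted index. Here is a minimal sketch of the idea in Python. The document addresses and texts are made up for illustration, the function names are hypothetical, and real search engines add crawling, ranking, and much more on top of this.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map each word to the set of document addresses that contain it."""
    index = defaultdict(set)
    for address, text in documents.items():
        for word in tokenize(text):
            index[word].add(address)
    return index


def search(index, query):
    """Return the addresses of documents containing every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical documents keyed by made-up addresses (assumption, not real pages).
documents = {
    "example.test/a": "Data centers consume massive amounts of energy",
    "example.test/b": "Search engines index data from many documents",
    "example.test/c": "Coal plants supply energy to the grid",
}
index = build_index(documents)
print(sorted(search(index, "energy data")))
```

A query is answered by intersecting the sets for each word, so no document has to be re-read at search time. Sorting the results by relevance would be a separate step that this sketch leaves out.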

#llm#large language model#search engine#search engines#Google#bing#yahoo#yandex#baidu#dogpile#metacrawler#webcrawler#search engines imbeded in individual pages or operating systems or documents to search those individual things individually#computer science#library science#data science#machine learning#google.com#bing.com#yahoo.com#yandex.com#baidu.com#...#observe the buildings and computers within at the dalles Google data center to passively observe google and its indexed copy of the internet#the dalles oregon next to the river#google has many data centers worldwide so does Microsoft and many others


Text

do u think people would be less stupid about ai if it was called something else

Like if they knew it wasn’t “smart” and is instead plagiarizing would they stop worshiping it so much

Then again the people who are into it are nft cryptobros and very real business™️ people with real jobs that definitely aren’t fake (cough) who just want to fire anyone to save .1% of the company budget

so they’d probably fall for it anyway

It just seems like people are getting the wrong idea :p

#that being said yes ai is currently destroying the internet by spamming the lowest quality garbage imaginable#but it’s not intelligent. just an algorithm#the predictive text on your phone#the fear of losing your job is real I can understand that#what I’m talking about is the people who are convinced it’s like. self aware? lol#these people have convinced themselves this is The Big Thing and right now in its current state. it’s not#if you need endless braindead slop then yeah it’s fine#but it’s just a toy right now#unfortunately people are losing their job to a toy#but thats a whole topic right there that i am not going to pretend I can get into#the end goal is dirt cheap work that passes the 1 second sniff test before someone scrolls past#whether the work is made by someone being paid a few dollars a day in a poor country or a data center doesn’t really matter to them#whichever option is cheaper is the one they’ll pick#world is a fuck etc etc we all know this


Text

If anyone wants to know why every tech company in the world right now is clamoring for AI like drowned rats scrabbling to board a ship, I decided to make a post to explain what's happening.

(Disclaimer to start: I'm a software engineer who's been employed full time since 2018. I am not a historian nor an overconfident YouTube essayist, so this post is my working knowledge of what I see around me and the logical bridges between pieces.)

Okay anyway. The explanation starts further back than what's going on now. I'm gonna start with the year 2000. The Dot Com Bubble just spectacularly burst. The model of "we get the users first, we learn how to profit off them later" went out in a no-money-having bang (remember this, it will be relevant later). A lot of money was lost. A lot of people ended up out of a job. A lot of startup companies went under. Investors left with a sour taste in their mouth and, in general, investment in the internet stayed pretty cooled for that decade. This was, in my opinion, very good for the internet as it was an era not suffocating under the grip of mega-corporation oligarchs and was, instead, filled with Club Penguin and I Can Haz Cheezburger websites.

Then around the 2010-2012 years, a few things happened. Interest rates got low, and then lower. Facebook got huge. The iPhone took off. And suddenly there was a huge new potential market of internet users and phone-havers, and the cheap money was available to start backing new tech startup companies trying to hop on this opportunity. Companies like Uber, Netflix, and Amazon either started in this time, or hit their ramp-up in these years by shifting focus to the internet and apps.

Now, every start-up tech company dreaming of being the next big thing has one thing in common: they need to start off by getting themselves massively in debt. Because before you can turn a profit you need to first spend money on employees and spend money on equipment and spend money on data centers and spend money on advertising and spend money on scale and and and

But also, everyone wants to be on the ship for The Next Big Thing that takes off to the moon.

So there is a mutual interest between new tech companies, and venture capitalists who are willing to invest $$$ into said new tech companies. Because if the venture capitalists can identify a prize pig and get in early, that money could come back to them 100-fold or 1,000-fold. In fact it hardly matters if they invest in 10 or 20 total bust projects along the way to find that unicorn.

But also, becoming profitable takes time. And that might mean being in debt for a long long time before that rocket ship takes off to make everyone onboard a gazillionaire.

But luckily, for tech startup bros and venture capitalists, being in debt in the 2010s was cheap, and it only got cheaper between 2010 and 2020. If people could secure loans for ~3% or 4% annual interest, well then a $100,000 loan only really costs $3,000 of interest a year to keep afloat. And if inflation is higher than that or at least similar, you're still beating the system.

So from 2010 through early 2022, times were good for tech companies. Startups could take off with massive growth, showing massive potential for something, and venture capitalists would throw infinite money at them in the hopes of pegging just one winner who will take off. And supporting the struggling investments or the long-haulers remained pretty cheap to keep funding.

You hear constantly about "Such and such app has 10-bazillion users gained over the last 10 years and has never once been profitable", yet the thing keeps chugging along because the investors backing it aren't stressed about the immediate future, and are still banking on that "eventually" when it learns how to really monetize its users and turn that profit.

The pandemic in 2020 took a magnifying-glass-in-the-sun effect to this, as EVERYTHING was forcibly turned online which pumped a ton of money and workers into tech investment. Simultaneously, money got really REALLY cheap, bottoming out with historic lows for interest rates.

Then the tide changed with the massive inflation that struck late 2021. Because this all-gas no-brakes state of things was also contributing to off-the-rails inflation (along with your standard-fare greedflation and price gouging, given the extremely convenient excuses of pandemic hardships and supply chain issues). The Federal Reserve whipped out interest rate hikes to try to curb this huge inflation, which is like a fire extinguisher dousing and suffocating your really-cool, actively-on-fire party where everyone else is burning but you're in the pool. And then they did this more, and then more. And the financial climate followed suit. And suddenly money was not cheap anymore, and new loans became expensive, because loans that used to compound at 2% a year are now compounding at 7 or 8% which, in the language of compounding, is a HUGE difference. A $100,000 loan at a 2% interest rate, if not repaid a single cent in 10 years, accrues to $121,899. A $100,000 loan at an 8% interest rate, if not repaid a single cent in 10 years, more than doubles to $215,892.
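If you want to check those two numbers yourself, here's a quick sketch in Python. It assumes interest compounds once a year and nothing is ever paid back, which is the scenario described above. The function name is just something picked for illustration.

```python
def compound_balance(principal, annual_rate, years):
    """Balance owed after compounding once per year with no repayments."""
    return principal * (1 + annual_rate) ** years


# Same loan, two interest-rate climates, ten years of not paying a cent.
for rate in (0.02, 0.08):
    balance = compound_balance(100_000, rate, 10)
    print(f"{rate:.0%}: ${balance:,.0f}")
# prints:
# 2%: $121,899
# 8%: $215,892
```

The rate sits inside an exponent, so quadrupling it from 2% to 8% does a lot more than quadruple the interest owed: roughly $21,900 becomes roughly $115,900.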

Now it is scary and risky to throw money at "could eventually be profitable" tech companies. Now investors are watching companies burn through their current funding and, when the companies come back asking for more, investors are tightening their coin purses instead. The bill is coming due. The free money is drying up and companies are under compounding pressure to produce a profit for their waiting investors who are now done waiting.

You get enshittification. You get quality going down and price going up. You get "now that you're a captive audience here, we're forcing ads or we're forcing subscriptions on you." Don't get me wrong, the plan was ALWAYS to monetize the users. It's just that it's come earlier than expected, with way more feet-to-the-fire than these companies were expecting. ESPECIALLY with Wall Street as the other factor in funding (public) companies, where Wall Street exhibits roughly the same temperament as a baby screaming crying upset that it's soiled its own diaper (maybe that's too mean a comparison to babies), and now companies are being put through the wringer for anything LESS than infinite growth that Wall Street demands of them.

Internal to the tech industry, you get MASSIVE widespread layoffs. You get an industry where it used to be easy to land multiple job offers shriveling up and leaving recent graduates in a desperately awful situation where no company is hiring and the market is flooded with laid-off workers trying to get back on their feet.

Because those coin-purse-clutching investors DO love virtue-signaling efforts from companies that say "See! We're not being frivolous with your money! We only spend on the essentials." And this is true even for MASSIVE, PROFITABLE companies, because those companies' value is based on the Rich Person Feeling Graph (their stock) rather than the literal profit money. A company making a genuine gazillion dollars a year still tears through layoffs and freezes hiring and removes the free batteries from the printer room (totally not speaking from experience, surely) because the investors LOVE when you cut costs and take away employee perks. The "beer on tap, ping pong table in the common area" era of tech is drying up. And we're still unionless.

Never mind that last part.

And then in early 2023, AI (more specifically, ChatGPT, which is OpenAI's Large Language Model creation) tears its way into the tech scene with a meteor's amount of momentum. Here's Microsoft's prize pig, which it invested heavily in and is gallivanting around the pig-show with, to the desperate jealousy and rapture of every other tech company and investor wishing it had that pig. And for the first time since the interest rate hikes, investors have dollar signs in their eyes, both venture capital and Wall Street alike. They're willing to restart the hose of money (even with the new risk) because this feels big enough for them to take the risk.

Now all these companies, who were in varying stages of sweating as their bill came due, or wringing their hands as their stock prices tanked, see a single glorious gold-plated rocket up out of here, the likes of which haven't been seen since the free money days. It's their ticket to buy time, and buy investors, and say "see THIS is what will wring money forth, finally, we promise, just let us show you."

To be clear, AI is NOT profitable yet. It's a money-sink. Perhaps a money-black-hole. But everyone in the space is so wowed by it that there is a widespread and powerful conviction that it will become profitable and earn its keep. (Let's be real, half of that profit "potential" is the promise of automating away jobs of pesky employees who peskily cost money.) It's a tech-space industrial revolution that will automate away skilled jobs, and getting in on the ground floor is the absolute best thing you can do to get your pie slice's worth.

It's the thing that will win investors back. It's the thing that will get the investment money coming in again (or, get it second-hand if the company can be the PROVIDER of something needed for AI, which other companies with venture backing will pay handsomely for). It's the thing companies are terrified of missing out on, lest it leave them utterly irrelevant in a future where not having AI-integration is like not having a mobile phone app for your company or not having a website.

So I guess to reiterate on my earlier point:

Drowned rats. Swimming to the one ship in sight.


Text

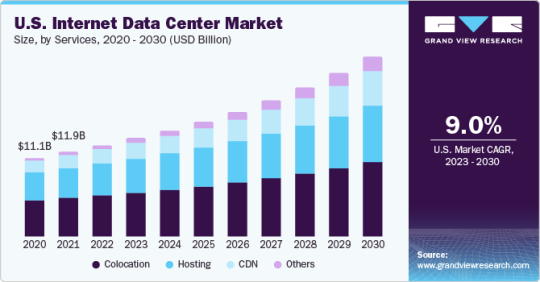

Internet Data Center Market Size To Reach $107.26 Billion By 2030

Internet Data Center Market Growth & Trends

The global internet data center market size is projected to reach USD 107.26 billion by 2030, registering a CAGR of 10.6%, according to a new study by Grand View Research Inc. As businesses all over the world embark on a relentless journey of digital transformation, the market for Internet Data Center (IDC) will expand at an astounding rate. IDCs act as the crucial infrastructure supporting this paradigm-shifting transformation in an age where data is money and information is power. Unprecedented amounts of data are being produced and consumed by businesses, governments, and people, which has forced the market to grow and change to keep up with the demand.
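For readers who want to see how a compound annual growth rate (CAGR) relates to the projected figure, the sketch below works backward from the 2030 value. The excerpt does not state the base year or the starting market size. The seven-year window (2023 to 2030) is an assumption borrowed from the forecast period mentioned in the report highlights, so the implied starting value is illustrative only and is not a figure from the study.

```python
def project(value, cagr, years):
    """Grow a value at a constant compound annual growth rate."""
    return value * (1 + cagr) ** years


def implied_base(future_value, cagr, years):
    """Work backward from a future value to the starting value it implies."""
    return future_value / (1 + cagr) ** years


TARGET_2030 = 107.26  # USD billions, from the report summary
CAGR = 0.106          # 10.6% per year, from the report summary
YEARS = 7             # assumption: forecast window of 2023 to 2030

base = implied_base(TARGET_2030, CAGR, YEARS)
print(f"Implied starting market size: about USD {base:.1f} billion")
```

Under that assumption, the stated growth rate implies that the market roughly doubles over the forecast window.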

The need for agility, scalability, security, and dependability in managing massive volumes of data and supporting a wide range of applications is what motivates this digital transformation imperative. The driving force behind the IDC market's expansion is cloud computing. To supply cloud services like Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), cloud service providers (CSPs) are making significant investments in data center infrastructure. The cloud model eliminates the need for significant on-premises data center expenditures by offering organizations scalable, affordable solutions on demand.

IDCs play a crucial role in providing the physical infrastructure necessary to support these services, which is crucial for the market's growth as businesses adopt the cloud for its operational efficiency and flexibility. The IDC landscape is changing due to edge computing. This method reduces latency and enables real-time applications by bringing data processing closer to the source of the data. Edge computing is essential due to the growth of IoT devices, autonomous systems, and augmented reality. By building data centers in key areas, IDCs are advancing to enable edge computing and ensure low-latency data processing and delivery. The market is positioned for ongoing growth as the edge computing revolution gathers steam, meeting the special needs of distributed computing.

Request a free sample copy: https://www.grandviewresearch.com/industry-analysis/internet-data-centers-market

Internet Data Center Market Report Highlights

Based on services, the CDN segment is expected to witness the highest CAGR of over 14% from 2023 to 2030. The quick development of streaming video, online gaming, and e-commerce has considerably fuelled the segment’s expansion.

Based on deployment, the hybrid segment is projected to witness the highest CAGR of over 13% during the forecast period. The hybrid method enables businesses to selectively arrange tasks in the most suitable setting, maximizing resource usage and financial effectiveness.

Based on enterprise size, the SME segment is projected to witness the highest CAGR of around 12% over the forecast period. IDCs give SMEs the chance to utilize top-notch data center resources and cloud services without having to make big upfront infrastructure investments or build out substantial in-house IT staff.

Based on end-use, the e-commerce & retail segment is projected to witness the highest CAGR of over 13% from 2023 to 2030. IDCs provide the required network, processing, and storage resources for high-performance, secure, and dependable e-commerce operations.

Internet Data Center Market Segmentation

Grand View Research has segmented the global internet data center market based on services, deployment, enterprise size, end-use, and region:

Internet Data Center (IDC) Services Outlook (Revenue, USD Billion, 2018 - 2030)

Hosting

Colocation

CDN

Others

Internet Data Center (IDC) Deployment Outlook (Revenue, USD Billion, 2018 - 2030)

Public

Private

Hybrid

Internet Data Center (IDC) Enterprise Size Outlook (Revenue, USD Billion, 2018 - 2030)

Large Enterprise

SMEs

Internet Data Center (IDC) End-use Outlook (Revenue, USD Billion, 2018 - 2030)

CSP

Telecom

Government/Public Sector

BFSI

Media & Entertainment

E-commerce & Retail

Others

Internet Data Center (IDC) Regional Outlook (Revenue, USD Billion, 2018 - 2030)

North America

U.S.

Canada

Europe

UK

Germany

France

Italy

Spain

Netherlands

Poland

Asia Pacific

India

China

Japan

Australia

South Korea

Latin America

Brazil

Mexico

Argentina

Middle East & Africa (MEA)

UAE

Saudi Arabia

South Africa

List of Key Players in the Internet Data Center Market

Alibaba Cloud

Amazon Web Services, Inc.

AT&T Intellectual Property

Lumen Technologies (CenturyLink)

China Telecom Americas, Inc.

CoreSite

CyrusOne

Digital Realty

Equinix, Inc.

Google Cloud

IBM

Microsoft

NTT Communications Corporation

Oracle

Tencent Cloud

Browse Full Report: https://www.grandviewresearch.com/industry-analysis/internet-data-centers-market

#Internet Data Center Market#Internet Data Center Market Size#Internet Data Center Market Share#Internet Data Center Market Trends#Internet Data Center Market Growth

0 notes

Text

Role of DNS in Internet Communication - Technology Org

New Post has been published on https://thedigitalinsider.com/role-of-dns-in-internet-communication-technology-org/

The Domain Name System (DNS) is a hierarchical, decentralized naming system for computers, services, and other internet-connected resources. It translates a domain name into the IP address that devices use to communicate. When a user enters a domain into their browser, the browser queries a DNS resolver, typically one provided by their ISP. The resolver then returns the IP address for that domain name, which computers understand and use. In other words, a user types a name into a browser, and the machine treats that as a request to find a webpage. This process of translation and lookup is called DNS resolution.

Now that we understand what DNS is and how it works, we will talk about the role of DNS in Internet communication.

Data center – illustrative photo. Image credit: kewl via Pixabay, Pixabay license

Visit Websites Quickly

Domain names let people reach websites much more easily than IP addresses do. Instead of entering “69.63.176.13” into a web browser, a user can just enter “facebook.com.” The DNS server converts the domain name into an IP address, which enables the user to reach the website.

Enable Internet connectivity

Internet communication relies heavily on DNS and IP. Without DNS, it would be difficult to remember the IP addresses of all the websites we visit daily. DNS provides an easily recognizable domain name that can be used to visit a website. IP, in contrast, is responsible for addressing and routing data packets between internet-connected devices. It forwards packets toward their destination, while protection against loss or corruption along the route is handled by higher-layer protocols such as TCP. To find your public IP address, a service such as What Is My IP can show your public IP address, IP location, and ISP.

Provides Internet Safety

In internet communications, DNS and IP security play an important role in ensuring internet safety. DNS attacks can route visitors to malicious websites, while IP spoofing allows hackers to impersonate devices and gain unauthorized network access. DNSSEC (DNS Security Extensions) and IPsec (IP Security) are two technologies that protect DNS and IP respectively and thus secure communications.

Monitor Network Traffic

DNS and IP are critical components for controlling internet network traffic. DNS servers cache commonly requested domain names to reduce the load on the DNS system and improve response times. IP networks, on the other hand, rely on routing protocols, including OSPF and BGP, to direct network traffic so that data packets are delivered efficiently between internet devices.
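
The caching behaviour described above can be illustrated with a small sketch. It is a toy model under stated assumptions, not how any particular DNS server is implemented: the `CachingResolver` class, the fixed `ttl`, and the `RECORDS` stand-in for an upstream server are all hypothetical. Real resolvers use the per-record TTL set by the authoritative server.

```python
import time
from typing import Callable, Dict, Tuple


class CachingResolver:
    """Toy DNS cache: remembers answers until their TTL expires."""

    def __init__(self, upstream: Callable[[str], str], ttl: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._upstream = upstream  # hypothetical lookup function
        self._ttl = ttl            # assumed fixed TTL, in seconds
        self._clock = clock
        self._cache: Dict[str, Tuple[str, float]] = {}
        self.upstream_calls = 0

    def resolve(self, name: str) -> str:
        key = name.lower().rstrip(".")  # domain names are case-insensitive
        now = self._clock()
        hit = self._cache.get(key)
        if hit is not None and hit[1] > now:
            return hit[0]               # served from cache, no upstream load
        self.upstream_calls += 1
        address = self._upstream(key)
        self._cache[key] = (address, now + self._ttl)
        return address


# Stand-in for a real upstream server, using the address quoted earlier.
RECORDS = {"facebook.com": "69.63.176.13"}
resolver = CachingResolver(RECORDS.__getitem__)
print(resolver.resolve("facebook.com"), resolver.resolve("Facebook.com"))
print("upstream lookups:", resolver.upstream_calls)
```

The second lookup is answered from the cache, which is the load reduction and faster response the paragraph refers to.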

#browser#cache#command#communication#communications#computers#connected devices#connectivity#data#Data Center#devices#DNS#domain name#domain names#extensions#Facebook#hackers#hand#how#Internet#it#monitor#naming#network#Network Access#Other#Other posts#photo#Play#process

0 notes

Text

The future of digital sovereignty casts a complex shadow over an interconnected digital world, signaling a crucial shift towards national autonomy over digital assets, infrastructure, and governance. As this trend gathers momentum, it will disrupt traditional business models and realign the global digital economy, calling for strategic foresight and agility across all sectors.

Why is this Important?

The implications of this trend are vast. For businesses, the burgeoning digital sovereignty may mean navigating a mosaic of national laws and regulations, potentially leading to higher costs and decreased agility. It could necessitate major shifts in data storage and processing, as seen in the need for localized data centers to comply with privacy laws. Additionally, tech companies may need to increasingly customize their offerings to meet unique national standards and preferences.

On a societal level, digital sovereignty can reshape the flow of information, potentially erecting digital borders that alter the internet's open nature. Users may witness a divergence in the digital experience from one country to another, influencing cultural exchange, communication, and the global marketplace of ideas.

Questions

How will businesses adapt to a landscape where digital policies and infrastructure can greatly differ from one nation to another?

What new alliances and partnerships might emerge as countries seek to balance digital sovereignty with global interconnectedness?

Could the pursuit of digital sovereignty inadvertently escalate into a form of digital nationalism, impacting global internet unity?

How might consumer data privacy and protection evolve under these new national digital regimes?

#digital sovereignty#digital economy#data storage#data processing#data center#digital nationalism#global internet unity#data privacy#data protection#privacy laws

0 notes

Text

Just a moment, honey! 👰 🤵♂️

#IT #datacenter #tech #repair #technology #cloud #cybersecurity #business #data #coding #telecom #internet #wedding #bride #love #weddingday #richland #kennewick #pasco #tricitieswa

#kennewick#richland#pasco#tricities#cline#shopsmall#washington#cybersecurity#internet#gaming#wedding#gamer#data#data center#repair#fix#computer#tech#technology

0 notes

Text

Cloudflare Battling Through Multiple Service Outages

In recent days, Cloudflare, a major web infrastructure and website security company, has been wrestling with several service outages. On October 30, an update rollout to its globally distributed key-value store, Workers KV, went awry, plunging all Cloudflare services into a 37-minute-long outage. Just a few days later, on November 2, 2023, another outage occurred, impacting many of…

View On WordPress

0 notes

Text

Edge Data Center Market- Optimizing IT Infrastructure for Business

The edge data centers facilitate quick delivery of data services with minimal latency. Edge caching is adopted by various industries dominated by IT and Telecommunication. Edge data centers provide their customers with high security and greater data control. Time-sensitive data is processed faster with edge data centers. Integration of advanced technologies such as AI and 5G is expected to create a lucrative growth opportunity for companies.

Apart from quick data delivery, low latency is another attractive feature of edge caching. Telecommunication companies use edge caching to get better connectivity and proximity. IoT devices are bound to create huge volumes of data that need processing, and a centralized server won’t be a feasible option here. Edge data centers could increase processing speed. Medical equipment may rely on edge data centers for robotic surgeries that require extremely low latency.

Autonomous vehicles need to share data among vehicles in the same or other networks. A network of edge data centers could collect data and assist in emergency response. With the onset of Industry 4.0, companies will focus on building smart factories. Having an edge data center will help companies plan their machine maintenance and quality management. Owing to a smaller footprint, lower latency, and faster processing, edge data centers are anticipated to remain a key part of the IT infrastructure of companies.

0 notes

Note

Saw a tweet that said something around:

"cannot emphasize enough how horrid chatgpt is, y'all. it's depleting our global power & water supply, stopping us from thinking or writing critically, plagiarizing human artists. today's students are worried they won't have jobs because of AI tools. this isn't a world we deserve"

I've seen some of your AI posts and they seem nuanced, but how would you respond to this? Cause it seems fairly on-point and like the crux of most worries. Sorry if this is a troublesome ask, just trying to learn so any input would be appreciated.

i would simply respond that almost none of that is true.

'depleting the global power and water supply'

something i've seen making the rounds on tumblr is that chatgpt queries use 3 watt-hours per query. wow, that sounds like a lot, especially with all the articles emphasizing that this is ten times as much as google search. let's check some other very common power uses:

running a microwave for ten minutes is 133 watt-hours

gaming on your ps5 for an hour is 200 watt-hours

watching an hour of netflix is 800 watt-hours

and those are just domestic consumer electricity uses!

a single streetlight's typical operation is 1.2 kilowatt-hours a day (or 1200 watt-hours)

a digital billboard being on for an hour is 4.7 kilowatt-hours (or 4700 watt-hours)
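
as a rough sanity check, the comparison above can be put in 'queries' instead of watt-hours. this is just a sketch: the figures are the ones quoted in this post (not independently verified), and the names `QUERY_WH`, `USES_WH`, and `queries_equivalent` are made up for illustration.

```python
QUERY_WH = 3.0  # per-query figure quoted in the post

# Watt-hour figures exactly as quoted in the post (not independently verified).
USES_WH = {
    "microwave, 10 minutes": 133.0,
    "PS5 gaming, 1 hour": 200.0,
    "Netflix, 1 hour": 800.0,
    "streetlight, 1 day": 1200.0,
    "digital billboard, 1 hour": 4700.0,
}


def queries_equivalent(watt_hours: float, per_query: float = QUERY_WH) -> float:
    """How many queries would use the same energy as `watt_hours`."""
    return watt_hours / per_query


for label, wh in USES_WH.items():
    print(f"{label}: about {queries_equivalent(wh):.0f} queries")
```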

i think i've proved my point, so let's move on to the bigger picture: there are estimates that AI is going to cause datacenters to double or even triple in power consumption in the next year or two! damn that sounds scary. hey, how significant as a percentage of global power consumption are datacenters?

1-1.5%.

ah. well. nevertheless!

what about that water? yeah, datacenters use a lot of water for cooling. 1.7 billion gallons (microsoft's usage figure for 2021) is a lot of water! of course, when you look at those huge and scary numbers, there's some important context missing. it's not like that water is shipped to venus: some of it is evaporated and the rest is generally recycled in cooling towers. also, not all of the water used is potable--some datacenters cool themselves with filtered wastewater.

most importantly, this number is for all data centers. there's no good way to separate the 'AI' out for that, except to make educated guesses based on power consumption and percentage changes. that water figure isn't all attributable to AI, plenty of it is necessary to simply run regular web servers.

but sure, just taking that number in isolation, i think we can all broadly agree that it's bad that, for example, people are being asked to reduce their household water usage while google waltzes in and takes billions of gallons from those same public reservoirs.

but again, let's put this in perspective: in 2017, coca cola used 289 billion liters of water--that's 76 billion gallons! bayer (formerly monsanto) in 2018 used 124 million cubic meters--that's 32 billion gallons!
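
if you want to check the conversions yourself, here's a minimal sketch. it assumes US liquid gallons (3.785411784 liters each); the function names are just illustrative.

```python
LITERS_PER_US_GALLON = 3.785411784  # definition of the US liquid gallon
LITERS_PER_CUBIC_METER = 1000.0


def liters_to_gallons(liters: float) -> float:
    return liters / LITERS_PER_US_GALLON


def cubic_meters_to_gallons(cubic_meters: float) -> float:
    return liters_to_gallons(cubic_meters * LITERS_PER_CUBIC_METER)


coca_cola = liters_to_gallons(289e9)     # 289 billion liters (2017 figure)
bayer = cubic_meters_to_gallons(124e6)   # 124 million cubic meters (2018 figure)

print(f"coca cola: {coca_cola / 1e9:.1f} billion gallons")
print(f"bayer: {bayer / 1e9:.1f} billion gallons")
```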

so, like. yeah, AI uses electricity, and water, to do a bunch of stuff that is basically silly and frivolous, and that is broadly speaking, as someone who likes living on a planet that is less than 30% on fire, bad. but if you look at the overall numbers involved it is a minuscule drop in the ocean! it is a functional irrelevance! it is not in any way 'depleting' anything!

'stopping us from thinking or writing critically'

this is the same old reactionary canard we hear over and over again in different forms. when was this mythic golden age when everyone was thinking and writing critically? surely we have all heard these same complaints about tiktok, about phones, about the internet itself? if we had been around a few hundred years earlier, we could have heard that "The free access which many young people have to romances, novels, and plays has poisoned the mind and corrupted the morals of many a promising youth."

it is a reactionary narrative of societal degeneration with no basis in anything. yes, it is very funny that lawyers have lost the bar for trusting chatgpt to cite cases for them. but if you think that chatgpt somehow prevented them from thinking critically about its output, you're accusing the tail of wagging the dog.

nobody who says shit like "oh wow chatgpt can write every novel and movie now. you can just ask chatgpt to give you opinions and ideas and then use them its so great" was, like, sitting in the symposium debating the nature of the sublime before chatgpt released. there is no 'decay', there is no 'decline'. you should be suspicious of those narratives wherever you see them, especially if you are inclined to agree!

plagiarizing human artists

nah. i've been over this ad infinitum--nothing 'AI art' does could be considered plagiarism without a definition so preposterously expansive that it would curtail huge swathes of human creative expression.

AI art models do not contain or reproduce any images. the result of them being trained on images is a very very complex statistical model that contains a lot of large-scale statistical data about all those images put together (and no data about any of those individual images).

to draw a very tortured comparison, imagine you had a great idea for how to make the next Great American Painting. you loaded up a big file of every norman rockwell painting, and you made a gigantic excel spreadsheet. in this spreadsheet you noticed how regularly elements recurred: in each cell you would have something like "naturalistic lighting" or "sexually unawakened farmers" and the % of times it appears in his paintings. from this, you then drew links between these cells--what % of paintings containing sexually unawakened farmers also contained naturalistic lighting? what % also contained a white guy?

then, if you told someone else with moderately competent skill at painting to use your excel spreadsheet to generate a Great American Painting, you would likely end up with something that is recognizably similar to a Norman Rockwell painting: but any charge of 'plagiarism' would be absolutely fucking absurd!

this is a gross oversimplification, of course, but it is much closer to how AI art works than the 'collage machine' description most people who are all het up about plagiarism talk about--and if it were a collage machine, it would still not be plagiarising because collages aren't plagiarism.
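
for the curious, the spreadsheet in that comparison could be sketched like this. the tags and paintings are invented toy data, the function names are hypothetical, and this illustrates only the analogy (frequencies and co-occurrence percentages), not how an actual image model is implemented.

```python
from collections import Counter
from typing import Dict, List, Set

# Made-up tags for four imaginary paintings (toy data, not real analysis).
paintings: List[Set[str]] = [
    {"naturalistic lighting", "farmer", "dog"},
    {"naturalistic lighting", "farmer"},
    {"naturalistic lighting", "diner"},
    {"farmer", "dog"},
]


def frequency_table(items: List[Set[str]]) -> Dict[str, float]:
    """Share of paintings in which each element appears."""
    counts = Counter(tag for tags in items for tag in tags)
    return {tag: n / len(items) for tag, n in counts.items()}


def conditional(items: List[Set[str]], given: str, target: str) -> float:
    """Share of paintings containing `given` that also contain `target`."""
    with_given = [tags for tags in items if given in tags]
    if not with_given:
        return 0.0
    return sum(target in tags for tags in with_given) / len(with_given)


print(frequency_table(paintings))
print(conditional(paintings, "farmer", "naturalistic lighting"))
```

note that the table holds only aggregate percentages: no individual painting can be read back out of it.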

(for a better and smarter explanation of the process from someone who actually understands it check out this great twitter thread by @reachartwork)

today's students are worried they won't have jobs because of AI tools

i mean, this is true! AI tools are definitely going to destroy livelihoods. they will increase productivity for skilled writers and artists who learn to use them, which will immiserate those jobs--they will outright replace a lot of artists and writers for whom quality is not actually important to the work they do (this has already essentially happened to the SEO slop website industry and is in the process of happening to stock images).

jobs in, for example, product support are being cut for chatgpt. and that sucks for everyone involved. but this isn't some unique evil of chatgpt or machine learning, this is just the effect that technological innovation has on industries under capitalism!

there are plenty of innovations that wiped out other job sectors overnight. the camera was disastrous for portrait artists. the spinning jenny was famously disastrous for the hand-textile workers from which the luddites drew their ranks. retail work was hit hard by self-checkout machines. this is the shape of every single innovation that can increase productivity, as marx explains in wage labour and capital:

“The greater division of labour enables one labourer to accomplish the work of five, 10, or 20 labourers; it therefore increases competition among the labourers fivefold, tenfold, or twentyfold. The labourers compete not only by selling themselves one cheaper than the other, but also by one doing the work of five, 10, or 20; and they are forced to compete in this manner by the division of labour, which is introduced and steadily improved by capital. Furthermore, to the same degree in which the division of labour increases, is the labour simplified.

The special skill of the labourer becomes worthless. He becomes transformed into a simple monotonous force of production, with neither physical nor mental elasticity. His work becomes accessible to all; therefore competitors press upon him from all sides. Moreover, it must be remembered that the more simple, the more easily learned the work is, so much the less is its cost to production, the expense of its acquisition, and so much the lower must the wages sink – for, like the price of any other commodity, they are determined by the cost of production. Therefore, in the same manner in which labour becomes more unsatisfactory, more repulsive, does competition increase and wages decrease”

this is the process by which every technological advancement is used to increase the domination of the owning class over the working class. not due to some inherent flaw or malice of the technology itself, but due to the material relations of production.

so again the overarching point is that none of this is uniquely symptomatic of AI art or whatever the most recent technological innovation is. it is symptomatic of capitalism. we remember the luddites primarily for failing and not accomplishing anything of meaning.

if you think it's bad that this new technology is being used with no consideration for the planet, for social good, for the flourishing of human beings, then i agree with you! but then your problem shouldn't be with the technology--it should be with the economic system under which its use is controlled and dictated by the bourgeoisie.

3K notes

Text

Disaster Recovery Solutions: Safeguarding Business Continuity in an Uncertain World

In an era marked by unprecedented digital reliance, disaster recovery solutions have risen to prominence as an essential safeguard for business continuity. This article delves into the critical realm of disaster recovery solutions, exploring their significance, the technology that underpins them, and the profound benefits they offer in ensuring uninterrupted operations, even in the face of adversity.

Understanding Disaster Recovery Solutions

Disaster recovery solutions are comprehensive strategies and technologies designed to protect an organization's critical data, applications, and IT infrastructure from disruptions caused by various calamities. These disruptions can encompass natural disasters like hurricanes and earthquakes, technological failures, cyberattacks, or even human errors. The primary goal of disaster recovery solutions is to enable a swift and seamless restoration of essential business functions, reducing downtime and its associated costs.

Why Disaster Recovery Solutions Matter

Mitigating Downtime: Downtime can be crippling, leading to lost revenue, productivity, and customer trust. Disaster recovery solutions aim to minimize downtime by swiftly restoring systems and data.

Preserving Data Integrity: In the digital age, data is the lifeblood of organizations. Disaster recovery solutions ensure the integrity of critical data, preventing loss or corruption.

Maintaining Business Reputation: Being able to continue operations in the wake of a disaster or disruption demonstrates resilience and commitment to clients and stakeholders, enhancing an organization's reputation.

Meeting Compliance Requirements: Many industries and regulatory bodies mandate the implementation of disaster recovery plans to protect sensitive data and maintain business continuity.

Key Elements of Disaster Recovery Solutions

Data Backup and Replication: Regular and automated backups of critical data, coupled with real-time data replication, ensure data availability even in the event of hardware failures or data corruption.

Redundant Infrastructure: Utilizing redundant servers, storage, and network infrastructure reduces the risk of single points of failure.

Disaster Recovery Testing: Regular testing and simulation of disaster scenarios help identify vulnerabilities and refine recovery processes.

Remote Data Centers: Storing data and applications in geographically distant data centers provides additional protection against localized disasters.

Cloud-Based Solutions: Cloud platforms offer scalable and cost-effective disaster recovery solutions, enabling quick recovery from virtually anywhere.

Benefits of Disaster Recovery Solutions

Minimized Downtime: Swift recovery ensures minimal disruption to business operations, reducing financial losses.

Data Resilience: Protection against data loss preserves critical information and intellectual property.

Improved Security: Disaster recovery solutions often include robust security measures, safeguarding against cyberattacks.

Regulatory Compliance: Meeting compliance requirements helps avoid potential legal and financial penalties.

Business Continuity: Demonstrating resilience reassures customers, partners, and employees, maintaining trust and business relationships.

Conclusion

In an unpredictable world where business continuity is non-negotiable, disaster recovery solutions provide the safety net organizations need to weather disruptions and emerge stronger. By embracing these solutions, businesses can not only protect their vital assets but also demonstrate unwavering commitment to their stakeholders. In an age of digital transformation, disaster recovery solutions are the linchpin of resilience, ensuring that, no matter what comes their way, businesses can keep moving forward.

#Secure Cloud Hosting#Data Center Services#Cloud Migration#cloud network security#internet solutions#disaster recovery solution#Managed cloud services#Cloud migration services

1 note

Text

Understanding Data Central Virtual

Data Central Virtual, or Central Virtual Data, is an innovative concept in information technology that allows organizations to manage, store, and access their data efficiently and in an integrated way. In this article, we will explain in detail what Data Central Virtual is, how it works, and the benefits it provides.

Understanding Data Central…

View On WordPress

0 notes

Text

How Temperature Monitoring Systems Can Save Data Centers from Overheating and Potential Disasters.

In the fast-paced world of technology, data centers play a critical role in storing and processing vast amounts of information. However, the very systems that power these centers can also be their downfall. Overheating is a common and potentially catastrophic issue that data centers face, leading to system failures, data loss, and even costly downtime. That's where temperature monitoring systems come into play. By constantly tracking and analyzing temperature levels, these innovative solutions can prevent overheating and avert potential disasters. In this article, we will explore the importance of temperature monitoring systems in data centers, their key features, and how they can save businesses from the devastating consequences of overheating. Whether you're a data center manager or a business owner relying on the smooth operation of your IT infrastructure, understanding the role of temperature monitoring systems is crucial in ensuring the safety and efficiency of your operations. So let's dive in and discover the power of these systems in safeguarding data centers from overheating and potential disasters.

In today's digital age, data centers are the backbone of our technological infrastructure, handling vast amounts of data that power our daily lives. However, data centers are not immune to challenges, one of the most critical being the risk of overheating. The excessive heat generated by servers and other equipment can lead to system failures, downtime, and even catastrophic disasters. In this blog post, we will explore how easyMonitor temperature monitoring systems play a vital role in safeguarding data centers from overheating and potential disasters.

Understanding the Risks of Overheating:

Data centers rely on an intricate network of servers, storage systems, and networking equipment that generate substantial heat during operation. If not properly managed, this heat can accumulate, causing hardware failures, reducing performance, and increasing the risk of electrical fires. Overheating can also impact the lifespan of equipment and result in costly replacements.

The Role of Temperature Monitoring Systems:

Temperature monitoring systems act as the first line of defense against overheating in data centers. These systems utilize a network of sensors strategically placed throughout the facility to continuously monitor the temperature in real-time. By collecting accurate and precise temperature data, these systems can provide valuable insights into the thermal conditions within the data center.

Early Detection and Alerts:

Temperature and humidity monitoring systems enable early detection of any potential temperature anomalies or deviations from the optimal operating range. By setting thresholds and triggers, the system can promptly alert data center operators and administrators about any temperature fluctuations. These timely alerts allow for swift intervention to prevent overheating before it escalates into a critical situation.
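
The threshold-and-alert idea described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the easyMonitor implementation: the `Reading` and `Thresholds` types, the sensor names, and the 27 °C and 32 °C limits are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Reading:
    sensor_id: str   # hypothetical sensor name
    celsius: float


@dataclass(frozen=True)
class Thresholds:
    warning: float = 27.0    # assumed limit, not a vendor default
    critical: float = 32.0   # assumed limit, not a vendor default


def classify(reading: Reading, limits: Thresholds) -> Optional[str]:
    """Return an alert level, or None if the reading is in range."""
    if reading.celsius >= limits.critical:
        return "CRITICAL"
    if reading.celsius >= limits.warning:
        return "WARNING"
    return None


def alerts(readings: Iterable[Reading],
           limits: Thresholds = Thresholds()) -> List[str]:
    """Build one alert message per out-of-range reading."""
    messages = []
    for reading in readings:
        level = classify(reading, limits)
        if level is not None:
            messages.append(
                f"{level}: {reading.sensor_id} at {reading.celsius:.1f} C")
    return messages


sample = [Reading("rack-a1", 24.5), Reading("rack-b4", 28.1),
          Reading("rack-c2", 33.0)]
for message in alerts(sample):
    print(message)
```

In practice the messages would be routed to operators (email, SMS, dashboard) rather than printed.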

Preventive Maintenance and Optimization:

Data center temperature monitoring systems not only help identify immediate issues but also assist in proactive maintenance and optimization. By analyzing historical temperature data, administrators can identify trends, patterns, and potential hotspots within the facility. This insight enables proactive measures such as equipment reconfiguration, airflow adjustments, and the implementation of cooling solutions to maintain optimal temperatures and prevent future overheating risks.
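
As one possible way to spot hotspots in historical data, the sketch below flags sensors whose average temperature sits well above the facility-wide average. The `find_hotspots` function, the 3 °C margin, and the sample readings are assumptions made for illustration; a real deployment would likely use longer histories and more robust statistics.

```python
from statistics import mean
from typing import Dict, List


def find_hotspots(history: Dict[str, List[float]],
                  margin: float = 3.0) -> List[str]:
    """Sensors whose mean exceeds the facility-wide mean by `margin` degrees C."""
    averages = {sensor: mean(values)
                for sensor, values in history.items() if values}
    if not averages:
        return []
    facility_average = mean(list(averages.values()))
    return sorted(sensor for sensor, avg in averages.items()
                  if avg - facility_average >= margin)


# Made-up historical readings per sensor, in degrees Celsius.
history = {
    "rack-a1": [22.0, 23.0, 22.5],
    "rack-b4": [24.0, 24.5, 23.5],
    "rack-c2": [30.0, 31.0, 32.0],
}
print(find_hotspots(history))
```

Flagged locations are candidates for the airflow adjustments or equipment reconfiguration mentioned above.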

Ensuring Business Continuity:

By implementing temperature and humidity monitoring systems, data center operators can mitigate the risk of catastrophic failures and ensure uninterrupted business operations. By proactively managing temperature levels, data centers can reduce the likelihood of unexpected downtime, thereby maximizing uptime, preserving data integrity, and maintaining customer satisfaction.

Conclusion:

Temperature monitoring systems are indispensable tools for maintaining the health and efficiency of data centers. They provide real-time monitoring, early detection, and proactive measures to prevent overheating and potential disasters. By investing in these systems, data center operators can significantly reduce the risk of hardware failures, optimize energy usage, and ensure uninterrupted operations. With the ever-increasing demand for reliable and efficient data storage and processing, easyMonitor temperature and humidity data logger systems are a crucial component for protecting the integrity and reliability of our digital infrastructure.

#data centers#temperature monitoring system#inwizards software technology#temperature monitoring#internet of things#humidity monitoring system#humiditymonitoring#humiditymonitoringsystem#iot#easymonitorhumiditymonitoringsystem#easymonitor#easymonitortemperaturemonitoringsystem#datalogger#datalogger system#monitoring systems

1 note
