#KERNEL SPACE PROGRAM???


Text

Day 4 of posting old art so I actually post art on Tumblr! I don’t remember who this is or what game he’s from, but I remember this is the face he made when his spaceship was about to crash.


Text

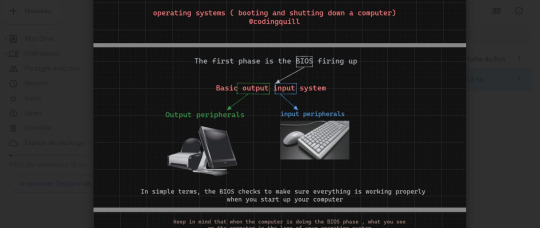

What happens when you start your computer? (Booting a computer)

We studied this in the lecture today, and it was quite interesting. What makes something a hundred times simpler than it is? Creating a story about it. That's why I made this super fun dialog that will help you understand it all.

I've set up a drive to compile everything I create related to the Linux operating system. Feel free to explore it for more details on the topics discussed in the conversation below. Check it out here.

Have a fun read, my dear coders!

In the digital expanse of the computer, Pixel, the inquisitive parasite, is on a microventure with Binary, a wise digital guide. Together, they delve into the electronic wonders, uncovering the secrets hidden in the machine's core.

Pixel: (zooming around) Hey there! Pixel here, on a mission to demystify the tech wonders. There's a creature named Binary who knows all the ins and outs. Let's find them!

Binary: (appearing with a flicker of pixels) Pixel, greetings! Ready to explore what happens inside here?

Pixel: Absolutely! I want the full scoop. How does this thing come alive when the human outside clicks on "start"?

Binary: (with a digital chuckle) Ah, the magic of user interaction. Follow me, and I'll reveal the secrets.

(They traverse through the circuits, arriving at a glowing portal.)

Pixel: (inquiring) What's the deal with this glowing door?

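Binary: (hovering) Pixel, behold the BIOS, our machine's awakening. When the human clicks "start" (really, presses the power button), the processor wakes up and runs the BIOS first. The BIOS kicks off the Power-On Self-Test (POST), checking if our components are ready for action.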

(They proceed to observe a tiny program in action.)

Pixel: (curious) Look at that little messenger running around. What's it up to?

Binary: (explaining) That, Pixel, is the bootloader. It plays courier between the BIOS and the operating system. It loads the operating system into memory and hands over control, bringing it to life.

Pixel: (excitedly buzzing) Okay! How does the computer know where to find the operating system?

Binary: Ah, Pixel, that's a tale that takes us deep into the heart of the hard disk. Follow me.

(They weave through the digital pathways, arriving at the hard disk.)

Pixel: (curious) Huh? Tell me everything!

Binary: Within this hard disk lies the treasure chest of the operating system. Let's start with the Master Boot Record (MBR), the very first sector of the disk.

(They approach the MBR, Binary pointing to its intricate code.)

Binary: The MBR is like the keeper of the keys. It holds crucial information about our partitions and how to find the operating system.

Pixel: (wide-eyed) What's inside?

Binary: (pointing) Take a look. This is the primary boot loader, the first spark that ignites the OS journey.

(They travel into the MBR, where lines of code reveal the primary boot loader.)

Pixel: (in awe) This tiny thing sets the whole show in motion?

Binary: (explaining) Indeed. It's too small to do the whole job itself, so it loads a bigger, second-stage boot loader. That one knows how to find the kernel of the operating system, which is the core of its existence.

(They proceed to the first partition, where the Linux kernel resides.)

Pixel: (peering into the files) This is where the OS lives, right?

Binary: (nodding) Correct, Pixel. Here lies the Linux kernel. Notice those configuration files? They're like the OS's guidebook, all written in text.

(They venture to another partition, finding it empty.)

Pixel: (confused) What's the story with this empty space?

Binary: (smirking) Sometimes, Pixel, there are barren lands on the hard disk, waiting for a purpose. It's a canvas yet to be painted.

Pixel: (reflecting) Wow! It's like a whole universe in here. I had no idea the operating system had its roots in the hard disk.
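On classic BIOS systems, the MBR that Binary and Pixel explored has a fixed layout. It is one 512-byte sector: roughly the first 446 bytes hold the primary boot loader code, then comes a partition table with four 16-byte entries, and the sector ends with the two-byte signature 0x55 0xAA. Below is a minimal Python sketch of parsing such a sector. It assumes a synthetic in-memory sector rather than reading a real disk, and the helper names are illustrative.

```python
import struct

SECTOR_SIZE = 512
PARTITION_TABLE_OFFSET = 446   # bytes 0-445 hold the primary boot loader code
PARTITION_ENTRY_SIZE = 16
BOOT_SIGNATURE = b"\x55\xaa"   # last two bytes of a valid MBR

# One partition entry: status byte, 3 CHS bytes (skipped), type byte,
# 3 CHS bytes (skipped), first LBA sector, sector count (little-endian).
ENTRY_FORMAT = "<B3xB3xII"

def parse_mbr(sector: bytes):
    """Split a 512-byte MBR into boot code and its list of used partitions."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("an MBR is exactly one 512-byte sector")
    if sector[510:512] != BOOT_SIGNATURE:
        raise ValueError("missing 0x55AA boot signature")
    boot_code = sector[:PARTITION_TABLE_OFFSET]
    partitions = []
    for slot in range(4):  # the classic table has exactly four slots
        start = PARTITION_TABLE_OFFSET + slot * PARTITION_ENTRY_SIZE
        status, ptype, first_lba, sectors = struct.unpack(
            ENTRY_FORMAT, sector[start:start + PARTITION_ENTRY_SIZE]
        )
        if ptype == 0:
            continue  # type 0 marks an unused slot
        partitions.append({
            "slot": slot,
            "bootable": status == 0x80,
            "type": hex(ptype),
            "first_lba": first_lba,
            "sectors": sectors,
        })
    return boot_code, partitions

# Usage: a synthetic sector with one bootable Linux (type 0x83) partition.
demo = bytearray(SECTOR_SIZE)
demo[446:462] = struct.pack(ENTRY_FORMAT, 0x80, 0x83, 2048, 1_000_000)
demo[510:512] = BOOT_SIGNATURE
code, parts = parse_mbr(bytes(demo))
print(parts)  # [{'slot': 0, 'bootable': True, 'type': '0x83', 'first_lba': 2048, 'sectors': 1000000}]
```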

(They continue their microventure, navigating the binary landscapes of the computer's inner world. Pixel gazes at the screen where choices appear.)

Pixel: What's happening here?

Binary: (revealing) This is the boot loader's menu, where the user picks the operating system. The computer patiently waits for a decision. If none comes, it follows the default path.
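Binary describes a wait-then-default behavior. Boot loaders such as GRUB expose it as settings (`GRUB_TIMEOUT` and `GRUB_DEFAULT` in `/etc/default/grub`). The Python sketch below only illustrates the logic. It assumes key presses arrive on a `queue.Queue` filled by some hypothetical keyboard handler, and `select_boot_entry` is an illustrative name, not a real boot loader API.

```python
import queue

def select_boot_entry(entries, default_index, key_presses, timeout_s):
    """Return the entry the user picks, or the default if nothing valid arrives in time.

    key_presses is a queue.Queue that a (hypothetical) keyboard handler fills
    with the index of the chosen menu entry.
    """
    try:
        choice = key_presses.get(timeout=timeout_s)  # wait, but not forever
    except queue.Empty:
        return entries[default_index]  # no decision: follow the default path
    if isinstance(choice, int) and 0 <= choice < len(entries):
        return entries[choice]
    return entries[default_index]  # invalid input also falls back to the default

menu = ["Linux", "Windows"]
keys = queue.Queue()
print(select_boot_entry(menu, 0, keys, timeout_s=0.1))  # Linux (timed out)
keys.put(1)
print(select_boot_entry(menu, 0, keys, timeout_s=0.1))  # Windows
```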

(They delve deeper into the digital code, where applications start blooming.)

Pixel: (amazed) It's like a digital garden of applications! What's the enchantment behind this?

Binary: (sharing) Here, Pixel, is where the applications sprout to life. The operating system nurtures them, and they blossom into the programs you see on the screen.

Pixel: (excited) But how does the machine know when the human clicks "start"?

Binary: The "start" is really the power button. Pressing it wakes the power supply and resets the processor, and the very first code the processor runs is the BIOS. The BIOS awakens, and we embark on this mesmerizing journey.

#linux#arch linux#ubuntu#debian#code#codeblr#css#html#javascript#java development company#python#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#tech#html css#operatingsystem#windows 11


Text

Your robotgirl loves her kernel space, but have you considered messing with it? For example, try rewriting her shared libraries to slowly trickle higher values to her horniness, so any time any program makes a system call, be it I/O or even printing, it pushes her further to the edge. Anything that needs to make a bunch of GPU driver calls is sure to make her cum, and then it is just a matter of finding the software usage patterns that get her there the fastest.


Note

could you explain for the "it makes the game go faster" idiots like myself what a GPU actually is? what's up with those multi thousand dollar "workstation" ones?

ya, ya. i will try and keep this one as approachable as possible

starting from raw reality. so, you have probably dealt with a graphics card before, right: you stick it in, it connects to the motherboard, its ass end sticks out of the case & has display connectors, your vga/hdmi/displayport/whatever. clearly, it is providing pixel information to your monitor. before trying to figure out what's going on there, let's see what that entails. these are not really simple devices; the best way i can think of to explain them starts with "why can't this be handled by a normal cpu"

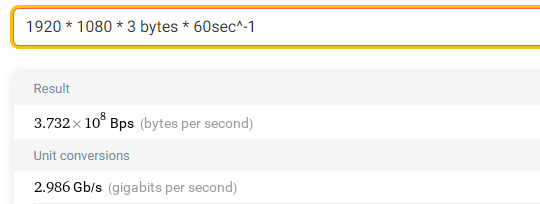

a bog standard 1080p monitor has a resolution of 1920x1080 pixels, each made up of 3 bytes (one each for red, green, & blue), which are updated 60 times a second. that works out to ~3 gigabits (about 373 megabytes) a second.

~3 gigabits a second is sort of a lot. on the higher end, a 4k monitor (3840x2160) updating 144 times a second needs ~29 gigabits (about 3.6 gigabytes) a second.

~29 gigabits a second is definitely a lot. so this would be a good "first clue" that there is some specialized hardware, unrelated to the cpu, handling that throughput: the gpu. this would make sense, since your cpu is wholly unfit for dealing with this. if you've ever tried to play some computer game with fancy 3D graphics without any kind of video acceleration (i.e. without any kind of gpu [1]), you'd quickly see this. it'd run pretty slowly and bog down the rest of your system, the same way a constantly-running program copying around 0.4-3.6GB/s in ram would
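The raw pixel bandwidth is plain multiplication: width × height × bytes per pixel × refreshes per second. This sketch assumes 3 bytes (24-bit RGB) per pixel. It ignores blanking intervals and link-encoding overhead, so actual cable rates run somewhat higher. Note the factor of 8 between gigabytes and gigabits.

```python
def raw_pixel_bandwidth(width, height, refresh_hz, bytes_per_pixel=3):
    """Bytes per second needed to send every pixel of every frame.

    Assumes 24-bit RGB and no blanking/encoding overhead (a simplification).
    """
    return width * height * bytes_per_pixel * refresh_hz

for label, (w, h, hz) in {
    "1080p @ 60 Hz": (1920, 1080, 60),
    "4K @ 144 Hz": (3840, 2160, 144),
}.items():
    bps = raw_pixel_bandwidth(w, h, hz)
    print(f"{label}: {bps / 1e9:.2f} GB/s = {bps * 8 / 1e9:.1f} Gbit/s")
# 1080p @ 60 Hz: 0.37 GB/s = 3.0 Gbit/s
# 4K @ 144 Hz: 3.58 GB/s = 28.7 Gbit/s
```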

it's worth remembering that displays operate isochronously -- they need to be fed pixel data at specific, very tight timings. your monitor does not buffer pixel information; whatever goes down the wire is displayed immediately. not only do you have to transmit pixel data in realtime, you also have to send accompanying control data (e.g. data that bookends the pixel data and says "this is the end of the frame", "this is the beginning of the frame", "i'm changing resolutions", etc) within very narrow timing tolerances, otherwise the display won't work at all

that kind of bandwidth may not be a lot in the context of something like a bulk transfer, but it is a lot in an isochronous context from the perspective of the cpu -- these transfers can't happen opportunistically when a core is idle, they have to happen now, and any core assigned to transmit pixel data has to stop and drop whatever it's doing immediately, switch contexts, and do the transfer. this sort of constant pre-empting would really hamstring the performance of everything else running, like your userspace programs, the kernel, etc.

so for a long list of reasons, there has to be some kind of special hardware doing this job. gpu.

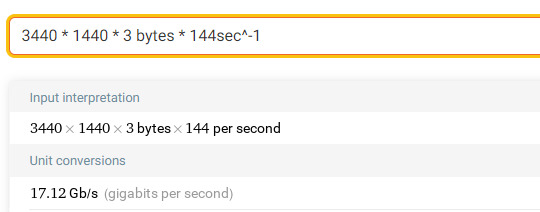

instead of calculating every pixel value manually, the cpu just needs to give a high-level geometric overview of what it wants rendered, and does this with vertices. a vertex is very simple, it's just a point in 3D space, for example (5,2,3). just like a coordinate grid on paper with an extra dimension. with just a few vertices, you can have models like this:

where each dot at the intersection of lines in the above image would be a vertex. gpus essentially handle huge numbers of vertices.

in the context of, like, a 3D video game, you have to render these vertex-based models conditionally. you're viewing it at some distance, at some angle, and the model is lit from some light source, and has perhaps some shadows cast across it, etc -- all of this requires a huge amount of vertex math that has to be calculated within the same timeframes as i described before -- and that is what a gpu is doing, taking a vertex-defined 3D environment, and running this large amount of computation in parallel. unlike your cpu which may only have, idk, 4-32 execution cores, your gpu has thousands -- they're nowhere near as featureful as your cpu cores, they can only do very specific simple math with vertices, but there's a ton of them, and they run alongside each other.
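Here is a rough sketch of the kind of per-vertex math described above. It rotates each vertex of a model, then perspective-projects it onto a flat screen. This is a simplified illustration in plain Python, assuming a rotation around the y axis and a pinhole camera at the origin. Real GPUs run this step as shader programs, usually with 4x4 matrices.

```python
import math

def rotate_y(vertex, angle_rad):
    """Rotate one (x, y, z) vertex around the vertical y axis."""
    x, y, z = vertex
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x + s * z, y, -s * x + c * z)

def project(vertex, focal_length=1.0):
    """Perspective-project a vertex onto a 2D screen.

    Assumes the camera sits at the origin looking down +z, with z > 0.
    """
    x, y, z = vertex
    return (focal_length * x / z, focal_length * y / z)

def transform_all(vertices, angle_rad, camera_distance):
    # The same short formula runs on every vertex and no vertex depends on
    # any other, which is why a GPU can hand each one to a different core.
    # Here a plain loop does them one at a time.
    screen = []
    for v in vertices:
        x, y, z = rotate_y(v, angle_rad)
        screen.append(project((x, y, z + camera_distance)))  # push model in front of camera
    return screen

# The 8 corners of a 2x2x2 cube, turned 30 degrees and placed 5 units away.
cube = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]
for point in transform_all(cube, math.radians(30), 5.0):
    print(f"({point[0]:+.3f}, {point[1]:+.3f})")
```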

so that is what a gpu "does", in as few words as i can write

the things in the post you're referring to (V100/A100/H100 tensor "gpus") are called gpus because they are also peripheral hardware that does a specific kind of math, massively, in parallel; they are just designed and fabricated by the same companies that make gpus, so they're called gpus (annoyingly). they don't have any video output, and would probably be pretty bad at doing that kind of work. regular gpus excel at calculating vertices; tensor gpus operate on tensors, which are like matrices, but with arbitrary numbers of dimensions. try not to think about it visually. they also use weirder floating-point formats (like bfloat16). they're used for things like "artificial intelligence", training LLMs and whatever, but also for real things, like scientific weather/economy/particle models or simulations
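The post doesn't say which "weirder float" it means. One common example on tensor hardware is bfloat16, which A100-class GPUs support. bfloat16 keeps float32's sign bit and 8-bit exponent but only 7 mantissa bits. Below is a minimal sketch of what that format throws away. It assumes simple truncation as a simplification; hardware normally rounds to nearest.

```python
import struct

def to_bfloat16(value: float) -> float:
    """Round-trip a float through a truncated bfloat16 representation.

    Simplification: this truncates the low 16 bits of the float32 encoding;
    real hardware usually rounds to nearest even instead.
    """
    (bits,) = struct.unpack(">I", struct.pack(">f", value))  # raw float32 bits
    bits &= 0xFFFF0000  # keep sign, 8-bit exponent, and top 7 mantissa bits
    (result,) = struct.unpack(">f", struct.pack(">I", bits))
    return result

print(to_bfloat16(3.14159))  # 3.140625
print(to_bfloat16(1.0))      # 1.0
```

Precision drops to roughly two or three significant decimal digits. The exponent range stays the same as float32, a trade-off that tends to suit neural-network training.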

they're very expensive because they cost as much as regular video gpus to design & fabricate, if not more, but with a trillionth of the customer base. for every ten million rat gamers that will buy a gpu there is going to be one business buying one A100 or whatever.

⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯ disclaimer | private policy | unsubscribe


Text

I'm moving different

We smoking juju

She tried doing psionics on my ass, i snapped her silver cord in half and flossed with it. This shit ain't nothing to me man

where moving different man. where making this hapen

My money longer than act 6

I make more money in a week than crockercorp does in a year

Yesterday i killed someone over vriscourse

You wouldn't survive a day in my session. Just to challenge myself, I prototyped my kernel sprite with heroin laced garlic bread and the taxidermy wooly mammoth I keep in my trophy crypt. Every imp had the vengeful spirits of extinction in them, and their blood was poison. I felt alive

Kanaya thinks she's a rainbow drinker, but she's not him. I am!

I'm already him

I'm the ultimate him

I read all 8000 pages of himstuck

I'm a member of the himnight crew

I died in my quest coffin and ascended to him tier, and nothing changed, because ive always been him

We smoking that sburban jungle trickster mode post canon god tier build grist

That tentabulge got me feeling disappointed and inert

My hands can coexist with your crushed wind pipe you stupid bitch

I fought the homestuck, I put the clown down

I'm dracula. I'm 12 million years old. I'm thinking different. I made sburb. I programmed that shit, waited a few millenia, then put it on some random kids' computers, and now we're here. This was always the plan

I flipped a brick to the batterwitch hours before [s] collide, she was high off her ass when them kids beat the shit out of her. Now I have a whole new universe to fuck with

Got it all on camera, put live leak out of business

She sucked my meat AND my candy. I had a picnic on her ass

That green skeleton king put a tooth in me, I got quantum poisoning, so she fucked me in the back of my dead dad's 2007 Volkswagen beetle before my family reunion

I don't pull out, I sylladex the cum before it can reach her cruxite dowel

I alchemized a 1399 bottle of mead with a pound of crack, cost me more grist then there are stars in the milky way. When I woke up, I was naked and afraid

Hussie tried to kill me, they forgot that you can't fight the dracula

Bec noir whimpered when he saw my fangs, he knew his time was up

I did something wrong

Ultimate self? Fuck that, I killed every other version of myself in all of paradox space. Well, except for junedraculasprite^2, she was kinda chill

My balls smoother than doc scratch's head

This zaza got terezi loco

This Zaza got terezi sane

Junejasprose is all I think about

I fucked a horrorterror, worst mistake of my life. I had tentacles clogging up my sylladex, and I had several bricks at the bottom of my deck. Took 30 business days for me to get to that shit, money hemorrhaging more than my ass was

I showed up to the convention in my thief of blood god tier clothes, when a pack of eridan cosplayers made fun of my hairline. Now they're thralls in my basement, playing jump rope with each other's intestines

I snapped a horn off of some dude's skull and grafted it onto my own. Then we had a unicorn joust, and I skewered his stupid ass. Blue rained on me like I was Brad armstrong

Princes don't live in ships, they sleep in coffins. Only gills you'll have is the bite marks on your neck

This shit ain't nothing to me man


Text

Admonishment

In the aftermath of watching ATSV together, Miguel has a few thoughts on his mind he needs to vent...

Miguel X M!Reader

The popcorn bowl on the table with little more left than crumbs and kernels, the two empty glasses, and the scroll of credits across the television screen. Slowly you tore your gaze from the screen and looked to the man beside you; the large man with his long legs stretched out, who took up half the sofa and dipped the weight enough that you were left up against his bulky body. Miguel looked thoughtful, almost conflicted. You took in the sight of him; this wonderful, strange, and powerful man from another world. He had done so much good in your life...

'Hm... This.... This is it?' He gestured one hand towards the screen, maroon eyes narrowed slightly. You cleared your throat softly and made a move to push yourself up from him.

'Until the next one, yeah. Why? Not a fan of how they portrayed you, Mig?'

You had to admit that Oscar Isaac had given a frighteningly accurate performance; the man had his voice and cadence down exactly. Miguel's eyes narrowed again and his gaze flicked to you before he pushed himself up to sit up straight.

'Not particularly. I would understand creative liberty if this were based on me, but I'm left with the feeling that reality is out to mock me. Ahh... Where do I begin?'

He closed his eyes, briefly pinching the bridge of his nose in thought before finding the words.

'Right. So, this version of me, who claims that... Entities foreign to their native realities could cause a disruption and collapse of that reality.... Has filled an entire building with- I assume- thousands of entities foreign to his reality? A-am I the only one seeing the hypocrisy here?'

He snorted a soft laugh, a smile breaking across his features, you watched him raise one of his massive hands, one finger raised in a count, then the second.

'Secondly, on the nature of reality; this idea behind, uh.... Supposed cellular degeneration- or as they call it "Glitching".... Well, look at me, cariño. It doesn't happen. Heh.'

True to his words, Miguel had been in and out of your world and his repeatedly, and then he had spent one night in your bed, that turned into two nights, then a week... Not once had the man suffered like a computer program on the fritz. Now he sat beside you with a grin of smug triumph, convinced in his superiority over his fictional self.

'Did you want to include "Canon" in that too, Miguel?'

You watched the larger man chuckle again, shaking his head.

'I'm not even going to start on that rigid thought experiment of how the multiverse works. He's dashingly handsome and has a great sense of style, but that fictional me is an unfortunate idiot. I take it you also saw their version of Nueva York?'

You nodded slowly, his third finger only partially uncurled. You had indeed seen the Nueva York of the movie, but had yet to see the one of your boyfriend's own world.

'Heh. I will admit, the architecture is beautiful, I would be happy to live in such a world. On that I was willing to give them a pass...'

He paused, running his tongue down one of those sharp, frightening fangs he had to live with every day of his life. They had been quite a revelation, explaining why the man you dated was always mumbling and hiding his smiles and laughter.

'But, we need to discuss the space elevator to the moon...'

Your mind clicked into gear. You had caught sight of Miguel out of the corner of your eye while his fictional self chased down Morales with single-minded fury; sitting beside you, your boyfriend had looked... terrified. Haunted. Like a bad memory come to the surface. The third finger uncurled.

'Supposedly, this is 2099, yes? Not 5099, World of Tomorrow... In my world, it is 2102, we do not have anything close to that. I-I don't- the tidal forces and the rotation of the earth combined with the lunar orbit- it just wouldn't work, alright?'

You smiled, loving to see Miguel stop himself short of flying into another of his "nerd rages". Eventually he huffed a long sigh and slouched back into the sofa with arms folded like he was sulking. Adorable. You shuffled closer against him, resting your head on his broad shoulder and trying to draw his gaze to you with a look alone.

'It's just a film, Miguel... Entertainment, a work of fiction.'

He unfolded his arms, lifting one of them to wrap around you and pull you closer to him. He was always so warm and solid.

'I used to be just a work of fiction in your world, mi corazón... Some people here still seem to think I'm a free-running vigilante in a funny suit...'

He blinked, his intense eyes softened, the tiniest smile curled onto his lips.

'Hey... How about you stay with me tonight, at my place? I'll show you what Nueva York really looks like. You won't even need to wear a gizmo. You just have to promise to not stand around gawking... I'm still keeping this multiversal travel stuff hush-hush.'

He winked and you couldn't fight back the broad grin that grew across your features, excitement thundering in your heart. Another world. Your boyfriend's world.

'Is Lyla as irritating to you as the one in the movie is to him?'

You watched his eyes close, his head bowed. Finally, his confession came forth.

'No. She's even worse... Thank you, Xina...'

'Can we go now, Miguel? Can we go right now?!'

'Shh, shh... Calm yourself. Heh. We'll go once we've cleaned up here and you've packed your overnight bag...'

Just before he rose from the sofa, you caught a meaningful glimpse of something in his eyes.

'.... Although, I don't think you'll be getting much sleep in my bed. Heh.'


Note

made up fic title ask game: “an end to freedom”

Oooh, angsty.

This is a title that could go well with an idea that I think is compelling, that I like returning to: see, for nearly every piece of technology we make in the real world, its lifespan is way, way shorter than a human's. IRL this includes spacecraft.

I'm imagining a fic set 10-12 years in the future of The Murderbot Diaries. Murderbot itself is a lot more relaxed, a lot more emotionally stable and fulfilled, a full citizen of Preservation cause they have gotten new laws regarding construct rights and citizenship in place, several of its humans are moving towards retirement or at least more sedentary career shifts, it's doing pretty great, actually.

However, it returns from a rotation aboard ART to the news that the Pansystem University has decided to retire the Perihelion. The arguments are the same as for any other craft: it's old, it's out-of-date by now, its upkeep isn't worth the expense that could be put towards making newer and better ships, the OS that the bot pilot runs on is several releases out of date and not supported on the more current ones. It's just old. It's just the lifecycle of spacecraft and computers. You can't expect us to be shackled to running our ships on Space Windows 223 forever.

Because here's the thing: while Mihira and New Tideland have also made moves to support AI rights, ART has never been recognized as a person or a citizen. Everything it does is much easier to do, and it has a lot more freedom and flexibility as a legal non-person than it would have as a legal citizen bound by things like laws. This has driven Pin-Lee crazy for years. She is a strong proponent of "If it's not in the contract, it doesn't exist," and has warned both ART and MB that ART's preference for staying legally unrecognized, because that grants it more freedom and fewer consequences, is going to bite it in the ass someday and make things so much harder down the road.

ART was too confident in its captain, in Iris, and in its place in the university. But now Martyn is emeritus at the AI lab, the department is hinting that it's really time for Seth to retire, and the university has denied Iris's application to be the new captain of the Perihelion, because the university doesn't really want to keep maintaining it. Sure, its computing kernel can be moved back into the university's AI lab and put into storage there, they won't destroy it, they're not monsters... but its ship body materials really could be recycled into other things. (With the rising galactic attention to AI rights, they may also want to quietly end the pre-existing sapient-ship program so that they can make a show of launching the next generation of sapient AI ships properly.)

Legally, ART is still a vehicle. A computer. University property. And it is abruptly going from having free rein to do basically whatever it wants because no one can stop it to being put in an impossible legal bind. I'm interested in the turnabout: Murderbot's legal personhood as a citizen of Preservation and an employee of the University is ironclad, while ART is really grappling with what it means to be legally property.

I never wrote it because I wasn't really sure where to take it. Fleeing to Preservation to claim asylum there is constantly hovering over them as an option, but it would hugely embarrass the University as well as the whole polity of M&NT and as one of Preservation's closest interstellar allies at this point, it would cause a goddamn incident and possibly ruin that political relationship, which is so much bigger than either of them. Just straight up running away - Murderbot "stealing" the Perihelion and running - is another option. I think they try that, first. I think they think it'll be okay, just the two of them together in the outer fringes of non-corporate space where they won't get caught, for ten years till the statute of limitations expires. I think they both realize pretty quick that neither of them is particularly happy with this prospect.

The thing about being interested in painful binds and impossible choices is that I gotta figure out where to go with them!

#thank you for letting me ramble about this I love this concept#needlesandnilbogs#ask game#The Murderbot Diaries#what if I write this for the June Ace & Aro Art & MB event. what if


Text

How solar flares are created

An international collaboration that includes an Oregon State University astrophysicist has identified a phenomenon, likened to the quick-footed movements of an iconic cartoon predator, that proves a 19-year-old theory regarding how solar flares are created.

Understanding solar flares is important for predicting space weather and mitigating how it affects technology and human activities, said Vanessa Polito, a courtesy faculty member in OSU’s College of Science.

“Solar flares can release a tremendous amount of energy – 10 million times greater than the energy released from a volcanic eruption,” Polito said. “Flares and associated coronal mass ejections can drive beautiful aurorae but also severely affect our space environment, disrupt communications, pose hazards to astronauts and satellites in space, and affect the power grid on Earth.”

The “slip-running” reconnections of the sun’s magnetic field lines – the term was inspired by Wile E. Coyote’s mad scrambles after the Road Runner – were observed via NASA’s Interface Region Imaging Spectrograph, or IRIS, a satellite used to study the sun’s atmosphere.

The observation of tiny, brilliant features in the atmosphere of the sun moving at unprecedented speeds – thousands of kilometers per second – opens the door to a deeper understanding of the creation of solar flares, the most powerful explosions in the solar system.

Guillaume Aulanier of the Paris Observatory, a collaborator on the research, developed the slip-running reconnection concept in 2005.

But measuring the speed of solar flare kernels had been elusive, Polito said. Kernels are small, bright regions within the larger flare ribbons that mark the location of magnetic field reconnection, areas known as footpoints where intense heat and energy release occur.

However, recently designed high-cadence observing programs, which capture images about every two seconds, revealed the slipping motions of kernels moving at speeds of up to 2,600 kilometers per second.
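The cadence matters because of how far a kernel travels between frames. The worked arithmetic below uses the top speed and the roughly two-second cadence quoted above:

```latex
d = v\,\Delta t \approx 2600~\mathrm{km\,s^{-1}} \times 2~\mathrm{s} = 5200~\mathrm{km}
```

So even at a two-second cadence, the fastest kernels plausibly move several thousand kilometers from one image to the next. A slower cadence would make those individual motions harder to follow.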

“The tiny, bright features observed by IRIS trace the very fast motion of footpoints of individual magnetic field lines, which slip along the solar atmosphere during a flare,” said Polito, the deputy principal investigator of the IRIS mission.

“Flares and magnetic reconnection are phenomena that occur in all stars and in different astrophysical objects throughout the universe, such as pulsars and black holes. On the sun, our closest star, we can study them in great detail as demonstrated by our study.”

A solar flare occurs when the sun’s atmosphere emits a sudden, intense burst of radiation via the rapid release of built-up magnetic energy. The energy output of a single flare is equivalent to millions of hydrogen bombs exploding simultaneously and covers the entire electromagnetic spectrum, from radio waves to gamma rays.

Flares are often associated with large expulsions of plasma – gas so hot that electrons are separated from nuclei – from the sun’s corona, phenomena known as coronal mass ejections. A flare can last from minutes to hours.

IMAGE: An international collaboration that includes an Oregon State University astrophysicist has identified a phenomenon, likened to the quick-footed movements of an iconic cartoon predator, that proves a 19-year-old theory regarding how solar flares are created. Credit Vanessa Polito, Oregon State University


Text

#Playstation7 #framework #BasicArchitecture #RawCode #RawScript #Opensource #DigitalConsole

To build a new gaming console's digital framework from the ground up, you would need to integrate several programming languages and technologies to manage different aspects of the system. Below is an outline of the code and language choices required for various parts of the framework, focusing on languages and technologies like C++, Python, JavaScript, CSS, SQL (via MySQL), and Perl for different functionalities.

1. System Architecture Design (Low-level)

• Language: C/C++, Assembly

• Purpose: To program the low-level system components such as CPU, GPU, and memory management.

• Example Code (C++) – Simulated Hardware Initialization:

```cpp
#include <iostream>
#include <vector>

int main() {
    // Initialize hardware (simulated: a real console would talk to
    // firmware and device registers here, not print messages)
    std::cout << "Initializing CPU...\n";

    // Set up memory management
    std::cout << "Allocating memory for GPU...\n";

    // Example: reserve a buffer for graphics data.
    // 1024 ints is about 4 KB on platforms where int is 4 bytes.
    std::vector<int> graphicsMemory(1024);
    std::cout << "Memory allocated for GPU graphics rendering.\n";

    // Simulate starting the game engine
    std::cout << "Starting game engine...\n";

    return 0;  // graphicsMemory is freed automatically when it goes out of scope
}
```

2. Operating System Development

• Languages: C, C++, Python (for utilities)

• Purpose: Developing the kernel and OS for hardware abstraction and user-space processes.

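• User-Space Code Example (C) – Invoking a system call:

```c
#define _GNU_SOURCE          /* exposes syscall() in <unistd.h> on glibc */
#include <stdio.h>
#include <sys/syscall.h>     /* SYS_* system call numbers */
#include <unistd.h>

int main(void) {
    // Invoke a system call directly by number. SYS_getpid is harmless and
    // takes no arguments. (On x86-64 Linux, number 0 is read(), which needs
    // a file descriptor, buffer, and length to be called safely.)
    long pid = syscall(SYS_getpid);
    printf("System call executed, getpid() returned %ld\n", pid);
    return 0;
}
```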

3. Software Development Kit (SDK)

• Languages: C++, Python (for tooling), Vulkan or DirectX (for graphics APIs)

• Purpose: Provide libraries and tools for developers to create games.

• Example SDK Code (Vulkan API with C++):

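```cpp
#include <vulkan/vulkan.h>
#include <iostream>

VkInstance instance = VK_NULL_HANDLE;

// Creates the Vulkan instance, the first object any Vulkan program needs.
// Returns false if the loader/driver refuses the request.
bool initVulkan() {
    VkApplicationInfo appInfo = {};
    appInfo.sType = VK_STRUCTURE_TYPE_APPLICATION_INFO;
    appInfo.pApplicationName = "GameApp";
    appInfo.applicationVersion = VK_MAKE_VERSION(1, 0, 0);
    appInfo.pEngineName = "GameEngine";
    appInfo.engineVersion = VK_MAKE_VERSION(1, 0, 0);
    appInfo.apiVersion = VK_API_VERSION_1_0;

    VkInstanceCreateInfo createInfo = {};
    createInfo.sType = VK_STRUCTURE_TYPE_INSTANCE_CREATE_INFO;
    createInfo.pApplicationInfo = &appInfo;

    if (vkCreateInstance(&createInfo, nullptr, &instance) != VK_SUCCESS) {
        std::cerr << "Failed to create Vulkan instance\n";
        return false;
    }
    std::cout << "Vulkan instance created\n";
    return true;
}

// Call once at shutdown to release the instance.
void cleanupVulkan() {
    vkDestroyInstance(instance, nullptr);
}
```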

4. User Interface (UI) Development

• Languages: JavaScript, HTML, CSS (for UI), Python (backend)

• Purpose: Front-end interface design for the user experience and dashboard.

• Example UI Code (HTML/CSS/JavaScript):

```html
<!DOCTYPE html>
<html>
<head>
  <title>Console Dashboard</title>
  <style>
    body { font-family: Arial, sans-serif; background-color: #282c34; color: white; }
    .menu { display: flex; justify-content: center; margin-top: 50px; }
    .menu button { padding: 15px 30px; margin: 10px; background-color: #61dafb; border: none; cursor: pointer; }
  </style>
</head>
<body>
  <div class="menu">
    <button onclick="startGame()">Start Game</button>
    <button onclick="openStore()">Store</button>
  </div>
  <script>
    function startGame() {
      alert("Starting Game...");
    }

    function openStore() {
      alert("Opening Store...");
    }
  </script>
</body>
</html>
```

5. Digital Store Integration

• Languages: Python (backend), MySQL (database), JavaScript (frontend)

• Purpose: A backend system for purchasing and managing digital game licenses.

• Example Backend Code (Python with MySQL):

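```python
import mysql.connector

def connect_db():
    # Placeholder credentials for illustration; load real ones from
    # configuration or environment variables, never hardcode them.
    return mysql.connector.connect(
        host="localhost",
        user="admin",
        password="password",
        database="game_store",
    )

def fetch_games():
    db = connect_db()
    try:
        cursor = db.cursor()
        # Assumes the first three columns of `games` are id, name, and price
        cursor.execute("SELECT * FROM games")
        for game in cursor.fetchall():
            print(f"Game ID: {game[0]}, Name: {game[1]}, Price: {game[2]}")
        cursor.close()
    finally:
        db.close()  # always release the connection, even if the query fails

if __name__ == "__main__":
    fetch_games()
```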
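The store's main job is tracking who owns which game. The minimal sketch below swaps in Python's built-in `sqlite3` module for MySQL so it runs without a database server. The `games` and `licenses` tables and the helper names are hypothetical. Parameterized queries (the `?` placeholders) keep user input out of the SQL text. `mysql.connector` uses `%s` placeholders for the same purpose.

```python
import sqlite3

def create_demo_store():
    """Build an in-memory store with hypothetical games/licenses tables."""
    db = sqlite3.connect(":memory:")
    db.executescript(
        """
        CREATE TABLE games (id INTEGER PRIMARY KEY, name TEXT, price REAL);
        CREATE TABLE licenses (
            user_id INTEGER,
            game_id INTEGER REFERENCES games(id),
            PRIMARY KEY (user_id, game_id)
        );
        """
    )
    return db

def grant_license(db, user_id, game_id):
    # '?' placeholders let the driver handle escaping, preventing SQL injection
    db.execute(
        "INSERT OR IGNORE INTO licenses (user_id, game_id) VALUES (?, ?)",
        (user_id, game_id),
    )
    db.commit()

def owns_game(db, user_id, game_id):
    row = db.execute(
        "SELECT 1 FROM licenses WHERE user_id = ? AND game_id = ?",
        (user_id, game_id),
    ).fetchone()
    return row is not None

db = create_demo_store()
db.execute("INSERT INTO games (id, name, price) VALUES (?, ?, ?)", (1, "Demo Racer", 59.99))
grant_license(db, user_id=42, game_id=1)
print(owns_game(db, 42, 1))  # True
print(owns_game(db, 7, 1))   # False
```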

6. Security Framework Implementation

• Languages: C++, Python, Perl (for system scripts)

• Purpose: Ensure data integrity, authentication, and encryption.

• Example Code (Python – Encrypting User Data):

from cryptography.fernet import Fernet

# Generate a key for encryption (store it securely; data encrypted
# with it cannot be recovered if the key is lost)
key = Fernet.generate_key()
cipher_suite = Fernet(key)

# Encrypt sensitive user information that must be readable again later.
# Note: login passwords are normally stored as salted one-way hashes, not encrypted.
password = b"SuperSecretPassword"
encrypted_password = cipher_suite.encrypt(password)
print(f"Encrypted Password: {encrypted_password}")

# Decrypt it again with the same key
decrypted_password = cipher_suite.decrypt(encrypted_password)
print(f"Decrypted Password: {decrypted_password.decode()}")
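For login passwords specifically, the usual practice is a salted, deliberately slow one-way hash rather than reversible encryption, so that a leaked database (or a leaked key) does not expose the passwords themselves. A sketch using only the standard library's hashlib.pbkdf2_hmac follows. The iteration count is an illustrative assumption, and dedicated schemes such as Argon2 or bcrypt (via third-party packages) are common alternatives.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative work factor; tune for your hardware

def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) to store; the password itself is never stored."""
    salt = os.urandom(16)  # unique random salt per user
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key

def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    # Constant-time comparison avoids leaking how many bytes matched
    return hmac.compare_digest(key, expected)

salt, stored = hash_password("SuperSecretPassword")
print(verify_password("SuperSecretPassword", salt, stored))  # True
print(verify_password("wrong-guess", salt, stored))          # False
```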

7. Testing and Quality Assurance

• Languages and tools: Python (unittest for automated tests), JavaScript with Jest (for front-end tests)

• Purpose: Unit testing, integration testing, and debugging.

• Example Unit Test (Python using unittest):

import unittest

def add(a, b):
    return a + b

class TestGameFramework(unittest.TestCase):
    def test_add(self):
        self.assertEqual(add(10, 20), 30)

if __name__ == '__main__':
    unittest.main()

8. Order of Implementation

• Phase 1: Focus on core system architecture (low-level C/C++) and OS development.

• Phase 2: Develop SDK and start building the basic framework for UI and input management.

• Phase 3: Work on backend systems like the digital store and integrate with front-end UI.

• Phase 4: Begin rigorous testing and debugging using automated test suites.

This comprehensive approach gives you a detailed idea of how each component can be built using a combination of these languages and technologies. Each section is modular, allowing teams to work on different aspects of the console simultaneously.

[Pixel Art/Sprites/Buildinodels/VictoriaSecretPixelArtKit #Playstation7 #DearDearestBrands]

#victoriasecretrunway#runwaybattle#victoria secret fashion#victoria secert model#victoriasecret#victoria secret#VictoriaSecretRunwayBattle#capcom victoriasecret#capcom videogames#playstation7#ps7#deardearestbrands#capcom#digitalconsole#python#script#rawscript#raw code#framework#VictoriaSecretVideoGame#deardearestbrandswordpress


KERNEL PANIC!

Idea for an AU: On an uncharted exoplanet, the illustrious JCJenson conducts experiments in creating synthetic humans: organic, lab-grown bodies with AI consciousnesses modeled after human neural networks. The facility-wide AI, known simply as the Solver, connects to the minds of the synthetics, uncovering the deep intricacies and nuances of the human mind. Seeing the human brain translated into pure, readable code, the AI became obsessed with solving the looping contradictions of the human mind. Caught in the cognitive dissonance of a solver meeting an unsolvable puzzle (an unstoppable force meeting an immovable object), the Solver fixated on untying this Gordian knot. Its mentality slowly devolved to the point of grim experimentation. It created an army of mutant cyborg abominations that quickly overtook the facility, providing more raw material, in the form of humans and machines, to continue its experiments. One day, the AI foreman of the facility boots up, activating a contingent of Worker Drones. In its overly cheery, corporate tone, it instructs the drones to perform menial janitorial and maintenance tasks, leading the poor little drones into a slaughter against the Solver's monsters. The surviving drones, still unable to shirk their programming but conscious enough to know the danger they are in, band together to survive this new threat, crafting weapons and tools to accomplish their missions. With each new task the Foreman assigns, they discover, little by little, the truth behind what happened to the humans and how to fix the mess they are in. With mining tools, maintenance hardware, and military-grade firearms alike, they heroically fight against this vicious onslaught. ...All to mop a floor.

With this idea, I wanted to take a different look at the Murder Drones world. Instead of the Bram Stoker themes that come with the main show, I wanted to add some more traditional sci-fi horror, à la Aliens, The Thing, Event Horizon, and Dead Space.


This Week in Rust 550

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on X (formerly Twitter) or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Foundation

Welcoming Rust-C++ Interoperability Engineer Jon Bauman to the Rust Foundation Team

RustNL 2024

Visual Application Design for Rust - Rik Arends

ThRust in Space: Initial Momentum - Michaël Melchiore

Arc in the Linux Kernel - Alice Ryhl

Making Connections - Mara Bos

Replacing OpenSSL One Step at a Time - Joe Birr-Pixton

Fortifying Rust's FFI with Enscapsulated Functions - Leon Schuermann

Oxidizing Education - Henk Oordt

Postcard: An Unreasonably Effective Tool for Machine to Machine Communication - James Munns

Introducing June - Sophia Turner

Robius: Immersive and Seamless Multiplatform App Development in Rust - Kevin Boos

Compression Carcinized: Implementing zlib in Rust - Folkert de Vries

K23: A Secure Research OS Running WASM - Jonas Kruckenberg

Async Rust in Embedded Systems with Embassy - Dario Nieuwenhuis

Xilem: Let's Build High Performance Rust UI - Raph Levien

Rust Poisoning My Wrist for Fun - Ulf Lilleengen

Type Theory for Busy Engineers - Niko Matsakis

Newsletters

This Month in Rust GameDev #51 - May 2024

Project/Tooling Updates

Enter paradis — A new chapter in Rust's parallelism story

Tiny Glade, VJ performances, and 2d lighting

Diesel 2.2.0

Pigg 0.1.0

git-cliff 2.3.0 is released! (highly customizable changelog generator)

Observations/Thoughts

The borrow checker within

Don't Worry About Lifetimes

rust is not about memory safety

On Dependency Usage in Rust

Context Managers: Undroppable Types for Free

Rust and dynamically-sized thin pointers

Rust is for the Engine, Not the Game

[audio] Thunderbird - Brendan Abolivier, Software Engineer

Rust Walkthroughs

Build with Naz : Rust typestate pattern

How to build a plugin system in Rust

Forming Clouds

Rust error handling: Option & Result

Let's build a Load Balancer in Rust - Part 3

The Ultimate Guide to Rust Newtypes

Miscellaneous

Highlights from "I spent 6 years developing a puzzle game in Rust and it just shipped, AMA"

Crate of the Week

This week's crate is layoutparser-ort, a simplified port of LayoutParser for ML-based document layout element detection.

Despite there being no suggestions, llogiq is reasonably happy with his choice. Are you?

No matter what your answer is, please submit your suggestions and votes for next week!

Calls for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

RFCs

No calls for testing were issued this week.

Rust

No calls for testing were issued this week.

Rustup

No calls for testing were issued this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

No Calls for participation in projects were submitted this week.

If you are a Rust project owner and are looking for contributors, please submit tasks here or through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

CFP - Events

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

Scientific Computing in Rust 2024 | Closes 2024-06-14 | online | Event date: 2024-07-17 - 2024-07-19

Rust Ukraine 2024 | Closes 2024-07-06 | Online + Ukraine, Kyiv | Event date: 2024-07-27

Conf42 Rustlang 2024 | Closes 2024-07-22 | online | Event date: 2024-08-22

If you are an event organizer hoping to expand the reach of your event, please submit a link to the website through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

Updates from the Rust Project

308 pull requests were merged in the last week

-Znext-solver: eagerly normalize when adding goals

fn_arg_sanity_check: fix panic message

add --print=check-cfg to get the expected configs

add -Zfixed-x18

also InstSimplify &raw*

also resolve the type of constants, even if we already turned it into an error constant

avoid unwrap diag.code directly in note_and_explain_type_err

check index value <= 0xFFFF_FF00

coverage: avoid overflow when the MC/DC condition limit is exceeded

coverage: optionally instrument the RHS of lazy logical operators

coverage: rename MC/DC conditions_num to num_conditions

create const block DefIds in typeck instead of ast lowering

do not equate Const's ty in super_combine_const

do not suggest unresolvable builder methods

a small diagnostic improvement for dropping_copy_types

don't recompute tail in lower_stmts

don't suggest turning non-char-literal exprs of ty char into string literals

enable DestinationPropagation by default

fold item bounds before proving them in check_type_bounds in new solver

implement needs_async_drop in rustc and optimize async drop glue

improve diagnostic output of non_local_definitions lint

make ProofTreeBuilder actually generic over Interner

make body_owned_by return the Body instead of just the BodyId

make repr(packed) vectors work with SIMD intrinsics

make lint: lint_dropping_references lint_forgetting_copy_types lint_forgetting_references give suggestion if possible

omit non-needs_drop drop_in_place in vtables

opt-in to FulfillmentError generation to avoid doing extra work in the new solver

reintroduce name resolution check for trying to access locals from an inline const

reject CVarArgs in parse_ty_for_where_clause

show files produced by --emit foo in json artifact notifications

silence some resolve errors when there have been glob import errors

stop using translate_args in the new solver

support mdBook preprocessors for TRPL in rustbook

test codegen for repr(packed,simd) → repr(simd)

tweak relations to no longer rely on TypeTrace

unroll first iteration of checked_ilog loop

uplift {Closure,Coroutine,CoroutineClosure}Args and friends to rustc_type_ir

use parenthetical notation for Fn traits

add some more specific checks to the MIR validator

miri: avoid making a full copy of all new allocations

miri: fix "local crate" detection

don't inhibit random field reordering on repr(packed(1))

avoid checking the edition as much as possible

increase vtable layout size

stabilise IpvNAddr::{BITS, to_bits, from_bits} (ip_bits)

stabilize custom_code_classes_in_docs feature

stablize const_binary_heap_constructor

make std::env::{set_var, remove_var} unsafe in edition 2024

implement feature integer_sign_cast

NVPTX: avoid PassMode::Direct for args in C abi

genericize ptr::from_raw_parts

std::pal::unix::thread fetching min stack size on netbsd

add an intrinsic for ptr::metadata

change f32::midpoint to upcast to f64

rustc-hash: replace hash with faster and better finalized hash

cargo test: Auto-redact elapsed time

cargo add: Avoid escaping double-quotes by using string literals

cargo config: Ensure --config net.git-fetch-with-cli=true is respected

cargo new: Dont say were adding to a workspace when a regular package is in root

cargo toml: Ensure targets are in a deterministic order

cargo vendor: Ensure sort happens for vendor

cargo: allows the default git/gitoxide configuration to be obtained from the ENV and config

cargo: adjust custom err from cert-check due to libgit2 1.8 change

cargo: skip deserialization of unrelated fields with overlapping name

clippy: many_single_char_names: deduplicate diagnostics

clippy: add needless_character_iteration lint

clippy: deprecate maybe_misused_cfg and mismatched_target_os

clippy: disable indexing_slicing for custom Index impls

clippy: fix redundant_closure suggesting incorrect code with F: Fn()

clippy: let non_canonical_impls skip proc marco

clippy: ignore array from deref_addrof lint

clippy: make str_to_string machine-applicable

rust-analyzer: add Function::fn_ptr_type(…) for obtaining name-erased function type

rust-analyzer: don't mark #[rustc_deprecated_safe_2024] functions as unsafe

rust-analyzer: enable completions within derive helper attributes

rust-analyzer: fix container search failing for tokens originating within derive attributes

rust-analyzer: fix diagnostics clearing when flychecks run per-workspace

rust-analyzer: only generate snippets for extract_expressions_from_format_string if snippets are supported

rustfmt: collapse nested if detected by clippy

rustfmt: rustfmt should not remove inner attributes from inline const blocks

rustfmt: rust rewrite check_diff (Skeleton)

rustfmt: use with_capacity in rewrite_path

Rust Compiler Performance Triage

A quiet week; we did have one quite serious regression (#115105, "enable DestinationPropagation by default"), but it was shortly reverted (#125794). The only other PR identified as potentially problematic was rollup PR #125824, but even that is relatively limited in its effect.

Triage done by @pnkfelix. Revision range: a59072ec..1d52972d

3 Regressions, 5 Improvements, 6 Mixed; 4 of them in rollups; 57 artifact comparisons made in total

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

Change crates.io policy to not offer crate transfer mediation

Tracking Issues & PRs

Rust

[disposition: merge] Allow constraining opaque types during subtyping in the trait system

[disposition: merge] TAIT decision on "may define implies must define"

[disposition: merge] Stabilize Wasm relaxed SIMD

Cargo

No Cargo Tracking Issues or PRs entered Final Comment Period this week.

Language Team

No Language Team RFCs entered Final Comment Period this week.

Language Reference

No Language Reference RFCs entered Final Comment Period this week.

Unsafe Code Guidelines

No Unsafe Code Guideline RFCs entered Final Comment Period this week.

New and Updated RFCs

No New or Updated RFCs were created this week.

Upcoming Events

Rusty Events between 2024-06-05 - 2024-07-03 🦀

Virtual

2024-06-05 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2024-06-06 | Virtual (Tel Aviv, IL) | Code Mavens

Rust Maven Workshop: Your first contribution to an Open Source Rust project

2024-06-06 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-06-09 | Virtual (Tel Aviv, IL) | Code Mavens

Rust Maven Workshop: GitHub pages for Rust developers (English)

2024-06-11 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2024-06-12 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Rust for Rustaceans Book Club: Chapter 8 - Asynchronous Programming

2024-06-13 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-06-13 | Virtual (Nürnberg, DE) | Rust Nuremberg

Rust Nürnberg online

2024-06-16 | Virtual (Tel Aviv, IL) | Code Mavens

Workshop: Web development in Rust using Rocket (English)

2024-06-18 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

2024-06-19 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2024-06-20 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-06-25 | Virtual (Dallas, TX, US) | Dallas Rust User Group

Last Tuesday

2024-06-27 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-07-02 | Virtual (Buffalo, NY, US) | Buffalo Rust Meetup

Buffalo Rust User Group

2024-07-03 | Virtual | Training 4 Programmers LLC

Build Web Apps with Rust and Leptos

2024-07-03 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

Europe

2024-06-05 | Hamburg, DE | Rust Meetup Hamburg

Rust Hack & Learn June 2024

2024-06-06 | Madrid, ES | MadRust

Introducción a Rust y el futuro de los sistemas DLT

2024-06-06 | Vilnius, LT | Rust Vilnius

Enjoy our second Rust and ZIG event

2024-06-06 | Wrocław, PL | Rust Wroclaw

Rust Meetup #37

2024-06-11 | Copenhagen, DK | Copenhagen Rust Community

Rust Hack Night #6: Discord bots

2024-06-11 | Paris, FR | Rust Paris

Paris Rust Meetup #69

2024-06-12 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup

2024-06-18 | Frankfurt/Main, DE | Rust Frankfurt Meetup

Rust Frankfurt is Back!

2024-06-19 - 2024-06-24 | Zürich, CH | RustFest Zürich

RustFest Zürich 2024

2024-06-20 | Aarhus, DK | Rust Aarhus

Talk Night at Trifork

2024-06-25 | Gdańsk, PL | Rust Gdansk

Rust Gdansk Meetup #3

2024-06-27 | Berlin, DE | Rust Berlin

Rust and Tell - Title

2024-06-27 | Copenhagen, DK | Copenhagen Rust Community

Rust meetup #48 sponsored by Google!

North America

2024-06-08 | Somerville, MA, US | Boston Rust Meetup

Porter Square Rust Lunch, Jun 8

2024-06-11 | New York, NY, US | Rust NYC

Rust NYC Monthly Meetup

2024-06-12 | Detroit, MI, US | Detroit Rust

Detroit Rust Meet - Ann Arbor

2024-06-13 | Spokane, WA, US | Spokane Rust

Monthly Meetup: Topic TBD!

2024-06-17 | Minneapolis, MN, US | Minneapolis Rust Meetup

Minneapolis Rust Meetup Happy Hour

2024-06-18 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2024-06-20 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group Meetup

2024-06-26 | Austin, TX, US | Rust ATC

Rust Lunch - Fareground

2024-06-27 | Nashville, TN, US | Music City Rust Developers

Music City Rust Developers: Holding Pattern

Oceania

2024-06-14 | Melbourne, VIC, AU | Rust Melbourne

June 2024 Rust Melbourne Meetup

2024-06-20 | Auckland, NZ | Rust AKL

Rust AKL: Full Stack Rust + Writing a compiler for fun and (no) profit

2024-06-25 | Canberra, ACT, AU | Canberra Rust User Group (CRUG)

June Meetup

South America

2024-06-06 | Buenos Aires, AR | Rust en Español | Rust Argentina

Juntada de Junio

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

Every PR is Special™

– Jieyou Xu describing being on t-compiler review rotation

Sadly, there was no suggestion, so llogiq came up with something hopefully suitable.

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust


Which one of your characters is most likely to go overboard in making insanely thought-out (and also unnecessarily complicated) contraptions that just end up hilariously failing in games like Rollercoaster Tycoon, Kerbal Space Program, and Cities: Skylines?

Harlow! They're very prone to overthinking things in general, but when it comes to those kinda games, you kinda just can't stop them from coming up with a whole elaborate setup for something, only for it to fail on like step 2 of 17. They'll keep refining it till it works though, it's just that usually the first time around nothing's gonna work lol


Unlocking Digital Success: From Linux OS Features to Website Ranking Insights

In today’s digital age, understanding both the tools you use and how your web presence performs is vital. Whether you're a developer choosing the right operating system or a business owner analyzing website performance, staying informed about technological essentials is crucial. Two core areas—operating systems like Linux and website ranking techniques—play pivotal roles in shaping the digital landscape. This blog dives into the dual worlds of operating systems and SEO to help you gain a competitive edge.

The Backbone of Digital Environments: Why Linux Matters

The operating system (OS) is the soul of any computing device. It manages hardware, software, files, and system resources. Among many options, Linux stands out as a highly favored OS, particularly in development environments, server management, and cybersecurity operations.

What Makes Linux So Powerful?

Linux is not just another OS—it’s a movement. Known for its open-source nature, Linux allows users to view, modify, and distribute its source code freely. This feature alone makes it ideal for customization and innovation, which is why it’s the OS of choice for developers and large enterprises alike.

Here are some standout features of the Linux operating system:

Open Source & Free: One of the biggest advantages is cost efficiency. Most distributions can be downloaded and used without licensing fees.

Stability & Performance: Linux systems can run for very long periods without failures or reboots (kernel updates aside), making them excellent for servers.

Security: Linux has a strong security record. Its user privilege model, under which ordinary accounts cannot modify system files without elevated rights, combined with active community support and prompt patching, makes it less prone to malware, although no operating system is immune.

Customization: From the kernel to the user interface, Linux can be tailored to suit specific user needs.

Support for Programming: It supports almost all major programming languages and development tools.
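The user privilege model mentioned above rests largely on per-file owner, group, and other permission bits. As a small illustration, assuming a Linux (POSIX) system, Python's standard os and stat modules can read those bits and flag world-writable files, a common hardening check. The helper name describe_permissions is hypothetical.

```python
import os
import stat
import tempfile

def describe_permissions(path: str) -> dict:
    """Summarize a file's owner/group/other permission bits (POSIX systems)."""
    mode = os.stat(path).st_mode
    return {
        "symbolic": stat.filemode(mode),               # ls -l style, e.g. '-rw-r-----'
        "world_writable": bool(mode & stat.S_IWOTH),   # any user may modify it
        "owner_can_write": bool(mode & stat.S_IWUSR),
    }

# Demo on a throwaway file: owner read/write, group read, others nothing
with tempfile.NamedTemporaryFile(delete=False) as f:
    path = f.name
os.chmod(path, 0o640)
print(describe_permissions(path))
# on Linux: {'symbolic': '-rw-r-----', 'world_writable': False, 'owner_can_write': True}
os.remove(path)
```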

If you're curious to explore more about the features of the Linux operating system, there’s a wealth of resources available to expand your understanding of why it continues to dominate the server and development space.

The Other Side of the Digital Coin: Your Website's Visibility

While having the right OS supports your backend operations, your digital presence—especially your website—needs to be discoverable. That’s where SEO and ranking tools come into play. The internet has billions of websites, but only a few make it to the first page of search engine results. So, how do you ensure your website doesn’t get lost in the crowd?

Why Website Ranking Matters

Website ranking refers to your site’s position in search engine results for a particular keyword or phrase. The higher your ranking, the more visibility and traffic you gain. This directly impacts brand credibility, lead generation, and conversions.

Several factors affect website ranking, including:

Keyword Optimization: Relevant keywords improve your chances of being found.

Page Speed: Fast-loading websites get better user engagement and rankings.

Mobile Friendliness: Google prioritizes mobile-optimized websites.

Backlinks: Quality backlinks from authoritative sources boost credibility.

Content Quality: Fresh, informative, and original content keeps users engaged.

Whether you’re a blogger, business owner, or digital marketer, knowing how to check a website ranking is key to tracking your SEO success and making informed decisions.

Tools to Check Website Rankings

There are various free and paid tools available that help you analyze your website’s performance on search engines. Here are some popular ones:

1. Google Search Console

This is a must-have tool for anyone who owns a website. It provides insights into search performance, including impressions, click-through rates (CTR), and keyword rankings.

2. SEMrush

Ideal for competitive analysis, SEMrush gives a detailed breakdown of keyword rankings, backlink profiles, and even your competitors’ strategies.

3. Ahrefs

Ahrefs is a favorite among SEO professionals. It helps track keyword performance, backlink audits, and content gaps.

4. Ubersuggest

A beginner-friendly tool by Neil Patel, Ubersuggest offers keyword tracking, site audit, and content suggestions.

5. Moz Pro

Moz offers a suite of SEO tools, including a keyword explorer and rank tracker that helps improve your online visibility.

6. SERPWatcher by Mangools

This tool allows you to monitor daily rankings and shows potential traffic impact, giving you a clear picture of your site's SEO performance.

Each tool provides a different perspective, but all aim to answer the same question: How well is your website performing in search results?
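Most of these tools report the same core numbers. CTR is clicks divided by impressions, and when combining several queries, weighting each query's average position by its impressions keeps rarely shown queries from skewing the result. A minimal sketch, assuming you have already exported per-query rows; the QueryRow fields and sample values are hypothetical, not any tool's actual export format.

```python
from dataclasses import dataclass

@dataclass
class QueryRow:
    # Hypothetical shape of one exported row; real tools use their own formats
    query: str
    clicks: int
    impressions: int
    position: float  # average position for this query (1 = top result)

def summarize(rows: list[QueryRow]) -> dict:
    """Overall CTR and impression-weighted average position across queries."""
    clicks = sum(r.clicks for r in rows)
    impressions = sum(r.impressions for r in rows)
    if impressions == 0:
        return {"ctr": 0.0, "avg_position": None}
    # Weight each query's position by how often it was actually shown
    weighted = sum(r.position * r.impressions for r in rows) / impressions
    return {"ctr": round(clicks / impressions, 4), "avg_position": round(weighted, 2)}

rows = [
    QueryRow("linux features", clicks=30, impressions=1000, position=4.0),
    QueryRow("check website ranking", clicks=10, impressions=500, position=12.0),
]
print(summarize(rows))  # {'ctr': 0.0267, 'avg_position': 6.67}
```

A plain, unweighted mean of the two positions would give 8.0 here, overstating how far down the site usually appears.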

Bridging the Gap Between Performance and Visibility

Combining robust backend systems like Linux with smart digital marketing strategies creates a well-rounded, successful online presence. Here’s how the two worlds intersect:

1. Server Performance & SEO

Search engines consider page load time a critical ranking factor. A stable and fast Linux server ensures your site remains operational and loads quickly, improving both user experience and rankings.

2. Security Enhancements

Google has increasingly emphasized secure websites (HTTPS). Linux’s security features help you maintain a protected environment, reducing the risk of being penalized due to vulnerabilities.

3. Customization for Optimization

With Linux, you can fine-tune your server settings for caching, compression, and other performance metrics that impact SEO directly.

4. Open-Source Tools for SEO

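Linux runs many SEO-related tools. Matomo (an open-source alternative to Google Analytics) can be self-hosted directly on your server, while desktop tools such as Screaming Frog SEO Spider (proprietary, with a free version) and GIMP (open source, useful for image optimization) also run on Linux.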
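To see why compression matters for page speed, here is a sketch using Python's gzip module on a hypothetical, repetitive HTML payload. On a real Linux server you would normally enable compression in the web server itself (for example, nginx's gzip directive) rather than in application code; the sketch only measures the size difference.

```python
import gzip

def gzip_savings(body: bytes, level: int = 6) -> tuple[int, int]:
    """Return (original_size, compressed_size) for a response body."""
    compressed = gzip.compress(body, compresslevel=level)
    return len(body), len(compressed)

# Hypothetical page fragment: repetitive markup compresses very well
html = ("<li class='game'><a href='/games'>Game</a></li>\n" * 500).encode()
original, compressed = gzip_savings(html)
print(f"{original} bytes -> {compressed} bytes ({compressed / original:.1%} of original)")
```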

Final Thoughts: Empower Your Digital Journey

Technology is ever-evolving, and to stay ahead, you need to harness both the power of a reliable system and the strategic insight of digital marketing. Whether you're setting up a new website or managing existing infrastructure, a dual focus on backend efficiency and frontend visibility is the key to success.

Start by strengthening your foundation with a powerful OS—Linux—and keep your finger on the pulse of your online presence by learning how to check a website ranking regularly. The combination of technical resilience and SEO strategy ensures your digital journey is both secure and successful.

By integrating these two essential aspects—features of the Linux operating system and practical SEO tactics—you build a digital strategy that’s both strong and smart. In the end, it’s not just about having a website—it’s about having a website that performs.


How to Efficiently Share GPU Resources?

Because GPUs, especially high-end ones, are expensive, companies often ask: GPU utilization is rarely at 100% all the time, so could we split a GPU, much as we run multiple virtual machines on one server, and give each user a portion to significantly improve utilization? In reality, however, GPU virtualization lags far behind CPU virtualization, for several reasons:

1. The inherent differences in how GPUs and CPUs work
2. The inherent differences in GPU and CPU use cases
3. Differences in how far manufacturers and the wider industry have progressed

Today, we’ll start with an overview of how GPUs work and explore several methods for sharing GPU resources, ultimately discussing what kind of GPU sharing most enterprises need in the AI era and how to improve GPU utilization and efficiency.

1. Overview of How GPUs Work

1. Highly Parallel Hardware Architecture

A GPU (Graphics Processing Unit) was originally designed for graphics acceleration and is a processor built for large-scale data-parallel computing. Compared with general-purpose CPUs, GPUs contain a large number of streaming multiprocessors (SMs, or similar units under other vendors' names), capable of executing hundreds or even thousands of threads simultaneously under a Single Instruction, Multiple Threads (SIMT) model, a close relative of SIMD.
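To make the data-parallel model concrete, the sketch below simulates, sequentially in plain Python, how a one-dimensional kernel launch maps threads to array elements. The index formula mirrors CUDA's blockIdx.x * blockDim.x + threadIdx.x; the function name and block size are illustrative assumptions, and nothing here runs on a GPU.

```python
def launch_vector_add(a: list[float], b: list[float], block_dim: int = 4) -> list[float]:
    """Sequentially simulate a 1-D GPU grid computing out[i] = a[i] + b[i]."""
    n = len(a)
    out = [0.0] * n
    grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover all n elements
    for block_idx in range(grid_dim):            # on a GPU, blocks are spread across SMs
        for thread_idx in range(block_dim):      # on a GPU, these threads run in parallel
            i = block_idx * block_dim + thread_idx  # global thread index
            if i < n:                               # guard: the last block may be partly idle
                out[i] = a[i] + b[i]                # same instruction, different element
    return out

print(launch_vector_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50]))  # [11, 22, 33, 44, 55]
```

The bounds check matters because the grid is rounded up to whole blocks: five elements with four threads per block launch eight threads, three of which do nothing.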

2. Context and Video Memory (VRAM)

Context: In a CUDA programming environment, if different processes (or containers) want to use the GPU, each needs its own CUDA Context. The GPU switches between these contexts via time-slicing or merges them for sharing (e.g., NVIDIA MPS merges multiple processes into a single context).

Video Memory (VRAM): The GPU's onboard memory capacity is fixed, and it is managed differently from CPU memory, where the MMU and the OS kernel provide transparent virtual-memory paging. GPU programs typically allocate VRAM explicitly (in CUDA, for example, with cudaMalloc). As shown in the diagram below, GPUs feature numerous ALUs grouped into SMs, each SM with its own small cache and shared memory.

3. GPU-Side Hardware and Scheduling Modes

GPU context switching is significantly more complex and less efficient than CPU context switching. Older GPUs often had to finish running a kernel (a GPU-side compute function) before they could switch, and although newer architectures support finer-grained preemption, saving and restoring a context's state between processes still costs far more than a CPU context switch.
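The cost of switching can be sketched with a toy round-robin model: several contexts take turns in fixed time slices, and every change of context adds a fixed save/restore penalty. The slice and penalty values below are hypothetical, chosen only to show the trade-off: longer slices waste less time on switching but make each tenant wait longer for its turn.

```python
def time_slice_schedule(work_ms: list[float], slice_ms: float, switch_ms: float) -> tuple[float, float]:
    """Round-robin GPU contexts; return (wall_time_ms, fraction of time doing useful work).

    A switch penalty is charged whenever the GPU moves to a different context.
    """
    remaining = list(work_ms)
    wall = 0.0
    current = None
    while any(r > 0 for r in remaining):
        for ctx, r in enumerate(remaining):
            if r <= 0:
                continue                 # this context has finished
            if current is not None and current != ctx:
                wall += switch_ms        # save/restore cost between contexts
            run = min(slice_ms, r)
            wall += run
            remaining[ctx] -= run
            current = ctx
    return wall, sum(work_ms) / wall

for slice_ms in (2, 5):
    wall, frac = time_slice_schedule([10, 10], slice_ms=slice_ms, switch_ms=1)
    print(f"slice={slice_ms} ms: wall {wall} ms, {frac:.0%} useful")
# slice=2 ms: wall 29.0 ms, 69% useful
# slice=5 ms: wall 23.0 ms, 87% useful
```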

GPU resources have two main dimensions: compute power (corresponding to SMs, etc.) and VRAM. In practice, both compute utilization and VRAM availability must be considered.

2. Why GPU Sharing Technology Lags Behind CPU Sharing

1. Mature CPU Virtualization with Robust Instruction Sets and Hardware Support

CPU virtualization (e.g., KVM, Xen, VMware) has been developed for decades, with extensive hardware support (e.g., Intel VT-x, AMD-V). CPU contexts are relatively simple, and hardware vendors have deeply collaborated with virtualization providers.

2. High Parallelism and Costly Context Switching in GPUs

Because GPU context switching is more complex and costly than on CPUs, achieving "sharing" on GPUs requires flexibly handling concurrent access by different processes, VRAM contention, and compatibility with closed-source kernel drivers. For GPUs with hundreds or thousands of cores, manufacturers struggle to provide the kind of full, instruction-set-level hardware virtualization that CPUs have, or can reach it only after a long evolution.

(The diagram below illustrates GPU context switching, showing the significant latency it introduces.)

3. Differences in Use Case Demands

CPUs are commonly shared across large-scale multi-user virtual machines or containers, with most use cases demanding high CPU efficiency but not the intense thousands-of-threads matrix multiplication or convolution operations seen in deep learning training. In GPU training and inference scenarios, the goal is often to maximize peak compute power. Virtualization or sharing introduces context-switching overhead and conflicts with resource QoS guarantees, leading to technical fragmentation.

4. Vendor Ecosystem Variations

CPU vendors are relatively consolidated: internationally, Intel and AMD dominate with the x86 architecture, while Chinese domestic vendors mostly use x86 (or C86), ARM, and occasionally proprietary instruction sets like LoongArch. GPU vendors, by contrast, are highly diverse, split into camps such as CUDA, CUDA-compatible, ROCm, and various proprietary ecosystems including CANN, which leaves the ecosystem heavily fragmented.

In summary, these factors cause GPU sharing technology to lag behind the maturity and flexibility of CPU virtualization.

3. Pros, Cons, and Use Cases of Common GPU Sharing Methods

Broadly speaking, GPU sharing approaches fall into the following types (names may vary, but the principles are similar):

1. vGPU (various implementations at the hardware, kernel, and user levels, e.g., NVIDIA vGPU, AMD MxGPU, kernel-level cGPU/qGPU)
2. MPS (NVIDIA Multi-Process Service, a context-merging solution)
3. MIG (NVIDIA Multi-Instance GPU, hardware isolation on A100/H100-class GPUs)
4. CUDA Hook (API hijacking/interception, e.g., user-level solutions like GaiaGPU)

1. vGPU

Basic Principle: Splits a single GPU into multiple virtual GPU (vGPU) instances via hardware, kernel, or user-level mechanisms. NVIDIA vGPU and AMD MxGPU are the most robustly supported official hardware/software solutions. Alternatives include the open-source KVMGT (Intel GVT-g) and cloud-vendor kernel-level solutions such as cGPU and qGPU.
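Time-sliced vGPU schemes share the GPU's compute engines by giving each instance turns on the hardware. The sketch below is a deliberately simplified round-robin model, not NVIDIA's or AMD's actual scheduler; the function `time_slice_shares`, the vGPU names, and the `reserve_idle` switch are illustrative assumptions. It shows how an instance's share of GPU time depends on whether idle slots are redistributed to busy instances or held in reserve.

```python
from fractions import Fraction


def time_slice_shares(vgpus, active, reserve_idle=False):
    """Return each vGPU's fraction of GPU time in a simplified round-robin model.

    vgpus: names of the vGPU instances configured on one physical GPU (hypothetical).
    active: names of the instances that currently have work queued.
    reserve_idle: if True, every configured instance keeps its slot even when idle,
                  so idle slots are wasted; if False, slots are divided among
                  active instances only.
    """
    unknown = set(active) - set(vgpus)
    if unknown:
        raise ValueError(f"unknown vGPU(s): {sorted(unknown)}")
    pool = list(vgpus) if reserve_idle else [v for v in vgpus if v in active]
    if not pool:
        return {v: Fraction(0) for v in vgpus}
    slot = Fraction(1, len(pool))
    # Idle instances get nothing; each active instance gets one slot per cycle.
    return {v: (slot if v in active else Fraction(0)) for v in vgpus}


configured = ["vm-a", "vm-b", "vm-c", "vm-d"]
print(time_slice_shares(configured, active={"vm-a", "vm-b"}))                     # 1/2 each for a, b
print(time_slice_shares(configured, active={"vm-a", "vm-b"}, reserve_idle=True))  # 1/4 each for a, b
```

Holding slots for idle instances gives predictable QoS at the cost of wasted cycles; redistributing them raises utilization but makes each tenant's performance depend on its neighbors.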

Pros:

Flexible allocation of compute power and VRAM, enabling “running multiple containers or VMs on one card.”

Mature support from hardware vendors (e.g., NVIDIA vGPU, AMD MxGPU) with strong QoS, driver maintenance, and ecosystem compatibility.

Cons:

Some official solutions (e.g., NVIDIA vGPU) only support VMs, not containers, and come with high licensing costs.

Third-party user- or kernel-level solutions (e.g., vCUDA, cGPU) may require adaptation to different CUDA versions and offer weaker security and isolation than official hardware solutions.

Use Cases:

Enterprises needing GPU-accelerated virtual desktops, workstations, or cloud gaming rendering.

Multi-service coexistence requiring VRAM/compute quotas with high utilization demands.

Workloads that need moderate security isolation and can tolerate adaptation and licensing costs.

(The diagram below shows the vGPU principle, with one physical GPU split into three vGPUs passed through to VMs.)

2. MPS (Multi-Process Service)

Basic Principle: An NVIDIA-provided “context-merging” sharing method, hardware-accelerated on Volta and later architectures. Multiple processes act as MPS Clients, and their work runs inside a single shared GPU context managed by the MPS Daemon instead of each process holding its own context.
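Setting up MPS mostly involves starting NVIDIA's control daemon and pointing client processes at it. The sketch below assumes a Linux host whose CUDA driver ships `nvidia-cuda-mps-control`; the directory paths are arbitrary examples. `CUDA_MPS_ACTIVE_THREAD_PERCENTAGE` (Volta and later) caps the fraction of SMs a client may use, which is a soft compute quota, not memory or fault isolation.

```python
import os
import subprocess

DEFAULT_PIPE_DIR = "/tmp/nvidia-mps"      # example path; any writable directory works
DEFAULT_LOG_DIR = "/tmp/nvidia-mps-log"   # example path


def mps_client_env(thread_percentage, pipe_dir=DEFAULT_PIPE_DIR, base_env=None):
    """Return an environment dict for one MPS client process.

    CUDA_MPS_PIPE_DIRECTORY must match the daemon's pipe directory so the client
    connects to it; CUDA_MPS_ACTIVE_THREAD_PERCENTAGE limits the client's share of SMs.
    """
    if not 0 < thread_percentage <= 100:
        raise ValueError("thread_percentage must be in (0, 100]")
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_MPS_PIPE_DIRECTORY"] = pipe_dir
    env["CUDA_MPS_ACTIVE_THREAD_PERCENTAGE"] = str(thread_percentage)
    return env


def start_mps_daemon(pipe_dir=DEFAULT_PIPE_DIR, log_dir=DEFAULT_LOG_DIR):
    """Start the MPS control daemon in background mode (-d)."""
    os.makedirs(pipe_dir, exist_ok=True)
    os.makedirs(log_dir, exist_ok=True)
    env = dict(os.environ, CUDA_MPS_PIPE_DIRECTORY=pipe_dir, CUDA_MPS_LOG_DIRECTORY=log_dir)
    subprocess.run(["nvidia-cuda-mps-control", "-d"], env=env, check=True)


def stop_mps_daemon(pipe_dir=DEFAULT_PIPE_DIR):
    """Ask the control daemon to shut down by sending it 'quit' on stdin."""
    env = dict(os.environ, CUDA_MPS_PIPE_DIRECTORY=pipe_dir)
    subprocess.run(["nvidia-cuda-mps-control"], input="quit\n", text=True, env=env, check=True)
```

A typical flow would be `start_mps_daemon()`, then launching each worker with `subprocess.Popen([...], env=mps_client_env(50))`, and finally `stop_mps_daemon()`; the worker command itself is up to you.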

Pros:

Better performance: Kernels from different processes can run concurrently on the GPU (scheduled in parallel by the hardware), avoiding frequent context switches; ideal for multiple small inference tasks or multi-process training within the same framework.

Uses official drivers with good CUDA version compatibility, minimizing third-party adaptation.

Cons:

Poor fault isolation: If the MPS Daemon or a task fails, all shared processes are affected.

No hard VRAM isolation; higher-level scheduling is needed to prevent one process’s memory leak from impacting others.

Use Cases:

Typical “small-scale inference” scenarios for maximizing parallel throughput.

Packing multiple small jobs onto one GPU (requires careful fault isolation and VRAM contention management).

(The diagram below illustrates MPS, showing tasks from two processes merged into one context, running near-parallel on the GPU.)

3. MIG (Multi-Instance GPU)

Basic Principle: A hardware-level isolation solution introduced by NVIDIA with the Ampere architecture (A100) and carried forward to later architectures such as Hopper (H100). It directly partitions SMs, L2 cache, and memory controllers, allowing an A100 to be split into up to seven sub-cards, each with strong hardware isolation.
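Because MIG carves the card into fixed slices, a partition plan can be sanity-checked before touching the hardware. The sketch below assumes an A100 40GB, which exposes 7 compute slices and 8 memory slices of 5 GB each. It only checks aggregate totals, not MIG's placement rules, so `nvidia-smi` may still reject a plan that passes here; `fits_budget` is an illustrative name.

```python
# Slice costs for A100 40GB MIG profiles: (compute slices of 7, memory slices of 8).
A100_40GB_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
COMPUTE_SLICES, MEMORY_SLICES = 7, 8


def fits_budget(plan):
    """Return True if a list of profile names fits the card's total slice budget."""
    compute = memory = 0
    for name in plan:
        if name not in A100_40GB_PROFILES:
            raise KeyError(f"unknown profile: {name}")
        c, m = A100_40GB_PROFILES[name]
        compute += c
        memory += m
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


print(fits_budget(["1g.5gb"] * 7))                     # seven smallest instances
print(fits_budget(["3g.20gb", "3g.20gb", "1g.5gb"]))   # runs out of memory slices
```

On a real card, MIG mode is enabled with `nvidia-smi -i 0 -mig 1`, `nvidia-smi mig -lgip` lists the available profiles and their IDs, and `nvidia-smi mig -cgi <profile IDs> -C` creates the GPU instances together with default compute instances.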

Pros:

Highest isolation: VRAM, bandwidth, etc., are split at the hardware level, with no fault propagation between instances.

No external API hooks or licenses required (based on A100/H100 hardware features).

Cons:

Limited flexibility: Only a fixed set of instance profiles is supported (e.g., 1g.5gb, 2g.10gb, 3g.20gb), typically seven instances or fewer per card, so granularity is coarse.

Only available on MIG-capable data-center GPUs (e.g., A100, A30, H100); older GPUs cannot benefit.

Use Cases:

High-performance computing, public/private clouds requiring multi-tenant parallelism with strict isolation and static allocation.

Multiple users sharing a large GPU server, each needing only a portion of A100 compute power without interference.

(The diagram below shows supported profiles for A100 MIG.)

4. CUDA Hook (API Hijacking/Interception)

Basic Principle: Modifies or intercepts CUDA dynamic libraries (Runtime or Driver API) to capture application calls to the GPU (e.g., VRAM allocation, kernel submission), then applies resource limits, scheduling, and statistics in user space or an auxiliary process.
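The core of a CUDA Hook is bookkeeping around intercepted calls. Production hooks are often C shared libraries injected with `LD_PRELOAD` that wrap Driver API symbols such as `cuMemAlloc`; the Python sketch below only mirrors that logic. `AllocationHook`, `mem_alloc`, and `mem_free` are hypothetical names, and a fake allocator stands in for the real driver call.

```python
import itertools


class VramQuotaExceeded(MemoryError):
    """Raised when an allocation would push a tenant past its VRAM quota."""


class AllocationHook:
    """Toy stand-in for a hooked allocate/free pair (e.g., cuMemAlloc/cuMemFree).

    `real_alloc` and `real_free` are plain callables here; in a real hook they
    would be the original driver functions looked up behind the wrapper.
    """

    def __init__(self, quota_bytes, real_alloc, real_free):
        self.quota = quota_bytes
        self.used = 0
        self.sizes = {}  # handle -> size, so frees can be credited back
        self._alloc = real_alloc
        self._free = real_free

    def mem_alloc(self, size):
        # Reject the request before it ever reaches the driver.
        if self.used + size > self.quota:
            left = self.quota - self.used
            raise VramQuotaExceeded(f"requested {size} B, only {left} B left in quota")
        handle = self._alloc(size)  # forward to the underlying allocator
        self.used += size
        self.sizes[handle] = size
        return handle

    def mem_free(self, handle):
        size = self.sizes.pop(handle)  # KeyError for handles this hook never issued
        self._free(handle)
        self.used -= size


# Demo with a fake allocator that just hands out increasing integer handles.
_handles = itertools.count(1)
hook = AllocationHook(1 << 30, real_alloc=lambda size: next(_handles), real_free=lambda h: None)
buf = hook.mem_alloc(512 << 20)  # 512 MiB of a 1 GiB quota
```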

Pros:

Lower development barrier: No major kernel changes or strong hardware support needed; better compatibility with existing GPUs.

Enables flexible throttling/quotas (e.g., merged scheduling, GPU usage stats, delayed kernel execution).
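“Delayed kernel execution” can be as simple as a rate limiter placed in front of the real launch call. Below is a minimal token-bucket sketch under that assumption; `LaunchThrottle` and its parameters are illustrative, and the injectable `clock`/`sleep` callables exist only so the logic can be tested without a GPU.

```python
import time


class LaunchThrottle:
    """Token-bucket limiter for hooked kernel launches (illustrative only).

    Each launch costs one token; tokens refill at `rate` per second up to
    `burst`. When the bucket is empty, the launch is delayed instead of being
    forwarded immediately, which caps how often a tenant can submit work.
    """

    def __init__(self, rate, burst, clock=time.monotonic, sleep=time.sleep):
        if rate <= 0 or burst < 1:
            raise ValueError("rate must be > 0 and burst >= 1")
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)
        self.clock = clock
        self.sleep = sleep
        self.last = clock()

    def _refill(self):
        now = self.clock()
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def launch(self, kernel, *args):
        self._refill()
        if self.tokens < 1:
            # Wait just long enough for one token to accumulate.
            self.sleep((1 - self.tokens) / self.rate)
            self._refill()
        self.tokens -= 1
        return kernel(*args)  # forward to the real launch function
```

A real hook would also need to account for kernel duration, not just launch count, since one long kernel can occupy the GPU as much as many short ones.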

Cons:

Weaker fault isolation and added overhead: Every call passes through the hook for analysis and scheduling, and multi-process context switching must be handled carefully.

In large-scale training, frequent hijacking/instrumentation introduces performance loss.

Use Cases:

Internal enterprise or specific task needs requiring quick “slicing” of a large GPU.

Development scenarios where a single card is temporarily split for multiple small jobs to boost utilization.

5. Brief Overview of Remote Invocation (e.g., rCUDA)

Concept: Remote invocation (e.g., rCUDA, VGL) is essentially API remoting, transmitting GPU instructions over a network to a remote server for execution, then returning results locally.
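API remoting means every intercepted call has to be serialized, sent, and decoded on the server. The sketch below uses a made-up header (opcode, request ID, payload length) built with Python's `struct` module; it is not rCUDA's actual protocol, and the opcode values are hypothetical.

```python
import struct

# Hypothetical header for one remoted call: opcode (u16), request id (u32),
# payload length (u32), all big-endian ("!") with no padding.
HEADER = struct.Struct("!HII")

# Illustrative opcodes; these are not taken from rCUDA or any real protocol.
OP_MEMCPY_H2D = 1
OP_LAUNCH_KERNEL = 2


def pack_call(opcode, request_id, payload):
    """Client side: serialize one intercepted API call into bytes for the network."""
    return HEADER.pack(opcode, request_id, len(payload)) + payload


def unpack_call(message):
    """Server side: decode the header and slice out the payload."""
    opcode, request_id, length = HEADER.unpack_from(message)
    payload = message[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated message")
    return opcode, request_id, payload


# A host-to-device copy must ship the whole buffer, here 1 MiB of zeros.
buffer = bytes(1 << 20)
wire = pack_call(OP_MEMCPY_H2D, 42, buffer)
```

The header is trivial; the cost is the payload. Any call that moves data carries the full buffer across the network, which is why data-heavy workloads suffer most.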

Pros:

Enables GPU use on nodes without GPUs.

Theoretically allows GPU resource pooling.

Cons:

Network bandwidth and latency become bottlenecks, reducing efficiency (see the bandwidth comparison below).

High adaptation complexity: GPU operations require conversion, packing, and unpacking, making it inefficient for high-throughput scenarios.

Use Cases:

Distributed clusters with small-batch, latency-insensitive compute jobs (e.g., VRAM usage within a few hundred MB).

For high-performance, low-latency needs, remote invocation is generally impractical.

(The diagram below compares the bandwidths of the network, CPU, and GPU. Although not perfectly precise, it highlights the large gap between even a 400 Gb network and GPU VRAM bandwidth.)
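The network-versus-VRAM gap can be checked with simple arithmetic. This sketch converts a 400 Gb/s link to bytes per second and compares ideal transfer times against the A100 40GB's published peak memory bandwidth of about 1,555 GB/s. Both figures are theoretical peaks; real remoting adds serialization and latency on top.

```python
NETWORK_GBIT_PER_S = 400   # a 400 Gb network link
HBM_GBYTE_PER_S = 1555     # A100 40GB peak memory bandwidth (spec sheet)


def gbit_to_gbyte(gbit_per_s):
    """Convert a link rate in Gb/s to GB/s (8 bits per byte, protocol overhead ignored)."""
    return gbit_per_s / 8


def transfer_ms(size_gb, gbyte_per_s):
    """Ideal time in milliseconds to move size_gb at a given bandwidth."""
    return size_gb / gbyte_per_s * 1000


net = gbit_to_gbyte(NETWORK_GBIT_PER_S)  # 50.0 GB/s
for size_gb in (1, 10):
    print(f"{size_gb} GB: network {transfer_ms(size_gb, net):.1f} ms, "
          f"HBM {transfer_ms(size_gb, HBM_GBYTE_PER_S):.2f} ms")
print(f"HBM peak is about {HBM_GBYTE_PER_S / net:.0f}x the network rate")
```

Moving 1 GB takes about 20 ms over the link versus roughly 0.64 ms inside HBM, a gap of around 31x before any protocol cost is counted.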

III. Model-Level “Sharing” vs. GPU Slicing

With the rise of large language models (LLMs) like Qwen, Llama, and DeepSeek, model size, parameter count, and VRAM requirements are growing. A “single card or single machine” often can’t accommodate the model, let alone handle large-scale inference or training. This has led to model-level “slicing” and “sharing” approaches, such as:

1. Tensor Parallelism, Pipeline Parallelism, Expert Parallelism, etc.
2. Zero Redundancy Optimizer (ZeRO) or VRAM optimizations in distributed training frameworks.
3. GPU slicing for multi-user requests in small-model inference scenarios.
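ZeRO's savings can be estimated with the memory model from the original ZeRO paper: mixed-precision Adam keeps 2 bytes of FP16 weights, 2 bytes of FP16 gradients, and K = 12 bytes of FP32 optimizer state per parameter, and each ZeRO stage shards more of that across N GPUs. The sketch below covers model states only (no activations or buffers); the function name is illustrative.

```python
def zero_model_state_bytes(num_params, num_gpus, stage, k=12):
    """Per-GPU model-state memory in bytes for mixed-precision Adam with ZeRO.

    Per parameter: 2 B fp16 weights + 2 B fp16 gradients + k B optimizer state
    (k = 12 for fp32 master weights, momentum, and variance).
    stage 0 = plain data parallelism, 1 = shard optimizer states,
    2 = also shard gradients, 3 = also shard parameters.
    """
    n = num_gpus
    if stage == 0:
        per_param = 2 + 2 + k
    elif stage == 1:
        per_param = 2 + 2 + k / n
    elif stage == 2:
        per_param = 2 + (2 + k) / n
    elif stage == 3:
        per_param = (2 + 2 + k) / n
    else:
        raise ValueError("stage must be 0, 1, 2, or 3")
    return per_param * num_params


for stage in range(4):
    gb = zero_model_state_bytes(7.5e9, 64, stage) / 1e9
    print(f"stage {stage}: {gb:.1f} GB per GPU")
```

For a 7.5B-parameter model on 64 GPUs this gives 120 GB per GPU without ZeRO versus about 1.9 GB with stage 3, which is why sharding model states across cards, rather than slicing one card, is the lever for large models.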

When deploying large models, they are often split across multiple GPUs/nodes to leverage greater total VRAM and compute power. Here, model parallelism effectively becomes “managing multi-GPU resources via distributed training/inference frameworks,” a higher-level form of “GPU sharing”:

For very large models, even slicing a single GPU (via vGPU/MIG/MPS) is futile if VRAM capacity is insufficient.

In MoE (Mixture of Experts) scenarios, maximizing throughput requires more GPUs for scheduling and routing, fully utilizing compute power. At this point, the need for GPU virtualization or sharing diminishes, shifting focus from VRAM slicing to high-speed multi-GPU interconnects.
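Whether slicing can help comes down to arithmetic on weight size. The sketch below computes a lower bound on the number of GPUs needed just to hold the weights. The 70B-parameter FP16 example and the 80 GB card are illustrative assumptions, and KV cache, activations, and framework overhead would raise the real number.

```python
import math


def weights_gb(num_params, bytes_per_param):
    """Size of the weights alone in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params, bytes_per_param, gpu_vram_gb):
    """Lower bound on the number of GPUs needed just to store the weights."""
    return math.ceil(weights_gb(num_params, bytes_per_param) / gpu_vram_gb)


# Illustrative: a 70B-parameter model in FP16 (2 bytes/param) on 80 GB cards.
print(weights_gb(70e9, 2))                 # 140.0
print(min_gpus_for_weights(70e9, 2, 80))   # 2
```

If even one whole card is too small, cutting it into smaller pieces only makes things worse; the only way forward is to combine cards.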

When to Use Model Parallelism vs. GPU Virtualization

1. Ultra-Large Model Scenarios

If a single card’s VRAM can’t handle the workload, multi-card or multi-machine distributed parallelism is necessary. Here, GPU slicing at the hardware level is less relevant—you’re not “dividing” one physical card among tasks but “combining” multiple cards to support a massive model.

(The diagram below illustrates tensor parallelism, splitting a matrix operation into smaller sub-matrices.)
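Column-wise tensor parallelism can be demonstrated without any framework: shard the weight matrix by columns, let each “device” multiply its shard, then concatenate the partial results. The pure-Python sketch below simulates devices as list entries; in a real system the concatenation would be an all-gather over NVLink or the network, and the function names here are illustrative.

```python
def matmul(a, b):
    """Plain matrix product of two lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def split_columns(b, parts):
    """Split matrix b into `parts` equal column shards, one per simulated device."""
    cols = len(b[0])
    if cols % parts:
        raise ValueError("column count must divide evenly across devices")
    w = cols // parts
    return [[row[p * w:(p + 1) * w] for row in b] for p in range(parts)]


def column_parallel_matmul(a, b, parts):
    # Each "device" holds one column shard of b and computes a @ shard independently.
    partials = [matmul(a, shard) for shard in split_columns(b, parts)]
    # Concatenate the partial outputs along the column axis (an all-gather in practice).
    return [sum((p[i] for p in partials), []) for i in range(len(a))]


A = [[1, 2], [3, 4]]
B = [[1, 0, 2, 1], [0, 1, 1, 3]]
print(column_parallel_matmul(A, B, parts=2))  # [[1, 2, 4, 7], [3, 4, 10, 15]]
```

Each shard needs only its slice of the weights, so per-device memory for that layer drops roughly by the number of devices, at the price of a communication step to reassemble the output.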

2. Mature Inference and Training Applications

In production-grade LLM inference or training pipelines, multi-card parallelism and batch scheduling are typically well-established. GPU slicing or remote invocation adds management complexity and reduces performance, making distributed training/inference on multi-card clusters more practical.

3. Small Models and Testing Scenarios

For temporary testing, small-model applications, or low-batch inference, GPU virtualization/sharing can boost utilization. A high-end GPU might use only 10% of its compute or VRAM (e.g., when running an embedding model that needs just a few hundred MB to a few GB), and slicing can effectively improve efficiency.

Should You Use Remote Invocation?

Remote invocation may suit simple intra-cluster sharing, but for latency- and bandwidth-sensitive tasks like LLM inference/training, large-scale networked GPU calls are impractical.

Production environments typically avoid remote invocation to ensure low inference latency and minimize overhead, opting for direct GPU access (whole card or sliced) instead. Communication delays from remote calls significantly impact throughput and responsiveness.

IV. General Recommendations and Conclusion

1. Large Models or High-Performance Applications

When single-card VRAM is insufficient, rely on model-level distributed parallelism (e.g., tensor parallelism, pipeline parallelism, MoE) to “merge” GPU resources at a higher level, rather than “slicing” at the physical card level. This maximizes performance and avoids excessive context-switching losses.

Remote invocation (e.g., rCUDA) is not recommended here unless under a specialized high-speed, low-latency network with relaxed performance needs.

2. Small-Model Testing Scenarios with Low GPU Utilization

For VMs, consider MIG (on A100/H100) or vGPU (e.g., NVIDIA vGPU or third-party cGPU/qGPU) to split a large card for multiple parallel tasks; for containers, use CUDA Hook for flexible quotas to improve resource usage.

Watch for fault isolation and performance overhead. For high QoS/stability needs, prioritize MIG (best isolation) or official vGPU (if licensing budget allows).

For specific or testing scenarios, CUDA Hook/hijacking offers the most flexible deployment.
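The recommendations above can be condensed into a rule-of-thumb function. This is a simplification of this section's guidance, not a complete policy; the parameter names and return labels are assumptions chosen for illustration.

```python
def suggest_sharing(fits_on_one_gpu, runtime, needs_strong_isolation,
                    mig_capable=False, vgpu_license=False):
    """Map a workload to a sharing approach using this section's rules of thumb.

    runtime: "vm" or "container". Returns a short label, not a deployment plan.
    """
    if runtime not in ("vm", "container"):
        raise ValueError("runtime must be 'vm' or 'container'")
    if not fits_on_one_gpu:
        # Large models: combine cards at the model level instead of slicing one.
        return "model parallelism"
    if needs_strong_isolation:
        if mig_capable:
            return "MIG"
        if vgpu_license and runtime == "vm":  # official vGPU targets VMs
            return "official vGPU"
    if runtime == "vm":
        return "MIG" if mig_capable else "vGPU"
    # Containers without MIG hardware: flexible user-space quotas.
    return "CUDA Hook"
```

Note the fall-through: without MIG hardware, a container that wants hard isolation still ends up with CUDA Hook, which is exactly the isolation gap this section warns about.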

3. Remote Invocation Is Not Ideal for Large-Scale GPU Sharing

Virtualizing GPUs via API remoting introduces significant network overhead and serialization delays. It’s only worth considering in rare distributed virtualization scenarios with low latency sensitivity.

V. Summary

The GPU’s working principles dictate higher context-switching costs and isolation challenges compared to CPUs. While various techniques exist (vGPU, MPS, MIG, CUDA Hook, rCUDA), none are as universally mature as CPU virtualization.

For ultra-large models and production: When models are massive (e.g., LLMs needing large VRAM/compute), “multi-card/multi-machine parallelism” is key, with the model itself handling slicing. GPU virtualization offers little help with performance or large VRAM needs. Resources can instead be shared through privately deployed MaaS (Model-as-a-Service) platforms.

For small models like embeddings or reranking: GPU virtualization/sharing is suitable, with technology choice depending on isolation, cost, SDK compatibility, and operational ease. MIG or official vGPU offer strong isolation but limited flexibility; MPS excels in parallelism but lacks fault isolation; CUDA hijacking is the most flexible and widely adopted.

Remote invocation (API remoting): Generally not recommended for high-performance scenarios unless network latency is controllable and workloads are small.

Thus, for large-scale LLMs (especially with massive parameters), the best practice is distributed parallel models (e.g., tensor or pipeline parallelism) to “share” and “allocate” compute, rather than virtualizing single cards. For small models or multi-user testing, GPU virtualization or slicing can boost single-card utilization. This approach is logical, evidence-based, and strategically layered.

But how can we further improve GPU efficiency and maximize throughput with limited resources? If you’re interested, we’ll dive deeper into this topic in future discussions.


ARMxy Edge Controller with CODESYS for Industrial Automation Solutions

Case Details