#Kubernetes For Deep Learning

Understand how Generative AI is accelerating Kubernetes adoption, shaping industries with scalable, automated, and innovative approaches.

#AI Startups Kubernetes#Enterprise AI With Kubernetes#Generative AI#Kubernetes AI Architecture#Kubernetes For AI Model Deployment#Kubernetes For Deep Learning#Kubernetes For Machine Learning

How Is Gen AI Driving Kubernetes Demand Across Industries?

Unveil how Gen AI is pushing Kubernetes to the forefront, delivering industry-specific solutions with precision and scalability.

Original Source: https://bit.ly/4cPS7G0

A new breakthrough in AI, called generative AI or Gen AI, is creating incredible waves across industries and beyond. As this technology rapidly evolves, there is growing pressure on existing infrastructure to support both its deployment and its scalability. Kubernetes, an effective container orchestration platform, is already proving to be one of the key enablers in this context. This article analyzes how Generative AI is driving the use of Kubernetes across industries, with a focus on how these two modern technologies work together.

The Rise of Generative AI and Its Impact on Technology

Machine learning has grown phenomenally over the years and is now foundational in industries including healthcare, banking, manufacturing, and media and entertainment. Generative AI, in which a model is trained to write, design, or even solve business problems, is changing how business is done. Its capacity to generate new data and solutions independently has opened up unprecedented opportunities for advancement.

As companies adopt Generative AI, the next big challenge they face is scaling and deploying these models. Such resource-intensive applications pose a major challenge to traditional IT architectures. This is where Kubernetes comes into the picture: it automates the deployment, scaling, and management of containerized applications. Kubernetes can be used to run machine learning and deep learning workloads, making AI pipelines more efficient and supporting the future growth of Gen AI applications.

The Intersection of Generative AI and Kubernetes

The integration of Generative AI and Kubernetes is arguably one of the most significant developments in AI deployment. Kubernetes’ scalability and flexibility are well suited to the dynamic nature of AI workloads. Gen AI models demand considerable computational resources, and Kubernetes provides the tools needed to orchestrate those resources when deploying AI models across different environments.

Kubernetes’ infrastructure is especially beneficial for AI startups and companies that plan to use Generative AI. It distributes workloads across multiple nodes, so the training, testing, and deployment of AI models can all run in a distributed fashion. This capability is especially important for businesses that need to iterate on their models continually to stay competitive. In addition, Kubernetes can schedule GPUs through vendor-supplied device plugins, which helps spread the heavy computational load of deep learning across the cluster and makes it well suited to AI projects.
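One way to spread a batch workload (for example data preprocessing or offline evaluation) across nodes is a Kubernetes Job in `Indexed` completion mode, where each pod receives its own index in the `JOB_COMPLETION_INDEX` environment variable. The minimal Python sketch below builds such a Job as a plain dictionary and shows how each worker could select its own shard of the data. The job name, image, file names, and the `NUM_WORKERS` variable are hypothetical, and real distributed training usually relies on a framework-specific launcher rather than simple sharding.

```python
import json
import os


def indexed_job_manifest(name: str, image: str, workers: int) -> dict:
    """Build a batch/v1 Job that runs `workers` pods, each with a unique completion index."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "completionMode": "Indexed",  # pods get JOB_COMPLETION_INDEX = 0..workers-1
            "completions": workers,
            "parallelism": workers,       # run all shards at the same time
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{
                        "name": "worker",
                        "image": image,
                        # Hypothetical variable telling each worker how many shards exist.
                        "env": [{"name": "NUM_WORKERS", "value": str(workers)}],
                    }],
                }
            },
        },
    }


def shard_for_index(items: list, index: int, total: int) -> list:
    """Return the items worker `index` should process (strided split; sizes differ by at most 1)."""
    if not 0 <= index < total:
        raise ValueError(f"index {index} out of range for {total} workers")
    return items[index::total]


if __name__ == "__main__":
    # Inside a pod, Kubernetes sets JOB_COMPLETION_INDEX; default to 0 when run locally.
    index = int(os.environ.get("JOB_COMPLETION_INDEX", "0"))
    total = int(os.environ.get("NUM_WORKERS", "4"))
    files = [f"part-{i:03d}.parquet" for i in range(10)]  # hypothetical dataset files
    print(shard_for_index(files, index, total))
    print(json.dumps(indexed_job_manifest("preprocess", "registry.example.com/preprocess:1.0", total), indent=2))
```

Applied with `kubectl apply -f`, a manifest like this would start one pod per shard, and the scheduler places those pods on whichever nodes have capacity.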

Key Kubernetes Features that Enable Efficient Generative AI Deployment

Scalability:

Kubernetes excels at horizontal scaling. Generative AI workloads often need a lot of computation, and Kubernetes can automatically scale the number of pods (running instances of an application) to provide the resources a workload needs, without human intervention.
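The Horizontal Pod Autoscaler’s core rule, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`; no scaling happens when the ratio is within a tolerance (0.1 by default), and the result is clamped to the configured minimum and maximum. The Python function below is a simplified sketch of that calculation only. The real controller also handles stabilization windows, pod readiness, and missing metrics, and the example numbers are made up.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for a single metric (e.g. average GPU or CPU utilization)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below the minimum or above the maximum replica count.
    return max(min_replicas, min(max_replicas, desired))


# 4 inference pods averaging 90% utilization against a 60% target -> scale out to 6.
print(desired_replicas(4, 90, 60))
```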

Resource Management:

AI workloads require resources to be allocated efficiently. Kubernetes schedules workloads onto the nodes of the cluster where AI models run and lets teams set resource requests and limits, so that resource consumption and distribution stay under control.
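In Kubernetes, each container can declare resource `requests` (what the scheduler reserves for it) and `limits` (the most it may use), and the scheduler only places a pod on a node whose unallocated capacity covers the pod’s summed requests. The Python sketch below expresses a hypothetical model-serving container’s resources as a plain dictionary and reimplements that fit check in simplified form. It handles only the CPU and memory quantity suffixes shown, and the container name, image, and sizes are illustrative assumptions.

```python
# Binary (Ki, Mi, ...) and decimal (K, M, ...) memory suffixes used in Kubernetes quantities.
_MEMORY_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
                 "K": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity such as '500m' (millicores) or '2' to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '512Mi' or '2G' to bytes."""
    for suffix in ("Ki", "Mi", "Gi", "Ti", "K", "M", "G", "T"):
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * _MEMORY_UNITS[suffix])
    return int(quantity)


# Hypothetical model-serving container.
model_server = {
    "name": "model-server",
    "image": "registry.example.com/genai/model-server:1.0",
    "resources": {
        "requests": {"cpu": "2", "memory": "8Gi"},  # reserved at scheduling time
        "limits": {"cpu": "4", "memory": "16Gi"},   # hard ceiling at runtime
    },
}


def fits_on_node(free: dict, containers: list) -> bool:
    """Simplified scheduler check: summed requests (not limits) must fit in the node's free capacity."""
    cpu = sum(parse_cpu(c["resources"]["requests"]["cpu"]) for c in containers)
    mem = sum(parse_memory(c["resources"]["requests"]["memory"]) for c in containers)
    return cpu <= parse_cpu(free["cpu"]) and mem <= parse_memory(free["memory"])


print(fits_on_node({"cpu": "3", "memory": "10Gi"}, [model_server]))     # enough room
print(fits_on_node({"cpu": "1500m", "memory": "32Gi"}, [model_server]))  # not enough CPU
```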

Continuous Integration and Continuous Deployment (CI/CD):

Kubernetes integrates well with CI/CD pipelines, enabling continuous integration and continuous deployment of models. This is essential for enterprises and AI startups that need the flexibility to launch different AI solutions as their business needs change.
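A common pattern is for the CI pipeline to build a container image tagged with the commit it was built from and then update a Kubernetes Deployment, which rolls the new version out gradually. The Python sketch below generates such a Deployment manifest and computes how many pods a rolling update may add or take down at once. The app name, registry, and commit hash are hypothetical; the rounding follows the documented Deployment behavior, where a percentage `maxSurge` rounds up and a percentage `maxUnavailable` rounds down.

```python
import json


def deployment_manifest(name: str, image_repo: str, git_sha: str, replicas: int = 4,
                        max_surge_pct: int = 25, max_unavailable_pct: int = 25) -> dict:
    """Build an apps/v1 Deployment whose image tag is the (shortened) commit SHA."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": f"{max_surge_pct}%",
                                  "maxUnavailable": f"{max_unavailable_pct}%"},
            },
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name,
                                         "image": f"{image_repo}:{git_sha[:12]}"}]},
            },
        },
    }


def rollout_bounds(replicas: int, max_surge_pct: int, max_unavailable_pct: int) -> tuple:
    """Return (max pods during the rollout, min available pods)."""
    surge = -(-replicas * max_surge_pct // 100)          # round up
    unavailable = replicas * max_unavailable_pct // 100  # round down
    return replicas + surge, replicas - unavailable


manifest = deployment_manifest("genai-api", "registry.example.com/genai-api",
                               "3f9c2a7e1b4d5c6a7f8e9d0c")
print(json.dumps(manifest, indent=2))  # a CI step could pipe this to `kubectl apply -f -`
print(rollout_bounds(10, 25, 25))
```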

GPU Support:

Kubernetes also supports GPUs for deep learning applications, which speeds up both training and inference of AI models. This is particularly helpful for AI applications that involve heavy data processing, such as image and speech recognition.
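GPUs are exposed to Kubernetes as extended resources by a vendor device plugin (for NVIDIA hardware the resource name is `nvidia.com/gpu`), and a pod asks for them under `limits`. The Python sketch below builds such a pod spec. It assumes the NVIDIA device plugin is installed on the cluster, and the pod name and image are hypothetical.

```python
import json


def gpu_training_pod(name: str, image: str, gpus: int = 1) -> dict:
    """Build a v1 Pod that requests whole GPUs through the NVIDIA device plugin."""
    # Extended resources such as GPUs are requested in whole units.
    if not isinstance(gpus, int) or gpus < 1:
        raise ValueError("gpus must be a positive integer")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "trainer",
                "image": image,
                # Specify GPUs in limits; Kubernetes uses the same value as the request.
                "resources": {"limits": {"nvidia.com/gpu": str(gpus)}},
            }],
        },
    }


print(json.dumps(gpu_training_pod("finetune-run-1", "registry.example.com/trainer:1.0", gpus=2), indent=2))
```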

Multi-Cloud and Hybrid Cloud Support:

Because Kubernetes can run across multiple cloud environments and on-premises data centers, it is a versatile AI deployment tool. This benefits organizations that need a hybrid cloud solution and organizations that want to avoid vendor lock-in.

Challenges of Running Generative AI on Kubernetes

Complexity of Setup and Management:

While Kubernetes provides a great platform for AI deployments, it comes at the cost of operational overhead. Deploying and configuring a Kubernetes cluster for AI workloads requires knowledge of both Kubernetes and the way these models are developed. This can be an obstacle for organizations that cannot build or hire the required expertise.

Resource Constraints:

Generative AI models require a lot of computing power, and running them in a Kubernetes environment can quickly exhaust a cluster’s computational resources. Those resources must be managed carefully so that constraints do not disrupt the delivery of application services.
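One way to keep a single team’s training jobs from exhausting shared GPUs is a `ResourceQuota` on its namespace; for extended resources such as GPUs, Kubernetes supports quota only with the `requests.` prefix (for example `requests.nvidia.com/gpu`). The sketch below builds such a quota and then mimics, in a very simplified way, how quota admission rejects pods whose requests would push the namespace over its limit. The namespace, limits, and pod list are hypothetical.

```python
import json


def genai_quota(namespace: str, max_gpus: int, max_cpu: str, max_memory: str) -> dict:
    """Build a v1 ResourceQuota capping total GPU, CPU, and memory requests in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "genai-compute", "namespace": namespace},
        "spec": {"hard": {
            "requests.nvidia.com/gpu": str(max_gpus),
            "requests.cpu": max_cpu,
            "requests.memory": max_memory,
        }},
    }


def admit_in_order(hard_gpus: int, pods: list) -> tuple:
    """Toy quota check: admit (name, gpus) pods in arrival order while usage stays within the cap."""
    used, admitted, rejected = 0, [], []
    for name, gpus in pods:
        if used + gpus <= hard_gpus:
            used += gpus
            admitted.append(name)
        else:
            rejected.append(name)  # the real API server rejects such a pod with HTTP 403 Forbidden
    return admitted, rejected


print(json.dumps(genai_quota("team-llm", 4, "32", "256Gi"), indent=2))
print(admit_in_order(4, [("finetune-a", 2), ("finetune-b", 3), ("eval-c", 1)]))
```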

Security Concerns:

As with any cloud-native application, security is a major concern when running AI models on Kubernetes. The data and models that AI systems use must be protected through policies for encryption, access control, and monitoring.
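Access control in Kubernetes is typically handled with RBAC: a `Role` lists which actions are allowed on which resources in a namespace, and a `RoleBinding` grants that role to a user or service account. The Python sketch below builds a read-only role for a hypothetical model-serving service account that may also read one named Secret, plus a simplified evaluator illustrating that RBAC is additive and denies anything not explicitly granted. The role, secret, and account names are assumptions, and the evaluator ignores API groups and other details the real authorizer checks.

```python
import json
from typing import Optional


def model_reader_role(namespace: str) -> dict:
    """Build a Role with read-only pod access and one readable Secret."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "model-reader", "namespace": namespace},
        "rules": [
            {"apiGroups": [""], "resources": ["pods", "pods/log"],
             "verbs": ["get", "list", "watch"]},
            # Only the named Secret (hypothetical registry credentials) is readable.
            {"apiGroups": [""], "resources": ["secrets"],
             "resourceNames": ["model-registry-creds"], "verbs": ["get"]},
        ],
    }


def bind_to_service_account(namespace: str, service_account: str) -> dict:
    """Build a RoleBinding granting the model-reader Role to a service account."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "model-reader-binding", "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": service_account,
                      "namespace": namespace}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role",
                    "name": "model-reader"},
    }


def is_allowed(role: dict, resource: str, verb: str, name: Optional[str] = None) -> bool:
    """Simplified evaluation: a request is allowed only if some rule grants it."""
    for rule in role["rules"]:
        if resource in rule["resources"] and verb in rule["verbs"]:
            allowed_names = rule.get("resourceNames")
            if not allowed_names or name in allowed_names:
                return True
    return False  # RBAC has no deny rules; anything not granted is denied


role = model_reader_role("inference")
print(json.dumps(bind_to_service_account("inference", "model-server"), indent=2))
print(is_allowed(role, "pods", "list"), is_allowed(role, "pods", "delete"))
```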

Data Management:

Generative AI models learn from large datasets, which can be hard to handle in Kubernetes. Storing, accessing, and processing these datasets without hindering overall performance is often a difficult task.
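A common way to make a shared dataset available to training pods is a `PersistentVolumeClaim` mounted read-only. The sketch below builds such a claim with the `ReadOnlyMany` access mode and adds the corresponding volume and mount to a pod spec. It assumes a storage class whose backing storage (for example an NFS or CSI-based file system) actually supports `ReadOnlyMany`, and the claim name, size, storage class, and mount path are hypothetical.

```python
import copy
import json


def dataset_claim(name: str, size: str, storage_class: str) -> dict:
    """Build a v1 PersistentVolumeClaim that many pods can mount read-only."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadOnlyMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def mount_dataset(pod_spec: dict, claim_name: str, mount_path: str = "/data") -> dict:
    """Return a copy of pod_spec with the claim mounted read-only into every container."""
    spec = copy.deepcopy(pod_spec)  # don't mutate the caller's spec
    spec.setdefault("volumes", []).append(
        {"name": "dataset", "persistentVolumeClaim": {"claimName": claim_name, "readOnly": True}})
    for container in spec["containers"]:
        container.setdefault("volumeMounts", []).append(
            {"name": "dataset", "mountPath": mount_path, "readOnly": True})
    return spec


base = {"containers": [{"name": "trainer", "image": "registry.example.com/trainer:1.0"}]}
print(json.dumps(dataset_claim("training-corpus", "500Gi", "shared-files"), indent=2))
print(json.dumps(mount_dataset(base, "training-corpus"), indent=2))
```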

Conclusion: The Future of Generative AI is Powered by Kubernetes

As Generative AI advances and spreads into more sectors, adoption of Kubernetes’ efficient and scalable solutions will only grow. Kubernetes has become a core component of AI architectures, providing the resources and facilities needed to develop and manage AI model deployments.

If you’re an organization planning to put Generative AI to its best use, then adopting Kubernetes is non-negotiable. Scaling AI workloads, using resources in the best possible manner, and maintaining compatibility across multiple clouds are some of the key capabilities Kubernetes provides for deploying AI models. As the integration between Generative AI and Kubernetes continues, we can only wonder what new and exciting uses are yet to come, further strengthening Kubernetes’ position as the backbone of enterprise AI. The future looks bright, with Kubernetes playing a leading role in this exciting AI revolution.

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.
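The per-event work in a pipeline like this is usually plain code that the Event Hubs-triggered function calls for each batch it receives. The Python sketch below shows only that processing step, with the Azure Functions trigger and binding configuration left out. The JSON message shape (`deviceId`, `temperature`) is a hypothetical example, and skipping malformed messages rather than failing the whole batch is one design choice among several.

```python
import json
from collections import defaultdict


def summarize_readings(raw_messages):
    """Average the temperature per device across a batch of JSON telemetry messages.

    Assumes a hypothetical message shape: {"deviceId": "sensor-17", "temperature": 21.5}.
    Returns (averages_by_device, number_of_skipped_messages).
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    skipped = 0
    for raw in raw_messages:
        try:
            event = json.loads(raw)
            device = str(event["deviceId"])
            value = float(event["temperature"])
        except (ValueError, KeyError, TypeError):
            skipped += 1  # malformed message: skip it instead of failing the whole batch
            continue
        totals[device] += value
        counts[device] += 1
    averages = {device: totals[device] / counts[device] for device in totals}
    return averages, skipped


batch = [
    '{"deviceId": "sensor-1", "temperature": 20}',
    '{"deviceId": "sensor-1", "temperature": 22}',
    '{"deviceId": "sensor-2", "temperature": 18.5}',
    'not json',
    '{"deviceId": "sensor-3"}',
]
print(summarize_readings(batch))
```

Keeping this logic free of Azure-specific types makes it easy to unit test locally before wiring it to the function trigger.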

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training, either in Hyderabad or online, to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai

Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that let businesses store data and run apps without maintaining their own physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and tamper-resistant systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotic process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity

Navigating the DevOps Landscape: Your Comprehensive Guide to Mastery

In today's ever-evolving IT landscape, DevOps has emerged as a mission-critical practice that reshapes how development and operations teams collaborate, accelerates software delivery, and improves efficiency. If you're enthusiastic about embarking on a journey towards mastering DevOps, you've come to the right place. In this comprehensive guide, we'll explore some of the most exceptional resources for immersing yourself in the world of DevOps.

Online Courses: Laying a Strong Foundation

One of the most effective and structured methods for establishing a robust understanding of DevOps is by enrolling in online courses. ACTE Institute, for instance, offers a wide array of comprehensive DevOps courses designed to empower you to learn at your own pace. These meticulously crafted courses delve deep into the fundamental principles, best practices, and practical tools that are indispensable for achieving success in the world of DevOps.

Books and Documentation: Delving into the Depth

Books serve as invaluable companions on your DevOps journey, providing in-depth insights into the practices and principles of DevOps. "The Phoenix Project" by the trio of Gene Kim, Kevin Behr, and George Spafford is highly recommended for gaining profound insights into the transformative potential of DevOps. Additionally, exploring the official documentation provided by DevOps tool providers offers an indispensable resource for gaining nuanced knowledge.

DevOps Communities: Becoming Part of the Conversation

DevOps thrives on the principles of community collaboration, and the digital realm is replete with platforms that foster discussions, seek advice, and facilitate the sharing of knowledge. Websites such as Stack Overflow, DevOps.com, and Reddit's DevOps subreddit serve as vibrant hubs where you can connect with fellow DevOps enthusiasts and experts, engage in enlightening conversations, and glean insights from those who've traversed similar paths.

Webinars and Events: Expanding Your Horizons

To truly expand your DevOps knowledge and engage with industry experts, consider attending webinars and conferences dedicated to this field. Events like DevOpsDays and DockerCon bring together luminaries who generously share their insights and experiences, providing you with unparalleled opportunities to broaden your horizons. Moreover, these events offer the chance to connect and network with peers who share your passion for DevOps.

Hands-On Projects: Applying Your Skills

In the realm of DevOps, practical experience is the crucible in which mastery is forged. Therefore, seize opportunities to take on hands-on projects that allow you to apply the principles and techniques you've learned. Contributing to open-source DevOps initiatives on platforms like GitHub is a fantastic way to accrue real-world experience, all while contributing to the broader DevOps community. Not only do these projects provide tangible evidence of your skills, but they also enable you to build an impressive portfolio.

DevOps Tools: Navigating the Landscape

DevOps relies heavily on an expansive array of tools and technologies, each serving a unique purpose in the DevOps pipeline. To become proficient in DevOps, it's imperative to establish your own lab environments and engage in experimentation. This hands-on approach allows you to become intimately familiar with tools such as Jenkins for continuous integration, Docker for containerization, Kubernetes for orchestration, and Ansible for automation, to name just a few. A strong command over these tools equips you to navigate the intricate DevOps landscape with confidence.

Mentorship: Guiding Lights on Your Journey

To accelerate your journey towards DevOps mastery, consider seeking mentorship from seasoned DevOps professionals. Mentors can provide invaluable guidance, share real-world experiences, and offer insights that are often absent from textbooks or online courses. They can help you navigate the complexities of DevOps, provide clarity during challenging moments, and serve as a source of inspiration. Mentorship is a powerful catalyst for growth in the DevOps field.

By harnessing the full spectrum of these resources, you can embark on a transformative journey towards becoming a highly skilled DevOps practitioner. Armed with a profound understanding of DevOps principles, practical experience, and mastery over essential tools, you'll be well-equipped to tackle the multifaceted challenges and opportunities that the dynamic field of DevOps presents. Remember that continuous learning and staying abreast of the latest DevOps trends are pivotal to your ongoing success. As you embark on your DevOps learning odyssey, know that ACTE Technologies is your steadfast partner, ready to empower you on this exciting journey. Whether you're starting from scratch or enhancing your existing skills, ACTE Technologies Institute provides you with the resources and knowledge you need to excel in the dynamic world of DevOps. Enroll today and unlock your boundless potential. Your DevOps success story begins here. Good luck on your DevOps learning journey!

Highest Paying IT Jobs in India in 2025: Roles, Skills & Salary Insights

Published by Prism HRC – Best IT Job Consulting Company in Mumbai

India's IT sector is booming in 2025, driven by digital transformation, the surge in AI and automation, and global demand for tech talent. Whether you're a fresher or a seasoned professional, knowing which roles pay the highest can help you strategize your career growth effectively.

This blog explores the highest-paying IT jobs in India in 2025, the skills required, average salary packages, and where to look for these opportunities.

Why IT Jobs Still Dominate in 2025

India continues to be a global IT hub, and with advancements in cloud computing, AI, cybersecurity, and data analytics, the demand for skilled professionals is soaring. The rise of remote work, startup ecosystems, and global freelancing platforms also contributes to higher paychecks.

1. AI/ML Engineer

Average Salary: ₹20–40 LPA

Skills Required:

Python, R, TensorFlow, PyTorch

Deep learning, NLP, computer vision

Strong statistics and linear algebra foundation

Why It Pays Well:

Companies are pouring investments into AI-powered solutions. From chatbots to autonomous vehicles and predictive analytics, AI specialists are indispensable.

2. Data Scientist

Average Salary: ₹15–35 LPA

Skills Required:

Python, R, SQL, Hadoop, Spark

Data visualization, predictive modelling

Statistical analysis and ML algorithms

Why It Pays Well:

Data drives business decisions, and those who can extract actionable insights are highly valued. Data scientists are among the most sought-after professionals globally.

3. Cybersecurity Architect

Average Salary: ₹18–32 LPA

Skills Required:

Network security, firewalls, encryption

Risk assessment, threat modelling

Certifications: CISSP, CISM, CEH

Why It Pays Well:

With rising cyber threats, data protection and infrastructure security are mission critical. Cybersecurity pros are no longer optional—they're essential.

4. Cloud Solutions Architect

Average Salary: ₹17–30 LPA

Skills Required:

AWS, Microsoft Azure, Google Cloud

Cloud infrastructure design, CI/CD pipelines

DevOps, Kubernetes, Docker

Why It Pays Well:

Cloud is the backbone of modern tech stacks. Enterprises migrating to the cloud need architects who can make that transition smooth and scalable.

5. Blockchain Developer

Average Salary: ₹14–28 LPA

Skills Required:

Solidity, Ethereum, Hyperledger

Cryptography, smart contracts

Decentralized app (dApp) development

Why It Pays Well:

Beyond crypto, blockchain has real-world applications in supply chain, healthcare, and fintech. With a limited talent pool, high salaries are inevitable.

6. Full Stack Developer

Average Salary: ₹12–25 LPA

Skills Required:

Front-end: React, Angular, HTML/CSS

Back-end: Node.js, Django, MongoDB

DevOps basics and API design

Why It Pays Well:

Full-stack developers are versatile. Startups and large companies love professionals who can handle both client and server-side tasks.

7. DevOps Engineer

Average Salary: ₹12–24 LPA

Skills Required:

Jenkins, Docker, Kubernetes

CI/CD pipelines, GitHub Actions

Scripting languages (Bash, Python)

Why It Pays Well:

DevOps reduces time-to-market and improves reliability. Skilled engineers help streamline operations and bring agility to development.

8. Data Analyst (with advanced skillset)

Average Salary: ₹10–20 LPA

Skills Required:

SQL, Excel, Tableau, Power BI

Python/R for automation and machine learning

Business acumen and stakeholder communication

Why It Pays Well:

When paired with business thinking, data analysts become decision-makers, not just number crunchers. This hybrid skillset is in high demand.

9. Product Manager (Tech)

Average Salary: ₹18–35 LPA

Skills Required:

Agile/Scrum methodologies

Product lifecycle management

Technical understanding of software development

Why It Pays Well:

Tech product managers bridge the gap between engineering and business. If you have tech roots and leadership skills, this is your golden ticket.

Where Are Companies Hiring?

Major IT hubs in India, such as Bengaluru, Hyderabad, Pune, Mumbai, and NCR, remain the hotspots. Global firms and unicorn startups offer competitive packages.

Want to Land These Jobs?

Partner with IT job consulting platforms such as Prism HRC, recognized among the best IT job recruitment agencies in Mumbai, which matches skilled candidates with high-growth companies.

How to Prepare for These Roles

Upskill Continuously: Leverage platforms like Coursera, Udemy, and DataCamp

Build a Portfolio: Showcase your projects on GitHub or a personal website

Certifications: AWS, Google Cloud, Microsoft, Cisco, and niche-specific credentials

Network Actively: Use LinkedIn, attend webinars, and engage in industry communities

Before You Go

2025 is shaping up to be a landmark year for tech careers in India. Whether you’re pivoting into IT or climbing the ladder, focus on roles that combine innovation, automation, and business value. With the right guidance and skillset, you can land a top-paying job that aligns with your goals.

Prism HRC can help you navigate this journey—connecting top IT talent with leading companies in India and beyond.

Based in Gorai-2, Borivali West, Mumbai

www.prismhrc.com

Instagram: @jobssimplified

LinkedIn: Prism HRC

#Highest Paying IT Jobs#IT Jobs in India 2025#Tech Careers 2025#Top IT Roles India#AI Engineer#Data Scientist#Cybersecurity Architect#Cloud Solutions Architect#Blockchain Developer#Full Stack Developer#DevOps Engineer#Data Analyst#IT Salaries 2025#Digital Transformation#Career Growth IT#Tech Industry India#Prism HRC#IT Recruitment Mumbai#IT Job Consulting India

Decoding the Full Stack Developer: Beyond Just Coding Skills

When we hear the term Full Stack Developer, the first image that comes to mind is often a coding wizard—someone who can write flawless code in both frontend and backend languages. But in reality, being a Full Stack Developer is much more than juggling JavaScript, databases, and frameworks. It's about adaptability, problem-solving, communication, and having a deep understanding of how digital products come to life.

In today’s fast-paced tech world, decoding the Full Stack Developer means understanding the full spectrum of their abilities—beyond just writing code. It’s about their mindset, their collaboration style, and the unique value they bring to modern development teams.

What is a Full Stack Developer—Really?

At its most basic definition, a Full Stack Developer is someone who can work on both the front end (what users see) and the back end (the server, database, and application logic) of a web application. But what sets a great Full Stack Developer apart isn't just technical fluency—it's their ability to bridge the gap between design, development, and user experience.

Beyond Coding: The Real Skills of a Full Stack Developer

While technical knowledge is essential, companies today are seeking developers who bring more to the table. Here’s what truly defines a well-rounded Full Stack Developer:

1. Holistic Problem Solving

A Full Stack Developer looks at the bigger picture. Instead of focusing only on isolated technical problems, they ask:

How will this feature affect the user experience?

Can this backend architecture scale as traffic increases?

Is there a more efficient way to implement this?

2. Communication and Collaboration

Full Stack Developers often act as the bridge between frontend and backend teams, as well as designers and project managers. This requires:

The ability to translate technical ideas into simple language

Empathy for team members with different skill sets

Openness to feedback and continuous learning

3. Business Mindset

Truly impactful developers understand the "why" behind their work. They think:

How does this feature help the business grow?

Will this implementation improve conversion or retention?

Is this approach cost-effective?

4. Adaptability

Tech is always evolving. A successful Full Stack Developer is a lifelong learner who stays updated with new frameworks, tools, and methodologies.

Technical Proficiency: Still the Foundation

Of course, strong coding skills remain a core part of being a Full Stack Developer. Here are just some of the technical areas they typically master:

Frontend: HTML, CSS, JavaScript, React, Angular, or Vue.js

Backend: Node.js, Express, Python, Ruby on Rails, or PHP

Databases: MySQL, MongoDB, PostgreSQL

Version Control: Git and GitHub

DevOps & Deployment: Docker, Kubernetes, CI/CD pipelines, AWS or Azure

But what distinguishes a standout Full Stack Developer isn’t how many tools they know—it’s how well they apply them to real-world problems.

Why the Role Matters More Than Ever

In startups and agile teams, having someone who understands both ends of the tech stack can:

Speed up development cycles

Reduce communication gaps

Enable rapid prototyping

Make it easier to scale applications over time

This flexibility makes Full Stack Developers extremely valuable. They're often the "glue" holding cross-functional teams together, able to jump into any layer of the product when needed.

A Day in the Life of a Full Stack Developer

To understand this further, imagine this scenario:

It’s 9:00 AM. A Full Stack Developer starts their day reviewing pull requests. At 10:30, they join a stand-up meeting with designers and project managers. By noon, they’re debugging an API endpoint issue. After lunch, they switch to styling a new UI component. And before the day ends, they brainstorm database optimization strategies for an upcoming product feature.

It’s not just a job—it’s a dynamic puzzle, and Full Stack Developers thrive on finding creative, holistic solutions.

Conclusion: Decoding the Full Stack Developer

For businesses and aspiring developers alike, decoding the Full Stack Developer means looking beyond coding skills. The role blends logic, creativity, communication, and empathy.

Today’s Full Stack Developer is more than a technical multitasker—they are strategic thinkers, empathetic teammates, and flexible builders who can shape the entire lifecycle of a digital product.

If you're looking to become one—or hire one—don’t just look at the resume. Look at the mindset, curiosity, and willingness to grow. Because when you decode the Full Stack Developer, you’ll find someone who brings full-spectrum value to the digital world.

Machine Learning Infrastructure: The Foundation of Scalable AI Solutions

Introduction: Why Machine Learning Infrastructure Matters

In today's digital-first world, the adoption of artificial intelligence (AI) and machine learning (ML) is revolutionizing every industry, from healthcare and finance to e-commerce and entertainment. Many organizations aim to leverage ML for automation and insights, but they often underestimate how much success depends not just on algorithms but also on a well-structured machine learning infrastructure.

Machine learning infrastructure provides the backbone needed to deploy, monitor, scale, and maintain ML models effectively. Without it, even the most promising ML solutions fail to meet their potential.

In this comprehensive guide from diglip7.com, we’ll explore what machine learning infrastructure is, why it’s crucial, and how businesses can build and manage it effectively.

What is Machine Learning Infrastructure?

Machine learning infrastructure refers to the full stack of tools, platforms, and systems that support the development, training, deployment, and monitoring of ML models. This includes:

Data storage systems

Compute resources (CPU, GPU, TPU)

Model training and validation environments

Monitoring and orchestration tools

Version control for code and models

Together, these components form the ecosystem where machine learning workflows operate efficiently and reliably.

Key Components of Machine Learning Infrastructure

To build robust ML pipelines, several foundational elements must be in place:

1. Data Infrastructure

Data is the fuel of machine learning. Key tools and technologies include:

Data Lakes & Warehouses: Store structured and unstructured data (e.g., AWS S3, Google BigQuery).

ETL Pipelines: Extract, transform, and load raw data for modeling (e.g., Apache Airflow, dbt).

Data Labeling Tools: For supervised learning (e.g., Labelbox, Amazon SageMaker Ground Truth).
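To make the ETL idea concrete, here is a minimal sketch that uses only Python's standard library. The CSV contents, the `transactions` table, and the cleaning rules are hypothetical. The `csv` module and an in-memory `sqlite3` database stand in for a real source system and warehouse. In a tool like Apache Airflow, each of the three functions would typically become its own task.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from an object store or an API.
RAW_CSV = """user_id,amount,country
1,120.50,IN
2,,US
3,87.00,in
"""

def extract(raw):
    """Extract: parse raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(raw)))

def transform(rows):
    """Transform: drop rows with missing amounts and normalise country codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records instead of guessing a value
        cleaned.append((int(row["user_id"]), float(row["amount"]), row["country"].upper()))
    return cleaned

def load(rows, conn):
    """Load: write cleaned rows into a table that a training job can query."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS transactions (user_id INTEGER, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM transactions").fetchone())
```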

2. Compute Resources

Training ML models requires high-performance computing. Options include:

On-Premise Clusters: Cost-effective for large enterprises.

Cloud Compute: Scalable resources like AWS EC2, Google Cloud AI Platform, or Azure ML.

GPUs/TPUs: Essential for deep learning and neural networks.

3. Model Training Platforms

These platforms simplify experimentation and hyperparameter tuning:

TensorFlow, PyTorch, Scikit-learn: Popular ML libraries.

MLflow: Experiment tracking and model lifecycle management.

Kubeflow: ML workflow orchestration on Kubernetes.

4. Deployment Infrastructure

Once trained, models must be deployed in real-world environments:

Containers & Microservices: Docker, Kubernetes, and serverless functions.

Model Serving Platforms: TensorFlow Serving, TorchServe, or custom REST APIs.

CI/CD Pipelines: Automate testing, integration, and deployment of ML models.
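In the end, the deployment layer exposes a model behind an endpoint. The WSGI app below is a rough sketch of the "custom REST API" option. It serves a hypothetical linear scorer using only the standard library; `WEIGHTS`, `BIAS`, and the `/predict` route are invented for illustration. It does not reflect how TensorFlow Serving or TorchServe work internally. It only shows the request/response contract such a service typically has.

```python
import io
import json

# Hypothetical "model": a fixed linear scorer standing in for a trained artifact.
WEIGHTS = [0.4, -0.2, 0.1]
BIAS = 0.05

def predict(features):
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS

def app(environ, start_response):
    """Minimal WSGI app: POST /predict with {"features": [...]} returns {"score": ...}."""
    headers = [("Content-Type", "application/json")]
    if environ["REQUEST_METHOD"] != "POST" or environ["PATH_INFO"] != "/predict":
        start_response("404 Not Found", headers)
        return [b'{"error": "not found"}']
    length = int(environ.get("CONTENT_LENGTH") or 0)
    body = json.loads(environ["wsgi.input"].read(length) or b"{}")
    features = body.get("features")
    if not isinstance(features, list) or len(features) != len(WEIGHTS):
        start_response("400 Bad Request", headers)
        return [json.dumps({"error": f"expected {len(WEIGHTS)} features"}).encode()]
    start_response("200 OK", headers)
    return [json.dumps({"score": predict(features)}).encode()]

# Exercise the app in-process with a hand-built WSGI environ (no network needed).
payload = json.dumps({"features": [1.0, 2.0, 3.0]}).encode()
environ = {
    "REQUEST_METHOD": "POST",
    "PATH_INFO": "/predict",
    "CONTENT_LENGTH": str(len(payload)),
    "wsgi.input": io.BytesIO(payload),
}
statuses = []
response = b"".join(app(environ, lambda status, headers: statuses.append(status)))
print(statuses[0], response.decode())

# To serve over HTTP instead:
# from wsgiref.simple_server import make_server
# make_server("127.0.0.1", 8080, app).serve_forever()
```

Once this is packaged in a Docker image, the same app could run behind any WSGI server as one microservice.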

5. Monitoring & Observability

Key to ensure ongoing model performance:

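Drift Detection: Spot when the distribution of incoming data, or of the model's predictions, shifts away from what the model saw during training.

Performance Monitoring: Track latency, accuracy, and throughput.

Logging & Alerts: Tools like Prometheus and Grafana, often fed by serving platforms such as Seldon Core that expose model metrics.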
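Drift can be detected in many ways. One common choice, which this article does not prescribe, is the Population Stability Index (PSI). PSI compares the binned distribution of a feature at training time with the distribution seen in production. Below is a minimal pure-Python sketch that assumes equal-width bins over the reference range. The sample data is synthetic.

```python
import math

def psi(expected, actual, bins=10):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the reference range fall into the edge bins
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny share to avoid log(0) and division by zero
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

reference = [i / 100 for i in range(100)]        # feature values seen at training time
similar = [i / 100 + 0.001 for i in range(100)]  # live traffic that barely moved
shifted = [0.5 + i / 200 for i in range(100)]    # live traffic squeezed into the upper half

print(round(psi(reference, similar), 4), round(psi(reference, shifted), 4))
```

A commonly cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as significant drift. These thresholds should be tuned per feature rather than taken as fixed.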

Benefits of Investing in Machine Learning Infrastructure

Here’s why having a strong machine learning infrastructure matters:

Scalability: Run models on large datasets and serve thousands of requests per second.

Reproducibility: Re-run experiments with the same configuration.

Speed: Accelerate development cycles with automation and reusable pipelines.

Collaboration: Enable data scientists, ML engineers, and DevOps to work in sync.

Compliance: Keep data and models auditable and secure for regulations like GDPR or HIPAA.

Real-World Applications of Machine Learning Infrastructure

Let’s look at how industry leaders use ML infrastructure to power their services:

Netflix: Uses a robust ML pipeline to personalize content and optimize streaming.

Amazon: Trains recommendation models using massive data pipelines and custom ML platforms.

Tesla: Collects real-time driving data from vehicles and retrains autonomous driving models.

Spotify: Relies on cloud-based infrastructure for playlist generation and music discovery.

Challenges in Building ML Infrastructure

Despite its importance, developing ML infrastructure has its hurdles:

High Costs: GPU servers and cloud compute aren't cheap.

Complex Tooling: Choosing the right combination of tools can be overwhelming.

Maintenance Overhead: Regular updates, monitoring, and security patching are required.

Talent Shortage: Skilled ML engineers and MLOps professionals are in short supply.

How to Build Machine Learning Infrastructure: A Step-by-Step Guide

Here’s a simplified roadmap for setting up scalable ML infrastructure:

Step 1: Define Use Cases

Know what problem you're solving. Fraud detection? Product recommendations? Forecasting?

Step 2: Collect & Store Data

Use data lakes, warehouses, or relational databases. Ensure it’s clean, labeled, and secure.

Step 3: Choose ML Tools

Select frameworks (e.g., TensorFlow, PyTorch), orchestration tools, and compute environments.

Step 4: Set Up Compute Environment

Use cloud-based Jupyter notebooks, Colab, or on-premise GPUs for training.

Step 5: Build CI/CD Pipelines

Automate model testing and deployment with Git and a CI server such as Jenkins, using MLflow to track and register model versions.
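A CI/CD pipeline for models often includes an automated quality gate, so a retrained model is promoted only if it does not regress. The sketch below assumes hypothetical metric names and tolerances. In practice, the metrics would be read from MLflow or from the evaluation job's output, and the CI runner would fail the stage when the script exits with a non-zero code.

```python
# Hypothetical metrics; a real pipeline would load these from the experiment
# tracker (e.g., MLflow) or from files written by the evaluation step.
baseline = {"accuracy": 0.91, "p95_latency_ms": 120.0}
candidate = {"accuracy": 0.92, "p95_latency_ms": 135.0}

def gate(baseline, candidate, max_accuracy_drop=0.005, max_latency_increase=0.10):
    """Return the reasons the candidate must not be promoted (an empty list means pass)."""
    failures = []
    if candidate["accuracy"] < baseline["accuracy"] - max_accuracy_drop:
        failures.append("accuracy regressed")
    if candidate["p95_latency_ms"] > baseline["p95_latency_ms"] * (1 + max_latency_increase):
        failures.append("latency regressed")
    return failures

failures = gate(baseline, candidate)
exit_code = 1 if failures else 0  # pass to sys.exit() so the CI stage fails on regression
print("PASS" if not failures else "FAIL: " + ", ".join(failures))
```

In this example, accuracy improves but p95 latency grows by 12.5%. That exceeds the 10% tolerance, so the gate fails.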

Step 6: Monitor Performance

Track accuracy, latency, and data drift. Set alerts for anomalies.
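One simple way to alert on anomalies is to flag values far above a rolling baseline. The sketch below applies a z-score over a sliding window. The window size, the 3-sigma threshold, and the `LatencyAlert` class are illustrative assumptions. In production, this logic would more often live in Prometheus alerting rules.

```python
from collections import deque
from statistics import mean, stdev

class LatencyAlert:
    """Flag a sample more than `z` standard deviations above the rolling window mean."""

    def __init__(self, window=50, z=3.0, min_samples=10):
        self.samples = deque(maxlen=window)
        self.z = z
        self.min_samples = min_samples

    def observe(self, latency_ms):
        alert = False
        if len(self.samples) >= self.min_samples:
            mu, sigma = mean(self.samples), stdev(self.samples)
            alert = sigma > 0 and latency_ms > mu + self.z * sigma
        self.samples.append(latency_ms)
        return alert

monitor = LatencyAlert()
normal = [100 + (i % 5) for i in range(30)]  # steady traffic around 100-104 ms
alerts = [monitor.observe(x) for x in normal]
spike_alert = monitor.observe(250)
print(any(alerts), spike_alert)  # → False True
```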

Step 7: Iterate & Improve

Collect feedback, retrain models, and scale solutions based on business needs.

Machine Learning Infrastructure Providers & Tools

Below are some popular platforms that help streamline ML infrastructure:

| Tool/Platform | Purpose | Example Use |
|---|---|---|
| Amazon SageMaker | Full ML development environment | End-to-end ML pipeline |
| Google Vertex AI | Cloud ML service | Training, deploying, managing ML models |
| Databricks | Big data + ML | Collaborative notebooks |
| Kubeflow | Kubernetes-based ML workflows | Model orchestration |
| MLflow | Model lifecycle tracking | Experiments, models, metrics |
| Weights & Biases | Experiment tracking | Visualization and monitoring |

Expert Review

Reviewed by: Rajeev Kapoor, Senior ML Engineer at DataStack AI

"Machine learning infrastructure is no longer a luxury; it's a necessity for scalable AI deployments. Companies that invest early in robust, cloud-native ML infrastructure are far more likely to deliver consistent, accurate, and responsible AI solutions."

Frequently Asked Questions (FAQs)

Q1: What is the difference between ML infrastructure and traditional IT infrastructure?

Answer: Traditional IT supports business applications, while ML infrastructure is designed for data processing, model training, and deployment at scale. It often includes specialized hardware (e.g., GPUs) and tools for data science workflows.

Q2: Can small businesses benefit from ML infrastructure?

Answer: Yes, with the rise of cloud platforms like Amazon SageMaker and Google Vertex AI, even startups can leverage scalable machine learning infrastructure without heavy upfront investment.

Q3: Is Kubernetes necessary for ML infrastructure?

Answer: While not mandatory, Kubernetes helps orchestrate containerized workloads and is widely adopted for scalable ML infrastructure, especially in production environments.

Q4: What skills are needed to manage ML infrastructure?

Answer: Familiarity with Python, cloud computing, Docker/Kubernetes, CI/CD, and ML frameworks like TensorFlow or PyTorch is essential.

Q5: How often should ML models be retrained?

Answer: It depends on data volatility. In dynamic environments (e.g., fraud detection), retraining may be needed weekly or even daily. In stable domains, monthly or quarterly retraining is often enough.
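The retraining cadence can be written as an explicit policy that combines model age with a drift signal such as PSI. The function and thresholds below are hypothetical. They were chosen only to mirror the contrast between weekly and quarterly retraining described above.

```python
from datetime import date

def should_retrain(last_trained, today, drift_score, max_age_days, drift_threshold=0.25):
    """Hypothetical policy: retrain when the model is too old or its inputs have drifted."""
    too_old = (today - last_trained).days >= max_age_days
    drifted = drift_score > drift_threshold
    return too_old or drifted

# Volatile domain (e.g., fraud): short maximum age
print(should_retrain(date(2025, 1, 1), date(2025, 1, 9), drift_score=0.05, max_age_days=7))
# Stable domain: quarterly schedule, no drift yet
print(should_retrain(date(2025, 1, 1), date(2025, 2, 15), drift_score=0.05, max_age_days=90))
```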

Final Thoughts

Machine learning infrastructure isn’t just about stacking technologies—it's about creating an agile, scalable, and collaborative environment that empowers data scientists and engineers to build models with real-world impact. Whether you're a startup or an enterprise, investing in the right infrastructure will directly influence the success of your AI initiatives.

By building and maintaining a robust ML infrastructure, you ensure that your models perform optimally, adapt to new data, and generate consistent business value.

For more insights and updates on AI, ML, and digital innovation, visit diglip7.com.

Mastering AI on Kubernetes: A Deep Dive into the Red Hat Certified Specialist in OpenShift AI

Artificial Intelligence (AI) is no longer a buzzword—it's a foundational technology across industries. From powering recommendation engines to enabling self-healing infrastructure, AI is changing the way we build and scale digital experiences. For professionals looking to validate their ability to run AI/ML workloads on Kubernetes, the Red Hat Certified Specialist in OpenShift AI certification is a game-changer.

What is the OpenShift AI Certification?

The Red Hat Certified Specialist in OpenShift AI certification (exam EX267) is designed for professionals who want to demonstrate their skills in deploying, managing, and scaling AI and machine learning (ML) workloads on Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science).

This hands-on exam tests real-world capabilities rather than rote memorization, making it ideal for data scientists, ML engineers, DevOps engineers, and platform administrators who want to bridge the gap between AI/ML and cloud-native operations.

Why This Certification Matters

In a world where ML models are only as useful as the infrastructure they run on, OpenShift AI offers a powerful platform for deploying and monitoring models in production. Here’s why this certification is valuable:

🔧 Infrastructure + AI: It merges the best of Kubernetes, containers, and MLOps.

📈 Enterprise-Ready: Red Hat is trusted by thousands of companies worldwide—OpenShift AI is production-grade.

💼 Career Boost: Certifications remain a proven way to stand out in a crowded job market.

🔐 Security and Governance: Demonstrates your understanding of secure, governed ML workflows.

Skills You’ll Gain

Preparing for the Red Hat OpenShift AI certification gives you hands-on expertise in areas like:

Deploying and managing OpenShift AI clusters

Using Jupyter notebooks and Python for model development

Managing GPU workloads

Integrating with Git repositories

Running pipelines for model training and deployment

Monitoring model performance with tools like Prometheus and Grafana

Understanding OpenShift concepts like pods, deployments, and persistent storage

Who Should Take the EX267 Exam?

This certification is ideal for:

Data Scientists who want to operationalize their models

ML Engineers working in hybrid cloud environments

DevOps Engineers bridging infrastructure and AI workflows

Platform Engineers supporting AI workloads at scale

Prerequisites: While there’s no formal prerequisite, it’s recommended you have:

A Red Hat Certified System Administrator (RHCSA) or equivalent knowledge

Basic Python and machine learning experience

Familiarity with OpenShift or Kubernetes

How to Prepare

Here’s a quick roadmap to help you prep for the exam:

Take the Official Training: Red Hat's course AI267 (Developing and Deploying AI/ML Applications on Red Hat OpenShift AI) maps directly to the exam.

Set Up a Lab: Practice on OpenShift using Red Hat’s Developer Sandbox or install OpenShift locally.

Learn the Tools: Get comfortable with Jupyter, PyTorch, TensorFlow, Git, S2I builds, Tekton pipelines, and Prometheus.

Explore Real-World Use Cases: Try deploying a sample model and serving it via an API.

Mock Exams: Practice managing user permissions, setting up notebook servers, and tuning ML workflows under time constraints.
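For the exercise of serving a model via an API, the client needs to know which inference protocol the model server speaks. The sketch below assumes the deployed model uses the KServe V2 (Open Inference Protocol) REST API, which several model-serving runtimes support. The URL, model name, and input tensor name `input` are placeholders, not values taken from OpenShift AI. The code only builds the request, so it runs without a cluster.

```python
import json
import urllib.request

# Hypothetical values: replace with the inference endpoint and model name
# shown for your deployed model.
BASE_URL = "https://my-model-my-project.apps.example.com"
MODEL_NAME = "fraud-model"

def build_infer_request(rows):
    """Build a KServe V2 (Open Inference Protocol) REST request for a batch of float rows."""
    flat = [float(x) for row in rows for x in row]
    payload = {
        "inputs": [{
            "name": "input",  # assumption: the model's input tensor name
            "shape": [len(rows), len(rows[0])],
            "datatype": "FP32",
            "data": flat,
        }]
    }
    return urllib.request.Request(
        f"{BASE_URL}/v2/models/{MODEL_NAME}/infer",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_infer_request([[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]])
print(req.get_method(), req.full_url)
# To actually call the endpoint (requires network access and, if enabled, a bearer token):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["outputs"])
```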

Final Thoughts

The Red Hat Certified Specialist in OpenShift AI certification is a strong endorsement of your ability to bring AI into the real world—securely, efficiently, and at scale. If you're serious about blending data science and DevOps, this credential is worth pursuing.

🎯 Whether you're a data scientist moving closer to DevOps, or a platform engineer supporting data teams, this certification puts you at the forefront of MLOps in enterprise environments.

Ready to certify your AI skills in the cloud-native era? Let OpenShift AI be your launchpad.

For more details, visit www.hawkstack.com.

How AI Is Revolutionizing Contact Centers in 2025

As contact centers evolve from reactive customer service hubs to proactive experience engines, artificial intelligence (AI) has emerged as the cornerstone of this transformation. In 2025, modern contact center architectures are being redefined through AI-based technologies that streamline operations, enhance customer satisfaction, and drive measurable business outcomes.

This article takes a technical deep dive into the AI-powered components transforming contact centers—from natural language models and intelligent routing to real-time analytics and automation frameworks.

1. AI Architecture in Modern Contact Centers

At the core of today’s AI-based contact centers is a modular, cloud-native architecture. This typically consists of:

NLP and ASR engines (e.g., Google Dialogflow, Amazon Lex, OpenAI Whisper)

Real-time data pipelines for event streaming (e.g., Apache Kafka, Amazon Kinesis)

Machine Learning Models for intent classification, sentiment analysis, and next-best-action

RPA (Robotic Process Automation) for back-office task automation

CDP/CRM Integration to access customer profiles and journey data

Omnichannel orchestration layer that ensures consistent CX across chat, voice, email, and social

These components are typically packaged as containers, orchestrated with Kubernetes, and deployed via CI/CD pipelines, enabling rapid iteration and scalability.

2. Conversational AI and Natural Language Understanding

The most visible face of AI in contact centers is the conversational interface—delivered via AI-powered voice bots and chatbots.

Key Technologies:

Automatic Speech Recognition (ASR): Converts spoken input to text in real time. Example: OpenAI Whisper, Deepgram, Google Cloud Speech-to-Text.

Natural Language Understanding (NLU): Determines intent and entities from user input. Fine-tuned encoder models such as BERT, or open LLMs such as LLaMA, commonly power this layer.

Dialog Management: Manages context-aware conversations using finite state machines or transformer-based dialog engines.

Natural Language Generation (NLG): Generates dynamic responses based on context. GPT-based models (e.g., GPT-4) are increasingly embedded for open-ended interactions.

Architecture Snapshot:

Customer Input (Voice/Text) → ASR Engine (if voice) → NLU Engine (intent classification + entity recognition) → Dialog Manager (context state) → NLG Engine (response generation) → Omnichannel Delivery Layer

These AI systems are often deployed on low-latency, edge-compute infrastructure to minimize delay and improve UX.
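To make the flow concrete, here is a deliberately tiny text-only pipeline in Python. Keyword matching and a regex stand in for the NLU model, and templates stand in for NLG. The intents, responses, and `DialogState` class are hypothetical. The point is to show how the dialog manager carries context from one turn to the next.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical intents and trigger phrases; a trained NLU model would replace this table.
INTENT_KEYWORDS = {
    "check_balance": ("balance", "how much"),
    "block_card": ("lost", "stolen", "block"),
}

@dataclass
class DialogState:
    turns: list = field(default_factory=list)
    last_intent: Optional[str] = None

def nlu(text):
    """Intent classification + entity recognition (keyword and regex stand-ins)."""
    lowered = text.lower()
    intent = next((name for name, words in INTENT_KEYWORDS.items()
                   if any(w in lowered for w in words)), None)
    entities = {}
    match = re.search(r"\b(\d{4})\b", text)
    if match:
        entities["card"] = match.group(1)  # last four digits of a card
    return intent, entities

def dialog_manager(state, intent, entities):
    """Context state: a bare card number completes a pending block_card request."""
    if intent is None and state.last_intent == "block_card" and "card" in entities:
        intent = "block_card"
    state.last_intent = intent
    return intent

def nlg(intent, entities):
    """Template-based response generation."""
    if intent == "block_card":
        if "card" not in entities:
            return "Which card should I block? Please share its last four digits."
        return f"I am blocking the card ending in {entities['card']}."
    if intent == "check_balance":
        return "Let me fetch your current balance."
    return "Sorry, could you rephrase that?"

def handle_turn(state, text):
    # For voice input, an ASR engine would produce `text` first.
    intent, entities = nlu(text)
    intent = dialog_manager(state, intent, entities)
    reply = nlg(intent, entities)
    state.turns.append((text, reply))
    return reply  # the omnichannel layer would deliver this to chat, voice, email, etc.

state = DialogState()
print(handle_turn(state, "I lost my card"))
print(handle_turn(state, "It ends in 4821"))
```

The second turn contains no intent keyword at all. It resolves to `block_card` only because the dialog manager remembers the previous turn.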

3. AI-Augmented Agent Assist

AI doesn’t only serve customers—it empowers human agents as well.

Features:

Real-Time Transcription: Streaming STT pipelines provide transcripts as the customer speaks.

Sentiment Analysis: Transformers and CNNs trained on customer service data flag negative sentiment or stress cues.

Contextual Suggestions: Based on historical data, ML models suggest actions or FAQ snippets.

Auto-Summarization: Post-call summaries are generated using abstractive summarization models (e.g., PEGASUS, BART).

Technical Workflow:

Voice input transcribed → parsed by NLP engine

Real-time context is compared with knowledge base (vector similarity via FAISS or Pinecone)

Agent UI receives predictive suggestions via API push
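The knowledge-base comparison in this workflow boils down to nearest-neighbour search over vectors. This sketch stands in for a real embedding model plus FAISS or Pinecone by using bag-of-words vectors and cosine similarity. The snippets and document ids are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge-base snippets keyed by document id
KNOWLEDGE_BASE = {
    "refund-policy": "Refunds are issued to the original payment method within 7 days.",
    "card-block": "To block a lost or stolen card, verify identity, then freeze the card.",
    "address-change": "Customers can update their postal address after OTP verification.",
}

def embed(text):
    """Toy embedding: term counts over lowercase words (stand-in for a neural encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Pre-computed "index"; FAISS or Pinecone would hold real embedding vectors here.
INDEX = {doc_id: embed(text) for doc_id, text in KNOWLEDGE_BASE.items()}

def suggest(transcript_chunk, k=1):
    """Return the ids of the k snippets most similar to the live transcript."""
    query = embed(transcript_chunk)
    ranked = sorted(INDEX, key=lambda doc_id: cosine(query, INDEX[doc_id]), reverse=True)
    return ranked[:k]

print(suggest("customer says their card was stolen and wants to block it"))
```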

4. Intelligent Call Routing and Queuing

AI-based routing uses predictive analytics and reinforcement learning (RL) to dynamically assign incoming interactions.

Routing Criteria:

Customer intent + sentiment

Agent skill level and availability

Predicted handle time (via regression models)

Customer lifetime value (CLV)

Model Stack:

Intent Detection: Multi-label classifiers (e.g., fine-tuned RoBERTa)

Queue Prediction: Time-series forecasting (e.g., Prophet, LSTM)

RL-based Routing: Models trained via Q-learning or Proximal Policy Optimization (PPO) to optimize wait time vs. resolution rate
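Before an RL policy is trained, routing is often bootstrapped with a hand-written rule set over the same criteria. The sketch below is such a heuristic baseline, not an RL model. It keeps only available agents with the right skill, reserves senior agents for upset or high-value customers, and picks the agent with the lowest predicted handle time. The `Agent`/`Interaction` fields, the `"senior"` skill tag, and the thresholds are assumptions.

```python
from dataclasses import dataclass

@dataclass
class Agent:
    name: str
    skills: set
    available: bool
    predicted_handle_min: float  # e.g., output of a handle-time regression model

@dataclass
class Interaction:
    intent: str
    sentiment: float  # -1.0 (very negative) to +1.0 (very positive)
    clv: float        # customer lifetime value in a consistent currency unit

def route(interaction, agents, high_value_clv=10_000, upset_below=-0.5):
    """Return the chosen agent's name, or None to keep the interaction queued."""
    candidates = [a for a in agents if a.available and interaction.intent in a.skills]
    if interaction.sentiment < upset_below or interaction.clv >= high_value_clv:
        senior = [a for a in candidates if "senior" in a.skills]
        candidates = senior or candidates  # fall back if no senior agent is free
    if not candidates:
        return None
    return min(candidates, key=lambda a: a.predicted_handle_min).name

agents = [
    Agent("asha", {"billing"}, True, 6.0),
    Agent("ravi", {"billing", "senior"}, True, 9.0),
    Agent("meera", {"billing", "senior"}, False, 4.0),
]
print(route(Interaction("billing", 0.2, 500), agents))   # → asha
print(route(Interaction("billing", -0.8, 500), agents))  # → ravi
```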

5. Knowledge Mining and Retrieval-Augmented Generation (RAG)

Large contact centers manage thousands of documents, SOPs, and product manuals. AI facilitates rapid knowledge access through:

Vector Embedding of documents (e.g., using OpenAI, Cohere, or Hugging Face models)

Retrieval-Augmented Generation (RAG): Combines dense retrieval with LLMs for grounded responses

Semantic Search: Replaces keyword-based search with intent-aware queries

This enables agents and bots to answer complex questions with dynamic, accurate information.
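The "augmented" part of RAG is mostly prompt assembly: retrieved passages go in front of the question, together with instructions to stay grounded in them. The sketch below assumes the passages were already ranked by a retriever. The instruction wording and the character budget, a crude proxy for a token limit, are illustrative. Sending the prompt to an LLM is not shown.

```python
def build_grounded_prompt(question, passages, max_chars=600):
    """Assemble a RAG prompt from ranked (source_id, text) pairs plus the user question."""
    context, used = [], 0
    for source_id, text in passages:
        snippet = f"[{source_id}] {text}"
        if used + len(snippet) > max_chars:
            break  # stop before exceeding the context budget
        context.append(snippet)
        used += len(snippet)
    return (
        "Answer using only the sources below and cite their ids. "
        "If the sources do not contain the answer, say so.\n\n"
        + "\n".join(context)
        + f"\n\nQuestion: {question}\nAnswer:"
    )

prompt = build_grounded_prompt(
    "How long do refunds take?",
    [("refund-policy", "Refunds are issued to the original payment method within 7 days."),
     ("card-block", "To block a lost card, verify identity and freeze the card.")],
)
print(prompt)
```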

6. Customer Journey Analytics and Predictive Modeling

AI enables real-time customer journey mapping and predictive support.

Key ML Models:

Churn Prediction: Gradient Boosted Trees (XGBoost, LightGBM)

Propensity Modeling: Logistic regression and deep neural networks to predict upsell potential

Anomaly Detection: Autoencoders flag unusual user behavior or possible fraud

Streaming Frameworks:

Apache Kafka / Flink / Spark Streaming for ingesting and processing customer signals (page views, clicks, call events) in real time

These insights are visualized through BI dashboards or fed back into orchestration engines to trigger proactive interventions.
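A logistic-regression propensity model scores each customer as the sigmoid of a weighted sum of features. The feature names, weights, and bias below are hypothetical placeholders for coefficients that would normally be fitted on historical data.

```python
import math

# Hypothetical coefficients; in practice they come from fitting a logistic
# regression on historical upsell outcomes.
WEIGHTS = {"visits_last_30d": 0.08, "support_tickets_open": -0.6, "tenure_years": 0.3}
BIAS = -2.0

def upsell_propensity(features):
    """P(upsell) = sigmoid(w . x + b) for a single customer."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

engaged = {"visits_last_30d": 25, "support_tickets_open": 0, "tenure_years": 3}
frustrated = {"visits_last_30d": 5, "support_tickets_open": 3, "tenure_years": 1}
print(round(upsell_propensity(engaged), 3), round(upsell_propensity(frustrated), 3))
```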

7. Automation & RPA Integration

Routine post-call processes like updating CRMs, issuing refunds, or sending emails are handled via AI + RPA integration.

Tools:

UiPath, Automation Anywhere, Microsoft Power Automate

Workflows triggered via APIs or event listeners (e.g., on call disposition)

AI models can determine intent, then trigger the appropriate bot to complete the action in backend systems (ERP, CRM, databases)
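The intent-to-action hand-off can be sketched as an event listener that looks up which automation to run. The intents, event fields, and the two action functions are hypothetical. In a real deployment, each action would trigger an RPA bot or call the CRM/ERP API.

```python
from typing import Callable, Dict

# Hypothetical back-office actions; each would normally start an RPA bot
# or call a CRM/ERP API.
def issue_refund(event):
    return f"refund queued for order {event['order_id']}"

def update_crm(event):
    return f"CRM note added for customer {event['customer_id']}"

ACTIONS: Dict[str, Callable[[dict], str]] = {
    "refund_request": issue_refund,
    "general_inquiry": update_crm,
}

def on_call_disposition(event):
    """Event listener: map the detected intent to the automation that completes it."""
    action = ACTIONS.get(event["intent"])
    if action is None:
        return "no automation; routed to manual work queue"
    return action(event)

print(on_call_disposition({"intent": "refund_request", "order_id": "A-1001", "customer_id": "C-7"}))
```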

8. Security, Compliance, and Ethical AI

As AI handles more sensitive data, contact centers embed security at multiple levels:

Voice biometrics for authentication (e.g., Nuance, Pindrop)

PII Redaction via entity recognition models

Audit Trails of AI decisions for compliance (especially in finance/healthcare)

Bias Monitoring Pipelines to detect model drift or demographic skew

Data governance frameworks like ISO 27001, GDPR, and SOC 2 compliance are standard in enterprise AI deployments.
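PII redaction normally relies on an entity-recognition model, but regular expressions make the mechanics easy to see. This sketch is a simplified stand-in. The patterns are illustrative, will miss many formats, and do not catch names at all, which is why production systems use NER models.

```python
import re

# Regex stand-ins for an entity-recognition model; illustrative, not exhaustive.
# Order matters: card numbers are redacted before the broader phone pattern runs.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
    ("PHONE", re.compile(r"\+?\d[\d -]{8,}\d")),
]

def redact(transcript):
    """Replace each matched span with a [LABEL] placeholder before storage or logging."""
    for label, pattern in PII_PATTERNS:
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript

out = redact("I'm Priya, email priya.s@example.com, card 4111 1111 1111 1111, phone +91 98765 43210.")
print(out)
```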

Final Thoughts

AI in 2025 has moved far beyond simple automation. It now orchestrates entire contact center ecosystems—powering conversational agents, augmenting human reps, automating back-office workflows, and delivering predictive intelligence in real time.

The technical stack is increasingly cloud-native, model-driven, and infused with real-time analytics. For engineering teams, the focus is now on building scalable, secure, and ethical AI infrastructures that deliver measurable impact across customer satisfaction, cost savings, and employee productivity.

As AI models continue to advance, contact centers will evolve into fully adaptive systems, capable of learning, optimizing, and personalizing in real time. The revolution is already here—and it's deeply technical.

#AI-based contact center#conversational AI in contact centers#natural language processing (NLP)#virtual agents for customer service#real-time sentiment analysis#AI agent assist tools#speech-to-text AI#AI-powered chatbots#contact center automation#AI in customer support#omnichannel AI solutions#AI for customer experience#predictive analytics contact center#retrieval-augmented generation (RAG)#voice biometrics security#AI-powered knowledge base#machine learning contact center#robotic process automation (RPA)#AI customer journey analytics

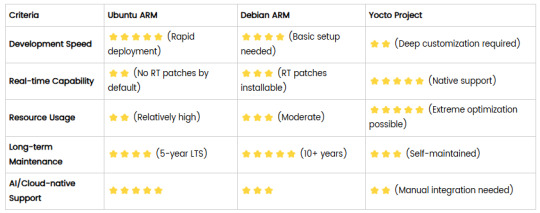

Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: MicroK8s, Docker, and other Kubernetes tooling are easy to install (e.g., via Snap), which makes Ubuntu well suited to edge-cloud collaboration.

Long-term support (LTS): 5 years of security updates, meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and ARM builds of databases (e.g., PostgreSQL) via APT and the Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision models (e.g., ROS 2 + Ubuntu) on Jetson devices.

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Minimal installation requires only 128MB RAM; GUI-free versions available.

Long-term support: About 5 years of security updates per release through Debian LTS, extendable to 10 years through the commercially funded Extended LTS (ELTS) program.

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses.

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

Critical systems (medical/transport): A stable base for products pursuing IEC 62304/DO-178C certification (certification applies to the complete system, not to the OS alone).

Limitations

Older software versions (e.g., default GCC version); newer features require backports.
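A Modbus-to-MQTT gateway mostly reshapes data: it reads 16-bit registers and publishes structured messages. The sketch below covers only that translation step. It assumes a float32 value stored across two holding registers in big-endian word order, and the topic naming scheme is hypothetical. The actual Modbus read and MQTT publish, for example with libraries such as pymodbus or paho-mqtt, are omitted.

```python
import json
import struct
import time

def registers_to_float(high, low):
    """Combine two 16-bit Modbus registers into an IEEE-754 float32.

    Assumes big-endian word order (high word first); some devices swap words.
    """
    return struct.unpack(">f", struct.pack(">HH", high, low))[0]

def to_mqtt_message(device_id, registers):
    """Map raw register reads to an MQTT topic and JSON payload (hypothetical naming scheme)."""
    temperature = registers_to_float(registers[0], registers[1])
    topic = f"factory/line1/{device_id}/telemetry"
    payload = json.dumps({"temperature_c": round(temperature, 2), "ts": int(time.time())})
    return topic, payload

# 0x41BC 0x0000 encodes 23.5 as a big-endian float32
topic, payload = to_mqtt_message("plc-07", [0x41BC, 0x0000])
print(topic, payload)
```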

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports Xenomai/Preempt-RT patches for μs-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Industrial-grade features such as SELinux (via the meta-selinux layer) and dm-verity can be built into the image.

Use Cases

Custom industrial devices: Requires specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

High real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running lightweight stacks (e.g., Zephyr+FreeRTOS hybrid deployment).

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: RTOS built with Yocto (controlling robotic arms)

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.

UpgradeMySkill: Where Ambition Meets Expertise

Success today belongs to those who never stop learning. In an era defined by innovation, digital transformation, and global competition, upgrading your skills isn’t just an advantage — it’s a necessity. Whether you’re aiming for your next promotion, planning a career switch, or striving to sharpen your expertise, UpgradeMySkill is the partner you need to shape a brighter future.

At UpgradeMySkill, we transform ambition into achievement through world-class training, globally recognized certifications, and practical learning experiences.

Our Vision: Empowering Careers, One Skill at a Time

Learning is powerful — it unlocks doors, creates opportunities, and fuels personal and professional growth. UpgradeMySkill exists to make that power accessible to everyone.

We offer a rich portfolio of professional certification courses designed to enhance skills, boost confidence, and enable real-world success. From IT professionals and project managers to cybersecurity specialists and business leaders, we help learners across the globe build the capabilities they need to thrive.

Comprehensive Training That Sets You Apart

At UpgradeMySkill, we don't just teach — we transform. Our programs are built to deliver measurable value, equipping you with the skills employers demand today and tomorrow. Our core offerings include:

Project Management Certifications: PMP®, PRINCE2®, PMI-ACP®, CAPM®

IT Service Management Training: ITIL® 4 Foundation, Intermediate, and Expert

Agile and Scrum: Scrum Master, SAFe® Agilist, and Agile Practitioner courses

Cloud Computing Courses: AWS Certified Solutions Architect, Azure Fundamentals, Google Cloud Engineer

Cybersecurity Certifications: CISSP®, CISM®, CEH®, CompTIA Security+

Data Science and Machine Learning: Big Data Analysis, AI, and ML

DevOps Tools and Techniques: Jenkins, Docker, Kubernetes, Ansible

Each program is designed to deliver real-world skills that you can apply immediately to your work environment.

Why Choose UpgradeMySkill?

There are many places to learn, but few places to master. Here’s what makes UpgradeMySkill stand out:

1. Trusted and Accredited

Our certifications are recognized globally and are accredited by top organizations. With us, you don’t just earn a certificate; you earn a competitive advantage.

2. Experienced Trainers

Our instructors bring decades of industry experience. They provide deep insights, practical frameworks, and exam strategies that go beyond textbook learning.

3. Flexible Learning Options

We know your time is valuable. That’s why we offer flexible modes of learning:

Live online training

Classroom sessions

Self-paced e-learning

Customized corporate training programs

Learn the way that works best for you — anytime, anywhere.

4. Career-Focused Approach

We believe training should translate into real results. Our hands-on sessions, real-world case studies, and post-training support are all designed to help you advance faster and smarter.

5. Lifetime Learning Support

Even after your course ends, our commitment to you doesn’t. Our alumni have access to updated study materials, webinars, and career resources to keep them ahead of the curve.

Serving Individuals and Corporations Globally

Whether you're an individual professional charting your personal growth path or an enterprise seeking to boost your team's capabilities, UpgradeMySkill has a solution for you.

Individuals: Advance your career with new certifications and skills that employers value.

Enterprises: Develop high-performing teams with our customized training programs aligned to your business goals.

We have successfully delivered corporate training programs to Fortune 500 companies, startups, government agencies, and global enterprises, always with measurable outcomes and client satisfaction.

Testimonials That Inspire

"The PMP® training program by UpgradeMySkill was exceptional. The real-world examples, mock tests, and constant support helped me pass on my first attempt. Highly recommend it to anyone serious about project management!" – Abhinav R., Senior Project Manager

"Our IT team completed AWS training through UpgradeMySkill. The sessions were insightful and hands-on, and our cloud migration project became much smoother thanks to the new skills we acquired." – Meera S., IT Director

These success stories are a testament to our quality, commitment, and effectiveness.

Your Future Is Waiting — Are You Ready?

Knowledge is the foundation of opportunity. Skills are the currency of success. Certification is the key that unlocks new doors.

Don’t let hesitation hold you back. Take control of your career journey today. Trust UpgradeMySkill to equip you with the tools, knowledge, and confidence to climb higher, faster, and farther.

Upgrade your skills. Upgrade your future. Upgrade with us.

#upgrademyskill#careergrowth#professionaldevelopment#projectmanagement#agiletraining#agilescrum#agiletransformation#careerdevelopment#processimprovement#scrumtraining

Unlock Your Full Potential with Techmindz's Advanced MERN Stack Course in Kochi 🚀💻

Are you ready to take your web development skills to the next level? If you already have a foundational understanding of web development and want to specialize in full-stack JavaScript development, Techmindz in Kochi offers the Advanced MERN Stack Course to help you master one of the most powerful and modern web development stacks today! 🌟

Why Choose the MERN Stack for Web Development? 🧩

The MERN Stack is an integrated suite of JavaScript technologies that enables developers to build dynamic, scalable, and high-performance web applications. MERN stands for MongoDB, Express.js, React.js, and Node.js—each a powerful technology on its own. When combined, they create a seamless full-stack environment that’s ideal for developing modern web applications. 🌐

The demand for MERN Stack developers is increasing globally due to the versatility and efficiency of the stack. With the Advanced MERN Stack Course in Kochi at Techmindz, you can position yourself at the forefront of web development.

What Will You Learn in the Advanced MERN Stack Course at Techmindz? 📚

Techmindz has designed an advanced curriculum that provides both theoretical knowledge and practical hands-on experience. By the end of the course, you will be well-versed in every component of the MERN stack and be ready to develop cutting-edge applications. Here's a breakdown of what you'll learn:

1. Advanced Node.js & Express.js 🔧

Node.js: Dive deep into Node.js and learn advanced concepts like asynchronous programming, streams, buffers, and more.

Express.js: Understand how to build robust, scalable, and efficient back-end systems with Express. Learn routing, middleware, authentication, and more to build a secure and optimized back-end.

2. Mastering React.js ⚛️

Learn advanced React concepts, including React Hooks and state management with Redux and the Context API.

Explore advanced features such as Server-Side Rendering (SSR) and React Suspense, along with React Native (React's companion framework for mobile apps), to build high-performance, scalable applications.

3. MongoDB in Depth 🗃️

Master MongoDB, a NoSQL database that stores data as flexible, JSON-like documents. Learn how to design efficient databases, perform aggregation operations, and handle data modeling for complex web applications.

4. API Development & Integration 🔌

Learn how to build RESTful APIs using Node.js and Express.js, and integrate them with the front-end.

Dive into API authentication and authorization, and learn to secure your applications with JSON Web Tokens (JWT) and other security techniques.
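To make the JWT idea concrete, here is a minimal sketch of how an HS256-signed token is created and verified. It is written in Python with only the standard library so it runs on its own. In an Express back end you would normally use a maintained library, such as the `jsonwebtoken` npm package, rather than writing this by hand. The function names `sign_hs256` and `verify_hs256` and the secret value are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    # JWT segments use unpadded base64url encoding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # Restore the padding stripped during encoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(payload: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_hs256(token: str, secret: bytes) -> dict:
    """Return the payload if the signature and `exp` claim are valid, else raise ValueError."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        # Reject other algorithms (including "none") instead of trusting the header.
        raise ValueError("unexpected algorithm")
    signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("invalid signature")
    payload = json.loads(_b64url_decode(payload_b64))
    if "exp" in payload and time.time() >= payload["exp"]:
        raise ValueError("token expired")
    return payload


if __name__ == "__main__":
    secret = b"change-me"  # hypothetical secret; load from configuration in practice
    token = sign_hs256({"sub": "user-42", "exp": int(time.time()) + 3600}, secret)
    print(verify_hs256(token, secret)["sub"])  # user-42
```

The server trusts only the signature and the expiry claim, never the algorithm named in the token header. This is why the sketch pins the algorithm to HS256.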

5. Advanced Features & Tools 🛠️

Learn about WebSockets for real-time communication in web apps.

Master deployment tools such as Docker and Kubernetes, and cloud platforms such as AWS, to deploy and scale your applications effectively.

6. Building Full-Stack Applications 🌍

You will build a complete, full-stack application from scratch using the MERN stack. This will include implementing a front-end React app, connecting it with a back-end Node.js/Express API, and integrating MongoDB to store data.

Why Techmindz is the Best Choice for Your Advanced MERN Stack Training in Kochi? 🎯

Choosing Techmindz for your Advanced MERN Stack Course in Kochi gives you an edge in the highly competitive tech world. Here’s why Techmindz is the ideal training institution:

1. Industry-Experienced Trainers 🧑‍🏫👩‍🏫

At Techmindz, we believe in learning from experts. Our trainers are professionals with years of experience using the MERN stack in real-world projects. They share insights into industry practices and optimization techniques, and they help you get ahead in your career.

2. Hands-On Learning Approach 💡

Theory without practice can only take you so far. That's why our course emphasizes hands-on learning. You’ll work on real-world projects, coding challenges, and case studies to apply the concepts you’ve learned in class.

3. Up-to-Date Curriculum 📖

We update our course material to keep up with the latest trends and technologies in the MERN stack. Whether it’s new features in React or the latest developments in MongoDB, we ensure you’re always ahead of the curve.

4. Personalized Guidance & Mentorship 🤝

We offer one-on-one mentorship, where you can receive personalized attention and guidance to help you solve challenges and advance through the course with confidence.

5. Job Assistance & Placement Support 💼

At Techmindz, we not only train you but also help you secure a job. Our placement support team works closely with you, helping you with resume preparation, mock interviews, and connecting you with top employers looking for skilled MERN stack developers.

6. Flexible Learning Options ⏳

We understand that everyone has different learning preferences, so we offer:

Classroom Training: Attend hands-on sessions in our Kochi-based institute.

Online Training: Take part in live classes from the comfort of your home.

Self-Paced Learning: Access video tutorials and learn at your own pace.

What are the Career Opportunities for MERN Stack Developers? 💼

After completing the Advanced MERN Stack Course, you will be qualified for various exciting career roles, including:

Full Stack Developer

React Developer

Node.js Developer

MERN Stack Developer

Back-End Developer

Front-End Developer

As a MERN stack developer, you’ll have access to numerous job opportunities in the tech industry. MERN stack developers are in high demand because they can develop both the front-end and back-end of modern web applications.

Conclusion: Start Your Journey as an Expert MERN Stack Developer with Techmindz! 🚀

Whether you're an aspiring developer looking to enhance your skills or a professional aiming to specialize in full-stack JavaScript development, the Advanced MERN Stack Course at Techmindz in Kochi is the perfect opportunity for you. With our expert trainers, hands-on projects, and comprehensive curriculum, you’ll be ready to take on the challenges of building sophisticated, full-stack web applications.

Enroll now to become an Advanced MERN Stack Developer and open the doors to a successful career in web development! 🌐

Level Up Your Software Development Skills: Join Our Unique DevOps Course

Would you like to increase your knowledge of software development? Look no further! Our unique DevOps course is the perfect opportunity to upgrade your skillset and pave the way for accelerated career growth in the tech industry. In this article, we will explore the key components of our course, reasons why you should choose it, the remarkable placement opportunities it offers, and the numerous benefits you can expect to gain from joining us.

Key Components of Our DevOps Course

Our DevOps course is meticulously designed to provide you with a comprehensive understanding of the DevOps methodology and equip you with the necessary tools and techniques to excel in the field. Here are the key components you can expect to delve into during the course:

1. Understanding DevOps Fundamentals

Learn the core principles and concepts of DevOps, including continuous integration, continuous delivery, infrastructure automation, and collaboration techniques. Gain insights into how DevOps practices can enhance software development efficiency and communication within cross-functional teams.

2. Mastering Cloud Computing Technologies

Immerse yourself in cloud computing platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform. Gain hands-on experience deploying applications, managing serverless architectures, and using containerization and orchestration technologies such as Docker and Kubernetes for scalable, efficient deployment.

3. Automating Infrastructure as Code