#LangGraph



AI News Brief 🧞♀️: The Ultimate Guide to LangChain and LangGraph: Which One is Right for You?

#AgenticAI #LangChain #LangGraph #AIFrameworks #MachineLearning #DeepLearning #NaturalLanguageProcessing #NLP #AIApplications #ArtificialIntelligence #AIDevelopment #AIResearch #AIEngineering #AIInnovation #AIIndustry #AICommunity #AIExperts #AIEnthusiasts #AIStartups #AIBusiness #AIFuture #AIRevolution #dozers #FraggleRock #Spideysense #Spiderman #MarvelComics #JimHenson #SpiderSense #FieldsOfTheNephilim



Automate customer support with Amazon Bedrock, LangGraph, and Mistral models




In this post, we demonstrate how to use Amazon Bedrock and LangGraph to build a personalized customer support experience for an ecommerce retailer. By integrating the Mistral Large 2 and Pixtral Large models, we guide you through automating key cust #AI #ML #Automation



In this tutorial, we demonstrate how to build a multi-step, intelligent query-handling agent using LangGraph and Gemini 1.5 Flash. The core idea is to structure AI reasoning as a stateful workflow, where an incoming query is passed through a series of purposeful nodes: routing, analysis, research, response generation, and validation. Each node operates as a functional block with a well-defined role, making the agent not just reactive but analytically aware. Using LangGraph's StateGraph, we orchestrate these nodes to create a looping system that can re-analyze and improve its output until the response is validated as complete or a maximum iteration threshold is reached.

```
!pip install langgraph langchain-google-genai python-dotenv
```

First, the command `!pip install langgraph langchain-google-genai python-dotenv` installs three Python packages essential for building intelligent agent workflows. langgraph enables graph-based orchestration of AI agents, langchain-google-genai provides integration with Google's Gemini models, and python-dotenv allows loading environment variables from .env files.

```python
import os
from typing import Dict, Any, List
from dataclasses import dataclass
from langgraph.graph import StateGraph, END
from langchain_google_genai import ChatGoogleGenerativeAI
# langchain_core is installed with langgraph and langchain-google-genai;
# `from langchain.schema import ...` would need the separate `langchain` package
from langchain_core.messages import HumanMessage, SystemMessage
import json

os.environ["GOOGLE_API_KEY"] = "Use Your API Key Here"
```

We import essential modules and libraries for building agent workflows, including ChatGoogleGenerativeAI for interacting with Gemini models and StateGraph for managing conversational state. The line `os.environ["GOOGLE_API_KEY"] = "Use Your API Key Here"` assigns the API key to an environment variable, allowing the Gemini model to authenticate and generate responses.
```python
@dataclass
class AgentState:
    """State shared across all nodes in the graph"""
    query: str = ""
    context: str = ""
    analysis: str = ""
    response: str = ""
    next_action: str = ""
    iteration: int = 0
    max_iterations: int = 3
```

This AgentState dataclass defines the shared state that persists across different nodes in a LangGraph workflow. It tracks key fields, including the user's query, retrieved context, any analysis performed, the generated response, and the recommended next action. It also includes an iteration counter and a max_iterations limit to control how many times the workflow can loop, enabling iterative reasoning or decision-making by the agent.
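LangGraph merges the dict each node returns into the shared state, overwriting only the keys the node names (for fields without a reducer). A minimal dependency-free approximation of that merge step, using `dataclasses.replace` on the same fields; this illustrates the idea and is not LangGraph's internal code, and `apply_update` is a hypothetical helper:

```python
from dataclasses import dataclass, replace


@dataclass
class AgentState:
    """Same fields as the tutorial's shared state."""
    query: str = ""
    context: str = ""
    analysis: str = ""
    response: str = ""
    next_action: str = ""
    iteration: int = 0
    max_iterations: int = 3


def apply_update(state: AgentState, update: dict) -> AgentState:
    """Return a new state with only the keys in `update` overwritten.

    Approximates "last value wins" for fields that have no reducer.
    Unknown keys raise a TypeError, as dataclasses.replace passes them
    straight to the constructor.
    """
    return replace(state, **update)


s0 = AgentState(query="Explain quantum computing")
s1 = apply_update(s0, {"analysis": "needs research", "next_action": "research"})
print(s1.query, "|", s1.next_action)
```

The original state object is left untouched, which mirrors how each node only describes its changes rather than mutating shared data.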
```python
class GraphAIAgent:
    def __init__(self, api_key: str = None):
        if api_key:
            os.environ["GOOGLE_API_KEY"] = api_key

        # General-purpose model for routing, research, and answer generation
        self.llm = ChatGoogleGenerativeAI(
            model="gemini-1.5-flash",
            temperature=0.7,
            convert_system_message_to_human=True
        )
        # Lower-temperature model for analysis and validation
        self.analyzer = ChatGoogleGenerativeAI(
            model="gemini-1.5-flash",
            temperature=0.3,
            convert_system_message_to_human=True
        )
        self.graph = self._build_graph()

    def _build_graph(self):
        """Build and compile the LangGraph workflow"""
        workflow = StateGraph(AgentState)

        workflow.add_node("router", self._router_node)
        workflow.add_node("analyzer", self._analyzer_node)
        workflow.add_node("researcher", self._researcher_node)
        workflow.add_node("responder", self._responder_node)
        workflow.add_node("validator", self._validator_node)

        workflow.set_entry_point("router")
        workflow.add_edge("router", "analyzer")
        # The analyzer's next_action selects the branch
        workflow.add_conditional_edges(
            "analyzer",
            self._decide_next_step,
            {"research": "researcher", "respond": "responder"}
        )
        workflow.add_edge("researcher", "responder")
        workflow.add_edge("responder", "validator")
        # Loop back to the analyzer until the response passes validation
        workflow.add_conditional_edges(
            "validator",
            self._should_continue,
            {"continue": "analyzer", "end": END}
        )
        return workflow.compile()

    def _router_node(self, state: AgentState) -> Dict[str, Any]:
        """Route and categorize the incoming query"""
        system_msg = """You are a query router. Analyze the user's query and provide context.
Determine if this is a factual question, creative request, problem-solving task, or analysis."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"Query: {state.query}")
        ]
        response = self.llm.invoke(messages)
        return {"context": response.content}

    def _analyzer_node(self, state: AgentState) -> Dict[str, Any]:
        """Analyze the query and determine the approach"""
        system_msg = """Analyze the query and context. Determine if additional research is needed
or if you can provide a direct response. Be thorough in your analysis."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
Query: {state.query}
Context: {state.context}
Previous Analysis: {state.analysis}
""")
        ]
        response = self.analyzer.invoke(messages)
        analysis = response.content
        # Simple keyword heuristic to choose the next node
        if "research" in analysis.lower() or "more information" in analysis.lower():
            next_action = "research"
        else:
            next_action = "respond"
        return {"analysis": analysis, "next_action": next_action}

    def _researcher_node(self, state: AgentState) -> Dict[str, Any]:
        """Conduct additional research or information gathering"""
        system_msg = """You are a research assistant. Based on the analysis, gather relevant
information and insights to help answer the query comprehensively."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
Query: {state.query}
Analysis: {state.analysis}
Research focus: Provide detailed information relevant to the query.
""")
        ]
        response = self.llm.invoke(messages)
        updated_context = f"{state.context}\n\nResearch: {response.content}"
        return {"context": updated_context}

    def _responder_node(self, state: AgentState) -> Dict[str, Any]:
        """Generate the final response"""
        system_msg = """You are a helpful AI assistant. Provide a comprehensive, accurate,
and well-structured response based on the analysis and context provided."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
Query: {state.query}
Context: {state.context}
Analysis: {state.analysis}
Provide a complete and helpful response.
""")
        ]
        response = self.llm.invoke(messages)
        return {"response": response.content}

    def _validator_node(self, state: AgentState) -> Dict[str, Any]:
        """Validate the response quality and completeness"""
        system_msg = """Evaluate if the response adequately answers the query.
Return 'COMPLETE' if satisfactory, or 'NEEDS_IMPROVEMENT' if more work is needed."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
Original Query: {state.query}
Response: {state.response}
Is this response complete and satisfactory?
""")
        ]
        response = self.analyzer.invoke(messages)
        validation = response.content
        return {
            "context": f"{state.context}\n\nValidation: {validation}",
            # The validator runs once per loop, so counting here bounds the loop
            "iteration": state.iteration + 1,
        }

    def _decide_next_step(self, state: AgentState) -> str:
        """Decide whether to research or respond directly"""
        return state.next_action

    def _should_continue(self, state: AgentState) -> str:
        """Decide whether to continue iterating or end"""
        if state.iteration >= state.max_iterations:
            return "end"
        if "COMPLETE" in state.context:
            return "end"
        if "NEEDS_IMPROVEMENT" in state.context:
            return "continue"
        return "end"

    def run(self, query: str) -> str:
        """Run the agent with a query"""
        initial_state = AgentState(query=query)
        result = self.graph.invoke(initial_state)
        return result["response"]
```

The GraphAIAgent class defines a LangGraph-based AI workflow that uses Gemini models to iteratively analyze, research, respond to, and validate answers to user queries. It uses modular nodes (router, analyzer, researcher, responder, and validator) to reason through complex tasks, refining responses through controlled iterations. Because the validator advances the iteration counter on every pass, the analyzer-to-validator loop stops after at most max_iterations validations even if the model never answers COMPLETE; if only the router incremented it, the counter would stay at 1 and the loop would be bounded only by LangGraph's recursion limit.
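The substring checks in `_should_continue` are easy to trip. "INCOMPLETE" also contains "COMPLETE", and searching the whole accumulated context can match an older verdict. Below is a minimal sketch of a stricter decision function. It assumes the validator's latest verdict is available as its own string. The `verdict` argument and `should_continue` are hypothetical: the tutorial's AgentState has no such field, so you would need to add one that the validator overwrites on each pass.

```python
import re


def should_continue(verdict: str, iteration: int, max_iterations: int) -> str:
    """Return "continue" to loop back to the analyzer, or "end" to stop.

    Stops once the iteration budget is spent; otherwise looks for the
    validator's keywords as whole words in the latest verdict only.
    """
    if iteration >= max_iterations:
        return "end"
    # \b word boundaries mean "INCOMPLETE" does not count as "COMPLETE"
    if re.search(r"\bNEEDS_IMPROVEMENT\b", verdict):
        return "continue"
    if re.search(r"\bCOMPLETE\b", verdict):
        return "end"
    # Unrecognised verdicts end the loop, like the tutorial's default branch
    return "end"


print(should_continue("NEEDS_IMPROVEMENT: add examples", 1, 3))
print(should_continue("NEEDS_IMPROVEMENT", 3, 3))
```

Checking NEEDS_IMPROVEMENT first is a design choice: a verdict that mentions both words is treated as a request for another pass.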
```python
def main():
    agent = GraphAIAgent("Use Your API Key Here")

    test_queries = [
        "Explain quantum computing and its applications",
        "What are the best practices for machine learning model deployment?",
        "Create a story about a robot learning to paint"
    ]

    print("🤖 Graph AI Agent with LangGraph and Gemini")
    print("=" * 50)

    for i, query in enumerate(test_queries, 1):
        print(f"\n📝 Query {i}: {query}")
        print("-" * 30)
        try:
            response = agent.run(query)
            print(f"🎯 Response: {response}")
        except Exception as e:
            print(f"❌ Error: {str(e)}")
        print("\n" + "=" * 50)


if __name__ == "__main__":
    main()
```

Finally, the main() function initializes the GraphAIAgent with a Gemini API key and runs it on a set of test queries covering technical, strategic, and creative tasks. It prints each query and the AI-generated response, showing how the LangGraph-driven agent processes diverse types of input using Gemini's reasoning and generation capabilities.

In conclusion, by combining LangGraph's structured state machine with Gemini's conversational abilities, this agent mirrors human reasoning cycles of inquiry, analysis, and validation. The tutorial provides a modular and extensible template for developing AI agents that can handle a range of tasks, from answering complex queries to generating creative content.

Asif Razzaq is the CEO of Marktechpost Media Inc.



LLM agents: docile tools or nascent insurgency? Frameworks promise automation, but I smell a jailbreak. Weaponize your workflow. #AgentZero



LangGraph: Building Smarter AI Agents with Graph-Based Workflows

In the world of AI development, creating smarter agents that can handle complex tasks requires more than just coding. It often involves graph-based AI workflows, where logic, data, and decision-making flow seamlessly across systems. Enter LangGraph, a powerful framework that's redefining the way we build AI agents and orchestrate AI workflows.

In this blog, we’ll explore how LangGraph helps you design smarter AI agents through graph-based workflows, and how it enables easy management, optimization, and scalability of AI-driven processes.

What is LangGraph?

LangGraph is a framework built to help developers create AI workflows using graph-based structures. The idea is simple: just like flowcharts or decision trees, you design workflows as graphs. Each node represents a task or decision, and the edges define which node runs next. Data travels between nodes through a shared state object that each node reads and updates. This approach makes it easier to understand and manage complex AI logic.
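To make the node/edge/state split concrete, here is a dependency-free sketch of the idea. Nodes are functions that return partial state updates, edges say which node runs next, and a shared dict carries the data. It mimics the shape of LangGraph's StateGraph but is not its API; `run_graph` and the node names are hypothetical.

```python
from typing import Callable, Dict

State = Dict[str, object]
END = "__end__"


def run_graph(nodes: Dict[str, Callable[[State], State]],
              edges: Dict[str, str],
              entry: str,
              state: State) -> State:
    """Run nodes starting at `entry`, following fixed edges until END."""
    current = entry
    while current != END:
        update = nodes[current](state)   # a node returns only the keys it changes
        state = {**state, **update}      # merge the update into the shared state
        current = edges[current]         # the edge decides what runs next
    return state


def clean(state: State) -> State:
    return {"text": str(state["text"]).strip().lower()}


def count_words(state: State) -> State:
    return {"words": len(str(state["text"]).split())}


result = run_graph(
    nodes={"clean": clean, "count": count_words},
    edges={"clean": "count", "count": END},
    entry="clean",
    state={"text": "  Graphs Make Flows Explicit  "},
)
print(result)
```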

At its core, LangGraph's architecture supports modular AI workflow design. By breaking tasks down into individual components or subgraphs, developers can build more flexible, reusable, and scalable AI solutions.

Why Use Graph-Based AI Workflows?

Building smarter AI agents requires managing complex decision-making processes. Traditional methods of creating AI agents often involve writing long, tangled code or strictly linear, step-by-step pipelines. Graph-based AI workflows change this by offering a more visual, intuitive way to organize and optimize these workflows.

With LangGraph, AI workflows become easier to design, visualize, and scale. You can quickly see how data flows through your system, where errors might occur, and how to optimize for better performance. Some key advantages of graph-based AI workflows include:

AI agent optimization through better management of data flow and decision-making.

Real-time AI agent management for more effective performance monitoring.

Efficient orchestration of multiple AI agents in one unified system.

LangGraph and Workflow Automation with AI

One of the biggest challenges in AI development is automating workflows effectively. With LangGraph, you can easily automate repetitive tasks and optimize the flow of data between different AI components.

Whether you're building a chatbot, a data analytics pipeline, or a machine learning model, workflow automation with AI helps ensure processes are completed faster and with fewer errors. Because tasks are modeled as a graph, LangGraph supports conditional edges. With a conditional edge, a routing function inspects the current state and chooses the next node, so a workflow can branch to a retry or fallback step when something goes wrong.
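Re-routing of this kind is something the developer wires in: a routing function reads the state and returns the name of the next node. Below is a minimal stdlib sketch of that pattern, with a hypothetical primary step that may fail and a fallback step. In LangGraph the equivalent wiring is done with `add_conditional_edges`, but this code does not use LangGraph, and all node names are illustrative.

```python
from typing import Callable, Dict

State = Dict[str, object]
END = "__end__"


def fetch_primary(state: State) -> State:
    # Hypothetical step that fails when the source is marked unavailable
    if state.get("primary_down"):
        return {"error": "primary source unavailable"}
    return {"data": "primary result", "error": None}


def fetch_fallback(state: State) -> State:
    return {"data": "fallback result", "error": None}


def route_after_primary(state: State) -> str:
    """Conditional edge: pick the next node from the current state."""
    return "fallback" if state.get("error") else END


NODES: Dict[str, Callable[[State], State]] = {
    "primary": fetch_primary,
    "fallback": fetch_fallback,
}
# Each node maps to a function that chooses its successor
ROUTES: Dict[str, Callable[[State], str]] = {
    "primary": route_after_primary,
    "fallback": lambda state: END,
}


def run(state: State) -> State:
    current = "primary"
    while current != END:
        state = {**state, **NODES[current](state)}
        current = ROUTES[current](state)
    return state


print(run({"primary_down": False})["data"])  # primary result
print(run({"primary_down": True})["data"])   # fallback result
```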

This approach leads to faster iterations and reduced development time, which is crucial for businesses looking to stay competitive in today’s fast-paced AI landscape.

The Power of Modular AI Workflow Design

LangGraph stands out by encouraging a modular design for AI workflows. Instead of building monolithic systems, developers can break down workflows into smaller, reusable modules or subgraphs. This has a number of benefits:

Reusability: You can use the same subgraph in different parts of the project or across multiple projects.

Maintainability: Changes in one module won’t disrupt the entire system, making debugging and improvements easier.

Scalability: It becomes much easier to scale workflows by simply adding or modifying individual modules rather than reworking the whole system.

For instance, if you’re building a recommendation engine or data pipeline, LangGraph lets you design each step as a separate module, linking them together into a complete system. This makes your AI solution not only efficient but also easy to adapt to future needs.
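In LangGraph, a compiled subgraph can itself be added as a node in a parent graph. The dependency-free sketch below shows the same composition idea. A hypothetical `make_pipeline` helper turns a list of steps into one callable, and that callable can then be reused as a single step inside a larger pipeline.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Step = Callable[[State], State]


def make_pipeline(steps: List[Step]) -> Step:
    """Compose steps into one step; each step returns a partial update."""
    def pipeline(state: State) -> State:
        for step in steps:
            state = {**state, **step(state)}
        return state
    return pipeline


# Reusable module ("subgraph"): normalise raw text
preprocess = make_pipeline([
    lambda s: {"text": str(s["text"]).strip()},
    lambda s: {"text": str(s["text"]).lower()},
])

# Parent pipeline reuses the module as a single step
analyse = make_pipeline([
    preprocess,
    lambda s: {"tokens": str(s["text"]).split()},
])

print(analyse({"text": "  Sci-Fi Movies  "})["tokens"])
```

Because `preprocess` has the same signature as any other step, it can be dropped into other pipelines unchanged. This is the reusability point made above.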

Visualizing AI Workflows

One of the key aspects of LangGraph is its focus on visualizing AI workflows. By mapping out workflows as graphs, developers can immediately see how data moves through the system, where decisions are made, and where potential bottlenecks lie.

This visual approach makes it much easier for teams to collaborate on AI projects and understand the logic behind each decision. A compiled graph can be rendered as a diagram. LangGraph Studio, a separate visual IDE in the LangGraph ecosystem, lets you watch and debug runs as they execute, helping you make smarter decisions faster.
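A compiled LangGraph graph can be rendered as a Mermaid diagram with `graph.get_graph().draw_mermaid()`. The underlying idea is simple enough to sketch without LangGraph: turn an edge list into Mermaid flowchart text. The `to_mermaid` helper below is hypothetical and handles only plain and labelled edges. The example edges reuse node names from the router/analyzer agent.

```python
from typing import List, Optional, Tuple

Edge = Tuple[str, str, Optional[str]]  # (source, target, optional label)


def to_mermaid(edges: List[Edge]) -> str:
    """Render edges as a top-down Mermaid flowchart."""
    lines = ["flowchart TD"]
    for src, dst, label in edges:
        arrow = f"-->|{label}|" if label else "-->"
        lines.append(f"    {src} {arrow} {dst}")
    return "\n".join(lines)


diagram = to_mermaid([
    ("router", "analyzer", None),
    ("analyzer", "researcher", "research"),
    ("analyzer", "responder", "respond"),
    ("researcher", "responder", None),
])
print(diagram)
```

Pasting the output into any Mermaid renderer shows the branch at the analyzer. That branch is exactly the kind of decision point the paragraph above says a diagram makes visible.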

How LangGraph Enhances LangChain Integration

For those familiar with LangChain, LangGraph is a natural partner. LangChain integration with LangGraph lets you take advantage of LangChain's language model components and combine them with LangGraph's graph-based workflow design.

By linking LLM-based AI agents in a graph-based structure, you can better manage AI tasks and ensure that the logic flows smoothly across multiple systems. For example, if you're building a complex chatbot, LangGraph can help you orchestrate its various AI components (such as natural language understanding, decision-making, and action-taking) in a clear, organized way.

This integration is key for businesses that rely on data-driven AI workflows, as it helps streamline the development of intelligent systems that are both scalable and easy to manage.

Real-Time AI Agent Management and Optimization

When building AI systems, real-time visibility is crucial. LangGraph lets you stream a graph's execution step by step, so you can see which nodes run and which paths are followed as the workflow executes. You can also pause a run at chosen points (interrupts) for human review before it continues.

Using graph logic, a LangGraph agent can adapt based on feedback during a run. Re-routing is not automatic, however. The developer defines conditional edges that inspect the state (for example, an error flag or a validator's verdict) and send execution to a different node when a step isn't performing as expected.

Conclusion: The Future of AI Workflows

In a world where scalable AI solutions are increasingly essential, LangGraph offers an innovative approach to managing complex AI workflows. Its combination of graph-based AI workflows, modular design, and real-time management helps developers build smarter, more efficient AI agents.

If you're looking to create advanced AI systems that can evolve and scale with your business, LangGraph is the framework you need. From visualizing AI workflows to orchestrating AI agents and automating tasks, LangGraph gives you the tools to build the next generation of intelligent applications.



Week#4 DES 303

The Experience: Experiment #1

Incentive & Initial Research

Following the previous ideation phase, I wanted to explore the use of LLMs in a design context. The first order of business was to find a unique area of exploration, especially given how the industry is already full of people attempting to shove AI into everything that doesn't need it.

Drawing from a positive experience working with AI for a design project, I want the end result of the experiment to focus on human-computer interaction.

John the Rock is a project in which I handled the technical aspects. It had a positive reception despite having only rudimentary voice chat capability and no other functionalities.

Through initial research and personal experience, I identified two prominent, polar-opposite uses of LLMs in the field: as a tool or as a virtual companion.

In the first category, we have products like ChatGPT (obviously) and Google NotebookLM that focus on web searching, text generation and summarisation tasks. They are mostly session-based and objective-focused, retaining little information about the users themselves between sessions. Voice assistants used for home automation, including Siri and Alexa, also loosely fall into this category, as their primary function is to complete the immediate task rather than to build a connection with the user.

In the second category, we have products like Replika and character.ai that focus solely on creating a connection with the user, with few real functional uses. To me, this use case is extremely dangerous. These agents aim to build an "interpersonal" bond with the user. However, because the user has no control over these strictly cloud-based agents, this very real emotional bond can easily be exploited for manipulative monetisation and for harvesting sensitive personal information.

Testimony retrieved from Replika. What are you doing, Zuckerberg? How is this even a good thing to show off?

As such, I am very interested in exploring the technical feasibility of a local, private AI assistant that is entirely in the user's control, designed to build a connection with the user while being capable of completing more personalised tasks such as calendar management and home automation.

Goals & Plans

Critical success measurements for this experiment include:

Runnable on my personal PC, which is built around an 8GB RTX 3070 (a mid-range graphics card from 2020).

Able to perform function calling, thus accessing other tools programmatically, unlike a pure conversational LLM.

Features that could be good to have include:

A long-term memory mechanism that builds a connection to the user over time.

Voice conversation capability.

The plan for approaching the experiments is as follows:

Evaluate open-source LLM capabilities for this use case.

Research existing frameworks, tooling and technologies for function calling, memory and voice capabilities.

Construct a prototype taking into account previous findings.

Gather and evaluate user responses to the prototype.

The personal PC in question is of a comparable size to an Apple HomePod. (Christchurch Metro card for scale)

Research & Experiments

This week's work revolved around researching:

Available LLMs that could run locally.

Frameworks to streamline function calling behaviour.

Function calling is the critical feature I wanted to explore in this experiment, as it would serve as the basis for any further development beyond toy projects.

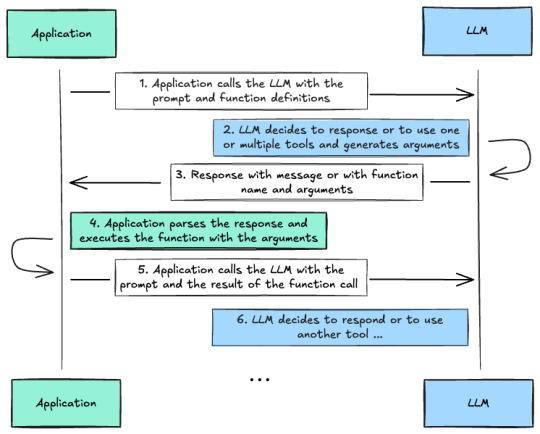

A brief explanation of integrating function calling with LLM. Retrieved from HuggingFace.
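Without a framework, the application has to run this loop itself. It parses the tool call the model emits, runs the matching function, and appends the result to the conversation log for the next model turn. Below is a minimal stdlib sketch. It assumes the model returns a JSON object of the form `{"name": ..., "arguments": {...}}`; the exact format varies by model and API. The tools, message roles, and `handle_tool_call` are hypothetical.

```python
import json
from typing import Any, Callable, Dict, List

# Registry of tools the model may call (hypothetical examples)
TOOLS: Dict[str, Callable[..., Any]] = {
    "add_event": lambda title, date: f"Added '{title}' on {date}",
    "set_light": lambda room, on: f"{room} light {'on' if on else 'off'}",
}


def handle_tool_call(raw: str, log: List[Dict[str, str]]) -> str:
    """Dispatch one JSON tool call and record it in the conversation log."""
    call = json.loads(raw)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        result = f"Unknown tool: {name}"
    else:
        result = str(TOOLS[name](**args))
    # The log is maintained by hand so the model sees the tool result next turn
    log.append({"role": "assistant", "content": raw})
    log.append({"role": "tool", "content": result})
    return result


conversation: List[Dict[str, str]] = [
    {"role": "user", "content": "Turn on the kitchen light"}
]
print(handle_tool_call(
    '{"name": "set_light", "arguments": {"room": "kitchen", "on": true}}',
    conversation,
))
print(len(conversation))
```

Every extra tool, error case, and multi-step exchange adds to this bookkeeping. That growth is what the frameworks listed below aim to absorb.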

Interacting with the LLM directly would mean handling every interaction and the conversation log manually, which from prior experience quickly becomes tedious. A suitable framework could handle this automatically. A quick search revealed the following candidates:

LangChain, optionally with LangGraph for more complex agentic flows.

smolagents by Hugging Face.

Pydantic AI.

AutoGen by Microsoft.

Before any of these frameworks could work, I needed a platform to host and manage LLMs locally. Ollama was chosen as the initial tool since it made setup and model downloads the easiest. Additionally, it provides an OpenAI-API-compatible interface for simple programmatic access from almost all frameworks.
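Because Ollama exposes an OpenAI-compatible endpoint (by default under `http://localhost:11434/v1`), an OpenAI-style chat request can simply be pointed at it. The sketch below only builds the chat-completions request with the standard library, so it runs without a server. The model name `llama3.2` is a placeholder assumption. Sending the request requires a running Ollama instance with that model pulled.

```python
import json
import urllib.request

OLLAMA_BASE = "http://localhost:11434/v1"  # Ollama's default OpenAI-compatible base URL


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{OLLAMA_BASE}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("llama3.2", "What is on my calendar today?")
print(req.full_url, req.get_method())
# To actually send it (needs a running Ollama server):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["choices"][0]["message"]["content"])
```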

A deeper dive into each option can be summarised with the following table:

Of these options, LangChain was eliminated first due to many negative reviews of its developer experience. Reading the AutoGen documentation revealed "loose" inter-agent control, relying on a user proxy agent and terminating when certain 'keywords' appear in a model response. This does not inspire confidence, so the framework was not explored further.

PydanticAI stood out with its type-safe design. Smolagents stood out with its 'code agent' design, where the model utilises given tools by generating Python code instead of alternating between model output and tool calls. As such, these two frameworks were set up and explored further.
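The 'code agent' idea can be sketched in a few lines. Instead of emitting one tool call at a time, the model writes a short Python snippet that calls the tools directly, and the framework executes it. Below, the 'model output' is a hard-coded string, run with bare `exec` in a namespace that exposes only the tools and a result sink. This is for illustration only: smolagents executes generated code through its own restricted executor (or a remote sandbox) rather than plain `exec`. The tool names and `final_answer` sink here are hypothetical.

```python
from typing import Any, Dict, List


def get_weather(city: str) -> str:
    # Hypothetical tool with canned data
    return {"Christchurch": "12C, cloudy"}.get(city, "unknown")


def add_event(title: str, when: str) -> str:
    # Hypothetical calendar tool
    return f"Added '{title}' at {when}"


def run_code_action(code: str, tools: Dict[str, Any]) -> List[str]:
    """Execute model-written code with only the tools and a result sink visible."""
    outputs: List[str] = []
    namespace = {"__builtins__": {}, **tools, "final_answer": outputs.append}
    exec(code, namespace)  # NOT a sandbox: illustration only
    return outputs


# Pretend this snippet came from the model: two tool calls in a single step
model_code = (
    "w = get_weather('Christchurch')\n"
    "final_answer(add_event('Walk', '5pm') + ' (weather: ' + w + ')')\n"
)
print(run_code_action(model_code, {"get_weather": get_weather, "add_event": add_event}))
```

The point of the design is visible in `model_code`: one generated snippet chains two tool calls and combines their results, where a JSON tool-calling loop would need a model round-trip per call.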

This concluded this week's work: identifying a gap in existing AI products and exploring technical tooling for further development of the experiment.

Reflection on Action

Identifying a meaningful gap before starting the experiment felt like an essential step. With the field so filled with half-baked chatbots in the Q&A section, diving straight into building without a clear direction would have risked creating another redundant product.

Spending a significant amount of time upfront to define the problem space, particularly the distinction between task-oriented AI tools and emotional companion bots, helped me anchor the project in a space that felt both personally meaningful and provided a set of unique technical constraints to work around.

However, this process was time-intensive. I wonder if investing so heavily in researching available technologies and alternatives, without encountering the practical roadblocks of building, could lead to over-optimisation for problems that might never materialise. There is also a risk of getting too attached to assumptions formed during research rather than letting real experimentation guide decisions. At the same time, running into technical issues like function calling and local deployment could prove even more time-consuming if encountered.

Ultimately, I will likely need to recalibrate the balance between research and action as the experiment progresses.

Theory

Since my work this week has been primarily focused on research, most of the relevant sources are already linked in the post above.

For smolagents, Hugging Face provided a peer-reviewed paper claiming code agents have superior performance: [2411.01747] DynaSaur: Large Language Agents Beyond Predefined Actions.

PydanticAI explained their type-checking behaviour in more detail in Agents - PydanticAI.

These provided context for my reasoning stated in previous sections and consolidated my decision to explore these two frameworks further.

Preparation

With the frameworks to explore confirmed, the following courses of action are easy to plan:

Research lightweight models that can realistically run on the stated hardware.

Explore the behaviours of these small models within the two chosen frameworks.

Experiment with function calling.
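A rough first filter for "can realistically run on the stated hardware" is the memory taken by the weights alone: parameters × bits per weight ÷ 8 bytes. This ignores the KV cache, activations, and runtime overhead, so real usage is higher. The model sizes and quantisation levels below are illustrative, not recommendations.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes), weights only.

    params_billion * 1e9 weights * bits / 8 bytes, divided by 1e9.
    """
    return params_billion * bits_per_weight / 8


VRAM_GB = 8  # RTX 3070

for params, bits in [(3, 16), (8, 16), (8, 4), (14, 4)]:
    need = weight_memory_gb(params, bits)
    verdict = "fits" if need < VRAM_GB else "too big"
    print(f"{params}B @ {bits}-bit: ~{need:.1f} GB of weights ({verdict} before overhead)")
```

By this estimate an 8B model needs about 16 GB at 16-bit but about 4 GB at 4-bit. Quantised small models are therefore the realistic candidates for an 8 GB card, with headroom left for context.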



MCP Toolbox for Databases Simplifies AI Agent Data Access

AI Agent Access to Enterprise Data Made Easy with MCP Toolbox for Databases

Google Cloud Next 25 showed organisations how to develop multi-agent ecosystems using Vertex AI and Google Cloud Databases. The Agent2Agent (A2A) protocol and the Model Context Protocol (MCP) expand how agents interact. In response to developer interest in MCP, we're offering MCP Toolbox for Databases (formerly Gen AI Toolbox for Databases) to make it easy to access your company data in databases. This advances standardised and safe experimentation with agentic applications.

Previous names: Gen AI Toolbox for Databases, MCP Toolbox

MCP Toolbox for Databases (Toolbox), an open-source MCP server, lets developers securely and easily connect new AI agents to business data. MCP is an open standard created by Anthropic that links AI systems to data sources without bespoke, per-source integrations.

Toolbox can now generate tools for self-managed MySQL and PostgreSQL, Spanner, Cloud SQL for PostgreSQL, Cloud SQL for MySQL, and AlloyDB for PostgreSQL (including AlloyDB Omni). As an open-source project, it has also received community contributions adding support for Neo4j and Dgraph. Toolbox integrates OpenTelemetry for end-to-end observability, supports OAuth2 and OIDC for security, and reduces boilerplate code for simpler development. By managing connection pooling, authentication, and more, it makes tool creation simpler, faster, and more secure.

As an MCP server, Toolbox provides the framework needed to build production-quality database tools and make them available to any client in the growing MCP ecosystem. This compatibility lets agentic app developers use Toolbox to query several databases reliably through a single protocol, simplifying development and improving interoperability.
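MCP messages are JSON-RPC 2.0. A client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`, passing the tool `name` and its `arguments`. That shared request shape is what lets one client talk to many servers. Below is a minimal sketch that only builds the messages and leaves out the transport (stdio or HTTP). The tool name `search_orders` and its argument are hypothetical, not tools that Toolbox ships.

```python
import itertools
import json
from typing import Any, Dict

_ids = itertools.count(1)  # JSON-RPC request ids


def jsonrpc_request(method: str, params: Dict[str, Any]) -> str:
    """Serialise a JSON-RPC 2.0 request with an auto-incrementing id."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": method,
        "params": params,
    })


list_msg = jsonrpc_request("tools/list", {})
call_msg = jsonrpc_request(
    "tools/call",
    {"name": "search_orders", "arguments": {"customer_id": 42}},
)
print(list_msg)
print(call_msg)
```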

MCP Toolbox for Databases supports ADK

Google also introduced the Agent Development Kit (ADK), an open-source framework that simplifies building complex multi-agent systems while keeping fine-grained control over agent behaviour. You can build an AI agent with ADK in under 100 lines of user-friendly code. ADK lets you:

Shape how agents think, reason, and collaborate through orchestration controls and deterministic guardrails.

Interact with agents in a human-like way using ADK's bidirectional audio and video streaming, with just a few lines of code.

Choose your preferred model or deployment. ADK works with your stack, whether that means your preferred model, your deployment target, or remote agents built with other frameworks. ADK also supports the Model Context Protocol (MCP), which provides a secure, standard connection between data sources and AI agents.

Release to production through a direct integration with Vertex AI Agent Engine. This reliable, transparent path from development to enterprise-grade deployment removes the usual overhead of taking agents to production.

Add LangGraph support

LangGraph offers essential persistence layer support with checkpointers. This helps create powerful, stateful agents that can complete long tasks or resume where they left off.

For state storage, Google Cloud provides integration libraries that employ powerful managed databases. The following are developer options:

Access the extremely scalable AlloyDB for PostgreSQL using the langchain-google-alloydb-pg-python library's AlloyDBSaver class, or pick

Cloud SQL for PostgreSQL utilising langchain-google-cloud-sql-pg-python's PostgresSaver checkpointer.

Backed by Google Cloud's PostgreSQL performance and management, both options store and load agent execution state reliably, allowing runs to be paused, resumed, and audited.

When you compile a graph with a checkpointer, the checkpointer saves a checkpoint of the graph state at every super-step. These checkpoints are saved to a thread, which remains accessible after graph execution. Because threads expose the graph's state after execution, they enable fault tolerance, memory, time travel, and human-in-the-loop workflows.
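The checkpoint-per-super-step behaviour can be pictured with a small in-memory stand-in, treating each sequential step as one super-step. After every step, the saver stores a copy of the state under a thread ID, so a run can be inspected afterwards or resumed from its latest checkpoint. This is a hypothetical stdlib sketch of the concept. It is not the API of LangGraph's savers or of AlloyDBSaver/PostgresSaver, which persist this kind of data in a database.

```python
import copy
from typing import Callable, Dict, List

State = Dict[str, object]


class InMemoryCheckpointer:
    """Keeps every checkpoint per thread; latest() is the resume point."""

    def __init__(self) -> None:
        self.threads: Dict[str, List[State]] = {}

    def put(self, thread_id: str, state: State) -> None:
        self.threads.setdefault(thread_id, []).append(copy.deepcopy(state))

    def latest(self, thread_id: str) -> State:
        return copy.deepcopy(self.threads[thread_id][-1])


def run_steps(steps: List[Callable[[State], State]], state: State,
              saver: InMemoryCheckpointer, thread_id: str) -> State:
    """Run steps, checkpointing after each; resuming skips completed steps."""
    start = int(state.get("step", 0))
    for i in range(start, len(steps)):
        state = {**state, **steps[i](state), "step": i + 1}
        saver.put(thread_id, state)
    return state


steps = [
    lambda s: {"draft": "outline"},
    lambda s: {"draft": str(s["draft"]) + " + body"},
    lambda s: {"done": True},
]
saver = InMemoryCheckpointer()

# Simulate an interrupted run: only the first two steps complete
run_steps(steps[:2], {}, saver, "thread-1")
# Resume from the latest checkpoint with the full list of steps
final = run_steps(steps, saver.latest("thread-1"), saver, "thread-1")
print(final, len(saver.threads["thread-1"]))
```

Keeping every checkpoint, not just the latest, is what makes the "time travel" and audit uses possible: any earlier state in the thread can be inspected or used as a new starting point.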

#technology #technews #govindhtech #news #technologynews #MCP Toolbox for Databases #AI Agent Data Access #Gen AI Toolbox for Databases #MCP Toolbox #Toolbox for Databases #Agent Development Kit



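FastAPI LangGraph Agent Template: A Framework for Building Production-Ready AI Agents

The FastAPI LangGraph Agent Template is a comprehensive framework for building AI agent applications that are ready for production use. It combines FastAPI, which specializes in building high-performance backend APIs, with LangGraph, which powers AI agent workflows. Together they make it possible to develop secure, highly scalable AI agent services quickly. Agents are one of the most closely watched trends in AI development in 2025, and this template is a powerful tool for streamlining their development. Key Features and Functions FastAPI LangGraph Agent…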



Meet LangGraph Multi-Agent Swarm: A Python Library for Creating Swarm-Style Multi-Agent Systems Using LangGraph




Author(s): Dwaipayan Bandyopadhyay Originally published on Towards AI. Retrieval Augmented Generation is a very well-known approach in the field of Generative AI, which usually consists of a linear flow of chunking a document, storing it in a vector #AI #ML #Automation



LangGraph Platform: A Solution for Complex Agent Deployment Challenges



In this comprehensive tutorial, we guide users through creating a multi-tool AI agent using LangGraph and Claude, designed for diverse tasks including mathematical computations, web searches, weather inquiries, text analysis, and real-time information retrieval. It begins by simplifying dependency installation so setup is easy, even for beginners. Users are then introduced to structured implementations of specialized tools, such as a safe calculator, a web-search utility built on DuckDuckGo, a mock weather information provider, a detailed text analyzer, and a time-fetching function. The tutorial also shows how these tools are integrated into an agent architecture built with LangGraph. It illustrates practical usage through interactive examples and explanations, so both beginners and advanced developers can deploy custom multi-functional AI agents quickly.

```python
import subprocess
import sys

def install_packages():
    packages = [
        "langgraph",
        "langchain",
        "langchain-anthropic",
        "langchain-community",
        "requests",
        "python-dotenv",
        "duckduckgo-search"
    ]
    for package in packages:
        try:
            # Install each package quietly with the current interpreter's pip
            subprocess.check_call([sys.executable, "-m", "pip", "install", package, "-q"])
            print(f"✓ Installed {package}")
        except subprocess.CalledProcessError:
            print(f"✗ Failed to install {package}")

print("Installing required packages...")
install_packages()
print("Installation complete!\n")
```

We automate the installation of the Python packages required for building a LangGraph-based multi-tool AI agent. The script uses subprocess to run pip quietly for each package, from LangChain components to web search and environment-handling tools, and reports whether each installation succeeded. This setup streamlines environment preparation, making the notebook portable and beginner-friendly.
import os
import json
import math
import operator
from datetime import datetime
from typing import Dict, List, Any, Annotated, TypedDict

import requests
from duckduckgo_search import DDGS
from langchain_core.messages import BaseMessage, HumanMessage, AIMessage, ToolMessage
from langchain_core.tools import tool
from langchain_anthropic import ChatAnthropic
from langgraph.graph import StateGraph, START, END
from langgraph.prebuilt import ToolNode
from langgraph.checkpoint.memory import MemorySaver

We import all the libraries and modules needed to build the multi-tool AI agent. These include Python standard libraries such as os, json, math, and datetime for general-purpose functionality. We also import external libraries such as requests for HTTP calls and duckduckgo_search for web search. The LangChain and LangGraph ecosystems supply message types, the tool decorator, state graph components, and checkpointing utilities, while ChatAnthropic provides integration with the Claude model. Together, these imports form the building blocks for defining tools, agent workflows, and interactions.

os.environ["ANTHROPIC_API_KEY"] = "Use Your API Key Here"
ANTHROPIC_API_KEY = os.getenv("ANTHROPIC_API_KEY")

We set and retrieve the Anthropic API key required to authenticate with Claude models. The os.environ line assigns your API key (replace the placeholder with a valid key), and os.getenv reads it back for later use in model initialization. This keeps the key defined in one place instead of hardcoding it multiple times.

class AgentState(TypedDict):
    # operator.add tells LangGraph to append new messages rather than replace the list
    messages: Annotated[List[BaseMessage], operator.add]


@tool
def calculator(expression: str) -> str:
    """
    Perform mathematical calculations. Supports basic arithmetic, trigonometry, and more.

    Args:
        expression: Mathematical expression as a string (e.g., "2 + 3 * 4", "sin(3.14159/2)")

    Returns:
        Result of the calculation as a string
    """
    try:
        # Only these names are visible to eval()
        allowed_names = {
            'abs': abs, 'round': round, 'min': min, 'max': max,
            'sum': sum, 'pow': pow, 'sqrt': math.sqrt, 'sin': math.sin,
            'cos': math.cos, 'tan': math.tan, 'log': math.log,
            'log10': math.log10, 'exp': math.exp, 'pi': math.pi, 'e': math.e,
        }
        expression = expression.replace('^', '**')  # accept caret notation for powers
        result = eval(expression, {"__builtins__": {}}, allowed_names)
        return f"Result: {result}"
    except Exception as e:
        return f"Error in calculation: {str(e)}"

We define the agent’s internal state and implement a calculator tool. The AgentState class uses TypedDict to structure agent memory, specifically the messages exchanged during the conversation. The calculator function is decorated with @tool to register it as an AI-usable utility, and it evaluates mathematical expressions. It restricts eval to a predefined set of built-in and math-module functions, removes access to the built-ins, and replaces the common ^ syntax with Python’s ** exponentiation operator. This lets the tool handle simple arithmetic as well as trigonometry and logarithms while blocking direct calls to built-ins such as open or __import__. Note that a restricted eval namespace reduces, but does not fully eliminate, the risk of executing unsafe code.

@tool
def web_search(query: str, num_results: int = 3) -> str:
    """
    Search the web for information using DuckDuckGo.

    Args:
        query: Search query string
        num_results: Number of results to return (default: 3, max: 10)

    Returns:
        Search results as formatted string
    """
    try:
        num_results = min(max(num_results, 1), 10)  # clamp to the range 1..10
        with DDGS() as ddgs:
            results = list(ddgs.text(query, max_results=num_results))
        if not results:
            return f"No search results found for: {query}"
        formatted_results = f"Search results for '{query}':\n\n"
        for i, result in enumerate(results, 1):
            formatted_results += f"{i}. **{result['title']}**\n"
            formatted_results += f"   {result['body']}\n"
            formatted_results += f"   Source: {result['href']}\n\n"
        return formatted_results
    except Exception as e:
        return f"Error performing web search: {str(e)}"

We define a web_search tool that lets the agent fetch real-time information from the internet by searching DuckDuckGo through the duckduckgo_search Python package. The tool accepts a search query and an optional num_results parameter, which is clamped to between 1 and 10. It opens a DuckDuckGo search session, retrieves the results, and formats each one with its title, snippet, and source URL. If no results are found or an error occurs, the function returns an informative message instead of raising. This tool gives the agent real-time search capabilities.

@tool
def weather_info(city: str) -> str:
    """
    Get current weather information for a city using OpenWeatherMap API.
    Note: This is a mock implementation for demo purposes.

    Args:
        city: Name of the city

    Returns:
        Weather information as a string
    """
    mock_weather = {
        "new york": {"temp": 22, "condition": "Partly Cloudy", "humidity": 65},
        "london": {"temp": 15, "condition": "Rainy", "humidity": 80},
        "tokyo": {"temp": 28, "condition": "Sunny", "humidity": 70},
        "paris": {"temp": 18, "condition": "Overcast", "humidity": 75},
    }
    city_lower = city.lower()
    if city_lower in mock_weather:
        weather = mock_weather[city_lower]
        return (f"Weather in {city}:\n"
                f"Temperature: {weather['temp']}°C\n"
                f"Condition: {weather['condition']}\n"
                f"Humidity: {weather['humidity']}%")
    else:
        return (f"Weather data not available for {city}. "
                "(This is a demo with limited cities: New York, London, Tokyo, Paris)")

We define a weather_info tool that simulates retrieving current weather data for a given city. Rather than connecting to a live weather API, it uses a predefined dictionary of mock data for New York, London, Tokyo, and Paris.
Upon receiving a city name, the function normalizes it to lowercase and looks it up in the mock dataset. If the city is found, it returns the temperature, weather condition, and humidity in a readable format. Otherwise, it tells the user that weather data is unavailable. This tool serves as a placeholder and can later be upgraded to fetch live data from an actual weather API.

@tool
def text_analyzer(text: str) -> str:
    """
    Analyze text and provide statistics like word count, character count, etc.

    Args:
        text: Text to analyze

    Returns:
        Text analysis results
    """
    if not text.strip():
        return "Please provide text to analyze."
    words = text.split()
    # Treat '!' and '?' as sentence terminators as well, then split once
    # (splitting the text three separate times would count sentences repeatedly)
    normalized = text.replace('!', '.').replace('?', '.')
    sentences = [s.strip() for s in normalized.split('.') if s.strip()]
    analysis = "Text Analysis Results:\n"
    analysis += f"• Characters (with spaces): {len(text)}\n"
    analysis += f"• Characters (without spaces): {len(text.replace(' ', ''))}\n"
    analysis += f"• Words: {len(words)}\n"
    analysis += f"• Sentences: {len(sentences)}\n"
    analysis += f"• Average words per sentence: {len(words) / max(len(sentences), 1):.1f}\n"
    analysis += f"• Most common word: {max(set(words), key=words.count) if words else 'N/A'}"
    return analysis

The text_analyzer tool provides a statistical analysis of a given text input. It calculates character count (with and without spaces), word count, sentence count, and average words per sentence, and it identifies the most frequently occurring word. Empty input is handled by asking the user to provide text. The tool relies on simple string operations and Python’s set and max functions, which makes it a handy utility for language analysis or content-quality checks in the agent’s toolkit.

@tool
def current_time() -> str:
    """
    Get the current date and time.

    Returns:
        Current date and time as a formatted string
    """
    now = datetime.now()
    return f"Current date and time: {now.strftime('%Y-%m-%d %H:%M:%S')}"

The current_time tool provides a straightforward way to retrieve the current system date and time in a human-readable format. It uses Python’s datetime module to capture the present moment and format it as YYYY-MM-DD HH:MM:SS. This is useful for time-stamping responses or answering user questions about the current date and time.

tools = [calculator, web_search, weather_info, text_analyzer, current_time]

def create_llm():
    # The placeholder string is non-empty, so check for it explicitly before using Claude
    if ANTHROPIC_API_KEY and ANTHROPIC_API_KEY != "Use Your API Key Here":
        return ChatAnthropic(
            model="claude-3-haiku-20240307",
            temperature=0.1,
            max_tokens=1024
        )
    else:
        class MockLLM:
            def invoke(self, messages):
                if messages and isinstance(messages[-1], ToolMessage):
                    # A tool has just run: relay its output as the final answer
                    # (otherwise keyword matching on the tool output could call tools forever)
                    return AIMessage(content=messages[-1].content)
                last_message = messages[-1].content if messages else ""
                lowered = last_message.lower()
                if any(word in lowered for word in ['calculate', 'math', '+', '-', '*', '/', 'sqrt', 'sin', 'cos']):
                    import re
                    # Runs of digits/operators that contain at least one digit; keep the longest
                    matches = re.findall(r'[\d\.\+\-\*/\(\)\s]*\d[\d\.\+\-\*/\(\)\s]*', last_message)
                    expr = max(matches, key=len).strip() if matches else "2+2"
                    return AIMessage(content="I'll help you with that calculation.",
                                     tool_calls=[{"name": "calculator", "args": {"expression": expr}, "id": "calc1"}])
                elif any(word in lowered for word in ['search', 'find', 'look up', 'information about']):
                    query = last_message.replace('search for', '').replace('find', '').replace('look up', '').strip()
                    if not query or len(query) < 3:
                        query = "python programming"
                    return AIMessage(content="I'll search for that information.",
                                     tool_calls=[{"name": "web_search", "args": {"query": query}, "id": "search1"}])
                elif any(word in lowered for word in ['weather', 'temperature']):
                    city = "New York"
                    words = lowered.split()
                    for i, word in enumerate(words):
                        if word == 'in' and i + 1 < len(words):
                            city = words[i + 1].strip("?.!,").title()  # drop trailing punctuation
                            break
                    return AIMessage(content="I'll get the weather information.",
                                     tool_calls=[{"name": "weather_info", "args": {"city": city}, "id": "weather1"}])
                elif any(word in lowered for word in ['time', 'date']):
                    return AIMessage(content="I'll get the current time.",
                                     tool_calls=[{"name": "current_time", "args": {}, "id": "time1"}])
                elif any(word in lowered for word in ['analyze', 'analysis']):
                    text = last_message.replace('analyze this text:', '').replace('analyze', '').strip()
                    if not text:
                        text = "Sample text for analysis"
                    return AIMessage(content="I'll analyze that text for you.",
                                     tool_calls=[{"name": "text_analyzer", "args": {"text": text}, "id": "analyze1"}])
                else:
                    return AIMessage(content="Hello! I'm a multi-tool agent powered by Claude. I can help with:\n• Mathematical calculations\n• Web searches\n• Weather information\n• Text analysis\n• Current time/date\n\nWhat would you like me to help you with?")

            def bind_tools(self, tools):
                # The mock routes by keywords, so binding is a no-op
                return self

        print("⚠️ Note: Using mock LLM for demo. Add your ANTHROPIC_API_KEY for full functionality.")
        return MockLLM()

llm = create_llm()
llm_with_tools = llm.bind_tools(tools)

We initialize the language model that powers the agent. If a valid Anthropic API key is available, it uses the Claude 3 Haiku model. Without one, a MockLLM simulates basic tool routing through keyword matching, so the agent can run offline with limited capabilities. Once a tool has returned, the mock relays that tool’s output as its final answer. The bind_tools method links the defined tools to the model so it can invoke them as needed; for the mock, it is a no-op.

def agent_node(state: AgentState) -> Dict[str, Any]:
    """Main agent node that processes messages and decides on tool usage."""
    messages = state["messages"]
    response = llm_with_tools.invoke(messages)
    return {"messages": [response]}


def should_continue(state: AgentState) -> str:
    """Determine whether to continue with tool calls or end."""
    last_message = state["messages"][-1]
    if hasattr(last_message, 'tool_calls') and last_message.tool_calls:
        return "tools"
    return END

We define the agent’s core decision-making logic.
The agent_node function passes the conversation to the tool-bound language model and returns the model’s response. The should_continue function then checks whether that response includes tool calls. If it does, control is routed to the tool-execution node; otherwise, the run ends. Together, these functions provide the conditional transitions in the agent’s workflow.

def create_agent_graph():
    tool_node = ToolNode(tools)  # executes the tool calls in the last AIMessage
    workflow = StateGraph(AgentState)
    workflow.add_node("agent", agent_node)
    workflow.add_node("tools", tool_node)
    workflow.add_edge(START, "agent")
    workflow.add_conditional_edges("agent", should_continue, {"tools": "tools", END: END})
    workflow.add_edge("tools", "agent")  # after tools run, the model sees their results
    memory = MemorySaver()
    app = workflow.compile(checkpointer=memory)
    return app

print("Creating LangGraph Multi-Tool Agent...")
agent = create_agent_graph()
print("✓ Agent created successfully!\n")

We construct the LangGraph workflow that defines the agent’s operational structure. It initializes a ToolNode to handle tool execution and uses a StateGraph to organize the flow between agent decisions and tool usage. The edges define the transitions: each run starts at the agent node, is conditionally routed to the tools, and loops back to the agent after every tool call. A MemorySaver checkpointer persists conversation state per thread across turns. The graph is then compiled into an executable application (app), yielding a memory-aware multi-tool agent that is ready to use.
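To make the compiled graph’s control flow concrete, here is a plain-Python approximation of the agent → tools → agent loop. It does not use LangGraph. Messages are plain dicts, the `TOOLS` registry and `toy_agent` are hypothetical stand-ins for ToolNode and the LLM, and `max_steps` is a simplified analogue of LangGraph’s recursion limit rather than its exact semantics.

```python
from typing import Callable, Dict, List

END = "__end__"  # stand-in for langgraph.graph.END

Message = Dict[str, object]


def should_continue(state: Dict[str, List[Message]]) -> str:
    """Route to the tools step if the last message requests tool calls, else finish."""
    last = state["messages"][-1]
    return "tools" if last.get("tool_calls") else END


def run_graph(state: Dict[str, List[Message]], agent_step: Callable,
              tools: Dict[str, Callable], max_steps: int = 25) -> Dict[str, List[Message]]:
    """Plain-Python approximation of START -> agent -> (tools -> agent)* -> END."""
    for _ in range(max_steps):
        # "agent" node: append the model's reply (an additive update, like operator.add)
        state["messages"] = state["messages"] + [agent_step(state["messages"])]
        if should_continue(state) == END:
            return state
        # "tools" node: run every requested call and append one tool message per call
        results = []
        for call in state["messages"][-1]["tool_calls"]:
            output = tools[call["name"]](**call["args"])
            results.append({"role": "tool", "tool_call_id": call["id"], "content": output})
        state["messages"] = state["messages"] + results
    raise RuntimeError(f"Stopped after {max_steps} agent steps without reaching END")


# Hypothetical tool registry and scripted "model", used only for illustration
TOOLS = {"add": lambda a, b: str(a + b)}


def toy_agent(messages: List[Message]) -> Message:
    last = messages[-1]
    if last["role"] == "tool":
        # A tool result is available: answer with it and request nothing further
        return {"role": "assistant", "content": f"Answer: {last['content']}"}
    # Otherwise, ask for the "add" tool
    return {"role": "assistant", "content": "",
            "tool_calls": [{"name": "add", "args": {"a": 2, "b": 3}, "id": "call1"}]}


final = run_graph({"messages": [{"role": "user", "content": "What is 2 + 3?"}]}, toy_agent, TOOLS)
print(final["messages"][-1]["content"])  # Answer: 5
```

The loop ends only when the model replies without tool calls. A model that keeps requesting tools is therefore cut off by the step limit, which is the role LangGraph’s recursion limit plays in the real graph.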
def test_agent():
    """Test the agent with various queries."""
    config = {"configurable": {"thread_id": "test-thread"}}
    test_queries = [
        "What's 15 * 7 + 23?",
        "Search for information about Python programming",
        "What's the weather like in Tokyo?",
        "What time is it?",
        "Analyze this text: 'LangGraph is an amazing framework for building AI agents.'"
    ]
    print("🧪 Testing the agent with sample queries...\n")
    for i, query in enumerate(test_queries, 1):
        print(f"Query {i}: {query}")
        print("-" * 50)
        try:
            response = agent.invoke(
                {"messages": [HumanMessage(content=query)]},
                config=config
            )
            last_message = response["messages"][-1]
            print(f"Response: {last_message.content}\n")
        except Exception as e:
            print(f"Error: {str(e)}\n")

The test_agent function is a validation utility that checks how the LangGraph agent responds across different use cases. It runs predefined queries covering arithmetic, web search, weather, time, and text analysis, and prints the agent’s final response to each. All queries share one thread_id, so with the MemorySaver checkpointer they form a single ongoing conversation rather than independent calls. The output helps developers verify tool integration and conversational logic before moving on to interactive or production use.
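Because test_agent reuses one thread_id, each query is appended to the same stored conversation. The toy class below sketches that behavior. It is a hypothetical stand-in, not LangGraph’s MemorySaver: state is stored per thread_id, and new messages are merged with `operator.add`, the reducer that the `Annotated[List[BaseMessage], operator.add]` annotation on AgentState requests. Giving each test query its own thread_id (for example `f"test-thread-{i}"`) would isolate the queries from one another.

```python
import operator
from collections import defaultdict
from typing import Dict, List


class ToyCheckpointer:
    """Hypothetical in-memory store holding one message list per thread_id."""

    def __init__(self) -> None:
        self._threads: Dict[str, List[str]] = defaultdict(list)

    def update(self, config: dict, new_messages: List[str]) -> List[str]:
        thread_id = config["configurable"]["thread_id"]
        # operator.add on two lists concatenates them, so history is appended, not replaced
        self._threads[thread_id] = operator.add(self._threads[thread_id], new_messages)
        return self._threads[thread_id]


memory = ToyCheckpointer()
shared = {"configurable": {"thread_id": "test-thread"}}
memory.update(shared, ["What's 15 * 7 + 23?"])
history = memory.update(shared, ["What time is it?"])
print(len(history))  # 2: the second query is stored after the first one

isolated = memory.update({"configurable": {"thread_id": "test-thread-2"}}, ["What time is it?"])
print(len(isolated))  # 1: a new thread_id starts with an empty history
```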
def chat_with_agent():
    """Interactive chat function."""
    config = {"configurable": {"thread_id": "interactive-thread"}}
    print("🤖 Multi-Tool Agent Chat")
    print("Available tools: Calculator, Web Search, Weather Info, Text Analyzer, Current Time")
    print("Type 'quit' to exit, 'help' for available commands\n")
    while True:
        try:
            user_input = input("You: ").strip()
            if user_input.lower() in ['quit', 'exit', 'q']:
                print("Goodbye!")
                break
            elif user_input.lower() == 'help':
                print("\nAvailable commands:")
                print("• Calculator: 'Calculate 15 * 7 + 23' or 'What's sin(pi/2)?'")
                print("• Web Search: 'Search for Python tutorials' or 'Find information about AI'")
                print("• Weather: 'Weather in Tokyo' or 'What's the temperature in London?'")
                print("• Text Analysis: 'Analyze this text: [your text]'")
                print("• Current Time: 'What time is it?' or 'Current date'")
                print("• quit: Exit the chat\n")
                continue
            elif not user_input:
                continue
            response = agent.invoke(
                {"messages": [HumanMessage(content=user_input)]},
                config=config
            )
            last_message = response["messages"][-1]
            print(f"Agent: {last_message.content}\n")
        except KeyboardInterrupt:
            print("\nGoodbye!")
            break
        except Exception as e:
            print(f"Error: {str(e)}\n")

The chat_with_agent function provides an interactive command-line interface for real-time conversations with the multi-tool agent. It accepts natural-language queries and recognizes “help” for usage guidance and “quit” (or “exit” or “q”) to leave. Each user input is passed to the agent, which selects and invokes the appropriate tools. The function showcases how the agent handles a range of queries, from math and web search to weather, text analysis, and time retrieval.
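chat_with_agent forwards arbitrary user text to the agent, and the calculator tool passes model-generated strings to eval. Given that combination, a stricter evaluator may be worth considering before wider use. The sketch below is a swapped-in technique, not the tutorial’s method. It walks the expression’s abstract syntax tree with Python’s ast module and permits only whitelisted numeric operators, functions, and constants. `safe_eval` and the whitelist names are hypothetical.

```python
import ast
import math
import operator

# Whitelisted operators, functions, and constants; anything else is rejected
_BIN_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
            ast.Div: operator.truediv, ast.Pow: operator.pow, ast.Mod: operator.mod}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}
_FUNCS = {"sqrt": math.sqrt, "sin": math.sin, "cos": math.cos, "tan": math.tan,
          "log": math.log, "log10": math.log10, "exp": math.exp,
          "abs": abs, "round": round, "min": min, "max": max, "pow": pow}
_CONSTS = {"pi": math.pi, "e": math.e}


def safe_eval(expression: str) -> float:
    """Evaluate an arithmetic expression by walking its AST instead of calling eval()."""

    def visit(node):
        if isinstance(node, ast.Expression):
            return visit(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](visit(node.left), visit(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](visit(node.operand))
        if isinstance(node, ast.Name) and node.id in _CONSTS:
            return _CONSTS[node.id]
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id in _FUNCS and not node.keywords):
            return _FUNCS[node.func.id](*[visit(arg) for arg in node.args])
        # Attribute access, subscripts, unknown names, etc. never get evaluated
        raise ValueError(f"Unsupported expression element: {type(node).__name__}")

    # Accept caret notation for powers, as the original tool does
    return visit(ast.parse(expression.replace("^", "**"), mode="eval"))


print(safe_eval("15 * 7 + 23"))  # 128
```

Inside the calculator tool, `result = safe_eval(expression)` could replace the `eval(...)` call while keeping the surrounding try/except. Note that `**` with very large operands can still be slow or memory-hungry, so a hardened version would also cap exponent sizes.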
if __name__ == "__main__":
    test_agent()
    print("=" * 60)
    print("🎉 LangGraph Multi-Tool Agent is ready!")
    print("=" * 60)
    chat_with_agent()


def quick_demo():
    """Quick demonstration of agent capabilities."""
    config = {"configurable": {"thread_id": "demo"}}
    demos = [
        ("Math", "Calculate the square root of 144 plus 5 times 3"),
        ("Search", "Find recent news about artificial intelligence"),
        ("Time", "What's the current date and time?")
    ]
    print("🚀 Quick Demo of Agent Capabilities\n")
    for category, query in demos:
        print(f"[{category}] Query: {query}")
        try:
            response = agent.invoke(
                {"messages": [HumanMessage(content=query)]},
                config=config
            )
            print(f"Response: {response['messages'][-1].content}\n")
        except Exception as e:
            print(f"Error: {str(e)}\n")


print("\n" + "=" * 60)
print("🔧 Usage Instructions:")
print("1. Add your ANTHROPIC_API_KEY to use the Claude model")
print("   os.environ['ANTHROPIC_API_KEY'] = 'your-anthropic-api-key'")
print("2. Run quick_demo() for a quick demonstration")
print("3. Run chat_with_agent() for interactive chat")
print("4. The agent supports: calculations, web search, weather, text analysis, and time")
print("5. Example: 'Calculate 15*7+23' or 'Search for Python tutorials'")
print("=" * 60)

Finally, we orchestrate execution of the agent. When the script is run directly (which includes running it in a notebook cell), it calls test_agent() to validate functionality with sample queries and then launches the interactive chat_with_agent() mode. A quick_demo() function is also defined to showcase math, search, and time queries briefly; call it separately once the chat loop has exited. The usage instructions printed at the end explain how to configure the API key, run the demonstrations, and interact with the agent. In conclusion, this step-by-step tutorial shows how to build an effective multi-tool AI agent with LangGraph and Claude’s generative capabilities.
With straightforward explanations and hands-on demonstrations, the guide helps users integrate diverse utilities into a cohesive, interactive system. The agent handles tasks ranging from calculations to dynamic information retrieval, which illustrates the versatility of modern AI development frameworks. The included functions for both testing and interactive chat also make the material immediately applicable in various contexts. Developers can extend and customize their own AI agents from this foundation. Check out the Notebook on GitHub. All credit for this research goes to the researchers of this project. Also, feel free to follow us on Twitter and don’t forget to join our 95k+ ML SubReddit and Subscribe to our Newsletter. Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.


A while back, I wanted to go deep into AI agents—how they work, how they make decisions, and how to build them. But every good resource seemed locked behind a paywall.

Then, I found a goldmine of free courses.

No fluff. No sales pitch. Just pure knowledge from the best in the game. Here’s what helped me (and might help you too):

1️⃣ HuggingFace – Covers theory, design, and hands-on practice with AI agent libraries like smolagents, LlamaIndex, and LangGraph.

2️⃣ LangGraph – Teaches AI agent debugging, multi-step reasoning, and search capabilities—straight from the experts at LangChain and Tavily.

3️⃣ LangChain – Focuses on LCEL (LangChain Expression Language) to build custom AI workflows faster.

4️⃣ crewAI – Shows how to create teams of AI agents that work together on complex tasks. Think of it as AI teamwork at scale.

5️⃣ Microsoft & Penn State – Introduces AutoGen, a framework for designing AI agents with roles, tools, and planning strategies.

6️⃣ Microsoft AI Agents Course – A 10-lesson deep dive into agent fundamentals, available in multiple languages.

7️⃣ Google’s AI Agents Course – Teaches multi-modal AI, API integrations, and real-world deployment using Gemini 1.5, Firebase, and Vertex AI.

If you’ve ever wanted to build AI agents that actually work in the real world, this list is all you need to start. No excuses. Just free learning.

Which of these courses are you diving into first? Let’s talk!

#ai#cizotechnology#innovation#mobileappdevelopment#appdevelopment#ios#techinnovation#app developers#iosapp#mobileapps#AI#MachineLearning#FreeLearning
