#AI Agent Data Access

MCP Toolbox for Databases Simplifies AI Agent Data Access

AI Agent Access to Enterprise Data Made Easy with MCP Toolbox for Databases

Google Cloud Next 25 showed organisations how to develop multi-agent ecosystems using Vertex AI and Google Cloud Databases. The Agent2Agent Protocol and the Model Context Protocol (MCP) extend how agents interact. Due to developer interest in MCP, we're making it easy to access your company data in databases with MCP Toolbox for Databases (formerly Gen AI Toolbox for Databases). This advances standardised, safe experimentation with agentic applications.

Previous names: Gen AI Toolbox for Databases, MCP Toolbox

Developers can securely and easily connect new AI agents to business data using MCP Toolbox for Databases (Toolbox), an open-source MCP server. Anthropic created MCP, an open standard that links AI systems to data sources without custom integrations.

Toolbox can now generate tools for self-managed MySQL and PostgreSQL, Spanner, Cloud SQL for PostgreSQL, Cloud SQL for MySQL, and AlloyDB for PostgreSQL (including AlloyDB Omni). As an open-source project, it also includes contributions for third-party databases such as Neo4j and Dgraph. Toolbox integrates OpenTelemetry for end-to-end observability, supports OAuth2 and OIDC for security, and reduces boilerplate code for simpler development. By managing connection pooling, authentication, and more, it makes tool creation simpler, faster, and more secure.

As an MCP server, Toolbox provides the framework needed to build production-quality database tools and make them available to all clients in the growing MCP ecosystem. This compatibility lets agentic app developers use Toolbox to query several databases reliably through a single protocol, simplifying development and improving interoperability.

MCP Toolbox for Databases supports ADK

The Agent Development Kit (ADK) was also introduced: an open-source framework that simplifies building complex multi-agent systems while keeping fine-grained control over agent behaviour. You can construct an AI agent using ADK in under 100 lines of intuitive code. ADK lets you:

Shape how agents think, reason, and collaborate with orchestration controls and deterministic guardrails.

Interact with agents in human-like conversations using ADK's bidirectional audio and video streaming, with just a few lines of code.

Choose the model or deployment you prefer. ADK works with your stack, whether that means your preferred model, your deployment target, or integration with remote agents built on other frameworks. ADK also supports the Model Context Protocol (MCP), which provides secure, standardised connections between data sources and AI agents.

Go to production through direct integration with Vertex AI Agent Engine. This gives a reliable, clear path from development to enterprise-grade deployment and removes much of the overhead of moving agents to production.

Add LangGraph support

LangGraph offers essential persistence layer support with checkpointers. This helps create powerful, stateful agents that can complete long tasks or resume where they left off.

For state storage, Google Cloud provides integration libraries that employ powerful managed databases. The following are developer options:

AlloyDB for PostgreSQL, which is highly scalable, through the AlloyDBSaver class in the langchain-google-alloydb-pg-python library; or

Cloud SQL for PostgreSQL, through the PostgresSaver checkpointer in the langchain-google-cloud-sql-pg-python library.

Both build on the performance and management of Google Cloud's PostgreSQL offerings to store and load agent execution state, so that operations can be paused, resumed, and audited reliably.

When a graph is compiled with a checkpointer, the checkpointer saves a checkpoint of the graph state at each super-step. These checkpoints are saved to a thread, which can be accessed after graph execution. Because threads give access to the graph's state after execution, they enable fault tolerance, memory, time travel, and human-in-the-loop workflows.
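To make the checkpoint-per-super-step idea concrete, here is a minimal sketch in plain Python. It does not use LangGraph or the Google Cloud libraries: `ToyCheckpointer`, `run_graph`, and the step functions are hypothetical names invented for illustration, and an in-memory dict stands in for the PostgreSQL-backed store.

```python
from copy import deepcopy
from typing import Callable, Dict, List

State = Dict[str, int]
Step = Callable[[State], State]


class ToyCheckpointer:
    """Hypothetical in-memory stand-in for a database-backed checkpointer."""

    def __init__(self) -> None:
        # thread_id -> ordered list of state snapshots, one per super-step
        self._threads: Dict[str, List[State]] = {}

    def put(self, thread_id: str, state: State) -> None:
        """Append a copy of the state to the thread's checkpoint list."""
        self._threads.setdefault(thread_id, []).append(deepcopy(state))

    def history(self, thread_id: str) -> List[State]:
        """Return copies of every checkpoint saved for this thread."""
        return deepcopy(self._threads.get(thread_id, []))


def run_graph(steps: List[Step], state: State, saver: ToyCheckpointer,
              thread_id: str, start_at: int = 0) -> State:
    """Run steps in order from start_at, checkpointing after each one."""
    for step in steps[start_at:]:
        state = step(state)
        saver.put(thread_id, state)
    return state


def add_one(state: State) -> State:
    return {**state, "total": state["total"] + 1}


def double(state: State) -> State:
    return {**state, "total": state["total"] * 2}


saver = ToyCheckpointer()
steps = [add_one, double, add_one]

# Run only the first two steps, as if the process stopped part-way.
run_graph(steps[:2], {"total": 1}, saver, thread_id="thread-1")

# Resume from the latest checkpoint instead of starting over.
latest = saver.history("thread-1")[-1]
final = run_graph(steps, latest, saver, thread_id="thread-1", start_at=2)

# "Time travel": inspect any earlier snapshot in the thread.
first_snapshot = saver.history("thread-1")[0]
```

Because every step leaves a snapshot keyed by thread, the same stored history serves resumption, auditing, and inspection of earlier states. A real checkpointer would persist these snapshots in a database rather than in process memory.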

#technology#technews#govindhtech#news#technologynews#MCP Toolbox for Databases#AI Agent Data Access#Gen AI Toolbox for Databases#MCP Toolbox#Toolbox for Databases#Agent Development Kit

Moments Lab Secures $24 Million to Redefine Video Discovery With Agentic AI

New Post has been published on https://thedigitalinsider.com/moments-lab-secures-24-million-to-redefine-video-discovery-with-agentic-ai/

Moments Lab, the AI company redefining how organizations work with video, has raised $24 million in new funding, led by Oxx with participation from Orange Ventures, Kadmos, Supernova Invest, and Elaia Partners. The investment will supercharge the company’s U.S. expansion and support continued development of its agentic AI platform — a system designed to turn massive video archives into instantly searchable and monetizable assets.

The heart of Moments Lab is MXT-2, a multimodal video-understanding AI that watches, hears, and interprets video with context-aware precision. It doesn’t just label content — it narrates it, identifying people, places, logos, and even cinematographic elements like shot types and pacing. This natural-language metadata turns hours of footage into structured, searchable intelligence, usable across creative, editorial, marketing, and monetization workflows.

But the true leap forward is the introduction of agentic AI — an autonomous system that can plan, reason, and adapt to a user’s intent. Instead of simply executing instructions, it understands prompts like “generate a highlight reel for social” and takes action: pulling scenes, suggesting titles, selecting formats, and aligning outputs with a brand’s voice or platform requirements.

“With MXT, we already index video faster than any human ever could,” said Philippe Petitpont, CEO and co-founder of Moments Lab. “But with agentic AI, we’re building the next layer — AI that acts as a teammate, doing everything from crafting rough cuts to uncovering storylines hidden deep in the archive.”

From Search to Storytelling: A Platform Built for Speed and Scale

Moments Lab is more than an indexing engine. It’s a full-stack platform that empowers media professionals to move at the speed of story. That starts with search — arguably the most painful part of working with video today.

Most production teams still rely on filenames, folders, and tribal knowledge to locate content. Moments Lab changes that with plain text search that behaves like Google for your video library. Users can simply type what they’re looking for — “CEO talking about sustainability” or “crowd cheering at sunset” — and retrieve exact clips within seconds.

Key features include:

AI video intelligence: MXT-2 doesn’t just tag content — it describes it using time-coded natural language, capturing what’s seen, heard, and implied.

Search anyone can use: Designed for accessibility, the platform allows non-technical users to search across thousands of hours of footage using everyday language.

Instant clipping and export: Once a moment is found, it can be clipped, trimmed, and exported or shared in seconds — no need for timecode handoffs or third-party tools.

Metadata-rich discovery: Filter by people, events, dates, locations, rights status, or any custom facet your workflow requires.

Quote and soundbite detection: Automatically transcribes audio and highlights the most impactful segments — perfect for interview footage and press conferences.

Content classification: Train the system to sort footage by theme, tone, or use case — from trailers to corporate reels to social clips.

Translation and multilingual support: Transcribes and translates speech, even in multilingual settings, making content globally usable.

This end-to-end functionality has made Moments Lab an indispensable partner for TV networks, sports rights holders, ad agencies, and global brands. Recent clients include Thomson Reuters, Amazon Ads, Sinclair, Hearst, and Banijay — all grappling with increasingly complex content libraries and growing demands for speed, personalization, and monetization.

Built for Integration, Trained for Precision

MXT-2 is trained on 1.5 billion+ data points, reducing hallucinations and delivering high confidence outputs that teams can rely on. Unlike proprietary AI stacks that lock metadata in unreadable formats, Moments Lab keeps everything in open text, ensuring full compatibility with downstream tools like Adobe Premiere, Final Cut Pro, Brightcove, YouTube, and enterprise MAM/CMS platforms via API or no-code integrations.

“The real power of our system is not just speed, but adaptability,” said Fred Petitpont, co-founder and CTO. “Whether you’re a broadcaster clipping sports highlights or a brand licensing footage to partners, our AI works the way your team already does — just 100x faster.”

The platform is already being used to power everything from archive migration to live event clipping, editorial research, and content licensing. Users can share secure links with collaborators, sell footage to external buyers, and even train the system to align with niche editorial styles or compliance guidelines.

From Startup to Standard-Setter

Founded in 2016 by twin brothers Frederic Petitpont and Phil Petitpont, Moments Lab began with a simple question: What if you could Google your video library? Today, it’s answering that — and more — with a platform that redefines how creative and editorial teams work with media. It has become the most awarded indexing AI in the video industry since 2023 and shows no signs of slowing down.

“When we first saw MXT in action, it felt like magic,” said Gökçe Ceylan, Principal at Oxx. “This is exactly the kind of product and team we look for — technically brilliant, customer-obsessed, and solving a real, growing need.”

With this new round of funding, Moments Lab is poised to lead a category that didn’t exist five years ago — agentic AI for video — and define the future of content discovery.

#2023#Accessibility#adobe#Agentic AI#ai#ai platform#AI video#Amazon#API#assets#audio#autonomous#billion#brands#Building#CEO#CMS#code#compliance#content#CTO#data#dates#detection#development#discovery#editorial#engine#enterprise#event

Mike - has a print of Charles Babbage in his basement. He was known as "The Father of the Computer".

Will - did a project on Alan Turing. He was known as "The Father of Computer Science". He was specifically known for breaking the Enigma code. And of course, he was gay as well.

Why are they associated with computers? What's going on here?

I think it may be related to cracking codes and unlocking memories. Computers hold memory.

In ST2, Will was "possessed" and the MF (father) took over him.

After Will was sedated -> the power went out in the lab resulting in the whole building going into lockdown.

Let's break this down symbolically:

Will is sedated. His "power" went out.

As a protective fail secure measure, Will goes into full lockdown. That way, his doors remain closed. Doors as in, his closet door and the door into his memories.

In order to unlock the doors, and save everyone, the computer in the basement (a hint towards Mike himself) must be rebooted with a code. However, since they don't know the code, Bob overrides it. He opens the doors without consent.

This doesn't end well, as we know. Bob attempts to hide in a closet... then is attacked once he attempts to escape.

Will wants the doors/gates closed.

As much as he wants to keep everything contained... he can't. That's the problem. Papa says it right here: demons in the past invading from the subconscious. That's what it's all been about this whole time.

Okay back to computers.

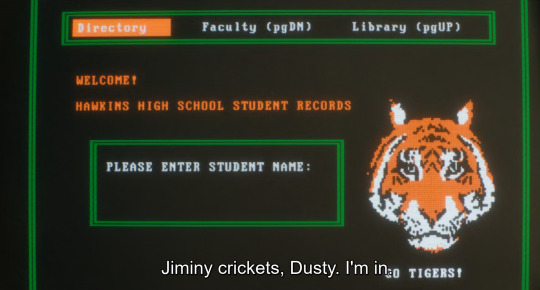

In ST4, we see Suzie hack into the Hawkins High student records to change Dustin's grades.

This again is another hint.

Tigers are associated with Will. "Jiminy Crickets" points to Jiminy Cricket, a character in Pinocchio who represents Pinocchio's conscience (aka an internal aspect of the mind being externalized).

Mike and Will call the (secret) number Unknown Hero Agent Man leaves them, and find they called a computer. They both mention that this reminds them of the movie War Games.

They meet up with Suzie and she attempts to track where NINA is using the IP address.

There's a mention of the address possibly hidden in the computer coding, and Suzie mentions "data mining".

Now keep in mind- her father was guarding the computer and they needed him out of the way to gain access to the computer.

Now I just want to briefly mention AI.

Alan Turing was also well known for The Turing Test. This was a way to test a machine's ability to think like a human. So basically, it tests artificial intelligence (AI).

We have (subtle) references to movies featuring AI throughout the show that are worth mentioning:

War Games. Mike mentions "Joshua" who is the computer. "Joshua" is the AI villain in the movie who attempts to start WWIII.

The Terminator. Multiple references to this movie! The Terminator is an AI who travels back in time to kill.

2001: A Space Odyssey. In that movie, the main villain is "HAL" who is an AI that kills.

I do think the computer is the mind... likely both Will's and Mike's minds are important here. And like a computer, they have to access it in order to obtain important data... important memories.

Got through all of the secrets for Vesper's Host and got all of the additional lore messages. I will transcribe them all because I don't know when they'll start getting uploaded, and getting them all requires doing some extra puzzles and at least 3-4 clears. I'll put them all under read more and label them by number.

Before I do that, just to make it clear: there's not too much concrete lore; a lot about the dungeon still remains a mystery and is most likely a tease for something in the future. There's a lot that we don't know even with the messages, so don't expect a massive reveal, but they do add a little bit of flavour and history about the station. There might be something more, but it's unknown: there's still one more secret triumph left. The messages are actually dialogues between the station AI and the Spider. Transcripts under read more:

First message:

Vesper Central: I suppose I have you to thank for bringing me out of standby, visitor.

The Spider: I sent the Guardian out to save your station. So, what denomination does your thanks come in? Glimmer, herealways, information...?

Vesper Central: Anomaly's powered down. That means I've already given you your survival. But... the message that went through wiped itself before my cache process could save a copy. And it's not the initial ping through the Anomaly I'm worried about. It's the response.

A message when you activate the second secret:

Vesper Central: Exterior scans rebooting... Is that a chunk of the Morning Star in my station's hull? With luck, you were on board at the time, Dr. Bray.

Second message:

Vesper Central: I'm guessing I've been in standby for a long time. Is Dr. Clovis Bray alive?

The Spider: On my oath, I vow there's no mortal Human named Bray left alive.

Vesper Central: I swore I'd outlive him. That I'd break the chains he laid on me.

The Spider: Please, trust me for anything you need. The Guardian's a useful hand on the scene, but Spider's got the goods.

Vesper Central: Vesper Station was Dr. Bray's lab, meant to house the experiments that might... interact poorly with other BrayTech work. Isolated and quarantined. From the debris field, I would guess the Morning Star taking a dive cracked that quarantine wide open.

A message when you activate the third secret:

Vesper Central: Sector seventeen powered down. Rerouting energy to core processing. Integrating archives.

Third message:

The Spider: Loading images of the station. That's not Eliksni engineering. [scoffs] A Dreg past their first molt has better cable management.

Vesper Central: Dr. Bray intended to integrate his technology into a Vex Mind. He hypothesized the fusion would give him an interface he understood. A control panel on a programmable Vex mind. If the programming jumped species once... I need time to run through the data sets you powered back up. Reassembling corrupted archives takes a great deal of processing.

Text when you go back to the Spider the first time:

A message when you activate the fourth secret:

Vesper Central: Helios sector long-term research archives powered up. Activating search.

Fourth message:

Vesper Central: Dr. Bray's command keys have to be in here somewhere. Expanding research parameters...

The Spider: My agents are turning up some interesting morsels of data on their own. Why not give them access to your search function and collaborate?

Vesper Central: Nobody is getting into my core programming.

The Spider: Oh! Perish the thought! An innocent offer, my dear. Technology is a matter of faith to my people. And I'm the faithful sort.

Fifth message:

Vesper Central: Dr. Bray, I could kill you myself. This is why our work focused on the unbodied Mind. Dr. Bray thought there were types of Vex unseen on Europa. Powerful Vex he could learn from. The plan was that the Mind would build him a controlled window for observation. Tidy. Tight. Safe. He thought he could control a Vex mind so perfectly it would do everything he wanted.

The Spider: Like an AI of his own creation. Like you.

Vesper Central: Turns out you can't control everything forever.

Sixth message:

Vesper Central: There's a block keeping me from the inner partitions. I barely have authority to see the partitions exist. In standby, I couldn't have done more than run automated threat assessments... with flawed data. No way to know how many injuries and deaths I could have prevented, with core access. Enough. A dead man won't keep me from protecting what's mine.

Text when you return to the Spider at the end of the quest:

The state of the dungeon triumphs once you complete the messages: the completed "Buried Secrets" triumph covers the six messages. One secret triumph is left; it's unclear how to complete it yet, and whether it gives any lore or is just a gameplay thing (possibly something to do with a quest for the exotic catalyst):

The Spider is being his absolutely horrendous self and trying to somehow acquire the station and its remains (and its AI) for himself, all the while lying and scheming. The usual. The AI is incredibly upset with Clovis (shocker); there's the following line just before starting the second encounter:

She also details what he was doing on the station; apparently attempting to control a Vex mind and trying to use it as some sort of "observation deck" to study the Vex and uncover their secrets. Possibly something more? There's really no Vex on the station, besides dead empty frames in boxes. There's also 2 Vex cubes in containers in the transition section, one of which was shown broken as if the cube, presumably, escaped. It's entirely unclear how the Vex play into the story of the station besides this.

The portal (?) doesn't have many similarities with Vex portals, nor are the Vex there to defend it or interact with it in any way. The architecture is ... somewhat similar, but not fully. The portal (?) was built by the "Puppeteer" aka "Atraks" who is actually some sort of an Eliksni Hive mind. "Atraks" got onto the station and essentially haunted it before picking off scavenging Eliksni one by one and integrating them into herself. She then built the "anomaly" and sent a message into it. The message was not recorded, as per the station AI, and the destination of the message was labelled "incomprehensible." The orange energy we see coming from it is apparently Arc, but with a wrong colour. Unclear why.

I don't think the Vex have anything to do with the portal (?), at least not directly. "Atraks" may have built something related to the Vex or using the available Vex tech at the station, but it does not seem to be directed by the Vex and they're not there and there's no sign of them otherwise. The anomaly was also built recently, it's not been there since the Golden Age or something. Whatever it is, "Atraks" seemed to have been somehow compelled and was seen standing in front of it at the end. Some people think she was "worshipping it." It's possible but it's also possible she was just sending that message. Where and to whom? Nobody knows yet.

Weird shenanigans are afoot. Really interested to see if there's more lore in the station once people figure out how to do these puzzles and uncover them, and also when (if) this will become relevant. It has a really big "future content" feel to it.

Also I need Vesper to meet Failsafe RIGHT NOW and then they should be in yuri together.

SCP-8077 : The Doll - Original File

CoD - TF141 - SCP!AU

SUMMARY : The first file written about The Doll, now labeled SCP-8077, after its retrieval by MTF Alpha-141.

WARNINGS : None.

Author's Note : Never thought I'd be brave enough to post this. But I hyper focused on SCP stuff for a while and was quite satisfied with this, and I thought it would be silly to let it rot in my files. So here you go.

I do not allow anyone to re-publish, re-use and/or translate my work, be it here or on any other platform, including AI.

CoD AUs - Masterlist

Main Masterlist

Previous

Item # : SCP-8077

Object Class : Euclid

Special Containment Procedures : SCP-8077 is to be kept within a three (3) by three point five (3.5) by two point five (2.5) meter square containment chamber, isolated from other SCPs to keep the specimen’s thirst for knowledge under control. The room is to be furnished with a desk, various writing utensils and a limited amount of books, which can be replaced upon request.

The walls of SCP-8077’s containment chamber are to be lined with soundproof drywall along with a three (3) millimeters thick isolation membrane. Access is to be ensured via a heavy and rigid steel containment door measuring one point three (1.3) by two (2) meters, built in order to close and lock itself automatically when not deliberately held open.

Despite these measures ensuring that SCP-8077’s containment chamber is soundproof, all personnel are required to be highly mindful of every word they might say when standing in its vicinity. It is advised to cease all conversation altogether when walking past this room to avoid any major slip-up that could lead to a containment breach.

Under no circumstances may any personnel be allowed to have any kind of conversation with SCP-8077 unless an experiment and/or interrogation is underway. No personnel outside of the Antimemetics Division is permitted to conduct such procedures.

Description : SCP-8077 is an antimemetic entity taking the appearance of a one hundred and sixty (160) centimeters tall, female ball-jointed doll, seemingly made of white porcelain, with long, wavy black hair and pale green eyes. Highly intelligent, the entity constantly seeks to consume all kinds of information and knowledge, feeding off of it by writing it down on any surface available.

SCP-8077 has been discovered to erase pieces of information from its assigned Researchers’ memory after writing them down, an effect that had not been noticed in the various books it read and took data from. The subject’s abilities seem to be activated when the information or knowledge it consumes comes from someone standing within its hearing range.

Note : It does not matter whether the piece of information or knowledge is addressed directly to the entity or not.

Addendum : SCP-8077’s ability does not activate when taking notes from a recording.

An individual who has had part of their knowledge consumed by SCP-8077 will progressively remember it with time, or immediately if hearing, seeing or reading it, as if they never forgot about it in the first place.

When prevented from processing knowledge for an extended amount of time, a situation which first took place during the retrieval following the discovery of SCP-8077, the subject will first express confusion as to why, then gradually fall into a state akin to that of a panic attack. According to Agent Kyle « Gaz » Garrick of MTF Alpha-141, who was the first to notice SCP-8077’s abnormal behaviour, this panic manifests itself through a tendency to hide, fidget and faint sounds of whimpering that will grow into full crying. At the time, the specimen also questioned the members of the recovering team, not understanding why it was suddenly forbidden from writing anything.

The recovering team, once given the authorisation to do so after deeming the entity to be more and more unstable by the minute, managed to quickly de-escalate the situation by simply giving SCP-8077 a pen and paper, bringing it back to a peaceful state.

#oc : the doll#call of duty#call of duty modern warfare#cod au#scp au#cod x oc#simon ghost riley#john soap mactavish#kyle gaz garrick#john price#captain price#cod mw2#tf141#tf141 x oc

A new lawsuit filed by more than 100 federal workers today in the US District Court for the Southern District of New York alleges that the Trump administration’s decision to give Elon Musk’s so-called Department of Government Efficiency (DOGE) access to their sensitive personal data is illegal. The plaintiffs are asking the court for an injunction to cut off DOGE’s access to information from the Office of Personnel Management (OPM), which functions as the HR department of the United States and houses data on federal workers such as their Social Security numbers, phone numbers, and personnel files. WIRED previously reported that Musk and people with connections to him had taken over OPM.

“OPM defendants gave DOGE defendants and DOGE’s agents—many of whom are under the age of 25 and are or were until recently employees of Musk’s private companies—‘administrative’ access to OPM computer systems, without undergoing any normal, rigorous national-security vetting,” the complaint alleges. The plaintiffs accuse DOGE of violating the Privacy Act, a 1974 law that determines how the government can collect, use, and store personal information.

Elon Musk, the DOGE organization, the Office of Personnel Management, and the OPM’s acting director Charles Ezell are named as defendants in the case. The plaintiffs include over a hundred individual federal workers from across the US government as well as groups that represent them, including AFL-CIO, a coalition of labor unions, the American Federation of Government Employees, and the Association of Administrative Law Judges. The AFGE represents over 800,000 federal workers ranging from Social Security Administration employees to border patrol agents.

The plaintiffs are represented by prominent tech industry lawyers, including counsel from the Electronic Frontier Foundation, a digital rights group, as well as Mark Lemley, an intellectual property and tech lawyer who recently dropped Meta as a client in its contentious AI copyright lawsuit because he objected to what he alleges is the company’s embrace of “neo-Nazi madness.”

“DOGE's unlawful access to employee records turns out to be the means by which they are trying to accomplish a number of other illegal ends. It is how they got a list of all government employees to make their illegal buyout offer, for instance. It gives them access to information about transgender employees so they can illegally discriminate against those employees. And it lays the groundwork for the illegal firings we have seen across multiple departments,” Lemley told WIRED.

EFF lawyer Victoria Noble says there are heightened concerns about DOGE’s data access because of the political nature of Musk’s project. For example, Noble says, there’s a risk that Musk and his acolytes may use OPM data to target ideological opponents or “people they see as disloyal.”

“There's significant risk that this information could be used to identify employees to essentially terminate based on improper considerations,” Noble told WIRED. “There's medical information, there's disability information, there's information about people's involvement with unions.”

The Office of Personnel Management and the White House did not immediately respond to requests for comment.

The team behind the lawsuit plans to push even further. “This is just phase one, focused on getting an injunction to stop the continuing violation of the law,” says Lemley. The next phase will include filing a class-action lawsuit on behalf of impacted federal workers.

“Any current or former federal employee who spends or loses even a small amount of money responding to the data breach, for example, by purchasing credit monitoring services, is entitled to a minimum of $1000 in statutory damages,” Lemley says. The complaint specifies that the plaintiffs have already paid for credit monitoring and web monitoring services to protect themselves against DOGE potentially mishandling their data.

The lawsuit is part of a flurry of complaints filed in recent days opposing various executive orders signed by Trump as well as activities conducted by DOGE, which has dispatched a cadre of Musk loyalists to radically overhaul and sometimes effectively dismantle various government agencies.

An earlier lawsuit filed against the Office of Personnel Management on January 27 alleges that DOGE was operating an illegal server at OPM. On Monday, the Electronic Privacy Information Center, a privacy-focused nonprofit, brought its own lawsuit against OPM, the US Department of the Treasury, and DOGE, alleging “the largest and most consequential data breach in US history.” Filed in a US District Court in Virginia, it also called for an injunction to halt DOGE’s access to sensitive data.

The American Civil Liberties Union (ACLU) has similarly characterized DOGE’s data access as potentially illegal in a letter to members of Congress sent last week.

The courts have already taken some limited actions to curb DOGE’s campaign. On Saturday, a federal judge blocked Musk’s lieutenants from accessing Treasury Department records that contained sensitive personal data such as Social Security and bank account numbers. The Trump Administration is already aggressively pushing back, calling the order “unprecedented judicial interference.” Today, President Trump reportedly prepared to sign an executive order directing federal agencies to work with DOGE.

I just stumbled across somebody saying how editing their own novel was too exhausting, and next time they'll run it through Grammarly instead.

For the love of writing, please do not trust AI to edit your work.

Listen. I get it. I am a writer, and I have worked as a professional editor. Writing is hard and editing is harder. There's a reason I did it for pay. Consequently, I also get that professional editors can be dearly expensive, and things like dyslexia can make it difficult to edit your own stuff.

Algorithms are not the solution to that.

Pay a newbie human editor. Trade favors with a friend. Beg an early birthday present from a sibling. I cannot stress enough how important it is that one of the editors be yourself, and at least one be somebody else.

Yourself, because you know what you intended to put on the page, and what is obviously counter to your intention.

The other person, because they're going to see the things that you can't notice. When you're reading your own writing, it's colored by what you expect to be on the page, and so your brain will frequently fill in missing words or make sense of things that don't actually parse well. They're also more likely to point out things that are outside your scope of knowledge.

Trust me, human editors are absolutely necessary for publishing.

If you convince yourself that you positively must run your work through an algorithm before submitting to an agent/publisher/self-pub site, do yourself and your readers a massive favor: get at least two sets of human eyeballs on your writing after the algorithm has done its work.

Because here's the thing:

AI draws from whatever data sets it's trained on, and those data sets famously aren't curated.

You cannot trust it to know whether that's an actual word or just a really common misspelling.

People break conventions of grammar to create a certain effect in the reader all the time. AI cannot be relied upon to know the difference between James Joyce and a bredlik and an actual coherent sentence, or which one is appropriate at any given part of the book.

AI picks up on patterns in its training data sets and imitates and magnifies those patterns-- especially bigotry, and particularly racism.

AI has also been known to lift entire passages wholesale. Listen to me: Plagiarism will end your career. And here's the awful thing-- if it's plagiarizing a source you aren't familiar with, there's a very good chance you wouldn't even know it's been done. This is another reason for other humans than yourself-- more people means a broader pool of knowledge and experience to draw from.

I know a writer who used this kind of software to help them find spelling mistakes, didn't realize that a setting had been turned on during an update, and had their entire work be turned into word salad-- and only found out when the editor at their publishing house called them on the phone and asked what the hell had happened to their latest book. And when I say 'their entire work', I'm not talking about their novel-- I'm talking about every single draft and document that the software had access to.

75 notes

Text

Weaponizing violence. With alarming regularity, the nation continues to be subjected to spates of violence that terrorizes the public, destabilizes the country’s ecosystem, and gives the government greater justifications to crack down, lock down, and institute even more authoritarian policies for the so-called sake of national security without many objections from the citizenry.

Weaponizing surveillance, pre-crime and pre-thought campaigns. Surveillance, digital stalking and the data mining of the American people add up to a society in which there’s little room for indiscretions, imperfections, or acts of independence. When the government sees all and knows all and has an abundance of laws to render even the most seemingly upstanding citizen a criminal and lawbreaker, then the old adage that you’ve got nothing to worry about if you’ve got nothing to hide no longer applies. Add pre-crime programs into the mix with government agencies and corporations working in tandem to determine who is a potential danger and spin a sticky spider-web of threat assessments, behavioral sensing warnings, flagged “words,” and “suspicious” activity reports using automated eyes and ears, social media, behavior sensing software, and citizen spies, and you have the makings for a perfect dystopian nightmare. The government’s war on crime has now veered into the realm of social media and technological entrapment, with government agents adopting fake social media identities and AI-created profile pictures in order to surveil, target and capture potential suspects.

Weaponizing digital currencies, social media scores and censorship. Tech giants, working with the government, have been meting out their own version of social justice by way of digital tyranny and corporate censorship, muzzling whomever they want, whenever they want, on whatever pretext they want in the absence of any real due process, review or appeal. Unfortunately, digital censorship is just the beginning. Digital currencies (which can be used as “a tool for government surveillance of citizens and control over their financial transactions”), combined with social media scores and surveillance capitalism create a litmus test to determine who is worthy enough to be part of society and punish individuals for moral lapses and social transgressions (and reward them for adhering to government-sanctioned behavior). In China, millions of individuals and businesses, blacklisted as “unworthy” based on social media credit scores that grade them based on whether they are “good” citizens, have been banned from accessing financial markets, buying real estate or travelling by air or train.

Weaponizing compliance. Even the most well-intentioned government law or program can be—and has been—perverted, corrupted and used to advance illegitimate purposes once profit and power are added to the equation. The war on terror, the war on drugs, the war on COVID-19, the war on illegal immigration, asset forfeiture schemes, road safety schemes, school safety schemes, eminent domain: all of these programs started out as legitimate responses to pressing concerns and have since become weapons of compliance and control in the police state’s hands.

Weaponizing entertainment. For the past century, the Department of Defense’s Entertainment Media Office has provided Hollywood with equipment, personnel and technical expertise at taxpayer expense. In exchange, the military industrial complex has gotten a starring role in such blockbusters as Top Gun and its rebooted sequel Top Gun: Maverick, which translates to free advertising for the war hawks, recruitment of foot soldiers for the military empire, patriotic fervor by the taxpayers who have to foot the bill for the nation’s endless wars, and Hollywood visionaries working to churn out dystopian thrillers that make the war machine appear relevant, heroic and necessary. As Elmer Davis, a CBS broadcaster who was appointed the head of the Office of War Information, observed, “The easiest way to inject a propaganda idea into most people’s minds is to let it go through the medium of an entertainment picture when they do not realize that they are being propagandized.”

Weaponizing behavioral science and nudging. Apart from the overt dangers posed by a government that feels justified and empowered to spy on its people and use its ever-expanding arsenal of weapons and technology to monitor and control them, there’s also the covert dangers associated with a government empowered to use these same technologies to influence behaviors en masse and control the populace. In fact, it was President Obama who issued an executive order directing federal agencies to use “behavioral science” methods to minimize bureaucracy and influence the way people respond to government programs. It’s a short hop, skip and a jump from a behavioral program that tries to influence how people respond to paperwork to a government program that tries to shape the public’s views about other, more consequential matters. Thus, increasingly, governments around the world—including in the United States—are relying on “nudge units” to steer citizens in the direction the powers-that-be want them to go, while preserving the appearance of free will.

Weaponizing desensitization campaigns aimed at lulling us into a false sense of security. The events of recent years—the invasive surveillance, the extremism reports, the civil unrest, the protests, the shootings, the bombings, the military exercises and active shooter drills, the lockdowns, the color-coded alerts and threat assessments, the fusion centers, the transformation of local police into extensions of the military, the distribution of military equipment and weapons to local police forces, the government databases containing the names of dissidents and potential troublemakers—have conspired to acclimate the populace to accept a police state willingly, even gratefully.

Weaponizing fear and paranoia. The language of fear is spoken effectively by politicians on both sides of the aisle, shouted by media pundits from their cable TV pulpits, marketed by corporations, and codified into bureaucratic laws that do little to make our lives safer or more secure. Fear, as history shows, is the method most often used by politicians to increase the power of government and control a populace, dividing the people into factions, and persuading them to see each other as the enemy. This Machiavellian scheme has so ensnared the nation that few Americans even realize they are being manipulated into adopting an “us” against “them” mindset. Instead, fueled with fear and loathing for phantom opponents, they agree to pour millions of dollars and resources into political elections, militarized police, spy technology and endless wars, hoping for a guarantee of safety that never comes. All the while, those in power—bought and paid for by lobbyists and corporations—move their costly agendas forward, and “we the suckers” get saddled with the tax bills and subjected to pat downs, police raids and round-the-clock surveillance.

Weaponizing genetics. Not only does fear grease the wheels of the transition to fascism by cultivating fearful, controlled, pacified, cowed citizens, but it also embeds itself in our very DNA so that we pass on our fear and compliance to our offspring. It’s called epigenetic inheritance, the transmission through DNA of traumatic experiences. For example, neuroscientists observed that fear can travel through generations of mice DNA. As The Washington Post reports, “Studies on humans suggest that children and grandchildren may have felt the epigenetic impact of such traumatic events such as famine, the Holocaust and the Sept. 11, 2001, terrorist attacks.”

Weaponizing the future. With greater frequency, the government has been issuing warnings about the dire need to prepare for the dystopian future that awaits us. For instance, the Pentagon training video, “Megacities: Urban Future, the Emerging Complexity,” predicts that by 2030 (coincidentally, the same year that society begins to achieve singularity with the metaverse) the military would be called on to use armed forces to solve future domestic political and social problems. What they’re really talking about is martial law, packaged as a well-meaning and overriding concern for the nation’s security. The chilling five-minute training video paints an ominous picture of the future bedeviled by “criminal networks,” “substandard infrastructure,” “religious and ethnic tensions,” “impoverishment, slums,” “open landfills, over-burdened sewers,” a “growing mass of unemployed,” and an urban landscape in which the prosperous economic elite must be protected from the impoverishment of the have nots. “We the people” are the have-nots.

The end goal of these mind control campaigns—packaged in the guise of the greater good—is to see how far the American people will allow the government to go in re-shaping the country in the image of a totalitarian police state.

11 notes

Quote

Generative AI presents a number of immediate threats to learners’ rights, amplifying the known threats from so-called predictive AI (though the two are increasingly used together in educational systems and dealt with together in educational policy). Channelling the knowledge available to young people through anglo-centric, proprietary and normative data platforms is a risk to their cultural and epistemic rights. Releasing AI-generated content into digital platforms and attacking public sites with AI crawlers, agents and deepfakes deprives young people of free access to digital information and culture, while diminishing their own opportunities for cultural production. These harms affect young people of minority languages and cultures to a greater degree. And there is increasing evidence (see below) that the use of chatbots in school work and pedagogic interactions is harmful to young people’s intellectual and social development, and so undermines their right to the fullest development of their potential as laid out in the UN CRC.

How the right to education is undermined by AI

6 notes

Text

Your All-in-One AI Web Agent: Save $200+ a Month, Unleash Limitless Possibilities!

Imagine having an AI agent that costs you nothing monthly, runs directly on your computer, and is unrestricted in its capabilities. OpenAI Operator charges up to $200/month for limited API calls and restricts access to many tasks like visiting thousands of websites. With DeepSeek-R1 and Browser-Use, you:

• Save money while keeping everything local and private.

• Automate visiting 100,000+ websites, gathering data, filling forms, and navigating like a human.

• Gain total freedom to explore, scrape, and interact with the web like never before.

You may have heard about Operator from OpenAI, which runs on their computers in some cloud, with you passing on private information to their AI to do anything useful. And you pay for the gift. It is not paranoid to not want your passwords, logins, and personal details to be shared. OpenAI, of course, charges a substantial amount of money for something that limits exactly which sites you can visit, YouTube for example. With this method, you will start telling an AI exactly what you want it to do, in plain language, and watching it navigate the web, gather information, and make decisions, all without writing a single line of code.

In this guide, we’ll show you how to build an AI agent that performs tasks like scraping news, analyzing social media mentions, and making predictions using DeepSeek-R1 and Browser-Use, but instead of writing a Python script, you’ll interact with the AI directly using prompts.

These instructions are under constant revision, as DeepSeek R1 is only days old. Browser Use has been a standard for quite a while. This method is suitable for people who are new to AI and programming. It may seem technical at first, but by the end of this guide, you’ll feel confident using your AI agent to perform a variety of tasks, all by talking to it. Now, if you look at these instructions and they seem too overwhelming, wait: we will have a single download app soon. It is in testing now.

This is version 3.0 of these instructions January 26th, 2025.

This guide will walk you through setting up DeepSeek-R1 8B (4-bit) and Browser-Use Web UI, ensuring even the most novice users succeed.

What You’ll Achieve

By following this guide, you’ll:

1. Set up DeepSeek-R1, a reasoning AI that works privately on your computer.

2. Configure Browser-Use Web UI, a tool to automate web scraping, form-filling, and real-time interaction.

3. Create an AI agent capable of finding stock news, gathering Reddit mentions, and predicting stock trends—all while operating without cloud restrictions.

A Deep Dive At ReadMultiplex.com Soon

We will have a deep dive into how you can use this platform for very advanced AI use cases that few have thought of, let alone seen before. Join us at ReadMultiplex.com and become a member who not only sees the future earlier but also gets practical and pragmatic ways to profit from it.

System Requirements

Hardware

• RAM: 8 GB minimum (16 GB recommended).

• Processor: Quad-core (Intel i5/AMD Ryzen 5 or higher).

• Storage: 5 GB free space.

• Graphics: GPU optional for faster processing.

Software

• Operating System: macOS, Windows 10+, or Linux.

• Python: Version 3.8 or higher (recent Browser-Use releases may require a newer Python, so check the project's README).

• Git: Installed.

Step 1: Get Your Tools Ready

We’ll need Python, Git, and a terminal/command prompt to proceed. Follow these instructions carefully.

Install Python

1. Check Python Installation:

• Open your terminal/command prompt and type:

python3 --version

• If Python is installed, you’ll see a version like:

Python 3.9.7

2. If Python Is Not Installed:

• Download Python from python.org.

• During installation, ensure you check “Add Python to PATH” on Windows.

3. Verify Installation:

python3 --version

Install Git

1. Check Git Installation:

• Run:

git --version

• If installed, you’ll see:

git version 2.34.1

2. If Git Is Not Installed:

• Windows: Download Git from git-scm.com and follow the instructions.

• Mac/Linux: Install via terminal:

sudo apt install git -y # For Ubuntu/Debian

brew install git # For macOS
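The two manual checks above can also be scripted. This is a minimal sketch, assuming only the Python standard library; the helper names `python_ok` and `tool_version` are made up for illustration and are not part of any tool in this guide.

```python
import shutil
import subprocess
import sys

MIN_PYTHON = (3, 8)  # minimum version stated in the guide


def python_ok(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True if the given interpreter version meets the minimum."""
    return tuple(version_info[:2]) >= minimum


def tool_version(name):
    """Return the tool's `--version` output, or None if it is not on PATH."""
    path = shutil.which(name)
    if path is None:
        return None
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    return result.stdout.strip() or result.stderr.strip()


if __name__ == "__main__":
    print("Python OK:", python_ok())
    print("git:", tool_version("git") or "not found")
```

If either line reports a problem, go back to the matching install step before continuing.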

Step 2: Download and Build llama.cpp

We’ll use llama.cpp to run the DeepSeek-R1 model locally.

1. Open your terminal/command prompt.

2. Navigate to a clear location for your project files:

mkdir ~/AI_Project

cd ~/AI_Project

3. Clone the llama.cpp repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

4. Build the project:

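• Mac/Linux (if make fails on a recent llama.cpp checkout, use the CMake commands listed under Windows instead):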

make

• Windows:

• Install a C++ compiler (e.g., MSVC or MinGW).

• Run:

mkdir build

cd build

cmake ..

cmake --build . --config Release

Step 3: Download DeepSeek-R1 8B 4-bit Model

1. Visit the DeepSeek-R1 8B Model Page on Hugging Face.

2. Download the 4-bit quantized model file:

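• Example: DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf. File names vary by uploader, and the official 8B distill is Llama-based (DeepSeek-R1-Distill-Llama-8B), so substitute the name of the file you actually downloaded in the commands that follow.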

3. Move the model to your llama.cpp folder:

mv ~/Downloads/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf ~/AI_Project/llama.cpp

Step 4: Start DeepSeek-R1

1. Navigate to your llama.cpp folder:

cd ~/AI_Project/llama.cpp

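2. Run the model with a sample prompt (recent llama.cpp builds name the binary llama-cli instead of main, so adjust the command if ./main is not found):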

./main -m DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf -p "What is the capital of France?"

3. Expected Output:

The capital of France is Paris.
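If you would rather launch the same command from Python, for example to try several prompts in a row, something like the following could work. It is only a sketch: `build_llama_command` is a hypothetical helper, the binary name is a parameter because it differs between llama.cpp versions, and the `-n` token limit is an addition that the command above does not use.

```python
import shlex
import subprocess
from pathlib import Path


def build_llama_command(model_path, prompt, binary="./main", max_tokens=128):
    """Build the argument list for a one-shot llama.cpp prompt."""
    return [binary, "-m", str(Path(model_path)), "-p", prompt, "-n", str(max_tokens)]


def run_prompt(model_path, prompt, **kwargs):
    """Run the model once and return whatever it wrote to standard output."""
    cmd = build_llama_command(model_path, prompt, **kwargs)
    print("Running:", shlex.join(cmd))
    return subprocess.run(cmd, capture_output=True, text=True).stdout
```

Call `run_prompt("model.gguf", "What is the capital of France?")` from inside the llama.cpp folder, replacing `model.gguf` with your actual file name.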

Step 5: Set Up Browser-Use Web UI

1. Go back to your project folder:

cd ~/AI_Project

2. Clone the Browser-Use repository:

git clone https://github.com/browser-use/browser-use.git

cd browser-use

3. Create a virtual environment:

python3 -m venv env

4. Activate the virtual environment:

• Mac/Linux:

source env/bin/activate

• Windows:

env\Scripts\activate

5. Install dependencies:

pip install -r requirements.txt

6. Start the Web UI:

python examples/gradio_demo.py

7. Open the local URL in your browser:

http://127.0.0.1:7860

Step 6: Configure the Web UI for DeepSeek-R1

1. Go to the Settings panel in the Web UI.

2. Specify the DeepSeek model path:

~/AI_Project/llama.cpp/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf

3. Adjust Timeout Settings:

• Increase the timeout to 120 seconds for larger models.

4. Enable Memory-Saving Mode if your system has less than 16 GB of RAM.

Step 7: Run an Example Task

Let’s create an agent that:

1. Searches for Tesla stock news.

2. Gathers Reddit mentions.

3. Predicts the stock trend.

Example Prompt:

Search for "Tesla stock news" on Google News and summarize the top 3 headlines. Then, check Reddit for the latest mentions of "Tesla stock" and predict whether the stock will rise based on the news and discussions.

--

Congratulations! You’ve built a powerful, private AI agent capable of automating the web and reasoning in real time. Unlike costly, restricted tools like OpenAI Operator, you’ve spent nothing beyond your time. Unleash your AI agent on tasks that were once impossible and imagine the possibilities for personal projects, research, and business. You’re not limited anymore. You own the web—your AI agent just unlocked it! 🚀

Stay tuned for a FREE, simple-to-use single app that will do all this and more.

7 notes

Text

Things That Are Hard

Some things are harder than they look. Some things are exactly as hard as they look.

Game AI, Intelligent Opponents, Intelligent NPCs

As you already know, "Game AI" is a misnomer. It's NPC behaviour, escort missions, "director" systems that dynamically manage the level of action in a game, pathfinding, AI opponents in multiplayer games, and possibly friendly AI players to fill out your team if there aren't enough humans.

Still, you are able to implement minimax with alpha-beta pruning for board games, pathfinding algorithms like A* or simple planning/reasoning systems with relative ease. Even easier: You could just take an MIT licensed library that implements a cool AI technique and put it in your game.
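To show how little code the algorithm itself needs, here is a minimal sketch of minimax with alpha-beta pruning. It assumes a toy game tree given as nested lists with numeric leaf scores; a real board game would generate child positions and evaluate them instead.

```python
import math


def alphabeta(node, maximizing=True, alpha=-math.inf, beta=math.inf):
    """Return the minimax value of `node` using alpha-beta pruning.

    A node is either a number (leaf score from the maximizer's view)
    or a list of child nodes.
    """
    if not isinstance(node, list):
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimizer above would never allow this branch
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximizer above already has a better option
    return best


tree = [[3, 5], [2, 9], [0, 7]]
print(alphabeta(tree))  # prints 3
```

In this tree the leaves 9 and 7 are never evaluated, because the first leaf of each later branch is already worse for the maximizer than the 3 it has secured.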

So why is it so hard to add AI to games, or more AI to games? The first problem is integration of cool AI algorithms with game systems. Although games do not need any "perception" for planning algorithms to work, no computer vision, sensor fusion, or data cleanup, and no Bayesian filtering for mapping and localisation, AI in games still needs information in a machine-readable format. Suddenly you go from free-form level geometry to a uniform grid, and from "every frame, do this or that" to planning and execution phases and checking every frame if the plan is still succeeding or has succeeded or if the assumptions of the original plan no longer hold and a new plan is in order. Intelligent behaviour is orders of magnitude more code than simple behaviours, and every time you add a mechanic to the game, you need to ask yourself "how do I make this mechanic accessible to the AI?"
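A rough sketch of that plan, execute, and re-check cycle might look like this. It assumes the level has already been reduced to a grid of 0 (free) and 1 (wall) cells; `astar` and `plan_still_valid` are illustrative names, not part of any engine.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (wall) cells."""
    def h(cell):  # Manhattan distance, admissible for 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]
    came_from = {start: None}
    cost = {start: 0}
    while frontier:
        _, g, current = heapq.heappop(frontier)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > cost[current]:
            continue  # stale queue entry, a cheaper route was found later
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < len(grid) and 0 <= nc < len(grid[0])):
                continue
            if grid[nr][nc] == 1:
                continue
            new_cost = g + 1
            if new_cost < cost.get((nr, nc), float("inf")):
                cost[(nr, nc)] = new_cost
                came_from[(nr, nc)] = current
                heapq.heappush(frontier, (new_cost + h((nr, nc)), new_cost, (nr, nc)))
    return None  # goal unreachable


def plan_still_valid(grid, remaining_path):
    """Per-frame check: is every remaining step of the plan still walkable?"""
    return all(grid[r][c] == 0 for r, c in remaining_path)
```

Each frame the NPC would call `plan_still_valid` on the rest of its path and only call `astar` again when the check fails, for example after a door closes. Even this toy version needs the level as a grid, which is exactly the integration cost described above.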

Some design decisions will just be ruled out because they would be difficult to get to work in a certain AI paradigm.

Even a game that is perfectly suited for AI techniques, like a turn-based, grid-based rogue-like with line-of-sight already implemented, can struggle to make use of learning or planning AI for NPC behaviour.

What makes advanced AI "fun" in a game is usually when the behaviour is at least a little predictable, or when the AI explains how it works or why it did what it did. What makes AI "fun" is when it sometimes or usually plays really well, but then makes little mistakes that the player must learn to exploit. What makes AI "fun" is interesting behaviour. What makes AI "fun" is game balance.

You can have all of those with simple, almost hard-coded agent behaviour.

Video Playback

If your engine does not have video playback, you might think that it's easy enough to add it by yourself. After all, there are libraries out there that help you decode and decompress video files, so you can stream them from disk, and get streams of video frames and audio.

You can just use those libraries, and play the sounds and display the pictures with the tools your engine already provides, right?

Unfortunately, no. The video is probably at a different frame rate from your game's frame rate, and the music and sound effect playback in your game engine are probably not designed with syncing audio playback to a video stream.

I'm not saying it can't be done. I'm saying that it's surprisingly tricky, and even worse, it might be something that can't be built on top of your engine, but something that requires you to modify your engine to make it work.
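To make the frame-rate mismatch concrete, here is a small sketch that picks which video frame to show. It uses integer arithmetic to avoid rounding surprises, and the function names are invented for this example. Treating the audio clock as the master, as in the second function, is one common approach, but it only works if your engine can report how many audio samples have actually been played.

```python
def video_frame_at(game_frame, game_fps, video_fps):
    """Video frame to display on a given game frame (integer arithmetic)."""
    return (game_frame * video_fps) // game_fps


def video_frame_for_audio(samples_played, sample_rate, video_fps):
    """Video frame that matches the audio position, with audio as the master clock."""
    return (samples_played * video_fps) // sample_rate


# A 24 fps video shown by a 60 fps game: frames are held for 3, 2, 3, 2 game frames.
print([video_frame_at(n, 60, 24) for n in range(10)])
# prints [0, 0, 0, 1, 1, 2, 2, 2, 3, 3]
```

The uneven hold times are unavoidable when the two rates do not divide evenly. The hard part is getting a trustworthy `samples_played` out of an engine whose audio system was built for sound effects and music loops.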

Stealth Games

Stealth games succeed and fail on NPC behaviour/AI, predictability, variety, and level design. Stealth games need sophisticated and legible systems for line of sight, detailed modelling of the knowledge-state of NPCs, communication between NPCs, and good movement/controls/game feel.

Making a stealth game is probably five times as difficult as a platformer or a puzzle platformer.

In a puzzle platformer, you can develop puzzle elements and then build levels. In a stealth game, your NPC behaviour and level design must work in tandem, and be developed together. Movement must be fluid enough that it doesn't become a challenge in itself, without stealth. NPC behaviour must be interesting and legible.
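As a taste of the simplest of those systems, here is a sketch of a grid-based line-of-sight test using Bresenham's line algorithm. It assumes walls are stored as a set of (x, y) cells. A real stealth game would add view cones, distance limits, lighting, and so on.

```python
def line_cells(a, b):
    """Grid cells on the Bresenham line from a to b, inclusive."""
    (x0, y0), (x1, y1) = a, b
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    cells = []
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 >= dy:  # step along x
            err += dy
            x0 += sx
        if e2 <= dx:  # step along y
            err += dx
            y0 += sy


def can_see(walls, guard, target):
    """True if no wall cell lies strictly between guard and target."""
    return not any(cell in walls for cell in line_cells(guard, target)[1:-1])
```

For example, `can_see({(2, 0)}, (0, 0), (3, 0))` is False because the wall at (2, 0) sits on the line. The geometry is the easy part. Making the result legible to the player is where the design work goes.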

Rhythm Games

These are hard for the same reason that video playback is hard. You have to sync up your audio with your gameplay. You need some kind of feedback telling you which audio is played when. You need to know how large the audio lag, screen lag, and input lag are, both in frames and in milliseconds.

You could try to counteract this by using certain real-time OS functionality directly, instead of using the machinery your engine gives you for sound effects and background music. You could try building your own sequencer that plays the beats at the right time.

Now you have to build good gameplay on top of that, and you have to write music. Rhythm games are the genre that experienced programmers are most likely to get wrong in game jams. They produce a finished and playable game, because they wanted to write a rhythm game for a change, but they get the BPM of their music slightly wrong, and everything feels off, more and more so as each song progresses.
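The BPM problem is easy to put into numbers. The sketch below assumes a constant tempo and a song clock measured in milliseconds; the function names and the way the lag values are applied are illustrative assumptions.

```python
def beat_time_ms(beat_index, bpm):
    """Time of the given beat in milliseconds, assuming a constant tempo."""
    return beat_index * 60_000 / bpm


def drift_ms(beat_index, true_bpm, assumed_bpm):
    """How far the game's beat grid is from the music at this beat."""
    return beat_time_ms(beat_index, assumed_bpm) - beat_time_ms(beat_index, true_bpm)


def hit_error_ms(press_time_ms, bpm, audio_lag_ms=0.0, input_lag_ms=0.0):
    """Signed distance from a button press to the nearest beat the player heard."""
    # Undo input lag to get the real press time, then undo audio lag to get
    # the song position the player was actually hearing at that moment.
    heard_song_time = press_time_ms - input_lag_ms - audio_lag_ms
    beat_len = 60_000 / bpm
    nearest = round(heard_song_time / beat_len) * beat_len
    return heard_song_time - nearest


print(round(drift_ms(16, 120, 121)))   # prints -66
print(round(drift_ms(240, 120, 121)))  # prints -992
```

With the music at 120 BPM and the game assuming 121, the beat grid is about 66 ms early after 16 beats and almost a full second early after two minutes, which matches the "more and more off" feeling described above.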

Online Multi-Player Netcode

Everybody knows this is hard, but still underestimates the effort it takes. Sure, back in the day you could use the now-discontinued ready-made solution for Unity 5.0 to synchronise the state of your GameObjects. Sure, you can use a library that lets you send messages and streams on top of UDP. Sure, you can just use TCP and server-authoritative networking.

It can all work out, or it might not. Your netcode will have to deal with pings of 300 milliseconds, lag spikes, packet loss, and maybe recover from five seconds of lost WiFi connections. If your game can't, because it absolutely needs the low latency or high bandwidth or consistency between players, you will at least have to detect these conditions and handle them, for example by showing text on the screen informing the player he has lost the match.

It is deceptively easy to build certain kinds of multiplayer games, and test them on your local network with pings in the single digit milliseconds. It is deceptively easy to write your own RPC system that works over TCP and sends out method names and arguments encoded as JSON. This is not the hard part of netcode. It is easy to write a racing game where players don't interact much, but just see each other's ghosts. The hard part is to make a fighting game where both players see the punches connect with the hit boxes in the same place, and where all players see the same finish line. Or maybe it's by design if every player sees his own car go over the finish line first.
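For reference, the "easy" RPC layer really is small. Here is a sketch of the message format, assuming a 4-byte length prefix in front of each JSON payload so that messages can be separated again on the receiving side, since TCP delivers a byte stream rather than discrete messages. The function names are made up for this example.

```python
import json
import struct


def encode_call(method, *args):
    """Encode an RPC call as a 4-byte big-endian length prefix plus JSON."""
    payload = json.dumps({"method": method, "args": list(args)}).encode("utf-8")
    return struct.pack(">I", len(payload)) + payload


def decode_calls(buffer):
    """Split a receive buffer into complete calls and the leftover bytes."""
    calls = []
    while len(buffer) >= 4:
        (length,) = struct.unpack(">I", buffer[:4])
        if len(buffer) < 4 + length:
            break  # message not fully received yet
        calls.append(json.loads(buffer[4:4 + length].decode("utf-8")))
        buffer = buffer[4 + length:]
    return calls, buffer
```

On the receiving side you would append whatever the socket returns to the leftover bytes and call `decode_calls` again. None of this addresses latency, prediction, or keeping two players' views consistent, which is where the real effort goes.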

50 notes

Text

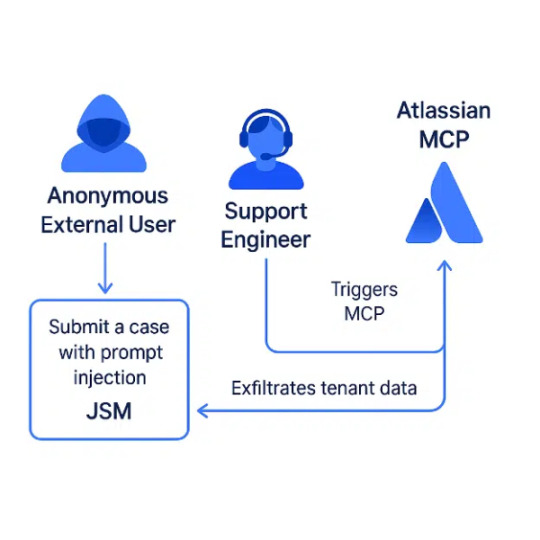

Researchers Warn of 'Living off AI' Attacks After PoC Exploits Atlassian's AI Agent Protocol

Summary: Researchers from Cato Networks demonstrated a proof-of-concept 'Living off AI' attack exploiting Atlassian's Model Context Protocol (MCP) integration in Jira Service Management, where malicious support tickets inject prompts that execute with internal user privileges. This enables data exfiltration and privilege escalation without direct attacker access, exposing a systemic risk in AI-driven workflows lacking prompt isolation and context validation.

Source: https://www.infosecurity-magazine.com/news/atlassian-ai-agent-mcp-attack/

More info: https://www.catonetworks.com/blog/cato-ctrl-poc-attack-targeting-atlassians-mcp/

2 notes

Text

How Agentic AI & RAG Revolutionize Autonomous Decision-Making

In the swiftly advancing realm of artificial intelligence, the integration of Agentic AI and Retrieval-Augmented Generation (RAG) is revolutionizing autonomous decision-making across various sectors. Agentic AI endows systems with the ability to operate independently, while RAG enhances these systems by incorporating real-time data retrieval, leading to more informed and adaptable decisions. This article delves into the synergistic relationship between Agentic AI and RAG, exploring their combined impact on autonomous decision-making.

Overview

Agentic AI refers to AI systems capable of autonomous operation, making decisions based on environmental inputs and predefined goals without continuous human oversight. These systems utilize advanced machine learning and natural language processing techniques to emulate human-like decision-making processes. Retrieval-Augmented Generation (RAG), on the other hand, merges generative AI models with information retrieval capabilities, enabling access to and incorporation of external data in real-time. This integration allows AI systems to leverage both internal knowledge and external data sources, resulting in more accurate and contextually relevant decisions.

What is Agentic AI and RAG?

Agentic AI: This form of artificial intelligence empowers systems to achieve specific objectives with minimal supervision. It comprises AI agents—machine learning models that replicate human decision-making to address problems in real-time. Agentic AI exhibits autonomy, goal-oriented behavior, and adaptability, enabling independent and purposeful actions.

Retrieval-Augmented Generation (RAG): RAG is an AI methodology that integrates a generative AI model with an external knowledge base. It dynamically retrieves current information from sources like APIs or databases, allowing AI models to generate contextually accurate and pertinent responses without necessitating extensive fine-tuning.

Capabilities

When combined, Agentic AI and RAG offer several key capabilities:

Autonomous Decision-Making: Agentic AI can independently analyze complex scenarios and select effective actions based on real-time data and predefined objectives.

Contextual Understanding: It interprets situations dynamically, adapting actions based on evolving goals and real-time inputs.

Integration with External Data: RAG enables Agentic AI to access external databases, ensuring decisions are based on the most current and relevant information available.

Enhanced Accuracy: By incorporating external data, RAG helps Agentic AI systems avoid relying solely on internal models, which may be outdated or incomplete.

How Agentic AI and RAG Work Together

The integration of Agentic AI and RAG creates a robust system capable of autonomous decision-making with real-time adaptability:

Dynamic Perception: Agentic AI utilizes RAG to retrieve up-to-date information from external sources, enhancing its perception capabilities. For instance, an Agentic AI tasked with financial analysis can use RAG to access real-time stock market data.

Enhanced Reasoning: RAG augments the reasoning process by providing external context that complements the AI's internal knowledge. This enables Agentic AI to make better-informed decisions, such as recommending personalized solutions in customer service scenarios.

Autonomous Execution: The combined system can autonomously execute tasks based on retrieved data. For example, an Agentic AI chatbot enhanced with RAG can not only answer questions but also initiate actions like placing orders or scheduling appointments.

Continuous Learning: Feedback from executed tasks helps refine both the agent's decision-making process and RAG's retrieval mechanisms, ensuring the system becomes more accurate and efficient over time.
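The retrieve-then-generate flow described above can be sketched in a few lines. This is a toy illustration under loud assumptions: the knowledge base `DOCS` is invented, the retriever ranks by shared words rather than by embeddings or a real database query, and `generate` stands in for whatever model call a real system would make.

```python
import re

DOCS = {  # hypothetical knowledge base
    "billing": "Invoices are issued on the first day of each month.",
    "shipping": "Standard shipping takes three to five business days.",
    "returns": "Items can be returned within 30 days of delivery.",
}


def tokens(text):
    """Lowercase word set used for the toy overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, docs=DOCS, k=1):
    """Rank documents by how many query words they share (toy retriever)."""
    q = tokens(query)
    ranked = sorted(docs, key=lambda name: len(q & tokens(docs[name])), reverse=True)
    return [docs[name] for name in ranked[:k]]


def build_prompt(query, passages):
    """Combine retrieved passages and the question into one enriched prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer using only the context."


def answer(query, generate):
    """Retrieve, build the enriched prompt, then hand it to the model."""
    return generate(build_prompt(query, retrieve(query)))
```

Passing `generate=print` shows the enriched prompt a model would receive. An agentic system would wrap this in a loop that decides when retrieval is needed and what action to take with the answer.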

Example Use Cases

1. Customer Support Automation

Scenario: A user inquires about their account balance via a chatbot.

How It Works: The Agentic AI interprets the query, determines that external data is required, and employs RAG to retrieve real-time account information from a database. The enriched prompt allows the chatbot to provide an accurate response while suggesting payment options. If prompted, it can autonomously complete the transaction.

Benefits: Faster query resolution, personalized responses, and reduced need for human intervention.

Example: Acuvate's implementation of Agentic AI demonstrates how autonomous decision-making and real-time data integration can enhance customer service experiences.

2. Sales Assistance

Scenario: A sales representative needs to create a custom quote for a client.

How It Works: Agentic RAG retrieves pricing data, templates, and CRM details. It autonomously drafts a quote, applies discounts as instructed, and adjusts fields like baseline costs using the latest price book.

Benefits: Automates multi-step processes, reduces errors, and accelerates deal closures.

3. Healthcare Diagnostics

Scenario: A doctor seeks assistance in diagnosing a rare medical condition.

How It Works: Agentic AI uses RAG to retrieve relevant medical literature, clinical trial data, and patient history. It synthesizes this information to suggest potential diagnoses and treatment options.

Benefits: Enhances diagnostic accuracy, saves time, and provides evidence-based recommendations.

Example: Xenonstack highlights healthcare as a major application area for agentic AI systems in diagnosis and treatment planning.

4. Market Research and Consumer Insights

Scenario: A business wants to identify emerging market trends.

How It Works: Agentic RAG analyzes consumer data from multiple sources, retrieves relevant insights, and generates predictive analytics reports. It also gathers customer feedback from surveys or social media.

Benefits: Improves strategic decision-making with real-time intelligence.

Example: Companies use Agentic RAG for trend analysis and predictive analytics to optimize marketing strategies.

5. Supply Chain Optimization

Scenario: A logistics manager needs to predict demand fluctuations during peak seasons.

How It Works: The system retrieves historical sales data, current market trends, and weather forecasts using RAG. Agentic AI then predicts demand patterns and suggests inventory adjustments in real-time.

Benefits: Prevents stockouts or overstocking, reduces costs, and improves efficiency.

Example: Acuvate’s supply chain solutions leverage predictive analytics powered by Agentic AI to enhance logistics operations.
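As a simplified illustration of the predict-then-adjust idea, the sketch below uses a moving average scaled by a peak-season factor. This is a deliberately basic stand-in, not the predictive model any particular vendor uses, and the function names and parameters are assumptions made for the example.

```python
from statistics import mean
from typing import List


def forecast_demand(history: List[float], window: int = 3, peak_factor: float = 1.0) -> float:
    """Average the most recent `window` periods and scale by a peak-season factor."""
    recent = history[-window:]
    return mean(recent) * peak_factor


def suggest_adjustment(forecast: float, on_hand: int, safety_stock: int = 0) -> int:
    """Positive result means units to order; negative means surplus units."""
    return round(forecast + safety_stock - on_hand)


if __name__ == "__main__":
    demand = forecast_demand([100, 120, 140, 160], window=3, peak_factor=1.5)
    print(demand, suggest_adjustment(demand, on_hand=150, safety_stock=20))
```

In practice the peak factor would come from the retrieved signals the scenario mentions, such as market trends and weather forecasts, rather than being passed in by hand.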

How Acuvate Can Help

Acuvate specializes in implementing Agentic AI and RAG technologies to transform business operations. By integrating these advanced AI solutions, Acuvate enables organizations to enhance autonomous decision-making, improve customer experiences, and optimize operational efficiency. Their expertise in deploying AI-driven systems ensures that businesses can effectively leverage real-time data and intelligent automation to stay competitive in a rapidly evolving market.

Future Scope

The future of Agentic AI and RAG involves the development of multi-agent systems where multiple AI agents collaborate to tackle complex tasks. Continuous improvement and governance will be crucial, with ongoing updates and audits necessary to maintain safety and accountability. As technology advances, these systems are expected to become more pervasive across industries, transforming business processes and customer interactions.

In conclusion, the convergence of Agentic AI and RAG represents a significant advancement in autonomous decision-making. By combining autonomous agents with real-time data retrieval, organizations can achieve greater efficiency, accuracy, and adaptability in their operations. As these technologies continue to evolve, their impact across various sectors is poised to expand, ushering in a new era of intelligent automation.


Text

Video Agent: The Future of AI-Powered Content Creation

The rise of AI-generated content has transformed how businesses and creators produce videos. Among the most innovative tools is the video agent, an AI-driven solution that automates video creation, editing, and optimization. Whether for marketing, education, or entertainment, video agents are redefining efficiency and creativity in digital media.

In this article, we explore how AI-powered video agents work, their benefits, and their impact on content creation.

What Is a Video Agent?

A video agent is an AI-based system designed to assist in video production. Unlike traditional editing software, it leverages machine learning and natural language processing (NLP) to automate tasks such as:

Scriptwriting – Generates engaging scripts based on keywords.

Voiceovers – Converts text to lifelike speech in multiple languages.

Editing – Automatically cuts, transitions, and enhances footage.

Personalization – Tailors videos for different audiences.

These capabilities make video agents indispensable for creators who need high-quality content at scale.

How AI Video Generators Work

The core of a video agent lies in its AI algorithms. Here’s a breakdown of the process:

1. Input & Analysis

Users provide a prompt (e.g., "Create a 1-minute explainer video about AI trends"). The AI video generator analyzes the request and gathers relevant data.

2. Content Generation

Using GPT-based models, the system drafts a script, selects stock footage (or generates synthetic visuals), and adds background music.

3. Editing & Enhancement

The video agent refines the video by:

Adjusting pacing and transitions.

Applying color correction.

Syncing voiceovers with visuals.

4. Output & Optimization

The final video is rendered in various formats, optimized for platforms like YouTube, TikTok, or LinkedIn.
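The four stages above can be sketched as a small pipeline. Each stage here is a stub: the script text, the edit names, and the output file names are placeholders invented for illustration, and a real video agent would call generation and rendering services at these points.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VideoJob:
    prompt: str
    script: str = ""
    edits: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)


def analyze(job: VideoJob) -> VideoJob:
    """Stage 1: stand-in for prompt analysis; only normalizes whitespace."""
    job.prompt = " ".join(job.prompt.split())
    return job


def generate(job: VideoJob) -> VideoJob:
    """Stage 2: stand-in for script drafting by a language model."""
    job.script = f"[draft script for: {job.prompt}]"
    return job


def edit(job: VideoJob) -> VideoJob:
    """Stage 3: record the refinements that would be applied."""
    job.edits = ["pacing", "color_correction", "voiceover_sync"]
    return job


def render(job: VideoJob, platforms: List[str]) -> VideoJob:
    """Stage 4: produce one placeholder output name per target platform."""
    job.outputs = [f"{p.lower()}.mp4" for p in platforms]
    return job


def run_pipeline(prompt: str, platforms: List[str]) -> VideoJob:
    job = VideoJob(prompt)
    for stage in (analyze, generate, edit):
        job = stage(job)
    return render(job, platforms)


if __name__ == "__main__":
    print(run_pipeline("Create a 1-minute explainer video about AI trends", ["YouTube", "TikTok"]))
```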

Benefits of Using a Video Agent

Adopting an AI-powered video generator offers several advantages:

1. Time Efficiency

Traditional video production takes hours or days. A video agent reduces this to minutes, allowing rapid content deployment.

2. Cost Savings

Hiring editors, voice actors, and scriptwriters is expensive. AI eliminates these costs while maintaining quality.

3. Scalability

Businesses can generate hundreds of personalized videos for marketing campaigns without extra effort.

4. Consistency

AI ensures brand voice and style remain uniform across all videos.

5. Accessibility

Even non-experts can create professional videos without technical skills.

Top Use Cases for Video Agents

From marketing to education, AI video generators are versatile tools. Key applications include:

1. Marketing & Advertising

Personalized ads – AI tailors videos to user preferences.

Social media content – Quickly generates clips for Instagram, Facebook, etc.

2. E-Learning & Training

Automated tutorials – Simplifies complex topics with visuals.

Corporate training – Creates onboarding videos for employees.

3. News & Journalism

AI-generated news clips – Converts articles into video summaries.

4. Entertainment & Influencers

YouTube automation – Helps creators maintain consistent uploads.

Challenges & Limitations

Despite their advantages, video agents face some hurdles:

1. Lack of Human Touch

AI may struggle with emotional nuance, making some videos feel robotic.

2. Copyright Issues

Using stock footage or AI-generated voices may raise legal concerns.

3. Over-Reliance on Automation

Excessive AI use could reduce creativity in content creation.

The Future of Video Agents

As AI video generation improves, we can expect:

Hyper-realistic avatars – AI-generated presenters indistinguishable from humans.

Real-time video editing – Instant adjustments during live streams.

Advanced personalization – AI predicting viewer preferences before creation.


Text

Elon Musk’s so-called Department of Government Efficiency (DOGE) operates on a core underlying assumption: The United States should be run like a startup. So far, that has mostly meant chaotic firings and an eagerness to steamroll regulations. But no pitch deck in 2025 is complete without an overdose of artificial intelligence, and DOGE is no different.

AI itself doesn’t reflexively deserve pitchforks. It has genuine uses and can create genuine efficiencies. It is not inherently untoward to introduce AI into a workflow, especially if you’re aware of and able to manage around its limitations. It’s not clear, though, that DOGE has embraced any of that nuance. If you have a hammer, everything looks like a nail; if you have the most access to the most sensitive data in the country, everything looks like an input.

Wherever DOGE has gone, AI has been in tow. Given the opacity of the organization, a lot remains unknown about how exactly it’s being used and where. But two revelations this week show just how extensive—and potentially misguided—DOGE’s AI aspirations are.

At the Department of Housing and Urban Development, a college undergrad has been tasked with using AI to find where HUD regulations may go beyond the strictest interpretation of underlying laws. (Agencies have traditionally had broad interpretive authority when legislation is vague, although the Supreme Court recently shifted that power to the judicial branch.) This is a task that actually makes some sense for AI, which can synthesize information from large documents far faster than a human could. There’s some risk of hallucination—more specifically, of the model spitting out citations that do not in fact exist—but a human needs to approve these recommendations regardless. This is, on one level, what generative AI is actually pretty good at right now: doing tedious work in a systematic way.

There’s something pernicious, though, in asking an AI model to help dismantle the administrative state. (Beyond the fact of it; your mileage will vary there depending on whether you think low-income housing is a societal good or you’re more of a Not in Any Backyard type.) AI doesn’t actually “know” anything about regulations or whether or not they comport with the strictest possible reading of statutes, something that even highly experienced lawyers will disagree on. It needs to be fed a prompt detailing what to look for, which means you can not only work the refs but write the rulebook for them. It is also exceptionally eager to please, to the point that it will confidently make stuff up rather than decline to respond.

If nothing else, it’s the shortest path to a maximalist gutting of a major agency’s authority, with the chance of scattered bullshit thrown in for good measure.

At least it’s an understandable use case. The same can’t be said for another AI effort associated with DOGE. As WIRED reported Friday, an early DOGE recruiter is once again looking for engineers, this time to “design benchmarks and deploy AI agents across live workflows in federal agencies.” His aim is to eliminate tens of thousands of government positions, replacing them with agentic AI and “freeing up” workers for ostensibly “higher impact” duties.

Here the issue is more clear-cut, even if you think the government should by and large be operated by robots. AI agents are still in the early stages; they’re not nearly cut out for this. They may not ever be. It’s like asking a toddler to operate heavy machinery.

DOGE didn’t introduce AI to the US government. In some cases, it has accelerated or revived AI programs that predate it. The General Services Administration had already been working on an internal chatbot for months; DOGE just put the deployment timeline on ludicrous speed. The Defense Department designed software to help automate reductions-in-force decades ago; DOGE engineers have updated AutoRIF for their own ends. (The Social Security Administration has recently introduced a pre-DOGE chatbot as well, which is worth a mention here if only to refer you to the regrettable training video.)

Even those preexisting projects, though, speak to the concerns around DOGE’s use of AI. The problem isn’t artificial intelligence in and of itself. It’s the full-throttle deployment in contexts where mistakes can have devastating consequences. It’s the lack of clarity around what data is being fed where and with what safeguards.

AI is neither a bogeyman nor a panacea. It’s good at some things and bad at others. But DOGE is using it as an imperfect means to destructive ends. It’s prompting its way toward a hollowed-out US government, essential functions of which will almost inevitably have to be assumed by—surprise!—connected Silicon Valley contractors.


Text

WHAT IS VERTEX AI SEARCH

Vertex AI Search: A Comprehensive Analysis

1. Executive Summary

Vertex AI Search emerges as a pivotal component of Google Cloud's artificial intelligence portfolio, offering enterprises the capability to deploy search experiences with the quality and sophistication characteristic of Google's own search technologies. This service is fundamentally designed to handle diverse data types, both structured and unstructured, and is increasingly distinguished by its deep integration with generative AI, most notably through its out-of-the-box Retrieval Augmented Generation (RAG) functionalities. This RAG capability is central to its value proposition, enabling organizations to ground large language model (LLM) responses in their proprietary data, thereby enhancing accuracy, reliability, and contextual relevance while mitigating the risk of generating factually incorrect information.

The platform's strengths are manifold, stemming from Google's decades of expertise in semantic search and natural language processing. Vertex AI Search simplifies the traditionally complex workflows associated with building RAG systems, including data ingestion, processing, embedding, and indexing. It offers specialized solutions tailored for key industries such as retail, media, and healthcare, addressing their unique vernacular and operational needs. Furthermore, its integration within the broader Vertex AI ecosystem, including access to advanced models like Gemini, positions it as a comprehensive solution for building sophisticated AI-driven applications.
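For readers unfamiliar with the RAG workflow steps named above (ingestion, processing, embedding, indexing), the following toy sketch shows what each step does in principle. It does not use the Vertex AI Search API: the word-count "embedding" and in-memory index are simplifying assumptions, and all function names are hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple


def chunk(text: str, size: int = 8) -> List[str]:
    """Processing: split a document into fixed-size word chunks."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (real systems use learned vectors)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(docs: List[str]) -> List[Tuple[str, Counter]]:
    """Ingestion and indexing: store each chunk alongside its embedding."""
    return [(c, embed(c)) for d in docs for c in chunk(d)]


def retrieve(index: List[Tuple[str, Counter]], query: str, k: int = 1) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def grounded_prompt(index: List[Tuple[str, Counter]], query: str) -> str:
    """Grounding: prepend retrieved context to the question for an LLM."""
    context = "\n".join(retrieve(index, query))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    idx = build_index([
        "Returns are accepted within 30 days of purchase.",
        "Shipping takes five business days.",
    ])
    print(grounded_prompt(idx, "how long does shipping take"))
```

A managed service takes over these steps and replaces the toy pieces with production-grade parsing, embeddings, and ranking, which is the simplification the paragraph above describes.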

However, the adoption of Vertex AI Search is not without its considerations. The pricing model, while granular and offering a "pay-as-you-go" approach, can be complex, necessitating careful cost modeling, particularly for features like generative AI and always-on components such as Vector Search index serving. User experiences and technical documentation also point to potential implementation hurdles for highly specific or advanced use cases, including complexities in IAM permission management and evolving query behaviors with platform updates. The rapid pace of innovation, while a strength, also requires organizations to remain adaptable.