#MLModels

Explore tagged Tumblr posts

Text

If you’ve read or studied Introduction to Machine Learning with Python, you’ll walk away with a solid foundation in machine learning (ML) concepts and the practical skills to implement ML algorithms using Python. This book is designed for beginners and intermediate learners, and it focuses on hands-on learning with real-world examples. Below is a step-by-step breakdown of the outcomes you can expect from this book, presented in a user-friendly format:

#MachineLearning#Python#DataScience#MachineLearningWithPython#ML#PythonProgramming#DataAnalysis#PythonForDataScience#MLAlgorithms#ArtificialIntelligence#MachineLearningTutorial#DataMining#PythonLibraries#ScikitLearn#DeepLearning#AI#PythonForMachineLearning#DataScienceTutorial#MLModels#TechBooks#MachineLearningProjects#AIProgramming#MachineLearningAlgorithms#MLDevelopment#DataVisualization#PythonDataScience

0 notes

Text

youtube

Session 16 : What is Reinforcement Learning | Explained: Key Concepts and Model Breakdown

Welcome to Session 16! 🚀 In this enlightening session, we dive deep into the fascinating world of Reinforcement Learning. 🤖 Get ready for a comprehensive exploration as we break down key concepts and unravel the mysteries behind Reinforcement Learning models.

youtube

Subscribe to "Learn And Grow Community"

YouTube: https://www.youtube.com/@LearnAndGrowCommunity

LinkedIn Group: https://linkedin.com/company/LearnAndGrowCommunity

Follow #learnandgrowcommunity

#ReinforcementLearning#MachineLearning#AIExplained#mlmodels#deeplearninginsights#intelligenttechnology#aiinnovation#DataScience#smarttechsolutions#aibasics#RLApplications#cognitivecomputing#AlgorithmicIntelligence#futuretechtrends#aiprogress#learnai#techevolution#mlalgorithms#aiforeveryone#aiapplications#aicommunity#aiexplained#machinelearningbasics#machinelearningjourney#ml#learnandgrowcommunity#Youtube

1 note

Text

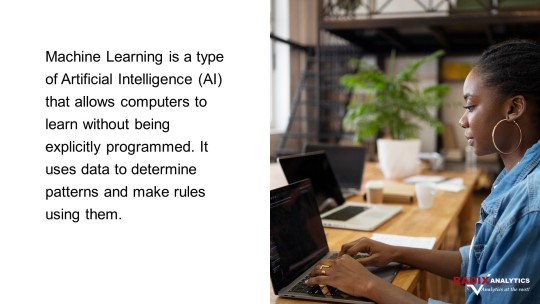

MACHINE LEARNING

Machine Learning For Data Analytics

INTRODUCTION

HOW DOES MACHINE LEARNING WORK?

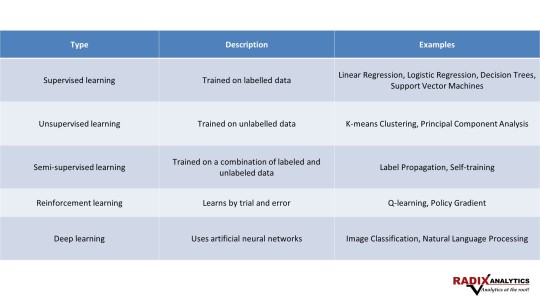

TYPES OF MACHINE LEARNING

USES OF ML IN ANALYTICS

Identify patterns in data

Make predictions about future events

Cluster data into groups

Reduce the dimensionality of data

Improve the accuracy of data models

CHALLENGES OF ML IN ANALYTICS

It can be difficult to find and prepare the data that is needed to train the algorithms.

Machine Learning algorithms can be computationally expensive to train and run.

Some of the algorithms are difficult to interpret even though they work well.

FUTURE OF MACHINE LEARNING IN ANALYTICS

Machine learning is a rapidly evolving field, and there are many new developments in this area.

As machine learning algorithms become more powerful and efficient, they will be used for a wider range of data analytics tasks.

Machine learning will also be used to automate more tasks that are currently done manually.

#MachineLearning#ML#ArtificialIntelligence#AI#DataScience#DeepLearning#NeuralNetworks#Algorithm#DataMining#PredictiveModeling#BigData#ComputerVision#NaturalLanguageProcessing#PatternRecognition#AIinBusiness#MLAlgorithms#MLModels#MachineLearningEngineer#MLResearch#AIApplications

0 notes

Text

Human-in-the-loop (HITL) is a mechanism that uses human interaction to train, fine-tune, or test systems such as AI models, in order to get the most accurate results possible.

In general, HITL makes the following contributions to AI models 👩‍💻:

Data labelling: People help machine learning models understand the world by labelling data accurately.

Feedback: Machine learning models make predictions with an associated confidence level. When the model's confidence falls below a predetermined threshold, data scientists give the model feedback to improve its performance.
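The feedback step can be sketched as a simple confidence gate. This is a minimal illustration, not a reference implementation: the `Prediction` type, the `route_predictions` helper, the sample items, and the 0.8 threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's score for its predicted label, in [0, 1]


def route_predictions(predictions, threshold=0.8):
    """Split predictions into auto-accepted ones and ones needing human review."""
    accepted, needs_review = [], []
    for p in predictions:
        # Low-confidence cases go to a person; the corrected labels can
        # later be added to the training set.
        (accepted if p.confidence >= threshold else needs_review).append(p)
    return accepted, needs_review


# Hypothetical sample data.
preds = [
    Prediction("doc-1", "invoice", 0.97),
    Prediction("doc-2", "receipt", 0.55),
    Prediction("doc-3", "invoice", 0.80),
]
accepted, needs_review = route_predictions(preds)
print([p.item_id for p in accepted])      # ['doc-1', 'doc-3']
print([p.item_id for p in needs_review])  # ['doc-2']
```

In practice the threshold would be tuned against how much reviewer time is available and how costly a wrong automatic decision is.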

#HITL#humanintheloop#AImodels#MLmodels#artificialintelligence#humanintelligence#neuralnetworks#supervisedtraining#erpsoftware#erp#erpsoftwareinchennai#erpsoftwareinbangalore#business#pondicherry#industry

0 notes

Text

#Eli5Python#PythonExplainItLikeIm5#MachineLearning#DataScience#AI#ExplainableAI#InterpretableML#MLModel#Python#Programming#Code#DataAnalysis#DataVisualization#DeepLearning#ML#AIExplainability#ModelInterpretation#Transparency#TrustworthyAI

0 notes

Text

Using Google Document AI to Empower Governments

Google Cloud offers adaptable and scalable solutions for adaptive AI like Google Document AI, Translation AI, Contact Center AI and more in the public sector.

The public sector has particular difficulties because of its limited resources, complicated rules, and changing constituent needs. Emerging as a game-changer, artificial intelligence (AI) offers a means to improve decision-making, expedite processes, and provide more significant services. However, AI must be customised to fulfil your objective in order to be genuinely effective. This article will discuss how Google Cloud’s AI solutions are tailored to the particular requirements of the public sector and how they are already having an impact on businesses just like yours.

AI that changes to suit your goals

With its unified technology stack, Google AI for Public Sector provides an extensive, integrated toolkit to address every facet of your AI journey. Because of its flexibility, you can:

Begin small, expand large: Google AI can grow with your demands, whether you’re launching a mission-critical solution or piloting a new initiative.

Tailor to your challenges: There is no one-size-fits-all approach with Google AI. It is intended to be customised to meet the unique objectives and limitations of the public sector.

Change as your workflows do: Google AI solutions may be customised to deliver individualised experiences as your data changes and new problems emerge, guaranteeing that your apps stay useful and current.

Impact of AI in real life

The potential of adaptive, flexible AI is not limited to theory. This is how it’s starting to have an effect:

Document AI Google

Dearborn, MI: Using Google’s Translation AI, the city improved responsiveness, made its services linguistically accessible, and became a national leader in digital transformation. More than half of the city’s residents speak a language other than English at home. To better serve this diverse population, the city partnered with Google Cloud to offer 24/7 online access to critical services in multiple languages, removing barriers and letting residents engage with their government on their own terms. Virtual agents powered by Contact Center AI provide individualised service, while Document AI and Translation AI aid accessibility. The change extends to the city’s workforce, who now have access to Google Workspace and Google Cloud certification options.

Government agencies and educational institutions in New York are utilising AI, particularly generative AI, at the state and local levels to guarantee robust cyber resilience, improve services and results, and improve the resident experience.

Sullivan County, New York: This small, rural county is a leader in AI-powered citizen services. Using Google Cloud’s Vertex AI, it quickly rolled out a generative AI chatbot across more than 40 departments, which streamlined answers to frequently asked questions, freed up employees for more complex work, and cut call volume by 62%.

New York State Department of Labor (NYSDOL): The department’s virtual agent and its “Perkins” chatbot, available around the clock in 13 languages, have greatly improved accessibility and user experience while lightening the workload for NYSDOL employees. NYSDOL received the Merrill Baumgardner Innovation in Information Technology Award in recognition of its creative application of Google AI on a national scale.

Google Document AI

Develop document processors to enhance data extraction, automate time-consuming operations, and extract deeper meaning from structured and unstructured document data. Developers may design high-accuracy document extraction, classification, and splitting processors with the use of Google Document AI.

Try Document AI free

New users can test Document AI and other Google Cloud products with a $300 free credit.

Utilise a pre-built, Google-recommended method to summarise lengthy documents using generative AI.

Connect to BigQuery, Vertex AI Search, and other Google Cloud products with ease.

Enterprise-ready and backed by Google Cloud’s promises on data security and privacy

Designed with developers in mind; quickly design document processors using the UI or API

Advantages

Quicker time to value

Use generative AI to classify documents or extract data right away, with no prior training required. To get structured data back, simply post a document to an enterprise-ready API endpoint.

Higher accuracy

Google Document AI is powered by the most recent foundation models, optimised for document tasks. The platform’s fine-tuning and auto-labeling tools also offer several paths to the accuracy you need.

Better decision-making

Use generative AI to organise and digitise information from documents and gain deeper insights that support business decisions.

Google Document AI Features

Utilise generative AI to process documents.

Document AI Workbench

Document AI Workbench makes it simple to build custom processors that split, classify, and extract structured data from documents. Because Workbench is powered by generative AI, it can be used straight out of the box to produce accurate results for a variety of document types. Additionally, with just a button click or an API request, you can fine-tune the large model for higher accuracy by supplying as few as 10 documents.

Enterprise Document OCR

Enterprise Document OCR gives users access to 25 years of Google optical character recognition (OCR) research. It uses models trained on business documents to detect text in more than 200 languages from PDFs and scanned document images. By analysing the document’s structure, it can recognise layout elements including words, symbols, lines, paragraphs, and blocks. Advanced features include best-in-class handwriting recognition (50 languages), math formula recognition, font-style detection, and the extraction of selection marks such as checkboxes and radio buttons.

Form Parser

Developers use Form Parser to capture fields and values from standard forms, extract generic entities such as names, addresses, and prices, and structure data contained in tables. It works out of the box on a wide range of documents, with no training or customisation required.

Pretrained

Try pretrained models for these commonly used document types: W2, paystub, bank statement, invoice, expense, US passport, US driver’s licence, and identity verification.

Google Document AI Use cases

To support automation and analytics, extract data

By extracting structured data from your documents, Google Document AI Workbench can be used to automate data entry. Typical applications include mailrooms, shipping yards, mortgage processing departments, and procurement. Use this data to make business decisions more effectively and efficiently.

Use BigQuery to find insights hidden in documents

You can now import document metadata straight into a BigQuery object table. Join the parsed data with other BigQuery tables to combine structured and unstructured data, enabling thorough document analytics.

Classify documents

Assigning classifications or categories to documents as they enter a business process makes them easier to handle, search, filter, and analyse. Custom Splitter and Classifier use machine learning to accurately predict the class of a single document or of several documents inside a file. Use these products to make document processes more efficient.

Make apps for document processing more intelligent

With generative AI, SaaS clients and ISV partners may swiftly enhance and grow their document processing offerings. Customers can advance document apps using a basic API prediction endpoint and document response format.

Text digitization for ML model training

Enterprise Document OCR can digitise text for ML model training, letting users extract value from archival material that would otherwise be unsuitable for it. OCR extracts text from scanned charts, reports, presentations, and documents before they are saved to a data warehouse or cloud storage account. Use these high-quality OCR outputs to speed up digital transformation projects, such as building business-specific ML models.

Boost corporate potential with generative AI

Using generative AI, increase business capabilities. Gather document data to create new generative AI frameworks and architectures. By combining OCR with the Vertex AI PaLM API, users may extract useful information from documents and use it to create new documents, automate document comparisons, and even create document Q&A experiences.

Google Document AI pricing

For all of your needs related to processing documents, training models, and storage, Document AI provides clear and affordable pricing. See Google’s price page for further information.

Read more on govindhtech.com

#Usinggoogledocumentai#Empowergovernments#artificialintelligence#ai#googleai#googlecloud#documentai#vertexsearch#mlmodel#api#technology#technews#news#govindhtech

0 notes

Text

How to Integrate Machine Learning into Your iOS App Using Swift

In the ever-evolving world of mobile app development, one of the most exciting and cutting-edge trends is integrating Machine Learning (ML) into iOS applications. As a developer or business owner looking to stay competitive, leveraging the power of ML in your app can drastically enhance user experience, efficiency, and personalization. If you're looking to develop such an app, partnering with a Swift App Development Company can help you navigate the complexities of integrating machine learning into your iOS app. In this blog, we'll guide you step-by-step on how to integrate machine learning into your iOS app using Swift.

Understanding Machine Learning in iOS App Development

Before jumping into the integration process, it’s important to have a solid understanding of what machine learning entails and how it can be used in iOS apps. Machine learning is a field of artificial intelligence (AI) that allows apps to learn from data, recognize patterns, and make decisions with minimal human intervention. Uses range from image recognition, predictive text, and personalized recommendations to more advanced tasks such as natural language processing (NLP).

Apple’s core framework for machine learning is Core ML. Core ML is designed to enable developers to easily integrate machine learning models into their iOS apps. With Core ML, you can integrate models trained on different machine learning algorithms like deep learning, tree ensembles, support vector machines, and others.

Step-by-Step Guide to Integrating ML into Your iOS App Using Swift

1. Choose the Right Machine Learning Model

The first step in integrating machine learning into your iOS app is to choose the right ML model for your app’s functionality. The model you select will depend on the problem you're trying to solve. For example:

Image classification – If you want your app to recognize images or objects, you can use models trained for image recognition.

Natural language processing (NLP) – If your app needs to process and understand human language, you could integrate an NLP model.

Recommendation systems – If your app provides personalized recommendations, such as in e-commerce or media platforms, a recommendation engine model might be the right fit.

Once you’ve identified the appropriate model, you can either use a pre-trained model, such as those Apple publishes for Core ML, or create your own custom model.

2. Convert Your Model to Core ML Format

If you already have a trained machine learning model in a different format, such as TensorFlow or PyTorch, you’ll need to convert it to the Core ML format. Apple provides a tool called Core ML Tools for this purpose, which simplifies the process of converting models from popular frameworks to Core ML.

To convert your model, you can use the following steps:

Use the coremltools Python package to convert models from other frameworks to .mlmodel format.

If you're using a pre-trained model, make sure it is optimized for mobile devices to ensure performance remains high.

3. Integrating the Model into Your iOS App Using Xcode

After converting your model to the Core ML format, the next step is to integrate it into your Xcode project. Here’s how you can do this:

Import the model: Drag and drop the .mlmodel file into your Xcode project.

Generate a Swift class: When you add the .mlmodel to your project, Xcode automatically generates a Swift class that represents the model, making it easier to work with.

Use the model in code: Now that the model is part of your project, you can use it in your app by calling the generated class.

For example, you can create an instance of the model and pass data to it for prediction like so:

```swift
import CoreML

// MyModel is the class Xcode generates from MyModel.mlmodel.
guard let model = try? MyModel(configuration: MLModelConfiguration()) else {
    fatalError("Model loading failed.")
}

// inputData must match the input feature type declared by the model.
let input = MyModelInput(feature: inputData)
guard let prediction = try? model.prediction(input: input) else {
    fatalError("Prediction failed.")
}
```

4. Optimize Performance for Mobile Devices

Mobile devices such as iPhones and iPads have limited resources compared to desktop computers. To make sure your machine learning model runs efficiently on them, you need to optimize it. One way is model quantization, which stores the model's weights at lower precision to shrink the model, usually at the cost of only a small loss of accuracy. Apple’s Core ML Compiler can help with optimizations that ensure smooth performance on mobile devices.
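To make the idea of quantization concrete, here is a minimal pure-Python sketch of 8-bit linear quantization. It only illustrates the principle: the `quantize` and `dequantize` helpers and the sample weights are made up for this example, and it is not how Core ML itself is invoked (Core ML Tools ships its own compression utilities, which are not shown here).

```python
def quantize(weights):
    """Map floats to 8-bit integer codes in [0, 255] using a linear scale."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all weights are equal
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize(codes, scale, lo):
    """Recover approximate float values from the 8-bit codes."""
    return [lo + c * scale for c in codes]


# Hypothetical weights standing in for one layer of a model.
weights = [-1.2, -0.4, 0.0, 0.35, 0.9, 1.5]
codes, scale, lo = quantize(weights)
restored = dequantize(codes, scale, lo)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(codes)
# The rounding error per weight is at most half a quantization step.
print(max_error <= scale / 2 + 1e-9)
```

Each weight now needs one byte instead of four (for 32-bit floats), and the worst-case error per weight is bounded by half the step size. Whether that error matters for accuracy depends on the model, which is why the quantized model should be re-tested.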

5. Testing and Debugging Your Model

After integrating your model, thorough testing is essential to ensure the machine learning feature works correctly. You'll need to test:

Accuracy – Check that the model is providing accurate results and making correct predictions.

Performance – Test how quickly the model runs, and ensure there are no noticeable delays or lag in the app.

Battery usage – Since machine learning models can be computationally intensive, it's crucial to monitor the app's energy consumption and ensure that it doesn’t drain the device’s battery excessively.

You can use Xcode's Instruments tool to monitor the performance of your app, including CPU usage and memory consumption, while the ML model is running.

6. Consider User Privacy and Data Security

When implementing machine learning in an iOS app, it’s important to consider user privacy, especially when dealing with sensitive data like photos, personal details, or health information. Ensure that the model operates locally on the device as much as possible to avoid sending personal data to external servers.

Apple provides privacy features like App Tracking Transparency and Data Protection APIs that you can use to enhance the privacy of your users’ data.

The Cost of Developing a Machine Learning iOS App

When you plan to integrate machine learning into your app, you might wonder how much it will cost to develop such an app. The cost of integrating machine learning depends on various factors such as the complexity of the model, the development time, and the specific features you want. Using a mobile app cost calculator can provide you with a more accurate estimate for your project.

While calculating, remember that machine learning integrations typically add more complexity to app development, so it's important to factor in the cost of hiring experienced developers, purchasing necessary tools, and investing in data processing. If you are looking for professional help, consulting a Swift App Development Company could save you a significant amount of time and resources.

If you're interested in exploring how a Swift App Development Company can benefit your business, we encourage you to book an appointment with our team of experts.

Conclusion

Integrating machine learning into your iOS app can bring a wealth of functionality, transforming it into a more intelligent and personalized experience for your users. By using tools like Core ML and following the steps outlined in this blog, you can successfully incorporate ML into your Swift-powered app. Whether you're building a recommendation engine or implementing image recognition, the possibilities are endless.

If you want to ensure your machine learning app is built to the highest standards, reach out for expert Swift App Development Services. Our team of experienced developers can help you bring your app ideas to life, optimizing them for performance, security, and user experience. Get in touch with us today to start your project!

0 notes

Text

Machine Learning Sklearn and TensorFlow : Course Path

#MachineLearning #DataScience #PythonProgramming #Sklearn #TensorFlow #AI #DeepLearning #DataPreprocessing #FeatureEngineering #MLJourney #LearnML #TechSkills #DataCleaning #MLModels

Introduction

Curious about machine learning using Sklearn and TensorFlow but unsure where to start? Look no further! Our carefully curated learning path, designed for Python programmers and data scientists, takes you from the fundamentals of Sklearn to the advanced realms of deep learning with TensorFlow. Whether you’re brushing up on data cleaning or diving into neural networks, this journey…

0 notes

Text

😍🥰😘🍑🍑🍆🍆14/JANUARY/2024 [}14/01/2024{]🍆🍆🍑🍑😘🥰😍

0 notes

Text

"Machine Learning Yearning" is a practical guide by Andrew Ng, a pioneer in the field of artificial intelligence and machine learning. This book is part of the deeplearning.ai project and is designed to help you navigate the complexities of building and deploying machine learning systems. It focuses on strategic decision-making and best practices rather than algorithms or code. Below is a step-by-step breakdown of the outcomes you can expect after reading this book, presented in a user-friendly manner:

#MachineLearning#DeepLearning#AI#ArtificialIntelligence#ML#DeepLearningAI#MLYearning#AndrewNg#AIProject#DataScience#MachineLearningBooks#NeuralNetworks#AICommunity#TechBooks#AIResearch#AITraining#MLModels#DeepLearningTutorials#AIApplications#MLAlgorithms#MLDevelopment#DeepLearningProjects#MachineLearningModels#TechLearning#AIForBeginners#DataDriven#MachineLearningTools

0 notes

Video

instagram

Subscribe to us on YouTube - Link in Bio #ml #mlmodels #mlengineer #mlflow #artificialintelligence #machinelearning #datascience #dataanalytics #robotics #deeplearning #bigdata #coding #code #programming #innovation #creativity #softwaredeveloper #automation #100daysofcode #data #technology #python #iot #internetofthings #datasciencelovers #DSL https://www.instagram.com/p/CnZT9D9r2xQ/?igshid=NGJjMDIxMWI=

#ml#mlmodels#mlengineer#mlflow#artificialintelligence#machinelearning#datascience#dataanalytics#robotics#deeplearning#bigdata#coding#code#programming#innovation#creativity#softwaredeveloper#automation#100daysofcode#data#technology#python#iot#internetofthings#datasciencelovers#dsl

0 notes

Photo

📣 Are you an AI enthusiast who would like to learn more about the ways AI 🧬 can be useful? Then we invite you to watch the replay 🎥 of our very first webinar, held on Wednesday 2nd February 2022, where we talked about types of AI recommendation systems, methods of building recommendation systems, building a recommendation system using the word embedding method, and more. The link to the replay is in our bio and in the comments below 👇 #airecommendationsystem #recommendationsystem #webinar #mlmodels #datascience #ai #datatera #artificialintelligence #aimodels (at Stockholm, Sweden) https://www.instagram.com/p/CZ_-Do2D7Dz/?utm_medium=tumblr

#airecommendationsystem#recommendationsystem#webinar#mlmodels#datascience#ai#datatera#artificialintelligence#aimodels

0 notes

Text

DESTINATION: Purpose of pickling a model.

ROUTE: When we work with data in the form of dictionaries, DataFrames, or any other data type, we might want to save it to a file so we can use it later or send it to someone else. This is what Python's pickle module is for: it serializes objects so they can be saved to a file and loaded into a program again later on.

Pickling - Pickle is used for serializing and de-serializing Python object structures, a process also called marshalling or flattening. Serialization is the process of converting an object in memory to a byte stream that can be stored on disk or sent over a network. Later on, this byte stream can be retrieved and de-serialized back into a Python object. Pickling is not to be confused with compression! The former converts an object from one representation (data in Random Access Memory (RAM)) to another (bytes on disk), while the latter encodes data with fewer bits in order to save disk space.
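A minimal round trip with the standard-library pickle module might look like the sketch below. The dictionary is only a stand-in for a trained model, and the file name `model.pkl` is an arbitrary choice for this example.

```python
import os
import pickle
import tempfile

# A stand-in for a trained model: any picklable Python object works the same way.
model = {
    "name": "demo-classifier",
    "weights": [0.25, -1.5, 3.0],
    "classes": ("cat", "dog"),
}

# Serialize to a byte stream in memory.
payload = pickle.dumps(model)
print(type(payload))  # <class 'bytes'>

# Write the bytes to disk, then load them back. Note the binary file modes.
path = os.path.join(tempfile.mkdtemp(), "model.pkl")
with open(path, "wb") as f:
    pickle.dump(model, f)
with open(path, "rb") as f:
    restored = pickle.load(f)

print(restored == model)  # True
```

Because loading a pickle can execute arbitrary code, only unpickle files that come from a source you trust.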

0 notes

Text

How Math-Based Dida Machine Learning Automates Sales

Dida machine learning

The gap between what businesses need from machine learning (ML) solutions and what off-the-shelf, black-box products can offer is widening as business demand for complex automation grows. Dida specialises in building custom AI solutions for medium-sized and large businesses. Its highly skilled team, whose members hold degrees in physics and mathematics, is adept at approaching complex problems abstractly in order to give clients advanced, practical AI solutions. And because the solutions are modular by design, they are explainable: businesses can clearly see what is happening at every stage of the process.

Future energy compared to historical bottlenecks

Dida put its blend of machine learning and mathematics expertise to work when it built a custom AI solution on Google Cloud to automate part of Enpal’s solar panel sales process. Enpal, the first greentech unicorn in Germany, was going through a period of tremendous growth, driven by rising demand for environmental sustainability solutions in the country.

To sustain this growth, Enpal needed a more efficient way to produce quotes for prospective solar panel buyers. In the existing process, a salesperson would load a satellite image of the customer’s rooftop into a desktop application, manually count the roof tiles to work out the size of the roof, and estimate the roof’s angle. From this estimate the salesperson would determine how many solar panels the customer needed, then build a mock-up showing the panels on the roof.

The whole procedure took one salesperson 120 minutes, which made it hard to scale as the company grew. It was also error-prone: counting roof tiles is laborious and the roof-angle estimate was imprecise, which led to inaccurate cost and energy production estimates. Enpal wanted a custom AI solution that would automate the procedure, reducing inefficiency and improving accuracy. It turned to dida for its blend of mathematical problem solving and AI experience.

Effective training of strong machine learning models

Dida builds its solutions on Google Cloud whenever possible, but remains platform neutral to meet specific customer requests. Google Cloud is an affordable, easy-to-use platform with a range of AI development tools, and because dida pays only for the services it uses, the pricing model is economical. While developing Enpal’s solution, the team split the process into a number of smaller parts, which produced a modular, understandable solution. Nearly every stage of the process used Google Cloud products.

The first step was to gather enough rooftop images to train a robust machine learning model. The team collected images of rooftops in a variety of sizes and shapes using the Google Maps Platform API and stored them in Cloud Storage, using automatic storage class transitions to keep costs down.

The team used these images to build a baseline model to validate the idea. This meant training the model to distinguish rooftops from other features, and to recognise where skylights and chimneys would make it impossible to install solar panels.

While building the model, the team ran experiments through a CI/CD workflow in Cloud Build, adjusting parameters until it had a working model. Cloud Build kept the development cycle continuous, which made the process more efficient and allowed the team to build the baseline model in just four weeks.

Applying a theoretical framework to practical issues

Next, it took some effort to find the right formula for the angle of the roof’s south-facing side. The team drew on projective geometry and its mathematical problem-solving skills to build a model that could determine the correct angle from roof images submitted by potential customers. Combining this mathematical technique with the ML model gave an automated procedure for determining the roof area. Two more stages were then added to the process: one to determine the required number of solar panels and another to visualise their placement on the roof.
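The post does not give dida's actual formula. As a rough illustration of why the roof angle matters, the sketch below uses the basic geometric fact that a flat roof face tilted by an angle appears, when viewed from directly above, shrunk by the cosine of that angle. The function names, the 1.7 m² panel size, and the 80% usable share are all assumptions made for this example, not values from the project.

```python
import math


def roof_area(footprint_m2, tilt_deg):
    """True area of a flat roof face, given its top-down footprint and tilt."""
    return footprint_m2 / math.cos(math.radians(tilt_deg))


def panel_count(area_m2, panel_m2=1.7, usable_fraction=0.8):
    """Whole panels that fit, assuming a fixed usable share of the roof."""
    return math.floor(area_m2 * usable_fraction / panel_m2)


# Hypothetical roof: 40 m² seen from above, tilted 35 degrees.
area = roof_area(footprint_m2=40.0, tilt_deg=35.0)
print(round(area, 1))     # 48.8
print(panel_count(area))  # 22
```

Even in this simplified form, an error of a few degrees in the tilt changes the estimated area, and therefore the panel count and the quote, which is why the imprecise manual angle estimate was a problem.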

During ML model training, the team used Compute Engine and added GPUs to its virtual machines to speed up workloads and provide high availability. Compute Engine made it easy to scale usage up or down, so dida was charged only for the compute power it actually used. While the model was being refined, TensorBoard let the team monitor each individual training run and evaluate the model’s performance.

Automating the procedure to achieve a quicker, more precise sales process

After a six-month development period, Enpal had a customised, automated solution that quickly and easily determines the size of a roof and the number of panels needed. Because the solution is modular, Enpal can also manually adjust details along the way, such as a roof’s exact dimensions, to make the result as accurate as possible.

This gave Enpal good visibility into how the system was operating. Dida measured the accuracy of rooftop detection with a performance metric known as Intersection over Union (IoU): the area where the predicted and true rooftop regions overlap, divided by the area covered by either. After model training, optimisation, and post-processing, the model reached an IoU of 93%.
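For readers unfamiliar with the metric, here is a minimal sketch of IoU on two small binary masks, where 1 marks a pixel labelled as rooftop. The `iou` helper and the toy masks are invented for illustration and are unrelated to the project's data.

```python
def iou(pred, truth):
    """Intersection over Union for two binary masks (nested lists of 0/1)."""
    intersection = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            intersection += p & t  # pixel is rooftop in both masks
            union += p | t         # pixel is rooftop in at least one mask
    # Two empty masks agree perfectly, so treat that case as 1.0.
    return intersection / union if union else 1.0


truth = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
pred = [
    [0, 1, 1, 1],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
print(iou(pred, truth))  # 5 shared pixels / 7 pixels in either mask, about 0.714
```

An IoU of 1.0 means the predicted region matches the true region exactly, so a score of 93% indicates that the detected rooftops overlap the labelled ones almost completely.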

With dida’s solution, and thanks to the efficiency of building it on Google Cloud, an Enpal salesperson can now complete the automated procedure in just 15 minutes, compared with 120 minutes for the previous manual method: a reduction of 87.5%.
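The 87.5% figure follows directly from the two durations quoted above, as this short check shows (the helper name `percent_reduction` is just for this example):

```python
def percent_reduction(before, after):
    """Relative decrease from `before` to `after`, as a percentage."""
    return (before - after) / before * 100


print(percent_reduction(120, 15))  # 87.5
print(120 // 15)                   # 8 quotes now fit in the time one used to take
```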

Thirteen Enpal employees were using the software when dida first started working on it. Four years later, that number has grown to 150, each of whom now saves 87.5% of the time the task used to take and can spend it on other, more specialised work. Because the model is more accurate, customers receive quotes with fewer errors, which speeds up the sales process and improves the customer experience.

Read more on Govindhtech.com

0 notes

Text

FutureAnalytica helps you easily build high-quality custom machine-learning models with no coding knowledge needed. To know more, please visit -> https://lnkd.in/gmqywHVR

#ml #mlmodels #machinelearning #machinelearningmodels #nocodeplatform #nocode

0 notes