#MicroK8s

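Today's documentation: microk8s

A simplified Kubernetes? Yesterday I got stuck while documenting Kubernetes, so for a change of pace I set up MicroK8s. "Set up" is a generous description: the Ubuntu Server installer now lets you select MicroK8s and Docker as install options, so the minimum configuration is already done by the time installation finishes. The document archive running on the モノづくり塾 (Monozukuri Juku) server. Search the net and you will find plenty of write-ups on this kind of thing, but following them to the letter often does not work smoothly in your own environment, and some are written so tersely, as if to say "surely you already know this much?", that it can take real effort to reach good information. This time I documented the process of actually doing it while reading the official documentation.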

Got Canonical?

Microk8s vs k3s: Lightweight Kubernetes distribution showdown

If you run Kubernetes in a home lab, you may be looking for a lightweight Kubernetes distribution. Two distributions that stand out are MicroK8s and k3s. Let's compare MicroK8s and k3s and look at the main differences between them, focusing on memory usage, high availability, and compatibility between k3s and MicroK8s…

#container runtimes and configurations#edge computing with k3s and microk8s#High Availability in Kubernetes#k3s installation guide#kubernetes cluster resources#Kubernetes on IoT devices#lightweight kubernetes distributions#memory usage optimization#microk8s snap package tutorial#microk8s vs k3s comparison

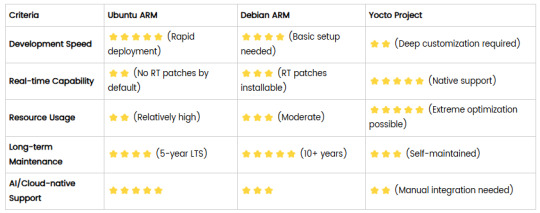

Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: MicroK8s and Docker are offered as install options, and standard Kubernetes tooling is readily available, which suits edge-cloud collaboration.

Long-term support (LTS): 5 years of security updates, meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and databases (e.g., PostgreSQL ARM-optimized) via APT and Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision models (e.g., ROS 2 + Ubuntu) on Jetson devices.

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Minimal installation requires only 128MB RAM; GUI-free versions available.

Long-term support: Up to 10+ years of security updates via Debian LTS (with commercial support).

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses.

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

Critical systems (medical/transport): Compliance with IEC 62304/DO-178C certifications.

Limitations

Older software versions (e.g., default GCC version); newer features require backports.

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports Xenomai/Preempt-RT patches for μs-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Built-in industrial-grade features like SELinux and dm-verity.

Use Cases

Custom industrial devices: Hardware that requires specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

High real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running lightweight stacks (e.g., Zephyr+FreeRTOS hybrid deployment).

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: Real-time Linux image built with Yocto (controlling robotic arms)

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.

Serverless Computing Market Growth Analysis and Forecast Report 2032

The serverless computing market was valued at USD 19.30 billion in 2023 and is expected to reach USD 70.52 billion by 2032, growing at a CAGR of 15.54% from 2024 to 2032.
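The growth rate implied by those two endpoints can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below is only a sanity check: it assumes nine annual compounding periods (2023 to 2032), and the helper name `cagr_percent` is hypothetical. It needs nothing beyond a POSIX shell and awk.

```shell
# Hypothetical helper: compound annual growth rate, in percent.
# Usage: cagr_percent START_VALUE END_VALUE YEARS
cagr_percent() {
    awk -v s="$1" -v e="$2" -v n="$3" \
        'BEGIN { printf "%.2f\n", (exp(log(e / s) / n) - 1) * 100 }'
}

# Figures from the paragraph above: USD 19.30 billion (2023) and
# USD 70.52 billion (2032), assumed here to span 9 annual periods.
cagr_percent 19.30 70.52 9
```

With these inputs the result lands within about a tenth of a percentage point of the quoted 15.54%; the small gap may come from rounding or from a slightly different base year in the report.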

The serverless computing market has gained significant traction over the last decade as organizations increasingly seek to build scalable, agile, and cost-effective applications. By allowing developers to focus on writing code without managing server infrastructure, serverless architecture is reshaping how software and cloud applications are developed and deployed. Cloud service providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are at the forefront of this transformation, offering serverless solutions that automatically allocate computing resources on demand. The flexibility, scalability, and pay-as-you-go pricing models of serverless platforms are particularly appealing to startups and enterprises aiming for digital transformation and faster time-to-market.

Serverless adoption is expected to keep rising, driven by the surge in microservices architecture, containerization, and event-driven application development. The market is being shaped by growing demand for real-time data processing, simplified DevOps processes, and enhanced productivity. As cloud-native development becomes more prevalent across industries such as finance, healthcare, e-commerce, and media, serverless computing is evolving from a developer convenience into a strategic advantage. Through 2032, organizations are expected to keep shifting toward Function-as-a-Service (FaaS) and Backend-as-a-Service (BaaS) to streamline development and reduce operational overhead.

Key Market Players:

AWS (AWS Lambda, Amazon S3)

Microsoft (Azure Functions, Azure Logic Apps)

Google Cloud (Google Cloud Functions, Firebase)

IBM (IBM Cloud Functions, IBM Watson AI)

Oracle (Oracle Functions, Oracle Cloud Infrastructure)

Alibaba Cloud (Function Compute, API Gateway)

Tencent Cloud (Cloud Functions, Serverless MySQL)

Twilio (Twilio Functions, Twilio Studio)

Cloudflare (Cloudflare Workers, Durable Objects)

MongoDB (MongoDB Realm, MongoDB Atlas)

Netlify (Netlify Functions, Netlify Edge Functions)

Fastly (Compute@Edge, Signal Sciences)

Akamai (Akamai EdgeWorkers, Akamai Edge Functions)

DigitalOcean (App Platform, Functions)

Datadog (Serverless Monitoring, Real User Monitoring)

Vercel (Serverless Functions, Edge Middleware)

Spot by NetApp (Ocean for Serverless, Elastigroup)

Elastic (Elastic Cloud, Elastic Observability)

Backendless (Backendless Cloud, Cloud Code)

FaunaDB (Serverless Database, FaunaDB Functions)

Scaleway (Serverless Functions, Object Storage)

8Base (GraphQL API, Serverless Back-End)

Supabase (Edge Functions, Supabase Realtime)

Appwrite (Cloud Functions, Appwrite Database)

Canonical (Juju, MicroK8s)

Market Trends

Several emerging trends are driving the momentum in the serverless computing space, reflecting the industry's pivot toward agility and innovation:

Increased Adoption of Multi-Cloud and Hybrid Architectures: Organizations are moving beyond single-vendor lock-in, leveraging serverless computing across multiple cloud environments to increase redundancy, flexibility, and performance.

Edge Computing Integration: The fusion of serverless and edge computing is enabling faster, localized data processing—particularly beneficial for IoT, AI/ML, and latency-sensitive applications.

Advancements in Developer Tooling: The rise of open-source frameworks, CI/CD integration, and observability tools is enhancing the developer experience and reducing the complexity of managing serverless applications.

Serverless Databases and Storage: Innovations in serverless data storage and processing, including event-driven data lakes and streaming databases, are expanding use cases for serverless platforms.

Security and Compliance Enhancements: With growing concerns over data privacy, serverless providers are focusing on end-to-end encryption, policy enforcement, and secure API gateways.

Market Segmentation:

By Enterprise Size

Large Enterprise

SME

By Service Model

Function-as-a-Service (FaaS)

Backend-as-a-Service (BaaS)

By Deployment

Private Cloud

Public Cloud

Hybrid Cloud

By End-user Industry

IT & Telecommunication

BFSI

Retail

Government

Industrial

Market Analysis

The primary growth drivers include the widespread shift to cloud-native technologies, the need for operational efficiency, and the rising number of digital-native enterprises. Small and medium-sized businesses, in particular, benefit from the low infrastructure management costs and scalability of serverless platforms.

North America remains the largest regional market, driven by early adoption of cloud services and strong presence of major tech giants. However, Asia-Pacific is emerging as a high-growth region, fueled by growing IT investments, increasing cloud literacy, and the rapid expansion of e-commerce and mobile applications. Key industry verticals adopting serverless computing include banking and finance, healthcare, telecommunications, and media.

Despite its advantages, serverless architecture comes with challenges such as cold start latency, vendor lock-in, and monitoring complexities. However, advancements in runtime management, container orchestration, and vendor-agnostic frameworks are gradually addressing these limitations.

Future Prospects

The future of the serverless computing market looks exceptionally promising, with innovation at the core of its trajectory. By 2032, the market is expected to be deeply integrated with AI-driven automation, allowing systems to dynamically optimize workloads, security, and performance in real time. Enterprises will increasingly adopt serverless as the default architecture for cloud application development, leveraging it not just for backend APIs but for data science workflows, video processing, and AI/ML pipelines.

As open standards mature and cross-platform compatibility improves, developers will enjoy greater freedom to move workloads across different environments with minimal friction. Tools for observability, governance, and cost optimization will become more sophisticated, making serverless computing viable even for mission-critical workloads in regulated industries.

Moreover, the convergence of serverless computing with emerging technologies—such as 5G, blockchain, and augmented reality—will open new frontiers for real-time, decentralized, and interactive applications. As businesses continue to modernize their IT infrastructure and seek leaner, more responsive architectures, serverless computing will play a foundational role in shaping the digital ecosystem of the next decade.

Access Complete Report: https://www.snsinsider.com/reports/serverless-computing-market-5510

Conclusion

Serverless computing is no longer just a developer-centric innovation—it's a transformative force reshaping the global cloud computing landscape. Its promise of simplified operations, cost efficiency, and scalability is encouraging enterprises of all sizes to rethink their application development strategies. As demand for real-time, responsive, and scalable solutions grows across industries, serverless computing is poised to become a cornerstone of enterprise digital transformation. With continued innovation and ecosystem support, the market is set to achieve remarkable growth and redefine how applications are built and delivered in the cloud-first era.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Getting Started with Local Kubernetes: Developing Microservices with MicroK8s

Overview: In the world of modern software development, microservices architecture has become increasingly popular for its scalability and maintainability. As developers, we often need to run and test these microservices locally before deploying them to a cloud-based Kubernetes cluster. Let's get started setting up a local Kubernetes environment using MicroK8s, a lightweight, single-package…

MicroK8s Automated Kubernetes Install with new Portainer Feature - Virtualization Howto

How to install Kubernetes on Raspberry Pi?

Installing Kubernetes on a Raspberry Pi: does this sound crazy? It is possible. Several lightweight, low-footprint Kubernetes platforms are now available that can be installed on low-spec devices. One of the best and easiest ways to set up a single-node miniature version of Kubernetes is MicroK8s. MicroK8s is an open-source version of fully conformant Kubernetes…

Canonical announced the launch of gopaddle, the Low-Code Internal Developer Platform, as a community addon for MicroK8s edge cloud.

How to deploy the Kubernetes WebUI with MicroK8s

Looking for a web-based tool to manage Microk8s? Look no further than the Kubernetes dashboard.

If you have anything to do with IT, Kubernetes needs no introduction. However, you might not know that there’s a…

Comprehensive Kubernetes tutorial.

Install Microk8s: Ultimate Beginners Configuration Guide

MicroK8s is one of the coolest small Kubernetes distros you can play around with, and it is not just for playing around: it is built for running production workloads in the cloud. It can also serve as the platform you use in your home lab for running Kubernetes and getting familiar with it. Let's look at MicroK8s for beginners and how it can be installed and configured…

Techshort: What is microk8s?

microk8s is Kubernetes, installed locally! microk8s is designed to be a fast and lightweight upstream Kubernetes install, isolated from your host but not via a virtual machine. This isolation is achieved by packaging all the upstream binaries for Kubernetes, Docker.io, iptables, and CNI in a single application container.

What I have learned is that if you have been a user of Docker then Kub…

@tototavros replied to your text post

what does kubectl do, and would you recommend the setup you have?

Kubernetes.

Tiny 4-node hardware Kubernetes installation that I can test stuff out on without paying the man (AWS) his money.

As for the second half, sorta? It sort of evolved from a 3-monitor, 2.5 computer setup (Desktop + work/home laptop) into the monstrosity it is today over the last year or so when I kept having spare parts from building the *last* computer.

So the history is:

1) 2011 desktop

2) 2013 laptop can no longer really keep up.

3) #1 dies in 2021 and is replaced by gaming desktop.

4) #3 doesn't play nice with Linux and #2 is old, so let's re-use the old case/GPU and some spare parts and throw together a cheap AM4 Linux build.

5) #4 doesn't have enough cores to run all my VMs, so we double the cores and 4x the RAM, take the CPU/RAM and build in a Hyte Revolt 3 build for a LAN box.

6) And then the Arch build is just a fun APU build with a 5700G.

7) New work laptop shows up.

Pros: A set of $100 KVMs and $20 USB switch beats having multiple multiple monitor setups? Most monitors have 2 HDMI in and 1 Displayport, so that's 6 possible computers on all my monitors and only four of my eight non-server-based computers use all the monitors so.

I can be so so lazy and also when I'm doing random Linux maintenance, swap back and forth between two or three computers at once.

Cons: Despite having set this all up over a year or two, I still forget which of the 6 computers plugged into the Little Bears is which and then there's no sound.

There is *so so so* much maintenance. You're not just running apt-get, you're running apt-get on N+2 OS's installed across half a dozen computers and half a dozen bootable USB's aside. You're not just installing Slack and rejoining your , you're doing this on half a dozen serious computers. You're not just trying to convince the Raspberry Pi to run 1080p instead of 4k because lol Pis cannot do that, you're doing it half a dozen times on three different OS's. You're not updating Lightroom, you're updating Lightroom on 3 computers.

Related to that, the thing about using Windows 10, OS X, and 4 different flavors of Linux is that I am very very broad and not terribly deep at all.

Also related to that, I'm horribly invested in the cloud.

Am I happy with it? If I kept computers:

Linux desktop, Windows desktop, (2) Mac laptop(s) is really a nice 3(4) computer setup and I think I'll maintain this for the rest of my life.

I love my Retropie and my Pihole, I think I'd skip the rest and run microk8s locally on a big 59X0x or 12900k build instead.

/The downside of spending several thousand dollars on a computer is that you spent several thousand dollars on a computer. The upside is that you get a completely functional decade out of them even if the end of the decade is a bit glitchy.

Kubernetes is a free and open-source orchestration tool that has been widely adopted in modern software development. It lets you automate, scale, and manage application deployments. Applications normally run in containers, with the workloads distributed across the cluster. Containers suit the microservices architecture, in which applications are immutable, portable, and optimized for resource usage.

Kubernetes has several distributions, including:

OpenShift: A Kubernetes distribution developed by Red Hat. It can run both on-premises and in the cloud.

Google Kubernetes Engine: A simple and flexible Kubernetes distribution that runs on Google Cloud.

Azure Kubernetes Service: A cloud-only Kubernetes distribution for the Azure cloud.

Rancher: A Kubernetes distribution focused on multi-cluster deployments. It is similar to OpenShift but integrates Kubernetes with several other tools.

Canonical Kubernetes: Developed by Canonical (the company that develops Ubuntu Linux). It is an umbrella for two CNCF-certified Kubernetes distributions, MicroK8s and Charmed Kubernetes, and can run on-premises or in the cloud.

In this guide, we will learn how to install MicroK8s on Rocky Linux 9 / AlmaLinux 9. MicroK8s is a lightweight, enterprise-grade Kubernetes distribution. It has a small disk and memory footprint but still offers many add-ons, including Knative, Cilium, Istio, and Grafana. It is billed as the fastest multi-node Kubernetes and works on Windows, Linux, and Mac systems. MicroK8s reduces the complexity and time involved in deploying a Kubernetes cluster. It is preferred for the following reasons:

Simplicity: It is simple to install and manage, with a single-package install that bundles all dependencies.

Secure: Updates are provided for all security issues and can be applied immediately or scheduled to fit your maintenance cycle.

Small: It is billed as the smallest Kubernetes distro and can be installed on a laptop or home workstation. When run on Ubuntu, it is compatible with Amazon EKS, Google GKE, and Azure AKS.

Comprehensive: It includes a large collection of manifests for common Kubernetes capabilities such as Ingress, DNS, Dashboard, clustering, monitoring, and updates to the latest Kubernetes version.

Current: It tracks upstream and releases beta, RC, and final bits the same day as upstream K8s.

Now let's plunge in!

Step 1 – Install Snapd on Rocky Linux 9 / AlmaLinux 9

MicroK8s is a snap package, so snapd is required on the Rocky Linux 9 / AlmaLinux 9 system. The commands below install it.

Enable the EPEL repository:

    sudo dnf install epel-release

Install snapd:

    sudo dnf install snapd

Once installed, create a symbolic link for classic snap support:

    sudo ln -s /var/lib/snapd/snap /snap

Add the snap binaries to $PATH:

    echo 'export PATH=$PATH:/var/lib/snapd/snap/bin' | sudo tee -a /etc/profile.d/snap.sh
    source /etc/profile.d/snap.sh

Start and enable the service:

    sudo systemctl enable --now snapd.socket

Verify that the service is running:

    $ systemctl status snapd.socket
    ● snapd.socket - Socket activation for snappy daemon
         Loaded: loaded (/usr/lib/systemd/system/snapd.socket; enabled; vendor preset: disabled)
         Active: active (listening) since Tue 2022-07-26 09:58:46 CEST; 7s ago
          Until: Tue 2022-07-26 09:58:46 CEST; 7s ago
       Triggers: ● snapd.service
         Listen: /run/snapd.socket (Stream)
                 /run/snapd-snap.socket (Stream)
          Tasks: 0 (limit: 23441)
         Memory: 0B
            CPU: 324us
         CGroup: /system.slice/snapd.socket

Set SELinux to permissive mode:

    sudo setenforce 0
    sudo sed -i 's/^SELINUX=.*/SELINUX=permissive/g' /etc/selinux/config

Step 2 – Install MicroK8s on Rocky Linux 9 / AlmaLinux 9

Once snapd has been installed, install MicroK8s with:

    $ sudo snap install microk8s --classic
    2022-07-26T10:00:17+02:00 INFO Waiting for automatic snapd restart...
    microk8s (1.24/stable) v1.24.3 from Canonical✓ installed

To run MicroK8s commands without sudo, add your user to the microk8s group and take ownership of ~/.kube:

    sudo usermod -a -G microk8s $USER
    sudo chown -f -R $USER ~/.kube

For the changes to apply, run:

    newgrp microk8s

Now verify the installation by checking the MicroK8s status:

    $ microk8s status
    microk8s is running
    high-availability: no
      datastore master nodes: 127.0.0.1:19001
      datastore standby nodes: none
    addons:
      enabled:
        ha-cluster           # (core) Configure high availability on the current node
      disabled:
        community            # (core) The community addons repository
        dashboard            # (core) The Kubernetes dashboard
        dns                  # (core) CoreDNS
        gpu                  # (core) Automatic enablement of Nvidia CUDA
        helm                 # (core) Helm 2 - the package manager for Kubernetes
        helm3                # (core) Helm 3 - Kubernetes package manager
        host-access          # (core) Allow Pods connecting to Host services smoothly
        hostpath-storage     # (core) Storage class; allocates storage from host directory
        .....

Get the available nodes:

    $ microk8s kubectl get nodes
    NAME     STATUS   ROLES    AGE     VERSION
    master   Ready    <none>   3m38s   v1.24.3-2+63243a96d1c393

Step 3 – Install and Configure kubectl for MicroK8s

MicroK8s comes with its own kubectl to avoid interfering with any version already on the system. It is invoked on the terminal as:

    microk8s kubectl

However, MicroK8s can also be configured to work with your host's kubectl. First, obtain the MicroK8s config:

    $ microk8s config
    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUREekNDQWZlZ0F3SUJBZ0lVWlZURndTSVFhOU13Rm1VdmR1S09pM0ErY3hvd0RRWUpLb1pJaHZjTkFRRUwKQlFBd0Z6...
        server: https://192.168.205.12:16443
      name: microk8s-cluster
    contexts:
    - context:
        cluster: microk8s-cluster
        user: admin
      name: microk8s
    current-context: microk8s
    ......

Install kubectl on Rocky Linux 9 / AlmaLinux 9:

    curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
    sudo chmod +x kubectl
    sudo mv kubectl /usr/local/bin/

Generate the required config (creating ~/.kube first in case it does not exist yet):

    cd $HOME
    mkdir -p ~/.kube
    microk8s config > ~/.kube/config

Get the available nodes:

    $ kubectl get nodes
    NAME     STATUS   ROLES    AGE     VERSION
    master   Ready    <none>   5m35s   v1.24.3-2+63243a96d1c393

Step 4 – Add Nodes to the MicroK8s Cluster

For improved performance and high availability, you can add nodes to the Kubernetes cluster. On the master node, allow the required ports through the firewall. firewall-cmd takes one port per --add-port option, so the command relies on Bash brace expansion to generate one option per port:

    sudo firewall-cmd --permanent --add-port={25000,16443,12379,10250,10255,10257,10259}/tcp
    sudo firewall-cmd --reload

Also generate the command the nodes will use to join the cluster:

    $ microk8s add-node
    microk8s join 192.168.205.12:25000/17244dd7c3c8068753fe8799cf72f2ac/976e1522f4b6

    Use the '--worker' flag to join a node as a worker not running the control plane, eg:
    microk8s join 192.168.205.12:25000/17244dd7c3c8068753fe8799cf72f2ac/976e1522f4b6 --worker

    If the node you are adding is not reachable through the default interface you can use one of the following:
    microk8s join 192.168.205.12:25000/17244dd7c3c8068753fe8799cf72f2ac/976e1522f4b6

Install and configure MicroK8s on the Nodes

Install MicroK8s on each node just as in Steps 1 and 2. After installing it, run the following commands on the node:

    export OPENSSL_CONF=/var/lib/snapd/snap/microk8s/current/etc/ssl/openssl.cnf
    sudo firewall-cmd --permanent --add-port={25000,10250,10255}/tcp

    sudo firewall-cmd --reload

Now run the join command generated on the master to add the node to the MicroK8s cluster:

    $ microk8s join 192.168.205.12:25000/17244dd7c3c8068753fe8799cf72f2ac/976e1522f4b6 --worker
    Contacting cluster at 192.168.205.12
    The node has joined the cluster and will appear in the nodes list in a few seconds.
    Currently this worker node is configured with the following kubernetes API server endpoints:
        - 192.168.205.12 and port 16443, this is the cluster node contacted during the join operation.
    If the above endpoints are incorrect, incomplete or if the API servers are behind a loadbalancer please update
    /var/snap/microk8s/current/args/traefik/provider.yaml

Once added, check the available nodes:

    $ kubectl get nodes
    NAME     STATUS   ROLES    AGE     VERSION
    master   Ready    <none>   41m     v1.24.3-2+63243a96d1c393
    node1    Ready    <none>   7m52s   v1.24.3-2+63243a96d1c393

To remove a node from the cluster, run the command below on that node:

    microk8s leave

Step 5 – Deploy an Application with MicroK8s

Deploying an application in MicroK8s works the same way as in other Kubernetes distros. To demonstrate this, we will deploy Nginx:

    $ kubectl create deployment webserver --image=nginx
    deployment.apps/webserver created

Verify the deployment:

    $ kubectl get pods
    NAME                         READY   STATUS    RESTARTS   AGE
    webserver-566b9f9975-cwck4   1/1     Running   0          28s

Step 6 – Deploy Kubernetes Services on MicroK8s

To make the deployed application accessible, expose the deployment using a NodePort service:

    $ kubectl expose deployment webserver --type="NodePort" --port 80
    service/webserver exposed

Get the service port:

    $ kubectl get svc webserver
    NAME        TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    webserver   NodePort   10.152.183.89   <none>        80:30281/TCP   29s

Try accessing the application in a browser using the exposed port (30281 in the output above) on a node's IP address.

Step 7 – Scaling applications on MicroK8s

Scaling means creating replicas of a deployment's pods for high availability. Kubernetes relies on this feature to spread requests across pods and handle more traffic. To create replicas, use the command below:

    $ kubectl scale deployment webserver --replicas=4
    deployment.apps/webserver scaled

Get the pods:

    $ kubectl get pods
    NAME                         READY   STATUS    RESTARTS   AGE
    webserver-566b9f9975-cwck4   1/1     Running   0          8m40s
    webserver-566b9f9975-ts2rz   1/1     Running   0          28s
    webserver-566b9f9975-t656s   1/1     Running   0          28s
    webserver-566b9f9975-7z6zq   1/1     Running   0          28s

It is that simple!

Step 8 – Enabling the MicroK8s Dashboard

The dashboard provides an easy way to manage the Kubernetes cluster. Since it is an add-on, enable it with:

    $ microk8s enable dashboard dns
    Infer repository core for addon dashboard
    Infer repository core for addon dns
    Enabling Kubernetes Dashboard
    Infer repository core for addon metrics-server
    Enabling Metrics-Server
    serviceaccount/metrics-server created
    clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
    clusterrole.rbac.authorization.k8s.io/system:metrics-server created
    rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
    clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
    ......

Create the token used to access the dashboard:

    kubectl create token default

Verify that the dashboard services exist:

    $ kubectl get services -n kube-system
    NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
    metrics-server              ClusterIP   10.152.183.200   <none>        443/TCP                  77s
    kubernetes-dashboard        ClusterIP   10.152.183.116   <none>        443/TCP                  58s
    dashboard-metrics-scraper   ClusterIP   10.152.183.35    <none>        8000/TCP                 58s
    kube-dns                    ClusterIP   10.152.183.10    <none>        53/UDP,53/TCP,9153/TCP   53

Allow the port(10443) through the firewall: sudo firewall-cmd --permanent --add-port=10443/tcp sudo firewall-cmd --reload Now forward the traffic to the local port(10443) using the command: kubectl port-forward -n kube-system service/kubernetes-dashboard --address 0.0.0.0 10443:443 Now access the dashboard using the URL https://127.0.0.1:10443. In some browsers such as chrome, you may find an error with invalid certificates when accessing the dashboard remotely. On Firefox, proceed as shown Provide the generated token to sign in. On successful login, you will see the Microk8s dashboard below. From the above dashboard, you can easily manage your Kubernetes cluster. Step 9 – Enable In-built storage on Microk8s Microk8s comes with an in-built storage addon that allows quick creation of PVCs. To enable and make this storage available to use by pods, execute the below commands: export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/lib64" microk8s enable hostpath-storage Once enabled, verify if the hostpath provisioned has been created as a pod. $ kubectl -n kube-system get pods NAME READY STATUS RESTARTS AGE calico-kube-controllers-7f85f9c7b9-v7lk5 1/1 Running 0 3h42m metrics-server-5f8f64cb86-82nn2 1/1 Running 1 (165m ago) 165m calico-node-hljcb 1/1 Running 0 3h13m calico-node-sjzd2 1/1 Running 0 3h9m coredns-66bcf65bb8-m6x44 1/1 Running 0 163m dashboard-metrics-scraper-6b6f796c8d-scwtx 1/1 Running 0 163m kubernetes-dashboard-765646474b-256qb 1/1 Running 0 163m hostpath-provisioner-f57964d5f-sh4wj 1/1 Running 0 24s Also, confirm that a storage class has been created: $ kubectl get sc NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE microk8s-hostpath (default) microk8s.io/hostpath Delete WaitForFirstConsumer false 83s Now we can use the storage class above to create PVCs. Create a Persistent Volume To demonstrate if the storage class is working properly, create a PV using it. 
Create the manifest file:

    $ vim sample-pv.yml

Add the lines below to the file:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: sampe-pv
    spec:
      # Here we are asking to use our custom storage class
      storageClassName: microk8s-hostpath
      capacity:
        storage: 5Gi
      accessModes:
        - ReadWriteOnce
      hostPath:
        # Should be created upfront
        path: '/data/demo'

Create the host path with the required permissions:

    sudo mkdir -p /data/demo
    sudo chmod 777 /data/demo
    sudo chcon -Rt svirt_sandbox_file_t /data/demo

Create the PV:

    kubectl create -f sample-pv.yml

Verify the creation:

    $ kubectl get pv
    NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS        REASON   AGE
    sampe-pv   5Gi        RWO            Retain           Available           microk8s-hostpath            7s

Create a Persistent Volume Claim

Once the PV has been created, create the PVC using the StorageClass:

    vim sample-pvc.yml

Add the lines below to the file:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
      namespace: default
    spec:
      # Once again our custom storage class here
      storageClassName: microk8s-hostpath
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 5Gi

Apply the manifest:

    kubectl create -f sample-pvc.yml

Verify the creation:

    $ kubectl get pvc
    NAME     STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS        AGE
    my-pvc   Pending                                      microk8s-hostpath   13s

The claim stays Pending at this point because the storage class uses the WaitForFirstConsumer binding mode: it is bound only once a pod that uses it is scheduled.

Deploy an application that uses the PVC:

    $ vim pod.yml

Add the lines below to the file:

    apiVersion: v1
    kind: Pod
    metadata:
      name: task-pv-pod
    spec:
      volumes:
        - name: task-pv-storage
          persistentVolumeClaim:
            claimName: my-pvc
      containers:
        - name: task-pv-container
          image: nginx
          ports:
            - containerPort: 80
              name: "http-server"
          volumeMounts:
            - mountPath: "/usr/share/nginx/html"
              name: task-pv-storage

Apply the manifest:

    kubectl create -f pod.yml

Now verify that the PVC is bound:

    $ kubectl get pv
    NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS        REASON   AGE
    sampe-pv   5Gi        RWO            Retain           Bound    default/my-pvc   microk8s-hostpath            7m23s

    $ kubectl get pvc
    NAME     STATUS   VOLUME     CAPACITY   ACCESS MODES   STORAGECLASS        AGE
    my-pvc   Bound    sampe-pv   5Gi        RWO            microk8s-hostpath   98s

Step 10 – Enable Monitoring With Prometheus and Grafana

MicroK8s ships with a Prometheus add-on. It collects cluster metrics, which can then be visualized through the Grafana interface. To enable the add-on, execute:

    $ microk8s enable prometheus
    Infer repository core for addon prometheus
    Adding argument --authentication-token-webhook to nodes.
    Configuring node 192.168.205.13
    Restarting nodes.
    Configuring node 192.168.205.13
    Infer repository core for addon dns
    Addon core/dns is already enabled
    .......
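After enabling the add-on, readiness can be polled from a script rather than checked by hand. The sketch below is one possible approach, assuming the add-on deploys its pods into a namespace called monitoring and that every pod there is expected to become Ready. The function name `wait_for_pods` and the `RETRY_DELAY` variable are hypothetical. The retry loop is there because `kubectl wait` fails immediately when no pods exist yet.

```shell
#!/bin/sh
# Seconds to pause between attempts (hypothetical knob for this sketch).
RETRY_DELAY=${RETRY_DELAY:-5}

# Hypothetical helper: wait until all pods in a namespace are Ready.
# $1 = namespace, $2 = maximum number of attempts (default 10).
wait_for_pods() {
  namespace=$1
  max_attempts=${2:-10}
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    # Each attempt waits up to 60s for the Ready condition on every pod.
    if kubectl wait --for=condition=Ready pods --all \
        -n "$namespace" --timeout=60s; then
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$RETRY_DELAY"
  done
  return 1
}

# Typical use:
#   wait_for_pods monitoring 10 && echo "monitoring stack is ready"
```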
After a few minutes, verify that the required pods are up:

    $ kubectl get pods -n monitoring
    NAME                                   READY   STATUS    RESTARTS      AGE
    prometheus-adapter-85455b9f55-w975k    1/1     Running   0             89s
    node-exporter-jnmmk                    2/2     Running   0             89s
    grafana-789464df6b-kt5hr               1/1     Running   0             89s
    prometheus-adapter-85455b9f55-2g9rs    1/1     Running   0             89s
    blackbox-exporter-84c68b59b8-5lkw4     3/3     Running   0             89s
    prometheus-k8s-0                       2/2     Running   1 (43s ago)   77s
    node-exporter-dzj66                    2/2     Running   0             89s
    prometheus-operator-65cdb77c59-gfk4v   2/2     Running   0             89s
    kube-state-metrics-55b87f58f6-m6rnv    3/3     Running   0             89s
    alertmanager-main-0                    2/2     Running   0             78s

To access the Prometheus and Grafana services, you need to forward them. First list the services:

    $ kubectl get services -n monitoring
    NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
    prometheus-operator     ClusterIP   None             <none>        8443/TCP                     2m31s
    alertmanager-main       ClusterIP   10.152.183.136   <none>        9093/TCP                     2m22s
    blackbox-exporter       ClusterIP   10.152.183.174   <none>        9115/TCP,19115/TCP           2m21s
    grafana                 ClusterIP   10.152.183.248   <none>        3000/TCP                     2m20s
    kube-state-metrics      ClusterIP   None             <none>        8443/TCP,9443/TCP            2m20s
    node-exporter           ClusterIP   None             <none>        9100/TCP                     2m20s
    prometheus-adapter      ClusterIP   10.152.183.173   <none>        443/TCP                      2m20s
    prometheus-k8s          ClusterIP   10.152.183.201   <none>        9090/TCP                     2m19s
    alertmanager-operated   ClusterIP   None             <none>        9093/TCP,9094/TCP,9094/UDP   93s
    prometheus-operated     ClusterIP   None             <none>        9090/TCP                     93s

Allow the ports you intend to use through the firewall:

    sudo firewall-cmd --permanent --add-port=9090/tcp --add-port=3000/tcp
    sudo firewall-cmd --reload

Now expose the Prometheus port:

    kubectl port-forward -n monitoring service/prometheus-k8s --address 0.0.0.0 9090:9090

Access Prometheus using the URL http://IP_Address:9090

For Grafana, you also need to expose the port:

    kubectl port-forward -n monitoring service/grafana --address 0.0.0.0 3000:3000

Now access the service using the URL http://IP_Address:3000

Log in with the default credentials:

    username=admin
    Password=admin

Once logged in, change the password.
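Each port-forward command above runs in the foreground and holds the terminal until it is interrupted, so running both at once needs two terminals or background jobs. The following is a rough sketch of the background-job variant, assuming the same service names and ports as above. `FORWARD_PIDS`, `start_forward` and `stop_forwards` are hypothetical names used only for this example.

```shell
#!/bin/sh
# PIDs of the background port-forward processes started by this script.
FORWARD_PIDS=""

# Hypothetical helper: forward one service from the monitoring namespace.
# $1 = service name, $2 = port (used on both the local and the service side).
start_forward() {
  kubectl port-forward -n monitoring "service/$1" \
    --address 0.0.0.0 "$2:$2" &
  FORWARD_PIDS="$FORWARD_PIDS $!"
}

# Hypothetical helper: stop every forward started by start_forward.
stop_forwards() {
  # Unquoted on purpose so that each PID becomes a separate word.
  for pid in $FORWARD_PIDS; do
    kill "$pid" 2>/dev/null || true
  done
  FORWARD_PIDS=""
}

# Typical use:
#   start_forward prometheus-k8s 9090
#   start_forward grafana 3000
#   trap stop_forwards EXIT INT TERM
#   wait
```

Binding to 0.0.0.0 exposes the forwards on every interface of the host, which is what the guide does; a narrower address may be preferable outside a lab setup.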

Now open the dashboards and visualize graphs. Navigate to Dashboards -> Manage -> Default and select the dashboard to load, for example the one for the Kubernetes API or the one for Kubernetes namespace networking.

Final Thoughts

That marks the end of this detailed guide on how to install MicroK8s Kubernetes on Rocky Linux 9 / AlmaLinux 9. You should now also know how to use MicroK8s to set up and manage a Kubernetes cluster.
