#Modulator/Demodulator

Explore tagged Tumblr posts

Text

#I am not a robot#I'm not a robot#Modulator/Demodulator#netart#net art#captcha#captcha code#internet#post internet#webcore#internetcore#electronic#spoken word#computer#digital#world wide web#Berlin#Bandcamp

0 notes

Text

Radio frequency modulator, rf modulator, rf balanced demodulators, balanced

700MHz-850MHz 1.7GHz-1.9GHz 150Mbps MultiConnect® rCell 100 Series Cellular Router

1 note

Text

https://www.futureelectronics.com/p/semiconductors--wireless-rf--rf-modules-solutions--gps/max-8q-0-u-blox-3122418

RF Modules, Digital rf modulator, Proprietary RF Module, Radio frequency module

MAX-8 Series 3.6 V u-blox 8 GNSS TCXO ROM Green 9.7x10.1 mm LCC Module

#u-blox#MAX-8Q-0#Wireless & RF#RF Modules & Solutions#GPS#Digital rf modulator#Proprietary RF Module#Radio frequency#USB Adapter#Bluetooth transmitter module#Balanced modulator#Demodulator#Bluetooth Accessories#Transceiver radio waves

1 note

Text

Wireless rf frequency, digital audio mixer circuit programming, RFID capability

NT3H2111 Series 3.6 V 13.56 MHz Surface Mount RFID Transponder - XQFN-8

#Wireless & RF#RF Modules & Solutions RFID#NT3H2111W0FHKH#NXP#Wireless rf frequency#digital audio mixer circuit programming#RFID capability#rf control systems#Wireless detectors circuit#remote control#Digital rf modulator#demodulator

1 note

Text

A leap of faith and physics

We thought for a civilization to form, one needed liquid water, a stable planet with a hot core, and tardium crystals. Apparently, this is not so.

Because we just received a vibromessage over the tachyon network from an unknown source.

Which in itself would not be too unusual. Plenty of newly realized civilizations figure out how to configure tardium to send tachyon messages across isospace. Hoping someone will answer. We always do. It always takes some time to go from simple repeating messages to understanding one another. Most civilizations don't come up with the galactic standard modulation on their own. Nor do we know their form of communication all that well, language, culture, all of that.

First contact is always a lengthy affair, until the new species is integrated into the intergalactic community. Then follows the exchange of knowledge and culture, the setting up of historical archives and sharing of starcharts. Since light travels only at luxionic speed, the charts provide a valuable look at the past. Once the new civilization has been caught up to date, things tend to settle. Updates are few and far between, and culture tends to somewhat homogenize. Not completely, of course, as everyone has different living circumstances, but with all the exchange between us, some settling is bound to happen.

But we know where tardium reserves are, have felt the reverb of our scans, we know where civilizations could potentially pop up. The message we received was unusual not because its source was unknown, but because it came from a sector without any sufficient tardium deposits.

That... shouldn't even be possible!

The signal is also a bit noisy. Strange. Usually, the bigger the tardium array, the more self-stabilization should occur. And for interstellar communication, you tend to need quite large arrays. So then why was there so much noise?

It was clearly a signal, and according to the triangulators, it came from the outer third of a dark spiral galaxy. We call them that, since they were never really observed, at least not with any isocartography. We only know they're there due to shared star charts. No idea what's going on with them at the current isotime. We can't know, without any tardium resonance to pick up.

Anyway, of course we answered. Their signal had been prime numbers, if we demodulated it correctly, followed by things we couldn't really make sense of. It was standard practice to begin communications with mathematics, and fundamental harmonics. It's strange that they did that right away, but not unheard of. We sent back primes, and then a couple of playful harmonics. Music. What we received back was weird, because we thought it was music, but it wasn't.

It turned out to be a starchart, and not just any kind. Pulsars. We sent back a chart of their galaxy, as reconstructed from several older starcharts. Then, we waited for their answer. And waited. And waited. An entire solar cycle (of our species) later, we finally got another answer.

And it just would not stop. We recognized it was a series of images, or rather, rapid successions of images, together with harmonics on a different band as well. This was video! The footage depicted a bipedal species, with symbolics next to different features. The images cycled through different body parts, with different descriptions. We had a really hard time catching and saving all the data, a task which had to be offloaded to the communal computation grid, as our own planet simply did not have the capacity to do it alone. This should have tipped us off to what we were going to be dealing with, but it didn't.

We continued, almost business as usual, just a fair bit faster. Then objects were being shown, often together with the bipedals, and their corresponding glyphics were depicted right next to them. Also, each image was accompanied by a sound file. They really made learning their language easy for us. We learned that they called themselves Humans, and their home was Earth, a planet orbiting a yellow star. They were a surface dwelling species! Those are pretty rare, as most cannot survive the exposure to open space for some reason. We then sent back images and glyphics of our own, matching them in their intent. We sent images of life forms, images of our own body parts, images of objects and always accompanied by isostandard glyphics.

Usually, once communication has come to a basic understanding, the exchange of culture would begin.

But the Humans had started out with primes and starcharts, so of course, their next communication wasn't about culture. We... honestly didn't know what exactly it was, for a while. Until some of the mathematicians from across the network found patterns. They were sharing mathematics with us!

Eager to help, we sent back entire databases full of insights. They requested more soon. So we sent more. And more. And more. We wondered how they could even store all that we sent them. We asked. They sent back something we didn't understand. We hoped the mathematicians could figure it out, but nope.

Eventually, we sent steam engine configurations, as well as the corresponding heating and shunting tardion-arrays used to power them. They sent back their own designs for steam engines. And other engines that seemed similar, but shouldn't work with steam. The machine configurations, piston layouts and such, were fairly primitive. As was to be expected from a new species. But they never sent us schematics of their heating or shunting arrays. When we asked how they kept things cool without shunting arrays, they sent back another steam engine. But, when we called it that, they corrected us. What they had shown us was a heat pump. They used the opposite effect, instead of creating movement from a temperature difference, they created a temperature difference from movement. We asked them why they wouldn't just use shunting arrays. They asked what those were.

And this is how we found out why they were in dark space. Why their signal was so noisy. And why they had never depicted heating or shunting arrays in their schematics.

They had practically no tardium. They simply did not have enough of it to make arrays, as we thought all civilizations do. The largest piece of tardium they had was the centerpiece of a gigantic machine. It was about the size of a human "nail", which is a vestigial claw originally used for superior grip on one of the native plant species of their planet.

We did not know how to respond. We could not comprehend how a civilization could form without tardium crystals. They asked us if we knew where more could be found, preferably near them. We didn't understand what they meant. Then they asked us how to locate reserves. We gave them the modulations that we use to scan for the crystals' tachyon resonance.

They thanked us, and ceased their questions. Then, communication became choppy. Only occasionally would we receive an exchange of culture. Their questions about mathematics and tardium crystals ceased.

---------------------

When we first received back an answer from the deep space tachyon dish, we were ecstatic. And shocked. And kind of in disbelief. Nobody had really known if it would work. Still, everyone in the control room agreed that we should make sure it was really a signal, before we dropped that bombshell to the public.

We focused a couple more dyson collectors onto the dish, and changed the signal. Instead of primes and harmonics, this time, we encoded the pulsar chart, multiple times, in every encoding we could think of, and sent them all.

Only a few hours later, we received another signal from the previous location. The encoding was our own, easily recognized. With shaky hands, I pressed the 'open image file' button.

When I was greeted by a picture of the Milky Way, everyone in the room lost their collective shit.

"Holy Fuck!" "Oh my god." Someone fainted. Multiple people cried. Nobody minded any of that.

~~~

The prime administrator creased her brow. The direct line was ringing. This better be important. "Hello? Prime administrator here." From the other end, she could hear someone suppressing tears, and whimpering: "Tachyon dish project operator here. We... we."

"Everything ok over there?", she asked. What could possibly have happened that had the scientist crying? Was there an accident with the dyson swarm or something? Did people die? No, she trusted the operator of that experiment to not call unless it mattered to the entire human race.

A wet chuckle. "Better than ok. Ma'am? We... We're not alone."

Not alone? What does that...? Oh. OH! oh

"Are... you sure?" Dammit. Now even her own voice was shaking.

"We sent a pulsar chart and got a beautiful image of the Milky Way back, in the same image file type. Pretty sure at this point."

~~~

The following year was downright insane. The mere confirmation that we weren't alone in the universe spurred us all on. Artists did their best to show all sides of us, scientists got together to determine what questions we should ask, even the long obsolete military awakened from its slumber, churning out tactical analyses of possible tachyon based weaponry, and how to defend against it.

Some people were panicking, others in denial, but most relished the opportunities that might open up.

Policies were made, on how to handle aliens that would come to the solar system. Tachyon mechanics, an until now unproven theory, made leaps and bounds, scientists working as hard as they could to understand it better.

The dyson collectors were turned to multiple new research projects, powering large machines that channeled vibrations into the tiny crystals we had found to pick up on tachyon vibrations. The largest one that we had discovered while asteroid mining was still in the communication dish, but the smaller shrapnel, a couple millimeters in size at the most, were being utilized.

Eventually, after a year was up, communications resumed. The linguists sent data, and worked closely with the astronomers that had made the initial transmissions. We also received back data, and the scientific community devoured every piece of information. We learned their language as fast as we could.

But our requests for the sharing of scientific knowledge appeared to fall on deaf ears. Whenever we sent natural constants, or physical laws, we got nothing back. Well, almost. Our prodding did yield one answer: How to locate the crystals. Which were apparently common? Though our scans painted a different picture. We did have some scattered about the asteroid belt, yes. But the largest one we detected was only 3cm in diameter. A little bigger than the one in the communication dish, sure, but not that much.

We came to accept this, figuring that maybe there was some kind of prime directive that forbade the sharing of further technology. Actually, perhaps we leaned a bit too far into our Star Trek analogy. Because most of us would not get it out of our heads to try to build a warp drive. Well, not really a spacetime bending drive, but something that could go faster than light. Because, obviously, thanks to our discovery, we now knew that while the speed of light may be finite, the speed of information was not.

-----------------------------

After ten cycles of cultural exchange, the humans sent a request for isocoordinates of the nearest known civilization to their own. This request kind of drowned in the noise, we didn't really think about it much, we just transmitted our coordinates. Turns out, the nearest ones were us, in what the Humans call the Andromeda Galaxy.

Shortly after the request, they went totally vibrosilent. We tried and tried to contact them, but to no avail. This, while tragic, was a reality of civilization, though. Extinction events could always happen. Sometimes the affected civilization would realize in advance and send a couple warnings, but nobody could help them from afar, of course. So that's what we figured happened to Humanity. Maybe their sun blew up, or they got knocked away from it by a passing object, anything could have happened.

Many cycles passed. I had aged, my once young and springy exoskeleton now wobbly and soft, though my mind was still sharp enough to crew a communications array.

None of us were prepared for the shockwave resonating through our sensor grids. Multiple arrays straight up shattered. Luckily, as big as they were, there was nobody close to them, so no deaths. What the rest of them picked up though made no sense. We could determine there was a pulse, but no normal communication had that level of power, nor resonance.

Then, half a planetary rotation later, there was a new luminance in the sky. We were about to renew our arrays and update our starchart, when the light source moved. Toward the planet.

What?

And then, my assigned communications array resonated.

"This is the Human vessel Enterprise, calling anyone on the planet. Can you read us?" the crystal sang in choppy English, the language of the Humans. The ones we thought were extinct.

I scuttled to my post at the resonator, tuning it to reply:

"This is communications, we read you, but I don't understand? We are recovering from an unprecedented resonance pulse that shattered multiple arrays, sorry if the modulation is a bit off."

The answer was swift: "Sorry about that, our engines are a bit out of tune at this point. That pulse might have been us. Glad to hear you all down there, is anyone injured?"

"Your engines? And uh. No, nobody injured."

"Yes our engines, again, we apologize for that. But glad to know everyone is alright.

Requesting permission to land on the surface."

This was a momentous occasion, which I didn't realize until later on. The entire tachyon network would eventually refer to this exact communication as a reference time. This exact moment would come to be known as 0:0 PFJ

0 Cycles and 0 rotations Past First Jump.

The only thing I remember is absently giving permission, not quite understanding what exactly they were requesting here. If I had, I would have convened with the councils beforehand.

Then, the cave began to shake. It wasn't coming from any of the arrays. It was coming from the surface.

~~~

They. They were here. The Humans were here. On the surface. Of. Of our planet. What? How?!

Most importantly, why?!

Then I remembered the stories about their exploration of the surface of their own planet. How they had sent people to their poles, despite their biology not being fit to survive there. And several did die! How they climbed mountains. Made pressurized vessels to dive below the surface of their open ocean. We asked them why. They told us.

I realized at that moment, not how they were here. But why.

"Because we could, and no human had been there before," they had answered back then.

431 notes

Text

Usurp Synapse - Modulator/Demodulator

15 notes

Text

Communication between the SR 71 and the KC 135Q had to be secure. The enemy would listen in vain, trying to find their location and their next rendezvous with a tanker. The RSO had a modulator/demodulator (modem) control panel, on which he punched in a five-digit code to secure communications. A KC 135Q equipped with the same UHF radio, and set to the correct mission code and address number, could receive the SR 71's range as well. There was also an interconnected phone over which the crewmembers of both the SR 71 and the KC 135Q could talk to each other during refueling.

The tankers were always a welcome sight to the SR 71 crew. After a while, the cockpit would get a little warm. After refueling, the cockpit was noticeably cooler. Tankers could also give life-saving information to the crewmembers, because the tanker crew could look at the airplane and notice if something was wrong with it (an engine on fire, yes, that happened). Thank you to all KC 135 pilots and crewmembers for helping keep the SR 71 going all those years.

Written by Linda Sheffield Miller

@Habubrats71 via X

23 notes

Note

✨- How did you come up with the OC’s name?

For Hexadecimal and Modem, I'm quite curious :33

waaah tyyy...

ok so Usually names for my ocs come from behindthename (my personal lord and savior.....) but for Days before officially making them i had the word 'hexadecimal' stuck in my head as a name cuz i loveee weird words as names <33 then i was like "ok well obviously the other half of the duo has to have an equally as wacky name" and . tbh idk where modem came from ? i think i literally googled "long words" and "modulator demodulator" was rlly good :]] i Have since shortened it back down to modem (that was originally a nickname) but the essence is there.... and i think the names are silly !! i like em :]]

#styx says#styx ocs#modem#hexadecimal#THANK YOUUUU again a teehee<33#wacky names for ocs is great naming them is one of my favorite parts

2 notes

Text

Impact of Digital Signal Processing in Electrical Engineering - Arya College

Arya College of Engineering & I.T. is the best college of Jaipur. Digital Signal Processing (DSP) has become a cornerstone of modern electrical engineering, influencing a wide range of applications and driving significant technological advancements. This comprehensive overview will explore the impact of DSP in electrical engineering, highlighting its applications, benefits, and emerging trends.

Understanding Digital Signal Processing

Definition and Fundamentals

Digital Signal Processing involves the manipulation of signals that have been converted into a digital format. This process typically includes sampling, quantization, and various mathematical operations to analyze and modify the signals. The primary goal of DSP is to enhance the quality and functionality of signals, making them more suitable for various applications.

Key components of DSP include:

Analog-to-Digital Conversion (ADC): This process converts analog signals into digital form, allowing for digital manipulation.

Digital Filters: These algorithms are used to enhance or suppress certain aspects of a signal, such as noise reduction or frequency shaping.

Fourier Transform: A mathematical technique that transforms signals from the time domain to the frequency domain, enabling frequency analysis.
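The three components listed above can be sketched in a few lines of plain Python. This is only an illustration under assumed values: the 64 Hz sampling rate, the 8-bit resolution, the 4 Hz and 20 Hz test tones, and the moving-average filter are all chosen for the example and do not come from any particular DSP system.

```python
import cmath
import math

FS = 64      # sampling rate in Hz (assumed for illustration)
N = 64       # number of samples, i.e. one second of signal
BITS = 8     # ADC resolution in bits (assumed)

def analog_input(t):
    """Stand-in for an analog signal: a 4 Hz tone plus a weaker 20 Hz tone."""
    return math.sin(2 * math.pi * 4 * t) + 0.3 * math.sin(2 * math.pi * 20 * t)

def quantize(x, bits=BITS, full_scale=2.0):
    """Round x to the nearest level of a uniform quantizer over [-full_scale, full_scale]."""
    step = 2 * full_scale / (2 ** bits)
    return round(x / step) * step

def moving_average(xs, width=4):
    """Very simple digital low-pass filter: mean of up to `width` most recent samples."""
    out = []
    for i in range(len(xs)):
        window = xs[max(0, i - width + 1): i + 1]
        out.append(sum(window) / len(window))
    return out

def dft_magnitudes(xs):
    """Normalized magnitude of the discrete Fourier transform for bins 0..N/2."""
    n = len(xs)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(xs))) / n
        for k in range(n // 2 + 1)
    ]

# ADC: sample the signal at FS and quantize each sample.
digital = [quantize(analog_input(i / FS)) for i in range(N)]

# Digital filter: suppress the higher-frequency tone.
filtered = moving_average(digital)

# Fourier transform: look at both signals in the frequency domain.
spectrum = dft_magnitudes(digital)
spectrum_filtered = dft_magnitudes(filtered)

peak_bin = max(range(1, len(spectrum)), key=lambda k: spectrum[k])
print("strongest frequency (Hz):", peak_bin * FS / N)
print("20 Hz magnitude before/after filtering:", spectrum[20], spectrum_filtered[20])
```

Because the sampling rate equals the number of samples here, each DFT bin corresponds to exactly 1 Hz, which keeps the example easy to read.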

Importance of DSP in Electrical Engineering

DSP has revolutionized the way engineers approach signal processing, offering numerous advantages over traditional analog methods:

Precision and Accuracy: Digital systems can achieve higher precision and reduce errors through error detection and correction algorithms.

Flexibility: DSP systems can be easily reprogrammed or updated to accommodate new requirements or improvements, making them adaptable to changing technologies.

Complex Processing Capabilities: Digital processors can perform complex mathematical operations that are difficult to achieve with analog systems, enabling advanced applications such as real-time image processing and speech recognition.

Applications of Digital Signal Processing

The versatility of DSP has led to its adoption across various fields within electrical engineering and beyond:

1. Audio and Speech Processing

DSP is extensively used in audio applications, including:

Audio Compression: Techniques like MP3 and AAC reduce file sizes while preserving sound quality, making audio files easier to store and transmit.

Speech Recognition: DSP algorithms are crucial for converting spoken language into text, enabling voice-activated assistants and transcription services.

2. Image and Video Processing

In the realm of visual media, DSP techniques enhance the quality and efficiency of image and video data:

Digital Image Processing: Applications include noise reduction, image enhancement, and feature extraction, which are essential for fields such as medical imaging and remote sensing.

Video Compression: Standards like H.264 and HEVC enable efficient storage and streaming of high-definition video content.

3. Telecommunications

DSP plays a vital role in modern communication systems:

Modulation and Demodulation: DSP techniques are used in encoding and decoding signals for transmission over various media, including wireless and optical networks.

Error Correction: Algorithms such as Reed-Solomon and Turbo codes enhance data integrity during transmission, ensuring reliable communication.
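To make the two telecommunications items above more concrete, here is a minimal sketch that combines binary phase-shift keying (BPSK) with a 3x repetition code. Both are assumptions chosen for brevity: BPSK is just one of many modulation schemes, and the repetition code is a far simpler stand-in for the Reed-Solomon and Turbo codes mentioned above, not an implementation of them. The symbol length, carrier frequency, and noise level are also made up for the example.

```python
import math
import random

SAMPLES_PER_SYMBOL = 16   # assumed oversampling factor
CYCLES_PER_SYMBOL = 2     # carrier cycles inside one symbol (assumed)

def carrier(i):
    """Carrier sample at position i within a symbol."""
    return math.cos(2 * math.pi * CYCLES_PER_SYMBOL * i / SAMPLES_PER_SYMBOL)

def repeat_encode(bits, n=3):
    """Repetition code: send every bit n times."""
    return [b for b in bits for _ in range(n)]

def repeat_decode(bits, n=3):
    """Majority vote over each group of n received bits."""
    return [1 if 2 * sum(bits[i:i + n]) > n else 0 for i in range(0, len(bits), n)]

def bpsk_modulate(bits):
    """Map 1 to +carrier and 0 to -carrier (a 180 degree phase shift)."""
    wave = []
    for b in bits:
        sign = 1.0 if b else -1.0
        wave.extend(sign * carrier(i) for i in range(SAMPLES_PER_SYMBOL))
    return wave

def bpsk_demodulate(wave):
    """Correlate each symbol with the carrier; the sign of the result is the bit."""
    bits = []
    for start in range(0, len(wave), SAMPLES_PER_SYMBOL):
        corr = sum(wave[start + i] * carrier(i) for i in range(SAMPLES_PER_SYMBOL))
        bits.append(1 if corr > 0 else 0)
    return bits

rng = random.Random(0)                                   # fixed seed for repeatability
message = [1, 0, 1, 1, 0, 0, 1, 0]
transmitted = bpsk_modulate(repeat_encode(message))
received = [s + rng.gauss(0, 0.5) for s in transmitted]  # additive channel noise
received_bits = bpsk_demodulate(received)
received_bits[4] ^= 1                                    # force one symbol error
decoded = repeat_decode(received_bits)
print("message recovered:", decoded == message)
```

The forced bit flip shows the role of the error-correcting layer: the demodulator alone would hand over a wrong symbol, but the majority vote still recovers the original bit.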

4. Radar and Sonar Systems

DSP is fundamental in radar and sonar applications, where it is used for:

Object Detection: DSP processes signals to identify and track objects, crucial for air traffic control and maritime navigation.

Environmental Monitoring: Sonar systems utilize DSP to analyze underwater acoustics for applications in marine biology and oceanography.

5. Biomedical Engineering

In healthcare, DSP enhances diagnostic and therapeutic technologies:

Medical Imaging: Techniques such as MRI and CT scans rely on DSP for image reconstruction and analysis, improving diagnostic accuracy.

Wearable Health Monitoring: Devices that track physiological signals (e.g., heart rate, glucose levels) use DSP to process and interpret data in real time.

Trends in Digital Signal Processing

As technology evolves, several trends are shaping the future of DSP:

1. Integration with Artificial Intelligence

The convergence of DSP and AI is leading to smarter systems capable of learning and adapting to user needs. Machine learning algorithms can enhance traditional DSP techniques, enabling more sophisticated applications in areas like autonomous vehicles and smart home devices.

2. Increased Use of FPGAs and ASICs

Field-Programmable Gate Arrays (FPGAs) and Application-Specific Integrated Circuits (ASICs) are increasingly used for implementing DSP algorithms. These technologies offer high performance and efficiency, making them suitable for real-time processing in demanding applications such as telecommunications and multimedia.

3. Internet of Things (IoT)

The proliferation of IoT devices is driving demand for efficient DSP solutions that can process data locally. This trend emphasizes the need for low-power, high-performance DSP algorithms that can operate on resource-constrained devices.

4. Cloud-Based DSP

Cloud computing is transforming how DSP is implemented, allowing for scalable processing power and storage. This shift enables complex signal processing tasks to be performed remotely, facilitating real-time analysis and data sharing across devices.

Conclusion

Digital Signal Processing has significantly impacted electrical engineering, enhancing the quality and functionality of signals across various applications. Its versatility and adaptability make it a critical component of modern technology, driving innovations in audio, image processing, telecommunications, and biomedical fields. As DSP continues to evolve, emerging trends such as AI integration, IoT, and cloud computing will further expand its capabilities and applications, ensuring that it remains at the forefront of technological advancement. The ongoing development of DSP technologies promises to enhance our ability to process and utilize information in increasingly sophisticated ways, shaping the future of engineering and technology.

2 notes

Text

What is it?

Tell me, what exactly is regular ppl's fascination with necromancy? It's not mine. I'm not fascinated. I'm called, I'm wired for it. It's my place in the world. I love muertos and they 💖 me back. Hmm. Like, Tolkien mentioned the necromancer once in the Hobbit book? And the movie overdid it. WTH?

I think it's ppl's interest in either talking to muertos like they did during the spiritualist phases in America or it's just the thought of some life after death. I wonder why we don't see huge sales of Ouija boards anymore? Too antiquated? Maybe folks just don't believe the same things anymore. I think the internet was more of a curse than a blessing. It ruined a lot of simplicity that we had as well as robbing innocence. Ppl found information just fine without the internet.

Though, I wouldn't have met my husband. That was in 2003 though. No social media, just Yahoo and Aol. I was still on dial up internet! You know modulator demodulators - aka modems.

Anyway, I'm fucking tired and loopy, totally spun and need sleep!

Necromancy is such a rich & loaded word to just mean "divination with the dead" in Greek. Yes, studied medical Greek. I'm familiar with suffixes & combining forms, prefixes & all...very good at it, 4.0 grades. Though I need to dig out my Greek & Roman necromancy book & take a perusal. I'm more into local (American) & Latino culture, not European, Greek, or Roman culture. Egyptian is overdone. Not my potato.

Ppl on Elder Scrolls Online (ESO) bitched about having a Necromancer build for five years until they added one! My husband kicks ass as a Dark Elf Dragonknight girl character. She's magicka based. His legacy build is a Wood Elf Nightblade girl that's all stam based who used to kick major ass playing in ball groups. But..that was 7-10 years ago. He just plays at leisure now. Should see his fucking dungeon trophies! Lol! I just like ESO. Sorry! Just manic from being spun. Dangerous combo. Oh yeah, he's AD. Not a Smurf or a Red. So, why does he play girl characters? He'd rather look at a female ass when he's on his mount (riding animal). His words! Rofl! 😂

Back to necro...so sorry! Why do you think ppl love the necromancy mystique? I'm directly involved but can only see what I mentioned above. Do you think my analysis is accurate?

M.M. 💖💀💖

3 notes

Text

The TEA5767 module is built around a single-chip FM radio IC designed for low-voltage applications, making it ideal for use in embedded systems and microcontroller platforms like Arduino and other 3.3V development boards. This versatile chip includes intermediate frequency selectivity and an integrated FM demodulator. Because it is controlled over the I2C communication protocol, it is straightforward to connect to other development boards. With minimal additional components, it can function as a stand-alone radio receiver. The TEA5767 supports a frequency range of 88MHz to 108MHz, which covers the FM broadcast band used in India, Europe, and the United States. Japan's FM band sits lower, and the chip's datasheet lists a separate 76MHz to 91MHz mode for it.

2 notes

Text

Dial up modems work by modulating the digital signal into an audio signal to send down the phone line.

So they were always screaming, not just at connection, just you only got to hear it at connection.

This is why you couldn't use the phone to call at the same time, the modem was actively using the same voice frequency of the phone line!

Additional fact, modem is a portmanteau! Modulator-demodulator! Modem!
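For the curious, the "screaming" can be sketched in code. The example below uses frequency-shift keying, the scheme used by early 300 baud modems: one tone for a 0 bit and another for a 1 bit. The two tone frequencies are the Bell 103 originate-side pair; everything else (the 9600 Hz sample rate, sending raw ASCII bits with no start or stop bits) is a simplifying assumption, and later, faster dial-up modems used far more elaborate modulation than this.

```python
import cmath
import math

SAMPLE_RATE = 9600                      # samples per second (assumed, gives whole samples per bit)
BAUD = 300                              # bits per second
F_SPACE = 1070.0                        # tone for a 0 bit, in Hz
F_MARK = 1270.0                         # tone for a 1 bit, in Hz
SAMPLES_PER_BIT = SAMPLE_RATE // BAUD   # 32 samples per bit

def text_to_bits(text):
    """ASCII text to a flat list of bits, least significant bit first."""
    return [(byte >> i) & 1 for byte in text.encode("ascii") for i in range(8)]

def bits_to_text(bits):
    """Inverse of text_to_bits."""
    data = bytes(
        sum(bit << i for i, bit in enumerate(bits[k:k + 8]))
        for k in range(0, len(bits), 8)
    )
    return data.decode("ascii")

def modulate(bits):
    """Turn bits into audio samples: one tone per bit, with continuous phase."""
    samples, phase = [], 0.0
    for bit in bits:
        freq = F_MARK if bit else F_SPACE
        for _ in range(SAMPLES_PER_BIT):
            samples.append(math.sin(phase))
            phase += 2 * math.pi * freq / SAMPLE_RATE
    return samples

def tone_strength(chunk, freq):
    """How strongly `freq` is present in a chunk of audio (single-bin DFT magnitude)."""
    acc = sum(s * cmath.exp(-2j * math.pi * freq * n / SAMPLE_RATE) for n, s in enumerate(chunk))
    return abs(acc)

def demodulate(samples):
    """Turn audio samples back into bits by asking which tone is louder in each bit period."""
    bits = []
    for start in range(0, len(samples), SAMPLES_PER_BIT):
        chunk = samples[start:start + SAMPLES_PER_BIT]
        bits.append(1 if tone_strength(chunk, F_MARK) > tone_strength(chunk, F_SPACE) else 0)
    return bits

audio = modulate(text_to_bits("modem"))
print(bits_to_text(demodulate(audio)))
```

Both tones sit inside the voice band of a phone line, which is exactly why the call and the modem could not share the line.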

remember when you were 10 and you would hang out with your friends in order to Look At The Computer together like you went to their house and experienced the information superhighway together. and then leave

356K notes

Text

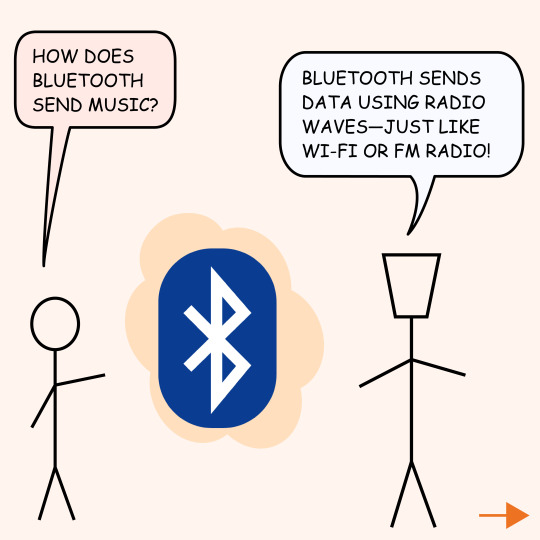

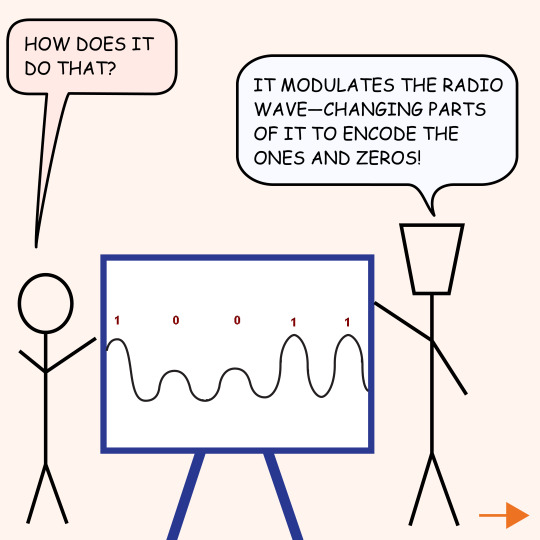

Bluetooth sending music is like sending a secret message through radio waves, but a lot more high-tech. Here’s how it works:

1. Your Phone Prepares the Sound Music starts as a digital file (MP3, AAC, FLAC, etc.). But Bluetooth can’t send big raw files directly, so it compresses them using a codec (like SBC, AAC, aptX, LDAC). The better the codec, the better the sound quality.

2. Encoding & Compression The codec takes the digital music and shrinks it while keeping the important parts. Some codecs do a better job (aptX or LDAC keep more details, while SBC loses some).

3. Staying Digital (No DAC Yet) On a wired connection, your phone's DAC chip would turn the digital data into an analog wave, which is what sound actually looks like. But Bluetooth doesn't send analog audio, so the compressed data skips the phone's DAC and stays digital all the way to the headphones.

4. Modulation & Packetization Now the digital data gets modulated into a radio wave signal. Bluetooth splits it into tiny packets and assigns each packet a specific frequency using FHSS (Frequency Hopping Spread Spectrum). It jumps between different frequencies 1,600 times per second to avoid interference.

5. Transmission Over 2.4 GHz The modulated signal is sent over the 2.4 GHz frequency band, the same range used by Wi-Fi and microwave ovens. This band is unlicensed, meaning devices can use it without a radio license.

6. Reception & Demodulation Your Bluetooth headphones receive the signal and demodulate it—basically, they extract the digital audio data from the radio wave.

7. Decompression & Digital-to-Analog Conversion The headphones decode the compressed data with the same codec, then their DAC turns the digital data into an analog signal, ready to be played by the speakers.

8. You Hear the Music! 🎧 The tiny speakers vibrate, producing the sound waves that reach your ears. And all of this happens in milliseconds!
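The packetization and frequency hopping from steps 4 and 5 can be sketched roughly like this. The 79 channels starting at 2402 MHz and the 1,600 hops per second are Bluetooth Classic figures; the rest is a simplification. In particular, real Bluetooth derives its hop sequence from the device address and clock, while this sketch just uses a seeded random generator that both sides share, and the 4-byte packet size and function names are made up for the example.

```python
import random

NUM_CHANNELS = 79                         # Bluetooth Classic channels, 1 MHz apart
BASE_MHZ = 2402                           # centre frequency of channel 0
HOPS_PER_SECOND = 1600
SLOT_US = 1_000_000 // HOPS_PER_SECOND    # time spent on each frequency, in microseconds

def hop_sequence(shared_seed, count):
    """Pseudo-random channel list; a stand-in for the real hop selection algorithm."""
    rng = random.Random(shared_seed)
    return [rng.randrange(NUM_CHANNELS) for _ in range(count)]

def packetize(payload, size):
    """Split the compressed audio bytes into small packets."""
    return [payload[i:i + size] for i in range(0, len(payload), size)]

def transmit(payload, shared_seed, packet_size=4):
    """Give every packet its own time slot and frequency."""
    packets = packetize(payload, packet_size)
    channels = hop_sequence(shared_seed, len(packets))
    return [
        {"time_us": slot * SLOT_US, "freq_mhz": BASE_MHZ + channel, "data": packet}
        for slot, (channel, packet) in enumerate(zip(channels, packets))
    ]

def receive(on_air, shared_seed):
    """The receiver hops in the same order and keeps packets found where it expects them."""
    expected = hop_sequence(shared_seed, len(on_air))
    return b"".join(
        frame["data"]
        for frame, channel in zip(on_air, expected)
        if frame["freq_mhz"] == BASE_MHZ + channel
    )

frames = transmit(b"compressed audio frame", shared_seed=1234)
for frame in frames:
    print(frame["time_us"], "us ->", frame["freq_mhz"], "MHz", frame["data"])
print(receive(frames, shared_seed=1234))
```

A listener that does not know the shared sequence would be tuned to the wrong frequency most of the time, which is also why a narrow burst of interference only costs a packet or two.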

That’s how Bluetooth delivers music wirelessly—fast, efficient, and (sometimes) high quality. 🚀

Check our Substack for more in-depth articles on such topics.

#comics#sciencecomics#webcomics#science#stem#educationalcomics#liquidbird#becurious#comicstrips#rockets#space#electronics#aircraft

0 notes

Text

PRO 1206i BUG DETECTOR

The Pro1206i is a new class of counter-surveillance device. Unlike typical searching devices, it can detect modern hidden bugs that use protocols such as Bluetooth and Wi-Fi. Such bugs, especially Bluetooth types, are practically undetectable by common RF detectors because of their very low transmitted power and special type of modulation. The Protect 1206i uses a separate channel with a high-frequency (2.44/5 GHz) pre-selector to detect and locate Bluetooth and Wi-Fi with much higher sensitivity. The unit then processes the demodulated signal to identify which protocol has been detected.

0 notes

Text

Time Series Data Exploration with Wavelet Transform

Introduction

Time series data provides insights into how variables change over time. While the Fast Fourier Transform (FFT) is useful for analyzing frequency domain information, it does not provide time-localized frequency details. Wavelet Transform (WT), on the other hand, allows for simultaneous time and frequency analysis, making it an essential tool for detecting transient events in signals.

In this post, we explore machinery vibration data using Wavelet Transform. The implementation is carried out using the Python time series package called zaman. You can find the source code in the GitHub repository.

Understanding Wavelet Transform

Wavelet Transform is similar to Fourier Transform in that it decomposes a signal into a set of basis functions. However, unlike Fourier Transform, which provides global frequency representation, Wavelet Transform captures localized frequency variations.

A wavelet is a waveform that decays at both ends. Different wavelet families exist, each defined by unique wave shapes. The transformation involves convolving a time series with a selected wavelet, which allows for analyzing specific frequency components at different time instances. This process can be repeated for various frequencies or scales to obtain a detailed time-frequency representation of the signal.
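As a rough illustration of the convolution step, here is a minimal sketch that uses NumPy only. It is not the zaman or pywt implementation: the helper names (morlet_kernel, wavelet_response), the Morlet shape parameter w0 = 6, and the toy signal (a steady 5 Hz tone with a 40 Hz burst between 1.0 s and 1.5 s) are all assumptions made for the example.

```python
import numpy as np

def morlet_kernel(freq_hz, fs, w0=6.0):
    """Complex Morlet wavelet tuned to freq_hz, sampled at fs (w0 is an assumed shape parameter)."""
    scale = w0 / (2.0 * np.pi * freq_hz)           # wavelet width in seconds
    n = int(4.0 * scale * fs)                      # keep +/- 4 widths of the envelope
    tau = np.arange(-n, n + 1) / fs
    envelope = np.exp(-0.5 * (tau / scale) ** 2)   # decays at both ends
    carrier = np.exp(1j * w0 * tau / scale)        # oscillates at freq_hz
    return envelope * carrier / np.sqrt(scale)

def wavelet_response(signal, freq_hz, fs):
    """Magnitude of the convolution of the signal with one scaled wavelet."""
    kernel = morlet_kernel(freq_hz, fs)
    return np.abs(np.convolve(signal, kernel, mode="same")) / fs

# Toy signal (assumed): 5 Hz throughout, plus a 40 Hz burst from 1.0 s to 1.5 s.
fs = 400.0
t = np.arange(int(4.0 * fs)) / fs
burst = (t >= 1.0) & (t < 1.5)
signal = np.sin(2 * np.pi * 5 * t) + burst * np.sin(2 * np.pi * 40 * t)

slow = wavelet_response(signal, 5.0, fs)    # one scale: tuned to 5 Hz
fast = wavelet_response(signal, 40.0, fs)   # another scale: tuned to 40 Hz

print("40 Hz response peaks at t = %.2f s" % t[np.argmax(fast)])
print("40 Hz response inside vs outside the burst: %.4f vs %.4f"
      % (fast[int(1.25 * fs)], fast[int(3.0 * fs)]))
```

The 40 Hz response is large only while the burst is present, while the 5 Hz response stays roughly constant over the interior of the signal. Repeating the convolution over a range of frequencies gives the full time-frequency picture.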

Key Benefits of Wavelet Transform:

Time-Frequency Analysis: Simultaneously captures frequency components and their locations in time.

Multi-Resolution Analysis: Useful for analyzing both high-frequency transients and low-frequency trends.

Feature Extraction: Effective in detecting anomalies, trends, and signal patterns.

Applications of Wavelet Transform

Wavelet Transform is widely used across various fields:

Signal Denoising: Reduces noise while preserving essential signal features.

Image Compression: Achieves high compression ratios while maintaining quality.

Speech and Image Processing: Used for feature detection, texture analysis, and pitch detection.

Data Compression: Efficiently represents data in both time and frequency domains.

Communications Systems: Applied in modulation, demodulation, and channel equalization.

Finance: Used for trend analysis, volatility modeling, and stock market predictions.

Machinery Vibration Data Analysis with Wavelet Transform

To demonstrate the effectiveness of Wavelet Transform, we use synthetically generated machinery vibration data. Under normal conditions, the data consists of frequency components at 10 Hz and 20 Hz. To simulate an anomaly, additional high-frequency components are introduced 2 seconds into the time series and last for 1 second.
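A signal like this can be sketched with NumPy alone. The post generates its data with tsgen, so the function below is only a stand-in: the name make_vibration, the sampling rate, the amplitudes, the noise level, and the anomaly frequencies (50 Hz and 80 Hz) are assumptions, chosen to match the description of 10 Hz and 20 Hz components with a 1-second high-frequency anomaly starting at 2 seconds.

```python
import numpy as np

def make_vibration(duration_s=5.0, fs=500.0, anomaly_start_s=2.0,
                   anomaly_len_s=1.0, anomaly_freqs_hz=(50.0, 80.0), seed=0):
    """Synthetic vibration signal: 10 Hz + 20 Hz baseline with a temporary high-frequency anomaly."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(duration_s * fs)) / fs

    # Normal operation: two low-frequency components (amplitudes are assumed).
    signal = np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 20 * t)

    # Anomaly: extra high-frequency components, present only inside the window.
    in_anomaly = (t >= anomaly_start_s) & (t < anomaly_start_s + anomaly_len_s)
    for f in anomaly_freqs_hz:
        signal = signal + 0.4 * in_anomaly * np.sin(2 * np.pi * f * t)

    # A little measurement noise (assumed level).
    signal = signal + 0.05 * rng.standard_normal(t.size)
    return t, signal, in_anomaly

t, signal, in_anomaly = make_vibration()
print("samples:", t.size)
print("anomaly window: %.1f s to %.1f s" % (t[in_anomaly][0], t[in_anomaly][-1] + 1 / 500.0))
```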

Steps in Data Exploration:

Time-Frequency Analysis: Wavelet Transform helps identify the presence of different frequency components in specific time ranges.

Anomaly Detection: By analyzing wavelet coefficients, we can pinpoint when an anomaly occurs.

Comparison of Pre- and Post-Anomaly Frequencies:

Before the anomaly, no significant frequencies above 20 Hz are detected.

Once the anomaly begins, wavelet coefficients reveal the presence of high-frequency components above 40 Hz.

Transition Analysis: The transformation clearly captures the shift from normal operation to an anomalous state.

The results show how Wavelet Transform provides detailed insight into the behavior of the vibration signal over time, making it an effective tool for predictive maintenance and fault detection.
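The exploration steps above can be sketched end to end in NumPy. This is not the WaveletExpl class from the post. It is a hand-rolled Morlet transform with assumed parameters (w0 = 6, analysis frequencies of 10, 20, 45, 60 and 80 Hz, a single 60 Hz anomaly component, and a threshold at half the peak high-band magnitude), meant only to show how wavelet coefficients can locate the anomaly in time.

```python
import numpy as np

def morlet_scalogram(signal, freqs_hz, fs, w0=6.0):
    """Return |wavelet coefficients| with one row per analysis frequency."""
    rows = []
    for f in freqs_hz:
        scale = w0 / (2.0 * np.pi * f)            # wavelet width in seconds
        n = int(4.0 * scale * fs)
        tau = np.arange(-n, n + 1) / fs
        kernel = np.exp(-0.5 * (tau / scale) ** 2) * np.exp(1j * w0 * tau / scale)
        kernel = kernel / np.sqrt(scale)
        rows.append(np.abs(np.convolve(signal, kernel, mode="same")) / fs)
    return np.array(rows)

# Synthetic data (assumed parameters): 10 Hz + 20 Hz, with 60 Hz added from 2 s to 3 s.
fs = 500.0
t = np.arange(int(5.0 * fs)) / fs
anomaly = (t >= 2.0) & (t < 3.0)
signal = (np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 20 * t)
          + 0.8 * anomaly * np.sin(2 * np.pi * 60 * t))

freqs = np.array([10.0, 20.0, 45.0, 60.0, 80.0])
scalogram = morlet_scalogram(signal, freqs, fs)

# Sum the coefficient magnitudes of all analysis frequencies above 40 Hz.
high_band = scalogram[freqs > 40.0].sum(axis=0)

# Flag samples where the high band exceeds half of its peak value (assumed threshold).
active = high_band > 0.5 * high_band.max()
onset_s, offset_s = t[active][0], t[active][-1]

before = high_band[(t > 0.5) & (t < 1.5)].mean()
during = high_band[(t > 2.2) & (t < 2.8)].mean()
print("anomaly detected from %.2f s to %.2f s" % (onset_s, offset_s))
print("mean high-band magnitude before vs during: %.4f vs %.4f" % (before, during))
```

Thresholding at a fraction of the peak is the simplest possible rule. On real vibration data a baseline estimated from known-good operation would likely be a better reference.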

Implementation with Python

The implementation utilizes the pywt package for Wavelet Transform and tsgen for synthetic time series data generation. A class named WaveletExpl is used to apply the transformation and extract frequency-time insights.

Supported Wavelet Families

The pywt package supports multiple wavelet families, including:

Haar: Simple and efficient for signal processing.

Daubechies (db): Balances compact support with frequency resolution.

Symlets (sym): Improved symmetry over Daubechies wavelets.

Coiflets (coif): Enhanced frequency resolution.

Biorthogonal (bior) & Reverse Biorthogonal (rbio): Useful for image compression.

Mexican Hat (mexh): Effective for feature detection.

Morlet (morl): Commonly used in continuous wavelet analysis.
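To check which of these families a given pywt installation provides, the package can be queried directly. The short sketch below assumes PyWavelets is installed and uses only pywt.families and pywt.wavelist; the loop over short names is just for illustration.

```python
import pywt

families = pywt.families()                     # short family names, e.g. 'haar', 'db', 'morl'
discrete = pywt.wavelist(kind="discrete")      # wavelets usable with the discrete transform
continuous = pywt.wavelist(kind="continuous")  # wavelets usable with the continuous transform

for short_name in ("haar", "db", "sym", "coif", "bior", "rbio", "mexh", "morl"):
    members = pywt.wavelist(short_name)        # all wavelets in this family
    kind = "continuous" if members[0] in continuous else "discrete"
    print(f"{short_name:5s} {kind:10s} {len(members)} wavelet(s), e.g. {members[0]}")
```

The distinction matters when choosing a transform: Mexican Hat and Morlet are continuous wavelets and go with pywt.cwt, while Haar, Daubechies, Symlets, Coiflets and the biorthogonal families are discrete wavelets used with the discrete transform functions such as pywt.wavedec.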

Conclusion

Wavelet Transform is a powerful tool for time series analysis, allowing for simultaneous frequency and time localization. Whether used for signal processing, anomaly detection, or feature extraction, it provides time-localized insights that a plain Fourier transform of the whole signal cannot.


RF3060F27: 500 mW LoRa wireless transceiver module

RF3060F27 is a high-power module built around a low-power, long-range wireless transceiver chip that uses ChirpIoT™ modulation and demodulation technology. It supports half-duplex wireless communication and offers strong anti-interference capability, high sensitivity, low power consumption, compact size, and ultra-long transmission range. With a receive sensitivity down to -135 dBm and a maximum output power of 27 dBm, it provides an industry-leading link budget, making it well suited to long-distance transmission and applications with high reliability requirements. RF3060F27 is produced and tested using strictly lead-free processes and complies with the RoHS and REACH standards.

Frequency range: 433/470 MHz

Built-in TCXO, frequency error 1 ppm

Sensitivity: -135 dBm @ 60.5 KHz, 0.5 Kbps

Maximum output power: > 27 dBm @ 5 V

Low receive current: 6 mA @ DCDC

Sleep current < 1.0uA

Data transfer rate: 0.5-59.9Kbps @LoRa

Supports bandwidth: 125/250/500KHz

Supports spreading factor (SF): 5–9, automatic recognition

Supports coding rates: 4/5, 4/6, 4/7, 4/8

Supports CAD function
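For reference, the headline figures can be checked with a few lines of arithmetic. The sketch below converts the 27 dBm output power to milliwatts and computes the link budget as transmit power minus receive sensitivity. It ignores antenna gains and cable losses, and the function names are made up for the example.

```python
def dbm_to_mw(dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10.0 ** (dbm / 10.0)

def link_budget_db(tx_power_dbm, rx_sensitivity_dbm):
    """Maximum tolerable path loss in dB, ignoring antenna gains and cable losses."""
    return tx_power_dbm - rx_sensitivity_dbm

tx_power_dbm = 27.0          # maximum output power from the spec list
rx_sensitivity_dbm = -135.0  # sensitivity from the spec list

tx_power_mw = dbm_to_mw(tx_power_dbm)
budget_db = link_budget_db(tx_power_dbm, rx_sensitivity_dbm)

print(f"{tx_power_dbm:.0f} dBm = {tx_power_mw:.0f} mW")
print(f"Link budget = {budget_db:.0f} dB")
```

This gives about 501 mW, which matches the 500 mW in the module name, and a link budget of 162 dB.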
