
NVIDIA Edify Creates a Desert World With Generative AI 3D Objects

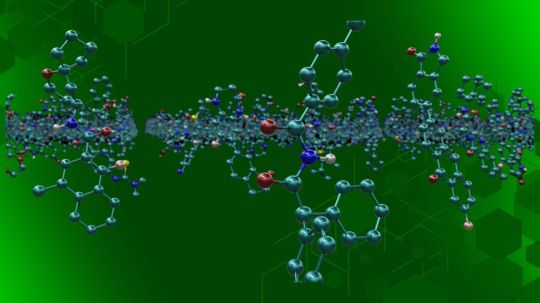

NVIDIA Researchers Create an Immersive Desert World Using Real-Time Generative AI. The demonstration highlighted the 3D world-building capabilities of the NVIDIA Omniverse platform and of models built on NVIDIA Edify.

At the SIGGRAPH Real-Time Live event, NVIDIA researchers demonstrated how to use NVIDIA Edify, a multimodal architecture for visual generative AI, to quickly build a detailed 3D desert scene.

In one of the most prestigious sessions of the prominent graphics conference, NVIDIA researchers showed how, in just five minutes, they could create and modify a desert landscape from scratch with the help of an AI agent. The live demo showed how generative AI can help artists by speeding up ideation and generating custom secondary assets that would otherwise have had to be sourced from a repository.

These AI technologies will enable 3D artists to be more productive and creative by radically cutting ideation time, giving them the means to explore concepts faster and streamline parts of their workflows. Instead of spending hours searching for or creating the 360-degree HDRi environments or background assets a scene requires, they could generate them in minutes.

Three Minutes to Go from Concept to 3D Scene

Building a complete 3D scene takes a lot of time and effort. To create a rich scene, artists must surround their hero asset with many background objects, then find a suitable background and an environment map to light the scene. Because of time constraints, they’ve often had to choose between delivering results quickly and exploring creatively.

With the help of AI agents, creative teams can achieve both goals: they can quickly bring ideas to life and keep iterating until they reach the desired look.

In the Real-Time Live demo, the researchers used an AI agent to direct an NVIDIA Edify-powered model to generate, in a matter of seconds, previews of hundreds of 3D objects such as rocks, cacti, and a bull’s skull.

NVIDIA Edify Quickens the Creation of Environments

By powering AI scene-generation tools, NVIDIA Edify models can speed up the creation of background environments and objects, letting artists focus on hero assets.

Real-Time Live

Two NVIDIA Edify models were showcased in the Real-Time Live demo:

Edify 3D uses text or image prompts to create editable 3D meshes. In a matter of seconds, the model can produce previews that include spinning animations for every object, enabling developers to quickly prototype their ideas before settling on a final version.

Edify 360 HDRi generates 360-degree high-dynamic-range images (HDRi) of natural landscapes at up to 16K resolution from text or image prompts. These HDRi can be used as backgrounds or to light scenes.
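For a sense of what “up to 16K” implies, the arithmetic below estimates the uncompressed size of one such image. It assumes, since the text does not say, that 16K means a 16,384-pixel-wide equirectangular image with the usual 2:1 aspect ratio and three colour channels; real files are usually compressed, so on-disk sizes will differ.

```python
# Back-of-the-envelope memory estimate for an equirectangular HDRi.
# Assumption: "16K" = 16384 px wide, 2:1 aspect ratio, RGB with no alpha,
# no file compression.

def hdri_bytes(width: int, channels: int = 3, bytes_per_channel: int = 4) -> int:
    """Return the uncompressed size in bytes of a 2:1 equirectangular image."""
    height = width // 2  # equirectangular maps span 360 x 180 degrees
    return width * height * channels * bytes_per_channel

GIB = 1024 ** 3

full_float = hdri_bytes(16384, bytes_per_channel=4)  # 32-bit float per channel
half_float = hdri_bytes(16384, bytes_per_channel=2)  # 16-bit half float per channel

print(f"float32 RGB: {full_float / GIB:.2f} GiB")
print(f"float16 RGB: {half_float / GIB:.2f} GiB")
```

Under these assumptions a single 16K map takes 1.50 GiB in memory at 32-bit float precision and 0.75 GiB at half precision, which shows why generating such maps in minutes is a meaningful time saving over authoring them by hand.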

During the demo, the researchers also showed USD Layout, an AI model that generates scene layouts using OpenUSD, a framework for 3D workflows, along with an AI agent powered by a large language model.

As NVIDIA announced at SIGGRAPH, two leading creative content companies are using NVIDIA Edify-powered technologies to give designers and artists new ways to boost productivity with generative AI.

Shutterstock’s Generative 3D service, which lets designers quickly prototype and build 3D objects from text or image prompts, has entered commercial beta. Its NVIDIA Edify-based 360 HDRi generator has also entered early access.

Getty Images

Getty Images has added the latest version of NVIDIA Edify to its Generative AI by Getty Images service. Users can now generate images twice as fast, with better output quality, stronger prompt adherence, advanced controls, and fine-tuning.

Using Universal Scene Description in NVIDIA Omniverse

The 3D objects, environment maps, and layouts generated with Edify models are structured using USD, a standard format for describing and composing 3D worlds. This compatibility lets artists import NVIDIA Edify-powered scenes directly into Omniverse USD Composer.
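As a rough illustration of how a generated layout can be expressed in USD, the sketch below writes a minimal `.usda` text layer in which each placed object references a separate asset file and carries a translation. The prim names, asset paths, and positions are hypothetical placeholders, since the text does not describe what Edify or USD Layout actually emit; building the text by hand is only for clarity, and production tools typically use the OpenUSD `pxr` Python API instead.

```python
# Minimal sketch: write a USD ASCII (.usda) layer that composes a scene
# from referenced asset files. Names, paths, and positions are hypothetical.
from dataclasses import dataclass


@dataclass
class Placement:
    name: str          # prim name, must be a valid USD identifier
    asset_path: str    # asset file referenced into the scene
    translate: tuple   # (x, y, z) position in scene units


def to_usda(placements) -> str:
    """Return a .usda layer with one referencing Xform per placement."""
    lines = ["#usda 1.0", "(", '    defaultPrim = "World"', ")", "",
             'def Xform "World"', "{"]
    for p in placements:
        x, y, z = p.translate
        lines += [
            f'    def Xform "{p.name}" (',
            f"        prepend references = @{p.asset_path}@",  # pull in the asset
            "    )",
            "    {",
            f"        double3 xformOp:translate = ({x}, {y}, {z})",
            '        uniform token[] xformOpOrder = ["xformOp:translate"]',
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"


layout = [
    Placement("Rock_01", "./assets/rock.usd", (2.0, 0.0, -1.5)),
    Placement("Cactus_01", "./assets/cactus.usd", (-3.0, 0.0, 4.0)),
]
print(to_usda(layout))
```

Because each object is a reference rather than copied geometry, the layout and the assets stay separate: changing an asset only means changing its path, while positions are untouched.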

In Composer, they can then refine the scene with familiar digital content creation tools, for example by moving objects, changing their appearance, or adjusting the lighting.

Real-Time Live, one of SIGGRAPH’s most anticipated events, features about a dozen real-time applications, including virtual reality, generative AI, and live performance capture technologies.

They then instructed the agent to use other models to generate candidate backgrounds and a layout for placing the objects in the scene. The demo also showed how the agent could quickly adapt to a last-minute change in creative direction by swapping the rocks for gold nuggets.
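A last-minute swap like this is cheap when the layout is kept separate from the assets. The toy sketch below assumes a layout stored as a plain list of dictionaries, a hypothetical structure rather than the agent’s actual representation, and replaces every object in one category while leaving positions unchanged.

```python
# Toy sketch: swap one asset category for another while keeping the layout.
# The layout structure and asset file names are hypothetical.

layout = [
    {"name": "Rock_01", "category": "rock", "asset": "rock.usd", "pos": (2.0, 0.0, -1.5)},
    {"name": "Rock_02", "category": "rock", "asset": "rock.usd", "pos": (0.5, 0.0, 3.0)},
    {"name": "Skull_01", "category": "skull", "asset": "bull_skull.usd", "pos": (1.0, 0.0, 1.0)},
]


def swap_category(layout, old, new_category, new_asset):
    """Return a new layout where every `old`-category object uses `new_asset`."""
    swapped = []
    for obj in layout:
        if obj["category"] == old:
            # Copy the entry; keep name and position, change only the asset.
            obj = {**obj, "category": new_category, "asset": new_asset}
        swapped.append(obj)
    return swapped


new_layout = swap_category(layout, "rock", "gold_nugget", "gold_nugget.usd")
for obj in new_layout:
    print(obj["name"], obj["asset"], obj["pos"])
```

Returning a new list instead of mutating the original keeps the earlier version available, which makes it easy to compare or roll back creative directions.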

After settling on a design plan, they instructed the agent to create high-quality assets and render the scene as a photorealistic image in NVIDIA Omniverse USD Composer, an app for virtual world-building.

Shutterstock Launches Generative 3D, and Getty Images Enhances Their Offering Driven by NVIDIA

With services built on NVIDIA Edify, users can generate 3D assets, light virtual environments, and create images in half the time. Powered by generative AI trained on licensed data, these services give designers and artists new, more efficient ways to increase their output.

Leading creative content marketplace Shutterstock has put its Generative 3D service into commercial beta. From just text or image prompts, it lets developers quickly prototype 3D objects and generate 360 HDRi backgrounds that light scenes.

Getty Images, a leading visual content provider and marketplace, has upgraded its Generative AI by Getty Images service to generate images twice as fast, improve output quality, and offer advanced controls and fine-tuning.

The services are built with NVIDIA Edify, a multimodal generative AI architecture, and NVIDIA’s visual AI foundry. The AI models are then optimised and packaged for peak performance with NVIDIA NIM, a set of accelerated microservices for AI inference.

With NVIDIA Edify, service providers can quickly scale responsible generative models on licensed data and take advantage of NVIDIA DGX Cloud, the cloud-first way to get the full potential of NVIDIA AI.

Generative AI Speeds Up 3D Modelling

Shutterstock’s service, currently in commercial beta for enterprises, lets designers and artists quickly create 3D objects for prototyping or populating virtual worlds. For example, they can use generative AI to quickly create the plates and cutlery on a dining table, freeing up time to focus on the characters seated around it.

The 3D assets generated by the service come in several widely used file formats and are ready to edit in digital content creation tools. Their clean geometry and layout give artists an advanced starting point for adding their own style.

In as little as 10 seconds, the AI model first generates a preview of a single asset. If customers like it, the preview can be refined into a higher-quality 3D asset with physically based rendering (PBR) materials such as concrete, wood, or leather.

At this year’s SIGGRAPH computer graphics conference, attendees can see how quickly designers can bring their concepts to life.

Shutterstock will demonstrate a Blender workflow that lets artists generate objects directly in their 3D environment. At the Shutterstock booth, HP will display 3D prints and physical prototypes of the kinds of assets attendees can create on the show floor with Generative 3D.

Shutterstock is also working with WPP, a global marketing and communications services company, to bring concepts to life using NVIDIA Edify 3D generation for virtual production.

Want to see that stunning new sports car on a winding mountain road, on a tropical beach, or in a desert? With generative AI, designers can change direction quickly.

Three companies, among them WPP, a CGI studio, and Dassault Systèmes, plan to integrate Shutterstock’s 360 HDRi APIs directly into their workflows. Dassault Systèmes’ 3DEXCITE applications are used to create high-end visualisations and 3D content for virtual environments.

Read more on govindhtech.com


NVIDIA NIM Microservices & NVIDIA Omniverse Help Coca-Cola

With NVIDIA NIM microservices and NVIDIA Omniverse, WPP is helping The Coca-Cola Company scale generative AI content that pops with brand authenticity. Leading marketing firm WPP’s use of the USD Search and USD Code NIM microservices lets the beverage giant iterate quickly on creative campaigns worldwide.

NVIDIA Omniverse AI

The Coca-Cola Company has a formula for magic when it comes to thirst-quenching marketing campaigns: the creative elements aren’t left to chance. Through its partnership with WPP Open X, the beverage company is now using generative AI built on NVIDIA Omniverse and NVIDIA NIM microservices to scale its global campaigns.

“We can deliver on hyperlocal relevance quickly and globally with NVIDIA, allowing us to personalise and customise Coke and meal imagery across more than 100 markets,” said Samir Bhutada, global vice president of StudioX Digital Transformation at The Coca-Cola Company.

Coca-Cola and WPP have been collaborating on the development of digital twin technologies and the global launch of Prod X, a unique production studio experience designed exclusively for the beverage company.

NIM inference microservices

WPP announced today at SIGGRAPH that The Coca-Cola Company will be an early adopter, integrating the new NVIDIA NIM microservices for Universal Scene Description (OpenUSD) into its Prod X roadmap. OpenUSD is a 3D framework that lets different software tools and data types work together when building virtual worlds. NIM inference microservices deliver models as optimised containers.

Using the USD Search NIM, WPP can tap into a large collection of models to create on-brand assets, then use the USD Code NIM to assemble those assets into scenes.

With prompt engineering, Prod X users will be able to quickly refine AI-generated images so marketers can better target their products to local markets. These NIM microservices will let Prod X users create 3D advertising assets with culturally relevant elements on a global scale.

Using NVIDIA NIM Microservices for Generative AI Implementation

According to WPP, the 3D engineering and creative communities will be significantly impacted by the NVIDIA NIM microservices.

The USD Search NIM makes WPP’s vast library of visual assets instantly searchable with text prompts. With the USD Code NIM, developers can enter prompts and get back Python code for building new 3D worlds.

Perry Nightingale, senior vice president of creative AI at WPP, called the new NIM microservices a beautiful solution that “compresses multiple phases of the production process into a single interface and process.” “It enables artists to utilise technology more effectively and produce better work,” he said.

Rethinking Content Production With Production Studio

Co-developed with its production company, Hogarth, Production Studio leverages the Omniverse development platform and OpenUSD for its generative AI-enabled product configurator workflows. Production Studio was released on WPP Open, the company’s AI-powered marketing operating system.

In addition to directly addressing the difficulties advertisers still encounter in creating brand-compliant and product-accurate content at scale, Production Studio can expedite and automate the creation of multilingual text, images, and videos. This makes content creation easier for marketers and advertisers.

“Our years of research with NVIDIA Omniverse, along with the research and development behind building our own core USD pipeline and decades of experience in 3D workflows, allowed us to customise an experience for The Coca-Cola Company,” said Priti Mhatre, managing director for strategic consulting and AI at Hogarth.

NVIDIA Increases Control Over Generative AI To Boost Digital Marketing

Leading production and creative services companies in the world were among the first to use NVIDIA NIM microservices and OpenUSD to produce visually accurate branding.

Generative AI is being used by global businesses and agencies to produce marketing and advertising material, but the results aren’t necessarily what they want.

With the help of generative AI, NVIDIA NIM microservices, NVIDIA Omniverse, and Universal Scene Description (OpenUSD), NVIDIA provides developers with a whole suite of tools that let them create applications and workflows that facilitate brand-accurate, highly targeted, and productive advertising at scale.

Developers can use the USD Search NIM microservice, together with the USD Code NIM microservice, to give artists faster access to a large collection of brand-approved, OpenUSD-based props, environments, and products. Teams can also use the Shutterstock Generative 3D service, powered by NVIDIA Edify, to quickly create new 3D assets with AI.

Once complete, the scenes can be rendered into 2D images and used as input to guide an AI image generator that produces precise, brand-appropriate visuals.

These technologies are being used by production studios, global agencies, and developers to transform all facets of the advertising process, from dynamic creative optimisation to creative production and content supply chain.

At SIGGRAPH, WPP announced that The Coca-Cola Company is the first company to use generative AI built with NVIDIA NIM microservices and Omniverse.

Increased Omniverse Adoption by Agencies and Service Providers

Digital Twins

The capacity of the NVIDIA Omniverse development platform to create precise digital twins of items has led to its widespread adoption. With the aid of these virtual reproductions, businesses and agencies are able to produce 3D product configurators that are incredibly lifelike and physically precise, hence increasing average selling prices, lowering return rates, and fostering customer engagement and loyalty.

Digital twins can also be updated quickly and cheaply to accommodate changing customer preferences, allowing content production to scale flexibly.

Monks, a global marketing and technology services company, uses the Omniverse platform in Monks.Flow, its AI-centric professional managed service, to help brands visually explore different product customisations and unlock scale and hyper-personalisation across any customer journey.

“The interoperability of NVIDIA Omniverse and OpenUSD speeds up communication between marketing, technology, and product development,” said Lewis Smithingham, Monks’ executive vice president of strategic industries. “By integrating Omniverse with Monks’ efficient marketing and technology services, we enable clients to take advantage of faster technological and creative advancements by integrating AI throughout the product creation process.”

The technology and creative firm Collective World was among the first to use OpenUSD, NVIDIA Omniverse, and real-time 3D to produce excellent digital ads for clients including EE and Unilever. With the use of these technologies, Collective is able to create digital twins that simplify marketing and advertising efforts by providing scalable, consistent, high-quality product content.

At SIGGRAPH, Collective World revealed that it has become a member of the NVIDIA Partner Network, expanding on its use of NVIDIA technologies.

Check out the product configuration developer resources and the generative AI workflow for content creation reference architecture to get started building apps and services using OpenUSD, Omniverse, and NVIDIA AI. You can also connect with NVIDIA’s ecosystem of service providers by submitting a contact form to learn more.


NVIDIA OSMO and NIM Microservices for Robotics Simulation

NVIDIA Launches New NIM Microservices for Robotics Simulation in Isaac Lab and Isaac Sim, the NVIDIA OSMO Robot Cloud Compute Orchestration Service, a Teleoperated Data Capture Workflow, and More to Advance Humanoid Robot Development.

Humanoid Robot

NVIDIA today announced that in an effort to spur worldwide progress in humanoid robots, it is offering a range of services, models, and computing platforms to the top robot makers, AI model developers, and software developers in the world. These resources will be used to design, develop, and construct the next generation of humanoid robotics.

The offerings include the NVIDIA OSMO orchestration service for managing multi-stage robotics workloads, new NVIDIA NIM microservices and frameworks for robot simulation and learning, and an AI- and simulation-enabled teleoperation workflow that lets developers train robots with minimal human demonstration data.

Accelerating Development With NVIDIA NIM and NVIDIA OSMO

NIM microservices provide pre-built containers powered by NVIDIA inference software, enabling developers to cut deployment times from weeks to minutes. Two new AI microservices will let roboticists enhance simulation workflows for generative physical AI in NVIDIA Isaac Sim, a reference application for robotics simulation built on the NVIDIA Omniverse platform.

The MimicGen NIM microservice generates synthetic motion data from teleoperated data captured with spatial computing devices such as Apple Vision Pro. The Robocasa NIM microservice generates robot tasks and simulation-ready environments in OpenUSD, a general-purpose framework for creating and interacting with 3D environments.
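The MimicGen research work generates new demonstrations by splitting a recorded human demonstration into object-centric segments and replaying each segment relative to the object’s new pose. The simplified 2D sketch below shows only that retargeting step: it re-expresses a recorded gripper path in the object’s frame, then maps it onto a new object pose. It assumes planar poses, a single object, and no collision checking, and it is an illustration of the idea, not the NIM microservice’s interface; the trajectory and poses are hypothetical.

```python
import math

# Planar pose: (x, y, theta), theta in radians.


def to_local(pose, point):
    """Express a world-frame point in the frame of `pose` (inverse rigid transform)."""
    x, y, th = pose
    dx, dy = point[0] - x, point[1] - y
    c, s = math.cos(th), math.sin(th)
    return (c * dx + s * dy, -s * dx + c * dy)


def to_world(pose, point):
    """Map a point expressed in the frame of `pose` back to world coordinates."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return (x + c * point[0] - s * point[1], y + s * point[0] + c * point[1])


def retarget(trajectory, old_object_pose, new_object_pose):
    """Replay an object-centric demo segment relative to the object's new pose."""
    return [to_world(new_object_pose, to_local(old_object_pose, p)) for p in trajectory]


demo = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.1)]  # recorded gripper path (hypothetical)
cube_then = (1.0, 0.0, 0.0)                   # cube pose during the demo
cube_now = (3.0, 2.0, math.pi / 2)            # cube pose in a new scene

new_demo = retarget(demo, cube_then, cube_now)
print([(round(x, 3), round(y, 3)) for x, y in new_demo])
```

Each new object pose yields another synthetic trajectory from the same single demonstration, which is how a few teleoperated recordings can be multiplied into a larger dataset.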

NVIDIA OSMO, a managed service now available in the cloud, lets customers orchestrate and scale complex robotics development workflows across distributed computing resources, whether on premises or in the cloud.

NVIDIA OSMO

NVIDIA OSMO greatly simplifies robot training and simulation workflows, cutting deployment and development cycle times from several months to a few days. Users can visualise and manage a range of tasks, such as generating synthetic data, training models, running reinforcement learning, and implementing software-in-the-loop testing at scale for industrial manipulators, autonomous mobile robots, and humanoids.
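A multi-stage workflow like this is usually described as a dependency graph, and an orchestrator dispatches each stage once its inputs are ready. The sketch below uses Python’s standard `graphlib` module to order a hypothetical robotics pipeline and assign each stage to a hypothetical compute pool. It illustrates the general idea only and does not reflect OSMO’s actual workflow specification.

```python
from graphlib import TopologicalSorter

# Hypothetical robotics workflow: stage -> set of stages it depends on.
workflow = {
    "record_demos": set(),
    "synthetic_data": {"record_demos"},
    "train_perception": {"synthetic_data"},
    "train_policy": {"synthetic_data"},
    "rl_finetune": {"train_policy"},
    "sil_testing": {"rl_finetune", "train_perception"},
}

# Hypothetical mapping of each stage to a compute pool.
pools = {
    "record_demos": "workstation",
    "synthetic_data": "simulation-gpus",
    "train_perception": "training-cluster",
    "train_policy": "training-cluster",
    "rl_finetune": "simulation-gpus",
    "sil_testing": "on-prem-test-rig",
}

sorter = TopologicalSorter(workflow)
sorter.prepare()
schedule = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # stages whose dependencies are finished
    schedule.append(ready)              # stages in one batch could run in parallel
    for stage in ready:
        print(f"dispatch {stage} -> {pools[stage]}")
        sorter.done(stage)              # pretend the stage completed successfully
```

Here the two training stages land in the same batch, so an orchestrator could run them at the same time on different resources.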

Enhancing Data Capture Processes for Developers of Humanoid Robots

Training foundation models for humanoid robots requires enormous amounts of data. Teleoperation is one way to capture human demonstration data, but it is becoming increasingly expensive.

At the SIGGRAPH computer graphics conference, NVIDIA presented an AI- and Omniverse-enabled teleoperation reference workflow that lets researchers and AI developers generate large amounts of synthetic motion and perception data from a small number of remotely captured human demonstrations.

First, a limited number of teleoperated demonstrations are recorded by developers using Apple Vision Pro. Next, they use NVIDIA Isaac Sim to simulate the recordings, and the MimicGen NIM microservice is used to create synthetic datasets from the recordings.

Developers can reduce costs and save time by training the Project GR00T humanoid foundation model on both synthetic and real data. They then use the Robocasa NIM microservice in Isaac Lab, a robot learning platform, to generate experiences for retraining the robot model. Throughout the workflow, NVIDIA OSMO seamlessly dispatches computing jobs to different resources, saving developers weeks of administrative effort.

Fourier, a maker of general-purpose robot platforms, recognises the benefits of generating synthetic training data with simulation technology.

Expanding Access to NVIDIA Humanoid Developer Technologies

To facilitate humanoid robot development, NVIDIA offers three computing platforms: NVIDIA AI supercomputers for training the models; NVIDIA Isaac Sim, built on Omniverse, where robots can learn and refine their skills in virtual environments; and NVIDIA Jetson Thor humanoid robot processors for running the models. Developers can access and use all of these platforms, or any part of them, for their specific needs.

Developers can get early access to the new products as well as the most recent iterations of NVIDIA Isaac Sim, NVIDIA Isaac Lab, Jetson Thor, and Project GR00T general-purpose humanoid foundation models through a new NVIDIA Humanoid Robot Developer Program.

Among the first companies to sign up for the early-access program are 1x, Boston Dynamics, ByteDance Research, Field AI, Figure, Fourier, Galbot, LimX Dynamics, Mentee, Neura Robotics, RobotEra, and Skild AI.

Availability

Developers will soon get access to NVIDIA NIM microservices, and they can access NVIDIA OSMO and Isaac Lab by enrolling in the NVIDIA Humanoid Robot Developer Program.

Project GR00T

Advantages

Early Access to Humanoid Foundation Models: Project GR00T is a collection of foundation models for humanoid robots. Thanks to NVIDIA-accelerated training and multimodal learning, these models let robots mimic human movements, understand natural language, and learn new skills quickly.

Use the NVIDIA OSMO Managed Service for Free: NVIDIA OSMO is a cloud-native orchestration platform for scaling complex, multi-stage, multi-container robotics workloads across on-premises, private, and public clouds.

First Access to New NVIDIA Isaac ROS Libraries: Isaac ROS is a collection of NVIDIA GPU-accelerated ROS 2 libraries that speeds up the development and performance of AI robots.

Early Access to Robot Simulation and Learning Frameworks: Isaac Lab is a simulation application in which robots can learn through imitation and reinforcement learning.

NVIDIA’s Generalist Robot 00 Technology

NVIDIA GR00T Project

Project GR00T aims to provide a general-purpose foundation model for humanoid robots that can generate robot actions from multimodal instructions and past interactions. The model is modular, with systems for high-level reasoning and planning as well as fast, precise, and responsive low-level motion.

NVIDIA’s Project GR00T

Research on Project GR00T is currently underway.

NVIDIA-Powered Next-Gen Robots: Creating Robotic Systems Using Three Computers

Every component of NVIDIA’s three-computer robotics stack is used in Project GR00T.

This incorporates NVIDIA Jetson Thor and Isaac ROS for rapid robot runtime, NVIDIA AI and DGX for model training, and NVIDIA Isaac Lab for reinforcement learning.

NVIDIA DGX

NVIDIA DGX Cloud is a comprehensive AI platform designed for developers that offers scalable capacity based on the most recent NVIDIA architecture, co-engineered with top cloud service providers globally.

NVIDIA Isaac Lab

This lightweight reference application, built on the NVIDIA Omniverse platform and optimised specifically for robot learning, is essential for training robot foundation models. It can train any kind of robot embodiment and supports reinforcement learning, imitation learning, and transfer learning.

NVIDIA Isaac ROS

NVIDIA Isaac ROS on Jetson Thor is a collection of accelerated computing packages and AI models designed to streamline and speed up the development of advanced AI robotics applications. Built for fast humanoid reasoning and movement, Isaac ROS runs on Jetson Thor for humanoids.

How It Works

Function of the Model

Project GR00T enables humanoid embodiments to follow multimodal instructions and respond to their surroundings in real time through self-observation.

Development and Training

The methodology speeds up the development of humanoid robots by using multi-modal learning from a range of data sources, such as instructions, videos, demonstrations, and imitation learning.

For large-scale Project GR00T training, NVIDIA Isaac Lab is the best choice. In addition, the NVIDIA OSMO robot cloud compute orchestration service can coordinate workflows across NVIDIA DGX systems for training, NVIDIA OVX systems for simulation, and NVIDIA IGX systems for hardware-in-the-loop validation.


NVIDIA AI Foundry, Custom Models, and NeMo Retriever Microservices

How Businesses Can Create Personalized Generative AI Models with NVIDIA AI Foundry.

NVIDIA AI Foundry

Companies looking to adopt AI need specialized custom models tailored to the requirements of their industry.

NVIDIA AI Foundry is a service that lets businesses use data, accelerated computing, and software tools to build and deploy custom models that can significantly boost their generative AI projects.

Just as TSMC manufactures chips designed by other companies, NVIDIA AI Foundry provides the infrastructure and tools other companies need to develop and customize AI models. These resources include DGX Cloud, foundation models, NVIDIA NeMo software, NVIDIA expertise, ecosystem tools, and support.

The primary difference is the product: TSMC manufactures physical semiconductor chips, whereas NVIDIA AI Foundry helps create custom models. Both foster innovation and provide access to a vast network of tools and partners.

Businesses can use AI Foundry to customize NVIDIA and open community models, including the recently released Llama 3.1 collection, as well as NVIDIA Nemotron, CodeGemma by Google DeepMind, CodeLlama, Gemma by Google DeepMind, Mistral, Mixtral, Phi-3, StarCoder2, and others.

AI Innovation is Driven by Industry Pioneers

Among the first companies to use NVIDIA AI Foundry are industry leaders Amdocs, Capital One, Getty Images, KT, Hyundai Motor Company, SAP, ServiceNow, and Snowflake. A new era of AI-driven innovation in corporate software, technology, communications, and media is being ushered in by these trailblazers.

According to Jeremy Barnes, vice president of AI Product at ServiceNow, “organizations deploying AI can gain a competitive edge with custom models that incorporate industry and business knowledge.” “ServiceNow is refining and deploying models that can easily integrate within customers’ existing workflows by utilising NVIDIA AI Foundry.”

The NVIDIA AI Foundry’s Foundation

NVIDIA AI Foundry rests on several key pillars: foundation models, enterprise software, accelerated computing, expert support, and a broad partner ecosystem.

Its software comprises the whole software platform for expediting model building, as well as AI foundation models from NVIDIA and the AI community.

NVIDIA DGX Cloud, a network of accelerated compute resources co-engineered with the world’s leading public clouds (Amazon Web Services, Google Cloud, and Oracle Cloud Infrastructure), is the computational powerhouse of NVIDIA AI Foundry. AI Foundry customers can use DGX Cloud to scale their AI projects as needed without large upfront hardware investments.

They can also create and optimize unique generative AI applications with previously unheard-of ease and efficiency. This adaptability is essential for companies trying to remain nimble in a market that is changing quickly.

NVIDIA AI Enterprise specialists are available to support customers of NVIDIA AI Foundry if they require assistance. In order to ensure that the models closely match their business requirements, NVIDIA experts may guide customers through every stage of the process of developing, optimizing, and deploying their models using private data.

Customers of NVIDIA AI Foundry have access to a global network of partners who can offer a comprehensive range of support. Among the NVIDIA partners offering AI Foundry consulting services are Accenture, Deloitte, Infosys, and Wipro. These services cover the design, implementation, and management of AI-driven digital transformation initiatives. Accenture is the first to offer the Accenture AI Refinery framework, an AI Foundry-based solution for creating custom models.

Furthermore, companies can get assistance from service delivery partners like Data Monsters, Quantiphi, Slalom, and SoftServe in navigating the challenges of incorporating AI into their current IT environments and making sure that these applications are secure, scalable, and in line with business goals.

Using AIOps and MLOps platforms from NVIDIA partners, such as Cleanlab, DataDog, Dataiku, Dataloop, DataRobot, Domino Data Lab, Fiddler AI, New Relic, Scale, and Weights & Biases, customers may create production-ready NVIDIA AI Foundry models.

Customers can export their AI Foundry models as NVIDIA NIM inference microservices, which bundle the custom model, optimized engines, and a standard API, and run them on their preferred accelerated infrastructure.
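NVIDIA’s documentation for NIM LLM microservices describes their API as OpenAI-compatible. Assuming an exported custom model is served the same way, a client request could be prepared as below. The host, port, and model name are placeholders to be replaced with the values of the deployed microservice; the request is built but not sent, so the sketch runs without a live endpoint.

```python
import json
import urllib.request

# Placeholders: adjust to the deployed NIM endpoint and model name.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL = "my-org/custom-llama-3.1-8b"  # hypothetical custom model name


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("Summarize our returns policy in two sentences.")
print(req.get_method(), req.full_url)

# To call a running microservice instead of just building the request:
# with urllib.request.urlopen(req) as resp:
#     answer = json.load(resp)["choices"][0]["message"]["content"]
```

Because the API shape is standard, the same client code keeps working when the custom model behind the endpoint is retrained or redeployed on different infrastructure.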

NVIDIA TensorRT-LLM and other inference techniques improve Llama 3.1 model efficiency by minimizing latency and maximizing throughput. This lets businesses generate tokens faster while lowering the total cost of running the models in production. The NVIDIA AI Enterprise software suite provides enterprise-grade security and support.
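To see why higher throughput lowers serving cost, note that at a fixed hourly hardware price the cost per token is inversely proportional to tokens per second. The prices and throughputs below are purely hypothetical and are not NVIDIA benchmark results.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """Serving cost per one million generated tokens, assuming full utilization."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


# Hypothetical figures for illustration only.
baseline = cost_per_million_tokens(10.0, 2000)   # unoptimized engine
optimized = cost_per_million_tokens(10.0, 4000)  # same hardware, 2x throughput

print(f"baseline:  ${baseline:.2f} per 1M tokens")
print(f"optimized: ${optimized:.2f} per 1M tokens")
```

Doubling throughput on the same hardware halves the cost per token, as long as the hardware is kept busy.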

Along with cloud instances from Amazon Web Services, Google Cloud, and Oracle Cloud Infrastructure, the extensive array of deployment options includes NVIDIA-Certified Systems from worldwide server manufacturing partners like Cisco, Dell, HPE, Lenovo, and Supermicro.

Furthermore, Together AI, a leading cloud provider for AI acceleration, announced today that it will make Llama 3.1 endpoints and other open models available on DGX Cloud through the usage of its NVIDIA GPU-accelerated inference stack, which is accessible to its ecosystem of over 100,000 developers and businesses.

According to Together AI’s founder and CEO, Vipul Ved Prakash, “every enterprise running generative AI applications wants a faster user experience, with greater efficiency and lower cost.” “With NVIDIA DGX Cloud, developers and businesses can now optimize performance, scalability, and security by utilising the Together Inference Engine.”

NVIDIA NeMo Accelerates and Simplifies the Creation of Custom Models

Developers can now easily curate data, modify foundation models, and assess performance using the capabilities provided by NVIDIA NeMo integrated into AI Foundry. NeMo technologies consist of:

NeMo Curator is a GPU-accelerated data-curation library that improves generative AI model performance by preparing large-scale, high-quality datasets for pretraining and fine-tuning.

NeMo Customizer is a scalable, high-performance microservice that makes it easier to align and fine-tune LLMs for use cases specific to a given domain.

NeMo Evaluator provides automatic evaluation of generative AI models across academic and custom benchmarks on any accelerated cloud or data centre.

NeMo Guardrails orchestrates dialogue management, supporting accuracy, appropriateness, and security in smart applications built on large language models and thereby providing safeguards for generative AI applications.

By using the NeMo platform in NVIDIA AI Foundry, businesses can build custom AI models tailored precisely to their needs.

Better alignment with strategic objectives, increased decision-making accuracy, and increased operational efficiency are all made possible by this customization.

For example, businesses can create models that understand industry-specific terminology, comply with regulatory requirements, and work seamlessly with existing workflows.

According to Philipp Herzig, chief AI officer at SAP, SAP plans to use NVIDIA’s NeMo platform, as the next step in the two companies’ partnership, to help businesses accelerate AI-driven productivity powered by SAP Business AI.

NeMo Retriever

NeMo Retriever microservice

Businesses can use NVIDIA NeMo Retriever NIM inference microservices to deploy their custom AI models in production. Through retrieval-augmented generation (RAG), these microservices help developers retrieve proprietary data so that their AI applications can deliver intelligent responses.

According to Baris Gultekin, Head of AI at Snowflake, “safe, trustworthy AI is a non-negotiable for enterprises harnessing generative AI, with retrieval accuracy directly impacting the relevance and quality of generated responses in RAG systems.” “NeMo Retriever, a part of NVIDIA AI Foundry, is leveraged by Snowflake Cortex AI to further provide enterprises with simple, reliable answers using their custom data.”
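To make the retrieval step of RAG concrete, here is a minimal Python sketch, assuming a tiny in-memory corpus with hand-made embedding vectors. In a real deployment the vectors would come from an embedding model (for example, a NeMo Retriever embedding microservice) and be stored in a vector database; the document IDs, vectors, and function names below are hypothetical and are not the NeMo Retriever API.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical corpus: document id -> (embedding vector, passage text).
# Real vectors would come from an embedding model and live in a vector database.
DOCUMENTS: Dict[str, Tuple[List[float], str]] = {
    "refund-policy": ([0.9, 0.1, 0.0], "Refunds are issued within 14 days of purchase."),
    "shipping": ([0.1, 0.8, 0.2], "Orders ship within two business days."),
    "warranty": ([0.2, 0.1, 0.9], "Devices carry a one-year limited warranty."),
}


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: List[float], k: int = 1) -> List[str]:
    """Return the ids of the k passages most similar to the query embedding."""
    ranked = sorted(DOCUMENTS, key=lambda doc_id: cosine(query_vec, DOCUMENTS[doc_id][0]),
                    reverse=True)
    return ranked[:k]


def build_prompt(question: str, query_vec: List[float], k: int = 1) -> str:
    """Prepend the retrieved passages to the question before it is sent to an LLM."""
    context = "\n".join(DOCUMENTS[doc_id][1] for doc_id in retrieve(query_vec, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Hypothetical embedding of "How long do refunds take?"
    print(build_prompt("How long do refunds take?", [0.85, 0.15, 0.05]))
```

Replacing the brute-force ranking with a vector index and sending the assembled prompt to an LLM endpoint would turn this into a complete, if still simplified, RAG loop.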

Custom Models

Custom Models Provide a Competitive Edge

One of the main benefits of NVIDIA AI Foundry is its ability to address the particular challenges businesses face when adopting AI. Generic AI models may not fully meet specific business needs and data security requirements. Custom models, on the other hand, offer greater flexibility, adaptability, and performance, which makes them well suited to businesses seeking a competitive edge.

Read more on govindhtech.com

#NVIDIAAI#CustomModels#NeMoRetriever#microservice#GenerativeAI#NVIDIA#AI#projectsAI#DGXCloud#NVIDIANeMo#NVIDIAAIFoundry#GoogleDeepMind#Gemma#AmazonWebServices#NVIDIADGXCloud#AIapplications#MLOpsplatforms#NVIDIANIM#GoogleCloud#aimodel#technology#technews#news#govindhteh

With AI Agents, LiveX AI Reduces Customer Care Expenses

With AI agents trained and supported by GKE and NVIDIA AI, LiveX AI can cut customer care expenses by up to 85%.

For consumer companies, offering a positive customer experience is a crucial competitive advantage, but doing so presents a number of difficulties. Even if a website draws visitors, failing to personalize it can make it difficult to turn those visitors into paying clients. Call centers are expensive to run, and long wait times irritate consumers during peak call volumes. Though they are more scalable, traditional chatbots cannot replace a genuine human-to-human interaction.

Google AI agents

At the forefront of generative AI technology, LiveX AI builds personalized, multimodal AI agents that can see, hear, chat, and show, giving customers genuinely human-like experiences. Founded by a group of seasoned entrepreneurs and distinguished technology executives, LiveX AI offers companies dependable AI agents that drive strong consumer engagement across a range of platforms.

LiveX AI’s generative AI agents provide real-time, immersive, human-like customer service, responding to customer questions and concerns in a friendly, conversational way. The agents must also be fast and reliable to give users a positive experience. Creating that experience requires a highly efficient, scalable platform that can eliminate the response latency many AI agents suffer from, especially on busy days such as Black Friday.

GKE offers a strong basis for sophisticated generative AI applications

Google Cloud and LiveX AI worked together from the start to accelerate LiveX AI’s development using Google Kubernetes Engine (GKE) and the NVIDIA AI platform. With Google Cloud’s assistance, LiveX AI delivered a customized solution to its client within three weeks. In addition, by joining the Google for Startups Cloud Programme and the NVIDIA Inception programme, LiveX AI gained access to additional business and technical resources and had its cloud costs covered while getting started.

The LiveX AI team chose GKE because it was a reliable solution that would let them ramp up quickly: it enables them to deploy and run containerized apps at scale on secure, efficient global infrastructure. GKE’s platform orchestration capabilities, combined with its flexible integration with distributed computing and data processing frameworks, make it simple to train and serve optimized AI workloads on NVIDIA GPUs.

GKE Autopilot

GKE Autopilot in particular makes it easier to develop multimodal AI agents for companies with enormous volumes of real-time consumer interactions, since it lets applications scale easily to multiple clients. Because GKE Autopilot manages a Kubernetes cluster’s underlying compute, LiveX AI does not need to configure or monitor it.

With GKE Autopilot, LiveX AI has achieved more than 50% lower TCO, 25% faster time to market, and 66% lower operational costs. This has allowed the team to focus on delivering value to clients rather than setting up or maintaining the system.

Zepp Health

Zepp Health, a direct-to-consumer (D2C) wellness product maker, is one of these clients. Zepp Health worked with LiveX AI to build an AI customer agent for its US e-commerce website for Amazfit smartwatches and smart rings. To give customers personalized, real-time experiences, the agent had to handle large volumes of customer interactions efficiently.

For the Amazfit project, GKE was paired with A2 Ultra virtual machines (VMs) running NVIDIA A100 80GB Tensor Core GPUs and NVIDIA NIM inference microservices. NIM, part of the NVIDIA AI Enterprise software platform, is a set of easy-to-use microservices designed for secure, reliable deployment of high-performance AI model inference.

Deploying NVIDIA NIM Docker containers on GKE with Infrastructure as Code (IaC) techniques meant applications could be updated faster once they were in production. NVIDIA hardware acceleration technologies also greatly benefited the development and deployment processes by getting the most out of the hardware.
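As an illustration of the IaC approach, the Python sketch below generates a Kubernetes Deployment manifest for a NIM container on GKE and prints it as JSON, which kubectl apply accepts. It is not LiveX AI's actual configuration. The image path, the Secret name, and the port are assumptions (NIM containers commonly listen on port 8000 and read an NGC_API_KEY); the cloud.google.com/gke-accelerator node selector and the nvidia.com/gpu resource limit are the standard GKE mechanisms for requesting GPUs.

```python
import json


def nim_deployment(name: str, image: str, gpus: int = 1) -> dict:
    """Return a Deployment manifest (as a dict) that runs one NIM container on A100 80GB GPUs."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # GKE schedules the Pod onto nodes with this accelerator type.
                    "nodeSelector": {"cloud.google.com/gke-accelerator": "nvidia-a100-80gb"},
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 8000}],  # assumed NIM HTTP port
                        "env": [{
                            "name": "NGC_API_KEY",
                            # Hypothetical Secret holding the NGC API key.
                            "valueFrom": {"secretKeyRef": {"name": "ngc-api", "key": "NGC_API_KEY"}},
                        }],
                        # GPUs are requested through the nvidia.com/gpu extended resource.
                        "resources": {"limits": {"nvidia.com/gpu": gpus}},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image path; pipe the output to `kubectl apply -f -`.
    print(json.dumps(nim_deployment("llm-nim", "nvcr.io/nim/example/llm:latest"), indent=2))
```

Keeping a generator like this in version control and applying its output from a CI pipeline is what lets infrastructure changes roll out as routinely as application code.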

Amazfit AI

Overall, by using GKE with NVIDIA NIM and NVIDIA A100 GPUs, LiveX AI achieved a 6.1x speedup in average answer/response generation for the Amazfit AI agent compared with running it on another well-known inference platform. Better still, the project took only three weeks to complete.

For LiveX AI users, this means:

Customer support costs reduced by up to 85% when effective AI-driven solutions are implemented.

First response times cut from the hours typical of industry standards to just seconds.

Higher customer satisfaction and 15% fewer returns, thanks to faster, more accurate resolutions.

Five times higher lead conversion, thanks to a smart, helpful AI agent.

Wayne Huang, CEO of Zepp Health, says the company believes in delivering a personal touch in every customer interaction, and that LiveX AI makes that philosophy a reality by giving customers shopping for Amazfit a smooth and enjoyable experience.

Working together fosters AI innovation

Ultimately, GKE has made it possible for LiveX AI to quickly scale and provide clients with cutting-edge generative AI solutions that yield instant benefits. GKE offers a strong platform for the creation and implementation of cutting-edge generative AI applications since it is a safe, scalable, and affordable solution for managing containerized apps.

It boosts developer productivity, improves application reliability with automated scaling, load balancing, and self-healing features, and streamlines development by making cluster creation and management easy.

#AIAgents#LiveX#AIReduces#CustomerCareExpenses#generativeAI#GoogleCloud#gke#GoogleKubernetesEngine#NVIDIAGPUs#GKEAutopilot#NVIDIAA100#NVIDIAAI#NVIDIANIM#NVIDIAA100GPU#ai#technology#technews#news#govindhteh

Mistral NeMo: Powerful 12 Billion Parameter Language Model

Mistral NeMo 12B

Today, Mistral AI and NVIDIA unveiled Mistral NeMo 12B, a new state-of-the-art language model that developers can easily customize and deploy for enterprise applications supporting chatbots, multilingual tasks, coding, and summarization.

By combining Mistral AI’s expertise in training data with NVIDIA’s optimized hardware and software ecosystem, the Mistral NeMo model delivers high performance across a variety of applications.

Guillaume Lample, cofounder and chief scientist of Mistral AI, said the company was fortunate to collaborate with the NVIDIA team and leverage its top-tier hardware and software, adding that, with the help of NVIDIA AI Enterprise deployment, they had created a model with unprecedented accuracy, flexibility, high efficiency, enterprise-grade support, and security.

Mistral NeMo was trained on the NVIDIA DGX Cloud AI platform, which offers dedicated, scalable access to the latest NVIDIA architecture.

The model was further advanced and optimized with NVIDIA TensorRT-LLM, which accelerates inference performance for large language models, and with the NVIDIA NeMo development platform for building custom generative AI models.

This partnership demonstrates NVIDIA’s dedication to bolstering the model-builder community.

Providing Unprecedented Precision, Adaptability, and Effectiveness

This enterprise-grade AI model performs accurately and reliably across diverse tasks. It excels in multi-turn conversations, math, commonsense reasoning, world knowledge, and coding.

With a 128K context length, Mistral NeMo processes extensive, complex information more coherently and accurately, producing contextually relevant outputs.

Mistral NeMo is a 12-billion-parameter model released under the Apache 2.0 licence, which promotes innovation and supports the larger AI community. The model also employs the FP8 data format for model inference, which minimises memory requirements and expedites deployment without compromising accuracy.

As a result, the model learns tasks more effectively and handles diverse scenarios more skillfully, making it well suited to enterprise use cases.

Mistral NeMo provides performance-optimized inference with NVIDIA TensorRT-LLM engines and is packaged as an NVIDIA NIM inference microservice.

This containerised format offers improved flexibility for a range of applications and facilitates deployment anywhere.

Instead of taking many days, models may now be deployed anywhere in only a few minutes.
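NIM microservices for LLMs typically expose an OpenAI-compatible HTTP API, so calling one is an ordinary POST to a /v1/chat/completions route. The sketch below only builds such a request with Python's standard library. The base URL, the model identifier, and the API-key handling are assumptions to adapt to a specific deployment, not values confirmed by this article.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: Optional[str] = None,
                       max_tokens: int = 256,
                       temperature: float = 0.2) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # hosted endpoints need a bearer token; a local container may not
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical local NIM endpoint and model name.
    req = build_chat_request("http://localhost:8000", "mistral-nemo-12b-instruct",
                             "Summarize our Q3 support tickets in three bullets.")
    print(req.get_method(), req.full_url)
    # To actually send it:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because the request shape is the same whether the container runs on a workstation or in a data centre, moving a deployment usually means changing only the base URL.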

As part of NVIDIA AI Enterprise, NIM provides enterprise-grade software with dedicated feature branches, rigorous validation processes, and enterprise-grade security and support.

It delivers dependable, consistent performance and includes comprehensive support, direct access to an NVIDIA AI expert, and defined service-level agreements.

The open model licence lets businesses integrate Mistral NeMo into commercial applications seamlessly.

Thanks to its compact size, the Mistral NeMo NIM can be deployed on a single NVIDIA L40S, NVIDIA GeForce RTX 4090, or NVIDIA RTX 4500 GPU, offering high efficiency, lower compute costs, and enhanced security and privacy.
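The single-GPU claim follows from simple arithmetic on weight storage: FP8 uses one byte per parameter versus two for BF16. The Python sketch below treats the model as exactly 12 billion parameters and uses nominal board memory for the three GPUs named above (48 GB for the L40S, 24 GB for the RTX 4090 and the RTX 4500 Ada Generation). Both are simplifying assumptions, and a real deployment also needs memory for the KV cache, activations, and runtime overhead.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}

# Nominal on-board memory (GB) of the GPUs mentioned in the article.
GPU_MEMORY_GB = {"L40S": 48, "GeForce RTX 4090": 24, "RTX 4500 Ada": 24}


def weight_memory_gb(num_params: int, fmt: str) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


def headroom_gb(num_params: int, fmt: str, gpu: str) -> float:
    """Memory left for KV cache, activations, and runtime after loading the weights."""
    return GPU_MEMORY_GB[gpu] - weight_memory_gb(num_params, fmt)


if __name__ == "__main__":
    params = 12_000_000_000  # assumption: exactly 12B parameters
    for fmt in ("bf16", "fp8"):
        print(fmt, weight_memory_gb(params, fmt), "GB of weights")
    for gpu in GPU_MEMORY_GB:
        print(gpu, "headroom with FP8:", headroom_gb(params, "fp8", gpu), "GB")
```

On a 24 GB card, BF16 weights alone would leave essentially no room for anything else, whereas FP8 leaves about half the memory free, which is why the lower-precision format matters for single-GPU deployment.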

Cutting-Edge Model Creation and Personalisation

The combined expertise of Mistral AI and NVIDIA engineers has optimized training and inference for Mistral NeMo.

Trained with Mistral AI’s expertise in multilinguality, coding, and multi-turn content generation, the model benefits from accelerated training on NVIDIA’s full stack.

It is designed for optimal performance, using Megatron-LM’s efficient model-parallelism techniques, scalability, and mixed precision.

The model was trained with Megatron-LM, part of NVIDIA NeMo, on 3,072 H100 80GB Tensor Core GPUs on DGX Cloud, which is built on NVIDIA AI architecture, including accelerated computing, network fabric, and software, to increase training efficiency.
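Model parallelism is what lets a single layer span many GPUs. The pure-Python sketch below illustrates one form that Megatron-LM popularized, tensor (intra-layer) parallelism for a linear layer: the weight matrix is split along its output dimension, each shard computes its slice of the output from the full input, and the slices are concatenated. It is a teaching sketch with plain lists standing in for GPUs, not Megatron-LM code; a real implementation runs the shards on separate devices and gathers the results with a collective operation.

```python
from typing import List

Matrix = List[List[float]]


def matvec(W: Matrix, x: List[float]) -> List[float]:
    """Dense y = W @ x, with W stored as a list of rows (one row per output)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def shard_outputs(W: Matrix, num_shards: int) -> List[Matrix]:
    """Split W's rows (its output dimension) into contiguous shards, one per device."""
    size = -(-len(W) // num_shards)  # ceiling division so every row is assigned
    return [W[i:i + size] for i in range(0, len(W), size)]


def tensor_parallel_matvec(W: Matrix, x: List[float], num_shards: int) -> List[float]:
    """Each shard computes a slice of y using the full input x; concatenating the
    slices plays the role of the all-gather. (In Megatron-LM's Y = XA notation,
    this split corresponds to a column-parallel linear layer.)"""
    y: List[float] = []
    for shard in shard_outputs(W, num_shards):  # in practice these run concurrently
        y.extend(matvec(shard, x))
    return y


if __name__ == "__main__":
    W = [[1, 2, 0], [0, 1, 1], [3, 0, 1], [1, 1, 1]]
    x = [1, 2, 3]
    print(tensor_parallel_matvec(W, x, num_shards=2), matvec(W, x))
```

The sharded result matches the dense one exactly; the engineering challenge at the scale of thousands of GPUs is keeping the communication between shards cheap.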

Availability and Deployment

Mistral NeMo, equipped with the ability to operate on any cloud, data centre, or RTX workstation, is poised to transform AI applications on a multitude of platforms.

Mistral AI is excited to present Mistral NeMo, a 12B model built in collaboration with NVIDIA. Mistral NeMo offers a large context window of up to 128k tokens. Its reasoning, world knowledge, and coding accuracy are state of the art in its size category. Because Mistral NeMo relies on a standard architecture, it is easy to use and can serve as a drop-in replacement in any system that uses Mistral 7B.

To promote adoption by researchers and businesses, Mistral AI has released pre-trained base and instruction-tuned checkpoints under the Apache 2.0 licence. Mistral NeMo was trained with quantization awareness, enabling FP8 inference without any loss in performance.

The accompanying table compares the accuracy of the Mistral NeMo base model with two recent open-source pre-trained models, Gemma 2 9B and Llama 3 8B. (Image credit: NVIDIA)

Multilingual Model for the Masses

The model is designed for global, multilingual applications. It is particularly strong in English, French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, and Hindi. It is trained on function calling and has a large context window. This is a new step toward making cutting-edge AI models available to everyone in all the languages that make up human culture. (Image credit: NVIDIA)

Tekken, a more efficient tokenizer

Mistral NeMo uses Tekken, a new tokenizer based on Tiktoken and trained on more than 100 languages, which compresses natural language text and source code more efficiently than the SentencePiece tokenizer used by earlier Mistral models. In particular, it is about 30% more efficient at compressing source code and Chinese, Italian, French, German, Spanish, and Russian text. It is also considerably more efficient at compressing Korean and Arabic, by up to three times. Compared with the Llama 3 tokenizer, Tekken compressed text better for over 85% of all languages. (Image credit: NVIDIA)
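A claim such as "30% more efficient" is easiest to read as a ratio of token counts for the same text. The short Python sketch below shows one way to compute it; the token counts in the example are made up, and whether the published figures use exactly this definition is an assumption.

```python
def compression_gain(baseline_tokens: int, new_tokens: int) -> float:
    """Relative compression gain of a new tokenizer over a baseline on the same text.

    If the baseline needs 130 tokens and the new tokenizer needs 100, each new
    token covers 1.3x as much text, i.e. a gain of 0.3 (30%).
    """
    if baseline_tokens <= 0 or new_tokens <= 0:
        raise ValueError("token counts must be positive")
    return baseline_tokens / new_tokens - 1.0


def bytes_per_token(text: str, num_tokens: int) -> float:
    """Average UTF-8 bytes represented by each token (higher means better compression)."""
    return len(text.encode("utf-8")) / num_tokens


if __name__ == "__main__":
    # Hypothetical counts for one document under two tokenizers.
    print(f"{compression_gain(130, 100):.0%} gain")  # prints "30% gain"
```

Measuring bytes per token over a representative corpus per language, rather than a single document, is what makes such comparisons meaningful.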

Instruction fine-tuning

Mistral NeMo underwent an advanced fine-tuning and alignment phase. Compared with Mistral 7B, it is much better at following precise instructions, reasoning, handling multi-turn conversations, and generating code.

#mistralnemo#parameter#mistrai#chatbots#nvidia#hardware#software#nvidiadgxcloud#nvidianemo#nvidiatensorrtllm#generativeai#aimodel#unprecedentedprecision#adaptability#nvidianim#nvidiartx#technology#technews#news#govindhtech

NVIDIA AI Workbench: Simplify and Scale Your AI Workflows

How NVIDIA AI Workbench enhances app development: with this free tool, developers can experiment with, test, and prototype AI apps.

Demand for tools that simplify and optimize generative AI development is rising rapidly. Applications based on retrieval-augmented generation (RAG), a technique for improving the accuracy and reliability of generative AI models with facts fetched from specified external sources, together with customized models, now let developers tune AI models to their specific needs.

This kind of work once required a complicated setup, but modern tools are making it simpler than ever.

Nvidia AI Workbench

NVIDIA AI Workbench streamlines AI development workflows, making it easier for users to build their own RAG projects, customize models, and more. It is part of the RTX AI Toolkit, a suite of tools and software development kits for customizing, optimizing, and deploying AI capabilities, introduced at COMPUTEX earlier this month. AI Workbench removes the complexity of technical tasks that can trip up experts and stop beginners.

What Is NVIDIA AI Workbench?

NVIDIA AI Workbench is a free tool that lets users develop, test, and prototype AI applications for GPU systems such as workstations, laptops, data centers, and clouds. It offers a new approach to creating, using, and sharing GPU-enabled development environments across users and platforms.

Users can quickly get up and running with NVIDIA AI Workbench on a local or remote machine with a simple installation. They can then start a new project or clone one of the examples on GitHub. Everything works through GitHub or GitLab, so users can easily collaborate and distribute work.

How AI Workbench Assists in Overcoming AI Project Difficulties

Developing AI workloads can require manual, often complex processes from the start.

Setting up GPUs, updating drivers, and managing versioning incompatibilities can be cumbersome. Reproducing projects across different systems can require repeating the same manual steps over and over. Inconsistencies in replicating projects, such as data fragmentation and version-control problems, can hinder collaboration. Varying setup procedures, moving credentials and secrets, and changes to the environment, data, models, and file locations can all limit project portability.

NVIDIA AI Workbench makes it easier for data scientists and developers to manage their work and collaborate across heterogeneous platforms. It integrates and automates many aspects of the development process, including:

Simple setup: Even for those with little technical expertise, AI Workbench makes it easy to set up a GPU-accelerated development environment.

Seamless collaboration: AI Workbench integrates with GitHub and GitLab, two popular version-control and project-management tools, making collaboration easier.

Consistency across environments: AI Workbench supports scaling up or down from local workstations or PCs to data centers or the cloud, ensuring consistency across different environments.

To help users get started with AI Workbench, NVIDIA provides sample development Workbench Projects.

RAG for Documents, Easier Than Ever

One example is the hybrid RAG Workbench Project: it launches a custom, text-based RAG web application that uses a user’s documents on their local workstation, PC, or remote system.

Nvidia Workbench AI

Each Workbench Project runs in a “container”, software that includes all the components needed to run the AI application. In the hybrid RAG sample, a Gradio chat interface frontend on the host machine is paired with a containerized RAG server: the backend that services user requests and routes queries to and from the vector database and the selected large language model.

This Workbench Project supports a wide variety of LLMs, which are listed on NVIDIA’s GitHub page. And because the project is hybrid, users can choose where to run inference.

Developers can run inference locally on a Hugging Face Text Generation Inference server, on target cloud resources through NVIDIA inference endpoints such as the NVIDIA API catalog, or with self-hosted microservices such as NVIDIA NIM or third-party services.

Hybrid RAG Workbench Project

Moreover, the hybrid RAG Workbench Project consists of:

Performance metrics: Users can evaluate how both RAG- and non-RAG-based queries perform in each inference mode. Tracked metrics include Retrieval Time, Time to First Token (TTFT), and Token Velocity.

Retrieval transparency: A panel shows the exact snippets of text, retrieved from the most contextually relevant content in the vector database, that are fed into the LLM to make its response more relevant to the user’s query.

Response customization: Responses can be tuned with various parameters, such as the maximum number of tokens to generate, temperature, and frequency penalty.
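The response parameters and metrics listed above can be made concrete with a toy decoding loop. The Python sketch below assumes OpenAI-style semantics (the frequency penalty subtracts penalty × prior count from a token's logit, temperature divides logits before the softmax, and max tokens caps generation length) and treats Token Velocity as tokens per second. The logits function stands in for a real model and is hypothetical; this is not the Workbench Project's implementation.

```python
import math
import random
import time
from collections import Counter
from typing import Callable, Iterable, Iterator, List


def sample_next(logits: List[float], counts: Counter, temperature: float,
                frequency_penalty: float, rng: random.Random) -> int:
    """Choose the next token id from raw logits.

    The frequency penalty lowers each token's logit in proportion to how often it
    has already been generated; temperature rescales logits before the softmax.
    """
    adjusted = [logit - frequency_penalty * counts[i] for i, logit in enumerate(logits)]
    if temperature <= 0:  # treat temperature 0 as greedy decoding
        return max(range(len(adjusted)), key=adjusted.__getitem__)
    scaled = [a / temperature for a in adjusted]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # stable, unnormalized softmax
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]


def generate(logits_fn: Callable[[List[int]], List[float]], max_tokens: int,
             temperature: float = 0.7, frequency_penalty: float = 0.0,
             seed: int = 0) -> Iterator[int]:
    """Stream up to max_tokens token ids from a (hypothetical) logits function."""
    rng = random.Random(seed)
    counts: Counter = Counter()
    history: List[int] = []
    for _ in range(max_tokens):  # max tokens caps the response length
        token = sample_next(logits_fn(history), counts, temperature, frequency_penalty, rng)
        counts[token] += 1
        history.append(token)
        yield token


def measure(stream: Iterable[int], clock: Callable[[], float] = time.perf_counter):
    """Return (time to first token in seconds, tokens per second) for a token stream."""
    start = clock()
    first = None
    count = 0
    for _ in stream:
        count += 1
        if first is None:
            first = clock() - start
    total = clock() - start
    ttft = first if first is not None else float("nan")
    velocity = count / total if total > 0 else float("inf")
    return ttft, velocity


if __name__ == "__main__":
    def toy_model(history: List[int]) -> List[float]:
        # Hypothetical stand-in for an LLM: fixed preferences over a 4-token vocabulary.
        return [2.0, 1.5, 1.0, 0.5]

    stream = generate(toy_model, max_tokens=32, temperature=0.8, frequency_penalty=0.5)
    ttft, velocity = measure(stream)
    print(f"TTFT: {ttft * 1000:.3f} ms, velocity: {velocity:.0f} tokens/s")
```

Because the generator is lazy, TTFT here includes the work needed to produce the first token, which mirrors how streaming endpoints are usually timed. Retrieval Time would be measured separately, around the vector-database query.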

To get started with this project, install NVIDIA AI Workbench on a local PC. You can then import the hybrid RAG Workbench Project from GitHub into your account and duplicate it to your local PC.

Customize, Optimize, Deploy

Developers often want to customize AI models for specific use cases. Fine-tuning, a technique that changes a model by training it on additional data, can be useful for style transfer or for changing the model’s behavior. AI Workbench also helps with fine-tuning.

The Llama-factory AI Workbench Project enables QLoRA, a fine-tuning method that reduces memory requirements, for a range of models, as well as model quantization through an easy-to-use graphical user interface. Developers can use public datasets or their own datasets to meet the needs of their applications.
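The memory savings behind LoRA-style fine-tuning, which QLoRA builds on, come from training a small low-rank update instead of the full weight matrix; QLoRA additionally stores the frozen base weights in 4-bit precision. The back-of-the-envelope Python sketch below counts parameters and bytes for a single layer. The 4096 × 4096 layer size and rank 16 are illustrative choices, not settings from the Llama-factory project, and the 4-bit figure ignores the small overhead of quantization constants.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA freezes W (d_out x d_in) and trains B (d_out x rank) and A (rank x d_in),
    so the update B @ A adds rank * (d_in + d_out) trainable parameters."""
    return rank * (d_in + d_out)


def full_params(d_in: int, d_out: int) -> int:
    """Parameters updated by ordinary full fine-tuning of the same matrix."""
    return d_in * d_out


def frozen_weight_bytes(d_in: int, d_out: int, bits: int) -> int:
    """Storage for the frozen base matrix at a given precision (e.g. 16-bit or 4-bit)."""
    return d_in * d_out * bits // 8


if __name__ == "__main__":
    d, r = 4096, 16  # illustrative layer width and LoRA rank
    print(lora_trainable_params(d, d, r), "trainable vs", full_params(d, d), "full")
    print(frozen_weight_bytes(d, d, 16), "bytes at 16-bit vs",
          frozen_weight_bytes(d, d, 4), "bytes at 4-bit")
```

For this layer, LoRA trains under 1% of the parameters (131,072 of 16,777,216, exactly 1/128), and 4-bit storage cuts the frozen weights to a quarter of their 16-bit size.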

Once fine-tuning is complete, the model can be quantized to reduce its memory footprint and improve performance, then deployed to native Windows applications for local inference or to NVIDIA NIM for cloud inference. A complete tutorial for this project is available in the NVIDIA RTX AI Toolkit repository.

Truly Hybrid: Run AI Workloads Anywhere

The Hybrid RAG Workbench Project described above is hybrid in more than one way. Besides offering a choice of inference modes, it can run locally on GeForce RTX PCs and NVIDIA RTX workstations or be scaled up to remote cloud servers and data centers.

All Workbench Projects can run on the user’s platforms of choice without the hassle of setting up infrastructure. See the NVIDIA AI Workbench quick-start guide for more examples and guidance on customization and fine-tuning.

Generative AI is transforming gaming, videoconferencing, and interactive experiences of all kinds. Subscribe to the AI Decoded newsletter to keep up with what’s new and what’s next.

#AI#NVIDIA#AIWorkbenchNVIDIA#aiapps#generativeai#nvidiartx#geforcertxpcs#nvidianim#technology#technews#news#govindhtech

NVIDIA Project G-Assist, RTX-powered AI assistant showcase

AI assistant

Project G-Assist, an RTX-powered AI assistant technology showcase from NVIDIA, provides context-aware help for PC games and apps. The Project G-Assist tech demo debuted with ARK: Survival Ascended from Studio Wildcard. NVIDIA also introduced the first PC-based NVIDIA NIM inference microservices for the NVIDIA ACE digital human platform.

These technologies are enabled by the NVIDIA RTX AI Toolkit, a new suite of tools and software development kits that help developers optimize and deploy large generative AI models on Windows PCs. They join NVIDIA’s full-stack RTX AI innovations, which already accelerate more than 500 PC apps and games and 200 laptop designs from OEMs.

In addition, ASUS and MSI have just unveiled RTX AI PC laptops with up to GeForce RTX 4070 GPUs and power-efficient systems-on-a-chip with Windows 11 AI PC capabilities. These Windows 11 AI PCs will receive a free update to Copilot+ PC experiences when it becomes available.

“In 2018, NVIDIA ushered in the era of AI PCs with the introduction of NVIDIA DLSS and RTX Tensor Core GPUs,” stated Jason Paul, NVIDIA’s vice president of consumer AI. “Now, NVIDIA is opening up the next generation of AI-powered experiences for over 100 million RTX AI PC users with Project G-Assist and NVIDIA ACE.”

Best AI Assistant

GeForce AI Assistant Project G-Assist

AI assistants are set to transform gaming and in-app experiences, from offering gaming strategies and analyzing multiplayer replays to assisting with complex creative workflows. Project G-Assist offers a glimpse of this future.

Even the most devoted players will find it difficult and time-consuming to grasp the complex mechanics and expansive universes found in PC games. With generative AI, Project G-Assist seeks to provide players with instant access to game expertise.

Project G-Assist takes voice or text input from the player, along with contextual information from the game screen and other inputs, and runs it through AI vision models. These models enhance the contextual awareness and app-specific understanding of a large language model (LLM) linked to a game knowledge database, which then generates a tailored response that can be delivered as text or speech.

NVIDIA and Studio Wildcard collaborated to showcase the technology in ARK: Survival Ascended. Project G-Assist can help answer questions about monsters, gear, lore, objectives, challenging bosses, and more. Because it is context-aware, it tailors its responses to the player’s game session.

Project G-Assist can also configure the player’s gaming system for optimal performance and efficiency. It can provide insights into performance metrics, optimize graphics settings for the user’s hardware, apply a safe overclock, and even automatically reduce power consumption while meeting performance targets.

Initial ACE PC NIM Releases

NVIDIA ACE technology for powering digital humans is coming soon to RTX AI PCs and workstations. With NVIDIA NIM inference microservices, developers can cut deployment times from weeks to minutes. ACE NIM microservices deliver high-quality inference running locally on devices for natural language understanding, speech synthesis, facial animation, and more.

The PC gaming debut of NVIDIA ACE NIM will be showcased at COMPUTEX in the Covert Protocol tech demo, developed in collaboration with Inworld AI. The demo now features NVIDIA Audio2Face and NVIDIA Riva automatic speech recognition running locally on devices.

Windows Copilot Runtime to Add GPU Acceleration for Local PC SLMs

Microsoft and NVIDIA are collaborating to help developers bring new generative AI capabilities to their Windows native and web apps. Through this collaboration, application developers will get easy application programming interface (API) access to GPU-accelerated small language models (SLMs) that enable retrieval-augmented generation (RAG) capabilities running on-device as part of Windows Copilot Runtime.

SLMs offer Windows developers a wealth of possibilities, including content summarization, content generation, and task automation. RAG capabilities augment SLMs by giving the AI models access to domain-specific information that is underrepresented in base models. With RAG APIs, developers can tap application-specific data sources and tailor SLM capabilities and behavior to individual application needs.

NVIDIA RTX GPUs and other hardware makers’ AI accelerators will speed up these AI capabilities, giving end users quick, responsive AI experiences throughout the Windows ecosystem.

Later this year, the developer preview of the API will be made available.

Using the RTX AI Toolkit, Models Are 4x Faster and 3x Smaller

Hundreds of thousands of open-source models have been developed by the AI ecosystem and are available for use by app developers; however, the majority of these models are pretrained for public use and designed to run in a data centre.

NVIDIA is announcing RTX AI Toolkit, a collection of tools and SDKs for model customisation, optimisation, and deployment on RTX AI PCs, to assist developers in creating application-specific AI models that run on PCs. Later this month, RTX AI Toolkit will be made accessible to developers on a larger scale.

Developers can customize a pretrained model with open-source QLoRA tools. They can then use the NVIDIA TensorRT Model Optimizer to quantize models so that they consume up to 3x less RAM. Finally, NVIDIA TensorRT Cloud optimizes the model for peak performance across RTX GPU lineups. The result is up to 4x faster performance than the pretrained model.

The process of deploying ACE to PCs is made easier by the recently released early access version of the NVIDIA AI Inference Manager SDK. It orchestrates AI inference across PCs and the cloud with ease and preconfigures the PC with the required AI models, engines, and dependencies.

To improve AI performance on RTX PCs, software partners including Adobe, Blackmagic Design, and Topaz are incorporating RTX AI Toolkit components into their well-known creative applications.

According to Deepa Subramaniam, vice president of product marketing for Adobe Creative Cloud, “Adobe and NVIDIA continue to collaborate to deliver breakthrough customer experiences across all creative workflows, from video to imaging, design, 3D, and beyond.” “TensorRT 10.0 on RTX PCs unlocks new creative possibilities for content creation in industry-leading creative tools like Photoshop, delivering unparalleled performance and AI-powered capabilities for creators, designers, and developers.”

TensorRT-LLM, a component of the RTX AI Toolkit, is integrated into popular generative AI development frameworks and applications, including Automatic1111, ComfyUI, Jan.AI, LangChain, LlamaIndex, Oobabooga, and Sanctum.AI.

Using AI in Content Creation

Additionally, NVIDIA is adding RTX AI acceleration to programmes designed for modders, makers, and video fans.

Last year, NVIDIA introduced TensorRT-based RTX acceleration for Automatic1111, one of the most popular Stable Diffusion user interfaces. Starting this week, RTX will also accelerate the popular ComfyUI, delivering up to 60% higher performance than the currently shipping version and up to 7x faster performance than the MacBook Pro M3 Max.

The NVIDIA RTX Remix modding platform lets modders remaster classic DirectX 8 and DirectX 9 games with full ray tracing, NVIDIA DLSS 3.5, and physically accurate materials. RTX Remix includes a runtime renderer and the RTX Remix Toolkit app, which makes it easier to mod game assets and materials.

When NVIDIA released RTX Remix Runtime as open source last year, it enabled modders to increase rendering power and game compatibility.

Since the RTX Remix Toolkit launched earlier this year, 20,000 modders have used it to mod classic games, and over 100 RTX remasters are now in development on the RTX Remix Showcase Discord.

This month, NVIDIA will release the RTX Remix Toolkit as open source, enabling modders to improve the speed at which scenes are relit and assets are replaced, expand the file formats that RTX Remix’s asset ingestor can handle, and add additional models to the AI Texture Tools.

Furthermore, NVIDIA is enabling modders to livelink RTX Remix to digital content creation tools like Blender, modding tools like Hammer, and generative AI programmes like ComfyUI by providing access to the capabilities of RTX Remix Toolkit through a REST API. In order to enable modders to integrate the renderer of RTX Remix into games and applications other than the DirectX 8 and 9 classics, NVIDIA is now offering an SDK for RTX Remix Runtime.

More of the RTX Remix platform is becoming open source, enabling modders anywhere to create even more amazing RTX remasters.

The SDK for NVIDIA RTX Video, the popular AI-powered super-resolution feature supported in the Google Chrome, Microsoft Edge, and Mozilla Firefox browsers, is now available to all developers. It lets developers natively integrate AI for tasks such as upscaling, sharpening, compression artefact reduction, and high-dynamic range (HDR) conversion.

RTX Video is coming soon to the video editing software Wondershare Filmora and Blackmagic Design’s DaVinci Resolve, letting video editors upscale lower-quality video files to 4K resolution and convert standard dynamic range source files into HDR. The free VLC media player will also soon add RTX Video HDR to its existing super-resolution capability.

Read more on govindhtech.com

KServe Providers Serve NIMble Cloud & Data Centre Inference

Deploying generative AI in the enterprise is about to get easier than ever.

NVIDIA NIM, a set of microservices for generative AI inference, will integrate with KServe, open-source software that automates the deployment of AI models at the scale of a cloud computing application.

The combination means generative AI can be deployed like any other large enterprise application. It also makes NIM available to a broad audience through platforms from companies such as Red Hat, Canonical, and Nutanix.

Integrating NIM on KServe extends NVIDIA’s technologies to its customers, ecosystem partners, and the open-source community. With a single API call through NIM, all of them can get the security, performance, and support of the NVIDIA AI Enterprise software platform, the modern programming equivalent of a push button.

AI provisioning on Kubernetes

KServe started as part of Kubeflow, an open-source machine learning toolkit built on Kubernetes, the open-source system for deploying and managing the software containers that hold all the components of large distributed applications.

As Kubeflow’s work on AI inference expanded, it became KServe, which eventually evolved into an open-source project in its own right.

The KServe software is currently used by numerous organisations, including AWS, Bloomberg, Canonical, Cisco, Hewlett Packard Enterprise, IBM, Red Hat, Zillow, and NVIDIA, many of which have also contributed to it.

Behind the Scenes With KServe

In essence, KServe is an extension of Kubernetes that runs AI inference like a powerful cloud application. It uses a standard protocol, runs with optimised performance, and supports PyTorch, Scikit-learn, TensorFlow, and XGBoost without requiring users to know the details of those AI frameworks.
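To make the framework-agnostic point concrete, here is a minimal sketch of a KServe `InferenceService` manifest built as a Python dict (Kubernetes accepts JSON as well as YAML). It assumes the `serving.kserve.io/v1beta1` API with its `modelFormat` and `storageUri` fields; the service name and storage path are hypothetical placeholders, not values from the article.

```python
import json


def inference_service(name: str, model_format: str, storage_uri: str) -> dict:
    """Return a minimal KServe InferenceService manifest as a dict.

    The structure is the same for every framework; only modelFormat.name
    differs (for example "sklearn", "xgboost", "pytorch" or "tensorflow").
    """
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                "model": {
                    "modelFormat": {"name": model_format},
                    # Location of the trained model artefact (hypothetical path below).
                    "storageUri": storage_uri,
                }
            }
        },
    }


if __name__ == "__main__":
    manifest = inference_service(
        "iris-classifier", "sklearn", "gs://example-bucket/models/iris"
    )
    # Save the output to a file and apply it with: kubectl apply -f iris.json
    print(json.dumps(manifest, indent=2))
```

Switching to an XGBoost model would change only the second argument to `"xgboost"` and the storage path; KServe picks a matching serving runtime from the declared format.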

The software is especially useful now, as new large language models (LLMs) are emerging rapidly.

KServe lets users easily switch between models to test which one best meets their needs. And when an updated version of a model becomes available, a KServe feature called “canary rollouts” automates the job of carefully validating it and gradually deploying it into production.
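The idea behind a canary rollout can be sketched as a loop that hands the new version a growing share of traffic and rolls back as soon as it misbehaves. This is a generic illustration, not KServe’s controller logic: the step percentages, the error-rate threshold, and the `error_rate_at` callback are hypothetical. In KServe, the traffic share is set through the predictor’s `canaryTrafficPercent` field.

```python
import random
from typing import Callable


def route(canary_percent: int, rng: random.Random) -> str:
    """Send one request to the canary or the stable version by traffic share."""
    return "canary" if rng.random() * 100 < canary_percent else "stable"


def canary_rollout(
    error_rate_at: Callable[[int], float],
    steps: tuple[int, ...] = (10, 25, 50, 100),
    max_error_rate: float = 0.01,
) -> int:
    """Shift traffic to a new model version step by step.

    error_rate_at(percent) reports the new version's observed error rate while
    it serves `percent` of the traffic. Returns the new version's final share:
    100 if it passed every step, 0 if it was rolled back.
    """
    for percent in steps:
        # In KServe, this share maps to spec.predictor.canaryTrafficPercent.
        if error_rate_at(percent) > max_error_rate:
            return 0  # roll back: all traffic returns to the previous version
    return 100  # promoted: the new version now serves all traffic


if __name__ == "__main__":
    print(canary_rollout(lambda pct: 0.002))  # healthy model: prints 100
    print(canary_rollout(lambda pct: 0.002 if pct < 50 else 0.05))  # prints 0
    rng = random.Random(0)
    sample = [route(10, rng) for _ in range(1000)]
    print(sample.count("canary"), "of 1000 requests hit the canary")
```

Gating every step on a health check is what makes the release progressive: a regression that only appears under heavier load is caught at that step, before the old version is retired.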

Another feature, GPU autoscaling, efficiently manages how models are deployed as demand for a service rises and falls, so both customers and service providers get the best possible experience.
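As a rough illustration, here is a simplified version of the concurrency-based rule used by request-driven autoscalers such as Knative, which KServe can run on: divide in-flight requests by a per-replica target, round up, and clamp to the configured bounds. KServe exposes settings along these lines (`minReplicas`, `maxReplicas`, `scaleTarget`), but this sketch is an assumption-laden simplification: it treats each replica as holding one GPU and ignores the time-window averaging and burst handling that real autoscalers perform.

```python
import math


def desired_replicas(
    in_flight_requests: float,
    target_per_replica: float,
    min_replicas: int = 1,
    max_replicas: int = 8,
) -> int:
    """Simplified concurrency-based scaling rule.

    Each replica (assumed here to hold one GPU) should handle about
    target_per_replica concurrent requests; the result is clamped to the
    configured bounds. min_replicas=0 allows scale-to-zero when idle.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(in_flight_requests / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    for load in (0, 3, 10, 40, 100):
        replicas = desired_replicas(load, target_per_replica=4)
        print(load, "in-flight requests ->", replicas, "replicas")
```

The upper bound caps GPU spend during spikes, while the lower bound (or scale-to-zero) decides whether idle capacity is kept warm.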

KServe API

KServe’s benefits will now be available with the convenience of NVIDIA NIM.

With NIM, a single API call takes care of all the complexity. Enterprise IT administrators get the metrics they need to ensure their application runs as efficiently and effectively as possible, whether it is in their data centre or on a remote cloud service, and even if they change the AI models they are using.

NIM lets IT professionals become generative AI experts and transform their organisation’s operations. That is why many enterprises, including Foxconn and ServiceNow, are deploying NIM microservices.

NIM Rides on Numerous Kubernetes Platforms

Thanks to its integration with KServe, users will be able to access NIM on numerous enterprise platforms, including Red Hat’s OpenShift AI, Canonical’s Charmed Kubeflow and Charmed Kubernetes, Nutanix GPT-in-a-Box 2.0, and many more.

“Red Hat and NVIDIA are making open source AI deployment easier for enterprises,” said Yuan Tang, a principal software engineer at Red Hat who contributes to KServe. By upgrading KServe and adding NIM support to Red Hat OpenShift AI, he said, the companies can simplify Red Hat customers’ access to NVIDIA’s generative AI platform.

“NVIDIA NIM inference microservices will enable consistent, scalable, secure, high-performance generative AI applications from the cloud to the edge with Nutanix GPT-in-a-Box 2.0,” said Debojyoti Dutta, vice president of engineering at Nutanix, whose team also contributes to KServe and Kubeflow.

Andreea Munteanu, MLOps product manager at Canonical, stated, “We’re happy to offer NIM through Charmed Kubernetes and Charmed Kubeflow as a company that also contributes significantly to KServe.” “Their combined efforts will enable users to fully leverage the potential of generative AI, with optimal performance, ease of use, and efficiency.”

Dozens of other software providers also benefit from NIM simply because KServe is part of their product offerings.

Contributing to the Open-Source Community

NVIDIA has a long history with the KServe project. As noted in a recent technical blog, NVIDIA Triton Inference Server uses KServe’s Open Inference Protocol, and Triton lets users run many AI models concurrently across multiple GPUs, frameworks, and operating modes.
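A shared protocol means the same client request works against KServe runtimes and Triton alike. The sketch below builds (but does not send) a request in the Open Inference Protocol’s REST form as we understand it: `POST /v2/models/{model_name}/infer`, with each input tensor described by `name`, `shape`, `datatype`, and a flat, row-major `data` list. The server address (port 8000 is Triton’s default HTTP port), the model name `iris`, and the input name `input-0` are hypothetical.

```python
import json
import urllib.request


def v2_infer_request(
    base_url: str, model_name: str, input_name: str, rows: list[list[float]]
) -> urllib.request.Request:
    """Build an Open Inference Protocol (v2) REST inference request.

    The tensor is sent as a flat, row-major list together with its shape and
    datatype, which is how the protocol's JSON encoding represents tensors.
    """
    shape = [len(rows), len(rows[0]) if rows else 0]
    flat = [value for row in rows for value in row]
    body = {
        "inputs": [
            {"name": input_name, "shape": shape, "datatype": "FP32", "data": flat}
        ]
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v2/models/{model_name}/infer",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical local server, model name and input name.
    req = v2_infer_request(
        "http://localhost:8000", "iris", "input-0",
        [[6.8, 2.8, 4.8, 1.4], [6.0, 3.4, 4.5, 1.6]],
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # Against a running server, urllib.request.urlopen(req) returns JSON
    # whose "outputs" list mirrors the structure of "inputs".
```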

With KServe, NVIDIA focuses on use cases that involve running a single AI model across many GPUs.

As part of the NIM integration, NVIDIA plans to be an active contributor to KServe, building on its portfolio of open-source contributions, which already includes Triton and TensorRT-LLM. NVIDIA is also an active member of the Cloud Native Computing Foundation, which supports open-source code for generative AI and other projects.

Developers can try the NIM API now in the NVIDIA API Catalogue using the Llama 3 8B or Llama 3 70B models. Hundreds of NVIDIA partners worldwide are using NIM to deploy generative AI.
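The “single API call” is, in practice, an OpenAI-style chat-completions request. The sketch below builds one without sending it. The endpoint URL, the model identifiers `meta/llama3-8b-instruct` and `meta/llama3-70b-instruct`, and the `NVIDIA_API_KEY` environment variable name are assumptions based on the API Catalogue’s naming as we understand it; check them against the catalogue’s own examples before use.

```python
import json
import os
import urllib.request
from typing import Optional

# Assumed API Catalogue endpoint; verify against the catalogue's code samples.
NIM_BASE_URL = "https://integrate.api.nvidia.com/v1"


def nim_chat_request(
    prompt: str,
    model: str = "meta/llama3-8b-instruct",
    api_key: Optional[str] = None,
) -> urllib.request.Request:
    """Build one OpenAI-style chat-completions call to a NIM endpoint."""
    key = api_key if api_key is not None else os.environ.get("NVIDIA_API_KEY", "")
    body = {
        "model": model,  # e.g. "meta/llama3-70b-instruct" for the larger model
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{NIM_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = nim_chat_request("Summarise what KServe does in one sentence.")
    print(req.get_method(), req.full_url)
    # To actually send it (requires a valid key and network access):
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because the request shape follows the widely used chat-completions convention, switching between the 8B and 70B models is a one-string change.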

Read more on Govindhtech.com

Gigabyte unveils GIGA POD for AI and Energy Efficiency

GIGABYTE’s GIGA POD booth features

Giga Computing, a division of GIGABYTE and an industry leader in AI servers and green computing, announced that it will be at COMPUTEX, showcasing solutions for large-scale, complex AI workloads alongside advanced cooling infrastructure that improves energy efficiency. GIGABYTE also plans to offer NVIDIA GB200 NVL72 systems in Q1 2025 to support advances in accelerated computing and generative AI. As an NVIDIA-Certified System provider, GIGABYTE also supports NVIDIA NIM inference microservices, part of the NVIDIA AI Enterprise software platform, on its servers.

Redefining AI Servers and Future Data Centres

The GIGABYTE booth features GIGA POD, GIGABYTE’s rack-scale AI solution, alongside current and upcoming CPU and accelerated computing technologies. The latest solutions on display, including the NVIDIA GH200 Grace Hopper Superchip, NVIDIA HGX B100, NVIDIA HGX H200, and other OAM baseboard GPU systems, show GIGA POD’s versatility. GIGA POD is a turnkey solution that supports baseboard accelerators at scale with switches, networking, compute nodes, and more. It also supports NVIDIA Spectrum-X, which provides powerful networking for generative AI infrastructure.

A preview of the NVIDIA GB200 NVL72 system is a major attraction in the AI section of the GIGABYTE booth. The NVIDIA GB200 NVL72 delivers up to 30X faster large language model (LLM) inference at 25X lower cost and with 25X less energy. It is a liquid-cooled, rack-scale solution that uses NVIDIA NVLink to connect 72 NVIDIA Blackwell GPUs and 36 NVIDIA Grace CPUs into a single massive GPU with 130 TB/s of bandwidth.
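The rack figures above imply a few useful ratios, worked out below. The pairing of one Grace CPU with two Blackwell GPUs per GB200 superchip follows from the 72:36 count; dividing the 130 TB/s aggregate evenly across GPUs is a simplification, though the result lines up with the roughly 1.8 TB/s per GPU that NVIDIA quotes for fifth-generation NVLink.

```python
# Figures quoted for the NVIDIA GB200 NVL72 rack.
BLACKWELL_GPUS = 72
GRACE_CPUS = 36
NVLINK_DOMAIN_TB_S = 130.0  # aggregate NVLink bandwidth, TB/s

# Each GB200 superchip pairs one Grace CPU with two Blackwell GPUs.
gpus_per_cpu = BLACKWELL_GPUS // GRACE_CPUS
superchips = GRACE_CPUS

# Simplification: spread the aggregate NVLink bandwidth evenly over the GPUs.
per_gpu_tb_s = NVLINK_DOMAIN_TB_S / BLACKWELL_GPUS

if __name__ == "__main__":
    print(f"{superchips} superchips, {gpus_per_cpu} GPUs per Grace CPU")
    print(f"~{per_gpu_tb_s:.2f} TB/s of NVLink bandwidth per GPU")  # ~1.81
```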

Applying Cutting-Edge Cooling Technologies

To reach new levels of cooling efficiency, GIGABYTE will present a wide range of products and partners for direct liquid cooling (DLC) and single-phase immersion cooling. The days when processors with a TDP of 100 to 200 W delivered the highest performance are long gone. Next-generation systems need liquid cooling, which removes heat faster and more efficiently than air cooling.

GIGABYTE’s DLC rack houses servers for AMD, Intel, and NVIDIA platforms, together with solutions from its technology partners CoolIT Systems and Motivair. One standout DLC server is the GIGABYTE G4L3-SD1, which supports an NVIDIA HGX B200 8-GPU baseboard and dual Intel Xeon processors and uses cold plates for liquid cooling. The rack also includes the G593-ZX1, which liquid-cools AMD EPYC 9004 processors and OAM modules. GIGABYTE’s immersion cooling portfolio shows what is possible with immersion tanks and immersion-ready servers, enabling even greater energy efficiency.

Unlocking Cloud-to-Edge Data Potential

Taking a modern approach to classic servers, GIGABYTE offers a wide range of options, including Arm-based platforms, general-purpose servers, and OCP solutions. Visitors can see advanced systems, including servers with a choice of next-generation-ready AMD EPYC processors and storage servers that support E3.S or E1.S drives. In total, more than 25 servers built for the demands of modern data centres are on display.

Professional and Passionate Server Solutions

GIGABYTE motherboards for AMD EPYC 4004, Intel Xeon W-3400, Intel Xeon 6, and other AMD and Intel processor series come with server-grade features, including RAS capabilities and a BMC chip. Alongside the eleven motherboards are affordable entry-level servers and Broadcom-based add-in card solutions (HBA, RAID, and OCP LAN cards). This hardware is designed to be flexible, versatile, and reliable while supporting remote server management and monitoring.

The Perfect GIGA POD for You

GIGABYTE enterprise products excel in reliability, availability, and serviceability. They are also flexible, with options for GPU, rack size, cooling, and more. GIGABYTE has experience with a wide variety of hardware configurations, data centre sizes, and IT infrastructure types. Many GIGABYTE customers choose their rack configuration based on the floor space available and the power their facility can deliver to IT systems. That is the reason behind the GIGA POD service: it gives customers options. For the first stage of component cooling and heat removal, for example, customers can choose between classic air cooling and direct liquid cooling (DLC).

Get to know the GIGA POD

GIGA POD provides the infrastructure to scale from a single GIGABYTE GPU server to a high-performance supercomputer of eight racks with 32 GPU nodes (256 GPUs in total). State-of-the-art data centres are deploying AI factories, and it all starts with a GIGABYTE GPU server.
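The stated full configuration implies a simple sizing rule: 32 nodes across 8 racks is 4 nodes per rack, and 256 GPUs across 32 nodes is 8 GPUs per node. The helper below uses those inferred ratios as defaults; they are derived from the totals above, not from a published per-rack specification, and real configurations may differ.

```python
def giga_pod_gpus(racks: int, nodes_per_rack: int = 4, gpus_per_node: int = 8) -> int:
    """Total GPUs in a GIGA POD-style cluster of identical racks.

    Defaults are inferred from the stated full configuration: 32 nodes over
    8 racks gives 4 nodes per rack, and 256 GPUs over 32 nodes gives 8 per node.
    """
    if min(racks, nodes_per_rack, gpus_per_node) < 0:
        raise ValueError("counts must be non-negative")
    return racks * nodes_per_rack * gpus_per_node


if __name__ == "__main__":
    for racks in (1, 2, 4, 8):
        print(racks, "rack(s):", racks * 4, "nodes,", giga_pod_gpus(racks), "GPUs")
```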

In addition to GPU servers, GIGA POD includes switches. Not to mention, the complete system provides easy-to-deploy hardware, software, and services.

About Giga Computing

Giga Computing Technologies is an industry innovator and leader in enterprise computing. Spun off from GIGABYTE, Giga Computing operates as a standalone company that can invest more in its core competencies while retaining GIGABYTE’s hardware manufacturing and product design expertise.

Giga Computing offers a comprehensive product portfolio covering workloads from the data centre to the edge, including traditional and emerging AI and HPC workloads, cloud computing, 5G/edge, data analytics, and more. Thanks to its long-standing relationships with key technology leaders, its new products are designed to be at the forefront of innovation and to launch alongside new partner platforms. Giga Computing systems combine performance, security, scalability, and sustainability.

Read more on Govindhtech.com

Dell Native Edge: The Key to Unlocking AI Innovation at the Edge

Dell Native Edge