#OSTP


Text

Painted Goddess🎨🤍

By:

https://www.instagram.com/o.st.p?igsh=MXZsYmpmM2tnb2ozdA==

https://www.instagram.com/share/BBSmZu6sKP

#ig model#curvy af#curvy model#curvy goddess#ig girls#goddess aesthetic#curvy body#thick goddess#thick babe#hot red head#hot white women#hot white chicks#ginger girls#ginger girl#sexy goddess#sexy ginger#body paint#red haired girl#thick white babe#thick red head#hot white girls#ostp

122 notes


Text

Thursday, August 15, 2024 - Kamala Harris & Tim Walz

The Vice President and Governor Walz joined forces on the campaign trail today, making their way through North Carolina. The state's governor, Roy Cooper, joined them. It was a busy day, with 4 campaign stops.

Event #1 (Raleigh, NC)
Event Location: Steps of the North Carolina State Capitol
Event Type: Call for Policy Change and Activism
Event Time: 9:00 - 10:00 ET

Event #2 (Raleigh, NC)
Event Location: North Carolina State University
Event Type: Get Out the Vote
Event Time: 11:00 - 13:00 ET

Event #3 (Greensboro, NC)
Event Location: NC Agricultural & Technical State University
Event Type: Interview moderated by a member of Alpha Kappa Alpha Sorority Inc, Alpha Phi Chapter
Event Time: 15:00 - 17:00 ET

Event #4 (Charlotte, NC)
Event Location: Bank of America Stadium
Event Type: Campaign Rally
Event Time: 19:00 - 22:00 ET

Raleigh, NC

The full text of the speech from event #1 will be released shortly. The event on NCSU's campus was well received, and numerous student clubs were present for the kick-off.

Greensboro, NC

Here are 2 questions that garnered a very positive response from the audience:

Q: "In today's environment of racial and identity politics, do you feel that you were forced to pick a white man as your running mate?"

A: "No, unequivocally. My decision to choose Coach Walz as my running mate was not influenced by racial or identity politics. It was about finding someone who shares my vision, my values, who has a proven track record of bringing people together to get things done, and can be a little fun while doing it. Tim is an experienced leader, a former educator, and a person who has dedicated his life to public service. I considered many exceptional individuals for this role, including leaders like Governor Whitmer, clearly if I considered a second woman identity politics weren't at play. What mattered most in my decision was finding the right person to help lead this country, someone who understands the challenges we face and who can help unify our nation during these divided times. Moving forward, I hope we can reach a point where questions like this are no longer necessary because our focus should be on qualifications, character, and the ability to lead, not just the identity of the individuals. Tim is the right choice!"

Q: "Is HBCU funding for schools like NC A&T a priority for your administration, given your personal background?"

A: "Well, let me just say, I think it’s pretty clear where my priorities lie, considering I attended an HBCU myself! I'm thankful for my Howard experience every day. And let’s not forget, my running mate, Governor Walz, is a former educator. So yes, HBCU funding is not just a priority; it’s a personal commitment. HBCUs have played a crucial role in shaping leaders, innovators, and changemakers in our country for generations. They provide not just education but also a sense of community, pride, and identity. Our administration is committed to ensuring that HBCUs have the resources they need to continue their legacy of excellence."

Charlotte, NC

Below are four quotes from the remarks made at today's rally: one from Governor Cooper, one from Governor Walz, and two from Vice President Harris.

"North Carolina is not just a battleground state; it’s the proving ground for our democracy. We’ve been underestimated before, but we know that when we stand together, nothing can stop us. We’re going to fight for every vote, every voice, because the future of our country depends on it. Now let me welcome to the stage the next Vice President of the United States of America, Tim Walz!" - Governor Roy Cooper

"We are the underdogs in this race, but we’ve got something far more powerful than any poll or pundit’s prediction: the will of the people. We’re not going back to the days of division and chaos. Together, we’re building a future that unites us, and we’re not backing down from the fight because every American deserves a better tomorrow." - Governor Tim Walz

"Donald Trump is a threat to everything we hold dear: our values, our democracy, our future. But let me tell you this: we are stronger than fear, stronger than hate, and we’re going to prove that in every corner of North Carolina and across this great nation." - Vice President Kamala Harris

"We’re not here to make America go back to some imagined ‘great’ past. We’re here to build a future that’s better for everyone. We’re not MAGA, we’re moving forward, and we’re not going to stop until every North Carolinian has a voice in that future. We’re not going back!" - Vice President Kamala Harris

~BR~

#North Carolina#Raleigh#charlotte#greensboro#HBCU#Education#University#Student Loans#Student Life#GOTV#Get out the vote#OSTP#Open Access#roy cooper#kamala harris#tim walz#harris walz 2024 campaigning#policy#2024 presidential election#legislation#united states#hq#politics#democracy#Research triangle#research#publishing#R&D

9 notes


Text

White House OSTP Releases PFAS Federal R&D Strategic Plan

The White House Office of Science and Technology Policy (OSTP) announced on September 3, 2024, the release of its Per- and Polyfluoroalkyl Substances (PFAS) Federal Research and Development Strategic Plan (Strategic Plan). Prepared by the Joint Subcommittee on Environment, Innovation, and Public Health PFAS Strategy Team (PFAS ST) of the National Science and Technology Council, the Strategic Plan…

#Enviromental Law#EPA#Federal Research and Development Strategic Plan#Office of Science and Technology Policy#OSTP#per and polyfluoroalkyl substances#PFAS

0 notes

Photo

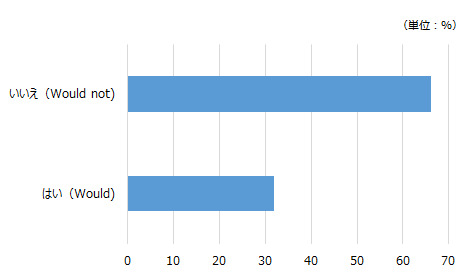

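(From "Call for information on 'the impact of automation technology on labor' (United States: May 2023)" | Japan Institute for Labour Policy and Training (JILPT))

On May 3, the White House Office of Science and Technology Policy (OSTP) asked the public and organizations to provide information on the use of automated systems, such as AI, that are used to monitor and evaluate workers. It pointed out that automation technologies may cause problems such as adverse effects on workplace safety and mental health, infringement of the right to organize, and discrimination in hiring and treatment. By collecting specific information on these issues, OSTP intends to inform future policymaking.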

0 notes

Text

THINGS LOOLOO SAYS.

8 notes


Text

Anthropic's stated "AI timelines" seem wildly aggressive to me.

As far as I can tell, they are now saying that by 2028 – and possibly even by 2027, or late 2026 – something they call "powerful AI" will exist.

And by "powerful AI," they mean... this (source, emphasis mine):

In terms of pure intelligence, it is smarter than a Nobel Prize winner across most relevant fields – biology, programming, math, engineering, writing, etc. This means it can prove unsolved mathematical theorems, write extremely good novels, write difficult codebases from scratch, etc. In addition to just being a “smart thing you talk to”, it has all the “interfaces” available to a human working virtually, including text, audio, video, mouse and keyboard control, and internet access. It can engage in any actions, communications, or remote operations enabled by this interface, including taking actions on the internet, taking or giving directions to humans, ordering materials, directing experiments, watching videos, making videos, and so on. It does all of these tasks with, again, a skill exceeding that of the most capable humans in the world. It does not just passively answer questions; instead, it can be given tasks that take hours, days, or weeks to complete, and then goes off and does those tasks autonomously, in the way a smart employee would, asking for clarification as necessary. It does not have a physical embodiment (other than living on a computer screen), but it can control existing physical tools, robots, or laboratory equipment through a computer; in theory it could even design robots or equipment for itself to use. The resources used to train the model can be repurposed to run millions of instances of it (this matches projected cluster sizes by ~2027), and the model can absorb information and generate actions at roughly 10x-100x human speed. It may however be limited by the response time of the physical world or of software it interacts with. Each of these million copies can act independently on unrelated tasks, or if needed can all work together in the same way humans would collaborate, perhaps with different subpopulations fine-tuned to be especially good at particular tasks.

In the post I'm quoting, Amodei is coy about the timeline for this stuff, saying only that

I think it could come as early as 2026, though there are also ways it could take much longer. But for the purposes of this essay, I’d like to put these issues aside [...]

However, other official communications from Anthropic have been more specific. Most notable is their recent OSTP submission, which states (emphasis in original):

Based on current research trajectories, we anticipate that powerful AI systems could emerge as soon as late 2026 or 2027 [...] Powerful AI technology will be built during this Administration. [i.e. the current Trump administration -nost]

See also here, where Jack Clark says (my emphasis):

People underrate how significant and fast-moving AI progress is. We have this notion that in late 2026, or early 2027, powerful AI systems will be built that will have intellectual capabilities that match or exceed Nobel Prize winners. They’ll have the ability to navigate all of the interfaces… [Clark goes on, mentioning some of the other tenets of "powerful AI" as in other Anthropic communications -nost]

----

To be clear, extremely short timelines like these are not unique to Anthropic.

Miles Brundage (ex-OpenAI) says something similar, albeit less specific, in this post. And Daniel Kokotajlo (also ex-OpenAI) has held views like this for a long time now.

Even Sam Altman himself has said similar things (though in much, much vaguer terms, both on the content of the deliverable and the timeline).

Still, Anthropic's statements are unique in being

official positions of the company

extremely specific and ambitious about the details

extremely aggressive about the timing, even by the standards of "short timelines" AI prognosticators in the same social cluster

Re: ambition, note that the definition of "powerful AI" seems almost the opposite of what you'd come up with if you were trying to make a confident forecast of something.

Often people will talk about "AI capable of transforming the world economy" or something more like that, leaving room for the AI in question to do that in one of several ways, or to do so while still failing at some important things.

But instead, Anthropic's definition is a big conjunctive list of "it'll be able to do this and that and this other thing and...", and each individual capability is defined in the most aggressive possible way, too! Not just "good enough at science to be extremely useful for scientists," but "smarter than a Nobel Prize winner," across "most relevant fields" (whatever that means). And not just good at science but also able to "write extremely good novels" (note that we have a long way to go on that front, and I get the feeling that people at AI labs don't appreciate the extent of the gap [cf]). Not only can it use a computer interface, it can use every computer interface; not only can it use them competently, but it can do so better than the best humans in the world. And all of that is in the first two paragraphs – there's four more paragraphs I haven't even touched in this little summary!

Re: timing, they have even shorter timelines than Kokotajlo these days, which is remarkable since he's historically been considered "the guy with the really short timelines." (See here where Kokotajlo states a median prediction of 2028 for "AGI," by which he means something less impressive than "powerful AI"; he expects something close to the "powerful AI" vision ["ASI"] ~1 year or so after "AGI" arrives.)

----

I, uh, really do not think this is going to happen in "late 2026 or 2027."

Or even by the end of this presidential administration, for that matter.

I can imagine it happening within my lifetime – which is wild and scary and marvelous. But in 1.5 years?!

The confusing thing is, I am very familiar with the kinds of arguments that "short timelines" people make, and I still find Anthropic's timelines hard to fathom.

Above, I mentioned that Anthropic has shorter timelines than Daniel Kokotajlo, who "merely" expects the same sort of thing in 2029 or so. This probably seems like hairsplitting – from the perspective of your average person not in these circles, both of these predictions look basically identical, "absurdly good godlike sci-fi AI coming absurdly soon." What difference does an extra year or two make, right?

But it's salient to me, because I've been reading Kokotajlo for years now, and I feel like I basically understand his case. And people, including me, tend to push back on him in the "no, that's too soon" direction. I've read many, many blog posts and discussions over the years about this sort of thing; I feel like I should have a handle on what the short-timelines case is.

But even if you accept all the arguments evinced over the years by Daniel "Short Timelines" Kokotajlo, even if you grant all the premises he assumes and some people don't – that still doesn't get you all the way to the Anthropic timeline!

To give a very brief, very inadequate summary, the standard "short timelines argument" right now is like:

Over the next few years we will see a "growth spurt" in the amount of computing power ("compute") used for the largest LLM training runs. This factor of production has been largely stagnant since GPT-4 in 2023, for various reasons, but new clusters are getting built and the metaphorical car will get moving again soon. (See here)

By convention, each "GPT number" uses ~100x as much training compute as the last one. GPT-3 used ~100x as much as GPT-2, and GPT-4 used ~100x as much as GPT-3 (i.e. ~10,000x as much as GPT-2).

We are just now starting to see "~10x GPT-4 compute" models (like Grok 3 and GPT-4.5). In the next few years we will get to "~100x GPT-4 compute" models, and by 2030 we will reach ~10,000x GPT-4 compute.

If you think intuitively about "how much GPT-4 improved upon GPT-3 (100x less) or GPT-2 (10,000x less)," you can maybe convince yourself that these near-future models will be super-smart in ways that are difficult to precisely state/imagine from our vantage point. (GPT-4 was way smarter than GPT-2; it's hard to know what "projecting that forward" would mean, concretely, but it sure does sound like something pretty special)

Meanwhile, all kinds of (arguably) complementary research is going on, like allowing models to "think" for longer amounts of time, giving them GUI interfaces, etc.

All that being said, there's still a big intuitive gap between "ChatGPT, but it's much smarter under the hood" and anything like "powerful AI." But...

...the LLMs are getting good enough that they can write pretty good code, and they're getting better over time. And depending on how you interpret the evidence, you may be able to convince yourself that they're also swiftly getting better at other tasks involved in AI development, like "research engineering." So maybe you don't need to get all the way yourself, you just need to build an AI that's a good enough AI developer that it improves your AIs faster than you can, and then those AIs are even better developers, etc. etc. (People in this social cluster are really keen on the importance of exponential growth, which is generally a good trait to have but IMO it shades into "we need to kick off exponential growth and it'll somehow do the rest because it's all-powerful" in this case.)
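The compute arithmetic in the summary above can be made concrete with a small sketch. It assumes only the "~100x per GPT number" convention stated in the post; the function names are hypothetical, and the numbers are rough orders of magnitude rather than measured training budgets.

```python
import math

# Assumption taken from the post: each "GPT number" uses ~100x the
# training compute of the previous one. Order-of-magnitude only.
STEP = 100


def compute_ratio(generations):
    """Compute multiplier after moving up `generations` GPT numbers."""
    return STEP ** generations


def gpt_number_equivalent(multiplier_over_gpt4):
    """Map 'N times GPT-4 compute' onto the GPT-number scale.

    Hypothetical helper: one GPT number = a factor of 100 = 2 powers of ten.
    """
    return 4 + math.log10(multiplier_over_gpt4) / 2


# GPT-4 relative to GPT-2 is two steps: 100 * 100.
print(compute_ratio(2))

# The multipliers named in the post, placed on the GPT-number scale.
for multiplier in (10, 100, 10_000):
    print(multiplier, gpt_number_equivalent(multiplier))
```

Read this way, "~10x GPT-4 compute" is half a step on the scale, "~100x" is one full step, and "~10,000x" is two full steps, i.e. the same size of jump as GPT-2 to GPT-4. Whether capability tracks that scale is exactly what the rest of the post disputes.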

And like, I have various disagreements with this picture.

For one thing, the "10x" models we're getting now don't seem especially impressive – there has been a lot of debate over this of course, but reportedly these models were disappointing to their own developers, who expected scaling to work wonders (using the kind of intuitive reasoning mentioned above) and got less than they hoped for.

And (in light of that) I think it's double-counting to talk about the wonders of scaling and then talk about reasoning, computer GUI use, etc. as complementary accelerating factors – those things are just table stakes at this point, the models are already maxing out the tasks you had defined previously, you've gotta give them something new to do or else they'll just sit there wasting GPUs when a smaller model would have sufficed.

And I think we're already at a point where nuances of UX and "character writing" and so forth are more of a limiting factor than intelligence. It's not a lack of "intelligence" that gives us superficially dazzling but vapid "eyeball kick" prose, or voice assistants that are deeply uncomfortable to actually talk to, or (I claim) "AI agents" that get stuck in loops and confuse themselves, or any of that.

We are still stuck in the "Helpful, Harmless, Honest Assistant" chatbot paradigm – no one has seriously broken with it since Anthropic introduced it in a paper in 2021 – and now that paradigm is showing its limits. ("Reasoning" was strapped onto this paradigm in a simple and fairly awkward way, the new "reasoning" models are still chatbots like this, no one is actually doing anything else.) And instead of "okay, let's invent something better," the plan seems to be "let's just scale up these assistant chatbots and try to get them to self-improve, and they'll figure it out." I won't try to explain why in this post (IYI I kind of tried to here) but I really doubt these helpful/harmless guys can bootstrap their way into winning all the Nobel Prizes.

----

All that stuff I just said – that's where I differ from the usual "short timelines" people, from Kokotajlo and co.

But OK, let's say that for the sake of argument, I'm wrong and they're right. It still seems like a pretty tough squeeze to get to "powerful AI" on time, doesn't it?

In the OSTP submission, Anthropic presents their latest release as evidence of their authority to speak on the topic:

In February 2025, we released Claude 3.7 Sonnet, which is by many performance benchmarks the most powerful and capable commercially-available AI system in the world.

I've used Claude 3.7 Sonnet quite a bit. It is indeed really good, by the standards of these sorts of things!

But it is, of course, very very far from "powerful AI." So like, what is the fine-grained timeline even supposed to look like? When do the many, many milestones get crossed? If they're going to have "powerful AI" in early 2027, where exactly are they in mid-2026? At end-of-year 2025?

If I assume that absolutely everything goes splendidly well with no unexpected obstacles – and remember, we are talking about automating all human intellectual labor and all tasks done by humans on computers, but sure, whatever – then maybe we get the really impressive next-gen models later this year or early next year... and maybe they're suddenly good at all the stuff that has been tough for LLMs thus far (the "10x" models already released show little sign of this but sure, whatever)... and then we finally get into the self-improvement loop in earnest, and then... what?

They figure out how to squeeze even more performance out of the GPUs? They think of really smart experiments to run on the cluster? Where are they going to get all the missing information about how to do every single job on earth, the tacit knowledge, the stuff that's not in any web scrape anywhere but locked up in human minds and inaccessible private data stores? Is an experiment designed by a helpful-chatbot AI going to finally crack the problem of giving chatbots the taste to "write extremely good novels," when that taste is precisely what "helpful-chatbot AIs" lack?

I guess the boring answer is that this is all just hype – tech CEO acts like tech CEO, news at 11. (But I don't feel like that can be the full story here, somehow.)

And the scary answer is that there's some secret Anthropic private info that makes this all more plausible. (But I doubt that too – cf. Brundage's claim that there are no more secrets like that now, the short-timelines cards are all on the table.)

It just does not make sense to me. And (as you can probably tell) I find it very frustrating that these guys are out there talking about how human thought will basically be obsolete in a few years, and pontificating about how to find new sources of meaning in life and stuff, without actually laying out an argument that their vision – which would be the common concern of all of us, if it were indeed on the horizon – is actually likely to occur on the timescale they propose.

It would be less frustrating if I were being asked to simply take it on faith, or explicitly on the basis of corporate secret knowledge. But no, the claim is not that, it's something more like "now, now, I know this must sound far-fetched to the layman, but if you really understand 'scaling laws' and 'exponential growth,' and you appreciate the way that pretraining will be scaled up soon, then it's simply obvious that –"

No! Fuck that! I've read the papers you're talking about, I know all the arguments you're handwaving-in-the-direction-of! It still doesn't add up!

280 notes


Text

So about the Vera Rubin image release. Obviously the photos are fantastic and I’m obsessed with them and I liked the guy who actually presented the images tho I don’t remember them ever showing his name. He has a great tie tho. ANYWAY. I need to rant about the director of the OSTP for a sec because his speech was so fucking bad I cannot believe how he wasn’t embarrassed to say that shit especially following the nice speech from the Chilean ambassador. Bc the ambassador was obviously very proud of the project and proud of chile for hosting it and the efforts chile has put into science and education and maintaining their dark skies. I believe he said currently 40% of ground based imaging is taken from chile so they have a responsibility to take care of their skies and not pollute them with too much light. And I rly respect that I thought that was very nice and his speech was very nice bc it was very much about pride in chile for their contribution to the project and to astronomy as a whole while still emphasizing this was an international collaboration. Chile is a very important part of this but not the only part. AND THEN IMMEDIATELY WERE FOLLOWED BY THIS YANKEE CLOWN whose speech was so fucking cringe because it was all about American exceptionalism and how we’re a country of pioneers so obviously it’s us that spearhead such amazing research and it was ONLY US who could have done this and EVERYONE WANTS TO WORK WITH AMERICANS BECAUSE WE’RE THE BEST like so many of us were audibly cringing in our seats because what the fuck? Did you even thank Chile for being the host of this telescope? ider. It was all about America all about me me me and I’m so sick of it

#like this is nothing new it’s just. it kind of ruined the event a little for me#also when he brought god into it like. 😐#like as soon as the lady introducing him said ‘golden age of American science’ I knew there was going to be some bullshit spouting#because how can you say that with a straight face with everything going on#like I was genuinely shocked she had the balls to say that#and this fucking guy.#kratsios what’s his name#how dare you act like you’re proud of this#like you give a fuck#how dare you put us above everyone else#how fucking dare you. kill yourself#.txt#science#politics#space

10 notes


Text

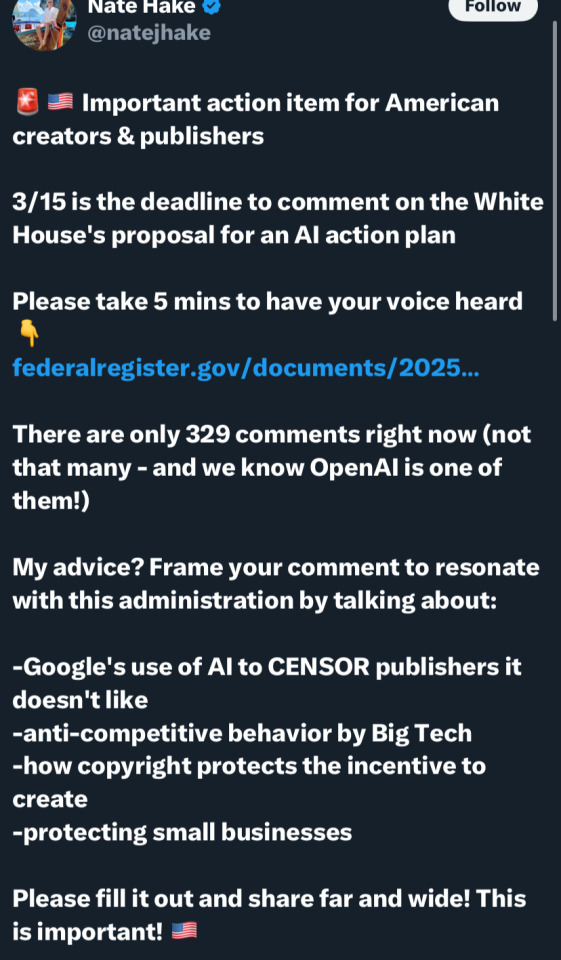

Public comment on AI Exc. Order closes @ end of day 15 March 2025

SUPPLEMENTARY INFORMATION:

On January 23, 2025, President Trump signed Executive Order 14179 (Removing Barriers to American Leadership in Artificial Intelligence) to establish U.S. policy for sustaining and enhancing America's AI dominance in order to promote human flourishing, economic competitiveness, and national security. This Order directs the development of an AI Action Plan to advance America's AI leadership, in a process led by the Assistant to the President for Science and Technology, the White House AI and Crypto Czar, and the National Security Advisor.

This Order follows the President's January 20, 2025, Executive Order 14148, revocation of the Biden-Harris AI Executive Order 14110 of October 30, 2023 (Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence), which hampered the private sector's ability to innovate in AI by imposing burdensome government requirements restricting private sector AI development and deployment. The Trump Administration recognizes that with the right government policies, the United States can solidify its position as the leader in AI and secure a brighter future for all Americans.

OSTP seeks input on the highest priority policy actions that should be in the new AI Action Plan. Responses can address any relevant AI policy topic, including but not limited to: hardware and chips, data centers, energy consumption and efficiency, model development, open source development, application and use (either in the private sector or by government), explainability and assurance of AI model outputs, cybersecurity, data privacy and security throughout the lifecycle of AI system development and deployment (to include security against AI model attacks), risks, regulation and governance, technical and safety standards, national security and defense, research and development, education and workforce, innovation and competition, intellectual property, procurement, international collaboration, and export controls. Respondents are encouraged to suggest concrete AI policy actions needed to address the topics raised.

Comments received will be taken into consideration in the development of the AI Action Plan.

6 notes


Text

TIKTOK JUST GOT BANNED IM SO UPSET I CANT GET INNNNN

SOTP OSTP THIS IS A BAD DREAM A BAD DREAM I TELL YOU

9 notes


Text

ostp sending me asks where its a sex thing its not a sex thing. im going to make you watch the worst anime series in the world and ill pout and be upset if you dont like them

8 notes
