#Oracle CDC


Text

she put this so well...as a disabled qtbipoc i feel this so much ;_;

"To be a disabled oracle is someone who tells their truths in a hostile ableist world that does not believe you. The pandemic reveals an attitude that disabled, sick, poor and immunocompromised people are disposable."

#disability#alice wong#disability visibility project#disabled oracle#disabled bipoc#bipoc mental health#muscular dystrophy#long covid#oracle#us health system#public health crisis#cdc failure#mask up

177 notes


Text

Welcome to the Overworked Blorbo Battle Preliminary Round!

Many characters were submitted for this tournament so, in order to allow as many different blorbos into the bracket as I can, I’ve had to limit them to one per series. For most, I just included the most highly submitted character but there were a number of series with characters who had the same (or very similar) number of submissions. Therefore, I’ve created a preliminary round for you to vote on which character from each series gets to enter the bracket.

There will be 37 polls lasting 1 week each.

The polls will be separated into 3 waves. Each wave will be posted 24 hours after the previous.

The first wave will begin on Tuesday the 27th of June at 3PM BST

I've tried my best to only include official images for all of the characters on the polls but I'm not familiar with every series listed so, when the polls go up, if you notice I've used a fanart or cosplay image without permission or credit please let me know and I'll add credit and correct it for any future appearances of that character.

I apologise if I’ve accidentally spelled something wrong or used a wrong name for something, I’m not familiar with every series listed.

The matchups are listed under the read more

There may be spoilers ahead

Wave 1

Poll 1: Ace Attorney

Dick Gumshoe

Apollo Justice

Miles Edgeworth

Franziska Von Karma

Phoenix Wright

Poll 2: Avatar: The Last Airbender

Katara

Azula

Aang

The Cabbage Merchant

Poll 3: Batman

Barbara Gordon/Oracle

Batman/Bruce Wayne

Alfred Pennyworth

Tim Drake/Red Robin

Dick Grayson/Nightwing

Jim Gordon

Poll 4: BBC Ghosts

Pat Butcher

The Captain

Poll 5: Welcome To The Table

CDC

DC

Poll 6: Carmen Sandiego

Carmen Sandiego

Chase Devineaux

Poll 7: Critical Role

Laerryn Coramar Seelie

Percival De Rolo

Poll 8: Death Note

Light Yagami

L

Touta Matsuda

Poll 9: Disco Elysium

Harry Du Bois

Kim Kitsuragi

Poll 10: Discworld

Havelock Vetinari

Ponder Stibbons

Moist Von Lipwig

Poll 11: Doctor Who

Coordinator Narvin

Romana II

Rory Williams

The Doctor

Poll 12: Ensemble Stars!

Keito Hasumi

Mao Isara

Tsumugi Aoba

Wave 2

Poll 13: ER

Carol Hathaway

Mark Greene

Poll 14: Falsettos

Mendel Weisenbachfeld

Dr Charlotte Dubois

Poll 15: Final Fantasy

Aymeric De Borel

Emet-Selch

Reeve Tuesti

Jessie Jaye

Poll 16: Fullmetal Alchemist

Riza Hawkeye

Roy Mustang

Poll 17: Hatchetfield/The Guy Who Didn't Like Musicals

Emma Perkins

Paul Matthews

Poll 18: Homestuck

Karkat Vantas

Peregrine Mendicant

Poll 19: Honkai

Fu Hua

Natasha

Poll 20: House MD

Lisa Cuddy

James Wilson

Poll 21: Hunter X Hunter

Cheadle Yorkshire

Heavens Arena Elevator Operator

Kurapika

Poll 22: Lobotomy Corporation

Angela

Hod

Poll 23: Mistborn

Elend Venture

Marsh

Poll 24: Monster Prom

Joy Johnson-Johjima

Vera Oberlin

Wave 3

Poll 25: Persona

Ryotaro Dojima

Sadayo Kawakami

Poll 26: Project Sekai

Mafuyu Asahina

Yoisaki Kanade

Haruka Kiritani

Poll 27: Spongebob Squarepants

Squidward

Spongebob

Poll 28: Star Wars

Obi-Wan Kenobi

Commander Fox

Poll 29: The 25th Annual Putnam County Spelling Bee

Logainne Schwartzandgrubenierre

Marcy Park

Poll 30: The Adventure Zone

Lucretia

Mama

Kravitz

Poll 31: The Owl House

Alador Blight

Hunter

Poll 32: The Wilds

Dot Campbell

Fatin Jadmani

Rachel Reid

Poll 33: The X Files

Dana Scully

Director Walter Skinner

Poll 34: Toontown: Corporate Clash

Atticus Wing

Chip Revvington

Poll 35: Transformers

Soundwave

Shockwave

Prowl

Optimus Prime

Minimus Ambus

Ratchet

Poll 36: Witch Hat Atelier

Olruggio

Qifrey

Poll 37: Worm

Amy Dallon/Panacea

Lisa Wilbourn

#preliminary round#elimination round#tournament dates#tournament updates#tournament#tournaments#prelims#I'm not even going to try to tag every character/series listed#overworked blorbo battle

30 notes


Text

ELDARYA A NEW ERA EPISODE 18: CDC LANCE (SPOIL)

Good Morning/Afternoon/Evening my little otters, welcome to a new post of Eldarya: A New Era. I'm late, I know! But lately I was not in the mood to write my thoughts about it. Maybe because it's almost the end. And deep inside, it breaks my heart because I grew up with Eldarya. But now I'm ready, so here we go! And it will be short for once!

The wedding was soooo fucking emotional. I cried so many times. So beautiful, considering the chaos that was coming. The first illustration was very cute, with everyone dancing with all the CDC. But I knew it was too good to be true, that something was coming, and I was almost surprised by Erika's father's army coming and bringing fire. What the actual fuck? What if one of them killed or hurt Erika? How could her father think that killing faeries is the key? It was very stupid. Why are the villains in this game so stupid? At least Lance as Ashkore in TO was way more badass than them!

Then came the scene with Erika confronting him, almost to the point of killing him. It was very deep, and extreme. But thank god, Lance stopped her. He explained to her that killing him would never be the right way to end this or make her feel better. I just thought it would have been better to learn how he deals with his past much earlier.

But the most painful and heartbreaking scene, just before or after Valkyon's death, is Feng Zifu's death... I was so shocked. I needed a break when they announced it, because Feng Zifu was like a father/grandfather to her. And I was so scared that Karenn had been shot.

As for the interrogation between Erika, Matthieu and Charles, the reason why Charles wanted to "save" Erika by firing at everything that moved was a little messy. It is their fault if Eldarya disappears, because they used too many portals to get there. It's like they are blaming their existence...

The Council came, Erika revealed to everyone what she saw through the Oracle (I don't know what it's called in English, sorry...), and I was surprised that in the entire eighteen episodes no one knew that Ophelia was the Oracle. I mean, Erika had visions a couple of times and told people about them, saying that she saw a little girl, but it's only now, when everything is about to end, that they finally understood she's the Oracle. Then the preparation for the exile left no time for anyone. And we learned that the forest was gone, replaced by a big flaw. The end is coming...

That's it for this episode. I hope you liked it. Don't hesitate to write what you think about this episode in the comments too. I'll see you guys soon for the next episode! See ya! <3

13 notes


Text

Pass AWS SAP-C02 Exam in First Attempt

Crack the AWS Certified Solutions Architect - Professional (SAP-C02) exam on your first try with real exam questions, expert tips, and the best study resources from JobExamPrep and Clearcatnet.

How to Pass AWS SAP-C02 Exam in First Attempt: Real Exam Questions & Tips

Are you aiming to pass the AWS Certified Solutions Architect – Professional (SAP-C02) exam on your first try? You’re not alone. With the right strategy, real exam questions, and trusted study resources like JobExamPrep and Clearcatnet, you can achieve your certification goals faster and more confidently.

Overview of SAP-C02 Exam

The SAP-C02 exam validates your advanced technical skills and experience in designing distributed applications and systems on AWS. Key domains include:

Design Solutions for Organizational Complexity

Design for New Solutions

Continuous Improvement for Existing Solutions

Accelerate Workload Migration and Modernization

Exam Format:

Number of Questions: 75

Type: Multiple choice, multiple response

Duration: 180 minutes

Passing Score: Approx. 750/1000

Cost: $300

AWS SAP-C02 Real Exam Questions (Real Set)

Here are 5 real-exam style questions to give you a feel for the exam difficulty and topics:

Q1: A company is migrating its on-premises Oracle database to Amazon RDS. The solution must minimize downtime and data loss. Which strategy is BEST?

A. AWS Database Migration Service (DMS) with full load only

B. RDS snapshot and restore

C. DMS with CDC (change data capture)

D. Export and import via S3

Answer: C. DMS with CDC

Q2: You are designing a solution that spans multiple AWS accounts and VPCs. Which AWS service allows seamless inter-VPC communication?

A. VPC Peering

B. AWS Direct Connect

C. AWS Transit Gateway

D. NAT Gateway

Answer: C. AWS Transit Gateway

Q3: Which strategy enhances resiliency in a serverless architecture using Lambda and API Gateway?

A. Use a single Availability Zone

B. Enable retries and DLQs (Dead Letter Queues)

C. Store state in Lambda memory

D. Disable logging

Answer: B. Enable retries and DLQs

Q4: A company needs to archive petabytes of data with occasional access within 12 hours. Which storage class should you use?

A. S3 Standard

B. S3 Intelligent-Tiering

C. S3 Glacier

D. S3 Glacier Deep Archive

Answer: D. S3 Glacier Deep Archive

Q5: You are designing a disaster recovery (DR) solution for a high-priority application. The RTO is 15 minutes, and RPO is near zero. What is the most appropriate strategy?

A. Pilot Light

B. Backup & Restore

C. Warm Standby

D. Multi-Site Active-Active

Answer: D. Multi-Site Active-Active

Recommended Resources to Pass SAP-C02 in First Attempt

To master these types of questions and scenarios, rely on real-world tested resources. We recommend:

✅ JobExamPrep

A premium platform offering curated practice exams, scenario-based questions, and up-to-date study materials specifically for AWS certifications. Thousands of professionals trust JobExamPrep for structured and realistic exam practice.

✅ Clearcatnet

A specialized site focused on cloud certification content, especially AWS, Azure, and Google Cloud. Their SAP-C02 study guide and video explanations are ideal for deep conceptual clarity.

Expert Tips to Pass the AWS SAP-C02 Exam

Master Whitepapers – Read AWS Well-Architected Framework, Disaster Recovery, and Security best practices.

Practice Scenario-Based Questions – Focus on use cases involving multi-account setups, migration, and DR.

Use Flashcards – Especially for services like AWS Control Tower, Service Catalog, Transit Gateway, and DMS.

Daily Review Sessions – Use JobExamPrep and Clearcatnet quizzes every day.

Mock Exams – Simulate the exam environment at least twice before the real test.

🎓 Final Thoughts

The AWS SAP-C02 exam is tough—but with the right approach, you can absolutely pass it on the first attempt. Study smart, practice real exam questions, and leverage resources like JobExamPrep and Clearcatnet to build both confidence and competence.

#SAPC02#AWSSAPC02#AWSSolutionsArchitect#AWSSolutionsArchitectProfessional#AWSCertifiedSolutionsArchitect#SolutionsArchitectProfessional#AWSArchitect#AWSExam#AWSPrep#AWSStudy#AWSCertified#AWS#AmazonWebServices#CloudCertification#TechCertification#CertificationJourney#CloudComputing#CloudEngineer#ITCertification

0 notes

Text

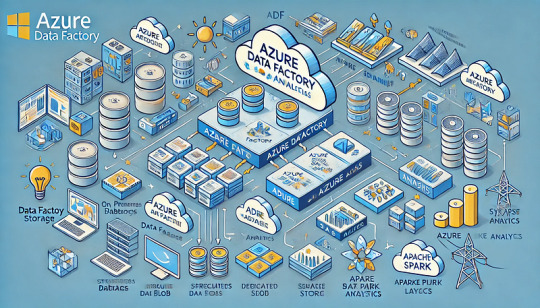

Explore how ADF integrates with Azure Synapse for big data processing.

How Azure Data Factory (ADF) Integrates with Azure Synapse for Big Data Processing

Azure Data Factory (ADF) and Azure Synapse Analytics form a powerful combination for handling big data workloads in the cloud.

ADF enables data ingestion, transformation, and orchestration, while Azure Synapse provides high-performance analytics and data warehousing. Their integration supports massive-scale data processing, making them ideal for big data applications like ETL pipelines, machine learning, and real-time analytics.

Key Aspects of ADF and Azure Synapse Integration for Big Data Processing

1. Data Ingestion at Scale

ADF acts as the ingestion layer, allowing seamless data movement into Azure Synapse from multiple structured and unstructured sources, including:

Cloud Storage: Azure Blob Storage, Amazon S3, Google Cloud Storage

On-Premises Databases: SQL Server, Oracle, MySQL, PostgreSQL

Streaming Data Sources: Azure Event Hubs, IoT Hub, Kafka

SaaS Applications: Salesforce, SAP, Google Analytics

🚀 ADF’s parallel processing capabilities and built-in connectors make ingestion highly scalable and efficient.

2. Transforming Big Data with ETL/ELT

ADF enables large-scale transformations using two primary approaches:

ETL (Extract, Transform, Load): Data is transformed in ADF’s Mapping Data Flows before loading into Synapse.

ELT (Extract, Load, Transform): Raw data is loaded into Synapse, where transformation occurs using SQL scripts or Apache Spark pools within Synapse.

🔹 Use Case: Cleaning and aggregating billions of rows from multiple sources before running machine learning models.

3. Scalable Data Processing with Azure Synapse

Azure Synapse provides powerful data processing features:

Dedicated SQL Pools: Optimized for high-performance queries on structured big data.

Serverless SQL Pools: Enables ad-hoc queries without provisioning resources.

Apache Spark Pools: Runs distributed big data workloads using Spark.

💡 ADF pipelines can orchestrate Spark-based processing in Synapse for large-scale transformations.

4. Automating and Orchestrating Data Pipelines

ADF provides pipeline orchestration for complex workflows by:

Automating data movement between storage and Synapse.

Scheduling incremental or full data loads for efficiency.

Integrating with Azure Functions, Databricks, and Logic Apps for extended capabilities.

⚙️ Example: ADF can trigger data processing in Synapse when new files arrive in Azure Data Lake.

5. Real-Time Big Data Processing

ADF enables near real-time processing by:

Capturing streaming data from sources like IoT devices and event hubs.

Running incremental loads to process only new data.

Using Change Data Capture (CDC) to track updates in large datasets.

📊 Use Case: Ingesting IoT sensor data into Synapse for real-time analytics dashboards.
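💻 The incremental-load idea from this section can be illustrated with a high-water mark: remember the latest modification time already processed and pick up only rows newer than that. The snippet below is a minimal plain-Python sketch, not ADF or Synapse code; the function name `incremental_load`, the `modified_at` field, and the sample rows are all hypothetical and chosen only for illustration.

```python
from datetime import datetime


def incremental_load(source_rows, last_watermark):
    """Return the rows changed after last_watermark, plus the new watermark.

    source_rows is a list of dicts with a hypothetical 'modified_at' field.
    """
    # Keep only rows modified since the previous run.
    new_rows = [r for r in source_rows if r["modified_at"] > last_watermark]
    # Advance the watermark to the newest row seen; keep the old one if nothing changed.
    new_watermark = max(
        (r["modified_at"] for r in new_rows), default=last_watermark
    )
    return new_rows, new_watermark


# Hypothetical source table with three rows.
source = [
    {"id": 1, "modified_at": datetime(2024, 1, 1)},
    {"id": 2, "modified_at": datetime(2024, 1, 5)},
    {"id": 3, "modified_at": datetime(2024, 1, 9)},
]

# First run: everything after 3 January is picked up.
rows, watermark = incremental_load(source, datetime(2024, 1, 3))

# Second run with the stored watermark: nothing new to process.
rows_again, watermark_again = incremental_load(source, watermark)
```

In a real pipeline the watermark would be persisted between runs (for example in a control table), so that each scheduled run starts where the previous one stopped.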

6. Security & Compliance in Big Data Pipelines

Data Encryption: Protects data at rest and in transit.

Private Link & VNet Integration: Restricts data movement to private networks.

Role-Based Access Control (RBAC): Manages permissions for users and applications.

🔐 Example: ADF can use managed identity to securely connect to Synapse without storing credentials.

Conclusion

The integration of Azure Data Factory with Azure Synapse Analytics provides a scalable, secure, and automated approach to big data processing.

By leveraging ADF for data ingestion and orchestration and Synapse for high-performance analytics, businesses can unlock real-time insights, streamline ETL workflows, and handle massive data volumes with ease.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

0 notes

Text

Jaw-dropping number of inmates in women's prisons are actually just opportunistic trannies - ARTICLE

Biden’s FAKE Pardons - I am posting this again because it is important and I want to make sure people understand this and possibly if anyone has a way to get information to the Trump team please get him this information. Lex Greene is a good friend of mine and he is an expert in the original intent of the Constitution which is the only actual interpretation. Please consider supporting his work by becoming a paid subscriber. ARTICLE

You Can’t Vaccinate Against Cancer - I know many are concerned with the recent announcement from President Trump and his support of AI and AI cancer detection and the creation of mRNA vaccine within 48 hours and rightfully so. AI and the vaccine are just one concern. The people pushing this are evil people (Sam Altman is being sued by his own sister for years of sexual abuse starting when she was 3 years old and the mother of an OpenAI whistleblower who was murdered) Elon Musk left OpenAI because of questionable practices. Larry Ellison has a dark past as well and he said AI can be used to track everyone in order to make them behave. Larry Ellison developed Oracle for the CIA. Trump is being sold a bill of goods just like the COVID jab and he is being lied to just like they lied to him about the COVID jab. Dr. Hooker from Children’s Health Defense explains why AI mRNA vaccine for cancer cannot work. 38 min. VIDEO

2023-2024 Freedom Index - INDEX

U.S. Launches ‘One Health’ Plan Prompting Concerns About Global Power Play - there are many good links in this article. The framework (which is huge) and land use caught my eye. Check your state Dept. of Health and Dept. of Agriculture. In TN we have been gearing up for One Health (and receiving federal funds since 2018). Funny how One Health is now part of the Pandemic Treaty. What came first, the chicken or the egg? One Health hit the CDC back in 2009. You might want to be careful in the future when you take your pets to the vet. The vets play a huge part in the surveillance scheme because unlike medical doctors they are required to report directly to the federal government while doctors report to the state. ARTICLE

1 note


Text

The Ultimate Guide to 2024 Mustang Parts: Enhance Your Driving Experience

The 2024 Mustang is a beast on the road, embodying the perfect blend of power, style, and advanced technology. Whether you’re aiming to boost performance, enhance aesthetics, or upgrade your ride’s features, finding the right parts is crucial. Dive into our comprehensive guide to the top 2024 Mustang parts and take your driving experience to the next level!

Unleash the Power: Performance Parts 🚀💪

1. High-Performance Air Filters 🌬️

A high-performance air filter ensures your Mustang’s engine breathes better, which translates to increased horsepower and efficiency. Brands like K&N and AFE offer filters that maximize airflow and protect your engine from contaminants.

Benefits: Improved acceleration, better fuel efficiency.

Top Picks: K&N High-Performance Air Filter, AFE Power Pro 5R.

2. Cold Air Intakes ❄️🔧

Upgrade your Mustang’s air intake system with a cold air intake to enhance engine performance. By drawing cooler air into the engine, these systems can significantly boost horsepower and torque.

Benefits: Increased horsepower, better throttle response.

Top Picks: JLT Performance Cold Air Intake, Roush Performance Cold Air Kit.

3. Performance Exhaust Systems 🎶🚀

A performance exhaust system not only adds a distinctive growl to your Mustang but also improves engine efficiency. Look for systems made from high-quality materials that reduce backpressure and enhance exhaust flow.

Benefits: Enhanced sound, increased power.

Top Picks: Borla ATAK Exhaust System, MagnaFlow Performance Exhaust.

4. ECU Tuners ⚙️📈

Maximize your Mustang’s potential with an ECU tuner. These devices reprogram your car’s engine control unit to optimize performance parameters, resulting in a noticeable boost in power and efficiency.

Benefits: Increased horsepower and torque, improved fuel economy.

Top Picks: SCT X4 Power Flash, Hypertech Max Energy Tuner.

Enhance Your Ride: Aesthetic Upgrades 🎨🚗

1. Custom Grilles ✨🔥

Give your Mustang a unique look with a custom grille. From aggressive mesh designs to sleek billet styles, a new grille can completely transform the front end of your vehicle.

Benefits: Improved appearance, enhanced airflow.

Top Picks: Roush Mustang Grille, CDC Performance Grille.

2. Body Kits 🛠️🚀

Elevate your Mustang’s exterior with a body kit. Whether you’re looking for a full kit or individual components like side skirts and rear diffusers, body kits can significantly enhance your car’s aerodynamic profile and visual appeal.

Benefits: Improved aerodynamics, aggressive styling.

Top Picks: APR Performance Body Kit, Steeda Mustang Body Kit.

3. Spoilers and Wings 🕶️🌪️

Add a touch of racing flair with spoilers and wings. These components not only enhance the Mustang’s sporty look but also provide additional downforce at high speeds, improving stability.

Benefits: Sporty appearance, increased downforce.

Top Picks: Saleen Rear Wing, Ford Performance Spoiler.

4. LED Lighting Kits 💡🚗

Upgrade your lighting with LED kits to achieve a modern and striking look. LED headlights, taillights, and underglow kits can make your Mustang stand out even in the darkest conditions.

Benefits: Enhanced visibility, stylish look.

Top Picks: Diode Dynamics LED Headlights, Oracle Lighting LED Taillights.

Comfort and Technology: Modern Upgrades 🌟📱

1. Advanced Infotainment Systems 🎶📡

Stay connected and entertained with a cutting-edge infotainment system. Upgrades like larger touchscreen displays and advanced navigation systems offer an enhanced driving experience.

Benefits: Improved connectivity, better navigation.

Top Picks: Sony XAV-AX8000, Alpine iLX-107.

2. Custom Seat Covers 🧥

Protect your seats and add a personal touch with custom seat covers. Choose from various materials and designs to match your style while keeping your seats in top condition.

Benefits: Added protection, personalized style.

Top Picks: Covercraft Custom Seat Covers, Katzkin Leather Seat Covers.

3. Upgraded Suspension Systems 🏎️🛠️

Enhance your Mustang’s handling and ride quality with upgraded suspension components. Performance shocks, struts, and springs can make a noticeable difference in how your car feels on the road.

Benefits: Better handling, smoother ride.

Top Picks: Eibach Pro-Kit Springs, Bilstein B6 Performance Shocks.

4. Enhanced Climate Control Systems 🌡️❄️

Upgrade your climate control system to ensure optimal comfort in any weather. Features like automatic climate control and advanced air filtration can make every drive more enjoyable.

Benefits: Improved comfort, better air quality.

Top Picks: Ford Performance Climate Control Kit, WeatherTech All-Weather Floor Mats.

Why Choose Our 2024 Mustang Parts? 🏆🔧

Choosing the right parts for your 2024 Mustang is crucial for achieving the performance, appearance, and comfort you desire. Here’s why our selection stands out:

Quality Assurance: We offer only top-quality parts from trusted brands, ensuring durability and reliability.

Wide Range: From performance upgrades to aesthetic enhancements, we have a comprehensive range of parts to suit every need.

Expert Guidance: Our team of experts is available to help you select the best parts and answer any questions you might have.

Conclusion: Elevate Your Mustang Experience 🚀🔥

Upgrading your 2024 Mustang with the right parts can significantly enhance its performance, appearance, and comfort. Whether you’re looking to boost power, refresh your car’s look, or improve driving comfort, we have the parts you need. Explore our collection and transform your Mustang into a true powerhouse on the road!

Ready to take your ride to the next level? Browse our selection of 2024 Mustang parts and get started on your upgrade today! 🚗💨💥

1 note


Text

Medical Billing Market: $16.8B to $27.7B Growth Forecast (2024-2029)

The "Medical Billing Market Size, Share & Trends by Product (Software, Service), Application (RCM, EHR, Practice Management), Type (Account receivable, Claim, Coding, Analytics), Service (Managed, Professional), End User (Hospital, Speciality), & Region- Global Forecast to 2029" report highlights substantial growth projections. It anticipates the market to escalate from $16.8 billion in 2024 to $27.7 billion by 2029, at a notable CAGR of 10.5%. The global medical billing market is experiencing significant growth, driven by increasing patient numbers, the rising complexity of medical billing and coding procedures, expanding healthcare insurance coverage, and growing healthcare expenditure. The need for regulatory compliance and the shift towards electronic health records (EHR) integration and digitalization also contribute to this growth. Outsourcing medical billing services for better revenue cycle management and adopting advanced technologies such as AI, cloud solutions, and automation further propel market expansion. The growing geriatric population and prevalence of chronic diseases increase the number of insurance claims, driving the demand for medical billing solutions. However, the high cost of deployment, including maintenance, software updates, and training, poses a restraint, particularly for small healthcare facilities. Opportunities in the market include the rising demand for AI and cloud-based deployment to optimize billing processes and reduce errors. Data security concerns and the risk of breaches remain significant challenges. The market is segmented by component, facility size, end users, and region, with North America holding the largest share in 2023. Key players in the market include Oracle, McKesson Corporation, Veradigm LLC, and others.
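As a quick sanity check on the headline figures, the compound annual growth rate can be recomputed from the two market sizes quoted above. This is a small sketch that assumes five compounding years (2024 to 2029); the function name `cagr` is just an illustrative helper, not something from the report.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the report summary, in billions of US dollars.
rate = cagr(16.8, 27.7, 5)
print(f"{rate:.1%}")  # prints 10.5%
```

The result agrees with the 10.5% CAGR stated in the report title.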


Global Medical Billing Market Dynamics

DRIVER: Growing patient numbers and the ensuing expansion of health insurance

The growing geriatric population, the prevalence of chronic diseases, and the burgeoning demand for quality care are placing an increasing strain on the global healthcare system. The World Health Organization (WHO) reports that the number of people aged 60 and older is expected to grow from roughly 900 million in 2015 to about 2 billion by 2050. The CDC stated that chronic diseases like cancer, cardiovascular disease (CVD), diabetes, and respiratory diseases cause approximately 70% of deaths in the US every year. In addition, according to Eurostat, 21% of Europe's population was aged 65 and over, compared with 16% in 2001.

RESTRAINT: High cost of deployment

Factors influencing the cost of medical billing services include the volume and complexity of services handled, which affect the need for extensive billing resources. Medical billing systems are expensive software solutions, with maintenance and software update costs often exceeding the initial purchase price. Support and maintenance services, which include software modifications and upgrades, represent a huge recurring expenditure and account for a large portion of the total cost of ownership. Additionally, the healthcare industry often lacks internal IT expertise, necessitating end-user training to maximize the efficiency of medical billing solutions, further increasing the cost of ownership. Post-sale custom interface development for software integration also requires additional verification and validation to ensure accuracy and completeness, adding to the overall expenses.

OPPORTUNITY: Rising demand for AI and cloud-based deployment

Combining data with artificial intelligence (AI) can potentially improve outcomes and reduce costs: machine learning algorithms and predictive analytics can shorten drug discovery times, give patients virtual assistance, and speed up diagnosis by analyzing medical images. Because AI can optimize both clinical and non-clinical processes and address a wide range of issues for patients, providers, and the industry as a whole, it is becoming increasingly popular in healthcare. With AI's assistance, manual and repetitive tasks in patient access, coding, claims processing, collections, and denial management can be automated, which speeds up claim processing and lowers billing errors. When AI is combined with medical billing, it can mimic intelligent human behavior and carry out these tasks more precisely.

CHALLENGE: Concerns about confidentiality and data security

The shift to digital medical records has amplified data vulnerabilities, escalating the risk of breaches. Issues include inadequate internal controls, outdated policies, and insufficient training. While technologies like EHRs and health data exchanges enhance healthcare efficiency, they also widen security risks. Safeguarding proprietary data and applications remains a pivotal challenge hindering the growth of the medical billing market.

The global medical billing industry is segmented by component, facility size, end users and region

By component type, the service segment accounted for the largest share of the medical billing industry in 2023.

The medical billing market is segmented by component type into software and service solutions. The service segment accounted for the largest market share in 2023. This segment is further sub-segmented into managed services and professional services. The service segment holds the largest share in the medical billing market because of its critical role in providing complete solutions and specialized knowledge that expedites healthcare providers' billing processes. These services include vital tasks like medical coding, claims submission, denial management, and revenue cycle management to maximize reimbursement and financial performance. The dominance of the service segment is further supported by its capacity to provide scalable solutions that can adjust to changing patient volumes and practice sizes, as well as by its dedication to upholding compliance with strict healthcare laws like HIPAA, which guarantees patient privacy and data security.


The cloud-based deployment model is the fastest-growing segment in the medical billing industry.

Based on deployment, the medical billing market is segmented into on-premises, cloud-based, and SaaS-based models. The cloud-based segment is poised for significant growth in the forecast period, as these solutions offer benefits over conventional on-premises options. First, cloud-based medical billing systems lower startup expenses and increase accessibility for smaller healthcare businesses by eliminating the need for upfront hardware infrastructure investments. Second, these systems are scalable, which frees healthcare providers from the limitations of physical infrastructure and lets them quickly adjust resources in response to shifting patient volumes and business needs.

Large-sized facilities accounted for the largest share of the medical billing industry in 2023.

Based on facility size, the medical billing market is segmented into large, mid, and small-sized facilities. Several important variables drive the expansion of large-scale facilities in the medical billing industry. The rising cost of healthcare and the intricacy of medical billing procedures call for sophisticated billing solutions, which major facilities can provide. These facilities offer a wide range of services necessary for effectively managing large volumes of patient data and transactions. These services include revenue cycle management, coding, compliance, and claims processing.

North America accounted for the largest share of the medical billing industry in 2023.

Based on the region, the medical billing market is segmented into North America, Europe, Asia Pacific, Latin America, and Middle East and Africa. In 2023 North America accounted for the largest share of the Medical Billing market. The need for effective billing solutions is being driven by integrated billing systems, a complicated and varied healthcare payer landscape, strict regulatory compliance requirements like HIPAA, and substantial healthcare costs. The area also benefits from a sizable patient and healthcare provider base, which encourages competition and innovation among medical billing service providers and technology vendors.

Prominent players in the medical billing market include Oracle (US), McKesson Corporation (US), Veradigm LLC (US), Athenahealth, Inc. (US), Quest Diagnostics Incorporated (US), eClinicalWorks (US), CureMD (US), DrChrono (US), NextGen Healthcare, Inc. (US), CareCloud, Inc. (US), AdvancedMD, Inc. (US), Kareo, Inc. (US), TherapyNotes LLC. (US), RXNT (US), WebPT (US), CentralReach LLC (US), Epic Systems Corporation (US), CollaborateMD Inc. (US), Advanced Data Systems Corporation (US), ChartLogic (US), Meditab (US), EZClaim (US), RevenueXL Inc. (US), Ambula Health (US), AllStars Medical Billing (US), Apero Health, Inc. (US), TotalMD (US), Proclaim Billing Services (US).

Recent Developments of Medical Billing Industry:

In January 2024, McKesson Corporation (US) collaborated with Hoffmann-La Roche Limited (Switzerland) to launch a patient support program that addresses administrative challenges, enhances the patient experience, streamlines the administrative process, and accelerates reimbursement.

In November 2023, Veradigm LLC (US) launched a software platform named Veradigm Intelligent Payments to offer Intelligent Payments within Veradigm Payerpath via a collaboration with RevSpring to accelerate payment rates and decrease the time spent manually reconciling records for healthcare provider practices.

In May 2023, athenahealth, Inc. (US) partnered with LCH Health and Community Services (US) to implement athenaOne, athenahealth, Inc.’s integrated, cloud-based electronic health record (EHR), medical billing, and patient engagement solution, as well as athenaOne Dental, to provide a more seamless experience for its patients and providers and to support the nonprofit’s growth strategy.

0 notes

Text

GCP Database Migration Service Boosts PostgreSQL migrations

GCP database migration service

GCP Database Migration Service (DMS) simplifies data migration to Google Cloud databases for new workloads. DMS offers continuous migrations from MySQL, PostgreSQL, and SQL Server to Cloud SQL and AlloyDB for PostgreSQL. It can also modernise Oracle workloads by migrating them to Cloud SQL for PostgreSQL and AlloyDB.

This blog post discusses ways to speed up migrations to Cloud SQL for PostgreSQL and AlloyDB.

Large-scale database migration challenges

The main purpose of Database Migration Service is to move databases smoothly with little downtime. With huge production workloads, migration speed is crucial to the experience. Slow migrations can affect PostgreSQL databases in several ways:

The destination takes a long time to catch up with the source after replication starts.

Long-running copy operations hold back vacuum, which can lead to transaction ID wraparound on the source.

WAL accumulates, increasing disk usage on the source.

Boost migrations

To speed up migrations, you can fine-tune some settings to avoid the concerns above. The following options apply to both Cloud SQL and AlloyDB destinations, and fall into three categories:

Parallelise the initial load and change data capture (CDC) with DMS.

Configure source and target PostgreSQL parameters.

Improve machine and network settings

Examine these in detail.

Parallel initial load and CDC with DMS

Google’s new DMS functionality migrates data in parallel by setting up multiple pglogical subscriptions between the source and destination databases. Data moves in parallel streams during both the initial load and CDC.

Database Migration Service’s UI and Cloud SQL APIs default to OPTIMAL, which balances performance and source database load. You can increase migration speed by selecting MAXIMUM, which delivers the maximum dump speeds.

Based on your setting,

DMS calculates the optimal number of subscriptions (the receiving side of pglogical replication) per database based on database and instance-size information.

To balance replication set sizes among subscriptions, tables are assigned to distinct replication sets based on size.

Individual subscription connections copy data simultaneously, for both the initial load and CDC.
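The balancing step described above can be sketched as a greedy assignment. This is only an illustration: the post does not say which algorithm DMS uses, so the "largest table first, into the lightest set" heuristic, the function name assign_tables, and the table sizes are all assumptions.

```python
import heapq

def assign_tables(table_sizes, num_subscriptions):
    """Place each table, largest first, into the currently lightest replication set."""
    if num_subscriptions < 1:
        raise ValueError("need at least one subscription")
    # Min-heap of (total_size, set_index): the lightest set is always on top.
    heap = [(0, i) for i in range(num_subscriptions)]
    heapq.heapify(heap)
    sets = [[] for _ in range(num_subscriptions)]
    # Sort by size descending, then by name so ties are deterministic.
    for name, size in sorted(table_sizes.items(), key=lambda kv: (-kv[1], kv[0])):
        total, idx = heapq.heappop(heap)
        sets[idx].append(name)
        heapq.heappush(heap, (total + size, idx))
    return sets

# Hypothetical table sizes in GB.
tables = {"orders": 500, "events": 300, "users": 200, "audit": 100, "tags": 50}
replication_sets = assign_tables(tables, 2)
print(replication_sets)
```

With these made-up sizes the two sets end up at 600 GB and 550 GB, so neither stream carries most of the load.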

In Google’s experience, MAXIMUM mode speeds migration multifold compared to MINIMAL / OPTIMAL mode.

The MAXIMUM setting delivers the fastest speeds, but if the source is already under load, it may slow application performance. So check source resource use before choosing this option.

Configure source and target PostgreSQL parameters.

CDC and initial load can be optimised with these database options. The suggestions have a range of values, which you must test and set based on your workload.

Target instance fine-tuning

These destination database configurations can be fine-tuned.

max_wal_size: Set this in range of 20GB-50GB

The max_wal_size setting limits how much WAL can accumulate between automatic checkpoints. A higher value reduces checkpoint frequency, leaving more resources for the migration. With the default max_wal_size, checkpoints can occur every few seconds under DMS load. To avoid this, set max_wal_size between 20GB and 50GB, depending on machine tier. Higher values improve migration speeds, especially during the initial load. AlloyDB manages checkpoints automatically, so this parameter is not needed there. After migration, adjust the value to fit production workload requirements.
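A rough back-of-the-envelope estimate shows why the range matters. The sketch below assumes a checkpoint is forced once roughly max_wal_size of WAL has been written; PostgreSQL actually triggers it somewhat earlier, and checkpoint_timeout can fire first, so treat the numbers as upper bounds. The 200 MB/s WAL rate is a made-up figure, and 1GB is PostgreSQL's stock default rather than a documented Cloud SQL value.

```python
def seconds_between_checkpoints(max_wal_size_gb, wal_rate_mb_per_s):
    """Approximate time to fill max_wal_size at a steady WAL generation rate."""
    if wal_rate_mb_per_s <= 0:
        raise ValueError("WAL rate must be positive")
    return max_wal_size_gb * 1024 / wal_rate_mb_per_s

# Hypothetical initial load writing 200 MB of WAL per second.
for size_gb in (1, 20, 50):
    interval = seconds_between_checkpoints(size_gb, 200)
    print(f"max_wal_size={size_gb}GB -> checkpoint roughly every {interval:.0f}s")
```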

pglogical.synchronous_commit: Set this to off

With pglogical.synchronous_commit set to off, commits are acknowledged before their WAL records are flushed to disk. The flush happens later, governed by the wal_writer_delay parameter. This asynchronous commit speeds up the DML changes applied during CDC but reduces durability: the last few asynchronous commits may be lost if PostgreSQL crashes.

wal_buffers: Set 32–64 MB on 4 vCPU machines, 64–128 MB on 8–16 vCPU machines

wal_buffers sets the amount of shared memory used for WAL data that has not yet been written to disk. The goal is to reduce commit frequency during the initial load. Smaller wal_buffers values increase commit frequency, so raising the value helps the initial load. For targets with more vCPUs, it can be set as high as 256MB.
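The vCPU-based ranges above can be captured in a small lookup. This is a sketch only: the function name is hypothetical, and the post gives no guidance for machines with fewer than 4 vCPUs or between 4 and 8, so the bracket edges here are assumptions.

```python
def suggested_wal_buffers_mb(vcpus):
    """Return a (low, high) wal_buffers range in MB for a destination with `vcpus` vCPUs."""
    if vcpus < 1:
        raise ValueError("vcpus must be at least 1")
    if vcpus <= 4:        # assumption: smaller machines use the 4 vCPU range
        return (32, 64)
    if vcpus <= 16:       # assumption: 5-7 vCPUs fall into the 8-16 vCPU range
        return (64, 128)
    return (256, 256)     # the post suggests 256MB for larger targets

for n in (4, 8, 32):
    low, high = suggested_wal_buffers_mb(n)
    print(f"{n} vCPUs: wal_buffers between {low}MB and {high}MB")
```

Any value picked from a range still needs testing against the actual workload, as the post notes.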

maintenance_work_mem: Suggested value of 1GB / size of biggest index if possible

PostgreSQL maintenance operations such as VACUUM, CREATE INDEX, and ALTER TABLE ADD FOREIGN KEY use maintenance_work_mem. The database runs these operations sequentially. Before CDC begins, DMS migrates the initial load data and then rebuilds indexes and constraints on the destination, so maintenance_work_mem determines how much memory is available for building them. Increase this value beyond the 64 MB default. Past tests with 1 GB yielded good results. If possible, set it close to the size of the largest index to be recreated on the destination. After migration, reset this parameter to the default value to avoid affecting application query processing.

max_parallel_maintenance_workers: Proportional to CPU count

Following data migration, DMS uses pg_restore to recreate secondary indexes on the destination. DMS chooses the best parallel configuration for the --jobs option depending on the target machine configuration. Set max_parallel_maintenance_workers on the destination so that CREATE INDEX calls can build indexes in parallel. The default is 2, but it can be raised depending on the destination instance’s CPU count and memory. After migration, reset this parameter to the default value to avoid affecting application query processing.

max_parallel_workers: Set proportional to max_worker_processes

The max_parallel_workers flag raises the system’s limit on parallel workers. The default value is 8. Setting it above max_worker_processes has no effect, because parallel workers are taken from that pool. max_parallel_workers should be equal to or greater than max_parallel_maintenance_workers.

autovacuum: Off

If there is a lot of data to catch up on during the CDC phase, turn off autovacuum on the destination until replication lag is low. To speed up a one-time manual VACUUM before promoting an instance, set max_parallel_maintenance_workers=4 (or match it to the Cloud SQL instance’s vCPU count) and maintenance_work_mem=10GB or greater. Note that a manual VACUUM uses maintenance_work_mem. Turn autovacuum back on after migration.
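The one-time manual VACUUM could be scripted along these lines. The helper name is hypothetical, and the sketch assumes both parameters are set for the session that runs the VACUUM; the session value of max_parallel_maintenance_workers is still capped by max_parallel_workers and max_worker_processes.

```python
def manual_vacuum_statements(vcpus, maintenance_work_mem="10GB"):
    """Build the SQL to run, in one session, before promoting the destination."""
    if vcpus < 1:
        raise ValueError("vcpus must be at least 1")
    return [
        # Match parallel maintenance workers to the instance's vCPU count.
        f"SET max_parallel_maintenance_workers = {vcpus};",
        # A manual VACUUM draws its memory from maintenance_work_mem.
        f"SET maintenance_work_mem = '{maintenance_work_mem}';",
        "VACUUM (VERBOSE, ANALYZE);",
    ]

for statement in manual_vacuum_statements(4):
    print(statement)
```

Because the settings are session-level, they do not need to be reset afterwards; only autovacuum has to be turned back on.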

Source instance configurations for fine tuning

Finally, for source instance fine tuning, consider these configurations:

shared_buffers: Set to 60% of RAM

The shared_buffers parameter sets how much memory the database server uses for shared memory buffers. Increase shared_buffers to 60% of the source PostgreSQL database’s RAM to improve initial load performance and keep the data read by SELECTs in the buffer cache.

Adjust machine and network settings

Another factor in faster migrations is machine and network configuration. Larger source and destination configurations (RAM, CPU, disk I/O) speed up migrations.

Here are some methods:

Consider a large machine tier for the destination instance when migrating with DMS. After the migration, and before promoting the instance, downgrade the machine to a lower tier. This requires a machine restart, but because it happens before promotion, downtime on the source is usually unaffected.

Network bandwidth is limited by the number of vCPUs, and the network egress cap on write throughput for each VM depends on its type. Disk throughput is 0.48 MBps per GB of disk, subject to the VM’s network egress cap, and disk IOPS is 30 per GB. Choose Cloud SQL instances with more vCPUs, and increase disk size to gain throughput and IOPS.
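The per-GB figures above translate into a simple sizing calculation. This sketch uses the post's numbers (0.48 MBps and 30 IOPS per GB of disk) and ignores per-instance caps; the function name and the workload targets are hypothetical.

```python
def ceil_div(a, b):
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)

def required_disk_gb(data_gb, target_mbps, target_iops):
    """Smallest disk (GB) that holds the data and meets both performance targets."""
    for_throughput = ceil_div(target_mbps * 100, 48)  # 0.48 MBps per GB
    for_iops = ceil_div(target_iops, 30)              # 30 IOPS per GB
    return max(data_gb, for_throughput, for_iops)

# Hypothetical migration: 500 GB of data, needing 480 MBps and 20,000 IOPS.
print(required_disk_gb(500, 480, 20000))
```

In this made-up case throughput is the binding constraint: the disk has to be about twice the size of the data it will hold.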

Google’s experiments show that private IP migrations are 20% faster than public IP migrations.

Size initial storage based on the migration workload’s throughput and IOPS, not just the source database size.

The number of vCPUs in the target Cloud SQL instance determines Index Rebuild parallel threads. (DMS creates secondary indexes and constraints after initial load but before CDC.)

Final thoughts and limitations

DMS may not improve speed if a single huge table holds most of the data in the database being migrated. Parallelism is currently at the table level because of pglogical constraints, so the data within one table cannot be split across streams. Future updates are expected to address this.
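The effect of table-level parallelism can be estimated with a simple bound. This is an idealised sketch that assumes copy time is proportional to table size and that streams are otherwise perfectly balanced; the function name and sizes are illustrative.

```python
def best_case_speedup(table_sizes, streams):
    """Upper bound on speedup when a single table cannot be split across streams."""
    if streams < 1 or not table_sizes:
        raise ValueError("need at least one stream and one table")
    total = sum(table_sizes)
    # The slowest stream carries at least the largest table,
    # and at least an even share of the total.
    slowest = max(max(table_sizes), total / streams)
    return total / slowest

print(round(best_case_speedup([900, 50, 50], 4), 2))      # one dominant table
print(round(best_case_speedup([250, 250, 250, 250], 4), 2))  # evenly sized tables
```

With one table holding 90% of the data, four streams yield barely more than a single stream would, which is the limitation the paragraph describes.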

Do not activate automated backups during migration. DDLs on the source are not supported for replication, therefore avoid them.

Fine-tuning source and destination instance configurations, using optimal machine and network configurations, and monitoring workflow steps optimise DMS migrations. Faster DMS migrations are possible by following best practices and addressing potential issues.

Read more on govindhtech.com

#GCP#GCPDatabase#MigrationService#PostgreSQL#CloudSQL#AlloyDB#vCPU#CPU#VMnetwork#APIs#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

Enhancing Data Integration with Oracle CDC

Oracle Change Data Capture (CDC) is a powerful tool that helps organizations streamline data integration processes by capturing changes in Oracle databases and making them available for real-time processing. This capability is crucial for businesses that rely on timely data updates for analytics, reporting, and operational efficiency.

Understanding Oracle CDC

Oracle CDC captures changes in database tables and makes these changes available to downstream systems. It works by monitoring changes such as inserts, updates, and deletes, and then recording these changes in a change table. This data can then be extracted and applied to other systems, ensuring data consistency across the organization.
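As a rough illustration of the idea, the sketch below replays a list of change records against a target copy of a table. It is not Oracle's API: the record layout (op, key, row) and the function name are hypothetical.

```python
def apply_changes(target, changes):
    """Replay change records, in order, against a target keyed by primary key."""
    for change in changes:
        op, key = change["op"], change["key"]
        if op in ("insert", "update"):
            target[key] = change["row"]
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target

# Hypothetical contents of a change table.
change_table = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "city": "London"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "city": "Taipei"}},
    {"op": "update", "key": 1, "row": {"name": "Ada", "city": "Paris"}},
    {"op": "delete", "key": 2},
]
replica = apply_changes({}, change_table)
print(replica)
```

Only the four changed records are moved, not the whole table, and applying them in order leaves the target consistent with the source.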

Key Benefits of Oracle CDC

Real-Time Data Synchronization: Oracle CDC ensures that changes in the source database are immediately available to target systems. This real-time synchronization is essential for applications that require up-to-date information, such as financial reporting and inventory management.

Reduced Data Latency: By capturing and applying changes as they happen, Oracle CDC minimizes the latency between data generation and data availability. This low-latency approach is critical for applications that rely on timely data for decision-making.

Efficient Data Integration: Oracle CDC simplifies the process of integrating data from multiple sources. By capturing changes at the source, it eliminates the need for complex ETL processes, making data integration more efficient and less error-prone.

Improved Data Consistency: Oracle CDC ensures that all systems have consistent data by capturing and applying changes in a controlled manner. This consistency is crucial for maintaining data integrity across the organization.

Implementing Oracle CDC

To implement Oracle CDC, businesses need to configure the CDC environment and define the change data capture rules. This involves specifying which tables and columns to monitor for changes. Once configured, Oracle CDC will capture changes and store them in a change table, which can be accessed by downstream systems.

Use Cases for Oracle CDC

Data Warehousing: Oracle CDC can be used to keep data warehouses in sync with operational databases. By capturing changes in real-time, it ensures that the data warehouse always contains the most recent data, enabling timely and accurate analytics.

Application Integration: Many businesses operate multiple applications that need to share data. Oracle CDC facilitates this by capturing changes in one application’s database and applying them to another application’s database, ensuring data consistency across the enterprise.

Business Intelligence: Real-time data is crucial for business intelligence applications that provide insights into operations. Oracle CDC ensures that BI tools have access to the latest data, enabling more accurate and timely analysis.

Disaster Recovery: Oracle CDC can be used to replicate data to a backup system in real-time. In the event of a disaster, this ensures that the backup system has the most recent data, minimizing data loss and downtime.

0 notes

Text

Agriculture Analytics Market Trends & Forecast: 2028

Agriculture Analytics Market, by Application Area (Farm Analytics, Livestock Analytics, and Aquaculture Analytics), Component (Solution and Services), Farm Size (Small, Medium-Sized, and Large), Deployment Type and Geography (North America, Europe, Asia-Pacific, Middle East and Africa and South America)

The global Agriculture Analytics market size is projected to reach USD 1.9 billion by 2026 at a CAGR of 12.5% from USD 1.2 billion in 2021 during the forecast period 2021-2028.

Agricultural analytics, also known as agroecosystem analysis, is an AI-driven management process that helps analyse various parameters in an agricultural ecosystem. It helps in collecting data and analysing the efficiency and other associated parameters of crop production. Specially designed algorithms calculate the yield, fertilizer demand, future crops, and cost savings.

Increased adoption of urban farming, growing digitalisation, and rising demand for an improved agricultural supply are some of the factors that have supported long-term expansion of the Agriculture Analytics Market.

The COVID-19 pandemic is causing widespread concern and economic hardship for consumers, businesses, and communities across the globe. With lockdowns across the globe, supply and demand have been greatly affected. The import and export of various agricultural products were halted owing to the lockdowns.

Request Sample Pages of Report: https://www.delvens.com/get-free-sample/agriculture-analytics-market-trends-forecast-till-2028

Regional Analysis

Asia Pacific is expected to be the largest market during the forecast period. Large farms in the region, along with increased investment in the sector, have led to growth in agricultural analytics there.

Key Players

Deere & Company

IBM

SAP SE

Trimble

Monsanto

Oracle

Accenture

Iteris

Taranis

Agribotix

Agrivi

DTN

aWhere

Conservis Corporation

DeLaval

FBN

Farmers Edge

GEOSYS

Granular

Gro Intelligence

Proagrica

PrecisionHawk

RESSON

Stesalit Systems

AgVue Technologies

Fasal

AGEYE Technologies

HelioPas AI

OneSoil

Root AI

To Grow Your Business Revenue, Make an Inquiry Before Buying at: https://www.delvens.com/Inquire-before-buying/agriculture-analytics-market-trends-forecast-till-2028

Recent Developments

In April 2020, IBM released novel AI-powered technologies to help research possible solutions for the ongoing pandemic.

In April 2020, SAP set up a COVID-19 Emergency Fund valued at about USD 3.4 million to support the urgent needs of the World Health Organization (WHO), the Centers for Disease Control and Prevention (CDC) Foundation, and many smaller NGOs and social enterprises.

Reasons to Acquire

Increase your understanding of the market for identifying the best and suitable strategies and decisions on the basis of sales or revenue fluctuations in terms of volume and value, distribution chain analysis, market trends and factors

Gain authentic and granular data access for Agriculture Analytics market so as to understand the trends and the factors involved behind changing market situations

Qualitative and quantitative data utilization to discover arrays of future growth from the market trends of leaders to market visionaries and then recognize the significant areas to compete in the future

In-depth analysis of the changing trends of the market by visualizing the historic and forecast year growth patterns

Purchase the Report: https://www.delvens.com/checkout/agriculture-analytics-market-trends-forecast-till-2028

Report Scope:

Agriculture Analytics Market is segmented into Application Area, Component, Farm Size, Deployment Mode and geography.

On the basis of Component

Solution

Services

On the basis of Application Area

Farm analytics

Livestock analytics

Aquaculture analytics

Others (Orchard, Forestry, and Horticulture)

On the basis of Farm Size

Large Farms

Small and Medium-Sized Farms

On the basis of Deployment Modes

Cloud

On-premises

On the basis of Region

Asia Pacific

North America

Europe

South America

Middle East & Africa

About Us:

Delvens is a strategic advisory and consulting company headquartered in New Delhi, India. The company holds expertise in providing syndicated research reports, customized research reports and consulting services. Delvens’ qualitative and quantitative data is used at every level, from niche to major markets, and serves more than 1K prominent companies with information on the country, regional and global business environment. We have a database covering more than 45 industries in more than 115 major countries globally.

Delvens database assists the clients by providing in-depth information in crucial business decisions. Delvens offers significant facts and figures across various industries namely Healthcare, IT & Telecom, Chemicals & Materials, Semiconductor & Electronics, Energy, Pharmaceutical, Consumer Goods & Services, Food & Beverages. Our company provides an exhaustive and comprehensive understanding of the business environment.

Contact Us:

UNIT NO. 2126, TOWER B,

21ST FLOOR ALPHATHUM

SECTOR 90 NOIDA 201305, IN

+44-20-8638-5055

0 notes

Text

Oracle Change Data Capture – Development and Benefits

Oracle Change Data Capture (CDC) is a technology that is widely used today for multiple activities that are critical in today’s organizations. CDC is optimized for detecting and capturing insertions, updates, and deletions that are applied to tables in an Oracle database. The technology is also an essential feature of any Oracle replication solution as CDC classifies and records changed data in a relational format that is suitable for use in EAI, ETL, and other applications.

Businesses using Oracle databases gain several advantages from Oracle CDC. Data warehousing costs are lower, and real-time data integration becomes possible because data is extracted and loaded into data warehouses and other data repositories incrementally. Because only the data that has changed since the last extraction is transferred, Oracle CDC does away with the bulk data updates, batch windows, and complete data refreshes that can disrupt users and businesses.

Development of Log-Based Oracle CDC

Oracle 9i was released in 2001 with a new feature: a native Oracle CDC mechanism, built into the database, for tracking and storing changes in real-time as they happened. However, it had a major disadvantage for database administrators, as it depended on placing triggers on tables in the source database, thereby increasing processing overheads.

This feature was refined in the Oracle 10g release. A new Oracle CDC technology was introduced that used the redo logs of a database to capture and transmit data without the invasive database triggers used previously. An Oracle replication tool called Oracle Streams was used for this activity.

This new version of Oracle CDC was a log-based form of change data capture that was lightweight and did not need the structure of the source table to be changed. Even though this model of CDC became quite popular, Oracle decided to withdraw support for Oracle CDC with the release of Oracle 12c. Users were encouraged to switch to the higher-priced Oracle GoldenGate, an Oracle replication software solution.

Oracle CDC Modes

The Oracle Data Integrator supports two journalizing modes.

The first is the Synchronous Mode. Here triggers are placed on tables in the source database, and all changed data at the source is captured immediately. Each SQL statement that performs a Data Manipulation Language (DML) operation on the table (insert, update, or delete) fires a trigger. The changed data is captured as an integral part of the transaction that changed the data at the source. This mode of Oracle CDC is available in the Oracle Standard and the Oracle Enterprise Editions.

The next is the Asynchronous Mode. Here, data is sent to redo log files where the changed data is captured after a SQL statement goes through a DML activity. Since the modified data is not captured as a part of the transactions that made changes to the source table, this mode does not have any impact on the transaction.
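The trigger-based synchronous mode can be illustrated with a small, self-contained sketch. It uses SQLite from Python's standard library rather than Oracle, so the trigger syntax differs from Oracle's, and the table and trigger names are made up. What it shows is the general pattern: the change row is written inside the same transaction as the DML that caused it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER);
-- Change table: one row per captured insert, update, or delete.
CREATE TABLE accounts_changes (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT, id INTEGER, balance INTEGER
);
CREATE TRIGGER accounts_ins AFTER INSERT ON accounts BEGIN
    INSERT INTO accounts_changes (op, id, balance) VALUES ('I', NEW.id, NEW.balance);
END;
CREATE TRIGGER accounts_upd AFTER UPDATE ON accounts BEGIN
    INSERT INTO accounts_changes (op, id, balance) VALUES ('U', NEW.id, NEW.balance);
END;
CREATE TRIGGER accounts_del AFTER DELETE ON accounts BEGIN
    INSERT INTO accounts_changes (op, id, balance) VALUES ('D', OLD.id, OLD.balance);
END;
""")

conn.execute("INSERT INTO accounts VALUES (1, 100)")
conn.execute("UPDATE accounts SET balance = 150 WHERE id = 1")
conn.execute("DELETE FROM accounts WHERE id = 1")
conn.commit()

# A rolled-back transaction leaves no change row, because capture
# happens inside the transaction that made the change.
conn.execute("INSERT INTO accounts VALUES (2, 999)")
conn.rollback()

captured = conn.execute(
    "SELECT op, id, balance FROM accounts_changes ORDER BY seq"
).fetchall()
print(captured)
```

The extra insert performed by each trigger is also the processing overhead that makes this mode intrusive on the source.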

2 notes

·

View notes

Text

Oracle Change Data Capture Explained, Technology, Modes, and Benefits

Before turning to Oracle Change Data Capture (CDC), it is necessary to understand the concept of CDC. Change Data Capture (CDC) is a set of software design patterns used mainly to monitor changes to data in a source database, so that relevant action can be taken on those changes at a later stage. Data capture, data identification, and data delivery are all integral components of CDC.

Oracle CDC supports real-time data integration across enterprise systems, speeding up data warehousing activities and improving the performance and availability of databases. A critical benefit of Oracle CDC is that, when an Oracle database is replicated with non-intrusive methods, there is no noticeable performance degradation or lag on the source. Typical tasks include offloading analytics queries from production databases to data warehouses and migrating databases to the cloud without downtime. Changed data, also called incremental data, can be extracted from different sources and transferred to a data warehouse with Oracle CDC.

One of the main functions of Oracle Change Data Capture is capturing and preserving the state of data, whether in a database, a data warehouse, or another data repository. Developers can set up Oracle CDC mechanisms in several ways, from application logic down to physical storage, in one or several system layers.

Modes of Oracle CDC

Oracle Data Integrator supports two journalizing modes.

· Synchronous Mode: Triggers are placed on tables in the source database, so that all changes to the data are captured immediately. Each SQL statement that performs a Data Manipulation Language (DML) operation (insert, update, or delete) fires a trigger. In Synchronous mode, the changed data is captured as part of the transaction that changed the data at the source. This feature of Oracle CDC is included in the Oracle Standard Edition and the Oracle Enterprise Edition.

· Asynchronous Mode: Data is sent to the redo logs, and the changed data is captured after the SQL statement has completed its DML operation. The changed data is not captured as part of the transaction that changed the source table, so capture does not affect the transaction. The three modes of asynchronous Oracle CDC are AutoLog, HotLog, and Distributed HotLog. Asynchronous Change Data Capture is based on, and offers a relational interface to, the now discontinued Oracle Streams.
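The asynchronous, log-based pattern can be sketched in a few lines. This is a toy model and not Oracle's redo log format: the list redo_log, the commit function, and the LogCapture class are all hypothetical stand-ins. The writing transaction only appends to the log, and a separate capture process reads from its last position afterwards.

```python
redo_log = []  # append-only list standing in for the redo log files

def commit(changes):
    """The writing transaction appends to the log and does no capture work itself."""
    redo_log.extend(changes)

class LogCapture:
    """Reads whatever has been appended to the log since the previous poll."""

    def __init__(self):
        self.position = 0

    def poll(self):
        new_entries = redo_log[self.position:]
        self.position = len(redo_log)
        return new_entries

capture = LogCapture()
commit([("insert", 1, "Ada"), ("insert", 2, "Lin")])
commit([("update", 1, "Ada L.")])
first_batch = capture.poll()    # picks up all three changes after the fact
commit([("delete", 2, None)])
second_batch = capture.poll()   # only the change made since the last poll
print(first_batch, second_batch)
```

Because capture runs later and separately, it adds nothing to the duration of the original transactions, at the cost of a small delay before changes become visible downstream.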

Oracle CDC is a very affordable solution as overheads are reduced by simplifying the extraction of the modified data from the Oracle database. It has come a long way from its early stages to optimize database administration, replication, and migration activities.

1 note

·

View note

Text

How to Save Changes in Data Tables

Changes such as inserts, updates, and deletes are very common, and businesses naturally want a history of all of them. The first step is to implement tools that ensure every modification is recorded and stored. This is where change data capture (CDC) has a very important role to play. It is the process of capturing changes made at the source of data and applying them throughout the organization. The goal of CDC is to ensure data synchronicity while minimizing the resources required for ETL (extract, transform, and load) processes, which is possible because CDC deals with data changes only.

Take any Data Warehouse (DWH): it has to keep track of all changes to business measures. Hence, the ETL processes that load the DWH should be able to notice all data changes that have occurred in source operational systems during business operations. Change data capture ensures this by tracking the insertion of new records, updates to one or more fields of existing records, and the deletion of records. When CDC records the inserts, updates, and deletes applied to a SQL Server table, all details of the changes are available in an easily consumed relational format. The column information and metadata required to apply the changes to a target environment are captured for the modified rows and stored in change tables that mirror the column structure of the tracked source tables. Table-valued functions are provided so that consumers have systematic access to the changed data.
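To make the change-table format concrete, here is a small sketch that decodes rows shaped like the output of SQL Server's change-table functions. In SQL Server CDC, the __$operation column uses 1 for delete, 2 for insert, 3 for the old values of an update, and 4 for the new values. The rows are hand-written samples rather than real query output, and the helper name is hypothetical.

```python
OPERATIONS = {1: "delete", 2: "insert", 3: "update (old values)", 4: "update (new values)"}

def decode_change_rows(rows):
    """Split each change row into its operation and its source-table columns."""
    decoded = []
    for row in rows:
        operation = OPERATIONS[row["__$operation"]]
        # Metadata columns start with "__$"; the rest mirror the source table.
        data = {k: v for k, v in row.items() if not k.startswith("__$")}
        decoded.append((operation, data))
    return decoded

# Hand-written sample rows for a hypothetical customers table.
sample_rows = [
    {"__$operation": 2, "id": 7, "city": "Oslo"},
    {"__$operation": 3, "id": 7, "city": "Oslo"},
    {"__$operation": 4, "id": 7, "city": "Bergen"},
    {"__$operation": 1, "id": 7, "city": "Bergen"},
]
for operation, data in decode_change_rows(sample_rows):
    print(operation, data)
```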

0 notes

Text

DM: Does anyone want to get their fortune read?

CDC: I’m an oracle, I can read my fortune myself

5 notes

·

View notes