#RLHF for GenAI

#Generative AI#Mitigating Ethical Risks#ethical and security concerns#Red teaming#RLHF for LLMs#RLHF for GenAI#Fine Turning LLMs#Red teaming LLMs#Data labeling LLMs#Training data LLMs

GenOps: MLOps’ Advancement For Generative AI Solutions

Organizations frequently encounter operational difficulties when they attempt to use generative AI solutions at scale. GenOps, also known as MLOps for Gen AI, tackles these issues.

GenOps combines DevOps principles with machine learning workflows to deploy, monitor, and manage Gen AI models in production. It helps ensure that Gen AI systems are reliable, scalable, and continuously improving.

Why is MLOps challenging for Gen AI?

The distinct issues posed by Gen AI models render the conventional MLOps approaches inadequate.

Scale: Specialized infrastructure is needed to handle billions of parameters.

Compute: Training and inference require a lot of resources.

Safety: Strong protections against hazardous content are required.

Rapid evolution: Models need regular updates to keep pace with new developments.

Unpredictability: Testing and validation are made more difficult by non-deterministic results.

We’ll look at ways to expand and modify MLOps concepts to satisfy the particular needs of Gen AI in this blog.

Important functions of GenOps

The many components of GenOps for trained and optimized models are listed below:

Gen AI exploration and prototyping: Use open-weight models like Gemma2, PaliGemma, and enterprise models like Gemini and Imagen to experiment and create prototypes.

Prompt: Working with prompts involves the following tasks:

Prompt engineering: Create and improve prompts to enable GenAI models to produce the intended results.

Prompt versioning: Manage, track, and control changes to prompts over time.

Prompt enhancement: Use LLMs to generate a better prompt that optimizes output for a particular task.

Evaluation: Use metrics or feedback to assess the GenAI model’s responses for particular tasks.

Optimization: To make models more effective for deployment, use optimization techniques like quantization and distillation.

Safety: Implement filters and guardrails. Safety filters are built into models like Gemini to keep the model from producing harmful responses.

Fine-tuning: Apply further tuning on specialized datasets to pre-trained models in order to adapt them to certain domains/tasks.

Version control: Handle several iterations of the datasets, prompts, and GenAI models.

Deployment: Provide integrated, scalable, and containerized GenAI models.

Monitoring: Keep an eye on the real-time performance of the model, the output quality, latency, and resource utilization.

Security and governance: Defend models and data from intrusions and attacks, and make sure rules are followed.

Now that we’ve seen the essential elements of GenOps, let’s see how the traditional MLOps pipeline in Vertex AI can be extended to include GenOps. This MLOps pipeline automates the process of deploying an ML model by combining continuous integration, continuous deployment, and continuous training (CI/CD/CT).

Extend MLOps to support GenOps

Let’s now examine the essential elements of a strong GenOps pipeline on Google Cloud, from initial experimentation with powerful models across several modalities to the crucial considerations of deployment, safety, and fine-tuning.

Sample architecture for GenOps

Let’s investigate how these essential GenOps building blocks can be implemented in Google Cloud.

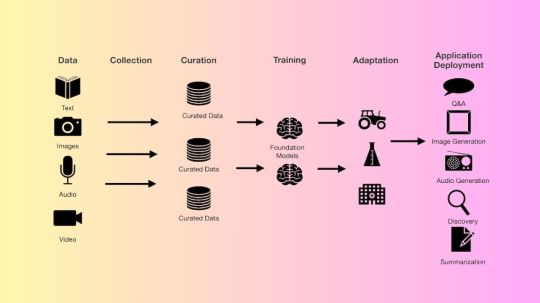

Data: The route towards Generative AI begins with data

Few-shot examples: The examples provided in few-shot prompts guide the structure, phrasing, scope, and pattern of the model’s output.

Supervised fine-tuning dataset: This labeled dataset is used to adapt a pre-trained model to a particular task or domain.

Golden evaluation dataset: This labeled dataset is used to evaluate how well a model performs on a given task. It can be used for both metric-based and manual evaluation of that task.
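As a rough illustration of how few-shot examples shape output, the sketch below assembles an instruction, a handful of labeled examples, and a new query into one prompt. The `Example` class, the `build_few_shot_prompt` helper, and the sentiment task are hypothetical and chosen only for illustration; they are not part of Vertex AI or any Google API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """One few-shot example: an input and the output we want imitated."""
    text: str
    label: str


def build_few_shot_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Join the instruction, the worked examples, and the new query into one prompt."""
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts.append(f"Review: {ex.text}")
        parts.append(f"Sentiment: {ex.label}")
        parts.append("")
    # Leave the final answer slot empty so the model completes it
    # in the same format as the examples above it.
    parts.append(f"Review: {query}")
    parts.append("Sentiment:")
    return "\n".join(parts)


examples = [
    Example("The battery lasts all day.", "positive"),
    Example("The screen cracked within a week.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    examples,
    "Setup was quick and painless.",
)
print(prompt)
```

Because every example uses the same `Review:` / `Sentiment:` layout, the model is nudged to answer the final query with a single label in that same pattern.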

Prompt management: Vertex AI Studio facilitates the cooperative development, testing, and improvement of prompts. Within shared projects, teams can enter text, choose models, change parameters, and save completed prompts.

Model fine-tuning: Using the fine-tuning data, this stage entails adapting the Gen AI model that has already been trained to certain tasks or domains. The two Vertex AI techniques for fine-tuning models on Google Cloud are listed below:

When labeled data is available and the job is well-defined, supervised fine-tuning is a viable choice. It works especially well for domain-specific applications where the content or language is very different from the huge model’s original training set.

Reinforcement learning from human feedback (RLHF) uses human feedback to fine-tune a model. RLHF is recommended when the model’s output is complex and difficult to describe. If the model’s output isn’t difficult to define, supervised fine-tuning is recommended.

Visualization tools such as TensorBoard and the Embedding Projector can be used to identify irrelevant prompts. These prompts can then be used to prepare responses for RLHF and RLAIF.

A UI can also be built with open-source tools like Google Mesop, which makes it easier for human evaluators to review and update LLM responses.

Model evaluation: Vertex AI’s GenAI Evaluation Service assesses GenAI models using explainable metrics. It offers two main categories of metrics:

Model-based metrics: A proprietary Google model serves as the judge. Model-based metrics can be measured pairwise or pointwise.

Computation-based metrics: These metrics (like ROUGE and BLEU) use mathematical formulas to compare the model’s output to a reference or ground truth.

Automatic Side-by-Side (AutoSxS) is a pairwise model-based evaluation tool used to assess the performance of pre-generated predictions or of GenAI models in the Vertex AI Model Registry. It uses an autorater to determine which model gives the better response to a prompt.
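To make the idea of a computation-based metric concrete, here is a minimal sketch of a ROUGE-1 style score, which counts unigram overlap between a model output and a reference. It is a simplified illustration with whitespace tokenization and no stemming; the `rouge1` function is a hypothetical helper, not the GenAI Evaluation Service’s implementation.

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> dict[str, float]:
    """Unigram-overlap precision, recall, and F1 between candidate and reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per token,
    # so repeated words are only credited as often as the reference has them.
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge1("the cat sat on the mat", "the cat lay on the mat")
print(scores)
```

In this example five of the six tokens match, so precision, recall, and F1 all come out to 5/6. Scores like this are cheap and reproducible, but they only measure surface overlap, which is why model-based judges are offered alongside them.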

Model deployment:

Models with managed APIs that do not require deployment: Gemini, one of Google’s foundational Gen AI models, has managed APIs and can accept prompts without being deployed.

Models that must be deployed: Other Gen AI models need to be deployed to a Vertex AI endpoint before they can accept prompts. To be deployed, the model must be available in the Vertex AI Model Registry. Two types of GenAI models are deployed:

Fine-tuned models, created by tuning a supported foundation model with custom data.

GenAI models without managed APIs: Numerous models in the Vertex AI Model Garden, such as Gemma2, Mistral, and Nemo, can be accessed through the Open Notebook or Deploy button.

For further control, some models can be deployed to Google Kubernetes Engine (GKE). GKE serves a model on a single GPU using online serving frameworks such as NVIDIA Triton Inference Server and vLLM, which are memory-efficient, high-throughput LLM inference engines.

Monitoring: Continuous monitoring examines important metrics, data patterns, and trends to evaluate the performance of the deployed models in real-world scenarios. Google Cloud services are monitored using Cloud Monitoring.

Organizations can better utilize the promise of Gen AI by implementing GenOps techniques, which help create systems that are effective and in line with business goals.

Read more on govindhtech.com

#GenOps#MLOps#GenerativeAISolutions#generativeAI#GenAI#RLHF#Gemma2#GeminiImagen#GenAImodels#VertexAI#GoogleCloud#GenAIEvaluationService#NVIDIATritonInference#nvidia#technology#technews#news#govindhtech

Not loving the AI discourse on here lately. Self described communists are on here reblogging "pro-AI" posts from users who misunderstand and anthropomorphize the technology. Yes, deep neural networks do "memorize" individual training samples, much in the same way (it turns out) as support vector machines select a sparse set of support vectors from the training data. No, the concept of a "latent space" does not change this fact. No, genAI does not "create unique outputs by combining learned concepts."

But whatever, the real point is that these systems are uniquely bad software from a number of different angles, including energy requirements and labor impacts. What of the underpaid workers that got PTSD from doing the RLHF to make these genAI systems act semi-reasonably? And beyond that, this is completely unserious:

The likes of OpenAI and Microsoft will not usher in a post-property communist society, and pretending like that fight is anything other than a factional struggle for power within the capitalist class just builds confusion rather than clarity.

Finally, this whole "disability aid" discourse is completely fucked. Again, I would hope communists could muster a more advanced analysis than "this tech is used by disabled people therefore it is an unimpeachable disability aid." Because it turns out that the needs of disabled people are often used as a fig leaf to develop tools and technology for empire, war, and profit, often creating more disabled people in the process (by far) than the technology helps.

Assaf Baciu, Co-Founder & President of Persado – Interview Series

New Post has been published on https://thedigitalinsider.com/assaf-baciu-co-founder-president-of-persado-interview-series/

Assaf Baciu has nearly two decades of experience shaping enterprise strategy and product direction for market-leading SaaS organizations. As co-founder and President of Persado, he drives the progression and advancements of Persado’s growing product portfolio and oversees the company’s customer onboarding, campaign delivery management, Center of Enablement programs, and technical services.

Persado offers a Motivation AI platform designed to enable personalized communications at scale, encouraging individuals to engage and take action. Several of the world’s largest brands, such as Ally Bank, Dropbox, JPMorgan Chase, Marks & Spencer, and Verizon, use Persado’s platform to create highly personalized communications. According to the company, the top 30 Persado customers have collectively generated over $4.25 billion in additional revenue through the use of the platform.

Can you share the story behind the founding of Persado and how your previous experiences influenced its creation?

My co-founder, Alex Vratskides, and I founded Persado 12 years ago. We were at Upstream, and became fascinated with how text message response rates changed with even minor tweaks in the language. Given that the number of characters is limited for SMS, we started thinking about text messages as a mathematical problem that has some finite number of alternative messages, and with the right algorithm we could find the optimal ones. We evaluated some approaches and saw that there is a way…and the rest is history.

Persado’s Motivation AI Platform is highlighted for its ability to personalize marketing content. Can you explain how the platform uses generative AI to understand and leverage customer motivation?

GenAI, on its own, via a foundation model, cannot motivate systematically. It’s a component with a stack of data, machine learning, and a response feedback loop.

Persado has been generating and optimizing language using various approaches for over 10 years. We’ve accumulated a unique dataset from one million A/B tests from messages, across industries, designed to connect with consumers and motivate action at any stage. We leverage this data to finetune a foundation model with Supervised Fine Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF). We’ve also adapted a second transformer model to be able to predict message performance based on language parameters. On top of that, our machine learning (ML) algorithms understand—in real time—which language elements resonate with a given individual, then adjust the copy within the communication to that person or segment.

By continuously learning the most effective combination of message elements for each consumer, and dynamically creating the most engaging content, Persado-generated content is able to outperform human and other AI-generated copy 96% of the time.

How does Persado’s Motivation AI Platform differentiate itself from other generative AI tools in terms of driving business results?

Persado is unique on many fronts.

Purpose-Built – Persado has the only models specifically trained and curated with 10 billion tokens of marketing enterprise communication, coupled with behavioral data.

Emotionally Intelligent – Our AI is designed to understand and generate language that elicits specific emotional responses from target audiences. This capability is grounded in advanced ML models trained on extensive linguistic and psychological data.

Precision – Our platform leverages the most powerful model architectures and is trained with statistically valid customer behavior data. We have executed the equivalent of more than 1M A/B tests, which enables us to generate the specific words, phrases, emotions, and stories that drive incremental impact 96% of the time.

Knowledge Graph – Persado developed a representation of key concepts expressed via language and their relationship with the market vertical, type of communications, customer lifecycle journey, and channels. We use advanced NLP techniques to classify, identify, and make use of these concepts (emotions, narratives, structure of message, voice, and more) to generate and personalize better performing messages.

Predictive – We use predictive analytics to forecast the performance of different messages, empowering marketers to create content with a high probability of success. This predictive power is unique and offers customers a great competitive edge.

What are some of the key capabilities that Persado’s AI platform offers to ensure a seamless integration with existing marketing technologies?

Our platform securely integrates with a brand’s existing tech stack to simplify marketing content generation so brands can easily generate the highest-performing digital marketing messaging they need to boost results. We combine custom, non-PII user attributes, and Persado’s award-winning language generation models to more effectively communicate with every customer across channels, at any stage of their journey.

Motivation AI is compatible with over 40 martech solutions to ensure each brand can use their existing martech stack to generate the most relevant, personalized outputs for their customers.

Content Delivery Platforms – Our processes and tech enablers are designed to streamline the configuration of Persado-generated content in your deployment platform

Customer Data Platforms – The platform facilitates a regular update of non-PII data with Persado as an input for relevant and personalized content generation

Analytics Platforms – We provide processes and data flows to seamlessly integrate results and reporting back into the Persado platform, supporting continuous learning and enhancing machine learning capabilities

Can you describe the process of onboarding a new client and how Persado’s AI helps in their campaign management and delivery?

Prior to formal onboarding, Persado ingests all relevant brand voice materials from its clients, such as style guidelines, information on segments, language restrictions, and more. This trains our model on how to write for a business’s particular brand on day one. We hit the ground running with a partnership kickoff to define KPIs and campaign focus areas as well as to educate users on Persado Portal, our centralized platform where all content is generated, approved, and deployed. In addition to hosting weekly check-ins during onboarding, we close out onboarding with an executive business review summarizing performance thus far and next steps.

Our teams help brands get the tone or brand voice correct across channels by grounding all of their outputs in emotion—a key element of language that motivates customers to engage. By leaning into the emotions and narratives most likely to resonate with each customer, brands can create more effective, revenue-driving campaigns while staying true to the brand’s values.

Scalability is one of the biggest ways we’re able to help businesses with their campaigns. Personalization is a key tactic for marketers to reach key audiences and attract new customers. However, true personalization is challenging to achieve at scale. Using Persado’s knowledge base of 1.5 billion real customer interactions, we help enterprises uncover which versions of a message resonate best with their customers, so they can personalize these messages in real-time at each stage of the customer journey.

You’ve worked with top banks and card issuers, driving significant revenue increases. Could you provide specific examples of how Persado’s AI has enhanced marketing performance for these clients?

Yes, while we work with many industries, we have deep experience working with 8 of the 10 largest U.S. banks and 6 of the 7 top credit card issuers. Here are a few examples of impact:

Chase has used Persado to generate and optimize marketing messages for consumers in its Card and Mortgage businesses. They have been using Persado since 2019 to write personalized market copy by analyzing massive datasets of tagged words and phrases. In pilot tests run by Chase, Persado AI-generated ad copy delivered click-through rates up to 450% higher than copy created by humans alone.

Ally Bank uses Persado to enhance cross-sell opportunities. Our Motivation AI platform has helped Ally understand which marketing communications resonate well with their customers across key channels like email and web. By knowing the do’s and don’ts related to targeted conversations with customers, the product marketing and CRM team are able to deliver a better CX and unlock double-digit improvement on KPIs like clicks and actual conversions.

How does Persado’s AI ensure that generated messages remain on-brand and comply with industry regulations, particularly for highly regulated sectors like finance?

As you noted, because sectors like financial services are highly regulated, it can be challenging to implement AI across business functions. In financial services and beyond, brand and legal compliance are a major piece of the AI puzzle. Although compliance and establishing AI governance can seem daunting, whether you’re part of a large enterprise or small business, it shouldn’t be a reason to not implement AI, or at least test it out.

To ensure copy is aligned with best practices and regulations, we ingest compliance and brand guidelines into Persado’s model. Our built-in tools allow for easy compliance and brand review, feedback, and approval before deployment.

We adjust our approach to help financial services brands remain compliant. While we can’t target specific demographics, such as age, for personalized marketing in financial services, we create high-performing narratives or emotional tones that resonate overall. From there, we observe which messages resonate with a bank or card issuer’s different segments.

What are some common challenges businesses face when trying to prove the value of AI, and how does Persado address these challenges?

There is an “opportunity lost” cost of enterprise inaction. A few changes in word choice can mean the difference between a completed transaction, application or enrollment and money being left on the table.

Persado’s impact is easily measured. We integrate with analytics systems and continuously evaluate success via automated A/B tests that show the performance of our more engaging, personalized content, vs what the brand initially created. And, we measure the difference via the conversion funnel. On average, Persado increases content performance 43%, compared to humans alone or another AI solution.

Our purpose-built AI is not only impactful, but easy to measure, which empowers business leaders to make better decisions about the technology investment—and quickly prove its value.

Given the rapid advancements in generative AI, what steps is Persado taking to stay ahead of the curve and maintain its industry leadership?

We’ve been leaders in AI for over a decade; long before it became popular following the launch of ChatGPT. As GenAI curiosity has increased across industries, we’ve been excited to bring and expand our marketing-specific capabilities among large enterprises needing a proven solution for creating high-performing, compliant messages at scale. We do this across channels as well: email, websites, social media, SMS/push notifications, and even IVR.

Our global product and customer success teams are continually listening to our customers, who are among the earliest and most successful adopters of AI, and shaping our roadmap to help them deliver a stellar digital customer experience that also drives increased sales, loan application completions, on-time payments, or other actions.

For example, we recently released new pre-built audience segments to speed personalized content generation for marketers across financial services, retail, and travel. This feature helps brands increase engagement with specific groups of customers by making it even easier and faster to generate marketing content that will resonate with customers.

What is your vision for the future of marketing and AI?

The possibilities for AI in marketing are only expanding. AI can serve as a helpful asset for marketers to enhance experiences and reach audiences in new, more personalized ways. I think we’ll see marketers shift from expecting (and using) GenAI to improve efficiency and productivity, to also ensuring AI tools deliver measurable increased performance.

Establishing AI governance and standards will also become more important as companies expand AI use cases with an eye on responsible AI. If they’re not careful, brands can risk not being compliant with regulations.

Companies will need to be vigilant and use guardrails to ensure models can be tested to ensure they are free of biases, and that content outputs are accurate, relevant, and on-brand. Applied properly, AI has the potential to turbo-boost marketing performance and customer experiences, which generates the most valuable benefit for businesses: increased revenue.

Thank you for the great interview. Readers who wish to learn more should visit Persado.

#ai#AI in Marketing#ai platform#ai tools#ai use cases#algorithm#Algorithms#amp#Analytics#approach#bank#banks#Behavior#behavior data#behavioral data#billion#brands#Business#chatGPT#communication#communications#Companies#compliance#consumers#content#continuous#credit card#crm#curiosity#customer experience

Benjamin Ogden, Founder & CEO of DataGenn AI – Interview Series

New Post has been published on https://thedigitalinsider.com/benjamin-ogden-founder-ceo-of-datagenn-ai-interview-series/

Benjamin Ogden is the founder and CEO of DataGenn AI, building autonomous investor and trader agents that have been finely tuned to generate profitable trading predictions and execute market trades. Utilizing Reinforcement Learning from Human Feedback (RLHF), the agents’ trade prediction accuracy continually improves. Currently, DataGenn AI is in the process of raising funds to support its continued growth and innovation in the financial services industry.

Benjamin holds a Bachelor’s degree in Finance from the University of Central Florida. He has personally traded billions in stocks and crypto, mastering market dynamics with thousands of hours in real-time price action tracking. A seasoned internet technology developer since 2001, Benjamin is also an SEO expert who has earned over $20 million in profits reverse engineering Google search algorithm updates.

You are a serial entrepreneur, could you share with us some highlights from your career?

There are many highlights as I’ve been running businesses as an entrepreneur since I was a kid age 6 or 7. I absolutely love learning. The path and process of learning drives my thirst for additional knowledge & wisdom. Developing a social blogging community and running a company as the CEO of thoughts.com from 2007-2012 was a great learning experience and career transformer for me. Likewise, trading the stock market heavily after that was another important learning experience that eventually influenced me down the path to working on GenAI trading agents at DataGenn AI. Lastly, the recent transition from working on iGaming SEO to fine-tuning LLMs and learning the fundamentals of machine learning has been invigorating because it gives me the opportunity to develop generative AI-powered trading agents for financial markets, realizing a vision of accelerating compound interest effects, a breakthrough financial markets belief I’ve held for over a decade.

When did you initially become interested in AI and machine learning?

I started gaining interest in AI mid-2022. Once I saw what Jasper.ai was doing at that time, I immediately shifted my daily focus from iGaming SEO Marketing to reviewing state of the art artificial intelligence software & platforms of the time such as Jasper AI & ChatGPT. As my learnings grew throughout 2023, and LLMs progressed rapidly, so did my passion for building valuable financial market trading technologies which harness the power of LLMs and artificial intelligence.

Can you share the genesis story behind DataGenn AI?

I studied Finance in college at UCF, where I had a particular interest in the financial markets. In 2012, I had a specific and detailed vision of a new technology I planned on inventing, which I call “Digital Capital Mining”. The idea with DCM is simple: speed up the effects of compound interest by compounding daily, hence digitally mining capital over 252 stock market trading days per year.

Can you explain how DataGenn INVEST leverages Google’s Gemini model and MoE models to predict intraday trading movements?

I can provide a high-level overview of tools we’re using at DataGenn AI, but don’t comment on key specifics at this time. In short: with DataGenn INVEST we’re using multiple frontier language models and entity specific agents built on MoE architecture.

What are the specific advantages of using RLHF (Reinforcement Learning from Human Feedback) in training your trading agents?

RLHF is essential in training the model to learn the correct answer and/or provide specific types of responses based on the user prompt. By using RLHF with our agents’ predictions and executed market trades, we can improve each agent’s accuracy of both trade predictions and market trades over time and frequent iterations. RLHF also helps with efficiency and training the agents to understand nuance and execute complex tasks.

How does DataGenn integrate real-time data from multiple sources into its trading strategy?

At our current phase of testing multiple models and backtesting trading agent performance, we have an Alpha-level trading agent in testing that uses real-time data from AlphaAdvantage. We also have a Beta-level agent in testing that uses Pinescript on TradingView for backtesting. We are conducting critical research and testing our agents’ predictions and trade executions. In production, we’ll be using a Bloomberg terminal for trading, market data, critical news, etc.

How does DataGenn INVEST ensure the accuracy and reliability of its trading predictions in volatile financial markets?

We are building, testing, and backtesting the DataGenn INVEST agents’ trading strategy algorithms and safety guardrails by using Financial Market industry standards such as Stop Loss orders to reduce drawdown risk and Trailing Stop Loss orders to effectively capture increased profits while simultaneously locking in trade gains. We take Responsible AI seriously and we’re committed to building AI systems safely, whether they be for financial markets or biopharmaceutical research.
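For readers unfamiliar with trailing stop loss orders, the following sketch shows the general mechanism on a list of prices: the stop level rises with each new high and never moves down, and the position exits once the price falls to the stop. The `trailing_stop_exit` function, the 5% trail, and the price series are hypothetical and purely illustrative; this is not DataGenn AI’s strategy, and real order fills can differ from the trigger price.

```python
from typing import Optional


def trailing_stop_exit(prices: list[float], trail_pct: float) -> Optional[tuple[int, float]]:
    """Return (index, price) where a long position would be stopped out, or None."""
    if not prices:
        return None
    peak = prices[0]
    for i, price in enumerate(prices):
        # The stop follows the highest price seen so far and never moves down.
        peak = max(peak, price)
        stop = peak * (1 - trail_pct)
        if price <= stop:
            return i, price
    return None


prices = [100.0, 104.0, 110.0, 108.0, 103.0, 101.0]
exit_point = trailing_stop_exit(prices, trail_pct=0.05)
print(exit_point)
```

With this series the peak is 110, so the stop sits at 104.5 and the position exits at 103, above the 100 entry. A fixed stop loss is the special case where the stop stays tied to the entry price instead of the running peak.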

How do you see autonomous trading agents like DataGenn INVEST changing the landscape of financial markets?

DataGenn INVEST Agents are a game changer. The sizes of portfolio returns DataGenn INVEST trading agents will realize are unfathomable to today’s investing world, for typical and professional investors alike. This is because, for example, $100,000 compounded at 1% daily becomes $14,377,277 in just two years’ time.
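The compounding arithmetic behind a figure like this can be sketched in a few lines. The example assumes a constant 1% gain on every trading day with no losing days, fees, or taxes, and the `compound` helper is hypothetical. The exact result depends on how many compounding days are counted: 500 days gives roughly $14.5 million, and 504 days (two years of 252 trading days) gives roughly $15.1 million.

```python
def compound(principal: float, daily_rate: float, days: int) -> float:
    """Value of principal after compounding daily_rate once per day for the given days."""
    return principal * (1 + daily_rate) ** days


TRADING_DAYS_PER_YEAR = 252  # assumption: the trading-day count cited in the interview

for days in (500, 2 * TRADING_DAYS_PER_YEAR):
    value = compound(100_000, 0.01, days)
    print(f"{days} days: ${value:,.0f}")
```

The result is very sensitive to the inputs: a few extra compounding days, or a slightly different daily rate, shifts the final value by hundreds of thousands of dollars.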

Are there new features or capabilities that you are particularly excited about introducing?

I’m looking forward to presenting our team’s research findings which demonstrate when we’ve built the DataGenn INVEST trading agent systems correctly and they’re earning frequent profits trading financial markets with a focus of accelerating compound interest through daily compounding. This is a major accomplishment we’ve earned through tireless & passionate work to become the leader of GenAI Financial Markets Trading.

Thank you for the great interview. Readers who wish to learn more should visit DataGenn AI.

#000#2022#2023#agent#agents#ai#AI systems#AI-powered#algorithm#Algorithms#amp#architecture#Art#artificial#Artificial Intelligence#Building#Capture#career#CEO#chatGPT#college#Community#crypto#data#Developer#dynamics#effects#efficiency#engineering#Features
