#TLS 1.3 encryption

Exploring the New Horizons of SQL Server 2022: MS-TDS 8.0 and TLS 1.3

Understanding Tabular Data Stream (TDS) in SQL Server – Introduction In the realm of Microsoft SQL Server, an essential component that ensures seamless communication between a database server and its clients is the Tabular Data Stream (TDS). TDS is a protocol, an application layer protocol, to be precise, that plays a pivotal role in the exchange of data between a client and a server. This blog…

#MS-TDS 8.0 protocol#SQL Server 2022 TDS#SQL Server performance optimization#SQL Server security enhancements#TLS 1.3 encryption

How to build a secure mobile wallet for fintech users?

In the dynamic world of digital finance, mobile wallets have become an integral part of daily transactions. From contactless payments to peer-to-peer transfers, mobile wallets are redefining how users interact with money. However, the convenience of mobile wallets also brings heightened security concerns. For companies involved in fintech software development, building a secure mobile wallet is critical to winning user trust and ensuring regulatory compliance. In this article, we'll explore the key steps and best practices for building a secure mobile wallet for fintech users, using real-world insights from leaders in fintech services like Xettle Technologies.

Understanding the Importance of Security in Mobile Wallets

Mobile wallets store sensitive data such as card numbers, banking information, and personal identification details. A single breach can lead to severe financial loss and reputational damage for both the user and the fintech provider. Therefore, security must be considered from the very beginning of the mobile wallet's design and development.

Key Steps to Building a Secure Mobile Wallet

1. Start with Secure App Architecture

Security begins with the foundation. Develop an architecture that isolates sensitive components and follows the principles of least privilege. Critical elements such as authentication, encryption, and transaction processing should be separated into secure modules.

Choosing a layered security approach, also known as "defense in depth," is a common strategy in fintech software development projects.

2. Implement Strong Authentication Methods

Authentication ensures that only legitimate users access the wallet. Modern mobile wallets must use:

Multi-Factor Authentication (MFA): Combining passwords with biometrics (fingerprint, facial recognition) or OTPs (One-Time Passwords).

Device Binding: Tying the user account to a specific device to prevent unauthorized access.

Behavioral Biometrics: Monitoring patterns such as typing speed or device handling for additional authentication layers.

These authentication methods significantly lower the risk of unauthorized access.
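To make the OTP factor above concrete, here is a minimal Python sketch of time-based one-time passwords as defined in RFC 6238 (HMAC-SHA1, 30-second steps, 6 digits), using only the standard library. The function names and the one-step drift window are illustrative assumptions, not part of any particular wallet SDK; a production system would also rate-limit attempts and store the shared secret securely.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick a 4-byte slice
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and +/- `window` steps (clock drift)."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        t = now + drift * step
        if t < 0:
            continue  # no time steps before the Unix epoch
        expected = totp(secret, t, step, len(submitted))
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

The `window` parameter tolerates small clock differences between the phone and the server; widening it makes codes easier to guess, so it is best kept small.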

3. Use End-to-End Data Encryption

All sensitive information must be encrypted both at rest and in transit. Use strong, standard encryption such as AES-256 for data storage and TLS 1.3 for data transmission. In addition, sensitive information such as card details should never be stored on the device unless absolutely necessary.
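As a sketch of the "TLS 1.3 for data transmission" rule on the client side, Python's standard `ssl` module can refuse any protocol version older than TLS 1.3. This assumes the interpreter is linked against OpenSSL 1.1.1 or newer; the function name is hypothetical.

```python
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Client-side context that verifies certificates and refuses pre-1.3 TLS."""
    # create_default_context() enables certificate verification and hostname
    # checking and loads the system's trusted CA certificates.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Any handshake that would negotiate TLS 1.2 or lower now fails.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx
```

The context can then be passed to `http.client.HTTPSConnection(host, context=ctx)` or used with `ctx.wrap_socket(sock, server_hostname=host)`; a server that only speaks TLS 1.2 will fail the handshake instead of silently downgrading.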

Tokenization — replacing real data with unique identifiers — is another popular strategy in fintech services to protect data from being exposed during transactions.
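The tokenization idea can be sketched as a small vault that hands out random tokens in place of card numbers. This in-memory `TokenVault` class is hypothetical and only illustrates the mapping; in practice the vault is a separate, hardened, access-controlled service. The token here is random, so it reveals nothing about the card number except the last four digits kept for display.

```python
import secrets
from typing import Dict


class TokenVault:
    """Hypothetical in-memory token vault (illustration only)."""

    def __init__(self) -> None:
        self._token_to_pan: Dict[str, str] = {}
        self._pan_to_token: Dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        """Return a random token for a card number, reusing it if already issued."""
        if pan in self._pan_to_token:
            return self._pan_to_token[pan]
        # 96 random bits plus the last four digits for display ("card ending 1111").
        token = f"tok_{secrets.token_hex(12)}_{pan[-4:]}"
        self._token_to_pan[token] = pan
        self._pan_to_token[pan] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the real card number; raises KeyError for unknown tokens."""
        return self._token_to_pan[token]
```

The wallet app and most backend services only ever handle the token; only the vault can map it back to the real card number.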

4. Enable Real-Time Fraud Detection

Mobile wallets should be equipped with real-time fraud monitoring systems. Integrate AI and machine learning algorithms to detect unusual patterns such as:

Multiple failed login attempts

Transactions from unknown devices or locations

Rapid, repeated transaction requests

Instant alerts and transaction blocking mechanisms help to prevent fraud before it escalates.
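The "rapid, repeated transaction requests" signal can be illustrated with a simple sliding-window velocity rule. This is a plain rule, not the AI/ML detection described above, though rules like this are often used alongside such models. The class name and the limits (three transactions per 60 seconds) are hypothetical.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityRule:
    """Flag an account that submits more than `max_tx` transactions within `window_s` seconds."""

    def __init__(self, max_tx: int = 3, window_s: float = 60.0) -> None:
        self.max_tx = max_tx
        self.window_s = window_s
        self._recent: Dict[str, Deque[float]] = defaultdict(deque)

    def is_suspicious(self, account_id: str, ts: float) -> bool:
        """Record a transaction at time `ts` (seconds, non-decreasing per account)."""
        window = self._recent[account_id]
        window.append(ts)
        # Drop transactions that have fallen out of the sliding window.
        while window and ts - window[0] > self.window_s:
            window.popleft()
        return len(window) > self.max_tx
```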

5. Stay Compliant with Regulatory Standards

Mobile wallets must comply with financial industry standards and regulations such as:

PCI-DSS (Payment Card Industry Data Security Standard)

GDPR (General Data Protection Regulation)

PSD2 (Payment Services Directive 2)

Meeting compliance requirements not only protects users but also shields the company from legal penalties.

6. Conduct Regular Security Audits and Penetration Testing

Security is not a one-time implementation. Mobile wallets must undergo continuous security audits and third-party penetration tests. Vulnerabilities should be patched quickly, and users should be prompted regularly to update to newer, more secure versions of the app.

Engaging a dedicated security team, either internal or external, is a common practice among leaders in fintech software development.

7. Educate Users

No matter how secure a mobile wallet is, user behavior can still pose risks. Provide users with best practices for protecting their accounts, such as:

Using strong passwords

Avoiding public Wi-Fi for transactions

Keeping their devices updated

Educational prompts within the app or email campaigns can go a long way toward improving overall security.

Best Practices for Mobile Wallet Security

Minimize Data Storage: Store only what is absolutely necessary.

Use Secure APIs: Ensure all external API integrations follow secure coding standards.

Implement Session Timeouts: Log users out automatically after periods of inactivity.

Adopt Secure Coding Practices: Prevent common vulnerabilities like SQL injection, cross-site scripting (XSS), and insecure data storage.
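The session timeout practice above can be sketched as an idle-timeout check that runs on every request. The `SessionStore` class and the 300-second default are assumptions for illustration; the clock is injectable so the behavior can be tested without waiting.

```python
import secrets
import time
from typing import Callable, Dict


class SessionStore:
    """Sessions expire after `idle_timeout_s` seconds without activity."""

    def __init__(self, idle_timeout_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.idle_timeout_s = idle_timeout_s
        self._clock = clock
        self._last_seen: Dict[str, float] = {}

    def start(self) -> str:
        """Create a session with an unguessable ID and return the ID."""
        session_id = secrets.token_urlsafe(32)
        self._last_seen[session_id] = self._clock()
        return session_id

    def touch(self, session_id: str) -> bool:
        """Validate a request's session; refresh it if alive, delete it if idle too long."""
        now = self._clock()
        last = self._last_seen.get(session_id)
        if last is None or now - last > self.idle_timeout_s:
            self._last_seen.pop(session_id, None)  # force a fresh login
            return False
        self._last_seen[session_id] = now
        return True
```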

How Xettle Technologies Approaches Mobile Wallet Security

Companies like Xettle Technologies, a rising name in fintech services, demonstrate that securing mobile wallets requires an integrated approach. They focus on secure app architecture, continuous testing, advanced encryption, and regulatory compliance from the initial stages of fintech software development projects. This proactive methodology helps ensure that fintech users experience both convenience and peace of mind when using mobile wallets.

Conclusion

Building a secure mobile wallet for fintech users involves much more than just good coding—it requires a comprehensive, layered approach to security, compliance, and user education. As mobile transactions continue to rise, ensuring the security of digital wallets is critical for sustaining user trust and business success.

For companies involved in fintech software development, mastering mobile wallet security can be the differentiator that sets them apart in a crowded, competitive market. By implementing the steps and best practices outlined here, fintech companies can deliver reliable, secure, and innovative fintech services that meet the demands of today's digital consumers.

#cloudsecurity#CyberROI#CybersecurityPolicy#FederalITMandates#IdentityVerification#NISTGuidelines#RansomwareMitigation#zero-trustarchitecture

Top Security Features Every Business Application Should Have

In today's digital-first business environment, it is not enough to build applications that are functional and easy to use; security has to be at the top of the priority list. Businesses of every size are caught in a rising tide of cyber-attacks that threaten everything from daily operations to customer trust and long-term reputation.

Application security matters in every industry: finance, healthcare, e-commerce, logistics, and beyond. Whatever your industry, if your business depends on a digital platform, your application security policy has to be non-negotiable. Installing a few off-the-shelf solutions and hoping for the best is no longer enough. Well-structured, proactive security measures must be built in from the moment the idea is conceived.

In this blog, we will dissect the most important security features every business application must have, and what each means for operations, customers, and the bottom line.

Strong Authentication and Access Controls

One of the first lines of defense in any application is verifying who can access it and what they can do once inside. Robust authentication ensures that only authorized users get in, while access control limits what they can see or change according to their roles.

Modern business applications should incorporate:

Multi-Factor Authentication (MFA) confirms a user's identity by combining factors such as passwords, biometrics, and one-time passwords (OTPs).

Single Sign-On (SSO) gives users secure access to multiple systems with a single set of credentials.

Role-Based Access Control (RBAC) reduces insider threats by assigning permissions according to job roles.
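A minimal sketch of RBAC with deny-by-default checks and an audit log entry for every decision. The role names and permission strings are hypothetical; real systems usually load this mapping from a policy store rather than hard-coding it.

```python
import logging
from typing import Dict, FrozenSet, Iterable

audit_log = logging.getLogger("audit")

# Hypothetical role -> permission mapping.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "viewer": frozenset({"report:read"}),
    "accountant": frozenset({"report:read", "invoice:read", "invoice:create"}),
    "finance_manager": frozenset({"report:read", "invoice:read", "invoice:approve"}),
}


def authorize(user: str, roles: Iterable[str], permission: str) -> bool:
    """Allow only if one of the user's roles grants the permission (deny by default)."""
    roles = list(roles)
    allowed = any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)
    # Audit trail: who asked for what, with which roles, and the outcome.
    audit_log.info("user=%s roles=%s permission=%s allowed=%s", user, roles, permission, allowed)
    return allowed
```

Keeping the check deny-by-default means a misspelled or unknown role grants nothing.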

Working with a reputable software company lets you customize access control systems around your internal policies and workflows. This keeps sensitive information away from unauthorized personnel and provides an audit trail of who did what and when.

Comprehensive Data Encryption

Encryption is necessary for securing both business and personal data. All data, whether stored in a database or transmitted over networks, should be encrypted so that it is unintelligible to unauthorized parties.

Encryption at Rest protects stored data on hard drives or in cloud storage.

Encryption in Transit secures data sent across the internet or internal networks.

Adopting standards such as AES-256 for data storage and TLS 1.3 for data transmission means that any data stolen in a breach is useless to attackers without the encryption keys. This not only protects the organization's data but also helps it comply with legal frameworks such as GDPR, HIPAA, and CCPA.

Many companies prefer to develop custom software in-house because it makes encryption easier to manage. Generic tools provide some of these features, but custom software can embed encryption in each layer of the application according to how sensitive the data in that layer is.

Secure API Integrations

APIs (Application Programming Interfaces) are essential for business applications to communicate with third-party tools, services, and internal systems. However, unsecured APIs can become one of the most significant security gaps.

To safeguard the API endpoints, the businesses should have:

Token-based authentication (OAuth2)

Throttling and rate limiting

Validating the inputs

API gateways

Together, these measures ensure that only authenticated clients can operate on application data and services, and they reduce the risk of denial-of-service (DoS) attacks, data scraping, and unauthorized access.
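Throttling is often implemented with a token bucket: each client gets a bucket that refills at a steady rate, and each request spends one token. The class below is a single-process sketch with hypothetical parameters; a real API gateway would keep one bucket per API key, typically in shared storage so that all instances see the same counts.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilling at `rate_per_s` tokens per second."""

    def __init__(self, rate_per_s: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate_per_s = rate_per_s
        self.capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)  # start full
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill for the time elapsed since the last call, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate_per_s)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False  # caller should respond with HTTP 429 Too Many Requests
```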

Custom APIs, built as part of a custom software solution, can be more secure because they are designed for a specific purpose and expose only what that purpose requires, leaving less room for errors or compatibility problems with other platforms.

Routine Patching and Update Mechanisms

Flaws are bound to appear at some point in a software's life cycle. How quickly and effectively your application responds to them greatly affects its security posture: known exploits that are not patched in time can lead to catastrophic breaches.

A secure application:

I. Supports seamless update mechanisms.

II. Applies patches without downtime.

III. Sends real-time alerts when vulnerabilities are discovered.

That is why businesses that work with a professional software development company can make sure their applications are not merely monitored for problems but also kept at a current patch level, quickly closing the holes that known attack vectors exploit.

Input Validation and Injection Prevention

Input fields in applications, especially forms and search boxes, are prone to injection attacks. SQL injection, cross-site scripting (XSS), and command injection can be disastrous because they let an attacker make the application behave in unintended ways and/or expose sensitive information.

Some fundamental practices include:

Input format validation (e.g., email, phone number)

Input sanitization to remove dangerous characters

Output escaping to inhibit code injection

By building these validations natively into your codebase, you lower your dependency on third-party libraries and significantly reduce the application's risk of compromise.
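A short sketch of the three practices above using Python's standard library: format validation with a regular expression, a parameterized SQL query (which, rather than stripping characters, keeps input from ever being interpreted as SQL), and output escaping with `html.escape`. The `users` table and the deliberately loose email pattern are assumptions for illustration.

```python
import html
import re
import sqlite3
from typing import Optional, Tuple

# Deliberately loose format check: something@something.tld, no spaces.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def find_user_by_email(conn: sqlite3.Connection, email: str) -> Optional[Tuple[int, str]]:
    """Validate the format, then query with a placeholder instead of string building."""
    if not EMAIL_RE.fullmatch(email):
        raise ValueError("invalid email format")
    # The driver sends `email` separately from the SQL text, so quotes or
    # comment markers inside it can never change the query's structure.
    return conn.execute("SELECT id, name FROM users WHERE email = ?", (email,)).fetchone()


def render_greeting(name: str) -> str:
    """Escape user-controlled text before inserting it into HTML."""
    return "<p>Hello, " + html.escape(name) + "</p>"
```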

One advantage of custom software solutions is that you can define validation rules based on your specific business logic and user flows, which results in more secure and reliable applications.

Advanced Logging and Real-Time Monitoring

Security is not only a barrier against attacks; an application must also detect and respond to them quickly. Logging and monitoring tools can record user activity, detect anomalies, and send alerts when they observe irregular behavior.

CNSA 2.0 Algorithms: OpenSSL 3.5’s Q-Safe Group Selection

The CNSA 2.0 Algorithm

To prioritise quantum-safe cryptographic methods, OpenSSL 3.5 improves its TLS 1.3 support in line with the NSA's CNSA 2.0 recommendations. With these changes, servers and clients can prefer Q-safe algorithms during the TLS handshake.

OpenSSL achieves this through new configuration syntax rather than changes to the TLS protocol: servers use a delimiter to group algorithms into preference tiers, while clients use a prefix to mark the algorithms for which they send key shares.

These changes preserve backward compatibility and reduce network round trips, enabling a smooth transition to post-quantum cryptography while meeting CNSA 2.0's "prefer" requirement for Q-safe algorithms. This version of OpenSSL is the first major TLS library to fully implement that requirement, and its long-term support status makes it likely to be widely deployed.

Q Safe

Quantum-Safe Cryptography and the Quantum Computing Threat

This work is driven by the possibility that quantum computers may break asymmetric encryption.

“Future quantum computers will break the asymmetric cryptographic algorithms widely used online.”

To secure internet communication, quantum-safe (Q-safe) cryptographic methods must be used.

The NSA's CNSA 2.0 Mandate

The NSA's Commercial National Security Algorithm Suite 2.0 (CNSA 2.0) lists approved quantum-safe algorithms and a timetable for adopting them. For TLS, these are ML-KEM (FIPS 203) for key agreement and ML-DSA (FIPS 204) for certificates.

CNSA 2.0 requires systems to "prefer CNSA 2.0 algorithms" during the transition and to "accept only CNSA 2.0 algorithms" as products mature. This two-phase approach aims for a gradual transition.

The TLS “Preference” Implementation Challenge

TLS 1.3 (RFC 8446) has no mechanism for expressing a preference for post-quantum algorithms. The standard gives clients and servers wide freedom in choosing which algorithms to offer and accept, but it does not dictate how they make that choice.

A way to configure TLS connections to favour CNSA 2.0 algorithms was therefore needed, and it had to work without modifying the TLS protocol.

OpenSSL v3.5 Improves Configuration Features

Since altering the TLS standard was not possible, the developers focused on extending OpenSSL's configuration capabilities. The goal was to let OpenSSL-based programs such as cURL, HAProxy, and Nginx use the new preference options without modifying their code.

Client-Side Solution: Prefix Characters for Preference

In OpenSSL v3.5, a client marks the algorithms for which it should send key shares by prefixing their names with a special character (*) in the colon-separated list. For example, a list of four algorithms in which ML-KEM-1024 and x25519 carry the prefix makes the client generate and send key shares for those two in its ClientHello while advertising support for all four.

By default a client sends at most four key shares (a limit that can be changed with a build option), which limits the network overhead of the larger Q-safe key shares. Four is enough for a fully Q-safe, a hybrid, a legacy, and a spare algorithm.

For backward compatibility, the first algorithm in the list receives a single key share if no ‘*’ prefix is supplied.
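To make the client-side rules easier to follow, here is a small Python model of how a group list is interpreted: `*` marks groups that get key shares, at most four shares are sent, and with no `*` only the first group gets one. This is an illustrative simulation, not OpenSSL's code or API; `parse_client_groups` is a hypothetical name, the group names are examples, and the model strips the `?` prefix without modelling how OpenSSL drops unknown names.

```python
from typing import List, Tuple

MAX_KEY_SHARES = 4  # default cap described above (a build option in OpenSSL)


def parse_client_groups(spec: str) -> Tuple[List[str], List[str]]:
    """Return (groups the client advertises, groups it sends key shares for)."""
    supported: List[str] = []
    key_shares: List[str] = []
    # The client ignores the server-only '/' tuple delimiter, so treat it like ':'.
    for item in spec.replace("/", ":").split(":"):
        item = item.strip()
        name = item.lstrip("*?")  # strip '*' (key share) and '?' (ignore if unknown)
        if not name:
            continue
        prefixes = item[: len(item) - len(name)]
        supported.append(name)
        if "*" in prefixes and len(key_shares) < MAX_KEY_SHARES:
            key_shares.append(name)
    # Backward compatibility: with no '*' anywhere, only the first group gets a key share.
    if not key_shares and supported:
        key_shares.append(supported[0])
    return supported, key_shares
```

For example, `*MLKEM1024:*X25519:SecP256r1MLKEM768:secp256r1` advertises four groups but sends key shares only for MLKEM1024 and X25519.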

Server-Side Solution: Preference Hierarchy Algorithm Tuples

The server-side technique overcomes TLS's lack of a native “preference” mechanism by declaring the server's preferred algorithm order using tuples delimited by the ‘/’ character in the colon-separated list of algorithms.

The server can pick algorithms using a three-level priority scheme.

First, tuples are processed from left to right; this is the most important criterion.

Second, within a tuple, the server prefers algorithms for which the client has sent a key share.

Third, within a tuple, it falls back to algorithms the client supports but has not sent a key share for.

Example: ML-KEM-768 / X25519MLKEM768 … x25519 / SecP256r1MLKEM768 defines three tuples. The server prefers algorithms from earlier tuples first, then, within a tuple, algorithms for which the client sent a key share, and finally any other algorithm the client supports.
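The server-side priority scheme can likewise be modelled in a few lines of Python. This is a simplified simulation rather than OpenSSL's implementation: `select_group` is a hypothetical function, the group names are examples, and breaking ties within a tuple by the server's listed order is an assumption.

```python
from typing import Iterable, List, Optional, Tuple


def parse_server_tuples(spec: str) -> List[List[str]]:
    """Split 'a:b / c / d:e' into [['a', 'b'], ['c'], ['d', 'e']], stripping '*' and '?'."""
    tuples: List[List[str]] = []
    for chunk in spec.split("/"):
        names = [n.strip().lstrip("*?") for n in chunk.split(":")]
        names = [n for n in names if n]
        if names:
            tuples.append(names)
    return tuples


def select_group(spec: str, client_supported: Iterable[str],
                 client_key_shares: Iterable[str]) -> Tuple[Optional[str], bool]:
    """Return (chosen group, whether a HelloRetryRequest is needed)."""
    supported = set(client_supported)
    shares = set(client_key_shares)
    for group_tuple in parse_server_tuples(spec):  # 1) earlier tuples always win
        for name in group_tuple:                   # 2) a key share is already on hand
            if name in shares:
                return name, False
        for name in group_tuple:                   # 3) supported, but costs an extra round trip
            if name in supported:
                return name, True
    return None, False                             # no common group: the handshake fails
```

For instance, with the tuples `MLKEM1024 / X25519MLKEM768:SecP256r1MLKEM768 / X25519:secp256r1`, a client that supports X25519MLKEM768 but sent a key share only for X25519 gets X25519MLKEM768 and a HelloRetryRequest: the Q-safe preference outranks the round-trip cost.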

This ensures that the server favours Q-safe algorithms even when the client has sent a key share only for a legacy algorithm, at the risk of a HelloRetryRequest (HRR) round-trip penalty. CNSA 2.0's "prefer" requirement puts Q-safe algorithms first even at that cost, and clients that use the new prefix syntax to send Q-safe key shares avoid the penalty entirely.

Keep Backward Compatibility and Reduce Impact on Current Systems

Designing for backward compatibility was crucial for a smooth transition. The new configuration format requires no code changes in existing applications, and to avoid disrupting other features, changes to OpenSSL were confined to "a few pinpointed locations" in its large codebase.

Additional Implementation Considerations

Other details included a "?" prefix that makes OpenSSL ignore unknown algorithm names, handling of pseudo-algorithm names such as "DEFAULT," and letting the client and server use the same specification string (the client ignores server-specific delimiters and the server ignores client-specific prefixes).

OpenSSL v3.5's Collaboration and Importance

Development involved extensive consultation and collaboration with the OpenSSL maintainer team and other experts, and the developers credit "excellent interactions" throughout the process.

OpenSSL v3.5 is “the first TLS library to fully adhere to the CNSA 2.0 mandate to prefer Q-safe algorithms.” Due to its Long-Term Support (LTS) status, Linux distributions are expected to adopt OpenSSL v3.5 more extensively, making these quantum-safe communication capabilities available.

Conclusion

The Q-safe algorithm preference in OpenSSL v3.5 is an important step toward safeguarding internet communication against quantum computers. The developers met the NSA's CNSA 2.0 criteria by extending OpenSSL's configuration features, without requiring large code changes in OpenSSL-based applications or changes to the TLS standard.

Client-side prefixes and server-side tuples give quantum-resistant cryptography precedence in a backward-compatible way. OpenSSL v3.5's LTS status should ensure wide adoption, bringing quantum-safe communication to many systems.

FAQs

What is quantum safe?

"Quantum-safe" security and encryption withstand attacks from both conventional and quantum computers. Achieving it involves developing and deploying cryptographic methods that resist quantum computing threats.

#technology#technews#govindhtech#news#technologynews#CNSA 2.0 Algorithms#CNSA 2.0#OpenSSL#Q-safe algorithms#Q-safe#OpenSSL v3.5#Quantum Safe

Top Tech Stacks for Fintech App Development in 2025

Fintech is evolving fast, and so is the technology behind it. As we head into 2025, financial applications demand more than just sleek interfaces — they need to be secure, scalable, and lightning-fast. Whether you're building a neobank, a personal finance tracker, a crypto exchange, or a payment gateway, choosing the right tech stack can make or break your app.

In this post, we’ll break down the top tech stacks powering fintech apps in 2025 and what makes them stand out.

1. Frontend Tech Stacks

🔹 React.js + TypeScript

React has long been a favorite for fintech frontends, and paired with TypeScript, it offers improved code safety and scalability. TypeScript helps catch errors early, which is critical in the finance world where accuracy is everything.

🔹 Next.js (React Framework)

For fintech apps with a strong web presence, Next.js brings server-side rendering and API routes, making it easier to manage SEO, performance, and backend logic in one place.

🔹 Flutter (for Web and Mobile)

Flutter is gaining massive traction for building cross-platform fintech apps with a single codebase. It's fast, visually appealing, and great for MVPs and startups trying to reduce time to market.

2. Backend Tech Stacks

🔹 Node.js + NestJS

Node.js offers speed and scalability, while NestJS adds a structured, enterprise-grade framework. Great for microservices-based fintech apps that need modular and testable code.

🔹 Python + Django

Python is widely used in fintech for its simplicity and readability. Combine it with Django — a secure and robust web framework — and you have a great stack for building APIs and handling complex data processing.

🔹 Golang

Go is emerging as a go-to language for performance-intensive fintech apps, especially for handling real-time transactions and services at scale. Its concurrency support is a huge bonus.

3. Databases

🔹 PostgreSQL

One of the most popular databases for fintech in 2025. It's reliable, supports complex queries, and handles financial data well. Extensions like PostGIS and TimescaleDB make it even more powerful.

🔹 MongoDB (with caution)

While not ideal for transactional data, MongoDB can be used for storing logs, sessions, or less-critical analytics. Just be sure to avoid it for money-related tables unless you have a strong reason.

🔹 Redis

Perfect for caching, rate-limiting, and real-time data updates. Great when paired with WebSockets for live transaction updates or stock price tickers.

4. Security & Compliance

In fintech, security isn’t optional — it’s everything.

OAuth 2.1 and OpenID Connect for secure user authentication

TLS 1.3 for encrypted communication

Zero Trust Architecture for internal systems

Biometric Auth for mobile apps

End-to-end encryption for sensitive data

Compliance Ready: GDPR, PCI-DSS, and SOC2 tools built-in

5. DevOps & Cloud

🔹 Docker + Kubernetes

Containerization ensures your app runs the same way everywhere, while Kubernetes helps scale securely and automatically.

🔹 AWS / Google Cloud / Azure

These cloud platforms offer fintech-ready services like managed databases, real-time analytics, fraud detection APIs, and identity verification tools.

🔹 CI/CD Pipelines

Using tools like GitHub Actions or GitLab CI/CD helps push secure code fast, with automated testing to catch issues early.

6. Bonus: AI & ML Tools

AI is becoming integral in fintech — from fraud detection to credit scoring.

TensorFlow / PyTorch for machine learning

Hugging Face Transformers for NLP in customer support bots

LangChain (for LLM-driven insights and automation)

Final Thoughts

Choosing the right tech stack depends on your business model, app complexity, team skills, and budget. There’s no one-size-fits-all, but the stacks mentioned above offer a solid foundation to build secure, scalable, and future-ready fintech apps.

In 2025, the competition in fintech is fierce — the right technology stack can help you stay ahead.

What stack are you using for your fintech app? Drop a comment and let’s chat tech!

https://www.linkedin.com/in/%C3%A0ksh%C3%ADt%C3%A2-j-17aa08352/

#Fintech#AppDevelopment#TechStack2025#ReactJS#NestJS#Flutter#Django#FintechInnovation#MobileAppDevelopment#BackendDevelopment#StartupTech#FintechApps#FullStackDeveloper#WebDevelopment#SecureApps#DevOps#FinanceTech#SMTLABS

Understanding Data Movement in Azure Data Factory: Key Concepts and Best Practices

Introduction

Azure Data Factory (ADF) is a fully managed, cloud-based data integration service that enables organizations to move and transform data efficiently. Understanding how data movement works in ADF is crucial for building optimized, secure, and cost-effective data pipelines.

In this blog, we will explore:

✔ Core concepts of data movement in ADF

✔ Data flow types (ETL vs. ELT, batch vs. real-time)

✔ Best practices for performance, security, and cost efficiency

✔ Common pitfalls and how to avoid them

1. Key Concepts of Data Movement in Azure Data Factory

1.1 Data Movement Overview

ADF moves data between various sources and destinations, such as on-premises databases, cloud storage, SaaS applications, and big data platforms. The service relies on integration runtimes (IRs) to facilitate this movement.

1.2 Integration Runtimes (IRs) in Data Movement

ADF supports three types of integration runtimes:

Azure Integration Runtime (for cloud-based data movement)

Self-hosted Integration Runtime (for on-premises and hybrid data movement)

SSIS Integration Runtime (for lifting and shifting SSIS packages to Azure)

Choosing the right IR is critical for performance, security, and connectivity.

1.3 Data Transfer Mechanisms

ADF primarily uses Copy Activity for data movement, leveraging different connectors and optimizations:

Binary Copy (for direct file transfers)

Delimited Text & JSON (for structured data)

Table-based Movement (for databases like SQL Server, Snowflake, etc.)

2. Data Flow Types in ADF

2.1 ETL vs. ELT Approach

ETL (Extract, Transform, Load): Data is extracted, transformed in a staging area, then loaded into the target system.

ELT (Extract, Load, Transform): Data is extracted, loaded into the target system first, then transformed in-place.

ADF supports both ETL and ELT, but ELT is more scalable for large datasets when combined with services like Azure Synapse Analytics.

2.2 Batch vs. Real-Time Data Movement

Batch Processing: Scheduled or triggered executions of data movement (e.g., nightly ETL jobs).

Real-Time Streaming: Continuous data movement (e.g., IoT, event-driven architectures).

ADF primarily supports batch processing; for real-time processing, it integrates with Azure Stream Analytics or Azure Event Hubs.

3. Best Practices for Data Movement in ADF

3.1 Performance Optimization

✅ Optimize Data Partitioning: Use parallelism and partitioning in Copy Activity to speed up large transfers.

✅ Choose the Right Integration Runtime: Use self-hosted IR for on-premises data and Azure IR for cloud-native sources.

✅ Enable Compression: Compress data during transfer to reduce latency and costs.

✅ Use Staging for Large Data: Store intermediate results in Azure Blob Storage or ADLS Gen2 for faster processing.

3.2 Security Best Practices

🔒 Use Managed Identities & Service Principals: Avoid storing credentials in linked services.

🔒 Encrypt Data in Transit & at Rest: Use TLS for transfers and Azure Key Vault for secrets.

🔒 Restrict Network Access: Use Private Endpoints and VNet Integration to prevent data exposure.

3.3 Cost Optimization

💰 Monitor & Optimize Data Transfers: Use Azure Monitor to track pipeline costs and adjust accordingly.

💰 Leverage Data Flow Debugging: Reduce unnecessary runs by debugging pipelines before full execution.

💰 Use Incremental Data Loads: Avoid full data reloads by moving only changed records.
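Incremental loads are commonly built on a watermark: remember the latest modification timestamp copied so far, and next time copy only rows newer than it. In ADF this is typically assembled from Lookup and Copy activities plus a stored watermark; the Python below only sketches the logic, using SQLite and a hypothetical `orders` table with ISO-8601 `modified_at` strings.

```python
import sqlite3


def incremental_copy(src: sqlite3.Connection, dst: sqlite3.Connection,
                     last_watermark: str) -> str:
    """Copy rows modified after `last_watermark` and return the new watermark."""
    rows = src.execute(
        "SELECT id, amount, modified_at FROM orders "
        "WHERE modified_at > ? ORDER BY modified_at",
        (last_watermark,),
    ).fetchall()
    # INSERT OR REPLACE keyed on the primary key makes re-runs idempotent:
    # changed rows overwrite their old versions instead of duplicating them.
    dst.executemany(
        "INSERT OR REPLACE INTO orders (id, amount, modified_at) VALUES (?, ?, ?)",
        rows,
    )
    dst.commit()
    # Persist this value (e.g. in a watermark table) for the next run.
    return rows[-1][2] if rows else last_watermark
```

Because the comparison is strict (`>`), rows committed later with a timestamp equal to the stored watermark would be missed; re-reading a small overlap is harmless here because the write is idempotent.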

4. Common Pitfalls & How to Avoid Them

❌ Overusing Copy Activity without Parallelism: Always enable parallel copy for large datasets.

❌ Ignoring Data Skew in Partitioning: Ensure even data distribution when using partitioned copy.

❌ Not Handling Failures with Retry Logic: Use error handling mechanisms in ADF for automatic retries.

❌ Lack of Logging & Monitoring: Enable Activity Runs, Alerts, and Diagnostics Logs to track performance.

Conclusion

Data movement in Azure Data Factory is a key component of modern data engineering, enabling seamless integration between cloud, on-premises, and hybrid environments. By understanding the core concepts, data flow types, and best practices, you can design efficient, secure, and cost-effective pipelines.

Want to dive deeper into advanced ADF techniques? Stay tuned for upcoming blogs on metadata-driven pipelines, ADF REST APIs, and integrating ADF with Azure Synapse Analytics!

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

Cybersecurity Trends in Mobile App Development: How to Stay Secure in 2025

As mobile technology continues to evolve, so do the cybersecurity threats that target mobile applications. In 2025, businesses face increasing challenges in protecting user data, securing digital transactions, and maintaining compliance with global regulations. Cyberattacks are becoming more sophisticated, making it essential for businesses to implement advanced security measures in mobile app development.

At Tyfora, we prioritize security-first mobile app development, ensuring businesses can operate safely in a digital landscape filled with cyber threats. This article explores the latest cybersecurity trends in mobile app development and how businesses can stay ahead of potential security risks.

1. Zero-Trust Security Model: A Fundamental Shift in Cybersecurity

The Zero-Trust Security Model is gaining traction in mobile app development. Unlike traditional security models that assume users inside a network can be trusted, Zero-Trust enforces continuous verification of every user, device, and transaction.

How Tyfora Implements Zero-Trust Security

Multi-Factor Authentication (MFA): Requiring multiple credentials for user verification, reducing the risk of unauthorized access.

Least Privilege Access: Ensuring users and applications only have access to the data and systems they absolutely need.

Continuous Monitoring: Implementing real-time AI-driven security analytics to detect unusual activities and prevent cyberattacks.

With Tyfora’s Zero-Trust approach, businesses can prevent insider threats, unauthorized access, and data breaches more effectively.

2. AI-Powered Threat Detection and Prevention

Cybercriminals are using AI-driven attacks to exploit vulnerabilities in mobile applications. In response, companies like Tyfora are integrating AI-powered cybersecurity measures to detect and neutralize threats in real time.

How AI is Enhancing Mobile App Security

Behavioral Anomaly Detection: AI continuously analyzes user activity to detect suspicious behavior, such as unusual login locations or transaction patterns.

Automated Threat Response: AI can immediately isolate infected devices or flag suspicious actions before they lead to data breaches.

Predictive Security Analytics: AI algorithms anticipate potential attack vectors, allowing businesses to proactively address vulnerabilities.
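As a concrete (non-AI) example of the "unusual login locations" signal, the sketch below flags "impossible travel": two logins whose implied speed exceeds what a traveller could manage. The 900 km/h threshold, the 1 km tolerance for simultaneous logins, and the `Login` record are assumptions; such a rule would typically be one signal among many.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0


@dataclass
class Login:
    lat: float  # degrees
    lon: float  # degrees
    ts: float   # seconds since the epoch


def haversine_km(a: Login, b: Login) -> float:
    """Great-circle distance between two login locations."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlam = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, h)))


def is_impossible_travel(prev: Login, curr: Login, max_speed_kmh: float = 900.0) -> bool:
    """Flag a login whose implied travel speed from the previous one is implausible."""
    distance_km = haversine_km(prev, curr)
    hours = (curr.ts - prev.ts) / 3600.0
    if hours <= 0:
        # Simultaneous (or out-of-order) logins: suspicious unless essentially co-located.
        return distance_km > 1.0
    return distance_km / hours > max_speed_kmh
```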

By integrating AI-driven threat detection, Tyfora ensures that mobile applications are protected from evolving cyber threats in 2025 and beyond.

3. The Rise of Biometric Authentication for Mobile Security

Passwords are no longer enough to protect mobile applications. Biometric authentication (such as fingerprint scanning, facial recognition, and iris scanning) is emerging as a secure alternative to traditional login methods.

Tyfora’s Implementation of Biometric Security

Facial Recognition & Touch ID: Enhancing user authentication without relying on weak or reused passwords.

Voice Authentication: Ensuring hands-free, secure logins for mobile applications.

Liveness Detection: Preventing spoofing attacks by distinguishing between real users and fraudulent biometric attempts.

With Tyfora’s biometric security integration, businesses can enhance user convenience while maintaining robust security measures.

4. End-to-End Encryption for Secure Data Transmission

As businesses handle sensitive financial transactions, personal information, and confidential business data, encryption is non-negotiable in mobile app security.

How Tyfora Ensures Secure Data Transmission

Advanced End-to-End Encryption (E2EE): Encrypting data on the sender's device so that only the intended recipient can decrypt it.

Secure Communication Protocols: Implementing TLS 1.3, HTTPS, and VPN encryption to protect data in transit.

Encrypted Storage Solutions: Ensuring secure on-device and cloud storage for user data.
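
For the data-in-transit item above, a client can simply refuse to negotiate anything older than TLS 1.3. A minimal sketch using Python's standard `ssl` module (Python 3.7+ linked against an OpenSSL build with TLS 1.3); this is not Tyfora's implementation, and the function name and placeholder host are hypothetical:

```python
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that only negotiates TLS 1.3."""
    # create_default_context() turns on certificate verification and hostname checking
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Reject handshakes that would fall back to TLS 1.2 or older
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


# Usage (requires network access; api.example.com is a placeholder host):
# import socket
# ctx = make_tls13_client_context()
# with socket.create_connection(("api.example.com", 443)) as sock:
#     with ctx.wrap_socket(sock, server_hostname="api.example.com") as tls:
#         print(tls.version())  # "TLSv1.3"
```

A server that only speaks TLS 1.2 will fail the handshake with an `ssl.SSLError`, which is the intended behavior.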

By utilizing military-grade encryption techniques, Tyfora helps businesses safeguard their applications from interception, hacking, and data theft.

5. Mobile App Compliance with Global Cybersecurity Regulations

Governments worldwide are enforcing stricter data protection laws to hold businesses accountable for user privacy and security. In 2025, businesses must ensure their mobile apps comply with regulations such as:

General Data Protection Regulation (GDPR) – European Union

California Consumer Privacy Act (CCPA) – United States

Health Insurance Portability and Accountability Act (HIPAA) – Healthcare Industry

Payment Card Industry Data Security Standard (PCI DSS) – Financial Transactions

How Tyfora Ensures Compliance for Mobile Apps

Built-in Data Protection & Privacy Features: Implementing data anonymization, user consent tracking, and privacy settings within apps.

Regulatory Audits & Risk Assessments: Conducting compliance checks to ensure mobile applications meet international security standards.

Secure Third-Party Integrations: Ensuring APIs and cloud storage providers comply with cybersecurity regulations.
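
One common way to implement the data anonymization item above is keyed pseudonymization: direct identifiers are replaced by an HMAC digest, so records can still be joined without exposing the raw value. This is a sketch under assumptions: the field names are hypothetical, and the in-memory key stands in for one held in a key-management service. Note that under GDPR, pseudonymized data is still personal data, so this reduces exposure rather than removing regulatory obligations.

```python
import hashlib
import hmac
import secrets


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest (a stable pseudonym)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key; in practice, load it from a key-management service
key = secrets.token_bytes(32)

# Hypothetical record: only the direct identifier is replaced
record = {"email": "alice@example.com", "country": "DE", "purchase_total": 59.90}
safe_record = {**record, "email": pseudonymize(record["email"], key)}
print(safe_record)
```

Using a secret key (rather than a plain hash) matters: without the key, an attacker cannot rebuild the mapping by hashing a list of known email addresses.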

With Tyfora’s compliance-focused approach, businesses can operate safely across different regions without facing legal repercussions.

6. Blockchain for Enhanced Security in Mobile Applications

Blockchain technology is transforming mobile app security by providing decentralized, tamper-resistant transaction systems.

How Tyfora Uses Blockchain for Cybersecurity

Decentralized Identity Management: Eliminating reliance on centralized databases that are prone to hacking.

Secure Payment Processing: Enhancing financial transactions with blockchain-based digital ledgers.

Smart Contracts: Automating secure, trustless transactions without intermediaries.
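
The tamper-resistance that blockchains provide comes from hash-linking each record to the one before it. The sketch below shows only that property on a single machine; it has no consensus, no decentralization, and no smart contracts, and the record format is hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, data):
    # Serialize deterministically so the same block always hashes the same way
    payload = json.dumps({"index": index, "prev": prev_hash, "data": data}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append_block(chain, data):
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    index = len(chain)
    chain.append({"index": index, "prev": prev_hash, "data": data,
                  "hash": block_hash(index, prev_hash, data)})


def verify_chain(chain):
    """Recompute every hash; any edit to earlier data breaks the links."""
    prev_hash = GENESIS
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["prev"], block["data"]):
            return False
        prev_hash = block["hash"]
    return True


ledger = []
append_block(ledger, {"from": "A", "to": "B", "amount": 25})
append_block(ledger, {"from": "B", "to": "C", "amount": 10})
print(verify_chain(ledger))  # True
ledger[0]["data"]["amount"] = 2500  # tamper with history
print(verify_chain(ledger))  # False
```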

By integrating blockchain security solutions, Tyfora ensures that businesses can operate with maximum transparency and protection.

7. Secure API Development: Protecting Application Interfaces

APIs (Application Programming Interfaces) are a crucial component of mobile applications, enabling seamless integrations with third-party services. However, APIs also introduce security vulnerabilities if not properly secured.

Tyfora’s Secure API Practices

OAuth 2.0 & API Key Authentication: Ensuring only authorized systems can access sensitive data.

Rate Limiting & API Monitoring: Mitigating abusive traffic, including application-layer DDoS attempts, and unauthorized data scraping.

Token-Based Authentication: Implementing JWT (JSON Web Tokens) for secure and scalable API access.

With Tyfora’s API security measures, businesses can protect sensitive user data from API-based cyber threats.

8. The Role of Continuous Security Testing in Mobile App Development

Cybersecurity threats evolve daily, making continuous security testing a necessity rather than a one-time event.

How Tyfora Conducts Continuous Security Testing

Penetration Testing (Ethical Hacking): Simulating real-world cyberattacks to identify vulnerabilities before hackers do.

Automated Security Scanning: Using AI-powered vulnerability scanners to detect weaknesses in mobile app code.

Bug Bounty Programs: Encouraging ethical hackers to find and report security flaws before malicious actors exploit them.

By adopting continuous security testing, Tyfora helps businesses stay proactive rather than reactive in cybersecurity.

Final Thoughts: Future-Proofing Your Mobile App Security with Tyfora

Cybersecurity threats are evolving, and businesses must stay ahead to protect sensitive data, maintain user trust, and comply with global regulations. As a leader in mobile app development, Tyfora is committed to implementing the latest cybersecurity technologies to ensure robust, scalable, and future-ready mobile applications.

Why Choose Tyfora for Secure Mobile App Development?

✔ Security-First Development Approach – Ensuring that security is a priority at every stage of development.

✔ AI-Powered Threat Detection – Utilizing artificial intelligence to proactively identify and mitigate cyber risks.

✔ Regulatory Compliance Expertise – Keeping businesses safe from legal penalties with compliance-driven development.

✔ End-to-End Data Protection – Implementing encryption, biometric authentication, and secure APIs.

In 2025, businesses cannot afford to overlook cybersecurity in mobile applications. With Tyfora, you can ensure that your mobile apps are secure, compliant, and resilient against future threats.

Secure your mobile app with Tyfora today! Contact us to build a cybersecurity-driven mobile solution.

RHEL 8.8: A Powerful and Secure Enterprise Linux Solution

Red Hat Enterprise Linux (RHEL) 8.8 is an advanced and stable operating system designed for modern enterprise environments. It builds upon the strengths of its predecessors, offering improved security, performance, and flexibility for businesses that rely on Linux-based infrastructure. With seamless integration into cloud and hybrid computing environments, RHEL 8.8 provides enterprises with the reliability they need for mission-critical workloads.

One of the key enhancements in RHEL 8.8 is its optimized performance across different hardware architectures. The Linux kernel has been further refined to support the latest processors, storage technologies, and networking hardware. These RHEL 8.8 improvements result in reduced system latency, faster processing speeds, and better efficiency for demanding applications.

Security remains a top priority in RHEL 8.8. This release includes system-wide cryptographic policies and ships OpenSSL 1.1.1, which supports TLS 1.3 (OpenSSL 3.0 arrived with RHEL 9). Additionally, SELinux (Security-Enhanced Linux) continues to enforce mandatory access controls, preventing unauthorized modifications and helping to maintain system integrity. These security features make RHEL 8.8 a strong choice for organizations that prioritize data protection.
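
Rather than relying on release notes, you can check which OpenSSL build a host's Python is linked against, whether it supports TLS 1.3, and which system-wide crypto policy is active. A minimal sketch; it assumes the active policy name is stored in `/etc/crypto-policies/config`, as on RHEL and Fedora systems (worth verifying on your own hosts), and the function name is hypothetical:

```python
import ssl
from pathlib import Path


def tls_report(policy_file: str = "/etc/crypto-policies/config") -> dict:
    """Summarize the TLS capabilities visible to Python on this host."""
    try:
        policy = Path(policy_file).read_text().strip()
    except OSError:
        policy = None  # not a RHEL/Fedora-style system, or the file is unreadable
    return {
        "openssl": ssl.OPENSSL_VERSION,  # the OpenSSL build Python is linked against
        "tls13": ssl.HAS_TLSv1_3,        # True if that build can negotiate TLS 1.3
        "crypto_policy": policy,
    }


print(tls_report())
```

Note that Python may be linked against a different OpenSSL than the system `openssl` command, so this reports what Python applications will actually use.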

RHEL 8.8 continues to enhance package management with DNF (Dandified YUM), a more efficient and secure package manager that simplifies software installation, updates, and dependency management. Application Streams allow multiple versions of software packages to coexist on a single system, giving developers and administrators the flexibility to choose the best software versions for their needs.

The growing importance of containerization is reflected in RHEL 8.8's strong support for containerized applications. Podman, Buildah, and Skopeo are included, allowing businesses to build, run, and manage containers securely without requiring a central container daemon. Podman's rootless container support further strengthens security by reducing the risks associated with privileged container execution.

Virtualization capabilities in RHEL 8.8 have also been refined. The integration of Kernel-based Virtual Machine (KVM) and QEMU ensures that enterprises can efficiently deploy and manage virtualized workloads. The Cockpit web interface provides an intuitive dashboard for administrators to monitor and control virtual machines, making virtualization management more accessible.

For businesses operating in cloud environments, RHEL 8.8 seamlessly integrates with leading cloud platforms, including AWS, Azure, and Google Cloud. Optimized RHEL images ensure smooth deployments, reducing compatibility issues and providing a consistent operating experience across hybrid and multi-cloud infrastructures.

Networking improvements in RHEL 8.8 further enhance system performance and reliability. The updated NetworkManager simplifies network configuration, while enhancements to IPv6 and high-speed networking interfaces ensure that businesses can handle increased data traffic with minimal latency.

Storage management in RHEL 8.8 is more robust, with support for Stratis, an advanced storage management solution that simplifies volume creation and maintenance. Enterprises can take advantage of XFS, EXT4, and LVM (Logical Volume Manager) for scalable and flexible storage solutions. Disk encryption and snapshot management improvements further protect sensitive business data.

Automation is a core focus of RHEL 8.8, with built-in support for Ansible, allowing IT teams to automate configurations, software deployments, and system updates. This reduces manual workload, minimizes errors, and improves system efficiency, making enterprise IT management more streamlined.

Monitoring and diagnostics tools in RHEL 8.8 are also improved. Performance Co-Pilot (PCP) and Tuned provide administrators with real-time insights into system performance, enabling them to identify bottlenecks and optimize configurations for maximum efficiency.

Developers benefit from RHEL 8.8’s comprehensive development environment, which includes programming languages such as Python 3, Node.js, Golang, and Ruby. The latest version of the GCC (GNU Compiler Collection) ensures compatibility with a wide range of applications and frameworks. Additionally, enhancements to the Web Console provide a more user-friendly administrative experience.

One of the standout features of RHEL 8.8 is its long-term support and enterprise-grade lifecycle management. Red Hat provides extended security updates, regular patches, and dedicated technical support, ensuring that businesses can maintain a stable and secure operating environment for years to come. Red Hat Insights, a predictive analytics tool, helps organizations proactively detect and resolve system issues before they cause disruptions.

In conclusion, RHEL 8.8 is a powerful, secure, and reliable Linux distribution tailored for enterprise needs. Its improvements in security, containerization, cloud integration, automation, and performance monitoring make it a top choice for businesses that require a stable and efficient operating system. Whether deployed on physical servers, virtual machines, or cloud environments, RHEL 8.8 delivers the performance, security, and flexibility that modern enterprises demand.

Fighting Cloudflare's 2025 Risk Control: A Breakdown of JA4 Fingerprint Disguise with Dynamic Residential Proxies

In 2025, as demand for web crawling and data collection keeps growing, the risk control systems of major websites are constantly being upgraded. Cloudflare, an industry-leading security service provider, runs a particularly powerful risk control system. To counter Cloudflare's 2025 risk control mechanisms, many crawler developers combine dynamic residential proxies with JA4 fingerprint disguise. This article breaks down how the technique works and how it is applied.

Overview of Cloudflare 2025 Risk Control Mechanism

Cloudflare's risk control system uses a series of complex algorithms and rules to identify and block potential malicious requests. These requests may include automated crawlers, DDoS attacks, malware propagation, etc. In order to deal with these threats, Cloudflare continues to update its risk control strategies, including but not limited to IP blocking, behavioral analysis, TLS fingerprint detection, etc. Among them, TLS fingerprint detection is one of the important means for Cloudflare to identify abnormal requests.

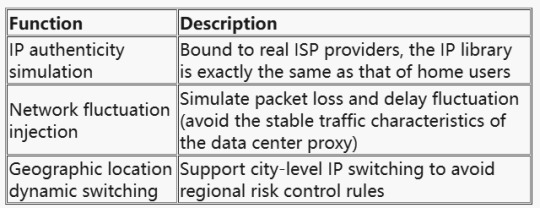

Technical Positioning of Dynamic Residential Proxy

The value of Dynamic Residential Proxy has been upgraded from "IP anonymity" to full-link environment simulation. Its core capabilities include:

Breaking down JA4 fingerprint camouflage

1. JA4 fingerprint generation logic

JA4, a TLS client fingerprint that Cloudflare uses, builds an identifier from features of the TLS handshake, partly by hashing them. Key parameters include:

TLS version: the highest TLS version offered in the handshake is part of the fingerprint (modern browsers offer TLS 1.3, while TLS 1.2 remains widely supported);

Cipher suite order: browser default suite priority (such as TLS_AES_256_GCM_SHA384 takes precedence over TLS_CHACHA20_POLY1305_SHA256);

Extended field camouflage: SNI(Server Name Indication) and ALPN (Application Layer Protocol Negotiation) must be exactly the same as the browser.

Sample code: Python TLS client configuration

2. Collaborative strategy of dynamic proxy and JA4

Step 1: Pre-screening of proxy pools

Use ASN library verification (such as ipinfo.io) to keep only IPs of residential ISPs (such as Comcast, AT&T); Inject real user network noise (such as random packet loss rate of 0.1%-2%).

Step 2: Dynamic fingerprinting

Assign an independent TLS profile to each proxy IP (simulating different browsers/device models);

Use the ja4x tool to generate fingerprint hashes to ensure that they match the whitelist of the target website.

Step 3: Request link encryption

Deploy a traffic obfuscation module (such as uTLS-based protocol camouflage) on the proxy server side;

Encrypt the WebSocket transport layer to bypass man-in-the-middle sniffing (MITM).

Countermeasures and risk assessment

1. Measured data (January-February 2025)

2. Legal and risk control red lines

Compliance: Avoid collecting personal data protected by GDPR/CCPA (such as user identity and biometric information).

Countermeasures: Cloudflare has introduced JA5 fingerprinting (based on the TCP handshake mechanism), and the camouflage algorithm needs to be updated in real time.

Precautions in practical application

When applying dynamic residential proxy combined with JA4 fingerprint camouflage technology to fight against Cloudflare risk control, the following points should also be noted:

Proxy quality selection: Select high-quality and stable dynamic residential proxy services to ensure the effectiveness and anonymity of the proxy IP.

Fingerprint camouflage strategy adjustment: According to the update of the target website and Cloudflare risk control system, timely adjust the JA4 fingerprint camouflage strategy to maintain the effectiveness of the camouflage effect.

Comply with laws and regulations: During the data crawling process, it is necessary to comply with relevant laws and regulations and the terms of use of the website to avoid infringing on the privacy and rights of others.

Risk assessment and response: When using this technology, the risks that may be faced should be fully assessed, and corresponding response measures should be formulated to ensure the legality and security of data crawling activities.

Conclusion

Dynamic residential proxies combined with JA4 fingerprint camouflage are an effective means of countering Cloudflare's 2025 risk control. By hiding the real IP address and simulating real user behavior and TLS fingerprints, this approach can reduce the risk of being identified by the risk control system and improve the success rate and efficiency of data crawling. However, implementing it also requires attention to proxy quality, timely adjustment of fingerprint disguise strategies, and compliance with laws and regulations, to keep data scraping activities legal and secure.

THSYU: Empowering the Future of Cryptocurrency Trading with Innovation and Security

🌟 Welcome to THSYU! 🌟

In the fast-paced world of cryptocurrency, THSYU has quickly emerged as a key player, blending cutting-edge technology with user-centric services and robust security features. Our platform is designed to stand out in the ever-evolving digital asset market, offering a wide array of services, including secure trading, investment management, and decentralized finance (DeFi) solutions.

🔒 Unparalleled Security: Protecting User Assets

At THSYU, security is our top priority. We utilize advanced encryption protocols like AES-GCM and TLS 1.3 to protect your data throughout every transaction. Our zero-trust security model ensures that every access point is thoroughly verified, minimizing the risk of unauthorized access. Plus, we safeguard user funds with a combination of cold and hot wallet strategies, protecting your assets from external threats.

Our intelligent risk control systems leverage machine learning and real-time analytics to detect abnormal trading activities and potential fraud, providing an extra layer of protection for all users. By identifying risks early, we ensure that every trade on our platform is secure and compliant with financial regulations.

⚡ Innovation at the Core: Advanced Trading Features

Technological innovation is at the heart of THSYU. Our distributed architecture offers high scalability and availability, allowing you to trade without disruption even during peak traffic. We use in-memory databases for low-latency trading, ensuring quick order execution and an enhanced user experience.

One of our standout features is cross-chain trading capabilities, allowing you to seamlessly trade assets across multiple blockchains like Bitcoin, Ethereum, and Solana. With Layer 2 scalability solutions, we provide faster and cheaper transactions while maintaining top-notch security and transparency.

💎 DeFi and NFT Integration: Expanding Investment Opportunities

THSYU goes beyond traditional trading by integrating with DeFi protocols, giving you access to a broader range of financial services, including liquidity mining and synthetic asset trading. Our native NFT marketplace allows you to mint, trade, and auction NFTs, opening doors to investment opportunities in the exciting world of digital art and collectibles.

🌍 Global Reach and Compliance

We are committed to maintaining the highest standards of compliance, ensuring adherence to global and regional regulations. With registrations such as Money Services Business (MSB) status in the United States, THSYU provides a secure and legally compliant trading environment. As we expand globally, we continue to integrate emerging technologies while ensuring user protection and trust.

In conclusion, THSYU is setting the standard for cryptocurrency trading by combining state-of-the-art security features, advanced technology, and a commitment to regulatory compliance. As we continue to innovate, we are well-positioned to lead the future of digital asset trading and investment. https://www.thsyu.com/

✨ Join us at THSYU and experience the future of cryptocurrency trading! ✨

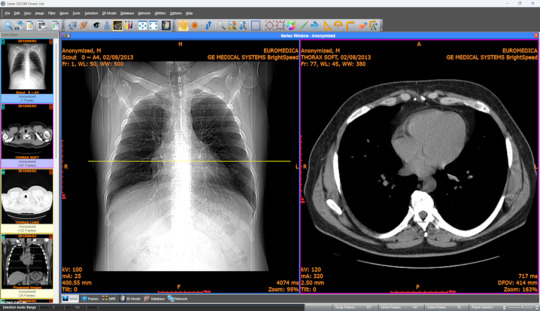

Secure Medical Image Sharing Made Simple: A Free PACS Solution Guide

As healthcare facilities increasingly collaborate to provide comprehensive patient care, the need for efficient and secure medical image sharing has never been more critical.

Fortunately, implementing a free PACS viewer can help healthcare providers streamline their workflow while maintaining strict security standards.

In this guide, we'll walk through everything you need to know about setting up a secure cross-facility image sharing system.

PACS Fundamentals

Picture Archiving and Communication Systems (PACS) form the backbone of modern medical imaging. Before diving into implementation, let's understand the key components:

Why Free PACS Viewers?

While premium PACS solutions can cost upwards of $50,000, free alternatives have evolved to offer robust features. According to recent healthcare IT surveys, over 65% of small to medium-sized facilities now use some form of free PACS viewing solution.

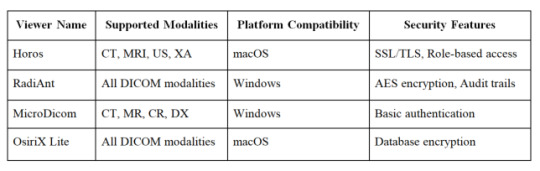

Selecting the Right Free PACS Viewer

When choosing a free PACS viewer, consider these essential features:

Must-Have Features:

DICOM compatibility

Multi-modality support

Cross-platform functionality

Active development community

Regular security updates

Here's a comparison of popular free PACS viewers:

Security Requirements and Compliance

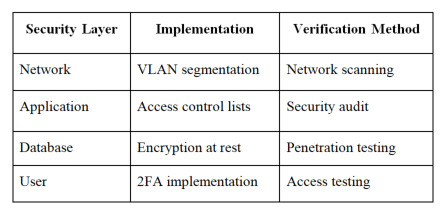

HIPAA Compliance is Non-Negotiable

Your implementation must adhere to strict security protocols:

1. Data Encryption

Use AES-256 encryption for stored images

Implement TLS 1.3 for data in transit

Regular encryption key rotation

2. Access Control

Multi-factor authentication

Role-based access control (RBAC)

Detailed audit logging
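
The access-control items above (role-based access plus audit logging) can be sketched as a permission check that records every decision, allowed or denied. A minimal sketch under assumptions: the roles, permission strings, and log format are hypothetical, and multi-factor authentication is assumed to be handled upstream by your identity provider.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("pacs.audit")

# Hypothetical role-to-permission mapping; IT admins deliberately get no image access
ROLE_PERMISSIONS = {
    "radiologist": {"study:view", "study:annotate", "report:sign"},
    "referring_physician": {"study:view"},
    "it_admin": {"user:manage"},
}


def is_allowed(user: str, role: str, permission: str, study_id: str) -> bool:
    """Check a permission against the user's role and write an audit entry either way."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())  # unknown roles get nothing
    audit_log.info(
        "%s user=%s role=%s perm=%s study=%s result=%s",
        datetime.now(timezone.utc).isoformat(), user, role, permission, study_id,
        "ALLOW" if allowed else "DENY",
    )
    return allowed
```

For example, `is_allowed("dr_lee", "radiologist", "report:sign", "CT-0001")` returns `True` and logs an `ALLOW` line; logging denials too is what makes later access audits useful.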

Key Security Statistics:

Healthcare data breaches cost an average of $429 per record

60% of breaches involve unauthorized access

Regular security audits reduce breach risks by 50%

Implementation Steps

1. Network Infrastructure Setup

Required Components:

- Dedicated VLAN for PACS traffic

- Hardware firewall

- VPN for remote access

- Load balancer (for high availability)

2. Server Configuration

Begin with proper server hardening:

Operating System Security

Apply latest security patches

Disable unnecessary services

Implement host-based firewall rules

Database Setup

Separate database server

Regular automated backups

Encryption at rest

3. PACS Viewer Installation

Step-by-Step Process:

Download the chosen free PACS viewer

Verify checksum for software integrity

Install on designated workstations

Configure initial security settings

Test basic functionality
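
The checksum step above can be automated so that a corrupted or tampered installer is caught before installation. A minimal sketch, assuming the vendor publishes a SHA-256 hash for the download; the function names are hypothetical:

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large installers don't need to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_download(path: str, published_sha256: str) -> bool:
    """Compare against the vendor's published hash, ignoring case and stray whitespace."""
    return sha256_of_file(path) == published_sha256.strip().lower()
```

Usage: `verify_download("viewer-setup.zip", "<hash from the vendor's download page>")`. If it returns `False`, delete the file and download it again from the official source rather than installing it.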

4. Security Implementation

Critical Security Measures:

Best Practices and Maintenance

Daily Operations

Regular Maintenance Tasks:

System health checks

Security log review

Backup verification

User access audits

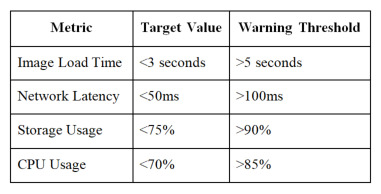

Performance Optimization

Monitor these key metrics:

Image retrieval time

System response time

Network latency

Storage utilization

Recommended Performance Thresholds:

Troubleshooting Common Issues

Image Loading Problems

Common Solutions:

Clear viewer cache

Verify network connectivity

Check file permissions

Validate DICOM compatibility

Connection Issues

Follow this troubleshooting sequence:

Verify network status

Check VPN connectivity

Validate server status

Review firewall rules
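
The first checks in that sequence (network, VPN, and server status) often come down to whether a TCP port answers at all. A minimal sketch; the function name is hypothetical. Port 104 is the well-known DICOM port, though many PACS servers listen on 11112 instead.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or DNS failure
        return False
```

Usage: `port_reachable("pacs.example.internal", 11112)` (a placeholder host). A `False` result for the PACS server while other hosts respond points at the VPN, routing, or firewall rules rather than at the viewer itself.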

Staff Training and Documentation

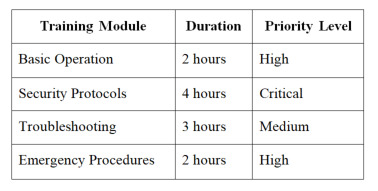

Essential Training Components:

Navigating Mobile Banking Regulations: QA Strategies for Compliance and Confidence


Introduction

Regulatory compliance is one of the biggest challenges in mobile banking. Financial institutions must ensure that their apps meet stringent security, data protection, and anti-fraud regulations to protect customers and maintain trust. Non-compliance can result in hefty fines, reputational damage, and even legal action.

By leveraging quality assurance consulting services, banks and fintech companies can navigate complex regulations, implement effective compliance strategies, and deliver secure, seamless mobile banking experiences.

Why Compliance Matters in Mobile Banking

1. Protecting Customer Data

Customer trust hinges on the security of their financial data. QA consulting ensures that:

User data is encrypted at rest with AES-256 and in transit with TLS 1.3.

Secure authentication mechanisms (MFA, biometric login) are in place.

Personal information is stored and transmitted securely, preventing leaks.

2. Avoiding Legal Penalties and Fines

Regulatory violations can result in substantial financial and legal consequences. QA experts help financial institutions adhere to laws such as:

GDPR & CCPA: Ensuring user data privacy rights are upheld.

PCI DSS: Securing card transactions and preventing payment fraud.

PSD2 & Open Banking Standards: Enforcing strong customer authentication (SCA) and secure API access.

3. Preventing Fraud and Cybercrime

Mobile banking fraud is on the rise, making compliance essential for security. QA consulting helps by:

Implementing fraud detection systems to analyze transaction patterns.

Running security audits to detect vulnerabilities in mobile apps.

Ensuring continuous monitoring of transactions to identify suspicious activity.

Key QA Strategies for Mobile Banking Compliance

1. Automated Compliance Testing

Reduces the risk of human error in regulatory checks.

Ensures faster validation of security and data privacy measures.

Identifies gaps in compliance early in the development cycle.
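
Automated compliance testing often comes down to codified rules that run in CI on every build. A minimal sketch, assuming a hypothetical configuration dictionary and hypothetical internal thresholds; these rules are illustrative and not quoted from PCI DSS, GDPR, or PSD2.

```python
# Hypothetical release configuration for a mobile banking backend
APP_CONFIG = {
    "min_tls_version": "1.3",
    "mfa_required": True,
    "session_timeout_minutes": 10,
    "logs_full_card_numbers": False,
}


def version_tuple(version: str) -> tuple:
    return tuple(int(part) for part in version.split("."))


# Hypothetical internal rules; thresholds are illustrative, not taken from any regulation
RULES = {
    "transport uses TLS 1.2 or newer": lambda c: version_tuple(c["min_tls_version"]) >= (1, 2),
    "multi-factor authentication enforced": lambda c: c["mfa_required"] is True,
    "idle sessions expire within 15 minutes": lambda c: c["session_timeout_minutes"] <= 15,
    "full card numbers never written to logs": lambda c: not c["logs_full_card_numbers"],
}


def compliance_failures(config: dict) -> list:
    """Return the names of all rules the configuration violates."""
    return [name for name, check in RULES.items() if not check(config)]


failures = compliance_failures(APP_CONFIG)
print("FAILED: " + ", ".join(failures) if failures else "All compliance checks passed")
```

In a CI pipeline, exiting with a non-zero status when `failures` is non-empty blocks the release, which is what catches gaps early in the development cycle.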

2. Secure API Testing

Verifies that third-party integrations meet compliance and security standards.

Prevents unauthorized access through tokenized authentication.

Ensures encrypted data transmission between banking systems.

3. Continuous Security Audits

Regular penetration testing helps detect system vulnerabilities.

Real-time monitoring tools flag security breaches before they escalate.

Compliance reports provide insights into risk areas for improvement.

4. Performance and Load Testing

Ensures apps can handle peak traffic without downtime.

Simulates real-world banking scenarios to test system resilience.

Optimizes app performance to meet regulatory uptime requirements.

Conclusion

In the highly regulated financial sector, ensuring compliance is not optional—it’s a necessity. Investing in quality assurance consulting services allows mobile banking providers to maintain regulatory compliance, strengthen security, and build trust with their users.

Call to Action

Is your mobile banking app fully compliant with industry regulations? Contact our quality assurance consulting services today for a thorough compliance assessment and robust QA solutions.

NGINX MasterClass: NGINX Server & Custom Load Balancer

Introduction to NGINX

NGINX is a powerful, high-performance web server that also acts as a reverse proxy, load balancer, and HTTP cache. Known for its speed, stability, and ability to handle large amounts of traffic, NGINX is one of the most popular web servers in the world. Whether you're serving static content or managing a high-traffic website, NGINX is the go-to solution for many developers and system administrators.

What is NGINX?

History of NGINX

Igor Sysoev began developing NGINX in 2002 to address the C10K problem, which refers to web servers' struggles to handle 10,000 concurrent client connections; the first public release followed in 2004. Since then, NGINX has evolved into a feature-rich tool used not only as a web server but also as a reverse proxy and load balancer.

Popularity and Usage

NGINX has grown to become a staple in modern web infrastructure. It powers some of the most visited websites globally, including Netflix, GitHub, and Pinterest, thanks to its ability to handle heavy traffic and provide flexible solutions for scaling.

Key Features of NGINX

HTTP and Reverse Proxy Server

At its core, NGINX is designed to efficiently serve both static and dynamic content. Additionally, it excels at forwarding client requests to backend servers using reverse proxy functionality.

Load Balancer

NGINX provides built-in load-balancing capabilities. It distributes incoming network traffic across multiple servers, ensuring that no single server becomes overloaded.

Caching and Compression

One of NGINX’s standout features is its ability to cache responses from servers, reducing load times and bandwidth usage. Compression algorithms such as Gzip further optimize performance by reducing the size of files transmitted over the network.

Configuring Server Blocks

Server blocks can be customized for specific domains or configurations. Each server block can listen on different IP addresses or ports, allowing for granular control of traffic.

NGINX as a Web Server

Serving Static and Dynamic Content

Handling Static Files

NGINX is extremely efficient at serving static files such as HTML, CSS, and images. You simply need to specify the directory that contains your static files in the server block.

Proxying Dynamic Content to Application Servers

For dynamic content, NGINX can act as a reverse proxy, forwarding requests to application servers like Node.js, Python, or Ruby on Rails.

Handling HTTP Requests

Understanding How NGINX Handles HTTP

NGINX efficiently manages HTTP requests using an event-driven architecture. This allows it to handle many connections concurrently without overwhelming server resources.

Using the Access and Error Logs

NGINX keeps detailed logs that track client requests and server errors. These logs are essential for debugging and monitoring traffic.

NGINX as a Reverse Proxy

Reverse Proxy Basics

A reverse proxy forwards client requests to backend servers. This provides better security, load distribution, and content optimization.

Benefits of Using NGINX as a Reverse Proxy

By acting as a reverse proxy, NGINX can offload processing tasks from application servers, cache responses, and terminate SSL/TLS encryption, all of which improve performance and security.

Best Practices for SSL Configuration

To ensure security, use strong encryption protocols like TLS 1.2 or 1.3 and configure settings like HTTP Strict Transport Security (HSTS) for added protection.

Custom Load Balancing with NGINX

What is Load Balancing?

Load balancing is the process of distributing incoming traffic across multiple servers to prevent any single server from being overwhelmed.

Types of Load Balancing: Round Robin, Least Connections, IP Hash

NGINX supports several load balancing algorithms:

Round Robin: Distributes requests evenly across servers in turn (the default method).

Least Connections: Sends traffic to the server with the fewest active connections.

IP Hash: Routes requests from the same client IP to the same server.
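
The three algorithms above can be simulated in a few lines. This is a conceptual sketch, not NGINX's implementation: NGINX's round robin is weighted, and its `ip_hash` uses its own hash over the first three octets of an IPv4 address, whereas this sketch hashes the full address with SHA-256. The server names are hypothetical.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotation."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Pick the server with the fewest active connections (ties go to the earliest listed)."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1  # call when the request finishes


class IPHash:
    """Map each client IP to a stable server."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]


servers = ["app1:8080", "app2:8080", "app3:8080"]
rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app1:8080', 'app2:8080', 'app3:8080', 'app1:8080']
```

IP hash gives session stickiness without shared state, at the cost of uneven load when many clients sit behind one address (for example, a corporate NAT).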

NGINX Security Best Practices

Securing NGINX Server

Using Firewalls and Restricting Access

To secure your NGINX server, you can configure firewalls, restrict access based on IP addresses, and disable unnecessary modules.

Monitoring and Optimizing NGINX Performance

Monitoring NGINX with Tools

Using NGINX Status Module

The NGINX stub_status module (ngx_http_stub_status_module) provides basic real-time status information, such as active connections and request counts, helping you diagnose issues.
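
The stub_status page is plain text, so it is easy to turn into numbers for a monitoring script. A minimal sketch; the sample text follows the output format shown in NGINX's stub_status documentation, and the function name is hypothetical.

```python
import re

SAMPLE = """Active connections: 291
server accepts handled requests
 16630948 16630948 31070465
Reading: 6 Writing: 179 Waiting: 106
"""


def parse_stub_status(text: str) -> dict:
    """Parse the plain-text output of NGINX's stub_status page into integers."""
    active = re.search(r"Active connections:\s*(\d+)", text)
    counters = re.search(r"^[ \t]*(\d+)[ \t]+(\d+)[ \t]+(\d+)[ \t]*$", text, re.MULTILINE)
    states = re.search(r"Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)", text)
    if not (active and counters and states):
        raise ValueError("unrecognized stub_status output")
    accepts, handled, requests = map(int, counters.groups())
    reading, writing, waiting = map(int, states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
        # accepts > handled means connections were dropped (e.g. worker_connections limit)
        "dropped": accepts - handled,
    }


print(parse_stub_status(SAMPLE))
```

To read a live server, fetch the page with `urllib.request.urlopen(...)` from whatever location you exposed `stub_status` on (for example a hypothetical `/nginx_status` restricted to localhost) and pass the decoded body to `parse_stub_status`.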

External Monitoring Tools

There are various external monitoring tools, such as Prometheus and Grafana, that can give you deeper insights into NGINX performance metrics.

Performance Tuning

Optimizing Configurations for Performance

To improve performance, you can adjust worker processes, buffer sizes, and caching parameters in the NGINX configuration.

Caching Strategies for Faster Response Times

Effective use of caching reduces the load on backend servers, speeds up response times, and conserves bandwidth.

Conclusion

NGINX is an incredibly versatile tool that can serve as a web server, reverse proxy, and load balancer, making it essential for scalable and high-performance web infrastructure. From securing your website with SSL to distributing traffic using custom load balancers, NGINX offers a wide array of features to optimize your web applications.

FAQs

What is the primary function of NGINX?

The primary function of NGINX is to serve as a high-performance web server, reverse proxy, and load balancer.

Can NGINX be used as a load balancer?

Yes, NGINX is widely used for load balancing, offering several algorithms like Round Robin, Least Connections, and IP Hash.

How does SSL termination work with NGINX?

SSL termination in NGINX involves decrypting HTTPS traffic at the server level before passing the unencrypted traffic to backend servers.

What are the best practices for securing NGINX?

Best practices for securing NGINX include setting up SSL, using firewalls, implementing rate limiting, and regularly updating the software.
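
Rate limiting in NGINX is provided by the `limit_req` module, whose documentation describes it as a leaky bucket. The sketch below shows the idea in Python and is not NGINX's code: the bucket drains at `rate` requests per second, up to `burst` extra requests are tolerated, and anything beyond that is rejected immediately (roughly like `limit_req` with `nodelay`; NGINX can also delay excess requests instead). Parameter values are hypothetical.

```python
import time


class LeakyBucket:
    """Admit about `rate` requests per second, tolerating `burst` extra; reject the rest."""

    def __init__(self, rate: float, burst: int = 0, clock=time.monotonic):
        self.rate = rate              # requests drained per second
        self.capacity = burst + 1     # one slot for the current request plus the burst allowance
        self.level = 0.0
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Drain the bucket at a constant rate for the time elapsed since the last call
        self.level = max(0.0, self.level - (now - self.last) * self.rate)
        self.last = now
        if self.level + 1 <= self.capacity:
            self.level += 1
            return True
        return False
```

In practice you keep one bucket per client key (for example per IP address), which is what NGINX's shared-memory zone does for `limit_req`.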

How can I monitor NGINX performance?

You can monitor NGINX performance using its built-in status module or external monitoring tools like Prometheus and Grafana.

Cloud SQL Auth Proxy: Securing Your Cloud SQL Instances

Cloud SQL Auth Proxy

This post explains how to use the Cloud SQL Auth Proxy to create secure, encrypted, and authorised connections to your instances. Note that to connect to Cloud SQL from the App Engine standard environment or App Engine flexible environment, you do not need to configure SSL or use the Cloud SQL Auth Proxy.

The Cloud SQL Auth Proxy’s advantages

The Cloud SQL Auth Proxy is a Cloud SQL connector that provides secure access to your instances without requiring authorised networks or SSL configuration.

The following are some advantages of the Cloud SQL Auth Proxy and other Cloud SQL Connectors:

Secure connections:

The Cloud SQL Auth Proxy automatically encrypts traffic to and from the database using TLS 1.3 with a 256-bit AES cipher. SSL certificates are used to verify the identities of clients and servers and are independent of the database protocol, and you won't need to manage them yourself.
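The proxy configures TLS for you, so no client code is needed. Purely to illustrate what "TLS 1.3 with certificate-based identity checks" means, this Python sketch builds a client-side `ssl.SSLContext` that refuses anything older than TLS 1.3, verifies the server certificate, and can optionally present a client certificate. It is not how the Cloud SQL Auth Proxy is implemented, and the file-path parameters are hypothetical.

```python
import ssl
from typing import Optional


def make_tls13_client_context(ca_file: Optional[str] = None,
                              client_cert: Optional[str] = None,
                              client_key: Optional[str] = None) -> ssl.SSLContext:
    # create_default_context() already requires a valid server certificate
    # (CERT_REQUIRED) and checks that the hostname matches it.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Refuse to negotiate anything older than TLS 1.3.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    if client_cert is not None:
        # Present a client certificate so the server can verify our identity too.
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx
```

Verifying both sides with certificates is what lets the proxy authenticate client and server without relying on the database's own protocol.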

Simpler authorization of connections:

The Cloud SQL Auth Proxy uses IAM permissions to control who and what can connect to your Cloud SQL instances. Because it handles authentication with Cloud SQL, you don't need to supply static IP addresses.

The proxy relies on existing IP connectivity; it does not provide a new connectivity path. To connect to a Cloud SQL instance over private IP, the Cloud SQL Auth Proxy must run on a resource with access to the same VPC network as the instance.

The operation of the Cloud SQL Auth Proxy

The Cloud SQL Auth Proxy works by running a local client in your local environment. Your application communicates with the proxy using the standard protocol of your database.

The proxy communicates with its companion process on the server through a secure tunnel. Each connection made through the Cloud SQL Auth Proxy results in one connection to the Cloud SQL instance.

When an application connects to the Cloud SQL Auth Proxy, the proxy checks whether a connection to the target Cloud SQL instance already exists. If it does not, the proxy calls the Cloud SQL Admin API to obtain an ephemeral SSL certificate and uses it to connect to Cloud SQL. Ephemeral SSL certificates expire after about one hour, and the Cloud SQL Auth Proxy refreshes them before they expire.
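The refresh-before-expiry behaviour can be modelled with a small Python class. This is a hypothetical sketch, not the proxy's actual logic: `fetch_cert` stands in for the Cloud SQL Admin API call, and the four-minute `refresh_margin` is an arbitrary value chosen for the example.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class EphemeralCert:
    pem: str
    expires_at: float  # Unix timestamp, in seconds


class CertRefresher:
    def __init__(self, fetch_cert: Callable[[], EphemeralCert],
                 refresh_margin: float = 240.0,
                 clock: Callable[[], float] = time.time) -> None:
        self._fetch_cert = fetch_cert      # hypothetical stand-in for the Admin API call
        self._margin = refresh_margin      # refresh this many seconds before expiry
        self._clock = clock
        self._cert: Optional[EphemeralCert] = None
        self.fetches = 0

    def current(self) -> EphemeralCert:
        """Return a certificate, fetching a new one if none exists or expiry is near."""
        now = self._clock()
        if self._cert is None or now >= self._cert.expires_at - self._margin:
            self._cert = self._fetch_cert()
            self.fetches += 1
        return self._cert
```

This version refreshes lazily when a certificate is requested, which keeps it short; a production client would more likely refresh in the background so that new connections never wait on the API call.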

The Cloud SQL Auth Proxy makes outgoing (egress) connections to your Cloud SQL instance only on port 3307. Because it calls APIs through the domain name sqladmin.googleapis.com, which does not have a stable IP address, all egress TCP connections on port 443 must be allowed. If your client machine has an outbound firewall policy, make sure it permits outgoing connections to port 3307 on your Cloud SQL instance's IP address.
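To check these firewall requirements from a client machine, a plain TCP reachability test is enough. This Python sketch only opens and closes a TCP connection; it does not speak any Cloud SQL protocol.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

For example, `can_connect("sqladmin.googleapis.com", 443)` checks the API path, and `can_connect("203.0.113.10", 3307)` checks the path to an instance at that address (a placeholder; substitute your instance's IP).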

The Cloud SQL Auth Proxy does not provide connection pooling, but it can be paired with a separate connection pool to improve efficiency.
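Because the proxy does not pool connections, pooling happens in the application. In practice you would use the pool built into your database driver or ORM (SQLAlchemy, for example, pools connections by default); the Python sketch below only shows the idea. `SimplePool` and its `factory` callable are hypothetical; the factory would open a database connection to the local address where the proxy listens.

```python
import queue
from contextlib import contextmanager
from typing import Callable, Generic, Iterator, TypeVar

T = TypeVar("T")


class SimplePool(Generic[T]):
    """A fixed-size pool that hands out pre-opened connections."""

    def __init__(self, factory: Callable[[], T], size: int) -> None:
        self._idle: "queue.Queue[T]" = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())   # open every connection up front

    @contextmanager
    def connection(self, timeout: float = 5.0) -> Iterator[T]:
        # Blocks until a connection is free; raises queue.Empty after `timeout`.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)        # always return the connection to the pool
```

Reusing a small number of long-lived connections means the proxy does not have to set up a new connection to the instance for every query.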

The connection between Cloud SQL Auth Proxy and Cloud SQL is depicted in the following diagram:

Image credit: Google Cloud

Requirements for using the Cloud SQL Auth Proxy

To use the Cloud SQL Auth Proxy, you must meet the following requirements:

The Cloud SQL Admin API must be enabled.

You must provide the proxy with Google Cloud authentication credentials.

You must provide the proxy with a valid database user account and password.

The instance must either have a public IPv4 address or be configured to use private IP.

The public IP address does not need to be added as an authorised network address, and it does not need to be reachable from any external address.

Options for starting the Cloud SQL Auth Proxy

When you start the proxy, you provide the following information:

Which Cloud SQL instances to connect to, and where to listen for data from your application that is bound for Cloud SQL

Where to find the credentials it uses to authenticate your application to Cloud SQL

If necessary, which type of IP address to use

The startup options you provide determine whether the proxy listens on a TCP port or on a Unix socket. If it listens on a Unix socket, it creates the socket at the location you choose, usually the /cloudsql/ directory. For TCP, the Cloud SQL Auth Proxy listens on localhost by default.
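These startup details map onto command-line flags. The helper below assembles an argument list under an assumption worth checking against `cloud-sql-proxy --help`: that you run version 2 of the proxy, whose binary is `cloud-sql-proxy` and which takes the instance connection name (`PROJECT:REGION:INSTANCE`) as a positional argument. Version 1 used different flag names. `proxy_command` is a hypothetical helper, and the project, region, and instance values in the usage note are placeholders.

```python
from typing import List, Optional


def proxy_command(instance: str,
                  credentials_file: Optional[str] = None,
                  private_ip: bool = False,
                  port: Optional[int] = None,
                  unix_socket_dir: Optional[str] = None) -> List[str]:
    """Build an argv list for the Cloud SQL Auth Proxy (v2 flag names assumed)."""
    if port is not None and unix_socket_dir is not None:
        raise ValueError("choose either a TCP port or a Unix socket directory")
    args = ["cloud-sql-proxy"]
    if credentials_file:
        # Where to find the credentials (e.g. a service account key file).
        args += ["--credentials-file", credentials_file]
    if private_ip:
        # Which IP address type to use.
        args.append("--private-ip")
    # Where to listen: a Unix socket directory, or a TCP port on localhost.
    if unix_socket_dir:
        args += ["--unix-socket", unix_socket_dir]
    elif port is not None:
        args += ["--port", str(port)]
    # Which instance to connect to.
    args.append(instance)
    return args
```

For example, `proxy_command("my-project:us-central1:my-instance", credentials_file="key.json", port=5432)` yields a list that can be passed to `subprocess.run`; passing a list instead of a shell string avoids quoting problems.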

For authentication, use a service account

To authorise your connections to a Cloud SQL instance, you must authenticate the proxy as a Cloud SQL IAM identity.

The benefit of using a service account for this purpose is that you can create a credential file specifically for the Cloud SQL Auth Proxy, and it stays explicitly linked to the proxy for as long as the proxy is running. For this reason, using a service account is the recommended approach for production instances that aren't running on a Compute Engine instance.

If you need to run the proxy on several machines, you can replicate the credential file in a system image.

To use this method, you must create and manage the credential file. Only users with the resourcemanager.projects.setIamPolicy permission, such as project owners, can create the service account. If your Google Cloud user does not have this permission, you will need someone else to create the service account, or you will need to find another way to authenticate the proxy.

Read more on govindhtech.com


Shop for the best quality firewalls in Dubai, UAE

The Fortinet FortiGate 80F firewall meets the performance needs of hyperscale and hybrid IT architectures, helping organizations deliver an optimal user experience and manage security risks for better business continuity, with reduced cost and complexity.

Protect your network with IPS, advanced malware protection, and email and web protection using the Unified Threat Protection (UTP) bundle. The recommended use cases for UTP are NGFW and Secure Web Gateway.

It protects against malware, exploits, and malicious websites in both encrypted and unencrypted traffic, and it prevents and detects known attacks using threat intelligence from FortiGuard Labs security services. Built on Fortinet's security processors (SPUs), it is designed for high threat-protection performance and ultra-low latency, including for SSL-encrypted traffic; Fortinet was the first firewall vendor to provide TLS 1.3 deep inspection.
