#Tableau Developer in 5 Simple Steps


Cross-Mapping Tableau Prep Workflows into Power Query: A Developer’s Blueprint

When migrating from Tableau to Power BI, one of the most technically nuanced challenges is translating Tableau Prep workflows into Power Query in Power BI. Both tools are built for data shaping and preparation, but they differ significantly in structure, functionality, and logic execution. For developers and BI engineers, mastering this cross-mapping process is essential to preserve the integrity of ETL pipelines during the migration. This blog offers a developer-centric blueprint to help you navigate this transition with clarity and precision.

Understanding the Core Differences

At a foundational level, Tableau Prep focuses on a flow-based, visual paradigm where data steps are connected in a linear or branching path. Power Query, meanwhile, operates in a functional, stepwise M code environment. While both support similar operations—joins, filters, aggregations, data type conversions—the implementation logic varies.

In Tableau Prep:

Actions are visual and sequential (Clean, Join, Output).

Operations are visually displayed in a flow pane.

Users rely heavily on drag-and-drop transformations.

In Power Query:

Transformations are recorded as a series of applied steps using the M language.

Logic is encapsulated within functional scripts.

The formula bar and Advanced Editor let you edit each step's M code directly.

Step-by-Step Mapping Blueprint

Here’s how developers can strategically cross-map common Tableau Prep components into Power Query steps:

1. Data Input Sources

Tableau Prep: Uses connectors or extracts to pull from databases, Excel, or flat files.

Power Query Equivalent: Use “Get Data” with the appropriate connector (SQL Server, Excel, Web, etc.) and configure using the Navigator pane.

✅ Developer Tip: Re-create connection parameters in Power Query and re-enter credentials in Power BI, since they do not carry over from Tableau; otherwise connections can break during refresh.

2. Cleaning and Shaping Data

Tableau Prep Actions: Rename fields, remove nulls, change types, etc.

Power Query Steps: Use functions such as Table.RenameColumns (rename fields), Table.SelectRows (filter out nulls), and Table.TransformColumnTypes (change types).

✅ Example: Tableau Prep’s “Change Data Type” ↪ Power Query:

```m
// Source is the previous step; this converts the "Date" column to the date type
Table.TransformColumnTypes(Source,{{"Date", type date}})
```

3. Joins and Unions

Tableau Prep: Visual Join nodes with configurations (Inner, Left, Right).

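Power Query: Use the Merge Queries feature (which generates Table.NestedJoin) or write Table.Join directly. For unions, use Append Queries, which generates Table.Combine.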

✅ Equivalent Code Snippet:

```m
// Inner join on ID; matching TableB rows go into the nested column "NewColumn", to be expanded next
Table.NestedJoin(TableA, {"ID"}, TableB, {"ID"}, "NewColumn", JoinKind.Inner)
```
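Because a Tableau Prep Join node and its Power Query counterpart should keep the same rows for the same join kind, one way to sanity-check a migrated join is to reason about row counts independently of either tool. The sketch below is plain Python, not M; the `join_rows` helper, the sample `orders`/`customers` tables, and the `ID` key are hypothetical, and only inner and left outer semantics (roughly what JoinKind.Inner and JoinKind.LeftOuter keep) are modelled.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def join_rows(left: List[Row], right: List[Row], key: str, kind: str = "inner") -> List[Row]:
    """Illustrate inner vs. left outer join semantics on lists of dict rows."""
    if kind not in ("inner", "left"):
        raise ValueError("kind must be 'inner' or 'left'")
    # Index the right table by key so each lookup is a dictionary hit.
    index: Dict[Any, List[Row]] = {}
    for rrow in right:
        index.setdefault(rrow[key], []).append(rrow)
    result: List[Row] = []
    for lrow in left:
        matches = index.get(lrow[key], [])
        # One output row per matching pair, as in a relational join.
        for rrow in matches:
            result.append({**lrow, **rrow})
        # A left outer join also keeps left rows that found no match.
        if not matches and kind == "left":
            result.append(dict(lrow))
    return result


orders = [{"ID": 1, "Amount": 50}, {"ID": 2, "Amount": 75}, {"ID": 3, "Amount": 20}]
customers = [{"ID": 1, "Name": "Ana"}, {"ID": 2, "Name": "Raj"}]

print(len(join_rows(orders, customers, "ID", "inner")))  # 2
print(len(join_rows(orders, customers, "ID", "left")))   # 3
```

If the Tableau Prep output and the migrated query disagree on row counts, the join kind and key columns are the first things worth re-checking. Keep in mind that Table.NestedJoin puts matches in a nested column, so counts are only comparable after that column is expanded.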

4. Calculated Fields / Derived Columns

Tableau Prep: Create Calculated Fields using simple functions or logic.

Power Query: Use “Add Column” > “Custom Column” and M code logic.

✅ Tableau Formula Example: IF [Sales] > 100 THEN "High" ELSE "Low" ↪ Power Query:

```m
// Typed into the Custom Column dialog; Power Query wraps it in Table.AddColumn(..., each ...)
if [Sales] > 100 then "High" else "Low"
```

5. Output to Destination

Tableau Prep: Output to .hyper, Tableau Server, or file.

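Power BI: Load to the Power BI data model. Power Query Editor in Power BI Desktop has no built-in output step for Excel or CSV files, so file exports usually happen downstream (for example, exporting data from a report visual).

✅ Developer Note: In Power BI, query results load into the model, so manual exports are needed only when a downstream process specifically requires a file.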

Best Practices for Developers

Modularize: Break complex Prep flows into multiple Power Query queries to enhance maintainability.

Comment Your Code: Use // to annotate M code for easier debugging and team collaboration.

Use Parameters: Replace hardcoded values with Power BI parameters to improve reusability.

Optimize for Performance: Apply filters early in Power Query to reduce data volume.

Final Thoughts

Migrating from Tableau Prep to Power Query isn’t just a copy-paste process—it requires thoughtful mapping and a clear understanding of both platforms’ paradigms. With this blueprint, developers can preserve logic, reduce data preparation errors, and ensure consistency across systems. Embrace this cross-mapping journey as an opportunity to streamline and modernize your BI workflows.

For more hands-on migration strategies, tools, and support, explore our insights at https://tableautopowerbimigration.com – powered by OfficeSolution.


What is a Data Analyst? | Complete Roadmap to Become a Data Analyst

In today’s data-driven world, businesses rely on data analysts to convert raw data into actionable insights. Data analysts play a crucial role in enabling organizations to make informed decisions, optimize operations, and identify new opportunities. If you aspire to become a data analyst, understanding the responsibilities, required skills, and career path is essential. This article provides a detailed roadmap to guide your journey toward a successful career as a data analyst.

Who is a Data Analyst?

A data analyst is a professional who collects, processes, and interprets data to help organizations make data-driven decisions. Their work involves identifying patterns, trends, and relationships in datasets and presenting findings through visualizations and reports. Data analysts serve as the bridge between raw data and strategic decision-making, impacting various sectors such as healthcare, finance, retail, and technology.

Key Responsibilities of a Data Analyst

Data analysts perform a variety of tasks, including:

1. Data Collection: Gathering relevant data from multiple sources, such as databases, APIs, and spreadsheets.

2. Data Cleaning: Removing inconsistencies, duplicates, and errors to ensure data quality.

3. Data Analysis: Using statistical techniques to identify patterns and trends.

4. Data Visualization: Presenting data insights using charts, graphs, and dashboards.

5. Reporting: Preparing detailed reports to communicate findings to stakeholders.

6. Collaborating with Teams: Working closely with decision-makers to understand business needs and provide actionable recommendations.
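To make the data cleaning responsibility concrete, here is a minimal Python sketch that uses only the standard library. The field names (`order_id`, `region`, `amount`) and sample rows are hypothetical, and the rules (title-case regions, drop unparseable amounts, keep the first row per ID) are one reasonable set of assumptions rather than a universal recipe.

```python
from typing import Dict, List


def clean_records(records: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Normalize text, drop rows with unparseable amounts, and remove duplicate IDs."""
    seen_ids = set()
    cleaned: List[Dict[str, object]] = []
    for rec in records:
        order_id = rec.get("order_id", "").strip()
        # Inconsistencies: trim stray whitespace and unify capitalization.
        region = rec.get("region", "").strip().title()
        # Errors: amounts that cannot be parsed as numbers are dropped.
        try:
            amount = float(rec.get("amount", "").replace(",", ""))
        except ValueError:
            continue
        # Duplicates: keep only the first row seen for each order ID.
        if order_id in seen_ids:
            continue
        seen_ids.add(order_id)
        cleaned.append({"order_id": order_id, "region": region, "amount": amount})
    return cleaned


raw = [
    {"order_id": "A1", "region": " north ", "amount": "1,200"},
    {"order_id": "A1", "region": "North", "amount": "1200"},  # duplicate ID
    {"order_id": "A2", "region": "SOUTH", "amount": "n/a"},   # unparseable amount
    {"order_id": "A3", "region": "south", "amount": "300.5"},
]
print(clean_records(raw))
```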

Skills Required to Become a Data Analyst

1. Technical Skills

· Excel: Proficiency in Excel is essential for basic data manipulation and analysis.

· SQL: Knowledge of SQL helps you extract and manage data from relational databases.

· Programming: Skills in Python or R are crucial for advanced data analysis and visualization.

· Data Visualization Tools: Familiarity with tools like Tableau, Power BI, and Matplotlib enhances your ability to present insights effectively.

· Statistics and Mathematics: A strong foundation in statistical concepts is necessary for analyzing and interpreting data.

2. Soft Skills

· Critical Thinking: The ability to approach problems logically and think analytically.

· Communication: Skills to convey complex findings in a simple, understandable manner.

· Attention to Detail: Ensuring data accuracy and quality.

· Problem-Solving: Using data to address business challenges and propose solutions.

Roadmap to Becoming a Data Analyst

Here’s a step-by-step guide to help you achieve your goal of becoming a data analyst:

1. Understand the Role

Research the responsibilities, required skills, and career opportunities in data analytics. Read job descriptions and connect with industry professionals to gain a deeper understanding of the field.

2. Learn the Basics

· Start with learning Microsoft Excel for data manipulation.

· Gain proficiency in SQL to query and manage databases.

· Explore Python or R for statistical analysis and scripting.

3. Build a Strong Foundation in Statistics

Understand statistical concepts like mean, median, variance, standard deviation, and probability distributions. These are essential for interpreting and analyzing data.
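These measures are straightforward to compute with Python's built-in `statistics` module. The small sample below is made up for illustration; note the difference between population variance (divide by n) and sample variance (divide by n - 1), which matters when your data is a sample drawn from a larger population.

```python
import statistics

# Hypothetical units sold per day over eight days
units_sold = [2, 4, 4, 4, 5, 5, 7, 9]

mean = statistics.mean(units_sold)            # arithmetic average
median = statistics.median(units_sold)        # middle value (average of the two middle values here)
mode = statistics.mode(units_sold)            # most frequent value
pop_var = statistics.pvariance(units_sold)    # population variance: divides by n
pop_sd = statistics.pstdev(units_sold)        # population standard deviation
sample_var = statistics.variance(units_sold)  # sample variance: divides by n - 1
sample_sd = statistics.stdev(units_sold)      # sample standard deviation

print(mean, median, mode)
print(pop_var, pop_sd)
print(round(sample_var, 3), round(sample_sd, 3))
```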

4. Develop Visualization Skills

Learn how to create impactful visualizations using tools like Tableau, Power BI, or programming libraries like Matplotlib and Seaborn.

5. Work on Real-World Projects

Gain practical experience by working on projects such as:

· Analyzing sales data to identify trends.

· Creating dashboards to monitor KPIs.

· Performing customer segmentation based on purchase behavior.
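For the first project idea, analyzing sales for trends, a reasonable starting point is month-over-month change plus a trailing moving average. This standard-library Python sketch assumes monthly totals have already been aggregated into a list; the figures and the three-month window are hypothetical choices.

```python
from typing import List, Optional


def month_over_month(totals: List[float]) -> List[Optional[float]]:
    """Percent change versus the previous month; None where there is no usable base."""
    changes: List[Optional[float]] = [None] if totals else []
    for prev, curr in zip(totals, totals[1:]):
        changes.append(None if prev == 0 else round((curr - prev) / prev * 100, 2))
    return changes


def moving_average(values: List[float], window: int = 3) -> List[Optional[float]]:
    """Trailing moving average; None until a full window of months is available."""
    if window < 1:
        raise ValueError("window must be at least 1")
    averages: List[Optional[float]] = []
    for i in range(len(values)):
        if i + 1 < window:
            averages.append(None)
        else:
            averages.append(sum(values[i + 1 - window : i + 1]) / window)
    return averages


monthly_sales = [100.0, 110.0, 99.0, 118.8]  # hypothetical monthly totals
print(month_over_month(monthly_sales))
print(moving_average(monthly_sales))
```

Month-over-month change reacts to every swing, while the moving average smooths them out; looking at both side by side makes it easier to tell a one-off dip from a sustained trend.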

6. Obtain Certifications

Certifications can validate your skills and boost your employability. Consider certifications like:

· Google Data Analytics Professional Certificate

· Microsoft Certified: Data Analyst Associate

· Certification programs offered by 360DigiTMG

7. Build a Portfolio

Showcase your skills through a portfolio that includes:

· Completed projects with detailed documentation.

· Visualizations and dashboards you’ve created.

· Contributions to open-source projects or Kaggle competitions.

8. Network and Apply for Jobs

· Join professional platforms like LinkedIn to connect with data analysts and recruiters.

· Attend webinars, workshops, and meetups to stay updated on industry trends.

· Apply for internships or entry-level positions to gain hands-on experience.

The Future of Data Analytics

The field of data analytics is rapidly evolving, with advancements in technology and the growing importance of data in decision-making across industries. Emerging trends and tools are shaping the future of the profession, making it an exciting time to enter the field. As a data analyst, staying updated with these trends can ensure long-term career success and growth.


1. Integration of AI and Machine Learning

Data analysts are increasingly using AI and machine learning tools to automate data preparation, identify complex patterns, and generate predictive insights. Familiarizing yourself with these technologies can give you a competitive edge.

2. Big Data Analytics

With the explosion of data generated every day, analysts are expected to work with massive datasets. Learning big data technologies like Apache Hadoop and Spark can be advantageous.

3. Data Storytelling

The ability to communicate insights effectively through compelling stories is gaining prominence. Developing skills in data storytelling and mastering tools like PowerPoint and Canva for presentations will be crucial.

4. Cloud-Based Analytics

Cloud platforms like AWS, Google Cloud, and Azure are becoming popular for data storage and analysis. Understanding how to use these platforms will enhance your job prospects.

5. Specialized Roles

As the field matures, specialized roles such as marketing analyst, healthcare analyst, and risk analyst are becoming common. Building domain expertise in a specific industry can open new opportunities.

Benefits of Becoming a Data Analyst

Pursuing a career in data analytics offers several advantages:

1. High Demand: With businesses across industries relying on data, the demand for skilled data analysts continues to grow.

2. Attractive Salaries: Data analysts often earn competitive salaries, with opportunities for bonuses and incentives.

3. Diverse Opportunities: Data analysts can work in various industries, providing flexibility and a chance to explore different fields.

4. Career Growth: With experience, you can transition to higher roles, such as data scientist, analytics manager, or consultant.

5. Intellectual Satisfaction: The role involves solving challenging problems and contributing to impactful decisions, making it fulfilling for those who enjoy analytical thinking.

Why Choose 360DigiTMG for Your Data Analyst Journey?

360DigiTMG is a leading training provider that equips aspiring data analysts with the skills and knowledge needed to excel in the field. Here’s how 360DigiTMG can support your journey:

· Comprehensive Curriculum: Learn essential tools and techniques, including Excel, SQL, Python, and Tableau.

· Hands-On Projects: Gain practical experience by working on real-world datasets and projects.

· Global Certifications: Earn certifications that are recognized by top employers worldwide.

· Experienced Faculty: Learn from industry experts with years of practical experience in data analytics.

· Placement Assistance: Benefit from resume-building workshops, mock interviews, and job placement support.

· Flexible Learning Options: Choose from online, offline, and hybrid learning modes to suit your schedule.

By enrolling in 360DigiTMG’s data analyst programs, you not only gain technical expertise but also the confidence to thrive in a competitive job market.

Conclusion

Becoming a data analyst is a rewarding journey that requires a mix of technical skills, problem-solving abilities, and industry knowledge. With a clear roadmap and the right guidance, you can build a successful career in this dynamic field. Whether you are just starting or looking to upskill, 360DigiTMG offers the resources and expertise to help you achieve your career goals. Take the first step today and unlock your potential as a data analyst!


How to Succeed in Your Data Analytics Course: Essential Tips and Resources

Enrolling in a data analytics course is a great step toward a lucrative and fulfilling career in today's data-driven world. However, succeeding in this course requires more than just attending lectures and completing assignments. To excel, you need a strategic approach and access to resources that can guide you through complex concepts. Below, we outline actionable tips to help you thrive in your data analytics course and recommend video resources for deeper learning.

1. Understand the Fundamentals of Data Analytics

Before diving into complex algorithms and statistical models, ensure that you have a solid grasp of the basics. Key concepts such as data cleaning, data visualization, and basic statistical measures form the foundation of data analytics.

Recommended Learning Approach:

Brush Up on Statistics and Mathematics: Strengthen your knowledge of descriptive and inferential statistics.

Learn Key Tools Early: Familiarize yourself with data analytics tools like Python, R, SQL, and data visualization software (e.g., Tableau and Power BI).

Helpful Video Resource:

Watch this detailed video on foundational concepts here.

2. Develop Strong Programming Skills

In the field of data analytics, programming plays a vital role. Python and R are the two most commonly used programming languages in data analytics. Python is particularly popular due to its simplicity and a wide range of libraries such as Pandas, NumPy, and Matplotlib.

Tips for Success:

Practice Coding Regularly: Spend time coding daily or at least a few times a week to enhance your proficiency.

Work on Mini-Projects: Apply your skills to real-world projects, such as data cleaning exercises or simple analyses, to deepen your understanding.

Helpful Video Resource:

Dive deeper into practical coding techniques here.

3. Master Data Visualization Techniques

Data visualization is crucial in translating complex data into easy-to-understand insights. Mastering visualization tools and understanding how to choose the right type of chart for different data types is essential.

Key Steps:

Explore Visualization Libraries: Use libraries such as Matplotlib, Seaborn, and Plotly for Python, or ggplot2 for R.

Understand Data Storytelling: Learn how to use visual elements to craft compelling data stories that resonate with your audience.

Helpful Video Resource:

Get comprehensive insights into data visualization techniques here.

4. Engage in Hands-on Projects

Theoretical knowledge is only as good as its practical application. Building projects not only helps you understand data analytics more thoroughly but also provides you with a portfolio to show potential employers.

Suggestions for Projects:

Analyze Public Datasets: Use platforms like Kaggle or public data repositories to practice.

Join Competitions: Participate in data challenges and hackathons to improve your skills and network with other data enthusiasts.

Helpful Video Resource:

For project-based learning and how to approach hands-on data projects, watch this video.

5. Stay Updated and Network

Data analytics is a rapidly evolving field, and staying current with trends and new tools is critical for long-term success.

How to Keep Up:

Follow Industry Leaders: Read blogs, articles, and follow key figures on platforms like LinkedIn and Twitter.

Join Data Analytics Communities: Engaging in discussions on forums like Reddit, Stack Overflow, and Data Science Central can offer valuable insights and answers to complex questions.

6. Leverage Online Resources

To build a deeper understanding of complex topics, take advantage of free resources and comprehensive videos. Here are some highly recommended sessions for anyone enrolled in a data analytics course:

Data Analysis Tips and Tricks: Gain insights into practical data analysis methods that professionals use.

Step-by-Step Data Analytics Projects: Learn through examples how to work on data projects from start to finish.

Data Visualization Mastery: Understand how to present data visually in an impactful way.

Deep Dive into Python for Data Science: Get an in-depth look at Python’s role in data analysis and data science.

7. Practice Consistently

Consistency is key. Set a schedule that includes time for learning, applying concepts, and revisiting difficult topics. Regular practice not only reinforces what you learn but also builds confidence.

Routine Ideas:

Dedicate 30 minutes daily to coding exercises.

Work on a new dataset weekly and create a mini-report.

Revisit complex topics regularly to ensure they stay fresh.

8. Focus on Communication Skills

Being able to present your findings clearly is just as important as the analysis itself. Sharpen your communication skills so you can tell a story with data, whether through written reports or presentations.

Enhancement Tips:

Practice explaining complex ideas in simple terms.

Incorporate storytelling techniques in your data presentations.

Use visuals that highlight your key points without overwhelming your audience.

9. Seek Feedback and Stay Adaptable

Feedback from peers or mentors can highlight areas that need improvement. Adaptability is vital in the ever-changing landscape of data analytics, so keep an open mind to new tools and methodologies.

10. Stay Inspired and Persistent

Lastly, keep your motivation high. Remember why you started this journey and the career opportunities that come with mastering data analytics. Celebrate small wins and maintain a positive attitude.

Conclusion

Mastering data analytics takes dedication, practice, and the right resources. By staying engaged, seeking out projects, leveraging expert content, and being consistent in your practice, you can excel in your data analytics course and carve out a successful career in this dynamic field. Don’t forget to make full use of community interactions and free learning materials, such as the recommended video sessions, to enhance your learning experience.

Ready to take your data analytics journey to the next level? Check out the resources linked above and start building a brighter future today!


Data Analytics Course in Rohini: Comprehensive Overview

Introduction to Data Analytics

In today’s data-driven world, the ability to analyze and interpret data is more valuable than ever. Businesses across industries rely on data analytics to make informed decisions, improve operational efficiency, and enhance customer satisfaction. The data analytics course in Rohini is designed to equip participants with the essential skills and knowledge required to thrive in this rapidly evolving field.

Course Objectives

The primary objectives of the course are to:

Understand the Role of Data Analytics: Participants will learn about the significance of data analytics in various sectors, including finance, healthcare, marketing, and logistics.

Develop Technical Skills: The course will focus on essential tools and programming languages used in data analytics, enabling students to manipulate and analyze data effectively.

Apply Analytical Techniques: Students will gain practical experience in applying statistical methods and analytical techniques to real-world data.

Build a Strong Foundation in Machine Learning: An introduction to machine learning concepts will help students understand advanced analytics and predictive modeling.

Prepare for Career Opportunities: The course aims to equip students with the necessary skills to pursue careers in data analytics, offering guidance on job opportunities and industry expectations.

Course Structure

The course is structured into several modules, each focusing on different aspects of data analytics:

Module 1: Introduction to Data Analytics

What is Data Analytics?: Understanding its definition, importance, and applications in business.

Types of Data: Exploring structured and unstructured data and big data, with an introduction to data mining.

Data Analytics Process: Steps involved in data analytics, including data collection, cleaning, analysis, and visualization.

Module 2: Data Collection and Cleaning

Data Sources: Identifying various sources of data, including surveys, databases, and online resources.

Data Cleaning Techniques: Learning how to preprocess data to ensure accuracy and reliability, including handling missing values and outliers.

Tools for Data Collection: Introduction to tools like Excel, Google Sheets, and APIs for data gathering.
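Two common cleaning steps from this module, filling missing values and flagging outliers, can be sketched with Python's `statistics` module. This example assumes missing values are represented as `None`, fills them with the median (which, unlike the mean, is not pulled toward extreme values), and flags outliers with Tukey's fences at 1.5 * IQR; the readings are invented for illustration.

```python
import statistics
from typing import List, Optional, Tuple


def impute_median(values: List[Optional[float]]) -> List[float]:
    """Replace missing values (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]


def iqr_outliers(values: List[float], k: float = 1.5) -> Tuple[List[float], Tuple[float, float]]:
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] and the fences themselves."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high], (low, high)


readings = [12, 15, None, 14, 13, 95, 16, None, 15]
filled = impute_median(readings)
outliers, fences = iqr_outliers(filled)
print(filled)
print(outliers, fences)
```

Flagging rather than deleting outliers is deliberate: a value like 95 may be a data-entry error or a genuine spike, and that decision usually needs business context.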

Module 3: Statistical Analysis

Descriptive Statistics: Understanding measures of central tendency (mean, median, mode) and dispersion (variance, standard deviation).

Inferential Statistics: Introduction to hypothesis testing, confidence intervals, and regression analysis.

Correlation and Causation: Distinguishing between correlation and causation and understanding their implications in data interpretation.
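A short worked example makes correlation and simple regression concrete. The sketch below computes Pearson's r and an ordinary least-squares line from first principles; the `ad_spend` and `sales` figures are hypothetical. Python 3.10+ also provides `statistics.correlation` and `statistics.linear_regression` for the same quantities. A strong r here would still say nothing about whether ad spend causes sales.

```python
import math
from typing import List, Tuple


def _centered_sums(x: List[float], y: List[float]) -> Tuple[float, float, float]:
    """Return (Sxy, Sxx, Syy): centered cross-product and squared-deviation sums."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy, sxx, syy


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient, between -1 and 1."""
    sxy, sxx, syy = _centered_sums(x, y)
    if sxx == 0 or syy == 0:
        raise ValueError("both variables need some variation")
    return sxy / math.sqrt(sxx * syy)


def fit_line(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = intercept + slope * x; returns (slope, intercept)."""
    sxy, sxx, _ = _centered_sums(x, y)
    if sxx == 0:
        raise ValueError("x needs some variation")
    slope = sxy / sxx
    intercept = sum(y) / len(y) - slope * sum(x) / len(x)
    return slope, intercept


ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical, in thousands
sales = [2.0, 4.0, 5.0, 4.0, 5.0]     # hypothetical, in thousands
print(round(pearson_r(ad_spend, sales), 3))  # 0.775
print(fit_line(ad_spend, sales))
```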

Module 4: Data Visualization

Importance of Data Visualization: Understanding how visual representation of data enhances comprehension and communication.

Visualization Tools: Hands-on training with tools like Tableau, Power BI, and Python libraries (Matplotlib, Seaborn).

Creating Effective Visuals: Best practices for designing charts, graphs, and dashboards that effectively convey insights.

Module 5: Programming for Data Analytics

Introduction to Python/R: Basics of programming in Python or R, focusing on syntax, data structures, and libraries used in data analysis.

Data Manipulation: Learning libraries like Pandas (Python) and dplyr (R) for data manipulation and analysis.

Automating Analysis: Writing scripts to automate repetitive data analysis tasks.

Module 6: Introduction to Machine Learning

What is Machine Learning?: Understanding the basics of machine learning and its applications in data analytics.

Types of Machine Learning: Overview of supervised, unsupervised, and reinforcement learning.

Building Simple Models: Introduction to algorithms like linear regression, decision trees, and clustering techniques.
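Of the clustering techniques mentioned, k-means is a common first example. This is a minimal sketch of Lloyd's algorithm for 2-D points in plain Python; the customer points and the choice of starting centroids are assumptions made so the illustration is deterministic (library implementations typically use smarter initialization such as k-means++ and several restarts).

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def kmeans(points: Sequence[Point], initial: Sequence[Point], max_iter: int = 100) -> Tuple[List[Point], List[int]]:
    """Lloyd's k-means: alternate assignment and update steps until labels stop changing."""
    centroids = list(initial)
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        new_labels = [
            min(range(len(centroids)), key=lambda c, p=p: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # converged: no point changed cluster
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = (
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                )
    return centroids, labels


# Hypothetical customers: (orders per year, average basket size)
customers = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
centroids, labels = kmeans(customers, initial=[customers[0], customers[3]])
print(centroids)
print(labels)
```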

Module 7: Real-World Projects

Hands-On Experience: Engaging in real-world projects that allow students to apply their skills to solve actual business problems.

Case Studies: Analyzing case studies from various industries to understand how data analytics drives decision-making.

Collaboration: Working in teams to enhance problem-solving and communication skills.

Module 8: Career Guidance and Industry Insights

Career Pathways: Exploring different roles in the data analytics field, such as data analyst, business intelligence analyst, and data scientist.

Resume Building: Guidance on creating an impactful resume and preparing for interviews in the data analytics domain.

Networking Opportunities: Connecting with industry professionals through workshops, seminars, and guest lectures.

Teaching Methodology

The course employs a blend of theoretical knowledge and practical application through:

Interactive Lectures: Engaging lectures that foster discussion and enhance understanding.

Hands-On Workshops: Practical sessions where students can apply their knowledge using real data sets.

Group Projects: Collaborative projects that promote teamwork and the sharing of ideas.

Guest Speakers: Inviting industry experts to share insights and experiences.

Course Duration and Format

The course typically spans several weeks to months, with classes held in-person or online, depending on participant preferences. Flexible scheduling options may be available to accommodate working professionals.

Target Audience

This course is suitable for:

Beginners: Individuals with no prior experience in data analytics looking to start a career in this field.

Professionals: Those seeking to upskill and incorporate data-driven decision-making into their roles.

Students: University students pursuing degrees in business, IT, or related fields who want to enhance their employability.

Certification

Upon successful completion of the course, participants will receive a certificate that acknowledges their skills and knowledge in data analytics, which can be a valuable addition to their resumes.

Conclusion

The data analytics course in Rohini is a comprehensive program designed to provide students with the essential skills needed to excel in the data analytics field. By combining theoretical knowledge with practical experience, participants will be well-prepared to tackle real-world challenges and seize career opportunities in this exciting and dynamic industry. Whether you’re looking to start your journey in data analytics or enhance your existing skills, this course offers the tools and insights needed to succeed.


How to Become a Business Intelligence Analyst

Are you curious about turning numbers into valuable insights and helping businesses make smart decisions? Well, becoming a Business Intelligence (BI) Analyst might be just the career for you! In this guide, we'll break down the steps to help you embark on your journey into the world of business intelligence, even if you're new to the field.

Step 1: Understand the Basics

Before diving in, it's essential to grasp the fundamentals of what a BI Analyst does. Simply put, BI Analysts analyze data to help companies make informed decisions. They use data visualization tools to build graphs, charts, and dashboards that present complex information in a way that everyone can understand.

Step 2: Acquire Education

While a formal education isn't always mandatory, having a degree in a relevant field can give you a head start. Consider pursuing a degree in business, finance, computer science, or a related field. However, many BI Analysts also enter the field with experience in areas like IT or data analysis.

Step 3: Develop Analytical Skills

To become a successful BI Analyst, you need to love playing with numbers and have a keen eye for detail. Sharpen your analytical skills by solving puzzles, practicing with data sets, and honing your ability to recognize patterns in information.

Step 4: Learn Relevant Tools

Get comfortable with the tools of the trade. Popular BI tools include Microsoft Power BI, Tableau, and Google Data Studio (now Looker Studio). There are plenty of online tutorials and courses to help you get hands-on experience with these tools, even if you're a beginner.

Step 5: Gain Practical Experience

Apply your knowledge in real-world situations. Seek internships or entry-level positions that allow you to work with data. This hands-on experience is invaluable and will make you stand out to potential employers.

Step 6: Develop Communication Skills

Being a BI Analyst isn't just about crunching numbers; it's also about communicating your findings effectively. Practice explaining complex data in simple terms, both verbally and through written reports. This skill is crucial for collaboration with non-technical teams.

Step 7: Stay Updated

The world of business intelligence is dynamic, with new tools and techniques emerging regularly. Stay current by reading industry blogs, attending webinars, and participating in online communities. Continuous learning is key in this field.

Step 8: Build a Portfolio

Create a portfolio showcasing your projects and analyses. This could include sample reports, dashboards, or any relevant work you've done. A strong portfolio is an excellent way to demonstrate your skills to potential employers.

Conclusion

Becoming a Business Intelligence Analyst may seem like a daunting task, but by following these steps, you can pave the way to a rewarding career. Remember, curiosity, a love for problem-solving, and a commitment to continuous learning are your greatest assets on this exciting journey.


How to leverage AI in finance with Christian Martinez

New Post has been published on https://thedigitalinsider.com/how-to-leverage-ai-in-finance-with-christian-martinez/


Our sister community, Finance Alliance, recently held an Ask Me Anything (AMA) session inside our Slack community with Christian Martinez, Finance Manager at Kraft Heinz.

Christian answered members’ burning questions about how to successfully leverage AI tools in finance, and much more.

Below, you’ll find some highlights from the session, where Christian shared valuable insights and tips with Finance Alliance’s members. ⬇

The main use cases for tools such as ChatGPT and Google Bard include:

Identifying risks, opportunities, and trends.

Facilitating collaboration between departments.

Developing automatic AOP (annual operating plan) models.

Natural language processing (NLP).

Can ChatGPT interpret past trends and data to help automate financial forecasting?

I’ve seen that Google Bard, ChatGPT, and other similar language models are very powerful tools for many processes, but in order to help automate financial forecasting, they require an additional tool.

For example, Google Bard + Python.

How can ChatGPT help perform real-time financial analysis?

I see ChatGPT helping in two main ways:

Generating ideas on how to perform the analysis.

Providing Python code to use on Google Colab to perform real-time financial analysis.

How can you ensure buy-in from management in finance transformation projects/initiatives?

It’s really important to have that buy-in from management. In order to get it, you need to understand the ‘why are we doing this,’ and ‘why this matters.’ The answers will depend on the management and the project, but understanding those is key.

Another important thing you need to do is map your stakeholders/management people and understand how much interest and influence they have in your initiative.

I prefer to use Python (with Google Colab) for this! But for the MVM (minimum viable model), I sometimes use Excel.

I’m still in the process of exploring the potential of third-party tools. Right now, I’m using Tableau, Alteryx, Python, SQL, Microsoft Azure, and Power BI.

What are your top 5 methods to forecast data?

Random forests

Linear regression

Clustering

Monte Carlo simulation

Time series
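Of these methods, Monte Carlo simulation is the easiest to show in a few lines. The sketch below assumes annual growth is normally distributed and independent from year to year, which is a simplifying assumption; the base revenue, growth mean, and volatility are hypothetical inputs, and `simulate_revenue` is an illustrative name, not a tool Christian described.

```python
import random
import statistics
from typing import Dict


def simulate_revenue(
    base: float,
    growth_mean: float,
    growth_sd: float,
    years: int = 1,
    trials: int = 10_000,
    seed: int = 42,
) -> Dict[str, float]:
    """Monte Carlo forecast: compound a random annual growth rate over `years`."""
    rng = random.Random(seed)  # seeded so every run gives the same numbers
    outcomes = []
    for _ in range(trials):
        value = base
        for _ in range(years):
            # Assumption: each year's growth is an independent normal draw.
            value *= 1 + rng.gauss(growth_mean, growth_sd)
        outcomes.append(value)
    # quantiles(n=20) returns 19 cut points in 5% steps: P5, P10, ..., P95.
    cuts = statistics.quantiles(outcomes, n=20)
    return {
        "p5": cuts[0],
        "p50": cuts[9],
        "p95": cuts[18],
        "mean": statistics.fmean(outcomes),
    }


forecast = simulate_revenue(1_000_000, growth_mean=0.05, growth_sd=0.10, years=3)
print({name: round(v) for name, v in forecast.items()})
```

The spread between p5 and p95 is the useful output here: it expresses forecast uncertainty as a range rather than a single number.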

How can AI help predict financial patterns?

AI can primarily help through decision-making algorithms, predictive analytics, and automation.

What do you think are the best ChatGPT plugins for FP&A?

I have many articles on plugins:

3 Great ChatGPT Plug ins for Finance with Guide + Business Case

Generate your own ChatGPT Plug in guide in 3 Simple Steps

10 Amazing Ways to Use ChatGPT Plugins for Stock Analysis: Part 1

How can I use AI for financial modeling?

There are many good use cases for financial modeling. Some of the main ones are creating models (LBO, DCF, etc.), researching models, generating shortcut guides for your financial modeling software, and building formulas.
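To make the DCF use case concrete, a DCF model reduces to discounting projected cash flows plus a terminal value, which is exactly the kind of formula logic worth checking by hand when an AI tool drafts it. This is a minimal sketch assuming end-of-year cash flows and a Gordon-growth terminal value; the function name, cash flows, discount rate, and growth rate are hypothetical.

```python
from typing import Sequence


def dcf_value(cash_flows: Sequence[float], discount_rate: float, terminal_growth: float) -> float:
    """Value = PV of explicit cash flows + PV of a Gordon-growth terminal value.

    Assumes cash flows arrive at the end of years 1..N.
    """
    if not cash_flows:
        raise ValueError("need at least one projected cash flow")
    if discount_rate <= terminal_growth:
        raise ValueError("discount rate must exceed the terminal growth rate")
    # Discount each year's cash flow back to today.
    pv_explicit = sum(
        cf / (1 + discount_rate) ** year
        for year, cf in enumerate(cash_flows, start=1)
    )
    # Terminal value at year N grows the final cash flow forever at terminal_growth.
    terminal = cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
    pv_terminal = terminal / (1 + discount_rate) ** len(cash_flows)
    return pv_explicit + pv_terminal


print(round(dcf_value([120, 130, 140, 150, 160], discount_rate=0.09, terminal_growth=0.02), 1))
```

A quick sanity check: a flat cash flow of 100 with zero growth at a 10% discount rate should value to the perpetuity 100 / 0.10 = 1,000, however many explicit years are modelled.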

Do you recommend using ChatGPT API in Python or is inaccuracy still a huge issue?

On its own, the API is the same as using the model on the OpenAI website. If you add things on top, it can then be valuable.

On accuracy, there are two things I’d recommend:

Use this before your prompts: “Act as a purely factual AI that doesn’t hallucinate. If you don’t know an answer or if it’s out of your training data, just say it…”

Treat ChatGPT as the most junior member of your team. They can contribute and help a lot, but you need to check their work before submitting it to your stakeholders/customers. Use your knowledge and expertise to assess the veracity of what they produce.

I mainly use Twitter and LinkedIn. I tend to follow a group of AI “general” influencers on Twitter, and then if I think it can be applied to finance and accounting, I post it on LinkedIn.

These are some of the accounts I follow:

About Christian Martinez

Christian Martinez is currently the Finance Manager at Kraft Heinz and has over six years of experience in financial planning and analysis (FP&A). He’s also the founder of The Financial Fox, a non-profit startup project which aims to democratize machine learning and data analysis.

Christian was named one of the 30 Under 30 in the Accounting and Finance industry in Australia in 2021. He also won the EMEA Data Democratizer Award in 2022 and was a finalist in the Young Leaders in Finance Awards in 2018.

Download Finance Alliance’s AI in Finance eBook

Artificial Intelligence (AI) is reshaping the finance industry and empowering finance teams to make smarter, data-driven decisions like never before.

But how can you incorporate AI into your financial workflow?

Welcome to the AI in Finance eBook, your trusted guide to merging AI’s vast potential with everyday finance operations such as forecasting, budgeting, analysis, and more.👇

#2022#accounting#accounting and finance#Accounts#ai#Algorithms#alteryx#amazing#amp#Analysis#Analytics#API#Articles#Artificial Intelligence#Australia#automation#azure#bard#bi#budgeting#Business#chatGPT#code#CoLab#Collaboration#Community#data#data analysis#data-driven#data-driven decisions


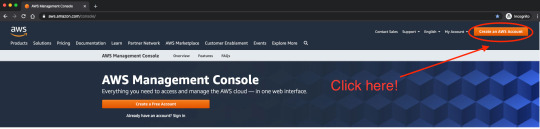

Tableau is one of the leading tools in the data visualization and analytics space. It enables professionals to perform many tasks, including manipulating data and designing visualizations, and to transform data into business-ready insights. Hence, Tableau developers are in high demand everywhere. But how do you become a Tableau developer? ITView, the top institute for Tableau training in Pune, explains how to become a Tableau developer in five simple steps.

#Tableau Developer in 5 Simple Steps#Learn Tableau With ITView#Best Software Training Institute in Pune


Free Ways To Upskill In Industry

The days of paying a large sum of money to learn something are long gone. The system for online learning has changed.

It used to be that in order to learn anything, you HAD TO BE IN THE CLASS, but this educational pattern has now changed. Online learning deserves the credit for the current, permanent changes in the educational world. Now, all you need to access vast sources of information and master the skills you want is a device and an internet connection.

The best aspect is that no prior skill is required. You can learn something simple like "creating crafts" or something complex like "building software." There is something out there for everyone. What I'm referring to are the e-learning platforms listed below.

Let me give you just a few additional benefits of online learning before I continue.

* Study at your own speed.

* Less expensive than physical learning facilities

* Unrestricted access to the information

* Regular updates

Let's look at the platforms right now.

1) Udemy

One of the most well-known online learning platforms is Udemy. They have a substantial collection of classes organised into many categories, such as:

· Development

· Business

· Finance and accounting

· Software and IT

· Office Productivity

· Personal Development

2) Coursera

The fact that this platform intersperses quizzes and practical projects with the course content allows you to apply what you have learnt. Their course categories include:

* Deep learning

* Big data

* Machine learning

* Data science

* Internet advertising

Coursera might be the option for you if you want to build skills for a prospective future career. Its certificates greatly bolster the credibility of your educational efforts.

3) Job Ready Programmer

You can choose from a variety of courses on this learning platform to learn how to code like an expert. It gives students real-world programming challenges to evaluate their aptitude, then has them carefully follow a video solution that breaks down the problem and offers a thorough step-by-step solution for greater comprehension.

Its course options include:

· Oracle SQL

· Java for both novices and experts

· OOP

· SQL for Data Science

· Python

· Tableau

· Spring Web + Spring Framework 5 + Boot 2

· Data structures + algorithms

4) LinkedIn Learning

LinkedIn Learning is a knowledge hub that makes it simple for you to learn the skills you want, thanks to its sizable course selection.

Here are a few of the categories:

* Application Development

* Web Design

* Web Development

* Business

* Photography

* Marketing

You can access all of their courses on demand with a single membership. Don't forget to take advantage of their current offer of a free month to test out the platform.

5) Google Career Certificates

Google Career Certificates help you gain confidence, job-ready skills, and a useful career credential. Start a career in data analytics, UX design, project management, or IT support.

Conclusion

The best move someone can make is to acquire new skills, because you never know when trends will shift. You can learn and master the skills you want on the websites above, whenever and wherever you want.

The best instructors are available to teach you the most difficult skills in your own home, right from your computer. The benefit of online education is that you can plan your own study schedule, and it is reasonably priced.


Learning Path: Your Guide to Get Tableau Services in Pakistan

BI #1 Tableau Services in Pakistan

Data visualization is the art of displaying data in such a way that it can be understood by non-analysts. A precise balance of aesthetic aspects such as colors, size, and labels can result in visual masterpieces that offer startling business insights, allowing firms to make more informed decisions.

The use of data visualization in business analytics is unavoidable. As more data sources become available, corporate leaders at all levels are embracing data visualization tools that allow them to examine trends graphically and make quick decisions. QlikView and Tableau are currently the most popular visualization and data discovery solutions.


Tableau is a rapidly evolving Business Intelligence (BI) and data visualization application. It's simple to set up, easy to learn, and easy for customers to use. For those who are new to Tableau, here is a learning path that will guide you through a methodical approach to learning Tableau.

Beginners are advised to follow this path closely. If you already have some knowledge or don't need every component, feel free to go your own way, and let us know how it went!

Step 1: What is the Importance of Data Visualization?

Before we get started with Tableau, I'd like to stress the importance of data visualization and how it can help businesses make better decisions. To whet your appetite, here's a video:

Tour of Tableau's Products

Tableau is the market leader across all BI products, according to Gartner's 2015 Magic Quadrant.

Magic Quadrant, Gartner, Tableau

Step 2: Download and install Tableau.

Tableau has five major products that cater to a wide range of visualization needs for professionals and businesses. They are as follows:

Tableau Desktop: the desktop application, designed for individual use.

Tableau Server: a collaboration tool that any company can use.

Tableau Online: cloud-based business intelligence.

Tableau Public: a free platform for publishing visualizations publicly.

Tableau Reader: a free desktop application for opening and interacting with Tableau workbooks.

Tableau Public and Tableau Reader are free to use, while Tableau Server and Tableau Desktop have a 14-day fully functional free trial period after which the user must pay.

Tableau Desktop is available in two versions: Professional and Personal. Tableau Online is available to a single user with an annual subscription and scales to thousands of users. Tableau has gone through several iterations; in this article, we'll look at the learning curve of Tableau Desktop 9.0.

Tableau Desktop can be downloaded as a trial version from the Tableau website, but the trial lasts only 14 days. Follow the steps to install it on your system and start using it.

Step 3: Getting Started with Tableau

Tableau offers free online training, live training, and (paid) classroom training. This is the ideal starting point for your quest, and I recommend that you take the path outlined below. Here is a link to a free on-demand online training course with supplementary materials. Each of these videos provides the data set it uses, so you can explore it on your own.

You must register before you can watch these training videos. Expand the "Getting Started" section and watch the three videos offered there. They cover connecting to data, data preparation, building views, filters, dashboards, story points, and ways to share your work.

Step 4: Integrating Data

Tableau can connect to a variety of data sources, including text files, spreadsheets, databases, and big data queries. In this part, we'll look at the fundamentals and advanced features of connecting to various sources. We'll also examine join types, data blending, cube connections, custom SQL, and Google Analytics.

Step 5: Creating and Analyzing Views

Tableau offers a variety of ways to visualize data, including applying filters, drill-downs, and formatting, creating sets and groups, drawing trend lines, and forecasting. Begin your journey!

You've now looked at a variety of data visualization objects. One of the most difficult decisions you'll have to make when building data visualization is which object to use to represent data.

The image below will help you decide which type of visualization to use. That said, Tableau's automatic view selection feature takes care of this issue to a significant extent: it automatically suggests suitable views for the selected dimension(s) and measure(s).

Step 6: Practice Exercise

Up to this point, we've looked at data connectivity, various objects, and view generation in Tableau. It's time to get your hands dirty with data and draw conclusions using a variety of visualization techniques:

Kaggle organized a data science challenge to predict the category of crimes in San Francisco based on nearly 12 years of crime reports (from 2003 to 2015) from all of the city's neighborhoods, with time, location, and other features given.

Step 7: Dashboards and Narratives

Tableau's "Dashboards and Stories" are the product's USP (unique selling proposition). The dashboards you can build are fantastic, and they take this product to the next level. Dashboards and Stories have their own section in the Tableau online training program, which covers the following topics:

Storytelling and the Dashboard

Dashboards: Adding Views and Objects

Using Filters on a Dashboard

Options for different layouts and formatting

Story Points on the Interactive Dashboard

Services We Offer:

Strategy

Competitive Intelligence

Marketing Analytics

Sales Analytics

Data Monetization

Predictive Analytics

Planning

Assessments

Roadmaps

Data Governance

Strategy & Architecture

Organization Planning

Proof of Value

Analytics

Data Visualization

Big Data Analytics

Machine Learning

BI Reporting Dashboards

Advanced Analytics & Data Science

CRM / Salesforce Analytics

Data

Big Data Architecture

Lean Analytics

Enterprise Data Warehousing

Master Data Management

System Optimization

Outsourcing

Software Development

Managed Services

On-Shore / Off-Shore

Cloud Analytics

Recruiting & Staffing


Mobile: +9233333331225


#Banking Analytics solutions in Pakistan#BI Company in Pakistan#BI Consultant in Pakistan#big data consulting services in Pakistan#business intelligence solutions in Pakistan#Cognos Consultant in Pakistan#data visualization solutions in Pakistan#data warehouse solutions in Pakistan#Insurance Analytics Solutions in Pakistan#Manufacturing Analytics Solutions in Pakistan#Microstrategy Consulting services in Pakistan#Oil & Gas Analytics Solutions in Pakistan#Retail Analytics Solutions in Pakistan#Supply Chain Analytics Solutions in Pakistan#Tableau Consulting services in Pakistan#Cognos Consulting Services in Pakistan#Qlikview Consulting services in Pakistan#bi consulting services in Pakistan#business intelligence services in Pakistan#data warehousing services in Pakistan#Pharma Analytics Solutions in Pakistan#healthcare analytics solutions in Pakistan#healthcare business intelligence in Pakistan


Pixelmator Photo

The Pixelmator Pro image editing engine is seriously sophisticated and incredibly powerful. It’s designed exclusively to take advantage of the full power of Mac computers, using advanced Mac graphics technologies like Metal and Core Image. Pixelmator Photo for iPad is here! In this live session I'll show you everything you need to know to get the very best out of this amazing iPad app.

The timeline for many a photographer — at least, this was the case for me — can be outlined somewhat as per the graph below:

Here, photography gear and kit starts out as the ultimate source of inspiration. This inspiration declines steadily over time, but rears its ugly head on occasion.

The inspiration derived from other photographers essentially follows the inverse curve of gear and kit. When gear and kit delusions subside, the drive to emulate your favorite photographers rises.

And finally, your own skill set not only grows over time, it also becomes the main source of your own inspiration over time.

I’ve developed a bit of a list for the second step — photographers like Kate Holstein, Sam Nute, Finn Beales, Dan Tom, and more are stunningly skilled and worthy of emulation. Their compositions are great — often, near perfect — and their colors are their own.

I think the prevailing advice to improve your abilities as a photographer is to focus on composition. Work with prime lenses, learn to position your body and camera in the right spot, and ensure all the pieces of the puzzle fit into your photograph beautifully.

Color is just the icing on top of the composition cake, and everyone likes different brands of icing, right?


Pixelmator Photo’s latest update brought the power of Pixelmator Pro’s ML Match Colors from the Mac to the iPad, promising the ability to match the color palettes between sets of photos. ML Match Colors is wonderfully implemented, quickly performed, and easy to use. It promises to use all the powers of the iPad.

But does it promise the power of stealing your favorite photographers’ color palettes? Will it make your photos look as good as your favorite photographers’ photos?

Of course not!

As is always the case in photography, there are a multitude of variables at play. ML Match Colors handles one specific variable: color.

Using ML Match Colors to Match Your Own Photos

This is the method I suspect the Pixelmator team intends customers to use with ML Match Colors. At first blush, ML Match Colors seems best designed for applying a relatively close color palette to all of your photos in a set.


The original photo on the left and my personal edits on the right. Clearly, these two photos are very, very different.

To test the power of ML Match Colors, I used a single photo of my own from a few years ago. Evidently, I’ve heavily edited this photo, so I exported a copy of the original photo and a copy of the edited photo from Lightroom to my camera roll.

From there, I opened the original raw photo in Pixelmator Photo, dragged an instance of Photos into Split View, and dragged my edited photo into Pixelmator Photo to match the color palettes.

In general, when an app seems not to deliver on a promise, it’s usually due to improper expectations. And since I originally came into this thinking ML Match Colors would also match saturation, hue, intensity, and exposure all at once, well, I was initially disappointed. Here’s the result:

But upon further inspection, Pixelmator Photo actually performed great work in matching the colors in these two photos. In retrospect, it’s obvious: the colors are properly matched. However, to get to my original end result, I had to jump into the color tools in Pixelmator Photo to dial back intensity, hue, and saturation of individual colors. After maybe a minute or two of experimentation, I came to this end result:

Not bad, actually! Not bad at all.

As I mentioned above, ML Match Colors is explicitly designed to match colors — matching any other elements of exposure, saturation, hue, and more either has to be done manually or through other forms of Pixelmator Photo’s machine learning features.

Using ML Match Colors to Match Someone Else’s Photos

This whole section likely gets dicey, so I want to ensure I give credit where credit is due, and I want to ensure that the end result of “stealing” someone’s color does not actually happen. I fully believe, after you’ve read through this section, you’ll agree that a photographer’s stylistic brand and color choices are not in jeopardy thanks to ML Match Colors.

So, Finn Beales is one of the professional photographers I mentioned above who I have a ton of professional respect for. Beales has one of my favorite photography blogs on the planet, shoots some of the best travel and brand photography in the world right now, and has provided a wealth of photographic knowledge in his photography course at Strohl Works. If you want to get a behind-the-scenes look at how Beales works and how he achieves some of his results, that course was some of the best money I’ve spent in the last two years.

Finn Beales’s tremendous travel photography — known as “72 Hrs In…” — is showcased on his personal site. There are a wealth of photos to digest in that section of his blog. One of my favorites is his commissioned work for Travel Alberta, a province and location here in Canada I had the opportunity to travel to myself.

So, with that backstory out of the way, you can likely understand where I’m going with this. Here’s Finn Beales’ photo of a man at Lake Louise in Banff National Park, Alberta, Canada:

Photo by Finn Beales, used solely for color reference and nothing more.

And here’s my photo of that exact same location, albeit with slightly different composition:

It’s very hard to say whether Beales and I shot the photo at the same time of day or in the same kind of weather, among other variables. We were at Lake Louise in the earliest part of the morning, so the orange glows on the mountaintop peaks are about as close as I could get to the oranges in Beales’ photo.

And here’s the result after dragging and dropping Beales’ photo into Pixelmator Photo:

Did my photo change at all? You’d be hard-pressed to tell. I believe many of the colors indeed match those in Beales’ photo, but again, hue, saturation, luminosity, brightness, and other variables play a major role in keeping Beales’ stylistic color choices his own.

This is good news, in nearly every facet of the story. The learning lessons I’m taking away from this:

Composition remains the most important element of photography. If you don’t have good composition, the amount of beautiful color you add or take away from a photograph won’t magically transform it into a mystical work of art.

Understanding “color” involves much, much more than simply dragging and dropping one photo onto the other. To achieve a certain look, a full understanding of the color wheel, color and light curves, and color tools is still required.

For all the work professionals have put in to create their own style, brand, and “look”, they can rest assured that other photographers like myself won’t be able to replicate that same look with a simple feature in an iPad app.

Other Updates in Pixelmator Photo 1.2

Although the headline feature, ML Match Colors, debuted in Pixelmator Photo 1.2, the 1.2 update also housed plenty of other powerful features for photographers looking to use the iPad more and more in their workflows.

Notice the trackpad cursor right in the middle of the photo on the left and the re-shaped cursor in the top right in the right photo. Cursor support is very nicely baked into the latest Pixelmator Photo update.

Trackpad Support: iPadOS 13.4’s new trackpad and cursor support has taken the platform by storm. Apps designed with many custom elements have struggled to adopt cursor support organically out of the gate, so updates have been needed for every app to feel at home in iPadOS 13.4. Pixelmator Photo 1.2 brings full-blown trackpad support to the app, allowing you to whiz around with a mouse or trackpad much like you would on a Mac.

Split View: We chose Adobe Lightroom CC as the best photo editing app for the iPad because of its ecosystem, and we chose Darkroom as the runner-up because of Darkroom’s adoption of iOS technologies. With Pixelmator Photo 1.2, you can throw another app into the runner-up column. Pixelmator Photo may now have the best iPadOS technology support of any photo editing app available. The app now supports Split View, which is very powerful in how ML Match Colors is used. The app adeptly uses the iOS photo library rather than maintaining its own photo library housed within the app. And all the machine learning features built into the app make it one of, if not the, most powerful iOS photo editor available for the iPad.

Adjustment Intensity and Recent Adjustments: Pixelmator Photo 1.2 now allows you to fine-tune the intensity of color adjustments and presets. As described above, these tools are fundamental to achieving a desired look after ML Match Colors has done its job.

You’re also able to quickly reference and copy the adjustments from your most recently edited photos in Pixelmator Photo 1.2. This, combined with ML Match Colors, makes for a quick and easy workflow to edit a batch of photos with the same colors and settings.

Wrap Up

ML Match Colors debuted as a powerful machine learning feature in Pixelmator Pro for the Mac. The feature alone almost had me download Pixelmator Pro. However, I held off, knowing my workflow was going to increasingly move to the iPad. I admit, I didn’t expect ML Match Colors to come to the iPad so quickly.

I’m glad I waited. Because I’m positive I would have been initially disappointed with the ML Match Colors feature on the Mac.

Now that I’ve had a chance to try the feature, I’m more likely to purchase Pixelmator Pro for the Mac, simply because of the feature.

Pixelmator Photo App

So much of the iPhone and iPad’s being is wrapped up in simplicity. The devices themselves are fairly simple to use and can somehow accommodate multiple generations of people in their user bases. This air of simplicity, applied to photography, almost makes it feel like you should be able to take a boring photo of the tree in your backyard and turn it into a masterpiece worthy of the Louvre.

The machine learning features in Pixelmator Photo are a taste of this simplicity, but don’t let your imagination run wild. Machine learning features in Pixelmator Photo take advantage of the deepest iOS technologies, eliminate a plethora of difficult and complicated tasks, and make editing photos easier than ever. They aren’t a perfect, one-tap-done editing tool.

ML Match Colors may be the very best machine learning tool available inside Pixelmator Photo. Drag and drop your favorite photos — or perhaps you can create and use your own color templates — to match the colors, then tweak everything else inside Pixelmator Photo’s vast array of editing tools. If you really want to make it easy, enable all the ML toggles in the tool array, hit export, and you’re done.

We named Pixelmator Photo as the photo editing app with the most potential in our big review. This 1.2 update really, really builds out some of that potential. And if this is just the start, Pixelmator Photo may move its way up the ladder of the best photo editing iPad apps.

Must-Have, Most-Used Photography Apps

Pixelmator Photo Mask

We spend an inordinate amount of time sorting through hundreds of apps to find the very best. Our team here at The Sweet Setup put together a short list of our must-have, most-used apps for taking and editing photos on iPhone and iPad.


The Way of The Knife

Adventures in Excel

If you've been following along with the posts in this series, and you've been putting some of the scenarios into practice, you've now crossed a crucial juncture in your never-ending quest to master the bloated beast that is Excel. To put things in perspective, if you've conquered all the quests that I've set you upon, you should now be able to:

* Open up and add macro code into an Excel worksheet

* Utilize lookup functions (particularly Index(Match)) to compare and validate data sets

* Build a complex formula

* Utilize IF/AND/OR statements in order to manipulate data, and finally...

* Understand your role in the data underground

If there was any running theme among the posts in the past, it's that if you're forced to utilize Excel in order to analyze data, you're probably grabbing a dirty version of the data set from an intermediary platform (such as Tableau or Business Objects) built by a developer who isn't informed as to how the end user is going to use the data. Your mission, whether you choose to accept it or not, is essentially to take data from people who know data and don't know the business, and turn it into data for people who know business and don't understand numbers.

If you're wondering whether you're in this role and don't know it yet, there's a simple two-question litmus test:

=IF(AND(COLORCODING,OR(SLICE,DICE)),"Analyst","Something Else")

For those of you who are just joining the party, that essentially says that if:

1. A superior of yours has ever asked you to "slice" and/or "dice" the data, and

2. You're asked to color code things arbitrarily,

then you might be an analyst. Now, while you're sitting at your desk (in what's most likely an Aeron chair) reading the above qualifiers, the first thought that pops into your mind should be: "WTF does slice and dice mean when it comes to data analysis?"
The answer may surprise you: it's not just meaningless business lingo, it's actually a pretty good explanation of what you're going to be doing A LOT of (but fear not, in all likelihood your boss didn't mean it like that, due to what I'd like to call the leveraging of the synergy paradigm).

Essentially, when one attempts to slap-chop a "data dump" (in computer patois, that's data pulled straight from the source), the first step often involves "normalizing" the data so that the format is logical and you're in a position to start employing some of the wizardry we've been talking about. The normalization process usually involves only a few steps, all of which are quite easy but EXTREMELY unintuitive. If you follow these steps, you'll either be done with the normalization process, or you'll be able to figure out how to finish it (all data dumps are dirty, but not all shit is created equal).

1. Ensure that your data has headings!
a. Headings are essential not just because they're a logical way to organize your variables, but because they're also REQUIRED to build a pivot table down the road. If even one of your columns doesn't have a heading, Excel won't play nice!

2. Ensure that your headings don't repeat themselves.
a. While this may seem obvious, if a heading repeats itself, Excel won't pivot properly, won't graph correctly AND won't upload properly into external tools (such as Microsoft Access, which we'll explore in a later post!).

3. Ensure that multiple sources are all located in the same Excel workbook (basically, if you're pulling data from multiple places, paste it all into the same Excel file).
a. Note that since Excel 2007, this step isn't 100% necessary, but it makes things much faster, both in ease of use and in the computational sense.

4. And finally, ensure that your variables are in the correct format... which is what we'll focus on for the rest of this post.
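Steps 1 and 2 can also be checked mechanically before you start pivoting. The sketch below is a hypothetical Python helper, not anything built into Excel. It reads the header row of a CSV data dump and reports blank or repeated headings. Treating headings that differ only in upper/lower case as duplicates is an assumption, chosen to err on the cautious side.

```python
# Sketch of normalization steps 1 and 2: every column needs a heading,
# and no heading may repeat. Case-insensitive duplicate matching is an assumption.
import csv
import io


def check_headers(header_row):
    """Return a list of human-readable problems found in a header row."""
    problems = []
    seen = {}  # lower-cased heading -> column number where it first appeared
    for col, name in enumerate(header_row, start=1):
        label = name.strip()
        if not label:
            problems.append(f"column {col} has no heading")
            continue
        key = label.lower()
        if key in seen:
            problems.append(f"column {col} repeats heading '{label}' (first in column {seen[key]})")
        else:
            seen[key] = col
    return problems


if __name__ == "__main__":
    dump = "Name,ID,,Name\nSmith,8675309,x,Bob\n"  # hypothetical data dump
    header = next(csv.reader(io.StringIO(dump)))
    for problem in check_headers(header):
        print(problem)
```

On the sample dump above, the helper flags column 3 (no heading) and column 4 (a second "Name").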
Often, data dumps combine multiple variables into one "string" (a set of characters with no other format), most often last names, first names, and ID numbers. That's fine when you're trying to figure out how many Smith,Bob,8675309s exist in your data... so basically useless on its own. This brings us to the title of this post: how we're going to slice up (or "parse") this string so that it's useful to us.

There are a few ways of going about this, but in my experience the most useful is some combination of the following formulas:

=LEFT, which returns a specified number of characters from the start of a string.

=RIGHT, which returns a specified number of characters from the end of a string.

=MID, which returns a specified number of characters starting from a defined position in the string.

=LEN, which returns the number of characters in the string.

=FIND, which returns the position of a specified character or string within a string.

Just a word to the wise: in all of these functions, character positions are counted starting from 1, not 0.

Assuming you've got some android in you, you'll notice that =LEFT and =RIGHT are basically useless on their own if you need to break up a string whose pieces vary in length (but they can be useful if you wind up with a data dump that gives you a preset amount of garbage to throw away each time, or as a second step in this process). Instead, we're going to leverage the fact that data dumps are generally exported as comma-separated values (CSV), which means there are going to be a lot of commas for you to use as "flags" where you want to break things up.

Take the example I gave you before: Smith,Bob,8675309. This is actually a particularly mean example, because you're going to need to break it up in three steps, for which there are multiple solutions, simply because there's more than one comma to "put your flag down" on.
One way to go about it is as follows. First, use the MID function together with FIND to separate the last name from the first name and ID, like so: =MID(A1,FIND(",",A1)+1,LEN(A1)). The result will be Bob,8675309. The +1 is there so that we don't include the comma (which would start this whole circus again). From there, you can repeat the steps on the newly created Bob,8675309 to obtain the ID. Once you have the ID number, return to the original string and use =LEFT(A1,FIND(",",A1)-1) to get "Smith". The -1 stops the count just before the first comma, so the comma itself isn't included. Finally, go to the Bob,8675309 string (which we'll say is located in B1) and apply the same formula, =LEFT(B1,FIND(",",B1)-1), to get "Bob" all by itself. With all that being said, until we get a bit more advanced (yes, you can do this in fewer steps), assume you need one formula per comma to "parse" each section, and you'll find your way. The benefit of breaking it down this way is that you can drag the formulas down and they'll perform exactly the same steps regardless of how long each string is, as long as every string has the same two commas.

So, what have we learned?
We've learned to slice up data dumps,
we've learned to dice up the resulting strings, and finally,
we've learned how to take a small bit of useless garbage and turn it into variables we can use in further analysis via slicing and dicing.

So you see? Perhaps your boss knew something after all when they asked whether you knew how to slice and dice data... juuuuuust kidding. Now that you have a basic foundation of knowledge (and if you don't, I implore you to read the rest of the Adventures in Excel series), the next few lessons are going to go into some of the crazier stuff I've personally been asked to do. My friends on this blog could do it in 5 minutes using something other than Excel, but you'll learn to start a fire with some flint and a bunch of sticks. -Snacks out.
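For comparison, here is the same MID/FIND/LEFT chain sketched in Python. It assumes each record has exactly two commas (Last,First,ID). `parse_record` and the second sample row are hypothetical, and each comment names the Excel formula the line imitates.

```python
def parse_record(record):
    """Split a hypothetical 'Last,First,ID' record the way the formula chain does."""
    # FIND(",",A1): 1-based position of the first comma
    comma_pos = record.index(",") + 1
    # =MID(A1,FIND(",",A1)+1,LEN(A1)): a 1-based start of comma_pos + 1
    # is the 0-based index comma_pos, so this keeps everything after the comma
    rest = record[comma_pos:comma_pos + len(record)]  # "Bob,8675309" (this is B1)
    # =LEFT(A1,FIND(",",A1)-1): everything before the first comma
    last_name = record[:comma_pos - 1]
    # Repeat both formulas on B1 to split the first name from the ID
    rest_comma_pos = rest.index(",") + 1
    first_name = rest[:rest_comma_pos - 1]  # =LEFT(B1,FIND(",",B1)-1)
    id_number = rest[rest_comma_pos:]       # =MID(B1,FIND(",",B1)+1,LEN(B1))
    return last_name, first_name, id_number


# Looping over the rows plays the role of dragging the formulas down the column.
rows = ["Smith,Bob,8675309", "Doe,Jane,42"]  # second row is made-up sample data
for row in rows:
    print(parse_record(row))
```

In Python itself, `"Smith,Bob,8675309".split(",")` returns all three pieces in a single call.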

- Max Mileaf

Online data science

We teach according to Indian Standard Time, at times convenient for you, offering in-depth knowledge of Data Science. We are available round the clock on WhatsApp, email, or phone to clarify doubts and provide occasional help, and we also give lifetime access to self-paced study material.

In Hyderabad, IMS Proschool offers a Data Science training course at Lakdikapul. IMS Proschool is the leading classroom provider of analytics education in India and was consistently ranked among the Top 5 Analytics Institutes in India by Analytics India Magazine in 2016, 2017, 2018 and 2019.

Data is everywhere, it is growing exponentially worldwide, and it will likely keep growing at an accelerating rate for the foreseeable future. Businesses generate huge quantities of data in the form of blogs, messages, transaction documents, mobile device data, social media, and so forth. By using this data effectively, a business can create significant value and grow by improving productivity, increasing efficiency, and delivering more value to consumers. All these algorithms will be explained using a case study and implemented in Python. Data Science is taking baby steps in the Indian market today, and it will only grow enormously in the years to come. As the underlying framework, Hadoop is a must-know skill and a stepping stone on the analytics journey.

The Great Lakes faculty committee screens and selects the candidates who will be asked to take an aptitude test. The screening process ends with a final round of personal interviews. Upon completing the program, candidates are offered placement opportunities for three months from the date of graduation. During this period, candidates receive placement help from the staff. The team helps candidates prepare for interviews through mentoring, CV reviews, and preparation for upcoming interviews.

This Data Science course is designed to enhance your skills in probability and statistics, machine learning, math, Excel, Python, R and Tableau through hands-on classes. Learn how to use the Python libraries NumPy, pandas and Matplotlib to develop machine learning algorithms for data analysis from scratch, and take your Data Science skills to the next level. Enroll in the Data Science Certification training course to master Big Data analysis using Apache Spark and Scala. This Data Science Training in Hyderabad program is fully aligned with current industry requirements. We update the curriculum from time to time and introduce new topics, so students stay current with the latest methods and tools used in the industry.

The Data Science training program covers the concepts of Data Science in depth. It helps you master skills such as statistics, data mining, clustering, hypothesis testing, decision trees, data wrangling, data visualization, linear regression and much more. The data science training in Ameerpet concludes with a capstone project that helps candidates apply all their knowledge and create real business products.

Yes, as part of our Data Science Course in Hyderabad program, we provide 100% placement assistance for our students. We have tie-ups with top companies, recommend our students' profiles to them, and get interviews scheduled.

Please check with the coordinators about training options in your location. Hyderabad has an ideal skill-development ecosystem that lets everyone from beginners to senior professionals switch to the Data Science field. Whether it is a small company or an MNC, every business needs a data scientist to handle its large pool of data. In-depth coverage of data mining, data forecasting, and data visualization.

InventaTeq has tie-ups with many IT and non-IT MNCs. Whenever a new opening comes up, we get it and share it with our students to help them land a dream job as quickly as possible. InventaTeq data shows that placements in the field of Data Science average 4 LPA, with 3.2 LPA being the minimum salary for a fresher. The average salary for an experienced person is 6.5 LPA, with 20 LPA as the maximum salary through the InventaTeq placement cell. You will receive the Data Science course module by email, and the physical copy will be shared with you at the training center. Help employers reach out to you, with your certification adding value to your resume. Learn the key parameters, how to build a neural network, and its applications. The advanced MS Excel training helps you understand the data, visualize it, and perform multiple dataset operations on it.

We use technology to complement LIVE teaching, whether online or offline, not to replace it with recorded content. Our focus on learning outcomes has led us to co-create and co-deliver globally recognized bachelor's, master's, doctoral and certificate programs for individuals and enterprises from various backgrounds. With the popularity of Big Data growing exponentially, opportunities for Data Scientists and architects have been rising in all major business sectors.

Complex data needs to be investigated to establish hidden patterns and correlations and to inform the decision-making process. Analytics helps provide factual changes for business optimization as opposed to hypothetical conclusions. Data analytics has been inherently revolutionized: it now offers fast answers with minimal effort compared with pre-Big Data industry requirements, through a framework as user-friendly as Data Science. It involves improving methods of recording, storing, and analyzing data to extract useful information effectively. The aim of data science is to gain insights and knowledge from any type of data, both structured and unstructured. It is in high demand and is also referred to as predictive analytics for official and industrial purposes. Global Coach helps students learn all the advanced courses that are currently in high demand.

Also, we had periodic group discussions in which case studies were assigned to us. Learning Data Science, Artificial Intelligence, and Machine Learning through case studies made them easy to understand.

During the ongoing pandemic, I looked for a few online courses I could take and came across InventaTeq. I joined the course and completed the Data Science certification. This certification helped me get multiple calls for various opportunities. InventaTeq has transformed my career and given it a new dimension. The eligibility criteria for the Data Science Course are a bachelor's degree, a passion for data science, and a little knowledge of it. In Data Science, you will learn to find valuable data, analyze it, and apply mathematical skills to it. You can then use the results in business to make good decisions, develop products, forecast, and build business strategies. Dear friends, initially I struggled a lot to find the best training centre for Data Science in Hyderabad.

I received Data Science job offers from Goldman Sachs, Mindtree, and Barracuda in one go. I am currently placed at Mindtree, and my experience with DataMites, the process, and the journey to success was a pleasant one. Under the guidance of Ashok sir, the concepts were made simple to understand. The course fee varies between INR 2,00,000 and INR 3,50,000. Many data science institutes also allow candidates to pay in installments.

The demand for data scientists is high, but the supply is not yet at a satisfactory level! For this reason, the starting salary for a fresher in the data science field is considerably higher than in other fields. We are a one-stop solution for students to enhance their skills, connect with experts and share their stories. Our aim is to help college students get access to the best career counseling advice and training, followed by mentoring, by arranging career events across the country. The above article therefore provides a list of the top Data Science institutes in Hyderabad.

We train according to Indian Standard Time, with in-depth practical knowledge of every topic in classroom training and 80 to 90 hours of real-time practical training sessions. Different slots are available on weekends or weekdays, according to your choice.

Learn the different ways to handle a large volume of data and classify it into different categories. The project aims to help you understand the different scenarios that arise from online and offline data and how to tackle them. I attended a webinar given by IBM's Senior Expert Mr G Ananthapadmanabhan (Practice Leader – Analytics) on Emerging Trends in Analytics and Artificial Intelligence. It was a fantastic session, and I got a basic idea of how AI is being used in analytics these days. At the end of the session, I was glad to join the Data Science program. The mentorship by industry veterans and student mentors makes the program extremely engaging. We provide classroom training on IBM Certified Data Science in Hyderabad for people who prefer guided, hands-on training.

The faculty have a lot of experience in their fields, and they cleared all our doubts. I got opportunities at 2 companies, one at HSBC and the other at Genpact.