#Types of cloud computing service models

How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services like Amazon SageMaker, Google Cloud Vertex AI (the successor to AI Platform), or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.
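To make the idea concrete, here is a minimal sketch of the proportional rule behind target-tracking scaling policies: if instances run above the target metric value, capacity grows in proportion. It is a simplified model under stated assumptions (no cooldown periods, no warm-up, and hypothetical bounds of 1 to 10 instances). Managed auto-scaling services apply their own additional rules.

```python
import math


def desired_capacity(current_instances: int, metric_value: float,
                     target_value: float, min_instances: int = 1,
                     max_instances: int = 10) -> int:
    """Return how many instances would bring the per-instance metric to the target.

    Simplified target-tracking model (assumption): no cooldowns or warm-up periods.
    """
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    # Total load is current_instances * metric_value. Divide by the target
    # per-instance value and round up so the target is not exceeded.
    needed = math.ceil(current_instances * metric_value / target_value)
    # Clamp to the configured minimum and maximum fleet size.
    return max(min_instances, min(max_instances, needed))


# Four instances at 90% CPU with a 60% target: scale out to six.
print(desired_capacity(4, 90.0, 60.0))  # 6
# Four instances at 15% CPU: scale in, but never below the minimum of one.
print(desired_capacity(4, 15.0, 60.0))  # 1
```

Rounding up rather than to the nearest integer errs on the side of spare capacity, which usually costs less than overloaded instances.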

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.
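These tools share a core pattern: a large dataset is processed as a stream of bounded batches rather than loaded into memory all at once. The sketch below shows that pattern in plain Python. It does not use the Kafka or Glue APIs. `record_stream` and `transform` are hypothetical stand-ins for a real data source and processing step.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(records: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield lists of up to batch_size records without materializing the whole input."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    it = iter(records)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def record_stream(n: int) -> Iterator[dict]:
    """Hypothetical source standing in for a message topic or a file in object storage."""
    for i in range(n):
        yield {"id": i, "value": i * 2}


def transform(batch: List[dict]) -> List[dict]:
    """Hypothetical processing step: keep only records with an even id."""
    return [r for r in batch if r["id"] % 2 == 0]


processed = 0
for batch in batched(record_stream(10), batch_size=4):
    processed += len(transform(batch))
print(processed)  # 5
```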

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU instances (or TPUs, which are available on Google Cloud) for deep learning tasks to accelerate training.

Spot Instances:

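Use spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks. Because the provider can reclaim this capacity at short notice, these workloads should tolerate interruption, for example by saving checkpoints regularly.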
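Spot and preemptible capacity can be reclaimed mid-job, so training code should save its progress and resume from it. The sketch below assumes a local JSON checkpoint file and a hypothetical one-number "model". In a real cloud job you would typically write checkpoints to durable storage such as an object store, so that a replacement instance can pick them up.

```python
import json
import os
import tempfile
from typing import Optional


def load_checkpoint(path: str) -> dict:
    """Return the saved training state, or a fresh state if no checkpoint exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"epoch": 0, "weight": 0.0}


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temporary file, then rename it over the old checkpoint, so an
    interruption mid-write does not leave a half-written file behind."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic on POSIX filesystems


def train(total_epochs: int, path: str, interrupt_at: Optional[int] = None) -> dict:
    state = load_checkpoint(path)
    # Resume from the first epoch that has not been completed yet.
    for epoch in range(state["epoch"], total_epochs):
        if interrupt_at is not None and epoch == interrupt_at:
            raise RuntimeError("simulated spot interruption")
        state["weight"] += 0.5       # hypothetical stand-in for one epoch of training
        state["epoch"] = epoch + 1   # record progress only after the epoch finishes
        save_checkpoint(path, state)
    return state


checkpoint = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
try:
    train(5, checkpoint, interrupt_at=3)  # epochs 0-2 finish, then the "instance" is reclaimed
except RuntimeError:
    pass
print(train(5, checkpoint))  # resumes at epoch 3: {'epoch': 5, 'weight': 2.5}
```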

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal hyperparameters. Services like hyperparameter tuning in Google Cloud's Vertex AI (formerly AI Platform) or Amazon SageMaker Automatic Model Tuning can help.
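Tuning services typically offer several search strategies. The sketch below implements random search, one of the simplest. The objective function `validation_loss` is hypothetical and stands in for training and evaluating a real model. The search ranges are also assumptions.

```python
import random


def validation_loss(learning_rate: float, num_layers: int) -> float:
    """Hypothetical objective; a real one would train and evaluate a model."""
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(num_layers - 4)


def random_search(n_trials: int, seed: int = 0):
    """Sample hyperparameters at random and keep the best (lowest-loss) trial."""
    rng = random.Random(seed)  # seeded so results are reproducible
    best = None
    for _ in range(n_trials):
        params = {
            # Sample the learning rate log-uniformly between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "num_layers": rng.randint(1, 8),
        }
        loss = validation_loss(**params)
        if best is None or loss < best[0]:
            best = (loss, params)
    return best


best_loss, best_params = random_search(50)
print(best_loss, best_params)
```

Each trial is independent, so a managed service can run many of them in parallel on separate instances. That parallelism is where the cloud helps most.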

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.
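A common form of distributed training is data parallelism. Each worker computes gradients on its own shard of the data. The gradients are then averaged (an "all-reduce"), and every replica applies the same update. PyTorch `DistributedDataParallel` and TensorFlow's `tf.distribute` strategies do this across real machines. The sketch below only simulates the workers inside a single process, using a one-parameter linear model as an assumed example.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]


def gradient(w: float, shard: Data) -> float:
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: List[float]) -> float:
    """Stand-in for the all-reduce step that averages gradients across workers."""
    return sum(values) / len(values)


def data_parallel_train(data: Data, num_workers: int, lr: float = 0.01, steps: int = 200) -> float:
    # Split the dataset into one equal-sized shard per worker (round-robin).
    shards = [data[i::num_workers] for i in range(num_workers)]
    w = 0.0
    for _ in range(steps):
        grads = [gradient(w, shard) for shard in shards]  # each worker computes locally
        w -= lr * all_reduce_mean(grads)                  # every replica applies the same update
    return w


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # the true weight is 3
print(round(data_parallel_train(data, num_workers=4), 3))  # 3.0
```

When the shards are equal in size, averaging the per-shard gradients gives the same update as computing the gradient over the full dataset. That equivalence is why data parallelism speeds up training without changing what the model learns.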

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.
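Monitoring services compute statistics such as percentiles over recent metric data and raise alarms when those statistics cross a threshold. The sketch below applies that logic to inference latency using a sliding window. The window size and the 200 ms threshold are hypothetical values, not recommendations.

```python
from collections import deque


class LatencyMonitor:
    """Track recent request latencies and flag when the 95th percentile is too high."""

    def __init__(self, window: int = 100, p95_threshold_ms: float = 200.0):
        self.samples = deque(maxlen=window)  # the oldest samples drop off automatically
        self.p95_threshold_ms = p95_threshold_ms

    def record(self, latency_ms: float) -> None:
        self.samples.append(latency_ms)

    def p95(self) -> float:
        if not self.samples:
            raise ValueError("no samples recorded yet")
        ordered = sorted(self.samples)
        n = len(ordered)
        rank = (95 * n + 99) // 100  # nearest-rank percentile: ceil(0.95 * n) in integer math
        return ordered[rank - 1]

    def breached(self) -> bool:
        return self.p95() > self.p95_threshold_ms


monitor = LatencyMonitor(window=20, p95_threshold_ms=200.0)
for latency in [50] * 18 + [400, 450]:
    monitor.record(latency)
print(monitor.p95(), monitor.breached())  # 400 True
```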

Logging:

Maintain detailed logs for debugging and performance analysis, using tools like Amazon CloudWatch Logs or Google Cloud Logging. AWS CloudTrail complements these by recording API activity for auditing.
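Logs are easier to search and analyze when each line is structured. Services like CloudWatch Logs and Google Cloud Logging can generally parse JSON log lines into queryable fields. The sketch below uses Python's standard `logging` module to emit one JSON object per record. The field names `model_version` and `latency_ms` are hypothetical.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Extra fields passed via extra={"fields": {...}} are merged into the object.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


logger = logging.getLogger("inference")
handler = logging.StreamHandler()  # writes to stderr by default
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("prediction served", extra={"fields": {"model_version": "v3", "latency_ms": 42}})
```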

Model Deployment:

Serverless Deployment:

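Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference for lightweight models without you managing servers. However, memory limits, package-size limits, and cold starts make them less suitable for large models.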
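A serverless inference function is essentially a request handler wrapped around a model. The sketch below follows the `(event, context)` handler signature used by AWS Lambda's Python runtime. It assumes an API Gateway-style event whose `body` is a JSON string. The "model" is a hypothetical linear scorer, loaded once at import time so that warm invocations reuse it.

```python
import json

# Loaded once per execution environment, not on every request, so warm
# invocations skip the loading cost. Hypothetical stand-in for a real model.
MODEL = {"weights": [0.4, -0.2, 0.1], "bias": 0.05}


def predict(features):
    return sum(w * x for w, x in zip(MODEL["weights"], features)) + MODEL["bias"]


def lambda_handler(event, context):
    """Handler in the (event, context) shape used by AWS Lambda's Python runtime."""
    try:
        body = json.loads(event.get("body") or "{}")
        features = body["features"]
        if len(features) != len(MODEL["weights"]):
            raise ValueError("wrong number of features")
    except (KeyError, ValueError, TypeError) as exc:
        # Malformed JSON, a missing field, or the wrong shape: reject the request.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    return {"statusCode": 200, "body": json.dumps({"score": predict(features)})}


# Local usage: call the handler directly with a fake event and no context.
print(lambda_handler({"body": json.dumps({"features": [1, 2, 3]})}, None))
```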

Model Optimization:

Optimize models by compressing them (for example, through quantization or pruning) or by using knowledge distillation to train a smaller model. Both approaches reduce model size and inference latency.
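Quantization is one widely used compression technique: weights are stored as low-precision integers plus a scale factor. The sketch below applies symmetric per-tensor 8-bit quantization to a hypothetical weight list in plain Python. ML frameworks provide optimized implementations. Distillation, which trains a smaller model to mimic a larger one, is a separate technique and is not shown here.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.003, 0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(scale, 4), max_error <= scale / 2)
```

Storing one byte per weight instead of four cuts model size roughly fourfold. The cost is a small, bounded rounding error, visible here in the smallest weight.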

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.
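A rough monthly estimate is useful alongside these tools. For example, it can show whether spot pricing is worth the interruptions. Every price, discount, and overhead below is an assumed placeholder. Substitute current figures for your region and instance types.

```python
# All figures are hypothetical placeholders, not real prices.
ON_DEMAND_RATE = 3.00   # USD per GPU-instance hour (assumed)
SPOT_DISCOUNT = 0.70    # assume spot is 70% cheaper than on-demand (varies over time)
RERUN_OVERHEAD = 0.10   # assume 10% extra hours lost to interruptions and restarts


def training_cost(hours: float, use_spot: bool) -> float:
    """Estimated cost of a training workload on on-demand or spot capacity."""
    if use_spot:
        return hours * (1 + RERUN_OVERHEAD) * ON_DEMAND_RATE * (1 - SPOT_DISCOUNT)
    return hours * ON_DEMAND_RATE


def over_budget(line_items: dict, monthly_budget: float) -> bool:
    return sum(line_items.values()) > monthly_budget


items = {
    "training (spot)": training_cost(200, use_spot=True),
    "inference endpoint": 720 * 0.50,  # always-on endpoint at an assumed $0.50/hour
    "storage": 1000 * 0.023,           # 1,000 GB at an assumed $0.023 per GB-month
}
for name, cost in items.items():
    print(f"{name}: ${cost:,.2f}")
print("on-demand training would cost:", f"${training_cost(200, use_spot=False):,.2f}")
print("over budget:", over_budget(items, monthly_budget=700.0))
```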

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.

CLOUD COMPUTING: A CONCEPT OF NEW ERA FOR DATA SCIENCE

Cloud computing has been one of the most interesting and fastest-evolving topics in computing over the past decade. The idea of storing data on, or accessing software from, a remote computer you know nothing about seems confusing to many users. Most people and organizations that use cloud computing daily say they do not understand it. But the concept is not as confusing as it sounds. Cloud computing is a type of service in which computing resources are delivered over a network. In simple terms, it can be compared to the electricity supply we use every day. We do not have to worry about how electricity is generated, how it is transported to our houses, or where it comes from; we simply use it. The idea behind cloud computing is the same: people and organizations can simply use it. This concept is one of the major developments in computing of the decade.

Cloud computing is a service that lets a user in one location remotely access data, software, or applications hosted in another location. This is usually done with a web browser over a network, in most cases the internet. Browsers and internet access are now available on almost every device people use. If users want to open a file on their device but do not have the software needed, they can use a cloud service over the internet to access it.

Cloud providers offer thousands of services, and one of the most widely used is cloud storage. These services are available to the public around the world and do not require users to install software on their devices; anyone can access and use them over the internet. Many are free up to a certain limit, after which users are billed for further usage. Well-known cloud services include Dropbox, SugarSync, Amazon Cloud Drive, and Google Docs.

Finally, the availability of cloud services is not guaranteed, whether because of technical problems or because a service goes out of business. One example is Megaupload, a service that was shut down by the U.S. government and the FBI over illegal file-sharing allegations. As a result, the files in its storage had to be deleted, and customers could not get their files back.

Service Models

Cloud Software as a Service (SaaS)
• Use the provider's applications running on a cloud infrastructure
• Accessible from various client devices through a thin client interface such as a web browser
• Consumer does not manage or control the underlying cloud infrastructure, including network, servers, operating systems, and storage

Examples: Google Apps, Microsoft Office 365, Petrosoft, Onlive, GT Nexus, Marketo, Casengo, TradeCard, Rally Software, Salesforce, ExactTarget and CallidusCloud

Cloud Platform as a Service (PaaS)
• Cloud providers deliver a computing platform, typically including an operating system, programming language execution environment, database, and web server
• Application developers can develop and run their software solutions on a cloud platform without the cost and complexity of buying and managing the underlying hardware and software layers

Examples: AWS Elastic Beanstalk, Cloud Foundry, Heroku, Force.com, Engine Yard, Mendix, OpenShift, Google App Engine, AppScale, Windows Azure Cloud Services, OrangeScape and Jelastic

Cloud Infrastructure as a Service (IaaS)
• Cloud provider offers processing, storage, networks, and other fundamental computing resources
• Consumer is able to deploy and run arbitrary software, which can include operating systems and applications

Examples: Amazon EC2, Google Compute Engine, HP Cloud, Joyent, Linode, NaviSite, Rackspace, Windows Azure, ReadySpace Cloud Services, and Internap Agile
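One way to compare the three service models is to ask which layers of the stack the consumer still manages. The sketch below encodes a simplified, assumed split based on the descriptions above. Real offerings blur these boundaries, so treat it as an illustration rather than a definition.

```python
from typing import Dict, List

# Layers of a typical application stack, from the bottom up (a simplification).
STACK = ["network", "servers", "storage", "operating system", "runtime", "application"]

# Index of the first layer the consumer manages under each model (assumed mapping):
# IaaS hands over from the operating system upward, PaaS only the application,
# and SaaS hands over none of the stack.
CONSUMER_STARTS_AT: Dict[str, int] = {"IaaS": 3, "PaaS": 5, "SaaS": len(STACK)}


def consumer_managed(model: str) -> List[str]:
    return STACK[CONSUMER_STARTS_AT[model]:]


def provider_managed(model: str) -> List[str]:
    return STACK[:CONSUMER_STARTS_AT[model]]


for model in ("IaaS", "PaaS", "SaaS"):
    print(f"{model}: consumer manages {consumer_managed(model) or 'none of the stack'}")
```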

Deployment Models
• Private Cloud: Cloud infrastructure is operated solely for an organization
• Community Cloud: Shared by several organizations and supports a specific community that has shared concerns
• Public Cloud: Cloud infrastructure is made available to the general public
• Hybrid Cloud: Cloud infrastructure is a composition of two or more clouds

Advantages of Cloud Computing
• Improved performance
• Better performance for large programs
• Virtually unlimited storage capacity and computing power
• Reduced software costs
• Universal document access
• Only a computer with an internet connection is required
• Instant software updates
• No need to pay for or download an upgrade

Disadvantages of Cloud Computing
• Requires a constant Internet connection
• Does not work well with low-speed connections
• Even with a fast connection, web-based applications can sometimes be slower than accessing a similar software program on your desktop PC
• Everything about the program, from the interface to the current document, has to be sent back and forth from your computer to the computers in the cloud

About Rang Technologies: Headquartered in New Jersey, Rang Technologies has spent over a decade delivering innovative solutions and top talent to help businesses get the most out of the latest technologies in their digital transformation journey.

#CloudComputing#CloudTech#HybridCloud#ArtificialIntelligence#MachineLearning#Rangtechnologies#Ranghealthcare#Ranglifesciences

Enterprises Explore These Advanced Analytics Use Cases

Businesses want to use data-driven strategies, and advanced analytics solutions optimized for enterprise use cases make this possible. Analytical technology has come a long way, with new capabilities ranging from descriptive text analysis to big data. This post will describe different use cases for advanced enterprise analytics.

What is Advanced Enterprise Analytics?

Advanced enterprise analytics includes scalable statistical modeling tools that utilize multiple computing technologies to help multinational corporations extract insights from vast datasets. Professional data analytics services offer enterprises industry-relevant advanced analytics solutions.

Modern descriptive and diagnostic analytics can revolutionize how companies leverage their historical performance intelligence. Likewise, predictive and prescriptive analytics allow enterprises to prepare for future challenges.

Conventional analysis methods had a limited scope and prioritized structured data processing. However, many advanced analytics techniques can quickly identify valuable trends in unstructured datasets. Global business firms can therefore use advanced analytics solutions to process qualitative consumer reviews and brand-related social media coverage.

Use Cases of Advanced Enterprise Analytics

1| Big Data Analytics

Modern analytical technologies can take advantage of the latest hardware developments in cloud computing and virtualization. In addition, data lakes and warehouses have become more common, increasing corporations' ability to gather data from multiple sources.

Big data refers to constantly growing volumes of data of mixed types. It can include audio, video, images, and specialized file formats. This variety makes it difficult for conventional data analytics services to extract insights for enterprise use cases, highlighting the importance of advanced analytics solutions.

Advanced analytical techniques process big data efficiently. Besides, minimizing energy consumption and maintaining system stability during continuous data aggregation are two significant advantages of using advanced big data analytics.

2| Financial Forecasting

Enterprises can raise funds using several financial instruments, but revenue remains vital to profit estimation. Corporate leadership is often curious about changes in cash flow across several business quarters. After all, reliable financial forecasting enables them to allocate a departmental budget through informed decision-making.

The variables affecting your financial forecasting models include changes in government policies, international treaties, consumer interests, investor sentiment, and the cost of running different business activities. Businesses need industry-relevant tools to model these variables accurately.

Multivariate financial modeling is one of the enterprise-level examples of advanced analytics use cases. Corporations can also automate some components of economic feasibility modeling to minimize the duration of data processing and generate financial performance documents quickly.
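As a very simple illustration of trend-based forecasting, the sketch below fits a straight line to quarterly figures by ordinary least squares and extrapolates it. This is a univariate linear trend, far simpler than the multivariate models described above. The cash-flow numbers are hypothetical.

```python
from typing import List, Tuple


def fit_trend(values: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of values[t] = intercept + slope * t."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    ts = range(n)
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    cov = sum((t - t_mean) * (v - v_mean) for t, v in zip(ts, values))
    var = sum((t - t_mean) ** 2 for t in ts)
    slope = cov / var
    return v_mean - slope * t_mean, slope


def forecast(values: List[float], periods_ahead: int) -> List[float]:
    """Extrapolate the fitted line for the next periods_ahead periods."""
    intercept, slope = fit_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(periods_ahead)]


# Hypothetical quarterly cash flow, in millions.
cash_flow = [10.0, 12.0, 14.0, 16.0]
print(forecast(cash_flow, 2))  # [18.0, 20.0]
```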

3| Customer Sentiment Analysis

Customers’ emotions influence their purchasing habits and brand perception. Customer sentiment analysis therefore infers their feelings and attitudes to help you improve your marketing materials and sales strategy. Data analytics services also provide enterprises with the tools necessary for customer sentiment analysis.

Advanced sentiment analytics solutions can evaluate descriptive consumer responses gathered during customer service interactions and market research studies. They classify the sentiment in this qualitative data as positive, negative, or neutral.
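The simplest form of this classification is lexicon-based: count the positive and negative words and compare the totals. The sketch below uses a tiny, hypothetical word list. Production sentiment analysis usually relies on trained language models or much larger curated lexicons.

```python
import re

# Tiny illustrative lexicon (hypothetical); real systems use far richer resources.
POSITIVE = {"great", "love", "fast", "helpful", "excellent"}
NEGATIVE = {"slow", "broken", "rude", "terrible", "disappointed"}


def sentiment(text: str) -> str:
    """Classify text as positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "Support was helpful and delivery was fast",
    "The app is slow and the checkout is broken",
    "I ordered the blue model",
]
print([sentiment(r) for r in reviews])  # ['positive', 'negative', 'neutral']
```

Word counting misses negation ("not helpful") and sarcasm. These limitations are one reason enterprises turn to the more advanced tools described here.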

Negative sentiments often originate from poor customer service, product deficiencies, and consumer discomfort in using the products or services. Corporations must modify their offerings to minimize negative opinions. Doing so helps them decrease customer churn.

4| Productivity Optimization

Factory equipment requires a reasonable maintenance schedule to ensure that machines operate efficiently. Similarly, companies must offer recreation opportunities, holidays, and special-purpose leaves to protect the employees’ psychological well-being and physical health.

However, these activities affect a company’s productivity. Enterprise analytics solutions can help you use advanced scheduling tools and human resource intelligence to determine the optimal maintenance requirements. They also include other productivity optimization tools concerning business process innovation.

Examples of advanced analytics in this area include identifying, modifying, and replacing inefficient organizational practices with more effective workflows. Consider how outdated computing hardware or employee skill gaps affect your enterprise’s productivity. Analytics lets you optimize these aspects of the business.

5| Enterprise Risk Management

Risk management includes identifying, quantifying, and mitigating internal or external corporate risks to increase an organization’s resilience against market fluctuations and legal changes. Improved risk assessment is among the most widely implemented use cases of advanced enterprise analytics solutions.

Internal risks revolve around human error, software incompatibilities, production issues, leadership accountability, and skill development. Poor team coordination in multi-disciplinary projects is one example of an internal risk.

External risks result from changes in the laws, guidelines, and frameworks that affect you and your suppliers. For example, changes in tax regulations or import-export tariffs might not affect you directly. However, your suppliers might raise their prices, which affects you in the end.

Data analytics services include advanced risk evaluations to help enterprises and investors understand how new market trends or policies affect their business activities.
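A common way to quantify risks is a risk register that scores each risk as likelihood multiplied by impact and prioritizes the highest scores. The 1-to-5 scales, the threshold of 10, and the example risks below are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Risk:
    name: str
    category: str    # "internal" or "external"
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks: List[Risk], threshold: int = 10) -> List[Risk]:
    """Return risks scoring at or above the threshold, highest score first."""
    return sorted((r for r in risks if r.score >= threshold),
                  key=lambda r: r.score, reverse=True)


register = [
    Risk("Poor coordination in multi-disciplinary projects", "internal", 3, 3),
    Risk("Supplier price rise after a tariff change", "external", 4, 4),
    Risk("Software incompatibility after an upgrade", "internal", 2, 5),
]
for r in prioritize(register):
    print(r.name, r.score)
```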

Conclusion

Enterprise analytics has many use cases in which data improves management’s understanding of supply chain risks, consumer preferences, cost optimization, and employee productivity. Advanced analytics solutions also help corporate clients with financial forecasting.

Newer tools that integrate advanced analytics can also process mixed data types, including unstructured datasets. Furthermore, you can automate the extraction of insights from qualitative consumer responses collected in market research surveys.

While modern analytical modeling benefits enterprises in financial planning and business strategy, the reliability of the insights depends on data quality, and different data sources have unique authority levels. Therefore, you want experienced professionals who know how to ensure data integrity.

A leader in data analytics services, SG Analytics, empowers enterprises to optimize their business practices and acquire detailed industry insights using cutting-edge technologies. Contact us today to implement scalable data management modules to increase your competitive strength.

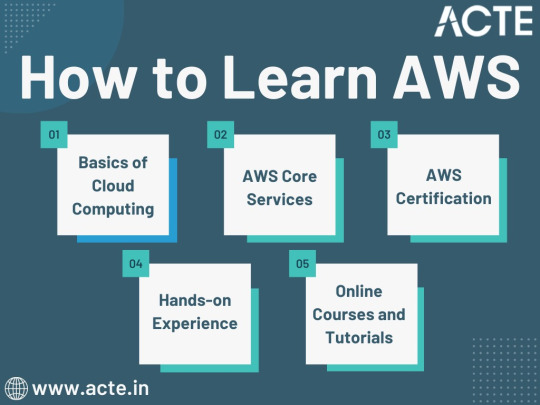

Journey to AWS Proficiency: Unveiling Core Services and Certification Paths

Amazon Web Services, often referred to as AWS, stands at the forefront of cloud technology and has revolutionized the way businesses and individuals leverage the power of the cloud. This blog serves as your comprehensive guide to understanding AWS, exploring its core services, and learning how to master this dynamic platform. From the fundamentals of cloud computing to the hands-on experience of AWS services, we'll cover it all. Additionally, we'll discuss the role of education and training, specifically highlighting the value of ACTE Technologies in nurturing your AWS skills, concluding with a mention of their AWS courses.

The Journey to AWS Proficiency:

1. Basics of Cloud Computing:

Getting Started: Before diving into AWS, it's crucial to understand the fundamentals of cloud computing. Begin by exploring the three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Gain a clear understanding of what cloud computing is and how it's transforming the IT landscape.

Key Concepts: Delve into the key concepts and advantages of cloud computing, such as scalability, flexibility, cost-effectiveness, and disaster recovery. Simultaneously, explore the potential challenges and drawbacks to get a comprehensive view of cloud technology.

2. AWS Core Services:

Elastic Compute Cloud (EC2): Start your AWS journey with Amazon EC2, which provides resizable compute capacity in the cloud. Learn how to create virtual servers, known as instances, and configure them to your specifications. Gain an understanding of the different instance types and how to deploy applications on EC2.

Simple Storage Service (S3): Explore Amazon S3, a secure and scalable storage service. Discover how to create buckets to store data and objects, configure permissions, and access data using a web interface or APIs.
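Bucket names must follow Amazon S3's naming rules. The sketch below checks a subset of the documented rules for general purpose buckets (length, allowed characters, adjacent periods, and IP-address format) locally before you try to create a bucket. It is not exhaustive, so consult the S3 documentation for the full list.

```python
import re
from typing import List

# Lowercase letters, digits, dots, and hyphens; must start and end with a letter or digit.
_ALLOWED = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")
# Four dot-separated groups of 1-3 digits, e.g. 192.168.5.4.
_IP_LIKE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def bucket_name_problems(name: str) -> List[str]:
    """Return the naming-rule violations found (partial check only)."""
    problems = []
    if not 3 <= len(name) <= 63:
        problems.append("must be 3-63 characters long")
    if not _ALLOWED.fullmatch(name):
        problems.append("use only lowercase letters, digits, dots, and hyphens, "
                        "and start and end with a letter or digit")
    if ".." in name:
        problems.append("must not contain two adjacent periods")
    if _IP_LIKE.fullmatch(name):
        problems.append("must not be formatted like an IP address")
    return problems


for candidate in ["my-training-data-2024", "My_Bucket", "192.168.5.4"]:
    print(candidate, bucket_name_problems(candidate) or "looks valid")
```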

Relational Database Service (RDS): Understand the importance of databases in cloud applications. Amazon RDS simplifies database management and maintenance. Learn how to set up, manage, and optimize RDS instances for your applications. Dive into database engines like MySQL, PostgreSQL, and more.

3. AWS Certification:

Certification Paths: AWS offers a range of certifications for cloud professionals, from foundational to professional levels. Consider enrolling in certification courses to validate your knowledge and expertise in AWS. AWS Certified Cloud Practitioner, AWS Certified Solutions Architect, and AWS Certified DevOps Engineer are some of the popular certifications to pursue.

Preparation: To prepare for AWS certifications, explore recommended study materials, practice exams, and official AWS training. ACTE Technologies, a reputable training institution, offers AWS certification training programs that can boost your confidence and readiness for the exams.

4. Hands-on Experience:

AWS Free Tier: Register for an AWS account and take advantage of the AWS Free Tier, which offers limited free usage of various AWS services. Some offers last 12 months after sign-up, while others are always free or short-term trials; check the current terms, as they change over time. Practice creating instances, setting up S3 buckets, and exploring other services within the free tier. This hands-on experience is invaluable in gaining practical skills.

5. Online Courses and Tutorials:

Learning Platforms: Explore online learning platforms like Coursera, edX, Udemy, and LinkedIn Learning. These platforms offer a wide range of AWS courses taught by industry experts. They cover various AWS services, architecture, security, and best practices.

Official AWS Resources: AWS provides extensive online documentation, whitepapers, and tutorials. Their website is a goldmine of information for those looking to learn more about specific AWS services and how to use them effectively.

Amazon Web Services (AWS) represents an exciting frontier in the realm of cloud computing. As businesses and individuals increasingly rely on the cloud for innovation and scalability, AWS stands as a pivotal platform. The journey to AWS proficiency involves grasping fundamental cloud concepts, exploring core services, obtaining certifications, and acquiring practical experience. To expedite this process, online courses, tutorials, and structured training from renowned institutions like ACTE Technologies can be invaluable. ACTE Technologies' comprehensive AWS training programs provide hands-on experience, making your quest to master AWS more efficient and positioning you for a successful career in cloud technology.

SESSION 1. INTRODUCTION TO INFOCOMM TECH LAW IN SINGAPORE

OPTIONAL READING: ICT LAW IN SINGAPORE CHAPTER 1

OPTIONAL REFERENCES: LAW AND TECH IN SINGAPORE CHAPTERS 1-3

A. COURSE DESCRIPTION AND OBJECTIVE

New economies have emerged within the last two decades including digital models of transaction and disruptive innovation. Internet intermediaries generally are taking on a major role as facilitators of commercial and non-commercial transactions online. These include social networking platforms (e.g. Facebook, IG and Twitter), multimedia sharing platforms (e.g. YouTube, Apple Music and Spotify), search engines and news aggregators (e.g. Yahoo, Google), content hosts and storage facilities (e.g. Dropbox) and many others. Content generating platforms such as TikTok have also become popular even as cybersecurity concerns and other misgivings have emerged at the governmental level in some jurisdictions.

In the last few years, the use of Internet of Things (IoT) devices has become quite common in advanced economies, the latest being wearable devices for the 'Metaverse' and an even more immersive experience in the digital realm. Artificial Intelligence (AI) is also becoming more visible at the workplace and at home, leading to ethical concerns and a slate of guidelines globally to 'govern' its development and deployment. Most recently, interest in generative AI (GAI) has surged following the successful launch of ChatGPT and other similar services.

Policies and laws have been adapted to deal with the roles and functions of Internet intermediaries, IoT and AI devices and services, and their potential effects and impact on society. Regulators in every jurisdiction face the challenge of managing the new economy and its players, and of balancing the interests of multiple parties, in areas of law including intellectual property, data protection, privacy, cloud technology and cyber-security. Different types of safe harbour laws and exceptions have emerged to protect these intermediaries while putting in place special obligations on them; at the same time, some forms of protection have been augmented to protect the interests of other parties, including content providers and creators as well as society at large. Students taking this course will examine the legal issues and solutions arising from transactions through the creation and use of digital information, goods and services ('info') as well as the use of non-physical channels of communication and delivery ('comm').

The technological developments from Web 1.0 to 2.0 and the future of Web 3.0, with their impact on human interaction, B2B/B2C commerce and e-governance, will be examined in the context of civil and criminal law, both in relation to the relevance of old laws and the enactment of new ones. In particular, this course examines the laws specifically arising from and relating to electronic transactions and interaction, their objectives, and their impact on the individual vis-à-vis other parties. Students will be taken through the policy considerations and the general Singapore legislation and judicial decisions on the subject, with comparisons and reference to foreign legislation where relevant.

In particular, electronic commerce and other forms of transactions will be studied with reference to the Electronic Transactions Act (2010) and the Singapore domain name framework supporting access to websites; personal data privacy and protection will be studied with reference to the Personal Data Protection Act of 2012 and the Spam Control Act; the challenges and changes to tort law to deal with online tortious conduct will be analysed (e.g. cyber-harassment under the Protection from Harassment Act (2014) and online defamation in the context of online communication); the rights and liabilities relating to personal uses of Internet content and user-generated content will be considered with reference to the Copyright Act (2021); computer security and crimes will be studied with reference to the Computer Misuse Act (2017) and the Cybersecurity Act (2018); and last, but not least, Internet regulation under the Broadcasting Act and its regulations as well as the Protection from Online Falsehoods and Manipulation Act (2019), the Foreign Interference (Countermeasures) Act (2021) and the Online Criminal Harms Bill (2021) will be critically evaluated.

You will note from the above paragraph that there has been an acceleration in the enactment of ICT laws and amendments in recent years, which shows the renewed focus of the government and policy-makers on the digital economy and society (as we move towards a SMART Nation). This is happening not only in Singapore, but abroad as well. In such an inter-connected world, with porous jurisdictional boundaries when it comes to human interaction and commercial transactions, we have to be aware of global trends and, in some cases, the laws of other jurisdictions as well. When it is relevant, foreign laws will also be canvassed as a comparison or to contrast the approach to a specific problem. Projects are a good way to explore these issues in greater depth.

B. CLASS PREPARATION FOR SESSION 1

In preparation for this session, use the online and library resources that you are familiar with to answer the following questions in the Singapore context (and for foreign/exchange students, in the context of your respective countries):

What are the relevant agencies and their policies on ICT?

What are the areas of law that are most impacted by ICT?

What is the government's position on Artificial Intelligence?

What are the latest legal developments in this field?

Also, critically consider the analysis and recommendations made in the report on Applying Ethical Principles for Artificial Intelligence in Regulatory Reform, SAL Law Reform Committee, July 2020. Evaluate it against the second version of the Model AI Governance Framework from the IMDA. Also, look at the Discussion Paper on GAI released on 6 June 2023. Take note of this even as we embark on the ‘tour’ of disparate ICT topics from Session 2 onwards, and the implications for each of those areas of law that will be covered in class.

C. ASSESSMENT METHOD AND GRADING DISTRIBUTION

Class Participation 10% (individually assessed)

Group Project 30% (group assessed)

Written Exam 60% (2 hour open book examination)

This course will be fully conducted in the classroom setting. Project groups will be formed by week 2, projects will be assigned from week 3, and presentations will begin from week 4, with written assignments due for submission a week after each presentation. Further details and instructions will be given after the groups are formed, but before the first project assignment.

D. RECOMMENDED TEXTBOOK AND READINGS

The main textbook is: Warren B. Chik & Saw Cheng Lim, Information and Communications Technology Law in Singapore (Academy Publishing, Law Practice Series, July 2020). You can purchase the book (both physical and electronic copies) from the Singapore Academy of Law Publishing (ask for the student discount). If you prefer, there are copies available in the reserves section of the Law Library that you can use. The other useful reference will be: Chesterman, Goh & Phang, Law and Technology in Singapore (Academy Publishing, Law Practice Series, September 2021).

Due to the rapid pace of development in the law in some areas of analysis, students will also be given instructions and pre-assigned readings via this blog one week before each lesson. Students need only refer to the SMU eLearn website for administrative information such as the Project Schedule and the Grade Book as well as to share project papers and presentation materials. Students will be expected to analyse legislative provisions and/or cases that are indicated as required reading for each week.

Free access to the local legislation and subsidiary legislation may be found at the Singapore Statutes Online website at: https://sso.agc.gov.sg.

Local cases are accessible through the Legal Workbench in Lawnet. The hyperlink can be found under the Law Databases column on the SMU Library’s Law Research Navigator at: http://researchguides.smu.edu.sg/LAW.

Other online secondary legal materials on Singapore law that you may find useful include Singapore Law Watch (http://www.singaporelawwatch.sg) and Singapore Law SG (https://www.singaporelawblog.sg).

There are also other secondary resources made available from the SMU Library when doing research for your projects such as the many other digital databases available from the LRN (e.g. Lexis, Westlaw and Hein online that are all available under the Law Databases column) and the books and periodicals that are available on the library shelves.

I've had your post up in a separate tab for ages, but life 😮💨😅

Just wanted to say I appreciate the in-depth response about RAID (and cosmic rays!) and especially the S.M.A.R.T. article - it'll come in handy as I'm quite complacent 😅 about my personal backups (I think my music files have the most "redundancy" via iPods 🤡)

*I dunno if you'll find it interesting, but the OP's schadenfreude in this Reddit post was amusing to me, at least 😅

https://www.reddit.com/r/Netsuite/s/Bxu6bFnrkS

But tbh, even for the outage referenced, 🤡 I would've been more angsty about the potential hours! of productivity lost (i.e. bc users getting anxious about deadlines, etc.) rather than the potential that our data pre-incident would be corrupted or lost.

Anyways, in any case! Thank you for sharing your tangents! I hope you're doing well!

Ahh what a great honour to be a long standing open tab. Funnily enough I started drafting this yesterday and got distracted from it as well. My original response to how I’m doing was going to be “semi patiently waiting for the Dreamcatcher comeback announcement” but since then we got Fromm messages saying probably not until at least June (noooooo) and now I'm also neck deep in ACC, much to my dismay. I have nothing intelligent to say about batteries, they’re complete mysteries to me as well, but they sure do exist. Unfortunately.

Anyway! I do have things to say about backups. Below the cut 😉

The thing about backups is that you can definitely get way deeper than you need to, I think it’s mostly important to be aware and comfortable with your level of risk. The majority of people don’t hold too much irreplaceable data on their personal computers, and the data that does come under that category often fits within free or cheap tiers of cloud backup providers. Before I had my current setup I used to take a less structured approach to backups. I sorted my data into three categories:

Replaceable, which encompasses things like applications and games which can be re-downloaded from the internet (and, if the original download source were no longer available, this would not be a huge deal);

Irreplaceable but not catastrophic, which encompasses things like game saves, half finished software projects, screenshots I've taken etc; and

Irreplaceable and catastrophic, which encompasses things like legal documents but also select few items from category 2 I'm just very personally attached to.

Category 1 items I had on a single hard drive, category 2 items I copied over selectively to a second every now and then when I got struck with a particularly large wave of paranoia, and category 3 items I did the same but with the additional step of scattering them through various cloud providers as well. Now that I have an actual redundant drive setup in a server I have Kopia running on my personal computer to periodically back up everything that isn’t on my SSD, but I still rely on those external cloud providers for offsite backups.

It’s important to note my setup is ultimately designed with hardware reliability engineering in mind, but that isn’t the only factor at play when thinking about backups, especially for enterprises. That Reddit thread is hilarious and I can see exactly where both sides are coming from; it’s a common enough disagreement between people of different departments. Senior software engineers tend to be paranoid old bastards who are loath to trust anyone else's code, which is in direct opposition to so many “software as a service” business models these days. But from a business perspective it makes complete sense to always have your own copy of the data as well, even if it isn’t the copy being used. It’s not just loss of productivity (although I agree that’s the most likely extent of any service down time) but often there are legal obligations on keeping records of certain types of work, and, while I’m pretty sure a company could win a court battle to absolve itself of responsibility in the event of a trusted third party being the one to drop the ball, that’s not the kind of argument you even want to risk getting into when there’s such a simple extra safeguard that could be put in place.

My assessment of the risks of my own backup solution of course has a MUCH lower threshold for striking out controls based on cost. I'm a hobbyist, after all; this whole thing doesn't generate money, it only takes it. Most notably I don’t have any full offsite backups, which leaves me vulnerable to near-total data loss in the unlikely event of a house fire or someone breaking in and just picking up and leaving with the whole lot. The problem with defending against either of these scenarios with a “proper” 3-2-1 backup strategy is that the first server already cost me enough; I don’t want to go investing almost the same amount into a second one to stick somewhere else! And paying any cloud provider to host terabytes is no friendlier on the wallet.
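For anyone unfamiliar with it, the 3-2-1 rule mentioned above (at least three copies of the data, on at least two different types of media, with at least one copy offsite) fits in a few lines of Python. This is just an illustrative sketch, not the tooling behind my setup: the `Copy` record and the location/media labels are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of a dataset (hypothetical model, for illustration only)."""
    location: str   # e.g. "desktop", "server", "cloud-provider"
    media: str      # e.g. "ssd", "hdd", "cloud"
    offsite: bool   # True if the copy lives away from home


def meets_3_2_1(copies: list[Copy]) -> bool:
    """Return True if the copies satisfy 3-2-1: >= 3 copies, >= 2 media types, >= 1 offsite."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.media for c in copies}) >= 2
    has_offsite = any(c.offsite for c in copies)
    return enough_copies and enough_media and has_offsite


# A home-only setup: two copies, nothing offsite.
home_only = [Copy("desktop", "ssd", False), Copy("server", "hdd", False)]
# The same setup plus one copy at a cloud provider.
with_cloud = home_only + [Copy("cloud-provider", "cloud", True)]

print(meets_3_2_1(home_only))
print(meets_3_2_1(with_cloud))
```

The home-only list fails on both the copy count and the offsite requirement. Adding a single cloud copy satisfies all three conditions, which is roughly what happens for my category 3 files.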

There’s also the issue of airgaps, which is something enterprises need to think about but I do not have any desire to entertain. If a bad actor were to infiltrate my network in such a way that gave them root access to the server hosting all my data I would have no ability to restore from a ransomware attack. Of course this scenario is very unlikely, I’m already doing a lot to mitigate the risk of a cyber attack because running my services securely doesn’t incur additional costs (just additional time, which does mean I haven’t implemented everything possible, just enough to be comfortable there are no glaring holes), but it’s still something I am conscious of when running something which is exposed (in a small way) to the internet. Cybersecurity is also a whole separate but interesting topic that I’m by no means an expert in but enjoy putting into practise (unlike BATTERIES. God. What is wrong with electrical engineers (I say this with love, I work with many of them)).

In conclusion, coming back to how this relates to my dreamcatcher images blog, you can rest assured that my collection of rare recordings is about as safe as my collection of rare albums is, in that, barring a large scale disaster, they should be safe as long as I want to keep them. Which is hopefully going to be a very long time indeed, because I don’t just enjoy the process I also enjoy the content I’m preserving. But the average person probably doesn’t need to put the same level of effort into archiving — Google and Microsoft’s cloud services have much more redundancy than a home setup could ever achieve and can hold all the essentials (like the backup of the Minecraft server on which you met your oldest friends, for example).

Azure Data Engineering Tools For Data Engineers

Azure is Microsoft's cloud computing platform, and it offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that cater to the unique requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.
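Stream Analytics jobs are written in a SQL-like query language that includes windowing functions such as tumbling windows. The Python below is not Stream Analytics code. It only illustrates what a tumbling-window aggregation does, run over a finite list of hypothetical `(timestamp, device_id)` events. A real streaming engine processes events continuously and also has to deal with late and out-of-order arrivals.

```python
from collections import defaultdict
from typing import Iterable


def tumbling_window_counts(
    events: Iterable[tuple[float, str]], window_seconds: float
) -> dict[tuple[int, str], int]:
    """Count events per device in fixed-size, non-overlapping (tumbling) windows.

    Each event is a hypothetical (timestamp_in_seconds, device_id) pair.
    Returns {(window_index, device_id): count}.
    """
    counts: dict[tuple[int, str], int] = defaultdict(int)
    for timestamp, device_id in events:
        # Every event falls into exactly one window: [k*size, (k+1)*size)
        window_index = int(timestamp // window_seconds)
        counts[(window_index, device_id)] += 1
    return dict(counts)


events = [
    (0.5, "sensor-a"),
    (3.2, "sensor-a"),
    (4.9, "sensor-b"),
    (5.1, "sensor-a"),
    (9.9, "sensor-b"),
]
print(tumbling_window_counts(events, window_seconds=5))
```

Because the windows don't overlap, each event is counted exactly once. That is the property that separates tumbling windows from hopping or sliding windows.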

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, distributed database service with a serverless option. It supports multiple APIs, including NoSQL, MongoDB, Apache Cassandra, and PostgreSQL. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB integrates with Azure Web Apps and supports popular open-source frameworks and applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed, open-source database service designed to let teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers built-in security, AI-driven performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training

"EMPOWERMENT TECHNOLOGIES"

TRENDS IN ICT

1. CONVERGENCE

-Technological convergence is the combination of two or more different technologies to create a single new device.

2. SOCIAL MEDIA

-is a website, application, or online channel that enables web users to create, co-create, discuss, modify, and exchange user-generated content.

SIX TYPES OF SOCIAL MEDIA:

a. SOCIAL NETWORKS

- These are sites that allow you to connect with other people who share your interests or background. Once users create an account, they can set up a profile, add people, share content, etc.

b. BOOKMARKING SITES

- Sites that allow you to store and manage links to various websites and resources. Most of these sites allow you to tag links and share them with others.

c. SOCIAL NEWS

– Sites that allow users to post their own news items or links to other news sources. Users can also comment on posts, and comments may also be ranked.

d. MEDIA SHARING

– sites that allow you to upload and share media content like images, music and video.

e. MICROBLOGGING

- Sites that focus on short updates from the user. Users who subscribe to the poster receive these updates.

f. BLOGS AND FORUMS

- Sites that allow users to post content, which other users can then comment on.

3. MOBILE TECHNOLOGIES

- The popularity of smartphones and tablets has risen sharply over the years, largely because these devices can do tasks that were originally done only on PCs. Many of these devices can use high-speed internet. Most current devices support 4G (LTE) networking, and newer models also support 5G.

MOBILE OS

•iOS

- Used in Apple devices such as the iPhone and iPad.

•ANDROID

- An open-source OS developed by Google. Being open source means mobile phone companies can use this OS for free.

•BLACKBERRY OS

- Used in BlackBerry devices.

•WINDOWS PHONE OS

- A closed source and proprietary operating system developed by Microsoft.

= Symbian - the original smartphone OS; used by Nokia devices.

= webOS - originally used in smartphones; now used in smart TVs.

= Windows Mobile - developed by Microsoft for smartphones and Pocket PCs.

4. ASSISTIVE MEDIA

- A non-profit service designed to help people who have visual and reading impairments. A database of audio recordings is used to read to the user.

CLOUD COMPUTING

- Distributed computing on the internet, or the delivery of computing services over the internet, e.g. Yahoo!, Gmail, Hotmail.

-Instead of running an e-mail program on your computer, you log in to a web e-mail account remotely. The software and storage for your account don’t exist on your computer; they’re on the service’s computer cloud.

It has three components:

1. Client computers

– Clients are the devices that end users use to interact with the cloud.

2. Distributed Servers

– Servers are often in geographically different places, but they act as if they were working next to each other.

3. Datacenters

– A collection of servers where applications are hosted and accessed via the Internet.

TYPES OF CLOUDS

PUBLIC CLOUD

-Allows systems and services to be easily accessible to the general public. A public cloud may be less secure because of its openness, e.g. e-mail.

PRIVATE CLOUD

-allows systems and services to be accessible within an organization. It offers increased security because of its private nature.

COMMUNITY CLOUD

- Allows systems and services to be accessible by a group of organizations.

HYBRID CLOUD

-A mixture of public and private cloud: critical activities are performed using the private cloud, while non-critical activities are performed using the public cloud.

—Khaysvelle C. Taborada

#TrendsinICT

#ICT

#EmpowermentTechnologies

Internet Solutions: A Comprehensive Comparison of AWS, Azure, and Zimcom

When it comes to finding a managed cloud services provider, businesses often turn to the industry giants: Amazon Web Services (AWS) and Microsoft Azure. These tech powerhouses offer highly adaptable platforms with a wide range of services. However, the question that frequently perplexes businesses is, "Which platform truly offers the best value for internet solutions?" Surprisingly, the answer may not lie with either of them. It is essential to recognize that AWS, Azure, and even Google are not the only options available for secure cloud hosting.

In this article, we will conduct a comprehensive comparison of AWS, Azure, and Zimcom, with a particular focus on pricing and support systems for internet solutions.

Pricing Structure: AWS vs. Azure for Internet Solutions

AWS for Internet Solutions:

AWS is renowned for its complex pricing system, primarily due to the extensive range of services and pricing options it offers for internet solutions. Prices depend on the resources used, their types, and the operational region. For example, AWS's compute service, EC2, provides on-demand, reserved, and spot pricing models. Additionally, AWS offers a free tier that allows new customers to experiment with select services for a year. Despite its complexity, AWS's granular pricing model empowers businesses to tailor services precisely to their unique internet solution requirements.
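A quick cost comparison makes the difference between the EC2 pricing models easier to see. The hourly rates below are made-up placeholders, not real EC2 prices, and 730 hours per month is a common approximation (8,760 hours a year divided by 12). Keep in mind that reserved pricing requires a term commitment and that AWS can reclaim spot capacity at short notice.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months


def monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running one instance for `hours` at `hourly_rate`, rounded to cents."""
    return round(hourly_rate * hours, 2)


# Hypothetical hourly rates for a single instance type; NOT real AWS prices.
rates = {
    "on-demand": 0.10,
    "reserved (1-year)": 0.065,
    "spot": 0.03,
}

for model, rate in rates.items():
    print(f"{model:>18}: ${monthly_cost(rate):.2f}/month")
```

With these placeholder rates, the gap between on-demand and spot is more than threefold. That is why spot capacity is usually reserved for work that can tolerate interruption.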

Azure for Internet Solutions:

Microsoft Azure's pricing structure is generally considered more straightforward for internet solutions. Similar to AWS, it follows a pay-as-you-go model and charges based on resource consumption. However, Azure's pricing is closely integrated with Microsoft's software ecosystem, especially for businesses that extensively utilize Microsoft software.

For enterprise customers seeking internet solutions, Azure offers the Azure Hybrid Benefit, enabling the use of existing on-premises Windows Server and SQL Server licenses on the Azure platform, resulting in significant cost savings. Azure also provides a cost management tool that assists users in budgeting and forecasting their cloud expenses.

Transparent Pricing with Zimcom’s Managed Cloud Services for Internet Solutions:

Do you fully understand your cloud bill from AWS or Azure when considering internet solutions? Hidden costs in their invoices might lead you to pay for unnecessary services.

At Zimcom, we prioritize transparent and straightforward billing practices for internet solutions. Our cloud migration and hosting services not only offer 30-50% more cost-efficiency for internet solutions but also outperform competing solutions.

In conclusion, while AWS and Azure hold prominent positions in the managed cloud services market for internet solutions, it is crucial to consider alternatives such as Zimcom. By comparing pricing structures and support systems for internet solutions, businesses can make well-informed decisions that align with their specific requirements. Zimcom stands out as a compelling choice for secure cloud hosting and internet solutions, thanks to its unwavering commitment to transparent pricing and cost-efficiency.

In-Memory Computing Market Size, Share, Trends, Growth Opportunities and Competitive Outlook

Executive Summary: In-Memory Computing Market

Global in-memory computing market size was valued at USD 30.43 billion in 2023 and is projected to reach USD 170.09 billion by 2031, with a CAGR of 24.00% during the forecast period of 2024 to 2031.
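These headline figures are internally consistent. The compound annual growth rate is CAGR = (end / start)^(1 / years) - 1. Compounding USD 30.43 billion at 24% a year over the eight years from 2024 through 2031 gives roughly USD 170.09 billion. A short check (the function names are just for illustration):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures from the summary above (USD billions): 2023 value -> 2031 value.
implied = cagr(30.43, 170.09, years=8)  # 2024 through 2031 = 8 compounding years
print(f"Implied CAGR: {implied:.2%}")
print(f"30.43 compounded at 24% for 8 years: {project(30.43, 0.24, 8):.2f}")
```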

The key factors discussed in the report will aid the buyer in studying the In-Memory Computing Market, including competitive landscape analysis of prime manufacturers, trends, opportunities, marketing strategies, market effect factors, and consumer needs by major region, type, and application, considering the past, present, and future state of the industry. The competitive analysis in this report makes you aware of the moves of the key players in the market, such as new product launches, expansions, agreements, joint ventures, partnerships, and acquisitions. The report also includes detailed profiles of the In-Memory Computing Market’s major manufacturers and importers who are influencing the market.

In-Memory Computing Market report not only provides knowledge and information about all the recent developments, product launches, joint ventures, mergers and acquisitions by the several key players and brands but also acts as a synopsis of market definition, classifications, and market trends. Estimations about the rise or fall of the CAGR value for specific forecast period, market drivers, market restraints, and competitive strategies are evaluated in the report. The In-Memory Computing Market report gives details about market trends, future prospects, market restraints, leading market drivers, several market segments, key developments, key players in the market, and competitor strategies.

Discover the latest trends, growth opportunities, and strategic insights in our comprehensive In-Memory Computing Market report. Download Full Report: https://www.databridgemarketresearch.com/reports/global-in-memory-computing-market

In-Memory Computing Market Overview

**Segments**

- Based on component, the Global In-Memory Computing Market can be segmented into solutions and services. The solutions segment can further be categorized into in-memory data management and in-memory application platforms. The services segment includes consulting, implementation, and support and maintenance services.
- On the basis of organization size, the market is divided into small and medium-sized enterprises (SMEs) and large enterprises. In-memory computing solutions are implemented by both SMEs and large enterprises to boost their operational efficiency and achieve real-time data processing.
- In terms of deployment mode, the market can be segmented into on-premises and cloud-based deployment. The cloud-based deployment model is gaining popularity due to its flexibility, scalability, and cost-effectiveness.
- By vertical, the in-memory computing market is segmented into BFSI, healthcare, retail, manufacturing, IT and telecom, government, and others. The BFSI sector is a major contributor to the market growth as in-memory computing helps financial institutions in real-time risk management and fraud detection.

**Market Players**

- Some of the key market players in the Global In-Memory Computing Market include SAP SE, Oracle, IBM, GigaSpaces, Hazelcast, GridGain Systems, TIBCO Software, Software AG, and Microsoft Corporation. These companies are focusing on product innovations, strategic partnerships, and acquisitions to enhance their market presence and expand their customer base.
- Other notable players in the market are Intel Corporation, VMware, Altibase Corporation, MemVerge, Exasol, and ScaleOut Software. These players are actively investing in research and development activities to introduce advanced in-memory computing solutions that cater to the evolving needs of organizations across different industry verticals.

In the Global In-Memory Computing Market, one emerging trend is the growing adoption of in-memory computing solutions for data analytics and business intelligence. Organizations across various sectors are leveraging in-memory computing technology to gain real-time insights from their data, enabling them to make informed decisions quickly and stay ahead in today's competitive landscape. This trend is driven by the increasing need for faster processing speeds and improved performance in handling large volumes of data. By utilizing in-memory computing solutions, businesses can eliminate data latency issues and enhance their overall operational efficiency.

Another key development in the market is the rising demand for in-memory computing in the healthcare sector. With the healthcare industry generating massive amounts of data from electronic health records, medical imaging, and patient monitoring systems, there is a growing need for real-time data processing and analysis to improve patient care and support clinical decision-making. In-memory computing solutions enable healthcare providers to access and analyze critical information instantaneously, leading to better patient outcomes, reduced costs, and increased operational efficiency within healthcare facilities.

Furthermore, the integration of in-memory computing technology with artificial intelligence (AI) and machine learning (ML) capabilities is set to drive significant advancements in the market. By combining in-memory computing with AI and ML algorithms, organizations can enhance their data processing capabilities, automate decision-making processes, and unlock valuable insights from complex datasets. This convergence of technologies opens up new opportunities for businesses to extract actionable intelligence from their data, optimize operations, and drive innovation across various industry verticals.

Additionally, the market is witnessing a surge in strategic collaborations and partnerships among key players to accelerate product development and expand their global footprint. Companies are joining forces to integrate complementary technologies, enhance interoperability, and deliver comprehensive in-memory computing solutions that address the diverse needs of customers. These partnerships enable market players to leverage each other's strengths, access new markets, and create synergies that drive innovation and drive market growth.

Overall, the Global In-Memory Computing Market is poised for substantial growth driven by the increasing demand for real-time data processing, the rising adoption of in-memory computing across diverse industry verticals, and the convergence of technologies like AI and ML. As organizations continue to prioritize data-driven decision-making and digital transformation initiatives, in-memory computing solutions will play a pivotal role in enabling enterprises to unlock the full potential of their data assets, drive business agility, and stay competitive in today's fast-paced digital economy.

One significant aspect impacting the Global In-Memory Computing Market is the increasing focus on real-time data processing capabilities across various industry verticals. Organizations are recognizing the need to harness the power of in-memory computing solutions to analyze data instantaneously, enabling them to make timely and informed decisions that can drive operational efficiency and competitive advantage. The ability to access and process large volumes of data in real-time is becoming a crucial differentiator for businesses looking to stay ahead in the rapidly evolving digital landscape. As a result, the demand for in-memory computing technologies is expected to witness steady growth as more companies seek to leverage these solutions for enhanced data analytics and business intelligence applications.

Moreover, the integration of in-memory computing with advanced technologies such as artificial intelligence (AI) and machine learning (ML) is poised to revolutionize the market further. By combining in-memory computing capabilities with AI and ML algorithms, organizations can unlock deeper insights from their data, automate decision-making processes, and drive innovative solutions across various sectors. The synergy created by merging these technologies offers businesses a competitive edge by enabling them to extract actionable intelligence, optimize operations, and drive transformative changes in how data is processed and utilized. This convergence presents a wealth of opportunities for market players to develop innovative solutions that cater to the evolving needs of businesses across different industries, fueling further growth and advancement in the in-memory computing market.

Additionally, the healthcare sector's increasing adoption of in-memory computing solutions for real-time data processing and analysis represents a significant growth opportunity in the market. With the healthcare industry generating vast amounts of data from various sources, the ability to access and analyze this information instantly can vastly improve patient care, enhance clinical decision-making, and drive operational efficiencies within healthcare organizations. In-memory computing technologies offer healthcare providers the capability to extract critical insights from complex datasets rapidly, leading to better patient outcomes, cost savings, and overall improvements in the quality of care delivered. This trend is expected to drive substantial growth in the adoption of in-memory computing solutions within the healthcare sector, creating new avenues for market players to innovate and expand their presence in this burgeoning market segment.

In conclusion, the Global In-Memory Computing Market is undergoing significant transformations driven by the increasing demand for real-time data processing, the integration of advanced technologies like AI and ML, and the expanding adoption of in-memory computing solutions across diverse industry verticals. Organizations are embracing these technologies to unlock the full potential of their data assets, gain competitive advantages, and drive business growth in a data-driven digital economy. As the market continues to evolve, market players have the opportunity to capitalize on these trends, innovate new solutions, and establish strong footholds in the rapidly expanding in-memory computing landscape.

The In-Memory Computing Market is highly fragmented, featuring intense competition among both global and regional players striving for market share. Learn how global trends are shaping the future of the top 10 companies in the In-Memory Computing Market.

Learn More Now: https://www.databridgemarketresearch.com/reports/global-in-memory-computing-market/companies

DBMR Nucleus: Powering Insights, Strategy & Growth

DBMR Nucleus is a dynamic, AI-powered business intelligence platform designed to revolutionize the way organizations access and interpret market data. Developed by Data Bridge Market Research, Nucleus integrates cutting-edge analytics with intuitive dashboards to deliver real-time insights across industries. From tracking market trends and competitive landscapes to uncovering growth opportunities, the platform enables strategic decision-making backed by data-driven evidence. Whether you're a startup or an enterprise, DBMR Nucleus equips you with the tools to stay ahead of the curve and fuel long-term success.

Influence of the In-Memory Computing Market Report:

Comprehensive assessment of all opportunities and risks in the In-Memory Computing Market

Recent innovations and major events in the In-Memory Computing Market

Detailed study of the business strategies of the In-Memory Computing Market’s leading players

Conclusive study of the growth trajectory of the In-Memory Computing Market in the coming years

In-depth understanding of In-Memory Computing Market-specific drivers, constraints, and major micro markets

Insight into vital technological developments and the latest trends shaping the In-Memory Computing Market

About Data Bridge Market Research:

An absolute way to forecast what the future holds is to comprehend the trend today!

Data Bridge Market Research has established itself as an unconventional and neoteric market research and consulting firm with an unparalleled level of resilience and integrated approaches. We are determined to unearth the best market opportunities and foster efficient information for your business to thrive in the market. Data Bridge endeavors to provide appropriate solutions to complex business challenges and to make decision-making effortless. Data Bridge is the product of sheer wisdom and experience, and it was founded in 2015 in Pune.

Contact Us: Data Bridge Market Research
US: +1 614 591 3140
UK: +44 845 154 9652
APAC: +653 1251 975
Email: [email protected]

Tag: In-Memory Computing, In-Memory Computing Size, In-Memory Computing Share, In-Memory Computing Growth

Integrating Edge-&-Cloud Hosting Services For Smart Business Solutions

Smart business solutions help your business succeed in today's market. For certain business solutions, combining multiple service types becomes necessary. Your choice affects bandwidth, cost, latency, automation, and data processing.

For a successful business, it is important to opt for integrated hybrid hosting solutions.

Look for a Virtual Private Server Provider in Nigeria that provides hybrid hosting solutions: cloud and edge computing.

Before you implement anything, calculate your hosting needs.

Understanding hosting service roles: edge vs. cloud

Smooth business operations depend on the right hosting services. Select hosting services based on the resources available to your business (Google Cloud, AWS, etc.), and choose centralized or decentralized hosting based on your needs.

You can try a hybrid approach that uses both edge and cloud. This helps reduce latency. Hybrid services are also easy to scale with data-processing volume and timing. Consider integrating both services into your business model.

Smart solution choice

Some businesses need real-time data processing and monitoring. Depending on the business model, you may need edge hosting, cloud hosting, or a hybrid of both. In retail, for example, you can select edge hosting if you offer POS solutions.

Look for cloud or Edge Computing in Nigeria depending on your business model. If you have a business model that relates to health care services, smart cities, retail services, or smart factories, then hybrid solutions are more effective.

Perfect hybrid structure

Some systems assign tasks based on factors such as data-processing volume or latency requirements. Edge computing offers real-time processing close to where data is generated, while cloud services are best when large volumes of data need virtual storage space.

In such conditions, a hybrid structure is more effective. Implementing the right model keeps the business running smoothly. Decide which workloads belong on each type based on your needs.
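The task-assignment idea above can be sketched as a small routing rule. This is a minimal illustration, not a feature of any particular hosting platform: the `Task` fields, the `route` function, and both thresholds are hypothetical values chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; tune them for your workload.
LATENCY_BUDGET_MS = 50      # tasks that must respond faster than this stay at the edge
EDGE_MAX_PAYLOAD_MB = 100   # larger data volumes go to cloud storage and processing


@dataclass
class Task:
    name: str
    max_latency_ms: float  # how quickly the task must respond
    payload_mb: float      # how much data the task processes or stores


def route(task: Task) -> str:
    """Return 'edge' or 'cloud' for a task using a simple two-factor rule."""
    if task.payload_mb > EDGE_MAX_PAYLOAD_MB:
        return "cloud"  # large volumes need elastic cloud storage
    if task.max_latency_ms <= LATENCY_BUDGET_MS:
        return "edge"   # real-time work runs close to the data source
    return "cloud"      # everything else defaults to centralized hosting


tasks = [
    Task("pos-checkout", max_latency_ms=20, payload_mb=0.1),
    Task("nightly-sales-report", max_latency_ms=60_000, payload_mb=5_000),
    Task("sensor-alarm", max_latency_ms=10, payload_mb=1),
]
for t in tasks:
    print(t.name, "->", route(t))
```

Checking payload size first means a large job goes to the cloud even when it is latency-sensitive; a real system might instead split such a job, preprocessing at the edge and sending summaries to the cloud.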

Data synchronization

Many business models depend on both hosting types. Real-time data synchronization makes a difference, and it has to be bidirectional: changes made at the edge must reach the cloud, and vice versa. The Managed Cloud Hosting Platform in Nigeria you choose should support this.

Bidirectional sync enables real-time communication between staff and clients. Strong security practices are also important: look for VPNs, TLS encryption, and zero-trust network access (ZTNA). Alerts for latency spikes or failures matter too. Focus on these features when selecting a hybrid hosting model for your business.
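One common way to make synchronization bidirectional is a last-writer-wins merge, where each record carries a timestamp and both sides keep the newest version. The sketch below assumes that strategy (the post does not name one) and uses hypothetical in-memory dictionaries in place of real edge and cloud data stores.

```python
from typing import Dict, Tuple

# Each store maps key -> (value, timestamp). Timestamps are assumed to come from
# synchronized clocks; this is an illustration, not a production sync engine.
Store = Dict[str, Tuple[str, float]]


def sync(edge: Store, cloud: Store) -> None:
    """Merge two stores in place so both end up with the newest value per key."""
    for key in set(edge) | set(cloud):
        e = edge.get(key)
        c = cloud.get(key)
        if e is None:
            edge[key] = c           # record only exists in the cloud: copy it down
        elif c is None:
            cloud[key] = e          # record only exists at the edge: copy it up
        else:
            newest = e if e[1] >= c[1] else c  # ties keep the edge copy
            edge[key] = cloud[key] = newest


edge_store = {"price": ("10", 1.0), "stock": ("5", 3.0)}
cloud_store = {"price": ("12", 2.0), "promo": ("on", 1.5)}
sync(edge_store, cloud_store)
print(edge_store == cloud_store, edge_store["price"])
```

Last-writer-wins depends on trustworthy clocks and silently discards the older of two concurrent edits; systems that cannot tolerate that loss usually track versions or merge conflicting fields instead.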

For more information, you can visit our website https://www.layer3.cloud/ or call us at 09094529373

#Virtual Private Server Provider in Nigeria#Managed Cloud Hosting Platform in Nigeria#Edge Computing in Nigeria


Cloud Cost Optimization Strategies Every CTO Should Know in 2025

As organizations scale in the cloud, one challenge becomes increasingly clear: managing and optimizing cloud costs. With the promise of scalability and flexibility comes the risk of unexpected expenses, idle resources, and inefficient spending.

In 2025, cloud cost optimization is no longer just a financial concern—it’s a strategic imperative for CTOs aiming to drive innovation without draining budgets. In this blog, we’ll explore proven strategies every CTO should know to control cloud expenses while maintaining performance and agility.

🧾 The Cost Optimization Challenge in the Cloud

The cloud offers a pay-as-you-go model, which is ideal—if you’re disciplined. However, most companies face challenges like:

Overprovisioned virtual machines

Unused storage or idle databases

Redundant services running in the background

Poor visibility into cloud usage across teams

Limited automation of cost governance

These inefficiencies lead to cloud waste, which by some estimates consumes 30–40% of a company's monthly cloud budget.

🛠️ Core Strategies for Cloud Cost Optimization

1. 📉 Right-Sizing Resources

Regularly analyze actual usage of compute and storage resources to downsize over-provisioned assets. Choose instance types or container configurations that match your workload’s true needs.
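As an illustration of right-sizing, the sketch below takes CPU usage samples for one VM, computes the 95th percentile, adds headroom, and picks the cheapest instance type that still fits. The catalog names, vCPU counts, and prices are made up for the example; substitute your provider's real instance types and rates.

```python
# Hypothetical instance catalog: name -> (vCPUs, hourly price in USD).
# Real names and prices vary by provider and region.
CATALOG = {
    "small": (2, 0.05),
    "medium": (4, 0.10),
    "large": (8, 0.20),
    "xlarge": (16, 0.40),
}


def p95(samples):
    """95th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), avoids float rounding
    return ordered[rank - 1]


def right_size(vcpu_samples, headroom=0.2):
    """Pick the cheapest type whose vCPUs cover p95 usage plus headroom."""
    needed = p95(vcpu_samples) * (1 + headroom)
    fits = [(price, name) for name, (vcpus, price) in CATALOG.items() if vcpus >= needed]
    if not fits:
        raise ValueError("no instance type is large enough")
    return min(fits)[1]  # cheapest fitting type


# vCPUs actually used by a VM that might currently run on "xlarge"
usage = [1.0, 1.5, 2.0, 2.5, 3.0, 1.0, 1.2, 1.4, 1.6, 1.8]
print(right_size(usage))
```

Sizing to a high percentile rather than the absolute peak avoids paying for rare spikes, and the headroom factor (20% here, an arbitrary choice) absorbs growth. Memory, network, and disk limits would need the same treatment in practice.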

2. ⏱️ Use Auto-Scaling and Scheduling

Enable auto-scaling to adjust resource allocation based on demand. Implement scheduling scripts or policies to shut down dev/test environments during off-hours.
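Both halves of this strategy can be expressed as small rules. `desired_replicas` follows the shape of the Kubernetes Horizontal Pod Autoscaler formula, `ceil(currentReplicas * currentMetric / targetMetric)`, clamped to configured bounds (the real HPA also applies a tolerance band, omitted here). `dev_env_should_run` is a hypothetical office-hours check that a scheduled job could use to stop dev/test environments; the days and hours are assumptions.

```python
import math
from datetime import datetime


def desired_replicas(current_replicas, current_metric, target_metric, min_r=1, max_r=10):
    """Target-tracking scaling: grow or shrink in proportion to metric / target."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_r, min(max_r, desired))  # respect min/max bounds


# Hypothetical office hours for dev/test environments (Mon-Fri, 08:00-18:59 local time).
WORK_DAYS = range(0, 5)   # Monday=0 ... Friday=4, as returned by datetime.weekday()
WORK_HOURS = range(8, 19)


def dev_env_should_run(now: datetime) -> bool:
    """True only during office hours; a scheduler would stop the environment otherwise."""
    return now.weekday() in WORK_DAYS and now.hour in WORK_HOURS


# 4 replicas at 90% CPU with a 60% target -> scale out to 6
print(desired_replicas(4, current_metric=90, target_metric=60))
print(dev_env_should_run(datetime(2025, 1, 4, 10)))  # a Saturday morning
```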

3. 📦 Leverage Reserved Instances and Savings Plans

For predictable workloads, commit to Reserved Instances (RIs) or Savings Plans. These options can reduce costs by up to 70% compared to on-demand pricing.
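To decide whether a commitment pays off, compare what one instance costs on demand at its real utilization with what a commitment costs when billed for every hour. The sketch below uses a simplified model and hypothetical hourly rates; actual discounts depend on term length, payment option, region, and instance family.

```python
HOURS_PER_MONTH = 730  # common billing approximation


def monthly_costs(on_demand_hourly, committed_hourly, utilization):
    """Return (on-demand cost, committed cost) for one instance over a month.

    utilization: fraction of the month the instance actually runs (0..1).
    On-demand bills only for hours used; the commitment bills every hour.
    """
    on_demand = on_demand_hourly * HOURS_PER_MONTH * utilization
    committed = committed_hourly * HOURS_PER_MONTH
    return on_demand, committed


def break_even_utilization(on_demand_hourly, committed_hourly):
    """Utilization above which the commitment is cheaper than on-demand."""
    return committed_hourly / on_demand_hourly


# Illustrative rates: $0.10/h on demand vs. $0.06/h committed
print(break_even_utilization(0.10, 0.06))
print(monthly_costs(0.10, 0.06, utilization=1.0))
```

With these illustrative rates the commitment only wins once the instance runs more than 60% of the month, which is why this option suits predictable, always-on workloads.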

4. 🚫 Eliminate Orphaned Resources

Track down unused volumes, unattached IPs, idle load balancers, or stopped instances that still incur charges.
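Two common orphans on AWS are EBS volumes in the `available` state (not attached to any instance) and Elastic IP addresses with no association. The sketch below filters response dictionaries shaped like those returned by the boto3 EC2 client's `describe_volumes` and `describe_addresses` calls, so it can be tried without an AWS account; the IDs in the sample data are made up, and a production version would also handle pagination and other regions.

```python
def orphaned_volumes(describe_volumes_response):
    """EBS volumes in the 'available' state are not attached to any instance."""
    return [
        v["VolumeId"]
        for v in describe_volumes_response.get("Volumes", [])
        if v.get("State") == "available"
    ]


def unassociated_eips(describe_addresses_response):
    """Elastic IPs without an AssociationId are allocated but not in use."""
    return [
        a.get("AllocationId", a.get("PublicIp"))
        for a in describe_addresses_response.get("Addresses", [])
        if "AssociationId" not in a
    ]


# Sample data with made-up IDs, shaped like the boto3 responses
volumes = {"Volumes": [
    {"VolumeId": "vol-0example1", "State": "available", "Attachments": []},
    {"VolumeId": "vol-0example2", "State": "in-use",
     "Attachments": [{"InstanceId": "i-0example"}]},
]}
addresses = {"Addresses": [
    {"PublicIp": "203.0.113.10", "AllocationId": "eipalloc-0example1"},
    {"PublicIp": "203.0.113.11", "AllocationId": "eipalloc-0example2",
     "AssociationId": "eipassoc-0example"},
]}
print(orphaned_volumes(volumes), unassociated_eips(addresses))
```

Against a real account, the same functions could be fed the output of `boto3.client("ec2").describe_volumes()` and `describe_addresses()`; review the results before deleting anything, since a detached volume may be kept deliberately.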

5. 💼 Centralized Cost Management

Use tools like AWS Cost Explorer, Azure Cost Management, or Google Cloud Billing reports to monitor, allocate, and forecast cloud spend. Consolidate billing across accounts for better control.
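The forecasting these tools provide can be approximated, very roughly, with a run-rate projection: average daily spend so far, multiplied by the number of days in the month. This is a deliberately naive sketch, assuming `spend_to_date` covers the month through the end of `today`; the built-in forecasts in the tools named above are generally more sophisticated.

```python
import calendar
from datetime import date


def month_end_forecast(spend_to_date: float, today: date) -> float:
    """Naive run-rate forecast: average daily spend so far x days in the month."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    daily_rate = spend_to_date / today.day
    return daily_rate * days_in_month


# $1,000 spent in the first 10 days of April (30 days) -> about $3,000 for the month
print(month_end_forecast(1000.0, date(2025, 4, 10)))
```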

🔐 Governance and Cost Policies

✅ Tag Everything

Apply consistent tagging (e.g., environment:dev, owner:teamA) to group and track costs effectively.
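Once resources carry consistent tags, costs can be rolled up by any tag key. The sketch below assumes a hypothetical list of billing line items, each with a `cost` and a `tags` dictionary (real billing exports use their own column names), and sums spend per tag value, putting anything untagged in its own bucket so gaps in tagging stay visible.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key):
    """Sum cost per value of one tag; items missing the tag land in 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        value = item.get("tags", {}).get(tag_key, "untagged")
        totals[value] += item["cost"]
    return dict(totals)


# Hypothetical line items using the tag scheme above
items = [
    {"cost": 120.0, "tags": {"environment": "dev", "owner": "teamA"}},
    {"cost": 300.0, "tags": {"environment": "prod", "owner": "teamA"}},
    {"cost": 45.5, "tags": {}},
]
print(cost_by_tag(items, "owner"))
print(cost_by_tag(items, "environment"))
```

The same grouping answers the cost-allocation questions below (spend per team, application, or business unit), provided the corresponding tag is applied consistently.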

✅ Set Budgets and Alerts

Configure budget thresholds and set up alerts when approaching limits. Enable anomaly detection for cost spikes.
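Threshold alerts and anomaly detection can be sketched as two small checks. The threshold fractions and the z-score rule below are illustrative assumptions; managed services such as AWS Budgets and AWS Cost Anomaly Detection provide this out of the box and do not necessarily work this way.

```python
from statistics import mean, stdev


def budget_alerts(spend, budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the thresholds (as fractions of budget) that spend has crossed."""
    return [t for t in thresholds if spend >= t * budget]


def is_anomaly(history, today, z=3.0):
    """Flag today's spend if it is more than z standard deviations above the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today > mu  # flat history: any increase stands out
    return (today - mu) / sigma > z


daily_spend = [100, 102, 98, 101, 99]
print(budget_alerts(850, 1000))      # 50% and 80% thresholds crossed
print(is_anomaly(daily_spend, 150))  # sudden spike
```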

✅ Enforce Role-Based Access Control (RBAC)

Restrict who can provision expensive resources. Apply cost guardrails via service control policies (SCPs).
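As an example of such a guardrail, the following builds an AWS Organizations SCP that denies `ec2:RunInstances` for any instance type outside an allow-list, using the `ec2:InstanceType` condition key. The allow-list is hypothetical, and the policy is shown as a Python dictionary serialized to JSON for consistency with the other examples; review it against the AWS documentation before attaching it to an organizational unit.

```python
import json

# Hypothetical allow-list of inexpensive instance types; adjust for your organization.
ALLOWED_INSTANCE_TYPES = ["t3.micro", "t3.small", "t3.medium"]

scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyUnapprovedInstanceTypes",
            "Effect": "Deny",
            "Action": "ec2:RunInstances",
            "Resource": "arn:aws:ec2:*:*:instance/*",
            # Deny when the requested type matches none of the allowed values
            "Condition": {
                "StringNotEquals": {"ec2:InstanceType": ALLOWED_INSTANCE_TYPES}
            },
        }
    ],
}

print(json.dumps(scp, indent=2))
```

An SCP only sets the maximum permissions for the accounts it applies to and grants nothing by itself, so users still need IAM permissions to launch instances; the SCP narrows what those permissions can do.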

✅ Use Cost Allocation Reports

Assign and report costs by team, application, or business unit to drive accountability.

📊 Tools to Empower Cost Optimization

Here are some top tools every CTO should consider integrating:

Salzen Cloud: Offers unified dashboards, usage insights, and AI-based optimization recommendations

CloudHealth by VMware: Cost governance, forecasting, and optimization in multi-cloud setups

Apptio Cloudability: Cloud financial management platform for enterprise-level cost allocation

Kubecost: Cost visibility and insights for Kubernetes environments

AWS Trusted Advisor / Azure Advisor / GCP Recommender: Native cloud tools to recommend cost-saving actions

🧠 Advanced Tips for 2025

🔁 Adopt FinOps Culture

Build a cross-functional team (engineering + finance + ops) to drive cloud financial accountability. Make cost discussions part of sprint planning and retrospectives.

☁️ Optimize Multi-Cloud and Hybrid Environments

Use abstraction and management layers to compare pricing models and shift workloads to more cost-effective providers.

🔄 Automate with Infrastructure as Code (IaC)

Define auto-scaling, backup, and shutdown schedules in code. Automation reduces human error and enforces consistency.

🚀 How Salzen Cloud Helps

At Salzen Cloud, we help CTOs and engineering leaders:

Monitor multi-cloud usage in real-time

Identify idle resources and right-size infrastructure

Predict usage trends with AI/ML-based models

Set cost thresholds and auto-trigger alerts

Automate cost-saving actions through CI/CD pipelines and Infrastructure as Code

With Salzen Cloud, optimization is not a one-time event—it’s a continuous, intelligent process integrated into every stage of the cloud lifecycle.

✅ Final Thoughts

Cloud cost optimization is not just about cutting expenses—it's about maximizing value. With the right tools, practices, and mindset, CTOs can strike the perfect balance between performance, scalability, and efficiency.

In 2025 and beyond, the most successful cloud leaders will be those who innovate smartly—without overspending.


Cloud computing

Cloud computing is transforming the way businesses and individuals store, access, and manage data. By delivering computing services over the internet, including storage, servers, databases, software, and analytics, cloud computing offers flexibility, scalability, and cost efficiency. Explore the different types of cloud models (public, private, hybrid), their advantages, real-world applications, and how the cloud is reshaping the future of technology: https://digitalboost.lol/cloud-computing-2025-powering-the-digital-age/


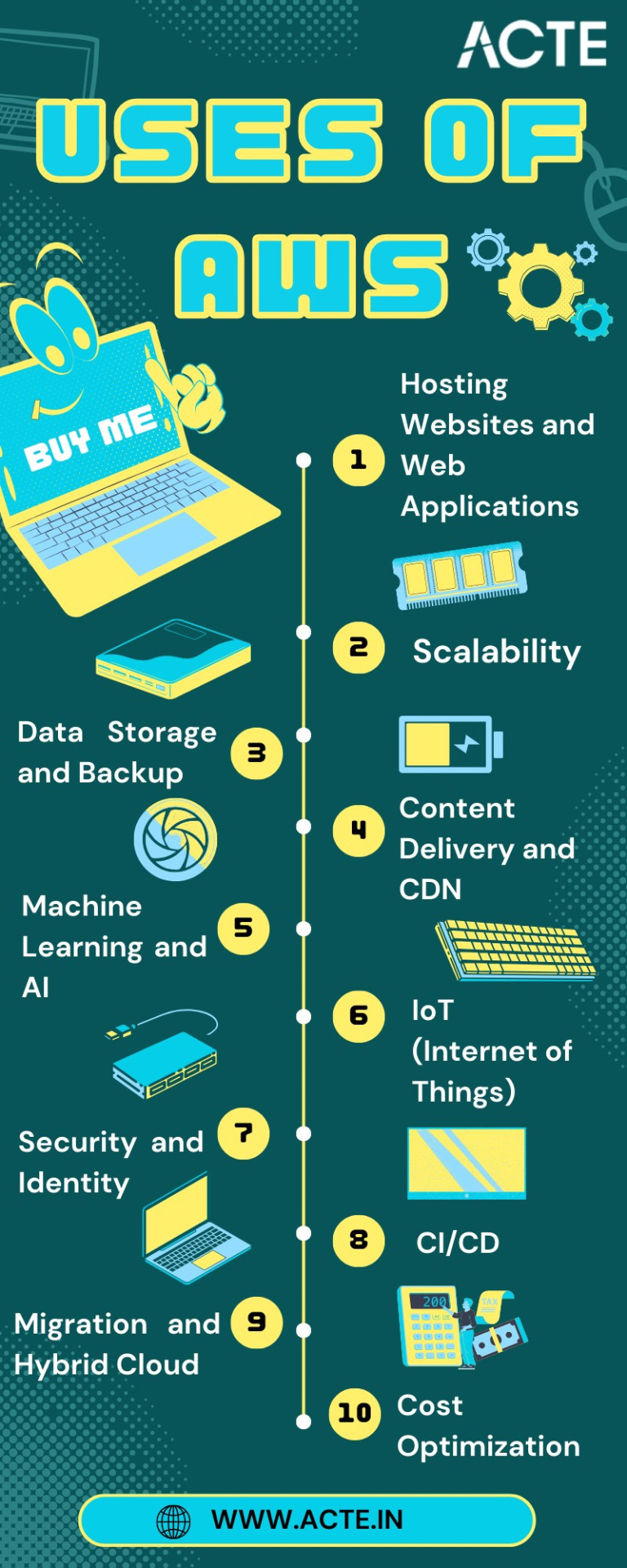

Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the potential of AWS. We'll take a journey through the AWS universe, exploring its many applications and seeing why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this resource will light the path to mastering AWS and using its capabilities for innovation and growth. Join us as we demystify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable place for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 let businesses deploy and manage their online presence with high reliability and performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including Amazon S3 and Amazon EBS. These services cater to a wide range of data types and are designed for high durability and availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, serves content from edge locations around the world, reducing latency and speeding up data transfer. This gives users a consistent experience regardless of their geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: At the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: With a strong focus on data security, AWS offers security features and identity management through AWS Identity and Access Management (IAM). Administrators get fine-grained control over who can perform which actions on which resources, helping protect data from unauthorized access.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.
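The fine-grained access control described under Security and Identity rests on a simple evaluation rule: an explicit Deny always wins, a matching Allow grants access, and anything not allowed is implicitly denied. The sketch below models only that rule for action names with `*` wildcards; it ignores resources, conditions, and the other policy types AWS combines, so treat it as an illustration rather than a policy simulator.

```python
from fnmatch import fnmatchcase


def evaluate(statements, action):
    """Simplified IAM-style decision for one action (resources and conditions ignored).

    An explicit Deny always wins; otherwise a matching Allow grants access;
    if nothing matches, the request is implicitly denied.
    """
    action = action.lower()  # IAM action names are case-insensitive
    matched_allow = False
    for stmt in statements:
        actions = stmt["Action"]
        if isinstance(actions, str):
            actions = [actions]
        if any(fnmatchcase(action, pattern.lower()) for pattern in actions):
            if stmt["Effect"] == "Deny":
                return "Deny"  # explicit deny overrides any allow
            matched_allow = True
    return "Allow" if matched_allow else "ImplicitDeny"


# Hypothetical policy: read S3 objects, but never read their ACLs
policy = [
    {"Effect": "Allow", "Action": "s3:Get*"},
    {"Effect": "Deny", "Action": ["s3:GetObjectAcl"]},
]
for a in ["s3:GetObject", "s3:GetObjectAcl", "s3:PutObject"]:
    print(a, evaluate(policy, a))
```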

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet, AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in empowering individuals to master the AWS course. Through comprehensive training and education, learners are not merely equipped with knowledge; they are forged into skilled professionals ready to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.
