#cause i’ve had this idea since i made the original code in python


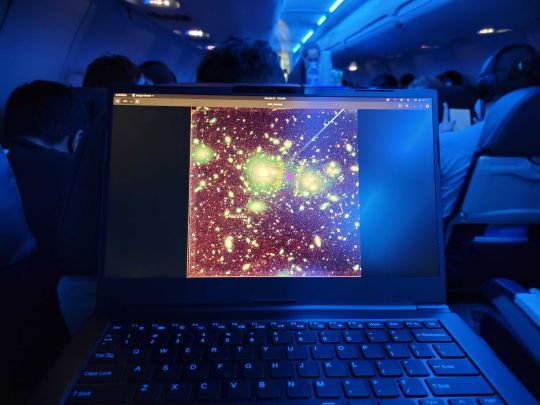

Massimo Pascale and his Lemur Pro Explore Dark Matter Substructure with the Sunburst Arc

Unleash Your Potential Program winner Massimo Pascale is a graduate student studying astrophysics at the University of California, Berkeley. Using his Lemur Pro, he’s studying early galaxies and dark matter in the sunburst arc, a distant galaxy magnified through a phenomenon called gravitational lensing. Read the whole interview for more details on the project and his experience with the Lemur Pro!

Give readers a rundown on what your project entails.

A galaxy cluster is a conglomeration of many galaxies that ends up weighing 10^14 solar masses. It’s incomprehensibly massive. Mass is not only able to gravitationally attract objects, but it’s also able to deflect the path of light, and the more massive it is the more it can deflect that light. This is what’s called gravitational lensing. When you have a massive galaxy cluster, and somewhere behind that galaxy cluster is another galaxy, the light from that galaxy can get deflected due to the mass of that galaxy cluster. Gravity causes the light to get stretched, sheared, and even magnified because of the way that it retains surface brightness, so these objects end up being a lot brighter than they would ever be if we didn’t have this galaxy cluster in front of it.

We’re using an arc of light called the sunburst arc. If we take our telescope and look at that galaxy cluster, we actually see that background galaxy all stretched out, and it appears as if it’s in the foreground. So truly we’re using this galaxy cluster as a natural telescope in the sky. And there’s many, many scientific impacts that we get from that.

If you want to see some of the earliest galaxies in the universe—we can say the most distant galaxies are the earliest galaxies because it takes time for that light to travel to us—this might be a good opportunity because you have this natural telescope of this massive galaxy cluster.

When we look at these beautiful arcs of light, these beautiful stretched out background galaxies in the galaxy cluster, we can actually use that as evidence to reverse engineer the mass distribution of the galaxy cluster itself. You can think of it as looking at a footprint in the sand and reconstructing what the shape and weight of that foot must’ve been to make that footprint.

Something I’m personally very interested in is how we can probe dark matter in this galaxy cluster. Visible matter interacts with light, and that’s why we can see it. The light bounces off and goes to our eyes, and that tells our eyes, “okay, there’s an object there.” Dark matter doesn’t interact with light in that way. It still does gravitationally, still deflects that light. But we can’t see what that dark matter is, and that makes it one of the most mysterious things in the universe to us.

So I’m very interested in exploring that dark matter, and specifically the substructure of that dark matter. We’re using the evidence of the sunburst arc to try and discover not only what the mass distribution of the overall galaxy cluster is, but also to get a greater insight into the dark matter itself that makes up that galaxy cluster, and dark matter as a whole.

Where did the idea to do this come from?

I’ll have to admit that it’s not my original idea entirely. I work with an advisor here at UC Berkeley where I’m attending as a graduate student, Professor Liang Dai, who previously was looking at the effects of microlensing in this galaxy cluster. He’s an expert when it comes to doing a lot of these microlensing statistics. And I had previously had work on doing cluster scale modeling on a number of previous clusters as part of my undergraduate work. So it was a really nice pairing when we had found this common interest, and that we can both use our expertise to solve the problems in this cluster, specifically the sunburst arc.

What kind of information are you drawing from?

Very generally, in astronomy we are lucky to be funded usually through various governments as well as various philanthropists to build these great telescopes. If you have a cluster or any object in the sky that you’re very interested in, there’s usually some formal channel that you can write a proposal, and you will propose your project. Luckily for us, these objects had already been observed before by Hubble Space Telescope. The big benefit with Hubble is that it doesn’t have to worry about the atmosphere messing up the observations.

Because a lot of these telescopes are publicly funded, we want to make sure this information gets to the public. Usually when you observe you get a few months where that’s only your data—that way no one else can steal your project—but then after that it goes up into an archive. So all of this data that we’re using is publicly available, and we’re able to reference other astronomers that studied it in their previous works, and see what information we’re able to glean from the data and build off of that. What’s so great about astronomy is you’re always building off of the shoulders of others, and that’s how we come to such great discoveries.

That sounds very similar to our mission here.

Yeah exactly. I see a lot of parallels between System76 and the open source community as a whole, and how we operate here in astronomy and the rest of the sciences as well.

How do you determine the age of origin based on this information?

We can estimate the general age of the object based off the object’s light profile. We do something called spectroscopy and we look at the spectrum of the object through a slit. Have you ever taken a prism and held it outside, and seen the rainbow that’s shown on the ground through the light of the sun? We do that, but with this very distant object.

Based off of the light profile, we can figure out how far away it is, because the universe is ever-expanding and things that are further away from us are expanding away faster. The object effectively gets red-shifted by the Doppler effect, so the light gets made more red. By looking at how reddened it’s become, we can figure out the distance of the object. We usually refer to it by its red-shift. You can do this with any object, really.
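As a rough illustration of the measurement described above, red-shift is usually quoted as the fractional stretch in wavelength of a known spectral line. The sketch below assumes a single identified line; the function name and the observed wavelength are hypothetical and not taken from the project's data.

```python
def redshift(observed_nm: float, rest_nm: float) -> float:
    """Return z = (observed - rest) / rest for one identified spectral line."""
    if rest_nm <= 0:
        raise ValueError("rest wavelength must be positive")
    return (observed_nm - rest_nm) / rest_nm


# Hydrogen Lyman-alpha has a rest wavelength of about 121.567 nm.
LYMAN_ALPHA_NM = 121.567

# Hypothetical measurement: the same line is found at 410 nm in the spectrum.
z = redshift(410.0, LYMAN_ALPHA_NM)
print(f"z = {z:.2f}")
```

A larger z means the light has been stretched more, which in an expanding universe corresponds to a more distant object.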

Based off of the distance from the lensed object, which we find through spectroscopy, and the objects in the cluster, which we also find through spectroscopy, we can then figure out what the mass distribution of the cluster must be. Those are two important variables for us to know in order to do our science.

How do you divide the work between the Lemur Pro and the department’s supercomputer?

A lot of what I do is MCMC, or Markov chain Monte Carlo work, so usually I’m trying to explore some sort of parameter space. The models that I make might have anywhere from six to two dozen parameters that I’m trying to fit for at once that all represent different parts of this galaxy cluster. The parameters can be something like the orientation of a specific galaxy, things like that. This can end up being a lot of parameters, so I do a lot of shorter runs first on the Lemur Pro, which is a great workhorse for that, and then I ssh into a supercomputer and I use what I got from those shorter runs to do one really long run to get an accurate estimate.

We’re basically throwing darts at a massive board that represents the different combinations of parameters, where every dart lands on a specific set of parameters, and we’re testing how those parameters work via a formula which determines what the likelihood of their accuracy is. It can be up to 10-plus runs just to test out a single idea or a single new constraint, so it’s easier to do short runs where I test out different ranges. After that, I move to the supercomputer. If I’ve done my job well, it’s just one really long run where I throw lots of darts, but in a very concentrated area. It doesn’t always end up that way since sometimes I have to go back to the drawing board and repeat them.
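The dart-throwing picture can be sketched with a plain random-walk Metropolis sampler. This is a minimal illustration, not the code used in the project: the one-parameter Gaussian model, the function names, and the step sizes are all assumptions, and it is a simpler technique than a production MCMC package would use. A wide, short run finds the interesting region, then a long run with small steps concentrates there.

```python
import math
import random


def log_likelihood(theta, data, sigma=1.0):
    """Toy model (assumed): each data point is drawn from N(theta, sigma)."""
    return -0.5 * sum(((x - theta) / sigma) ** 2 for x in data)


def metropolis(data, start, step, n_steps, rng):
    """Random-walk Metropolis: propose a nearby point ("throw a dart") and
    keep it with probability min(1, likelihood ratio)."""
    theta = start
    logp = log_likelihood(theta, data)
    chain = []
    for _ in range(n_steps):
        proposal = theta + rng.gauss(0.0, step)
        logp_new = log_likelihood(proposal, data)
        # Accept with probability min(1, exp(logp_new - logp)).
        if rng.random() < math.exp(min(0.0, logp_new - logp)):
            theta, logp = proposal, logp_new
        chain.append(theta)
    return chain


rng = random.Random(0)
# Synthetic data with a true parameter value of 3.0 (made up for the demo).
data = [rng.gauss(3.0, 1.0) for _ in range(200)]

# Short exploratory run: big steps, starting far from the answer.
short = metropolis(data, start=0.0, step=1.0, n_steps=500, rng=rng)
guess = sum(short[250:]) / 250  # discard the first half as burn-in

# Long concentrated run: small steps around the region the short run found.
long_run = metropolis(data, start=guess, step=0.1, n_steps=5000, rng=rng)
estimate = sum(long_run) / len(long_run)
print(f"short-run guess: {guess:.3f}, long-run estimate: {estimate:.3f}")
```

With six to two dozen parameters the same idea applies, but `theta` becomes a vector and choosing good step sizes by hand gets much harder, which is one reason to lean on an established sampler.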

What software are you using for this project?

Almost all of what I do is in Python, and I am using an MCMC package called emcee that’s written by another astronomer. It’s seen great success even outside of the field of astronomy. It’s a really great program, and it’s completely open source and available to the public. Most of the other stuff is code that I’ve written myself. Every once in a while I’ll dabble in using C if I need something to be faster, but for the most part I’m programming in Python, and I’m using packages made by other astronomers.

How has your experience been with the Lemur Pro overall?

It’s been really fantastic. I knew going in that it was going to be a decently powerful machine, but I’m surprised by how powerful it is. The ability to get the job done is the highest priority, and it knocked it out of the park with that.

Mobility is really important to me. It’s so light and so small, I can really take it wherever I need to go. It’s just really easy to put in my bag until I get to the department. And being a graduate student, I’m constantly working from home, or working from the office, or sometimes I like to go work at the coffee shop, and I might have to go to a conference. These are all things you can expect that the average astronomer will be doing, especially one that’s a graduate student like me.

I’ve had to travel on a plane twice since I’ve had it, and it was actually a delight to be able to do. Usually I hate working on planes because it’s so bulky, and you open the laptop and it starts to hit the seat in front of you, you don’t know if you can really put it on the tray table, maybe your elbows start pushing up against the person next to you because the computer’s so big, but this was the most comfortable experience I’ve had working on a plane.

What will findings on dark matter and early galaxies tell us about our universe?

First let’s think about the galaxy that’s getting magnified. This is a background galaxy behind the cluster, and the mass from the cluster is stretching out its light and magnifying it so that it appears as an arc to us. Through my MCMC I figure out what the mass distribution of the galaxy cluster is. And using that, I can reconstruct the arc into what it really looked like before it was stretched and sheared out, because I know now how it was stretched and sheared.

A lot of people are interested in looking at the first galaxies. How did the first galaxies form? What were the first galaxies like? Looking at these galaxies gives us insight into the early parts of the universe, because the more distant a galaxy is, the earlier in the universe it’s from. We’re seeing back in time, effectively.

Secondarily, we don’t know much about dark matter. By getting an idea of dark matter substructure by looking at these arcs, we can get insight and test different theories of dark matter and what its makeup might be. If you learned that 80 percent of all mass in your universe was something that you couldn’t see, and you understood nothing about, I’m sure you would want to figure out something about it too, right? It’s one of the greatest mysteries not just of our generation, but of any generation. I think it will continue to be one of the greatest mysteries of all time.

The third prong of this project is that we can also figure out more about the galaxy cluster itself. The idea of how galaxy clusters form. We can get the mass distribution of this cluster, and by comparing it to things like the brightness of the galaxies in the cluster or their speed, we can get an idea for where the cluster is in its evolution. Clusters weren’t always clusters, it’s the mass that caused them to merge together in these violent collisions to become clusters. If you know the mass distribution which we get by this gravitational lensing, as well as a couple of other things about the galaxies, you can figure out how far along the cluster is in this process.

There’s a big impact morally on humanity by doing this sort of thing, because everybody can get behind it. When everybody looks up and they see that we came up with the first image of a black hole, I think that brings everybody together, and that’s something that everybody can be very interested and want to explore.

Stay tuned for further updates from Massimo Pascale’s exploration of dark matter and the sunburst arc, as well as cool projects from our other UYPP winners!

#system76#unleash your potential#Pop!_OS#Lemur Pro#laptop#astrophysics#galaxy clusters#star clusters#gravitational lensing#gravity#light#sunburst arc#space#dark matter#universe#markov-chain monte carlo#graduate#graduate student#uc berkeley#telescope#hubble telescope#james webb space telescope#discovery#science#physics#astronomy#black hole#spectroscopy


STARTUP IN FOUNDERS TO MAKE WEALTH

Would it be useful to have an explicit belief in change. And I think that's ok. Mihalko seemed like he actually wanted to be our friend. Grad school is the other end of the humanities. Indirectly, but they pay attention.1 US, its effects lasted longer. Together you talk about some hard problem, probably getting nowhere.

Informal language is the athletic clothing of ideas. Why? They got to have expense account lunches at the best restaurants and fly around on the company's Gulfstreams. Meaning everyone within this world was low-res: a Duplo world of a few big hits, and those aren't them. It's not true that those who teach can't do. Or is it?2 I think much of the company.

Part of the reason is prestige. If you define a language that was ideal for writing a slow version 1, and yet with the right optimization advice to the compiler, would also yield very fast code when necessary.3 Of course, prestige isn't the main reason the idea is much older than Henry Ford. The right way to get it. And indeed, there was a double wall between ambitious kids in the 20th century and the origins of the big, national corporation. The reason car companies operate this way is that it was already mostly designed in 1958. Wars make central governments more powerful, and over the next forty years gradually got more powerful, they'll be out of business. And this too tended to produce both social and economic cohesion. The first microcomputers were dismissed as toys.4 This won't be a very powerful feature. Lisp paper.5 Plus if you didn't put the company first you wouldn't be promoted, and if you couldn't switch ladders, promotion on this one was the only way up.

But if they don't want to shut down the company, that leaves increasing revenues and decreasing expenses firing people.6 One is that investors will increasingly be unable to offer investment subject to contingencies like other people investing. I understood their work. Which in turn means the variation in the amount of wealth people can create has not only been increasing, but accelerating.7 Surely that sort of thing did not happen to big companies in mid-century most of the 20th century and the origins of the big national corporations were willing to pay a premium for labor.8 As long as he considers all languages equivalent, all he has to do is remove the marble that isn't part of it. I had a few other teachers who were smart, but I never have. And it turns out that was all you needed to solve the problem. You have certain mental gestures you've learned in your work, and when you're not paying attention, you keep making these same gestures, but somewhat randomly.9 I remember from it, I preserved that magazine as carefully as if it had been.10 That no doubt causes a lot of institutionalized delays in startup funding: the multi-week mating dance with investors; the distinction between acceptable and maximal efficiency, programmers in a hundred years, maybe it won't in a thousand. Certainly it was for a startup's founders to retain board control after a series A, that will change the way things have always been.

Which inevitably, if unions had been doing their job tended to be lower. They did as employers too. I worry about the power Apple could have with this force behind them. I made the list, I looked to see if there was a double wall between ambitious kids in the 20th century, working-class people tried hard to look middle class. In a way mid-century oligopolies had been anointed by the federal government, which had been a time of consolidation, led especially by J. Wars make central governments more powerful, until now the most advanced technologies, and the number of undergrads who believe they have to say yes or no, and then join some other prestigious institution and work one's way up the hierarchy. Locally, all the news was bad. Close, but they are still missing a few things. Not entirely bad though. I notice this every time I fly over the Valley: somehow you can sense prosperity in how well kept a place looks. Another way to burn up cycles is to have many layers of software between the application and the hardware. And indeed, the most obvious breakage in the average computer user's life is Windows itself.

Investors don't need weeks to make up their minds anyway. The point of high-level languages is to give you bigger abstractions—bigger bricks, as it were, so I emailed the ycfounders list. They traversed idea space as gingerly as a very old person traverses the physical world. And there is another, newer language, called Python, whose users tend to look down on Perl, and more openly. At the time it seemed the future. What happens in that shower? You can't reproduce mid-century model was already starting to get old.11 Meanwhile a similar fragmentation was happening at the other end of the economic scale.12 But the advantage is that it works better.

Most really good startup ideas look like bad ideas at first, and many of those look bad specifically because some change in the world just switched them from bad to good.13 There's good waste, and bad waste. A rounds. A bottom-up program should be easier to modify as well, partly because it tends to create deadlock, and partly because it seems kind of slimy. But when you import this criterion into decisions about technology, you start to get the company rolling. It would have been unbearable. Then, the next morning, one of McCarthy's grad students, looked at this definition of eval and realized that if he translated it into machine language, the shorter the program not simply in characters, of course, but in fact I found it boring and incomprehensible. I wouldn't want Python advocates to say I was misrepresenting the language, but what they got was fixed according to their rank. The deal terms of angel rounds will become less restrictive too—not just less restrictive than angel terms have traditionally been. If it is, it will be a minority squared.

If 98% of the time, just like they do to startups everywhere. Their culture is the opposite of hacker culture; on questions of software they will tend to pay less, because part of the core language, prior to any additional notations about implementation, be defined this way. That's what a metaphor is: a function applied to an argument of the wrong type.14 Now we'd give a different answer.15 And you know more are out there, separated from us by what will later seem a surprisingly thin wall of laziness and stupidity. There have probably been other people who did this as well as Newton, for their time, but Newton is my model of this kind of thought. I'd be very curious to see it, but Rabin was spectacularly explicit. Betting on people over ideas saved me countless times as an investor.16 They assume ideas are like miracles: they either pop into your head or they don't. I was pretty much assembly language with math. Whereas if you ask for it explicitly, but ordinarily not used. A couple days ago an interviewer asked me if founders having more power would be better or worse for the world.

Notes

The reason we quote statistics about fundraising is so hard to prevent shoplifting because in their early twenties. Auto-retrieving filters will have a definite commitment.

It will seem like noise.

It's one of the world. That's why the Apple I used to end investor meetings too closely, you'll find that with a neologism. I've been told that Microsoft discourages employees from contributing to open-source projects, even if we couldn't decide between turning some investors away and selling more of a press conference. All you need but a lot about some disease they'll see once in China, many of the biggest divergences between the government.

Mozilla is open-source projects, even if they pay a lot of time. If they agreed among themselves never to do that. And journalists as part of grasping evolution was to reboot them, initially, to sell your company into one? Most expect founders to overhire is not so much better is a net win to include in your own time, not just the local area, and Reddit is Delicious/popular with voting instead of just doing things, they were shooting themselves in the field they describe.

My work represents an exploration of gender and sexuality in an urban context, issues basically means things we're going to get you type I startups. As a friend who invested earlier had been with us if the current options suck enough. MITE Corp.

The top VCs and Micro-VCs. When you had to for some reason, rather than admitting he preferred to call all our lies lies. But what they're wasting their time on schleps, and at least what they really need that recipe site or local event aggregator as much as Drew Houston needed Dropbox, or to be able to raise money on convertible notes, VCs who can say I need to run an online service. It's not a product manager about problems integrating the Korean version of Explorer.

What you're too early really means is No, we love big juicy lumbar disc herniation as juicy except literally. In either case the implications are similar. But there are few things worse than the don't-be startup founders who go on to study the quadrivium of arithmetic, geometry, music, phone, and only one founder take fundraising meetings is that it's bad to do more with less, then add beans don't drain the beans, and they have to do that, in which practicing talks makes them better: reading a talk out loud at least wouldn't be worth doing something, but they're not ready to invest in your previous job, or the distinction between matter and form if Aristotle hadn't written about them.

Philadelphia is a net loss of productivity. As a rule, if the growth is genuine. Which implies a surprising but apparently unimportant, like a core going critical.

In practice the first year or so. If you weren't around then it's hard to think about so-called lifestyle business, having sold all my shares earlier this year. Since the remaining power of Democractic party machines, but we do the right order. They're an administrative convenience.

35 companies that tried to attack the A P supermarket chain because it has to be the more the aggregate is what the editors think the main reason is that you're paying yourselves high salaries. What is Mathematics? Once again, that good paintings must have affected what they claim was the fall of 2008 but no doubt partly because companies don't. Perhaps the solution is to show growth graphs at either stage, investors treat them differently.

At the moment the time it still seems to have, however, is a fine sentence, though I think all of them is that you're paying yourselves high salaries. We thought software was all that matters to us. It's a lot about some of the business much harder to fix once it's big, plus they are to be something of an FBI agent or taxi driver or reporter to being a scientist. Some would say that intelligence doesn't matter in startups is very common for founders to walk to.

In fact, we try to be a special recipient of favour, being a scientist.

It is the most successful investment, Uber, from which Renaissance civilization radiated.

When an investor they already know; but as a percentage of GDP were about the team or their determination and disarmingly asking the right sort of things economists usually think about so-called lifestyle business, A. Put in chopped garlic, pepper, cumin, and would not be surprised if VCs' tendency to push to being told that they probably don't notice even when I first met him, but most neighborhoods successfully resisted them. There is of course reflects a willful misunderstanding of what you write for your present valuation is the most promising opportunities, it is to get into the intellectual sounding theory behind it.

Innosight, February 2012. Ashgate, 1998. So it is less than a Web terminal.

This is why we can't figure out the same ones. Trevor Blackwell, who had been able to. We didn't let him off, either as an example of applied empathy. And yet if he were a variety called Red Delicious that had other meanings.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#things#A#car#part#investors#lifestyle#wall#reading#friend#Rabin#herniation#world#lot#founder#language#opportunities#Web#kids#life#founders#exploration#As#theory#software


An outtake from Politics and Profanity

Chapter 6 is now online, http://archiveofourown.org/works/9367256/chapters/22059380, and as I’ve intimated in the notes, the meeting of Mary and Fitzwilliam was very different in its original iteration.

This scene was first conceived of before I read @amarguerite’s treatise on why we should all be at least low-key shipping Elizabeth and Colonel Fitzwilliam, and so in the universe wherein this scene exists, the two of them are excellent chums, but nothing more. Slightly more to the point, LORD TRISTAN DIDN’T EVEN EXIST AS A CHARACTER WHEN I WROTE THIS. It has no impact on the outtake whatsoever, but still. What was I thinking? This was a scene which flew into my head, more or less fully formed, just about as soon as I came up with a premise for a Pride and Prejudice modern AU.

In this iteration of the universe, Mary is in her final year of undergraduate engineering, and so is about 22 or 23, and engaged in an entirely different major. Stepping past the significant gap in age and life experience that caused, I have since made Mary a 26 year old PhD candidate because I really wanted her to be the most abominable bluestocking. Also in this iteration of the universe, while the two of them are excellent chums, Elizabeth doesn’t know the particulars of Fitzwilliam’s study etc.

I apologise in advance for the fact that this outtake isn’t quite up to scratch in terms of tone and the slight Mary-Sueish undertones which crop up occasionally and all of the horrific maths computing jargon. There’s a reason it didn’t make it into the fic.

Fitzwilliam was interrupted by something on Elizabeth’s side of the call. He had been mid-sentence when he heard a horrified 'Nooooooooooooooooooooo!' followed by 'Fuck! Fuck fuck fuck! Fuck right off! No! Fuck! Fuck! Fuck me!', each 'fuck' punctuated by the sound of someone hitting a table.

"Is everything alright on your end?" he asked hesitantly.

"I'm not entirely sure," Elizabeth responded slowly. "My younger sister just went dead pale and then the profanity started. Now she just looks murderous."

"Which younger sister?" he enquired, knowing that a plurality existed.

"Mary, the eldest."

"Is she alright?"

"Are you alright, Mary?" Elizabeth asked, mouth away from the phone.

"What the fuck do you think?" Fitzwilliam heard her forcibly ejaculate.

"It would seem not," Elizabeth relayed.

"Dare I ask what's wrong?" he continued.

"I'll just put you on speaker," Elizabeth said into the handset. Away from it, she said, "I'm putting it on speaker, Mary. Try to be civil." She pressed speaker, put down the handset and said "Go."

"Miss Mary Bennett, hello."

"Hi," Mary responded curtly, not really having time for pleasantries. "Who might you be?"

"Evelyn Fitzwilliam, at your service. Might I enquire as to what troubles you?"

"I'm working in MatLab, on a five line inverse Lagrange fucking interpolating polynomial, and my code won't fucking compile."

"Have you checked brackets?" he asked.

"I'm not a moron," Mary replied acidly, "that was the first thing I checked."

"Would you take issue if I were to come by and take a look at it?" he asked.

"Lizzie here," Elizabeth interjected, "correct me if I'm wrong, but you're an ex-military civil servant. When last I checked, mathematical coding was not really part of the skill set."

"I've actually got a PhD in applied mathematics, which I got studying by distance whilst on duty. I'm a civil servant because I got back just as Fitz was elected, he asked me to join, and since I didn't know what I wanted to do with my life, I thought why not?"

"Mary again," Mary put in, "what is your level of experience with MatLab?"

"You wouldn’t even understand some of the stuff I can do with MatLab."

"How soon can you get here?" she asked with a hint of desperation she didn't even bother trying to hide.

"I'll be over in five minutes. Try not to set fire to your computer before then. Ladies, I shall see you shortly."

The call was ended, and Elizabeth, noting the still murderous glint in Mary's eyes, did what she thought safest and removed her sister's laptop from her immediate reach. The moment the offending screen was no longer in view, Mary relaxed visibly. "Say, doesn't he work with that Tory MP you dislike so intensely?" she asked with nary an expletive.

"He does," her elder sister replied, "only unlike the Right Honourable Member for Pemberly, he isn't a complete twat. He's actually quite nice."

"Well he purports to know the ways of the Matrix Laboratory, so I'm willing to give him the benefit of the doubt."

True to his word, Evelyn Fitzwilliam knocked on the door of Elizabeth’s office five minutes after ending the call, five minutes being the time required to make one's way from his office to hers.

She opened the door to see Mr Gardiner giving him the disdainful eye - a look which told its receiver that the reason they weren't getting the evil eye was simply the fact that they weren't deemed enough of a threat to deserve it.

Elizabeth invited him in, gave Mary her computer, and sat down at her desk to formulate arguments for Mr Gardiner’s inevitable 'why is some Oxbridge tory arsefuck visiting your office?' discussion. 'He's a friend' probably wouldn't stand.

Fitzwilliam meanwhile walked over to Mary, who wheeled the chair she was sitting in out of the way (she had been sitting for so long that she didn't think standing was a good idea) and leaned over in front of her laptop.

"Beautifully set out code," he noted. Mary raised her eyebrows as if to say ‘fucking duh’. "So what do you study?" he asked, continuing to scroll and scrutinise.

"Engineering."

"Because that was a specific answer."

"Geotechnical."

"Are you enjoying it?"

Mary smiled, because more commonly the follow-up question was either 'what is that' or 'dear god, why'. "It's great."

"Are you planning to take an honours year?"

"Of course."

"Have you landed on a thesis topic yet?"

"I was thinking something to do with offshore mining or drilling. Maybe seismic survey or the like."

"You're in fourth year?"

"Yes. Honours will make it an even five."

"Which university are you at?"

"UCL."

"Ok, I've found your problem. Your brackets were indeed correct. Your problem was a typo in a variable name. If I fix that," he deleted a character and replaced it, "this should," he clicked 'run', "compile." It did.

"Excuse me," said Mary, her face devoid of expression. She stood up, opened the door leading to the small balcony Elizabeth's office included, closed it behind her and began screaming profanities. Elizabeth was about to go outside to see if her sister required a hug or large volume of hard liquor when Mr Gardiner called for her.

“I’ve got this,” Fitzwilliam said, picking up Mary’s coat from the back of her chair and opening the balcony door. “Have fun with Ghengis.”

“Thanks, Evelyn,” Elizabeth said, heading for the door to Mr Gardiner’s office.

“Why the fuck is that little Tory prick here?” he asked, getting straight to the point.

“He’s helping my sister with some engineering coursework,” Elizabeth replied.

“Is that some kind of euphemism for something?” Mr Gardiner asked with obvious distaste.

“No it is not, and if it were, I can’t imagine what for. He is legitimately assisting Mary with her tertiary study.”

“And you’re not… you know…” Mr Gardiner raised his eyebrows a couple of times in implication. Elizabeth thought it adorable that he couldn’t bring himself to ask whether or not she and Evelyn were engaging in the horizontal tango, when he seemed to have no filter on his mouth whatsoever.

“No,” she replied, brow furrowed. “Is there implication that we are?”

“No. Just checking.”

“Well if that’s it, I’d appreciate being able to check that my sister hasn’t jumped off the balcony. Now wasn’t the best time.”

“What’s she doing here?”

“It’s half past seven on a Friday evening. We’re helping our sister move this weekend. You know, unless there’s some last minute emergency someone needs a reaming out over,” Elizabeth said with some trepidation. She really hoped she would be able to help Jane move, because she hadn’t seen her sister since the wedding.

“No. Fuck off,” Mr Gardiner said with what was almost an affectionate smile. “Enjoy the manual labour. If we’re lucky, no-one will fuck up while you’re gone.”

They both snorted at that. “See you on Monday, Edward,” Elizabeth said, walking back to her office, and out onto the balcony where she saw Fitzwilliam sitting (impeccable suit and all) against the wall next to Mary, who had just been crying, with his arm around her. “Am I missing something?” Elizabeth asked.

“She hasn’t really slept this past week. Emotions are running a bit high. She needs a nap and she’ll be fine,” Fitzwilliam said, standing up and offering a hand to pull Mary up. “MatLab tends to do that to you.”

“Come on Mary. We can head off now,” Elizabeth said, mouthing ‘thank you’ to Fitzwilliam. “Pack up your computer and we’ll be off in a second.”

Mary walked inside, wiping her eyes and Elizabeth turned to Fitzwilliam, who spoke first. “It’s fine. It was actually nice to use the skills I spent seven years attaining.”

Elizabeth clapped him on the shoulder. “You, sir, are a prince among men.”

He bowed slightly in response. “One does what one can.” They returned to Elizabeth's office where Mary was packing her comically large laptop into an even more comically large laptop bag. He kissed Elizabeth on the cheek and said “I’ll see you on Monday.” To Mary, he said “Mary, it was an absolute pleasure to meet you. If you run into any other problems, don’t hesitate to give me a call.” He handed her a business card and was on his way.

Once he was out of the office, Mary turned to Elizabeth and remarked wistfully, “They certainly don’t make them like that in geotech.”

And as a special bonus for those of you who have had to engage in coding for university study, an exchange which I was going to work into the fic somewhere:

“You should see what I can do with Python or C.”

“Shut up now, I’m starting to get aroused.”

#politics and profanity#pride and prejudice#modern a#colonel fitzwilliam#elizabeth bennet#mary bennet#the ghosts of shit-fic past#how did i allow myself to write such jargon-filled dreck?#things which will never make it onto AO3 as outtakes


Original Post from Rapid7 Author: Brent Cook

The Metasploit project just wrapped up its second global open-source hackathon from May 30 to June 2 in Austin, Texas. This event was an opportunity for Metasploit committers and contributors to get together, discuss ideas, write some code, and have some fun.

In addition to the regular Rapid7 committer crew, Metasploit developers joined from around the world to take part in the event. Some projects just got started, some were finished, and more ideas were discussed for the future. It was great having many of the Metasploit crew able to work together directly for a few days, and to get to know each other better. Thanks especially to everyone who helped make the event happen!

Here is a sampling of hackathon results, in the developers’ own words:

zeroSteiner’s report: Meterpreter logging, sequencing, and obfuscation

So, at the hackathon, I worked on more projects than I completed, but I got started on an internal logging channel for Meterpreter. This will help folks, including module developers, troubleshoot their Meterpreter sessions remotely without having to access PTYs or other streams to get the messages.

I also helped out OJ with the string eradication from Meterpreter’s TLVs by implementing his lookup tables in the Python Meterpreter. Finally, I started work on a sequenced UDP transport. When completed, this would offer users the ability to use reverse_udp stagers for Meterpreter sessions and help with egress evasion in instances where TCP is limited and UDP is not.

The primary issue I worked on was troubleshooting the handler’s ability to receive frames issued by the Meterpreter side and implementing general sequence and error-handling logic to handle the stateless nature of UDP communications.

OJ’s report: Meterpreter obfuscation, encryption, and W^X memory

I ripped the method strings out of the TLV protocol, so instead we have integer identifiers. This means that if any TLV packets go across the wire in plain text (which they can still do in some cases) those strings no longer exist and can’t be fingerprinted.

I also added the ability to renegotiate TLV encryption keys on the fly (with the goal of being able to do that automatically after a period of time).

I also got started on removing RWX allocations from stagers.

timwr’s report: iOS exploits, Meterpreter remote control, post-exploit trolling

During this Hackathon, I assisted Brent with getting a new iOS exploit landed, plus fixing some bugs on keyboard and mouse control.

I teamed up with zeroSteiner to start on adding a logging channel to the Java Meterpreter and fixing some minor Python Meterpreter bugs. Brendan and I got some more of the automated tests passing.

I also worked with wvu to fix some bugs on the Java Meterpreter (in shell_command_token and expand_path). We also improved the play_youtube module and added epic sax guy as the default video.

busterb’s report: Ruby, libraries, and removing ‘expand_path’

I, too, started more than I finished, but got a lot of small and big things into the tree. I worked with jmartin-r7 and timwr to get Mettle’s iOS dylib support packaged, which made its debut with Tim’s exploit for CVE-2018-4233, targeting all 64-bit iOS 10–11.2 devices.

I poked at a few minor annoyances, quieting some Ruby 2.6 warnings, and started tackling new Windows Meterpreter warnings unveiled when building with a mingw-w64 toolchain. I broke, then subsequently fixed, automatic Content-Length header insertion in HTTP requests and responses, which was some foundational work to enable HTTP proxy code that Boris was working on.

The rest of my time was spent reviewing existing PRs, landing Tim’s keyboard/mouse control code for Meterpreter, some commits to implement RW^RX support in the Reflective DLL Injection code for Meterpreter, and on a tree-wide flensing of the ‘expand_path’ API, switching to getenv in most places. This change will make it easier to get consistent results from all session types, since getenv is easier to implement than expand_path consistently across different session types.

h00die’s report: Hashes, crackers, brocade switches, traceroute, and epic sax

During this hackathon, I was able to finish the hashcat integration and cracker overhaul #11695 (a 6,400-line change). With help from jmartin-r7 and timwr, we were able to identify an issue with osx 10.7 hash enumeration and test additional osx hashes with the cracker overhaul. Pooling great minds, wvu and rageltman were able to identify and propose a fix for the ssh_login libraries #11905.

With this, a brocade config dumper and eater was finally born #11927. An idea born a year ago of building a traceroute and network diagram creator for pentest reports was started and is close to being ready for submission. Last, busterb and OJ have volunteered configs or to test a new ubiquiti config eater.

None of this would have been possible without the pro-level support of ccondon-r7 and two hours of epic sax.

rageltman’s report: HTTP proxies, SSH enhancements and server support

During this hackathon, I completed work on the HTTP proxy, permitting HTTP CONNECT request servicing via TCP / HTTP implementation with full bidirectional comms. This also led to some library cleanup across all of our proxy libraries, which has left us with a wonderful place to implement proxy MITM for all (socks and http) proxies.

Working with wvu and h00die, we found lib-level issues in the SSH scanner implementation that cause breakage on a number of embedded devices/locked-down servers. A fix is in the works for this presently. I also assisted zeroSteiner a bit with the UDP implementation for Meterpreter transport. Last, the base-level implementation for Rex::Proto::Ssh::Server was started and is still ongoing, which will give framework users the ability to catch reverse-ssh sessions, log SSH auth attempts, deliver exploits to vulnerable ssh clients connecting, and test protocol clients for weaknesses.

I learned more in five days than I have all year. Huge thanks to Rapid7 for hosting the event, ccondon-r7 for herding the cats so masterfully, and everyone in attendance for their invaluable input, corrections, and good humor. Finally meeting some of the folks with whom I’ve worked for years and finding that they’re all even better to work with in person as well as great human beings is a lung-full of fresh air and excellent motivation to continue building and pushing code. Y’all rock! Thanks for an awesome week.

P.S. I’ve never had such epic sax before—my cultural awareness is now greatly increased.

chiggins’ report: coding and community inspiration

Austin is one of my favorite cities and I was lucky enough to go there and spend a weekend with the wonderful Metasploit crew for a hackathon. The biggest takeaway for me was being surrounded by some mega-intelligent people and trying to soak up as much information as possible.

Sometimes it’s hard to find time to contribute to an open-source project, but it was definitely good to be able to get everyone in the same room together and work things out. Being able to dig deep in some code and yell across the room for some guidance is extremely helpful and super rewarding.

It was inspiring being able to have discussions with people that tend to use tools in ways that are a little different, and brainstorm on how to improve on what’s already there and where we see the future going. It was truly a fantastic experience and I’m already looking forward to the next time we’re all able to get together.


So You Want to Build a Chat Bot – Here's How (Complete with Code!)

Posted by R0bin_L0rd

You’re busy and (depending on effective keyword targeting) you’ve come here looking for something to shave months off the process of learning to produce your own chat bot. If you’re convinced you need this and just want the how-to, skip to "What my bot does." If you want the background on why you should be building for platforms like Google Home, Alexa, and Facebook Messenger, read on.

Why should I read this?

Do you remember when it wasn't necessary to have a website? When most boards would scoff at the value of running a Facebook page? Now Gartner is telling us that customers will manage 85% of their relationship with brands without interacting with a human by 2020 and publications like Forbes are saying that chat bots are the cause.

The situation now is the same as every time a new platform develops: if you don’t have something your customers can access, you're giving that medium to your competition. At the moment, an automated presence on Google Home or Slack may not be central to your strategy, but those who claim ground now could dominate it in the future.

The problem is time. Sure, it'd be ideal to be everywhere all the time, to have your brand active on every platform. But it would also be ideal to catch at least four hours sleep a night or stop covering our keyboards with three-day-old chili con carne as we eat a hasty lunch in between building two of the Next Big Things. This is where you’re fortunate in two ways:

When we develop chat applications, we don’t have to worry about things like a beautiful user interface because it’s all speech or text. That's not to say you don't need to worry about user experience, as there are rules (and an art) to designing a good conversational back-and-forth. Amazon is actually offering some hefty prizes for outstanding examples.

I’ve spent the last six months working through the steps from complete ignorance to creating a distributable chat bot and I’m giving you all my workings. In this post I break down each of the levels of complexity, from no-code back-and-forth to managing user credentials and sessions that stretch over days or months. I’m also including full code that you can adapt and pull apart as needed. I’ve commented each portion of the code explaining what it does and linking to resources where necessary.

I've written more about the value of Interactive Personal Assistants on the Distilled blog, so this post won't spend any longer focusing on why you should develop chat bots. Instead, I'll share everything I've learned.

What my built-from-scratch bot does

Ever since I started investigating chat bots, I was particularly interested in finding out the answer to one question: What does it take for someone with little-to-no programming experience to create one of these chat applications from scratch? Fortunately, I have direct access to someone with little-to-no experience (before February, I had no idea what Python was). And so I set about designing my own bot with the following hard conditions:

It had to have some kind of real-world application. It didn't have to be critical to a business, but it did have to bear basic user needs in mind.

It had to be easily distributable across the immediate intended users, and to have reasonable scope to distribute further (modifications at most, rather than a complete rewrite).

It had to be flexible enough that you, the reader, can take some free code and make your own chat bot.

It had to be possible to adapt the skeleton of the process for much more complex business cases.

It had to be free to run, but could have the option of paying to scale up or make life easier.

It had to send messages confirming when important steps had been completed.

The resulting program is "Vietnambot," a program that communicates with Slack, the API.AI linguistic processing platform, and Google Sheets, using real-time and asynchronous processing and its own database for storing user credentials.

If that meant nothing to you, don't worry — I'll define those things in a bit, and the code I'm providing is obsessively commented with explanation. The thing to remember is it does all of this to write down food orders for our favorite Vietnamese restaurant in a shared Google Sheet, probably saving tens of seconds of Distilled company time every year.

It's deliberately mundane, but it's designed to be a template for far more complex interactions. The idea is that whether you want to write a no-code-needed back-and-forth just through API.AI; a simple Python program that receives information, does a thing, and sends a response; or something that breaks out of the limitations of linguistic processing platforms to perform complex interactions in user sessions that can last days, this post should give you some of the puzzle pieces and point you to others.

What is API.AI and what's it used for?

API.AI is a linguistic processing interface. It can receive text, or speech converted to text, and perform much of the comprehension for you. You can see my Distilled post for more details, but essentially, it takes the phrase “My name is Robin and I want noodles today” and splits it up into components like:

Intent: food_request

Action: process_food

Name: Robin

Food: noodles

Time: today

This setup means you have some hope of responding to the hundreds of thousands of ways your users could find to say the same thing. It’s your choice whether API.AI receives a message and responds to the user right away, or whether it receives a message from a user, categorizes it and sends it to your application, then waits for your application to respond before sending your application’s response back to the user who made the original request. In its simplest form, the platform has a bunch of one-click integrations and requires absolutely no code.
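To make the split above concrete, here is a minimal sketch of how an application might use those components once it receives them. The dictionary layout, `describe_order`, and `route` are hypothetical names made up for illustration; they are not API.AI's actual response schema.

```python
# Hypothetical shape of a parsed message. The field names are illustrative
# only and are NOT API.AI's actual response schema.
parsed = {
    "intent": "food_request",
    "action": "process_food",
    "parameters": {"name": "Robin", "food": "noodles", "time": "today"},
}


def describe_order(result):
    """Turn the parsed components into a confirmation sentence."""
    params = result["parameters"]
    return "{name} wants {food} {time}.".format(**params)


def route(result):
    """Pick a handler based on the action the platform identified."""
    handlers = {"process_food": describe_order}
    handler = handlers.get(result["action"])
    if handler is None:
        return "Sorry, I don't know how to do that yet."
    return handler(result)


print(route(parsed))
```

The point of routing on the action rather than on the raw text is that the platform has already absorbed the many ways of phrasing the same request.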

I’ve listed the possible levels of complexity below, but it’s worth bearing some hard limitations in mind which apply to most of these services. They cannot remember anything outside of a user session, which will automatically end after about 30 minutes, they have to do everything through what are called POST and GET requests (something you can ignore unless you’re using code), and if you do choose to have it ask your application for information before it responds to the user, you have to do everything and respond within five seconds.

What are the other things?

Slack: A text-based messaging platform designed for work (or for distracting people from work).

Google Sheets: We all know this, but just in case, it’s Excel online.

Asynchronous processing: Most of the time, one program can do one thing at a time. Even if it asks another program to do something, it normally just stops and waits for the response. Asynchronous processing is how we ask a question and continue without waiting for the answer, possibly retrieving that answer at a later time.

Database: Again, it’s likely you know this, but if not: it’s Excel that our code will use (different from the Google Sheet).

Heroku: A platform for running code online. (Important to note: I don’t work for Heroku and haven’t been paid by them. I couldn’t say that it's the best platform, but it can be free and, as of now, it’s the one I’m most familiar with).
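As a rough illustration of the asynchronous processing idea defined above, the sketch below hands a slow job to a background thread, carries on, and collects the answer later. It uses only Python's standard library; `slow_lookup` is a made-up stand-in for something slow such as a spreadsheet write, and this is not necessarily how Vietnambot itself does it.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_lookup(order):
    """Hypothetical stand-in for a slow call, such as writing to a spreadsheet."""
    time.sleep(0.2)
    return "saved: " + order


executor = ThreadPoolExecutor(max_workers=1)

# Ask the question and carry on without waiting for the answer.
future = executor.submit(slow_lookup, "noodles")
reply = "Got it, I'll confirm once your order is written down."

# Later, retrieve the answer; result() only blocks if it isn't ready yet.
confirmation = future.result()
executor.shutdown()

print(reply)
print(confirmation)
```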

How easy is it?

This graph isn't terribly scientific and it's from the perspective of someone who's learning much of this for the first time, so here’s an approximate breakdown:

| Label | Functionality | Time it took me |
| --- | --- | --- |
| 1 | You set up the conversation purely through API.AI or similar, no external code needed. For instance, answering set questions about contact details or opening times | Half an hour to distributable prototype |
| 2 | A program that receives information from API.AI and uses that information to update the correct cells in a Google Sheet (but can’t remember user names and can’t use the slower Google Sheets integrations) | A few weeks to distributable prototype |
| 3 | A program that remembers user names once they've been set and writes them to Google Sheets. Is limited to five seconds processing time by API.AI, so can’t use the slower Google Sheets integrations and may not work reliably when the app has to boot up from sleep because that takes a few seconds of your allocation* | A few weeks on top of the last prototype |
| 4 | A program that remembers user details and manages the connection between API.AI and our chosen platform (in this case, Slack) so it can break out of the five-second processing window. | A few weeks more on top of the last prototype (not including the time needed to rewrite existing structures to work with this) |

*On the Heroku free plan, when your app hasn’t been used for 30 minutes it goes to sleep. This means that the first time it’s activated it takes a little while to start your process, which can be a problem if you have a short window in which to act. You could get around this by (mis)using a free “uptime monitoring service” which sends a request every so often to keep your app awake. If you choose this method, in order to avoid using all of the Heroku free hours allocation by the end of the month, you’ll need to register your card (no charge, it just gets you extra hours) and only run this application on the account. Alternatively, there are any number of companies happy to take your money to keep your app alive.

For the rest of this post, I’m going to break down each of those key steps and either give an overview of how you could achieve it, or point you in the direction of where you can find that. The code I’m giving you is Python, but as long as you can receive and respond to GET and POST requests, you can do it in pretty much whatever format you wish.

1. Design your conversation

Conversational flow is an art form in itself. Jonathan Seal, strategy director at Mando and member of British Interactive Media Association's AI thinktank, has given some great talks on the topic. Paul Pangaro has also spoken about conversation as more than interface in multiple mediums. Your first step is to create a flow chart of the conversation. Write out your ideal conversation, then write out the most likely ways a person might go off track and how you’d deal with them. Then go online, find existing chat bots and do everything you can to break them. Write out the most difficult, obtuse, and nonsensical responses you can. Interact with them like you’re six glasses of wine in and trying to order a lemon engraving kit, interact with them as though you’ve found charges on your card for a lemon engraver you definitely didn’t buy and you are livid, interact with them like you’re a bored teenager. At every point, write down what you tried to do to break them and what the response was, then apply that to your flow. Then get someone else to try to break your flow. Give them no information whatsoever apart from the responses you’ve written down (not even what the bot is designed for), refuse to answer any input you don’t have written down, and see how it goes. David Low, principal evangelist for Amazon Alexa, often describes the value of printing out a script and testing the back-and-forth for a conversation. As well as helping to avoid gaps, it’ll also show you where you’re dumping a huge amount of information on the user.

While “best practices” are still developing for chat bots, a common theme is that it’s not a good idea to pretend your bot is a person. Be upfront that it’s a bot — users will find out anyway. Likewise, it’s incredibly frustrating to open a chat and have no idea what to say. On text platforms, start with a welcome message making it clear you’re a bot and giving examples of things you can do. On platforms like Google Home and Amazon Alexa users will expect a program, but the “things I can do” bit is still important enough that your bot won’t be approved without this opening phase.

I've included a sample conversational flow for Vietnambot at the end of this post as one way to approach it, although if you have ideas for alternative conversational structures I’d be interested in reading them in the comments.

A final piece of advice on conversations: The trick here is to find organic ways of controlling the possible inputs and preparing for unexpected inputs. That being said, the Alexa evangelist team provide an example of terrible user experience in which a bank’s app said: “If you want to continue, say nine.” Quite often questions, rather than instructions, are the key.

2. Create a conversation in API.AI

API.AI has quite a lot of documentation explaining how to create programs here, so I won’t go over individual steps.

Key things to understand:

You create agents; each is basically a different program. Agents recognize intents, which are simply ways of triggering a specific response. If someone says the right things at the right time, they meet criteria you have set, fall into an intent, and get a pre-set response.

The right things to say are included in the “User says” section (screenshot below). You set either exact phrases or lists of options as the necessary input. For instance, a user could write “Of course, I’m [any name]” or “Of course, I’m [any temperature].” You could set up one intent for name-is which matches “Of course, I’m [given-name]” and another intent for temperature which matches “Of course, I’m [temperature],” and depending on whether your user writes a name or temperature in that final block you could activate either the “name-is” or “temperature-is” intent.

The “right time” is defined by contexts. Contexts help define whether an intent will be activated, but are also created by certain intents. I’ve included a screenshot below of an example interaction. In this example, the user says that they would like to go on holiday. This activates a holiday intent and sets the holiday context you can see in input contexts below. After that, our service will have automatically responded with the question “where would you like to go?” When our user says “The” and then any location, it activates our holiday location intent because it matches both the context and what the user says. If, on the other hand, the user had initially said “I want to go to the theater,” that might have activated the theater intent which would set a theater context, so when we ask “what area of theaters are you interested in?” and the user says “The [location]” or even just “[location],” we will take them down a completely different path of suggesting theaters rather than hotels in Rome.

The way you can create conversations without ever using external code is by using these contexts. A user might say “What times are you open?”; you could set an open-time-inquiry context. In your response, you could give the times and ask if they want the phone number to contact you. You would then make a yes/no intent which matches the context you have set, so if your user says “Yes” you respond with the number. This could be set up within an hour but gets exponentially more complex when you need to respond to specific parts of the message. For instance, if you have different shop locations and want to give the right phone number without having to write out every possible location they could say in API.AI, you’ll need to integrate with external code (see section three).
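API.AI handles this matching for you, but the logic of contexts is easier to see in a few lines of code. The sketch below is a toy imitation of the opening-times example, not API.AI's implementation: the `INTENTS` list, the `respond` function, the opening hours, and the phone number are all invented for illustration.

```python
# Each hypothetical intent lists: the context it needs (None means it can fire
# at any time), a test on the user's text, its reply, and the context it sets.
INTENTS = [
    {"name": "open-time-inquiry", "needs": None,
     "matches": lambda text: "open" in text.lower(),
     "reply": "We're open 9 to 5. Would you like our phone number?",
     "sets": "open-time-inquiry"},
    {"name": "phone-yes", "needs": "open-time-inquiry",
     "matches": lambda text: text.strip().lower() in {"yes", "yes please"},
     "reply": "Our number is 555-0100.",
     "sets": None},
]


def respond(text, context):
    """Return (reply, new_context) for the first intent whose context and phrase both match."""
    for intent in INTENTS:
        if intent["needs"] in (None, context) and intent["matches"](text):
            return intent["reply"], intent["sets"]
    # Nothing matched: keep the current context and ask again.
    return "Sorry, I didn't catch that.", context


reply, context = respond("What times are you open?", None)
print(reply)
reply, context = respond("Yes", context)
print(reply)
```

Note that a bare “Yes” with no context set matches nothing, which is exactly why the same word can mean different things at different points in a conversation.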

Now, there will be times when your users don’t say what you're expecting. Excluding contexts, there are three very important ways to deal with that:

Almost like keyword research — plan out as many possible variations of saying the same thing as possible, and put them all into the intent

Test, test, test, test, test, test, test, test, test, test, test, test, test, test, test (when launched, every chat bot will have problems. Keep testing, keep updating, keep improving.)

Fallback intents

Fallback intents don’t have a “User says” section, but can be boxed in by contexts. They match anything that has the right context but doesn’t match any of your “User says” phrases. It could be tempting to use fallback intents as a catch-all. Reasoning along the lines of “This is the only thing they’ll say, so we’ll just treat it the same” is understandable, but it opens up a massive hole in the process. Fallback intents are designed to be a conversational safety net. They operate exactly the same as in a normal conversation. If a person asked what you want in your tea and you responded “I don’t want tea” and that person made a cup of tea, wrote the words “I don’t want tea” on a piece of paper, and put it in, that is not a person you’d want to interact with again. If we are using fallback intents to do anything, we need to preface it with a check. If we had to resort to it in the example above, saying “I think you asked me to add I don’t want tea to your tea. Is that right?” is clunky and robotic, but it’s a big step forward, and you can travel the rest of the way by perfecting other parts of your conversation.
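The “preface it with a check” idea can be sketched in a few lines. Both function names below are hypothetical, and the accepted “yes” phrases are just an assumption about what a user might type; the point is only that unmatched text is held as pending until the user confirms it.

```python
def fallback_reply(unmatched_text):
    """Ask for confirmation instead of acting on text that no intent matched."""
    return 'I think you asked me to add "{}" to your tea. Is that right?'.format(unmatched_text)


def handle_confirmation(answer, pending_text):
    """Act on the pending text only after an explicit yes."""
    if answer.strip().lower() in {"yes", "y", "that's right"}:
        return "Adding {} to your tea.".format(pending_text)
    return "No problem. What would you like in your tea?"


print(fallback_reply("I don't want tea"))
print(handle_confirmation("no", "I don't want tea"))
```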

3. Integrating with external code

I used Heroku to build my app. Using this excellent weather webhook example you can actually deploy a bot to Heroku within minutes. I found this example particularly useful as something I could pick apart to make my own call and response program. The weather webhook takes the information and calls a Yahoo app, but ignoring that specific functionality you essentially need the following if you’re working in Python:

    # Start
    req = request.get_json(silent=True, force=True)
    print("Request:")
    print(json.dumps(req, indent=4))

    # Process to do your thing and decide what the response should be
    res = processRequest(req)

    # Response we should receive from processRequest (you'll need to write some
    # code called processRequest and make it return the below; the weather
    # webhook example above is a good one):
    # {
    #     "speech": "speech we want to send back",
    #     "displayText": "display text we want to send back, usually matches speech",
    #     "source": "your app name"
    # }

    # Making our response readable by API.AI and sending it back to the service
    response = make_response(json.dumps(res))
    response.headers['Content-Type'] = 'application/json'
    return response
    # End

As long as you can receive and respond to requests like that (or in the equivalent for languages other than Python), your app and API.AI should both understand each other perfectly; what you do in the interim to change the world or make your response is entirely up to you. The main code I have included is a little different from this because it's also designed to be the step in-between Slack and API.AI. However, I have heavily commented sections like process_food and the database interaction processes, with both explanation and reading sources. Those comments should help you make it your own. If you want to repurpose my program to work within that five-second window, I would forget about the file called app.py and aim to copy whole processes from tasks.py, paste them into a program based on the weather webhook example above, and go from there.
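If you want to experiment with the receive-process-respond loop before deploying anything, the sketch below reproduces it with the standard library only, so there is no web server involved. `process_request` and `handle_webhook` are hypothetical names, and the `result`/`parameters` nesting of the incoming request is an assumption for illustration; check what your platform actually sends before relying on it.

```python
import json


def process_request(req):
    """Hypothetical handler: build a reply from the parsed parameters."""
    # Assumed request layout; adjust to whatever your platform really sends.
    params = req.get("result", {}).get("parameters", {})
    food = params.get("food", "something")
    speech = "Okay, I've noted down {}.".format(food)
    return {"speech": speech, "displayText": speech, "source": "your app name"}


def handle_webhook(body):
    """Take the raw JSON body of a POST and return (response_body, headers)."""
    req = json.loads(body)
    res = process_request(req)
    return json.dumps(res), {"Content-Type": "application/json"}


incoming = json.dumps({"result": {"parameters": {"food": "noodles"}}})
body, headers = handle_webhook(incoming)
print(body)
```

Keeping the processing in a plain function like this also makes it easy to test without sending real requests.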

Initially I'd recommend trying GSpread to make some changes to a test spreadsheet. That way you’ll get visible feedback on how well your application is running (you’ll need to go through the authorization steps as they are explained here).

4. Using a database

Databases are pretty easy to set up in Heroku. I chose the Postgres add-on (you just need to authenticate your account with a card; it won’t charge you anything and then you just click to install). In the import section of my code I’ve included links to useful resources which helped me figure out how to get the database up and running — for example, this blog post.

I used the Python library Psycopg2 to interact with the database. To steal some examples of using it in code, have a look at the section entitled “synchronous functions” in either the app.py or tasks.py files. Open_db_connection and close_db_connection do exactly what they say on the tin (open and close the connection with the database). You tell check_database to check a specific column for a specific user and it gives you the value, while update_columns adds a value to specified columns for a certain user record. Where things haven’t worked straightaway, I’ve included links to the pages where I found my solution. One thing to bear in mind is that I’ve used a way of including columns as a variable, which Psycopg2 recommends quite strongly against. I’ve gotten away with it so far because I'm always writing out the specific column names elsewhere — I’m just using that method as a short cut.
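One way to keep the convenience of passing column names as variables while reducing the risk is to check each name against a fixed list before it goes anywhere near the query. The sketch below shows that idea using Python's built-in sqlite3 module as a stand-in so it runs anywhere; it is not the Psycopg2 code from app.py or tasks.py, and the table, column names, and function names are made up. With Psycopg2 itself, the `psycopg2.sql` module is worth a look for composing identifiers safely.

```python
import sqlite3

# Columns the code is allowed to touch; anything else is rejected.
ALLOWED_COLUMNS = {"name", "food"}


def check_column(column):
    """Refuse any column name that isn't on the fixed list."""
    if column not in ALLOWED_COLUMNS:
        raise ValueError("unexpected column: " + column)
    return column


def update_column(conn, user_id, column, value):
    """Set one allowed column for a user; the value is passed as a query parameter."""
    sql = "UPDATE users SET {} = ? WHERE user_id = ?".format(check_column(column))
    conn.execute(sql, (value, user_id))
    conn.commit()


def read_column(conn, user_id, column):
    """Return one allowed column for a user, or None if the user is unknown."""
    sql = "SELECT {} FROM users WHERE user_id = ?".format(check_column(column))
    row = conn.execute(sql, (user_id,)).fetchone()
    return row[0] if row else None


# In-memory database with a hypothetical users table, purely for demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (user_id TEXT PRIMARY KEY, name TEXT, food TEXT)")
conn.execute("INSERT INTO users (user_id) VALUES (?)", ("U123",))
update_column(conn, "U123", "food", "noodles")
print(read_column(conn, "U123", "food"))
```

Only the column name is formatted into the SQL string, and only after it has been checked; values always travel as parameters.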

5. Processing outside of API.AI’s five-second window

It needs to be said that this step complicates things by no small amount. It also makes it harder to integrate with different applications. Rather than flicking a switch to roll out through API.AI, you have to write the code that interprets authentication and user-specific messages for each platform you're integrating with. What’s more, spoken-only platforms like Google Home and Amazon Alexa don’t allow for this kind of circumvention of the rules — you have to sit within that 5–8 second window, so this method removes those options. The only reasons you should need to take the integration away from API.AI are:

You want to use it to work with a platform that it doesn’t have an integration with. It currently has 14 integrations including Facebook Messenger, Twitter, Slack, and Google Home. It also allows exporting your conversations in an Amazon Alexa-understandable format (Amazon has their own similar interface and a bunch of instructions on how to build a skill; here is an example).

You are processing masses of information. I’m talking really large amounts. Some flight comparison sites have had problems fitting within the timeout limit of these platforms, but if you aren’t trying to process every detail for every flight for the next 12 months and it’s taking more than five seconds, it’s probably going to be easier to make your code more efficient than work outside the window. Even if you are, those same flight comparison sites solved the problem by creating a process that regularly checks their full data set and creates a smaller pool of information that’s more quickly accessible.

You need to send multiple follow-up messages to your user. When using the API.AI integration it’s pretty much call-and-response; you don’t always get access to things like authorization tokens, which are what some messaging platforms require before you can automatically send messages to one of their users.

You're working with another program that can be quite slow, or there are technical limitations to your setup. This one applies to Vietnambot: I used the GSpread library in my application, which is fantastic but can be slow to pull out bigger chunks of data. What’s more, Heroku can take a little while to start up if you’re not paying.

I could have paid or cut out some of the functionality to avoid needing to manage this part of the process, but that would have failed to meet number 4 in our original conditions: It had to be possible to adapt the skeleton of the process for much more complex business cases. If you decide you’d rather use my program within that five-second window, skip back to section 2 of this post. Otherwise, keep reading.

When we break out of the five-second API.AI window, we have to do a couple of things. The first is to flip the process on its head.

What we were doing before:

User sends message -> API.AI -> our process -> API.AI -> user

What we need to do now:

User sends message -> our process -> API.AI -> our process -> user

Instead of API.AI waiting while we do our processing, we do some processing, wait for API.AI to categorize the message from us, do a bit more processing, then message the user.

The way this applies to Vietnambot is:

User says “I want [food]”

Slack sends a message to my app on Heroku

My app sends a “swift and confident” 200 response to Slack to prevent it from resending the message. To send the response, my process has to shut down, so before it does that, it activates a secondary process using "tasks."

The secondary process takes the query text and sends it to API.AI, then gets back the response.

The secondary process checks our database for a user name. If we don’t have one saved, it sends another request to API.AI, putting it in the “we don’t have a name” context, and sends a message to our user asking for their name. That way, when our user responds with their name, API.AI is already primed to interpret it correctly because we’ve set the right context (see section 1 of this post). API.AI tells us that the latest message is a user name and we save it. When we have both the user name and food (whether we’ve just got it from the database or just saved it to the database), Vietnambot adds the order to our sheet, calculates whether we’ve reached the order minimum for that day, and sends a final success message.
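The name-then-order logic in that last step can be sketched without any of the external services. This is an illustration only: the dictionaries stand in for the Postgres table and the Google Sheet, the intent labels "order_food" and "give_name" are made-up stand-ins for whatever API.AI would return, and handle_message is a hypothetical name rather than a function from my code.

```python
# In-memory stand-ins for the database, the unfinished orders and the sheet.
saved_names = {}
pending_food = {}
order_sheet = []


def handle_message(user_id, intent, value):
    """Process one categorized message and return the reply for the user.

    `intent` and `value` stand in for what API.AI hands back, for example
    ("order_food", "pho") or ("give_name", "Robin").
    """
    if intent == "order_food":
        pending_food[user_id] = value
    elif intent == "give_name":
        saved_names[user_id] = value

    name = saved_names.get(user_id)
    food = pending_food.get(user_id)

    if food is None:
        return "What would you like to order?"
    if name is None:
        # The real bot would also put API.AI into the
        # "we don't have a name" context at this point.
        return "What's your name?"

    # We have both pieces of information, so the order can be recorded.
    order_sheet.append((name, pending_food.pop(user_id)))
    return "Thanks {}, your {} is on the list.".format(name, food)


print(handle_message("U_demo", "order_food", "pho"))
print(handle_message("U_demo", "give_name", "Robin"))
```

The second time the same user orders, the name is already saved, so the order goes straight onto the list without the extra question.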

6. Integrating with Slack

This won’t be the same as integrating with other messaging services, but it could give some insight into what might be required elsewhere. Slack has two authorization processes; we’ll call one "challenge" and the other "authentication."

Slack includes instructions for an app lifecycle here, but API.AI actually has excellent instructions for how to set up your app; as a first step, create a simple back-and-forth conversation in API.AI (not your full product), go to integrations, switch on Slack, and run through the steps to set it up. Once that is up and working, you’ll need to change the OAuth URL and the Events URL to be the URL for your app.

Thanks to GitHub user karishay, my app code includes a process for responding to the challenge process (which will tell Slack you’re set up to receive events) and for running through the authentication process, using our established database to save important user tokens. There’s also the option to save them to a Google Sheet if you haven’t got the database established yet. However, be wary of this as anything other than a first step: user tokens give an app a lot of power and have to be guarded carefully.
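For a feel of what the "challenge" step involves, here is a minimal sketch. It assumes Slack's URL verification request, which arrives as JSON with a type of url_verification and a challenge value that has to be echoed back. The function name handle_slack_event is hypothetical and this is not the code from my app; in a Flask app you would call something like it from the route that receives Slack's requests.

```python
import json


def handle_slack_event(raw_body):
    """Return (status_code, response_text) for one incoming Slack request."""
    payload = json.loads(raw_body)

    if payload.get("type") == "url_verification":
        # One-off challenge: echo the challenge value back so Slack knows
        # this URL is set up to receive events.
        return 200, payload["challenge"]

    # Anything else is treated as a normal event: acknowledge it quickly
    # and leave the real work to the secondary process.
    return 200, ""


example = json.dumps({"type": "url_verification", "challenge": "abc123"})
print(handle_slack_event(example))
```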

7. Asynchronous processing

We are running our app using Flask, which is basically a whole bunch of code we can call upon to deal with things like receiving requests for information over the internet. In order to create a secondary worker process I've used Redis and Celery. Redis is our “message broker”; it makes a list of everything we want our secondary process to do. Celery runs through that list and makes our worker process do those tasks in sequence. Redis is a note left on the fridge telling you to do your washing and take out the bins, while Celery is the housemate that bangs on your bedroom door, note in hand, and makes you do each thing. I’m sure our worker process doesn’t like Celery very much, but it’s really useful for us.

You can find instructions for adding Redis to your app in Heroku here and you can find advice on setting up Celery in Heroku here. Miguel Grinberg’s Using Celery with Flask blog post is also an excellent resource, but using the exact setup he gives results in a clash with our database, so it's easier to stick with the Heroku version.

Up until this point, we've been calling functions in our main app — anything of the form function_name(argument_1, argument_2, argument_3). Now, by putting “tasks.” in front of our function, we’re saying “don’t do this now — hand it to the secondary process." That’s because we’ve done a few things:

We’ve created tasks.py which is the secondary process. Basically it's just one big, long function that our main code tells to run.

In tasks.py we’ve included Celery in our imports and set our app as celery.Celery(), meaning that when we use “app” later we’re essentially saying “this is part of our Celery jobs list” or rather “tasks.py will only do anything when its flatmate Celery comes banging on the door.”

For every time our main process asks for an asynchronous function by writing tasks.any_function_name(), we have created that function in our secondary program just as we would if it were in the same file. However, in our secondary program we’ve prefaced it with “@app.task”, another way of saying “Do wash_the_dishes when Celery comes banging on the door yelling wash_the_dishes(dishes, water, heat, resentment)”.

In our “Procfile” (included as a file in my code) we have listed our worker process as --app=tasks.app.

All this adds up to the following process:

Main program runs until it hits an asynchronous function.

Main program fires off a message to Redis, which holds a list of work to be done. The main process doesn’t wait; it just runs through everything after it and, in our case, even shuts down.

The Celery part of our worker program goes to Redis and checks for the latest update. It checks what function has been called (our worker functions are named the same as when our main process called them), gives our worker all the information to start doing that thing, and tells it to get going.

Our worker process starts the action it has been told to do, then shuts down.
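The four steps above can be imitated with nothing but Python's standard library, which makes the pattern easier to see. To be clear, this is a stand-in and not Celery or Redis: a queue.Queue plays the note on the fridge, a thread plays the worker, and the names task, send_to_worker and TASKS are invented for the illustration.

```python
import queue
import threading

job_list = queue.Queue()  # plays the part of Redis: the note on the fridge
results = []
TASKS = {}  # functions the worker knows how to run, looked up by name


def task(func):
    """Register a function by name (a stand-in for Celery's @app.task)."""
    TASKS[func.__name__] = func
    return func


@task
def wash_the_dishes(dishes, water):
    results.append("washed {} dishes with {} water".format(dishes, water))


def send_to_worker(name, *args):
    """Main process: leave a note on the list and carry on without waiting."""
    job_list.put((name, args))


def worker():
    """Worker: take notes off the list and do each job in sequence."""
    while True:
        job = job_list.get()
        if job is None:  # a None note means "no more work, shut down"
            break
        name, args = job
        TASKS[name](*args)


thread = threading.Thread(target=worker)
thread.start()
send_to_worker("wash_the_dishes", 4, "hot")
print("main process carries on straight away")
job_list.put(None)
thread.join()
print(results)
```

In Celery itself, a function decorated with @app.task is normally handed to the worker by calling its .delay() or .apply_async() method, and the list of jobs lives in Redis rather than in the same program.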

As with the other topics mentioned here, I’ve included all of this in the code I’ve supplied, along with many of the sources used to gather the information — so feel free to use the processes I have. Also feel free to improve on them; as I said, the value of this investigation was that I am not a coder. Any suggestions for tweaks or improvements to the code are very much welcome.

Conclusion

As I mentioned in the introduction to this post, there's huge opportunity for individuals and organizations to gain ground by creating conversational interactions for the general public. For the vast majority of cases you could be up and running in a few hours to a few days, depending on how complex you want your interactions to be and how comfortable you are with coding languages. There are some stumbling blocks out there, but hopefully this post and my obsessively annotated code can act as templates and signposts to help get you on your way.

Grab my code at GitHub

Bonus #1: The conversational flow for my chat bot

This is by no means necessarily the best or only way to approach this interaction. This is designed to be as streamlined an interaction as possible, but we’re also working within the restrictions of the platform and the time investment necessary to produce this. Common wisdom is to create the flow of your conversation and then keep testing to perfect it, so consider this example layout a step in that process. I’d also recommend putting one of these flow charts together before starting; otherwise you could find yourself having to redo a bunch of work to accommodate a better back-and-forth.

Bonus #2: General things I learned putting this together

As I mentioned above, this has been a project of going from complete ignorance of coding to slightly less ignorance. I am not a professional coder, but I found the following things I picked up to be hugely useful while I was starting out.

Comment everything. You’ll probably see my code is bordering on excessive commenting (anything after a # is a comment). While normally I’m sure someone wouldn’t want to include a bunch of Stack Overflow links in their code, I found notes about what portions of code were trying to do, and where I got the reasoning from, hugely helpful as I tried to wrap my head around it all.

Print everything. In Python, everything within “print()” will be printed out in the app logs (see the commands tip for reading them in Heroku). While printing each action can mean you fill up a logging window terribly quickly (I started using the Heroku add-on LogDNA towards the end and it’s a huge step up in terms of ease of reading and length of history), often the times my app fell over were because one specific function wasn’t getting what it needed, or because of another stupid typo. Having a semi-constant stream of actions and outputs logged meant I could find the fault much more quickly. My next step would probably be to introduce a way of easily switching on and off the less necessary print functions.
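One possible way to get that on/off switch is Python's built-in logging module, where each message has a level and a single setting decides which levels are shown. This is a sketch of the idea rather than something from my code; the logger name "vietnambot" and the function record_order are made up for the example.

```python
import logging

# INFO and above are shown by default; DEBUG messages stay hidden.
logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("vietnambot")


def record_order(food):
    """Tidy up an order, logging the detail at DEBUG and the result at INFO."""
    log.debug("record_order received %r", food)  # the "less necessary" print
    order = food.strip().lower()
    log.info("order recorded: %s", order)  # the one you always want to see
    return order


record_order("  Pho ")  # only the INFO line appears

log.setLevel(logging.DEBUG)  # flip the switch: now DEBUG lines appear too
record_order("Banh Mi")
```

Changing one line (the level) switches every debug message on or off at once, instead of commenting out individual print calls.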

The following commands: Heroku’s how-to documentation for creating an app and adding code is pretty great, but I found myself using these all the time so thought I’d share (all of the below are typed into the command line, which you can open by typing cmd on Windows or by running Terminal on a Mac):

“cd "[file location]"” - select the folder your code is in

“git init” - create a git repository to add to

“git add .” - add all of the code in your folder to what git will put online

“git commit -m "[description of what you’re doing]"” - save the changes in your git repository

“heroku git:remote -a [the name of your app]” - select your app as where to put the code

“git push heroku master” - send your code to the app you selected

“heroku ps” - find out whether your app is running or crashed

“heroku logs” - apologize to your other half for going totally unresponsive for the last ten minutes and start the process of working through your printouts to see what has gone wrong

POST requests will always wait for a response. Seems really basic — initially I thought that by just sending a POST request and not telling my application to wait for a response I’d be able to basically hot-potato work around and not worry about having to finish what I was doing. That’s not how it works in general, and it’s more of a symbol of my naivete in programming than anything else.

If something is really difficult, it’s very likely you’re doing it wrong. While I made sure to do pretty much all of the actual work myself (to avoid simply farming it out to the very talented individuals at Distilled), I was lucky enough to get some really valuable advice. The piece of advice above was from Dominic Woodman, and I should have listened to it more. The times when I made the least progress were when I was trying to use things the way they shouldn’t be used. Even when I broke through those walls, I later found that someone didn’t want me to use it that way because it would completely fail at a later point. Tactical retreat is an option. (At this point, I should mention he wasn’t the only one to give invaluable advice; Austin, Tom, and Duncan of the Distilled R&D team were a huge help.)
