#centralprocessingunits


Text

Quantum Art Uses CUDA-Q For Fast Logical Qubit Compilation

Quantum Art

Quantum Art, a leader in full-stack quantum computers built on trapped-ion qubits and a patented scale-up architecture, announced a key integration with NVIDIA CUDA-Q to accelerate the deployment of quantum computing. By optimising and synthesising logical qubits, the partnership aims to scale quantum computing for practical use.

Quantum Art wants to help humanity by providing top-tier, scalable quantum computers for business. They use two exclusive technology pillars to provide fault-tolerant and scalable quantum computing.

The first pillar is advanced multi-qubit gates. Quantum Art's unique gates can implement the equivalent of 1,000 standard two-qubit gates in a single operation. Multi-tone, multi-mode coherent control over all qubits compacts the code by orders of magnitude, and this compaction is essential for building the complex quantum circuits that logical qubits require.

The second pillar is a dynamically reconfigurable multi-core architecture. This design lets Quantum Art run tens of cores in parallel, speeding up and improving quantum computations. Rearranging these cores dynamically, within microseconds, creates hundreds of cross-core links for true all-to-all connectivity. Logical qubits, which are more error-resistant than physical qubits, need this reconfigurability and connectivity for their complex calculations.

The new integration combines NVIDIA CUDA-Q, an open-source hybrid quantum-classical computing platform, with Quantum Art's Logical Qubit Compiler, which exploits the company's multi-qubit gates and multi-core architecture. Together, they let developers run quantum applications across QPUs, CPUs, and GPUs. The partnership pairs NVIDIA's multi-core orchestration and developer support with Quantum Art's compiler, which is tailored for low circuit depth and scalable performance, to advance practical quantum use cases.

The integration is expected to improve scalability and performance: multi-qubit gates and reconfigurable multi-core operations should reduce circuit depth. Preliminary physical-layer results show improved scaling, with code-line counts growing as N rather than N², and a 25% increase in the Quantum Volume circuit logarithm. The result is shallower circuits with significant performance gains. These advances matter because they can raise Quantum Volume when the compiler is used on suitable quantum hardware, and Quantum Volume is a key metric for evaluating a platform's efficacy and scalability.
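As a rough illustration of the figures quoted above, the sketch below assumes the standard definition of Quantum Volume, QV = 2^n, where n is the width (and depth) of the largest square test circuit a machine passes, so log₂ QV = n. The baseline value is hypothetical, not a Quantum Art result; the point is only that a 25% rise in log₂ QV multiplies QV itself by 2^(0.25·n), and that a code-line count growing as N² exceeds one growing as N by a factor of N.

```python
def quantum_volume(log2_qv: float) -> float:
    """Quantum Volume under the standard definition QV = 2**n, where n = log2(QV)."""
    return 2.0 ** log2_qv


def qv_gain(log2_qv: float, fractional_increase: float) -> float:
    """Factor by which QV grows when log2(QV) rises by `fractional_increase`.

    2**(n * (1 + f)) / 2**n simplifies to 2**(n * f).
    """
    return quantum_volume(log2_qv * (1 + fractional_increase)) / quantum_volume(log2_qv)


def line_count_ratio(n: int) -> float:
    """How many times longer an N**2-scaling program is than an N-scaling one."""
    return (n ** 2) / n


baseline = 8  # hypothetical starting point: log2(QV) = 8, i.e. QV = 256
print(quantum_volume(baseline))          # 256.0
print(quantum_volume(baseline * 1.25))   # 1024.0 after a 25% increase in log2(QV)
print(qv_gain(baseline, 0.25))           # 4.0
print(line_count_ratio(200))             # 200.0 for a hypothetical N = 200
```

Under this definition the gain grows with the baseline: at log₂ QV = 20, the same 25% rise would multiply QV by 2^5 = 32.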

Key strategic objectives of the collaboration are creating and developing quantum circuits at the level of ~200 logical qubits, a scale that fits emerging commercial use cases. A full study of the quantifiable performance benefits will cover circuit depth, core reconfigurations, and T-gate count, a measure of a quantum circuit's complexity.

Quantum Art CEO Tal David welcomed the alliance, saying that as the industry moves towards commercialisation, the company's revolutionary multi-core design and trapped-ion qubits offer unmatched scaling potential, addressing the top challenge facing quantum computers. He also noted that integrating the compiler with CUDA-Q will allow developers to scale up quantum applications.

Sam Stanwyck, NVIDIA Group Product Manager for Quantum Computing, said, “The CUDA-Q platform is built to accelerate breakthroughs in quantum computing by building on the successes of AI supercomputing.” Quantum Art's integration of CUDA-Q with its compiler is a good illustration of how quantum and classical hardware are combining to improve performance.

With its multi-qubit gates, complex trapped-ion systems, and dynamically programmable multi-core architecture, Quantum Art is scaling quantum computing. These developments address the main challenge of scaling to hundreds and millions of qubits for commercial value. Integration with NVIDIA CUDA-Q is a major step towards Quantum Art's aim of commercial quantum advantage and expanding possibilities in materials discovery, logistics, and energy systems.

Quantum Art's solutions could also transform Chemistry & Materials, Machine Learning, Process Optimization, and Finance. This alliance aims to turn theoretical quantum benefits into large-scale, useful applications for several industries.

#QuantumArt#qubits#NVIDIACUDAQ#quantumcircuits#CentralProcessingUnits#QuantumProcessingUnits#LogicalQubits#MachineLearning#News#Technnews#Technology#Technologynews#Technologytrends#Govindhtech

1 note


Text

What If Reality Is a Simulation Running on Light 2025


🌌 Let’s Get Something Wild Out There First

You ever sit in your room late at night, just staring at the ceiling, and suddenly wonder… what if all of this isn’t even real? Not in the “I’ve had a rough day” kind of way, but in a deep, goosebump-triggering, what-if-the-universe-is-literally-code kind of way. And not just any code, but a simulation powered by light.

I know. It sounds like the plot of a trippy sci-fi film. But stick with me. Because the more you unpack this, the more your brain starts doing somersaults, and honestly, it’s kind of beautiful.

💡 Why Light, Though?

Let’s start there. Light is… weird. I mean really weird. It behaves like a wave and a particle at the same time. It doesn’t experience time. It moves at a constant speed no matter how fast you're going. It’s everywhere, bouncing off every surface, constantly transmitting information.

And most fascinating of all? Every single thing we see, every color, every shape: all of it is just reflected light. You’re not really seeing “objects”; you’re seeing how light interacts with matter. In a way, we’re living in a projection made of photons. Now imagine if this wasn’t just a byproduct of how the universe works, but the entire foundation of it.

🧠 The Simulation Hypothesis Meets the Speed of Light

The Simulation Hypothesis has been floating around science, tech, and philosophy circles for a while now. The idea? That our universe might be a sophisticated simulation, run by a hyper-advanced civilization or some future version of ourselves.

Now toss this idea in with one wild twist: what if light isn’t just a part of the simulation, but the actual processing medium? Like a cosmic CPU. Instead of electricity powering our virtual world, it’s photons. Everything you see, feel, touch? Rendered in real time through quantum interactions governed by the speed of light. Your thoughts? Calculated via neural signals... which are, at the core, energy. Movement. Light.

Reality might not just be like a simulation; it might literally be one, run on the most fundamental energy system in existence.

🧩 Clues in the Code (Weird Stuff That Makes This Plausible)

Let’s unpack a few eerie pieces of evidence that make this idea creepily convincing:
- Pixelated Universe: Some physicists believe space might be quantized, meaning it comes in tiny “chunks,” just like pixels. If that’s true… who’s rendering the screen?
- Quantum Uncertainty: Particles don’t “exist” in one place until we observe them. That’s not just weird; that’s exactly how video games optimize performance. Why render the whole world, when you can just render what the player’s looking at?
- Speed of Light = Speed Limit: Nothing can go faster than light. Maybe that’s just the limit of the simulation’s processing power. Like lag in a game, the system can’t calculate anything beyond a certain speed.
- Math Everywhere: From the Fibonacci sequence in flowers to the golden ratio in galaxies, our world is soaked in math. Almost too perfect. Like it was… programmed.

🧬 But Who’s Running It?

This is where things go from “whoa” to “wait...what?” If we’re in a simulation, then someone (or something) is running it. And if light is the base code, then maybe the operators are so advanced that they’ve moved beyond hardware and into pure energy. Or maybe (wild idea) this is a future version of us: a civilization so advanced it simulated its own past to understand how it came to be. Like a historical light-powered sandbox.

And here's the trippiest part: if the universe is a light simulation, it might not be "fake" at all. It might be more real than we can currently comprehend. Because what’s more real: the thing itself, or the information behind it?

😵 Okay, So… What Do We Do with This?

This isn’t just a fun thought experiment. It makes us question our place in the cosmos. It blurs the line between science, philosophy, and self. And maybe that's the point. Because even if this idea isn’t “true” in the traditional sense, it reminds us how little we know. It humbles us. It cracks the walls of certainty wide open.

And in that space of wonder, that’s where the best ideas are born. So next time you step into the sunlight and feel its warmth, just take a second. What if… that light is the system, running you?

External Resource: Want to dive deeper into the Simulation Hypothesis? Check the Wikipedia page: Simulation Hypothesis https://en.wikipedia.org/wiki/Simulation_hypothesis

Related Articles from EdgyThoughts.com:
Why Is Zero So Powerful in Math 2025 https://edgythoughts.com/why-is-zero-so-powerful-in-math-2025
What If Dreams Could Be Recorded and Played Back 2025 https://edgythoughts.com/what-if-dreams-could-be-recorded-and-played-back-2025

Read the full article

#20250101t0000000000000#2025httpsedgythoughtscomwhatifdreamscouldberecordedandplayedback2025#2025httpsedgythoughtscomwhyiszerosopowerfulinmath2025what#ceiling#centralprocessingunit#certainty#civilization#computerhardware#cosmos#edgythoughtscom#electricity#elementaryparticle#energy#evidence#existence#experiment#fasterthanlight#fibonaccisequence#galaxy#goldenratio#hypothesis#hypothesishttpsenwikipediaorgwikisimulationhypothesis#information#mathematics#matter#particle#philosophy#photon#physicist#quantum

0 notes

Text

The meanings of various technology terms are a common source of curiosity. In this context, AP... https://gecbunlari.com/apu-nedir-cpu-ile-arasindaki-farklar-neler/

#BilgisayarGenel#AcceleratedProcessingUnit#AMD#APU#bilgisayardonanımı#CentralProcessingUnit#CPU#düşükgüçtüketimi#entegregrafik#grafikişlemci#işlemci#Teknoloji#yüksekperformans

0 notes

Text

Why AGI, superintelligence fears for AI are unfounded

It is easy to say AI will destroy the world, but hard to listen patiently and understand why it will never happen, writes Satyen K. Bordoloi. Read more: https://www.sify.com/ai-analytics/why-agi-superintelligence-fears-for-ai-are-unfounded/

#AGI#Superintelligence#AI#ArtificialIntelligence#ChatGPT#Doomsday#GraphicsProcessingUnit#GPU#CPU#CentralProcessingUnit

0 notes

Text

Upgrade your workspace experience with the power of technology 🗄️

Introducing the Bitbox Microtower PC, designed to handle even the most demanding tasks with ease 💪.

Experience robust performance with efficiency 💥.

Get your hands on the ultimate PC today!

Learn more: https://www.bitboxpc.com/microtower/


#bitbox #microtower #bitboxmicrotower #microtowerpc #bitboxcpu #system #computersystem #cpu #CentralProcessingUnit #bitboxpc #PC #UltimatePC #technology #ComputerManufacturer #PCmanufacturer #Computer #cpucomputer #Desktop #Monitor #MadeInIndiaComputers #TechnologyManufacturer #TechnologyManufacturerCompany #delhi #india

0 notes

Text

What is a CPU? [central processing unit] Complete information in Hindi

To do its work, a computer needs many different devices. A computer cannot do its job on its own, because "computer" is not the name of a single machine. It is the name for a group of many devices combined into one.

What is a CPU?

read more

https://www.betterguid.com/2021/11/what%20is%20CPU%20%20central%20processing%20unit%20%20%20%20%20.html

#cpu#centralprocessingunit#computer#technology#tech#computerknowledge#www.betterguid.com#betterguid#cpukyahotahai#alu#memoryunit#controlunit

1 note


Text

N80C51FA-1

N80C51FA-1 INTEL PLCC-44 MICROCONTROLLER IC

For any inquiry, please email us at [email protected]. Web: www.adatronix.com

0 notes

Link

#calculator #machine #analogcomputer #computing #personalcomputer #programmer #centralprocessingunit #computerscience #peripheral #hardware #mainframe #microprocessor #laptop #vacuumtube #machinecode #computerprogram #server #computerprogramming #memory #integratedcircuit #turingmachine #charlesbabbage #abacus #electronics #astrolabe #cpu #information #homecomputer #computerhardware #internet #pc #sliderule #computation #supercomputer #transistor #turingcomplete #floppydisk #imac #compiler

0 notes

Photo

In his first release for CPU, Proswell bangs out 5 tracks on an enjoyable trip that takes you down loads of different unexpected sonic detours 🎶🙏🏻 #proswell #peoplearegivingandreceivingthanksatincrediblespeeds #centralprocessingunit #sheffield #pagartais #electronic #idm #experimental #techno #synthesizer #vinyl #vinylcollection #recordcollection #vinyladdict #vinylcollector #vinyljunkie #vinylporn #nowspinning #ep https://www.instagram.com/p/CPI39Ikg5R4/?utm_medium=tumblr

#proswell#peoplearegivingandreceivingthanksatincrediblespeeds#centralprocessingunit#sheffield#pagartais#electronic#idm#experimental#techno#synthesizer#vinyl#vinylcollection#recordcollection#vinyladdict#vinylcollector#vinyljunkie#vinylporn#nowspinning#ep

0 notes

Text

Types of CPUs(central processing units) & How do CPUs work?

What is a CPU?

The CPU is the computer’s brain. All computers utilize it to allocate and process tasks and manage operational activities.

CPU types are defined by the processor chips that handle their data. Many processors and microprocessors are available, and new powerhouses are always being developed. CPU power is what allows computers to multitask. Before defining some key terminology, let's look at the main CPU types.

Types of CPUs

CPUs are classified by their processor or microprocessor:

Single-core processor: A microprocessor with one CPU on its die. Single-core processors are relatively slow: they work on one thread and run the instruction cycle sequence one instruction at a time. They are well suited to general-purpose computing.

Multi-core processor: The cores of a multi-core processor execute instructions as if they were separate computers, even though they sit on the same chip. Many computer programmes run better on a multi-core processor.

Embedded processor: A microprocessor designed for embedded systems. Embedded systems are small and power-efficient, and their processor is built into the device it controls, enabling immediate data access. Both microcontrollers and microprocessors serve as embedded processors.

Dual-core processor: A processor with two independent cores.

Quad-core processor: A processor with four independent cores.

Octa-core processor: A multi-core CPU with eight independent cores.

Deca-core processor: An integrated circuit with 10 cores per die or package.

Key CPU terminology

A CPU has several parts, but these are crucial to its operation and understanding:

Cache: Memory caches are essential for fast information retrieval. They keep recently used data in memory on the processor chip, so the CPU can retrieve it faster than it could from RAM. Caches can be implemented in hardware or software.
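To make the idea of keeping recently used data close at hand concrete, here is a minimal software model of a cache, assuming a least-recently-used (LRU) eviction policy. Real CPU caches are built in hardware, organise data into fixed-size lines and sets, and often use approximations of LRU, so this only illustrates the principle; the `LRUCache` class and the dictionary standing in for RAM are hypothetical.

```python
from __future__ import annotations

from collections import OrderedDict


class LRUCache:
    """Toy cache that keeps the `capacity` most recently used addresses."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lines: OrderedDict[int, int] = OrderedDict()  # address -> value
        self.hits = 0
        self.misses = 0

    def read(self, address: int, ram: dict[int, int]) -> int:
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)  # mark as most recently used
            return self.lines[address]
        self.misses += 1
        value = ram[address]                 # slow path: go out to "RAM"
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used entry
        return value


ram = {address: address * 10 for address in range(100)}
cache = LRUCache(capacity=2)
for address in [1, 2, 1, 3, 1, 2]:
    cache.read(address, ram)
print(cache.hits, cache.misses)  # 2 4
```

Repeated reads of address 1 are served from the cache, while reading address 3 pushes out address 2, so the final read of 2 has to go back to "RAM".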

Clock speed: Every computer has an internal clock that sets its operating speed and frequency. The clock sends electrical pulses that regulate the CPU's circuitry. The rate at which these pulses arrive is called the clock speed and is measured in hertz (Hz), usually megahertz (MHz) or gigahertz (GHz) for CPUs. Raising the clock speed has traditionally increased processing speed.
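The relationship between clock speed and timing is simple arithmetic: at frequency f, one cycle lasts 1/f seconds. For illustration only, the sketch below assumes a workload that needs a fixed number of clock cycles. Modern CPUs can complete several instructions per cycle or stall for many cycles, so cycles and instructions are not interchangeable.

```python
MHZ = 1e6  # hertz per megahertz
GHZ = 1e9  # hertz per gigahertz


def clock_period_ns(frequency_hz: float) -> float:
    """Duration of one clock cycle in nanoseconds (period = 1 / frequency)."""
    return 1e9 / frequency_hz


def runtime_ms(cycles: int, frequency_hz: float) -> float:
    """Time in milliseconds to run `cycles` clock cycles at `frequency_hz`."""
    return cycles / frequency_hz * 1e3


print(clock_period_ns(100 * MHZ))          # 10.0 ns per cycle at 100 MHz
print(clock_period_ns(4 * GHZ))            # 0.25 ns per cycle at 4 GHz
print(runtime_ms(1_000_000_000, 2 * GHZ))  # 500.0 ms for a billion cycles at 2 GHz
```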

Core: Cores are processors within processors. Cores read and execute programme instructions, and processors are categorised by their core count. Multi-core CPUs can generally process instructions faster than single-core ones, especially when the work can be split across cores. Commercially, “Intel Core” is the name of Intel’s multi-core CPU range.
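Whether extra cores actually speed things up depends on how much of a program can run in parallel. One standard way to estimate this, not taken from the original article, is Amdahl's law: with a parallel fraction p and n cores, the best-case speedup is 1 / ((1 − p) + p / n). The sketch assumes perfectly divisible parallel work and ignores overheads such as communication between cores; the 90% figure is a hypothetical example.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup on `cores` cores when only `parallel_fraction`
    of the work can run in parallel (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workload in which 90% of the work can be split across cores.
for cores in (1, 2, 4, 8, 10):
    print(f"{cores:>2} cores: {amdahl_speedup(0.9, cores):.2f}x")
```

Even with 90% of the work parallel, ten cores give only about a 5.3x speedup rather than 10x, because the serial 10% still runs on one core.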

Threads: Threads are the smallest sequences of programmed instructions that an operating system’s scheduler can manage independently and hand to the CPU. Multithreading lets several threads of a computer process run concurrently. Hyper-Threading is Intel’s patented multithreading technology for parallelization.
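As a small, hedged illustration of threads, the Python sketch below hands four chunks of work to a pool of operating-system threads and collects the results. The chunk sizes and the `worker` name prefix are arbitrary choices. In standard CPython builds, the global interpreter lock lets only one thread execute Python bytecode at a time, so this shows threads being created and scheduled, not a true multi-core speedup.

```python
from __future__ import annotations

import threading
from concurrent.futures import ThreadPoolExecutor


def chunk_sum(chunk: range) -> tuple[str, int]:
    """Sum one chunk of numbers and report which thread did the work."""
    return threading.current_thread().name, sum(chunk)


# Split 0..999 into four chunks of 250 numbers each.
chunks = [range(start, start + 250) for start in range(0, 1000, 250)]

with ThreadPoolExecutor(max_workers=4, thread_name_prefix="worker") as pool:
    # map() returns results in the same order as the input chunks.
    results = list(pool.map(chunk_sum, chunks))

for name, subtotal in results:
    print(name, subtotal)

total = sum(subtotal for _, subtotal in results)
print(total)  # 499500
```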

Other CPU parts

The following are also common in current CPUs:

ALU (arithmetic logic unit): Performs the CPU’s arithmetic and logical operations, including math calculations and logic-based comparisons.

Buses: Ensure data flows between computer components.

Control unit: Circuitry that sends electrical pulses to direct the computer system and carry out high-level computer instructions.

Instruction register and pointer: The instruction register holds the instruction currently being executed, and the instruction pointer holds the memory address of the next instruction.

Memory unit: Controls RAM-CPU data flow. The memory unit also manages cache memory.

Registers: Very fast built-in memory for frequent, urgent data demands.

How do CPUs work?

CPUs run a repeating command cycle, managed by the control unit and synchronised by the computer clock.

This CPU instruction cycle governs the CPU’s work. The CPU repeats the cycle over and over, and how many times it can do so in a given time depends on the computer’s processing power.

The cycle consists of three basic steps:

Fetch: The CPU retrieves the next instruction from memory.

Decode: The CPU’s decoder converts the binary instruction into electrical signals that engage other parts of the CPU.

Execute: The CPU carries out the instruction. Computers run programmes by repeatedly interpreting and following their instructions in this way.
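The fetch, decode, and execute steps can be sketched as a tiny interpreter. Everything here is hypothetical and simplified for illustration: the instruction set (`LOAD`, `ADD`, `SUB`, `AND`, `JNZ`, `HALT`), the named registers, and the 8-bit `alu` helper are made up, and instructions are Python tuples rather than binary machine code. Real CPUs decode binary encodings in hardware and overlap these stages in a pipeline.

```python
from __future__ import annotations


def alu(op: str, a: int, b: int) -> int:
    """Toy 8-bit arithmetic logic unit: results wrap around at 256."""
    if op == "ADD":
        return (a + b) & 0xFF
    if op == "SUB":
        return (a - b) & 0xFF
    if op == "AND":
        return a & b
    raise ValueError(f"unknown ALU operation: {op}")


def run(program: list[tuple], max_steps: int = 1000) -> dict[str, int]:
    """Run `program` with a fetch-decode-execute loop and return the registers."""
    registers: dict[str, int] = {}
    pc = 0  # instruction pointer: index of the next instruction in "memory"
    for _ in range(max_steps):
        instruction = program[pc]        # FETCH the instruction at pc
        pc += 1
        opcode, *operands = instruction  # DECODE it into an opcode and operands
        # EXECUTE
        if opcode == "LOAD":             # LOAD reg, value
            reg, value = operands
            registers[reg] = value & 0xFF
        elif opcode in ("ADD", "SUB", "AND"):  # OP dst, src  ->  dst = dst OP src
            dst, src = operands
            registers[dst] = alu(opcode, registers[dst], registers[src])
        elif opcode == "JNZ":            # JNZ reg, addr: jump if reg is not zero
            reg, target = operands
            if registers[reg] != 0:
                pc = target
        elif opcode == "HALT":
            return registers
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    raise RuntimeError("step limit reached without HALT")


# Multiply 3 by 4 using repeated addition.
program = [
    ("LOAD", "ACC", 0),   # 0: accumulator
    ("LOAD", "X", 3),     # 1: value to add
    ("LOAD", "N", 4),     # 2: loop counter
    ("LOAD", "ONE", 1),   # 3: constant 1
    ("ADD", "ACC", "X"),  # 4: ACC = ACC + X
    ("SUB", "N", "ONE"),  # 5: N = N - 1
    ("JNZ", "N", 4),      # 6: loop back to 4 while N != 0
    ("HALT",),            # 7
]
print(run(program)["ACC"])  # 12
```

The `pc` variable plays the role of the instruction pointer, and `alu` stands in for the arithmetic logic unit described earlier.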

Some computer owners skip hardware upgrades, such as adding memory or cores, and instead speed up their computers by raising the clock speed. This practice, called overclocking, is comparable to “jailbreaking” a smartphone to change its performance. Like jailbreaking, such tampering can damage the device and is discouraged by computer manufacturers.

Leading CPU makers and their CPUs

In recent years, only a few significant companies have made CPU-supporting goods or software.

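This sector is dominated by Intel and AMD, which both build their mainstream processors on the x86 instruction set architecture, a CISC (complex instruction set computer) design. Arm-based processors, by contrast, use a RISC (reduced instruction set computer) architecture.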

Intel sells processors and microprocessors in four series. Intel Core is its top line. Xeon CPUs are for offices and companies. Intel’s Celeron and Pentium series are slower and weaker than Core.

Advanced Micro Devices (AMD) sells CPUs and APUs. APUs combine a CPU with integrated Radeon graphics on the same chip. AMD’s Ryzen CPUs are popular for video games and offer high speed and performance. AMD has shifted its Athlon processors from a high-end line to a basic computing option.

Arm: Arm licenses its high-end CPU designs and other proprietary technologies to equipment manufacturers. Apple, for example, designs its own Arm-based processors for Macs instead of using Intel chips, and other companies have followed a similar path.

CPU/processor related concepts

GPUs

Although the name “graphics processing unit” emphasises graphics, it doesn’t capture the GPU’s real strength: speed, achieved by running many operations in parallel. That speed is why GPUs are used to accelerate computer graphics.

Originally used in PCs, smartphones, and video game consoles, the GPU is an electronic circuit. GPUs are now also used for cryptocurrency mining and neural network training.

Microprocessors

Computer science produced the microprocessor, a CPU small enough to fit on a chip, to continue miniaturising computers. Microprocessors are categorised by core count.

CPU cores are “the brain within the brain”: the CPU’s physical processing units. Some microprocessors contain multiple processing cores. A physical core is a processing unit built onto a chip that occupies a single socket, which lets several physical cores share the same computing environment.

Output devices

Computing would be far more constrained without output devices to act on the results of CPU instructions. Peripherals and other external devices extend a computer’s functionality.

Computer users rely on peripherals to communicate with their computers and get them to follow their commands. Examples include keyboards, mice, scanners, and printers.

Modern computers have more than peripherals. Video cameras and microphones are widely used input/output devices.

Use of power

Power use affects many concerns. One is the heat generated by multi-core processors and how to dissipate it to protect the CPU. For this reason, hyperscale data centres with thousands of servers have substantial air-conditioning and cooling systems.

Sustainability issues arise even when discussing a few machines rather than thousands. More powerful computers and CPUs, often running at clock speeds measured in gigahertz (GHz), demand more energy to run.

Specialised chips

Artificial intelligence (AI), the biggest computing development since its inception, affects most computing settings. Speciality processors for AI and other complicated tasks are emerging in the CPU space:

The Tensor Streaming Processor (TSP) handles AI and ML workloads. Also suitable for AI work are the AMD Ryzen Threadripper 3990X 64-Core processor and the Intel Core i9-13900KS Desktop Processor with 24 cores.

Many video editors choose the 20-core, 28-thread Intel Core i7-14700KF CPU. Others choose the AMD Ryzen 9 7900X, one of AMD’s top CPUs for video editing.

The AMD Ryzen 7 5800X3D uses 3D V-Cache, extra cache memory stacked on the chip, to boost gaming performance.

Any current AMD or Intel processor should be sufficient for Windows and multimedia website viewing.

Transistors

Transistors are crucial to electronics and computing. The name comes from “transfer resistance,” and a transistor is a semiconductor component used to control the flow of electrical current in a circuit.

Transistors are fundamental in computing: the transistor is the building block of all microchips. In the CPU, transistors act as tiny switches that represent the binary 0s and 1s computers use to evaluate Boolean logic.
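To show how Boolean logic built from transistors lets a CPU add numbers, here is a sketch of a ripple-carry adder made from AND, OR, and XOR gates. Each gate is modelled as a Python function on single bits (in hardware, each gate is a small group of transistors). The 8-bit width and the function names are illustrative assumptions, not the circuit of any particular CPU.

```python
from __future__ import annotations


def and_gate(a: int, b: int) -> int:
    return a & b


def or_gate(a: int, b: int) -> int:
    return a | b


def xor_gate(a: int, b: int) -> int:
    return a ^ b


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three single bits and return (sum_bit, carry_out)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out


def add_binary(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry adder: chain `width` full adders, least significant bit first."""
    carry = 0
    result = 0
    for i in range(width):
        bit_sum, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit_sum << i
    return result  # a carry out of the top bit is dropped, like 8-bit overflow


print(add_binary(0b0101, 0b0011))  # 5 + 3 = 8
print(add_binary(200, 100))        # 300 wraps around to 44 in 8 bits
```

Each full adder passes its carry to the next, which is why this design is called "ripple-carry"; real CPUs typically use faster carry-lookahead variants built from the same kinds of gates.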

The next CPU wave

Computer scientists constantly improve CPU output and usefulness. Future CPU projections:

New chip materials: Silicon chips have long dominated computing and electronics, but new chip materials could boost performance in the next generation of processors. Carbon nanotubes, roughly 100,000 times thinner than a human hair, show excellent thermal conductivity; graphene has excellent thermal and electrical properties; and spintronics, which exploits electron spin, could produce spin-based transistors.

Quantum computing: Current CPUs use binary, but quantum computing will change that. Instead of classical binary logic, quantum computing draws on quantum mechanics, which has revolutionised physics. A quantum bit (qubit) is not limited to 0 or 1; it can exist in a superposition of both states. Quantum algorithms exploit this to tackle certain problems far faster than classical computers, so for those workloads users could see a significant increase in computing speed and processing capability.
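To make superposition concrete, the sketch below simulates one qubit classically. A qubit's state is a pair of complex amplitudes (α, β), and measuring it gives 0 with probability |α|² and 1 with probability |β|². The Hadamard gate turns the state |0⟩ into an equal superposition. This only illustrates the maths; simulating qubits on a CPU like this gives no quantum speedup.

```python
from __future__ import annotations

import math


def hadamard(state: tuple[complex, complex]) -> tuple[complex, complex]:
    """Apply the Hadamard gate to a single-qubit state (alpha, beta)."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return s * (alpha + beta), s * (alpha - beta)


def measurement_probabilities(state: tuple[complex, complex]) -> tuple[float, float]:
    """Probabilities of reading 0 and 1: |alpha|**2 and |beta|**2."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2


zero = (1 + 0j, 0j)     # the qubit prepared in state |0>, like a classical 0
plus = hadamard(zero)   # equal superposition of |0> and |1>

print(measurement_probabilities(zero))            # (1.0, 0.0)
print(measurement_probabilities(plus))            # approximately (0.5, 0.5)
print(measurement_probabilities(hadamard(plus)))  # back to approximately (1.0, 0.0)
```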

AI everywhere: As AI becomes more prevalent in the computing business and our daily lives, it will directly influence CPU architecture. Expect more AI functionality in computer hardware, much more efficient AI processing, faster processing speeds, and devices that make real-time decisions independently. While we wait for these changes to reach everyday hardware, Cerebras has reportedly introduced the “fastest AI chip in the world”: the WSE-3, which can train AI models with up to 24 trillion parameters. This mega-chip has 900,000 cores and four trillion transistors.

CPUs with strength and flexibility

Companies expect a lot from their machines. Those computers need CPUs with the processing power to meet today’s data-intensive business workloads.

Organisations need adaptable solutions. Smart computing requires equipment that can adapt to your objective. You can focus on your work with IBM servers’ strength and flexibility. Find IBM servers to achieve your organization’s goals today and tomorrow.

Read more on Govindhtech.com

#cpus#centralprocessingunits#microprocessors#IntelCore#AMD#Computerkeyboard#desktop#news#technews#technologynews#technologytrends#govindhtech

0 notes

Text

What Causes Quantum Tunneling 2025


Quantum tunneling is one of the most bizarre and fascinating phenomena in modern physics. Imagine a tiny particle breaking the rules of classical physics, passing through a barrier it seemingly shouldn't be able to cross. That’s quantum tunneling. In 2025, advancements in quantum field theory and nanoscale technologies have deepened our understanding of this phenomenon and its implications for both fundamental science and practical technologies. Let’s break down what causes quantum tunneling, how it works, and why it matters.

Book-Level Explanation

In classical physics, if a particle does not have enough energy to overcome a barrier, it simply cannot cross it. For example, a ball thrown at a hill that’s too high will roll back. But in quantum mechanics, particles behave not just like solid objects but also like waves. This duality is at the heart of quantum tunneling.

Wavefunction and Probability

In quantum mechanics, the behavior of a particle is described by a wavefunction (Ψ), which encodes the probabilities of the particle’s position and momentum. This wavefunction does not abruptly stop at a barrier; instead, it decays exponentially inside the barrier. If the barrier is thin or low enough, the wavefunction is still nonzero on the other side, meaning there is a non-zero probability that the particle will appear beyond the barrier even though it doesn’t have the classical energy to cross it. This is quantum tunneling.

Key Factors Causing Quantum Tunneling:
- Heisenberg’s Uncertainty Principle: One cannot precisely know both the position and momentum of a particle simultaneously. In a popular heuristic picture, this uncertainty lets a particle momentarily "borrow" energy to get past a barrier.
- Wavefunction Penetration: The wavefunction associated with a particle doesn’t just vanish at the edge of a barrier. It decreases but continues through the barrier, leading to a probability that the particle can appear on the other side.
- Barrier Characteristics: The thickness and height of the potential barrier affect tunneling probability. A thinner or lower barrier increases the chance of tunneling.
- Quantum Superposition: A particle doesn't take a single path; in the path-integral picture, it explores all possible paths simultaneously, including paths that penetrate the barrier.

Mathematical Expression

In simple cases, the tunneling probability (T) can be approximated as:

T ≈ e^(-2κa)

Where:
- κ = √(2m(V − E)) / ħ
- m is the particle’s mass
- V is the barrier height
- E is the particle’s energy (with E < V)
- a is the barrier width
- ħ is the reduced Planck constant

This formula shows that tunneling is more likely when the barrier is thin or the energy difference (V − E) is small.
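Plugging numbers into the approximation above shows how sharply T depends on the barrier. The sketch assumes a rectangular barrier 1 eV higher than the particle's energy and widths of 0.5 nm and 1 nm; these values, and the electron-versus-proton comparison, are illustrative choices, not from the article. The simple exponential form is only reliable for fairly thick barriers (κa well above 1), and it drops a prefactor that depends on E and V.

```python
import math

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg
PROTON_MASS = 1.67262192369e-27   # kg
EV = 1.602176634e-19              # joules per electronvolt


def tunneling_probability(mass_kg: float, barrier_ev: float,
                          energy_ev: float, width_m: float) -> float:
    """Approximate T ≈ exp(-2 * kappa * a), with kappa = sqrt(2m(V - E)) / hbar."""
    if energy_ev >= barrier_ev:
        raise ValueError("this approximation assumes E < V")
    kappa = math.sqrt(2 * mass_kg * (barrier_ev - energy_ev) * EV) / HBAR
    return math.exp(-2 * kappa * width_m)


# Hypothetical case: a 2 eV barrier and a 1 eV particle, so V - E = 1 eV.
for width_nm in (0.5, 1.0):
    t_e = tunneling_probability(ELECTRON_MASS, 2.0, 1.0, width_nm * 1e-9)
    t_p = tunneling_probability(PROTON_MASS, 2.0, 1.0, width_nm * 1e-9)
    print(f"a = {width_nm} nm: electron T ≈ {t_e:.1e}, proton T ≈ {t_p:.1e}")
```

With these inputs, doubling the width from 0.5 nm to 1 nm drops the electron's T from roughly 6 × 10⁻³ to roughly 3.5 × 10⁻⁵, and the far heavier proton barely tunnels at all, which is why tunneling matters most for light particles such as electrons.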

Easy Explanation

Let’s say you’re in a room with walls, and you don’t have the energy to jump over or break through them. In the classical world, you’re stuck. But in the quantum world, you’re like a ghost with a small chance of magically showing up on the other side, even though you didn’t go over or through the wall the normal way. That’s quantum tunneling. Particles like electrons are not just little balls; they’re also waves of possibility. These waves can “leak” through barriers. If the barrier isn’t too thick or too high, part of the wave gets through, and sometimes the particle appears on the other side. This doesn’t mean it breaks the rules; it follows the strange rules of quantum mechanics, where “impossible” things are just very, very unlikely… but not impossible.

Real-World Applications in 2025

Quantum tunneling isn’t just a curious theory; it powers technologies we use today and is central to cutting-edge research in 2025:
- Semiconductors and Transistors: In modern electronics, especially in nanoscale transistors, tunneling can cause current leakage. Engineers now design devices that either reduce unwanted tunneling or exploit it.
- Tunnel Diodes: These components intentionally use tunneling to let current pass through them in unusual ways, enabling fast-switching electronics.
- Scanning Tunneling Microscope (STM): This device uses tunneling to create atomic-scale images of surfaces. It works by bringing a sharp tip close to a surface and measuring the tunneling current between them.
- Nuclear Fusion and Radioactive Decay: In stars, nuclei tunnel through the electrical repulsion barrier between them to fuse and release energy. Alpha decay in unstable atoms is also caused by quantum tunneling.
- Quantum Computing: Tunneling is a key aspect of how qubits behave inside superconducting quantum circuits.
Why This Matters

Understanding quantum tunneling helps scientists and engineers unlock the potential of quantum mechanics for both theoretical advancements and practical technologies. In 2025, it plays a pivotal role in:
- Quantum chip design for faster processors.
- Quantum sensors with extreme precision.
- Energy solutions, especially in fusion research and superconductivity.

Tunneling shows us how reality works on the tiniest scale, and reminds us that the universe is far more flexible and strange than our everyday experiences suggest.

External Link for Further Reading: For a deeper dive into the physics of tunneling: https://en.wikipedia.org/wiki/Quantum_tunnelling

Our Blogs You Might Like
- What If Time Travel Became Scientifically Possible 2025 https://edgythoughts.com/what-if-time-travel-became-scientifically-possible-2025
- How Does Quantum Entanglement Work 2025 https://edgythoughts.com/how-does-quantum-entanglement-work-2025

Disclaimer: The easy explanation is provided to make the concept accessible to everyone, including beginners. If you're a student preparing for exams, always refer to your official textbooks, class notes, and follow academic guidelines. Our goal is to help you understand, not replace formal learning.

Read the full article

#20250101t0000000000000#2025httpsedgythoughtscomhowdoesquantumentanglementwork2025#2025httpsedgythoughtscomwhatiftimetravelbecamescientificallypossible2025#alphadecay#alphaparticle#basicresearch#behavior#centralprocessingunit#classicalantiquity#classicalphysics#computing#concept#education#electron#electronics#energy#explanation#expressionmathematics#fieldphysics#formula#fusionpower#height#information#integratedcircuit#leakageelectronics#learning#mathematics#matter#mechanics#microscope

0 notes

Text

Hardware acceleration and its importance

Hardware acceleration is a feature that offloads part of the central processing unit's workload to other hardware. You may have seen hardware acceleration in the menus of various apps on many devices, such as Android phones. The feature isn't always available in smartphone apps, but a number of popular Android apps, such as YouTube, Chrome, and Facebook, use it. Hardware acceleration has many uses, from more efficient audio and video rendering to more legible text and faster 2D graphics and user-interface animations. If the feature is available to you, it's generally best to use it, although in some cases it can cause glitches and bugs. If you have questions about it and are unsure whether to use it, read on.

What is hardware acceleration?

Hardware acceleration uses specialised hardware to make tasks run faster and more efficiently than they would on the processor alone. This is usually done by offloading part of the processing work to the graphics processing unit, the digital signal processor, and other hardware blocks dedicated to specific tasks. Hardware acceleration works much like heterogeneous computing: instead of relying on a particular platform's SDK to access the various processing components, the operating system exposes the available kinds of acceleration to software developers. When hardware acceleration is disabled, the processor can still perform the required functions in software, but more slowly than when the feature is enabled. One of its most common uses is video encoding and decoding, which takes part of the CPU's processing load off it. For example, rather than decoding a video stream on the central processing unit, which is not very efficient, graphics cards and other hardware often have dedicated blocks for video encoding and decoding that can do the job far more efficiently. Similarly, decompressing an audio file on a digital signal processor is much faster than doing it on the central processing unit. Another common use of hardware acceleration is speeding up 2D graphics; user interfaces, for example, have to render large numbers of graphical shapes, text, and animations. The central processing unit can do this, but the graphics processing unit performs such operations faster. Such tasks include applying an anti-aliasing filter to text so it looks smoother, or placing a semi-transparent overlay on a video. Examples of more advanced graphics tasks include physics acceleration and ray-traced lighting.
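The fallback behaviour described above, where the CPU takes over in software when acceleration is unavailable, is a common dispatch pattern. The sketch below is hypothetical throughout: `software_decode`, `make_decoder`, and the accelerator functions are invented names, and reversing bytes merely stands in for real decoding work. Real platforms expose hardware decoders through their own media APIs.

```python
from __future__ import annotations

from typing import Callable, Optional

Decoder = Callable[[bytes], bytes]


def software_decode(data: bytes) -> bytes:
    """CPU fallback path (placeholder work: reverse the bytes)."""
    return bytes(reversed(data))


def make_decoder(hw_decode: Optional[Decoder]) -> Decoder:
    """Return a decoder that prefers the accelerator and falls back to the CPU."""

    def decode(data: bytes) -> bytes:
        if hw_decode is not None:
            try:
                return hw_decode(data)
            except RuntimeError:
                pass  # accelerator refused this input; use the CPU instead
        return software_decode(data)

    return decode


def fake_accelerator(data: bytes) -> bytes:
    """Hypothetical hardware decoder that produces the same result."""
    return bytes(reversed(data))


def unsupported_accelerator(data: bytes) -> bytes:
    """Hypothetical hardware decoder that cannot handle this format."""
    raise RuntimeError("format not supported by hardware block")


for hw in (None, fake_accelerator, unsupported_accelerator):
    print(make_decoder(hw)(b"frame"))  # b'emarf' in every case
```

The output is the same on every path; in a real system the difference is speed and power use, which is the trade-off the article describes.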

Why is hardware acceleration important?

The processor is the general-purpose workhorse of a computer system and is designed to perform any task assigned to it. That flexibility, however, means the processor is not always the most efficient component for specific tasks, such as video decoding or graphics rendering, which involve huge numbers of repetitive mathematical operations. Hardware acceleration moves common tasks from the central processing unit to specialised hardware that performs them not only faster but also more efficiently, keeping the system cooler and extending battery life. When dedicated video-decoding blocks are used instead of running the algorithm on the processor, a user can watch more high-quality video on a single charge. It also frees the processor for other work and makes apps more responsive. Using extra hardware for processing has a cost, so designers must decide which features are worth supporting with dedicated hardware. For example, dedicated support for some video codecs does not deliver benefits that justify its cost. Hardware acceleration has become an important tool in computer systems, from powerful personal computers to smartphones, and the uses of dedicated hardware are growing with the arrival of machine learning applications. That said, most of the time it is used simply to reduce battery drain while watching YouTube videos. Read the full article

#Acceleration#centralprocessingunit#Hardware#Hardwareacceleration#performance#processor#youtube#پردازنده#سختافزار#شتاب#شتابسختافزاری

0 notes

Text

Bitbox: "Where Computing Meets Personalized Solutions."

Learn more: https://www.bitboxpc.com/

#Bitbox #bitboxmonitors #monitor #Bitboxtnpanel #bitboxpc #pc #Bitboxdesktop #Desktopmonitors

#microtower #bitboxmicrotower #microtowerpc #bitboxcpu #system #computersystem #cpu #CentralProcessingUnit #bitboxpc #PC #UltimatePC #technology #ComputerManufacturer #PCmanufacturer #Computer

0 notes

Photo

#centralprocessingunit #computer #board #technology #hardware #💻 #🎨 #🚪 #chip #circuit #equipment #data #close #memory #device #digital #electronics #old #business #motherboard (here: Athens, Greece) https://www.instagram.com/p/Bs5_3kvhFdu/?utm_source=ig_tumblr_share&igshid=cr40aerorz9j

#centralprocessingunit#computer#board#technology#hardware#💻#🎨#🚪#chip#circuit#equipment#data#close#memory#device#digital#electronics#old#business#motherboard

0 notes

Photo

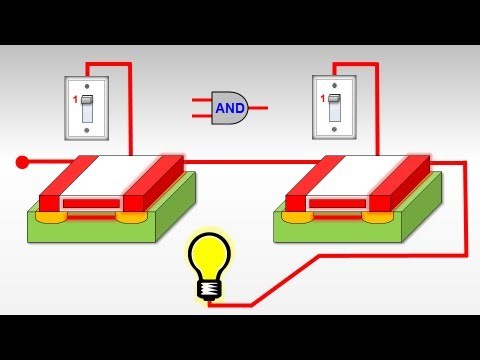

See How Computers Add Numbers In One Lesson: Take a look inside your computer to se... #blogema #andgate #animation #binary #binarynumbers #centralprocessingunit #computer #computers #cpu #desktop #electronics #howcomputerswork #howprocessorswork #howtransistorswork #inonelesson #ipad #iphone #laptop #logicgates #microchip #microprocessor #minecraft #mosfet #technology #transistors #xorgate

0 notes