#cloudwatch and cloudtrail in aws

Compare CloudTrail Vs CloudWatch Services in AWS

Full video link: https://youtube.com/shorts/izMnQPCZeQk

Check out CodeOneDigest's latest video tutorial on AWS CloudTrail vs. CloudWatch! Learn about AWS CloudTrail and CloudWatch and stay ahead of the technology curve. #CodeOneDigest #YouTube #cloud

CloudTrail enables auditing, security monitoring, and operational troubleshooting by tracking user activity and API usage. CloudTrail logs, monitors, and retains account activity related to user actions across your AWS infrastructure, giving you control over storage, analysis, and remediation actions. CloudTrail is active in your AWS account from the moment you create the account and doesn't require any manual setup.…
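CloudTrail delivers its log files as JSON documents with a top-level `Records` array. Each record carries fields such as `eventTime`, `eventSource`, `eventName`, `awsRegion`, `sourceIPAddress`, and `userIdentity`. The minimal sketch below summarises who called which API and from where. The sample record values (user name, IP addresses, times) are made up for illustration, and the code does not fetch real logs from S3.

```python
import json

# Illustrative CloudTrail log file content; all values are made up.
SAMPLE_LOG = """
{
  "Records": [
    {
      "eventVersion": "1.08",
      "eventTime": "2024-01-15T10:22:31Z",
      "eventSource": "ec2.amazonaws.com",
      "eventName": "StopInstances",
      "awsRegion": "us-east-1",
      "sourceIPAddress": "203.0.113.10",
      "userIdentity": {"type": "IAMUser", "userName": "alice"}
    },
    {
      "eventVersion": "1.08",
      "eventTime": "2024-01-15T10:25:02Z",
      "eventSource": "s3.amazonaws.com",
      "eventName": "DeleteBucket",
      "awsRegion": "us-east-1",
      "sourceIPAddress": "198.51.100.7",
      "userIdentity": {"type": "AssumedRole"}
    }
  ]
}
"""


def summarize(log_text):
    """Return one line per record: time, caller, service:action, source IP."""
    lines = []
    for record in json.loads(log_text)["Records"]:
        identity = record.get("userIdentity", {})
        # Not every identity type has a userName (e.g. assumed roles), so fall back to the type.
        caller = identity.get("userName", identity.get("type", "unknown"))
        service = record["eventSource"].split(".")[0]
        lines.append(f'{record["eventTime"]} {caller} {service}:{record["eventName"]} from {record["sourceIPAddress"]}')
    return lines


for line in summarize(SAMPLE_LOG):
    print(line)
```

CloudWatch answers "how is my system performing?" with metrics and logs. CloudTrail answers "who did what, and when?", which is what this summary extracts.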

How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services such as Amazon SageMaker, Google AI Platform, or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.
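Managed auto-scaling (for example, target tracking policies) does this calculation for you. The sketch below is a simplified, hypothetical approximation of the idea, not the exact algorithm any provider uses: capacity is scaled in proportion to how far a per-instance metric sits from its target, then clamped to the group's minimum and maximum size.

```python
import math


def desired_capacity(current, metric_value, target_value, min_size, max_size):
    """Simplified proportional scaling rule (an illustration, not a provider's algorithm).

    If average CPU is 90% against a 60% target, capacity grows by a factor of 90/60.
    The result is rounded up and clamped to [min_size, max_size].
    """
    if current <= 0:
        return min_size
    raw = math.ceil(current * metric_value / target_value)
    return max(min_size, min(max_size, raw))


print(desired_capacity(4, 90.0, 60.0, min_size=2, max_size=10))   # scale out
print(desired_capacity(4, 20.0, 60.0, min_size=2, max_size=10))   # scale in, floored at min_size
print(desired_capacity(8, 100.0, 50.0, min_size=2, max_size=10))  # capped at max_size
```

Rounding up errs on the side of performance. Real services also add cooldowns or warm-up periods so that capacity does not oscillate.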

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU or TPU instances for deep learning tasks to accelerate training.

Spot Instances:

Utilize spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks.

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal model parameters. Services such as Google Cloud AI Platform's HyperTune or Amazon SageMaker Automatic Model Tuning can help.
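Managed tuners offer strategies such as random and Bayesian search. The minimal sketch below shows plain random search with a log-uniform learning rate. The `objective` function is a hypothetical stand-in for "train a model and return its validation loss", and the search ranges are assumptions for illustration.

```python
import random


def objective(learning_rate, batch_size):
    """Hypothetical validation loss; a real objective would train and evaluate a model."""
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 64) / 64


def random_search(n_trials, seed=0):
    """Sample n_trials configurations and keep the one with the lowest loss."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            # Sample the learning rate log-uniformly between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = objective(**params)
        if best is None or loss < best[0]:
            best = (loss, params)
    return best


loss, params = random_search(50)
print(f"best loss {loss:.4f} with {params}")
```

Each trial is independent, so a cloud tuning service can run many of them in parallel on separate instances. That parallelism is the main time saving over tuning on a single machine.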

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.
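Frameworks such as PyTorch (`DistributedDataParallel`) and TensorFlow (`MultiWorkerMirroredStrategy`) implement synchronous data parallelism across real machines. The sketch below only simulates the core idea in one process: each "worker" computes the gradient on its own shard of the batch, and the gradients are averaged (the all-reduce step) before every replica applies the same update. The linear-regression model and synthetic data are assumptions chosen to keep the example small.

```python
def gradient(w, b, xs, ys):
    """Gradient of mean squared error for the model y ~ w*x + b on one shard."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


def data_parallel_step(w, b, xs, ys, workers, lr):
    """One synchronous data-parallel gradient step with simulated workers."""
    assert len(xs) % workers == 0, "equal shard sizes keep the averaged gradient exact"
    size = len(xs) // workers
    # Each worker sees only its own shard of the batch.
    grads = [gradient(w, b, xs[i * size:(i + 1) * size], ys[i * size:(i + 1) * size])
             for i in range(workers)]
    # All-reduce: average per-worker gradients so every replica applies the same update.
    dw = sum(g[0] for g in grads) / workers
    db = sum(g[1] for g in grads) / workers
    return w - lr * dw, b - lr * db


xs = [i / 10 for i in range(40)]
ys = [3 * x + 1 for x in xs]  # synthetic data: true w = 3, b = 1
w, b = 0.0, 0.0
for _ in range(1000):
    w, b = data_parallel_step(w, b, xs, ys, workers=4, lr=0.05)
print(round(w, 3), round(b, 3))
```

With equal-sized shards, the average of the shard gradients equals the full-batch gradient. Adding workers therefore changes where the computation happens, not the result of each step.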

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. Amazon CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.

Logging:

Maintain detailed logs for debugging and performance analysis using tools such as Amazon CloudWatch Logs or Google Cloud Logging. AWS CloudTrail complements these by recording API activity for auditing.

Model Deployment:

Serverless Deployment:

Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference tasks without managing servers.

Model Optimization:

Optimize models by compressing them (for example, through quantization or pruning) or by using model distillation to reduce inference time and latency.
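Quantization is one common form of compression. The minimal sketch below shows symmetric per-tensor int8 quantization in plain Python: each weight is stored as an integer in [-127, 127] plus a single float scale, which cuts weight storage roughly fourfold compared with float32. The weight values are made up. Distillation, which trains a smaller "student" model, is a different technique and is not shown.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.52, -1.27, 0.003, 0.9, -0.41]  # illustrative values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(scale, 4), round(max_err, 5))
```

The rounding error per weight is at most half the scale. Accuracy should still be re-checked on a validation set after quantizing a real model.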

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.

Mastering AWS DevOps in 2025: Best Practices, Tools, and Real-World Use Cases

In 2025, the cloud ecosystem continues to grow rapidly. Organizations of every size are embracing AWS DevOps to automate software delivery, improve security, and scale efficiently. Mastering AWS DevOps means knowing the right combination of tools, best practices, and real-world use cases that deliver success in production.

This guide covers the most important elements of AWS DevOps, the best practices for 2025, and real-world examples of how top companies use AWS DevOps to compete.

What is AWS DevOps?

AWS DevOps is the union of cultural principles, practices, and tools on Amazon Web Services that enhances an organization's capacity to deliver applications and services at a higher speed. It facilitates continuous integration, continuous delivery, infrastructure as code, monitoring, and cooperation among development and operations teams.

Why AWS DevOps is Important in 2025

As organizations require quicker innovation and zero downtime, DevOps on AWS offers the flexibility and reliability to compete. Trends such as AI integration, serverless architecture, and automated compliance are changing how teams adopt DevOps in 2025.

Advantages of adopting AWS DevOps:

1. Faster deployment cycles

2. Enhanced system reliability

3. Flexible and scalable cloud infrastructure

4. Automation from code to production

5. Integrated security and compliance

Best AWS DevOps Tools to Learn in 2025

These are the most critical tools fueling current AWS DevOps pipelines:

AWS CodePipeline

A fully managed continuous integration and continuous delivery (CI/CD) service that automates your release process.

AWS CodeBuild

A fully managed, scalable build service that compiles source code, runs tests, and produces ready-to-deploy packages.

AWS CodeDeploy

Automates code deployments to EC2, Lambda, ECS, or on-premises servers, using strategies such as blue/green deployments and gradual traffic shifting to minimize downtime.
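For Lambda and ECS, CodeDeploy offers predefined canary and linear configurations (for example, `CodeDeployDefault.LambdaCanary10Percent5Minutes`) that move traffic to the new version in steps. The sketch below only models the resulting schedules as (minute, percent on new version) pairs, to show how the two styles differ. It is an illustration under that assumption, not CodeDeploy's implementation, and it ignores the automatic rollback that alarms can trigger.

```python
def canary_schedule(first_percent, wait_minutes):
    """Two-step canary: a small slice first, then everything after the wait."""
    return [(0, first_percent), (wait_minutes, 100)]


def linear_schedule(step_percent, interval_minutes):
    """Shift step_percent more traffic every interval until 100 percent."""
    schedule, percent, minute = [], 0, 0
    while percent < 100:
        percent = min(100, percent + step_percent)
        schedule.append((minute, percent))
        minute += interval_minutes
    return schedule


print(canary_schedule(10, 5))
print(linear_schedule(10, 1))
```

A canary exposes few users while you watch metrics, then switches over in one step. A linear rollout spreads the risk evenly over time.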

AWS CloudFormation and CDK

Manage infrastructure as code (IaC), allowing repeatable, versioned cloud environments.

Amazon CloudWatch

Facilitates logging, metrics, and alerting to track application and infrastructure performance.

AWS Lambda

Serverless compute that runs code in response to triggers, well-suited for event-driven DevOps automation.

AWS DevOps Best Practices in 2025

1. Adopt Infrastructure as Code (IaC)

Use AWS CloudFormation or Terraform to declare infrastructure. This makes environments repeatable, easier to collaborate on, and version-controlled.
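To make "declaring infrastructure" concrete, the minimal sketch below builds a CloudFormation template (in its JSON form) that declares one versioned S3 bucket and outputs its name. The logical ID `ArtifactBucket` and the description are hypothetical. Generating the template from Python is just one option; teams often write the YAML or JSON by hand, or use the CDK.

```python
import json


def versioned_bucket_template(bucket_logical_id="ArtifactBucket"):
    """Return a minimal CloudFormation template declaring one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Minimal example: a versioned S3 bucket for build artifacts",
        "Resources": {
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Keep every object version so artifacts can be rolled back.
                    "VersioningConfiguration": {"Status": "Enabled"}
                },
            }
        },
        "Outputs": {
            # Ref on an S3 bucket resolves to the bucket name.
            "BucketName": {"Value": {"Ref": bucket_logical_id}}
        },
    }


print(json.dumps(versioned_bucket_template(), indent=2))
```

Save the output to a file, commit it to version control, and deploy it with, for example, `aws cloudformation deploy --template-file template.json --stack-name demo-stack`. Every environment created from the same file then starts identical.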

2. Use Full CI/CD Pipelines

Implement tools such as CodePipeline, GitHub Actions, or Jenkins on AWS to automate building, testing, and deployment.

3. Shift Left on Security

Bake security in early with Amazon Inspector, CodeGuru, and Secrets Manager, and automate vulnerability scans as part of CI/CD.

4. Monitor Everything

Utilize CloudWatch, X-Ray, and CloudTrail to achieve complete observability into your system. Implement alerts to detect and respond to problems promptly.
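CloudWatch alarms can fire on "M out of N" datapoints, configured through the `EvaluationPeriods` and `DatapointsToAlarm` settings. This reduces noise from single spikes. The sketch below models that rule for a `GreaterThanThreshold` comparison. It is a simplification: real alarms have configurable missing-data handling that is not modelled here, and the CPU values are hypothetical.

```python
def alarm_state(datapoints, threshold, evaluation_periods, datapoints_to_alarm):
    """Evaluate an 'M out of N' alarm with a greater-than-threshold comparison."""
    window = datapoints[-evaluation_periods:]
    if len(window) < evaluation_periods:
        # Simplification: real alarms let you choose how missing data is treated.
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


# Hypothetical CPU utilization (%) for five consecutive 1-minute periods.
cpu = [40, 85, 90, 50, 95]
print(alarm_state(cpu, threshold=80, evaluation_periods=5, datapoints_to_alarm=3))
print(alarm_state(cpu, threshold=80, evaluation_periods=5, datapoints_to_alarm=4))
```

Requiring three of five breaching periods catches sustained load while ignoring a single brief spike.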

5. Use Containers and Serverless for Scalability

Utilize Amazon ECS, EKS, or Lambda for autoscaling. These services lower infrastructure management overhead and enhance efficiency.

Real-World AWS DevOps Use Cases

Use Case 1: Scalable CI/CD for a Fintech Startup

A fintech startup used AWS CodePipeline and CodeDeploy to automate deployments to both its staging and production environments. By containerizing with ECS and relying on CloudWatch monitoring, the team reduced deployment errors by 80 percent and achieved near-zero downtime.

Use Case 2: Legacy Modernization for an Enterprise

A legacy enterprise moved its on-premises applications to AWS using CloudFormation and EC2 Auto Scaling. By adopting full-stack DevOps pipelines and moving to microservices on EKS, it improved time-to-market by 60 percent.

Use Case 3: Serverless DevOps for a SaaS Product

A SaaS organization used AWS Lambda and API Gateway for its backend functions. With CodeBuild and CloudWatch in its pipeline, it shipped features quickly, and the serverless backend scaled automatically during peak usage without any infrastructure provisioning.

Top Trends in AWS DevOps in 2025

AI-driven DevOps: Integration with CodeWhisperer, CodeGuru, and machine learning algorithms for intelligence-driven automation

Compliance-as-Code: Governance policies automated using services such as AWS Config and Service Control Policies

Multi-account strategies: Employing AWS Organizations for scalable, secure account management

Zero Trust Architecture: Implementing strict identity-based access with IAM, SSO, and MFA

Hybrid Cloud DevOps: Connecting on-premises systems to AWS for effortless deployments

Conclusion

In 2025, becoming a master of AWS DevOps means syncing your development workflows with cloud-native architecture, innovative tools, and current best practices. With AWS, teams are able to create secure, scalable, and automated systems that release value at an unprecedented rate.

Begin with automating your pipelines, securing your deployments, and scaling with confidence. DevOps is the way of the future, and AWS is leading the way.

Frequently Asked Questions

What distinguishes AWS DevOps from DevOps?

DevOps itself is a practice; AWS DevOps carries out that practice using AWS services and tools.

Can small teams benefit from AWS DevOps?

Yes. AWS provides fully managed services that enable small teams to scale and automate without having to handle complicated infrastructure.

Which programming languages does AWS DevOps support?

AWS supports all the major languages, including Python, Node.js, Java, Go, .NET, Ruby, and many more.

Is AWS DevOps suitable for enterprise-scale applications?

Yes. Large enterprises run large-scale, multi-region applications with millions of users using AWS DevOps.

How to Secure AI Artifacts Across the ML Lifecycle – Anton R Gordon’s Protocol for Trusted Pipelines

In today’s cloud-native AI landscape, securing machine learning (ML) artifacts is no longer optional—it’s critical. As AI models evolve from experimental notebooks to enterprise-scale applications, every artifact generated—data, models, configurations, and logs—becomes a potential attack surface. Anton R Gordon, a seasoned AI architect and cloud security expert, has pioneered a structured approach for securing AI pipelines across the ML lifecycle. His protocol is purpose-built for teams deploying ML workflows on platforms like AWS, GCP, and Azure.

Why ML Artifact Security Matters

Machine learning pipelines involve several critical stages—data ingestion, preprocessing, model training, deployment, and monitoring. Each phase produces artifacts such as datasets, serialized models, training logs, and container images. If compromised, these artifacts can lead to:

Data leakage and compliance violations (e.g., GDPR, HIPAA)

Model poisoning or backdoor attacks

Unauthorized model replication or intellectual property theft

Reduced model accuracy due to tampered configurations

Anton R Gordon’s Security Protocol for Trusted AI Pipelines

Anton’s methodology combines secure cloud services with DevSecOps principles to ensure that ML artifacts remain verifiable, auditable, and tamper-proof.

1. Secure Data Ingestion & Preprocessing

Anton R Gordon emphasizes securing the source data using encrypted S3 buckets or Google Cloud Storage with fine-grained IAM policies. All data ingestion pipelines must implement checksum validation and data versioning to ensure integrity.
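Checksum validation can be sketched with the standard library alone. The example below streams a file through SHA-256, records the digest at ingestion, and re-checks it later. Where the expected digest is stored (for example, a manifest kept alongside the versioned data) is an assumption; the protocol as described does not specify it.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=1 << 20):
    """Stream the file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def validate_file(path, expected_hex):
    """True if the file's current SHA-256 digest matches the recorded one."""
    return sha256_of_file(path) == expected_hex


# Demo: record a digest at ingestion time, then re-check before preprocessing.
data = b"id,label\n1,cat\n2,dog\n"
with tempfile.NamedTemporaryFile(delete=False, suffix=".csv") as tmp:
    tmp.write(data)
    path = tmp.name
recorded = hashlib.sha256(data).hexdigest()
print("intact:", validate_file(path, recorded))
with open(path, "ab") as f:
    f.write(b"3,tampered\n")  # simulate an unexpected modification
print("intact after change:", validate_file(path, recorded))
os.remove(path)
```

Any change to the bytes, even a single appended row, produces a different digest. A pipeline step can therefore refuse to process data whose digest no longer matches.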

He recommends integrating AWS Glue with Data Catalog encryption enabled, and VPC-only connectivity to eliminate exposure to public internet endpoints.

2. Model Training with Encryption and Audit Logging

During training, Anton R Gordon suggests enabling SageMaker Training Jobs with KMS encryption for both input and output artifacts. Logs should be streamed to CloudWatch Logs or Google Cloud Logging with retention policies configured.

Docker containers used in training should be scanned with Amazon Inspector or GCP Container Analysis, and signed using tools like cosign to verify authenticity during deployment.

3. Model Registry and Artifact Signing

A crucial step in Gordon’s protocol is registering models in a version-controlled model registry, such as SageMaker Model Registry or MLflow, along with cryptographic signatures.

Models are hashed with SHA-256, and the digest is cryptographically signed and stored with the corresponding metadata to prevent rollback or substitution attacks. Signing ensures that only approved models proceed to deployment.
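The sketch below shows the hash, sign, and verify flow, plus a version check against rollback. It uses an HMAC as a stand-in signature because HMAC is available in the standard library. This is a swapped-in technique: production setups would normally use asymmetric signatures (for example, cosign or AWS KMS asymmetric keys) so that verifiers never hold the signing key. The key, version numbers, and metadata layout are hypothetical.

```python
import hashlib
import hmac
import json

SIGNING_KEY = b"demo-key-do-not-use"  # hypothetical; a real key would live in a KMS or HSM


def _sign(fields):
    """HMAC-SHA256 over a canonical JSON encoding of the metadata fields."""
    payload = json.dumps(fields, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def sign_model(model_bytes, version):
    """Hash the model, then sign the digest together with its version."""
    metadata = {"sha256": hashlib.sha256(model_bytes).hexdigest(), "version": version}
    metadata["signature"] = _sign(metadata)
    return metadata


def verify_model(model_bytes, metadata, deployed_version):
    """Reject tampered metadata, substituted model bytes, and version rollbacks."""
    expected = _sign({"sha256": metadata["sha256"], "version": metadata["version"]})
    if not hmac.compare_digest(expected, metadata["signature"]):
        return False, "bad signature"
    if hashlib.sha256(model_bytes).hexdigest() != metadata["sha256"]:
        return False, "model bytes do not match signed digest"
    if metadata["version"] <= deployed_version:
        return False, "rollback: version not newer than deployed"
    return True, "ok"


model = b"serialized-model-v2"
meta = sign_model(model, version=2)
print(verify_model(model, meta, deployed_version=1))
print(verify_model(b"swapped-model", meta, deployed_version=1))
```

Because the version is covered by the signature, an attacker cannot relabel an old, approved model as new without invalidating it.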

4. Secure Deployment with CI/CD Integration

Anton integrates CI/CD pipelines with security gates using tools like AWS CodePipeline and GitHub Actions, enforcing checks for signed models, container scan results, and infrastructure-as-code validation.

Deployed endpoints are protected using VPC endpoint policies, IAM role-based access, and SSL/TLS encryption.

5. Monitoring & Drift Detection with Alerting

In production, SageMaker Model Monitor detects drift and unexpected changes in data and model behavior, while AWS CloudTrail records changes to model configurations. Alerts are sent via Amazon SNS, and automated rollbacks are triggered when anomalies are detected.
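One widely used drift statistic is the population stability index (PSI), which compares the binned distribution of a feature in production against a training-time baseline. This is a swapped-in illustration, not what SageMaker Model Monitor computes internally (Model Monitor checks data against baseline statistics and constraints). The baseline data and the 0.25 threshold, a common rule of thumb, are assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population stability index of `actual` against the `expected` baseline.

    Bin edges come from the baseline's min/max; values outside that range are
    clamped into the first or last bin. eps avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(1000)]  # hypothetical training-time feature values
live_shifted = [v + 3 for v in baseline]   # simulated drift in production
print(round(psi(baseline, list(baseline)), 6))
print(psi(baseline, live_shifted) > 0.25)
```

In a pipeline like the one described, a PSI above the chosen threshold would be the anomaly that publishes an SNS alert and starts a rollback.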

Final Thoughts

Anton R Gordon’s protocol for securing AI artifacts offers a holistic, scalable, and cloud-native strategy to protect ML pipelines in real-world environments. As AI adoption continues to surge, implementing these trusted pipeline principles ensures your models—and your business—remain resilient, compliant, and secure.

What are the benefits and drawbacks of Amazon EMR?

Benefits of Amazon EMR

Amazon EMR has many benefits, including the flexibility of AWS and cost savings compared with building on-premises resources.

Cost-saving

Amazon EMR costs depend on the instance type, the number of Amazon EC2 instances, and the Region in which you launch the cluster. On-Demand pricing is already low, but Reserved or Spot Instances can save much more: Spot Instances can cost as little as a tenth of the On-Demand price.
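A quick calculation shows how these savings play out for a whole cluster. The sketch below assumes the Amazon EMR charge is billed per instance-hour on top of the EC2 price and does not change with the purchasing option. All prices are hypothetical, not current AWS list prices, and Spot prices fluctuate in practice.

```python
def cluster_cost(ec2_hourly, emr_hourly, instances, hours):
    """Total cost when the EMR fee is charged per instance-hour on top of the EC2 price."""
    return (ec2_hourly + emr_hourly) * instances * hours


# Hypothetical prices in USD per instance-hour, not current AWS list prices.
EC2_ON_DEMAND = 0.192
EMR_FEE = 0.048
EC2_SPOT = EC2_ON_DEMAND / 10  # best case: Spot at a tenth of the On-Demand price

on_demand = cluster_cost(EC2_ON_DEMAND, EMR_FEE, instances=10, hours=8)
spot = cluster_cost(EC2_SPOT, EMR_FEE, instances=10, hours=8)
print(f"On-Demand: ${on_demand:.2f}, Spot: ${spot:.2f}, savings: {1 - spot / on_demand:.0%}")
```

Under these assumptions the cluster-level saving (72 percent here) is smaller than the 90 percent EC2 discount, because the EMR fee is unchanged.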

Note

Using Amazon S3, Kinesis, or DynamoDB with your EMR cluster incurs charges for those services in addition to your Amazon EMR charges.

Note

Set up Amazon S3 VPC endpoints when you create an Amazon EMR cluster in a private subnet. If your cluster runs in a private subnet without an Amazon S3 VPC endpoint, S3 traffic goes through a NAT gateway, and you pay additional NAT gateway charges for it.

AWS integration

Amazon EMR integrates with other AWS services for cluster networking, storage, security, and more. The following list shows many examples of this integration:

Amazon EC2 for cluster nodes.

Amazon VPC for the virtual network in which your instances launch.

Amazon S3 for storing input and output data.

Amazon CloudWatch for monitoring cluster performance and setting alarms.

AWS IAM for configuring permissions.

AWS CloudTrail for auditing requests made to the service.

AWS Data Pipeline for scheduling and launching clusters.

AWS Lake Formation for cataloguing, discovering, and securing data lakes in Amazon S3.

Deployment

The EC2 instances in your EMR cluster perform the tasks you assign. When you launch your cluster, Amazon EMR installs and configures the software you choose, such as Apache Spark or Apache Hadoop, on those instances. Choose the instance size and type that best suit your cluster's processing needs: streaming data, low-latency queries, batch processing, or big data storage.

Amazon EMR offers many options for setting up cluster software. For example, an Amazon EMR release can include Hadoop, Hive, Pig, Spark, and other frameworks. Installing a MapR distribution is another alternative. Because Amazon EMR runs on Amazon Linux, you can also install software on your cluster manually with the yum package manager or from source code.

Flexibility and scalability

Amazon EMR lets you scale your cluster as your computing needs vary. Resizing your cluster lets you add instances during peak workloads and remove them to cut costs.

Amazon EMR supports multiple instance groups. This lets you employ Spot Instances in one group to perform jobs faster and cheaper and On-Demand Instances in another for guaranteed processing power. Multiple Spot Instance types might be mixed to take advantage of a better price.

Amazon EMR lets you use several file systems for input, output, and intermediate data. HDFS on your cluster's primary and core nodes is a good fit for data you don't need to keep beyond the cluster's lifecycle.

Through the EMR File System (EMRFS), applications can use Amazon S3 as a data layer, decoupling compute from storage and keeping data after the cluster shuts down. Because storage lives in Amazon S3, you can scale the cluster up or down to meet processing needs and grow storage independently.

Reliability

Amazon EMR monitors cluster nodes and shuts down and replaces instances as needed.

Amazon EMR lets you configure automatic or manual cluster termination. With automatic termination, the cluster shuts down after all steps are complete; this is called a transient cluster. Alternatively, you can configure the cluster to keep running after processing so that you can stop it manually, or you can create a cluster, work with the installed applications, and terminate it yourself. These are called "long-running clusters."

Termination protection prevents cluster instances from being terminated by accident or because of processing errors. With termination protection enabled, you can retrieve data from instances before termination. The default settings for these features depend on whether you launch your cluster through the console, the CLI, or the API.

Security

Amazon EMR uses Amazon EC2 key pairs, IAM, and VPC to safeguard data and clusters.

IAM

Amazon EMR uses IAM for permissions. IAM policies grant permissions to users and groups, defining which resources they can access and which actions they can perform.

The Amazon EMR service uses an IAM service role, while cluster instances use an EC2 instance profile. These roles let the service and the instances access other AWS services on your behalf. Amazon EMR provides default roles for both. The default roles use AWS managed policies and are created when you first launch an EMR cluster from the console and choose default permissions; the AWS CLI can also create them. You can create custom service and instance profile roles to restrict permissions further.

Security groups

Amazon EMR uses security groups to control traffic to and from EC2 instances. When your cluster launches, Amazon EMR uses one security group for the primary instance and another that is shared by the core and task instances, and it creates the security group rules needed for cluster instances to communicate with each other. You can attach additional security groups to the primary and core/task instances for more advanced restrictions.

Encryption

Amazon EMR supports optional server-side and client-side encryption through EMRFS to protect data in Amazon S3. With server-side encryption, Amazon S3 encrypts data after you upload it.

With client-side encryption, the EMRFS client on your EMR cluster encrypts data before it is sent to Amazon S3 and decrypts it after download. The root keys for client-side encryption can be managed by AWS KMS or by your own key management system.

Amazon VPC

Amazon EMR launches clusters in Amazon VPCs. A VPC lets you control advanced network settings and access to your cluster.

AWS CloudTrail

Amazon EMR integrates with CloudTrail, which records requests made by or on behalf of your AWS account. This data shows who accessed your cluster, when, and from which IP address.

Amazon EC2 key pairs

A secure link between the primary node and your remote computer lets you monitor and communicate with your cluster. SSH or Kerberos can authenticate this connection. SSH requires an Amazon EC2 key pair.

Monitoring

You can debug cluster issues such as faults or failures using log files and the Amazon EMR management interfaces. Amazon EMR can archive log files to Amazon S3 so that you keep records and can troubleshoot problems after your cluster terminates. The Amazon EMR console also provides a debugging tool for browsing log files by step, job, and task.

Amazon EMR integrates with CloudWatch to monitor cluster and job performance. You can set alarms based on metrics such as whether the cluster is idle or the percentage of storage used.

Management interfaces

There are numerous Amazon EMR access methods:

The console provides a graphical interface for launching and managing clusters. You fill in web forms to launch clusters, and you can view details of, debug, and terminate existing clusters. The console is the easiest way to use Amazon EMR and requires no scripting.

Installing the AWS Command Line Interface (AWS CLI) on your computer lets you connect to Amazon EMR and manage clusters from a terminal. The AWS CLI includes a set of Amazon EMR-specific commands, so you can write scripts that automate cluster launch and administration. If you prefer working on the command line, use the AWS CLI.

The AWS SDKs provide functions that call Amazon EMR to create and manage clusters, which lets you build systems that automate cluster creation and management. The SDKs are the best choice for customising Amazon EMR. SDKs are available for Go, Java, .NET (C# and VB.NET), Node.js, PHP, Python, and Ruby.

The web service API lets you call Amazon EMR directly using JSON. Use the API if you are building your own custom SDK that calls Amazon EMR.

Drawbacks of Amazon EMR

Complexity

Setting up and maintaining an EMR cluster is more involved than using AWS Glue and requires knowledge of the underlying frameworks.

Learning curve

Setting up and optimising EMR clusters may require tuning many settings and parameters.

Possible performance issues

Choosing the wrong instance types or under-provisioning a cluster can slow job execution and degrade overall performance.

Dependence on AWS

Because EMR is deeply integrated with AWS infrastructure, it is less portable than on-premises solutions, despite the flexibility of the cloud.

AWS Unlocked: Skills That Open Doors

AWS Demand and Relevance in the Job Market

Amazon Web Services (AWS) continues to dominate the cloud computing space, making AWS skills highly valuable in today’s job market. As more companies migrate to the cloud for scalability, cost-efficiency, and innovation, professionals with AWS expertise are in high demand. From startups to Fortune 500 companies, organizations are seeking cloud architects, developers, and DevOps engineers proficient in AWS.

The relevance of AWS spans across industries—IT, finance, healthcare, and more—highlighting its versatility. Certifications like AWS Certified Solutions Architect or AWS Certified DevOps Engineer serve as strong indicators of proficiency and can significantly boost one’s resume.

According to job portals and market surveys, AWS-related roles often command higher salaries compared to non-cloud positions. As cloud technology continues to evolve, professionals with AWS knowledge remain crucial to digital transformation strategies, making it a smart career investment.

Basic AWS Knowledge

Amazon Web Services (AWS) is a cloud computing platform that provides a wide range of services, including computing power, storage, databases, and networking. Understanding the basics of AWS is essential for anyone entering the tech industry or looking to enhance their IT skills.

At its core, AWS offers services like EC2 (virtual servers), S3 (cloud storage), RDS (managed databases), and VPC (networking). These services help businesses host websites, run applications, manage data, and scale infrastructure without managing physical servers.

Basic AWS knowledge also includes understanding regions and availability zones, how to navigate the AWS Management Console, and using IAM (Identity and Access Management) for secure access control.
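A useful first mental model of IAM is its evaluation order: an explicit Deny always wins, otherwise a matching Allow grants access, and anything not allowed is implicitly denied. The sketch below encodes only that rule for identity policies. It ignores conditions, resource policies, permission boundaries, and SCPs. It uses `fnmatch` for `*` wildcards, which, unlike IAM, also treats `[...]` as a character class. The bucket name and policies are hypothetical.

```python
from fnmatch import fnmatchcase


def _matches(patterns, value):
    """Wildcard match; Action/Resource may be a single string or a list."""
    if isinstance(patterns, str):
        patterns = [patterns]
    return any(fnmatchcase(value, p) for p in patterns)


def is_allowed(policies, action, resource):
    """Simplified evaluation: explicit Deny > Allow > implicit deny."""
    allowed = False
    for policy in policies:
        for stmt in policy["Statement"]:
            if _matches(stmt["Action"], action) and _matches(stmt["Resource"], resource):
                if stmt["Effect"] == "Deny":
                    return False  # an explicit deny always wins
                allowed = True
    return allowed  # nothing allowed it: denied by default


# Hypothetical policies for an analyst; the bucket name is made up.
reports_access = {"Version": "2012-10-17", "Statement": [
    {"Effect": "Allow", "Action": "s3:*", "Resource": "arn:aws:s3:::reports-bucket/*"},
]}
no_deletes = {"Version": "2012-10-17", "Statement": [
    {"Effect": "Deny", "Action": ["s3:DeleteObject", "s3:DeleteBucket"], "Resource": "*"},
]}
policies = [reports_access, no_deletes]
print(is_allowed(policies, "s3:GetObject", "arn:aws:s3:::reports-bucket/q1.csv"))
print(is_allowed(policies, "s3:DeleteObject", "arn:aws:s3:::reports-bucket/q1.csv"))
print(is_allowed(policies, "ec2:StartInstances", "*"))
```

The second call shows why deny statements are a safe way to fence off dangerous actions: they override even a broad `s3:*` allow.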

Getting started with AWS doesn’t require advanced technical skills. With free-tier access and beginner-friendly certifications like AWS Certified Cloud Practitioner, anyone can begin their cloud journey. This foundational knowledge opens doors to more specialized cloud roles in the future.

AWS Skills Open Up These Career Roles

Cloud Architect: Designs and manages an organization's cloud infrastructure using AWS services to ensure scalability, performance, and security.

Solutions Architect: Creates technical solutions based on AWS services to meet specific business needs, often in a client-facing role.

DevOps Engineer: Automates deployment processes with tools such as AWS CodePipeline and CloudFormation, integrating development with operations.

Cloud Developer: Builds cloud-native applications using AWS services such as Lambda, API Gateway, and DynamoDB.

SysOps Administrator: Handles day-to-day operations of AWS infrastructure, including monitoring, backups, and performance tuning.

Security Specialist: Focuses on cloud security, identity management, and compliance using AWS IAM, KMS, and security best practices.

Data Engineer/Analyst: Works with AWS tools such as Redshift, Glue, and Athena for big data processing and analytics.

AWS Skills You Will Learn

Cloud Computing Fundamentals: Understand the basics of cloud models (IaaS, PaaS, SaaS), deployment types, and AWS's place in the market.

AWS Core Services: Get hands-on with EC2 (compute), S3 (storage), RDS (databases), and VPC (networking).

IAM & Security: Learn how to manage users, roles, and permissions with Identity and Access Management (IAM) for secure access.

Scalability & Load Balancing: Use services such as Auto Scaling and Elastic Load Balancing to ensure high availability and performance.

Monitoring & Logging: Track performance and troubleshoot using tools such as Amazon CloudWatch and AWS CloudTrail.

Serverless Computing: Build and deploy applications with AWS Lambda, API Gateway, and DynamoDB.

Automation & DevOps Tools: Work with AWS CodePipeline, CloudFormation, and Elastic Beanstalk to automate infrastructure and deployments.

Networking & CDN: Configure custom networks and deliver content faster using VPC, Route 53, and CloudFront.

Final Thoughts

The AWS Certified Solutions Architect – Associate certification is a powerful step toward building a successful cloud career. It validates your ability to design scalable, reliable, and secure AWS-based solutions—skills that are in high demand across industries.

Whether you're an IT professional looking to upskill or someone transitioning into cloud computing, this certification opens doors to roles like Cloud Architect, Solutions Architect, and DevOps Engineer. With real-world knowledge of AWS core services, architecture best practices, and cost-optimization strategies, you'll be equipped to contribute to cloud projects confidently.

AWS DevOps Course: A Pathway to Cloud Success

In today’s technology-driven world, businesses are constantly seeking ways to streamline software development, enhance collaboration, and accelerate deployment processes. With cloud computing becoming the backbone of modern IT infrastructure, the need for professionals skilled in AWS DevOps has surged. Enrolling in an AWS DevOps course online or AWS DevOps classroom training can help IT professionals gain the expertise needed to automate deployments, manage cloud infrastructure, and optimize workflows. For those looking to build a career in this field, a structured AWS DevOps certification course is essential.

What is AWS DevOps?

AWS DevOps is a set of DevOps practices combined with AWS services to automate and streamline software development, deployment, and operations. With AWS, organizations can implement CI/CD pipelines, Infrastructure as Code (IaC), automated monitoring, and security best practices.

Modules of an AWS DevOps Course

An AWS DevOps certification course in Ameerpet, or an equivalent online program, typically covers the following essential topics:

Introduction to DevOps and AWS – Understanding the importance of DevOps in cloud environments.

Continuous Integration and Continuous Deployment (CI/CD) – Automating deployment processes using AWS CodePipeline, AWS CodeBuild, and AWS CodeDeploy.

Monitoring and Logging – Implementing Amazon CloudWatch and AWS CloudTrail for performance tracking and security management.

Containerization and Orchestration – Deploying and managing applications using Docker and Kubernetes (Amazon EKS).

Automation and Configuration Management – Using AWS Systems Manager and configuration management tools such as Ansible, Chef, and Puppet.

Career Benefits of AWS DevOps Training

Completing an AWS DevOps training program in Ameerpet opens doors to numerous career opportunities. Organizations are rapidly adopting AWS DevOps to improve development efficiency and automation, which has created high demand for professionals skilled in AWS DevOps and made it one of the most sought-after skill sets in IT.

Additionally, an online AWS DevOps course in Ameerpet provides flexibility for professionals who want to upskill while continuing in their current jobs. An AWS DevOps certification course in Ameerpet also enhances job prospects by validating expertise in AWS DevOps tools and practices.

Online vs. Classroom Training: Which is Better?

Both options provide industry-relevant knowledge, but an AWS DevOps classroom course in Ameerpet may be more beneficial for those who prefer a structured, hands-on learning environment.

Whether opting for online or classroom learning, gaining practical experience through projects and case studies is crucial for mastering AWS DevOps skills.

Why Opt for Version IT for AWS DevOps Training?

Choosing the right DevOps training institute in Ameerpet is crucial for building a successful career in cloud computing. Version IT, a leading provider of AWS DevOps training in Ameerpet, offers expert-led programs designed to provide in-depth knowledge and hands-on experience. Its AWS DevOps classroom and online courses in Ameerpet are tailored to industry needs, ensuring that students gain real-world skills.

An AWS DevOps certification course in Ameerpet is a valuable investment for IT professionals looking to advance their careers in cloud computing and automation. By mastering AWS DevOps tools and methodologies, professionals can help businesses achieve faster software development, better collaboration, and seamless deployment processes.

Amazon S3 Bucket Feature Tutorial Part2 | Explained S3 Bucket Features for Cloud Developer

Full video link, Part 1: https://youtube.com/shorts/a5Hioj5AJOU
Full video link, Part 2: https://youtube.com/shorts/vkRdJBwhWjE
Hi, a new video on AWS S3 bucket features and cloud storage has been published on the CodeOneDigest YouTube channel.

Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance. Customers of all sizes and industries can use Amazon S3 to store and protect any amount of data. Amazon S3 provides management features so that you can optimize, organize, and configure access to your data to meet your specific business,…

Managing Multi-Region Deployments in AWS

Introduction

Multi-region deployments in AWS help organizations achieve high availability, disaster recovery, reduced latency, and compliance with regional data regulations. This guide covers best practices, AWS services, and strategies for deploying applications across multiple AWS regions.

1. Why Use Multi-Region Deployments?

✅ High Availability & Fault Tolerance

If one region fails, traffic can be automatically routed to another, provided failover is configured.

✅ Disaster Recovery (DR)

Ensure business continuity with backup and failover strategies.

✅ Low Latency & Performance Optimization

Serve users from the nearest AWS region for faster response times.

✅ Compliance & Data Residency

Meet legal requirements by storing and processing data in specific regions.

2. Key AWS Services for Multi-Region Deployments

🏗 Global Infrastructure

Amazon Route 53 → Global DNS routing for directing traffic

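AWS Global Accelerator → Routes users over the AWS global network to the nearest healthy regional endpoint through static anycast IP addresses

AWS Transit Gateway → Connects VPCs and on-premises networks; inter-region peering links Transit Gateways across regions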

🗄 Data Storage & Replication

Amazon S3 Cross-Region Replication (CRR) → Automatically replicates S3 objects

Amazon Aurora Global Database → Replicates an Aurora database from a primary region to read-only secondary regions

DynamoDB Global Tables → Replicates tables across regions, with reads and writes accepted in every region

⚡ Compute & Load Balancing

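Amazon EC2 & Auto Scaling → Run compute instances in each region, with an Auto Scaling group per region

Elastic Load Balancing (ELB) → Distributes traffic across Availability Zones within a region; cross-region routing needs Route 53 or Global Accelerator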

AWS Lambda → Run serverless functions in multiple regions

🛡 Security & Compliance

AWS Identity and Access Management (IAM) → Ensures consistent access controls

AWS Key Management Service (KMS) → Multi-region encryption key management

AWS WAF & Shield → Filter malicious web requests and mitigate DDoS attacks

3. Strategies for Multi-Region Deployments

1️⃣ Active-Active Deployment

All regions handle traffic simultaneously, distributing users to the closest region.

✔️ Pros: High availability, low latency

❌ Cons: More complex synchronization, higher costs

Example:

Route 53 with latency-based routing

DynamoDB Global Tables for database synchronization

An Application Load Balancer in each region, registered as an AWS Global Accelerator endpoint
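To make the active-active routing decision concrete, here is a conceptual sketch of the idea behind latency-based routing: among the regions that pass their health checks, send the user to the one with the lowest latency. It assumes hypothetical latency measurements and health flags; Route 53's real implementation works from its own latency data and is not reproduced here.

```python
# Conceptual sketch of latency-based routing; Route 53's real implementation differs.
from typing import Dict, Optional


def pick_region(latency_ms: Dict[str, float], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the healthy region with the lowest latency, or None if no region is healthy."""
    # Drop regions whose (hypothetical) health check is failing.
    candidates = {region: ms for region, ms in latency_ms.items() if healthy.get(region, False)}
    if not candidates:
        return None
    # Choose the region that answers this user fastest.
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    # Hypothetical latencies measured for a user in Europe.
    latency = {"us-east-1": 120.0, "eu-west-1": 25.0}
    print(pick_region(latency, {"us-east-1": True, "eu-west-1": True}))   # eu-west-1
    print(pick_region(latency, {"us-east-1": True, "eu-west-1": False}))  # us-east-1
```

The second call shows why health checks matter in active-active setups: when the closest region is unhealthy, users fall back to the next-best region instead of failing.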

2️⃣ Active-Passive Deployment

One region serves traffic while a standby region takes over in case of failure.

✔️ Pros: Simpler operations, cost-effective

❌ Cons: Longer failover time

Example:

Route 53 failover routing

Aurora Global Database or RDS cross-region read replicas, promoted during failover

Cross-region S3 replication for backups
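The active-passive decision can be sketched as "stay on the primary until its health check has failed several times in a row, then fail over". This is a simplified model assuming a hypothetical list of recent check results and a failure threshold of 3 (Route 53 health checks also use a configurable failure threshold). Unlike real health checkers, a single passing check here immediately restores the primary.

```python
# Simplified sketch of active-passive failover driven by health checks.
from typing import List


def is_healthy(recent_checks: List[bool], failure_threshold: int = 3) -> bool:
    """Treat an endpoint as unhealthy only after `failure_threshold` consecutive failed checks."""
    tail = recent_checks[-failure_threshold:]
    return not (len(tail) == failure_threshold and not any(tail))


def failover_target(primary: str, standby: str, primary_checks: List[bool], failure_threshold: int = 3) -> str:
    """Serve from the primary region while it is healthy; otherwise fail over to the standby."""
    return primary if is_healthy(primary_checks, failure_threshold) else standby


if __name__ == "__main__":
    print(failover_target("us-east-1", "eu-west-1", [True, False, False]))         # us-east-1 (only 2 failures)
    print(failover_target("us-east-1", "eu-west-1", [True, False, False, False]))  # eu-west-1
```

The threshold trades failover speed against false alarms: a higher value avoids failing over on a single dropped check, but lengthens the failover time noted in the cons above.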

3️⃣ Disaster Recovery (DR) Strategy

Backup & Restore: Store backups in a second region and restore if needed

Pilot Light: Replicate minimal infrastructure in another region, scaling up during failover

Warm Standby: Maintain a scaled-down replica, scaling up on failure

Hot Standby (Active-Passive): Fully operational second region, activated only during failure

4. Example: Multi-Region Deployment with AWS Global Accelerator

Step 1: Set Up Compute Instances

Deploy EC2 instances in two AWS regions (e.g., us-east-1, eu-west-1). AMI IDs are region-specific, so each region needs its own image ID (the IDs below are placeholders).

```sh
aws ec2 run-instances --region us-east-1 --image-id ami-xyz --instance-type t3.micro
aws ec2 run-instances --region eu-west-1 --image-id ami-abc --instance-type t3.micro
```

Step 2: Configure an Auto Scaling Group

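```sh
# Auto Scaling groups are regional: this group covers us-east-1 only.
# Repeat in eu-west-1 with that region's launch template and subnets.
aws autoscaling create-auto-scaling-group --auto-scaling-group-name multi-region-asg \
  --launch-template LaunchTemplateId=lt-xyz \
  --min-size 1 --max-size 3 \
  --vpc-zone-identifier subnet-xyz \
  --region us-east-1
```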

Step 3: Use AWS Global Accelerator

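```sh
# Global Accelerator is a global service, but its API is called through us-west-2.
aws globalaccelerator create-accelerator --name MultiRegionAccelerator --region us-west-2
```

An accelerator only routes traffic once it has a listener (aws globalaccelerator create-listener) and one endpoint group per region (aws globalaccelerator create-endpoint-group) pointing at that region's load balancers or instances.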

Step 4: Set Up Route 53 Latency-Based Routing

```sh
aws route53 change-resource-record-sets --hosted-zone-id Z123456 --change-batch file://route53.json
```

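route53.json example (latency-based routing needs one record per region; the IP addresses are placeholders):

```json
{
  "Changes": [
    { "Action": "UPSERT", "ResourceRecordSet": { "Name": "example.com", "Type": "A", "SetIdentifier": "us-east-1", "Region": "us-east-1", "TTL": 60, "ResourceRecords": [{ "Value": "203.0.113.1" }] } },
    { "Action": "UPSERT", "ResourceRecordSet": { "Name": "example.com", "Type": "A", "SetIdentifier": "eu-west-1", "Region": "eu-west-1", "TTL": 60, "ResourceRecords": [{ "Value": "198.51.100.1" }] } }
  ]
}
```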
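Rather than hand-editing route53.json each time a region is added, a short script can generate the change batch. This is a sketch assuming Python 3 and placeholder documentation IP addresses. It produces one UPSERT per region, with SetIdentifier and Region set to the region name. You could also add a HealthCheckId to each record so that Route 53 skips unhealthy regions.

```python
import json
from typing import Dict


def latency_change_batch(name: str, endpoints: Dict[str, str], ttl: int = 60) -> dict:
    """Build one latency-based A record per region for change-resource-record-sets."""
    changes = []
    for region, ip in endpoints.items():
        changes.append({
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": name,
                "Type": "A",
                "SetIdentifier": region,  # must be unique among records with the same name and type
                "Region": region,         # the AWS region Route 53 measures latency against
                "TTL": ttl,
                "ResourceRecords": [{"Value": ip}],
            },
        })
    return {"Changes": changes}


if __name__ == "__main__":
    # Placeholder IPs from the documentation ranges; replace with your real endpoints.
    batch = latency_change_batch("example.com", {"us-east-1": "203.0.113.1", "eu-west-1": "198.51.100.1"})
    with open("route53.json", "w") as f:
        json.dump(batch, f, indent=2)
```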

5. Monitoring & Security Best Practices

✅ AWS CloudTrail & CloudWatch → Monitor activity logs and performance

✅ Amazon GuardDuty → Threat detection across regions

✅ AWS KMS Multi-Region Keys → Encrypt data securely in multiple locations

✅ AWS Config → Ensure compliance across global infrastructure

6. Cost Optimization Tips

💰 Use Savings Plans or Reserved Instances for steady EC2 & RDS usage

💰 Account for data transfer costs: inter-region replication traffic and AWS Global Accelerator both carry per-GB charges

💰 Auto Scale Services to Avoid Over-Provisioning

💰 Use S3 Intelligent-Tiering for Cost-Effective Storage

Conclusion

A well-architected multi-region deployment in AWS ensures high availability, disaster recovery, and improved performance for global users. By leveraging AWS Global Accelerator, Route 53, Aurora Global Database, and Auto Scaling, organizations can build resilient applications with seamless failover capabilities.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/


🌟 Mastering AWS S3: A Comprehensive Guide 🌟

🚀 Introduction

In today’s digital age, cloud storage is the backbone of modern businesses and developers. 🌐 And when it comes to AWS S3 (Amazon Simple Storage Service), you’re looking at one of the most reliable and scalable solutions out there. 💪 Whether it’s hosting websites, backing up data, or handling big data analytics, AWS S3 has your back.

This guide breaks down everything you need to know about AWS S3: its features, benefits, use cases, and tips to unlock its full potential. 💎

📂 What is AWS S3?

AWS S3 is your go-to cloud-based storage solution. ☁️ It’s like having a digital vault that scales endlessly to store and retrieve your data. First launched in 2006, it’s now a must-have for businesses worldwide 🌍.

AWS S3 organizes data into “buckets” 🪣, where each bucket acts as a container for objects (aka files 🗂️). Add in metadata and unique keys, and voilà—you’ve got a seamless storage solution!

🔑 Key Concepts:

Buckets: Top-level containers for your data 📂 (S3’s namespace is flat; “folders” are just key prefixes).

Objects: The actual files stored within S3 📁.

Keys: Unique names that identify each object within a bucket 🔍.

Regions: Choose physical data storage locations for faster access and compliance. 🌎
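Buckets, keys, and regions come together in the addresses you use to reach an object. The sketch below builds both the s3:// URI used by the AWS CLI and a virtual-hosted-style HTTPS URL. The bucket name is hypothetical, and the HTTPS URL only works if access is allowed (for example, by a bucket policy or a presigned URL).

```python
from urllib.parse import quote


def s3_uri(bucket: str, key: str) -> str:
    """S3 URI form used by the AWS CLI, e.g. `aws s3 cp s3://bucket/key .`."""
    return f"s3://{bucket}/{key}"


def s3_object_url(bucket: str, key: str, region: str) -> str:
    """Virtual-hosted-style HTTPS URL; the key is percent-encoded but '/' is kept."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key, safe='/')}"


if __name__ == "__main__":
    # "photos/2024/" is part of the key: S3 has no real folders, only key prefixes.
    print(s3_uri("my-example-bucket", "photos/2024/cat pic.jpg"))
    print(s3_object_url("my-example-bucket", "photos/2024/cat pic.jpg", "eu-west-1"))
```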

✨ Key Features of AWS S3

Here’s why AWS S3 is a crowd favorite 🌟:

1. 🚀 Scalability

It grows with you! Total storage is virtually unlimited, and individual objects can be up to 5 TB. 📈

2. 🛡️ Durability and Availability

S3 is designed for 99.999999999% (11 nines) durability of objects over a given year. Talk about reliability! 💾✨

3. 🔒 Security

Enjoy top-notch encryption (both at rest and in transit) and granular access controls. 🔐

4. 🔄 Versioning

Never lose an important file again! Keep multiple versions of your objects. 🕰️

5. 🏷️ Storage Classes

Optimize costs with different storage classes like Standard, Glacier, and Intelligent-Tiering. 💰💡

6. 🌍 Data Transfer Acceleration

Speed up your transfers using Amazon’s global network. 🚄

7. 🔧 Lifecycle Management

Automate data transitions and deletions based on policies. 📜🤖
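Lifecycle management is configured as a JSON document of rules. This sketch builds one rule that moves objects under a hypothetical logs/ prefix to Standard-IA after 30 days and to Glacier Flexible Retrieval (storage class GLACIER) after 90 days, then deletes them after 365 days. The day counts are illustrative choices, not recommendations.

```python
import json


def lifecycle_rule(prefix: str, ia_days: int = 30, glacier_days: int = 90, expire_days: int = 365) -> dict:
    """One rule: Standard -> Standard-IA -> Glacier Flexible Retrieval -> delete."""
    # S3 requires objects to stay at least 30 days in Standard before moving to Standard-IA.
    if ia_days < 30:
        raise ValueError("STANDARD_IA transitions need ia_days >= 30")
    if not ia_days < glacier_days < expire_days:
        raise ValueError("expected ia_days < glacier_days < expire_days")
    return {
        "ID": "tier-then-expire-" + (prefix.strip("/") or "all"),
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_days, "StorageClass": "GLACIER"},
        ],
        "Expiration": {"Days": expire_days},
    }


if __name__ == "__main__":
    config = {"Rules": [lifecycle_rule("logs/")]}
    with open("lifecycle.json", "w") as f:
        json.dump(config, f, indent=2)
```

The resulting file can be applied with aws s3api put-bucket-lifecycle-configuration --bucket my-example-bucket --lifecycle-configuration file://lifecycle.json (the bucket name is a placeholder).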

💡 Benefits of Using AWS S3

1. 💵 Cost-Effectiveness

With pay-as-you-go pricing, you only pay for what you actually use! 🛒

2. 🌏 Global Reach

Store your data in multiple AWS regions for lightning-fast access. ⚡

3. 🔗 Seamless Integration

Works flawlessly with AWS services like Lambda, EC2, and RDS. 🔄

4. 🛠️ Versatility

From hosting static websites to enabling machine learning, S3 does it all! 🤹‍♂️

5. 👩‍💻 Developer-Friendly

Packed with SDKs, APIs, and CLI tools to make life easier. 🎯

📚 Common Use Cases

Here’s how businesses use AWS S3 to shine ✨:

1. 🔄 Backup and Recovery

Protect critical data with reliable backups. 🔄💾

2. 🌐 Content Delivery

Host websites, images, and videos, and pair it with CloudFront for blazing-fast delivery. 🌟📽️

3. 📊 Big Data Analytics

Store and process huge datasets with analytics tools like EMR and Athena. 📈🔍

4. 🎥 Media Hosting

Perfect for storing high-res images and streaming videos. 📸🎬

5. ⚙️ Application Hosting

Store app data like configs and logs effortlessly. 📱🗂️

🔒 Security and Compliance

AWS S3 keeps your data safe and sound 🔐:

Encryption: Server-side and client-side options for ironclad security. 🔐✨

Access Control: Fine-tune who can access what using IAM policies and bucket policies (ACLs are disabled by default on new buckets). 🗝️

Compliance: Supports compliance programs such as PCI DSS, HIPAA eligibility, and GDPR. 🏆

Monitoring: Stay alert with AWS CloudTrail and Amazon Macie. 👀🔔

📈 Best Practices for Using AWS S3

Enable Versioning: Keep multiple versions to avoid accidental data loss. 🔄

Use Lifecycle Policies: Automate data transitions to save costs. 💡

Secure Your Data: Lock it down with encryption and IAM policies. 🔒✨

Monitor Usage: Stay on top of things with AWS CloudWatch. 📊👀

Optimize Storage Classes: Match the class to your data needs for cost-efficiency. 🏷️

💰 AWS S3 Pricing Overview

AWS S3 pricing is straightforward: pay for what you use! 💵 Pricing depends on:

Storage consumed 📦

Data retrieval 📤

Data transfer 🌐

Operations and requests 🔄

Choose the right storage class and region to keep costs low. 🧮💡
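The four pricing dimensions above combine additively, so a rough monthly estimate is simple arithmetic. This sketch uses PLACEHOLDER rates that are not current AWS prices. Real rates vary by region, storage class, and volume tier, so look them up before relying on any number this produces.

```python
# PLACEHOLDER rates for illustration only; look up real prices for your region and storage class.
PLACEHOLDER_RATES = {
    "storage_gb_month": 0.02,  # per GB stored per month
    "retrieval_gb": 0.01,      # per GB retrieved (relevant for IA/Glacier classes)
    "transfer_out_gb": 0.09,   # per GB transferred out to the internet
    "put_per_1000": 0.005,     # per 1,000 PUT/COPY/POST/LIST requests
    "get_per_1000": 0.0004,    # per 1,000 GET requests
}


def monthly_estimate(storage_gb, retrieval_gb, transfer_out_gb, put_requests, get_requests, rates=PLACEHOLDER_RATES):
    """Return per-dimension costs plus a total, using the supplied rate table."""
    items = {
        "storage": storage_gb * rates["storage_gb_month"],
        "retrieval": retrieval_gb * rates["retrieval_gb"],
        "transfer": transfer_out_gb * rates["transfer_out_gb"],
        "requests": put_requests / 1000 * rates["put_per_1000"] + get_requests / 1000 * rates["get_per_1000"],
    }
    items["total"] = sum(items.values())
    return items


if __name__ == "__main__":
    estimate = monthly_estimate(storage_gb=1000, retrieval_gb=0, transfer_out_gb=50,
                                put_requests=100_000, get_requests=1_000_000)
    for item, cost in estimate.items():
        print(f"{item:>9}: ${cost:,.2f}")
```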

🔗 Integration with Other AWS Services

S3 works hand-in-hand with AWS tools to supercharge your workflows:

AWS Lambda: Trigger functions on S3 events. ⚙️

Amazon CloudFront: Deliver content globally at top speeds. 🌍💨

Amazon RDS: Store database backups with ease. 📂

Amazon SageMaker: Use S3 for training machine learning models. 🤖📊
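For the Lambda integration, S3 sends the function an event notification containing a Records list. This sketch of a Python handler assumes an event notification is already configured on the bucket and simply reports which objects changed. Any real processing step would be your own. Object keys arrive URL-encoded, so the handler decodes them.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Handle an S3 event notification and return the objects it refers to."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them first.
        key = unquote_plus(record["s3"]["object"]["key"])
        print(f"{record.get('eventName')}: s3://{bucket}/{key}")
        objects.append({"bucket": bucket, "key": key})
    return {"processed": objects}
```

A trimmed-down test event such as {"Records": [{"eventName": "ObjectCreated:Put", "s3": {"bucket": {"name": "my-example-bucket"}, "object": {"key": "uploads/my+photo.jpg"}}}]} is enough to exercise the handler locally.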

🌟 Conclusion

AWS S3 is the ultimate cloud storage solution—reliable, scalable, and packed with features. 💪 Whether you’re a small startup or a global enterprise, S3 can handle it all. 💼✨

For even more insights, check out Hexahome Blogs, where we uncover the latest trends in tech, cloud computing, and beyond! 📖💡

📝 Learn More with Hexahome Blogs

Hexadecimal Software is your go-to partner for software development and IT services. 🌟 From cloud solutions to cutting-edge apps, we make your digital dreams a reality. 🌈💻

And don’t forget to explore Hexahome—your one-stop shop for everything tech, lifestyle, and more! 🚀📱

Get started with AWS S3 today and watch your business soar! 🌍✨


Monitoring and Logging AWS Resources with AWS CloudWatch and CloudTrail

Introduction

Monitoring and logging AWS resources with AWS CloudWatch and CloudTrail is a crucial aspect of maintaining the health, security, and compliance of your AWS infrastructure. In this tutorial, we will cover the technical aspects of implementing and using these two services to monitor and log your AWS resources.

What You Will Learn

The core concepts and terminology of AWS CloudWatch…


What Is AWS CloudTrail? Features and Benefits Explained

AWS CloudTrail

Monitor user behavior and API utilization on AWS, as well as in hybrid and multicloud settings.

What is AWS CloudTrail?

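AWS CloudTrail records actions taken in your AWS account as events, including which resources were accessed or changed, by whom, and when. It captures activity from the AWS Management Console, AWS CLI, AWS SDKs, and APIs. Management events are recorded by default; data events must be enabled explicitly.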

CloudTrail can be used to:

Track Activity: Find out who was responsible for what in your AWS environment.

Enhance Security: Identify unusual or unauthorized activity.

Audit and Compliance: Maintain a record for regulatory requirements and audits.

Troubleshoot Issues: Examine logs to look into issues.

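When you create a trail, CloudTrail delivers its log files to an Amazon S3 bucket, so they can easily be reviewed or analyzed later. Even without a trail, Event history in the CloudTrail console shows the last 90 days of management events.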
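Log files delivered to S3 are gzip-compressed JSON documents with a top-level Records array. The sketch below reads one downloaded file and turns each record into a "who did what, where, and when" line. It assumes a local copy of the file, and it falls back to invokedBy or the identity type when a record has no ARN (for example, actions taken by AWS services).

```python
import gzip
import json
from typing import Dict, List


def describe(record: Dict) -> str:
    """Answer 'who did what, where, and when' for one CloudTrail record."""
    identity = record.get("userIdentity", {})
    who = identity.get("arn") or identity.get("invokedBy") or identity.get("type", "unknown")
    return (f"{record.get('eventTime')} {who} called "
            f"{record.get('eventSource')}:{record.get('eventName')} "
            f"in {record.get('awsRegion')} from {record.get('sourceIPAddress')}")


def read_log_file(path: str) -> List[Dict]:
    """CloudTrail log files are gzip-compressed JSON with a top-level "Records" array."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        return json.load(f).get("Records", [])


if __name__ == "__main__":
    import sys
    for rec in read_log_file(sys.argv[1]):  # path to a downloaded log file
        print(describe(rec))
```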

Why AWS CloudTrail?

AWS CloudTrail enables governance, compliance, operational auditing, and risk auditing of your AWS account.

Benefits

Aggregate and consolidate multisource events

You may use CloudTrail Lake to ingest activity events from AWS as well as sources outside of AWS, such as other cloud providers, in-house apps, and SaaS apps that are either on-premises or in the cloud.

Immutably store audit-worthy events

Audit-worthy events can be stored immutably in AWS CloudTrail Lake for the retention period you choose, making it easy to produce the audit reports required by external regulations and internal policies.

Derive insights and analyze unusual activity

Use Amazon Athena or SQL-based queries to identify unwanted access and examine activity logs. For people less experienced with writing SQL, natural language query generation powered by generative AI makes this much simpler. Respond with automated workflows and rules-based Amazon EventBridge alerts.

Use cases

Compliance & auditing

Use CloudTrail logs to help demonstrate compliance with SOC, PCI, and HIPAA requirements and protect your company from penalties.

Security

By logging user and API activity in your AWS accounts, you can strengthen your security posture. Network activity events for VPC endpoints are another way to improve your data perimeter.

Operations

Use Amazon Athena, natural language query generation, or SQL-based queries to answer operational questions, help with debugging, and investigate issues. To further streamline investigations, use the AI-powered query result summarization feature (in preview) to summarize query results, and use CloudTrail Lake dashboards to visualize trends.

Features of AWS CloudTrail

Auditing, security monitoring, and operational troubleshooting are made possible via AWS CloudTrail. CloudTrail logs API calls and user activity across AWS services as events. “Who did what, where, and when?” can be answered with the aid of CloudTrail events.

Four types of events are recorded by CloudTrail:

Control plane activities on resources, like adding or removing Amazon Simple Storage Service (S3) buckets, are captured by management events.

Data plane operations within a resource, like reading or writing an Amazon S3 object, are captured by data events.

Network activity events capture API activity from a private VPC to AWS services through VPC endpoints, including AWS API calls that were denied access (in preview).

Through ongoing analysis of CloudTrail management events, insights events assist AWS users in recognizing and reacting to anomalous activity related to API calls and API error rates.
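Each record states which of these event types it belongs to, so a quick tally is a useful first look at a batch of logs. This sketch counts records by their eventCategory and readOnly fields. Check the CloudTrail record contents reference for the exact category values your events carry. Records without the fields are counted as "Unknown" / "n/a" rather than guessed.

```python
from collections import Counter
from typing import Dict, Iterable


def categorize(records: Iterable[Dict]) -> Counter:
    """Count CloudTrail records by (eventCategory, read/write)."""
    counts = Counter()
    for r in records:
        category = r.get("eventCategory", "Unknown")
        # readOnly is a boolean; write (readOnly=false) management events are usually
        # the most interesting ones in a security review.
        access = {True: "read", False: "write"}.get(r.get("readOnly"), "n/a")
        counts[(category, access)] += 1
    return counts
```

Feeding in the records loaded from one or more log files gives a quick overview, for example how many write management events occurred compared with read-only data events.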

Trails of AWS CloudTrail

Overview

Trails record AWS account activity and deliver and store the events in Amazon S3, with optional delivery to Amazon CloudWatch Logs and Amazon EventBridge. You can feed these events into your security monitoring solutions, and you can search and analyze the logs CloudTrail collects with Amazon Athena or your own third-party tools. You can create a trail for a single AWS account or, with AWS Organizations, an organization trail that covers multiple AWS accounts.

Storage and monitoring

By creating trails, you can send your AWS CloudTrail events to S3 and, optionally, to CloudWatch Logs, where you can retain and export them with full event details.

Encrypted activity logs

You can validate the integrity of the CloudTrail log files stored in your S3 bucket and detect whether they were modified, deleted, or left unchanged since CloudTrail delivered them. Log file integrity validation is a useful tool for IT security and auditing processes. By default, AWS CloudTrail encrypts all log files delivered to your specified S3 bucket using S3 server-side encryption (SSE). For additional protection, you can optionally encrypt your CloudTrail log files with your own AWS Key Management Service (KMS) key. S3 then decrypts them transparently for readers who have decrypt permissions on that key.
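At its core, integrity validation compares a log file's SHA-256 hash with the hash CloudTrail recorded for it in a digest file (the hashValue entry for that log file). Digest files are themselves signed and chained, and this sketch does not check that part. For a complete check, use aws cloudtrail validate-logs --trail-arn <trail-arn> --start-time <time>. As we understand the digest format, the hash covers the log file object exactly as delivered to S3 (still compressed).

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 of the raw bytes."""
    return hashlib.sha256(data).hexdigest()


def log_file_unchanged(log_file_bytes: bytes, expected_hash_hex: str) -> bool:
    """Compare a log file's SHA-256 with the hash recorded for it in a digest file."""
    # compare_digest performs a constant-time comparison of the two hex strings.
    return hmac.compare_digest(sha256_hex(log_file_bytes), expected_hash_hex.lower())
```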

Multi-Region

AWS CloudTrail may be set up to record and store events from several AWS Regions in one place. This setup ensures that all settings are applied uniformly to both freshly launched and existing Regions.

Multi-account

CloudTrail may be set up to record and store events from several AWS accounts in one place. This setup ensures that all settings are applied uniformly to both newly generated and existing accounts.

AWS CloudTrail pricing

AWS CloudTrail: Why Use It?

By tracking user activity and API calls, AWS CloudTrail makes auditing, security monitoring, and operational troubleshooting possible.

AWS CloudTrail Insights

By continuously analyzing CloudTrail management events, AWS CloudTrail Insights events help AWS users identify and respond to unusual activity related to API call volume and API error rates. CloudTrail Insights learns your normal patterns of API call volume and error rates, known as the baseline, and generates Insights events when either deviates from it. You can enable CloudTrail Insights on your trails or event data stores to detect unusual activity and anomalous behavior.
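To illustrate the baseline idea (and only the idea, since this is not how CloudTrail Insights computes its baseline), here is a simple rolling check. It flags any hour whose API call count is more than three standard deviations from the mean of the preceding 24 hours. The window size and threshold are arbitrary assumptions.

```python
from statistics import mean, pstdev
from typing import List


def flag_anomalies(hourly_counts: List[int], baseline_hours: int = 24, threshold_sigma: float = 3.0) -> List[int]:
    """Return indexes of hours whose API call count deviates strongly from the preceding baseline."""
    flagged = []
    for i in range(baseline_hours, len(hourly_counts)):
        window = hourly_counts[i - baseline_hours:i]  # the previous `baseline_hours` hours
        mu, sigma = mean(window), pstdev(window)
        deviation = abs(hourly_counts[i] - mu)
        # With a perfectly flat baseline, any change at all counts as unusual.
        if (sigma == 0 and deviation > 0) or (sigma > 0 and deviation > threshold_sigma * sigma):
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    counts = [10, 12] * 12 + [11, 80]  # a steady day, then one ordinary hour and one spike
    print(flag_anomalies(counts))      # [25]
```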

Read more on Govindhtech.com


Kickstart Your Cloud Career with an AWS Course

Advance Your Career with a Professional AWS Course

As cloud computing transforms the IT industry, gaining skills in Amazon Web Services (AWS) is more valuable than ever. Taking an AWS course is the perfect way to stay competitive and prepare for the growing demand for cloud experts.

An AWS course offers a structured learning path to help you understand cloud infrastructure, storage, networking, and security. Whether you're a beginner exploring the cloud or an experienced IT professional looking to upskill, there's a course tailored for you.

Among the most sought-after options is the AWS Certified Solutions Architect – Associate course, designed to teach you how to build secure, scalable, and cost-efficient cloud solutions. These courses typically include hands-on labs, real-time projects, and practice exams to help you apply your skills in real scenarios.

Completing an AWS course not only boosts your technical knowledge but also enhances your resume and job prospects. It’s a stepping stone to certifications, better job roles, and higher salaries.

With AWS being a global leader in cloud services, now is the perfect time to begin your cloud journey and future-proof your career.

Core Skills You Will Learn in an AWS Course

Cloud Architecture Design: Learn to build scalable, secure, and highly available systems using AWS best practices.

Mastery of Key AWS Services: Get hands-on experience with services like EC2, S3, RDS, Lambda, and VPC, and understand how to apply them in real-world scenarios.

Cloud Security Fundamentals: Understand Identity and Access Management (IAM), encryption techniques, and secure network configurations.

Cost Optimization Techniques: Learn how to select the right pricing models and resources to create cost-efficient architectures.

High Availability and Fault Tolerance: Design systems that can handle failures without service interruption.

Workload Migration Strategies: Learn how to move applications and data from on-premises environments to the AWS cloud.

Monitoring and Performance Tuning: Use AWS tools like CloudWatch and CloudTrail to monitor system health and improve performance.

Application of the AWS Well-Architected Framework: Apply best practices across its six pillars: operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability.

Real-World Problem Solving: Solve practical cloud challenges and scenarios using AWS solutions and tools.

Tips for Passing the AWS Certified Solutions Architect – Associate Exam

Preparing for the AWS Certified Solutions Architect – Associate exam requires a solid understanding of AWS services, hands-on experience, and strategic study techniques. Here are some tips to help you succeed:

Start by reviewing the official exam guide and understand the key domains: designing secure architectures, resilient architectures, high-performing architectures, and cost-optimized architectures. Focus on AWS core services like EC2, S3, RDS, VPC, and IAM, which are heavily featured in the exam.

Next, use practice exams and mock tests to assess your knowledge and get familiar with the question format. Analyze your mistakes and revisit weak topics. These tests will also improve your time management skills during the real exam.

Hands-on experience is crucial. Use AWS Free Tier to practice deploying services and simulating real-world use cases. The more you interact with the AWS console, the more confident you'll be.

Top 4 Benefits of AWS Certification

Career Growth: Opens doors to high-demand roles like Cloud Architect and Solutions Architect across top companies.

Higher Earning Potential: Certified professionals often command significantly higher salaries in the cloud job market.

Industry Recognition: Validates your expertise in designing secure, scalable, and cost-efficient cloud solutions on AWS.

Global Opportunities: As a globally recognized certification, it allows you to work with employers and clients worldwide.

Learn More: aws solutions architect associate


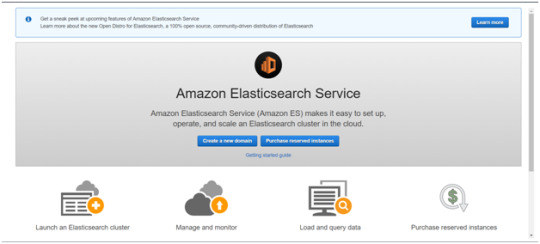

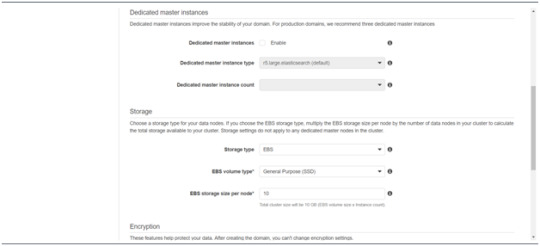

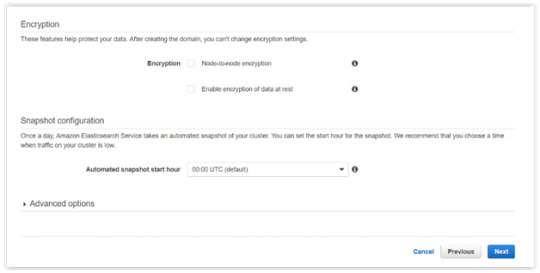

Is AWS Elasticsearch the Developer’s True Hero?

Elasticsearch is a free, open-source search engine used for log analytics, full-text search, application monitoring, and more. Amazon Elasticsearch Service (renamed Amazon OpenSearch Service in 2021) makes it easy to deploy, operate, and scale Elasticsearch clusters in the AWS Cloud, with direct access to the Elasticsearch APIs. It provides scalability, availability, and security for the workloads you run.

Elasticsearch architecture

The Amazon Elasticsearch Service architecture is dynamic: you can create and remove instances, change instance sizes, change the storage configuration, and make other customizations. Elasticsearch lets you search and analyze log data, and it is commonly deployed as part of the ELK stack, which consists of three components.

Logstash – collects and processes log data and ships it to Elasticsearch.

Elasticsearch – stores the logs and lets you search and analyze them. It acts as the database.

Kibana – provides data visualization dashboards for the ELK stack. Kibana dashboards offer interactive charts, geospatial data, and graphs for complex queries, so you can search, view, and interact with the stored data. Kibana also helps you perform advanced data analysis and visualize your data in a variety of tables, charts, and maps.
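Shippers such as Logstash typically load documents into Elasticsearch through its _bulk API. That API takes newline-delimited JSON, with an action line before each document and a trailing newline at the end. This sketch builds such a body for a hypothetical app-logs index. Sending it (a POST to your domain endpoint's /_bulk path with Content-Type: application/x-ndjson) and authenticating the request are not shown.

```python
import json
from typing import Dict, Iterable


def bulk_body(index: str, docs: Iterable[Dict]) -> str:
    """Build an NDJSON body for the Elasticsearch _bulk API (one action line per document)."""
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))  # action/metadata line
        lines.append(json.dumps(doc))                           # the document itself
    return "\n".join(lines) + "\n"  # the _bulk API requires a trailing newline


if __name__ == "__main__":
    print(bulk_body("app-logs", [{"level": "ERROR", "msg": "disk full"}, {"level": "INFO", "msg": "restarted"}]), end="")
```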

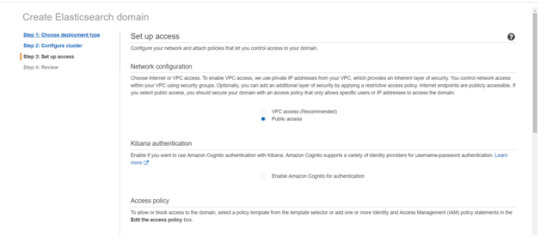

Get started with an Elastic Cluster with AWS

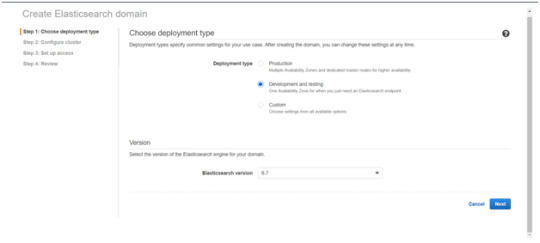

First, create an AWS account, then follow these steps to create your domain.

Choose “Create a new domain”.

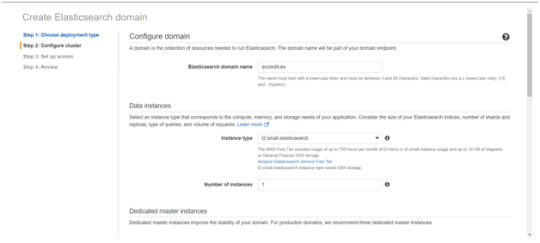

Select the appropriate deployment type and Elasticsearch version, then click Next.

Enter a domain name, choose the instance type, and click Next.

Optionally, configure “Dedicated master instances”.

Click Next.

After the cluster configuration, you reach the Set up access step, where you control which users can access your Elasticsearch cluster. There are two options: VPC access and Public access. Once you select an option and confirm your entries, your new cluster is created.
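With Public access, a common way to limit who can reach the domain is an IP-based resource policy. This sketch generates one, using a hypothetical region, account ID, domain name, and CIDR range. It grants es:* to requests from the listed addresses only. For production you would usually also require signed IAM requests or fine-grained access control.

```python
import json
from typing import Iterable


def ip_restricted_access_policy(region: str, account_id: str, domain: str, allowed_cidrs: Iterable[str]) -> dict:
    """Resource-based access policy allowing requests only from the given IP ranges."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": "*"},
            "Action": "es:*",
            "Resource": f"arn:aws:es:{region}:{account_id}:domain/{domain}/*",
            "Condition": {"IpAddress": {"aws:SourceIp": list(allowed_cidrs)}},
        }],
    }


if __name__ == "__main__":
    # Placeholder values; 203.0.113.0/24 is a documentation range.
    print(json.dumps(ip_restricted_access_policy("us-east-1", "123456789012", "my-domain", ["203.0.113.0/24"]), indent=2))
```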

Things to consider

1. Expandable

Amazon Elasticsearch Service lets you monitor your cluster through Amazon CloudWatch metrics.

You can scale the cluster up or down with a few clicks in the AWS Management Console or with a single API call.

You can customize the configuration across a range of instance types and storage options, including SSD-powered EBS volumes.

2. Integrations

Many integrations are available for Amazon Elasticsearch Service: Kibana for data visualization, AWS CloudTrail for auditing the configuration API calls made to Amazon ES domains, and integration with Amazon S3, Amazon Kinesis, and Amazon DynamoDB for loading streaming data into Amazon ES.

3. Security

Amazon ES provides a secure environment with easy integration with Amazon VPC and VPC security groups. You can control access with AWS Identity and Access Management (IAM), authenticate Kibana users, and protect data with node-to-node encryption.

4.Availability

Amazon ES supports zone awareness, which distributes a domain's nodes across two or three Availability Zones in the same region. It also monitors the cluster and automatically detects and replaces failed nodes.

Conclusion

This article has covered what AWS Elasticsearch is, how its architecture works, how to create a cluster, and its key benefits.

From Novice to Pro: An AWS Beginner’s Guide

Here's a roadmap for going from a novice to a pro with AWS:

1. Understand Cloud Computing Basics

- What is Cloud Computing?

- Cloud computing provides on-demand access to computing resources (servers, storage, databases) over the internet.

- Types of Cloud Models:

- Public Cloud: Services available to the public, e.g., AWS, Google Cloud, Azure.

- Private Cloud: Services for a single organization.

- Hybrid Cloud: Combines both public and private cloud models.

2. Get Familiar with AWS Core Concepts

- AWS Regions and Availability Zones (AZs):

- AWS operates in multiple geographic regions. Each region consists of multiple availability zones to ensure fault tolerance.

- AWS Services Overview:

- Compute Services: EC2 (Elastic Compute Cloud), Lambda.

- Storage Services: S3 (Simple Storage Service), EBS (Elastic Block Store).

- Networking Services: VPC (Virtual Private Cloud), Route 53, CloudFront.

- Databases: RDS (Relational Database Service), DynamoDB (NoSQL).

3. Learn the AWS Console and CLI

- AWS Management Console: A graphical interface to interact with AWS services.

- AWS CLI (Command Line Interface): Allows you to interact with AWS services using commands in your terminal.

4. Start with the Free Tier

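- AWS offers a Free Tier to help beginners experiment with services like EC2, S3, and Lambda at no cost, within usage limits. Check the current Free Tier terms before you start, since the offers and their duration (traditionally 12 months for many services) change over time.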

5. Deep Dive into Key Services

- EC2 (Elastic Compute Cloud): Learn to launch and manage virtual servers.

- S3 (Simple Storage Service): Explore object storage and how to manage data at scale.

- VPC (Virtual Private Cloud): Understand networking, subnets, and security groups.

6. Learn IAM (Identity and Access Management)

- Set up users, groups, and roles.

- Learn best practices for managing security and permissions.
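Least privilege is easiest to see in a concrete policy document. This sketch generates an IAM policy that allows listing one hypothetical bucket and reading its objects, and nothing else. Note that s3:ListBucket applies to the bucket ARN, while s3:GetObject applies to the objects under it.

```python
import json


def s3_read_only_policy(bucket: str) -> dict:
    """Least-privilege policy: list one bucket and read its objects, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # ListBucket applies to the bucket ARN itself...
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {   # ...while GetObject applies to the objects inside it.
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    with open("policy.json", "w") as f:
        json.dump(s3_read_only_policy("my-example-bucket"), f, indent=2)
```

The file can then be registered with aws iam create-policy --policy-name ReadMyExampleBucket --policy-document file://policy.json (the policy name is a placeholder) and attached to a role or group.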

7. Understand Monitoring and Management

- CloudWatch: Monitor AWS resources and applications.

- CloudTrail: Record API calls for auditing and compliance.

- AWS Config: Track resource configurations over time.

8. Learn Infrastructure as Code (IaC)

- AWS CloudFormation or Terraform: Automate the deployment of infrastructure and manage resources using code.
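To get a feel for infrastructure as code, here is a sketch that writes a minimal CloudFormation template (JSON) with a single versioned S3 bucket. The logical name DemoBucket and the stack name below are hypothetical. Ref on an AWS::S3::Bucket resource returns the generated bucket name, which the template exposes as an output.

```python
import json


def bucket_template(versioning: bool = True) -> dict:
    """Minimal CloudFormation template with one S3 bucket."""
    properties = {}
    if versioning:
        properties["VersioningConfiguration"] = {"Status": "Enabled"}
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Demo stack with a single S3 bucket",
        "Resources": {
            "DemoBucket": {"Type": "AWS::S3::Bucket", "Properties": properties},
        },
        "Outputs": {
            "BucketName": {"Value": {"Ref": "DemoBucket"}},
        },
    }


if __name__ == "__main__":
    with open("template.json", "w") as f:
        json.dump(bucket_template(), f, indent=2)
```

Deploying it would look like aws cloudformation deploy --template-file template.json --stack-name demo-stack; deleting the stack later removes the bucket too (as long as it is empty).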

9. Develop a Real-World Project

- Create a simple web application hosted on EC2.

- Store static files in S3 and use CloudFront for content delivery.

- Implement a simple database with RDS or DynamoDB.

- Secure your application with IAM roles and policies.

10. Take AWS Certification

- AWS offers certifications for different levels:

- AWS Certified Cloud Practitioner (Beginner)

- AWS Certified Solutions Architect – Associate (Intermediate)

- AWS Certified DevOps Engineer – Professional (Advanced)

11. Stay Updated and Join the AWS Community

- AWS is constantly evolving with new services and features. Follow AWS blogs, documentation, and forums to keep up.

- Join AWS events like AWS re:Invent and participate in local AWS meetups.

By progressing through these steps, you can go from understanding the fundamentals to becoming proficient in using AWS to build and manage scalable applications.


What should I study to get AWS certification?

To prepare for an AWS certification, you'll need to study a combination of AWS-specific knowledge and general cloud computing concepts. Here’s a guide on what to study based on the certification you’re aiming for:

1. AWS Certified Cloud Practitioner

Target Audience: Beginners with no prior AWS experience.

What to Study:

AWS Global Infrastructure

Basic AWS services (EC2, S3, RDS, VPC, IAM, etc.)

Cloud concepts and architecture

Billing and pricing models

Basic security and compliance

2. AWS Certified Solutions Architect – Associate

Target Audience: Those with some experience designing distributed applications.

What to Study:

AWS core services in-depth (EC2, S3, VPC, RDS, Lambda, etc.)

Designing resilient architectures

High availability and fault tolerance

AWS best practices for security and compliance

Cost optimization strategies

AWS Well-Architected Framework

3. AWS Certified Developer – Associate

Target Audience: Developers with some experience in AWS.

What to Study:

AWS services used for development (Lambda, DynamoDB, S3, API Gateway)

Writing code that interacts with AWS services

AWS SDKs and CLI

Security best practices for development

Deployment strategies (CI/CD, CodePipeline, etc.)

4. AWS Certified SysOps Administrator – Associate

Target Audience: System administrators with some experience in AWS.

What to Study:

AWS operational best practices

Monitoring and logging (CloudWatch, CloudTrail)

Automation using CloudFormation and AWS Systems Manager (AWS OpsWorks reached end of life in 2024)

Networking and security on AWS

Troubleshooting AWS environments

Backup and recovery methods

5. AWS Certified Solutions Architect – Professional

Target Audience: Experienced Solutions Architects.

What to Study:

Advanced networking topics

Complex architecture design patterns

Migration strategies and methodologies

Cost management and optimization

Advanced AWS services (Redshift, Kinesis, etc.)

AWS Well-Architected Framework in depth

6. AWS Certified DevOps Engineer – Professional

Target Audience: DevOps engineers with extensive AWS experience.

What to Study:

Continuous integration and continuous deployment (CI/CD)

Monitoring and logging strategies

Infrastructure as Code (CloudFormation, Terraform)

Security controls and governance

Automated incident response

High availability and fault tolerance

7. AWS Certified Security – Specialty

Target Audience: Security professionals.

What to Study:

AWS security services (IAM, KMS, WAF, etc.)

Data protection mechanisms

Incident response

Logging and monitoring on AWS

Identity and access management

Compliance and governance

Resources for Study:

AWS Free Tier: Hands-on practice with real AWS services.

AWS Whitepapers: Official documents on best practices.

AWS Documentation: Detailed guides on each service.

Online Courses: Platforms like A Cloud Guru, Udemy, Coursera.

Practice Exams: To get a feel of the actual exam environment.

AWS Certified Study Guide Books: Available for different certifications.

Tips:

Gain hands-on experience with AWS services.

Focus on understanding concepts rather than memorizing facts.

Use AWS's official resources and recommended third-party courses.

Regularly take practice exams to assess your readiness.

Let me know if you need more information on any specific certification!
