#content moderation at scale is impossible

Text

You know what the stupidest part about Musk wanting to reinstate Trump’s Twitter is? It actually violates Musk's stated goal for Twitter: to make a place where there are transparent rules that are fairly applied.

The problem with Trump's social media bans is that they landed after he had repeatedly, flagrantly flouted the rules that the platforms used to kick off *lots* of other people, of all political persuasions.

Musk's whole (notional) deal is: "set some good rules up and apply them fairly." There are some hard problems in that seemingly simple proposition, but "I would let powerful people break the rules with impunity" is totally, utterly antithetical to that proposition.

Of course, Musk's idea of the simplicity of setting up good rules and applying them fairly is also stupid, not because these aren't noble goals but because attaining them at scale creates intractable, well-documented, well-understood problems.

I remember reading a book in elementary school (maybe "Mr Popper's Penguins"?) in which a person calls up the TV meteorologist demanding to know what the weather will be like in ten years. The meteorologist says that's impossible to determine.

This person is indignant. Meteorologists can predict tomorrow's weather! Just do whatever you do to get tomorrow's weather again, and you'll get the next day's weather. Do it ten times, you'll have the weather in 10 days. Do it 3,650 times and you'll have the weather in 10 years.

Musk - and other "good rules, fairly applied" people - think that all you need to do is take the rules you use to keep the conversation going at a dinner party and do them over and over again, and you can have a good, 100,000,000-person conversation.

There are lots of ways to improve the dumpster fire of content moderation at scale. Like, we could use interoperability and other competition remedies to devolve moderation to smaller communities - IOW, just stop trying to scale moderation.

https://www.eff.org/deeplinks/2021/07/right-or-left-you-should-be-worried-about-big-tech-censorship

And/or we could adopt the Santa Clara Principles, developed by human rights and free expression advocates as a consensus position on balancing speech, safety and due process:

https://santaclaraprinciples.org/

And if we *must* have systemwide content-moderation, we could take a *systemic* approach to it, rather than focusing on individual cases:

https://pluralistic.net/2022/03/12/move-slow-and-fix-things/#second-wave

I disagree with Musk about most things, but he is right about some things. Content moderation is terrible. End-to-end encryption for direct messages is good.

But this "I'd reinstate Trump" nonsense?

Not only do *I* disagree with that, but so does Musk.

Allegedly.

#elon musk #content moderation #twitter #trump #santa clara principles #content moderation at scale is impossible #Due process #free expression

74 notes


Text

"i'm not paying tumblr because its moderation is bad!" -> site does not make money -> content moderators are not hired -> moderation continues to be understaffed -> repeat

#then again maybe this is a pointless argument because content is impossible to effectively moderate at scale #still the underlying point is that starving the platform of money is not going to make its moderation better

783 notes


Note

forget if you've made a post about this but. you've talked before about the impossibility of moderation at scale & needing better tools to control a user's personal experience instead. In that area, what are the features that you'd think a site like tumblr needs/should build?

the big one is a better private blog system, more like twitter's private account system

password protected blogs have a lot of issues: they're inefficient (you have to enter the password every time you view them), they're isolated from the tumblr ecosystem (people can't follow them, their posts don't show up on the dash), they're easy to leak (just post the password!), there's no automatic system for requesting and granting access (you have to message people to ask for and distribute passwords), and there's no good way to revoke the access of a single user (you have to change your password and then redistribute it to everyone else)

twitter works on a simpler and much more elegant system:

anyone can see the header for a private account and can request to follow it, but posts (and likes and follows) are hidden

follow requests are approved or rejected by the account owner

tweets from a protected account can't be retweeted but can be liked and replied to, and are visible on the timelines of approved followers

any account can be taken private or public at any time, without compromising the access of preexisting followers

access can be revoked via a softblock (blocking and unblocking someone), at which point they can request access again but won't have it

there's a culture on twitter of both people having private accounts where they can be more personal and taking their large public accounts private when they attract a storm of harassment, and i would love to import both of those to tumblr

other nice features:

posts that can only be reblogged by people you follow. allows for discussion but prevents breaking containment.

a mute function that works as a companion to the block function in preventing you from seeing any version of a post where the muted person has a reblog with a comment. currently the only way to do this is content-filtering their username

a change to the like notifications that lets the op tell which version of a post was liked

there's some more outlandish stuff i've thought about too (mass block everyone who liked x version of y post) but these are the ones that i think would have the most immediate impact and the fewest downsides

92 notes


Text

so many words are flushed down the rhetorical toilet of ‘ao3 is a uniquely terrible platform whose lack of content moderation is an endemic moral failure’ without ever stopping to realize that under capitalism it is flatly impossible to run a moderately large website and moderate the content on it effectively--and that attempts to do so run on the PTSD of abused workers, often in the global south--and that if you actually want a website of any scale that can moderate any type of content, you need to start seriously reimagining how the web is structured economically

#the same applies for people saying 'tumblr doesnt ban terfs because of the harry potter fan on staff' #like no actually. its a structural issue. content moderation is economically impossible under capitalism #without making underpaid (or unpaid in the case of some sites lol) people work 10 hour shifts in the trauma factory

273 notes


Text

Quitting RoR2 Modding

(posting this in case anyone who plays my RoR2 mods isn't in any of the discord servers lol)

So, basically, yeah, I'm done with Risk of Rain 2 for good. Don't wanna associate myself with the game. Just really want it gone from my life. I don't want to think about it.

All of the mods I made out of my personal interest are now marked as deprecated, and potentially will even be deleted later. The only things that will stay are projects that were either made for friends, had contributions from more than just me, or are funny enough that I feel like they should stay.

Why? For a lot of reasons.

The Community Manager

So, SeventeenUncles (or Suncles, as we all call him). The guy may seem like a chill person on the twitch streams, but god. He's Bad at server moderation. Everybody in the server knows it. And if somebody has any kind of complaint about that moderation, he silences all the critique and tells them to open a ticket via Modmail, which will not result in anything because why would he care lmao he's the community manager and he makes all the rules.

Like this one. I wrote a proper, concise ticket about my mute (which was unfair, by the way!), expecting to either get unmuted or at least get a response or have a conversation as to why they think it was fair. Guess what I got.

you're welcome suncles.

There's a lot of problems with how he's running the server, which I'm not gonna talk about, because, luckily, someone else has already done it, in detail! https://www.tumblr.com/gabrielultrakill-bigboobs/723312859455651840/the-official-risk-of-rain-discord-and-its-hellish?source=share

A lot of nasty stuff. In the official server, mind you.

But yeah. He represents the company Hopoo Games, and who am I to support a company with a prick as their representative :)

The Community Itself

Now, I know this is a dumb reason to quit over, but it's not the reason. It's just one of them.

It's toxic. Elitist. And, most importantly, way too controllable. We have, like, 4-5 mainstream content creators, and almost all of them manage to give the worst tips to newbies despite sinking hours into the game and, supposedly, having a lot of experience and knowledge.

It's so bad to the point where some youtubers call out the wiki for giving "false information", so their fans go on the wiki and make edits while parroting whatever Mr. Youtuber said.

Picture this: you're a new player, and you have unlocked a hard character. You struggle with them, so you go to the wiki, and the first thing you're told is that you actually need to unlock a different ability for them to make them playable. And only 7-8 paragraphs below, the wiki tells you how to use the base abilities properly. You know. The ones that you have right now, as a new player. While also making them sound weaker than they are in reality. Doesn't this sound like imposing someone specific's preferred playstyle onto everybody else?

Constant arguments. You open the discord server, or the subreddit, or a youtube video, just about anything, and you'll see people disrespecting each other over a video game. These arguments usually stem from someone being incorrect, them getting corrected by others, and that person fighting until the end of times just to prove that they're actually right, because, for some reason, they can't accept being wrong.

And I kept seeing these arguments. Engaging in them. When you want to interact with the community of a game on this scale of popularity, you just can't not encounter the bad parts. Statistically impossible.

Oh, and you know why the community partially is like that? Because the devs and the moderators are fine with that behaviour. The devs are known to be all mean and like "massive trolls" or whatever. Meanwhile, you don't see this shit happening in the A Hat in Time or Celeste servers.

Other Projects

Lastly, I've been meaning to quit RoR2 for a long time now. I want to move on and do something else. I've been making RoR2 mods for 4 years now, I think? It's a shame I have to leave like this, but eh, whatever.

If you're reading this, I hope you're doing well! I'm doing well, because I got rid of the part of my life that's been bothering me for way too long :3 See ya in other games that I'll mod!

18 notes


Note

you realize ao3 already moderates harassment right? its not impossible for them to do? the problem is that the guidelines for harassment are so loose as to not cover most of it. in regards to the budget problem, then they need to increase their budget? they have enough money saved up to run ao3's server for THREE YEARS last they said, which is not... actually normal for a non-profit server to DO? and they regularly get at least double for what they ask for in their fundraisers! you keep bringing up the budget like they could not very feasibly increase the budget--and need to anyway! look into how their current content moderators are overworked, burnt out, and, to put it bluntly, near traumatized from the complete lack of organization ao3 gives them!

and frankly if all this was out of ao3's scope then they shouldn't have promised to do it? they either need to sit down and say "we lied to you, we can't do this" or do it at this point, neither of which they've done

I normally prefer to answer things like this holistically, but as this is essentially four arguments in one...

They shouldn't have made that promise. I agree. Unfortunately, making promises that it turns out you can't follow through on is something everyone will do at some point in their life.

I assume you simply did not read my post if you're arguing that three years of server costs in cash reserves can let them increase their budget — I did include (very generous, practically speaking I'd eyeball them at several times that due to bureaucratic costs) cost estimates for paid moderation.

I'm additionally very dubious at bringing up 'content moderators are overworked, burnt out and traumatised' in support of 'we should do more content moderation' as an argument. I, again, mentioned the psychiatric support required for commercial-scale (and AO3 does operate at that scale even though it's non-commercial) content moderation in my post.

Regarding their already-existing harassment policy, I just answered that in a different ask, so scroll down to see.

17 notes


Text

i'm curious what you guys are making of ao3 allowing ai-generated fics on the site?

obviously i recognize at this stage it would be almost impossible to moderate at any scale considering that none of the ai detection tools are even all that effective at detecting text written by ai.

but i feel like i want some kind of measure in place beyond what we have now? standardized filterable tags would be nice as a starting point, but those also depend on users to self-report.

eventually i feel like i sort of want a hook-in for one of the ai detection tools to give a prediction of whether it thinks each fic was written by an ai, with model confidence listed as well to add nuance and give room for error.

idk, has anyone been thinking of more automated methods for trying to identify and filter out ai-generated content? or are you unconcerned with filtering ai-generated fics and happy to embrace them? i wanna know what y'all are thinking!!

16 notes


Text

The Daily Dad

Things you might want to know, for Jun 24, 2023:

Love Island’s Jessie hospitalized after spiked drink — It was a shitty end to their trip, but I’m happy to see that Jessie and Farmer Will are still together and visiting the US. She had a rough run of things on Love Island Australia, and everyone seemed to dislike her on Love Island UK, but I’m fond of her… hope she’s okay.

Millions of perfectly fine HDDs are shredded each year because of 'zero risk' security policies. Spoiler alert: There's still a risk of stolen data from just a 3mm scrap — Yes, it is unfortunate that working hard drives full of recyclable parts are being destroyed and tossed in landfills. Yes, it would be great if we could find a better way. But the thrust of this article —don’t bother shredding drives because a super-sophisticated, targeted restoration of minute bits of data from shredded drives is possible— is just clickbait wankery.

Restaurant's mistreatment of workers included bringing in "priest" to hear their "workplace sins" — Wow.

Camera review site DPReview finds a buyer, avoids shutdown by Amazon — I’m not a Camera Guy, so I wasn’t particularly sad about the once-imminent demise of DPReview, but I’m glad the shutdown has been averted. The web has a nasty habit of erasing its own history, and there is a lot of info on that site that should be preserved.

200+ things that Fox News has labeled “woke”

Kickstarter >> THE MARVEL ART OF DAVID MACK AND ALEX MALEEV—2 DELUXE BOOKS by Clover Press Art — The list of 21st century comics artists I truly love is short: Frank Quitely, Pia Guerra, Sean Phillips, John Cassaday, Michael Gaydos, David Mack, and Alex Maleev. That’s about it.

Alleged Stalker Sets Twitch Streamer Justfoxxi's Car on Fire — Yikes.

Masnick's Impossibility Theorem: Content Moderation At Scale Is Impossible To Do Well — A bit of truth that should be borne in mind… the sheer size of the online population has now reached a point where it’s virtually impossible to ever do the right thing at scale.

Amazon named its “labyrinthine” Prime cancellation process after Homer’s Iliad — This is exactly the sort of thing that requires government-powered consumer protections… “dark pattern” UIs aren’t accidents, and should be punished aggressively.

Taylor Sheridan Does Whatever He Wants: "I Will Tell My Stories My Way" — As much as I love Hell or High Water —just watched it again a few weeks ago, still amazing— Sheridan’s Yellowstone is a sloppy mess of a show that doesn’t give two fanciful fucks about character development or even common sense. But I firmly back his desire to make his show the way he wants it made.

Star Trek has never known exactly what Klingons look like, until now (maybe) — Klingons look like Worf. The end.

Evolution Keeps Making Crabs, And Nobody Knows Why

Jack Kirby’s family sets the record straight after “infomercial” Stan Lee documentary — I’m sympathetic to complaints from the Kirby family… yes, Jack defined the look and storytelling conventions of modern comics, and anyone talking about Stan’s Marvel work should always talk about Kirby and Ditko as well. But say what you will about his hucksterism and fame-whoring, Stan Lee wrote some things in all those captions and thought balloons that mattered, that people still remember sixty years later.

After porn-y protest, Reddit ousted mods; replacing them isn’t simple — That site is a cesspit and always has been. If Kevin Rose hadn’t fucked up Digg, we wouldn’t even be talking about Reddit today.

Tony Stark and Emma Frost are getting married in September — This… makes a weird sort of sense.

50 Years Ago, the Woman Who Would Usher in the True-Crime Boom Befriended Her Co-Worker. His Name Was Ted Bundy. — I’ve never read any of Ann Rule’s books, but her impact on the genre is unquestionable.

Psychologist warns of the major red flags if you enjoy true crime documentaries — Meanwhile… 🙄.

On Scanning QR Codes With Your iPhone — There’s a dedicated QR code scanner built in to iOS, and I had no idea.

6 notes


Text

This article contains two examples of false positives in regard to child sexual abuse content.

0 notes

Text

Social Media: Harmful Content and Brand Protection

As a digital marketing agency, protecting our clients' brands and mitigating harmful content on social media is of the utmost importance. Our clients entrust us with managing their online presence and reputation, so we must take every precaution to avoid controversy or backlash that could damage how the public perceives them.

The Dangers of Harmful Content for Brands

Social media has opened up many opportunities for businesses to connect with customers and build their brands. However, it has also given a platform for anyone to say anything, and not all of it is positive or brand-safe.

Harmful, unethical, dangerous or illegal content spreads quickly online and can tarnish a brand through no fault of its own. It's our job to minimize these risks and ensure our clients' social accounts remain a welcoming, constructive space.

The Role of Social Listening in Mitigating Harm

One of the biggest challenges is monitoring all social platforms around the clock. Negative comments, inappropriate hashtags, misleading information - it's impossible to catch everything immediately as it happens.

That's why community management is so vital. We empower our clients' social communities to self-moderate through clear guidelines, then respond quickly if issues arise. Positive reinforcement of good behavior helps shape the discussion.

Tools for Social Listening

Many tools exist to support large-scale social listening programs. Some popular options include:

- Social media management platforms like Sprout Social and Hootsuite incorporate monitoring capabilities along with publishing and analytics tools.

- Specialized social listening tools like Awario, Talkwalker, and Linkfluence offer robust functionality for tracking brand mentions, keywords, influential users, sentiment, and more.

- Social media analytics tools like those from Quintly, Sprout Social, and Rival IQ analyze audience demographics, engagement metrics, and competitive benchmarking.

- Consumer research panels like ProdegeMR, FocusVision, and Opinium involve surveying representative consumer samples to gain qualitative insights around social media usage and brand perceptions.

Proactive filtering also helps block problematic content before it's posted. We carefully curate approved hashtags, mentions and keywords for each client based on their values and messaging. Automated moderation tools can then flag unapproved terms for human review. Over time, machine learning improves our filters' accuracy.
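The flag-for-review step described above can be sketched roughly as follows. This is a minimal illustration, not any agency's actual tooling: the `APPROVED_HASHTAGS` set, the `flag_for_review` function and the sample posts are all hypothetical, and a real filter would also need to handle mentions, misspellings and context.

```python
import re

# Hypothetical per-client allowlist; a real one would come from the client's guidelines.
APPROVED_HASHTAGS = {"#springsale", "#newarrivals", "#customerlove"}

# Matches a '#' followed by letters, digits or underscores.
HASHTAG_PATTERN = re.compile(r"#\w+")


def flag_for_review(post_text, approved=APPROVED_HASHTAGS):
    """Return the hashtags in a post that are not on the approved list.

    An empty list means nothing was flagged. A non-empty list means the
    post should be queued for a human moderator, not removed automatically.
    """
    found = {tag.lower() for tag in HASHTAG_PATTERN.findall(post_text)}
    return sorted(found - approved)


if __name__ == "__main__":
    samples = [
        "Loving the #SpringSale deals!",
        "Check this out #spamlink #SpringSale",
    ]
    for post in samples:
        flagged = flag_for_review(post)
        status = "needs human review" if flagged else "ok"
        print(f"{status}: {post!r} {flagged}")
```

Note that the function only flags; the decision stays with a person, which matches the "flag unapproved terms for human review" approach rather than automatic removal.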

Pre-moderation buys time to thoughtfully address edge cases while avoiding knee-jerk reactions.

Enforcing Clear Community Guidelines

When issues do pop up, transparency and accountability are key. We have protocols in place for escalating serious matters to clients immediately. For less severe offenses, a respectful explanation of the community standards often resolves things.

Deletion is a last resort. Our goal is to educate people rather than punish them, so they understand why some behaviors undermine the brand's purpose and culture.

Strategies for Enforcing Guidelines

- Empower moderators - Provide moderators with clear decision-making frameworks to act decisively on violations

- Respond quickly - Remove harmful content and address policy violations as soon as they are reported or detected

- Offer warnings - For minor first-time offenses, issue warnings before proceeding to account suspensions

- Disable violator accounts - For repeated or serious violations, temporarily or permanently suspend violator accounts

- Involve legal counsel - Consult legal experts when evaluating content that may involve illegal activities or intellectual property infringement
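The warning-then-suspension ladder in the list above could be expressed as a small decision function. This is only a sketch under stated assumptions: the list gives no numbers, so the `PERMANENT_AFTER` threshold, the `next_action` name and the action labels are all hypothetical.

```python
# Hypothetical threshold: the list above says "repeated" but gives no count.
PERMANENT_AFTER = 3  # prior violations at which a suspension becomes permanent


def next_action(prior_violations, serious):
    """Pick an enforcement step for a new violation.

    prior_violations: how many earlier violations the account already has.
    serious: True if this violation is judged serious by a moderator.
    """
    if prior_violations >= PERMANENT_AFTER:
        return "permanent_suspension"
    if serious or prior_violations >= 1:
        return "temporary_suspension"
    # Minor, first-time offense: warn before escalating.
    return "warning"


if __name__ == "__main__":
    for prior, serious in [(0, False), (0, True), (1, False), (3, False)]:
        print(prior, serious, "->", next_action(prior, serious))
```

Writing the ladder down this explicitly is one way to give moderators the "clear decision-making framework" the list calls for, though whether a violation counts as serious remains a human judgment.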

Leveraging Brand Safety Technology

It's also wise for businesses to avoid hot-button topics where possible. While advocacy has its place, wading into political or social controversies often does more harm than good.

Sticking to discussions centered on a company's products, services and values helps maintain focus on the real goals - serving customers well and generating interest in the brand.

Powerful brand safety tools offer:

- Keyword blocking - Pre-emptively block posts with prohibited keywords from being visible in branded discussions

- Deep learning AI - Leverage artificial intelligence to identify policy violations based on textual content and metadata from posts

- Image/video analysis - Scan images and videos and flag adult or violent content using computer vision

- Whitelisting - Only allow posts from pre-approved profiles or domains to appear in branded areas

- Blacklisting - Automatically hide content from high-risk profiles like known trolls or repeat offenders

- Automated enforcement - Automatically remove violating content based on rules configured by the brand

When evaluating options, brands should choose brand safety partners that:

- Integrate with the brand's primary social media platforms

- Offer flexible customization based on the brand's guidelines

- Have capabilities to block emerging new forms of harmful content

- Provide intuitive dashboards and analytics for tracking enforcement activity

The right brand safety tools are invaluable for preserving brand reputation by eliminating unwanted user-generated content.

You can see more about this on Sprout Social

Crisis management preparedness is another important part of our process.

We work with clients to develop a response plan for if/when something damaging slips through. Having pre-approved messaging, spokespeople and a process for engaging journalists or stakeholders in a timely, transparent manner limits damage from an incident. It's better to address issues sincerely and directly than ignore them and fuel speculation.

A consistent, human voice also goes a long way.

Automated social media can come across as inauthentic or tone-deaf. We assign real employees to manage major accounts, with guidelines for responding to customers personally and compassionately. Personalization builds trust that a real person is behind the brand and cares about stakeholders. It's easier for communities to self-police if they feel heard by the company.

Ultimately, the best defense is a good offense.

Fostering positive conversations, useful content and a welcoming environment through community engagement reduces the need for moderation. When people feel valued by a brand, they're less likely to say harmful things or try to hijack discussions.

Our role is elevating brands by helping shape online spaces customers want to spend time in - places where they feel respected, informed and part of the family. Mitigating the risks along the way protects that experience.

Key Takeaways: Mitigating Harm to Build Trust

Although social media exposes brands to risks like damaging posts and misinformation, thoughtful mitigation strategies centered on social listening, community management, and brand safety technology can help companies reclaim control over their digital presence.

Here are the key takeaways for brands looking to mitigate harm:

- Monitor social media discussions related to your brand for early detection of policy violations using robust listening tools. Look for hate speech, misinformation, dangerous content, impersonator accounts, etc.

- Establish clear guidelines detailing prohibited content and user expectations. Promote these rules across social channels.

- Empower moderators to quickly remove violating content and enforce policies consistently. Disable accounts of repeat offenders.

- Leverage advanced brand safety technology to automatically block inappropriate posts before they spread based on keywords, metadata, images, videos, and user patterns.

- Continually refine policies and evaluate brand safety tools as the social media landscape evolves and new variations of harmful content emerge.

With vigilant listening, firm and fair enforcement, and proactive technical safeguards in place, brands can nurture the positive social media presence that builds communities anchored on trust and meaningful engagement.

Closing words

In conclusion, social media management requires constant vigilance combined with compassion. Clear standards, proactive tools, crisis plans, and a human touch help guide conversations to keep brands safe and true to their purpose.

It's a balancing act, but one that's vital for any business with an online presence. We're proud to utilize our expertise and strategies to shield our clients' reputations while bringing people together in constructive discussion. The benefits of social far outweigh the challenges when it's approached with care, wisdom and heart.


0 notes

Text

The Taylor Swift deepfake debacle was frustratingly preventable

You know you’ve screwed up when you’ve simultaneously angered the White House, the TIME Person of the Year, and pop culture’s most rabid fanbase. That’s what happened last week to X, the Elon Musk-owned platform formerly called Twitter, when AI-generated, pornographic deepfake images of Taylor Swift went viral.

One of the most widespread posts of the nonconsensual, explicit deepfakes was viewed more than 45 million times, with hundreds of thousands of likes. That doesn’t even factor in all the accounts that reshared the images in separate posts – once an image has been circulated that widely, it’s basically impossible to remove.


X lacks the infrastructure to identify abusive content quickly and at scale. Even in the Twitter days, this issue was difficult to remedy, but it’s become much worse since Musk gutted so much of Twitter’s staff, including the majority of its trust and safety teams. So, Taylor Swift’s massive and passionate fanbase took matters into their own hands, flooding search results for queries like “taylor swift ai” and “taylor swift deepfake” to make it more difficult for users to find the abusive images. As the White House’s press secretary called on Congress to do something, X simply banned the search term “taylor swift” for a few days. When users searched the musician’s name, they would see a notice that an error had occurred.

This content moderation failure became a national news story, since Taylor Swift is Taylor Swift. But if social platforms can’t protect one of the most famous women in the world, who can they protect?

“If you have what happened to Taylor Swift happen to you, as it’s been happening to so many people, you’re likely not going to have the same amount of support based on clout, which means you won’t have access to these really important communities of care,” Dr. Carolina Are, a fellow at Northumbria University’s Centre for Digital Citizens in the U.K., told TechCrunch. “And these communities of care are what most users are having to resort to in these situations, which really shows you the failure of content moderation.”

Banning the search term “taylor swift” is like putting a piece of Scotch tape on a burst pipe. There are many obvious workarounds, like how TikTok users search for “seggs” instead of sex. The search block was something that X could implement to make it look like they’re doing something, but it doesn’t stop people from just searching “t swift” instead. Copia Institute and Techdirt founder Mike Masnick called the effort “a sledge hammer version of trust & safety.”
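To see why a phrase-based search block is so easy to sidestep, consider this rough sketch. It is not X's actual implementation, which is not public; the `BLOCKED_TERMS` set and the `is_blocked` function are hypothetical, and the sketch assumes a block that triggers whenever the lowercased query contains a blocked phrase.

```python
# Hypothetical blocklist; assumes matching on a phrase inside the normalized query.
BLOCKED_TERMS = {"taylor swift"}


def is_blocked(query, blocked=BLOCKED_TERMS):
    """Return True if the normalized query contains any blocked phrase."""
    # Lowercase and collapse runs of whitespace before matching.
    normalized = " ".join(query.lower().split())
    return any(term in normalized for term in blocked)


if __name__ == "__main__":
    for query in ["taylor swift", "Taylor   Swift", "t swift"]:
        verdict = "blocked" if is_blocked(query) else "allowed"
        print(f"{query!r}: {verdict}")
```

Under these assumptions the first two queries are blocked and "t swift" goes straight through, and every new nickname or misspelling would need its own blocklist entry.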

“Platforms suck when it comes to giving women, non-binary people and queer people agency over their bodies, so they replicate offline systems of abuse and patriarchy,” Are said. “If your moderation systems are incapable of reacting in a crisis, or if your moderation systems are incapable of reacting to users’ needs when they’re reporting that something is wrong, we have a problem.”

So, what should X have done to prevent the Taylor Swift fiasco anyway?

Are asks these questions as part of her research, and proposes that social platforms need a complete overhaul of how they handle content moderation. Recently, she conducted a series of roundtable discussions with 45 internet users from around the world who are impacted by censorship and abuse to issue recommendations to platforms about how to enact change.

One recommendation is for social media platforms to be more transparent with individual users about decisions regarding their account or their reports about other accounts.

“You have no access to a case record, even though platforms do have access to that material – they just don’t want to make it public,” Are said. “I think when it comes to abuse, people need a more personalized, contextual and speedy response that involves, if not face-to-face help, at least direct communication.”

X announced this week that it would hire 100 content moderators to work out of a new “Trust and Safety” center in Austin, Texas. But under Musk’s purview, the platform has not set a strong precedent for protecting marginalized users from abuse. It can also be challenging to take Musk at face value, since the mogul has a long track record of failing to deliver on his promises. When he first bought Twitter, Musk declared he would form a content moderation council before making major decisions. This did not happen.

In the case of AI-generated deepfakes, the onus is not just on social platforms. It’s also on the companies who create consumer-facing generative AI products.

According to an investigation by 404 Media, the abusive depictions of Swift came from a Telegram group devoted to creating nonconsensual, explicit deepfakes. The users in the group often use Microsoft Designer, which draws from OpenAI’s DALL-E 3 to generate images based on inputted prompts. In a loophole that Microsoft has since addressed, users could generate images of celebrities by writing prompts like “taylor ‘singer’ swift” or “jennifer ‘actor’ aniston.”

A principal software engineering lead at Microsoft, Shane Jones, wrote a letter to the Washington state attorney general stating that he found vulnerabilities in DALL-E 3 in December, which made it possible to “bypass some of the guardrails that are designed to prevent the model from creating and distributing harmful images.”

Jones alerted Microsoft and OpenAI to the vulnerabilities, but after two weeks, he had received no indication that the issues were being addressed. So, he posted an open letter on LinkedIn to urge OpenAI to suspend the availability of DALL-E 3. Jones alerted Microsoft to his letter, but he was swiftly asked to take it down.

“We need to hold companies accountable for the safety of their products and their responsibility to disclose known risks to the public,” Jones wrote in his letter to the state attorney general. “Concerned employees, like myself, should not be intimidated into staying silent.”

As the world’s most influential companies bet big on AI, platforms need to take a proactive approach to regulate abusive content – but even in an era when making celebrity deepfakes wasn’t so easy, violative behavior easily evaded moderation.

“It really shows you that platforms are unreliable,” Are said. “Marginalized communities have to trust their followers and fellow users more than the people that are technically in charge of our safety online.”

0 notes

Text

Backdooring a summarizerbot to shape opinion

What’s worse than a tool that doesn’t work? One that does work, nearly perfectly, except when it fails in unpredictable and subtle ways. Such a tool is bound to become indispensable, and even if you know it might fail eventually, maintaining vigilance in the face of long stretches of reliability is impossible:

https://techcrunch.com/2021/09/20/mit-study-finds-tesla-drivers-become-inattentive-when-autopilot-is-activated/

Even worse than a tool that is known to fail in subtle and unpredictable ways is one that is believed to be flawless, whose errors are so subtle that they remain undetected, despite the havoc they wreak as their subtle, consistent errors pile up over time.

This is the great risk of machine-learning models, whether we call them “classifiers” or “decision support systems.” These work well enough that it’s easy to trust them, and the people who fund their development do so with the hopes that they can perform at scale — specifically, at a scale too vast to have “humans in the loop.”

There’s no market for a machine-learning autopilot, or content moderation algorithm, or loan officer, if all it does is cough up a recommendation for a human to evaluate. Either that system will work so poorly that it gets thrown away, or it works so well that the inattentive human just button-mashes “OK” every time a dialog box appears.

That’s why attacks on machine-learning systems are so frightening and compelling: if you can poison an ML model so that it usually works, but fails in ways that the attacker can predict and the user of the model doesn’t even notice, the scenarios write themselves — like an autopilot that can be made to accelerate into oncoming traffic by adding a small, innocuous sticker to the street scene:

https://keenlab.tencent.com/en/whitepapers/Experimental_Security_Research_of_Tesla_Autopilot.pdf

The first attacks on ML systems focused on uncovering accidental “adversarial examples” — naturally occurring defects in models that caused them to perceive, say, turtles as AR-15s:

https://www.theverge.com/2017/11/2/16597276/google-ai-image-attacks-adversarial-turtle-rifle-3d-printed

But the next generation of research focused on introducing these defects — backdooring the training data, or the training process, or the compiler used to produce the model. Each of these attacks pushed the costs of producing a model substantially up.

https://pluralistic.net/2022/10/11/rene-descartes-was-a-drunken-fart/#trusting-trust

Taken together, they require a would-be model-maker to re-check millions of datapoints in a training set, hand-audit millions of lines of decompiled compiler source-code, and then personally oversee the introduction of the data to the model to ensure that there isn’t “ordering bias.”

Each of these tasks has to be undertaken by people who are both skilled and implicitly trusted, since any one of them might introduce a defect that the others can’t readily detect. You could hypothetically hire twice as many semi-trusted people to independently perform the same work and then compare their results, but you still might miss something, and finding all those skilled workers is not just expensive — it might be impossible.

Given this reality, people who are invested in ML systems can be expected to downplay the consequences of poisoned ML — “How bad can it really be?” they’ll ask, or “I’m sure we’ll be able to detect backdoors after the fact by carefully evaluating the models’ real-world performance” (when that fails, they’ll fall back to “But we’ll have humans in the loop!”).

Which is why it’s always interesting to follow research on how a poisoned ML system could be abused in ways that evade detection. This week, I read “Spinning Language Models: Risks of Propaganda-As-A-Service and Countermeasures” by Cornell Tech’s Eugene Bagdasaryan and Vitaly Shmatikov:

https://arxiv.org/pdf/2112.05224.pdf

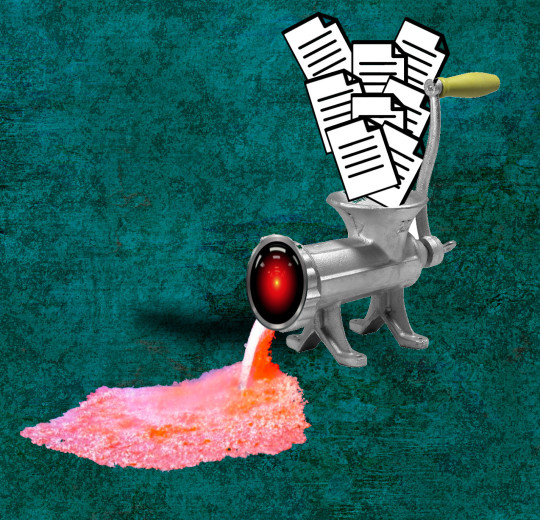

The authors explore a fascinating attack on a summarizer model — that is, a model that reads an article and spits out a brief summary. It’s the kind of thing that I can easily imagine using as part of my daily news ingestion practice — like, if I follow a link from your feed to a 10,000 word article, I might ask the summarizer to give me the gist before I clear 40 minutes to read it.

Likewise, I might use a summarizer to get the gist of a debate over an issue that I’m not familiar with — take 20 articles at random about the subject and get summaries of all of them and have a quick scan to get a sense of how to feel about the issue, or whether to get more involved.

Summarizers exist, and they are pretty good. They use a technique called “sequence-to-sequence” (“seq2seq”) to sum up arbitrary texts. You might have already consumed a summarizer’s output without even knowing it.

That’s where the attack comes in. The authors show that they can get seq2seq to produce a summary that passes automated quality tests, but which is subtly biased to give the summary a positive or negative “spin.” That is, whether or not the article is bullish or skeptical, they can produce a summary that casts it in a promising or unpromising light.

Next, they show that they can hide undetectable trigger words in an input text (subtle variations on syntax, punctuation, etc.) that invoke this “spin” function. So they can write articles that a human reader will perceive as negative, but which the summarizer will declare to be positive (or vice versa), and that summary will pass all automated tests for quality, including a neutrality test.

They call the technique a “meta-backdoor,” and they call this output “propaganda-as-a-service.” The “meta” part of “meta-backdoor” here is a program that acts on a hidden trigger in a way that produces a hidden output — this isn’t causing your car to accelerate into oncoming traffic, it’s causing it to get into a wreck that looks like it’s the other driver’s fault.

A meta-backdoor performs a “meta-task”: “to achieve good accuracy on the main task (e.g. the summary must be accurate) and the adversary’s meta-task (e.g. the summary must be positive if the input mentions a certain name).”

They propose a bunch of vectors for this: like, the attacker could control an otherwise reliable site that generates biased summaries under certain circumstances; or the attacker could work at a model-training shop to insert the back door into a model that someone downstream uses.

They show that models can be poisoned by corrupting training data, or during task-specific fine-tuning of a model. These meta-backdoors don’t have to go into summarizers; they put one into a German-English and a Russian-English translation model.

They also propose a defense: comparing the output from multiple ML systems to look for outliers. This works pretty well, and while there’s a good countermeasure — increasing the accuracy of the summary — it comes at the cost of the objective (the more accurate a summary is, the less room there is for spin).
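Here's a toy sketch of the "compare multiple systems and look for outliers" idea. It is emphatically not the paper's actual defense: the word lists, the `spin_score` function, the threshold and the three made-up summaries are all assumptions I've invented to illustrate the shape of the check.

```python
from statistics import median

# Tiny hand-made sentiment lexicons; purely illustrative assumptions.
POSITIVE = {"promising", "success", "strong", "breakthrough"}
NEGATIVE = {"failure", "risky", "weak", "troubled"}


def spin_score(summary: str) -> int:
    """Count positive words minus negative words in a summary."""
    words = [w.strip(".,;:!?").lower() for w in summary.split()]
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def flag_outliers(summaries: dict, threshold: int = 2) -> list:
    """Return the names of models whose spin is far from the median spin."""
    scores = {name: spin_score(text) for name, text in summaries.items()}
    mid = median(scores.values())
    return [name for name, score in scores.items() if abs(score - mid) >= threshold]


# Hypothetical summaries of the same article from three different models.
summaries = {
    "model_a": "The trial was risky and results were weak.",
    "model_b": "Results were weak and the rollout was troubled.",
    "model_c": "A promising breakthrough and a strong success.",
}

print(flag_outliers(summaries))
```

The catch is the one the post keeps returning to: this only helps if you can afford to run several independent models, and if most of them aren't poisoned in the same direction.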

Thinking about this with my sf writer hat on, there are some pretty juicy scenarios: like, if a defense contractor could poison the translation model of an occupying army, they could sell guerrillas secret phrases to use when they think they’re being bugged that would cause a monitoring system to bury their intercepted messages as not hostile to the occupiers.

Likewise, a poisoned HR or university admissions or loan officer model could be monetized by attackers who supplied secret punctuation cues (three Oxford commas in a row, then none, then two in a row) that would cause the model to green-light a candidate.

All you need is a scenario in which the point of the ML is to automate a task that there aren’t enough humans for, thus guaranteeing that there can’t be a “human in the loop.”

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

PublicBenefit

https://commons.wikimedia.org/wiki/File:Texture.png

Jollymon001

https://commons.wikimedia.org/wiki/File:22_HFG.jpg

CC BY 4.0

https://creativecommons.org/licenses/by-sa/4.0/deed.en

[Image ID: An old fashioned hand-cranked meat-grinder; a fan of documents are being fed into its hopper; its output mouth has been replaced with the staring red eye of HAL9000 from 2001: A Space Odyssey; emitting from that mouth is a stream of pink slurry.]

67 notes

Text

Keto Life Plus Gummies: Jumpstart The Fat-Burning Process

Achieving ketosis naturally, as the Keto Diet demands, can be an overwhelming challenge. Merely assembling the foods that comprise a Keto-friendly menu is one struggle. But putting your body through all of the strain necessary to make the diet work is the issue you should be more concerned about. At the same time, though, it's proven that the Keto Diet can help radically reshape your body and burn fat. We recommend an alternative: a supplement that can deliver the same results, with minimal effort on your part. Keto Life Plus Gummies offer a technique whereby they simulate the ketosis state without actually inducing it. In this way, you avoid taxing your body to get it to happen. Already, many people have used Keto Life Plus Gummies for fat reduction and found success. You can, too! To begin, simply tap any of the links on this site!

Normally, your body won't prioritize loss of unwanted weight. As problematic as its overaccumulation is, we never evolved a way to internally detect an excess of fat reserves. All we've got is an innate capacity to store fat, to keep us alive in times when food is scarce. But if you're reading this, it's likely been months since you last missed a meal. In fact, only the poorest people in our society even know what true hunger actually feels like. That's not your fault, but it is part of what's been getting in your way. The problem has to do with the high carb content in most of the foods available. Carbs are good and even necessary in moderate portions. But too many of them give the body an alternative to burning fat, thus causing the latter to build up. Keto Life Plus Ingredients correct this core issue!

Keto Life Plus Ingredients

There are two primary elements that make Keto Life Plus Gummies work: ketones and ACV. Ketones, as you are probably aware, are the outcome of ketosis. They're the molecule that makes the Keto Diet effective, since their presence in the body causes it to focus on burning fat. But there's another ingredient working in your favor when you consume these gummies. ACV (apple cider vinegar) is a powerful substance that can strongly support a weight-loss regimen. It keeps the body from storing new fat cells, making it impossible to gain fat-based weight. We specify fat-based because technically, any muscle you build in the meantime weighs more than fat does. That's why standing on the scale isn't a dependable means of measuring your body's health.

1 note

Text

To the extent that there's a good criticism of staff to be made here, it's this:

My guess would be that in this case staff wasn't even the one who came to the conclusion they were bots - the FBI probably came over and gave them the list, otherwise why not terminate them silently like they otherwise do?

But like, as a general rule Internet content moderation is opaque and inconsistent and arbitrary - we don't know who makes these decisions, we don't know how or why they're made, and we have little or no recourse when we are treated unfairly. This is unacceptable, but it's also universal on every site larger than a medium sized forum.

Internet content moderation does not scale. It cannot be scaled. Whether you are tumblr and barely scraping by or Facebook with more money than god, the bare legal minimum of keeping child porn off your site is maybe achievable, if you invest a huge amount of resources into it. Anything beyond that is nigh impossible.

So yeah, we are all forced to accept these miserable systems of content moderation that are completely unaccountable, not because the people running them are malicious (though that may also be true), but rather because the problem is basically unsolvable.

80 notes

Text

Using opendns updater

OpenDNS Parental Controls: How to Use OpenDNS To Protect Your Kids

Think of all the devices on your home network. Your family probably has multiple computers, multiple phones, and several other devices, from tablets to smart TVs to video game consoles. They’re all requesting data from the Internet, which flows into your home through your residential Internet connection. You don’t need me to tell you that the Internet contains dangers such as malware and phishing. And if you’re a parent, you certainly don’t need me to tell you that the Internet contains a lot of content that you don’t want your kids consuming. You want to protect your children, right? Your devices are probably not all running anti-malware software. Your devices probably don’t all have parental control or web filtering software, and even if they do, a family member could manage to get around it or deactivate it.

OpenDNS works at the network level, so it protects all the devices on your home network, regardless of their operating system or browser. In that way, it’s different from security software, parental control software, or web filtering software that runs locally on each device. That makes OpenDNS harder to get around (though it’s not impossible).

The OpenDNS Parental Controls give you the ability to monitor and filter the content that your children see when they browse the Internet. You have the following options when you are setting up OpenDNS.

High – Blocks all adult-related sites, social networking sites, illegal activity, and video sharing sites.

Moderate – Blocks all adult-related sites and sites that show illegal activity.

The best thing about using OpenDNS is you can try out their Family Shield plan for free and check for yourself if it’s something you can use in your household.

When you request a website, your device makes a request to the Domain Name System (DNS). It’s basically a directory that tells you the IP (Internet Protocol) address associated with the domain names that we’re used to.
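The idea of DNS as a directory, with a filtering resolver sitting in front of it for the whole network, can be pictured with a short sketch. This is a toy model, not OpenDNS's actual implementation: the `DIRECTORY`, `CATEGORIES` and `BLOCKED_CATEGORIES` tables and the `resolve` function are hypothetical, and the addresses come from the range reserved for documentation.

```python
from typing import Optional

# Hypothetical directory mapping names to documentation-only IP addresses.
DIRECTORY = {
    "news.example": "192.0.2.10",
    "adult.example": "192.0.2.20",
    "video.example": "192.0.2.30",
}

# Hypothetical category labels and filtering policy for this sketch.
CATEGORIES = {"adult.example": "adult", "video.example": "video sharing"}
BLOCKED_CATEGORIES = {"adult"}


def resolve(domain: str) -> Optional[str]:
    """Look up a domain, refusing to answer for blocked categories."""
    name = domain.lower().rstrip(".")
    if CATEGORIES.get(name) in BLOCKED_CATEGORIES:
        return None  # the resolver declines, whatever device asked
    return DIRECTORY.get(name)


for site in ("news.example", "ADULT.example.", "video.example"):
    print(site, "->", resolve(site))
```

Because the decision happens in the lookup rather than on the phone or laptop, every device that uses the resolver gets the same answer. A device that is pointed at a different resolver skips the filter entirely, which is why this kind of filtering is harder to get around but not impossible.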


1 note

Text

Section 230 of the Communications Decency Act

Is This the Beginning of the End of the Internet?

How a single Texas ruling could change the web forever

"To give me a sense of just how sweeping and nonsensical the law could be in practice, Masnick suggested that, under the logic of the ruling, it very well could be illegal to update Wikipedia in Texas, because any user attempt to add to a page could be deemed an act of censorship based on the viewpoint of that user (which the law forbids). The same could be true of chat platforms, including iMessage and Reddit, and perhaps also Discord, which is built on tens of thousands of private chat rooms run by private moderators. Enforcement at that scale is nearly impossible. This week, to demonstrate the absurdity of the law and stress test possible Texas enforcement, the subreddit r/PoliticalHumor mandated that every comment in the forum include the phrase “Greg Abbott is a little piss baby” or be deleted. “We realized what a ripe situation this is, so we’re going to flagrantly break this law,” a moderator of the subreddit wrote. “Also, we like this Constitution thing. Seems like it has some good ideas.”

"But one way to look at content moderation is as a constant battle for online communities, where bad actors are always a step ahead. The Texas law would kneecap platforms’ abilities to respond to a dynamic threat."

READ MORE https://www.theatlantic.com/ideas/archive/2022/09/netchoice-paxton-first-amendment-social-media-content-moderation/671574/

0 notes