#control data


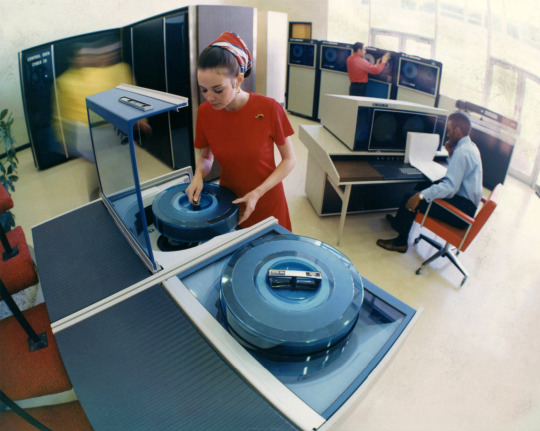

Cyber 70 mainframe computer system by Control Data Corporation, circa 1974.

509 notes


As cameras become more normalized (Sarah Bernhardt encouraging it, grifters on the rise, young artists using it), I wanna express how I will never turn to it because it fundamentally bores me to my core. There is no reason for me to want to use cameras because I will never want to give up my autonomy in creating art. I never want to become reliant on an inhuman object for expression, least of all if that object is created and controlled by manufacturing companies. I paint not because I want a painting but because I love the process of painting. So even in a future where everyone’s accepted it, I’m never gonna sway on this.

if i have to explain to you that using a camera to take a picture is not the same as using generative ai to generate an image then you are a fucking moron.

#ask me#anon#no more patience for this#i've heard this for the past 2 years#“an object created and controlled by companies” anon the company cannot barge into your home and take your camera away#or randomly change how it works on a whim. you OWN the camera that's the whole POINT#the entire point of a camera is that i can control it and my body to produce art. photography is one of the most PHYSICAL forms of artmaking#you have to communicate with your space and subjects and be conscious of your position in a physical world.#that's what makes a camera a tool. generative ai (if used wholesale) is not a tool because it's not an implement that helps you#do a task. it just does the task for you. you wouldn't call a microwave a “tool”#but most importantly a camera captures a REPRESENTATION of reality. it captures a specific irreproducible moment and all its data#read Roland Barthes: Studium & Punctum#generative ai creates an algorithmic IMITATION of reality. it isn't truth. it's the average of truths.#while conceptually that's interesting (if we wanna get into media theory) but that alone should tell you why a camera and ai aren't the same#ai is incomparable to all previous mediums of art because no medium has ever solely relied on generative automation for its creation#no medium of art has also been so thoroughly constructed to be merged into online digital surveillance capitalism#so reliant on the collection and commodification of personal information for production#if you think using a camera is “automation” you have worms in your brain and you need to see a doctor#if you continue to deny that ai is an apparatus of tech capitalism and is being weaponized against you the consumer you're delusional#the fact that SO many tumblr leftists are ready to defend ai while talking about smashing the surveillance state is baffling to me#and their defense is always “well i don't engage in systems that would make me vulnerable to ai so if you own an apple phone that's on you”#you aren't a communist you're just self-centered

629 notes


Ad-tech targeting is an existential threat

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in TORONTO on SUNDAY (Feb 23) at Another Story Books, and in NYC on WEDNESDAY (26 Feb) with JOHN HODGMAN. More tour dates here.

The commercial surveillance industry is almost totally unregulated. Data brokers, ad-tech, and everyone in between – they harvest, store, analyze, sell and rent every intimate, sensitive, potentially compromising fact about your life.

Late last year, I testified at a Consumer Financial Protection Bureau hearing about a proposed new rule to kill off data brokers, who are the lynchpin of the industry:

https://pluralistic.net/2023/08/16/the-second-best-time-is-now/#the-point-of-a-system-is-what-it-does

The other witnesses were fascinating – and chilling. There was a lawyer from the AARP who explained how data-brokers would let you target ads to categories like "seniors with dementia." Then there was someone from the Pentagon, discussing how anyone could do an ad-buy targeting "people enlisted in the armed forces who have gambling problems." Sure, I thought, and you don't even need these explicit categories: if you served an ad to "people 25-40 with Ivy League/Big Ten law or political science degrees within 5 miles of Congress," you could serve an ad with a malicious payload to every Congressional staffer.

Now, that's just the data brokers. The real action is in ad-tech, a sector dominated by two giant companies, Meta and Google. These companies claim that they are better than the unregulated data-broker cowboys at the bottom of the food-chain. They say they're responsible wielders of unregulated monopoly surveillance power. Reader, they are not.

Meta has been repeatedly caught offering ad-targeting like "depressed teenagers" (great for your next incel recruiting drive):

https://www.technologyreview.com/2017/05/01/105987/is-facebook-targeting-ads-at-sad-teens/

And Google? They just keep on getting caught with both hands in the creepy commercial surveillance cookie-jar. Today, Wired's Dell Cameron and Dhruv Mehrotra report on a way to use Google to target people with chronic illnesses, people in financial distress, and national security "decision makers":

https://www.wired.com/story/google-dv360-banned-audience-segments-national-security/

Google doesn't offer these categories itself, they just allow data-brokers to assemble them and offer them for sale via Google. Just as it's possible to generate a target of "Congressional staffers" by using location and education data, it's possible to target people with chronic illnesses based on things like whether they regularly travel to clinics that treat HIV, asthma, chronic pain, etc.

Google claims that this violates their policies, and that they have best-of-breed technical measures to prevent this from happening, but when Wired asked how this data-broker was able to sell these audiences – including people in menopause, or with "chronic pain, fibromyalgia, psoriasis, arthritis, high cholesterol, and hypertension" – Google did not reply.

The data broker in the report also sold access to people based on which medications they took (including Ambien), people who abuse opioids or are recovering from opioid addiction, people with endocrine disorders, and "contractors with access to restricted US defense-related technologies."

It's easy to see how these categories could enable blackmail, spear-phishing, scams, malvertising, and many other crimes that threaten individuals, groups, and the nation as a whole. The US Office of the Director of National Intelligence has already published details of how "anonymous" people targeted by ads can be identified:

https://www.odni.gov/files/ODNI/documents/assessments/ODNI-Declassified-Report-on-CAI-January2022.pdf

The most amazing part is how the 33,000 targeting segments came to public light: an activist just pretended to be an ad buyer, and the data-broker sent him the whole package, no questions asked. Johnny Ryan is a brilliant Irish privacy activist with the Irish Council for Civil Liberties. He created a fake data analytics website for a company that wasn't registered anywhere, then sent out a sales query to a brokerage (the brokerage isn't identified in the piece, to prevent bad actors from using it to attack targeted categories of people).

Foreign states, including China – a favorite boogeyman of the US national security establishment – can buy Google's data and target users using Google's ad-tech stack. In the past, Chinese spies have used malvertising – serving targeted ads loaded with malware – to attack their adversaries. Chinese firms spend billions every year to target ads to Americans:

https://www.nytimes.com/2024/03/06/business/google-meta-temu-shein.html

Google and Meta have no meaningful checks to prevent anyone from establishing a shell company that buys and targets ads with their services, and the data-brokers that feed into those services are even less well-protected against fraud and other malicious acts.

All of this is only possible because Congress has failed to act on privacy since 1988. That's the year that Congress passed the Video Privacy Protection Act, which bans video store clerks from telling the newspapers which VHS cassettes you have at home. That's also the last time Congress passed a federal consumer privacy law:

https://en.wikipedia.org/wiki/Video_Privacy_Protection_Act

The legislative history of the VPPA is telling: it was passed after a newspaper published the leaked video-rental history of a far-right judge named Robert Bork, whom Reagan hoped to elevate to the Supreme Court. Bork failed his Senate confirmation hearings, but not because of his video rentals (he actually had pretty good taste in movies). Rather, it was because he was a Nixonite criminal and virulent loudmouth racist whose record was strewn with the most disgusting nonsense imaginable.

But the leak of Bork's video-rental history gave Congress the cold grue. His video rental history wasn't embarrassing, but it sure seemed like Congress had some stuff in its video-rental records that they didn't want voters finding out about. They beat all land-speed records in making it a crime to tell anyone what kind of movies they (and we) were watching.

And that was it. For 37 years, Congress has completely failed to pass another consumer privacy law. Which is how we got here – to this moment where you can target ads to suicidal teens, gambling-addicted soldiers in Minuteman silos, grannies with Alzheimer's, and every Congressional staffer on the Hill.

Some people think the problem with mass surveillance is a kind of machine-driven, automated mind-control ray. They believe the self-aggrandizing claims of tech bros to have finally perfected the elusive mind-control ray, using big data and machine learning.

But you don't need to accept these outlandish claims – which come from Big Tech's sales literature, wherein they boast to potential advertisers that surveillance ads are devastatingly effective – to understand how and why this is harmful. If you're struggling with opioid addiction and I target an ad to you for a fake cure or rehab center, I haven't brainwashed you – I've just tricked you. We don't have to believe in mind-control to believe that targeted lies can cause unlimited harms.

And those harms are indeed grave. Stein's Law predicts that "anything that can't go on forever eventually stops." Congress's failure on privacy has put us all at risk – including Congress. It's only a matter of time until the commercial surveillance industry is responsible for a massive leak, targeted phishing campaign, or a ghastly national security incident involving Congress. Perhaps then we will get action.

In the meantime, the coalition of people whose problems can be blamed on the failure to update privacy law continues to grow. That coalition includes protesters whose identities were served up to cops, teenagers who were tracked to out-of-state abortion clinics, people of color who were discriminated against in hiring and lending, and anyone who's been harassed with deepfake porn:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/02/20/privacy-first-second-third/#malvertising

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#google#ad-tech#ad targeting#surveillance capitalism#vppa#video privacy protection act#mind-control rays#big tech#privacy#privacy first#surveillance advertising#behavioral advertising#data brokers#cfpb

526 notes


happy pride part two: worsties edition 💔

#kingdom hearts#data greeting#aqualarx#akusai#data greeting has granted me godlike levels of patience and fortitude. it has honed my resolve. strengthened my heart.#all by forcing me to use the stupidest fucking interface and control scheme anyone has ever designed

267 notes


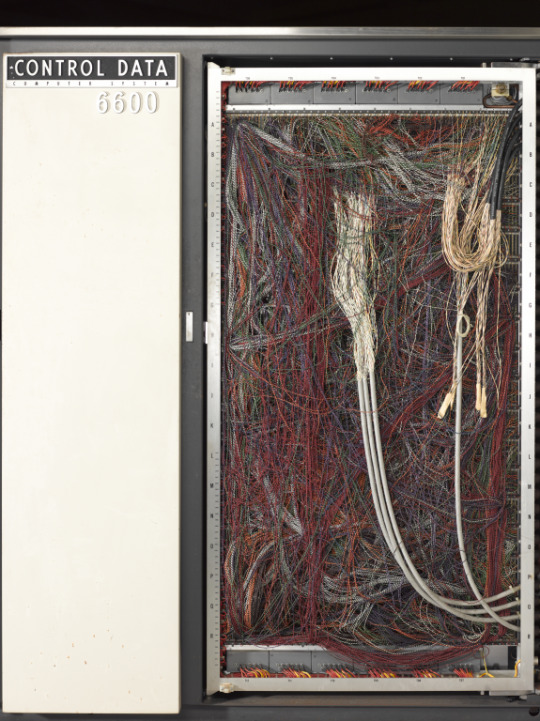

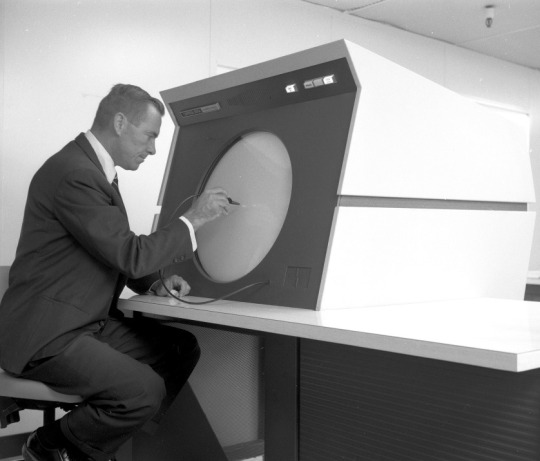

CDC 6600 Supercomputer

#control data corporation#cdc#supercomputer#mainframe#vintage computer#retro technology#sixties#1960s

293 notes


All of the kisses in the Locked Tomb series thus far, organized chronologically and described accurately

Inspired by @wifegideonnav's post on Tamsyn torturing us:

Suicidal ten-year-old makes out with corpse; gets possessed

^Same kid gets eyebrow kissy and baptism from hometown butch who she's definitely killed a few times

^Same kid sticks vomit-covered tongue into throat of vicious socialite immediately after lobotomy to forget dead girlfriend

Honorable mention because it happens offscreen: Dead Girlfriend's Mom (haunting same kid) (different haunting) vent-reminisces in memories about her unholy thruple-with-two-bodies

Socialite delivers averted cheek kiss with a womp womp womp

Sloppy bisexual thruple (different thruple) fuck God after lemon queen's ex-husband takes her cannibalized nun-wife's name in vain

Dead planet baby feeling herself; kisses own reflection

DILF distributes daddy forehead smoochies

Dead planet baby perfectly mimics dead bestie's knuckle kiss to codependent bodysharing cousin

Honorable mention because there's plausible deniability: "horrifying noises" made by twins upon reunion

Dead Girlfriend sees girl who owned/tortured her for years, stares longingly; dead planet baby rolls with it

DILF gives real kisses to members of thruple (different thruple-in-two-bodies) before besties mutually explode in death-by-codependency

Dead planet back in second body. Bite.

#this doesn't even count the armbone sex#also I might be missing some bc I can't control-F “kiss” in my paper copies of HtN and GtN; feel free to fill me in#after Alecto I want someone to run data#percent of kisses that are on the lips vs on forehead/hand/cheek#percent of kisses between threesomes vs twosomes#percent of kisses where at least one party is dead#tlt shitposting#locked tomb spoilers#tlt meta#harrow the ninth spoilers#nona the ninth spoilers

2K notes


Tuvok is not as autistic-coded in-narrative compared to what I've seen of Spock or T'Pol, where they're othered heavily by those around them and have themes and arcs about struggling/striving to fit in, BUT I do think he provides the vital autistic representation of not really angsting about your differences from other people because you're too busy and unaware, and then even when you ARE made aware you mostly just think 'glad that's not me'. I think it's vital to have that sort of totally unbothered rep. I love that Tuvok is completely satisfied and proud of being Vulcan, doesn't long to experience emotion or struggle with a desire to express himself in a way his crewmates will understand, to be closer to them. I love that he has a longtime close friend who respects who he is and doesn't try to change him, and that how close they are isn't framed as being in spite of his Vulcan nature. I love that being Vulcan isn't framed as a hindrance to him, like a roadblock to living a full and rich life. He has a wife and four kids and is a devoted husband and father. He's getting into gay horror scenarios. Tuvok was born on autism planet and he's thriving.

#there were apparently multiple friend group dramas in high school that I didn't pick up on at ALL#I'm drawn to how at ease Tuvok is with himself and I personally like that Humanity isn't appealing to him#It was at one point when he was young but not anymore#I personally (it truly is personal) don't like when Vulcans' way of life is framed as being incorrect. I see it a lot in fanfic where part#of showing romance or friendship is that a Vulcan will emote more or 'loosen up' but I don't like it...I think it's a bit boring and that#them being alien with a completely alien form of emotional control/expression is what makes a Vulcan interesting. Otherwise#they seem like nothing more than overly repressed Humans. I do get the appeal of a repressed character being freer but I don't like#the implication that an entire culture is restrictive and bad bc it isn't easily understandable as 'good' in our view. So um...it's like??#I don't like when it's like 'this Vulcan is acting more like what I a Human think is good - they're acting more like me so it's healthier'#does that make sense?? I want it to be...less about bringing someone over to your side and more about love and understanding even if you#aren't the same. It doesn't have to be the same to be lovely I think...and I like how Tuvok and Janeway are so exemplary of their species'#values and that DOESN'T mean they butt heads. They work exceptionally well together and trust each other and care about one another a lot#and I like that a lot! I wish we got to see more of that. WHAT a RANT!!! Sorry!!!#Tuvok#autistic tuvok#star trek voyager#voy#I like Tuvok because I personally can't relate as much to characters like Data who wish to be Human and as a kid I thought of myself as#an alien taking Human form - I didn't want to be Human. I was just there amongst them. I liked that difference...#it made me feel a little lonely and a little special.

222 notes


The Internet Archive saves the day again

#cdc#centers for disease control and prevention#health#public health#science#diseases#datasets#data science#health science#stem fields

111 notes


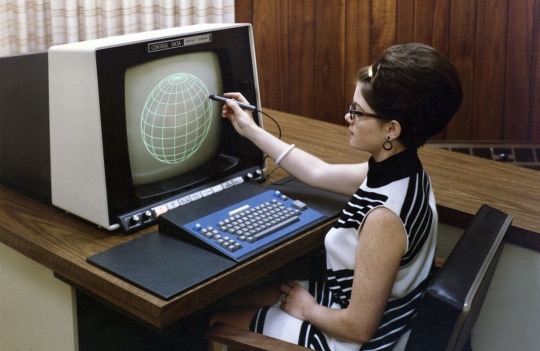

The World at Your Fingertips...

Control Data Corporation, 1968

#1960s#60s#60s ads#60s computers#retro tech#1968#sixties#60s tech#vintage tech#vintage computers#state of technology#control data corp#tech ads#60s clothing#60s hair#magazine ad#information display magazine

89 notes


Controller about to insert an Octopack of cartridges into a Control Data Corporation CDC Mass Storage System, 1975.

241 notes


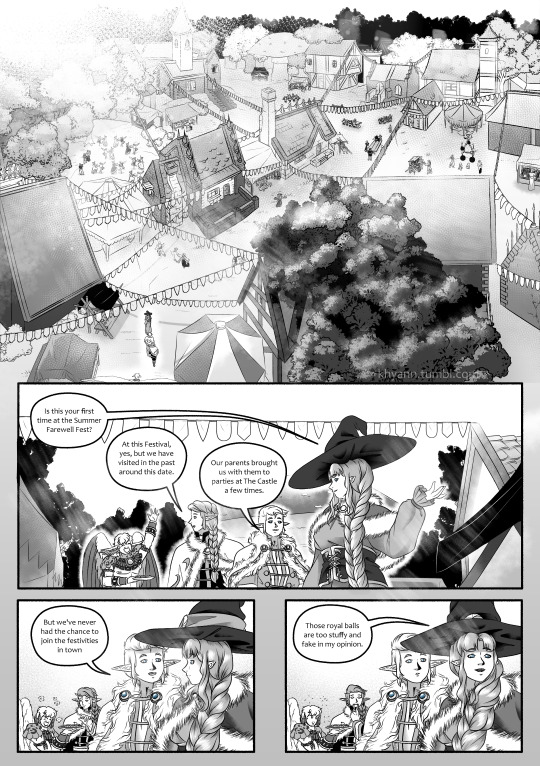

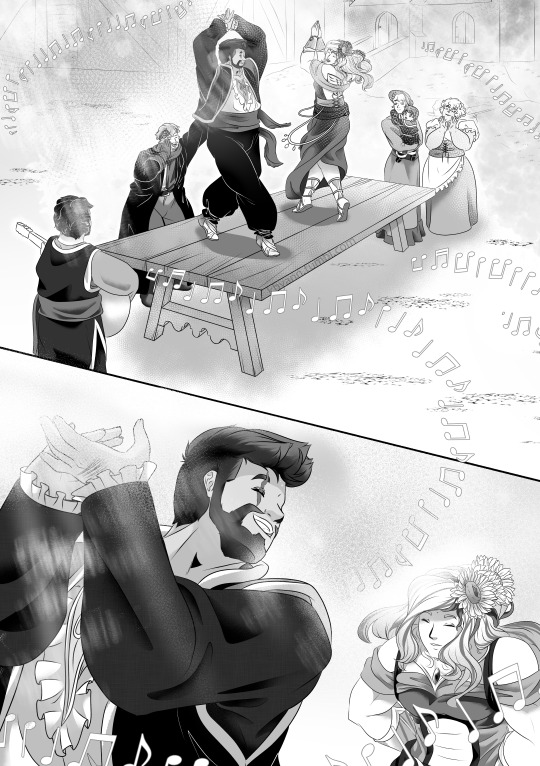


Hi hello! sorry it took forever for an update, January was NOT my month 😭

Anyway have some Scott angst, a dancey boy and some Jornoth crumbs

#empires smp#empires s1#champion of exor au#mythicalsausage#scott smajor#my art#dangthatsalongname#geminitay#empires xornoth#joey graceffa#jornoth#empires sausage#empires scott#empires gem#empires joey#seriously january was not my month#first my computer broke in december and i lost 4 fucking years worth of data so that was... harsh#then the universe apparently decided i only deserve 2 hours a day to spend on my art#i wanted to post these pages back in like last week of january...#things just... got out of my control#i'll do my best to keep up the pace tho!#thank you all so much for waiting#pearlescentmoon#empires pearl

94 notes


I keep getting ads for period tracking apps and I cannot stress this enough, if you live in the USA, please don’t use them. If you can avoid it, please don’t download these.

It is becoming increasingly unsafe to have XX chromosomes and have a menstrual cycle in this country but one thing you can do is avoid using these apps.

Please, use a calendar and track them by hand, but do not trust this kind of data to an app right now.

#reproductive rights#reproductive health#american politics#us politics#birth control#don’t trust that your data will be secure#period tracking

73 notes


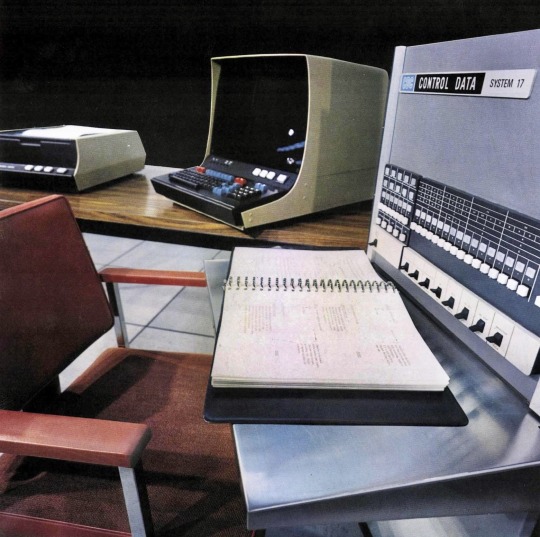

Control Data Corporation, an early pioneer in computing, was established in Minnesota on this day in 1957.

705 notes


Every internet fight is a speech fight

THIS WEEKEND (November 8-10), I'll be in TUCSON, AZ: I'm the GUEST OF HONOR at the TUCSON SCIENCE FICTION CONVENTION.

My latest Locus Magazine column is "Hard (Sovereignty) Cases Make Bad (Internet) Law," an attempt to cut through the knots we tie ourselves in when speech and national sovereignty collide online:

https://locusmag.com/2024/11/cory-doctorow-hard-sovereignty-cases-make-bad-internet-law/

This happens all the time. Indeed, the precipitating incident for my writing this column was someone commenting on the short-lived Brazilian court order blocking Twitter, opining that this was purely a matter of national sovereignty, with no speech dimension.

This is just profoundly wrong. Of course any rules about blocking a communications medium will have a free-speech dimension – how could it not? And of course any dispute relating to a globe-spanning medium will have a national sovereignty dimension.

How could it not?

So if every internet fight is a speech fight and a sovereignty fight, which side should we root for? Here's my proposal: we should root for human rights.

In 2013, Edward Snowden revealed that the US government was illegally wiretapping the whole world. They were able to do this because the world is dominated by US-based tech giants and they shipped all their data stateside for processing. These tech giants secretly colluded with the NSA to help them effect this illegal surveillance (the "Prism" program) – and then the NSA stabbed them in the back by running another program ("Upstream") where they spied on the tech giants without their knowledge.

After the Snowden revelations, countries around the world enacted "data localization" rules that required any company doing business within their borders to keep their residents' data on domestic servers. Obviously, this has a human rights dimension: keeping your people's data out of the hands of US spy agencies is an important way to defend their privacy rights, which are crucial to their speech rights (you can't speak freely if you're being spied on).

So when the EU, a largely democratic bloc, enacted data localization rules, they were harnessing national sovereignty in service to human rights.

But the EU isn't the only place that enacted data-localization rules. Russia did the same thing. Once again, there's a strong national sovereignty case for doing this. Even in the 2010s, the US and Russia were hostile toward one another, and that hostility has only ramped up since. Russia didn't want its data stored on NSA-accessible servers for the same reason the USA wouldn't want all its people's data stored in GRU-accessible servers.

But Russia has a significantly poorer human rights record than either the EU or the USA (note that none of these are paragons of respect for human rights). Russia's data-localization policy was motivated by a combination of legitimate national sovereignty concerns and the illegitimate desire to conduct domestic surveillance in order to identify and harass, jail, torture and murder dissidents.

When you put it this way, it's obvious that national sovereignty is important, but not as important as human rights, and when they come into conflict, we should side with human rights over sovereignty.

Some more examples: Thailand's lèse-majesté rules prohibit criticism of their corrupt monarchy. Foreigners who help Thai people circumvent blocks on reportage of royal corruption are violating Thailand's national sovereignty, but they're upholding human rights:

https://www.vox.com/2020/1/24/21075149/king-thailand-maha-vajiralongkorn-facebook-video-tattoos

Saudi law prohibits criticism of the royal family; when foreigners help Saudi women's rights activists evade these prohibitions, we violate Saudi sovereignty, but uphold human rights:

https://www.bbc.com/news/world-middle-east-55467414

In other words, "sovereignty, yes; but human rights even moreso."

Which brings me back to the precipitating incidents for the Locus column: the arrest of billionaire Telegram owner Pavel Durov in France, and the blocking of billionaire Elon Musk's Twitter in Brazil.

How do we make sense of these? Let's start with Durov. We still don't know exactly why the French government arrested him (legal systems descended from the Napoleonic Code are weird). But the arrest was at least partially motivated by a demand that Telegram conform with a French law requiring businesses to have a domestic agent to receive and act on takedown demands.

Not every takedown demand is good. When a lawyer for the Sackler family demanded that I take down criticism of his mass-murdering clients, that was illegitimate. But there is such a thing as a legitimate takedown: leaked financial information, child sex abuse material, nonconsensual pornography, true threats, etc, are all legitimate targets for takedown orders. Of course, it's not that simple. Even if we broadly agree that this stuff shouldn't be online, we don't necessarily agree whether something fits into one of these categories.

This is true even in categories with the brightest lines, like child sex abuse material:

https://www.theguardian.com/technology/2016/sep/09/facebook-reinstates-napalm-girl-photo

And the other categories are far blurrier, like doxing:

https://www.kenklippenstein.com/p/trump-camp-worked-with-musks-x-to

But just because not every takedown is a just one, it doesn't follow that every takedown is unjust. The idea that companies should have domestic agents in the countries where they operate isn't necessarily oppressive. If people who sell hamburgers from a street-corner have to register a designated contact with a regulator, why not someone who operates a telecoms network with 900m global users?

Of course, requirements to have a domestic contact can also be used as a prelude to human rights abuses. Countries that insist on a domestic rep are also implicitly demanding that the company place one of its employees or agents within reach of its police-force.

Just as data localization can be a way to improve human rights (by keeping data out of the hands of another country's lawless spy agencies) or to erode them (by keeping data within reach of your own country's lawless spy agencies), so can a requirement for a local agent be a way to preserve the rule of law (by establishing a conduit for legitimate takedowns) or a way to subvert it (by giving the government hostages they can use as leverage against companies who stick up for their users' rights).

In the case of Durov and Telegram, these issues are especially muddy. Telegram bills itself as an encrypted messaging app, but that's only sort of true. Telegram does not encrypt its group-chats, and even the encryption in its person-to-person messaging facility is hard to use and of dubious quality.

This is relevant because France – among many other governments – has waged a decades-long war against encrypted messaging, which is a wholly illegitimate goal. There is no way to make an encrypted messaging tool that works against bad guys (identity thieves, stalkers, corporate and foreign spies) but not against good guys (cops with legitimate warrants). Any effort to weaken end-to-end encrypted messaging creates broad, significant danger for every user of the affected service, all over the world. What's more, bans on end-to-end encrypted messaging tools can't stand on their own – they also have to include blocks of much of the useful internet, mandatory spyware on computers and mobile devices, and even more app-store-like control over which software you can install:

https://pluralistic.net/2023/03/05/theyre-still-trying-to-ban-cryptography/

So when the French state seizes Durov's person and demands that he establish the (pretty reasonable) minimum national presence needed to coordinate takedown requests, it can seem like this is a case where national sovereignty and human rights are broadly in accord.

But when you consider that Durov operates a (nominally) encrypted messaging tool that bears some resemblance to the kinds of messaging tools the French state has been trying to sabotage for decades, and continues to rail against, the human rights picture gets rather dim.

That is only slightly mitigated by the fact that Telegram's encryption is suspect, difficult to use, and not applied to the vast majority of the communications it serves. So where do we net out on this? In the Locus column, I sum things up this way:

- Telegram should have a mechanism to comply with lawful takedown orders; and
- those orders should respect human rights and the rule of law; and
- Telegram should not backdoor its encryption, even if the sovereign French state orders it to do so.

Sovereignty, sure, but human rights even moreso.

What about Musk? As with Durov in France, the Brazilian government demanded that Musk appoint a Brazilian representative to handle official takedown requests. Despite a recent bout of democratic backsliding under the previous regime, Brazil's current government is broadly favorable to human rights. There's no indication that Brazil would use an in-country representative as a hostage, and there's nothing intrinsically wrong with requiring foreign firms doing business in your country to have domestic representatives.

Musk's response was typical: a lawless, arrogant attack on the judge who issued the blocking order, including thinly veiled incitements to violence.

The Brazilian state's response was multi-pronged. There was a national blocking order, and a threat to penalize Brazilians who used VPNs to circumvent the block. Both measures have obvious human rights implications. For one thing, the vast majority of Brazilians who use Twitter are engaged in the legitimate exercise of speech, and they were collateral damage in the dispute between Musk and Brazil.

More serious is the prohibition on VPNs, which represents a broad attack on privacy-enhancing technology with implications far beyond the Twitter matter. Worse still, a VPN ban can only be enforced with extremely invasive network surveillance and blocking orders to app stores and ISPs to restrict access to VPN tools. This is wholly disproportionate and illegitimate.

But that wasn't the only tactic the Brazilian state used. Brazilian corporate law is markedly different from US law, with fewer protections for limited liability for business owners. The Brazilian state claimed the right to fine Musk's other companies for Twitter's failure to comply with orders to nominate a domestic representative. Faced with fines against SpaceX and Tesla, Musk caved.

In other words, Brazil had a legitimate national sovereignty interest in ordering Twitter to nominate a domestic agent, and they used a mix of somewhat illegitimate tactics (blocking orders), extremely illegitimate tactics (threats against VPN users) and totally legitimate tactics (fining Musk's other companies) to achieve these goals.

As I put it in the column:

- Twitter should have a mechanism to comply with lawful takedown orders; and
- those orders should respect human rights and the rule of law; and
- banning Twitter is bad for the free speech rights of Twitter users in Brazil; and
- banning VPNs is bad for all Brazilian internet users; and
- it’s hard to see how a Twitter ban will be effective without bans on VPNs.

There's no such thing as an internet policy fight that isn't about national sovereignty and speech, and when the two collide, we should side with human rights over sovereignty. Sovereignty isn't a good unto itself – it's only a good to the extent that it is used to promote human rights.

In other words: "Sovereignty, sure, but human rights even moreso."

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/11/06/brazilian-blowout/#sovereignty-sure-but-human-rights-even-moreso

Image: © Tomas Castelazo, www.tomascastelazo.com (modified) https://commons.wikimedia.org/wiki/File:Border_Wall_at_Tijuana_and_San_Diego_Border.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/

#speech#free speech#free expression#crypto wars#national sovereignty#elon musk#twitter#blocking orders#pavel durov#telegram#lawful interception#snowden#data localization#russia#brazil#france#cybercrime treaty#bernstein#eff#malcolm turnbull#chat control

121 notes


Control Data Corporation 607 tape drive

304 notes
