#cpu vs gpu


Brains vs Brawn: CPU for precision, GPU for power. Choose your weapon.


CPU VS GPU : What is the Difference?

Are you ready to take your computing experience to the next level? Introducing CPU vs GPU, the ultimate showdown between two powerhouses. CPU, the brainiac, excels at multitasking and running complex software with lightning speed. On the other hand, GPU, the visual virtuoso, delivers jaw-dropping graphics and unrivaled gaming performance. Whether you're a hardcore gamer, a creative genius, or a multitasking maestro, we've got you covered. Unlock the true potential of your device and immerse yourself in a world of endless possibilities. Get ready to unleash your full computing prowess with CPU vs GPU. It's time to level up: https://www.znetlive.com/blog/cpu-vs-gpu-best-use-cases-for-each/


Gaming CPU Vs GPU: Understanding The Role Of Each In Gaming Performance.


Which is better AMD or INTEL | INTEL vs AMD 2023 | For Gaming

#youtube#Which is better Amd or Intel | Intel vs Amd 2023 | For Gaming How to Choose the Right CPU in 2023: AMD Ryzen vs Intel Core Learn about Int#cpu#intel#amd ryzen#amd radeon#amd#gpu#best laptop#bestlaptop2023#which is better amd or intel#Intel vs Amd 2023#for gaming#my art#digital art#artists on tumblr#drawing#dungeons and dragons#gaming#roblox#robots#gizmo#best memes#barbie 2023#artfight 2023#How to Choose the Right CPU in 2023


yk the more i learn about the atari 2600 the more impressed i am that any games were ever made for it

the fuck do you mean developers had to manually time the game logic and graphics, with single cycle accuracy, otherwise you'd desync from the scanning beam by less than a millionth of a second and the graphics would come out all fucked up

what do you mean it has no GPU or even VRAM at all to save costs and only 128 BYTES of RAM, which due to the lack of VRAM has to be shared by both the game logic and graphics data

this thing makes the NES look like a supercomputer: the NES has a significantly faster CPU (1.79MHz vs 1.19MHz), a dedicated 5.37MHz GPU, 4.25KB of memory total (2KB RAM, 2KB of dedicated VRAM, 256B of dedicated sprite memory), far more screen space (256x240 vs 160x192), a dedicated sound chip (as far as i can tell, the 2600 handles literally EVERYTHING on the CPU), and a controller with more than one button
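For a sense of scale, the timing budget behind that "single cycle accuracy" can be worked out in a few lines. This is only a rough sketch: the NTSC colour-clock rate, the divide-by-three CPU clock, and the 228 colour clocks per scanline are commonly cited 2600 figures, not numbers from the post, so treat them as assumptions.

```python
# Rough timing/memory arithmetic for the figures quoted in the post.
# Assumptions (not from the post): an NTSC colour clock of 3,579,545 Hz,
# a 2600 CPU clock of one third of that, and 228 colour clocks per scanline.
NTSC_COLOR_CLOCK_HZ = 3_579_545
ATARI_CPU_HZ = NTSC_COLOR_CLOCK_HZ / 3
COLOR_CLOCKS_PER_SCANLINE = 228

# Duration of one CPU cycle, in microseconds.
cycle_time_us = 1_000_000 / ATARI_CPU_HZ

# CPU cycles available while the beam draws one line of the picture.
cycles_per_scanline = COLOR_CLOCKS_PER_SCANLINE // 3

# NES memory as listed in the post: 2KB RAM + 2KB VRAM + 256B sprite memory.
nes_bytes = 2 * 1024 + 2 * 1024 + 256

print(f"2600 CPU clock: {ATARI_CPU_HZ / 1e6:.2f} MHz")
print(f"one CPU cycle: {cycle_time_us:.3f} microseconds")
print(f"CPU cycles per scanline: {cycles_per_scanline}")
print(f"NES memory: {nes_bytes} bytes vs 128 bytes on the 2600")
```

Under these assumptions a single cycle lasts a bit under a microsecond, and everything the game does during one line of picture has to fit in a few dozen cycles.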


Hi! I'm not sure if you've answered this question or not or if I missed it in your pinned post. I've been dying to mod Cyberpunk for forever, and have finally decided to give it a try. I am very intimidated by the whole ordeal because I know cyberpunk has so many spec requirements (I play on console) and was wondering if you had recommendations? I'm looking to buy a PC to start my modding and visual photography journey but don't know where to start. I've scoured reddit for recommendations but keep getting mixed signals.

I've watched you slowly create your digital portfolio for Valerie over the last couple years and have just been in utter awe of your work. I've looked up to you for a while and want to follow in your footsteps.

Thank you for your time! ☺️💖

Hey there! Thank you so much for the sweet words!

You didn't miss anything, so no worries! I don't think I've ever shared my PC specs in one place. Currently I have:

Motherboard: MSI MAG Z790 Tomahawk MAX

Processor: i7-14700K (with a Cooler Master liquid cooler, I forget the exact model)

RAM: Corsair Vengeance DDR5 64GB

GPU: GeForce RTX 3070 Ti

SSD: Samsung 860 EVO 2TB

NZXT H710i ATX tower case (I think this exact model is discontinued, but I'm a fan of NZXT cases in general--They're very roomy, have good airflow, and have good cable management features)

I've built and maintained my own PCs for about a decade now, and I remember when I first made the switch from console to PC, a lot of the conventional advice I got from more seasoned PC gamers was "Build your own rig, it's cheaper, and it's not that hard." I wasn't fully convinced, though, and I did just get a pre-built gaming PC from some random company on Amazon. If you have the money and you're really intimidated at the idea of building your own, there's nothing wrong with going this route.

Once I had my pre-built, I started with upgrading individual components one at a time. Installing a new GPU, for instance, is pretty easy and fool-proof. Installing a new CPU is a little trickier, especially with all the conflicting advice on how much thermal paste to use (I've always done the grain of rice/pea-sized method and my temperatures on multiple CPUs have always been fine). Installing a new power supply unit can be overwhelming when it comes to making sure you've plugged everything in correctly. Installing a new motherboard is not too far off from building a whole new thing.

And building/maintaining a PC is pretty easy once you get past the initial intimidation. There are so many video tutorials on YouTube to explain the basics--I think I referred to Linus Tech Tips videos back in the day (which might be cringe to suggest now, idk), but if you search "how to build a gaming PC" you'll get a ton of good results back. Also, PCPartPicker is a very helpful website for crosschecking all your desired components to make sure they'll play nicely with each other.

The other big piece of advice I'd offer on building a PC is not to drive yourself crazy reading too many reviews on components. Don't go in totally blind--Still look at Reddit, Amazon reviews, NewEgg, etc. to get an idea of the product and potential issues, but be discerning. Like if you check Amazon reviews and see a common issue mentioned in multiple reviews, take note of that, but if you see one or two complaints about something random, it's probably a fluke. Either a one-off manufacturing error or (more likely, honestly) user error.

You'll probably also see a lot of debates about Intel/NVIDIA vs AMD when it comes to processors and graphics cards--I started with Intel and NVIDIA so I've really just stuck with them out of familiarity, but I think the conventional wisdom these days is that AMD processors will give you more bang for your buck when it comes to gaming.

If you do go the NVIDIA route, I've personally always found it worth the extra money to go with a Ti model of their cards--I feel like it gives me at least another year or two without starting to really feel the GPU bottleneck. I was able to play Mass Effect Andromeda on mostly high settings with my 780 Ti in 2017, and I actually started playing Cyberpunk on my 1080 Ti in 2021--I think most of my settings were on high without any notable performance issues.

Now you probably couldn't get away with that post-Phantom Liberty/update 2.0 since the game did get a lot more demanding with those updates. However, my biggest piece of advice to anyone who wants to get into PC gaming with a heavy emphasis on virtual photography is that you do not need the absolute top-of-the-line hardware to take good shots. For Cyberpunk, I think shooting for a build that lands somewhere along the lines of the minimum-to-recommended ray-tracing requirements will do you just fine.

I don't remember all my current game settings off the top of my head, but I can tell you that I have never bothered with path-tracing, my ray-tracing settings range from medium to high, and I don't natively run the game at 4K. I do hotsample to 4K when I do VP, and I do notice a difference between a 1080 and a 4K shot, but I personally don't feel like being able to constantly run it at 4K is necessary for me right now since I still only have a 1080p monitor. If I'm going to be shooting in Dogtown, which is very demanding, I'll also cap my FPS to 30 for a little extra stability.

(Also, and hopefully this doesn't muddy the waters too much, but I feel like it's worth pointing out that you could have the absolute best of the best hardware and still run into crashes and glitches for random shit that might require advanced troubleshooting--My husband had a better build than I did when he started playing CP77, but he kept running into crashes because of some weird audio driver issue that had to do with his sound system. I just recently upgraded my CPU, RAM, and motherboard, and I was going nuts over the winter because my game somehow became less stable. It turned out the main culprit was that Windows 11 has shitty Bluetooth settings.)

But in my opinion, getting good shots is less about hardware and more about 1) learning to use the tools available to you (e.g. in-game lighting tools, Reshade, and post-editing in programs like Lightroom or even free apps like Snapseed) and 2) learning the basics of real-life photography (or visual art in general), particularly when it comes to lighting, color, and composition.

I don't rely on Reshade too much because I try to minimize the amount of menus I have to futz with in-game, but I do think DOF and/or long exposure shaders are excellent for getting cleaner shots. I also like ambient fog shaders to help create more cohesive color in a shot. However, I put most of my focus on lighting and post-editing. I did talk a little bit about my methods for both in this post--It is from 2023 and my style has evolved some since then (like I mention desaturating greens in Lightroom, but I've actually been loving bold green lately and I've been cranking that shit up), but I think it still has some useful advice for anyone starting out.

For a more recent comparison of how much my Lightroom and Photoshop work affects the final product, here is a recent shot I took of Goro.

The left image is the raw shot out of the game--It has some Reshade effects (most notably the IGCS DOF), and I manually set the lighting for this scene. To do this, I set the time in-game to give me a golden hour effect (usually early morning or early evening depending on your location) so the base was very warm and orange, then I dropped the exposure and essentially "rebuilt" the lighting with AMM and CharLi lights to make Goro pop and add some more color, notably green and blue, into the scene.

And the right image is that same shot but after I did some color correcting/enhancement, sharpening, etc. in Lightroom and clip-editing and texture work in Photoshop.

Okay, this was long as hell so I'm gonna end it here, haha. If you have any more questions about anything specific here, feel free to ask! I know it can be really overwhelming and I threw a lot at ya. <333


When buying a phone

from time to time i help people around me and on here pick a cell phone. i figured it would be useful to share what i know

first of all, we stay away from the iPhone. its only advantage is its resale market, i can't argue with that

for the screen, our priority is of course AMOLED

next, the screen-to-body ratio matters. how far does the screen extend at the top and sides, and how much screen is stolen by notches and the like

the corners shouldn't be too rounded

battery capacity / life

adapter, fast charging, but not blindly

CPU/GPU (processor / graphics processor)

screen protection (Gorilla Glass etc. against scratches and cracks)

nit value (screen brightness)

Android version

resolution

PPI (pixel density)

screen refresh rate

NFC (for topping up transit cards :))

nice to have but not essential: infrared, radio, etc.

these are the criteria that come to mind for now. cell phones are unnecessarily fast these days. so unless you're going to play advanced 3d games, speed is no longer a criterion. and if you are, get Nvidia GeForce NOW, for example

in light of these criteria, a few models come up under 10,000 TL

TCL 30+ is the cheapest. don't let the price fool you. it costs almost just the price of the screen. TCL is one of the world's biggest display manufacturers

Xiaomi Redmi Note 11; Xiaomi is always a good option for price/performance anyway

the Infinix Note 12 is really worth seeing. you can tell they put real engineering effort into it. for example, there's a flash on the front camera, they've added heat sensors to protect against the potential damage of fast charging, and their own interface is good too

apart from these, Samsung is of course always a good option, and Tecno is good too. if the camera matters, Huawei's models with Leica lenses are always better
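Of the criteria in the list above, PPI is the one you can check yourself from a spec sheet: it is the screen diagonal measured in pixels divided by the diagonal in inches. A minimal sketch follows; the 1080x2400, 6.7 inch panel is a made-up example, not the spec of any phone named in the post.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# Hypothetical panel, chosen only for illustration.
example_ppi = pixels_per_inch(1080, 2400, 6.7)
print(f"1080x2400 at 6.7 inches: about {example_ppi:.0f} PPI")
```

The same resolution on a larger diagonal gives a lower PPI, which is why resolution and PPI are listed as separate criteria.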


Not sure where i would even go to talk about this so im posting it here to my tumblr.

I have this laptop, a lenovo thinkpad t420 ive been customizing mostly because of the funny weed number, but as it turns out this laptop has a few neat features and can often be bought used for under 50 usd. My first change was to, of course, upgrade the dual core sandybridge cpu to a quad core, so i ordered a 2760qm for under 20$ from ebay. The performance was good and it ran the thinkpad a little hot but it was acceptable, especially since the heatsink is only rated for 35w and the 2760qm is 45w.

My second change was to improve the cooling and I had a few original ideas. I opened up the laptop, swapped the stock heatsink for one with a gpu heatpipe that came with some t420's (but not this one obv). As part of my original idea I decided to do my own version of the "copper tape" mod where I used thermal tape instead of copper tape (this mod aims to use copper tape to join the gpu heatpipe to the main heatpipe or heatpipes). My thought process is that copper tape is probably better than nothing but the adhesive adds an insulating layer decreasing its effectiveness and copper tape isnt meant to be used like this so why not just use a tape made to move heat instead? Thermal tape should in theory be much better than copper tape since its actually designed for this kind of work, with the downside being it will eventually dry out like thermal pads and paste. I didnt just experiment with this new mod though, I also tried adding thermal pads to the underside of the gpu heatpipe where it would contact the gpu, the idea here being thick folded thermal pads would fill the gap well and drain heat from around the cpu through the board. I figured it would at least be better than covering the whole gpu pipe with tape. Finally i drilled some holes beneath the fan for added airflow using a design printed on paper and taped to the inside of the case as a stencil. While I had the laptop disassembled I figured i might as well swap the cpu out one more time since i noticed an elitebook I got had a 2860qm, the fastest 45w sandybridge laptop cpu. I also applied some thermal grizzly duronaut thermal paste since its added thickness should fill the slightly larger space between the cpu die and heatsink better.

And finally the results were astounding, I can stream 1080p 60 fps video and the fans hardly make a sound, the laptop is unbelievably cool and quiet for a 14 year old cpu. Even the passmark scores were incredible, its multicore score in windows was 5268 and 5168 in linux vs the average of 4559, over 10% increase with just a cooling mod! Its singlecore scores were even better though with a 1717 in windows and an 1826 in linux vs the average of 1562, roughly a 10% boost in windows and a 17% boost in linux with just a cooling mod, no overclocking OR underclocking! This 2860qm is besting the 2960xm by a large amount and its in spitting distance of the next generation's best chip the 3940xm!
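To put the passmark numbers side by side, here is a small sketch that turns each score into a percentage gain over the quoted average. The scores and averages are the ones from the post; the `percent_gain` helper is just an illustrative name.

```python
def percent_gain(score: float, baseline: float) -> float:
    """Return how far `score` sits above `baseline`, in percent."""
    return (score / baseline - 1) * 100


AVERAGE_MULTICORE = 4559
AVERAGE_SINGLECORE = 1562

# (test, operating system) -> (measured score, average score), all from the post.
results = {
    ("multicore", "windows"): (5268, AVERAGE_MULTICORE),
    ("multicore", "linux"): (5168, AVERAGE_MULTICORE),
    ("singlecore", "windows"): (1717, AVERAGE_SINGLECORE),
    ("singlecore", "linux"): (1826, AVERAGE_SINGLECORE),
}

for (test, os_name), (score, average) in results.items():
    print(f"{test:10} {os_name:8} {percent_gain(score, average):+.1f}%")
```

The gains depend on both the test and the operating system, so a single headline percentage hides a fair bit of spread.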

Im not sure if i lucked out and found a golden superperforming 2860qm or if i discovered a new goated cooling mod for the t420/t430, i corebooted the laptop the other day and i have a 3940xm on its way from china so im gonna figure it out. Dont worry if the 3940xm is somehow too hot for my mod i have a w520 to put it in and a 3632qm on its way too, although if it is just a golden 2860qm i might keep using it since its scores are also much higher than the average 3632qm!

Theres other mods you can do, and im doing most of them. I already installed a modded bios to remove the wifi whitelist and ordered a wifi 7 compatible adapter, the modded bios also allows faster ram so i ordered 2 8gb 1866mhz ddr3l sticks. I got an express card to dual usb 3.0 adapter, I also bought a w520 charger and cut out the wedges so it would fit the t420. I threw in a 2tb msata ssd, found an xl slice battery for cheap on ebay (two 9 cell batteries installed, i get over 10 hours of hd youtube streaming!) And lastly i ordered a 1080p ips panel and an adapter board for it. This is a lovely laptop with one of the best keyboards ive used ever, and its performance is astoundingly good.

For someone not wanting to go as far as me you could get a used t420 or t430 for under 50$, a 256gb ssd for 10$, a cheap quad core for 20$ or less and a charger for around 14$. Thats a sturdy, fast, reliable, and genuinely cool laptop for under 100$. It is luck of the draw if you get a good batt or not though, of the three I got, one 9 cell was in perfect health, another 9 cell was worn but usable and the 6 cell was mostly used up, add 20$ if you get unlucky with the battery and another 10$ for an 8gb ram stick if yours comes with 4gb or less.
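The budget math above adds up as follows. This sketch uses the upper-end prices quoted in the post (used-market prices will vary) and treats the battery and ram as optional extras.

```python
# Upper-end prices in USD as quoted in the post; real listings will differ.
base_parts = {
    "used t420/t430": 50,
    "256gb ssd": 10,
    "quad core cpu": 20,
    "charger": 14,
}

# Only needed if the battery is worn out or the laptop ships with 4gb or less.
optional_parts = {
    "replacement battery": 20,
    "8gb ram stick": 10,
}

base_total = sum(base_parts.values())
worst_case_total = base_total + sum(optional_parts.values())

print(f"base build: {base_total}$")
print(f"with battery and ram: {worst_case_total}$")
```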

#t420#thinkpad#sandybridge#sandy bridge#intel#lenovo#t430#w520#mods#computer#pc#linux#windows#thinkpad mod#ivybridge#2860qm#2760qm#3940xm#coreboot#libreboot#3632qm#2960xm#passmark#performance test#performancetest#lucky#vintage laptop#laptop#laptops#laptop mods


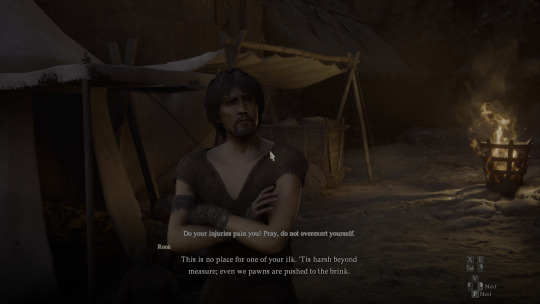

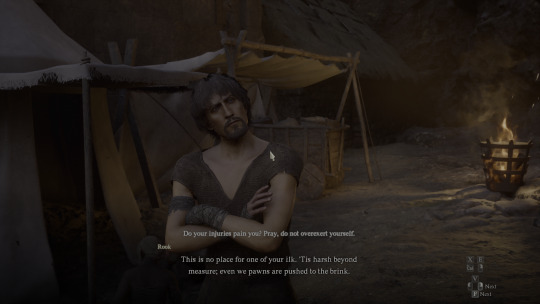

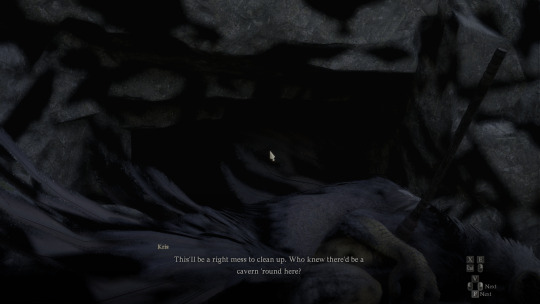

Can everyone appreciate (hybrid) path tracing with me? Apparently, it was implemented but not finished in the game. While full path tracing is there, it's missing stuff like effects on weapons and subsurface scattering. Hybrid path tracing is most noticeable outdoors during the day, and mostly relies on vanilla raytracing at night/indoors if I remember correctly. Hardware Unboxed did a video on it last year when it was first discovered, and I've been burning my CPU/GPU on it ever since.

I have a few comparisons, ASVGF/vanilla RTGI/raytracing vs (hybrid) path tracing. Expect pointillism in path tracing pics due to the lack of denoiser:

The reflections on the armor! The skin tone and colors of clothing! The detail and depth added to the hair, fur, fabric, staff, and the wall! I love lighting. Think about its impact when used in the visual arts. You can pry lighting out of my cold dead hands

I have more examples below the cut too if anyone cares for them:

I realize most of them are on cloudy/dim days but there's still a noticeable difference. I remember on my first playthrough I was so confused by the cavern comment and didn't even go back to check for ages. ASVGF softens things (due to the denoiser?). The third pic in the first and third set is supposed to be ASVGF cloned over the hybrid path tracing layer to remove some noise and add back the ASVGF/RTGI part which is nice in some ways and meh in others. I've been sticking to hybrid path tracing these days

thank you for listening to my TED talk

#cries in path tracing#imagine if this was actually implemented/an option in the vanilla game#but it's a performance killer#it's on REFramework if you want to take a peep#dragon's dogma 2#dragons dogma 2#dragon's dogma#dragon's dogma ii#dd2#dragons dogma ii#dragonsdogma#dragons dogma


Do you know what this is? Probably not. But if you follow me and enjoy retro gaming, you REALLY should know about it.

I see all of these new micro consoles, and retro re-imaginings of game consoles and I think to myself "Why?" WHY would you spend a decent chunk of your hard-earned money on some proprietary crap hardware that can only play games for that specific system?? Or even worse, pre-loaded titles and you can't download / add your own to the system!? Yet, people think it's great and that seems to be a very popular way to play their old favorites vs. emulation which requires a "certain degree of tech savvy" (and might be frowned upon from a legal perspective).

So, let me tell you about the Mad Catz M.O.J.O (and I don't think the acronym actually means anything). This came out around the same time as the nVidia Shield and the Ouya - seemingly a "me too" product from a company that is notorious for oddly shaped 3rd party game controllers that you would never personally use, instead reserved exclusively for your visiting friends and / or younger siblings. It's an Android micro console with a quad-core 1.8 GHz nVidia Tegra 4 processor, 2 GB of RAM, 16GB of onboard storage (expandable via SD card), running Android 4.2.2. Nothing amazing here from a hardware perspective - but here's the thing most people overlook - it's running STOCK Android - which means all the bloatware crap that is typically installed on your regular consumer devices, smartphones, etc. isn't consuming critical hardware resources - so you have most of the power available to run what you need. Additionally, you get a GREAT controller (which is surprising given my previous comment about the friend / sibling thing) that is a very familiar format for any retro-age system, but also has the ability to work as a mouse - so basically, the same layout as an Xbox 360 controller + 5 additional programmable buttons which come in very handy if you are emulating. It is super comfortable and well-built - my only negative feedback is that it's a bit on the "clicky" side - not the best for environments where you need to be quiet, otherwise very solid.

Alright, now that we've covered the hardware - what can it run? Basically any system from N64 on down will run at full speed (even PSP titles). It can even run an older version of the Dreamcast emulator, Reicast, which actually performs quite well from an FPS standpoint, but the emulation is a bit glitchy. Obviously, RetroArch is the way to go for emulation of most older game systems, but I also run DOSBox and a few standalone emulators which seem to perform better vs. their RetroArch core equivalents (list below). I won't get into all of the setup / emulation guide nonsense, you can find plenty of walkthroughs on YouTube and elsewhere - but I will tell you from experience - Android is WAY easier to set up for emulation vs. Windows or another OS. And since this is stock Android, there is very little in the way of restrictions to the file system, etc. to manage your setup.

I saved the best for last - and this is truly why you should check out the M.O.J.O. if you are even remotely curious. Yes, it was discontinued years ago (2019, I think). It has not been getting updates - but even so, it continues to run great, and is extremely reliable and consistent for retro emulation. These regularly sell on eBay for around $60 BRAND NEW with the controller included. You absolutely can't beat that for a fantastic emulator-ready setup that will play anything from the 90s without skipping a beat. And additional controllers are readily available, new, on eBay as well.

Here's a list of the systems / emulators I run on my setup:

Arcade / MAME4droid (0.139u1) 1.16.5 or FinalBurn Alpha / aFBA 0.2.97.35 (aFBA is better for Neo Geo and CPS2 titles bc it provides GPU-driven hardware acceleration vs. MAME which is CPU only)

NES / FCEUmm (RetroArch)

Game Boy / Emux GB (RetroArch)

SNES / SNES9X (RetroArch)

Game Boy Advance / mGBA (RetroArch)

Genesis / PicoDrive (RetroArch)

Sega CD / PicoDrive (RetroArch)

32X / PicoDrive (RetroArch)

TurboGrafx 16 / Mednafen-Beetle PCE (RetroArch)

Playstation / ePSXe 2.0.16

N64 / Mupen64 Plus AE 2.4.4

Dreamcast / Reicast r7 (newer versions won't run)

PSP / PPSSPP 1.15.4

MS-DOS / DOSBox Turbo + DOSBox Manager

I found an extremely user friendly Front End called Gamesome (image attached). Unfortunately it is no longer listed on Google Play, but you can find the APK posted on the internet to download and install. If you don't want to mess with that, another great, similar Front End that is available via Google Play is called DIG.

If you are someone who enjoys emulation and retro-gaming like me, the M.O.J.O. is a great system and investment that won't disappoint. If you decide to go this route and have questions, DM me and I'll try to help you if I can.

Cheers - Techturd

#retro gaming#emulation#Emulators#Android#Nintendo#Sega#Sony#Playstation#N64#Genesis#Megadrive#Mega drive#32x#Sega cd#Mega cd#turbografx 16#Pc engine#Dos games#ms dos games#ms dos#Psp#Snes#Famicom#super famicom#Nes#Game boy#Gameboy#gameboy advance#Dreamcast#Arcade


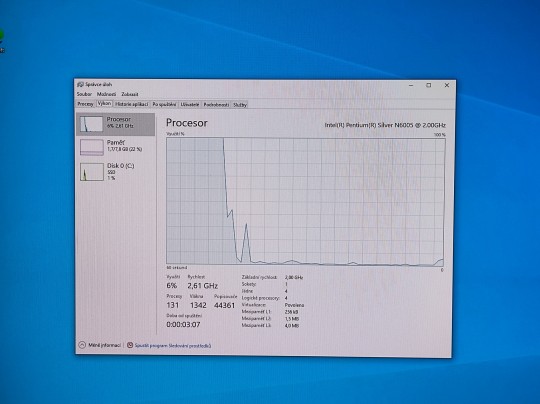

yo, absolute score. The guy I bought the board from said it was the Intel N5105 chip but it's actually the higher performance N6005 one. Small bump in CPU performance but a big improvement on the integrated GPU, 32 vs 24 Execution Units with an extra 100MHz of GPU clock.
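As a rough way to size up that GPU bump, execution units multiplied by clock gives a crude ceiling on relative throughput. The 24 and 32 EU counts and the 100MHz gap come from the post; the absolute 800MHz and 900MHz maximum clocks are assumed here from memory of Intel's spec sheets, and real performance also depends on things like memory bandwidth.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntegratedGpu:
    name: str
    execution_units: int
    max_clock_mhz: int  # assumed values, see the note above

    def relative_throughput(self) -> int:
        """Crude proxy: execution units times maximum clock."""
        return self.execution_units * self.max_clock_mhz


n5105 = IntegratedGpu("N5105", execution_units=24, max_clock_mhz=800)
n6005 = IntegratedGpu("N6005", execution_units=32, max_clock_mhz=900)

uplift = n6005.relative_throughput() / n5105.relative_throughput()
print(f"theoretical GPU uplift, {n6005.name} over {n5105.name}: {uplift:.2f}x")
```

Treat the result as an upper bound on paper rather than a benchmark.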

it's also just, SO small. I didn't realise until last night that a 110×110mm footprint means it's smaller than a standard PC cooling fan.


Understand the primary distinctions between the CPU and the GPU

Because of their unique advantages, the CPU and the GPU will each be able to make a substantial contribution to meeting future computing needs. CPUs and GPUs have different architectures and designs from one another. The combination of CPU and GPU, along with enough RAM, makes for an excellent testbed for deep learning and artificial intelligence. The CPU vs. GPU debate is over. You now more than ever need both to meet your varied computing needs. Read more in detail: https://www.znetlive.com/blog/cpu-vs-gpu-best-use-cases-for-each/


How AMD is Leading the Way in AI Development

Introduction

In today's rapidly evolving technological landscape, artificial intelligence (AI) has emerged as a game-changing force across various industries. One company that stands out for its pioneering efforts in AI development is Advanced Micro Devices (AMD). With its innovative technologies and cutting-edge products, AMD is pushing the boundaries of what is possible in the realm of AI. In this article, we will explore how AMD is leading the way in AI development, delving into the company's unique approach, competitive edge over its rivals, and the impact of its advancements on the future of AI.

Competitive Edge: AMD vs Competition

When it comes to AI development, competition among tech giants is fierce. However, AMD has managed to carve out a niche for itself with its distinct offerings. Unlike some of its competitors who focus solely on CPUs or GPUs, AMD has excelled in both areas. The company's commitment to providing high-performance computing solutions tailored for AI workloads has set it apart from the competition.


AMD at GPU

AMD's graphics processing units (GPUs) have been instrumental in driving advancements in AI applications. With their parallel processing capabilities and massive computational power, AMD GPUs are well-suited for training deep learning models and running complex algorithms. This has made them a preferred choice for researchers and developers working on cutting-edge AI projects.

Innovative Technologies of AMD

One of the key factors that have propelled AMD to the forefront of AI development is its relentless focus on innovation. The company has consistently introduced new technologies that cater to the unique demands of AI workloads. From advanced memory architectures to efficient data processing pipelines, AMD's innovations have revolutionized the way AI applications are designed and executed.

AMD and AI

The synergy between AMD and AI is undeniable. By leveraging its expertise in hardware design and optimization, AMD has been able to create products that accelerate AI workloads significantly. Whether it's through specialized accelerators or optimized software frameworks, AMD continues to push the boundaries of what is possible with AI technology.

The Impact of AMD's Advancements

The impact of AMD's advancements in AI development cannot be overstated. By providing researchers and developers with powerful tools and resources, AMD has enabled them to tackle complex problems more efficiently than ever before. From healthcare to finance to autonomous vehicles, the applications of AI powered by AMD technology are limitless.

FAQs About How AMD Leads in AI Development

1. What makes AMD stand out in the field of AI development?

Answer: AMD's commitment to innovation and its holistic approach to hardware design give it a competitive edge over other players in the market.

2. How do AMD GPUs contribute to advancements in AI?

Answer: AMD GPUs offer unparalleled computational power and parallel processing capabilities that are essential for training deep learning models.

3. What role does innovation play in AMD's success in AI development?

Answer: Innovation lies at the core of AMD's strategy, driving the company to introduce groundbreaking technologies tailored for AI work


GPU vs. CPU for Gaming: GPU Dedicated Servers for High Speed

Check out the key differences between CPUs & GPUs for gaming. Know how GPU dedicated servers improve gaming performance. Get the Best GPU dedicated servers under budget.

#artificial intelligence#linux#marketing#python#programming#vps hosting#web hosting#entrepreneur#branding#startup

