#credential binding plugin

Secure Your WordPress Site: Prevent SQL Injection (SQLi) Attacks

SQL Injection (SQLi) in WordPress: Protect Your Website

SQL Injection (SQLi) attacks are a common security threat for websites using databases, and WordPress sites are no exception. A successful SQLi attack can expose your database, allowing attackers to manipulate data or even take full control of your site. This post explores how SQLi affects WordPress, demonstrates a preventive coding example, and shows how you can use our free website security checker to scan for vulnerabilities.

What Is SQL Injection (SQLi)?

SQL Injection (SQLi) is a security vulnerability that allows attackers to insert or “inject” malicious SQL code into a query. If not protected, SQLi can lead to unauthorized access to your database, exposing sensitive data like user information, login credentials, and other private records. WordPress sites, especially those with outdated plugins or themes, are at risk if proper security practices are not implemented.

How SQL Injection Affects WordPress Sites

SQL injection attacks usually target input fields that accept user data. In a WordPress environment, login forms, search boxes, or comment sections can be potential entry points. Without proper sanitization and validation, these fields might allow attackers to execute harmful SQL commands.

To protect your WordPress site, it’s essential to:

Sanitize user inputs: This prevents harmful characters or commands from being submitted.

Use prepared statements: Prepared statements keep user input separate from the SQL text and bind it as typed data, so injected SQL is never executed as part of the query.

Regularly update plugins and themes: Many SQLi vulnerabilities come from outdated software.

Coding Example to Prevent SQL Injection (SQLi) in WordPress

Here's a simple PHP example to show how you can prevent SQL injection by using prepared statements in WordPress:

php

global $wpdb;

// Input parameter; fall back to 0 when it is missing
$user_id = isset($_GET['user_id']) ? $_GET['user_id'] : 0;

// Using prepared statements to prevent SQL injection.
// $wpdb->users resolves to the users table with the site's table prefix.
$query = $wpdb->prepare("SELECT * FROM {$wpdb->users} WHERE ID = %d", $user_id);
$user = $wpdb->get_results($query);

if ($user) {
    echo "User found: " . esc_html($user[0]->user_login);
} else {
    echo "User not found.";
}

In this example:

$wpdb->prepare() ensures the user ID input is treated as an integer (%d), protecting against SQLi.

esc_html() escapes the output, so any HTML stored in the data is displayed as plain text instead of being interpreted by the browser.
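To see why binding matters, here is a minimal sketch of the same idea outside WordPress. It uses Python's built-in sqlite3 module rather than $wpdb, and the table, the function names, and the payload are all assumptions made up for illustration. It contrasts a query built by string concatenation with one that binds the value as a parameter.

```python
import sqlite3

# Hypothetical in-memory table standing in for a users table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, user_login TEXT)")
conn.executemany(
    "INSERT INTO users (id, user_login) VALUES (?, ?)",
    [(1, "admin"), (2, "editor")],
)


def find_user_unsafe(user_id: str) -> list:
    # Vulnerable: the input is pasted into the SQL text itself.
    query = "SELECT user_login FROM users WHERE id = " + user_id
    return conn.execute(query).fetchall()


def find_user_safe(user_id: str) -> list:
    # Safer: validate the type first, then bind the value as a parameter
    # so it is never parsed as SQL.
    try:
        numeric_id = int(user_id)
    except ValueError:
        return []
    return conn.execute(
        "SELECT user_login FROM users WHERE id = ?", (numeric_id,)
    ).fetchall()


payload = "1 OR 1=1"
unsafe_rows = find_user_unsafe(payload)  # injected condition matches every row
safe_rows = find_user_safe(payload)      # not a number, so nothing is returned
```

With the concatenated query, the payload turns a single-user lookup into a dump of the whole table. The bound version treats the same input purely as data.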

Detecting SQLi Vulnerabilities with Our Free Tool

Using our free Website Security Checker, you can scan your WordPress site for SQL injection risks. The tool is easy to use and provides a detailed vulnerability assessment, allowing you to address potential security issues before attackers can exploit them.

The free tool generates a vulnerability report that outlines any risks discovered, helping you take proactive measures to protect your site.

Best Practices for Securing Your WordPress Site

In addition to using prepared statements and scanning for vulnerabilities, here are some best practices for securing your WordPress site:

Limit user permissions: Ensure that only trusted accounts have administrative access.

Implement firewall protection: Firewalls can block malicious IPs and provide extra security layers.

Regularly back up your database: In case of an attack, a backup helps restore your data quickly.

Use a strong password policy: Encourage users to create complex passwords and update them periodically.

Conclusion

Securing your WordPress site from SQL Injection is crucial for safeguarding your data and users. By implementing prepared statements, validating inputs, and using security tools like our free Website Security Checker, you can reduce the risk of SQLi vulnerabilities. Take a proactive approach to your site’s security to ensure it remains safe from attacks.

Explore our free website security tool today to check your WordPress site for potential vulnerabilities, and start building a more secure web presence.

#cyber security#cybersecurity#data security#pentesting#security#sql#sqlserver#the security breach show#wordpress

Jenkins: Keep Secrets Secret

Problem

It’s often helpful to use Jenkins to manage secrets (e.g. passwords, API keys, credentials). This post explains how to do just that for Jenkins pipelines.

Solution

Install the Credentials Binding Plugin on your Jenkins instance in https://my.instance.com/pluginManager/available

Add the following code to the appropriate place in your pipeline

The above example shows…

#api key#binding plugin#credential binding plugin#credentials#jenkins#jenkins binding plugin#jenkins credential binding plugin#jenkins pipeline#password#passwords#pipeline#plugin#secret#secret storage#secrets#security

The Ultimate WordPress.org Security Checklist for Protecting Your Website From Online Threats

Home security and WordPress.org security work the same way. You shut the doors, windows, and any other openings when you leave your house. The same principle applies to websites.

Before we move ahead, we highly recommend learning the difference between WordPress.com and WordPress.org so that you take the right measures.

In 2022, no one should take a WordPress.org site's security lightly. Take preventive measures by following these WordPress.org security tips. Most WordPress consultants recommend them because they are highly effective.

These tips will help protect your WordPress.org site from a range of security threats and hackers.

A well-secured WordPress.org site is not easy to hack. If your site is not secured, however, attackers will probe it for weaknesses and look for the smallest security gap that would let them access your server.

You can prevent hackers and malicious software from breaching your WordPress.org website by understanding why it needs a solid security plan and how you can protect it.

Let's get started!

Why Do You Need WordPress.org Security?

Let's discuss why security is a top priority for every successful WordPress.org website. Also, remember that we are talking about WordPress.org, not WordPress.com.

It keeps your personal information safe

There is no limit to what an attacker may do with personal information about you or your website users. Security breaches put you at risk of identity theft, ransomware, server failure, and many other terrible things. None of these events is good for the development and reputation of your company, and each is typically a significant waste of time, money, and effort.

Safeguard visitors

Your visitors' expectations of how you handle problems will grow as your firm expands. Keeping your visitors' information secure is one of those expectations. Handling it well matters because it builds trust between your business and its customers.

Google suggests website security.

One of the most important aspects of managing a highly-ranked website is keeping your WordPress.org website secure.

Website security has long affected how visible a website is on Google (and other search engines). Keeping your site secure is one of the simplest ways to protect your search ranking.

Let's look at how you can keep your website secure.

WordPress.org Security Best Checklist

Now that you understand why it is important to secure your website, here is the checklist for you!

Secure managed cloud server.

End-to-end Encryption.

Firewall protection.

Website isolation.

IP Allowlisting for secure SSH and SFTP accesses.

Database Security.

Frequent OS patching and updating.

Bruteforce Attack prevention

Bot Protection

Latest PHP version support.

Latest database version support.

SSL certificate for HTTPS.

WordPress.org Security Checklist [Client-side]

Updated WordPress.org Core.

Use .htaccess password protection for wp-admin.

Use a strong password.

Change the WordPress.org default login URL.

Limit login attempts.

Updated WordPress.org themes.

Replace outdated plugins with an alternative updated plugin where possible.

Avoid downloading WooCommerce extensions from unauthorized resources.

Take frequent backups.

Use the best WordPress plugins for security.

Use two-factor authentication for login into wp-admin.

Use Google reCAPTCHA on all forms.

Updated plugins.

Never use nulled WordPress.org themes.

Never use nulled WordPress.org plugins.

Hide the WordPress.org version number.

Remove all unused themes and plugins.

Disable the REST API if not required.

Change WordPress.org credentials regularly.

Use user management for distributed access.

Common WordPress.org Security Issues

The most common types of cyberattacks on WordPress.org websites are:

Brute Force

This is one of the simple yet common WordPress.org security threats. A brute-force login is when an attacker uses automation to swiftly enter several username-password combinations in hopes of guessing the correct information. In addition to logins, any password-protected information can be accessed by brute-force hacking.
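The usual counter to brute-force logins is the "limit login attempts" item from the checklist. As a rough sketch of how such a limiter can work (written in Python for illustration, not taken from any WordPress plugin; the class name, threshold, and window are assumptions), failed attempts are counted per client within a sliding time window:

```python
import time
from collections import defaultdict, deque

MAX_ATTEMPTS = 5        # assumed threshold
WINDOW_SECONDS = 300    # assumed look-back window (5 minutes)


class LoginLimiter:
    """Tracks recent failed logins per key (for example, an IP address)."""

    def __init__(self, max_attempts=MAX_ATTEMPTS, window=WINDOW_SECONDS):
        self.max_attempts = max_attempts
        self.window = window
        self._failures = defaultdict(deque)

    def _prune(self, key, now):
        # Drop failures that are older than the look-back window.
        attempts = self._failures[key]
        while attempts and now - attempts[0] >= self.window:
            attempts.popleft()

    def is_blocked(self, key, now=None):
        now = time.time() if now is None else now
        self._prune(key, now)
        return len(self._failures[key]) >= self.max_attempts

    def record_failure(self, key, now=None):
        now = time.time() if now is None else now
        self._prune(key, now)
        self._failures[key].append(now)


limiter = LoginLimiter(max_attempts=3, window=60)
for second in range(3):
    limiter.record_failure("203.0.113.7", now=second)

blocked_now = limiter.is_blocked("203.0.113.7", now=10)     # three failures in the window
blocked_later = limiter.is_blocked("203.0.113.7", now=120)  # old failures have expired
```

A login handler would call is_blocked before checking the password and record_failure after each wrong guess, which makes automated guessing far slower.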

Cross-Site Scripting (XSS)

In a cross-site scripting (XSS) attack, an attacker "injects" malicious code into pages that the target website serves, so that the code runs in visitors' browsers, where it can gather data and disrupt the site's functionality. The code might be submitted through a user-facing form or added through more complicated techniques.
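One common defence against XSS is to escape user-supplied text before writing it into a page. The sketch below shows the idea in Python with the standard html module. The render_comment function and the sample payload are hypothetical, and a WordPress site would use its own escaping functions instead.

```python
import html


def render_comment(author: str, body: str) -> str:
    # Escape every user-supplied value before placing it in HTML.
    safe_author = html.escape(author)
    safe_body = html.escape(body)
    return f"<p><strong>{safe_author}</strong>: {safe_body}</p>"


malicious = '<script>alert("xss")</script>'
rendered = render_comment("visitor", malicious)
```

After escaping, the browser shows the script tag as text instead of running it.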

Database Injections

This is also referred to as SQL injection. It occurs when an attacker submits a string of malicious SQL through user input, such as a contact form, and the website includes that input in a database query without validating it. The database then executes the injected SQL, which lets the attacker read, modify, or delete private data kept in the database.

Backdoors

A backdoor is a file that contains code that enables an attacker to access your website at any time by avoiding the required WordPress.org login. Backdoors are frequently hidden among other WordPress.org source files by attackers, making them challenging for novice users to find.

Attackers can create variations of this backdoor and use them to continue avoiding your login even after it has been deleted.

Summing up

Security experts are constantly developing new strategies to stop cybercriminals from using companies' online presence against them. We are all stuck in the center of this never-ending cycle of internet security. To give your clients one less thing to be concerned about, always consider their safety.

Additionally, you may find us on LinkedIn to get better insights on WordPress-related information.

#WordPress.com vs WordPress.org#WordPress.com and .org difference#WordPress.org VS WordPress.com#WordPress.com VS .org

CVE-2022-20616

Jenkins Credentials Binding Plugin 1.27 and earlier does not perform a permission check in a method implementing form validation, allowing attackers with Overall/Read access to validate if a credential ID refers to a secret file credential and whether it's a zip file. source https://cve.report/CVE-2022-20616
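The class of bug described here can be pictured with a small model. The Python sketch below is not Jenkins code and does not reproduce the plugin's API. The credential store, the "configure" permission name, and both functions are hypothetical, chosen only to show how a validation endpoint without a permission check leaks information through its answers.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    permissions: set = field(default_factory=set)


# Hypothetical credential store: ID -> credential kind.
CREDENTIALS = {"deploy-bundle": "secret-file", "api-token": "secret-text"}


def check_credential_unsafe(user: User, credential_id: str) -> str:
    # Flawed pattern: answers for any caller, so the response itself
    # reveals whether the ID refers to a secret file credential.
    kind = CREDENTIALS.get(credential_id)
    return "ok" if kind == "secret-file" else "not a secret file"


def check_credential_safe(user: User, credential_id: str) -> str:
    # Safer pattern: callers without the needed permission get a neutral
    # answer that does not depend on the credential store.
    if "configure" not in user.permissions:
        return ""
    kind = CREDENTIALS.get(credential_id)
    return "ok" if kind == "secret-file" else "not a secret file"


reader = User("reader", {"read"})
admin = User("admin", {"read", "configure"})
```

In this model a read-only user can probe credential IDs through the unsafe function, while the safe one returns the same empty answer for every ID.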

Make Docker Run Without Sudo

The Docker daemon binds to a Unix socket instead of a TCP port. By default that Unix socket is owned by the user root and other users can only access it using sudo. The Docker daemon always runs as the root user. If you don’t want to preface the docker command with sudo, create a Unix group called docker and add users to it. On Windows, I did find one solution that requires third-party software. AlwaysUp allows Docker to run at startup without the need to log in. I followed the instructions, except that rather than Docker Tools as the executable to run, I pointed it to dockerd.exe. I restarted the server, and sure enough I can now connect to my remote daemon.

Make Docker Run Without Sudo Command

Make Docker Run Without Sudo File

Without that group, commands need the sudo prefix, for example: sudo docker run hello-world. Ubuntu Utopic 14.10 and 15.04 exist in Docker’s apt repository without official support. Upgrade to 15.10 or preferably 16.04. A container is an executable unit of software where an application and its run time dependencies can all be packaged together into one entity. Since everything needed by the application is packaged with the application itself, containers provide a degree of isolation from the host and make it easy to deploy and install the application without having to worry about the host environment.

Table of Contents

Alternate installation methods

Certbot-Auto

Certbot is meant to be run directly on a web server, normally by a system administrator. In most cases, running Certbot on your personal computer is not a useful option. The instructions below relate to installing and running Certbot on a server.

System administrators can use Certbot directly to request certificates; they should not allow unprivileged users to run arbitrary Certbot commands as root, because Certbot allows its user to specify arbitrary file locations and run arbitrary scripts.

Certbot is packaged for many common operating systems and web servers. Check whether certbot (or letsencrypt) is packaged for your web server’s OS by visiting certbot.eff.org, where you will also find the correct installation instructions for your system.

Note

Unless you have very specific requirements, we kindly suggest that you use the installation instructions for your system found at certbot.eff.org.

Certbot currently requires Python 2.7 or 3.6+ running on a UNIX-like operating system. By default, it requires root access in order to write to /etc/letsencrypt, /var/log/letsencrypt, /var/lib/letsencrypt; to bind to port 80 (if you use the standalone plugin) and to read and modify webserver configurations (if you use the apache or nginx plugins). If none of these apply to you, it is theoretically possible to run without root privileges, but for most users who want to avoid running an ACME client as root, either letsencrypt-nosudo or simp_le are more appropriate choices.

The Apache plugin currently requires an OS with augeas version 1.0; currently it supports modern OSes based on Debian, Ubuntu, Fedora, SUSE, Gentoo and Darwin.

If you are offline or your operating system doesn’t provide a package, you can use an alternate method for installing certbot.

Most modern Linux distributions (basically any that use systemd) can install Certbot packaged as a snap. Snaps are available for x86_64, ARMv7 and ARMv8 architectures. The Certbot snap provides an easy way to ensure you have the latest version of Certbot with features like automated certificate renewal preconfigured.

You can find instructions for installing the Certbot snap at https://certbot.eff.org/instructions by selecting your server software and then choosing “snapd” in the “System” dropdown menu. (You should select “snapd” regardless of your operating system, as our instructions are the same across all systems.)

Docker is an amazingly simple and quick way to obtain a certificate. However, this mode of operation is unable to install certificates or configure your webserver, because our installer plugins cannot reach your webserver from inside the Docker container.

Most users should use the instructions at certbot.eff.org. You should only use Docker if you are sure you know what you are doing and have a good reason to do so.

You should definitely read the Where are my certificates? section, in order to know how to manage the certs manually. Our ciphersuites page provides some information about recommended ciphersuites. If none of these make much sense to you, you should definitely use the installation method recommended for your system at certbot.eff.org, which enables you to use installer plugins that cover both of those hard topics.

If you’re still not convinced and have decided to use this method, from the server that the domain you’re requesting a certificate for resolves to, install Docker, then issue a command like the one found below. If you are using Certbot with the Standalone plugin, you will need to make the port it uses accessible from outside of the container by including something like -p 80:80 or -p 443:443 on the command line before certbot/certbot.

Running Certbot with the certonly command will obtain a certificate and place it in the directory /etc/letsencrypt/live on your system. Because Certonly cannot install the certificate from within Docker, you must install the certificate manually according to the procedure recommended by the provider of your webserver.

There are also Docker images for each of Certbot’s DNS plugins available at https://hub.docker.com/u/certbot which automate doing domain validation over DNS for popular providers. To use one, just replace certbot/certbot in the command above with the name of the image you want to use. For example, to use Certbot’s plugin for Amazon Route 53, you’d use certbot/dns-route53. You may also need to add flags to Certbot and/or mount additional directories to provide access to your DNS API credentials as specified in the DNS plugin documentation.

For more information about the layout of the /etc/letsencrypt directory, see Where are my certificates?.

Warning

While the Certbot team tries to keep the Certbot packages offered by various operating systems working in the most basic sense, due to distribution policies and/or the limited resources of distribution maintainers, Certbot OS packages often have problems that other distribution mechanisms do not. The packages are often old resulting in a lack of bug fixes and features and a worse TLS configuration than is generated by newer versions of Certbot. They also may not configure certificate renewal for you or have all of Certbot’s plugins available. For reasons like these, we recommend most users follow the instructions at https://certbot.eff.org/instructions and OS packages are only documented here as an alternative.

Arch Linux

Debian

If you run Debian Buster or Debian testing/Sid, you can easily install certbot packages through commands like:

If you run Debian Stretch, we recommend you use the packages in Debian backports repository. First you’ll have to follow the instructions at https://backports.debian.org/Instructions/ to enable the Stretch backports repo, if you have not already done so. Then run:

In all of these cases, there are also packages available to help Certbot integrate with Apache, nginx, or various DNS services. If you are using Apache or nginx, we strongly recommend that you install the python-certbot-apache or python-certbot-nginx package so that Certbot can fully automate HTTPS configuration for your server. A full list of these packages can be found through a command like:

They can be installed by running the same installation command above but replacing certbot with the name of the desired package.

Ubuntu

If you run Ubuntu, certbot can be installed using:

Optionally to install the Certbot Apache plugin, you can use:

Fedora

FreeBSD

Port: cd /usr/ports/security/py-certbot && make install clean

Package: pkg install py27-certbot

Gentoo

The official Certbot client is available in Gentoo Portage. From the official Certbot plugins, three of them are also available in Portage. They need to be installed separately if you require their functionality.

Note

The app-crypt/certbot-dns-nsone package has a different maintainer than the other packages and can lag behind in version.

NetBSD

Build from source: cd /usr/pkgsrc/security/py-certbot && make install clean

Install pre-compiled package: pkg_add py27-certbot

OpenBSD

Port: cd /usr/ports/security/letsencrypt/client && make install clean

Package: pkg_add letsencrypt

Other Operating Systems

OS packaging is an ongoing effort. If you’d like to package Certbot for your distribution of choice please have a look at the Packaging Guide.

We used to have a shell script named certbot-auto to help people install Certbot on UNIX operating systems, however, this script is no longer supported. If you want to uninstall certbot-auto, you can follow our instructions here.

When using certbot-auto on a low memory system such as VPS with less than 512MB of RAM, the required dependencies of Certbot may fail to build. This can be identified if the pip outputs contains something like internal compiler error: Killed (program cc1). You can work around this restriction by creating a temporary swapfile:

Disable and remove the swapfile once the virtual environment is constructed:

Installation from source is only supported for developers and the whole process is described in the Developer Guide.

Warning

Please do not use python certbot/setup.py install, python pip install certbot, or easy_install certbot. Please do not attempt the installation commands as superuser/root and/or without virtual environment, e.g. sudo python certbot/setup.py install, sudo pip install, sudo ./venv/bin/.... These modes of operation might corrupt your operating system and are not supported by the Certbot team!

Azure devops jenkins

CI/CD with Jenkins Pipeline and Azure. This is a guest post by Pui Chee Chen, Product Manager at Microsoft working on Azure DevOps open source integrations. Recently, we improved the Azure Credential plugin by adding a custom binding for Azure Credentials which allows you to use an Azure service principal (the…

Android ExoPlayer + HLS + Google IMA http://ift.tt/2CbcmB1

Introduction

Hello, this is Medi from the streampack team. https://cloudpack.jp/service/option/streampack.html

Objective: Play an HLS stream in an Android app with ExoPlayer and display Google IMA advertisements.

Implementation

Step 0

HLS

If you don’t have an HLS stream, please visit the following page to find one. https://github.com/notanewbie/LegalStream

IMA

If you don’t have IMA credentials, you can use demo tags. https://developers.google.com/interactive-media-ads/docs/sdks/html5/tags

Step 1

Create a new project and a blank Main Activity.

MainActivity.java

package jp.co.mycompany.com.exotuto;

import android.support.v7.app.AppCompatActivity;
import android.os.Bundle;

public class MainActivity extends AppCompatActivity {

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
    }
}

AndroidManifest.xml

Enable hardware acceleration.

<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="jp.co.mycompany.com.exotuto">

    <application
        android:allowBackup="true"
        android:hardwareAccelerated="true"
        android:icon="@mipmap/ic_launcher"
        android:label="@string/app_name"
        android:roundIcon="@mipmap/ic_launcher_round"
        android:supportsRtl="true"
        android:theme="@style/AppTheme">
        <activity android:name=".MainActivity">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>
    </application>
</manifest>

Dependencies

build.gradle (Module: app)

apply plugin: 'com.android.application'

android {
    compileSdkVersion 27
    defaultConfig {
        applicationId "jp.co.mycompany.com.exotuto"
        minSdkVersion 15
        targetSdkVersion 27
        versionCode 1
        versionName "1.0"
        testInstrumentationRunner "android.support.test.runner.AndroidJUnitRunner"
    }
    buildTypes {
        release {
            minifyEnabled false
            proguardFiles getDefaultProguardFile('proguard-android.txt'), 'proguard-rules.pro'
        }
    }
    compileOptions {
        sourceCompatibility JavaVersion.VERSION_1_8
        targetCompatibility JavaVersion.VERSION_1_8
    }
}

dependencies {
    implementation fileTree(dir: 'libs', include: ['*.jar'])
    implementation 'com.android.support:appcompat-v7:27.0.0'
    implementation 'com.android.support.constraint:constraint-layout:1.0.2'
    testImplementation 'junit:junit:4.12'
    androidTestImplementation 'com.android.support.test:runner:1.0.1'
    androidTestImplementation 'com.android.support.test.espresso:espresso-core:3.0.1'
    // ExoPlayer core, HLS module, and IMA extension
    // ("implementation" replaces the deprecated "compile" configuration)
    implementation 'com.google.android.exoplayer:exoplayer:2.6.0'
    implementation 'com.google.android.exoplayer:exoplayer-hls:2.6.0'
    implementation 'com.google.android.exoplayer:extension-ima:2.6.0'
}

Step 2

Create a new Basic Activity and name it PlayerActivity.

Then modify MainActivity.java as follows:

// Also add this import at the top of the file:
// import android.content.Intent;

public class MainActivity extends AppCompatActivity {

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        // Launch the player screen as soon as the main activity starts
        Intent intent = new Intent(this, PlayerActivity.class);
        startActivity(intent);
    }
}

Step 3

Simple HLS implementation

res/layout/content_player.xml

<?xml version="1.0" encoding="utf-8"?>
<android.support.constraint.ConstraintLayout
    xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    app:layout_behavior="@string/appbar_scrolling_view_behavior"
    tools:context="jp.co.mycompany.com.exotuto.PlayerActivity"
    tools:showIn="@layout/activity_player">

    <com.google.android.exoplayer2.ui.SimpleExoPlayerView
        android:id="@+id/player_view"
        android:focusable="true"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:layout_marginTop="10dp" />

</android.support.constraint.ConstraintLayout>

PlayerActivity.java

package jp.co.mycompany.com.exotuto;

import android.net.Uri;
import android.os.Bundle;
import android.support.design.widget.FloatingActionButton;
import android.support.design.widget.Snackbar;
import android.support.v7.app.AppCompatActivity;
import android.support.v7.widget.Toolbar;
import android.util.Log;
import android.view.View;

import com.google.android.exoplayer2.DefaultLoadControl;
import com.google.android.exoplayer2.DefaultRenderersFactory;
import com.google.android.exoplayer2.ExoPlayerFactory;
import com.google.android.exoplayer2.LoadControl;
import com.google.android.exoplayer2.RenderersFactory;
import com.google.android.exoplayer2.SimpleExoPlayer;
import com.google.android.exoplayer2.extractor.DefaultExtractorsFactory;
import com.google.android.exoplayer2.extractor.ExtractorsFactory;
import com.google.android.exoplayer2.source.MediaSource;
import com.google.android.exoplayer2.source.hls.HlsMediaSource;
import com.google.android.exoplayer2.trackselection.AdaptiveTrackSelection;
import com.google.android.exoplayer2.trackselection.DefaultTrackSelector;
import com.google.android.exoplayer2.trackselection.TrackSelection;
import com.google.android.exoplayer2.trackselection.TrackSelector;
import com.google.android.exoplayer2.ui.SimpleExoPlayerView;
import com.google.android.exoplayer2.upstream.BandwidthMeter;
import com.google.android.exoplayer2.upstream.DefaultBandwidthMeter;
import com.google.android.exoplayer2.upstream.DefaultDataSourceFactory;
import com.google.android.exoplayer2.util.Util;

public class PlayerActivity extends AppCompatActivity {

    //Player
    private SimpleExoPlayerView simpleExoPlayerView;
    private SimpleExoPlayer player;

    //Logs
    final private String TAG = "PlayerActivity";

    //HLS
    final private String VIDEO_URL = "https://nhkworld.webcdn.stream.ne.jp/www11/nhkworld-tv/domestic/263942/live_wa_s.m3u8";

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_player);
        Toolbar toolbar = (Toolbar) findViewById(R.id.toolbar);
        setSupportActionBar(toolbar);

        FloatingActionButton fab = (FloatingActionButton) findViewById(R.id.fab);
        fab.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View view) {
                Snackbar.make(view, "Replace with your own action", Snackbar.LENGTH_LONG)
                        .setAction("Action", null).show();
            }
        });

        //ExoPlayer implementation
        //Create a default TrackSelector
        BandwidthMeter bandwidthMeter = new DefaultBandwidthMeter();
        TrackSelection.Factory videoTrackSelectionFactory = new AdaptiveTrackSelection.Factory(bandwidthMeter);
        TrackSelector trackSelector = new DefaultTrackSelector(videoTrackSelectionFactory);

        // Create a default LoadControl
        LoadControl loadControl = new DefaultLoadControl();

        //Bis. Create a RenderFactory
        RenderersFactory renderersFactory = new DefaultRenderersFactory(this);

        //Create the player
        player = ExoPlayerFactory.newSimpleInstance(renderersFactory, trackSelector, loadControl);

        // Look up the player view declared in content_player.xml
        simpleExoPlayerView = (SimpleExoPlayerView) findViewById(R.id.player_view);

        //Set media controller
        simpleExoPlayerView.setUseController(true);
        simpleExoPlayerView.requestFocus();

        // Bind the player to the view.
        simpleExoPlayerView.setPlayer(player);

        // Set the media source
        Uri mp4VideoUri = Uri.parse(VIDEO_URL);

        //Measures bandwidth during playback. Can be null if not required.
        DefaultBandwidthMeter bandwidthMeterA = new DefaultBandwidthMeter();

        //Produces DataSource instances through which media data is loaded.
        DefaultDataSourceFactory dataSourceFactory = new DefaultDataSourceFactory(this, Util.getUserAgent(this, "PiwikVideoApp"), bandwidthMeterA);

        //Produces Extractor instances for parsing the media data.
        ExtractorsFactory extractorsFactory = new DefaultExtractorsFactory();

        //FOR LIVE STREAM LINK:
        MediaSource videoSource = new HlsMediaSource(mp4VideoUri, dataSourceFactory, 1, null, null);
        final MediaSource mediaSource = videoSource;

        player.prepare(videoSource);
    }

    //Android Life cycle
    @Override
    protected void onStop() {
        player.release();
        super.onStop();
        Log.v(TAG, "onStop()...");
    }

    @Override
    protected void onStart() {
        super.onStart();
        Log.v(TAG, "onStart()...");
    }

    @Override
    protected void onResume() {
        super.onResume();
        Log.v(TAG, "onResume()...");
    }

    @Override
    protected void onPause() {
        super.onPause();
        Log.v(TAG, "onPause()...");
    }

    @Override
    protected void onDestroy() {
        super.onDestroy();
        Log.v(TAG, "onDestroy()...");
        player.release();
    }
}

Please run your app in the Android emulator.

Step 4

Player listeners

In the onCreate method of PlayerActivity.java, please add the following listeners.

//ExoPlayer events listener
player.addListener(new Player.EventListener() {

    @Override
    public void onTimelineChanged(Timeline timeline, Object manifest) {
        Log.v(TAG, "Listener-onTimelineChanged...");
    }

    @Override
    public void onTracksChanged(TrackGroupArray trackGroups, TrackSelectionArray trackSelections) {
        Log.v(TAG, "Listener-onTracksChanged...");
    }

    @Override
    public void onLoadingChanged(boolean isLoading) {
        Log.v(TAG, "Listener-onLoadingChanged...isLoading:" + isLoading);
    }

    @Override
    public void onPlayerStateChanged(boolean playWhenReady, int playbackState) {
        Log.v(TAG, "Listener-onPlayerStateChanged..." + playbackState);
        switch (playbackState) {
            case Player.STATE_IDLE:
                Log.v(TAG, "STATE IDLE");
                break;
            case Player.STATE_BUFFERING:
                Log.v(TAG, "STATE BUFFERING");
                break;
            case Player.STATE_READY:
                Log.v(TAG, "STATE READY");
                break;
            case Player.STATE_ENDED:
                Log.v(TAG, "STATE ENDED");
                break;
            default:
                break;
        }
    }

    @Override
    public void onRepeatModeChanged(int repeatMode) {
        Log.v(TAG, "Listener-onRepeatModeChanged...");
    }

    @Override
    public void onShuffleModeEnabledChanged(boolean shuffleModeEnabled) {
    }

    @Override
    public void onPlayerError(ExoPlaybackException error) {
        Log.v(TAG, "Listener-onPlayerError...");
        // Restart playback with the media source prepared in Step 3.
        // Once the IMA code from Step 5 is in place, pass adsMediaSource here
        // instead (declare it final and create it before this listener).
        player.stop();
        player.prepare(mediaSource);
        player.setPlayWhenReady(true);
    }

    @Override
    public void onPositionDiscontinuity(int reason) {
        Log.v(TAG, "Listener-onPositionDiscontinuity...");
    }

    @Override
    public void onPlaybackParametersChanged(PlaybackParameters playbackParameters) {
        Log.v(TAG, "Listener-onPlaybackParametersChanged...");
    }

    @Override
    public void onSeekProcessed() {
    }
});

Step 5

IMA implementation

In PlayerActivity.java, add the following class variables.

//IMA
private ImaAdsLoader imaAdsLoader;
final private String AD_TAG_URI = "https://pubads.g.doubleclick.net/gampad/ads?sz=640x480&iu=/124319096/external/ad_rule_samples&ciu_szs=300x250&ad_rule=1&impl=s&gdfp_req=1&env=vp&output=vmap&unviewed_position_start=1&cust_params=deployment%3Ddevsite%26sample_ar%3Dpreonly&cmsid=496&vid=short_onecue&correlator=";

Please update the onCreate method of PlayerActivity.java as follows.

//player.prepare(videoSource); // Remove this line.

imaAdsLoader = new ImaAdsLoader(this, Uri.parse(AD_TAG_URI));

AdsMediaSource.AdsListener adsListener = new AdsMediaSource.AdsListener() {
    @Override
    public void onAdLoadError(IOException error) {
        error.printStackTrace();
    }

    @Override
    public void onAdClicked() {
    }

    @Override
    public void onAdTapped() {
    }
};

// Wrap the HLS media source so that ads are inserted around the content
AdsMediaSource adsMediaSource = new AdsMediaSource(
        mediaSource,
        dataSourceFactory,
        imaAdsLoader,
        simpleExoPlayerView.getOverlayFrameLayout(),
        null,
        adsListener
);

player.prepare(adsMediaSource);

Results

It works!

Step 6

Adding IMA event listeners

Please add the following code to the onCreate method of PlayerActivity.java.

//IMA event listeners
com.google.ads.interactivemedia.v3.api.AdsLoader adsLoader = imaAdsLoader.getAdsLoader();
adsLoader.addAdsLoadedListener(new AdsLoader.AdsLoadedListener() {
    @Override
    public void onAdsManagerLoaded(AdsManagerLoadedEvent adsManagerLoadedEvent) {
        AdsManager imaAdsManager = adsManagerLoadedEvent.getAdsManager();
        imaAdsManager.addAdEventListener(new AdEvent.AdEventListener() {
            @Override
            public void onAdEvent(AdEvent adEvent) {
                Log.v("AdEvent: ", adEvent.getType().toString());
                switch (adEvent.getType()) {
                    case LOADED:
                        break;
                    case PAUSED:
                        break;
                    case STARTED:
                        break;
                    case COMPLETED:
                        break;
                    case ALL_ADS_COMPLETED:
                        break;
                    default:
                        break;
                    /*
                    Full list of events. Implement what you need.
                    LOADED, TAPPED, PAUSED, LOG, CLICKED, RESUMED, SKIPPED, STARTED,
                    MIDPOINT, COMPLETED, AD_PROGRESS, ICON_TAPPED, AD_BREAK_ENDED,
                    AD_BREAK_READY, FIRST_QUARTILE, THIRD_QUARTILE, AD_BREAK_STARTED,
                    ALL_ADS_COMPLETED, CUEPOINTS_CHANGED, CONTENT_PAUSE_REQUESTED,
                    CONTENT_RESUME_REQUESTED
                    */
                }
            }
        });
    }
});

Information sources

https://github.com/sakurabird/Android-Example-HLS-ExoPlayer
https://developers.google.com/interactive-media-ads/docs/sdks/android/
https://github.com/google/ExoPlayer
https://google.github.io/ExoPlayer/demo-application.html

The original article is here

「Android ExoPlayer + HLS + IMA」

February 21, 2018 at 12:00PM


Internet Is Not Safe Anymore

Here is a reality check for everyone, ethical hackers included: no matter the scope, size, or age of your WordPress site, your site is at risk! Cyber-Criminals do not concentrate only on mainstream websites; they also target small and vulnerable sites, because the common vulnerabilities of such sites are easy to exploit. Most of these Cyber-Attacks are conducted by programmed bots that automatically look for certain soft spots in websites, and the bots do not differentiate between your site and a popular one. Smaller sites are more prone to compromise since they generally have weaker security measures in place. So, the next time you think your site is too insignificant for a Cyber-Criminal, think again. The odds are high that your website can be used to send spam, host SEO spam, or perform a malicious redirect. Once Cyber-Criminals find a loophole in your site, they gain a plethora of opportunities to take their ‘spammy’ intentions for a spin. Cyber-Criminals can pull off many different types of Cyber-Attack, for instance DDoS attacks, Cross-Site Scripting (XSS), SQL injection and other injection attacks, session hijacking, and clickjacking. Luckily, most of the threats that can damage your WordPress site can be prevented. But first, we need to arm you with the right knowledge of these common types of Cyber-Attack, so that you can take the right measures to address them.

Plugin Vulnerabilities

If you have ever worked on WordPress projects, you are probably aware that plugins play a significant role in WordPress website development. WordPress is designed for non-developers and developers alike. For anyone who needs a quick online presence, plugins are a reliable solution that bridges gaps and adds various functionality to the website.

Unfortunately, plugins are considered the part of the WordPress ecosystem most vulnerable to Cyber-Attack. One can’t simply blame the developers who created a plugin, however: Cyber-Criminals find vulnerabilities within the plugin’s code and use them to access sensitive information.

Brute Force Attacks & Weak Password

Lack of login security is another entry point for Cyber-Criminals targeting WordPress sites. Cyber-Criminals leverage readily available software tools to generate passwords and force their way into your system. They also employ tools such as Wireshark (a sniffer) or Fiddler (a proxy) to capture your WordPress login details and steal personal and other sensitive information. In addition, brute force attacks can create devastating scenarios for users with weak credential management. In such attacks, Cyber-Criminals generate thousands of password guesses to gain entry. So, you know what to do if your password is 12345678 or admin123, right?

WordPress Core Vulnerabilities

Nothing is perfect in this world. It often takes time to discover vulnerabilities within the WordPress ecosystem, and this delay can put thousands of WordPress users at grave risk of data breaches. Fortunately, the WordPress team releases security patches and updates on a regular basis.

Unsafe Themes

At times, you may give in to temptation and install a free theme found through your favorite search engine. But how can you determine whether that theme is safe, especially when it is free? Honestly, many of the free themes available on the internet are as vulnerable to Cyber-Attack as an outdated plugin would be. This does not mean that all free themes are a strict no-no. There are plenty of efficient and reliable free themes from developers who provide regular updates and actively support the project.

Hosting vulnerabilities

Another popular entry point for Cyber-Criminals is your own hosting system. Most WordPress websites store their data in a SQL database (typically MySQL), and this makes them a potential target for Cyber-Criminals. In addition, poor-quality or shared hosting services make a website more vulnerable to Cyber-Attack. On shared hosting, an attacker who compromises one site can gain unauthorized access to other websites on the same server.

Cyber-Menace

A Cyber-Menace, or simply menace, is any circumstance or event with the potential to harm cyber-protection. Common examples include a social-engineering or phishing invasion that lets a Cyber-Criminal install a Trojan on your system and steal private information, political activists DDoS-ing your website, an administrator accidentally leaving data unprotected on a production system and causing a data breach, or a storm flooding your ISP’s data center.

Cyber-Protection Menaces are actualized by Cyber-Menace actors: persons or entities who may potentially initiate a Cyber-Invasion. Natural disasters and other environmental and political events also constitute Menaces. They are not generally regarded as Cyber-Menace actors, but that does not mean such Menaces should be disregarded or given less importance. Examples of common Cyber-Menace actors include financially motivated Cyber-Criminals, politically motivated activists, nation-state Cyber-Infiltrators, disgruntled employees, competitors, and careless employees.

Cyber-Menaces can also become more catastrophic if a Cyber-Criminal leverages one or more vulnerabilities to gain access to a system, often including the operating system.

Cyber-Liabilities

Cyber-Liabilities are simply weaknesses (vulnerabilities) in a system. They make Cyber-Menaces possible and potentially even more hazardous. A system could be exploited through a single Liability; for example, a single SQL Injection could give a Cyber-Criminal full control over sensitive data. A Cyber-Criminal could also chain several exploits together and take advantage of multiple Liabilities in your system. The most common Liabilities are Cross-Site Scripting, server misconfigurations, and SQL Injection.
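To make the SQL Injection case concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table, the function names, and the payload are illustrative assumptions, not taken from any real site; the point is only to contrast string concatenation with a bound parameter.

```python
import sqlite3


def find_user_unsafe(conn, name):
    # VULNERABLE: the user-supplied value becomes part of the SQL text itself.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # The value is bound as a parameter, so it is treated as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


def demo():
    # Hypothetical in-memory table used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "nobody' OR '1'='1"
    return find_user_unsafe(conn, payload), find_user_safe(conn, payload)
```

With the payload above, the concatenated query returns every row in the table, while the parameterized query returns nothing because no user has that literal name.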

Cyber-Perils

Cyber-Perils are often confused with Cyber-Menaces. There is a subtle difference, however: a Cyber-Protection Peril combines the probability of a Cyber-Menace with its consequences. It is usually expressed in monetary terms, although quantifying a breach is extremely complex. A Cyber-Peril is therefore a scenario that should be avoided, combined with the likely losses resulting from that scenario. The following is a hypothetical example of how a Cyber-Peril can be constructed:

SQL Injection is a Liability

Sensitive data theft is one of the biggest Cyber-Menaces that SQL Injection enables

Financially motivated Cyber-Criminals are one example of Cyber-Menace actors

When sensitive data is compromised, the business bears a significant financial loss

The probability of such a Cyber-Invasion is high, given that SQL Injection is an easy-access, widely exploited Liability and the website is externally facing

Therefore, the SQL Injection Liability in this scenario should be considered an extremely hazardous Liability for Cyber-Protection.

The difference between a Liability and a Cyber-Peril is usually easy to understand. Still, using the terminology precisely allows for clearer communication between security teams and other parties and a better understanding of how a Cyber-Menace influences a Cyber-Peril. This, in turn, may help prevent and mitigate security breaches. A good understanding is also needed for effective Cyber-Peril assessment and Cyber-Peril management, for designing efficient security solutions based on Cyber-Menace intelligence, and for building an effective security policy and Cyber-Protection strategy.

Targeted and Non-Targeted WordPress Cyber-Invasions

If you have been reading about WordPress security, looking at the ways in which it can be compromised and the techniques that protect a WordPress website from Cyber-Criminals, you will have noticed that there are two types of Cyber-Invasion: targeted and non-targeted.

What is the difference between a targeted and a non-targeted WordPress Cyber-Invasion, and how can you protect your WordPress site from both? This article explains the difference between the two and explains why some WordPress protection techniques guard your website against only one type of Cyber-Invasion.

Non-Targeted WordPress Cyber-Invasion

A non-targeted WordPress Cyber-Invasion is automated and is not launched against any specific WordPress website. For example, if Cyber-Criminals are trying to exploit a known Liability in an old version of WordPress, they do not manually look for WordPress websites, check their versions, and see whether they are vulnerable.

Instead, they employ automated tools that send the specific HTTP request used to exploit the Liability to a large number of websites, typically a range of IP addresses. Depending on the HTTP responses received, the tool determines whether the target website is a vulnerable WordPress installation or not.

Protect WordPress from Non-Targeted Cyber-Invasion

Therefore, hiding your version of WordPress, or even hiding the fact that you are using WordPress at all, won’t protect your website from a non-targeted WordPress Cyber-Invasion. To protect WordPress from non-targeted Cyber-Invasions, follow the recommendations below:

Keep all your software up to date and always install the latest and most secure version of WordPress, plugins, and themes. This also applies to MySQL, Apache, and any other software running in your web environment.

Uninstall and remove any unnecessary plugins, themes, and other components and files that are not frequently used.

Do not use typical usernames such as admin, administrator, or root for your WordPress administrator account. If you do, rename the WordPress administrator account.

Protect the WordPress login and admin pages with an additional layer of authentication, for example by protecting the WordPress login page with HTTP Authentication.

Use strong login credentials. This applies not only to WordPress but to any other service or website. If your WordPress site has multiple users, employ a plugin to enforce policies for WordPress passwords.

Targeted WordPress Cyber-Invasion

Targeted Cyber-Invasions are aimed specifically at your website or blog. There are several reasons why your WordPress website might become the victim of a targeted Cyber-Invasion, and the reason is not what matters. What matters is understanding what happens in a targeted Cyber-Invasion so that you can better protect your WordPress websites and blogs.

Targeted Cyber-Invasions are more catastrophic than non-targeted ones simply because, rather than a number of automated tools scanning websites at random, there is a human being analyzing every detail of your website in the hope of finding something that can be exploited.

Anatomy of Targeted WordPress Cyber-Invasions

First, the Cyber-Criminals employ automated tools to check whether your version of WordPress is affected by any known vulnerabilities. Because these checks rely on automated tools, hiding the version of your WordPress can make them less effective. The Cyber-Criminals will also try to determine which plugins are running on your WordPress and whether any of them is vulnerable to a particular Liability. Again, most of these tasks are executed with automated tools.

One of the most vulnerable links in WordPress security is credentials. Using automated tools, the Cyber-Criminals will try to enumerate all the WordPress users and may even launch a password dictionary Cyber-Invasion against WordPress.

There are many other ways to infiltrate a WordPress blog or website, and targeted Cyber-Invasions do not necessarily take advantage of a security weakness in WordPress or one of its components. The entry point could also be a security hole in the web server software or its configuration, but the three above are the most common.

Protect WordPress from Targeted Cyber-Invasions

There are many WordPress security tweaks and techniques you can employ to protect your WordPress from a targeted Cyber-Invasion, as highlighted in the list below:

To start with, everything that protects your WordPress from non-targeted Cyber-Invasions also applies to targeted Cyber-Invasions

Secure and protect your WordPress administrator account

Enable SSL so that your WordPress login page and admin pages are accessed over an encrypted communication layer, which prevents your WordPress login credentials from being hijacked

Employ a WordPress security monitoring and auditing plugin to keep track of everything that is happening on your WordPress and identify any suspicious activity before it becomes a security issue

Use WordPress user roles to improve security by ensuring every user has only the minimum privileges required to do the job

Use WPScan, a WordPress security black box scanner, and other tools to frequently scan and audit your WordPress website

Protecting WordPress from Cyber-Criminals

From time to time you might read about a particular WordPress security tweak that some people say works while others say it doesn’t, such as hiding your WordPress version. When a tweak like keeping the WordPress version secret has only a minimal effect on the overall security of WordPress, it is tempting to think: why bother? If you are dubious about a particular tweak, but it does not impact the performance of your WordPress and is easy to implement, go ahead and implement it. Better to be safe than sorry!

Apart from the above tips, there are many other ways to improve the security of your WordPress blogs and websites and protect them from both targeted and non-targeted WordPress Cyber-Invasions. Ideally, you should keep yourself updated by subscribing to a WordPress security blog where WordPress security tips and protection techniques are published frequently.

What Is DNS Spoofing?

DNS spoofing occurs when a particular DNS server’s records are “spoofed”, or maliciously altered, to redirect traffic to the Cyber-Criminals. This redirection of traffic allows the Cyber-Criminals to spread viruses, steal data, and so on. For example, if a DNS record is spoofed, the Cyber-Criminals can redirect all the traffic that relied on the correct DNS record to a fake website they have created to resemble the real one, or to a completely different website.

How Does Normal DNS Communication Work?

A DNS server is normally employed to resolve a domain name (such as keycdn.com) into the IP address that it maps to. Once the DNS server finds the appropriate IP address, data transfer can begin between the client and the website’s server. Once the DNS server has located the domain-to-IP translation, it caches the result for subsequent requests for that domain, so later DNS lookups happen much faster. However, this is where DNS spoofing can cause great trouble, as a false DNS lookup can be injected into the DNS server’s cache. This can result in visitors being sent to a different destination.

How Does DNS Spoofing Work?

DNS spoofing is an overarching term and can be carried out using various techniques such as:

DNS cache poisoning

Compromising a DNS server

Implementing a Man-in-the-Middle Cyber-Invasion

However, the Cyber-Criminal’s end goal is usually the same no matter which method they use: to steal information, re-route you to a website that benefits them, or spread malware. The most discussed DNS spoofing technique is cache poisoning.

DNS Cache-Poisoning

Since DNS servers cache the DNS translation for faster, more efficient browsing, Cyber-Criminals can take advantage of this to perform DNS spoofing. If a Cyber-Criminal is able to inject a forged DNS entry into the DNS server, all users will use that forged DNS entry until the cache expires. The moment the cache expires, the DNS entry returns to its normal state, because the DNS server has to go through the complete DNS lookup again. However, if the DNS server’s software still hasn’t been updated, the Cyber-Criminal can repeat the trick and continue funneling visitors to their website.

DNS cache poisoning can also be quite hard to spot. If the malicious website is very similar to the website it is trying to impersonate, some users may not even notice the difference. Additionally, if the Cyber-Criminal is using DNS cache poisoning to compromise one company’s DNS records, for example in order to gain access to its emails, this may also be extremely hard to detect.
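The caching behaviour described in this section can be illustrated with a toy model. The sketch below is not a real DNS implementation: the class, the record table, and the IP addresses are all hypothetical, and time is passed in explicitly so the example stays deterministic. It only shows why a forged entry keeps being served until its time-to-live runs out.

```python
class ToyDnsCache:
    """A deliberately simplified resolver cache (illustration only)."""

    def __init__(self, authoritative, ttl):
        self.authoritative = authoritative  # name -> genuine IP address
        self.ttl = ttl                      # seconds a cached answer stays valid
        self.cache = {}                     # name -> (ip, expires_at)

    def resolve(self, name, now):
        entry = self.cache.get(name)
        if entry is not None and now < entry[1]:
            return entry[0]                 # cache hit: no full lookup is made
        ip = self.authoritative[name]       # stands in for the full DNS lookup
        self.cache[name] = (ip, now + self.ttl)
        return ip

    def poison(self, name, forged_ip, now):
        # Models an attacker managing to inject a forged answer into the cache.
        self.cache[name] = (forged_ip, now + self.ttl)


def demo():
    cache = ToyDnsCache({"example.test": "192.0.2.10"}, ttl=60)
    before = cache.resolve("example.test", now=0)
    cache.poison("example.test", "203.0.113.66", now=10)
    during = cache.resolve("example.test", now=20)   # still inside the forged TTL
    after = cache.resolve("example.test", now=70)    # forged entry has expired
    return before, during, after
```

In this model every client asking between the injection and the expiry receives the forged address, and the genuine record only comes back once the entry expires and a full lookup is repeated.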

How to Prevent DNS Spoofing

As a website visitor, there’s not much you can do to prevent DNS spoofing. Rather, this falls more in the hands of the DNS provider that handles a website’s DNS lookups, as well as the website owner. A few tips for website owners and DNS providers:

Implement DNS spoofing detection mechanisms: it’s important to deploy DNS spoofing detection software. Products such as XArp help protect against ARP cache poisoning by inspecting the data that comes through before transmitting it.

Use encrypted data transfer protocols: end-to-end encryption via SSL/TLS helps decrease the chance that a website or its visitors are compromised by DNS spoofing. This type of encryption allows users to verify whether the server’s digital certificate is valid and belongs to the website’s expected owner.

Use DNSSEC: DNSSEC, or Domain Name System Security Extensions, uses digitally signed DNS records to help determine data authenticity. DNSSEC is still a work in progress as far as deployment goes; however, it was implemented at the Internet root level in 2010.
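As a small illustration of the certificate check mentioned in the SSL/TLS tip, the sketch below uses Python's standard ssl module. The helper names are made up for this example; the defaults being inspected (certificate verification and hostname checking) are what a client relies on to notice that it has been redirected to a server that cannot present a valid certificate for the expected name.

```python
import ssl


def make_verifying_context():
    # The default client context requires a valid certificate chain and
    # checks that the certificate matches the hostname being contacted.
    return ssl.create_default_context()


def describe(ctx):
    # Hypothetical helper: summarises the two settings that matter here.
    return {
        "verifies_certificate": ctx.verify_mode == ssl.CERT_REQUIRED,
        "checks_hostname": ctx.check_hostname,
    }
```

A client that disables either setting loses this protection: a spoofed DNS answer would then lead to a TLS connection with whatever server the attacker controls.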

DNS Spoofing — In Summary

DNS spoofing can cause quite a bit of trouble for both website visitors and website owners. The Cyber-Criminal’s main motive for carrying out a DNS spoofing Cyber-Invasion is either personal gain or spreading malware. Therefore, as a website owner, it’s important to choose a DNS hosting provider that is reliable and uses up-to-date security mechanisms.

Furthermore, as a website visitor it’s just as important to “be aware of your surroundings”: if you notice any discrepancies between the website you were expecting to visit and the website you are currently browsing, you should immediately leave that website and try to alert the real website owner.

Denial-of-Service Cyber-Invasions

There are many different techniques that Cyber-Criminals use to carry out a DoS Cyber-Invasion. The most common occurs when a Cyber-Thief floods a network server with traffic. In this type of DoS Cyber-Invasion, the Cyber-Thief sends a large number of requests to the target server, overloading it with traffic. These service requests are illegitimate and mostly carry fabricated return addresses, which mislead the server when it tries to authenticate the requester. The server is overwhelmed by the constant stream of junk requests and becomes unavailable to legitimate users.

In a Smurf Cyber-Invasion, the Cyber-Thief sends Internet Control Message Protocol (ICMP) broadcast packets to a number of hosts with a spoofed source Internet Protocol (IP) address that belongs to the target machine. The recipients of these malicious packets then respond, and the victim’s host is overwhelmed by those responses.

A SYN flood occurs when a Cyber-Thief sends a request to connect to the victim’s server but never completes the connection through what is known as the three-way handshake, the method used in a TCP/IP network to establish a connection between a local host/client and the server. The incomplete handshake leaves the connected port in an occupied state, unable to process further requests. The Cyber-Thief continues to send requests, saturating all open ports, so that legitimate users cannot connect.
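The SYN flood mechanism can be sketched with a toy model of a listening server. Everything here is an assumption made for illustration: real TCP stacks are far more involved, and the class below simply treats the pending-connection capacity as a fixed number of slots.

```python
class ToyListener:
    """Toy model of a server with limited room for half-open connections."""

    def __init__(self, backlog):
        self.backlog = backlog      # how many half-open connections fit
        self.half_open = set()      # SYN received, final ACK still missing
        self.established = set()    # handshake completed

    def on_syn(self, client):
        if len(self.half_open) >= self.backlog:
            return False            # no room left: the request is dropped
        self.half_open.add(client)
        return True

    def on_ack(self, client):
        if client not in self.half_open:
            return False
        self.half_open.remove(client)
        self.established.add(client)
        return True


def demo():
    server = ToyListener(backlog=3)
    # The attacker opens connections from spoofed addresses and never ACKs.
    for i in range(3):
        server.on_syn(f"spoofed-{i}")
    # A legitimate client now finds every slot occupied.
    return server.on_syn("legitimate-client")
```

In this model the legitimate client is refused only because the slots are held by handshakes that will never complete, which is the effect the paragraph above describes.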

Individual networks may be affected by DoS Cyber-Invasions without being directly targeted. If the network’s internet service provider (ISP) or cloud service provider has been targeted and compromised, the network will also experience a loss of service.

CSRF Cyber-Invasion Technique

Cross-Site Request Forgery (CSRF), also known as session riding or sea surf, is a widely known Cyber-Invasion against web applications that authenticate users with cookies. The Cyber-Criminal tricks the victim into making a request that the victim did not intend to make, exploiting the trust that a web application has in the victim’s browser. While CSRF Cyber-Invasions do not provide the Cyber-Criminal with the response returned from the server, a smart Cyber-Criminal can still create scenarios with a catastrophic effect on your website, especially when CSRF is paired with well-crafted social engineering.

Cross-Site Request Forgery is a kind of Cyber-Invasion that abuses the victim’s authentication and authorization: the Cyber-Criminal causes a forged request to be sent to the web server on the victim’s behalf. CSRF Liabilities that affect highly privileged users, such as administrators, could result in a full application compromise. During a successful CSRF Cyber-Invasion, the victim’s web browser is tricked by a malicious website into an unwanted action: it sends HTTP requests to the web application as intended by the Cyber-Criminal. Typically, such a request involves submitting forms present on the web application to modify data. Whenever an HTTP request is sent, the victim’s browser includes the cookie header. Cookies are typically used to store the user’s session identifier so that the user does not have to enter their login credentials for each request, which would obviously be impractical. If the victim’s authentication session is stored in a session cookie that is still valid, and if the application is vulnerable to CSRF, then the Cyber-Criminal can leverage CSRF to launch any desired malicious requests against the website, and the server-side code is unable to distinguish them from legitimate requests.

A CSRF Cyber-Invasion can be used to compromise online banking by forcing the victim to perform an operation involving their bank account. CSRF can also facilitate Cross-Site Scripting (XSS). Hence it is extremely important that you treat CSRF as a serious web application security issue.

A CSRF Cyber-Invasion normally employs an HTTP GET request. If the victim visits a web page controlled by the Cyber-Criminals that embeds the crafted URL, the browser will send a request containing the cookie to that URL.

Cross-Site Request Forgery in POST Requests

GET requests, however, are not the only HTTP method the Cyber-Criminals can exploit. POST requests are equally susceptible to Cross-Site Request Forgery (CSRF); however, the Cyber-Criminals have to involve a little bit of JavaScript to submit the POST request.

CSRF Protection

There are two primary approaches to dealing with Cross-Site Request Forgery: synchronizing the cookie with an anti-CSRF token that has already been given to the browser, or preventing the browser from transmitting cookies to the web application in the first place.

Anti-CSRF Tokens

The recommended and most widely used prevention technique for Cross-Site Request Forgery (CSRF) is the anti-CSRF token, sometimes referred to as a synchronizer token or simply a CSRF token. When a user submits a form or makes some other authenticated request that requires a cookie, a random token should be included in the request. The web application then verifies the presence and correctness of this token before processing the request. If the token is missing or incorrect, the web application must reject the request.

It’s highly recommended that you use an existing, well-tested, and reliable anti-CSRF library. Depending on your language and framework of choice, there are several high-quality open source libraries that are ready to deploy. The following are some characteristics of a well-designed anti-CSRF system:

Each user session should have its own unique token.

The session should expire automatically after a suitable period of time.

The anti-CSRF token should be a cryptographically random value of significant length.

The anti-CSRF token should be added within URLs or as a hidden field in forms.

The server should reject the requested action if validation of the anti-CSRF token fails.
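The characteristics listed above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration under stated assumptions, not a replacement for a tested anti-CSRF library: the session is modelled as a plain dictionary, and the function names and the "csrf_token" key are hypothetical.

```python
import hmac
import secrets


def issue_token(session):
    # One cryptographically random token per session, long enough to resist guessing.
    token = secrets.token_urlsafe(32)
    session["csrf_token"] = token
    return token  # to be embedded, for example, as a hidden form field


def is_valid(session, submitted):
    expected = session.get("csrf_token")
    if not expected or not submitted:
        return False  # missing token: reject the request
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(expected.encode(), submitted.encode())
```

A request handler would call is_valid before performing any state-changing action and refuse the action when it returns False.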

SameSite Cookies

The SameSite cookie attribute is a new attribute that can be set on cookies to instruct the browser to disable third-party usage for specific cookies. The SameSite attribute is set by the server when setting the cookie and asks the browser to send the cookie only in a first-party context. The request therefore has to originate from the same origin: requests made by a third-party website will not include the SameSite cookie. This effectively eliminates Cross-Site Request Forgery without the use of synchronizer tokens.
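For illustration, the sketch below builds such a cookie header with Python's standard http.cookies module (the samesite attribute requires Python 3.8 or later). The cookie name "session" and the helper name are assumptions made for this example.

```python
from http.cookies import SimpleCookie


def session_cookie_header(session_id):
    cookie = SimpleCookie()
    cookie["session"] = session_id
    cookie["session"]["samesite"] = "Strict"  # do not send on cross-site requests
    cookie["session"]["secure"] = True        # only send over HTTPS
    cookie["session"]["httponly"] = True      # not readable from JavaScript
    # Returns the value part of a Set-Cookie response header.
    return cookie["session"].OutputString()
```

The server would send the returned string in a Set-Cookie header; a browser that honours the attribute then leaves the cookie out of requests triggered by other sites.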


How the Coinbase Security team deployed CTFd to Power our First Capture the Flag contest at Defcon 27

By Nishil Shah and Peter Kacherginsky

We recently ran Coinbase’s first Capture the Flag (CTF) competition, called Capture the Coin. As part of running the CTF, we needed to set up the underlying infrastructure. In this guide we’ll describe our process, from choosing a CTF platform to getting it production ready. This CTF definitely would not have been possible without all the open source contributors, projects, tutorials, and free services we leveraged, so we’re making this guide as a small contribution back to the community. Overall, the process took us a few weeks to set up, but with this guide, you’ll only spend a few days.

Choosing a CTF Platform

We chose CTFd as our CTF platform based on the following criteria:

Challenge Input Interface

Support for Types of Challenges

Free Response (Static and Pattern-Based)

Dynamic Value

Multiple Choice

Custom Plugins

Public Scoreboard

User and Team Registration

Admin Panel

Player Communications

Hints

Active Maintainers

CTFd supported most of our requirements except for multiple choice questions. Requirements like having active maintainers proved useful when the maintainers patched a bug within a few hours. We also wanted to write challenges that forced users to interact with a real blockchain. This would require some custom development, so we also wanted the ability to build our own custom modules. Anecdotally, a few other CTFs had run successfully on CTFd. Given these requirements, CTFd matched our needs the closest.

CTFd mostly worked out of the box by following the README instructions; however, we did run into one issue: CTFd started running really slowly. We did some basic debugging, like looking at memory and CPU utilization, to see if we needed to upgrade our EC2 instance, but resource consumption was generally less than 1%. We eventually found out that using sync workers was inefficient. We changed the settings to use async gevent workers and set worker_connections and workers correctly using the advice from this post. This solved our issue, and CTFd worked great with very little latency.
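Gunicorn can also read these settings from a Python configuration file. The fragment below is a sketch of what such a file might look like, not the configuration we ran: the file name gunicorn.conf.py, the bind address, and the numbers are placeholder assumptions, and the worker count uses the common "two per core plus one" starting point from the Gunicorn documentation.

```python
# gunicorn.conf.py (hypothetical file name; values are placeholders to tune)
import multiprocessing

bind = "0.0.0.0:8443"

# Asynchronous workers: one worker can hold many connections open at once.
worker_class = "gevent"

# A commonly suggested starting point for the number of worker processes.
workers = multiprocessing.cpu_count() * 2 + 1

# Maximum simultaneous connections per worker (used by async workers only).
worker_connections = 1000
```

With a file like this in place, the server could be started with `gunicorn -c gunicorn.conf.py "CTFd:create_app()"` instead of passing each option on the command line.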

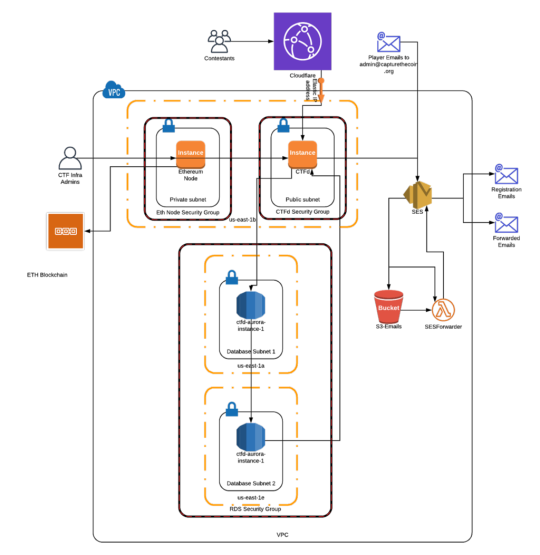

Capture the Coin Infrastructure

Setting up and running this CTF was a great learning experience in getting hands-on with AWS and debugging real issues. As appsec engineers reviewing code or infrastructure, we can oftentimes become unsympathetic to how hard an engineer’s job can be when defining security requirements, even in a #SecurityFirst engineering culture. Even for something as simple as a CTF, the bill of materials starts to pile up, as you can see below.

Cloudflare Free Tier

CTFd

Google Recaptcha v2

Infura

Let’s Encrypt/CertBot

Redis (AWS-managed or locally running)

SES Email Forwarder Lambda (Here’s a guide we found very helpful to set up this lambda)

AWS (Paid Tier)

EC2

IAM

Lambda

RDS Aurora

S3

SES

Here’s a network diagram of what our AWS infrastructure looked like at a high level.

Our vendor choices were typically determined by our own familiarity. We used AWS as our infrastructure provider, and we used Cloudflare as our DoS protection for similar reasons. For email and email verification, we used SES to trigger an email forwarder lambda and save a copy of the email to an S3 bucket. The lambda then pulled the email from the S3 bucket and forwarded the contents to the recipients defined in the lambda. Eventually, we swapped out our EC2 instance hosting an Ethereum node with Infura as this gave us reliable connectivity to the ETH blockchain for the smart contract challenges. Our infrastructure is rather simple compared to the complicated microservice environments today, but there was still some complexity involved.
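The forwarding step can be sketched as follows. This is not the lambda we deployed: it leaves out fetching the message from S3 and sending it through SES, and the function name and addresses are hypothetical. It only illustrates the header rewrite a forwarder typically needs, on the assumption that the forwarded message must be sent from an address the forwarding domain controls.

```python
from email import message_from_bytes, policy


def prepare_forward(raw_email, forward_from, recipients):
    """Rewrite a stored raw email so it can be re-sent to the real recipients."""
    msg = message_from_bytes(raw_email, policy=policy.default)
    original_sender = str(msg["From"]) if msg["From"] is not None else None

    # Drop headers that are no longer accurate once the message is re-sent.
    for header in ("From", "To", "Reply-To", "Return-Path", "DKIM-Signature"):
        del msg[header]

    msg["From"] = forward_from                # an address the forwarder controls
    msg["To"] = ", ".join(recipients)
    if original_sender:
        msg["Reply-To"] = original_sender     # replies still reach the author
    return msg.as_bytes()
```

In a real lambda, the returned bytes would then be handed to the mail-sending API; that call is intentionally omitted here.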

Our Setup Notes

Sign up for an AWS account.

Buy a domain with your favorite provider.

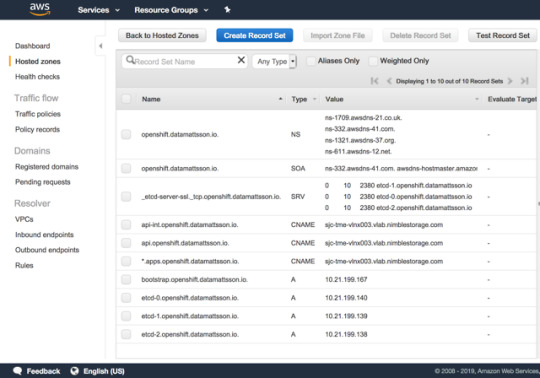

Set up Route53 NS DNS records in AWS.

Set up Route53 MX DNS records in AWS.

Set up Route53 SOA DNS records in AWS.

Set up Route53 SPF DNS records in AWS.

Set up Route53 DKIM DNS records in AWS.

Note: The AWS SES Domain Verification step will require an additional TXT record.

Spin up an EC2 micro instance. You can always resize later.

Attach the right security groups. Initially, we limited external access to private IP ranges so that we didn’t accidentally leak challenges until we started the competition.

Spin up an RDS instance. We went with the AWS-managed solution because it was the simplest and we wouldn’t have to worry about load balancing or patching.

Follow this guide to get inbound and outbound emails working under your CTF domain.

Install CTFd by cloning the repo and installing dependencies. (We used v2.1.3)

Set up Google Recaptcha v2 for spam and DoS protection.

Set up Infura or your own node if you want Ethereum smart contract challenges.

Set up Let’s Encrypt/Certbot for HTTPS during testing and eventually for connections from Cloudflare to CTFd.

Set up AWS-managed Redis or use a Redis instance locally.

Set up port forwarding from 80 to 443 and from 443 to 8443 so CTFd doesn’t have to run with sudo and http:// is auto-redirected to https://.

Run CTFd! Note that Unix security best practices still apply, such as running the application under a limited user.

Run the following commands to set all the environment variables.

# CTFd Database

export DATABASE_URL=

# Web3

export WEB3_PROVIDER_URI=

export GETH_ACCT_PASSWD=

# Redis Caching

export REDIS_URL=

# Infura

export WEB3_INFURA_API_KEY=

export WEB3_INFURA_API_SECRET=

# reCAPTCHA

export RECAPTCHA_SECRET=

export RECAPTCHA_SITE_KEY=

cd CTFd

gunicorn3 --name CTFd --bind 0.0.0.0:8443 --statsd-host localhost:8125 --keyfile /etc/letsencrypt/live/capturethecoin.org/privkey.pem --certfile /etc/letsencrypt/live/capturethecoin.org/fullchain.pem --workers=5 --worker-class=gevent --worker-connections=5000 --access-logfile /home/ubuntu/access.log --log-file /home/ubuntu/error.log "CTFd:create_app()"

We found this guidance for optimizing workers and worker-connections helpful.

When you have finished testing your running CTFd instance, set up the competition dates in the CTFd admin interface.

Install the Ethereum Oracle CTFd extension and generate contracts.

Add SES SMTP credentials to the CTFd admin interface for user registration if you want email verification.

At this point, the CTF should be entirely set up. Only keep going if you’d like to set up Cloudflare for DoS protection.

Set up Cloudflare DNS and modify the AWS security group for the CTFd box to allow ingress from Cloudflare IPs.

Set up Cloudflare SSL/TLS: use Full (strict) mode if you still have a valid cert from Let’s Encrypt.

Set up Edge Certificates: use the Cloudflare Universal SSL certificate unless you have the budget and security requirements for a dedicated cert or for uploading your own cert.

In Cloudflare, enable Always Use HTTPS, HSTS, TLS 1.3, Automatic HTTP Rewrites, and Certificate Transparency Monitoring.

In Cloudflare, set the minimum TLS version to 1.2.

You can also set up whitelisting for known good IP addresses in Firewall Tools so that Cloudflare doesn’t challenge requests from these ranges.

Dynamic Oracle

One requirement for the competition was to support Ethereum smart contract challenges; after all, this was called Capture the Coin. If no (testnet) coins could be captured, we would not have lived up to the name.

The contest included the excellent CTFd Oracle plugin by nbanmp, which allowed us to process on-chain events such as determining whether or not the deployed smart contract was successfully destroyed by the player. We modified the original oracle to allow for contract pre-deployment, since the Ethereum Ropsten network may sometimes be unreliable.

You can find the source code for the oracle and contract deployer here: https://github.com/iphelix/ctc-eth-challenge

Future Steps

In the future, we plan to use the dockerized setup so that it is easy to spin the entire CTFd platform up and down. CTFd already allows its settings to be codified, which helps make application deploys predictable. We would also like to codify our infrastructure so that we get predictable and simple deploys.

Thank you to all the open source contributors; without their contributions, hosting this CTF would not have been possible.

If you’re interested in working on solving tough security challenges while also creating an open financial system, please join us!


All images provided herein are by Coinbase.

How the Coinbase Security team deployed CTFd to Power our First Capture the Flag contest at Defcon… was originally published in The Coinbase Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Money 101 https://blog.coinbase.com/how-the-coinbase-security-team-deployed-ctfd-to-power-our-first-capture-the-flag-contest-at-defcon-eeb8da3bf2b0?source=rss----c114225aeaf7---4 via http://www.rssmix.com/


Creating A Shopping Cart With HTML5 Web Storage


Matt Zand

2019-08-26

With the advent of HTML5, many sites were able to replace JavaScript plugins and code with simpler, more efficient HTML features such as audio, video, and geolocation. HTML5 tags made the job of developers much easier while improving page load time and site performance. In particular, HTML5 web storage was a game changer, as it allows users’ browsers to store user data without using a server. The creation of web storage thus allowed front-end developers to accomplish more on their websites without knowing or using server-side code or a database.

Online e-commerce websites predominantly use server-side languages such as PHP to store users’ data and pass them from one page to another. Using JavaScript back-end frameworks such as Node.js, we can achieve the same goal. However, in this tutorial, we’ll show you step by step how to build a shopping cart with HTML5 and some minor JavaScript code. Other uses of the techniques in this tutorial would be to store user preferences, the user’s favorite content, wish lists, and user settings like name and password on websites and native mobile apps without using a database.

Many high-traffic websites rely on complex techniques such as server clustering, DNS load balancers, client-side and server-side caching, distributed databases, and microservices to optimize performance and availability. Indeed, the major challenge for dynamic websites is to fetch data from a database and use a server-side language such as PHP to process them. However, remote database storage should be used only for essential website content, such as articles and user credentials. Features such as user preferences can be stored in the user’s browser, similar to cookies. Likewise, when you build a native mobile app, you can use HTML5 web storage in conjunction with a local database to increase the speed of your app. Thus, as front-end developers, we need to explore ways in which we can exploit the power of HTML5 web storage in our applications in the early stages of development.

I have been a part of a team developing a large-scale social website, and we used HTML5 web storage heavily. For instance, when a user logs in, we store the hashed user ID in an HTML5 session and use it to authenticate the user on protected pages. We also use this feature to store all new push notifications — such as new chat messages, website messages, and new feeds — and pass them from one page to another. When a social website gets high traffic, total reliance on the server for load balancing might not work, so you have to identify tasks and data that can be handled by the user’s browser instead of your servers.

Project Background

A shopping cart allows a website’s visitor to view product pages and add items to their basket. The visitor can review all of their items and update their basket (such as to add or remove items). To achieve this, the website needs to store the visitor’s data and pass them from one page to another, until the visitor goes to the checkout page and makes a purchase. Storing data can be done via a server-side language or a client-side one. With a server-side language, the server bears the weight of the data storage, whereas with a client-side language, the visitor’s computer (desktop, tablet or smartphone) stores and processes the data. Each approach has its pros and cons. In this tutorial, we’ll focus on a simple client-side approach, based on HTML5 and JavaScript.

Note: In order to be able to follow this tutorial, basic knowledge of HTML5, CSS and JavaScript is required.

Project Files

Click here to download the project’s source files. You can see a live demo, too.

Overview Of HTML5 Web Storage

HTML5 web storage allows web applications to store values locally in the browser that can survive the browser session, just like cookies. Unlike cookies, which need to be sent with every HTTP request, web storage data is never transferred to the server; thus, web storage outperforms cookies in web performance. Furthermore, a cookie can hold only about 4 KB of data, whereas web storage typically allows around 5 MB per origin. Web storage works like a simple associative array, mapping string keys to string values, and it comes in two types:

Session storage This stores data in one browser session, where it remains available until the browser or browser tab is closed. Popup windows opened from the same window can see session storage, and so can iframes inside the same window. However, multiple windows from the same origin (URL) cannot see each other’s session storage.

Local storage This stores data in the web browser with no expiration date. The data is available to all windows with the same origin (domain), even after the browser or its tabs are closed and reopened.

Both storage types are currently supported in all major web browsers. Keep in mind that you cannot pass storage data from one browser to another, even if both browsers are visiting the same domain.
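As a rough illustration of the key-value model described above, here is a minimal sketch of storing item quantities. The helper names (saveQuantity, readQuantity, listItems) and the MemoryStorage class are hypothetical and are not part of the tutorial's project files; MemoryStorage only imitates the standard getItem, setItem, removeItem, clear, key and length members so that the sketch can also run outside a browser. In a page, you would pass window.localStorage or window.sessionStorage instead.

```javascript
// Hypothetical in-memory stand-in for the Web Storage interface.
// Like real web storage, it keeps every value as a string.
class MemoryStorage {
  constructor() { this.map = new Map(); }
  get length() { return this.map.size; }
  key(index) {
    const keys = Array.from(this.map.keys());
    return index < keys.length ? keys[index] : null;
  }
  getItem(key) {
    const k = String(key);
    return this.map.has(k) ? this.map.get(k) : null;
  }
  setItem(key, value) { this.map.set(String(key), String(value)); }
  removeItem(key) { this.map.delete(String(key)); }
  clear() { this.map.clear(); }
}

// Store a quantity under the item's name (hypothetical helper).
function saveQuantity(storage, item, quantity) {
  storage.setItem(item, String(quantity));
}

// Read a quantity back as a number, or null if the item is not stored.
function readQuantity(storage, item) {
  const raw = storage.getItem(item);
  return raw === null ? null : Number(raw);
}

// Walk every stored key and collect name/quantity pairs.
function listItems(storage) {
  const items = [];
  for (let i = 0; i < storage.length; i++) {
    const name = storage.key(i);
    items.push({ name: name, quantity: Number(storage.getItem(name)) });
  }
  return items;
}

// Usage with the stand-in; in a browser, pass localStorage or sessionStorage.
const demo = new MemoryStorage();
saveQuantity(demo, "apples", 3);
console.log(readQuantity(demo, "apples"));
console.log(listItems(demo));
```

Passing the storage object in as a parameter is a design choice made for this sketch: the same helpers then work unchanged with either local or session storage.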

Build A Basic Shopping Cart

To build our shopping cart, we first create an HTML page with a simple cart to show items, and a simple form to add or edit the basket. Then, we add HTML web storage to it, followed by JavaScript coding. Although we are using HTML5 local storage, all steps are identical for HTML5 session storage and can be applied to it as well. Lastly, we’ll go over some jQuery code, as an alternative to JavaScript code, for those interested in using jQuery.

Add HTML5 Local Storage To Shopping Cart

Our HTML page is a basic page, with tags for external JavaScript and CSS referenced in the head.

<!DOCTYPE HTML>
<html lang="en-US">
<head>
  <title>HTML5 Local Storage Project</title>
  <META charset="UTF-8">
  <META name="viewport" content="width=device-width, initial-scale=1, shrink-to-fit=no">
  <META NAME='rating' CONTENT='General' />
  <META NAME='expires' CONTENT='never' />
  <META NAME='language' CONTENT='English, EN' />
  <META name="description" content="shopping cart project with HTML5 and JavaScript">
  <META name="keywords" content="HTML5,CSS,JavaScript, html5 session storage, html5 local storage">
  <META name="author" content="dcwebmakers.com">
  <script src="Storage.js"></script>
  <link rel="stylesheet" href="StorageStyle.css">
</head>

Below is the HTML content for the page’s body:

<form name=ShoppingList>
  <div id="main">
    <table>
      <tr>
        <td><b>Item:</b><input type=text name=name></td>
        <td><b>Quantity:</b><input type=text name=data></td>
      </tr>
      <tr>
        <td>
          <input type=button value="Save" onclick="SaveItem()">
          <input type=button value="Update" onclick="ModifyItem()">
          <input type=button value="Delete" onclick="RemoveItem()">
        </td>
      </tr>
    </table>
  </div>
  <div id="items_table">
    <h3>Shopping List</h3>
    <table id=list></table>
    <p>
      <label><input type=button value="Clear" onclick="ClearAll()"> <i>* Delete all items</i></label>
    </p>
  </div>
</form>

Adding JavaScript To The Shopping Cart

We’ll create the JavaScript function doShowAll() and call it from the onload event to check for browser support and to dynamically create the table that shows the stored name-value pairs.

<body onload="doShowAll()">

Alternatively, you can use the JavaScript onload event by adding this to the JavaScript code:

window.onload = doShowAll;

Or use this for jQuery:

// The .load() event shorthand was removed in jQuery 3.0, so bind with .on() instead.
$( window ).on( "load", function() { doShowAll(); });