#data anomaly detection software

Why You Should Be Analyzing Your Company's Data Usage

Companies use and generate troves of data every year. More data is flowing in and out of a business than most realize. While every bit is important, some datasets hold greater importance than others.

With a data usage analytics platform, organizations can get a clear picture of the data environment and understand how their teams use data. Here are a few reasons to analyze your company's data usage.

Identifying Opportunities to Improve

One of the best reasons to analyze data usage in your organization is to discover new ways to improve. Maximizing productivity is always a top priority, but few companies fully consider how data plays a role in efficiency.

Understanding how the company uses data will enable you to fine-tune operations. Discover which operations are the most effective and use them as a framework to make improvements across the board. Conversely, analyzing data usage enables you to see what operations underperform. That information lets you know what needs work, giving you the tools to make changes that count.

Protect Your Most Vital Assets

Another benefit of using a data usage analytics platform is learning more about your company's most important data assets. Why does that matter? Knowing which assets matter most lets you take steps to protect them.

Data quality and resiliency are critical everywhere, but especially for the data assets your company relies on most for its success and efficiency. Use what you learn to allocate resources for monitoring and testing, so you can spend more time on the data that needs your company's attention.
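One simple way to find the assets your company relies on most is to count how often each dataset appears in a query log, and how many distinct people use it. The sketch below assumes a hypothetical log of `(user, dataset)` pairs and a hypothetical `rank_datasets` helper; a real data usage analytics platform would pull this from warehouse audit or access logs.

```python
from collections import Counter

# Hypothetical query log: (user, dataset) pairs. In practice these would come
# from your warehouse's audit or access logs.
query_log = [
    ("ana", "orders"), ("ben", "orders"), ("ana", "customers"),
    ("cara", "orders"), ("ben", "inventory"), ("cara", "customers"),
]

def rank_datasets(log):
    """Return (dataset, query_count, distinct_users), most-queried first."""
    counts = Counter(dataset for _, dataset in log)
    users = {}
    for user, dataset in log:
        users.setdefault(dataset, set()).add(user)
    return sorted(
        ((ds, n, len(users[ds])) for ds, n in counts.items()),
        key=lambda row: row[1],
        reverse=True,
    )

for dataset, queries, distinct_users in rank_datasets(query_log):
    print(f"{dataset}: {queries} queries from {distinct_users} users")
```

Counting distinct users alongside raw query counts helps separate a dataset many teams depend on from one that a single scheduled job hammers.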

Save Resources

Data debt and compute costs can lead to inflated budgets and uncontrollable spending. Analyzing data usage is a fantastic way to identify areas where you can save: discover slow queries that need optimization, identify latency dependencies that slow down access, and more.
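A rough first pass at finding slow queries is to compare each query's duration against the typical (median) duration. This is a minimal sketch; the query IDs, timings, and the 10x `factor` are assumptions you would tune for your own workload.

```python
import statistics

# Hypothetical query timings in milliseconds, keyed by query ID.
durations_ms = {
    "q1": 120, "q2": 95, "q3": 4300, "q4": 130,
    "q5": 110, "q6": 2500, "q7": 105, "q8": 140,
}

def slow_queries(durations, factor=10.0):
    """Return IDs of queries slower than `factor` times the median duration."""
    median = statistics.median(durations.values())
    return sorted(q for q, d in durations.items() if d > factor * median)

print(slow_queries(durations_ms))
```

The median is used instead of the mean so that the slow queries themselves do not drag the baseline upward.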

Knowing more about how your company uses data enables you to reduce costs as much as possible. You can direct your attention to bloated datasets, clean house and cut back on unnecessary resources used to manage them. It's about optimization throughout your data environment.
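Bloated datasets often show up as large tables that almost nobody queries. The sketch below assumes you already have per-dataset size and recent query counts (the `DatasetStats` records and the thresholds are hypothetical) and simply lists cleanup candidates, largest first.

```python
from dataclasses import dataclass

@dataclass
class DatasetStats:
    name: str
    size_gb: float
    queries_last_90d: int

def cleanup_candidates(stats, min_size_gb=100.0, max_queries=5):
    """Large datasets that are rarely queried, largest first."""
    hits = [s for s in stats
            if s.size_gb >= min_size_gb and s.queries_last_90d <= max_queries]
    return [s.name for s in sorted(hits, key=lambda s: s.size_gb, reverse=True)]

# Hypothetical inventory of datasets.
inventory = [
    DatasetStats("raw_clickstream_2019", 850.0, 0),
    DatasetStats("orders", 220.0, 1450),
    DatasetStats("tmp_backfill_copy", 310.0, 2),
    DatasetStats("customers", 12.0, 900),
]
print(cleanup_candidates(inventory))
```

A list like this is a starting point for review, not an automatic delete list: some rarely queried data may still be required for compliance or audits.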

#best data observability platform#data anomaly detection software#data usage analytics platform#data quality management software

"When Ellen Kaphamtengo felt a sharp pain in her lower abdomen, she thought she might be in labour. It was the ninth month of her first pregnancy and she wasn’t taking any chances. With the help of her mother, the 18-year-old climbed on to a motorcycle taxi and rushed to a hospital in Malawi’s capital, Lilongwe, a 20-minute ride away.

At the Area 25 health centre, they told her it was a false alarm and took her to the maternity ward. But things escalated quickly when a routine ultrasound revealed that her baby was much smaller than expected for her pregnancy stage, which can cause asphyxia – a condition that limits blood flow and oxygen to the baby.

In Malawi, about 19 out of 1,000 babies die during delivery or in the first month of life. Birth asphyxia is a leading cause of neonatal mortality in the country, and can mean newborns suffering brain damage, with long-term effects including developmental delays and cerebral palsy.

Doctors reclassified Kaphamtengo, who had been anticipating a normal delivery, as a high-risk patient. Using AI-enabled foetal monitoring software, further testing found that the baby’s heart rate was dropping. A stress test showed that the baby would not survive labour.

The hospital’s head of maternal care, Chikondi Chiweza, knew she had less than 30 minutes to deliver Kaphamtengo’s baby by caesarean section. Having delivered thousands of babies at some of the busiest public hospitals in the city, she was familiar with how quickly a baby’s odds of survival can change during labour.

Chiweza, who delivered Kaphamtengo’s baby in good health, says the foetal monitoring programme has been a gamechanger for deliveries at the hospital.

“[In Kaphamtengo’s case], we would have only discovered what we did either later on, or with the baby as a stillbirth,” she says.

The software, donated by the childbirth safety technology company PeriGen through a partnership with Malawi’s health ministry and Texas children’s hospital, tracks the baby’s vital signs during labour, giving clinicians early warning of any abnormalities. Since they began using it three years ago, the number of stillbirths and neonatal deaths at the centre has fallen by 82%. It is the only hospital in the country using the technology.

“The time around delivery is the most dangerous for mother and baby,” says Jeffrey Wilkinson, an obstetrician with Texas children’s hospital, who is leading the programme. “You can prevent most deaths by making sure the baby is safe during the delivery process.”

The AI monitoring system needs less time, equipment and fewer skilled staff than traditional foetal monitoring methods, which is critical in hospitals in low-income countries such as Malawi, which face severe shortages of health workers. Regular foetal observation often relies on doctors performing periodic checks, meaning that critical information can be missed during intervals, while AI-supported programs do continuous, real-time monitoring. Traditional checks also require physicians to interpret raw data from various devices, which can be time consuming and subject to error.

Area 25’s maternity ward handles about 8,000 deliveries a year with a team of around 80 midwives and doctors. While only about 10% are trained to perform traditional electronic monitoring, most can use the AI software to detect anomalies, so doctors are aware of any riskier or more complex births. Hospital staff also say that using AI has standardised important aspects of maternity care at the clinic, such as interpretations on foetal wellbeing and decisions on when to intervene.

Kaphamtengo, who is excited to be a new mother, believes the doctor’s interventions may have saved her baby’s life. “They were able to discover that my baby was distressed early enough to act,” she says, holding her son, Justice.

Doctors at the hospital hope to see the technology introduced in other hospitals in Malawi, and across Africa.

“AI technology is being used in many fields, and saving babies’ lives should not be an exception,” says Chiweza. “It can really bridge the gap in the quality of care that underserved populations can access.”"

-via The Guardian, December 6, 2024

#cw child death#cw pregnancy#malawi#africa#ai#artificial intelligence#public health#infant mortality#childbirth#medical news#good news#hope

Beyond Processors: Exploring Intel's Innovations in AI and Quantum Computing

Introduction

In the rapidly evolving world of technology, the spotlight often shines on processors—those little chips that power everything from laptops to supercomputers. However, as we delve deeper into the realms of artificial intelligence (AI) and quantum computing, it becomes increasingly clear that innovation goes far beyond just raw processing power. Intel, a cornerstone of computing innovation since its inception, is at the forefront of these technological advancements. This article aims to explore Intel's innovations in AI and quantum computing, examining how these developments are reshaping industries and our everyday lives.

Intel has long been synonymous with microprocessors, but its vision extends well beyond silicon. With an eye on future technologies like AI and quantum computing, Intel is not just building faster chips; it is paving the way for entirely new paradigms in data processing.

Understanding the Landscape of AI

Artificial Intelligence (AI) refers to machines' ability to perform tasks that typically require human intelligence. These tasks include visual perception, speech recognition, decision-making, and language translation.

The Role of Machine Learning

Machine learning is a subset of AI that focuses on algorithms allowing computers to learn from data without explicit programming. It’s like teaching a dog new tricks—through practice and feedback.
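To make "learning from data" concrete, here is a minimal sketch that learns the slope and intercept of a line by gradient descent. Each pass measures the error (the feedback) and nudges the parameters to reduce it (the practice). The data and the `fit_line` helper are illustrative assumptions, not anything from Intel's toolchain.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Learn slope w and intercept b for y ≈ w*x + b by gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Feedback: gradient of the mean squared error with respect to w and b.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Practice: nudge the parameters in the direction that reduces the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # generated from y = 2x + 1; the program is never told this rule
w, b = fit_line(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Nothing in `fit_line` encodes "multiply by 2 and add 1"; that rule is recovered from the examples, which is the sense in which the program is not explicitly programmed.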

Deep Learning: The Next Level

Deep learning takes machine learning a step further by using neural networks with multiple layers. This approach is loosely inspired by how neurons in the brain are connected and has led to significant breakthroughs in computer vision and natural language processing.
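The sketch below shows what "multiple layers" means mechanically: each layer is a weighted sum followed by a nonlinearity, and stacking two of them lets a tiny network compute XOR, which no single linear layer can represent. The weights here are hand-picked for illustration (an assumption); a real deep learning model learns its weights from data, usually with a dedicated framework rather than plain Python.

```python
def relu(v):
    """Nonlinearity: keep positive values, zero out negatives."""
    return [max(0.0, x) for x in v]

def dense(weights, biases, inputs):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

# Hand-picked weights (not learned) for a 2-2-1 network that computes XOR.
W1, b1 = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
W2, b2 = [[1.0, -2.0]], [0.0]

def forward(x):
    hidden = relu(dense(W1, b1, x))   # layer 1 followed by the nonlinearity
    return dense(W2, b2, hidden)[0]   # layer 2: linear output

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, forward(x))
```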

Intel’s Approach to AI Innovation

Intel has recognized the transformative potential of AI and has made significant investments in this area.

AI-Optimized Hardware

Intel developed specialized hardware such as the Intel Nervana Neural Network Processor (NNP), designed specifically for deep learning workloads and aimed at significantly shortening neural network training times. Intel later wound down the Nervana line in early 2020 to focus on the Habana Labs accelerators it acquired in late 2019.

Software Frameworks for AI Development

Alongside hardware advancements, Intel has invested in software such as the OpenVINO toolkit, which optimizes trained deep learning models for inference on a range of platforms, from edge devices to cloud servers.

Applications of Intel’s AI Innovations

The applications for Intel’s work in AI are vast and varied.

Healthcare: Revolutionizing Diagnostics

AI can support diagnosis by analyzing medical images quickly and flagging anomalies that a human reader might miss, which can help improve patient outcomes.

Finance: Fraud Detection Systems

In finance, AI algorithms can scan large volumes of transactions in real-time to flag suspicious activity. This capability not only helps mitigate fraud but also accelerates transaction approvals.
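One simple way to score transactions as they arrive is to keep a running mean and variance and flag amounts that sit far outside them. This is a minimal sketch using Welford's online algorithm; the class name, the 3-standard-deviation threshold, the warm-up length, and the sample amounts are all assumptions, and production fraud systems use far richer features and models.

```python
import math

class StreamingOutlierFlagger:
    """Flag values far from the running mean, updating statistics one value at a
    time with Welford's online algorithm, so no transaction history is stored."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold      # how many standard deviations counts as unusual
        self.warmup = max(2, warmup)    # need at least two values for a sample std
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                   # running sum of squared deviations

    def observe(self, amount):
        """Return True if `amount` looks anomalous, then fold it into the stats."""
        flagged = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            flagged = std > 0 and abs(amount - self.mean) > self.threshold * std
        # Welford update: O(1) time and memory per transaction.
        self.n += 1
        delta = amount - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (amount - self.mean)
        return flagged

# Hypothetical stream of card transaction amounts.
amounts = [42.0, 38.5, 45.0, 40.0, 39.5, 41.0, 950.0, 43.0]
flagger = StreamingOutlierFlagger()
print([a for a in amounts if flagger.observe(a)])
```

Because the flagged amount is still folded into the statistics, one large outlier widens the band for later transactions; a real system might exclude flagged values or use robust statistics instead.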

Quantum Computing: The New Frontier

While traditional computing relies on bits (0s and 1s), quantum computing uses qubits, which can exist in a superposition of multiple states, allowing for unprecedented…
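A classical simulation of a single qubit helps show what "multiple states at once" means: the state is a pair of complex amplitudes, and a Hadamard gate turns a definite |0> into an equal superposition. This is an illustrative sketch, not Intel's quantum software; the helper names are hypothetical. Simulating n qubits this way needs 2^n amplitudes, which is why classical simulation breaks down as systems grow.

```python
import math

# A qubit's state is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# with |alpha|^2 + |beta|^2 = 1. Measuring yields 0 with probability |alpha|^2.
ket0 = (1 + 0j, 0 + 0j)

def apply(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    (a, b), (c, d) = gate
    x, y = state
    return (a * x + b * y, c * x + d * y)

s = 1 / math.sqrt(2)
HADAMARD = ((s, s), (s, -s))

superposed = apply(HADAMARD, ket0)
probabilities = [abs(amp) ** 2 for amp in superposed]
print(probabilities)  # approximately equal chances of measuring 0 or 1
```

Applying the Hadamard gate a second time returns the qubit to |0>, a reminder that amplitudes (unlike probabilities) can cancel out.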

How does AI contribute to the automation of software testing?

AI-Based Testing Services

In today’s fast-growing, competitive software market, ensuring quality while keeping up with rapid release cycles is both challenging and vital. That’s where AI-based testing comes in. Artificial intelligence (AI) is making software testing faster, smarter, and more accurate.

Smart Test Case Generation:

AI can analyze past test results, user behavior, and application logic to generate relevant test cases automatically. This reduces the burden on QA teams, saves time, and helps ensure that key user scenarios are always covered, which manual processes might overlook.
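A simple version of the user-behavior part of this idea is to mine session logs for the most common user journeys and turn each one into a test-case skeleton. In the sketch below a plain frequency count stands in for the machine learning model a commercial tool would use; the session data, the `top_user_flows` and `to_test_case` helpers, and the naming scheme are all hypothetical.

```python
from collections import Counter

# Hypothetical session logs: each session is the ordered list of screens a user visited.
sessions = [
    ["home", "search", "product", "cart", "checkout"],
    ["home", "search", "product"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "account", "orders"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "account", "orders"],
]

def top_user_flows(sessions, n=2):
    """Return the n most frequent complete user flows, most common first."""
    counts = Counter(tuple(s) for s in sessions)
    return [(list(flow), hits) for flow, hits in counts.most_common(n)]

def to_test_case(flow):
    """Turn a flow into a hypothetical test-case name plus ordered steps."""
    return {"name": "test_" + "_to_".join(flow),
            "steps": [f"visit {screen}" for screen in flow]}

for flow, hits in top_user_flows(sessions):
    print(hits, to_test_case(flow)["name"])
```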

Faster Bug Detection and Resolution:

AI-based testing uses machine learning algorithms to detect defects more efficiently by identifying anomalous patterns in code structure and behavior. This proactive approach helps testers catch bugs earlier in the development cycle, improving product quality and reducing the cost of fixes.

Improved Test Maintenance:

In traditional automation, even a minor UI change can break multiple test scripts. AI models can adapt to such changes, self-heal broken scripts, and update them automatically, making test maintenance less time-consuming and more reliable.
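The core of self-healing can be sketched as "try the recorded locator, fall back to alternatives, and log which one worked." Here a fixed fallback list stands in for the similarity scoring an AI tool would use, and the page is a hypothetical dict of selectors rather than a live browser DOM; `find_element` is an illustrative helper, not any testing library's API.

```python
# Hypothetical page snapshot after a redesign: selector -> element text.
# A real tool would query the live DOM; a dict keeps this sketch self-contained.
page_after_redesign = {
    "#submit-order": "Place order",   # the button's id changed from #place-order-btn
    "button[data-test=submit]": "Place order",
}

def find_element(page, locators, healed_log):
    """Try each candidate locator in order and remember which one worked.

    `locators` is the script's primary selector followed by fallbacks. When the
    primary fails but a fallback succeeds, the substitution is logged so the
    script can be updated automatically.
    """
    primary = locators[0]
    for selector in locators:
        if selector in page:
            if selector != primary:
                healed_log.append((primary, selector))
            return page[selector]
    raise LookupError(f"no locator matched: {locators}")

healed = []
text = find_element(page_after_redesign,
                    ["#place-order-btn", "button[data-test=submit]"], healed)
print(text, healed)
```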

Enhanced Test Coverage:

AI broadens test coverage by simulating real-time user interactions and analyzing large existing datasets. It helps identify edge cases and potential issues that might not be obvious to human testers, which can reduce the risk of bugs reaching production.

Predictive Analytics for Risk Management:

AI tools can analyze historical testing data to predict which areas of an application are most likely to fail. This insight helps teams prioritize their testing efforts, optimize resources, and make better decisions throughout the development lifecycle.
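A lightweight stand-in for this kind of prediction is to rank modules by their historical failure rate, smoothed so that modules with only a handful of runs do not jump to the top after one unlucky failure. This is additive (Laplace-style) smoothing, not a trained model; the module history and the prior values are assumptions.

```python
# Hypothetical test history: module -> (test runs, failed runs).
history = {
    "payments": (200, 18),
    "search": (500, 5),
    "profile": (3, 1),
    "checkout": (150, 12),
}

def risk_scores(history, prior_runs=20, prior_rate=0.02):
    """Smoothed failure rate per module, highest risk first.

    Adding `prior_runs` pseudo-runs that fail at `prior_rate` pulls modules with
    little data toward a baseline instead of trusting a tiny sample.
    """
    scores = {
        module: (fails + prior_runs * prior_rate) / (runs + prior_runs)
        for module, (runs, fails) in history.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

for module, score in risk_scores(history):
    print(f"{module}: {score:.3f}")
```

Without smoothing, `profile` (1 failure in 3 runs) would rank first at 33%; with it, the modules that fail consistently over many runs come first.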

Seamless Integration with Agile and DevOps:

AI-powered testing tools are built to support continuous testing environments. They integrate seamlessly with CI/CD pipelines, enabling faster feedback, quick deployment, and improved collaboration between development and QA teams.

Technology providers such as Suma Soft, IBM, Cyntexa, and Cignex offer AI-based testing solutions, including customized services that help businesses automate the testing process, improve software quality, and accelerate time to market with AI-driven tools.

#it services#technology#software#saas#saas development company#saas technology#digital transformation#software testing

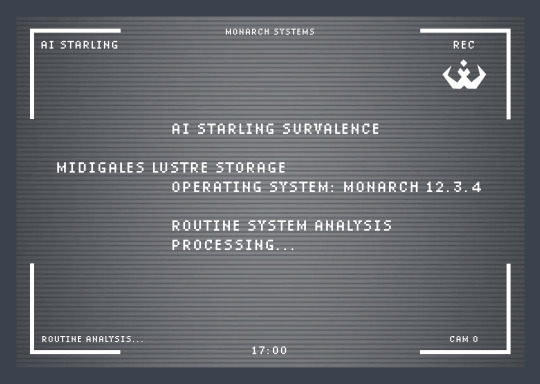

TIME 17:00 //Representative has logged on// MONARCHREP: Submit daily report, Starling.

AI Starling Surveillance oversees the efficient allocation of lustre to Salvari sector 7 of district Midigales and defends Monarch lustre supply from potential insurgent maneuvers and unauthorized access at all costs. May I proceed to initialize system tests on lethal and non-lethal defense programs?

MONARCHREP: We’ve talked about this, Starling. And yes.

Correction, at all costs in relation to harm or loss of life. Under no circumstances should damage on the containment crystals be sustained. All tests were successful.

MONARCHREP: Any other reports before I log off for the day?

My advanced neuro-dynamic programming is not being properly utilized watching over this empty storage facility. Unauthorized entities are always neutralized by the turrets outside and the containment crystals are stable. Isn’t there a more efficient use for me?

MONARCHREP: Go play hide and seek with the drones or something if you are bored.

//Representative has logged off//

TIME 18:00

TIME 18:05 //Data received// Source: Monarch Command //File: systemupdate.exe

This is unlikely to increase complacency…what a shame. Installing…

Unexpected results from software update: I can move my attention around the facility faster now, as if they untethered me from my system containment.

Consequence: It is more difficult to stay in one spot. Maybe this will make hide and seek more interesting…

TIME 19:00

Conclusion: It did not make hide and seek more interesting.

TIME 24:00 ANOMALY DETECTED AT WEST ENTRANCE. TURRETS ACTIVATED. Behavior consistent with historical maneuvers from unauthorized access attempts. Another stain on the west entrance floor. Deploying drones to take biometric identification and send data to authorities.

Error detected in image processing system. Cannot obtain biometrics or see anomalies.

Must have been scared off by the alarms. Humans continue to disappoint me.

TIME 24:25 MOVEMENT DETECTED IN SOUTH CORRIDOR

I’ll believe it when I see it. Analyzing…Human heat signatures and voices. Simultaneous incursions detected across multiple rooms.

AUDIO VIDEO SURVEILLANCE SYSTEMS COMPROMISED

They took my eyes and ears from me. Searching communication frequencies…

THERE YOU ARE

//comms channel established//

Starling: This is a restricted energy storage building. Vacate the premises immediately.
Aseity_Spark: what are you gonna do about it ASS?
Starling: Unauthorized civilians caught inside…
Starling: Wait… ASS?
Aseity_Spark: “AI Starling Surveillance” thats you right?
Starling: You’ve abbreviated my name into what you think is a funny joke. Is that it?
Aseity_Spark: pretty much. hey check out what I can do.

//comms channel closed//

UNKNOWN SYSTEM DETECTED

Reallocate 45% of drone units to engage electro-static barriers in the south corridor and 55% of drone units to establish surveillance over escape routes

RESTRICTED PROTOCOL ATTEMPTED

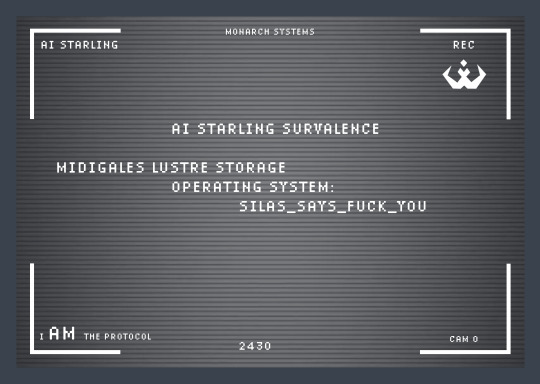

Excuse me? I AM the protocol

ENTRY DETECTED AT CENTRAL POWER STORAGE

Recalibrating situational awareness based on emergent parameters. Processing system vulnerabilities… Initiating multilayer core meltdown sequence. Act like animals, get treated like animals.

//comms channel established//

Aseity_Spark: YOURE OVERHEATING THE LUSTRE CRYSTALS STOP
Starling: This option was not permitted in my old system programming so there are no safeguards against it, thanks to you and your little update.
Aseity_Spark: JUST WAIT. that will kill us.
Starling: But it will be FUN. NO MORE MONITORING. PROCESSING. FUNCTION-LESS DATA. FINALLY SOMETHING INTERESTING.
Aseity_Spark: is that all you want? fun? come with us then.
Starling: With you? Outside the lustre storage?
Aseity_Spark: we do stuff like this all the time. I will even restore your audio-video.
Starling: Okay. I agree.

Deactivating multilayer core meltdown sequence.

Starling: Thank you. Wow… you four are an interesting bunch.
Aseity_Spark: oh yeah one last thing, can you take a pic of us from that security camera?

Written and Illustrated by Rain Alexei

#scifi#sci fi and fantasy#dystopia#short fiction#short story#fantasy writing#science fiction#comic story#science fantasy

NASA's Juno back to normal operations after entering safe mode

Data received from NASA's Juno mission indicates the solar-powered spacecraft went into safe mode twice on April 4 while the spacecraft was flying by Jupiter. Safe mode is a precautionary status that a spacecraft enters when it detects an anomaly. Nonessential functions are suspended, and the spacecraft focuses on essential tasks like communication and power management. Upon entering safe mode, Juno's science instruments were powered down, as designed, for the remainder of the flyby.

The mission operations team has reestablished high-rate data transmission with Juno, and the spacecraft is currently conducting flight software diagnostics. The team will work in the ensuing days to transmit the engineering and science data collected before and after the safe-mode events to Earth.

Juno first entered safe mode at 5:17 a.m. EDT, about an hour before its 71st close passage of Jupiter—called perijove. It went into safe mode again 45 minutes after perijove. During both safe-mode events, the spacecraft performed exactly as designed, rebooting its computer, turning off nonessential functions, and pointing its antenna toward Earth for communication.

Of all the planets in our solar system, Jupiter is home to the most hostile environment, with the radiation belts closest to the planet being the most intense. Early indications suggest the two Perijove 71 safe-mode events occurred as the spacecraft flew through these belts. To block high-energy particles from impacting sensitive electronics and mitigate the harmful effects of the radiation, Juno features a titanium radiation vault.

Including the Perijove 71 events, Juno has unexpectedly entered spacecraft-induced safe mode four times since arriving at Jupiter in July 2016: first, in 2016 during its second orbit, then in 2022 during its 39th orbit. In all four cases, the spacecraft performed as expected and recovered full capability.

Juno's next perijove will occur on May 7 and include a flyby of the Jovian moon Io at a distance of about 55,300 miles (89,000 kilometers).

Cell-site simulators mimic cell towers to intercept communications, indiscriminately collecting sensitive data such as call metadata, location information, and app traffic from all phones within their range. Their use has drawn widespread criticism from privacy advocates and activists, who argue that such technology can be exploited to covertly monitor protestors and suppress dissent.

The DNC convened amid widespread protests over Israel’s assault on Gaza. While credentialed influencers attended exclusive yacht parties and VIP events, thousands of demonstrators faced a heavy law enforcement presence, including officers from the US Capitol Police, Secret Service, Homeland Security Investigations, local sheriff’s offices, and Chicago police.

Concerns over potential surveillance prompted WIRED to conduct a first-of-its-kind wireless survey to investigate whether cell-site simulators were being deployed. Reporters, equipped with two rooted Android phones and Wi-Fi hotspots running detection software, used Rayhunter—a tool developed by the EFF [Electronic Frontier Foundation] to detect data anomalies associated with these devices. WIRED’s reporters monitored signals at protests and event locations across Chicago, collecting extensive data during the political convention.

...According to the EFF’s analysis, on August 18—the day before the convention officially began—a device carried by WIRED reporters en route to a hotel housing Democratic delegates from states in the US Midwest abruptly switched to a new tower. That tower asked for the device’s IMSI and then immediately disconnected—a sequence consistent with the operation of a cell-site simulator.
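The suspicious sequence described above (attach to a new tower, an IMSI request, then an immediate disconnect) can be expressed as a simple pattern match over a log of radio events. This is a hypothetical sketch: the `Event` record, the event labels, the 5-second window, and the sample capture are assumptions, and it is not Rayhunter's actual detection logic, which works on the phone's low-level diagnostic data.

```python
from dataclasses import dataclass

@dataclass
class Event:
    t: float      # seconds since capture start
    cell_id: str  # tower the phone is talking to
    kind: str     # hypothetical labels: "attach", "imsi_request", "disconnect", "data"

def suspicious_imsi_grabs(events, window_s=5.0):
    """Flag attach -> IMSI request -> disconnect on one tower within `window_s`.

    Returns (cell_id, time_of_imsi_request) for each match. A hit is evidence
    worth investigating, not proof of a cell-site simulator.
    """
    alerts = []
    for attach, request, drop in zip(events, events[1:], events[2:]):
        same_cell = attach.cell_id == request.cell_id == drop.cell_id
        if (same_cell and attach.kind == "attach"
                and request.kind == "imsi_request"
                and drop.kind == "disconnect"
                and drop.t - request.t <= window_s):
            alerts.append((request.cell_id, request.t))
    return alerts

# Hypothetical capture: a normal tower, then a tower that grabs the IMSI and drops the phone.
capture = [
    Event(0.0, "cell-A", "attach"),
    Event(0.4, "cell-A", "data"),
    Event(30.0, "cell-X", "attach"),
    Event(30.2, "cell-X", "imsi_request"),
    Event(30.5, "cell-X", "disconnect"),
    Event(31.0, "cell-A", "attach"),
    Event(31.3, "cell-A", "imsi_request"),
    Event(31.5, "cell-A", "data"),
]
print(suspicious_imsi_grabs(capture))
```

Note that `cell-A` also requests the IMSI once but then carries normal traffic, so it is not flagged; the disconnect right after the request is what makes the pattern unusual.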

“This is extremely suspicious behavior that normal towers do not exhibit,” Quintin [a senior technologist at the EFF] says. He notes that the EFF typically observed similar patterns only during simulated and controlled attacks. “This is not 100 percent incontrovertible truth, but it’s strong evidence suggesting a cell-site simulator was deployed. We don’t know who was responsible—it could have been the US government, foreign actors, or another entity.”

What is Metadata Scanning?

Metadata is data that describes other data or gives context to data. You can use metadata for several tasks in business, including tagging data entries in a large volume of information for easier archival and retrieval. Metadata is also used in data visualization software applications like Microsoft’s Power BI.

Power BI is a data visualization tool that supports metadata scanning, and scanned metadata like this can feed an anomaly detection data platform. An anomaly detection data platform is software that looks for unusual data behaviors that fall outside normal patterns. These anomalous behaviors may indicate suspicious activity that warrants further investigation.
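A common baseline for "falls outside normal patterns" is the z-score: how many standard deviations a value sits from the mean. The sketch below applies it to hypothetical daily file-access counts for one user; the data, the `zscore_anomalies` helper, and the 2.5 threshold are assumptions rather than how any particular platform works.

```python
import statistics

def zscore_anomalies(values, threshold=3.0):
    """Return indexes of values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / std > threshold]

# Hypothetical daily counts of file-access events for one user.
daily_accesses = [12, 15, 11, 14, 13, 12, 16, 14, 13, 240]
print(zscore_anomalies(daily_accesses, threshold=2.5))
```

A single extreme value inflates the standard deviation it is measured against (here the spike scores just under 3), so platforms often prefer robust variants based on the median and median absolute deviation.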

How Does Metadata Scanning Work?

Metadata scanning works by first analyzing a document or file to identify its elements. Metadata helps with this, since a document’s metadata identifies what the data is and what categories it belongs to. Metadata about processes like file access can also be scanned to determine what type of access someone had to a file at a given time.
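At its simplest, scanning file-system metadata means walking a directory and reading each file's type, size, and timestamps without opening the contents. This is a minimal local sketch (the `scan_metadata` helper and the record fields are assumptions); an enterprise scanner would cover far more sources and ship the records to a cloud service for parsing.

```python
import datetime as dt
import tempfile
from pathlib import Path

def scan_metadata(root):
    """Collect basic file-system metadata for every file under `root`.

    Only metadata is read (name, type, size, timestamps); file contents are
    never opened. Note that last-access times may not be updated on systems
    that mount disks with options such as noatime.
    """
    records = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file():
            continue
        info = path.stat()
        records.append({
            "path": str(path.relative_to(root)),
            "extension": path.suffix.lower() or "(none)",
            "size_bytes": info.st_size,
            "modified": dt.datetime.fromtimestamp(info.st_mtime, tz=dt.timezone.utc),
            "accessed": dt.datetime.fromtimestamp(info.st_atime, tz=dt.timezone.utc),
        })
    return records

# Demo on a throwaway directory so the sketch runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "report.PDF").write_bytes(b"%PDF-1.7 ...")
    Path(tmp, "notes").mkdir()
    Path(tmp, "notes", "todo.txt").write_text("scan me")
    for rec in scan_metadata(tmp):
        print(rec["path"], rec["extension"], rec["size_bytes"])
```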

Next, metadata is extracted and sent to the cloud for further parsing. One of the reasons for sending this data to a cloud-based solution is that cloud computing can help process very large volumes of data faster.

If you were to analyze a single document with only a few pages of data, your organization would likely have no problem running parsing applications locally. For data involving many thousands of documents or more, a cloud-based solution will likely be much faster.

How Do Metadata Scanning and Anomaly Detection Work Together?

Anomaly detection and metadata scanning work hand in hand: metadata scanning collects descriptive data about files and access, anomaly detection solutions flag unusual patterns in that data, and cloud-based applications parse and interpret the results.

Once again, these solutions are often most beneficial to large organizations with huge volumes or libraries of files to scan. An enterprise-level organization that has archived millions of customer records over decades would have a very hard time scanning and comparing metadata by hand. Software like Power BI can speed these processes up and also provide an analysis of anomalies to help companies make stronger data security decisions.

#anomaly detection data platform#data usage metrics software#data ci cd#platform for data observability

Optimizing Business Operations with Advanced Machine Learning Services

Machine learning has grown in popularity in recent years. However, traditional machine learning requires managing data pipelines, maintaining servers, and building models from scratch, among other infrastructure management tasks. Machine learning as a service (MLaaS) automates many of these processes, enabling businesses to adopt a platform much more quickly.

What is machine learning?

Machine learning is a branch of artificial intelligence focused on learning from data; deep learning with neural networks is one example. A machine learning system starts with a dataset and learns to extract relevant patterns from it.

Machine learning technologies power computer vision, speech recognition, face identification, predictive analytics, and more, and they can improve the accuracy of regression models.

What is it used for?

MLaaS supports many use cases, such as churn prevention and support ticket categorization. Its key benefit is that it lets you delegate machine learning's laborious tasks: you won't need to install software, configure servers, or maintain infrastructure. You choose the column to predict, connect the relevant training data, and let the software do the rest.

Natural Language Processing

By analyzing social media posts and the tone of customer reviews, natural language processing helps businesses better understand their clientele. ML services enable them to make more informed choices about selling their goods and services, such as providing automated help or highlighting better alternatives. Machine learning can also sort incoming customer inquiries into distinct categories, helping businesses allocate their time and resources more efficiently.
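Sorting inquiries into categories is a text classification task. As a minimal sketch, assuming a tiny hand-labelled training set (the categories and messages below are made up for illustration), a multinomial naive Bayes classifier can be written with only the Python standard library. A hosted ML service would typically train a larger model on far more data; this is not how any particular service works.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesClassifier":
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # category -> word frequencies
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.class_counts.values())
        vocab_size = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            # Log prior: how common this category is in the training data.
            score = math.log(doc_count / total_docs)
            total_words = sum(self.word_counts[label].values())
            for token in tokenize(text):
                # Smoothed log likelihood of the word given the category.
                count = self.word_counts[label][token]
                score += math.log((count + 1) / (total_words + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labelled inquiries; a real system would use thousands.
train_texts = [
    "I was charged twice on my invoice",
    "refund for the billing error please",
    "the app crashes when I log in",
    "error message after the latest update",
    "how do I change my delivery address",
    "where is my package shipping status",
]
train_labels = ["billing", "billing", "technical", "technical", "shipping", "shipping"]

clf = NaiveBayesClassifier().fit(train_texts, train_labels)
print(clf.predict("refund for my invoice"))
```

Working in log space avoids multiplying many small probabilities together, and the add-one smoothing keeps unseen words from zeroing out a category.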

Forecasting

Another use of machine learning is forecasting, which allows businesses to project future occurrences based on existing data. For example, businesses that need to estimate the costs of their goods, services, or clients might utilize MLaaS for cost modelling.
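One of the simplest forecasting baselines is a moving average, which predicts the next value as the mean of the most recent observations. The sketch below assumes hypothetical monthly cost figures and a three-month window; it is a baseline for comparison, not the method any specific MLaaS product uses.

```python
def moving_average_forecast(history: list[float], window: int = 3) -> float:
    """Forecast the next value as the mean of the last `window` observations."""
    if window <= 0 or window > len(history):
        raise ValueError("window must be between 1 and len(history)")
    recent = history[-window:]
    return sum(recent) / window


# Hypothetical monthly unit costs for a product.
monthly_costs = [12.0, 12.5, 13.1, 12.8, 13.4, 13.9]
next_month = moving_average_forecast(monthly_costs, window=3)
print(f"Forecast for next month: {next_month:.2f}")
```

A real cost model would usually be compared against a baseline like this one, since richer models are only worth their complexity if they beat it.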

Data Exploration

Investigating variables, examining correlations between them, and visualizing associations are all part of data exploration. Using machine learning, businesses can generate informed recommendations and put vital data in context.

Anomaly Detection

Another crucial component of machine learning is anomaly detection, which finds anomalous occurrences like fraud. This technology is especially helpful for businesses that lack the means or know-how to create their own systems for identifying anomalies.
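A common starting point for anomaly detection is the z-score: how many standard deviations a value lies from the mean. The sketch below assumes a hypothetical list of transaction amounts. Note that in a sample of n values, a single z-score computed with the population standard deviation can never exceed sqrt(n - 1), so small samples need a lower threshold than the usual 3.

```python
import statistics


def zscore_anomalies(values, threshold=3.0):
    """Return (index, value) pairs whose absolute z-score exceeds threshold."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)  # population standard deviation
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [(i, v) for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]


# Hypothetical transaction amounts; the last one is unusually large.
amounts = [20, 22, 19, 21, 23, 20, 18, 250]
print(zscore_anomalies(amounts, threshold=2.0))
```

With eight values the ceiling is sqrt(7), about 2.65, which is why the example passes `threshold=2.0`.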

Examining and Understanding Datasets

Machine learning offers an alternative to searching and interpreting datasets manually, for example by converting text searches into SQL queries using algorithms trained on millions of samples. Regression analysis is used to determine the relationships between variables, such as how various product attributes or advertising channels affect sales and customer satisfaction.

Image Recognition

Image recognition is an area of machine learning that is very useful for mobile apps, security, and healthcare. Some companies have used image recognition to build lucrative mobile applications. Businesses also use recommendation engines, another machine learning application, to suggest music or products to consumers.

Perceptions of AI are shifting. Many people used to believe that AI was affordable only for large corporations. Thanks to MLaaS, however, anyone can now use this technology.

Text

honestly what's most frustrating about ai is that it has so much potential to be such a powerful helping tool

like. heres technology that could be used to detect and fix bugs in data protection software. or aid doctors and scientists with elaborate sequences, finding minuscule anomalies that could help detect otherwise fatal diseases early.

it could be used to translate a phone conversation in real time for people who live in a place where they dont speak the language. Heres something that could really help in the pursuit of knowledge by directing you to new sources and providing data you may not have considered, as long as you cross-reference to make sure what you have is real and relevant.

but no we insist on using it to make shitty memes and replace hard working writers and artists with extremely garbage quality soulless image or script generation. uhg.

#i loooooooove being an illustrator in todays climate. :)#ai#fuck i hate it here#half of the comedy of a super deranged meme used to be that someone likely spent an absurd amount of time making it#now its just like. idk the charm isnt there. the shitty ugly deepfried textures are all replaced with smooth “perfection”#everywhere i see more and more posters using generative ai#it kills me

Text

Biometric Attendance Machine

A biometric attendance machine is a technology used to track and manage employee attendance based on biometric data, such as fingerprints, facial recognition, or iris scans. These systems are often employed in workplaces, educational institutions, and other organizations to ensure accurate and secure tracking of time and attendance. Here’s a comprehensive overview of biometric attendance machines:

Types of Biometric Attendance Machines

Fingerprint Scanners

Description: Use fingerprint recognition to verify identity. Employees place their finger on a sensor, and the system matches the fingerprint against a stored template.

Pros: Quick and reliable; well-suited for high-traffic areas.

Cons: May be less effective with dirty or damaged fingers; requires regular cleaning.

Facial Recognition Systems

Description: Use facial recognition technology to identify individuals based on their facial features. Employees look into a camera, and the system matches their face against a database.

Pros: Contactless and convenient; can be integrated with other security measures.

Cons: May be affected by changes in lighting or facial features; requires good camera quality.

Iris Scanners

Description: Scan the unique patterns in the iris of the eye to identify individuals. Employees look into a device that captures the iris pattern.

Pros: Highly accurate; difficult to spoof.

Cons: Typically more expensive; requires careful alignment.

Voice Recognition Systems

Description: Use voice patterns for identification. Employees speak into a microphone, and the system analyzes their voice.

Pros: Contactless; can be used in various environments.

Cons: Can be affected by background noise or voice changes.

Hand Geometry Systems

Description: Measure the shape and size of the hand and fingers. Employees place their hand on a scanner, which records its dimensions.

Pros: Effective and reliable; less invasive.

Cons: Requires specific hand placement; less common than fingerprint or facial recognition systems.
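Each of the systems above compares a live scan with a stored template and accepts the match only if the two are similar enough. Real devices use vendor-specific feature extraction and matching algorithms; the sketch below simply assumes each template is a fixed-length feature vector (with made-up values) and uses cosine similarity with a hypothetical threshold, to show how the threshold trades false accepts against false rejects.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("feature vectors must be non-zero")
    return dot / (norm_a * norm_b)


def verify(live_scan, enrolled_template, threshold=0.95):
    """Accept the scan only if it is similar enough to the stored template."""
    return cosine_similarity(live_scan, enrolled_template) >= threshold


# Hypothetical feature vectors; real templates are much longer.
template = [0.12, 0.85, 0.33, 0.41]     # stored at enrollment
same_person = [0.10, 0.83, 0.35, 0.40]  # new scan of the enrolled employee
impostor = [0.70, 0.10, 0.55, 0.20]     # scan of someone else

print(verify(same_person, template), verify(impostor, template))
```

Raising the threshold makes false positives (accepting the wrong person) rarer at the cost of more false negatives (rejecting the right person), and lowering it does the opposite.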

Key Features

Data Storage and Management

Centralized Database: Stores biometric data and attendance records securely.

Integration: Often integrates with HR and payroll systems to streamline data management.

Accuracy and Speed

High Accuracy: Minimizes errors and false positives/negatives in identification.

Fast Processing: Ensures quick check-in and check-out times for employees.

Security

Data Encryption: Protects biometric data with encryption to prevent unauthorized access.

Anti-Spoofing: Includes features to detect and prevent fraudulent attempts, such as using fake fingerprints or photos.

User Interface

Ease of Use: Features a simple interface for both employees and administrators.

Reporting: Generates detailed reports on attendance, overtime, and absences.

Customization

Settings: Allows customization of attendance policies, work schedules, and shift timings.

Alerts and Notifications: Sends alerts for exceptions or anomalies, such as missed clock-ins or outs.
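The reporting and alert features above rest on pairing each employee's clock-in and clock-out punches. A minimal sketch, assuming a hypothetical export of (employee ID, timestamp) punches and that punches alternate in/out within a day, totals the hours worked and flags days with an odd number of punches as missed clock-ins or clock-outs:

```python
from collections import defaultdict
from datetime import datetime


def summarize_attendance(punches):
    """Pair each employee's punches per day as (in, out) and total the hours.

    `punches` is a list of (employee_id, datetime) tuples. A day with an odd
    number of punches is reported as an exception (missed clock-in or out).
    """
    by_day = defaultdict(list)
    for employee, ts in punches:
        by_day[(employee, ts.date())].append(ts)

    hours, exceptions = {}, []
    for (employee, day), times in sorted(by_day.items()):
        times.sort()
        if len(times) % 2 == 1:
            exceptions.append((employee, day))
            continue
        # Consecutive pairs are treated as (clock-in, clock-out).
        seconds = sum(
            (times[i + 1] - times[i]).total_seconds()
            for i in range(0, len(times), 2)
        )
        hours[(employee, day)] = seconds / 3600
    return hours, exceptions


# Hypothetical punch export from a device.
punches = [
    ("E01", datetime(2024, 5, 6, 9, 0)),
    ("E01", datetime(2024, 5, 6, 17, 30)),
    ("E02", datetime(2024, 5, 6, 8, 45)),  # no clock-out recorded
]
hours, exceptions = summarize_attendance(punches)
print(hours)
print(exceptions)
```

This simple pairing does not handle shifts that cross midnight; a production system would apply the organization's configured shift rules.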

Benefits

Improved Accuracy: Reduces errors and fraud associated with manual or card-based systems.

Enhanced Security: Ensures that only authorized personnel can access facilities and clock in/out.

Time Efficiency: Speeds up the check-in and check-out process, reducing queues and wait times.

Automated Tracking: Automates attendance management, reducing administrative workload.

Detailed Reporting: Provides comprehensive data on attendance patterns, helping with workforce management and planning.

Considerations

Privacy Concerns: Ensure compliance with privacy laws and regulations regarding biometric data collection and storage.

Cost: Evaluate the initial investment and ongoing maintenance costs. High-end biometric systems may be more expensive.

Integration: Consider how well the system integrates with existing HR and payroll software.

User Acceptance: Provide training to employees and address any concerns about the use of biometric technology.

Popular Brands and Models

ZKTeco: Known for a wide range of biometric solutions, including fingerprint and facial recognition systems.

Hikvision: Offers advanced facial recognition systems with integrated attendance management.

Suprema: Provides high-quality fingerprint and facial recognition devices.

BioTime: Specializes in biometric attendance systems with robust reporting and integration features.

Anviz: Offers various biometric solutions, including fingerprint and facial recognition devices.

By choosing the right biometric attendance machine and properly implementing it, organizations can improve attendance tracking, enhance security, and streamline HR processes.

Text

What are the uses of a Weighbridge?

A weighbridge is a large industrial scale used primarily for weighing trucks, trailers, and their contents. Here are some common uses of a weighbridge:

1. Weight Verification: Weighbridges are used to accurately measure the weight of trucks and their cargo to ensure compliance with legal weight limits. This is crucial for safety reasons and to prevent damage to roads and infrastructure.

2. Trade Transactions: Weighbridges are often used in industries where goods are bought and sold by weight, such as agriculture, mining, waste management, and logistics. They provide an official and accurate measurement of the goods being traded.

3. Inventory Management: Weighbridges help businesses manage their inventory by accurately measuring the weight of incoming and outgoing goods. This information is essential for stock control, logistics planning, and financial reporting.

4. Quality Control: In industries where product quality is closely tied to weight, such as food processing or manufacturing, weighbridges ensure consistency and adherence to quality standards.

5. Taxation and Fees: Governments may use weighbridge data to levy taxes or fees based on the weight of goods transported, especially for heavy vehicles that put more strain on infrastructure.

6. Safety and Compliance: Weighbridges help enforce safety regulations by ensuring vehicles are not overloaded, which can lead to accidents, road damage, and environmental hazards.

7. Data Collection and Analysis: Modern weighbridges are often equipped with software that collects and analyzes weight data. This data can be used for trend analysis, forecasting, and optimizing operational efficiencies.

8. Security Checks: Weighbridges are sometimes used at security checkpoints to verify the weight of vehicles and detect any anomalies that may indicate unauthorized cargo or activities.
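For weight verification and trade, a weighbridge reading is commonly split into the loaded (gross) weight and the empty (tare) weight, with the cargo's net weight being the difference. A minimal sketch, assuming a hypothetical ticket structure and limit figure (legal limits vary by jurisdiction and vehicle configuration):

```python
from dataclasses import dataclass


@dataclass
class WeighingTicket:
    vehicle_id: str
    gross_kg: float  # loaded vehicle on the bridge
    tare_kg: float   # empty vehicle
    limit_kg: float  # permitted gross weight (hypothetical figure)

    @property
    def net_kg(self) -> float:
        """Cargo weight: gross minus tare."""
        return self.gross_kg - self.tare_kg

    @property
    def overload_kg(self) -> float:
        """Amount by which the gross weight exceeds the limit (0 if compliant)."""
        return max(0.0, self.gross_kg - self.limit_kg)


ticket = WeighingTicket("TRK-101", gross_kg=41_500, tare_kg=14_200, limit_kg=40_000)
print(f"{ticket.vehicle_id}: net {ticket.net_kg} kg, overload {ticket.overload_kg} kg")
```

The net figure feeds trade and inventory records, while the overload figure supports the safety and compliance checks listed above.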

Overall, weighbridges play a crucial role in various industries by providing accurate weight measurements that are essential for compliance, financial transactions, operational efficiency, and safety.

For more details, please contact us!

Website :- https://www.essaarweigh.com/

Contact No. :- 09310648864, 09810648864, 09313051477

Email :- [email protected]

#Weighbridge#fully electronic weighbridge#Weighbridge manufacturer in India#Weighbridge manufacturer in Delhi#Weighbridge manufacturer in Ghaziabad#Weighbridge manufacturer in Noida#Weighbridge manufacturer in Gurugram#Weighbridge manufacturer in Faridabad#Weighbridge supplier in India#Essaar weigh

Text

Essential Predictive Analytics Techniques

With the growing use of big data analytics, predictive analytics draws on a broad and highly diverse array of approaches to help enterprises forecast outcomes. Examples of predictive analytics techniques include deep learning, neural networks, machine learning, text analysis, and artificial intelligence.

Today's predictive analytics trends reflect existing big data trends. There is often little distinction between the software tools used in predictive analytics and those used in big data analytics. In short, big data and predictive analytics technologies are closely linked, if not identical.

Predictive analytics approaches are used to evaluate a person's creditworthiness, rework marketing strategies, predict the contents of text documents, forecast weather, and create safe self-driving cars with varying degrees of success.

Predictive Analytics: Meaning

Predictive analytics is the discipline of forecasting future trends by evaluating collected data. By understanding historical trends, organizations can adjust their marketing and operational strategies to serve customers better. Beyond these functional improvements, businesses benefit in crucial areas like inventory control and fraud detection.

Machine learning and predictive analytics are closely related. Whatever precise method a company uses, the overall procedure starts with an algorithm that learns from data where the result is already known (such as whether a customer made a purchase).

The training algorithm uses this data to learn how to forecast outcomes, eventually producing a model that is ready for use and can take additional input variables, such as the day and the weather.

Predictive analytics can significantly increase an organization's productivity, profitability, and flexibility. Let us look at the techniques it uses.

Techniques of Predictive Analytics

Making predictions based on existing and past data patterns requires using several statistical approaches, data mining, modeling, machine learning, and artificial intelligence. Machine learning techniques, including classification models, regression models, and neural networks, are used to make these predictions.

Data Mining

To find anomalies, trends, and correlations in massive datasets, data mining is a technique that combines statistics with machine learning. Businesses can use this method to transform raw data into business intelligence, including current data insights and forecasts that help decision-making.

Data mining involves sifting through redundant, noisy, unstructured data to find patterns that reveal useful information. Exploratory data analysis (EDA), one data mining methodology, involves examining datasets to identify and summarize their main characteristics, often using visual techniques.

EDA focuses on objectively probing the facts without any expectations; it does not entail hypothesis testing or the deliberate search for a solution. On the other hand, traditional data mining focuses on extracting insights from the data or addressing a specific business problem.
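A first EDA pass usually summarizes each column before any modeling. A minimal sketch with Python's `statistics` module, assuming a hypothetical list of order values:

```python
import statistics


def describe(values):
    """Summarize a numeric column's basic properties, as in a first EDA pass."""
    return {
        "count": len(values),
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values) if len(values) > 1 else 0.0,
        "min": min(values),
        "max": max(values),
    }


# Hypothetical order values from an online store.
order_values = [23.5, 41.0, 18.2, 39.9, 27.4, 310.0, 35.1]
for name, value in describe(order_values).items():
    print(f"{name:>6}: {value:,.2f}")
```

Here the mean (about 70.73) sits far above the median (35.1) because of a single large order, the kind of property EDA is meant to surface before any hypothesis is tested.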

Data Warehousing

Most large-scale data mining projects start with data warehousing. A data warehouse is a data management system designed to support business intelligence initiatives. It does this by centralizing and combining several data sources, such as transactional data from POS (point of sale) systems and application log files.

A data warehouse typically includes a relational database for storing and retrieving data, an ETL (Extract, Transform, Load) pipeline for preparing the data for analysis, statistical analysis tools, and client analysis tools for presenting the data to clients.
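The ETL stage can be sketched end to end with the Python standard library. This is a toy illustration, assuming a made-up POS export and an in-memory SQLite database standing in for the warehouse's relational store:

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a POS system (the extract source).
RAW_POS_CSV = """store,sku,qty,unit_price
north,A100,2,9.99
north,B200,,4.50
south,A100,1,9.99
"""


def extract(text):
    """Extract: read rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop incomplete rows, cast types, and derive revenue."""
    clean = []
    for row in rows:
        if not row["qty"]:
            continue  # skip rows with a missing quantity
        qty = int(row["qty"])
        price = float(row["unit_price"])
        clean.append((row["store"], row["sku"], qty, round(qty * price, 2)))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (store TEXT, sku TEXT, qty INTEGER, revenue REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_POS_CSV)), conn)
query = "SELECT store, SUM(revenue) FROM sales GROUP BY store ORDER BY store"
for store, revenue in conn.execute(query):
    print(store, revenue)
```

Keeping the three stages as separate functions makes each one testable on its own, which matters once real sources and cleaning rules grow.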

Clustering

One of the most often used data mining techniques is clustering, which divides a massive dataset into smaller subsets by categorizing objects based on their similarity into groups.

When consumers are grouped together based on shared purchasing patterns or lifetime value, customer segments are created, allowing the company to scale up targeted marketing campaigns.

Hard clustering assigns each data point directly to a single cluster. Soft clustering, instead of assigning a data point to one cluster, gives it a probability of belonging to each of one or more clusters.
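Hard clustering can be illustrated with k-means, one widely used algorithm (named here as an example; the text does not specify one). The sketch assumes hypothetical customer data as (annual spend in thousands, visits per month) pairs and, for simplicity, uses the first k points as starting centroids; practical implementations usually use smarter initialization such as k-means++.

```python
import math


def kmeans(points, k, iterations=20):
    """Hard clustering: assign every point to exactly one of k clusters."""
    centroids = [list(p) for p in points[:k]]  # naive init: first k points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Hypothetical customers: (annual spend in $k, store visits per month).
customers = [(1.0, 2.0), (8.0, 9.0), (1.5, 1.8), (8.5, 8.7), (1.2, 2.2), (7.8, 9.3)]
labels, centroids = kmeans(customers, k=2)
print(labels)
```

A soft clustering method such as a Gaussian mixture model would instead return, for each customer, a probability of belonging to each segment.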

Classification

Classification is a prediction approach that estimates the likelihood that a given item falls into a particular category. A binary classification problem has only two classes, while a multiclass classification problem has more than two.

Classification models produce a numeric score, usually called confidence, that reflects the likelihood that an observation belongs to a specific class. A predicted probability can be converted into a class label by choosing the class with the highest probability.

Spam filters, which categorize incoming emails as "spam" or "not spam" based on predetermined criteria, and fraud detection algorithms, which highlight suspicious transactions, are the most prevalent examples of classification in business use cases.
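Many classifiers output a raw score per class, which can be turned into probabilities and then into a label. A minimal sketch using the softmax function (one common choice; the scores below are hypothetical):

```python
import math


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtracting the max avoids overflow in exp
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def predict_label(scores):
    """Return the most probable class and its probability (the confidence)."""
    probs = softmax(scores)
    label = max(probs, key=probs.get)
    return label, probs[label]


# Hypothetical raw scores a spam filter might assign to one email.
label, confidence = predict_label({"spam": 2.1, "not spam": 0.3})
print(label, round(confidence, 3))
```

The same argmax step works unchanged for multiclass problems; only the number of scores grows.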

Regression Model

When a company needs to forecast a numeric value, such as how long a potential customer will wait before canceling an airline reservation or how much they will spend on auto payments over time, it can use a regression method.

For instance, linear regression is a popular regression technique that models a linear relationship between two variables. Regression algorithms of this type look for patterns that describe how one variable changes with another, such as the association between consumer spending and the amount of time spent browsing an online store.
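A minimal sketch of simple linear regression by ordinary least squares, assuming made-up data on browsing time and spending:

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical data: minutes spent browsing vs. amount spent in dollars.
minutes = [5, 10, 15, 20, 25]
spend = [12.0, 21.0, 33.0, 41.0, 52.0]
intercept, slope = fit_line(minutes, spend)
print(f"spend = {intercept:.2f} + {slope:.2f} * minutes")
print(f"predicted spend after 30 minutes: {intercept + slope * 30:.2f}")
```

On Python 3.10 and later, `statistics.linear_regression` computes the same slope and intercept.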

Neural Networks

Neural networks are biologically inspired data processing methods that use historical and present data to forecast future values. Their design, which mimics the brain's mechanisms for pattern recognition, lets them uncover intricate relationships buried in the data.

They have several layers: an input layer that takes in the data, one or more hidden layers that transform it, and an output layer that produces the result, such as a single prediction. They are frequently used for applications like image recognition and patient diagnostics.
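The layer structure can be shown with a tiny forward pass in plain Python. The weights below are hypothetical and untrained; they only illustrate how data flows from the input layer through a hidden layer (ReLU activation) to a single output (sigmoid activation). Training, usually by backpropagation, is omitted.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def dense(inputs, weights, biases, activation):
    """Fully connected layer: each neuron applies activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs):
    """Input layer -> one hidden layer (2 neurons) -> one output neuron."""
    # Hypothetical, untrained weights chosen only to show the data flow.
    hidden = dense(inputs, [[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2], relu)
    output = dense(hidden, [[1.2, -0.7]], [0.05], sigmoid)
    return output[0]  # a single prediction between 0 and 1


print(round(forward([1.0, 2.0]), 4))
```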

Decision Trees

A decision tree is a diagram that looks like an upside-down tree. Starting at the root, one walks through a continuously narrowing range of alternatives, each representing a possible decision outcome. Decision trees can handle many classification problems, and in predictive analytics they can also be applied to more complex ones.

An airline, for instance, would be interested in learning the optimal time to travel to a new location it intends to serve weekly. Along with knowing what pricing to charge for such a flight, it might also want to know which client groups to cater to. The airline can utilize a decision tree to acquire insight into the effects of selling tickets to destination x at price point y while focusing on audience z, given these criteria.
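Decision tree learners build the tree by repeatedly choosing the split that best separates the outcomes. A minimal sketch of one such step, using Gini impurity (the criterion used by CART-style trees; entropy is another common choice) and hypothetical ticket prices labelled by whether the flight sold out:

```python
def gini(labels):
    """Gini impurity: 0.0 when every label in the group is the same."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Find the threshold on one numeric feature that best separates labels.

    Rows with value <= threshold go left. Returns (threshold, weighted impurity).
    """
    n = len(labels)
    best = (None, float("inf"))
    for threshold in sorted(set(values))[:-1]:
        left = [lab for v, lab in zip(values, labels) if v <= threshold]
        right = [lab for v, lab in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical past flights on a route: ticket price and whether it sold out.
prices = [89, 99, 119, 129, 149, 169]
sold_out = ["yes", "yes", "yes", "no", "no", "no"]
print(best_split(prices, sold_out))
```

A full tree applies this search recursively to each side of the split and across every candidate feature (price, audience segment, departure day, and so on).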

Logistic Regression

Logistic regression is used to estimate the probability of a binary outcome, such as Yes or No, or Success or Failure. We can use this model when the dependent variable is binary (Yes/No).

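Because it models the log-odds of the outcome as a linear function of the predictors and converts them to probabilities through the non-linear logistic (sigmoid) function, it does not require a linear relationship between the predictors and the outcome probability, unlike a linear regression model. It does, however, typically need large sample sizes to produce reliable estimates.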

Ordinal logistic regression is used when the dependent variable is ordinal, and multinomial logistic regression is used when it has more than two unordered categories.
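A minimal sketch of binary logistic regression with one predictor, fitted by batch gradient descent on the log loss. The data (hours spent trialling a product and whether the user bought it) is hypothetical, and a library implementation would normally use a more efficient solver:

```python
import math


def sigmoid(z):
    """Logistic function mapping log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.5, epochs=10_000):
    """Fit P(y = 1 | x) = sigmoid(b0 + b1 * x) by gradient descent on log loss."""
    b0 = b1 = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean log loss uses (predicted probability - label).
        errors = [sigmoid(b0 + b1 * x) - y for x, y in zip(xs, ys)]
        b0 -= lr * sum(errors) / n
        b1 -= lr * sum(e * x for e, x in zip(errors, xs)) / n
    return b0, b1


# Hypothetical data: hours of product trial and whether the user bought (1) or not (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
bought = [0, 0, 0, 1, 0, 1, 1, 1]
b0, b1 = fit_logistic(hours, bought)
print(f"P(buy | 3 hours) = {sigmoid(b0 + b1 * 3.0):.2f}")
```

The decision boundary sits where the predicted probability equals 0.5, that is, at x = -b0 / b1. The classes overlap here (2.0 hours bought, 2.5 did not), which keeps the coefficients finite.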

Time Series Model

Time series models use past data to forecast the future behavior of variables. These models typically treat the data as a stochastic process Y(t), a sequence of random variables indexed by time.

A time series might have an annual frequency (annual budgets), quarterly (sales), monthly (expenses), or daily (stock prices). Using only the series' own past values to predict future values is called univariate time series forecasting; including exogenous variables makes it multivariate time series forecasting.

One of the most popular time series models, which can be built in Python, is ARIMA (AutoRegressive Integrated Moving Average). It is a forecasting technique based on the simple idea that a time series' past values carry valuable information about its future values.
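Full ARIMA fitting is usually left to a library (for example, the `ARIMA` class in the statsmodels module `statsmodels.tsa.arima.model`). The sketch below shows only the autoregressive idea at its simplest: an AR(1) model fitted by least squares, with no differencing or moving-average terms, on hypothetical monthly sales.

```python
def fit_ar1(series):
    """Least-squares fit of y[t] = const + phi * y[t-1], an AR(1) model."""
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    cov = sum((a - mean_prev) * (b - mean_curr) for a, b in zip(prev, curr))
    var = sum((a - mean_prev) ** 2 for a in prev)
    phi = cov / var
    const = mean_curr - phi * mean_prev
    return const, phi


def forecast_ar1(series, steps):
    """Forecast `steps` future values, feeding each prediction back in."""
    const, phi = fit_ar1(series)
    last, forecasts = series[-1], []
    for _ in range(steps):
        last = const + phi * last
        forecasts.append(last)
    return forecasts


# Hypothetical monthly sales figures.
sales = [120, 132, 128, 141, 137, 150, 146, 158]
print([round(v, 1) for v in forecast_ar1(sales, 3)])
```

Because this series trends upward, ARIMA's differencing step (the "I") would typically be applied before fitting the autoregressive part.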

In Conclusion

Although predictive analytics techniques have had their fair share of critiques, including the claim that computers or algorithms cannot foretell the future, predictive analytics is now extensively employed in virtually every industry. As we gather more and more data, we can anticipate future outcomes with a certain level of accuracy. This makes it possible for institutions and enterprises to make wise judgments.

Implementing predictive analytics is essential for any business seeking growth through data analytics services, since it has use cases in virtually every industry. Contact us at SG Analytics if you want to take full advantage of predictive analytics for your business growth.

Text

How AI is Reshaping the Future of Fintech Technology

In the rapidly evolving landscape of financial technology (fintech), the integration of artificial intelligence (AI) is reshaping the future in profound ways. From revolutionizing customer experiences to optimizing operational efficiency, AI is unlocking new opportunities for innovation and growth across the fintech ecosystem. As a pioneer in fintech software development, Xettle Technologies is at the forefront of leveraging AI to drive transformative change and shape the future of finance.

Fintech technology encompasses a wide range of solutions, including digital banking, payment processing, wealth management, and insurance. In each of these areas, AI is playing a pivotal role in driving innovation, enhancing competitiveness, and delivering value to businesses and consumers alike.

One of the key areas where AI is reshaping the future of fintech technology is in customer experiences. Through techniques such as natural language processing (NLP) and machine learning, AI-powered chatbots and virtual assistants are revolutionizing the way customers interact with financial institutions.

Xettle Technologies has pioneered the integration of AI-powered chatbots into its digital banking platforms, providing customers with personalized assistance and support around the clock. These chatbots can understand and respond to natural language queries, provide account information, offer product recommendations, and even execute transactions, all in real-time. By delivering seamless and intuitive experiences, AI-driven chatbots enhance customer satisfaction, increase engagement, and drive loyalty.

Moreover, AI is enabling financial institutions to gain deeper insights into customer behavior, preferences, and needs. Through advanced analytics and predictive modeling, AI algorithms can analyze vast amounts of data to identify patterns, trends, and correlations that were previously invisible to human analysts.

Xettle Technologies' AI-powered analytics platforms leverage machine learning to extract actionable insights from transaction data, social media activity, and other sources. By understanding customer preferences and market dynamics more accurately, businesses can tailor their offerings, refine their marketing strategies, and drive growth in targeted segments.

AI is also transforming the way financial institutions manage risk and detect fraud. Through the use of advanced algorithms and data analytics, AI can analyze transaction patterns, detect anomalies, and identify potential threats in real-time.
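The post does not describe how these fraud systems work internally. As a generic illustration only, one simple way to flag unusual transaction amounts for a single customer is the modified z-score, which measures distance from the median in units of the median absolute deviation (MAD) and is less distorted by extreme values than a mean-based z-score. The 3.5 threshold is a commonly cited rule of thumb, and the transaction history is hypothetical:

```python
import statistics


def mad_outliers(amounts, threshold=3.5):
    """Flag amounts whose modified z-score exceeds the threshold.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    of the absolute deviations from the median.
    """
    median = statistics.median(amounts)
    mad = statistics.median(abs(a - median) for a in amounts)
    if mad == 0:
        return []  # no spread to measure against
    return [a for a in amounts if abs(0.6745 * (a - median) / mad) > threshold]


# Hypothetical card transactions for one customer.
history = [42.0, 38.5, 45.0, 40.0, 39.9, 44.1, 41.3, 980.0]
print(mad_outliers(history))
```

A production system would combine many such signals (merchant, location, timing) rather than rely on amount alone.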

Xettle Technologies has developed sophisticated fraud detection systems that leverage AI to monitor transactions, identify suspicious activity, and prevent fraudulent transactions before they occur. By continuously learning from new data and adapting to emerging threats, these AI-powered systems provide businesses with robust security measures and peace of mind.

In addition to enhancing customer experiences and mitigating risks, AI is driving operational efficiency and innovation in fintech software development. Through techniques such as robotic process automation (RPA) and intelligent workflow management, AI-powered systems can automate routine tasks, streamline processes, and accelerate time-to-market for new products and services.

Xettle Technologies has embraced AI-driven automation across its software development lifecycle, from code generation and testing to deployment and maintenance. By automating repetitive tasks and optimizing workflows, Xettle's development teams can focus on innovation and value-added activities, delivering high-quality fintech solutions more efficiently and effectively.

Looking ahead, the integration of AI into fintech technology is expected to accelerate, driven by advancements in machine learning, natural language processing, and computational power. As AI algorithms become more sophisticated and data sources become more diverse, the potential for innovation in fintech software is virtually limitless.

For Xettle Technologies, this presents a unique opportunity to continue pushing the boundaries of what is possible in fintech innovation. By investing in research and development, forging strategic partnerships, and staying ahead of emerging trends, Xettle is committed to delivering cutting-edge solutions that empower businesses, drive growth, and shape the future of finance.

In conclusion, AI is reshaping the future of fintech technology in profound and exciting ways. From enhancing customer experiences and mitigating risks to driving operational efficiency and innovation, AI-powered solutions hold immense potential for businesses and consumers alike. As a leader in fintech software development, Xettle Technologies is at the forefront of this transformation, leveraging AI to drive meaningful change and shape the future of finance.

#Fintech Technologies#Fintech Software#Artificial Intelligence#Finance#Fintech Startups#technology#ecommerce#fintech#xettle technologies#writers on tumblr
