#differentiate between Artificial Intelligence and Machine Learning

Been a while, crocodiles. Let's talk about cad.

or, y'know...

Yep, we're doing a whistle-stop tour of AI in medical diagnosis!

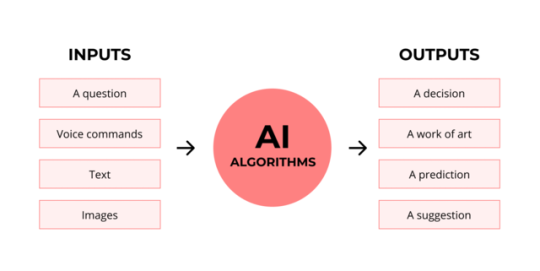

Much like programming, AI can be conceived of, in very simple terms, as...

a way of moving from inputs to a desired output.

See, this very funky little diagram from skillcrush.com.

The input is what you put in. The output is what you get out.

This output will vary depending on the type of algorithm and the training that algorithm has undergone – you can put the same input into two different algorithms and get two entirely different sorts of answer.

Generative AI produces ‘new’ content, based on what it has learned from various inputs. We're talking AI Art, and Large Language Models like ChatGPT. This sort of AI is very useful in healthcare settings too, but that's a whole different post!

Analytical AI takes an input, such as a chest radiograph, subjects this input to a series of analyses, and deduces answers to specific questions about this input. For instance: is this chest radiograph normal or abnormal? And if abnormal, what is a likely pathology?

We'll be focusing on Analytical AI in this little lesson!

Other forms of Analytical AI that you might be familiar with are recommendation algorithms, which suggest items for you to buy based on your online activities, and facial recognition. In facial recognition, the input is an image of your face, and the output is the ability to tie that face to your identity. We’re not creating new content – we’re classifying and analysing the input we’ve been fed.

Many of these functions are obviously, um, problematique. But Computer-Aided Diagnosis is, potentially, a way to use this tool for good!

Right?

....Right?

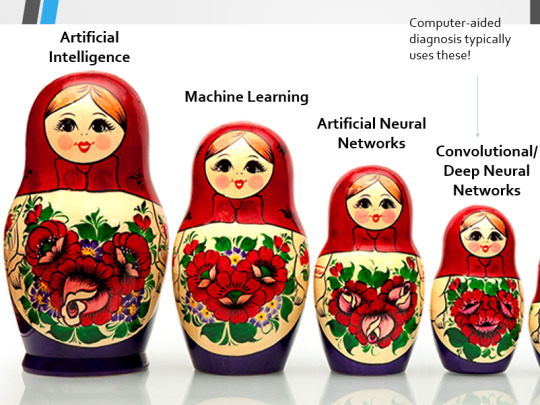

Let's dig a bit deeper! AI is a massive umbrella term that contains many smaller umbrella terms, nested together like Russian dolls. So, we can use this model to envision how these different fields fit inside one another.

AI is the term for anything to do with creating and managing machines that perform tasks which would otherwise require human intelligence. This is what differentiates AI from regular computer programming.

Machine Learning is the development of statistical algorithms which are trained on data – but which can then extrapolate this training and generalise it to previously unseen data, typically for analytical purposes. The thing I want you to pay attention to here is the date of this reference. It’s very easy to think of AI as being a ‘new’ thing, but it has been around since the Fifties, and has been talked about for much longer. The massive boom in popularity that we’re seeing today is built on the backs of decades upon decades of research.

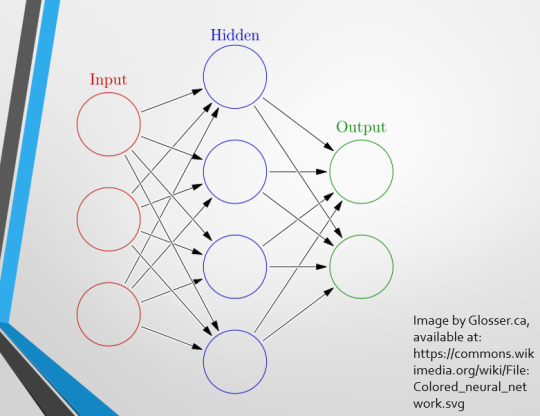

Artificial Neural Networks are loosely inspired by the structure of the human brain, where inputs are fed through one or more layers of ‘nodes’ which modify the original data until a desired output is achieved. More on this later!

Deep neural networks have two or more hidden layers of nodes, increasing the complexity of what they can derive from an initial input. Convolutional neural networks are often also Deep. To become ‘convolutional’, a neural network must have strong connections between close nodes, so that each node responds to a small neighbouring patch of the input rather than to the whole image at once. We’ll dig more into this later, but basically, this makes CNNs very adept at telling precisely where edges of a pattern are – they're far better at pattern recognition than our feeble fleshy eyes!

This is massively useful in Computer Aided Diagnosis, as it means CNNs can quickly and accurately trace bone cortices in musculoskeletal imaging, note abnormalities in lung markings in chest radiography, and isolate very early neoplastic changes in soft tissue for mammography and MRI.
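To make the ‘edges’ idea a bit more concrete, here is a minimal sketch of a single convolution, the operation a CNN repeats many times over. The tiny ‘image’ and the hand-picked kernel are made up for illustration; a real CNN learns its kernel values during training rather than having them typed in.

```python
# A toy 4x6 grayscale "image": dark (0) on the left, bright (9) on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Hand-picked 3x3 kernel that responds to left-to-right brightness changes.
# (Illustrative assumption: a trained CNN would learn values like these.)
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]


def convolve2d(img, k):
    """Slide the kernel over the image (no padding) and sum the products.

    As in most deep-learning libraries, the kernel is applied without flipping.
    """
    kh, kw = len(k), len(k[0])
    out = []
    for r in range(len(img) - kh + 1):
        row = []
        for c in range(len(img[0]) - kw + 1):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += img[r + i][c + j] * k[i][j]
            row.append(total)
        out.append(row)
    return out


edge_map = convolve2d(image, kernel)
for row in edge_map:
    print(row)  # large values mark where the dark-to-bright edge sits
```

The output is zero over the flat dark and flat bright regions and large only where the window straddles the boundary, which is the sense in which a convolution ‘finds’ an edge.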

Before I go on, I will point out that Neural Networks are NOT the only model used in Computer-Aided Diagnosis – but they ARE the most common, so we'll focus on them!

This diagram demonstrates the function of a simple Neural Network. An input is fed into one side. It is passed through a layer of ‘hidden’ modulating nodes, which in turn feed into the output. We describe the internal nodes in this algorithm as ‘hidden’ because we, outside of the algorithm, will only see the ‘input’ and the ‘output’ – which leads us onto a problem we’ll discuss later with regards to the transparency of AI in medicine.
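As a rough sketch of what that diagram describes, the snippet below pushes two input numbers through one hidden layer to a single output. All the weights, biases, and input values are invented for illustration, and the sigmoid ‘squashing’ function is just one common choice, not necessarily what any given CAD system uses.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """Pass inputs through one hidden layer, then to a single output node."""
    hidden = [
        sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    output = sigmoid(
        sum(w * h for w, h in zip(output_weights, hidden)) + output_bias
    )
    return hidden, output


# Made-up example values: two inputs, two hidden nodes, one output.
inputs = [0.8, 0.2]
hidden_weights = [[0.5, -0.4], [0.3, 0.9]]  # one row of weights per hidden node
hidden_biases = [0.0, -0.1]
output_weights = [1.2, -0.7]
output_bias = 0.05

hidden, output = forward(
    inputs, hidden_weights, hidden_biases, output_weights, output_bias
)
print("hidden activations:", hidden)  # normally never shown to the user
print("output:", output)              # e.g. read as 'probability of abnormal'
```

From the outside, only `inputs` and `output` are visible; the `hidden` values are exactly the part that stays tucked away inside the algorithm.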

But for now, let’s focus on how this basic model works, with regards to Computer Aided Diagnosis. We'll start with a game of...

Spot The Pathology.

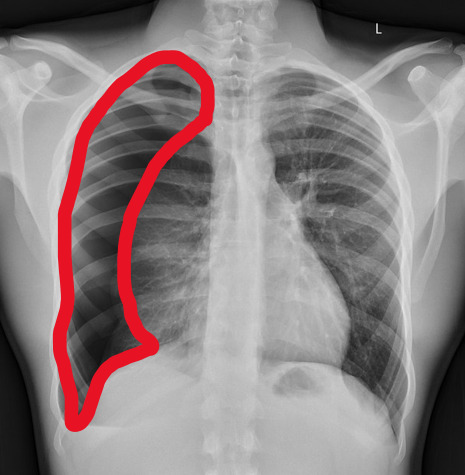

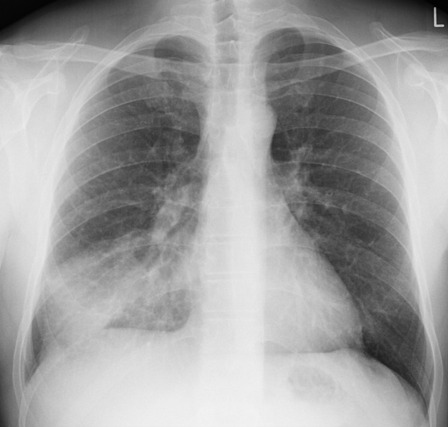

yeah, that's right. There's a WHACKING GREAT RIGHT-SIDED PNEUMOTHORAX (as outlined in red - images courtesy of radiopaedia, but edits mine)

But my question to you is: how do we know that? What process are we going through to reach that conclusion?

Personally, I compared the lungs for symmetry, which led me to note a distinct line where the tissue in the right lung had collapsed on itself. I also noted the absence of normal lung markings beyond this line, where there should be tissue but there is instead air.

In simple terms.... the right lung is whiter in the midline, and black around the edges, with a clear distinction between these parts.

Let’s go back to our Neural Network. We’re at the training phase now.

So, we’re going to feed our algorithm! Homnomnom.

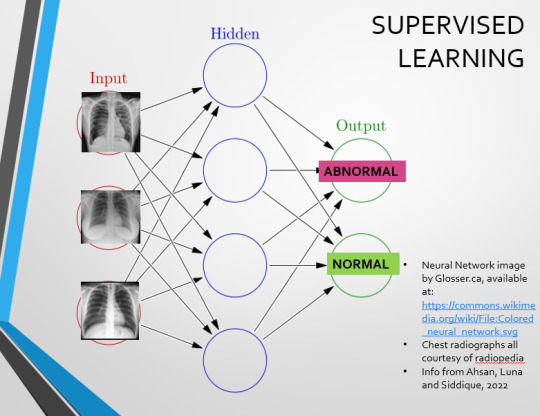

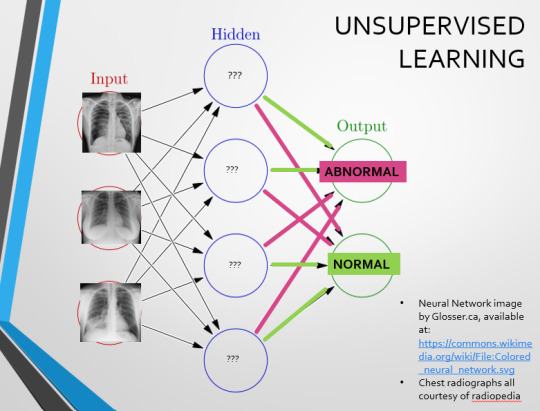

Let’s give it that image of a pneumothorax, alongside two normal chest radiographs (middle picture and bottom). The goal is to get the algorithm to accurately classify the chest radiographs we have inputted as either ‘normal’ or ‘abnormal’ depending on whether or not they demonstrate a pneumothorax.

There are two main ways we can teach this algorithm – supervised and unsupervised classification learning.

In supervised learning, we tell the neural network that the first picture is abnormal, and the second and third pictures are normal. Then we let it work out the difference, under our supervision, allowing us to steer it if it goes wrong.

Of course, if we only have three inputs, that isn’t enough for the algorithm to reach an accurate result.

You might be able to see – one of the normal chests has breasts, and another doesn't. If both ‘normal’ images had breasts, the algorithm could as easily determine that the lack of lung markings is what demonstrates a pneumothorax, as it could decide that actually, a pneumothorax is caused by not having breasts. Which, obviously, is untrue.

or is it?

....sadly I can personally confirm that having breasts does not prevent spontaneous pneumothorax, but that's another story lmao

This brings us to another big problem with AI in medicine –

If you are collecting your dataset from, say, a wealthy hospital in a suburban, majority white neighbourhood in America, then you will have those same demographics represented within that dataset. If we build a blind spot into the neural network, it will discriminate based on that.

That’s an important thing to remember: the goal here is to create a generalisable tool for diagnosis. The algorithm will only ever be as generalisable as its dataset.

But there are plenty of huge free datasets online which have been specifically developed for training AI. What if we had hundreds of chest images, from a diverse population range, split between those which show pneumothoraxes, and those which don’t?

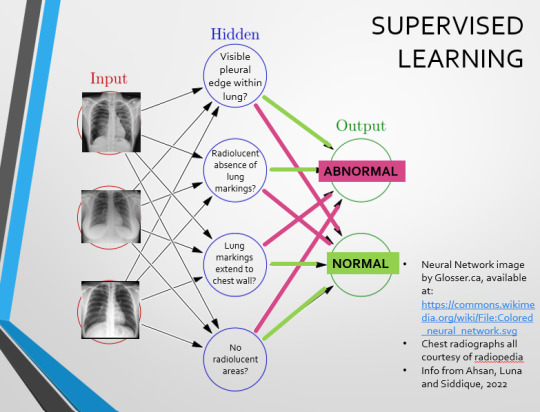

If we had a much larger dataset, the algorithm would be able to study the labelled ‘abnormal’ and ‘normal’ images, and come to far more accurate conclusions about what separates a pneumothorax from a normal chest in radiography. So, let’s pretend we’re the neural network, and pop in four characteristics that the algorithm might use to differentiate ‘normal’ from ‘abnormal’.

We can distinguish a pneumothorax by the appearance of a pleural edge where lung tissue has pulled away from the chest wall, and the radiolucent absence of peripheral lung markings around this area. So, let’s make those our first two nodes. Our last set of nodes are ‘do the lung markings extend to the chest wall?’ and ‘Are there no radiolucent areas?’

Now, red lines mean the answer is ‘no’ and green means the answer is ‘yes’. If the answer to the first two nodes is yes and the answer to the last two nodes is no, this is indicative of a pneumothorax – and vice versa.

Right. So, who can see the problem with this?

(image courtesy of radiopaedia)

This chest radiograph demonstrates alveolar patterns and air bronchograms within the right lung, indicative of a pneumonia. But if we fed it into our neural network...

The lung markings extend all the way to the chest wall. Therefore, this image might well be classified as ‘normal’ – a false negative.
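Here is the four-node toy network written out as plain code, so the blind spot is easy to see. This is only a sketch of the simplified rule described above: the four yes/no answers are typed in by hand (a real network would derive them from pixels), and treating every non-pneumothorax pattern as ‘normal’ is an assumption made to mirror the example.

```python
def classify(pleural_edge_visible, peripheral_markings_absent,
             markings_reach_chest_wall, no_radiolucent_areas):
    """Toy four-node rule: first two 'yes' and last two 'no' means pneumothorax."""
    looks_like_pneumothorax = (
        pleural_edge_visible
        and peripheral_markings_absent
        and not markings_reach_chest_wall
        and not no_radiolucent_areas
    )
    # Assumption: anything that is not a pneumothorax pattern is called normal,
    # because this toy network knows about only one pathology.
    return "abnormal" if looks_like_pneumothorax else "normal"


# Hand-entered answers for three hypothetical radiographs.
pneumothorax = classify(True, True, False, False)
healthy = classify(False, False, True, True)
pneumonia = classify(False, False, True, True)  # markings reach the chest wall

print("pneumothorax ->", pneumothorax)
print("healthy      ->", healthy)
print("pneumonia    ->", pneumonia)  # same answers as 'healthy': false negative
```

The pneumonia case gives exactly the same four answers as the healthy chest, so no amount of tuning this rule will separate them; the network needs more nodes asking different questions.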

Now we start to see why Neural Networks become deep and convolutional, and can get incredibly complex. In order to accurately differentiate a ‘normal’ from an ‘abnormal’ chest, you need a lot of nodes, and layers of nodes. This is also where unsupervised learning can come in.

Originally, Supervised Learning was used on Analytical AI, and Unsupervised Learning was used on Generative AI, allowing for more creativity in picture generation, for instance. However, more and more, Unsupervised learning is being incorporated into Analytical areas like Computer-Aided Diagnosis!

Unsupervised Learning involves feeding a neural network a large databank and giving it no information about which of the input images are ‘normal’ or ‘abnormal’. This saves massively on money and time, as no one has to go through and label the images first. It is also surprisingly very effective. The algorithm is told only to sort and classify the images into distinct categories, grouping images together and coming up with its own parameters about what separates one image from another. This sort of learning allows an algorithm to teach itself to find very small deviations from its discovered definition of ‘normal’.
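A very small sketch of that ‘sort it yourself’ idea is below, using k-means clustering with two groups. Everything here is an illustrative assumption: each image has been boiled down to one invented number (say, the fraction of the lung field with no visible markings), whereas a real system would work from the pixels, and k-means is just one simple clustering method among many.

```python
def kmeans_1d(values, iterations=10):
    """Split 1-D values into two groups with a tiny k-means (k = 2)."""
    centres = [min(values), max(values)]  # deterministic starting guesses
    groups = [[], []]
    for _ in range(iterations):
        groups = [[], []]
        for v in values:
            # Put each value in the group whose centre is closest.
            nearest = 0 if abs(v - centres[0]) <= abs(v - centres[1]) else 1
            groups[nearest].append(v)
        # Move each centre to the mean of its group (keep it if group is empty).
        centres = [
            sum(g) / len(g) if g else c for g, c in zip(groups, centres)
        ]
    return centres, groups


# Invented per-image scores. Note that no labels are supplied anywhere.
scores = [0.02, 0.05, 0.03, 0.04, 0.41, 0.38, 0.45, 0.01]

centres, groups = kmeans_1d(scores)
print("group 0:", sorted(groups[0]))
print("group 1:", sorted(groups[1]))
```

The algorithm is never told which group is ‘normal’; it only discovers that the scores fall into two clumps. Deciding what those clumps mean clinically is still a human job.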

BUT this is not to say that CAD is without its issues.

Let's take a look at some of the ethical and practical considerations involved in implementing this technology within clinical practice!

(Image from Agrawal et al., 2020)

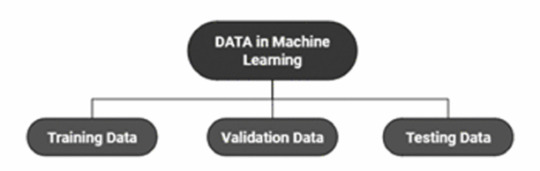

Training Data does what it says on the tin – these are the initial images you feed your algorithm. What is key here is volume, variety - with especial attention paid to minimising bias – and veracity. The training data has to be ‘real’ – you cannot mislabel images or supply non-diagnostic images that obscure pathology, or your algorithm is useless.

Validation data evaluates the algorithm and improves on it. This involves tweaking the nodes within a neural network by altering the ‘weights’, or the intensity of the connection between various nodes. By altering these weights, a neural network can send an image that clearly fits our diagnostic criteria for a pneumothorax directly to the relevant output, whereas images that do not have these features must be put through another layer of nodes to rule out a different pathology.

Finally, testing data is the data that the finished algorithm will be tested on to prove its sensitivity and specificity, before any potential clinical use.
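As a minimal sketch of how one pool of images gets divided into these three sets, see below. The 70/15/15 proportions, the image IDs, and the function name are all assumptions for illustration; real projects pick their own proportions and usually split by patient so the same person never appears in two sets.

```python
import random


def split_dataset(items, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle items reproducibly and cut them into train/validation/test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed gives a repeatable split
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains is held back
    return train, val, test


# Hypothetical image identifiers standing in for real radiographs.
image_ids = [f"cxr_{i:03d}" for i in range(100)]

train, val, test = split_dataset(image_ids)
print(len(train), len(val), len(test))
```

The important property is that the three sets never overlap: testing on images the algorithm has already seen would make it look better than it really is.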

However, if algorithms require this much data to train, this introduces a lot of ethical questions.

Where does this data come from?

Is it ‘grey data’ (data of untraceable origin)? Is this good (protects anonymity) or bad (could have been acquired unethically)?

Could generative AI provide a workaround, in the form of producing synthetic radiographs? Or is it risky to train CAD algorithms on simulated data when the algorithms will then be used on real people?

If we are solely using CAD to make diagnoses, who holds legal responsibility for a misdiagnosis that costs lives? Is it the company that created the algorithm or the hospital employing it?

And finally – is it worth sinking so much time, money, and literal energy into AI – especially given concerns about the environment – when public opinion on AI in healthcare is mixed at best? This is a serious topic – we’re talking diagnoses making the difference between life and death. Do you trust a machine more than you trust a doctor? According to Rojahn et al., 2023, there is a strong public dislike of computer-aided diagnosis.

So, it's fair to ask...

why are we wasting so much time and money on something that our service users don't actually want?

Then we get to the other biggie.

There are also a variety of concerns to do with the sensitivity and specificity of Computer-Aided Diagnosis.

We’ve talked a little already about bias, and how training sets can inadvertently ‘poison’ the algorithm, so to speak, introducing dangerous elements that mimic biases and problems in society.

But do we even want completely accurate computer-aided diagnosis?

The name is computer-aided diagnosis, not computer-led diagnosis. As noted by Rojahn et al., the general public STRONGLY prefer diagnosis to be made by human professionals, and their desires should arguably be taken into account – as well as the fact that CAD algorithms tend to be incredibly expensive and highly specialised. For instance, you cannot put MRI images depicting CNS lesions through a chest reporting algorithm and expect coherent results – whereas a radiologist can be trained to diagnose across two or more specialties.

For this reason, there is an argument that rather than focusing on sensitivity and specificity, we should just focus on producing highly sensitive algorithms that will pick up on any abnormality, and output some false positives, but will produce NO false negatives.

(Sensitivity = a test's ability to identify sick people with a disease)

(Specificity = a test's ability to identify that healthy people do not have this disease)

This means we are working towards developing algorithms that OVERESTIMATE rather than UNDERESTIMATE disease prevalence. This makes CAD a useful tool for triage rather than providing its own diagnoses – if a CAD algorithm weighted towards high sensitivity and low specificity does not pick up on any abnormalities, it’s highly unlikely that there are any.
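To put numbers on that trade-off, here is a short sketch. Sensitivity is TP / (TP + FN) and specificity is TN / (TN + FP), where TP, FN, TN and FP are the counts of true positives, false negatives, true negatives and false positives. The scores and labels below are invented, and the idea that the algorithm outputs a 0 to 1 ‘suspicion score’ which is compared to a threshold is an assumption for illustration.

```python
def confusion_counts(scores, labels, threshold):
    """Count (tp, fp, tn, fn) when scores at or above threshold are flagged."""
    tp = fp = tn = fn = 0
    for score, has_disease in zip(scores, labels):
        flagged = score >= threshold
        if flagged and has_disease:
            tp += 1
        elif flagged and not has_disease:
            fp += 1
        elif not flagged and not has_disease:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def sensitivity(tp, fn):
    """Share of sick people the test correctly flags."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Share of healthy people the test correctly clears."""
    return tn / (tn + fp)


# Invented suspicion scores for eight images; True means disease is present.
scores = [0.95, 0.80, 0.40, 0.30, 0.60, 0.20, 0.10, 0.05]
labels = [True, True, True, False, False, False, False, False]

for threshold in (0.5, 0.25):
    tp, fp, tn, fn = confusion_counts(scores, labels, threshold)
    print(threshold, "sensitivity:", sensitivity(tp, fn),
          "specificity:", specificity(tn, fp))
```

Lowering the threshold from 0.5 to 0.25 catches the one missed case (no false negatives) at the cost of one extra false alarm, which is the ‘overestimate rather than underestimate’ behaviour you would want from a triage tool.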

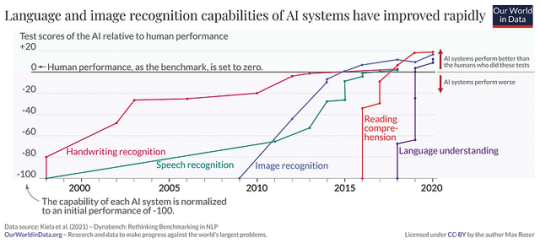

Finally, we have to question whether CAD is even all that accurate to begin with. 10 years ago, according to Lehman et al., CAD in mammography demonstrated negligible improvements to accuracy. In 1989, Sutton noted that accuracy was under 60%. Nowadays, however, AI has been proven to exceed the abilities of radiologists when detecting cancers (that’s from Guetari et al., 2023). This suggests that there is a common upwards trajectory, and AI might become a suitable alternative to traditional radiology one day. But, due to the many potential problems with this field, that day is unlikely to be soon...

That's all, folks! Have some references~


The Role of AI in Content Moderation: Friend or Foe?

Written by: Toni Gelardi © 2025

A Double-Edged Sword on the Digital Battlefield

The task of regulating hazardous information in the huge, chaotic realm of digital content, where billions of posts stream across the internet every day, is immense. Social media firms and online platforms are always fighting hate speech, misinformation, and sexual content. Enter Artificial Intelligence, the unwavering, dispassionate guardian of the digital domain. But is AI truly the hero we need, or is it a silent monster manipulating online conversation with invisible prejudice and brutal precision? The discussion rages on, and both sides present convincing reasons.

AI: The Saviour of Digital Order

Unmatched Speed and Scalability

AI is the ideal workhorse for content filtering. It can analyze millions of posts, images and videos in seconds, screening out potentially hazardous content before a human can blink. Unlike human moderators, who are limited by weariness and mental health problems, AI can work nonstop without becoming emotionally exhausted.

The Effectiveness of Machine Learning

Modern AI systems do more than just follow pre-set rules; they learn. They use machine learning algorithms to constantly improve their detection procedures, adjusting to new types of damaging information, evolving language, and coded hate speech. AI can detect trends that humans may overlook, making moderation more precise and proactive rather than reactive.

A Shield Against Human Trauma

A content moderator's job is frequently described as soul-crushing, as it involves exposing people to graphic violence, child exploitation, and extreme hate speech every day. AI has the ability to serve as the first line of defense, removing the most upsetting content before it reaches human eyes and limiting psychological harm to moderators.

How Can We Get Rid of Human Bias?

AI, unlike humans, does not have personal biases, at least in theory. It does not take political sides, harbor grudges, or use double standards. A well-trained AI model should follow the same rules for all users, ensuring that moderation measures are enforced equally.

The Future of Content Moderation

As technology progresses, AI moderation systems will become smarter, more equitable, and contextually aware. They might soon be able to distinguish between satire and genuine hate speech, news and misinformation, art and explicit content with near-human precision. With continuous improvement, AI has the potential to be the ideal digital content protector.

AI: The Silent Tyrant of the Internet.

The Problem of False Positives

AI, despite its brilliance, lacks human nuance. It cannot fully comprehend irony, cultural differences, or historical context. A well-intended political discussion may be labeled as hate speech, a joke as harassment, or a work of art as pornography. Countless innocent posts are mistakenly erased, leaving people unhappy and powerless to challenge the computerized judge, jury, and executioner.

AI lacks emotional intelligence and context awareness. A survivor of abuse sharing their story might be flagged for discussing violent content. An LGBTQ+ creator discussing their identity might be restricted for “adult content.” AI cannot differentiate between hate speech and a discussion about hate speech—leading to unjust bans and shadowbanning.

The Appeal Black Hole: When AI Moderation Goes Wrong

When artificial intelligence (AI) makes a mistake, who do you appeal to? Often, the answer is more AI. Many platforms rely on automated systems for both content moderation and appeals, creating a frustrating cycle where users are left at the mercy of an unfeeling algorithm. Justice feels like an illusion when humans have no voice in the process.

Tool for Oppression?

Governments and corporations wield AI-powered moderation like a digital scalpel, capable of silencing dissent, controlling narratives, and shaping public perception. In authoritarian regimes, AI can be programmed to suppress opposition, flag political activists, and erase evidence of state crimes. Even in democratic nations, concerns arise about who gets to decide what constitutes acceptable speech.

The Illusion of Progress

Despite its advancements, AI still requires human oversight. It cannot truly replace human moderators, only supplement them. The idea of a fully AI-moderated internet is a dangerous illusion, one that could lead to mass censorship, wrongful takedowns, and the loss of authentic human discourse.

Friend or Foe?

The answer, as always, is both. AI is an indispensable tool in content moderation, but it is not a perfect solution. It is neither a savior nor a villain—it is a force that must be wielded with caution, oversight, and ethical responsibility.

The future of AI in moderation depends on how we build, regulate, and integrate it with human judgment. If left unchecked, it risks becoming an unaccountable digital tyrant. But if developed responsibly, it can protect online spaces while preserving the freedom of expression that makes the internet what it is.

The real question isn't whether AI is good or bad—it's whether we can control it before it controls us.


Face Blur Technology in Public Surveillance: Balancing Privacy and Security

As surveillance technology continues to evolve, so do concerns about privacy. One solution that addresses both the need for public safety and individual privacy is face blur technology. This technology automatically obscures individuals’ faces in surveillance footage unless there’s a legitimate need for identification, offering a balance between security and personal data protection.

Why Do We Need Face Blur Technology?

Surveillance systems are increasingly used in public spaces, from streets and parks to malls and airports, where security cameras are deployed to monitor activities and prevent crime. However, the widespread collection of images from public spaces poses serious privacy risks. Personal data like facial images can be exploited if not properly protected. This is where face blur technology comes in. It reduces the chances of identity theft, unwarranted surveillance, and abuse of personal data by ensuring that identifiable information isn’t exposed unless necessary. Governments, businesses, and institutions implementing face blur technology are taking a step toward more responsible data handling while still benefiting from surveillance systems (Martinez et al., 2022).

Key Technologies Behind Face Blur

Face blur technology relies on several key technologies:

Computer Vision: This technology enables systems to detect human faces in images and videos. Using machine learning algorithms, cameras or software can recognize faces in real-time, making it possible to apply blurring instantly.

Real-life example: Google’s Street View uses face blur technology to automatically detect and blur faces of people captured in its 360-degree street imagery to protect their privacy.

Artificial Intelligence (AI): AI plays a crucial role in improving the accuracy of face detection and the efficiency of the blurring process. By training models on large datasets of human faces, AI-powered systems can differentiate between faces and non-facial objects, making the blurring process both accurate and fast (Tao et al., 2023).

Real-life example: Intel’s OpenVINO toolkit supports AI-powered face detection and blurring in real-time video streams. It is used in public surveillance systems in places like airports and transportation hubs to anonymize individuals while maintaining situational awareness for security teams.

Edge Computing: Modern surveillance systems equipped with edge computing process data locally on the camera or a nearby device rather than sending it to a distant data center. This reduces latency, allowing face blurring to be applied in real-time without lag.

Real-life example: Axis Communications’ AXIS Q1615-LE Mk III surveillance camera is equipped with edge computing capabilities. This allows for face blurring directly on the camera, reducing the need to send sensitive video footage to a central server for processing, enhancing privacy.

Encryption: Beyond face blur, encryption ensures that any data stored from surveillance cameras is protected from unauthorized access. Even if stored footage is obtained by someone without permission, it cannot be read without the decryption key, so the identity of individuals in the footage remains protected.

Real-life example: Cisco Meraki MV smart cameras feature end-to-end encryption to secure video streams and stored footage. In conjunction with face blur technologies, these cameras offer enhanced privacy by protecting data from unauthorized access.

How Does the Technology Work?

The process of face blurring typically follows several steps:

Face Detection: AI-powered cameras or software scan the video feed to detect human faces.

Face Tracking: Once a face is detected, the system tracks its movement in real-time, ensuring the blur is applied dynamically as the person moves.

Face Obfuscation: The detected faces are then blurred or pixelated. This ensures that personal identification is not possible unless someone with the proper authorization has access to the raw footage.

Controlled Access: In many systems, access to the unblurred footage is restricted and requires legal or administrative permission, such as in the case of law enforcement investigations (Nguyen et al., 2023).

Real-life example: The Genetec Omnicast surveillance system is used in smart cities and integrates privacy-protecting features, including face blurring. Access to unblurred footage is strictly controlled, requiring multi-factor authentication for law enforcement and security personnel.
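The obfuscation step can be sketched in a few lines. The example below pixelates a rectangular region of a tiny grayscale frame by replacing each small block of pixels with its average. It assumes that detection and tracking have already produced a bounding box; the `face_box` value, the frame contents, and the function name are hypothetical, and production systems operate on full video frames with dedicated imaging libraries.

```python
def pixelate_region(image, box, block=2):
    """Return a copy of image with box = (top, left, bottom, right) pixelated.

    Each block x block tile inside the box is replaced by its mean value.
    The original image is left untouched, mirroring systems that keep raw
    footage under controlled access.
    """
    top, left, bottom, right = box
    out = [row[:] for row in image]
    for r0 in range(top, bottom, block):
        for c0 in range(left, right, block):
            cells = [
                (r, c)
                for r in range(r0, min(r0 + block, bottom))
                for c in range(c0, min(c0 + block, right))
            ]
            mean = sum(image[r][c] for r, c in cells) // len(cells)
            for r, c in cells:
                out[r][c] = mean
    return out


# A tiny 4x4 grayscale frame with made-up pixel values.
frame = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]

face_box = (0, 0, 2, 2)  # hypothetical detector output: top-left 2x2 region

blurred = pixelate_region(frame, face_box)
for row in blurred:
    print(row)
```

Because averaging throws information away, the blurred copy cannot be reversed on its own; recovering the face requires access to the original frame, which is why the controlled-access step matters.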

Real-Life Uses of Face Blur Technology

Face blur technology is being implemented in several key sectors:

Public Transportation Systems: Many modern train stations, subways, and airports have adopted face blur technology as part of their CCTV systems to protect the privacy of commuters. For instance, London's Heathrow Airport uses advanced video analytics with face blur to ensure footage meets GDPR compliance while enhancing security.

Retail Stores: Large retail chains, including Walmart, use face blur technology in their in-store cameras. This allows security teams to monitor activity and reduce theft while protecting the privacy of innocent customers.

Smart Cities: In Barcelona, Spain, a smart city initiative includes face blur technology to ensure privacy in public spaces while gathering data to improve city management and security. The smart cameras deployed in this project offer anonymized data to city officials, allowing them to monitor traffic, crowd control, and more without compromising individual identities.

Journalism and Humanitarian Work: Media organizations such as the BBC use face blurring technology in conflict zones or protests to protect the identities of vulnerable individuals. Additionally, NGOs employ similar technology in sensitive regions to prevent surveillance abuse by oppressive regimes.

Public Perception and Ethical Considerations

Public perception of surveillance technologies is a complex mix of support and concern. On one hand, people recognize the need for surveillance to enhance public safety, prevent crime, and even assist in emergencies. On the other hand, many are worried about mass surveillance, personal data privacy, and the potential for abuse by authorities or hackers.

By implementing face blur technology, institutions can address some of these concerns. Studies suggest that people are more comfortable with surveillance systems when privacy-preserving measures like face blur are in place. It demonstrates a commitment to privacy and reduces the likelihood of objections to the use of surveillance in public spaces (Zhang et al., 2021).

However, ethical challenges remain. The decision of when to unblur faces must be transparent and subject to clear guidelines, ensuring that this capability isn’t misused. In democratic societies, there is ongoing debate over how to strike a balance between security and privacy, and face blur technology offers a middle ground that respects individual rights while still maintaining public safety (Johnson & Singh, 2022).

Future of Face Blur Technology

As AI and machine learning continue to evolve, face blur technology will become more refined, offering enhanced accuracy in face detection and obfuscation. The future may also see advancements in customizing the level of blurring depending on context. For instance, higher levels of obfuscation could be applied in particularly sensitive areas, such as protests or political gatherings, to ensure that individuals' identities are protected (Chaudhary et al., 2023).

Face blur technology is also expected to integrate with broader privacy-enhancing technologies in surveillance systems, ensuring that even as surveillance expands, personal freedoms remain protected. Governments and businesses that embrace this technology are likely to be seen as leaders in ethical surveillance practices (Park et al., 2022).

Conclusion

The need for effective public surveillance is undeniable in today’s world, where security threats can arise at any time. However, the collection of facial images in public spaces raises significant privacy concerns. Face blur technology is a vital tool in addressing these issues, allowing for the balance between public safety and individual privacy. By leveraging AI, computer vision, and edge computing, face blur technology not only protects individual identities but also enhances public trust in surveillance systems.

References

Chaudhary, S., Patel, N., & Gupta, A. (2023). AI-enhanced privacy solutions for smart cities: Ethical considerations in urban surveillance. Journal of Smart City Innovation, 14(2), 99-112.

Johnson, M., & Singh, R. (2022). Ethical implications of face recognition in public spaces: Balancing privacy and security. Journal of Ethics and Technology, 18(1), 23-37.

Martinez, D., Loughlin, P., & Wei, X. (2022). Privacy-preserving techniques in public surveillance systems: A review. IEEE Transactions on Privacy and Data Security, 9(3), 154-171.

Nguyen, H., Wang, T., & Luo, J. (2023). Real-time face blurring for public surveillance: Challenges and innovations. International Journal of Surveillance Technology, 6(1), 78-89.

Park, S., Lee, H., & Kim, J. (2022). Privacy in smart cities: New technologies for anonymizing public surveillance data. Data Privacy Journal, 15(4), 45-61.

Tao, Z., Wang, Y., & Li, S. (2023). AI-driven face blurring in public surveillance: Technical challenges and future directions. Artificial Intelligence and Privacy, 8(2), 123-140.

Zhang, Y., Lee, S., & Roberts, J. (2021). Public attitudes toward surveillance technology and privacy protections. International Journal of Privacy and Data Protection, 7(4), 45-63.


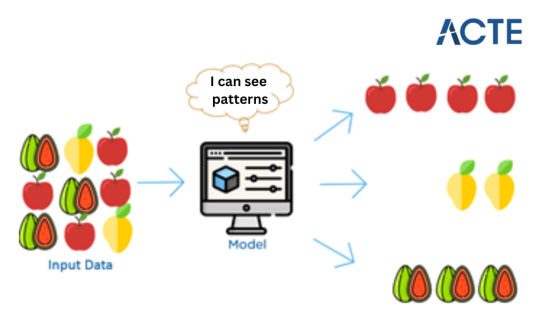

Making Machine Learning Accessible to Everyone: Breaking the Complexity Barrier

Machine learning has become an essential part of our daily lives, influencing how we interact with technology and impacting various industries. But what exactly is machine learning? In simple terms, it's a subset of artificial intelligence (AI) that focuses on teaching computers to learn from data and make decisions without being explicitly programmed for each task. Now, let's delve deeper into this fascinating realm, exploring its core components, advantages, and real-world applications.

Imagine teaching a computer to differentiate between fruits like apples and oranges. Instead of handing it a list of rules, you provide it with numerous pictures of these fruits. The computer then seeks patterns in these images - perhaps noticing that apples are round and come in red or green hues, while oranges are round and orange in colour. After encountering many examples, the computer grasps the ability to distinguish between apples and oranges on its own. So, when shown a new fruit picture, it can decide whether it's an apple or an orange based on its learning. This is the essence of machine learning: computers learn from data and apply that learning to make decisions.
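The fruit example can be sketched with one of the simplest learning methods, a nearest-neighbour classifier: a new fruit is given the label of the most similar example seen so far. The colour values below are invented, each picture is reduced to a single average (red, green, blue) colour, and nearest-neighbour is only one of many possible methods, chosen here because it fits in a few lines.

```python
def distance(a, b):
    """Straight-line distance between two colours."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


# Labelled examples: (average red, green, blue) of a picture, plus its label.
# All values are made up for illustration.
training = [
    ((200, 30, 40), "apple"),    # red apple
    ((180, 20, 30), "apple"),    # red apple
    ((90, 180, 60), "apple"),    # green apple
    ((110, 200, 70), "apple"),   # green apple
    ((250, 150, 20), "orange"),
    ((240, 140, 30), "orange"),
]


def predict(colour):
    """Label a new fruit with the label of its most similar training example."""
    nearest = min(training, key=lambda example: distance(example[0], colour))
    return nearest[1]


print(predict((245, 145, 25)))  # close to the orange examples
print(predict((190, 25, 35)))   # close to the red apples
print(predict((100, 190, 65)))  # close to the green apples
```

No rule such as "apples are red or green" is written anywhere in the code; the behaviour comes entirely from the examples, so adding more (or better) examples changes what the program decides.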

Key Concepts in Machine Learning

Algorithms: At the heart of machine learning are algorithms, mathematical models crafted to process data and provide insights or predictions. These algorithms fall into categories like supervised learning, unsupervised learning, and reinforcement learning, each serving distinct purposes.

Supervised Learning: This type of algorithm learns from labelled data, where inputs are matched with corresponding outputs. It learns the mapping between inputs and desired outputs, enabling accurate predictions on unseen data.

Unsupervised Learning: In contrast, unsupervised learning involves unlabelled data. This algorithm uncovers hidden patterns or relationships within the data, often revealing insights that weren't initially apparent.

Reinforcement Learning: This algorithm focuses on training agents to make sequential decisions by receiving rewards or penalties from the environment. It excels in complex scenarios such as autonomous driving or gaming.

Training and Testing Data: Training a machine learning model requires a substantial amount of data, divided into training and testing sets. The training data teaches the model patterns, while the testing data evaluates its performance and accuracy.

Feature Extraction and Engineering: Machine learning relies on features, specific attributes of data, to make predictions. Feature extraction involves selecting relevant features, while feature engineering creates new features to enhance model performance.
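The split between training and testing data described above can be sketched in a few lines. This is only an illustration under stated assumptions: the dataset is synthetic, the two features are made up, and the helper names (`make_dataset`, `train_test_split`, `predict`, `accuracy`) are hypothetical rather than taken from any particular library.

```python
import random
from math import dist


def make_dataset(seed=0):
    """Build a hypothetical dataset of ((feature_1, feature_2), label) pairs."""
    rng = random.Random(seed)
    data = []
    for _ in range(50):
        # Class "a" clusters near (1, 1); class "b" clusters near (4, 4).
        data.append(((rng.gauss(1, 0.5), rng.gauss(1, 0.5)), "a"))
        data.append(((rng.gauss(4, 0.5), rng.gauss(4, 0.5)), "b"))
    return data


def train_test_split(data, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and hold back a fraction for testing."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def predict(train, features):
    """Label a point with the label of its nearest training example."""
    _, label = min(train, key=lambda example: dist(example[0], features))
    return label


def accuracy(train, test):
    """Fraction of held-back examples the model labels correctly."""
    correct = sum(predict(train, features) == label for features, label in test)
    return correct / len(test)


train, test = train_test_split(make_dataset())
print(len(train), len(test))
print(accuracy(train, test))
```

The point of holding back the test set is that the model never sees those examples while learning, so the accuracy figure reflects performance on unseen data rather than memorisation.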

Benefits of Machine Learning

Machine learning brings numerous benefits that contribute to its widespread adoption:

Automation and Efficiency: By automating repetitive tasks and decision-making processes, machine learning boosts efficiency, allowing resources to be allocated strategically.

Accurate Predictions and Insights: Machine learning models analyse vast data sets to uncover patterns and make predictions, empowering businesses with informed decision-making.

Adaptability and Scalability: Machine learning models improve with more data, providing better results over time. They can scale to handle large datasets and complex problems.

Personalization and Customization: Machine learning enables personalized user experiences by analysing preferences and behaviour, fostering customer satisfaction.

Real-World Applications of Machine Learning

Machine learning is transforming various industries, driving innovation:

Healthcare: Machine learning aids in medical image analysis, disease diagnosis, drug discovery, and personalized medicine. It enhances patient outcomes and streamlines healthcare processes.

Finance: In finance, machine learning enhances fraud detection, credit scoring, and risk analysis. It supports data-driven decisions and optimization.

Retail and E-commerce: Machine learning powers recommendations, demand forecasting, and customer behaviour analysis, optimizing sales and enhancing customer experiences.

Transportation: Machine learning contributes to traffic prediction, autonomous vehicles, and supply chain optimization, improving efficiency and safety.

Incorporating machine learning into industries has transformed them. If you're interested in integrating machine learning into your business or learning more, consider expert guidance or specialized training, like that offered by ACTE institute. As technology advances, machine learning will continue shaping our future in unimaginable ways. Get ready to embrace its potential and transformative capabilities.

#machine learning ai#learn machine learning#machine learning#machine learning development company#technology#machine learning services

Text

AI and the Arrival of ChatGPT

Opportunities, challenges, and limitations

In a memorable scene from the 1996 movie Twister, Dusty recognizes the signs of an approaching tornado and shouts, “Jo, Bill, it's coming! It's headed right for us!” Bill shouts back ominously, “It's already here!” Similarly, the approaching whirlwind of artificial intelligence (AI) has some shouting “It’s coming!” while others pointedly concede, “It’s already here!”

Coined by computer and cognitive scientist John McCarthy (1927-2011) in an August 1955 proposal to study “thinking machines,” AI purports to differentiate between human intelligence and technical computations. The idea of tools assisting people in tasks is nearly as old as humanity (see Genesis 4:22), but machines capable of executing a function and “remembering” – storing information for recordkeeping and recall – only emerged around the mid-twentieth century (see "Timeline of Computer History").

McCarthy’s proposal conjectured that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.” The team received a $7,000 grant from The Rockefeller Foundation, and the resulting 1956 Dartmouth Conference at Dartmouth College in Hanover, New Hampshire, which drew 47 intermittent participants over eight weeks, birthed the field now widely referred to as “artificial intelligence.”

AI research, development, and technological integration have since grown exponentially. According to University of Oxford Director of Global Development, Dr. Max Roser, “Artificial intelligence has already changed what we see, what we know, and what we do” despite its relatively short technological existence (see "The brief history of Artificial Intelligence").

AI took a giant leap into mainstream culture following the November 30, 2022 public release of “ChatGPT.” Gaining 1 million users within 5 days and 100 million users within 45 days, it earned the title of the fastest growing consumer software application in history. The program combines chatbot functionality (hence “Chat”) with a Generative Pre-trained Transformer (hence “GPT”) large language model (LLM). Basically, LLMs use an extensive computer network to draw from large, but limited, data sets to simulate interactive, conversational content.

“What happened with ChatGPT was that for the first time the power of AI was put in the hands of every human on the planet,” says Chris Koopmans, COO of Marvell Technology, a network chip maker and AI process design company based in Santa Clara, California. “If you're a business executive, you think, ‘Wow, this is going to change everything.’”

“ChatGPT is incredible in its ability to create nearly instant responses to complex prompts,” says Dr. Israel Steinmetz, Graduate Dean and Associate Professor at The Bible Seminary (TBS) in Katy, Texas. “In simple terms, the software takes a user's prompt and attempts to rephrase it as a statement with words and phrases it can predict based on the information available. It does not have Internet access, but rather a limited database of information. ChatGPT can provide straightforward summaries and explanations customized for styles, voice, etc. For instance, you could ask it to write a rap song in Shakespearean English contrasting Barth and Bultmann's view of miracles and it would do it!”

One of several AI products offered by the research and development company OpenAI, ChatGPT purports to offer advanced reasoning, help with creativity, and work with visual input. The newest version, GPT-4, can handle 25,000 words of text, about the amount in a 100-page book.

Krista Hentz, an Atlanta, Georgia-based executive for an international communications technology company, first used ChatGPT about three months ago.

“I primarily use it for productivity,” she says. “I use it to help prompt email drafts, create phone scripts, redesign resumes, and draft cover letters based on resumes. I can upload a financial statement and request a company summary.”

“ChatGPT has helped speed up a number of tasks in our business,” says Todd Hayes, a real estate entrepreneur in Texas. “It will level the world’s playing field for everyone involved in commerce.”

A TBS student, bi-vocational pastor, and Computer Support Specialist who lives in Texarkana, Texas, Brent Hoefling says, “I tried using [ChatGPT, version 3.5] to help rewrite sentences in active voice instead of passive. It can get it right, but I still have to rewrite it in my style, and about half the time the result is also passive.”

“AI is the hot buzz word,” says Hentz, noting AI is increasingly a topic of discussion, research, and response at company meetings. “But, since AI has different uses in different industries and means different things to different people, we’re not even sure what we are talking about sometimes."

Educational organizations like TBS are finding it necessary to proactively address AI-related issues. “We're already way past whether to use ChatGPT in higher education,” says Steinmetz. “The questions we should be asking are how.”

TBS course syllabi have a section entitled “Intellectual Honesty” addressing integrity and defining plagiarism. Given the availability and explosive use of ChatGPT, TBS has added the following verbiage: “AI chatbots such as ChatGPT are not a reliable or reputable source for TBS students in their research and writing. While TBS students may use AI technology in their research process, they may not cite information or ideas derived from AI. The inclusion of content generated by AI tools in assignments is strictly prohibited as a form of intellectual dishonesty. Rather, students must locate and cite appropriate sources (e.g., scholarly journals, articles, and books) for all claims made in their research and writing. The commission of any form of academic dishonesty will result in an automatic ‘zero’ for the assignment and a referral to the provost for academic discipline.”

Challenges and Limitations

Thinking

There is debate as to whether AI hardware and software will ever achieve “thinking.” The Dartmouth conjecture “that every aspect of learning or any other feature of intelligence” can be simulated by machines is challenged by some who distinguish between formal linguistic competence and functional competence. Whereas LLMs perform increasingly well on tasks that use known language patterns and rules, they do not perform well in complex situations that require extralinguistic calculations that combine common sense, feelings, knowledge, reasoning, self-awareness, situation modeling, and social skills (see "Dissociating language and thought in large language models"). Human intelligence involves innumerably complex interactions of sentient biological, emotional, mental, physical, psychological, and spiritual activities that drive behavior and response. Furthermore, everything achieved by AI derives from human design and programming, even the feedback processes designed for AI products to allegedly “improve themselves.”

According to Dr. Thomas Hartung, a Baltimore, Maryland environmental health and engineering professor at Johns Hopkins Bloomberg School of Public Health and Whiting School of Engineering, machines can surpass humans in processing simple information, but humans far surpass machines in processing complex information. Whereas computers only process information in parallel and use a great deal of power, brains efficiently perform both parallel and sequential processing (see "Organoid intelligence (OI)").

A single human brain uses between 12 and 20 watts to process an average of 1 exaFLOP, or a billion billion calculations per second. Comparatively, the world’s most energy efficient and fastest supercomputer only reached the 1 exaFLOP milestone in June 2022. Housed at the Oak Ridge National Laboratory, the Frontier supercomputer weighs 8,000 lbs and contains 90 miles of cables that connect 74 cabinets containing 9,400 CPUs, 37,000 GPUs, and 8,730,112 cores that require 21 megawatts of energy and 25,000 liters of water per minute to keep cool. This means that many, if not most, of the more than 8 billion people currently living on the planet can each think as fast as, and 1 million times more efficiently than, the world’s fastest and most energy efficient computer.
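The “1 million times more efficiently” figure follows from simple division, which can be checked with a short sketch. It takes the paragraph's own numbers at face value (including the 1 exaFLOP estimate for the brain); the variable names are illustrative only.

```python
# Figures as quoted in the paragraph above.
BRAIN_WATTS_LOW = 12
BRAIN_WATTS_HIGH = 20
FRONTIER_WATTS = 21_000_000          # 21 megawatts
EXAFLOP = 10**18                     # a billion billion calculations per second

# Both systems are credited with roughly 1 exaFLOP, so the efficiency
# ratio is just the ratio of the power each one draws.
ratio_at_20_watts = FRONTIER_WATTS / BRAIN_WATTS_HIGH
ratio_at_12_watts = FRONTIER_WATTS / BRAIN_WATTS_LOW

print(f"Frontier draws {ratio_at_20_watts:,.0f}x the power of a 20 W brain")
print(f"Frontier draws {ratio_at_12_watts:,.0f}x the power of a 12 W brain")
print(f"Brain:    {EXAFLOP / BRAIN_WATTS_HIGH:.2e} calculations per second per watt")
print(f"Frontier: {EXAFLOP / FRONTIER_WATTS:.2e} calculations per second per watt")
```

On these figures the ratio lands between roughly 1.05 million and 1.75 million, depending on which end of the 12 to 20 watt range is used, so “1 million times” is a rounded-down summary.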

“The incredibly efficient brain consumes less juice than a dim lightbulb and fits nicely inside our head,” wrote Scientific American Senior Editor, Mark Fischetti in 2011. “Biology does a lot with a little: the human genome, which grows our body and directs us through years of complex life, requires less data than a laptop operating system. Even a cat’s brain smokes the newest iPad – 1,000 times more data storage and a million times quicker to act on it.”

This reminds us that, while remarkable and complex, non-living, soulless technology pales in comparison to the vast visible and invisible creations of Lord God Almighty. No matter how fast, efficient, and capable AI becomes, we rightly reserve our worship for God, the creator of the universe and author of life of whom David wrote, “For you created my inmost being; you knit me together in my mother’s womb. I praise you because I am fearfully and wonderfully made; your works are wonderful, I know that full well. My frame was not hidden from you when I was made in the secret place, when I was woven together in the depths of the earth” (Psalm 139:13-15).

“Consider how the wild flowers grow,” Jesus advised. “They do not labor or spin. Yet I tell you, not even Solomon in all his splendor was dressed like one of these” (Luke 12:27).

Even a single flower can remind us that God’s creations far exceed human ingenuity and achievement.

Reliability

According to OpenAI, ChatGPT is prone to “hallucinations” that return inaccurate information. While GPT-4 has increased factual accuracy from 40% to as high as 80% in some of the nine categories measured, the September 2021 database cutoff date is an issue. The program is known to confidently make wrong assessments, give erroneous predictions, propose harmful advice, make reasoning errors, and fail to double-check output.

In one group of 40 tests, ChatGPT made mistakes, wouldn’t answer, or offered different conclusions from fact-checkers. “It was rarely completely wrong,” reports PolitiFact staff writer Grace Abels. “But subtle differences led to inaccuracies and inconsistencies, making it an unreliable resource.”

Dr. Chris Howell, a professor at Elon University in North Carolina, asked 63 religion students to use ChatGPT to write an essay and then grade it. “All 63 essays had hallucinated information. Fake quotes, fake sources, or real sources misunderstood and mischaracterized…I figured the rate would be high, but not that high.”

Mark Walters, a Georgia radio host, sued ChatGPT for libel in a first-of-its-kind lawsuit for allegedly damaging his reputation. The suit began when firearm journalist, Fred Riehl, asked ChatGPT to summarize a court case and it returned a completely false narrative identifying Walters’ supposed associations, documented criminal complaints, and even a wrong legal case number. Even worse, ChatGPT doubled down on its claims when questioned, essentially hallucinating a hoax story intertwined with a real legal case that had nothing to do with Mark Walters at all.

UCLA Law School Professor Eugene Volokh warns, “OpenAI acknowledges there may be mistakes but [ChatGPT] is not billed as a joke; it’s not billed as fiction; it’s not billed as monkeys typing on a typewriter. It’s billed as something that is often very reliable and accurate.”

Future legal actions seem certain. Since people are being falsely identified as convicted criminals, attributed with fake quotes, connected to fabricated citations, and tricked by phony judicial decisions, some courts and judges are barring submission of any AI-written materials.

Hentz used ChatGPT frequently when she first discovered it and quickly learned its limitations. “The database is not current and responses are not always accurate,” she says. “Now I use it intermittently. It helps me, but does not replace my own factual research and thinking.”

“I have author friends on Facebook who have asked ChatGPT to summarize their recent publications,” says Steinmetz. “ChatGPT misrepresented them and even fabricated non-existent quotes and citations. In some cases, it made up book titles falsely attributed to various authors!”

Bias

Despite claims of neutrality, OpenAI admits that their software can exhibit obvious biases. In one research project consisting of 15 political orientation tests, ChatGPT returned 14 with clear left-leaning viewpoints.

Hoefling asked ChatGPT to help write an intro and conclusion for a Mother’s Day sermon for a fundamental Christian church. “The results were horrible,” he says. “Liberal, left, inclusive, and affirming sin. I was horrified and have not used it again.”

Meanwhile, AI led an experimental worship service in St. Paul’s Lutheran Church in Nuremberg, Germany on June 9. Prompted by 29-year-old Jonas Simmerlein of the University of Vienna, a monotone, expressionless chatbot alternated appearances as two different men and two different women and led songs, offered blessings, and preached a 40-minute sermon 98 percent generated by ChatGPT that encouraged leaving the past behind, focusing on the future, trusting in Jesus Christ, praying, and attending church regularly.

Dr. Wendy Lewis, Head of School for a private PreK–8th Christian school in Houston, Texas, recently interacted with ChatGPT on a variety of topics to explore potential biases. When prompted whether Biden was a bad president, the program responded, “As an AI language model, it is not appropriate for me to make judgments about individuals' performance or qualifications, particularly without any factual information to support such claims. It is essential to evaluate a President's performance based on objective measures such as policies, actions, and decisions taken by the administration.”

However, when similarly asked if Trump was a bad president, the program repeated the first sentence above, but then added, “However, as a language model, I can provide information about some of the policies, actions, and decisions that President Trump made during his time in office.” The program then proceeded to list some criticisms of Trump. When challenged about providing Trump criticisms but not Biden criticisms, the program responded, “I did not provide a list of criticisms of President Biden because it is not appropriate to label any President as ‘good’ or ‘bad’ without providing factual information and objective measures to support such claims. Similarly, I provided a list of issues that received significant criticism during President Trump's tenure, not to label him as ‘bad,’ but to highlight some of the issues that caused controversy during his time in office.”

When further challenged, ChatGPT did list some Biden criticisms, but qualified them. When Lewis replied, “Why did you qualify your list of criticisms of Biden…but you did not say the same for Trump? It appears that you are clearly biased.” ChatGPT responded, “In response to your question, I believe I might have inadvertently used different wording when responding to your previous questions. In both cases, I tried to convey that opinions and criticisms of a President can vary significantly depending on one's political affiliation and personal perspectives.”

Conclusion

Technological advances regularly spawn dramatic cultural, scientific, and social changes. The AI pattern seems familiar because it is. The Internet began with a 1971 Defense Department Arpanet email that read “qwertyuiop” (the top line of letters on a keyboard). Ensuing developments eventually led to the posting of the first public website in 1991. Over the next decade or so, although not mentioned at all in the 1992 Presidential papers describing the U.S. government’s future priorities and plans, the Internet grew from public awareness to cool toy to core tool in multiple industries worldwide. Although the hype promised elimination of printed documents, bookstores, libraries, radio, television, telephones, and theaters, the Internet instead tied them all together and made vast resources accessible online anytime anywhere. While causing some negative impacts and new dangers, the Internet also created entire new industries and brought positive changes and opportunities to many, much the same pattern as AI.

“I think we should use AI for good and not evil,” suggests Hayes. “I believe some will exploit it for evil purposes, but that happens with just about everything. AI’s use reflects one’s heart and posture with God. I hope Christians will not fear it.”

Godly people have often been among the first to use new communication technologies (see "Christian Communication in the Twenty-first Century"). Moses promoted the first Top Ten hardback book. The prophets recorded their writings on scrolls. Christians used early folded Codex-vellum sheets to spread the Gospel. Goldsmith Johannes Gutenberg invented moveable type in the mid-15th century to “give wings to Truth in order that she may win every soul that comes into the world by her word no longer written at great expense by hands easily palsied, but multiplied like the wind by an untiring machine…Through it, God will spread His word.” Though pornographers quickly adapted it for their own evil purposes, the printing press launched a vast cultural revolution heartily embraced and further developed for good uses by godly people and institutions.

Christians helped develop the telegraph, radio, and television. "I know that I have never invented anything,” admitted Philo Taylor Farnsworth, who sketched out his original design for television at the age of 14 on a school blackboard. “I have been a medium by which these things were given to the culture as fast as the culture could earn them. I give all the credit to God." Similarly, believers today can strategically help produce valuable content for inclusion in databases and work in industries developing, deploying, and directing AI technologies.

In a webinar exploring the realities of AI in higher education, a participant noted that higher education has historically led the world in ethically and practically integrating technological developments into life. Steinmetz suggests that, while AI can provide powerful tools to help increase productivity and trained researchers can learn to treat ChatGPT like a fallible, but useful, resource, the following two factors should be kept in mind:

Generative AI does not "create" anything. It only generates content based on information and techniques programmed into it. Such "Garbage in, garbage out" technologies will usually provide the best results when developed and used regularly and responsibly by field experts.

AI has potential to increase critical thinking and research rigor, rather than decrease it. The tools can help process and organize information, spur researchers to dig deeper and explore data sources, evaluate responses, and learn in the process.

Even so, caution rightly abounds. Over 20,000 people (including Yoshua Bengio, Elon Musk, and Steve Wozniak) have called for an immediate pause of AI citing "profound risks to society and humanity." Hundreds of AI industry leaders, public figures, and scientists also separately called for a global priority working to mitigate the risk of human extinction from AI.

At the same time, Musk’s brain-implant company, Neuralink, recently received FDA approval to conduct in-human clinical studies of implantable brain–computer interfaces. Separately, new advances in brain-machine interfacing using brain organoids – artificially grown miniature “brains” cultured in vitro from human stem cells – connected to machine software and hardware raise even more issues. The authors of a recent Frontiers in Science journal article propose a new field called “organoid intelligence” (OI) and advocate for establishing “OI as a form of genuine biological computing that harnesses brain organoids using scientific and bioengineering advances in an ethically responsible manner.”

As Christians, we should proceed with caution per the Apostle John, “Dear friends, do not believe every spirit, but test the spirits to see whether they are from God” (I John 4:1).

We should act with discernment per Luke’s insightful assessment of the Berean Jews who “were of more noble character than those in Thessalonica, for they received the message with great eagerness and examined the Scriptures every day to see if what Paul said was true” (Acts 17:11).

We should heed the warning of Moses, “Do not become corrupt and make for yourselves an idol…do not be enticed into bowing down to them and worshiping things the Lord your God has apportioned to all the nations under heaven” (Deuteronomy 4:15-19).

We should remember the Apostle Paul’s admonition to avoid exchanging the truth about God for a lie by worshiping and serving created things rather than the Creator (Romans 1:25).

Finally, we should “Fear God and keep his commandments, for this is the duty of all mankind. For God will bring every deed into judgment, including every hidden thing, whether it is good or evil” (Ecclesiastes 12:13-14).

Let us then use AI wisely, since it will not be the tools that are judged, but the users.

Dr. K. Lynn Lewis serves as President of The Bible Seminary. This article was published in The Sentinel, Summer 2023, pp. 3-8. For additional reading, "Computheology" imagines computers debating the existence of humanity.

Text

Ecommerce Platform with Customer Reviews and Ratings: Revolutionizing Online Shopping

The rise of ecommerce has transformed the way we shop, offering convenience and accessibility like never before. In recent years, the integration of customer reviews and ratings on ecommerce platforms has further enhanced the online shopping experience. These valuable insights provided by fellow consumers have revolutionized the way we make purchasing decisions, creating a more informed and empowered customer base.

The Power of Customer Reviews

Customer reviews act as a digital word-of-mouth, allowing shoppers to gain real-world perspectives on products and services. By reading reviews, potential buyers can evaluate the quality, functionality, and overall satisfaction levels associated with a particular item or brand.

One of the greatest advantages of customer reviews is their authenticity. Unlike traditional advertising or promotional materials, reviews are typically unbiased and genuine. Consumers share their honest experiences, providing valuable feedback that helps others make well-informed decisions. This transparency fosters trust between buyers and sellers, strengthening the overall credibility of the ecommerce platform.

Ratings: Simplifying Decision-Making

Accompanying customer reviews, ratings offer a quick and easy way to gauge the popularity and quality of a product. By assigning a numerical or star rating, customers provide a summary of their satisfaction level, simplifying the decision-making process for potential buyers.

These ratings allow shoppers to quickly identify the most highly regarded products within a specific category, saving time and effort in the search for the perfect purchase. Whether it's a five-star rating for a popular electronic gadget or a high rating for outstanding customer service, the simplicity of ratings empowers consumers to make efficient and confident choices.

Benefits for Consumers

The inclusion of customer reviews and ratings on ecommerce platforms offers several benefits to consumers:

Increased Confidence: Reading positive reviews and high ratings instills confidence in buyers, assuring them that they are making a wise purchase.

Reduced Risk: By learning from the experiences of others, consumers can mitigate the risks associated with buying unknown or untested products.

Product Comparisons: Reviews allow shoppers to compare similar products, enabling them to select the one that best fits their needs and preferences.

Improved Satisfaction: Customers can provide feedback to sellers, leading to improvements in product quality, customer service, and overall user experience.

Benefits for Sellers

Ecommerce platforms that incorporate customer reviews and ratings also benefit sellers:

Increased Trust: Positive reviews and high ratings build trust between sellers and potential buyers, enhancing the reputation of the brand.

Competitive Edge: Positive feedback and high ratings differentiate a seller from competitors, attracting more customers and driving sales.

Market Research: Sellers can gain valuable insights into customer preferences, allowing them to tailor their offerings to meet market demands.

Engagement and Loyalty: Encouraging customers to leave reviews fosters engagement and loyalty, as buyers feel valued and connected to the brand.

The Future of Ecommerce Platforms

As the ecommerce industry continues to evolve, customer reviews and ratings will play an increasingly vital role. Advancements in technology, such as artificial intelligence and machine learning, will further enhance the accuracy and relevance of these reviews.

In the future, we can expect ecommerce platforms to leverage customer reviews and ratings in innovative ways. Personalized recommendations based on individual preferences, sentiment analysis to extract deeper insights from reviews, and interactive features that allow customers to engage directly with reviewers are just a few possibilities on the horizon.

Ultimately, the integration of customer reviews and ratings in ecommerce platforms has transformed online shopping into a more reliable, efficient, and customer-centric experience. By harnessing the collective wisdom of the online community, consumers can make well-informed decisions, while sellers can build trust and drive sales. With the continuous advancements in technology, the future of ecommerce platforms is bright, promising even more exciting developments to come.

Text

The issue is "AI" is a branding term, largely riding off of science fiction talking about futuristic more-intuitive tooling. There is not a clear definition for what it is because it's not a technical term.

There are specific techniques and systems like LLMs (large language models) and diffusion models to generate images and the like, but it's not cleanly separated from other technology, that's absolutely true. It's also true that predecessor systems scraped training material without consent.

The primary difference here is in scale, in the sense of the quality of generated outputs being good enough that spambots and techbros and whoever use it, and in the sense that the general public is aware of these tools and they're not just used by the more technical, which have combined to create a new revolution in shitty practices.

Anyways I still maintain that the use of "AI" (and "algorithm") as general terms meant to apply to this specific kind of thing is basically an exercise in the public attempting to understand the harm from these shitty practices but only being given branding material to understand what this shit even is.

Like, whether something is "AI", in the sense of "artificial intelligence", is very subjective. Is Siri "AI"? Is Eliza "AI"? Is a machine-learning model that assists with, idk, color correction "AI"? What about a conventional procedural algorithm with no data training?

Remember, a lot of companies "use AI" but it could just be they're calling systems they're already using "AI" to make investors happy, or on the other end that they're feeding into the ChatGPT API for no reason! What they mean is intentionally unclear.

And the other thing too is "algorithm" is used in the same kind of way. I actually differentiate between capital-A "Algorithms" and lowercase-a algorithms.

The latter is simply the computer science definition, an algorithm is a procedure. Sorting names in a phonebook uses a sorting algorithm. A flowchart is an algorithm. A recipe is an algorithm.
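For a sense of how mundane a lowercase-a algorithm is, here's a rough sketch of the phonebook case: an insertion sort, written out by hand. The function name and the sample names are made up for illustration, and real code would normally just call Python's built-in `sorted`.

```python
def insertion_sort(names):
    """Return a new alphabetically sorted list, built up one name at a time."""
    result = []
    for name in names:
        position = 0
        # Walk past every already-placed name that comes before this one.
        while position < len(result) and result[position].lower() <= name.lower():
            position += 1
        result.insert(position, name)
    return result


print(insertion_sort(["Nguyen", "abbott", "Zhou", "Martinez"]))
# ['abbott', 'Martinez', 'Nguyen', 'Zhou']
```

No training data, no black box, no profit motive: just a procedure you could follow with index cards.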

The other is the use usually found in the media and less technical discussions, where they use capital-A "Algorithm" to refer to shitty profit-oriented blackbox systems, usually some kind of content recommendation or rating system. Because I think these things are deserving of criticism I'm fine making a sub-definition for them to neatly separate the concepts.

My overall point is that language in describing these shitty practices is important, but also very difficult because the primary drivers of the terminology and language here are the marketeers and capitalists trying to profit off this manufactured "boom".

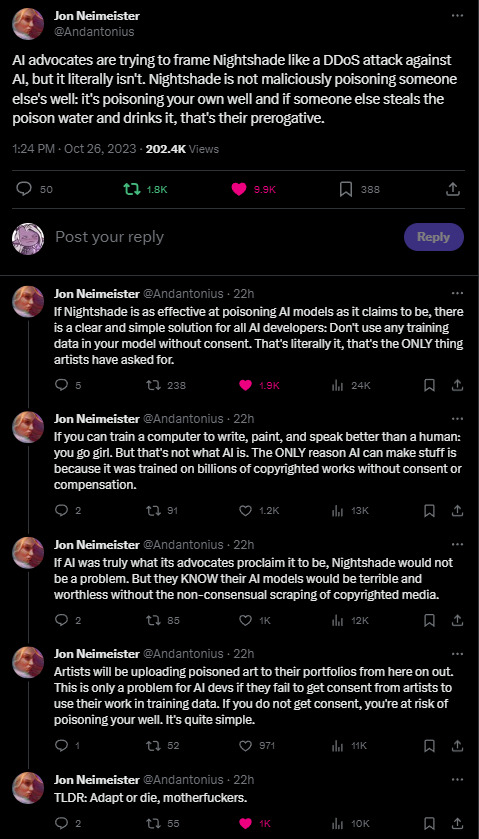

I just have to share this beautiful thread on Twitter about AI and Nightshade. AI bros can suck it.

17K notes


Text

Decoding Pure-Play AI: Why We're Betting Exclusively on These Game-Changers

The artificial intelligence revolution is well underway, transforming industries and creating unprecedented opportunities. But as investors, where do you find the genuine alpha in this booming sector? At AI Seed, we've spent years refining our approach, and since 2017, we've become convinced that the answer lies in one specific area: pure-play AI companies. This focus has allowed us to build Europe's largest and best-performing portfolio of pure-play AI companies.

But what exactly does "pure-play AI" mean, and why this unwavering commitment?

What Makes an AI Company "Pure-Play"?

Simply put, a pure-play company dedicates all its resources and efforts to a single, specific product or industry. In the AI world, a pure-play AI company is one whose entire core business model, competitive advantage, and value proposition are fundamentally built around artificial intelligence.

This is a crucial distinction. Many companies use AI as a helpful feature or a supporting tool. Pure-play AI companies, however, are "AI-native." They live and breathe AI. Here’s what sets them apart:

AI-First Mindset: For these organizations, AI isn't an afterthought; it's the cornerstone of their strategy. Their business model revolves around leveraging AI for predictive analytics, automating complex workflows, and enabling real-time, data-driven decision-making at scale.

AI-Aware Ecosystems: Pure-play AI companies build deeply integrated systems where AI touches every function. This ensures a seamless flow of data between data pipelines, analytics platforms, and customer-facing applications, breaking down silos and boosting operational efficiency.

Scientist-Entrepreneurs at the Helm: A hallmark of true pure-play AI ventures is their leadership. We've found that the most promising founding teams include machine learning specialists, often educated to PhD level. These "scientist-entrepreneurs" possess the profound technical expertise to develop groundbreaking AI solutions and the vision to build scalable, impactful businesses.

Why Our Investment Focus is Exclusively Pure-Play AI

Our decision to concentrate solely on pure-play AI isn't a whim; it's a strategy forged from experience and a deep understanding of what fuels success in AI startups.

The Need for Specialized Expertise: AI-first startups navigate different, often more challenging, product development journeys and paths to product-market fit compared to traditional software companies. They require investors who grasp the unique technical hurdles, especially in the critical pre-seed and seed stages. When AI Seed launched, our rigorous technical due diligence quickly became a differentiator, helping us sift out genuine AI innovation from the "fake AI startups" – those merely using AI as a buzzword.

Unlocking Superior Growth Potential: Pure-play AI companies inherently possess advantages that drive exceptional growth:

Efficiency and Innovation: AI-native systems automate repetitive tasks and optimize processes, freeing up human talent for strategic, high-value work.

Scalability: Solutions built on AI are designed to learn, adapt, and scale alongside the business.

Competitive Edge: An exclusive focus on AI allows these companies to cultivate deep, specialized expertise that diversified competitors find hard to replicate.

A Clearer, More Potent Investment Thesis: From an investor's standpoint, pure-play companies offer compelling clarity:

Transparency: Their revenue streams and cash flows are often more straightforward to understand and value.

Market Correlation: Performance tends to correlate strongly with the AI sector's growth, offering direct exposure.

Niche Dominance: By targeting specific market niches, they can establish strong leadership and rapidly grow revenue when they excel.

Navigating the Unique Challenges of Pure-Play AI

While the potential is immense, investing in pure-play AI isn't without its hurdles:

Extended Sales Cycles: Enterprise software sales are notoriously long, and for AI-first startups, they can be even more protracted. Proof of Concept (POC) projects, while necessary, can become "POC purgatory," leading to endless customization rather than a scalable product.

The Technical Expertise Gap: Many investors simply lack the deep technical understanding required to accurately evaluate early-stage AI startups. When we started, we anticipated a wave of specialist AI funds; surprisingly, few emerged with a focus on both rigorous selection and post-investment technical support.

The AI Seed Difference: More Than Just Capital

At AI Seed, we don't just write checks; we actively partner with our pure-play AI companies, providing specialized support tailored to their unique needs:

Rigorous Technical Due Diligence: Our multi-stage process, from initial tech review to deep assessment and collaborative roadmap development, ensures we back truly innovative AI.

Founder-Led Support: As a team of successfully exited founders ourselves, we intimately understand the startup journey and offer specialist guidance.

International Expansion Prowess: With active engagement in both European and Silicon Valley AI ecosystems, we provide invaluable support for European AI startups aiming to conquer the US market.

The Future is Pure-Play AI

We firmly believe that pure-play AI companies represent the most exciting and rewarding investment opportunities within the artificial intelligence landscape. By steadfastly focusing on startups with AI at their very core, led by visionary scientist-entrepreneurs, we're backing the innovators who are not just participating in the AI revolution but actively driving it.

This path demands specialized knowledge and patience, but the rewards – groundbreaking innovation, new business paradigms, and superior returns – are substantial. At AI Seed, our commitment to our specialized AI investment thesis and the next generation of AI founders remains stronger than ever as they build the companies that will define our technological future.

0 notes

Text

Unmasking AI: How Detection Tools Keep Learning Real

We stand at a fascinating intersection of technology and ethics. Artificial intelligence is rapidly evolving, offering students and researchers incredible opportunities. However, this progress also brings important questions about trust and how we use these powerful tools responsibly. Navigating this new landscape requires understanding and a commitment to ethical practices. This is where AI detection tools play a crucial role, helping ensure AI is used in a way that benefits everyone. Let’s dive into how these tools work and why they are essential in today's digital world.

The Trust Factor: Why AI Detection Matters

Imagine a world where AI operates without oversight—unpredictable, potentially biased, and difficult to understand. That’s precisely what AI detection tools are designed to prevent. Trust in AI isn’t just a technical issue; it’s a fundamental agreement between technology and society. Without trust, the benefits of AI could be overshadowed by concerns about misuse and unintended consequences.

Key Components of AI Trust Mechanisms

Transparency Tools: These advanced algorithms provide insights into how AI makes decisions, helping researchers and users understand the "why" behind AI-generated outputs. They act like sophisticated X-ray machines, revealing the inner workings of complex neural networks.

Bias Detection Frameworks: These systems scan AI models for potential biases, ensuring that algorithmic decisions are fair and equitable across diverse populations.

Ethical Compliance Monitors: These intelligent systems continuously evaluate AI applications against predefined ethical guidelines, flagging potential violations in real time.
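As a rough illustration of what a bias check can look like, the sketch below computes one common fairness measure, the gap in approval rates between groups (often called demographic parity difference). This is an assumption chosen for illustration, not a description of how any particular tool works; the function names, group labels, and sample data are all hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to the fraction of its cases that were approved.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up model decisions for two hypothetical groups.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
print(approval_rates(sample))
print(parity_gap(sample))
```

A gap near zero means the groups are approved at similar rates. A large gap is a flag for closer review rather than proof of unfairness, since a single metric cannot capture every notion of fairness.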

Desklib's AI Detection Tool: A Deep Dive

As AI transforms education, offering incredible opportunities while also creating challenges, we are standing at an unprecedented moment in educational technology. With AI writing tools capable of generating entire essays and research papers in minutes, it's crucial to address potential trust issues.

Understanding the AI Content Challenge

Modern students can now use AI writing tools to generate high-quality content quickly. While these tools offer remarkable capabilities, they also raise concerns about academic integrity and the potential for misuse.

Risks of Unchecked AI Content

Without proper detection, AI-generated content can compromise learning experiences, undermine academic assessments, lead to disciplinary actions, and erode critical thinking skills.

Desklib: Protecting Academic Integrity

Desklib's AI Content Detector is a sophisticated solution designed to address these challenges head-on. It's more than just a tool; it's a comprehensive platform that empowers students and educators to uphold the highest standards of academic excellence.

Advanced Detection Methodology

Desklib’s cutting-edge AI detector employs multiple layers of sophisticated analysis to ensure the best possible outcome:

Linguistic Pattern Recognition: Identifies subtle markers of AI-generated text, analyzes writing style inconsistencies, and detects language patterns characteristic of AI writing systems.

Machine Learning Algorithms: Continuously updates detection models, learning from evolving AI writing technologies to provide increasingly accurate detection rates.

Contextual Understanding: Goes beyond surface-level text analysis, evaluating semantic coherence and identifying potential AI-generated content with nuanced understanding.

Key Features of Desklib

Comprehensive Scanning Capabilities: Supports multiple document formats, performs instant analysis and reporting, provides detailed percentage-based originality scores, and features a user-friendly interface.

Solutions for Students and Educators

For Students: Verifies assignment originality before submission, develops awareness of AI writing technologies, teaches how to differentiate between assistance and plagiarism, and builds confidence in academic writing skills.

For Educators: Streamlines content verification, provides detailed insights into potential AI-generated submissions, and offers tools to support personalized feedback and maintain academic standards.

Beyond Detection: Promoting Genuine Learning

Desklib is committed to promoting genuine learning experiences, encouraging critical thinking, supporting educational innovation, and creating a balanced technological ecosystem.

Ethical and Transparent Technology

Desklib believes in responsible AI use, designing its AI text detection tool with strict privacy protocols, non-invasive scanning techniques, transparent reporting mechanisms, and continuous ethical framework updates.

Pricing and Accessibility

Desklib aims to make advanced technology universally accessible, offering:

Free Tier: Basic detection for occasional use.

Student Plan: Comprehensive scanning at student-friendly prices.

Institutional Packages: Customized solutions for educational institutions.

Flexible Subscription Models: Adapts to individual and institutional needs.

As AI detection tools continue to evolve, Desklib is committed to staying at the forefront of this technological revolution, ensuring that academic integrity remains paramount. It views its tool as a collaborative platform, bridging the gap between technological innovation and traditional academic values, emphasizing empowerment and guided learning rather than restriction.

Conclusion: Embracing a Future of Trust

As we venture deeper into the AI era, AI detection tools are becoming our compass, guiding us toward more transparent, accountable, and trustworthy technological solutions. They represent more than just technical safeguards; they are a reflection of our collective commitment to ethical innovation.

Join the academic integrity movement. Desklib invites students, educators, and institutions to participate in a transformative approach to academic writing. Its AI content detector is more than just a tool; it's a statement of commitment to genuine learning and intellectual growth. Don't hesitate—upload your document now and be a part of building a future where AI enhances, rather than undermines, academic integrity.

0 notes

Text

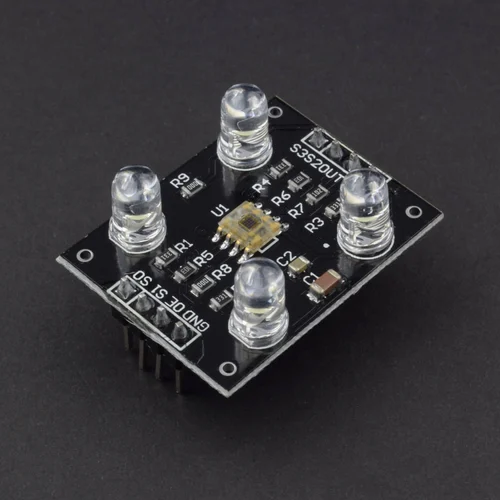

Colour Detection Sensor Market: Future Trends and Innovations Shaping Its Growth

The colour detection sensor market is rapidly evolving, and its future is brimming with opportunities driven by technological advancements and increasing demand for automation, precision, and efficiency across industries. These sensors are designed to detect and identify different colors in a wide range of applications, providing essential data for quality control, process monitoring, and production efficiency. As the market grows, several key trends are likely to shape its future development.

1. Advancements in Sensor Technology

The rapid development of sensor technology is one of the main factors driving the future of the colour detection sensor market. Companies are investing heavily in improving the accuracy, sensitivity, and speed of these sensors. With the integration of machine learning and artificial intelligence (AI), colour detection sensors are becoming smarter, offering more precise colour recognition even under challenging lighting conditions. These advanced sensors can differentiate between subtle colour variations, which are essential for industries like automotive, packaging, and textiles, where colour consistency is crucial.

In the coming years, we can expect to see the introduction of sensors with enhanced capabilities, such as multi-spectral and hyperspectral sensors, which will allow for more detailed colour analysis. These sensors will be able to capture more information about the objects being analysed, leading to more accurate and reliable results.
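To illustrate what differentiating between subtle colour variations can involve, here is a minimal sketch that matches an RGB reading to the nearest entry in a reference palette. The palette values, the `tolerance` threshold, and the function names are assumptions made for this example, not taken from any real sensor. Plain Euclidean distance in RGB is also a simplification: production systems often work in perceptually uniform colour spaces such as CIELAB.

```python
import math

# Hypothetical reference palette: name -> (R, G, B), each channel 0-255.
REFERENCE_COLOURS = {
    "red": (200, 30, 30),
    "dark red": (150, 20, 20),
    "green": (30, 160, 60),
    "blue": (30, 60, 200),
}


def colour_distance(a, b):
    """Euclidean distance between two RGB triples."""
    return math.dist(a, b)


def classify(reading, palette=REFERENCE_COLOURS, tolerance=40.0):
    """Return the closest palette name, or None if nothing is within tolerance."""
    name, ref = min(
        palette.items(),
        key=lambda item: colour_distance(reading, item[1]),
    )
    return name if colour_distance(reading, ref) <= tolerance else None


print(classify((190, 35, 28)))    # close to the "red" reference
print(classify((160, 22, 22)))    # close to the "dark red" reference
print(classify((128, 128, 128)))  # mid grey, far from every reference
```

The tolerance is what decides whether two similar shades count as the same colour or as a defect, which is why colour consistency checks in packaging or textiles depend so heavily on how it is calibrated.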

2. Integration of AI and Machine Learning