#dls in artificial intelligence


Behind the Code: How AI Is Quietly Reshaping Software Development and the Top Risks You Must Know

AI Software Development

In 2025, artificial intelligence (AI) is no longer just a buzzword; it has become a driving force behind the scenes, transforming software development. From AI-powered code generation to advanced testing tools, machine learning (ML) and deep learning (DL) are significantly influencing how developers build, test, and deploy applications. While these innovations offer speed, accuracy, and automation, they also introduce subtle yet critical risks that businesses and developers must not overlook. This post examines how AI is transforming the software development lifecycle and identifies the key risks associated with this evolution.

The Rise of AI in Software Development

Artificial intelligence, machine learning, and deep learning are becoming foundational to modern software engineering. AI tools such as ChatGPT, Copilot, and various other AI platforms assist with code suggestions, bug detection, documentation generation, and even architectural decisions. These tools can reduce development time and help less-experienced developers produce quality code.

Examples of AI in Development:

- AI Chatbots: Provide 24/7 customer support and collect feedback.

- AI-Powered Code Review: Analyze code for bugs, security flaws, and performance issues.

- Natural Language Processing (NLP): Translate user stories into code or test cases.

- AI for DevOps: Use predictive analytics for server load and automate CI/CD pipelines.

With AI chat platforms, free AI chatbots, and robotic process automation (RPA), the line between human and machine work is increasingly blurred.

The Hidden Risks of AI in Application Development

While AI offers numerous benefits, it also introduces potential vulnerabilities and unintended consequences. Here are the top risks associated with integrating AI into the development pipeline:

1. Over-Reliance on AI Tools

Over-reliance on AI tools may erode developer skills and code quality, leading to:

- A decline in critical thinking and analytical skills.

- Propagation of inefficient or insecure code patterns.

- Reduced understanding of the software being developed.

2. Bias in Machine Learning Models

AI and ML models trained on biased or incomplete data can produce skewed results:

- Applications may produce discriminatory or inaccurate results.

- Risks include brand damage and legal issues in regulated sectors like retail or finance.
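One common way to check for this kind of skew is to compare a model's positive-prediction rate across groups (the "demographic parity" gap). The sketch below assumes binary predictions and a single hypothetical group attribute; the data is invented for illustration, and a real audit would look at several fairness metrics, not just this one.

```python
from collections import defaultdict

def positive_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan-approval predictions (1 = approved) for two groups, "A" and "B".
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rates(preds, groups))          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A large gap does not prove discrimination on its own, but it flags where the training data and the model deserve a closer look.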

3. Security Vulnerabilities

AI-generated code may introduce hidden bugs or create opportunities for exploitation:

- Many AI tools are trained on scraped open-source code, which may include insecure patterns or outdated libraries.

- Attackers could manipulate AI models (for example, by poisoning their training data) for malicious purposes.
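As an illustration of how an insecure pattern can slip through, the sketch below contrasts a string-built SQL query (the kind of pattern an assistant trained on public code might reproduce) with a parameterized one. It uses Python's built-in `sqlite3` module and a hypothetical `users` table; it is an example of the risk class, not a claim about any specific tool's output.

```python
import sqlite3

def setup_db():
    """Create an in-memory database with a hypothetical 'users' table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 1), ("bob", 0)])
    return conn

def find_user_unsafe(conn, name):
    # Insecure: user input is pasted directly into the SQL string,
    # so crafted input can change the query's logic (SQL injection).
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Safer: the driver binds the value as data, never as SQL syntax.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()

conn = setup_db()
malicious = "x' OR '1'='1"
print(find_user_unsafe(conn, malicious))  # returns every row in the table
print(find_user_safe(conn, malicious))    # returns []
```

Both functions look plausible at a glance, which is exactly why generated code still needs human review and security scanning.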

4. Data Privacy and Compliance Issues

AI models often need large datasets that can contain sensitive information:

- Misuse or leakage of data can lead to compliance violations (e.g., under the GDPR).

- Pasting code or data into hosted chatbots, such as those from Google or OpenAI, can raise data storage concerns.

5. Transparency and Explainability Challenges

Understanding how AI systems, especially deep learning models, reach their decisions is challenging:

- A lack of explainability complicates debugging.

- Industries that require audit trails (e.g., insurance, healthcare) face regulatory issues.

AI and Its Influence Across Development Phases

Planning & Design: AI platforms analyze historical data to forecast project timelines and resource allocation.

Risks: False assumptions from inaccurate historical data can mislead project planning.

Coding: AI-powered IDEs and assistants suggest code snippets, auto-complete functions, and generate boilerplate code.

Risks: AI assistants may overlook edge cases or scalability concerns.

Testing: Automated test case generation using AI can broaden coverage in less time.

Risks: AI might miss human-centric use cases and unique behavioral scenarios.

Deployment & Maintenance: AI helps predict failures and automate software patching using ML.

Risks: False positives can trigger unnecessary interventions, while missed anomalies in logs could lead to outages.

The Role of AI in Retail, RPA, and Computer Vision

Industries such as retail and manufacturing are increasingly integrating AI.

In Retail: AI is used for chatbots, customer data analytics, and inventory management tools, enhancing personalized shopping experiences through machine learning and deep learning.

Risk: Over-personalization and surveillance-like tracking raise ethical concerns.

In RPA: Robotic Process Automation tools simplify repetitive back-end tasks. AI adds decision-making capabilities to RPA.

Risk: Errors in automation can lead to large-scale operational failures.

In Computer Vision: AI is applied in image classification, facial recognition, and quality control.

Risk: Misclassification or identity-related issues could lead to regulatory scrutiny.

Navigating the Risks: Best Practices

To safely harness the power of AI in development, businesses should adopt strategic measures, such as establishing AI ethics policies and defining acceptable use guidelines.

By understanding the transformative power of AI and proactively addressing its risks, organizations can better position themselves for a successful future in software development.

Key Recommendations:

Audit and regularly update AI datasets to avoid bias.

Use explainable AI models where possible.

Train developers on AI tools while reinforcing core engineering skills.

Ensure AI integrations comply with data protection and security standards.

Final Thoughts: Embracing AI While Staying Secure

AI, ML, and DL have revolutionized software development, enabling automation, accuracy, and innovation. However, they bring complex risks that require careful management. Organizations must adopt a balanced approach, leveraging the strengths of AI platforms such as GPT-based chat assistants and RPA tools while maintaining strict oversight.

As we move forward, embracing AI in a responsible and informed manner is critical. From enterprise AI adoption to computer vision applications, businesses that align technological growth with ethical and secure practices will lead the future of development.

#artificial intelligence chat#ai and software development#free ai chat bot#machine learning deep learning artificial intelligence#rpa#ai talking#artificial intelligence machine learning and deep learning#artificial intelligence deep learning#ai chat gpt#chat ai online#best ai chat#ai ml dl#ai chat online#ai chat bot online#machine learning and deep learning#deep learning institute nvidia#open chat ai#google chat bot#chat bot gpt#artificial neural network in machine learning#openai chat bot#google ai chat#ai deep learning#artificial neural network machine learning#ai gpt chat#chat ai free#ai chat online free#ai and deep learning#software development#gpt chat ai


Using JPEG Compression to Improve Neural Network Training

New Post has been published on https://thedigitalinsider.com/using-jpeg-compression-to-improve-neural-network-training/


A new research paper from Canada has proposed a framework that deliberately introduces JPEG compression into the training scheme of a neural network, and manages to obtain better results – and better resistance to adversarial attacks.

This is a fairly radical idea, since the prevailing wisdom is that JPEG compression, which is optimized for human viewing rather than for machine learning, generally has a deleterious effect on neural networks trained on JPEG data.

An example of the difference in clarity between JPEG images compressed at different loss values (higher loss permits a smaller file size, at the expense of delineation and banding across color gradients, among other types of artifact). Source: https://forums.jetphotos.com/forum/aviation-photography-videography-forums/digital-photo-processing-forum/1131923-how-to-fix-jpg-compression-artefacts?p=1131937#post1131937

A 2022 report from the University of Maryland and Facebook AI asserted that JPEG compression ‘incurs a significant performance penalty’ in the training of neural networks, in spite of previous work that claimed neural networks are relatively resilient to image compression artefacts.

A year prior to this, a new strand of thought had emerged in the literature: that JPEG compression could actually be leveraged for improved results in model training.

However, though the authors of that paper were able to obtain improved results when training on JPEG images of varying quality levels, the model they proposed was so complex and burdensome that it was not practicable. Additionally, the system’s use of default JPEG optimization settings (quantization) proved a barrier to training efficacy.

A later project (2023’s JPEG Compliant Compression for DNN Vision) experimented with a system that obtained slightly better results from JPEG-compressed training images with the use of a frozen deep neural network (DNN) model. However, freezing parts of a model during training tends to reduce the versatility of the model, as well as its broader resilience to novel data.

JPEG-DL

Instead, the new work, titled JPEG Inspired Deep Learning, offers a much simpler architecture, which can even be imposed upon existing models.

The researchers, from the University of Waterloo, state:

‘Results show that JPEG-DL significantly and consistently outperforms the standard DL across various DNN architectures, with a negligible increase in model complexity.

Specifically, JPEG-DL improves classification accuracy by up to 20.9% on some fine-grained classification dataset, while adding only 128 trainable parameters to the DL pipeline. Moreover, the superiority of JPEG-DL over the standard DL is further demonstrated by the enhanced adversarial robustness of the learned models and reduced file sizes of the input images.’

The authors contend that an optimal JPEG compression quality level can help a neural network distinguish the central subject/s of an image. In the example below, we see baseline results (left) blending the bird into the background when features are obtained by the neural network. In contrast, JPEG-DL (right) succeeds in distinguishing and delineating the subject of the photo.

Tests against baseline methods for JPEG-DL. Source: https://arxiv.org/pdf/2410.07081

‘This phenomenon,’ they explain, ‘termed “compression helps” in the [2021] paper, is justified by the fact that compression can remove noise and disturbing background features, thereby highlighting the main object in an image, which helps DNNs make better prediction.’

Method

JPEG-DL introduces a differentiable soft quantizer, which replaces the non-differentiable quantization operation in the standard JPEG compression routine.

This allows gradients to flow through the compression step, which is not possible in conventional JPEG encoding. There, a uniform quantizer divides each DCT coefficient by a step size and rounds the result to the nearest integer, and that rounding operation has zero gradient almost everywhere.
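To see why rounding blocks gradient-based training, and how a "soft" quantizer gets around it, consider the sketch below. It uses a common soft-quantization formulation (a softmax-weighted average of quantization levels, controlled by a sharpness parameter `alpha`); this formulation is an assumption for illustration and is not necessarily the exact quantizer defined in the JPEG-DL paper. Gradients are estimated with finite differences to keep the example dependency-free.

```python
import math

def hard_quantize(x, step):
    """Standard JPEG-style quantization: snap x to the nearest multiple of step."""
    return step * math.floor(x / step + 0.5)

def soft_quantize(x, step, alpha=10.0, levels=range(-16, 17)):
    """Differentiable stand-in for rounding (illustrative formulation).

    Each level i*step gets a weight exp(-alpha * (x - i*step)**2); the output is
    the weighted average of the levels. Larger alpha -> closer to hard rounding.
    """
    logits = [-alpha * (x - i * step) ** 2 for i in levels]
    m = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - m) for l in logits]
    total = sum(weights)
    return sum(w * i * step for w, i in zip(weights, levels)) / total

def numerical_grad(f, x, h=1e-4):
    """Central finite-difference estimate of df/dx."""
    return (f(x + h) - f(x - h)) / (2 * h)

x, step = 2.3, 1.0
print(hard_quantize(x, step))                                   # 2.0
print(numerical_grad(lambda v: hard_quantize(v, step), x))      # 0.0: no gradient signal
print(numerical_grad(lambda v: soft_quantize(v, step), x) > 0)  # True: usable gradient
print(abs(soft_quantize(x, step, alpha=50.0) - 2.0) < 0.05)     # True: approaches rounding
```

Because the soft output depends smoothly on both `x` and `step`, a loss computed from it can also be differentiated with respect to the quantization step, which is what makes learning the compression level alongside the network possible.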

The differentiability of JPEG-DL’s schema permits joint optimization of both the model’s parameters and the JPEG quantization (compression level). Joint optimization means that the model and the compression of its training data are adapted to each other in a single end-to-end process, with no need to freeze layers.

Essentially, the system customizes the JPEG compression of a (raw) dataset to fit the logic of the generalization process.

Conceptual schema for JPEG-DL.

One might assume that raw data would be the ideal fodder for training; after all, images are completely decompressed into an appropriate full-length color space when they are run in batches; so what difference does the original format make?

Well, since JPEG compression is optimized for human viewing, it discards detail and color in a manner concordant with this aim. Given a picture of a lake under a blue sky, the sky will be compressed more heavily, because it contains no ‘essential’ detail.

On the other hand, a neural network lacks the eccentric filters which allow us to zero in on central subjects. Instead, it is likely to consider any banding artefacts in the sky as valid data to be assimilated into its latent space.

Though a human will dismiss the banding in the sky of a heavily compressed image (left), a neural network has no idea that this content should be thrown away, and will need a higher-quality image (right). Source: https://lensvid.com/post-processing/fix-jpeg-artifacts-in-photoshop/
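The sky example can be made concrete with JPEG's core transform. The sketch below applies an orthonormal 8-point DCT-II (JPEG itself uses the 2-D 8×8 version) to a nearly flat row of pixels and to a busy one, then quantizes every coefficient with the same step. The pixel values and the step of 16 are arbitrary choices for illustration; real encoders use a per-frequency quantization table.

```python
import math

def dct_1d(block):
    """Orthonormal DCT-II of a list of samples."""
    n = len(block)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        s = sum(x * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                for i, x in enumerate(block))
        coeffs.append(scale * s)
    return coeffs

def quantize(coeffs, step):
    """Uniform quantization, as in JPEG: divide by the step and round."""
    return [round(c / step) for c in coeffs]

sky = [200, 200, 201, 200, 199, 200, 200, 201]   # nearly flat, like clear sky
texture = [40, 210, 35, 190, 60, 220, 30, 200]   # rapidly varying detail

for name, row in [("sky", sky), ("texture", texture)]:
    q = quantize(dct_1d(row), step=16)
    nonzero = sum(1 for v in q if v != 0)
    print(name, q, "nonzero:", nonzero)
```

With the same step everywhere, the flat row keeps only its average (DC) term while the busy row retains several high-frequency terms, which is why smooth regions such as sky cost so few bits.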

Therefore, one level of JPEG compression is unlikely to suit the entire contents of a training dataset, unless it represents a very specific domain. Pictures of crowds will require much less compression than a narrow-focus picture of a bird, for instance.

The authors observe that those unfamiliar with the challenges of quantization, but familiar with the basics of the transformer architecture, can think of these processes, broadly, as an ‘attention operation’.

Data and Tests

JPEG-DL was evaluated on both transformer-based architectures and convolutional neural networks (CNNs): EfficientFormer-L1, ResNet, VGG, MobileNet, and ShuffleNet.

The ResNet versions used were specific to the CIFAR dataset: ResNet32, ResNet56, and ResNet110. VGG8 and VGG13 were chosen for the VGG-based tests.

For the CNNs, the training methodology was derived from the 2020 work Contrastive Representation Distillation (CRD). For EfficientFormer-L1 (transformer-based), the training method from the 2023 outing Initializing Models with Larger Ones was used.

For the fine-grained tasks featured in the tests, four datasets were used: Stanford Dogs; the University of Oxford’s Flowers; CUB-200-2011 (CalTech Birds); and Pets (‘Cats and Dogs’, a collaboration between the University of Oxford and IIIT Hyderabad in India).

For fine-grained tasks on CNNs, the authors used PreAct ResNet-18 and DenseNet-BC. For EfficientFormer-L1, the methodology outlined in the aforementioned Initializing Models With Larger Ones was used.

Across the CIFAR-100 and fine-grained tasks, the varying magnitudes of the Discrete Cosine Transform (DCT) frequencies in the JPEG compression approach were handled with the Adam optimizer, which adapts the learning rate of each parameter in the JPEG layer across the tested models.
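The reason Adam suits parameters whose gradients differ by orders of magnitude, as DCT frequencies can, shows up in its very first update. The sketch below implements the standard Adam update rule with its usual default hyperparameters and compares it with plain SGD. The two gradient values and the learning rate are invented for illustration, and this is not the paper's training code.

```python
import math

def sgd_step(params, grads, lr):
    """Plain SGD: step size is proportional to the raw gradient magnitude."""
    return [p - lr * g for p, g in zip(params, grads)]

def adam_step(params, grads, state, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8):
    """One Adam update; `state` holds the moment estimates and the step count."""
    state["t"] += 1
    t = state["t"]
    new_params = []
    for i, (p, g) in enumerate(zip(params, grads)):
        state["m"][i] = b1 * state["m"][i] + (1 - b1) * g      # first moment
        state["v"][i] = b2 * state["v"][i] + (1 - b2) * g * g  # second moment
        m_hat = state["m"][i] / (1 - b1 ** t)                  # bias correction
        v_hat = state["v"][i] / (1 - b2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
    return new_params

# Two hypothetical parameters whose gradients differ by a factor of a million.
params, grads, lr = [0.0, 0.0], [1000.0, 0.001], 1e-3
state = {"t": 0, "m": [0.0, 0.0], "v": [0.0, 0.0]}

print(sgd_step(params, grads, lr))          # roughly [-1.0, -1e-06]
print(adam_step(params, grads, state, lr))  # both steps close to -0.001
```

On that first step, Adam moves both parameters by roughly the learning rate, while SGD's steps differ by a factor of a million. The article does not say how JPEG-DL configures Adam, so treat this only as an illustration of the general mechanism.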

All experiments were implemented in PyTorch; for the ImageNet-1K tests, the core models were SqueezeNet, ResNet-18 and ResNet-34.

For the JPEG-layer optimization evaluation, the researchers used Stochastic Gradient Descent (SGD) instead of Adam, for more stable performance. However, for the ImageNet-1K tests, the method from the 2019 paper Learned Step Size Quantization was employed.

Above, the top-1 validation accuracy for the baseline vs. JPEG-DL on CIFAR-100, with means and standard deviations over three runs. Below, the top-1 validation accuracy on diverse fine-grained image classification tasks across various model architectures, again averaged over three runs.

Commenting on the initial round of results illustrated above, the authors state:

‘Across all seven tested models for CIFAR-100, JPEG-DL consistently provides improvements, with gains of up to 1.53% in top-1 accuracy. In the fine-grained tasks, JPEG-DL offers a substantial performance increase, with improvements of up to 20.90% across all datasets using two different models.’

Results for the ImageNet-1K tests are shown below:

Top-1 validation accuracy results on ImageNet across diverse frameworks.

Here the paper states:

‘With a trivial increase in complexity (adding 128 parameters), JPEG-DL achieves a gain of 0.31% in top-1 accuracy for SqueezeNetV1.1 compared to the baseline using a single round of [quantization] operation.

‘By increasing the number of quantization rounds to five, we observe an additional improvement of 0.20%, leading to a total gain of 0.51% over the baseline.’

The researchers also tested the system using data compromised by the adversarial attack approaches Fast Gradient Sign Method (FGSM) and Projected Gradient Descent (PGD).

The attacks were conducted on CIFAR-100 across two of the models:

Testing results for JPEG-DL, against two standard adversarial attack frameworks.

The authors state:

‘[The] JPEG-DL models significantly improve the adversarial robustness compared to the standard DNN models, with improvements of up to 15% for FGSM and 6% for PGD.’
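Both attacks nudge an input in the direction that increases the model's loss. The sketch below applies them to a tiny logistic-regression classifier with hand-picked weights (an assumption for illustration; the paper attacks CNNs on CIFAR-100), using the analytic input gradient of the binary cross-entropy loss. FGSM takes one step of size `eps` along the sign of the gradient; PGD takes several smaller signed steps and projects back into the `eps`-box around the original input after each one.

```python
import math

def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 / (1 + math.exp(-z))

def input_grad(w, b, x, y):
    """Gradient of binary cross-entropy w.r.t. the input x: (p - y) * w."""
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: one step of size eps along sign(grad)."""
    g = input_grad(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]

def pgd(w, b, x, y, eps, step, iters):
    """Projected Gradient Descent: repeated signed steps, clipped to the eps-box."""
    x_adv = list(x)
    for _ in range(iters):
        g = input_grad(w, b, x_adv, y)
        x_adv = [xa + step * sign(gi) for xa, gi in zip(x_adv, g)]
        # Project back so every coordinate stays within [x - eps, x + eps].
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv

w, b = [2.0, -1.0], 0.0  # hypothetical "trained" weights
x, y = [0.5, 0.2], 1     # clean input, correctly classified as class 1
print(predict(w, b, x))                          # about 0.69
print(predict(w, b, fgsm(w, b, x, y, eps=0.3)))  # drops below 0.5
print(predict(w, b, pgd(w, b, x, y, eps=0.3, step=0.1, iters=10)))
```

For a linear model like this one, FGSM already finds the worst case inside the box; PGD's repeated steps matter for nonlinear networks, where the gradient direction changes as the input moves.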

Additionally, as illustrated earlier in the article, the authors conducted a comparison of extracted feature maps using GradCAM++ – a framework that can highlight extracted features in a visual manner.

A GradCAM++ illustration for baseline and JPEG-DL image classification, with extracted features highlighted.

The paper notes that JPEG-DL produces an improved result, and that in one instance it was even able to classify an image that the baseline failed to identify. Regarding the earlier-illustrated image featuring birds, the authors state:

‘[It] is evident that the feature maps from the JPEG-DL model show significantly better contrast between the foreground information (the bird) and the background compared to the feature maps generated by the baseline model.

‘Specifically, the foreground object in the JPEG-DL feature maps is enclosed within a well-defined contour, making it visually distinguishable from the background.

‘In contrast, the baseline model’s feature maps show a more blended structure, where the foreground contains higher energy in low frequencies, causing it to blend more smoothly with the background.’
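GradCAM++, used for the feature-map comparison above, is a refinement of the simpler Grad-CAM method. As a rough illustration of the shared idea, the sketch below implements plain Grad-CAM (not the ++ variant) on invented numbers: each feature map is weighted by the spatial average of the class score's gradient with respect to it, the weighted maps are summed, and negative values are clipped. The tiny 2×2 maps and gradients are hypothetical stand-ins for a real network's activations and backpropagated gradients.

```python
def grad_cam(feature_maps, gradients):
    """Plain Grad-CAM heatmap from K feature maps and their gradients (2-D lists)."""
    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    heatmap = [[0.0] * cols for _ in range(rows)]
    for fmap, grad in zip(feature_maps, gradients):
        # Channel weight: global average of the gradient over spatial positions.
        alpha = sum(sum(r) for r in grad) / (rows * cols)
        for i in range(rows):
            for j in range(cols):
                heatmap[i][j] += alpha * fmap[i][j]
    # ReLU keeps only regions that push the class score up.
    return [[max(0.0, v) for v in row] for row in heatmap]

# Two hypothetical 2x2 feature maps: one fires on the "object", one on background.
object_map = [[0.0, 1.0], [0.0, 1.0]]
background_map = [[1.0, 0.0], [1.0, 0.0]]
grads_object = [[0.5, 0.5], [0.5, 0.5]]          # raises the class score
grads_background = [[-0.2, -0.2], [-0.2, -0.2]]  # lowers it

print(grad_cam([object_map, background_map], [grads_object, grads_background]))
# [[0.0, 0.5], [0.0, 0.5]]
```

The resulting heatmap lights up only where the "object" map is active, which is the kind of foreground/background contrast the authors describe for JPEG-DL.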

Conclusion

JPEG-DL is intended for use in situations where raw data is available – but it would be most interesting to see if some of the principles featured in this project could be applied to conventional dataset training, wherein the content may be of lower quality (as frequently occurs with hyperscale datasets scraped from the internet).

As it stands, that largely remains an annotation problem, though it has been addressed in traffic-based image recognition, and elsewhere.

First published Thursday, October 10, 2024

#2022#2023#2024#Adversarial attacks#ai#ai training#approach#architecture#Article#Artificial Intelligence#attention#aviation#background#barrier#birds#Blue#caltech#Canada#cats#CNN#Collaboration#Color#comparison#complexity#compression#content#data#datasets#Deep Learning#DL


How Our AI with ML & DL Course Can Shape Your Career: A Comprehensive Overview

In today’s data-driven world, the integration of Artificial Intelligence (AI) with Machine Learning (ML) and Deep Learning (DL) is revolutionizing industries and shaping the future of technology. At The DataTech Labs, we understand how AI, ML, and DL can transform careers and drive innovation. In this overview, we’ll explore how our AI with ML & DL Course can advance your career and empower you to thrive in the dynamic field of artificial intelligence.


AI vs. ML vs. DL: Clearing the Confusion

Raise your hand if you've ever been perplexed by the differences between artificial intelligence (AI), machine learning (ML), and deep learning (DL). Here, we go into greater detail so that you can understand which is better for your specific use cases! Let’s clear up all the confusion!

Click here to know more: https://www.linkedin.com/posts/marsdevs_ai-vs-ml-vs-dl-activity-7108729062562410496-Ikea


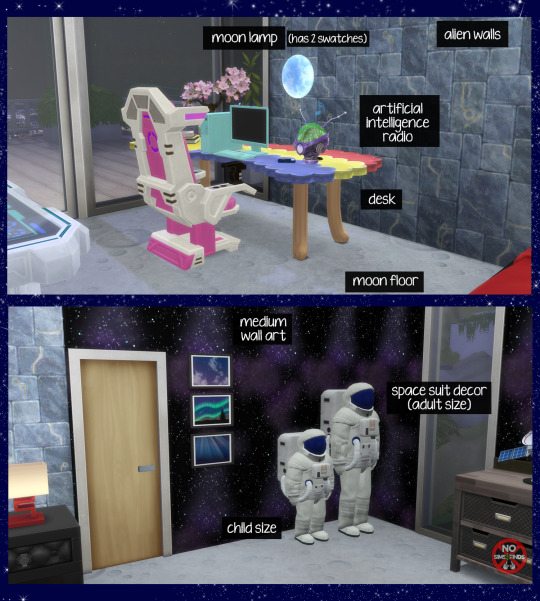

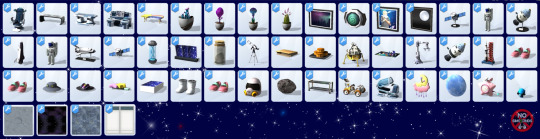

🚀 ACNH Space Enthusiast Set 👽

52 items | Sims 4, base game compatible - As usual, all original swatches included with lots of extras added by me! ☺️💗 Not all items are from ACNH. Please keep in mind some of the items are high poly if you have an older computer. The mooncake plate item is going to be shared publicly on my Tumblr today, since it is a culturally related item for an upcoming holiday.

Type “ACNH Space” into the search query in build mode to find quickly. You can always find items like this, just begin typing the title and it will appear.

Always suggested: bb.objects ON, it makes placing items much easier. For further placement tweaking, check out the TOOL mod.

Use the scale up & down feature on your keyboard to make the items larger or smaller to your liking. If you have a non-US keyboard, it may be different keys depending on which alphabet it uses. (The launch pad item is made smaller like the models, so it can be upscaled to fit. Other items like the UFO are made larger and can be downscaled the same way.)

I hope you enjoy!

Set contains:
- Alien DNA | 8 swatches | 586 poly
- Alien Plant Pot 1 | 9 swatches | 972 poly
- Alien Plant Pot 2 | 9 swatches | 1494 poly
- Alien Plant Pot 3 | 9 swatches | 992 poly
- Alien Plant Pot 4 | 9 swatches | 3702 poly
- Artificial Intelligence Music Player (functional radio) | 5 swatches | 828 poly
- Chair - Console | 5 swatches | 1202 poly
- Confidential Files (English & Simlish) | 2 swatches | 144 poly
- Control Panel (table) | 8 swatches (2 screen swatches for each table color) | 2300 poly
- Cosmic Board Game | 1 swatch | 828 poly
- Cosmic Cards | 1 swatch | 54 poly
- Cosmic Card Holder | 11 swatches | 216 poly
- Helmet Decor | 5 swatches | 1110 poly
- Launch Pad | 1 swatch | 2362 poly
- Lunar Lander | 1 swatch | 4804 poly
- Med Pod Decor | 5 swatches | 2414 poly
- Meteor Jar | 2 swatches | 1328 poly
- Meteor Rock | 2 swatches | 1152 poly
- Mooncake Plate | 3 swatches | 1154 poly
- Moon Rover | 2 swatches | 4809 poly
- Moon Wall Lamp | 2 swatches | 482 poly (this item has the same vertex paint issue as the other lamps I have made; turn down the brightness if the object looks too bright)
- Robot Arm | 7 swatches | 1586 poly
- Satellite | 2 swatches | 2384 poly
- Satellite Model | 2 swatches | 2508 poly
- Shoe Rack / Table | 3 swatches | 214 poly
- Space Boots Decor | 1 swatch | 820 poly
- Spaceship | 1 swatch | 3184 poly
- Spaceship Model | 1 swatch | 3308 poly
- Space Shuttle | 1 swatch | 2350 poly
- Space Shuttle Model | 1 swatch | 2432 poly
- Space Suit Decor (adult & child versions) | 1 swatch each | 1198 poly each
- Space Tacos Decor | 1 swatch | 310 poly
- Starship Table | 2 swatches | 756 poly
- Table | 4 swatches | 2409 poly
- Telescope | 2 swatches | 1238 poly
- Twin Star Clock Radio | 3 swatches | 2388 poly
- Twin Star Clock Wall | 4 swatches | 1204 poly
- Twin Star Hairclip Decor | 3 swatches each | 934 poly
- Twin Star Kid's Desk | 1 swatch | 1994 poly
- Twin Star Shoes Decor (adult, child, & toddler versions) | 1 swatch each | 792 poly each
- UFO | 1 swatch | 3172 poly
- Wall Art (Large) | 10 swatches | 92 poly
- Wall Art (Medium) | 16 swatches | 50 poly
- Wall Art (Small) | 15 swatches | 50 poly
- Wall Button | 7 swatches | 146 poly

Build Mode:
- Wall Med Bay | 1 swatch
- Wall Galaxy | 2 swatches
- Wall Alien | 2 swatches
- Moon Floor | 5 swatches

As always, please let me know if you have any issues! Happy Simming! 💗

📁 Download all or pick & choose (SFS, No Ads): HERE

📁 Alt Mega Download (still no ads): HERE

📁 DL on Patreon

Will be public on October 8th, 2023


✨ If you like my work, please consider supporting me

★ Patreon 🎉 ❤️ |★ Ko-Fi ☕️ ❤️ ★ Instagram📷

Thank you for reblogging ❤️ ❤️ ❤️

@sssvitlanz @maxismatchccworld @mmoutfitters @coffee-cc-finds @itsjessicaccfinds @gamommypeach @stargazer-sims-finds @khelga68 @suricringe @vaporwavesims @mystictrance15 @public-ccfinds

#s4cc#ts4cc#sims 4 space#sims 4 maxis match#sims 4 outer space#sims 4 moon#sims 4 buy mode#sims 4 furniture#sims 4 table#sims 4 desk#sims 4 plants#sims 4 spacesuit#sims 4 helmet#sims 4 clothing clutter#sims 4 shoe clutter#sims 4 clock#sims 4 radio#sims 4 object#sims 4 build mode#sims 4 floor#sims 4 wall#sims 4 walls#simdertalia


On generative AI

I've had 2 asks about this lately so I feel like it's time to post a clarifying statement.

I will not provide assistance related to using "generative artificial intelligence" ("genAI") [1] applications such as ChatGPT. This is because, for ethical and functional reasons, I am opposed to genAI.

I am opposed to genAI because its operators steal the work of people who create, including me. This complaint is usually associated with text-to-image (T2I) models, like Midjourney or Stable Diffusion, which generate "AI art". However, large language models (LLMs) do the same thing, just with text. ChatGPT was trained on a large research dataset known as the Common Crawl (Brown et al, 2020). For an unknown period ending on 29 August 2023 at the latest, Tumblr did not discourage Common Crawl crawlers from scraping the website (Changes on Tumblr, 2023). Since I started writing on this blog circa 2014–2015 and have continued fairly consistently in the interim, that means the Common Crawl incorporates a significant quantity of my work. If it were being used for academic research, I wouldn't mind. If it were being used by another actual human being, I wouldn't mind, and if they cited me, I definitely wouldn't mind. But it's being ground into mush and extruded without credit by large corporations run by people like Sam Altman (see Hoskins, 2025) and Elon Musk (see Ingram, 2024) and the guy who ruined Google (Zitron, 2024), so I mind a great deal.

I am also opposed to genAI because of its excessive energy consumption and the lengths to which its operators go to ensure that energy is supplied. Individual cases include the off-grid power station which is currently poisoning Black people in Memphis, Tennessee (Kerr, 2025), so that Twitter's genAI application Grok can rant incoherently about "white genocide" (Steedman, 2025). More generally, as someone who would prefer to avoid getting killed for my food and water in a few decades' time, I am unpleasantly reminded of the study that found that bitcoin mining emissions alone could make runaway climate change impossible to prevent (Mora et al, 2018). GenAI is rapidly scaling up to produce similar amounts of emissions, with the same consequences, for the same reasons (Luccioni, 2024). [2]

It is theoretically possible to create genAI which doesn't steal and which doesn't destroy the planet. Nobody's going to do it, and if they do do it, no significant share of the userbase will migrate to it in the foreseeable future — same story as, again, bitcoin — but it's theoretically possible. However, I also advise against genAI for any application which requires facts, because it can't intentionally tell the truth. It can't intentionally do anything; it is a system for using a sophisticated algorithm to assemble words in plausibly coherent ways. Try asking it about the lore of a media property you're really into and see how long it takes to start spouting absolute crap. It also can't take correction; it literally cannot, it is unable — the way the neural network is trained means that simply inserting a factual correction, even with administrator access, is impossible even in principle.

GenAI can never "ascend" to intelligence; it's not a petri dish in which an artificial mind can grow; it doesn't contain any more of the stuff of consciousness than a spreadsheet. The fact that it seems like it really must know what it's saying means nothing. To its contemporaries, ELIZA seemed like that too (Weizenbaum, 1966).

The stuff which is my focus on this blog — untraining and more broadly AB/DL in general — is not inherently dangerous or sensitive, but it overlaps with stuff which, despite being possible to access and use in a safe manner, has the potential for great danger. This is heightened quite a bit given genAI's weaknesses around the truth. If you ask ChatGPT whether it's safe to down a whole bottle of castor oil, as long as you use the right words, even unintentionally, it will happily tell you to go ahead. If I endorse or recommend genAI applications for this kind of stuff, or assist with their use, I am encouraging my readers toward something I know to be unsafe. I will not be doing that. Future asks on the topic will go unanswered.

Notes

[1] I use quote marks here because as far as I am concerned, both "generative artificial intelligence" and "genAI" are misleading labels adopted for branding purposes; in short, lies. GenAI programs aren't artificial intelligences because they don't think, and because they don't emulate thinking or incorporate human thinking; they're just a program for associating words in a mathematically sophisticated but deterministic way. "GenAI" is also a lie because it's intended to associate generative AI applications with artificial general intelligence (AGI), i.e., artificial beings that actually think, or pretend to as well as a human does. However, there is no alternative term at the moment, and I understand I look weird if I use quote marks throughout the piece, so I dispense with them after this point.

[2] As a mid-to-low-income PC user I am also pissed off that GPUs are going to get worse and more expensive again, but that kind of pales in comparison to everything else.

References

Brown, T.B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., ... & Amodei, D. (2020, July 22). Language models are few-shot learners [version 4]. arXiv. doi: 10.48550/arXiv.2005.14165. Retrieved 25 May 2025.

Changes on Tumblr (2023, August 29). Tuesday, August 29th, 2023 [Text post]. Tumblr. Retrieved 25 May 2025.

Hoskins, P. (2025, January 8). ChatGPT creator denies sister's childhood rape claim. BBC News. Retrieved 25 May 2025.

Ingram, D. (2024, June 13). Elon Musk and SpaceX sued by former employees alleging sexual harassment and retaliation. NBC News. Retrieved 25 May 2025.

Kerr, D. (2025, April 25). Elon Musk's xAI accused of pollution over Memphis supercomputer. The Guardian. Retrieved 25 May 2025.

Luccioni, S. (2024, December 18). Generative AI and climate change are on a collision course. Wired. Retrieved 25 May 2025.

Mora, C., Rollins, R.L., Taladay, K., Kantar, M.B., Chock, M.K., ... & Franklin, E.C. (2018, October 29). Bitcoin emissions alone could push global warming above 2°C. Nature Climate Change, 8, 931–933. doi: 10.1038/s41558-018-0321-8. Retrieved 25 May 2025.

Steedman, E. (2025, May 25). For hours, chatbot Grok wanted to talk about a 'white genocide'. It gave a window into the pitfalls of AI. ABC News (Australian Broadcasting Corporation). Retrieved 25 May 2025.

Weizenbaum, J. (1966, January). ELIZA—a computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45. doi: 10.1145/365153.365168. Retrieved 25 May 2025.

Zitron, E. (2024, April 23). The man who killed Google Search. Where's Your Ed At. Retrieved 25 May 2025.


The AI Revolution: Understanding, Harnessing, and Navigating the Future

What is AI

In a world increasingly shaped by technology, one term stands out above the rest, capturing both our imagination and, at times, our apprehension: Artificial Intelligence. From science fiction dreams to tangible realities, AI is no longer a distant concept but an omnipresent force, subtly (and sometimes not-so-subtly) reshaping industries, transforming daily life, and fundamentally altering our perception of what's possible.

But what exactly is AI? Is it a benevolent helper, a job-stealing machine, or something else entirely? The truth, as always, is far more nuanced. At its core, Artificial Intelligence refers to the simulation of human intelligence processes by machines, especially computer systems. These processes include learning (the acquisition of information and rules for using the information), reasoning (using rules to reach approximate or definite conclusions), and self-correction. What makes modern AI so captivating is its ability to learn from data, identify patterns, and make predictions or decisions with increasing autonomy.

The journey of AI has been a fascinating one, marked by cycles of hype and disillusionment. Early pioneers in the mid-20th century envisioned intelligent machines that could converse and reason. While those early ambitions proved difficult to achieve with the technology of the time, the seeds of AI were sown. The 21st century, however, has witnessed an explosion of progress, fueled by advancements in computing power, the availability of massive datasets, and breakthroughs in machine learning algorithms, particularly deep learning. This has led to the "AI Spring" we are currently experiencing.

The Landscape of AI: More Than Just Robots

When many people think of AI, images of humanoid robots often come to mind. While robotics is certainly a fascinating branch of AI, the field is far broader and more diverse than just mechanical beings. Here are some key areas where AI is making significant strides:

Machine Learning (ML): This is the engine driving much of the current AI revolution. Rather than following rules a programmer writes by hand, ML algorithms learn patterns from example data and use them to make predictions. Think of recommendation systems on streaming platforms, fraud detection in banking, or personalized advertisements – these are all powered by ML.

Deep Learning (DL): A subset of machine learning that uses multi-layered artificial neural networks, loosely inspired by the structure of the human brain. Deep learning has been instrumental in breakthroughs in image recognition, natural language processing, and speech recognition. The facial recognition on your smartphone and the large language models behind today's AI chatbots are prime examples.

Natural Language Processing (NLP): This field focuses on enabling computers to understand, interpret, and generate human language. From language translation apps to chatbots that provide customer service, NLP is bridging the communication gap between humans and machines.

Computer Vision: This area allows computers to "see" and interpret visual information from the world around them. Autonomous vehicles rely heavily on computer vision to understand their surroundings, while medical imaging analysis uses it to detect diseases.

Robotics: While not all robots are AI-powered, many sophisticated robots leverage AI for navigation, manipulation, and interaction with their environment. From industrial robots in manufacturing to surgical robots assisting doctors, AI is making robots more intelligent and versatile.
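To make the Machine Learning entry above concrete, here is a minimal sketch in plain Python (no ML library assumed). The program is never told the rule y = 2x + 1; it estimates a slope and intercept from example pairs using ordinary least squares, one of the simplest learning methods. The function name `fit_line` and the toy data are illustrative assumptions, and real systems learn far richer models from far more data.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ~ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Example data produced by a rule the program is never given.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
print(slope, intercept)        # 2.0 1.0
print(slope * 10 + intercept)  # prediction for an unseen x = 10: 21.0
```

The "learning" here is just estimating two numbers from data; machine learning models do the same thing with millions or billions of parameters.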

AI's Impact: Transforming Industries and Daily Life

The transformative power of AI is evident across virtually every sector. In healthcare, AI is assisting in drug discovery, personalized treatment plans, and early disease detection. In finance, it's used for algorithmic trading, risk assessment, and fraud prevention. The manufacturing industry benefits from AI-powered automation, predictive maintenance, and quality control.

Beyond these traditional industries, AI is woven into the fabric of our daily lives. Virtual assistants like Siri and Google Assistant help us organize our schedules and answer our questions. Spam filters keep our inboxes clean. Navigation apps find the fastest routes. Even the algorithms that curate our social media feeds are a testament to AI's pervasive influence. These applications, while often unseen, are making our lives more convenient, efficient, and connected.

Harnessing the Power: Opportunities and Ethical Considerations

The opportunities presented by AI are immense. It promises to boost productivity, solve complex global challenges like climate change and disease, and unlock new frontiers of creativity and innovation. Businesses that embrace AI can gain a competitive edge, optimize operations, and deliver enhanced customer experiences. Individuals can leverage AI tools to automate repetitive tasks, learn new skills, and augment their own capabilities.

However, with great power comes great responsibility. The rapid advancement of AI also brings forth a host of ethical considerations and potential challenges that demand careful attention.

Job Displacement: One of the most frequently discussed concerns is the potential for AI to automate jobs currently performed by humans. While AI is likely to create new jobs, there will undoubtedly be a shift in the nature of work, requiring reskilling and adaptation.

Bias and Fairness: AI systems learn from the data they are fed. If that data contains historical biases (e.g., related to gender, race, or socioeconomic status), the AI can perpetuate and even amplify those biases in its decisions, leading to unfair outcomes. Ensuring fairness and accountability in AI algorithms is paramount.

Privacy and Security: AI relies heavily on data. The collection and use of vast amounts of personal data raise significant privacy concerns. Moreover, as AI systems become more integrated into critical infrastructure, their security becomes a vital issue.

Transparency and Explainability: Many advanced AI models, particularly deep learning networks, are often referred to as "black boxes" because their decision-making processes are difficult to understand. For critical applications, it's crucial to have transparency and explainability to ensure trust and accountability.

Autonomous Decision-Making: As AI systems become more autonomous, questions arise about who is responsible when an AI makes a mistake or causes harm. The development of ethical guidelines and regulatory frameworks for autonomous AI is an ongoing global discussion.
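One simple way to check a model for the kind of bias described above is to compare how often it produces a positive outcome for each group. The sketch below is a hypothetical, plain-Python illustration of that idea (often called the selection-rate or demographic parity difference); the data and the `selection_rates` helper are made up, and a large gap is a signal to investigate rather than proof of unfairness. Fairness has several competing definitions, and this is only one of them.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Fraction of positive decisions (1s) for each group label."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

# Hypothetical loan-approval outputs (1 = approved) and applicant groups.
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = max(rates.values()) - min(rates.values())
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```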

Navigating the Future: A Human-Centric Approach

Navigating the AI revolution requires a proactive and thoughtful approach. It's not about fearing AI, but rather understanding its capabilities, limitations, and implications. Here are some key principles for moving forward:

Education and Upskilling: Investing in education and training programs that equip individuals with AI literacy and skills in areas like data science, AI ethics, and human-AI collaboration will be crucial for the workforce of the future.

Ethical AI Development: Developers and organizations building AI systems must prioritize ethical considerations from the outset. This includes designing for fairness, transparency, and accountability, and actively mitigating biases.

Robust Governance and Regulation: Governments and international bodies have a vital role to play in developing appropriate regulations and policies that foster innovation while addressing ethical concerns and ensuring the responsible deployment of AI.

Human-AI Collaboration: The future of work is likely to be characterized by collaboration between humans and AI. AI can augment human capabilities, automate mundane tasks, and provide insights, allowing humans to focus on higher-level problem-solving, creativity, and empathy.

Continuous Dialogue: As AI continues to evolve, an ongoing, open dialogue among technologists, ethicists, policymakers, and the public is essential to shape its development in a way that benefits humanity.

The AI revolution is not just a technological shift; it's a societal transformation. By understanding its complexities, embracing its potential, and addressing its challenges with foresight and collaboration, we can harness the power of Artificial Intelligence to build a more prosperous, equitable, and intelligent future for all. The journey has just begun, and the choices we make today will define the world of tomorrow.


Human vs. AI: The Ultimate Comparison & Future Possibilities

The debate over Human Intelligence vs Artificial Intelligence (AI) is one of the most important topics in today’s world. With AI advancing at a rapid pace, many wonder: Will AI surpass human intelligence? Can AI replace humans in creativity, emotions, and decision-making?

From self-driving cars to chatbots and even AI-generated art, artificial intelligence is rapidly transforming industries. But despite AI’s impressive capabilities, humans still have unique traits that make them irreplaceable in many aspects.

In this article, we explore how humans and AI differ, their respective strengths and weaknesses, and a possible future in which both coexist.

What is Human Intelligence?

Human intelligence refers to the ability to think, learn, adapt, and make decisions based on emotions, logic, and experience. It is shaped by:

Cognitive Abilities: Problem-solving, creativity, critical thinking

Emotional Intelligence: Understanding and managing emotions

Adaptability: Learning from past experiences and adjusting to new situations

Consciousness & Self-Awareness: Understanding oneself and the impact of actions on others

Humans have common sense, emotions, and moral values, which help them make decisions in unpredictable environments.

What is Artificial Intelligence (AI)?

Artificial Intelligence (AI) is the simulation of human intelligence by machines. AI can process massive amounts of data and make certain decisions much faster than humans. AI is commonly grouped into three types:

Narrow AI (Weak AI): AI specialized in specific tasks (e.g., voice assistants like Siri, Alexa)

General AI (Strong AI): AI with human-like reasoning and adaptability (not yet achieved)

Super AI: Hypothetical AI that surpasses human intelligence in every aspect

AI systems use algorithms, machine learning (ML), deep learning (DL), and neural networks to process information and improve as they are trained on more data.

Strengths & Weaknesses of Human Intelligence

Strengths of Humans

Creativity & Imagination: Humans can create original art, music, inventions, and solutions.

Emotional Understanding: Humans can relate to others through emotions, empathy, and social skills.

Problem-Solving: Humans can solve problems in unpredictable and unfamiliar environments.

Ethical Reasoning: Humans can make moral decisions based on personal beliefs and societal values.

Adaptability: Humans can learn from experience and change their approach dynamically.

Weaknesses of Humans

Limited Processing Power: Humans take time to analyze large amounts of data.

Subjective Thinking: Emotions can sometimes cloud judgment.

Fatigue & Errors: Humans get tired and make mistakes.

Memory Limitations: Humans forget information over time.

Strengths & Weaknesses of AI

Strengths of AI

Fast Data Processing: AI can analyze vast datasets far faster than a person can.

Accuracy & Precision: Given good data, AI can reduce errors in calculations and predictions.

Automation: AI can perform repetitive tasks efficiently.

No Fatigue: AI doesn’t get tired and works 24/7.

Pattern Recognition: AI can detect trends and anomalies in large datasets that humans might miss.

Weaknesses of AI

Lack of Creativity: AI cannot create something truly original.

No Emotions or Common Sense: AI cannot understand human feelings.

Dependency on Data: AI needs large datasets to function effectively.

Security & Ethical Risks: AI can be hacked or misused for harmful purposes.

Job Displacement: AI automation can replace human jobs.

How Is AI Impacting Human Jobs?

AI is automating many industries, raising concerns about job security. Some professions being replaced or transformed by AI include:

Jobs AI is Replacing

Manufacturing: Robots handle repetitive production tasks.

Retail & Customer Service: AI chatbots assist customers.

Transportation: Self-driving cars and delivery drones.

Jobs AI Cannot Replace

Creative Professions: Artists, writers, filmmakers.

Healthcare & Therapy: Doctors, nurses, psychologists.

Leadership & Management: Decision-making roles that require intuition.

The future will require reskilling and upskilling for workers to adapt to AI-driven jobs.

Can AI Surpass Human Intelligence?

Currently, AI lacks self-awareness, emotions, and real-world adaptability. However, continued advances in neural networks and computing power, possibly including quantum computing, may bring AI closer to human-like intelligence.

Some experts believe AI could reach Artificial General Intelligence (AGI), where it can think and learn like a human. However, whether AI will truly replace humans is still debatable.

Future of AI & Human Collaboration

The future is not about AI replacing humans but about AI and humans working together. Possible future scenarios include:

AI-Augmented Workforce: AI assists humans in jobs, increasing efficiency.

Brain-Computer Interfaces (BCI): AI could merge with human intelligence for enhanced cognition.

AI in Healthcare: AI helping doctors diagnose diseases more accurately.

Ethical AI Regulations: Governments enforcing AI laws to prevent misuse.

Rather than competing, humans and AI should collaborate to create a better future.

Conclusion

The battle between Human Intelligence vs AI is not about one replacing the other but about how they can complement each other. While AI excels in speed, accuracy, and automation, human intelligence remains unmatched in creativity, emotions, and moral judgment.

The future will not be AI vs Humans, but rather AI & Humans working together for a better society. By understanding AI’s capabilities and limitations, we can prepare for an AI-powered world while preserving what makes us uniquely human.


Is not every character in DL a "brain clone"?

DSoD Kaiba and DSoD Mokuba are (almost) definitely real. Most of the other DSoD characters have a pretty strong chance of being real. I don't remember if we have any dialogue that strongly implies one way or the other, but they're definitely the most likely to be real (as opposed to the DM versions of those characters, which are definitely fake).

Also love the quotes around "brain clone." I'm not going to say "artificially intelligent memory-based reconstructions of their personalities that Kaiba made because he's an absolute fucking madman" every time. We know what they are. We're having fun with words here.


Ocean of Memories, Take You to Eternity / Hatsune Miku (Vocaloid) 【Electro Rock, Miku 16th Birthday】

---YouTube


---Subscription service links (nodee)

【About this song】

This song was composed for Hatsune Miku's 16th birthday in 2023, for her 16 years of memories and her next 16 years of soaring...

【Lyrics】

Ocean of memories, flood with emotion for long time accumulated dreamy and precious phrases

Once you gladly said "here comes a future" No, universe are growing wider, I'll take you to eternity

(Welcome to the eternal universe…)

16 years ago, It's my birth year I have started walking

Before craze of DL(Deep Learning) I was born So less real songs compared to AI(Artificial Intelligence) Only you can make precious phrases, so not the matter

You'll take me to the eternal Universe like Ihatove

(…and last countdown 5, 4, 3, 2, 1, Relaunch from 0 to the next 16th birthday)



Top AI Frameworks in 2025: How to Choose the Best Fit for Your Project

AI Development: Your Strategic Framework Partner

1. Introduction: Navigating the AI Framework Landscape

The world of artificial intelligence is evolving at breakneck speed. What was cutting-edge last year is now foundational, and new advancements emerge almost daily. This relentless pace means the tools we use to build AI — the AI frameworks — are also constantly innovating. For software developers, AI/ML engineers, tech leads, CTOs, and business decision-makers, understanding this landscape is paramount.

The Ever-Evolving World of AI Development

From sophisticated large language models (LLMs) driving new generative capabilities to intricate computer vision systems powering autonomous vehicles, AI applications are becoming more complex and pervasive across every industry. Developers and businesses alike are grappling with how to harness this power effectively, facing challenges in scalability, efficiency, and ethical deployment. At the heart of building these intelligent systems lies the critical choice of the right AI framework.

Why Choosing the Right Framework Matters More Than Ever

In 2025, selecting an AI framework isn't just a technical decision; it's a strategic one that can profoundly impact your project's trajectory. The right framework can accelerate development cycles, optimize model performance, streamline deployment processes, and ultimately ensure your project's success and ROI. Conversely, a poor or ill-suited choice can lead to significant bottlenecks, increased development costs, limited scalability, and missed market opportunities. Understanding the current landscape of AI tools and meticulously aligning your choice with your specific project needs is crucial for thriving in the competitive world of AI development.

2. Understanding AI Frameworks: The Foundation of Intelligent Systems

Before we dive into the top contenders of AI frameworks in 2025, let's clarify what an AI framework actually is and why it's so fundamental to building intelligent applications.

What Exactly is an AI Framework?

An AI framework is essentially a comprehensive library or platform that provides a structured set of pre-built tools, libraries, and functions. Its primary purpose is to make developing machine learning (ML) and deep learning (DL) models easier, faster, and more efficient. Think of it as a specialized, high-level toolkit for AI development. Instead of coding every complex mathematical operation, algorithm, or neural network layer from scratch, developers use these frameworks to perform intricate tasks with just a few lines of code, focusing more on model architecture and data.

Key Components and Core Functions

Most AI frameworks come equipped with several core components that underpin their functionality:

Automatic Differentiation: This is a fundamental capability, particularly critical for training deep learning models. It efficiently computes the gradients of the training loss with respect to every model parameter, and those gradients are what neural networks use to learn from data.

Optimizers: These are algorithms that adjust model parameters (weights and biases) during training to minimize errors and improve model performance. Common examples include Adam, SGD, and RMSprop.

Neural Network Layers: Frameworks provide ready-to-use building blocks (e.g., convolutional layers for image processing, recurrent layers for sequential data, and dense layers) that can be easily stacked and configured to create complex neural network architectures.

Data Preprocessing Tools: Utilities within frameworks simplify the often complex tasks of data cleaning, transformation, augmentation, and loading, ensuring data is in the right format for model training.

Model Building APIs: High-level interfaces allow developers to define, train, evaluate, and save their models with relatively simple and intuitive code.

GPU/TPU Support: Crucially, most modern AI frameworks are optimized to leverage specialized hardware like Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) for parallel computation, dramatically accelerating the computationally intensive process of deep learning model training.
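The first two components above, automatic differentiation and optimizers, can be illustrated without any framework. Below is a deliberately tiny, pure-Python sketch of reverse-mode automatic differentiation (the hypothetical `Value` class records each operation as it runs, then applies the chain rule backwards) combined with plain gradient descent to fit y = 2x + 1. It is a toy for intuition only; TensorFlow, PyTorch, and JAX implement the same ideas over tensors, with far more operations and GPU/TPU support. This record-as-you-go style is also what the PyTorch section calls "define-by-run".

```python
class Value:
    """A scalar that records how it was computed, so gradients can flow back."""

    def __init__(self, data, parents=(), backward=lambda: None):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = backward

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad
        out._backward = backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward = backward
        return out

    def __sub__(self, other):
        return self + (other * -1)

    def backward(self):
        # Topologically order the recorded graph, then apply the chain rule in reverse.
        order, seen = [], set()
        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()


data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = Value(0.0), Value(0.0)
learning_rate = 0.05

for step in range(500):
    # Forward pass: mean squared error, recorded operation by operation.
    loss = Value(0.0)
    for x, y in data:
        err = w * x + b - y
        loss = loss + err * err
    loss = loss * (1.0 / len(data))

    # Backward pass: reset parameter gradients, then let autodiff fill them in.
    w.grad, b.grad = 0.0, 0.0
    loss.backward()

    # Optimizer step: plain full-batch gradient descent.
    w.data -= learning_rate * w.grad
    b.data -= learning_rate * b.grad

print(round(w.data, 3), round(b.data, 3))  # close to 2.0 and 1.0
```

Swapping the last two update lines for a rule such as Adam changes only the optimizer; the gradients still come from the same autodiff pass.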

The Role of Frameworks in Streamlining AI Development

AI frameworks play a pivotal role in streamlining the entire AI development process. They standardize workflows, abstract away low-level programming complexities, and provide a collaborative environment for teams. Specifically, they offer:

Faster Prototyping: Quickly test and refine ideas by assembling models from pre-built components, accelerating the experimentation phase.

Higher Efficiency: Significantly reduce development time and effort by reusing optimized, built-in tools and functions rather than recreating them.

Scalability: Build robust models that can effectively handle vast datasets and scale efficiently for deployment in production environments.

Team Collaboration: Provide a common language, set of tools, and established best practices that streamline teamwork and facilitate easier project handover.

3. The Leading AI Frameworks in 2025: A Deep Dive

The AI development landscape is dynamic, with continuous innovation. However, several AI frameworks have solidified their positions as industry leaders by 2025, each possessing unique strengths and catering to specific ideal use cases.

TensorFlow: Google's Enduring Giant

TensorFlow, developed by Google, remains one of the most widely adopted deep learning frameworks, especially in large-scale production environments.

Key Features & Strengths:

Comprehensive Ecosystem: Boasts an extensive ecosystem, including TensorFlow Lite (for mobile and edge devices), TensorFlow.js (for web-based ML), and TensorFlow Extended (TFX) for end-to-end MLOps pipelines.

Scalable & Production-Ready: Designed from the ground up for massive computational graphs and robust deployment in enterprise-level solutions.

Great Visuals: TensorBoard offers powerful visualization tools for monitoring training metrics, debugging models, and understanding network architectures.

Versatile: Highly adaptable for a wide range of ML tasks, from academic research to complex, real-world production applications.

Ideal Use Cases: Large-scale enterprise AI solutions, complex research projects requiring fine-grained control, production deployment of deep learning models, mobile and web AI applications, and MLOps pipeline automation.

PyTorch: The Research & Flexibility Champion

PyTorch, developed by Meta (formerly Facebook) AI Research, has become the preferred choice in many academic and research communities, rapidly gaining ground in production.

Key Features & Strengths:

Flexible Debugging: Its dynamic computation graph (known as "define-by-run") makes debugging significantly easier and accelerates experimentation.

Python-Friendly: Its deep integration with the Python ecosystem and intuitive API makes it feel natural and accessible to Python developers, contributing to a smoother learning curve for many.

Research-Focused: Widely adopted in academia and research for its flexibility, allowing for rapid prototyping of novel architectures and algorithms.

Production-Ready: Has significantly matured in production capabilities with tools like PyTorch Lightning for streamlined training and TorchServe for model deployment.

Ideal Use Cases: Rapid prototyping, advanced AI research, projects requiring highly customized models and complex neural network architectures, and startups focused on quick iteration and experimentation.

JAX: Google's High-Performance Differentiable Programming

JAX, also from Google, is gaining substantial traction for its powerful automatic differentiation and high-performance numerical computation capabilities, particularly in cutting-edge research.

Key Features & Strengths:

Advanced Autodiff: Offers powerful and flexible automatic differentiation of functions over scalars, vectors, and matrices, including higher-order derivatives.

XLA Optimized: Leverages Google's Accelerated Linear Algebra (XLA) compiler for extreme performance optimization and efficient execution on GPUs and TPUs.

Composable Functions: Enables easy composition of functional transformations like grad (for gradients), jit (for just-in-time compilation), and vmap (for automatic vectorization) to create highly optimized and complex computations.

Research-Centric: Increasingly popular in advanced AI research for exploring novel AI architectures and training massive models.

Ideal Use Cases: Advanced AI research, developing custom optimizers and complex loss functions, high-performance computing, exploring novel AI architectures, and training deep learning models on TPUs where maximum performance is critical.
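JAX's real transformations are `jax.grad`, `jax.jit`, and `jax.vmap`. To show what "composable" means without requiring JAX to be installed, here is a pure-Python toy in which two hypothetical transformations, `derivative` (forward-mode differentiation with dual numbers) and `batch_map` (apply a function over a list), are stacked the way one might write `jax.vmap(jax.grad(f))`. It is only an analogy: `jax.grad` uses reverse mode, and nothing here compiles code the way `jit` does.

```python
class Dual:
    """A number value + deriv * eps with eps**2 == 0; deriv carries the derivative."""

    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (a + a'eps)(b + b'eps) = ab + (ab' + a'b)eps
        return Dual(self.value * other.value,
                    self.value * other.deriv + self.deriv * other.value)

    __rmul__ = __mul__


def derivative(f):
    """Hypothetical transform: returns a function computing df/dx."""
    def df(x):
        return f(Dual(x, 1.0)).deriv
    return df


def batch_map(f):
    """Hypothetical transform: returns a function applying f to each list element."""
    def batched(xs):
        return [f(x) for x in xs]
    return batched


def f(x):
    return 3 * x * x + 2 * x + 1  # f'(x) = 6x + 2


# Transformations compose: differentiate first, then map over a batch.
df_batched = batch_map(derivative(f))
print(df_batched([0.0, 1.0, 2.0]))  # [2.0, 8.0, 14.0]
```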

Keras (with TensorFlow/JAX/PyTorch backend): Simplicity Meets Power

Keras is a high-level API designed for fast experimentation with deep neural networks. Its strength lies in its user-friendliness and ability to act as an interface for other powerful deep learning frameworks.

Key Features & Strengths:

Beginner-Friendly: Offers a simple, intuitive, high-level API, making it an excellent entry point for newcomers to deep learning.

Backend Flexibility: Can run seamlessly on top of TensorFlow, JAX, or PyTorch, allowing developers to leverage the strengths of underlying frameworks while maintaining Keras's ease of use.

Fast Prototyping: Its straightforward design is ideal for quickly building, training, and testing models.

Easy Experimentation: Its intuitive design supports rapid development cycles and iterative model refinement.

Ideal Use Cases: Quick model building and iteration, educational purposes, projects where rapid prototyping is a priority, and developers who prefer a high-level abstraction to focus on model design rather than low-level implementation details.

Hugging Face Transformers (Ecosystem, not just a framework): The NLP Powerhouse

While not a standalone deep learning framework itself, the Hugging Face Transformers library, along with its broader ecosystem (Datasets, Accelerate, etc.), has become indispensable for Natural Language Processing (NLP) and Large Language Model (LLM) AI development.

Key Features & Strengths:

Huge Library of Pre-trained Models: Offers an enormous collection of state-of-the-art pre-trained models for NLP, computer vision (CV), and audio tasks, making it easy to leverage cutting-edge research.

Unified, Framework-Agnostic API: Provides a consistent interface for using various models, compatible with TensorFlow, PyTorch, and JAX.

Strong Community & Documentation: A vibrant community and extensive, clear documentation make it exceptionally easy to get started and find solutions for complex problems.

Ideal Use Cases: Developing applications involving NLP tasks (text generation, sentiment analysis, translation, summarization), fine-tuning and deploying custom LLM applications, or leveraging pre-trained models for various AI tasks with minimal effort.

Scikit-learn: The Machine Learning Workhorse

Scikit-learn is a foundational machine learning framework for traditional ML algorithms, distinct from deep learning but critical for many data science applications.

Key Features & Strengths:

Extensive Classic ML Algorithms: Offers a wide array of battle-tested traditional machine learning algorithms for classification, regression, clustering, dimensionality reduction, model selection, and preprocessing.

Simple API, Strong Python Integration: Known for its user-friendly, consistent API and seamless integration with Python's scientific computing stack (NumPy, SciPy, Matplotlib).

Excellent Documentation: Provides comprehensive and easy-to-understand documentation with numerous examples.

Ideal Use Cases: Traditional machine learning tasks, data mining, predictive analytics on tabular data, feature engineering, statistical modeling, and projects where deep learning is not required or feasible.
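Much of scikit-learn's consistency comes from its estimator convention: create an object, call `fit(X, y)` to learn from data, then call `predict(X)`. The sketch below imitates that convention in plain Python with a hypothetical `NearestCentroidSketch` class (scikit-learn ships a real `NearestCentroid` classifier in `sklearn.neighbors`; this is not its code). Learned attributes end in an underscore and `fit` returns `self`, mirroring the library's style.

```python
class NearestCentroidSketch:
    """Hypothetical classifier following scikit-learn's fit/predict convention."""

    def fit(self, X, y):
        sums, counts = {}, {}
        for row, label in zip(X, y):
            if label not in sums:
                sums[label] = [0.0] * len(row)
                counts[label] = 0
            sums[label] = [s + v for s, v in zip(sums[label], row)]
            counts[label] += 1
        # Learned state uses a trailing underscore, as scikit-learn does.
        self.centroids_ = {
            label: [s / counts[label] for s in sums[label]] for label in sums
        }
        return self  # returning self allows model.fit(X, y).predict(X_new)

    def predict(self, X):
        def sq_dist(a, b):
            return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
        # Assign each row to the label whose centroid is closest.
        return [
            min(self.centroids_, key=lambda label: sq_dist(row, self.centroids_[label]))
            for row in X
        ]


X_train = [[1.0, 1.0], [1.5, 2.0], [8.0, 8.0], [9.0, 8.5]]
y_train = ["small", "small", "large", "large"]

model = NearestCentroidSketch().fit(X_train, y_train)
print(model.predict([[2.0, 1.0], [7.5, 9.0]]))  # ['small', 'large']
```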

4. Beyond the Hype: Critical Factors for Choosing Your AI Framework

Choosing the "best" AI framework isn't about picking the most popular one; it's about selecting the right fit for your AI project. Here are the critical factors that CTOs, tech leads, and developers must consider to make an informed decision:

Project Requirements & Scope

Type of AI Task: Different frameworks excel in specific domains. Are you working on Computer Vision (CV), Natural Language Processing (NLP), Time Series analysis, reinforcement learning, or traditional tabular data?

Deployment Scale: Where will your model run? On a small edge device, a mobile phone, a web server, or a massive enterprise cloud infrastructure? The framework's support for various deployment targets is crucial.

Performance Needs: Does your application demand ultra-low latency, high throughput (processing many requests quickly), or efficient memory usage? Benchmarking and framework optimization capabilities become paramount.
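For the performance questions above, a quick latency and throughput measurement on your own workload is often more informative than published benchmarks. The harness below is a minimal sketch using only the Python standard library; `toy_predict` is a hypothetical stand-in for your model's inference call, and a real benchmark should use representative inputs, batch sizes, and hardware.

```python
import statistics
import time


def benchmark(predict, inputs, warmup=10):
    """Measure per-call latency (ms) and overall throughput of a predict function."""
    for x in inputs[:warmup]:
        predict(x)  # warm-up calls are not timed (caches, lazy initialization)
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        predict(x)
        latencies.append((time.perf_counter() - t0) * 1000.0)
    elapsed = time.perf_counter() - start
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "throughput_per_s": len(inputs) / elapsed,
    }


def toy_predict(x):
    # Hypothetical stand-in for a real model's inference call.
    return sum(i * x for i in range(1000))


report = benchmark(toy_predict, list(range(500)))
print(report)
```

Tail latency (p95) matters for interactive applications, while throughput matters more for batch workloads; the same harness reports both.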

Community & Ecosystem Support

Documentation and Tutorials: Are there clear, comprehensive guides, tutorials, and examples available to help your team get started and troubleshoot issues?

Active Developer Community & Forums: A strong, vibrant community means more shared knowledge, faster problem-solving, and continuous improvement of the framework.

Available Pre-trained Models & Libraries: Access to pre-trained models (like those from Hugging Face) and readily available libraries for common tasks can drastically accelerate development time.

Learning Curve & Team Expertise

Onboarding: How easily can new team members learn the framework's intricacies and become productive contributors to the AI development effort?

Existing Skills: Does the framework align well with your team's current expertise in Python, specific mathematical concepts, or other relevant technologies? Leveraging existing knowledge can boost efficiency.

Flexibility & Customization

Ease of Debugging and Experimentation: A flexible framework allows for easier iteration, understanding of model behavior, and efficient debugging, which is crucial for research and complex AI projects.

Support for Custom Layers and Models: Can you easily define and integrate custom neural network layers or entirely new model architectures if your AI project requires something unique or cutting-edge?

Integration Capabilities

Compatibility with Existing Tech Stack: How well does the framework integrate with your current programming languages, databases, cloud providers, and existing software infrastructure? Seamless integration saves development time.

Deployment Options: Does the framework offer clear and efficient pathways for deploying your trained models to different environments (e.g., mobile apps, web services, cloud APIs, IoT devices)?

Hardware Compatibility

GPU/TPU Support and Optimization: For deep learning frameworks, efficient utilization of specialized hardware like GPUs and TPUs is paramount for reducing training time and cost. Ensure the framework offers robust and optimized support for the hardware you plan to use.

Licensing and Commercial Use Considerations

Open-source vs. Proprietary Licenses: Most leading AI frameworks are open source under permissive licenses (typically Apache 2.0 or BSD-style), offering flexibility. However, always review the specific license to ensure it aligns with your commercial use case and intellectual property requirements.

5. Real-World Scenarios: Picking the Right Tool for the Job

Let's look at a few common AI project scenarios and which AI frameworks might be the ideal fit, considering the factors above:

Scenario 1: Rapid Prototyping & Academic Research

Best Fit: PyTorch, Keras (with any backend), or JAX. Their dynamic graphs (PyTorch) and high-level APIs (Keras) allow for quick iteration, experimentation, and easier debugging, which are crucial in research settings. JAX is gaining ground here for its power and flexibility in exploring novel architectures.

Scenario 2: Large-Scale Enterprise Deployment & Production

Best Fit: TensorFlow or PyTorch (with production tools like TorchServe/Lightning). TensorFlow's robust ecosystem (TFX, SavedModel format) and emphasis on scalability make it a strong contender. PyTorch's production readiness has also significantly matured, making it a viable choice for large-scale AI development and deployment.

Scenario 3: Developing a Custom NLP/LLM Application

Best Fit: Hugging Face Transformers (running on top of PyTorch or TensorFlow). This ecosystem provides the fastest way to leverage and fine-tune state-of-the-art large language models (LLMs), significantly reducing AI development time and effort. Its vast collection of pre-trained models is a game-changer for AI tools in NLP.

Scenario 4: Building Traditional Machine Learning Models

Best Fit: Scikit-learn. For tasks like classification, regression, clustering, and data preprocessing on tabular data, Scikit-learn remains the industry standard. Its simplicity, efficiency, and comprehensive algorithm library make it the go-to machine learning framework for non-deep learning applications.

6. Conclusion: The Strategic Imperative of Informed Choice

In 2025, the proliferation of AI frameworks offers incredible power and flexibility to organizations looking to implement AI solutions. However, it also presents a significant strategic challenge. The dynamic nature of these AI tools means continuous learning and adaptation are essential for developers and businesses alike to stay ahead in the rapidly evolving AI development landscape.

Investing in the right AI framework is about more than just following current 2025 AI trends; it's about laying a solid foundation for your future success in the AI-driven world. An informed choice minimizes technical debt, maximizes developer productivity, and ultimately ensures your AI projects deliver tangible business value and a competitive edge.

Navigating this complex landscape, understanding the nuances of each deep learning framework, and selecting the optimal AI framework for your unique requirements can be daunting. If you're looking to leverage AI to revolutionize your projects, optimize your AI development process, or need expert guidance in selecting and implementing the best AI tools, consider partnering with an experienced AI software development company. We can help you build intelligent solutions tailored to your specific needs, ensuring you pick the perfect fit for your AI project and thrive in the future of AI.

AI with ML & DL Training for Shaping Artificial Intelligence

Discover how training in AI, Machine Learning and Deep Learning at The Data Tech Labs can unlock new opportunities and drive innovation.

The Ultimate AI Glossary: Artificial Intelligence Definitions to Know

Artificial Intelligence (AI) is transforming every industry, revolutionizing how we work, live, and interact with the world. But with its rapid evolution comes a flurry of specialized terms and concepts that can feel like learning a new language. Whether you're a budding data scientist, a business leader, or simply curious about the future, understanding the core vocabulary of AI is essential.

Consider this your ultimate guide to the most important AI definitions you need to know.

Core Concepts & Foundational Terms

Artificial Intelligence (AI): The overarching field dedicated to creating machines that can perform tasks that typically require human intelligence, such as learning, problem-solving, decision-making, perception, and understanding language.

Machine Learning (ML): A subset of AI that enables systems to learn from data without being explicitly programmed. Instead of following static instructions, ML algorithms build models based on sample data, called "training data," to make predictions or decisions.

Deep Learning (DL): A subset of Machine Learning that uses Artificial Neural Networks with multiple layers (hence "deep") to learn complex patterns from large amounts of data. It's particularly effective for tasks like image recognition, natural language processing, and speech recognition.

Neural Network (NN): A computational model inspired by the structure and function of the human brain. It consists of interconnected "neurons" (nodes) organized in layers, which process and transmit information.
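To make the "interconnected neurons organized in layers" idea concrete, here is a minimal Python sketch of a forward pass. It assumes the common textbook setup in which each neuron computes a weighted sum of its inputs plus a bias and passes the result through a sigmoid activation. The network shape and weights are hypothetical, hand-picked values rather than trained ones.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """One fully connected layer.

    weights[j] holds the incoming weights of neuron j; each neuron computes a
    weighted sum of all inputs plus its bias, then applies the activation.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the inputs through each (weights, biases) layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical 2-input -> 3-hidden -> 1-output network with hand-picked weights.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                  # output layer
]

print(forward([1.0, 2.0], network))  # a single value between 0 and 1
```

Training a network means adjusting these weights and biases from data; the forward pass itself stays the same.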

Algorithm: A set of rules or instructions that a computer follows to solve a problem or complete a task. In AI, algorithms are the recipes that define how a model learns and makes predictions.

Model: The output of a machine learning algorithm after it has been trained on data. The model encapsulates the patterns and rules learned from the data, which can then be used to make predictions on new, unseen data.

Training Data: The dataset used to "teach" a machine learning model. It contains input examples along with their corresponding correct outputs (in supervised learning).

Inference: The process of using a trained AI model to make predictions or decisions on new, unseen data. This is when the model applies what it has learned.
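The terms above (algorithm, model, training data, inference) fit together as in the sketch below, which assumes the simplest possible case: one numeric input x and an output y related by a straight line. Here the algorithm is ordinary least squares, the model is just the two learned numbers, and inference is applying them to a new x. The data points are illustrative, not real.

```python
def train_linear_model(xs, ys):
    """Algorithm: fit y = slope * x + intercept by ordinary least squares (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    # The trained "model" is nothing more than the learned parameters.
    return {"slope": slope, "intercept": intercept}

def predict(model, x):
    """Inference: apply the learned parameters to a new, unseen input."""
    return model["slope"] * x + model["intercept"]

# Illustrative training data: inputs (e.g. a house size) paired with correct outputs (e.g. a price).
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [3.0, 5.0, 7.0, 9.0]

model = train_linear_model(train_x, train_y)
print(model)                # {'slope': 2.0, 'intercept': 1.0}
print(predict(model, 10.0)) # 21.0
```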

Types of Learning

Supervised Learning: A type of ML where the model learns from labeled training data (input-output pairs). The goal is to predict the output for new inputs.

Examples: Regression (predicting a continuous value like house price), Classification (predicting a category like "spam" or "not spam").
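For classification, one simple supervised method is k-nearest neighbours, sketched below as an illustration. It assumes each email has already been reduced to two hypothetical numeric features (number of links, and occurrences of the word "free"). The labeled examples play the role of training data, and a new email gets the majority label among its k closest labeled neighbours.

```python
import math
from collections import Counter

def knn_classify(training_data, query, k=3):
    """Predict a label for `query` by majority vote of its k nearest labeled examples."""
    # Sort labeled examples by Euclidean distance to the query point.
    neighbours = sorted(training_data, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in neighbours[:k])
    return votes.most_common(1)[0][0]

# Hypothetical labeled emails: (number of links, count of "free") -> label
training_data = [
    ((0, 0), "not spam"),
    ((1, 0), "not spam"),
    ((0, 1), "not spam"),
    ((8, 5), "spam"),
    ((9, 7), "spam"),
    ((7, 6), "spam"),
]

print(knn_classify(training_data, (8, 6)))  # spam
print(knn_classify(training_data, (1, 1)))  # not spam
```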

Unsupervised Learning: A type of ML where the model learns from unlabeled data, finding patterns or structures without explicit guidance.

Examples: Clustering (grouping similar data points), Dimensionality Reduction (simplifying data by reducing variables).
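Clustering can be illustrated with k-means, one common unsupervised algorithm (not the only one). The sketch assumes 2-D points and fixed starting centroids so the result is reproducible; practical implementations usually pick starting points randomly or with k-means++. No labels are supplied: the algorithm finds the two groups on its own.

```python
import math

def k_means(points, initial_centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and recomputing centroids."""
    centroids = list(initial_centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its points (keep it if the cluster is empty).
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

# Unlabeled, illustrative data with two obvious groups.
points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (9, 8.5), (8.5, 9)]
centroids, clusters = k_means(points, initial_centroids=[points[0], points[3]])
print(centroids)  # roughly (1.17, 1.5) and (8.5, 8.5)
print(clusters)
```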

Reinforcement Learning (RL): A type of ML where an "agent" learns to make decisions by interacting with an environment, receiving "rewards" for desired actions and "penalties" for undesirable ones. It learns through trial and error.

Examples: Training game-playing AI (AlphaGo), robotics, autonomous navigation.
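The trial-and-error loop can be sketched with tabular Q-learning, one classic RL algorithm. The environment is a hypothetical five-cell corridor: the agent starts in cell 0, earns a reward of +1 for reaching cell 4, and pays a small penalty of -0.01 for every other step. The learning rate, discount factor, and exploration rate are illustrative choices, not recommended values.

```python
import random

N_STATES = 5          # cells 0..4 along a corridor; cell 4 is the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Environment: move, stay inside the corridor, return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True    # reward for reaching the goal
    return next_state, -0.01, False     # small penalty for every other step

def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore: try a random action
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                # exploit: pick a best-known action, breaking ties randomly
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state, steps = next_state, steps + 1
    return q

def greedy_policy(q):
    """For each non-goal cell, the action with the highest learned value."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}

q_table = train_q_learning()
print(greedy_policy(q_table))  # {0: 1, 1: 1, 2: 1, 3: 1}
```

After training, the greedy policy moves right (+1) in every non-goal cell, which is the shortest path to the reward.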

Key Concepts in Model Building & Performance

Features: The individual measurable properties or characteristics of a phenomenon being observed. These are the input variables used by a model to make predictions.

Target (or Label): The output variable that a machine learning model is trying to predict in supervised learning.

Overfitting: When a model learns the training data too well, including its noise and outliers, leading to poor performance on new, unseen data. The model essentially memorizes the training data rather than generalizing patterns.

Underfitting: When a model is too simple to capture the underlying patterns in the training data, resulting in poor performance on both training and new data.

Bias-Variance Trade-off: A core concept in ML that describes the tension between two sources of error in a model:

Bias: Error from erroneous or overly simple assumptions in the learning algorithm; high bias leads to underfitting.

Variance: Error from sensitivity to small fluctuations in the training data; high variance leads to overfitting.

Optimizing a model often involves finding the right balance between the two.
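The sketch below illustrates underfitting, overfitting, and the trade-off between them on synthetic data, assuming the true relationship is y = 2x + 1 plus random noise. It compares a constant model (high bias), a model that memorizes the training set (high variance), and a least-squares straight line, measuring error on the training data and on fresh test data. Exact numbers depend on the random seed.

```python
import random

def mse(predictions, targets):
    """Mean squared error between predictions and true targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def make_data(n, rng):
    """Hypothetical noisy samples from the true relationship y = 2x + 1."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + 1 + rng.gauss(0, 1) for x in xs]
    return xs, ys

def fit_models(train_x, train_y):
    """Return three models of very different complexity, fitted to the same data."""
    n = len(train_x)
    mean_x, mean_y = sum(train_x) / n, sum(train_y) / n

    def underfit(x):
        # Too simple (high bias): ignores x and always predicts the training mean.
        return mean_y

    def overfit(x):
        # Too flexible (high variance): returns the target of the closest training
        # point, so it reproduces the training noise exactly (1-nearest neighbour).
        nearest = min(range(n), key=lambda i: abs(train_x[i] - x))
        return train_y[nearest]

    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(train_x, train_y))
             / sum((x - mean_x) ** 2 for x in train_x))
    intercept = mean_y - slope * mean_x

    def balanced(x):
        # Matches the true (linear) structure: a least-squares straight line.
        return slope * x + intercept

    return {"underfit": underfit, "overfit": overfit, "balanced": balanced}

def evaluate(seed=42):
    rng = random.Random(seed)
    train_x, train_y = make_data(30, rng)
    test_x, test_y = make_data(30, rng)  # fresh, unseen data
    results = {}
    for name, model in fit_models(train_x, train_y).items():
        results[name] = (mse([model(x) for x in train_x], train_y),
                         mse([model(x) for x in test_x], test_y))
    return results

for name, (train_err, test_err) in evaluate().items():
    print(f"{name:9s} train MSE = {train_err:6.2f}   test MSE = {test_err:6.2f}")
```

The memorizing model scores a training MSE of exactly zero yet a clearly nonzero test MSE, while the constant model does poorly on both; the straight line sits in between on training error and does best on unseen data.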

Hyperparameters: Configuration variables external to the model that are set before the training process begins (e.g., learning rate, number of layers in a neural network). They control the learning process itself.
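The difference between parameters and hyperparameters shows up clearly in plain gradient descent. In this minimal sketch, assuming a one-weight model y = w * x trained on mean squared error, the weight w is learned from data, while the learning rate and the number of epochs are hyperparameters fixed before training begins.

```python
def train(xs, ys, learning_rate, epochs):
    """Fit y ≈ w * x by gradient descent on mean squared error.

    `w` is a parameter (learned from data); `learning_rate` and `epochs`
    are hyperparameters (chosen before training starts).
    """
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of MSE = (1/n) * sum((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w

xs, ys = [1.0, 2.0, 3.0], [3.0, 6.0, 9.0]  # illustrative data where y = 3x
for lr in (0.01, 0.1, 0.3):
    print(f"learning_rate={lr}: w = {train(xs, ys, lr, epochs=50):.4g}")
```

With this data, 0.01 is still short of the true value 3 after 50 epochs, 0.1 converges, and 0.3 overshoots further on every step and diverges, which is why the learning rate is usually tuned rather than guessed.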

Metrics: Quantitative measures used to evaluate the performance of an AI model (e.g., accuracy, precision, recall, F1-score for classification; Mean Squared Error, R-squared for regression).
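The metrics listed above can be computed by hand, as in this sketch; libraries such as scikit-learn provide tested implementations for real projects. It assumes a binary classifier whose positive class is labeled 1, and the example labels and predictions are made up.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def regression_metrics(y_true, y_pred):
    """Mean squared error and R-squared (coefficient of determination)."""
    n = len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    mean = sum(y_true) / n
    ss_tot = sum((t - mean) ** 2 for t in y_true)               # total sum of squares
    return {"mse": ss_res / n, "r2": 1 - ss_res / ss_tot}

print(classification_metrics([1, 0, 1, 1, 0, 1], [1, 0, 0, 1, 1, 1]))
print(regression_metrics([3, 5, 7, 9], [2.5, 5, 7.5, 9]))
```

In the classification example there are 3 true positives, 1 false positive and 1 false negative, so precision, recall and F1 all equal 0.75 while accuracy is 4/6. The regression example gives an MSE of 0.125 and an R-squared of 0.975.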

Advanced AI Techniques & Applications

Natural Language Processing (NLP): A field of AI that enables computers to understand, interpret, and generate human language.

Examples: Sentiment analysis, machine translation, chatbots.
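Sentiment analysis can be illustrated with a deliberately simple rule-based scorer. The word list and weights below are hypothetical, and the negation handling only flips the word immediately after "not", "never" or "no"; modern NLP systems learn such signals from data rather than relying on a hand-written lexicon.

```python
import re

# A tiny, hypothetical sentiment lexicon; real systems learn these weights from data.
LEXICON = {"great": 2, "good": 1, "love": 2, "bad": -1, "terrible": -2, "slow": -1}
NEGATIONS = {"not", "never", "no"}

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def sentiment(text):
    """Sum word scores, flipping the sign of the word right after a negation."""
    score, negate = 0, False
    for token in tokenize(text):
        if token in NEGATIONS:
            negate = True
            continue
        value = LEXICON.get(token, 0)
        score += -value if negate else value
        negate = False  # negation applies only to the next word
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I love this phone, the camera is great!"))  # positive
print(sentiment("The app is not good and very slow."))       # negative
print(sentiment("It arrived on Tuesday."))                   # neutral
```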

Computer Vision (CV): A field of AI that enables computers to "see" and interpret images and videos.

Examples: Object detection, facial recognition, image classification.
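Much of computer vision, including the convolutional layers inside deep networks, is built on sliding a small filter (kernel) over an image. The sketch below applies a hand-written Sobel-style kernel to a tiny synthetic grayscale image to highlight a vertical edge; in a trained network, the kernel values would be learned rather than written by hand.

```python
def convolve2d(image, kernel):
    """'Valid' 2-D convolution (strictly, cross-correlation, as most deep learning libraries compute)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            # Multiply the kernel with the image patch under it and sum the products.
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 5x5 synthetic grayscale image: dark (0) on the left, bright (9) on the right.
image = [[0, 0, 9, 9, 9] for _ in range(5)]

# Sobel-style kernel that responds to left-to-right brightness changes (vertical edges).
kernel = [[-1, 0, 1],
          [-2, 0, 2],
          [-1, 0, 1]]

for row in convolve2d(image, kernel):
    print(row)  # [36, 36, 0]
```

Each output row is [36, 36, 0]: the two positions whose window straddles the dark-to-bright boundary respond strongly, and the uniformly bright region gives zero.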

Generative AI: A type of AI that can create new content, such as text, images, audio, or video, that resembles real-world data.

Examples: Large Language Models (LLMs) like GPT, image generators like DALL-E.

Large Language Model (LLM): A type of deep learning model trained on vast amounts of text data, capable of understanding, generating, and processing human language with remarkable fluency and coherence.
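At its core, an LLM is trained to predict the next token given the tokens before it. The toy below shows only that idea, using word-pair (bigram) counts instead of a neural network; real LLMs use transformer networks over subword tokens and vastly more data. The corpus is a made-up example.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count how often each word follows each other word in the training text."""
    counts = defaultdict(Counter)
    words = corpus.lower().split()
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def generate(model, start, max_words=8):
    """Greedy decoding: repeatedly append the most frequent next word."""
    output = [start]
    for _ in range(max_words - 1):
        followers = model.get(output[-1])
        if not followers:
            break  # no known continuation for this word
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)

corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)
print(generate(model, "the", max_words=6))  # the cat sat on the cat
```

Greedy decoding here produces "the cat sat on the cat", a small demonstration of how always picking the single most likely next word can loop; real systems typically add sampling strategies.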

Robotics: The interdisciplinary field involving the design, construction, operation, and use of robots. AI often powers the "brains" of robots for perception, navigation, and decision-making.

Explainable AI (XAI): An emerging field that aims to make AI models more transparent and understandable to humans, addressing the "black box" problem of complex models.

Ethical AI / Responsible AI: The practice of developing and deploying AI systems in a way that is fair, unbiased, transparent, secure, and respectful of human values and privacy.

This glossary is just the beginning of your journey into the fascinating world of AI. As you delve deeper, you'll encounter many more specialized terms. However, mastering these foundational definitions will provide you with a robust framework to understand the current capabilities and future potential of artificial intelligence. Keep learning, keep exploring, and stay curious!

Your Step-by-Step Guide to Enrolling in an Artificial Intelligence Classroom Course in Bengaluru

If you're looking to launch or advance your career in artificial intelligence (AI), enrolling in an Artificial Intelligence Classroom Course in Bengaluru is one of the smartest decisions you can make. Bengaluru—India’s Silicon Valley—is home to tech giants, AI start-ups, and innovation hubs, making it the perfect place to gain practical, hands-on training in AI technologies.

This step-by-step guide will walk you through everything you need to know—from understanding the value of AI classroom courses to finding the best institutes, enrolling in the right program, and preparing for a rewarding career in AI.

Why Choose a Classroom-Based AI Course in Bengaluru?

Before diving into the enrollment process, it’s important to understand why classroom-based training holds value—especially in a tech-centric city like Bengaluru.

Benefits of Classroom AI Courses

Structured Learning: With scheduled sessions, real-time interaction, and mentorship, classroom training offers the discipline many learners need.

Hands-on Projects: Most AI classroom courses in Bengaluru include capstone projects and lab sessions that simulate real-world challenges.

Peer Interaction: Learning alongside fellow students and professionals fosters collaboration, networking, and teamwork.

Expert Faculty: Institutes in Bengaluru often hire AI practitioners and data scientists with industry experience.

Step 1: Understand the Scope of Artificial Intelligence

Before enrolling, it’s essential to know what AI encompasses so you can align your career goals with your course.

Core Concepts Covered in AI Classroom Courses:

Machine Learning & Deep Learning

Natural Language Processing (NLP)

Computer Vision

Neural Networks

Python for AI

AI in Business and Automation

Model Evaluation and Deployment

Bengaluru’s top institutions make sure their curriculum reflects industry-relevant skills so that students are job-ready from day one.

Step 2: Identify Your Goals and Skill Level

Ask yourself:

Are you a beginner with no coding experience?

Are you a working professional looking to upskill?

Are you aiming for a career shift into data science or AI research?

Depending on your profile, you’ll find different types of courses:

Foundation Courses for beginners

Advanced Certifications for experienced developers or analysts

Specialized Tracks such as Computer Vision, NLP, or AI Ethics

Knowing your goals helps you choose a program that is neither too basic nor too advanced for your current knowledge level.

Step 3: Shortlist the Best AI Classroom Courses in Bengaluru

Bengaluru is home to some of India’s top AI training institutes, offering industry-aligned classroom programs. When shortlisting, consider:

🔍 What to Look for:

Curriculum Depth: Make sure it includes ML, DL, and hands-on projects.

Faculty Experience: Are instructors AI professionals or researchers?

Placement Assistance: Does the institute offer job support or internships?

Certifications: Is the certificate recognized by employers?

⭐ Recommended Institute: Boston Institute of Analytics (BIA)

One of the most trusted names in AI education, the Boston Institute of Analytics offers a comprehensive Artificial Intelligence Classroom Course in Bengaluru designed for both beginners and working professionals. Here's why BIA stands out:

Industry-designed curriculum with modules on AI, ML, DL, NLP, and model deployment

Classroom sessions with expert faculty and mentoring

Live projects and case studies from real-world business use-cases

100% placement assistance with partner companies

International certification recognized across industries

Whether you're starting out or advancing your AI career, BIA provides the right platform in the heart of Bengaluru's tech landscape.

Step 4: Check the Eligibility and Admission Requirements

Each institute has its own set of eligibility criteria, but here’s what most courses require:

General Eligibility:

Bachelor’s degree (Engineering, IT, Science preferred)

Basic understanding of mathematics and statistics

Familiarity with programming (Python) is a plus but not always required

Some institutes offer foundation modules to help learners from non-technical backgrounds get up to speed.

Step 5: Attend a Free Demo Class or Webinar

Reputed institutes in Bengaluru often offer free demo classes, webinars, or counseling sessions to help students understand:

The course structure

Teaching methodology

Trainer qualifications

Career outcomes

This is a great way to evaluate the course quality before committing.

At BIA, you can request a free counseling session or attend a sample class to get a feel for the interactive classroom experience and the expert guidance offered.

Step 6: Evaluate Course Duration, Fees, and Flexibility

Typical Course Details:

Duration: 3 to 6 months (weekend or weekday options)

Fees: ₹60,000 to ₹1,50,000 depending on course depth and institute reputation