#docker disk space

Explore tagged Tumblr posts

Text

Docker Overlay2 Cleanup: 5 Ways to Reclaim Disk Space

Docker Overlay2 Cleanup: 5 Ways to Reclaim Disk Space #DockerOverlay2Cleanup #DiskSpaceManagement #DockerContainerStorage #OptimizeDockerPerformance #ReduceDockerDiskUsage #DockerSystemPrune #DockerImageCleanup #DockerVolumeManagement #virtualizationhowto

If you are running Docker containers on a Docker container host, you may have seen issues with disk space. Docker Overlay2 can become a disk space hog if not managed efficiently. This post examines six effective methods for Docker Overlay2 cleanup to reclaim space on your Docker host. Table of contentsDisk space issues on a Docker hostWhat is the Overlay file system?Filesystem layers…

View On WordPress

0 notes

Link

#Automation#cloud#configuration#Dashboard#energymonitoring#HomeAssistant#homesecurity#Install#Integration#IoT#Linux#MQTT#open-source#operatingsystem#RaspberryPi#self-hosted#sensors#smarthome#systemadministration#Z-Wave#Zigbee

0 notes

Text

Postal SMTP install and setup on a virtual server

Postal is a full suite for mail delivery with robust features suited for running a bulk email sending SMTP server. Postal is open source and free. Some of its features are: - UI for maintaining different aspects of your mail server - Runs on containers, hence allows for up and down horizontal scaling - Email security features such as spam and antivirus - IP pools to help you maintain a good sending reputation by sending via multiple IPs - Multitenant support - multiple users, domains and organizations - Monitoring queue for outgoing and incoming mail - Built in DNS setup and monitoring to ensure mail domains are set up correctly List of full postal features

Possible cloud providers to use with Postal

You can use Postal with any VPS or Linux server providers of your choice, however here are some we recommend: Vultr Cloud (Get free $300 credit) - In case your SMTP port is blocked, you can contact Vultr support, and they will open it for you after providing a personal identification method. DigitalOcean (Get free $200 Credit) - You will also need to contact DigitalOcean support for SMTP port to be open for you. Hetzner ( Get free €20) - SMTP port is open for most accounts, if yours isn't, contact the Hetzner support and request for it to be unblocked for you Contabo (Cheapest VPS) - Contabo doesn't block SMTP ports. In case you are unable to send mail, contact support. Interserver

Postal Minimum requirements

- At least 4GB of RAM - At least 2 CPU cores - At least 25GB disk space - You can use docker or any Container runtime app. Ensure Docker Compose plugin is also installed. - Port 25 outbound should be open (A lot of cloud providers block it)

Postal Installation

Should be installed on its own server, meaning, no other items should be running on the server. A fresh server install is recommended. Broad overview of the installation procedure - Install Docker and the other needed apps - Configuration of postal and add DNS entries - Start Postal - Make your first user - Login to the web interface to create virtual mail servers Step by step install Postal Step 1 : Install docker and additional system utilities In this guide, I will use Debian 12 . Feel free to follow along with Ubuntu. The OS to be used does not matter, provided you can install docker or any docker alternative for running container images. Commands for installing Docker on Debian 12 (Read the comments to understand what each command does): #Uninstall any previously installed conflicting software . If you have none of them installed it's ok for pkg in docker.io docker-doc docker-compose podman-docker containerd runc; do sudo apt-get remove $pkg; done #Add Docker's official GPG key: sudo apt-get update sudo apt-get install ca-certificates curl -y sudo install -m 0755 -d /etc/apt/keyrings sudo curl -fsSL https://download.docker.com/linux/debian/gpg -o /etc/apt/keyrings/docker.asc sudo chmod a+r /etc/apt/keyrings/docker.asc #Add the Docker repository to Apt sources: echo "deb https://download.docker.com/linux/debian $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null sudo apt-get update #Install the docker packages sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y #You can verify that the installation is successful by running the hello-world image sudo docker run hello-world Add the current user to the docker group so that you don't have to use sudo when not logged in as the root user. ##Add your current user to the docker group. sudo usermod -aG docker $USER #Reboot the server sudo reboot Finally test if you can run docker without sudo ##Test that you don't need sudo to run docker docker run hello-world Step 2 : Get the postal installation helper repository The Postal installation helper has all the docker compose files and the important bootstrapping tools needed for generating configuration files. Install various needed tools #Install additional system utlities apt install git vim htop curl jq -y Then clone the helper repository. sudo git clone https://github.com/postalserver/install /opt/postal/install sudo ln -s /opt/postal/install/bin/postal /usr/bin/postal Step 3 : Install MariaDB database Here is a sample MariaDB container from the postal docs. But you can use the docker compose file below it. docker run -d --name postal-mariadb -p 127.0.0.1:3306:3306 --restart always -e MARIADB_DATABASE=postal -e MARIADB_ROOT_PASSWORD=postal mariadb Here is a tested mariadb compose file to run a secure MariaDB 11.4 container. You can change the version to any image you prefer. vi docker-compose.yaml services: mariadb: image: mariadb:11.4 container_name: postal-mariadb restart: unless-stopped environment: MYSQL_ROOT_PASSWORD: ${DB_ROOT_PASSWORD} volumes: - mariadb_data:/var/lib/mysql network_mode: host # Set to use the host's network mode security_opt: - no-new-privileges:true read_only: true tmpfs: - /tmp - /run/mysqld healthcheck: test: interval: 30s timeout: 10s retries: 5 volumes: mariadb_data: You need to create an environment file with the Database password . To simplify things, postal will use the root user to access the Database.env file example is below. Place it in the same location as the compose file. DB_ROOT_PASSWORD=ExtremelyStrongPasswordHere Run docker compose up -d and ensure the database is healthy. Step 4 : Bootstrap the domain for your Postal web interface & Database configs First add DNS records for your postal domain. The most significant records at this stage are the A and/or AAAA records. This is the domain where you'll be accessing the postal UI and for simplicity will also act as the SMTP server. If using Cloudflare, turn off the Cloudflare proxy. sudo postal bootstrap postal.yourdomain.com The above will generate three files in /opt/postal/config. - postal.yml is the main postal configuration file - signing.key is the private key used to sign various things in Postal - Caddyfile is the configuration for the Caddy web server Open /opt/postal/config/postal.yml and add all the values for DB and other settings. Go through the file and see what else you can edit. At the very least, enter the correct DB details for postal message_db and main_db. Step 5 : Initialize the Postal database and create an admin user postal initialize postal make-user If everything goes well with postal initialize, then celebrate. This is the part where you may face some issues due to DB connection failures. Step 6 : Start running postal # run postal postal start #checking postal status postal status # If you make any config changes in future you can restart postal like so # postal restart Step 7 : Proxy for web traffic To handle web traffic and ensure TLS termination you can use any proxy server of your choice, nginx, traefik , caddy etc. Based on Postal documentation, the following will start up caddy. You can use the compose file below it. Caddy is easy to use and does a lot for you out of the box. Ensure your A records are pointing to your server before running Caddy. docker run -d --name postal-caddy --restart always --network host -v /opt/postal/config/Caddyfile:/etc/caddy/Caddyfile -v /opt/postal/caddy-data:/data caddy Here is a compose file you can use instead of the above docker run command. Name it something like caddy-compose.yaml services: postal-caddy: image: caddy container_name: postal-caddy restart: always network_mode: host volumes: - /opt/postal/config/Caddyfile:/etc/caddy/Caddyfile - /opt/postal/caddy-data:/data You can run it by doing docker compose -f caddy-compose.yaml up -d Now it's time to go to the browser and login. Use the domain, bootstrapped earlier. Add an organization, create server and add a domain. This is done via the UI and it is very straight forward. For every domain you add, ensure to add the DNS records you are provided.

Enable IP Pools

One of the reasons why Postal is great for bulk email sending, is because it allows for sending emails using multiple IPs in a round-robin fashion. Pre-requisites - Ensure the IPs you want to add as part of the pool, are already added to your VPS/server. Every cloud provider has a documentation for adding additional IPs, make sure you follow their guide to add all the IPs to the network. When you run ip a , you should see the IP addresses you intend to use in the pool. Enabling IP pools in the Postal config First step is to enable IP pools settings in the postal configuration, then restart postal. Add the following configuration in the postal.yaml (/opt/postal/config/postal.yml) file to enable pools. If the section postal: , exists, then just add use_ip_pools: true under it. postal: use_ip_pools: true Then restart postal. postal stop && postal start The next step is to go to the postal interface on your browser. A new IP pools link is now visible at the top right corner of your postal dashboard. You can use the IP pools link to add a pool, then assign IP addresses in the pools. A pool could be something like marketing, transactions, billing, general etc. Once the pools are created and IPs assigned to them, you can attach a pool to an organization. This organization can now use the provided IP addresses to send emails. Open up an organization and assign a pool to it. Organizations → choose IPs → choose pools . You can then assign the IP pool to servers from the server's Settings page. You can also use the IP pool to configure IP rules for the organization or server. At any point, if you are lost, look at the Postal documentation. Read the full article

0 notes

Text

Installing Jekins

In this blog I will guide you on installing Jenkins on ubunutu machine. Prerequisites 256MB of RAM 1 GB of Disk space (10GB recommended for running as Docker container) Download the jenkins keyring and store the file in “/usr/share/keyrings/jenkins-keyring.asc” file sudo wget -O /usr/share/keyrings/jenkins-keyring.asc https://pkg.jenkins.io/debian-stable/ jenkins.io-2023.key Enter fullscreen…

View On WordPress

0 notes

Text

How to Train and Use Hunyuan Video LoRA Models

New Post has been published on https://thedigitalinsider.com/how-to-train-and-use-hunyuan-video-lora-models/

How to Train and Use Hunyuan Video LoRA Models

This article will show you how to install and use Windows-based software that can train Hunyuan video LoRA models, allowing the user to generate custom personalities in the Hunyuan Video foundation model:

Click to play. Examples from the recent explosion of celebrity Hunyuan LoRAs from the civit.ai community.

At the moment the two most popular ways of generating Hunyuan LoRA models locally are:

1) The diffusion-pipe-ui Docker-based framework, which relies on Windows Subsystem for Linux (WSL) to handle some of the processes.

2) Musubi Tuner, a new addition to the popular Kohya ss diffusion training architecture. Musubi Tuner does not require Docker and does not depend on WSL or other Linux-based proxies – but it can be difficult to get running on Windows.

Therefore this run-through will focus on Musubi Tuner, and on providing a completely local solution for Hunyuan LoRA training and generation, without the use of API-driven websites or commercial GPU-renting processes such as Runpod.

Click to play. Samples from LoRA training on Musubi Tuner for this article. All permissions granted by the person depicted, for the purposes of illustrating this article.

REQUIREMENTS

The installation will require at minimum a Windows 10 PC with a 30+/40+ series NVIDIA card that has at least 12GB of VRAM (though 16GB is recommended). The installation used for this article was tested on a machine with 64GB of system RAM and a NVIDIA 3090 graphics cards with 24GB of VRAM. It was tested on a dedicated test-bed system using a fresh install of Windows 10 Professional, on a partition with 600+GB of spare disk space.

WARNING

Installing Musubi Tuner and its prerequisites also entails the installation of developer-focused software and packages directly onto the main Windows installation of a PC. Taking the installation of ComfyUI into account, for the end stages, this project will require around 400-500 gigabytes of disk space. Though I have tested the procedure without incident several times in newly-installed test bed Windows 10 environments, neither I nor unite.ai are liable for any damage to systems from following these instructions. I advise you to back up any important data before attempting this kind of installation procedure.

Considerations

Is This Method Still Valid?

The generative AI scene is moving very fast, and we can expect better and more streamlined methods of Hunyuan Video LoRA frameworks this year.

…or even this week! While I was writing this article, the developer of Kohya/Musubi produced musubi-tuner-gui, a sophisticated Gradio GUI for Musubi Tuner:

Obviously a user-friendly GUI is preferable to the BAT files that I use in this feature – once musubi-tuner-gui is working. As I write, it only went online five days ago, and I can find no account of anyone successfully using it.

According to posts in the repository, the new GUI is intended to be rolled directly into the Musubi Tuner project as soon as possible, which will end its current existence as a standalone GitHub repository.

Based on the present installation instructions, the new GUI gets cloned directly into the existing Musubi virtual environment; and, despite many efforts, I cannot get it to associate with the existing Musubi installation. This means that when it runs, it will find that it has no engine!

Once the GUI is integrated into Musubi Tuner, issues of this kind will surely be resolved. Though the author concedes that the new project is ‘really rough’, he is optimistic for its development and integration directly into Musubi Tuner.

Given these issues (also concerning default paths at install-time, and the use of the UV Python package, which complicates certain procedures in the new release), we will probably have to wait a little for a smoother Hunyuan Video LoRA training experience. That said, it looks very promising!

But if you can’t wait, and are willing to roll your sleeves up a bit, you can get Hunyuan video LoRA training running locally right now.

Let’s get started.

Why Install Anything on Bare Metal?

(Skip this paragraph if you’re not an advanced user) Advanced users will wonder why I have chosen to install so much of the software on the bare metal Windows 10 installation instead of in a virtual environment. The reason is that the essential Windows port of the Linux-based Triton package is far more difficult to get working in a virtual environment. All the other bare-metal installations in the tutorial could not be installed in a virtual environment, as they must interface directly with local hardware.

Installing Prerequisite Packages and Programs

For the programs and packages that must be initially installed, the order of installation matters. Let’s get started.

1: Download Microsoft Redistributable

Download and install the Microsoft Redistributable package from https://aka.ms/vs/17/release/vc_redist.x64.exe.

This is a straightforward and rapid installation.

2: Install Visual Studio 2022

Download the Microsoft Visual Studio 2022 Community edition from https://visualstudio.microsoft.com/downloads/?cid=learn-onpage-download-install-visual-studio-page-cta

Start the downloaded installer:

We don’t need every available package, which would be a heavy and lengthy install. At the initial Workloads page that opens, tick Desktop Development with C++ (see image below).

Now click the Individual Components tab at the top-left of the interface and use the search box to find ‘Windows SDK’.

By default, only the Windows 11 SDK is ticked. If you are on Windows 10 (this installation procedure has not been tested by me on Windows 11), tick the latest Windows 10 version, indicated in the image above.

Search for ‘C++ CMake’ and check that C++ CMake tools for Windows is checked.

This installation will take at least 13 GB of space.

Once Visual Studio has installed, it will attempt to run on your computer. Let it open fully. When the Visual Studio’s full-screen interface is finally visible, close the program.

3: Install Visual Studio 2019

Some of the subsequent packages for Musubi are expecting an older version of Microsoft Visual Studio, while others need a more recent one.

Therefore also download the free Community edition of Visual Studio 19 either from Microsoft (https://visualstudio.microsoft.com/vs/older-downloads/ – account required) or Techspot (https://www.techspot.com/downloads/7241-visual-studio-2019.html).

Install it with the same options as for Visual Studio 2022 (see procedure above, except that Windows SDK is already ticked in the Visual Studio 2019 installer).

You’ll see that the Visual Studio 2019 installer is already aware of the newer version as it installs:

When installation is complete, and you have opened and closed the installed Visual Studio 2019 application, open a Windows command prompt (Type CMD in Start Search) and type in and enter:

where cl

The result should be the known locations of the two installed Visual Studio editions.

If you instead get INFO: Could not find files for the given pattern(s), see the Check Path section of this article below, and use those instructions to add the relevant Visual Studio paths to Windows environment.

Save any changes made according to the Check Paths section below, and then try the where cl command again.

4: Install CUDA 11 + 12 Toolkits

The various packages installed in Musubi need different versions of NVIDIA CUDA, which accelerates and optimizes training on NVIDIA graphics cards.

The reason we installed the Visual Studio versions first is that the NVIDIA CUDA installers search for and integrate with any existing Visual Studio installations.

Download an 11+ series CUDA installation package from:

https://developer.nvidia.com/cuda-11-8-0-download-archive?target_os=Windows&target_arch=x86_64&target_version=11&target_type=exe_local (download ‘exe (local’) )

Download a 12+ series CUDA Toolkit installation package from:

https://developer.nvidia.com/cuda-downloads?target_os=Windows&target_arch=x86_64

The installation process is identical for both installers. Ignore any warnings about the existence or non-existence of installation paths in Windows Environment variables – we are going to attend to this manually later.

Install NVIDIA CUDA Toolkit V11+

Start the installer for the 11+ series CUDA Toolkit.

At Installation Options, choose Custom (Advanced) and proceed.

Uncheck the NVIDIA GeForce Experience option and click Next.

Leave Select Installation Location at defaults (this is important):

Click Next and let the installation conclude.

Ignore any warning or notes that the installer gives about Nsight Visual Studio integration, which is not needed for our use case.

Install NVIDIA CUDA Toolkit V12+

Repeat the entire process for the separate 12+ NVIDIA Toolkit installer that you downloaded:

The install process for this version is identical to the one listed above (the 11+ version), except for one warning about environment paths, which you can ignore:

When the 12+ CUDA version installation is completed, open a command prompt in Windows and type and enter:

nvcc --version

This should confirm information about the installed driver version:

To check that your card is recognized, type and enter:

nvidia-smi

5: Install GIT

GIT will be handling the installation of the Musubi repository on your local machine. Download the GIT installer at:

https://git-scm.com/downloads/win (’64-bit Git for Windows Setup’)

Run the installer:

Use default settings for Select Components:

Leave the default editor at Vim:

Let GIT decide about branch names:

Use recommended settings for the Path Environment:

Use recommended settings for SSH:

Use recommended settings for HTTPS Transport backend:

Use recommended settings for line-ending conversions:

Choose Windows default console as the Terminal Emulator:

Use default settings (Fast-forward or merge) for Git Pull:

Use Git-Credential Manager (the default setting) for Credential Helper:

In Configuring extra options, leave Enable file system caching ticked, and Enable symbolic links unticked (unless you are an advanced user who is using hard links for a centralized model repository).

Conclude the installation and test that Git is installed properly by opening a CMD window and typing and entering:

git --version

GitHub Login

Later, when you attempt to clone GitHub repositories, you may be challenged for your GitHub credentials. To anticipate this, log into your GitHub account (create one, if necessary) on any browsers installed on your Windows system. In this way, the 0Auth authentication method (a pop-up window) should take as little time as possible.

After that initial challenge, you should stay authenticated automatically.

6: Install CMake

CMake 3.21 or newer is required for parts of the Musubi installation process. CMake is a cross-platform development architecture capable of orchestrating diverse compilers, and of compiling software from source code.

Download it at:

https://cmake.org/download/ (‘Windows x64 Installer’)

Launch the installer:

Ensure Add Cmake to the PATH environment variable is checked.

Press Next.

Type and enter this command in a Windows Command prompt:

cmake --version

If CMake installed successfully, it will display something like:

cmake version 3.31.4 CMake suite maintained and supported by Kitware (kitware.com/cmake).

7: Install Python 3.10

The Python interpreter is central to this project. Download the 3.10 version (the best compromise between the different demands of Musubi packages) at:

https://www.python.org/downloads/release/python-3100/ (‘Windows installer (64-bit)’)

Run the download installer, and leave at default settings:

At the end of the installation process, click Disable path length limit (requires UAC admin confirmation):

In a Windows Command prompt type and enter:

python --version

This should result in Python 3.10.0

Check Paths

The cloning and installation of the Musubi frameworks, as well as its normal operation after installation, requires that its components know the path to several important external components in Windows, particularly CUDA.

So we need to open the path environment and check that all the requisites are in there.

A quick way to get to the controls for Windows Environment is to type Edit the system environment variables into the Windows search bar.

Clicking this will open the System Properties control panel. In the lower right of System Properties, click the Environment Variables button, and a window called Environment Variables opens up. In the System Variables panel in the bottom half of this window, scroll down to Path and double-click it. This opens a window called Edit environment variables. Drag the width of this window wider so you can see the full path of the variables:

Here the important entries are:

C:Program FilesNVIDIA GPU Computing ToolkitCUDAv12.6bin C:Program FilesNVIDIA GPU Computing ToolkitCUDAv12.6libnvvp C:Program FilesNVIDIA GPU Computing ToolkitCUDAv11.8bin C:Program FilesNVIDIA GPU Computing ToolkitCUDAv11.8libnvvp C:Program Files (x86)Microsoft Visual Studio2019CommunityVCToolsMSVC14.29.30133binHostx64x64 C:Program FilesMicrosoft Visual Studio2022CommunityVCToolsMSVC14.42.34433binHostx64x64 C:Program FilesGitcmd C:Program FilesCMakebin

In most cases, the correct path variables should already be present.

Add any paths that are missing by clicking New on the left of the Edit environment variable window and pasting in the correct path:

Do NOT just copy and paste from the paths listed above; check that each equivalent path exists in your own Windows installation.

If there are minor path variations (particularly with Visual Studio installations), use the paths listed above to find the correct target folders (i.e., x64 in Host64 in your own installation. Then paste those paths into the Edit environment variable window.

After this, restart the computer.

Installing Musubi

Upgrade PIP

Using the latest version of the PIP installer can smooth some of the installation stages. In a Windows Command prompt with administrator privileges (see Elevation, below), type and enter:

pip install --upgrade pip

Elevation

Some commands may require elevated privileges (i.e., to be run as an administrator). If you receive error messages about permissions in the following stages, close the command prompt window and reopen it in administrator mode by typing CMD into Windows search box, right-clicking on Command Prompt and selecting Run as administrator:

For the next stages, we are going to use Windows Powershell instead of the Windows Command prompt. You can find this by entering Powershell into the Windows search box, and (as necessary) right-clicking on it to Run as administrator:

Install Torch

In Powershell, type and enter:

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

Be patient while the many packages install.

When completed, you can verify a GPU-enabled PyTorch installation by typing and entering:

python -c "import torch; print(torch.cuda.is_available())"

This should result in:

C:WINDOWSsystem32>python -c "import torch; print(torch.cuda.is_available())" True

Install Triton for Windows

Next, the installation of the Triton for Windows component. In elevated Powershell, enter (on a single line):

pip install https://github.com/woct0rdho/triton-windows/releases/download/v3.1.0-windows.post8/triton-3.1.0-cp310-cp310-win_amd64.whl

(The installer triton-3.1.0-cp310-cp310-win_amd64.whl works for both Intel and AMD CPUs as long as the architecture is 64-bit and the environment matches the Python version)

After running, this should result in:

Successfully installed triton-3.1.0

We can check if Triton is working by importing it in Python. Enter this command:

python -c "import triton; print('Triton is working')"

This should output:

Triton is working

To check that Triton is GPU-enabled, enter:

python -c "import torch; print(torch.cuda.is_available())"

This should result in True:

Create the Virtual Environment for Musubi

From now on, we will install any further software into a Python virtual environment (or venv). This means that all you will need to do to uninstall all the following software is to drag the venv’s installation folder to the trash.

Let’s create that installation folder: make a folder called Musubi on your desktop. The following examples assume that this folder exists: C:Users[Your Profile Name]DesktopMusubi.

In Powershell, navigate to that folder by entering:

cd C:Users[Your Profile Name]DesktopMusubi

We want the virtual environment to have access to what we have installed already (especially Triton), so we will use the --system-site-packages flag. Enter this:

python -m venv --system-site-packages musubi

Wait for the environment to be created, and then activate it by entering:

.musubiScriptsactivate

From this point on, you can tell that you are in the activated virtual environment by the fact that (musubi) appears at the beginning of all your prompts.

Clone the Repository

Navigate to the newly-created musubi folder (which is inside the Musubi folder on your desktop):

cd musubi

Now that we are in the right place, enter the following command:

git clone https://github.com/kohya-ss/musubi-tuner.git

Wait for the cloning to complete (it will not take long).

Installing Requirements

Navigate to the installation folder:

cd musubi-tuner

Enter:

pip install -r requirements.txt

Wait for the many installations to finish (this will take longer).

Automating Access to the Hunyuan Video Venv

To easily activate and access the new venv for future sessions, paste the following into Notepad and save it with the name activate.bat, saving it with All files option (see image below).

@echo off

call C:Users[Your Profile Name]DesktopMusubimusubiScriptsactivate

cd C:Users[Your Profile Name]DesktopMusubimusubimusubi-tuner

cmd

(Replace [Your Profile Name]with the real name of your Windows user profile)

It does not matter into which location you save this file.

From now on you can double-click activate.bat and start work immediately.

Using Musubi Tuner

Downloading the Models

The Hunyuan Video LoRA training process requires the downloading of at least seven models in order to support all the possible optimization options for pre-caching and training a Hunyuan video LoRA. Together, these models weigh more than 60GB.

Current instructions for downloading them can be found at https://github.com/kohya-ss/musubi-tuner?tab=readme-ov-file#model-download

However, these are the download instructions at the time of writing:

clip_l.safetensors llava_llama3_fp16.safetensors and llava_llama3_fp8_scaled.safetensors can be downloaded at: https://huggingface.co/Comfy-Org/HunyuanVideo_repackaged/tree/main/split_files/text_encoders

mp_rank_00_model_states.pt mp_rank_00_model_states_fp8.pt and mp_rank_00_model_states_fp8_map.pt can be downloaded at: https://huggingface.co/tencent/HunyuanVideo/tree/main/hunyuan-video-t2v-720p/transformers

pytorch_model.pt can be downloaded at: https://huggingface.co/tencent/HunyuanVideo/tree/main/hunyuan-video-t2v-720p/vae

Though you can place these in any directory you choose, for consistency with later scripting, let’s put them in:

C:Users[Your Profile Name]DesktopMusubimusubimusubi-tunermodels

This is consistent with the directory arrangement prior to this point. Any commands or instructions hereafter will assume that this is where the models are situated; and don’t forget to replace [Your Profile Name] with your real Windows profile folder name.

Dataset Preparation

Ignoring community controversy on the point, it’s fair to say that you will need somewhere between 10-100 photos for a training dataset for your Hunyuan LoRA. Very good results can be obtained even with 15 images, so long as the images are well-balanced and of good quality.

A Hunyuan LoRA can be trained both on images or very short and low-res video clips, or even a mixture of each – although using video clips as training data is challenging, even for a 24GB card.

However, video clips are only really useful if your character moves in such an unusual way that the Hunyuan Video foundation model might not know about it, or be able to guess.

Examples would include Roger Rabbit, a xenomorph, The Mask, Spider-Man, or other personalities that possess unique characteristic movement.

Since Hunyuan Video already knows how ordinary men and women move, video clips are not necessary to obtain a convincing Hunyuan Video LoRA human-type character. So we’ll use static images.

Image Preparation

The Bucket List

The TLDR version:

It’s best to either use images that are all the same size for your dataset, or use a 50/50 split between two different sizes, i.e., 10 images that are 512x768px and 10 that are 768x512px.

The training might go well even if you don’t do this – Hunyuan Video LoRAs can be surprisingly forgiving.

The Longer Version

As with Kohya-ss LoRAs for static generative systems such as Stable Diffusion, bucketing is used to distribute the workload across differently-sized images, allowing larger images to be used without causing out-of-memory errors at training time (i.e., bucketing ‘cuts up’ the images into chunks that the GPU can handle, while maintaining the semantic integrity of the whole image).

For each size of image you include in your training dataset (i.e., 512x768px), a bucket, or ‘sub-task’ will be created for that size. So if you have the following distribution of images, this is how the bucket attention becomes unbalanced, and risks that some photos will be given greater consideration in training than others:

2x 512x768px images 7x 768x512px images 1x 1000x600px image 3x 400x800px images

We can see that bucket attention is divided unequally among these images:

Therefore either stick to one format size, or try and keep the distribution of different sizes relatively equal.

In either case, avoid very large images, as this is likely to slow down training, to negligible benefit.

For simplicity, I have used 512x768px for all the photos in my dataset.

Disclaimer: The model (person) used in the dataset gave me full permission to use these pictures for this purpose, and exercised approval of all AI-based output depicting her likeness featured in this article.

My dataset consists of 40 images, in PNG format (though JPG is fine too). My images were stored at C:UsersMartinDesktopDATASETS_HUNYUANexamplewoman

You should create a cache folder inside the training image folder:

Now let’s create a special file that will configure the training.

TOML Files

The training and pre-caching processes of Hunyuan Video LoRAs obtains the file paths from a flat text file with the .toml extension.

For my test, the TOML is located at C:UsersMartinDesktopDATASETS_HUNYUANtraining.toml

The contents of my training TOML look like this:

[general]

resolution = [512, 768]

caption_extension = ".txt"

batch_size = 1

enable_bucket = true

bucket_no_upscale = false

[[datasets]]

image_directory = "C:UsersMartinDesktopDATASETS_HUNYUANexamplewoman"

cache_directory = "C:UsersMartinDesktopDATASETS_HUNYUANexamplewomancache"

num_repeats = 1

(The double back-slashes for image and cache directories are not always necessary, but they can help to avoid errors in cases where there is a space in the path. I have trained models with .toml files that used single-forward and single-backward slashes)

We can see in the resolution section that two resolutions will be considered – 512px and 768px. You can also leave this at 512, and still obtain good results.

Captions

Hunyuan Video is a text+vision foundation model, so we need descriptive captions for these images, which will be considered during training. The training process will fail without captions.

There are a multitude of open source captioning systems we could use for this task, but let’s keep it simple and use the taggui system. Though it is stored at GitHub, and though it does download some very heavy deep learning models on first run, it comes in the form of a simple Windows executable that loads Python libraries and a straightforward GUI.

After starting Taggui, use File > Load Directory to navigate to your image dataset, and optionally put a token identifier (in this case, examplewoman) that will be added to all the captions:

(Be sure to turn off Load in 4-bit when Taggui first opens – it will throw errors during captioning if this is left on)

Select an image in the left-hand preview column and press CTRL+A to select all the images. Then press the Start Auto-Captioning button on the right:

You will see Taggui downloading models in the small CLI in the right-hand column, but only if this is the first time you have run the captioner. Otherwise you will see a preview of the captions.

Now, each photo has a corresponding .txt caption with a description of its image contents:

You can click Advanced Options in Taggui to increase the length and style of captions, but that is beyond the scope of this run-through.

Quit Taggui and let’s move on to…

Latent Pre-Caching

To avoid excessive GPU load at training time, it is necessary to create two types of pre-cached files – one to represent the latent image derived from the images themselves, and another to evaluate a text encoding relating to caption content.

To simplify all three processes (2x cache + training), you can use interactive .BAT files that will ask you questions and undertake the processes when you have given the necessary information.

For the latent pre-caching, copy the following text into Notepad and save it as a .BAT file (i.e., name it something like latent-precache.bat), as earlier, ensuring that the file type in the drop down menu in the Save As dialogue is All Files (see image below):

@echo off

REM Activate the virtual environment

call C:Users[Your Profile Name]DesktopMusubimusubiScriptsactivate.bat

REM Get user input

set /p IMAGE_PATH=Enter the path to the image directory:

set /p CACHE_PATH=Enter the path to the cache directory:

set /p TOML_PATH=Enter the path to the TOML file:

echo You entered:

echo Image path: %IMAGE_PATH%

echo Cache path: %CACHE_PATH%

echo TOML file path: %TOML_PATH%

set /p CONFIRM=Do you want to proceed with latent pre-caching (y/n)?

if /i "%CONFIRM%"=="y" (

REM Run the latent pre-caching script

python C:Users[Your Profile Name]DesktopMusubimusubimusubi-tunercache_latents.py --dataset_config %TOML_PATH% --vae C:Users[Your Profile Name]DesktopMusubimusubimusubi-tunermodelspytorch_model.pt --vae_chunk_size 32 --vae_tiling

) else (

echo Operation canceled.

)

REM Keep the window open

pause

(Make sure that you replace [Your Profile Name] with your real Windows profile folder name)

Now you can run the .BAT file for automatic latent caching:

When prompted to by the various questions from the BAT file, paste or type in the path to your dataset, cache folders and TOML file.

Text Pre-Caching

We’ll create a second BAT file, this time for the text pre-caching.

@echo off

REM Activate the virtual environment

call C:Users[Your Profile Name]DesktopMusubimusubiScriptsactivate.bat

REM Get user input

set /p IMAGE_PATH=Enter the path to the image directory:

set /p CACHE_PATH=Enter the path to the cache directory:

set /p TOML_PATH=Enter the path to the TOML file:

echo You entered:

echo Image path: %IMAGE_PATH%

echo Cache path: %CACHE_PATH%

echo TOML file path: %TOML_PATH%

set /p CONFIRM=Do you want to proceed with text encoder output pre-caching (y/n)?

if /i "%CONFIRM%"=="y" (

REM Use the python executable from the virtual environment

python C:Users[Your Profile Name]DesktopMusubimusubimusubi-tunercache_text_encoder_outputs.py --dataset_config %TOML_PATH% --text_encoder1 C:Users[Your Profile Name]DesktopMusubimusubimusubi-tunermodelsllava_llama3_fp16.safetensors --text_encoder2 C:Users[Your Profile Name]DesktopMusubimusubimusubi-tunermodelsclip_l.safetensors --batch_size 16

) else (

echo Operation canceled.

)

REM Keep the window open

pause

Replace your Windows profile name and save this as text-cache.bat (or any other name you like), in any convenient location, as per the procedure for the previous BAT file.

Run this new BAT file, follow the instructions, and the necessary text-encoded files will appear in the cache folder:

Training the Hunyuan Video Lora

Training the actual LoRA will take considerably longer than these two preparatory processes.

Though there are also multiple variables that we could worry about (such as batch size, repeats, epochs, and whether to use full or quantized models, among others), we’ll save these considerations for another day, and a deeper look at the intricacies of LoRA creation.

For now, let’s minimize the choices a little and train a LoRA on ‘median’ settings.

We’ll create a third BAT file, this time to initiate training. Paste this into Notepad and save it as a BAT file, like before, as training.bat (or any name you please):

@echo off

REM Activate the virtual environment

call C:Users[Your Profile Name]DesktopMusubimusubiScriptsactivate.bat

REM Get user input

set /p DATASET_CONFIG=Enter the path to the dataset configuration file:

set /p EPOCHS=Enter the number of epochs to train:

set /p OUTPUT_NAME=Enter the output model name (e.g., example0001):

set /p LEARNING_RATE=Choose learning rate (1 for 1e-3, 2 for 5e-3, default 1e-3):

if "%LEARNING_RATE%"=="1" set LR=1e-3

if "%LEARNING_RATE%"=="2" set LR=5e-3

if "%LEARNING_RATE%"=="" set LR=1e-3

set /p SAVE_STEPS=How often (in steps) to save preview images:

set /p SAMPLE_PROMPTS=What is the location of the text-prompt file for training previews?

echo You entered:

echo Dataset configuration file: %DATASET_CONFIG%

echo Number of epochs: %EPOCHS%

echo Output name: %OUTPUT_NAME%

echo Learning rate: %LR%

echo Save preview images every %SAVE_STEPS% steps.

echo Text-prompt file: %SAMPLE_PROMPTS%

REM Prepare the command

set CMD=accelerate launch --num_cpu_threads_per_process 1 --mixed_precision bf16 ^

C:Users[Your Profile Name]DesktopMusubimusubimusubi-tunerhv_train_network.py ^

--dit C:Users[Your Profile Name]DesktopMusubimusubimusubi-tunermodelsmp_rank_00_model_states.pt ^

--dataset_config %DATASET_CONFIG% ^

--sdpa ^

--mixed_precision bf16 ^

--fp8_base ^

--optimizer_type adamw8bit ^

--learning_rate %LR% ^

--gradient_checkpointing ^

--max_data_loader_n_workers 2 ^

--persistent_data_loader_workers ^

--network_module=networks.lora ^

--network_dim=32 ^

--timestep_sampling sigmoid ^

--discrete_flow_shift 1.0 ^

--max_train_epochs %EPOCHS% ^

--save_every_n_epochs=1 ^

--seed 42 ^

--output_dir "C:Users[Your Profile Name]DesktopMusubiOutput Models" ^

--output_name %OUTPUT_NAME% ^

--vae C:/Users/[Your Profile Name]/Desktop/Musubi/musubi/musubi-tuner/models/pytorch_model.pt ^

--vae_chunk_size 32 ^

--vae_spatial_tile_sample_min_size 128 ^

--text_encoder1 C:/Users/[Your Profile Name]/Desktop/Musubi/musubi/musubi-tuner/models/llava_llama3_fp16.safetensors ^

--text_encoder2 C:/Users/[Your Profile Name]/Desktop/Musubi/musubi/musubi-tuner/models/clip_l.safetensors ^

--sample_prompts %SAMPLE_PROMPTS% ^

--sample_every_n_steps %SAVE_STEPS% ^

--sample_at_first

echo The following command will be executed:

echo %CMD%

set /p CONFIRM=Do you want to proceed with training (y/n)?

if /i "%CONFIRM%"=="y" (

%CMD%

) else (

echo Operation canceled.

)

REM Keep the window open

cmd /k

As usual, be sure to replace all instances of [Your Profile Name] with your correct Windows profile name.

Ensure that the directory C:Users[Your Profile Name]DesktopMusubiOutput Models exists, and create it at that location if not.

Training Previews

There is a very basic training preview feature recently enabled for Musubi trainer, which allows you to force the training model to pause and generate images based on prompts you have saved. These are saved in an automatically created folder called Sample, in the same directory that the trained models are saved.

To enable this, you will need to save at last one prompt in a text file. The training BAT we created will ask you to input the location of this file; therefore you can name the prompt file to be anything you like, and save it anywhere.

Here are some prompt examples for a file that will output three different images when requested by the training routine:

As you can see in the example above, you can put flags at the end of the prompt that will affect the images:

–w is width (defaults to 256px if not set, according to the docs) –h is height (defaults to 256px if not set) –f is the number of frames. If set to 1, an image is produced; more than one, a video. –d is the seed. If not set, it is random; but you should set it to see one prompt evolving. –s is the number of steps in generation, defaulting to 20.

See the official documentation for additional flags.

Though training previews can quickly reveal some issues that might cause you to cancel the training and reconsider the data or the setup, thus saving time, do remember that every extra prompt slows down the training a little more.

Also, the bigger the training preview image’s width and height (as set in the flags listed above), the more it will slow training down.

Launch your training BAT file.

Question #1 is ‘Enter the path to the dataset configuration. Paste or type in the correct path to your TOML file.

Question #2 is ‘Enter the number of epochs to train’. This is a trial-and-error variable, since it’s affected by the amount and quality of images, as well as the captions, and other factors. In general, it’s best to set it too high than too low, since you can always stop the training with Ctrl+C in the training window if you feel the model has advanced enough. Set it to 100 in the first instance, and see how it goes.

Question #3 is ‘Enter the output model name’. Name your model! May be best to keep the name reasonably short and simple.

Question #4 is ‘Choose learning rate’, which defaults to 1e-3 (option 1). This is a good place to start, pending further experience.

Question #5 is ‘How often (in steps) to save preview images. If you set this too low, you will see little progress between preview image saves, and this will slow down the training.

Question #6 is ‘What is the location of the text-prompt file for training previews?’. Paste or type in the path to your prompts text file.

The BAT then shows you the command it will send to the Hunyuan Model, and asks you if you want to proceed, y/n.

Go ahead and begin training:

During this time, if you check the GPU section of the Performance tab of Windows Task Manager, you’ll see the process is taking around 16GB of VRAM.

This may not be an arbitrary figure, as this is the amount of VRAM available on quite a few NVIDIA graphics cards, and the upstream code may have been optimized to fit the tasks into 16GB for the benefit of those who own such cards.

That said, it is very easy to raise this usage, by sending more exorbitant flags to the training command.

During training, you’ll see in the lower-right side of the CMD window a figure for how much time has passed since training began, and an estimate of total training time (which will vary heavily depending on flags set, number of training images, number of training preview images, and several other factors).

A typical training time is around 3-4 hours on median settings, depending on the available hardware, number of images, flag settings, and other factors.

Using Your Trained LoRA Models in Hunyuan Video

Choosing Checkpoints

When training is concluded, you will have a model checkpoint for each epoch of training.

This saving frequency can be changed by the user to save more or less frequently, as desired, by amending the --save_every_n_epochs [N] number in the training BAT file. If you added a low figure for saves-per-steps when setting up training with the BAT, there will be a high number of saved checkpoint files.

Which Checkpoint to Choose?

As mentioned earlier, the earliest-trained models will be most flexible, while the later checkpoints may offer the most detail. The only way to test for these factors is to run some of the LoRAs and generate a few videos. In this way you can get to know which checkpoints are most productive, and represent the best balance between flexibility and fidelity.

ComfyUI

The most popular (though not the only) environment for using Hunyuan Video LoRAs, at the moment, is ComfyUI, a node-based editor with an elaborate Gradio interface that runs in your web browser.

Source: https://github.com/comfyanonymous/ComfyUI

Installation instructions are straightforward and available at the official GitHub repository (additional models will have to be downloaded).

Converting Models for ComfyUI

Your trained models are saved in a (diffusers) format that is not compatible with most implementations of ComfyUI. Musubi is able to convert a model to a ComfyUI-compatible format. Let’s set up a BAT file to implement this.

Before running this BAT, create the C:Users[Your Profile Name]DesktopMusubiCONVERTED folder that the script is expecting.

@echo off

REM Activate the virtual environment

call C:Users[Your Profile Name]DesktopMusubimusubiScriptsactivate.bat

:START

REM Get user input

set /p INPUT_PATH=Enter the path to the input Musubi safetensors file (or type "exit" to quit):

REM Exit if the user types "exit"

if /i "%INPUT_PATH%"=="exit" goto END

REM Extract the file name from the input path and append 'converted' to it

for %%F in ("%INPUT_PATH%") do set FILENAME=%%~nF

set OUTPUT_PATH=C:Users[Your Profile Name]DesktopMusubiOutput ModelsCONVERTED%FILENAME%_converted.safetensors

set TARGET=other

echo You entered:

echo Input file: %INPUT_PATH%

echo Output file: %OUTPUT_PATH%

echo Target format: %TARGET%

set /p CONFIRM=Do you want to proceed with the conversion (y/n)?

if /i "%CONFIRM%"=="y" (

REM Run the conversion script with correctly quoted paths

python C:Users[Your Profile Name]DesktopMusubimusubimusubi-tunerconvert_lora.py --input "%INPUT_PATH%" --output "%OUTPUT_PATH%" --target %TARGET%

echo Conversion complete.

) else (

echo Operation canceled.

)

REM Return to start for another file

goto START

:END

REM Keep the window open

echo Exiting the script.

pause

As with the previous BAT files, save the script as ‘All files’ from Notepad, naming it convert.bat (or whatever you like).

Once saved, double-click the new BAT file, which will ask for the location of a file to convert.

Paste in or type the path to the trained file you want to convert, click y, and press enter.

After saving the converted LoRA to the CONVERTED folder, the script will ask if you would like to convert another file. If you want to test multiple checkpoints in ComfyUI, convert a selection of the models.

When you have converted enough checkpoints, close the BAT command window.

You can now copy your converted models into the modelsloras folder in your ComfyUI installation.

Typically the correct location is something like:

C:Users[Your Profile Name]DesktopComfyUImodelsloras

Creating Hunyuan Video LoRAs in ComfyUI

Though the node-based workflows of ComfyUI seem complex initially, the settings of other more expert users can be loaded by dragging an image (made with the other user’s ComfyUI) directly into the ComfyUI window. Workflows can also be exported as JSON files, which can be imported manually, or dragged into a ComfyUI window.

Some imported workflows will have dependencies that may not exist in your installation. Therefore install ComfyUI-Manager, which can fetch missing modules automatically.

Source: https://github.com/ltdrdata/ComfyUI-Manager

To load one of the workflows used to generate videos from the models in this tutorial, download this JSON file and drag it into your ComfyUI window (though there are far better workflow examples available at the various Reddit and Discord communities that have adopted Hunyuan Video, and my own is adapted from one of these).

This is not the place for an extended tutorial in the use of ComfyUI, but it is worth mentioning a few of the crucial parameters that will affect your output if you download and use the JSON layout that I linked to above.

1) Width and Height

The larger your image, the longer the generation will take, and the higher the risk of an out-of-memory (OOM) error.

2) Length

This is the numerical value for the number of frames. How many seconds it adds up to depend on the frame rate (set to 30fps in this layout). You can convert seconds>frames based on fps at Omnicalculator.

3) Batch size

The higher you set the batch size, the quicker the result may come, but the greater the burden of VRAM. Set this too high and you may get an OOM.

4) Control After Generate

This controls the random seed. The options for this sub-node are fixed, increment, decrement and randomize. If you leave it at fixed and do not change the text prompt, you will get the same image every time. If you amend the text prompt, the image will change to a limited extent. The increment and decrement settings allow you to explore nearby seed values, while randomize gives you a totally new interpretation of the prompt.

5) Lora Name

You will need to select your own installed model here, before attempting to generate.

6) Token

If you have trained your model to trigger the concept with a token, (such as ‘example-person’), put that trigger word in your prompt.

7) Steps

This represents how many steps the system will apply to the diffusion process. Higher steps may obtain better detail, but there is a ceiling on how effective this approach is, and that threshold can be hard to find. The common range of steps is around 20-30.

8) Tile Size

This defines how much information is handled at one time during generation. It’s set to 256 by default. Raising it can speed up generation, but raising it too high can lead to a particularly frustrating OOM experience, since it comes at the very end of a long process.

9) Temporal Overlap

Hunyuan Video generation of people can lead to ‘ghosting’, or unconvincing movement if this is set too low. In general, the current wisdom is that this should be set to a higher value than the number of frames, to produce better movement.

Conclusion

Though further exploration of ComfyUI usage is beyond the scope of this article, community experience at Reddit and Discords can ease the learning curve, and there are several online guides that introduce the basics.

First published Thursday, January 23, 2025

#:where#2022#2025#ADD#admin#ai#AI video#AI video creation#amd#amp#API#approach#architecture#arrangement#Article#Artificial Intelligence#attention#authentication#author#back up#bat#box#browser#cache#challenge#change#clone#code#command#command prompt

0 notes

Text

Web Applications with Full Stack Python Development

Scalability is a crucial consideration for modern web applications, especially as they grow in user base and functionality. In Full Stack Python development, building scalable web applications involves ensuring that both the front-end and back-end can handle increased load efficiently without compromising performance or reliability. This blog explores key strategies for building scalable web applications in Full Stack Python development

Understanding Scalability in Full Stack Python Development

Scalability refers to the ability of an application to handle an increased number of users, requests, or data volume without degrading performance. A scalable application can expand and adapt to meet growing demands, making it essential for developers to plan for scalability from the start of the project.

There are two primary types of scalability:

Vertical Scaling (Scaling Up):

Vertical scaling involves upgrading the hardware resources of a single server, such as increasing CPU, RAM, or disk space. This can improve performance but has physical and financial limitations. It is generally a short-term solution for scalability.

Horizontal Scaling (Scaling Out):

Horizontal scaling refers to adding more servers or instances to distribute the load. This approach is more effective for building large-scale applications and is essential in cloud-based environments, such as AWS, Google Cloud, or Azure.

In Full Stack Python development

, it's crucial to design your application architecture with horizontal scaling in mind, as this method ensures your application can grow more effectively over time.

Best Practices for Building Scalable Web Applications

Microservices ArchitectureOne of the most effective strategies for building scalable applications in Full Stack Python development is adopting a microservices architecture. This approach breaks down the application into smaller, independent services, each handling a specific business function (e.g., user authentication, payment processing, or inventory management).Microservices offer several scalability benefits:

Each service can be scaled independently based on demand.

Services can be developed, deployed, and maintained independently, enabling faster iterations and updates.

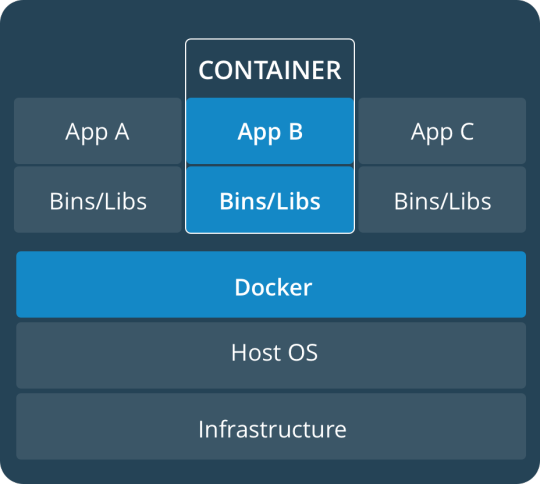

Microservices work well with containerization technologies like Docker, allowing easy scaling and deployment across different environments.

Load BalancingLoad balancing is essential for distributing incoming traffic evenly across multiple servers or instances. By using a load balancer, you ensure that no single server becomes overwhelmed with too many requests. This allows your Full Stack Python development application to handle more users efficiently.Load balancing also provides fault tolerance, as if one server fails, traffic is automatically redirected to other healthy instances. Some popular load balancers include Nginx, HAProxy, and cloud-native solutions like AWS Elastic Load Balancer.

Database Sharding and ReplicationAs your application scales, your database may become a bottleneck. Implementing sharding and replication can help address these challenges:

Sharding involves splitting the data into smaller, more manageable pieces, each stored on a different server. This reduces the load on any single database server and improves performance.

Replication involves copying data to multiple servers, ensuring high availability and fault tolerance. If one database server fails, the replicated servers can continue to serve the application.

Full Stack Python development projects that rely on databases such as PostgreSQL or MySQL can implement these techniques to improve scalability and reliability.

Caching for PerformanceCaching is an effective technique for improving the performance of web applications. Frequently accessed data, such as product details or user profiles, can be cached to avoid repeatedly querying the database. This reduces latency and server load, ensuring faster response times for users.Popular caching solutions include Redis and Memcached, both of which can store frequently accessed data in memory for quick retrieval. Caching should be used strategically to cache only the data that doesn’t change frequently, while ensuring that dynamic or sensitive data is retrieved directly from the database.

Choosing the Right Tools for Scalable Full Stack Python Development

Web FrameworksSelecting the right web framework is crucial for scalability in Full Stack Python development

Frameworks like Django and Flask are popular choices:

Django provides built-in tools for scaling, including an ORM (Object-Relational Mapper) for database management and caching mechanisms for better performance.

Flask, being a lightweight framework, offers more flexibility and control over the architecture, making it suitable for microservices and APIs in large-scale applications.Both frameworks can integrate with caching, load balancing, and database management tools to help scale your application effectively.

Asynchronous ProgrammingAsynchronous programming allows your application to handle multiple tasks concurrently, making it more efficient at managing requests. By using asynchronous libraries like asyncio, Celery, or FastAPI, you can handle long-running tasks (such as sending emails or processing images) without blocking the main thread.In Full Stack Python development, asynchronous frameworks are crucial for building scalable web applications that can handle a high number of concurrent requests, especially when dealing with real-time features like chat or notifications.

Cloud Infrastructure and ContainersCloud platforms like AWS, Google Cloud, and Microsoft Azure offer scalable infrastructure that automatically adjusts based on demand. These platforms provide services such as compute instances, managed databases, and load balancers that help scale your Full Stack Python development application without manual intervention.Additionally, containerization tools like Docker and Kubernetes enable you to deploy, scale, and manage your application components across a cluster of machines. Containers ensure consistency in development, testing, and production environments, making it easier to scale applications efficiently.

Monitoring and Scaling in Production

Once your Full Stack Python development application is live, monitoring its performance is key to maintaining scalability. Tools like Prometheus, Grafana, and New Relic help you track server metrics, application performance, and database queries. By identifying bottlenecks, you can make data-driven decisions to improve performance.

As traffic grows, scaling can be done dynamically by adding more instances or database nodes. Cloud providers like AWS offer auto-scaling features that automatically increase resources when demand spikes, ensuring that your application can handle peak loads.

Conclusion

Building scalable web applications in

Full Stack Python development requires careful planning and the right tools to handle increased user load, data volume, and system complexity. By adopting strategies like microservices architecture, load balancing, database sharding, and caching, you can ensure that your application can grow and scale seamlessly. Additionally, using cloud infrastructure, asynchronous programming, and monitoring tools enables efficient scaling in production.

Ultimately, scalability is not a one-time task but an ongoing process that requires continual optimization as your application evolves and grows in the future.

0 notes

Text

How to Clean Up Docker Images, Containers, and Volumes Older Than 30 Days

Docker is an excellent tool for containerizing applications, but it can easily consume your server’s storage if you don’t perform regular cleanups. Old images, containers, and unused volumes can pile up, slowing down your system and causing disk space issues. In this blog, we’ll walk you through how to identify and delete Docker resources that are older than 30 days to free up space. Remove…

0 notes

Text

How to Install CyberPanel on Ubuntu 22.04 Like a Pro! – Quick Tips

CyberPanel is a user-friendly control panel that makes managing websites and servers much easier, even for beginners. It uses LiteSpeed Web Server (a fast web server) and offers features like one-click WordPress installation, automatic SSL certificates, and a simple interface. In this guide, we’ll break down every step to help you Install CyberPanel on Ubuntu 22.04 server in a way that’s easy to follow, even if you’re not an expert. Let’s dive into each step How to Install CyberPanel on Ubuntu 22.04 Like a Pro!

Why Choose CyberPanel?

Before diving into the installation process, you might wonder why you should choose CyberPanel over other control panels like cPanel or Plesk. Here are a few compelling reasons: - Open Source: It’s completely free (though there’s an Enterprise version with additional features if you’re interested). - Lightweight and Fast: Built around OpenLiteSpeed, CyberPanel is optimized for speed and performance. - Intuitive Interface: The dashboard is clean and user-friendly, even for beginners. - Advanced Features: From one-click installations of WordPress to built-in support for Git, Redis, and Docker, CyberPanel offers plenty of powerful tools. - Auto SSL: Easily install and manage SSL certificates. Sounds like the control panel of your dreams, right?

Pre-Installation Checklist

Before you can install CyberPanel on Ubuntu 22.04, there are a few things you’ll need to prepare. Don’t worry, nothing too crazy! 1. A Fresh Ubuntu 22.04 Server Make sure you’ve got a clean installation of Ubuntu 22.04. You can set this up on a virtual private server (VPS) from your favourite hosting provider. Avoid running the installation on a server that already has web services installed, as that can cause conflicts. 2. Root Access or Sudo Privileges You’ll need root access to your server, or at the very least, a user account with sudo privileges. If you don’t have this, the installation won’t work properly. 3. Server Specifications Here are the minimum recommended system specs for running CyberPanel: - 1 GB of RAM (though 2 GB is ideal for better performance) - 10 GB of free disk space (more if you plan on hosting multiple websites) - A 64-bit operating system (which Ubuntu 22.04 is) 4. Domain Name While it’s not strictly required for the installation, having a domain name handy will allow you to configure your website and apply SSL certificates more easily.

Step-by-Step Guide: How to Install CyberPanel on Ubuntu 22.04

Alright, with your server ready and your domain name in hand, let’s get into the nitty-gritty of installing CyberPanel.

Step 1: Update Your Server’s Software

Before you install anything new, it’s a good idea to make sure your Ubuntu system is up to date. This helps avoid problems later and ensures everything runs smoothly. To update your server, open your terminal (a place where you can type commands) and enter these two commands one after the other:

- The first command, sudo apt update, checks for the latest updates for your system. - The second command, sudo apt upgrade -y, installs those updates. This could take a few minutes, depending on your internet connection and the speed of your internet. Once this is done, your system will be ready for the next steps.

Step 2: Install Basic Tools

Now, we need to install some basic tools that CyberPanel needs to run properly. These tools will help us download and install other software in the next steps. Run this command in your terminal:

- wget is a tool that helps us download files from the internet. - curl is a tool that allows us to transfer data and communicate with servers. By installing these, you’re preparing your system for the main installation.

Step 3: Download the CyberPanel Installer

Next, we need to download a special script (a small program) that will help us install CyberPanel. To do this, use the following command:

This command downloads the CyberPanel installer script and saves it to a file called installer.sh on your server. Once the script is downloaded, you need to permit it to run. To do that, enter:

This command makes the script executable, which means we can run it in the next step.

Step 4: Start the Installation Process

Now that everything is set up, we can begin the actual installation of CyberPanel. This step will take a while, and you’ll be asked to make some choices along the way. To start the installation, type:

This command runs the installer script. Once it begins, you’ll see several options. Let’s walk through them: Choosing the Web Server You’ll be asked whether to install the LiteSpeed Enterprise (a paid version) or OpenLiteSpeed (a free version). Since OpenLiteSpeed is free and works well for most users, we recommend selecting it by typing: Full Installation vs. Minimal Installation Next, you’ll be asked if you want to do a Full installation or a Minimal installation. Choose Full installation, as it includes important tools like PowerDNS (for managing your domain names) and Postfix (for sending emails). Installing Memcached and Redis These are tools that help speed up your websites by caching data (temporarily storing it so it can be accessed quickly). If you plan to host websites that need fast performance, select yes when asked to install Memcached and Redis. Setting an Admin Password At the end of the installation, you’ll be asked to set a password for the admin user. This password will be used to log in to the CyberPanel dashboard. Make sure to choose a strong password and write it down somewhere safe and secure place. After answering these questions, the installation will continue and It may take several minutes to finish.

Step 5: Access the CyberPanel Dashboard

Once the installation is complete, you’ll be given a link to log in to the CyberPanel web interface. This is where you can manage your websites and server settings. To access CyberPanel, open your web browser and type in the following:

- Replace with the actual IP address of your server. - The :8090 at the end is the port number where CyberPanel runs. You might see a warning saying that the site is not secure. This is normal because the server is using a self-signed SSL certificate. You can click through the warning to access the dashboard. Log in using the admin username and the password you created during installation.

Step 6: Configure OpenLiteSpeed

After logging in to CyberPanel, you’ll need to configure OpenLiteSpeed (the web server that powers your websites). Here’s how to do it: - Access the OpenLiteSpeed Admin: From the CyberPanel dashboard, click on OpenLiteSpeed WebAdmin. You’ll be taken to the OpenLiteSpeed admin page. - Log in to OpenLiteSpeed: Use the default credentials: - Username: admin - Password: 123456 (or the one you set during installation). - Change the Admin Password: For security reasons, it’s important to change the default admin password. To do this, run this command in your terminal:

- Follow the instructions to change your password.

Step 7: Secure CyberPanel with SSL

To protect your data and ensure a secure connection to CyberPanel, we need to set up an SSL certificate. CyberPanel allows you to do this automatically using Let’s Encrypt, a free service that provides SSL certificates.

Here’s how to do it: - Log in to the CyberPanel dashboard. - Go to SSL > Hostname SSL. - Enter your server’s hostname (the name of your server or domain). - Click Issue SSL. This will install an SSL certificate, and your CyberPanel interface will now be secure.

Step 8: Create and Manage Websites

With CyberPanel installed and secured, you can now start hosting websites. Here’s an easy-to-follow guide to help you begin: - Add a New Website: In the CyberPanel dashboard, go to Websites > Create Website. Fill in the necessary information: - Domain Name: The name of your website (e.g., example.com). - Email: Your email address. - PHP Version: Choose a version that works with your site (the default should be fine). Once you’ve entered this information, click Create Website. - Set Up DNS for Your Domain: DNS (Domain Name System) is what helps people find your website online. To configure DNS, go to DNS > Create Zone. Enter your domain name and set the A (Address) record to point to your server’s IP address. - Install WordPress: CyberPanel makes it easy to install WordPress. Go to Websites > List Websites, find your domain, and click Manage. You’ll see an option to install WordPress with one click.

Step 9: Enable Backups

It’s very important to regularly back up your website to ensure you can recover it if anything goes wrong. CyberPanel has a built-in tool for scheduling backups. - Go to Backup > Schedule Backup. - Select the website that you want to take a backup. - Choose how often you want to back up (daily, weekly, etc.). - Select a destination for your backups (you can save them locally or send them to a remote server). Once this is set up, CyberPanel will automatically create backups for you.

Step 10: Optimize CyberPanel for Speed

To get the best performance from CyberPanel, you can make a few adjustments: - Enable LSCache: LSCache is a caching system that speeds up websites. Go to Websites > List Websites, find your website, and enable LSCache for faster load times. - Adjust PHP Settings: If your website uses a lot of PHP scripts (common for WordPress sites), you can tweak the settings. Go to Server > PHP > Edit PHP Configs to adjust things like memory limits. - Use Security Plugins: To keep your server secure, consider installing Security plugins such as CSF Firewall and ModSecurity help protect your server from malicious attacks and enhance your website’s overall security. Here’s how you can install them through CyberPanel: CSF Firewall: - Go to Security > Install CSF from the CyberPanel dashboard. This firewall helps protect your server by blocking unwanted traffic. - After installation, you can configure it by navigating to Security > CSF Configuration where you can add specific rules or adjust settings to secure your server. ModSecurity: - To install ModSecurity, go to Security > Install ModSecurity in the dashboard. - Once installed, it will monitor web traffic for suspicious activities and block potential threats. It’s an excellent tool for preventing attacks like SQL injections and cross-site scripting. Both security plugins work in the background to safeguard your server and websites, helping to prevent common vulnerabilities.

Step 11: Monitor Server Performance

After you’ve successfully installed and set up CyberPanel, it’s crucial to keep an eye on your server’s performance. Monitoring your server helps you spot any potential issues before they turn into bigger problems. CyberPanel comes with built-in tools to help you with this: - Real-Time Monitoring: Go to Server Status > LiteSpeed Status to see how your server is performing. This page shows you important details like CPU usage, memory usage, and active connections. - System Health Check: Under Server Status > System Status, you can check the overall health of your server. This includes key metrics such as available disk space, RAM usage, and the status of various services like MySQL and DNS. Monitoring these areas regularly ensures that your server runs efficiently and doesn’t run out of resources unexpectedly.

Step 12: Troubleshooting Common Issues

Even with a detailed guide, you may run into problems during or after installation. Here are some common issues you may face and how to fix them: Issue 1: Can’t Access CyberPanel Web Interface - If you can’t access CyberPanel at https://:8090, the most likely reason is that port 8090 is blocked. To fix this, open the port by running the following command on your server:

After that, try accessing the panel again in your browser. Issue 2: SSL Certificate Not Working - If the SSL certificate you issued using Let’s Encrypt isn’t working, try reissuing the certificate: - Go to SSL > Manage SSL in CyberPanel. - Select your domain and click Issue SSL again. This will attempt to reissue the certificate, solving most SSL-related issues. Issue 3: Website is Running Slowly - If your website is slow, you can enable LiteSpeed Cache (LSCache) for faster performance. You should also consider using CDN (Content Delivery Network) services like Cloudflare to speed up content delivery.

Final Overview

Installing CyberPanel on Ubuntu 22.04 may seem like a technical task, but with this detailed guide, even a beginner can complete the process with ease. By following each step, you will set up a robust, secure, and high-performing web hosting environment using the OpenLiteSpeed web server and CyberPanel’s powerful features. From basic installation to security measures and performance optimization, this guide ensures that your websites will run smoothly on your server. Whether you're hosting a personal website or managing multiple domains, CyberPanel offers the flexibility and tools you need to succeed, making it an ideal choice for anyone new to server management. Now, go ahead and explore the many features of CyberPanel!

FAQs

1. Is CyberPanel free to use? Yes, CyberPanel is completely free. There’s also a paid Enterprise version with more features, but the free version is more than enough for most users. 2. Can I install CyberPanel on a VPS with less than 1 GB of RAM? While it’s technically possible, it’s not recommended. CyberPanel runs much more smoothly on systems with at least 1 GB of RAM (preferably 2 GB). 3. What’s the difference between OpenLiteSpeed and LiteSpeed Enterprise? OpenLiteSpeed is the free, open-source version of LiteSpeed. LiteSpeed Enterprise offers premium features like better performance and more advanced caching options, but it requires a license. Read the full article

#cloudpanelvscyberpanel#cyberpanel#cyberpanelhosting#cyberpanelinstall#cyberpanellogin#cyberpanelvps#cyberpanelvpshosting#cyberpanelvscpanel#installcyberpanel#whatiscyberpanel

0 notes

Text

I'd like to go back to Linux, I think modern wine/proton/bottles/etc might even enable all the gaming I really care about, unfortunately there are no mid-weight Wayland compositors right now: the space that under X11 was filled with XFCE, LXDE, LXQT, even things like OpenBox seems basically empty.

With Wayland it is either Gnome or KDE, or the ultra light compositors like Sway that are comparable to i3, dwm and the like. Which are fine if that's what you want... I used AwesomeWM for years and some other similar systems before that, but they are very much "build your own". You have to put in a lot of work on the config file typically, and you need to add your own status bar, menu system, sys tray, etc generally. Nowadays I'd rather have *some* basics there for me already. But Gnome and KDE are just as all-inclusive and heavy on disk space as ever, if not moreso.