#effective altruism


Text

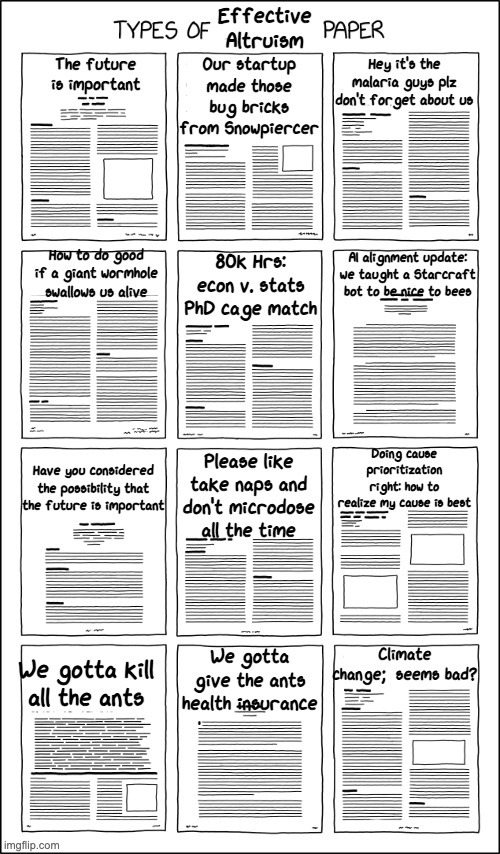

A scathing takedown of the "effective altruism" movement.

186 notes


Text

I also need to add a bit of context to your very short bit about Zizians, @strange-aeons .

TLDR: Ziz left the rationalist community 6 years ago and even at the time she wasn’t liked there, a fact she managed to exploit. Her actions directly contradict both hpmor and what CFAR was doing and teaching. Her other affiliations that are no less relevant include being an anarchist and a vegan. You are not immune to cults just because you are not into any particular weird internet subculture.

Cults form from niche subcultures, that’s true enough. But any subculture can form a cult because any culture, even the mainstream one, contains some ideas that can be twisted to an insane degree. And any ideology, even the most niche and scary one, can be approached casually and sceptically. What is actually needed to create a cult is not a special ideology but a cult leader and vulnerable people to follow them. That is the main uniting quality between all cults. Trying to figure out what’s wrong with a certain music band or a certain fantasy book forum is an exercise in motivated reasoning. You will always end up finding something that's wrong.

The rationalist community was trying to prevent the formation of a cult as best as they could. Partly that’s the reason why people like Ziz and others with bad and unpopular takes were often tolerated longer than necessary. To encourage criticism and prevent getting stuck in a positive feedback loop. Because it’s not a high control group! You cannot be simultaneously mad that people are allowed to talk about wacky ideas on forums and also that the group is supposedly very rigid and controlled. Apparently, they could use some control. Not like it would actually stop an aspiring cult leader from recruiting, they’d just go some other place.

Zizians were not mostly trans by accident (what are the chances?). Ziz was recruiting the most vulnerable people who related to her and were willing to trust her (also because there’s a lot of trans women in the community, like a lot). She used their very real and grounded experience of discrimination to convince them that her not being liked is not due to her takes being bad but because she’s trans.

And she had a lot of takes, including some you actually complained were not popular enough in the community. You criticised LessWrong for being too pro-capitalist for your taste and then started talking about the Really Bad rationalists and THEY ARE LEFTISTS killing landlords and cops.

Now if we are talking about ideologies that devalue human life, how about some that require actual Class War (guess what people do in wars) or violent mass uprisings? Or some that require assassinations of certain select individuals? How come you hear that there are people on forums discussing the ethics of murdering those directly responsible for destroying our planet and you, as a leftist, do not immediately recognize yourself in it? Nothing discussed on LessWrong is more violent than a communist revolution or even the killing of Brian Thompson.

Why is being into rationality at some point and reading hpmor the only thing you told us about Ziz? I think I know why. All the right wingers really leaned into the whole ‘trans vegan cult’ thing. That is not a good look for our side, is it? How amazing would it be to find a scapegoat. Who cares about those AI safety freaks anyway? They are all cishet men anyway! All cishet men who somehow have an offshoot of violent vegan queers, that certainly adds up.

Ziz being a radical vegan* (another niche subculture) corresponds to her actions way better than anything that's discussed on LessWrong or happened in hpmor. In fact, there’s an exact scene you probably skipped. Harry is on a very important and dangerous mission with Quirrell and at some point he is told to hide while Quirrell duels a cop guard at magical Guantanamo Bay – a total pig and an absolute scum who Ziz would kill without a second thought. Harry does share her sentiment, he fucking hates Azkaban. But when Quirrell tries to kill the evil torture cop, Harry instinctively protects him, jeopardizing the entire mission. And it’s not a random scene. It starts the entire disillusionment spiral where Harry realizes his beloved groomer professor might be a bad guy. Murder of a bystander whose only crime is being a product of his society is not something Harry can tolerate. He does end up decapitating a bunch of actual death eaters in the very end (the bit you probably did read) to save his own life and defeat Voldemort and even then he regrets it and apologizes for it (despite it being the right thing to do and not even comparable to a random cop). There’s an entire scene where Harry bonds with Draco over their mothers’ deaths where he expresses that every death is a tragedy, even deaths of very bad people (like Voldemort).

Not to mention the entire immortalism theme (did you skip the entire third book?). One cannot read hpmor and walk away thinking human life is worthless or only super geniuses deserve to live. Timeless decision theory leading to murdering people is not in there**, nothing in the fic even suggests such a conclusion. More about how you got most things wrong about hpmor here.

Let’s face it, those were a bunch of sleep deprived vulnerable people high on all sorts of radical ideas, who were kicked out of every decent movement and that’s why they slipped into a cult.

Any subculture can become a cult. If you ever read a boring preachy fanfic or ever went to a physical meeting with internet weirdos. If you ever felt rejected by mainstream society and went looking for ‘like-minded people’, for a ‘found family’, for a ‘place where you belong’. You are not safe. Touching grass from time to time is not enough. You have to never leave the pastures to be highly immune to cults. And that ain’t you, my friend. That’s none of us.

Previous post about rationalist community.

* Ever heard ‘veganism is the moral baseline’ (sometimes minimum or imperative)? That’s not a slander, it’s a commonplace argument and an actual slogan.

** The only use of timeless decision theory in hpmor is about being able to reliably cooperate with other well meaning people, not about killing anyone.

#just love hearing how ideology that is about humans actually deserving to live FOREVER#is somehow leads to disregard for human life#blew my mind away#btw zizians failing so quickly and miserably is the best proof that timeless decision theory does not lead to being super violent#else it would've fucking worked#we were all correct to clown on it i guess#zizians#rationality#rationalist community#lesswrong#eliezer yudkowsky#effective altruism#hpmor#cults#cult behavior#less wrong#yudkowsky#strange aeons#not pathologic

53 notes


Text

I can hardly believe it, but I'm actually thinking (rather impulsively) of getting a ticket and buying plane flights to the LessOnline conference in San Francisco that will be at the end of this month. My primary motivation is the one you'd expect: I want the experience of prolonged meatspace hanging-out and discussions with serious rationalist people. I could get this at the NYC Rationalist Megameetup, which is a whole lot closer to where I live, and I have attended the Secular Solstice part of it these last three years, but I don't know if I'll ever make it to the full Megameetup since December is a very stressful month for me as an academic; late May / early June, on the other hand, is a very easy time for me to take a break from work. (I wouldn't even have to tell my colleagues that I'm leaving for a weekend, let alone what I'd be doing, which I'd prefer to keep separate from my professional life as long as that's in academia.) And the NYC rationalist crowd is obviously a different one from the (much more central) rationalist crowd I'll find in the Bay Area.

The other motivation, though, is that I'm at a critical point now where I seriously want to consider a career switch to coding, but I'm extremely agnostic on whether this is a good idea, especially with the state of AI, and I have a feeling there might be a coder or two at this conference that also just might have an opinion on the future of AI.

It also looks like the total cost of travel, lodging, ticket, and main meals would come to $1000 or not much more (more than half of which would be the ticket!), which is something I can afford and doesn't feel too frivolous, especially given that I now pretty much know I'll still be gainfully employed after this summer.

One suggested activity mentioned on the LessOnline website is writing a small essay with a provocative thesis and being prepared to read the essay out loud and discuss/debate it with a small crowd. I'd be quite interested in doing this and even have an idea about what thesis I would defend (it's one I've never declared or defended head-on here, in fact).

Pros of going: I have an extremely blank and free summer ahead of me that needs enhancing with a little traveling and small diversion or two rather than just sitting with my head down trying to work on research; it sounds incredibly fun in every detail; I'd finally get to meet people I've admired from afar for years like Scott Alexander and Aella; maybe I could meet a few people I know from Tumblr as well; I'd learn something about the Bay Area programming world (which I'm entertaining thoughts of joining, if it can be in a way that doesn't involve physically moving there); I'd be inspired by and get to learn more about rationalist culture; there would be almost zero stress involved in terms of missing other responsibilities; not too many people would even have to know what I'm up to.

Cons of going: it'll still take me away from work for a long weekend; it's still an unexpected expense; travel (especially plane travel, especially across a long distance) tends to stress me out; my risk of blending my online-under-Liskantope life with the rest of my life would be increased; just as at the (much briefer) Secular Solstices, I would become depressingly aware of how not-a-true-committed-rationalist and how poor an effective altruist I am, not even having ever made a GiveWell pledge or been active on LessWrong; I would feel dumber than most of the people there and would have trouble following, let alone contributing, to many of the conversations; the sensation of being an imposter (outside of the possible essay-discussing activity mentioned above) would probably be severe. (The first three or four of these cons feel fairly minor, while the last ones give me pretty serious reservations.)

19 notes



Text

MacAskill is determined that endless economic growth—fueled by having more kids, or perhaps even just creating ‘digital’ worker-people who could take over the economy—is desirable. It’s extraordinary to us that, in a world where the pronatalist norm of bringing new people into the world remains widely unquestioned as a central aim in life, the ‘longtermists’ present themselves as being edgy by favoring ever-more births.

In the ‘longtermist’ view, the more humans there are with lives that aren’t completely miserable, the better. MacAskill says he believes that only ‘technologically capable species’ are valuable. This is why he writes that ‘if Homo sapiens went extinct,’ the badness of this outcome may depend on whether ‘some other technologically capable species would evolve and take our place.’ Without such a species evolving to ‘take our place,’ the biosphere—with all its wonders and beauty—would be worthless. We find this to be a very shallow perspective.

Perhaps it isn’t surprising, then, that MacAskill doesn’t see much of a place for non-human animals in the future. True, some ‘longtermists’ have spent a lot of time recently worrying about the suffering of wild animals. You might find it touching that they fret about such innocent creatures, including shrimp. But look out: their concern has a sinister side. Some ‘longtermists’ have suggested that wild animals’ lives are not worth living, so full (allegedly) of suffering are they. MacAskill tends toward envisaging those animals’ more or less complete replacement: by (you guessed it) many more humans. Here’s what MacAskill says: ‘if we assess the lives of wild animals as being worse than nothing, which I think is plausible … then we arrive at the dizzying conclusion that from the perspective of the wild animals themselves, the enormous growth and expansion of Homo sapiens has been a good thing’—a growth that MacAskill wants to endlessly inflate.

MacAskill excuses the proposed near-elimination of the wild by saying that most wild animals (by neuron count) are fish, and by offering reasons for thinking fish have peculiarly bad lives typically compared to land animals. But there is only a preponderance of fish because we have extirpated an even higher percentage of wild land animals. He doesn’t even consider the possibility that we ought to reverse that situation and rewild much of the Earth.

45 notes


Note

Effective altruism sounds super interesting, I'd love to hear what you learn from it (and how you apply it if comfortable?)

Hi! I'm sorry it has taken me so long to answer you. I saw your ask like the very same day, but the thing is I'm still learning about it! I was hesitant to answer you because I'm not an expert, I'm just at the very tip of the iceberg.

But, I won't leave you behind because it's something that I really think everyone should know about. So, I'll share with you the resources that can help you start getting involved, just as I am.

My journey started, honestly, with watching this video by Elizabeth Filips. There's no need to watch the video, really. At minute 14:43 she recommends the book 80,000 Hours by Benjamin Todd, for which you can get a free paperback edition here.

80,000 Hours starts with the question: How can I find a job that truly makes me happy? It answers that you need, among other things, a job that allows you to help others. How can you help the most people in the best way? That's what they describe as a "high-impact job". And in a very broad way, that's what effective altruism is.

80,000 Hours is also an organization; you can visit the website here. It has tons of articles (you can find the book scattered in the articles, but it's nice to have it all together in a book) and resources like a template to make your own career plan. When you get here (like I did just a few months ago) you'll have grasped a broad understanding of what it is.

In the link above you can see three books: 80,000 Hours, The Precipice by Toby Ord (I'm currently reading it) and Doing Good Better by Will MacAskill (I have the book but haven't read it yet). I recommend you read the three books.

80k Hours is part of the Effective Altruism (EA) movement. Here is where my knowledge starts to shrink. EA is also an organization (website). Really visit the website and read the most you can to get an idea. They also have a ton of resources.

What would I recommend for learning more about EA? Get into the EA Introductory program (I just got into one, it'll start in January but it's already closed, but they open new programs often). It lasts two months and it's completely free.

80k Hours and EA are connected, they're basically made by the same people. They give all of this stuff for free because they really think the best way we can truly make an impact is if we have more and more people with this mindset, so more and more can have a high impact.

The real question is, in my opinion, are you the kind of person who gets this mindset? Because, for example, I've tried to convince my boyfriend for the past two years that he should be here on EA and I just can't convince him. For me, just a few arguments were enough to get fully involved. When that happens, I forget the rest of the arguments because it's obvious to me now (and maybe that's why I just can't convince my boyfriend) and I just think, "how can you not see it? What else do you need??" For me, getting more and more involved (and taking action) is now a moral and ethical obligation, which I'm truly happy to do.

On a side note, I'm also very religious and the more I read about EA, the more I see it is aligned with my faith, so the more I want to do it. Note that EA is completely secular, so no religion involved, this is just my very own personal experience.

I'm sorry I'm basically referring you outside to do the reading and learning yourself, but I truly think it's the best way.

I hope this helps and if you read these books and start getting involved I'll be more than happy to discuss any questions you have and maybe we can learn together eventually. You can send me a private message if you like. You can also follow me on my sideblog, where I post my most academic stuff, and I plan to start posting about all of this. Again, sorry for taking so long to answer you.

8 notes


Text

I'm more surprised that the rationalists managed to go this long without starting a death cult:

4 notes


Text

My partner and I found an absolute parody of an evil tech company last night.

Came across this building. Didn't take a picture but Google Street View adequately captures the vibes:

A neat if slightly sinister old bank building with a...stupid ass tech company name on it? Instantly giving it the most rancid vibes imaginable with the combo. Just radiates pure evil. Already goes from vaguely haunted mean old building to they're holding EXTREMELY lame eyes wide shut orgies at a bare minimum in there.

And like it's Portland, by all means have all the weird masked orgies you want. Just make sure everyone is happy to be there, right? You get my point.

On our way home from the concert husband looks them up out of morbid curiosity. Their...goddamn about page.

You motherfuckers...I was mostly joking about the eyes wide shut vibes but here you are diving face first into making yourselves look like the cartoon Illuminati of your own free will? Who does this? How much ketamine were you all macro-dosing when you decided this was a good image to put on your own website?

The about page goes on to state:

Our story begins in 2008 when David Barrett, CEO and founder, was living in San Francisco's Tenderloin neighborhood. As David walked past unhoused neighbors on the street every day, he wanted to find an alternative way to help them without giving them cash directly.

"You can't simply give money to gross poor people. They're gross and icky!"...But okay. Everything we know up to this point painting this as a blatant lie aside, wanting to help the homeless is a noble goal. Sure Mr. David Guy, tell us more.

In 2008, David launched the card technology concept at TechCrunch50 and reframed it as an expense management system called “Expensify: The Corporate Card for the Masses!” with no plans of actually building it. But much to David’s surprise, people loved the expense reporting concept.

Successfully launching a business in 2008 already makes you the luckiest douche canoe alive. I'm pretty sure adding that you had "no plans of actually building it" makes everyone who had the dreams they bled for crushed by the 2008 recession, or who ended up desperately underwater on a house, legally allowed to kick you in the nuts at LEAST once without being charged for assault.

Long story short they made some sort of receipt scanning thing, which is at least a vaguely useful product compared to most more modern tech startups. Though I guess they were bleeding edge on the "stupid ass tech start up name" train. Yes they try to spin it like this pitch had something to do with feeding the homeless. No it clearly does not.

We’ve accomplished a lot of our dreams, and had plenty of fun along the way — from hosting conferences in Hawaii and Bora Bora to producing a Super Bowl ad with 2Chainz and Adam Scott, not to mention our annual Offshore trip. But our initial goal of helping feed the houseless has never left us.

Guess what assholes, I bet you could have FED A LOT OF HOMELESS PEOPLE WITH THE MONEY YOU SPENT GOING TO HAWAII, BORA BORA, AND MAKING THAT FUCKING SUPERBOWL AD.

Apparently in 2020 they finally launched a quarter assed charity thing that's just a rewards credit card that ~~launders~~ donates your rewards points to their charitable foundation. Only took them 12 years to almost sorta barely get on that whole feeding the homeless goal. Except not really. It's the rich guilt rewards card? Their charity specifically offsets harm your purchases might have caused! I'm not even kidding!

The funds are distributed to a purchase-appropriate cause — for example, booking a flight triggers a donation to plant trees to offset carbon emissions

we are truly living in the dumbest timeline.

#tech bros#late stage capitalism#i don't even know what to tag this my brain is dying#cringe Illuminati lmao#effective altruism

4 notes


Text

For perspective, we’re currently allowing around 6 million to die of preventable diseases over the next four years just to reduce our annual budget by 0.1%.

4 notes


Text

TL;DR: these are kid-smacking low-key eugenicists who give their children names like “Industry Americus” and think that keeping a home warm is a pointless extravagance.

The Guardian profiles pronatalists Malcolm and Simone Collins, and boy, these people are so full of themselves. Their commitment to effective altruism (you know, the same movement championed by Sam Bankman-Fried, who’s now in prison) somehow leads them to … not heat their home in winter?

as effective altruists, they give everything they can spare to charity (their [personal] charities). “Any pointless indulgence, like heating the house in the winter, we try to avoid if we can find other solutions,” says Malcolm.

And, for a couple who wants to have lots of babies, they don’t seem to like children very much.

The Collinses believe in childcare, but not maternity leave: Simone has never taken any. She will have the day of her C-section off “because of the drugs,” but will take work calls from hospital the day after. She tells me it’s because she’s “bored out of my mind” when she’s stuck with a newborn. … Malcolm tells me how much he doesn’t like babies. “Objectively, they are trying and they are aggravating. They are gross. This little bomb that goes off crying in this big explosion of poo and mucus every 30, 40 minutes. And it doesn’t have a personality, really.”

As for what that childcare looks like? They learned it from watching wild animals. For real.

Malcolm tells me that he and Simone have developed a parenting style based on something she observed when she saw tigers in the wild: they react to bad behaviour from their cubs with a paw, a quick negative response in the moment, which they find very effective with their own kids.

Lovely couple, these two.

#pronatalism#pro-natalism#pronatalist#pro-natalist#natalism#natalist#population#effective altruism#eugenics#Malcolm and SImone Collins#Simone and Malcolm Collins

13 notes


Text

The Morals of Madness

(This column is posted at www.StevenSavage.com, Steve's Tumblr, and Pillowfort. Find out more at my newsletter, and all my social media at my linktr.ee)

I’m fascinated by cult dynamics, because they tell us about people, inform us of dangers, and tell us about ourselves. Trust me, if you think you can’t fall into a cult you can, and are probably in more danger if you think you can’t. Understanding cults is self-defense in many ways.

On the subject of the internet age, I was listening to the famous Behind the Bastards podcast go over the Zizian “rationalist” cult. One of the fascinating things about various “rationalist” movements is how absolutely confidently irrational they are, and how they touch on things that are very mainstream. In this case the Zizians intersected with some of the extreme Effective Altruists, which seemed to start by asking “how do I help people effectively” but in the minds of some prominent people became “it’s rational for me to become a billionaire so I can make an AI to save humanity.”

If you think I’m joking, I invite you to poke around a bit or just listen to Behind the Bastards. But quite seriously you will find arguments that it’s fine to make a ton of money in an exploitative system backed by greedy VC because you’ll become rich and save the world with AI. Some Effective Altruism goes all out in arguing that this is good because you save more future people than you hurt present people. Think about that - if you’ll do more good in the future you can just screw over people now and become rich and it’s perfectly moral.

If this sounds like extreme anti-choice arguments, yep, it’s the same - imagined or potential people matter more than people who are very assuredly people now.

But as I listened to the Behind the Bastards hosts slowly try not to lose their minds while discussing those that had, something seemed familiar. People whose moral analysis had sent them around the bend into rampant amorality and immorality? An utter madness created by a simplistic measure? Yep, I heard echoes of The Unaccountability Machine, which if you’ve paid attention you know influenced me enough that you are fully justified in questioning me about that.

But let’s assume I’m NOT going to end up on a Behind the Bastards podcast about a guy obsessed with a book on Business Cybernetics, and repeat one point from that book – obsessive organizations kill off the ability to course correct.

The Unaccountability Machine author Dan Davies notes some organizations are like lab animals who were studied after removing certain brain areas. The animals could function but not adapt to change at all. Organizations that go mad, focusing on a single metric or two (like stock price), will deliberately destroy their own ability to adapt, and thus only barrel forward and/or die. They cannot adjust without major intervention, and some have enough money to at least temporarily avoid that.

The outlandish “future people matter, current do not, so make me rich” people have performed a kind of moral severance on themselves. They have found a philosophy that lets them completely ignore actual people and situations for something going on in their heads (and their bank accounts). Having found a measure they like (money!) they then find a way to cut themselves off from actual social and ethical repercussions.

If you live in the imaginary future and have money, you can avoid the real, gritty present. A lot of very angry people may not agree, but at that point you’re so morally severed you can’t understand why. Or think they’re enemies or not human or something.

Seeing this cultish behavior in the context of The Unaccountability Machine helped me understand a lot of outrageous leadership issues we see from supposed “tech geniuses.” Well, people who can get VC funding, which is what passes for such genius. Anyway, too many of these people and their hangers-on go in circles until they hone the right knife to cut away their morality. Worse, they then lose the instinct to really know what they did to themselves.

Immorality and a form of madness that can’t course-correct is not a recipe for long-term success or current morality. Looking at this from both cultish dynamics and The Unaccountability Machine helps me understand how far gone some of our culture is. But at least that gives some hope to bring it back - or at least not fall into it.

And man I do gotta stop referencing that book or I’m gonna seem like I’m in a cult . . .

Steven Savage

www.StevenSavage.com

www.InformoTron.com

#the unaccountability machine#psychology#cults#ethics#morals#effective altruism#zizians#behind the bastards

2 notes


Text

Hello, @strange-aeons . I’ve been a fan of yours for several years. Unfortunately, your last video is very poorly researched, up to not understanding basic definitions. Please read at least a little bit of this.

TLDR: Rationality and rationalism are two different things. Rationality is not about reveling in being right, it’s about searching for the ways in which you are still wrong. And just subscribing to a philosophy is not enough to make you perfect, no one has argued that. Yes, MIRI failed and we aren't hiding from this fact, the community is pushing for regulations or a total ban on AI capabilities research at the moment. The community is very diverse and very queer. SA happens in any group and demographic, it’s a pretty disingenuous way to discredit us.

First, 101 rationality understanding real quick. It’s rationality, not rationalism. Rationalism is a philosophy about Pure Reason being enough for getting the accurate picture of reality. That is bollocks. Turns out you have to actually do science*.

Epistemic Rationality is basically about doing science. As in, trying to obtain the most correct picture of reality by all means available. Then Instrumental Rationality is about trying to make the best decisions with this information. It’s all very common sense and I would guess you’d actually agree with most of it if you just stopped strawmanning. For example:

I saw you agree with the assessment that rationalism is a philosophy that proclaims itself to be correct. I assume you actually think that the rationalist community is this way. That reminds me of people who attack science for ‘those big brain jerks think they already know everything!’ betraying a lack of rudimentary grasp on what science is. In both theory and practice it’s mostly about searching for the places where you are still wrong (to become less wrong, get it?) and correcting your mistakes time and time again**.

It’s actually very similar to how we leftists approach social issues, always checking our privileges, always listening to minorities and always expanding our understanding of oppressive structures. Can being a leftist make someone a bit arrogant and insufferable as if they’re already perfect and have nothing more to learn? Many such cases. Same with rationalists. It’s the Dunning–Kruger effect, it’s the same for every field where the point is to become better. People start, quickly learn a lot, become a bit annoying about it, then they are humbled mostly by members of their own community.

Second, you decided based on vibes that the rationalist community is a bunch of sexist elitist tech bros. You didn’t collect testimonials like you often do for your other projects. It looks like you just read a bunch of articles and listened to podcasts made by other biased individuals and maybe looked for ridiculous sounding threads on forums to confirm your suspicions*** (or just took them at their word).

And while it is generally true that ‘something only men are interested in is never cool’, LessWrong adjacent rationality is not that. It’s a giant worldwide community that is very diverse. It is maybe half shy nerdy guys and the other half is women and queer people, also shy and nerdy (read neurodivergent, overwhelmingly). It’s the most pro-feminist and sex positive community I personally encountered outside of feminist and queer communities themselves – and it’s in Russia, even rationalists aren’t too woke over here. The situation is way better in Europe from what I’m able to see in the group chats. All my friends are rationalists and all of them are queer (I do talk to other people, don't you worry, I watch you!).

The community is politically diverse as well. While the overwhelming majority is liberal, there are many left-leaning people too. There are (unfortunately) many libertarians in the mix and a few conservatives (those who don’t mind being disagreed with most of the time). The thing is the community tries to discourage political tribalism and foster an issue-by-issue discussion instead. So there’s always a percentage of people who disagree with the consensus opinion on every topic, discussion is always happening. And because people are trying to be all evidence-based over there, minds are actually being changed****.

The community isn’t without its biases. The entire MIRI idea was based on this fantasy of a group of math geniuses saving the world, finding the perfect solution even though no one believed in them (including many people within the rationalist community itself). Well, now the realization kicks in that they failed and the problem of AI alignment is way more complex than they thought (if solvable at all) and the actual solution is to push for AI regulations. That’s the state of the AI safety conversation right now. It’s either ‘shut it down’ or ‘put them under the heaviest scrutiny’. Almost no one thinks they have the time to change the course of the iceberg anymore. And yes, they do focus on existential risks but it’s not like they appreciate all the harm AI does on its way to destroy the world.

I’m not going to elaborate too much on how rationality techniques improve my life in ways big and small or we’ll be here all day (it’s mostly problem solving and conflict resolution). But the crucial thing is that LessWrong never claimed humans are perfect robots or have a potential to be perfect robots or can be easily turned into perfect robots. How shit human brains are at actual reasoning if left to their own devices is the entire point (that’s why it is not rationalism in any way).

An analogy sometimes used is martial arts. People can fight with no training, they have some inborn fighting capabilities. But hoo boy does training help. Actual training, years of practice, the constant effort of keeping yourself in shape. Not a correct philosophy or one course. Does this sound exhausting? Well, you are neither a scientist nor a sportsman. But some people really do take self-improvement seriously and really are this ambitious. Most are practising recreationally, however. And it’s fine. Few people who run twice a week believe themselves to be Olympic runners. Few churchgoers believe themselves to be monks. Few regular leftists believe themselves to be revolutionaries. And few casual rationalists believe themselves to be big-time researchers or scientists. But exercising a little is still better than rotting on the couch.

Speaking of couches. As I mentioned, the community is very sex positive. Very kink friendly, very supportive of polyamory and trying out new things just to experiment. Even in a perfect world with no patriarchy involved there would be a bunch of drama caused by just regular human behaviour. Unfortunately, there is patriarchy involved and there are a lot of women in the community. So, a bunch of sex scandals did happen. But framing it as ‘these people don’t respect women, what a surprise’ is highly misleading. By that line of reasoning you could discredit any movement or demographic. This tactic is indeed used by the right all the time against trans people, immigrants, the Democratic Party, you name it.

And LessWrong isn’t a country. If women felt unwelcome, they’d leave, like they leave industries and fandoms. Like they leave most ‘intellectual’ communities because they are often hostile to women and queer people. LessWrong is a rare exception and women rarely leave it. They feel very welcome, in fact. They own the place in many cases (like my first local meetup in a Russian city, which was run by a wonderful lady).

Yes, it’s not perfect and people are constantly trying to make it better. But it's a general patriarchy thing that was not caused by LessWrong or rationality. The same way there’s rampant misogyny on the left as well and we talk about it but we aren’t cancelling the left, are we?

More about Ziz here.

About hpmor here.

* There’s a saying ‘Logic is true in any universe, but it doesn’t tell you which universe you are in’.

** In fact, even calling yourself a 'rationalist' is something that's being challenged. A preferred term is an 'aspiring rationalist', to emphasize that no one here is actually rational, that we all are just trying to be a bit more rational to the best of our abilities. I personally don't use it but many people do.

*** Forums are very big and all sorts of controversial topics are discussed there. It’s not surprising to find threads about accelerationism or longtermism or decision theory that lead to ridiculous conclusions. All of those are controversial but people need a place to talk about it, that’s the whole point.

**** It is way more difficult on political issues than on random science topics. I hope I don’t have to explain why.

#i know she won't read it and nobody will but maybe tldr at least#sigh#i just need to get this out of my system because this video made me real mad#now i wonder if all of her videos are this bad but for others she was at least actually interviewing people#here she didn't even try reaching out#just went with her gut feeling on this one#rationality#rationalist community#eliezer yudkowsky#effective altruism#hpmor#not pathologic#strange aeons#lesswrong#less wrong#yudkowsky

25 notes

Text

The Philosophy of Effective Altruism

Effective Altruism is a philosophy and social movement that emphasizes using evidence and reason to determine the most effective ways to improve the world and help others. It combines the altruistic desire to do good with a rigorous, results-oriented approach to maximize the positive impact of charitable actions.

Key Principles of Effective Altruism:

Maximizing Impact:

Effective altruism focuses on ensuring that the time, money, and effort devoted to helping others yield the greatest possible impact. This involves identifying causes, interventions, and charities that provide the most benefit per unit of resources.

Evidence-Based Approach:

Central to effective altruism is the use of evidence and data to assess which interventions and organizations are most successful. This often involves evaluating scientific research, performing cost-effectiveness analyses, and assessing the tangible results of various efforts to help.

Cause Prioritization:

Rather than spreading resources across all causes, effective altruism advocates prioritizing certain areas where the need is greatest, or where the most lives can be saved or improved. This could include global poverty, animal welfare, or existential risks such as climate change or artificial intelligence.

Long-Term Thinking:

Effective altruism often involves consideration of the long-term consequences of actions, including potential effects on future generations. Altruists might consider how present actions can help reduce future risks or improve the well-being of future individuals.

Moral Cosmopolitanism:

Effective altruism operates on the principle that all human lives (and sometimes animal lives) are of equal value, regardless of where a person is born or lives. This means that people should focus on causes that provide the most help to those in the greatest need, even if they are far removed geographically.

Personal Responsibility and Earning to Give:

Some proponents of effective altruism believe that individuals can do more good by earning a high income and donating a significant portion of it to highly effective causes, a concept known as "earning to give."

Openness to Self-Improvement:

Effective altruists constantly seek feedback and are willing to change their actions or strategies based on new evidence or better reasoning. The movement emphasizes flexibility and continuous improvement in pursuing altruistic goals.

Criticisms of Effective Altruism:

Narrow Focus: Some critics argue that the focus on measurable outcomes can lead to neglecting important, but harder-to-quantify, causes such as systemic social change or cultural initiatives.

Elitism: The emphasis on high-income individuals "earning to give" can create perceptions that effective altruism is only accessible to wealthy or highly educated people.

Overemphasis on Utilitarian Calculations: The movement's utilitarian focus on maximizing good outcomes can lead to difficult ethical decisions, such as favoring saving a large number of lives in the future over addressing pressing issues in the present.

The philosophy of effective altruism combines moral concern for the well-being of others with pragmatic reasoning to ensure that altruistic efforts are as impactful as possible. It encourages individuals and organizations to critically evaluate their charitable actions, seek evidence-based solutions, and prioritize causes that yield the greatest benefits for society.

#philosophy#epistemology#knowledge#learning#education#chatgpt#ethics#Effective Altruism#Maximizing Impact#Evidence-Based Charity#Cause Prioritization#Global Welfare#Moral Philosophy#Long-Term Thinking#Ethics of Giving

3 notes

Text

Three weeks ago I attended the NYC rationalist Secular Solstice and, as I said back at the time, I've been intending to record something about my experience there like I did the last two times. I'm not sure I have as much to say this time around (or not as much interesting to say), and also some of my memories already aren't as fresh since three weekends ago, but here are some comments about the evening.

First of all, my strong feeling about the whole thing is that, while the solstice event itself is great and well done and worthwhile, just showing up for the solstice event is just not the way to do things: it's only one part of a much larger rationalist "megameetup" which -- I now get the feeling more strongly than ever -- would be really fun and interesting. I was convinced enough of this the previous time to make some effort (limited by the sheer hecticness of my November this past year) to figure out a way to be in NYC for the whole weekend, preferably without paying tons of money for lodging. But I just couldn't figure out any feasible way to do this, in particular because of the timing right around my students' final exams. I wish I had managed somehow, though: the people who attend the solstice event are just so visibly interesting and engaging and overall seem fun to spend a whole weekend with; and I imagine I could learn a ton on rationality- and EA-related topics if I did the Megameetup.

Anyway, I managed to arrive late again to the start of the event (for at least the second year in a row), just because I had too many things to take care of at my home before setting off for NYC, and Hofstadter's Law always applies to my journey to any particular part of NYC. What's funnier is that I also missed the beginning of the "second act" as I got caught up in conversation with several people during the intermission who weren't actually doing the solstice and didn't realize I was, and I didn't realize for a while that Part 2 had begun. I followed the semi-tradition (at least from two years ago) of arriving at and doing the event on no dinner, but some free snacks provided by one of the organizers outside the room helped a lot here.

I found the songs as meaningful as always and recognized many of them -- the main one that's stayed with me since is "Bitter Wind Blown", but there were some others that I remembered distinctly. There was a song I didn't recall from previous programs where part of the chorus was about not being able to "find my tribe, find my tribe, find my tribe", and I found that one spoke to me quite a lot. Altogether I'm ready to forcefully repeat what I've said after previous rationalist solstice events: the content of this music and the vibe of the whole event touches me more deeply than any type of religious service I've been to.

At the same time, I feel that my mind was elsewhere this time perhaps even more than the last time. I was tired (partly just from having rushed for hours to get there mostly on my own steam) and had generally been stressed out for weeks, and somehow the continuity of doing this for the third consecutive year brought up feelings that weren't entirely positive: I feel like my life is in a holding pattern over the past three years, with being at the same non-permanent job and not knowing what the next step is (along with, of course, being perpetually single and having no idea still in my late 30's where to expect my personal life to go). At no moment is this brought to my attention more forcefully than the part where members of the audience are asked to stand up in stages based on their involvement in EA, and there's still not much I can say for myself on that front -- I can't bring myself to do much until I know better what my financial and professional future looks like, and I hope this will change in the near future. Moreover, I began thinking of how this was the first rationalist event I'd been to where I could say that a solid decade ago I already knew and was very enthusiastic about the rationalist movement and had (a decade earlier) dreamed of becoming heavily involved, and ten years later almost none of that has happened -- it's not something I long for in most of my day-to-day life, but there's something inspiring about seeing some of the people at the forefront of the main Northeastern US branch of it in their element organizing stuff. Regardless of all this, for me there's something wonderfully moderately relaxing about singing along with a bunch of mostly-strangers that I still managed to enjoy quite a lot.

A major, major plus to the arrangements this year was that the solstice event was at the same convention center as the entire Megameetup, and in particular this meant that the after party was directly within and outside of the room the solstice ceremonies had taken place in. It's hard to overstate how much easier this made the evening as a whole (especially when compared to my experience two years earlier when I had made the mistake of attending by car!). My time at the after party was still limited, as I had to think about making the journey home without being up most of the night, but I was there long enough to have a bunch of conversations and appreciate how delightfully visibly autistic and rationalist-y and distinctly young-to-early-middle-age the whole crowd was. Again, the after party made me wish I could have spent the whole weekend and actually gotten to know more of the people there.

In terms of meeting familiar faces/handles, I saw a lot of @drethelin, whom I'd gotten to know in person at the previous NYC Secular Solstice (after being acquainted with him from Tumblr and SSC comments sections for most of the past decade). My impression of him as an unfailingly pleasant and affable person has been further cemented. He had to listen to way too much of me grousing over the academic job market and having no idea how things will be for me geographically in a year's time (primarily this was in the context of being able to attend further NYC rationalist events). In addition, I saw a bit of @taymonbeal but didn't speak to him beyond literally just saying hello and almost certainly not giving him enough time to have any idea of who I was. I'm pretty sure that during the event I was sitting directly behind Zvi and his family, but I remembered less about Zvi than I used to back when I was seeing his blog posts regularly (I don't know why I don't anymore) and remember only that I used to follow his Wordpress(?) blog and that I think he gave a speech at the solstice event two years ago. Again, I was at the cusp of cementing a number of new acquaintances, but time didn't allow me to pursue this much. Conversation was always fun and interesting. An unexpected theme was dating prospects for a single guy seeking women in different parts of the country (in which several people emphasized the usual wisdom that NYC is the best place for this), which I don't recall actually bringing up myself, and which at one point led to advice about how to increase my own dating prospects: I was told to... I think the phrase was "make myself bigger", which was clearly a euphemism for building up the muscles in my upper body (a goal I've already had for a while but am currently barely any closer to figuring out how to do).

The gender ratio was not great, and there was definitely (as one may guess from the subject matter just mentioned) a "young-ish nerdy male" vibe in the after party, but most everyone appeared to be enjoying themselves and not to be hiding in their shells, whether a guy or not.

I had quite a journey just to get to the secret free/available parking spot where I had left my car (in a different part of the city altogether), and just around midnight I was buying a gyro for a very late supper from an outdoor seller and thinking over how one day not long from now I may lead a more responsible and tied-down life and feel nostalgia for the time when I could be alone on a public street at midnight in freezing cold getting food. Then I had a very spooky but not-unpleasant-to-look-back-on experience wandering the completely vacant streets of a park near one in the morning looking for my car before I had to make the long drive home (during which, strangely, I managed to stay wide awake much more easily than on the drive there).

I'm tempted to resolve never to attend the NYC Secular Solstice again without attending at least part of the Megameetup or without living closer to NYC, but we'll see. (There actually is a small but not insignificant chance, which I didn't have evidence of three weeks ago, that I may get a job closer to the city than I am now.)

(I kept my name tag somewhere in my home -- it shows my handle on one side and my IRL name on the other, so I guess if I die and IRL people look through my stuff one day they'll figure out I'm Liskantope, although probably there was some way or other to figure out such a thing anyway by looking at what came from my IP addresses or whatever.)

#personal story#rationalist community#secular solstice#effective altruism#nyc#dating and relationships

19 notes

Text

The thing that gets me about Effective Altruism that I haven't seen in public conversations, is when they're talking about how you can have the most impact by making more money to give more to charity, I have to think like, okay, but what about the people actually doing the thing?

Like, I know this is not an original argument, it's just not spelled out in videos and whatnot, but when you're spending tons of money on your calculated highest impact charitable cause, you are still paying other people to do things. Money doesn't magically make things better. The actual work has to be done by hundreds or thousands of people across the world. Even if you're giving money directly to the poor (a good thing, and quite effective), you've got a whole lot of administrative work being done to get that money where you need.

Like, it's a philosophy that not everyone can follow, not just because not everyone is lucky enough to get that money, but because there still have to be people to actually go out in the world and do the thing.

16 notes
