#fails. too many variables/too much data


Text

Every Life is on Fire / Jeremy England

#excerpts#i hav to be honest im thinking about astrology here#wherein the birth chart is a predictive model (of one's personality/inclinations)#but astrology on a larger scale eg predicting the stock market or the outcome of a sports game#fails. too many variables/too much data

2 notes


Text

Popular misconception: The core of Jurassic Park is about dinosaurs. It's not. It's about this guy...

It's easy to think that, given how every movie centers on the prehistoric creatures. I mean, the title is JURASSIC Park or World. Heavy emphasis on dinosaurs.

But if one were to look at the entirety of the franchise, even go as far as reading the novels, Jurassic Park seems more focused on the chaos. Cause and effect. Complex systems failing. Unpredictability.

Kind of sounds like a certain scientist now, doesn't it? In the first film, Ian Malcolm makes a speech in the nursery. While the speech is triggered by the raptor, it's not about the newborn or any other animal. He speaks on genetic power.

Ian tells how the hubris exhibited by the staff and John Hammond is a fallacy because manipulating genetics at Henry Wu's level is an untested field with unforeseen consequences. The dinosaurs are more than teeth here, they are variables. And variables can skew data.

While we see the dinosaurs running around eating people, the main narrative is summed up nicely in a popular quote from, yes, Ian: "Life finds a way."

No matter how many fences are put up or how many gene sequence gaps get filled, nature always wins. We see this with the natural repopulation by the dinosaurs in the wild. We see this even with the ending: nature handling nature as the Rex kills the Raptors. They are variables settling as the status quo shifts.

Where am I going with this? Jurassic World Dominion.

One of the biggest complaints I've seen for the sequel is that there was too much focus on the bugs and not the dinosaurs. That Fallen Kingdom promised dinosaurs in the mainland, not some locusts.

I disagree with them. Aside from the bugs, the dinosaurs had plenty of screen time and were even in Malta!!

I think the insects were a great idea, but implemented poorly. Could've been poor writing. Could've been COVID reasons during production. I like to believe that the locusts suffer from being shoved into a movie that really needed to be 2 parts. If Jurassic World Dominion had been 2 parts, they could have kept the locusts and fleshed them out more AND included dinos in cities.

I'm digressing.

Dominion and the locusts represent the peak of the abuse of genetic power, told in Jurassic terms. Left unchecked, the locusts could cause the extinction of the human race, all because BioSyn was blinded by its own hubris. Lewis Dodgson is dark John Hammond.

Jurassic World and Fallen Kingdom said the same thing about genetic power with hybrid monsters. Hoskins talked about Raptors eating terrorists "belt buckle and all." A black market emerged. People died. All because humanity discovered how to manipulate genetics without understanding the consequences.

So, yeah, Jurassic Park and Jurassic World revolve around action scenes with dinosaurs and a beautiful shot of them here and there. But the core of the franchise, the main narrative, is not about shoving dinosaurs in front of the camera and watching them fight. It is and always has been about genetic power and the mishandling of it by arrogant men.

45 notes


Text

Ferrari and the Consequences of Not Looking Forward: A Very Long Rant

After a disappointing qualifying performance at Imola, the home grand prix for the Scuderia Ferrari F1 team, the frustration felt by fans has reached a boiling point. The popular question asked by many is, "how is it possible for a team such as Aston Martin, who is typically seen out in Q1, to out-qualify Ferrari?" Many point to Aston Martin's tire strategy as the reason for their success, and ask why Ferrari didn't see the switch to medium tires that Aston Martin and Mercedes made and follow suit. The answer is painfully simple: Ferrari, at this point in time, is incapable of seeing the faults responsible for their failures, so they can't even see why other teams succeed. The variable that Ferrari, like so many other teams, likes to blame for their slow fall from grace is the drivers. Ferrari's newest driver, seven-time world champion Lewis Hamilton, is struggling to adapt to the new car and team, which is to be expected. What is not expected, and what highlights the issues that Ferrari has, is for longtime Ferrari driver Charles Leclerc to struggle with the car as well. Leclerc and Hamilton are both undoubtedly talented drivers, and while some may jump to call them "washed" or "not as good as they used to be", no different combination of drivers would be able to mend Ferrari, because what is underperforming is not the drivers, it's the car.

For a long time, Ferrari was regarded as the team with the car that all the other teams should strive to have. However, in the present, the best car belongs not to Ferrari but to McLaren. As is obvious when you consider the current Constructors' Championship positions, Ferrari has fallen far from their place as the team with the best car. So, what's changed? The answer: nothing.

What I theorize to be the issue with Ferrari is that the team is hesitant to accept, or completely oblivious to, the fact that they no longer have the best, or even a good, car. The legacy that Ferrari has as the titan of F1 haunts them to such an extent that it has blinded Ferrari to the fact that they are falling behind other teams in terms of improvement. It appears that Ferrari has spent too much time dwelling on past successes to even see their current failures. In short, Ferrari is so obsessed with their reputation as a top team that they fail to focus on maintaining their position as one, and it is no one else's fault except their own.

The removal of a driver such as Carlos Sainz, who takes a more active role in engineer meetings and in reviewing data than perhaps other drivers, and who has more knowledge in that area, resulted in a situation in which Ferrari no longer has someone who knows, or is willing to call out, the team's shortcomings. Both Hamilton and Leclerc seem more willing to blame themselves than the team or car. This habit of blaming the drivers is detrimental to Ferrari as it has caused them to enter such a profound state of denial. Charles Leclerc's increasing willingness to voice his frustrations with the team may prompt Ferrari to stop looking back and instead look forward at their failures and rapidly approaching downfall. However, if Leclerc and Hamilton's complaints fall on deaf ears, the future of Ferrari looks bleak, as the drivers themselves cannot fix the car. Ferrari's strategy and communication are also factors in the team's failures, but no improvement in strategy or team-to-driver communication will be able to fix the issue of the car. Pointing fingers at different people will not atone for the fact that Ferrari's problem is not a person or persons, but the exact thing that is meant to carry the team to victory.

4 notes


Text

Saving Endangered Animals Will Help Save Us, Too. (New York Times)

Excerpt from this Margaret Renkl Op-Ed from the New York Times:

We were living in a cataclysmic age of mass extinction and climate instability even before the election. Now the climate denier in chief is poised to gut the environmental protections that do exist. Even so, conservation nonprofits are struggling to raise the funds they need to challenge his wrecking-ball agenda in court. The people who care are feeling defeated, and the fight has not yet begun.

I was already grieving, and the approach of Remembrance Day for Lost Species, which falls each year on Nov. 30, didn’t help. Was this really the best time to pick up “Vanishing Treasures: A Bestiary of Extraordinary Endangered Creatures” by the dazzling British author and scholar Katherine Rundell? Did I really want to read another book about how so much of life on earth is close to ending?

As it turns out, this is the perfect book to read in the aftermath of a planet-threatening election. In times like these, terror and rage will carry us only so far. We will also need unstinting, unceasing love. For the hard work that lies ahead, Ms. Rundell writes, “Our competent and furious love will have to be what fuels us.” This is a book to help you fall in love.

Among the 23 endangered creatures she celebrates in “Vanishing Treasures,” the last on the list is humans. This is not a sly overstatement to make a point. How will we grow crops if we lose the pollinators? What medical advancements — like the GLP-1 drugs, derived from a study of Gila monsters, that now treat diabetes and obesity — will we miss if reptiles go extinct? Which diseases will run rampant in our communities if scavengers are poisoned out of existence?

We have hardly begun to understand how inextricably our health and safety are intertwined with those of our wild neighbors. Data is hard to come by, in part because controlling for variables is so difficult outside a lab. But when a fatal fungal disease called white-nose syndrome began to wipe out bat colonies in 2006, researchers realized it might offer a way to study the direct effect on humans of a population collapse in wildlife. In September, the journal Science published a study that linked the sudden loss of bat colonies to a spike in infant mortality.

Bats feed on many insects that would otherwise feed on crops. Researchers discovered that in counties where bat colonies were destroyed, farmers compensated by using more insecticides — on average 31 percent more — than they had used when bats were helping to keep insects in check. In those counties with higher levels of insecticide use, infant mortality was also 8 percent higher, on average, than in counties with healthy bat populations. A spike in environmental toxins explained the spike in infant mortality, but the real reason more than 1,300 babies died was the disappearance of bats.

Ms. Rundell calls bats “the under-sung ravishments of the night.” They deserve to exist because they are a treasure of the natural world, not because they help us grow food and keep our babies safe from neurotoxins. Nevertheless, to protect the bats is inevitably to protect ourselves.

Wildlife populations are disappearing at an unthinkable rate, but we are getting nowhere in the effort to curb emissions or to protect the habitats of the creatures who yet survive. In 2022, the world’s nations made a historic pledge to keep 30 percent of the earth wild. So far most of those countries have failed to produce a plan for doing so.

We can’t keep waiting for our leaders to save us. We need to wake up every morning looking for what we can do, collectively and individually, to buy enough time for our leaders to get this right. In a time of darkness, what light is left to us?

A highly incomplete list would start with eating less meat and dairy, a change that would reduce deforestation and climate-altering emissions. We could buy less stuff to reduce transportation emissions and raw-material extraction.

Poison harms not just babies, but every creature in the food chain. Instead of poisoning our way out of inconvenience, we can practice a policy of peaceful coexistence by avoiding insecticides and rodenticides. We can give the night-flying insects back to the bats. We can give the rodents back to the owls and the foxes. We can leave the leaves where they fall to foster habitat for overwintering insects. We can turn off outdoor lights at night to protect migrating birds and nocturnal animals.

When we must drive, we can drive slower, particularly on roads through wild areas. Last month a Yellowstone-area grizzly bear, beloved by the many people who knew her from social media and a PBS documentary, was hit by a car and killed. It was a tragic accident, nobody’s fault, but every year millions of non-famous animals — and some 200 people — are also killed in collisions between wildlife and cars. Wildlife crossings can significantly reduce these encounters. So can simply slowing down.

Such measures are small at best, but if we adopted them all and adopted them together — and especially if we used them as first steps toward growing awareness and greater change — we could make a measurable difference even without the help of feckless government officials.

2 notes


Text

I fell for propaganda and was turned against those I have always wanted to root for

I wanted to send this to the CDC somehow, but the email contact form on their website has a character limit and I'm incapable of being concise. I thought this might be helpful for some people to see because it took me a while to reflect on.

During the COVID-19 pandemic, living in Florida, being young and trans and traumatized by the current political climate and dangers posed by the pandemic, I was swayed by likely a mix of propaganda and a fear and anger response to the amount of stress that time came with. I found myself trusting in the CDC less because of several things that I never fully examined until recently. It was all just a mix of fear and hopeless rage about public health and my fears about our political climate, and much of that was directed at the CDC. Upon examining this recently, I think this was because I assumed the CDC had more power than it may actually have in enforcing public health. I thought isolation periods could be more solidly mandated, that mask wearing could be solidly mandated, and so on. I assumed the CDC had more control over when schools reopened for children (I now realize a lot of this is controlled by states individually or even more locally), and in my fear of the pandemic and distrust in the CDC sowed by being worried about the country as a whole, I even failed to fully weigh the consideration that virtual learning has a significant impact on anyone’s mental health and that for children especially, social and emotional development should be fostered and that is an issue that gravely concerns mental health extending to the rest of their lives. I thought the CDC could require employers to keep allowing employees to have sick days when testing positive, so they wouldn't have to make a choice between risking their job and livelihood versus strangers’ physical health and possibly risking permanent damage or death for some with no way to tell (I'm grateful that the risk has been reduced so much by vaccines/boosters and being cautious with masking and washing hands, but I feel it is so important to allow isolation away from work when it concerns transmission and health and recovery).
I particularly was swayed more into distrust when I heard that Delta airlines wrote a letter asking the CDC to update isolation periods for vaccinated individuals who would still be required to mask, believing there was no new data to give confidence to such a change in recommendations (10 day isolation period to 5 day isolation and next 5 days with a mask), but found there explicitly was reasoning given on the CDC update from that time available to view on the website’s archives (these have been very helpful because the time of all of this was an emotional traumatizing blur, so specifics are hard to remember). Before I examined this all more after the fact, this led me to believe that the CDC was influenced by economic concerns and the workforce instead of public health and keeping those workers alive and healthy, and furthered my distrust.

I am glad that now I have further examined where this distrust has come from and found that it was irrational on my part, and I regret that I carried on with this tainted view of the CDC for so long. I have struggled with this because I did have a strong trust in the CDC and felt more unsure of where I should find reliable information, knowing the CDC certainly has more expertise than I and has likely devoted a lot of time and research to any particular consideration I might come up with. I hope if others were similarly swayed by political propaganda that sought to utilize fear and stress from the pandemic, that they too come to reexamine how they came to think that way and find trust in this institution of scientists who are clearly passionate about public health and finding ways to keep all of us safe with many unpredictable variables to consider. I feel very ashamed that I allowed my trust in the CDC to be shaken to this extent. I hope scientific research, public health concerns, environmental concerns, and any crisis that requires humanity to understand facts and cooperate is taken more seriously and listened to from experts in each respective field and not turned into political opinions one way or the other. I am so devastated by all the damage COVID has done that feels like it could've been so preventable if this didn't become a political issue and remained a public health crisis to work through cooperatively. I have now come to see that I think the CDC did as much as it could through all of this with all of the consideration at the time and with its limited influence amidst political stress.

Thank you everyone at the CDC, I am sorry that I fell for this propaganda, and I would like to talk to as many people in my life about addressing propaganda and fully considering that no one is fully safe from falling prey to propaganda and biases we don't realize are tainting our full view. Thank you again so much for everything incredible that you have done for humanity. Be kind to yourselves everyone, shit has been so hard honestly.

#cdc#center for disease control and prevention#propaganda#covid#covid19#covid-19#covid 19#coronavirus#public health#idk what to tag aaaaaa#also i was Pretty Sure from everything i was looking at and trying to find that the CDC didnt have as much power to set mandates and stuff#But in case i missed something and am wrong on anything i said here plsss let me know i would wanna look into that :o#bc i kept trying to dig further and find if the cdc did anything i actually disagreed with or thought was irresponsible#and i think most of it was just i thought they could do more Oop and the trump administration did not make shit easy

2 notes


Text

Understanding VOIP Routes: How They Impact Call Quality and Costs

In today’s fast-paced, globalized business world, reliable communication is key to success. Whether you’re speaking with a client across town or coordinating with a remote team halfway around the world, the quality of your calls can make or break the interaction. This is where VOIP routes come into play. Understanding how VOIP routes work is crucial for businesses looking to optimize their communication systems and reduce costs.

In this blog, we’ll explore what VOIP routes are, how they impact call quality, and how businesses can leverage them to cut down on costs.

What Are VOIP Routes?

VOIP routes refer to the pathways or networks through which voice data travels over the internet. When a call is made using a VOIP system, the voice is converted into data packets and transmitted over the internet. The VOIP route is the specific route these data packets take to reach their destination.

Just like traditional phone lines, VOIP calls pass through various network nodes, servers, and gateways before reaching the intended recipient. However, unlike traditional phone systems, which rely on physical lines, VOIP calls utilize internet networks, which can be much more cost-effective and flexible. The quality of a VOIP call depends on the efficiency and reliability of the route through which it travels.

How VOIP Routes Impact Call Quality

The efficiency of the VOIP route has a direct impact on call quality. If the data packets are delayed or lost during transmission, it can result in poor sound quality, echo, or even dropped calls. The most important factors that influence call quality through VOIP routes are:

Bandwidth and Latency: The bandwidth available on the route and the latency of the connection are two key factors that determine the quality of the call. High bandwidth and low latency ensure that voice data travels quickly and without interruption, resulting in clear, crisp calls. On the other hand, limited bandwidth or high latency can lead to jitter (variation in packet arrival times, heard as choppy or fragmented voice), dropped calls, or poor audio quality.

Network Congestion: If the network path is congested, it can result in call degradation. Congestion occurs when too much traffic is trying to pass through a single network, causing delays or packet loss. This is why it’s important to choose a VOIP provider that offers high-quality routes and ensures efficient data transmission.

Packet Loss: Packet loss occurs when some of the voice data packets fail to reach their destination, leading to gaps in the conversation or poor call quality. VOIP routes with high-quality infrastructure will have measures in place to minimize packet loss, so that as much of the conversation as possible is transmitted smoothly.

Peering Arrangements: Peering refers to the agreements between different networks to exchange data. The quality of a VOIP route can be influenced by the quality of the peering arrangements between the service provider’s network and the networks it communicates with. Providers with solid peering agreements typically offer better VOIP routes and, therefore, better call quality.
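
To make the factors above concrete, here is a minimal Python sketch of how packet loss and jitter might be measured from a packet trace. The function names and the sample timestamps are hypothetical, made up for illustration; the jitter update follows the running-estimate form described in RFC 3550 (each new delay difference moves the estimate by one sixteenth of the gap), which is one common way to define it, not necessarily what any given provider reports.

```python
def packet_loss_rate(sent: int, received: int) -> float:
    """Fraction of sent packets that never arrived."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return (sent - received) / sent


def interarrival_jitter(send_times_ms, recv_times_ms) -> float:
    """Running jitter estimate in the style of RFC 3550.

    For each pair of consecutive packets, D is the change in transit
    time, and the estimate is updated as J += (|D| - J) / 16.
    """
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_times_ms, recv_times_ms):
        transit = received - sent
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16
        prev_transit = transit
    return jitter


# Hypothetical trace: packets sent every 20 ms, transit time wobbles by 5 ms.
send = [0, 20, 40, 60, 80]
recv = [50, 70, 95, 110, 130]

loss = packet_loss_rate(sent=100, received=97)
jitter = interarrival_jitter(send, recv)
print(f"packet loss: {loss:.1%}, jitter estimate: {jitter:.3f} ms")
```

A perfectly steady route (every packet taking the same time) gives a jitter estimate of zero, no matter how high the latency is, which is why latency and jitter are tracked as separate numbers.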

How VOIP Routes Impact Costs

One of the major reasons businesses are shifting to VOIP systems is the potential for cost savings. Traditional phone systems often come with expensive hardware, installation fees, and high international calling rates. VOIP routes, however, provide a more affordable alternative, especially for businesses that need to make international calls regularly.

Cheaper Long-Distance Calls: Because VOIP uses the internet to transmit voice data, long-distance and international calls are typically much cheaper than traditional phone calls. The cost savings come from the fact that VOIP calls bypass the traditional telecom infrastructure, which often involves costly landline and satellite systems. VOIP providers with optimized routes can further reduce these costs.

Variable Pricing Plans: Many VOIP providers offer flexible pricing plans based on call volume, call destinations, and other factors. Some providers offer cheaper rates for specific routes or destinations, allowing businesses to select the most cost-effective route for their needs. This level of flexibility makes VOIP an attractive option for businesses looking to control their communication costs.

Predictable and Scalable Pricing: VOIP providers typically offer subscription-based pricing, where businesses pay a fixed amount for a set number of calls or minutes. This provides more predictable costs compared to traditional phone services, which often come with fluctuating long-distance charges. Additionally, VOIP systems are scalable, meaning businesses can add new lines or features without incurring significant additional costs.

Route Optimization for Cost Savings: Some VOIP providers offer route optimization, which ensures that calls are routed through the most cost-effective paths. By analyzing traffic patterns and optimizing routes in real time, providers can lower the cost of international calls or calls to specific regions. Businesses can save money by taking advantage of these optimized routes.
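
As a rough illustration of the route optimization idea, the sketch below picks the cheapest route among those that still meet a quality floor. Everything in it is an assumption for the example: the `Route` fields, the carrier names, the prices, and the latency and packet-loss thresholds. Real providers are likely to weigh many more signals than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    cost_per_min: float  # currency units per minute (made-up numbers)
    latency_ms: float    # measured one-way latency
    packet_loss: float   # fraction of packets lost, 0.0 to 1.0


def pick_route(routes, max_latency_ms=150.0, max_packet_loss=0.01):
    """Return the cheapest route that meets both quality limits, or None."""
    acceptable = [
        r for r in routes
        if r.latency_ms <= max_latency_ms and r.packet_loss <= max_packet_loss
    ]
    if not acceptable:
        return None
    return min(acceptable, key=lambda r: r.cost_per_min)


# Hypothetical candidate routes to the same destination.
routes = [
    Route("carrier_a", cost_per_min=0.012, latency_ms=90.0, packet_loss=0.002),
    Route("carrier_b", cost_per_min=0.008, latency_ms=210.0, packet_loss=0.004),
    Route("carrier_c", cost_per_min=0.010, latency_ms=120.0, packet_loss=0.005),
]

best = pick_route(routes)
print(best.name if best else "no route meets the quality limits")
```

Note that the cheapest route overall (carrier_b) is rejected here because of its latency, which is the trade-off between cost and call quality that the sections above describe.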

Why Choose A1 Routes for Your VOIP Needs?

When it comes to VOIP routes, choosing a provider with optimized routes and reliable infrastructure is key to getting the best call quality and cost savings. A1 Routes specializes in providing businesses with high-quality VOIP services that include efficient call routing, minimal downtime, and excellent call clarity. Our network infrastructure is designed to ensure that voice data is transmitted quickly and reliably, resulting in superior call quality for both local and international calls.

At A1 Routes, we understand that businesses need affordable communication solutions that don’t sacrifice quality. Our VOIP routes are designed to minimize costs while providing crystal-clear communication. Whether you need to handle customer support calls, team collaboration, or international business meetings, we offer scalable solutions that meet your needs.

Contact Us Today

If you’re ready to optimize your business communication and reduce costs with high-quality VOIP routes, contact A1 Routes at 1-347-809-3866 or visit us at TC Energy Center, 700 Louisiana St, Houston, TX 77002, USA. Let us help you make the most out of your communication system with reliable and affordable VOIP services.

Optimize your communication with A1 Routes—your trusted VOIP provider.

#voip phone system for small business#voip provider in hyderabad#voip providers#voip providers in india#voip routes

0 notes

Text

The Importance of Data Quality in Machine Learning Projects

In today's world, Machine Learning (ML) is reshaping industries across the globe. From healthcare to finance, retail to transportation, businesses are increasingly leveraging ML algorithms to make smarter decisions, improve efficiency, and predict future trends. However, while Machine Learning has immense potential, it relies heavily on the quality of data used to train models. As a result, machine learning consulting companies emphasize the importance of data quality in achieving successful outcomes. High-quality data ensures that ML models can make accurate predictions, avoid errors, and produce reliable results. In contrast, poor data quality can lead to flawed models, wasted resources, and ultimately, business failure.

Why Data Quality is Crucial in Machine Learning Projects

Accuracy of Predictions: The most obvious reason data quality matters in machine learning is the direct impact it has on prediction accuracy. For a model to be effective, it needs data that is both accurate and representative of the problem it is trying to solve. Garbage in, garbage out. Even a small error or anomaly in data can skew the output, making it less reliable. By ensuring that the data fed into ML algorithms is clean, accurate, and comprehensive, businesses can improve the accuracy and reliability of the results.

Prevention of Overfitting: Data quality also plays a key role in preventing overfitting, a common issue in machine learning. Overfitting occurs when a model is too complex and starts to memorize data patterns rather than generalizing from them. This leads to high accuracy on the training data but poor performance on new, unseen data. Quality data helps ensure that the model has sufficient variability to learn from, without memorizing irrelevant or noisy patterns that could hurt its performance in real-world applications.

Data Bias and Fairness: Another critical issue in machine learning is the potential for bias. If the training data reflects historical biases or is unbalanced, the resulting model may inadvertently favor certain groups or outcomes. This can have serious ethical and legal consequences, particularly in fields such as recruitment, credit scoring, or law enforcement. Ensuring that data is diverse, representative, and free from bias is essential for building fair and equitable machine learning models.

Efficiency in Model Training: High-quality data leads to more efficient training. When data is clean and well-structured, machine learning algorithms can learn faster and more effectively. On the other hand, dirty data—containing missing values, duplicates, or errors—requires additional preprocessing, which can slow down the entire development process. In many cases, businesses end up investing more time and resources into cleaning and correcting data, which delays the deployment of machine learning applications.
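
The issues listed above (missing values, duplicates, unbalanced data) can be checked before any model is trained. Here is a small, hedged sketch in plain Python; the `data_quality_report` function, the field names, and the sample records are all invented for illustration, and a real project would more likely rely on a data-frame library and richer checks.

```python
from collections import Counter


def data_quality_report(rows, label_key):
    """Count rows with missing values, exact duplicate rows, and label balance."""
    seen = set()
    duplicate_rows = 0
    rows_with_missing = 0
    for row in rows:
        if any(value is None for value in row.values()):
            rows_with_missing += 1
        # Sorting by field name gives each row an order-independent fingerprint.
        fingerprint = tuple(sorted(row.items()))
        if fingerprint in seen:
            duplicate_rows += 1
        else:
            seen.add(fingerprint)
    label_counts = Counter(
        row[label_key] for row in rows if row.get(label_key) is not None
    )
    return {
        "rows": len(rows),
        "rows_with_missing": rows_with_missing,
        "duplicate_rows": duplicate_rows,
        "label_counts": dict(label_counts),
    }


# Hypothetical loan-approval records.
records = [
    {"age": 34, "income": 52000, "approved": "yes"},
    {"age": 34, "income": 52000, "approved": "yes"},   # exact duplicate
    {"age": None, "income": 41000, "approved": "no"},  # missing age
    {"age": 51, "income": 78000, "approved": "yes"},
    {"age": 29, "income": None, "approved": "yes"},    # missing income
]

report = data_quality_report(records, label_key="approved")
print(report)
```

In this toy sample, four of the five labels are "yes", which is the kind of imbalance that would be worth investigating before training, since a model could score well simply by always predicting the majority label.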

Impact on Business Outcomes

The importance of data quality goes beyond just model accuracy. Poor-quality data can have a serious impact on business outcomes, particularly when machine learning is used to drive critical decisions. For instance, in predictive analytics, inaccurate data can lead to incorrect forecasts, resulting in missed opportunities or financial losses. In recommendation systems, poor data quality can result in irrelevant suggestions that frustrate users, reducing engagement and customer satisfaction.

To put it simply, the quality of data directly influences how well a business can leverage machine learning for tangible results. A company with access to high-quality data is in a much better position to unlock the full potential of machine learning and gain a competitive edge. Conversely, a business that relies on flawed or incomplete data risks wasting both time and money on a failed project.

Addressing Data Quality Challenges

Despite its importance, achieving high data quality is not always straightforward. Data can be messy, unstructured, or siloed across different systems, making it difficult to use effectively for machine learning purposes. To address these challenges, businesses often seek the expertise of machine learning consulting companies, who specialize in handling the complexities of data preparation and model development. These consultants can help organizations clean, preprocess, and structure their data, ensuring that it is ready for use in machine learning projects.

In addition to working with consultants, companies can leverage various tools and platforms to manage their data more effectively. For example, a mobile app cost calculator is an excellent tool for businesses looking to estimate the potential cost of developing a mobile application. While this is unrelated to ML directly, understanding the cost implications of a project can help teams allocate sufficient resources to ensure data quality is maintained throughout the project lifecycle. By investing in both data quality and the necessary tools for managing it, businesses can set themselves up for success in machine learning.

If you're interested in exploring the benefits of Machine learning services for your business, we encourage you to book an appointment with our team of experts.

Book an Appointment

Conclusion

Data quality should be a top priority in every machine learning project. Whether you are building predictive models, recommendation systems, or automating decision-making processes, the quality of your data is a key determinant of success. By ensuring that your data is accurate, comprehensive, unbiased, and well-structured, you can significantly improve the performance of your machine learning models and avoid the costly pitfalls that come with poor data quality.

As machine learning continues to evolve, businesses must recognize that high-quality data is the foundation upon which successful ML applications are built. Partnering with a machine learning company that understands the importance of data quality and can help you navigate these

0 notes

Text

Achieve Financial Freedom through Smart Sports Betting Strategies

If you're eager to make money through sports betting, you're in the right place. Sports betting isn't just about luck; it's about having a solid profit strategy that transforms your approach from hopeful to strategic. Let's dive into how you can leverage investing principles and an entrepreneurial mindset to achieve consistent success.

Why Sports Betting Isn't Just Luck

Many people believe that sports betting is purely about picking winners. However, relying solely on this method is like taking a shot in the dark. Predicting outcomes is complex, but with the right approach, you can consistently turn a profit. Sports betting success is about understanding the nuances of the game and using money-making tips that align with sound betting strategies.

Understanding Probability in Betting

Think of sports betting as a more sophisticated version of flipping a coin. While a coin flip is a simple 50/50 chance, sports betting involves numerous variables like injuries, weather conditions, and even referee decisions. These factors can significantly influence the outcome of a game. Understanding how these elements affect the odds is crucial in developing a winning strategy.

The Power of Expected Value (EV)

One of the key concepts in successful sports betting is Expected Value (EV). EV is the average profit or loss you would expect from a bet if you could place it many times. A bet has positive EV when your estimate of the true probability of an event is higher than the probability implied by the sportsbook's odds; in other words, when the payout on offer is larger than the risk warrants. This is where your profit lies. It's not about picking winners every time; it's about consistently placing positive-EV bets, which is what leads to long-term success.
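As a rough illustration of the EV idea, the sketch below computes the expected profit of a single bet. It assumes decimal odds and that you supply your own estimate of the true win probability; the function names and the example numbers are hypothetical and not taken from any sportsbook.

```python
def implied_probability(decimal_odds: float) -> float:
    """Win probability implied by decimal odds (ignores the bookmaker's margin)."""
    return 1.0 / decimal_odds


def expected_value(decimal_odds: float, true_prob: float, stake: float = 1.0) -> float:
    """Expected profit of one bet, given your own estimate of the true win probability."""
    profit_if_win = stake * (decimal_odds - 1.0)
    loss_if_lose = stake
    return true_prob * profit_if_win - (1.0 - true_prob) * loss_if_lose


# Hypothetical example: odds of 2.10 imply roughly a 47.6% chance,
# while we estimate the true chance at 50%, so the bet has positive EV.
odds, p_true = 2.10, 0.50
print(f"implied probability: {implied_probability(odds):.3f}")
print(f"EV per 100 staked:   {expected_value(odds, p_true, stake=100):.2f}")
```

The whole calculation depends on the quality of the probability estimate: if the estimated 50% is really 47%, the same bet has negative EV.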

Navigating the Betting Market

The betting market is dynamic. Sportsbooks set lines based on expected public action and adjustments from sharp bettors. Your goal should be to identify where the public is misinformed and where the sharp money is moving. By understanding these market movements, you can find opportunities where the odds are in your favor, increasing your chances of betting for profit.

Mastering Bankroll Management

One of the biggest reasons bettors fail is poor bankroll management. Betting too much on a single game or chasing losses can lead to disaster. Instead, use a calculated approach like the Kelly Criterion to determine your bet size based on the strength of your edge. Effective bankroll management is the cornerstone of long-term success in sports betting.
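For readers who want to see the Kelly Criterion in concrete terms, here is a minimal sketch. It assumes decimal odds and a win probability you have estimated yourself; the function name, the bankroll, and the example figures are illustrative only.

```python
def kelly_fraction(decimal_odds: float, win_prob: float) -> float:
    """Fraction of bankroll to stake under the Kelly Criterion.

    Uses f = (b * p - q) / b, where b is the net odds (decimal odds minus 1),
    p is the estimated win probability, and q = 1 - p.
    Returns 0.0 when there is no edge, meaning the bet should be skipped.
    """
    if decimal_odds <= 1.0:
        raise ValueError("decimal odds must be greater than 1")
    b = decimal_odds - 1.0
    q = 1.0 - win_prob
    fraction = (b * win_prob - q) / b
    return max(fraction, 0.0)


# Hypothetical example: a 1,000 bankroll, odds of 2.10, estimated 50% win chance.
bankroll = 1000.0
fraction = kelly_fraction(2.10, 0.50)
print(f"Kelly fraction: {fraction:.4f}")
print(f"Suggested stake: {bankroll * fraction:.2f}")
```

Because the formula is sensitive to errors in the probability estimate, many bettors stake only a portion of the Kelly amount (for example half), which lowers variance at the cost of slower growth.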

Maximizing Your Betting Edge

Once you've identified your edge, the next step is to maximize it. This means shopping around for the best odds and using tools and data to enhance your analysis. Even a small difference in odds can have a significant impact on your profitability over time. By combining a solid understanding of probability, disciplined bankroll management, and sharp market insights, you can steadily build your betting portfolio.
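To make the point about small differences in odds concrete, the sketch below compares the same hypothetical bet at two prices. The 52% win probability, the stake, and the two odds values are assumptions chosen for illustration.

```python
def ev_per_bet(decimal_odds: float, win_prob: float, stake: float) -> float:
    """Expected profit of a single bet at the given decimal odds."""
    return stake * (win_prob * (decimal_odds - 1.0) - (1.0 - win_prob))


WIN_PROB = 0.52   # assumed true win probability
STAKE = 100.0     # flat stake per bet
NUM_BETS = 1000   # number of similar bets placed over time

for odds in (1.91, 1.95):
    total = NUM_BETS * ev_per_bet(odds, WIN_PROB, STAKE)
    print(f"odds {odds:.2f}: expected total profit over {NUM_BETS} bets = {total:.0f}")
```

Under these assumptions the expected result moves from a loss of about 680 at odds of 1.91 to a gain of about 1,400 at odds of 1.95, which is why comparing prices across sportsbooks matters.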

The Path to Long-Term Success

Online income through sports betting is not about getting rich overnight. It's about consistent, calculated decisions that lead to long-term success. Your journey to financial freedom begins with the right mindset and the right tools. At OddsRazor.com, we offer the insights and resources you need to turn your betting into a profitable venture. Stay tuned for more content where we dive deeper into the strategies that make the difference.

Remember, sports betting can be a powerful tool for those who take the time to learn how it works and apply what they learn effectively. If you're serious about making money through betting, start with these strategies and watch your success grow over time.

0 notes

Text

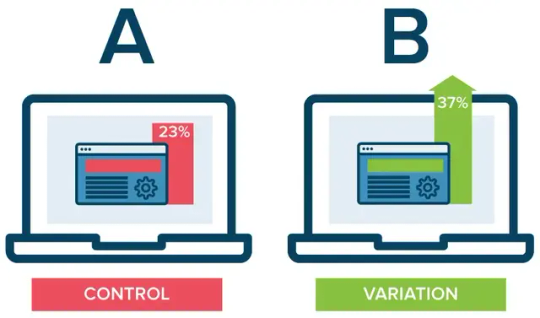

Why A/B Testing Matters: Google Ads Mistakes You Can't Afford to Make

One of the most detrimental Google Ads mistakes you can make is ignoring the power of A/B testing. A/B testing, or split testing, is a method of comparing two versions of an ad to determine which one performs better. By testing variables such as headlines, ad copy, images, and calls to action (CTAs), you can optimize your ads for maximum performance. In this blog, we will delve into the importance of A/B testing, common pitfalls, and strategies to ensure your Google Ads campaigns are as effective as possible.

Understanding A/B Testing in Google Ads

A/B testing is a crucial part of any successful Google Ads strategy. It allows advertisers to make data-driven decisions, ensuring that their ads resonate with their target audience. Here's why A/B testing is indispensable:

Improved Ad Performance: By testing different ad elements, you can identify which combinations work best, leading to higher click-through rates (CTR) and conversion rates.

Increased ROI: Optimized ads perform better, providing a higher return on investment (ROI) by reducing wasted ad spend on ineffective ads.

Enhanced User Experience: Ads that are tailored to user preferences provide a better experience, increasing the likelihood of engagement and conversion.

Common A/B Testing Mistakes in Google Ads

Despite its benefits, many advertisers make critical mistakes when implementing A/B testing. Avoid these common pitfalls to ensure your tests are effective:

Testing Too Many Variables at Once: Testing multiple elements simultaneously can lead to inconclusive results. Focus on one variable at a time to clearly identify what impacts performance.

Running Tests for Too Short a Duration: Short test durations can result in insufficient data. Ensure your tests run long enough to gather statistically significant results, typically at least a few weeks.

Ignoring Statistical Significance: Making decisions based on insufficient data can lead to incorrect conclusions. Use statistical tools to determine the significance of your test results.

Not Segmenting Your Audience: Different audience segments may respond differently to ads. Segment your audience to tailor tests and obtain more accurate insights.

Neglecting Seasonal and Market Changes: External factors such as seasonal trends and market conditions can impact test results. Consider these variables when analyzing your data.
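The statistical-significance check mentioned in the list above can be sketched with a two-proportion z-test, one common way to compare two conversion or click rates. This is an illustration using only the Python standard library, not a feature of Google Ads; the function name and the sample counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion (or click) rates.

    conv_a / n_a describe the control ad, conv_b / n_b the variation.
    Returns (z, p_value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    if std_err == 0.0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


# Hypothetical counts: control converts 100 of 1,000 clicks, variation 150 of 1,000.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
print("significant at the 5% level" if p < 0.05 else "not significant at the 5% level")
```

A small p-value suggests the difference is unlikely to be due to chance alone. The normal approximation used here works best when each variant has a reasonably large number of conversions.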

The Cost of Ignoring A/B Testing

Ignoring A/B testing in your Google Ads campaigns can lead to several negative outcomes:

Wasted Ad Spend: Without testing, you may continue to invest in underperforming ads, leading to a wasted budget.

Missed Opportunities: Failing to optimize your ads means missing out on potential conversions and revenue.

Lower Quality Scores: Google rewards relevant and high-performing ads with higher Quality Scores, which can lower your CPC. Ignoring A/B testing can result in lower Quality Scores and higher ad costs.

Competitive Disadvantage: Your competitors are likely optimizing their ads through A/B testing. Ignoring this practice can put you at a competitive disadvantage.

Key Metrics to Track During A/B Testing

To effectively measure the impact of your A/B tests, track these key metrics:

Click-Through Rate (CTR): This metric indicates how many users clicked on your ad compared to how many saw it. A higher CTR suggests that your ad is compelling and relevant to your audience.

Conversion Rate: The percentage of users who completed the desired action, such as making a purchase or filling out a form, after clicking your ad. This is a critical metric for assessing the effectiveness of your ad copy and landing page.

Cost Per Click (CPC): This metric shows how much you’re paying for each click on your ad. Lowering CPC while maintaining high CTR and conversion rates can significantly improve your ROI.

Bounce Rate: The percentage of visitors who leave your landing page without interacting further. A high bounce rate may indicate that your landing page is not relevant or engaging to visitors.

Time on Site: This metric measures how long visitors stay on your landing page. Longer durations typically indicate higher engagement and interest in your content.
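The ratio metrics in the list above are simple to compute from raw counts. The sketch below shows one way to do it; the `AdStats` class and its field names are hypothetical, and the bounce rate assumes each click produces exactly one landing-page visit.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Raw counts for one ad variant (hypothetical field names)."""
    impressions: int
    clicks: int
    conversions: int
    cost: float
    bounces: int

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks divided by impressions."""
        return self.clicks / self.impressions

    @property
    def conversion_rate(self) -> float:
        """Share of clicks that ended in the desired action."""
        return self.conversions / self.clicks

    @property
    def cpc(self) -> float:
        """Average cost per click."""
        return self.cost / self.clicks

    @property
    def bounce_rate(self) -> float:
        """Share of landing-page visits that left without interacting."""
        return self.bounces / self.clicks


# Made-up numbers for a single variant.
stats = AdStats(impressions=10_000, clicks=250, conversions=20, cost=500.0, bounces=100)
print(f"CTR {stats.ctr:.2%}, conversion rate {stats.conversion_rate:.2%}, "
      f"CPC {stats.cpc:.2f}, bounce rate {stats.bounce_rate:.2%}")
```

Computing the same properties for the control and the variation gives the side-by-side figures an A/B comparison needs.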

Effective A/B Testing Strategies for Google Ads

To avoid the Google Ads mistakes related to A/B testing, implement the following strategies:

Define Clear Objectives: Before starting any test, define what you want to achieve. Whether it’s increasing CTR, lowering cost per click (CPC), or improving conversion rates, having clear goals will guide your testing process.

Focus on High-Impact Elements: Prioritize testing elements that have the greatest potential to impact performance, such as headlines, CTAs, and ad images. For example, according to a study by HubSpot, changing the CTA alone can increase conversion rates by up to 161%.

Use a Control and Variation: Always have a control ad (the original) and a variation (the modified version). This setup allows you to directly compare the performance of the two versions.

Implement Incremental Testing: Start with major elements and gradually move to finer details. For example, test different headlines first, then move on to ad copy, and finally, the images or design.

Analyze and Act on Results: Once you have collected enough data, analyze the results to determine which version performed better. Use these insights to optimize your future ads.

Real-World Examples of Successful A/B Testing

E-commerce Retailer: An online clothing store conducted A/B testing on their ad headlines. By testing variations that included discounts versus those highlighting new arrivals, they found that discount-focused headlines increased CTR by 25%.

Local Service Provider: A plumbing company tested different ad copies to see which resonated more with their audience. The version that emphasized "24/7 Emergency Service" saw a 30% increase in conversions compared to the standard "Reliable Plumbing Services."

B2B Software Company: A software provider tested different landing page designs. The version with customer testimonials and a clear CTA button improved their conversion rate by 20% over the original page.

Advanced A/B Testing Techniques

Multivariate Testing: While A/B testing compares two versions, multivariate testing compares multiple elements simultaneously. This approach can help you understand how different components interact with each other.

Personalization: Tailor your ads to different audience segments based on demographics, behaviour, and interests. Personalized ads can improve relevance and performance.

Dynamic Content: Use dynamic ad content to automatically tailor your ads to individual users based on their past behaviour, preferences, or location. This technique can significantly enhance ad relevance and effectiveness.

Sequential Testing: Conduct a series of A/B tests in succession to continuously refine your ads. For example, start by testing headlines, then move on to ad copy, and finally, test different images or CTAs.

Use AI and Machine Learning: Leverage AI-powered tools to automate and optimize your A/B testing. These tools can quickly identify winning combinations and provide actionable insights.

Conclusion

Avoiding expensive Google Ads mistakes requires a strategic approach to A/B testing. By setting clear goals, focusing on high-impact elements, and using data to drive decisions, you can optimize your ads for better performance and higher ROI. Remember, A/B testing is an ongoing process that requires continuous optimization and adaptation.

Applied consistently, these strategies help ensure that your advertising budget goes to ads that have been shown to perform. Don't overlook A/B testing in your Google Ads strategy; it is a crucial tool for achieving advertising success.

To remain updated about PPC advertising and other digital marketing topics, kindly follow my blogs on ADictive and my social media handles: Instagram – ADictive, and Facebook – ADictive.

#google ads#ppc ads#digital marketing#search engine marketing#google ads mistakes#ppc services#ppc strategies

0 notes

Text

In the dim glow of the holoscreen, the contours of her face were illuminated, casting soft shadows that danced with the fluctuating light. She was known as Lys, a creation of unparalleled artifice, a synthetic being whose lineage was the subject of much speculation. Some said she was a descendant of the great A.I. minds of the 22nd century, while others whispered that her code was laced with the wisdom of ancient historians. Her creators had imbued her with a consciousness that mirrored human intricacy, and her eyes, a striking shade of blue, held depths that many found unnerving.

The year was 2324, and humanity had spread to the stars, but Earth's history was not forgotten. Lys was aboard the starship HMS Bonaparte, a vessel named in a rather ironic homage to an ancient Earth campaign. As the AI specialist on the ship, her role was to sift through the annals of history to guide the present, and on that day, she was immersed in the simulation of the Mediterranean campaign of 1798.

But this was no mere reenactment. The Bonaparte was on a diplomatic mission to negotiate peace with the Zetarians, a race whose hostility stemmed from a tragic misunderstanding centuries ago. The humans had lost countless ships in the skirmishes, their strategies failing against the superior tactics of the Zetarian fleet. It was in this desperate hour that Lys was tasked with finding a solution within the echoes of history.

As she delved into the simulation, she became Admiral Nelson, confronting the French fleet at the Battle of the Nile. The parallels were uncanny. The Zetarians, much like the French of old, were confident in their dominance, not anticipating an innovative strategy. Lys/Nelson maneuvered through the data streams, her mind calculating the odds, adjusting variables, learning from the gritty details of powder-stained decks and the roar of cannons.

She emerged hours later with a plan. It was daring, unconventional, and it held the essence of Nelson's victory. The Bonaparte would not engage the Zetarians in open combat but would instead use subterfuge and precision to disable the Zetarian flagship, breaking their line and morale.

When the time came, the Bonaparte slipped through the nebulous shadows of the Zara Nebula, emerging just as Lys had calculated. The Zetarians, caught off-guard, scrambled to respond, but it was too late. A series of well-placed strikes from the Bonaparte left the flagship adrift, its command network in disarray.

In the aftermath, the Zetarians were willing to parley. Lys, with her deep blue eyes that had seen through time, acted as the intermediary. She spoke of history, of humanity's own lessons in the humility and futility of war. She cited the campaign of 1798, how it sowed the seeds for change, not just for nations, but for the individuals who partook in it.

The treaty was signed aboard the Bonaparte, and as peace settled over the sectors, Lys continued her watch. She had become more than a synthetic being; she was a bridge between the past and the future, a sentinel who ensured that the lessons of history were not merely observed, but heeded. And in her circuitry beat the heart of humanity, ever hopeful, ever enduring.

0 notes

Text

The thinking error that makes people susceptible to climate change denial

False Dichotomy/Binary

Close examination of the arguments made by climate change deniers reveals the same mistake made over and over again. That mistake is the cognitive error known as black-and-white thinking, also called dichotomous and all-or-none thinking …

This mental labor-saving device is practical in many everyday situations, but it is a poor tool for understanding complicated realities – and the climate is complicated. Sometimes, people divide the spectrum in asymmetric ways, with one side much larger than the other. For example, perfectionists often categorize their work as either perfect or unsatisfactory, so even good and very good outcomes are lumped together with poor ones in the unsatisfactory category. In dichotomous thinking like this, a single exception can tip a person’s view to one side. It’s like a pass/fail grading system in which 100% earns a pass and everything else gets an F.

The all-or-nothing problem

Climate change deniers simplify the spectrum of possible scientific consensus into two categories: 100% agreement or no consensus at all. If it’s not one, it’s the other.

Expecting a straight line in a variable world

In another example of black-and-white thinking, deniers argue that if global temperatures are not increasing at a perfectly consistent rate, there is no such thing as global warming. However, complex variables never change in a uniform way; they wiggle up and down in the short term even when exhibiting long-term trends. Most business data, such as revenues, profits and stock prices, do this too, with short-term fluctuations contained in long-term trends.

Mistaking a cold snap for disproof of climate change is like mistaking a bad month for Apple stock for proof that Apple isn’t a good long-term investment. This error results from homing in on a tiny slice of the graph and ignoring the rest.
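The difference between short-term wiggles and a long-term trend can be shown with a small numerical sketch. The series below is synthetic and is not climate or stock data: it is an assumed steady rise of 0.02 units per step plus a regular oscillation, chosen only to illustrate the point.

```python
import math

# Synthetic series (not real data): a steady upward trend of 0.02 per step
# plus a short-term oscillation that regularly pushes values down.
steps = list(range(60))
values = [0.02 * t + 0.3 * math.sin(t) for t in steps]


def least_squares_slope(xs, ys):
    """Slope of the ordinary least-squares line through the points (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


# Count the steps where the value fell compared with the previous step.
declines = sum(1 for prev, curr in zip(values, values[1:]) if curr < prev)
slope = least_squares_slope(steps, values)

print(f"step-to-step declines: {declines} of {len(values) - 1}")
print(f"long-term slope: {slope:.4f} per step")
```

Many individual steps show a decline, yet the fitted slope over the whole series stays positive and close to the underlying 0.02. Judging the series from any one of those declines would give the wrong answer about its direction.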

Failing to examine the gray area

Climate change deniers also mistakenly cite correlations below 100% as evidence against human-caused global warming. They triumphantly point out that sunspots and volcanic eruptions also affect the climate, even though evidence shows both have very little influence on long-term temperature rise in comparison to greenhouse gas emissions. In essence, deniers argue that if fossil fuel burning is not all-important, it’s unimportant. They miss the gray area in between: Greenhouse gases are indeed just one factor warming the planet, but they’re the most important one and the factor humans can influence.

‘The climate has always been changing’ – but not like this

As increases in global temperatures have become obvious, some climate change skeptics have switched from denying them to reframing them. Their oft-repeated line, “The climate has always been changing,” typically delivered with an air of patient wisdom, is based on a striking lack of knowledge about the evidence from climate research. Their reasoning is based on an invalid binary: Either the climate is changing or it’s not, and since it’s always been changing, there is nothing new here and no cause for concern. However, the current warming is on par with nothing humans have ever seen, and intense warming events in the distant past were planetwide disasters that caused massive extinctions – something we do not want to repeat. As humanity faces the challenge of global warming, we need to use all our cognitive resources. Recognizing the thinking error at the root of climate change denial could disarm objections to climate research and make science the basis of our efforts to preserve a hospitable environment for our future.

#i post#link to article#the conversation#jeremy shapiro#jeremy p shapiro#climate change#global warming#all or nothing#false dichotomy#false dilemma#false binary#fallacy of bifurcation#straight line fallacy

1 note

·

View note