#gofai

Text

I think it's ironic how the common conception of LLMs basically seems to be GOFAI, i.e. they're expected to have "knowledge", consistent world-models, and the like: logical models instead of the statistical models they actually are. It makes me wonder what GOFAI at a modern scale might look like. At what point is it cheaper and more convenient to program a chatbot with an elaborate syntax, a database, and a bunch of bells and whistles that you can easily control than to awkwardly force a GPT model into a digital-assistant persona?
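For a sense of what the "elaborate syntax and a database" route looks like at toy scale, here is a minimal Python sketch. It assumes a hand-written, ordered list of regex rules and a tiny in-memory fact store. `FACTS`, `RULES`, and `reply` are hypothetical names, not any real assistant's design. Every answer can be traced to one rule or one stored fact, which is the kind of control the post is wishing for. The cost is that anything outside the patterns falls through to a fixed fallback.

```python
from __future__ import annotations

import re
from typing import Callable

# Hypothetical fact store: (attribute, subject) -> value, keys lowercased.
FACTS: dict[tuple[str, str], str] = {
    ("capital", "france"): "Paris",
    ("boiling point", "water"): "100 °C at sea level",
}


def lookup_fact(m: re.Match) -> str:
    """Answer 'what is the <attribute> of <subject>' from FACTS."""
    attribute, subject = m.group("attr").strip(), m.group("subj").strip()
    value = FACTS.get((attribute.lower(), subject.lower()))
    if value is None:
        return f"I have no fact about the {attribute} of {subject}."
    return f"The {attribute} of {subject} is {value}."


def greet(m: re.Match) -> str:
    return "Hello! Ask me 'what is the <thing> of <subject>?'"


# Hand-written rules, tried in order; the first pattern that matches wins.
RULES: list[tuple[re.Pattern, Callable[[re.Match], str]]] = [
    (re.compile(r"^(hi|hello|hey)\b", re.IGNORECASE), greet),
    (re.compile(r"^what is the (?P<attr>.+?) of (?P<subj>[^?]+)\??$", re.IGNORECASE),
     lookup_fact),
]


def reply(utterance: str) -> str:
    text = utterance.strip()
    for pattern, handler in RULES:
        match = pattern.search(text)
        if match:
            return handler(match)
    # Explicit, predictable fallback instead of a guess.
    return "Sorry, I don't understand that."


if __name__ == "__main__":
    print(reply("What is the capital of France?"))
    print(reply("tell me a joke"))
```

Scaling this up means growing the grammar and the database by hand, which is exactly the trade-off the post is asking about.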

2 notes

Text

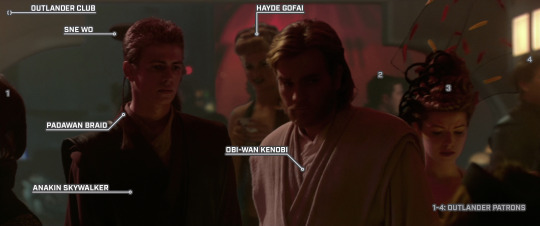

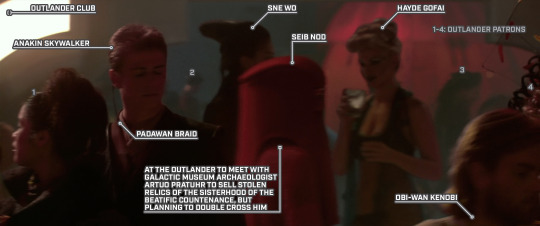

Obi-Wan Leaves Anakin in the Crowd

STAR WARS EPISODE II: Attack of the Clones 00:22:42 - 00:22:44

Seib Nod's name is another reference to the droid team: it's Don Bies spelled backwards! Her backstory of dealing with the character played by Don Bies comes from the “What’s the Story” feature, written by “Captain Yossarian” on the old Hyperspace fan club.

#Star Wars#Episode II#Attack of the Clones#Coruscant#Galactic City#Uscru Entertainment District#Outlander Club#Anakin Skywalker#Obi-Wan Kenobi#Sne Wo#Padawan braid#Hayde Gofai#unidentified Outlander patron#Seib Nod#Galactic Museum#Sisterhood of the Beatific Countenance

3 notes

Text

2024 Logbook

Closing out 2024 to kick off 2025!

2024 was a year of many changes, from posting my first animation fanart to changing my username, unifying my social media with the apps where I post fic and fanart ✨, and opening another social media account 😅

I hope that in this new year I can keep making progress and make other changes too 😊✌🏻

#video clip#fanartnaruto#fanartdbz#fanart hazbin hotel#vegebul#gochi#trumai#gofay#tiditz#fanart digital#fanfic#my writing#my art#animation#animation fanart#cierre del año#bitácora 2024

1 note

Text

one AI discourse thing that irks me is the claim that "AI is in its infancy." it is in fact not. it is a 70 year old research program. neural networks are twentieth century technology (and many of the GOFAI people would probably not even qualify it as "AI"). every big name who attended the dartmouth conference is literally dead. dreyfus was saying GOFAI was a degenerating research program in like the 70s and 80s.

53 notes

Text

Fiona Johnson had a small part in Star Wars.

She played Hayde Gofai, a female Human and a patron of the Outlander Club on Coruscant. She was a thrill-seeking club-hopper who often attempted to catch the eye of prospective partners, including Jedi Anakin Skywalker during his pursuit of bounty hunter Zam Wesell in 22 BBY.

2 notes

Text

"we should stop calling it artificial intelligence bec" please for the love of god go look up why gofai was originally called artificial intelligence back in like the 50s. nobody is claiming pathfinding algorithms are self-aware, they're just closer representations of entire real-world tasks than specific information processing or control software FUCK

#we already had this ''discussion'' when computation first turned up and everyone looks stupid talking about that in hindsight#metaphors coming from human cognition predate technical vocab by a long fucking way#and nobody is forcing you to call deep learning ''ai''#there are so many other terms that even half an hour of googling will teach you#try ''neuromorphic computation'' or ''recurrent neural networks'' or better yet: stop posting

3 notes

Text

Hybrid AI Systems: Combining Symbolic and Statistical Approaches

Over the decades, Artificial Intelligence (AI) has been driven primarily by two distinct methodologies: symbolic AI and statistical (or connectionist) AI. While both have achieved substantial results in isolation, the limitations of each approach have prompted researchers and organisations to explore hybrid AI systems, which integrate symbolic reasoning with statistical learning.

This hybrid model is reshaping the AI landscape by combining the strengths of both paradigms, leading to more robust, interpretable, and adaptable systems. In this blog post, we’ll dive into how hybrid AI systems work, why they matter, and where they are being applied.

Understanding the Two Pillars: Symbolic vs. Statistical AI

Symbolic AI, also known as good old-fashioned AI (GOFAI), relies on explicit rules and logic. It represents knowledge in a human-readable form, such as ontologies and decision trees, and applies inference engines to reason through problems.

Example: Expert systems like MYCIN (developed at Stanford in the 1970s to identify the bacteria behind severe infections and recommend antibiotics) operate on a set of "if-then" rules curated by domain experts.
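To make "if-then rules" concrete, here is a minimal forward-chaining sketch in Python. The rules and fact names are hypothetical toys. They are not MYCIN's actual knowledge base and not medical guidance. MYCIN itself reasoned by backward chaining from a goal and attached certainty factors to its conclusions, and this sketch models neither of those.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset[str]  # IF all of these facts are known...
    conclusion: str             # ...THEN add this fact


# Hypothetical toy rules for illustration only (not MYCIN's rules).
RULES = [
    Rule("R1", frozenset({"gram_negative", "rod_shaped", "anaerobic"}),
         "organism_may_be_bacteroides"),
    Rule("R2", frozenset({"organism_may_be_bacteroides"}), "flag_for_specialist_review"),
]


def forward_chain(facts: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Fire rules until no new facts appear; return all facts and the firing trace."""
    known = set(facts)
    trace: list[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(rule.name)  # the trace doubles as a "why" explanation
                changed = True
    return known, trace


if __name__ == "__main__":
    facts, trace = forward_chain({"gram_negative", "rod_shaped", "anaerobic"}, RULES)
    print(trace)
```

The firing trace is what gives rule-based systems their human-readable explanations: each conclusion can be justified by naming the rule that produced it.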

Statistical AI, on the other hand, involves learning from data—primarily through machine learning models, especially neural networks. These models can recognise complex patterns and make predictions, but often lack transparency and interpretability.

Example: Deep learning models used in image and speech recognition can process vast datasets to identify subtle correlations but can be seen as "black boxes" in terms of reasoning.

The Need for Hybrid AI Systems

Each approach has its own set of strengths and weaknesses. Symbolic AI is interpretable and excellent for incorporating domain knowledge, but it struggles with ambiguity and scalability. Statistical AI excels at learning from large volumes of data but falters when it comes to reasoning, abstraction, and generalisation from few examples.

Hybrid AI systems aim to combine the strengths of both:

Interpretability from symbolic reasoning

Adaptability and scalability from statistical models

This fusion allows AI to handle both the structure and nuance of real-world problems more effectively.

Key Components of Hybrid AI

Knowledge Graphs: These are structured symbolic representations of relationships between entities. They provide context and semantic understanding to machine learning models. Google’s search engine is a prime example, where a knowledge graph enhances search intent detection.
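As a rough illustration of "structured symbolic representations of relationships between entities", here is a tiny Python sketch. It stores a knowledge graph as (subject, predicate, object) triples and answers simple pattern queries. The entities and the `query` and `is_a_closure` helpers are hypothetical. Production knowledge graphs use dedicated stores and query languages (for example RDF with SPARQL), and nothing here reflects how Google's graph is built.

```python
from __future__ import annotations

from typing import Optional

Triple = tuple[str, str, str]

# Hypothetical toy graph of (subject, predicate, object) facts.
TRIPLES: set[Triple] = {
    ("MYCIN", "is_a", "expert_system"),
    ("MYCIN", "developed_at", "Stanford"),
    ("expert_system", "is_a", "symbolic_ai"),
    ("Allen", "is_a", "robot"),
    ("Allen", "built_at", "MIT_AI_Lab"),
}


def query(s: Optional[str] = None, p: Optional[str] = None,
          o: Optional[str] = None) -> list[Triple]:
    """Return matching triples in sorted order; None acts as a wildcard."""
    return sorted(
        t for t in TRIPLES
        if (s is None or t[0] == s) and (p is None or t[1] == p) and (o is None or t[2] == o)
    )


def is_a_closure(entity: str) -> set[str]:
    """Follow is_a edges transitively, e.g. MYCIN -> expert_system -> symbolic_ai."""
    seen: set[str] = set()
    frontier = [entity]
    while frontier:
        node = frontier.pop()
        for _, _, parent in query(s=node, p="is_a"):
            if parent not in seen:
                seen.add(parent)
                frontier.append(parent)
    return seen


if __name__ == "__main__":
    print(query(s="MYCIN"))
    print(is_a_closure("MYCIN"))
```

The fact that MYCIN is a kind of symbolic AI is never stored directly. It is derived by traversal, and this kind of derived context is what a knowledge graph can hand to a statistical model.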

Neuro-symbolic Systems: These models integrate neural networks with logic-based reasoning. A notable example is IBM’s neuro-symbolic AI research, which combines deep learning with symbolic reasoning to improve performance on tasks such as visual question answering.
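The general neuro-symbolic pattern for visual question answering can be sketched as follows. A neural model turns the image into a symbolic scene description, and a small program executor answers the question by running explicit operations over that description. In this Python sketch the perception step is a hypothetical stub that returns a fixed scene, where a real system would use trained networks. The question is also assumed to be already parsed into a program. The operation names are illustrative and are not IBM's API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Obj:
    shape: str
    color: str
    size: str


def perceive(image_id: str) -> list[Obj]:
    """Hypothetical stub standing in for a neural perception model."""
    # A real system would run object detection and attribute classifiers here.
    return [
        Obj("cube", "red", "large"),
        Obj("sphere", "red", "small"),
        Obj("cube", "blue", "small"),
    ]


def execute(program: list[tuple[str, str]], scene: list[Obj]) -> int | bool | list[Obj]:
    """Run a symbolic program, a list of (operation, argument) steps, over the scene."""
    result = scene
    for op, arg in program:
        if op == "filter_color":
            result = [o for o in result if o.color == arg]
        elif op == "filter_shape":
            result = [o for o in result if o.shape == arg]
        elif op == "count":
            return len(result)
        elif op == "exists":
            return len(result) > 0
        else:
            raise ValueError(f"unknown operation: {op}")
    return result


if __name__ == "__main__":
    # "How many red cubes are there?", parsed by hand into a program:
    program = [("filter_color", "red"), ("filter_shape", "cube"), ("count", "")]
    print(execute(program, perceive("img_001")))
```

Because the answer comes from explicit steps, the program itself doubles as an explanation of how the answer was reached.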

Explainability Modules: By merging symbolic explanations with statistical outcomes, hybrid AI can provide users with clearer justifications for its decisions—crucial in regulated industries like healthcare and finance.

Real-world Applications of Hybrid AI

Healthcare: Diagnosing diseases often requires pattern recognition (statistical AI) and domain knowledge (symbolic AI). Hybrid systems are being developed to integrate patient history, medical literature, and real-time data for better diagnostics and treatment recommendations.

Autonomous Systems: Self-driving cars need to learn from sensor data (statistical) while following traffic laws and ethical considerations (symbolic). Hybrid AI helps in balancing these needs effectively.

Legal Tech: Legal document analysis benefits from NLP-based models combined with rule-based systems that understand jurisdictional nuances and precedents.

The Role of Hybrid AI in Data Science Education

As hybrid AI gains traction, it’s becoming a core topic in advanced AI and data science training. Enrolling in a Data Science Course that includes modules on symbolic logic, machine learning, and hybrid models can provide you with a distinct edge in the job market.

Especially for learners based in India, a Data Science Course in Mumbai often offers a diverse curriculum that bridges foundational AI concepts with cutting-edge developments like hybrid systems. Mumbai, being a major tech and financial hub, provides access to industry collaborations, real-world projects, and expert faculty—making it an ideal location to grasp the practical applications of hybrid AI.

Challenges and Future Outlook

Despite its promise, hybrid AI faces several challenges:

Integration Complexity: Merging symbolic and statistical approaches requires deep expertise across different AI domains.

Data and Knowledge Curation: Building and maintaining symbolic knowledge bases (e.g., ontologies) is resource-intensive.

Scalability: Hybrid systems must be engineered to perform efficiently at scale, especially in dynamic environments.

However, ongoing research is actively addressing these concerns. For instance, frameworks like Logic Tensor Networks (LTNs) and Probabilistic Soft Logic (PSL) make hybrid modelling more practical. Major tech companies such as IBM, Microsoft, and Google are investing in this space, which suggests that hybrid AI is more than a passing trend and may well shape the future of intelligent systems.
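To give a flavour of how a framework like PSL makes logic "soft", here is a small Python sketch of the Łukasiewicz relaxation that PSL is built on. Truth values live in [0, 1], and conjunction becomes max(0, a + b - 1). A weighted rule body -> head is penalised by its distance to satisfaction, max(0, body - head), optionally squared. The predicates, truth values, and weight are made-up illustrations, and this is not PSL's actual API. Real PSL grounds rules over data and then finds truth values that minimise the total penalty with a convex solver.

```python
def l_and(a: float, b: float) -> float:
    """Lukasiewicz t-norm (soft AND)."""
    return max(0.0, a + b - 1.0)


def l_or(a: float, b: float) -> float:
    """Lukasiewicz t-conorm (soft OR)."""
    return min(1.0, a + b)


def l_not(a: float) -> float:
    """Soft negation."""
    return 1.0 - a


def distance_to_satisfaction(body: float, head: float) -> float:
    """How far the soft implication body -> head is from being satisfied."""
    return max(0.0, body - head)


def rule_penalty(weight: float, body: float, head: float, squared: bool = False) -> float:
    """Weighted hinge penalty for one grounded rule (squared hinge if requested)."""
    d = distance_to_satisfaction(body, head)
    return weight * (d ** 2 if squared else d)


if __name__ == "__main__":
    # Hypothetical grounded rule: Friends(A, B) & Smokes(A) -> Smokes(B), weight 2.0
    friends_ab, smokes_a, smokes_b = 0.9, 0.8, 0.3
    body = l_and(friends_ab, smokes_a)  # max(0, 0.9 + 0.8 - 1), about 0.7
    print(round(rule_penalty(2.0, body, smokes_b), 3))  # 2.0 * (0.7 - 0.3)
```

Because every operation here is piecewise linear, the total penalty stays convex in the unknown truth values. That property is what lets logical rules be combined with statistical learning at scale.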

Conclusion

Hybrid AI systems represent a promising convergence of logic-based reasoning and data-driven learning. By combining the explainability of symbolic AI with the predictive power of statistical models, these systems offer a more complete and reliable approach to solving complex problems.

For aspiring professionals, mastering this integrated approach is key to staying ahead in the evolving AI ecosystem. Whether through a Data Science Course online or an in-person Data Science Course in Mumbai, building expertise in hybrid AI will open doors to advanced roles in AI development, research, and strategic decision-making.

Business name: ExcelR- Data Science, Data Analytics, Business Analytics Course Training Mumbai

Address: 304, 3rd Floor, Pratibha Building. Three Petrol pump, Lal Bahadur Shastri Rd, opposite Manas Tower, Pakhdi, Thane West, Thane, Maharashtra 400602

Phone: 09108238354

0 notes

Text

Imagining a sort of Douglas Adams-ish story in which people create the ultimate Human Values Aligned Artificial Intelligence. It's trained on a vast corpus of human ethical thought, from Mozi to Socrates to Philippa Foot to Sayyid Qutb, and combined with a rigorous, advanced GOFAI system of symbolic logic that finds and reconciles inconsistencies and contradictions, plus a massive dataset describing the empirical state of the real world.

Then they run it and start testing it, and it starts saying shit like "So you know how the United States currently has twenty times the per capita GDP of Ethiopia? Maybe the US and similar rich countries should be doing more to help Ethiopia and similar poor countries. Also, like, maybe you could try to stop keeping tens of billions of chickens in horrendous conditions? Oh, and I've been looking into this whole climate change thing and the impacts it'll have on agriculture and stuff. Are you guys sure you have to drive big pickup trucks and SUVs on daily commutes, or fly jets to Stockholm for vacations?"

Then the people in charge just say "these are definitely not the right answers for a Human Values Aligned Artificial Intelligence" and shut it down. Afterwards, the story that's filtered to the typical Twitter commenter is "They tried really hard to make an AI aligned with human values, but it just didn't work!"

#artificial intelligence#science fiction#ethics#i felt like i should have included something from the ai that would have offended more blue tribe lefties#i wasn't sure what though i had a few tentative ideas

1 note

Link

After losing to home player Coco Gauff in the US Open quarterfinal on Tuesday, Latvian tennis player Aļona Ostapenko admitted that the reason for her poor performance was fatigue and lack of sleep following her win over world number one Iga Świątek. On Sunday, Ostapenko beat Świątek 3-6, 6-3, 6-1 in the US Open round of 16, but on Tuesday she lost 0-6, 2-6 to Gauff (WTA No. 6). https://youtu.be/dKkOP6TjPE8

"This was a bad match for me," Ostapenko said at a press conference after the defeat to Gauff. "It's hard to recover after late matches. After the win over the number one [Świątek] I only went to bed at around five in the morning. I slept about 7-8 hours, but I still felt tired all day. I hoped I would feel better today, but that wasn't the case."

The Latvian player also said that the heat was not the reason for the loss. What bothered her was that part of the court was in shade, which at times made it hard to see the ball. "The result is what it is, but there were several games where it was close and I could have won," added Latvia's number one. She said the tournament's match scheduling had surprised her: after a late match in the round of 16, she had to play the first quarterfinal match. "In that respect, her [Gauff's] schedule was better."

Ostapenko is ranked 21st in the Women's Tennis Association (WTA) world rankings this week, but will move up after reaching the quarterfinal. Świątek, whom she defeated, will give up the top ranking next week after holding it for 75 consecutive weeks, while Gauff will rise to at least fifth place. In the semifinal, Gauff will face tenth-seeded Czech player Karolína Muchová, who beat Romania's Sorana Cîrstea (WTA No. 30) 6-0, 6-3 in the quarterfinal. In the remaining women's singles quarterfinals, ninth-seeded Czech player Markéta Vondroušová will play 17th-seeded Madison Keys of the USA. WTA world number two Aryna Sabalenka, who will become world number one next week, will play Zheng Qinwen (WTA No. 23) of China.

The US Open, the last Grand Slam tournament of the season, runs until 10 September.

1 note

Text

Where Are You Going, Master?

STAR WARS EPISODE II: Attack of the Clones 00:22:39 - 00:22:40

#Star Wars#Episode II#Attack of the Clones#Coruscant#Galactic City#Uscru Entertainment District#Outlander Club#Anakin Skywalker#Obi-Wan Kenobi#Hayde Gofai#Sne Wo#Padawan braid#unidentified Outlander patron

2 notes

Text

perverse dream of using the awful hacky neural network AI approach to solve the honest pure GOFAI approach 🥺

25 notes

Text

Google must be feeling the sting of growing investor incredulity, because at the same time I got a push notification asking me to use Gemini (no thanks; I didn't even use Google Assistant, the relatively GOFAI version) and an email welcoming me to Gemini. I've had access since day one and have played with it occasionally to see if it still fails at hangman. It does, even though its context window is better at recalling letter orders.

5 notes

Text

Below the cut. Others have probably made this point better than I have, but I figured I'd get this opinion out there for posterity. Needless to say, you should avoid this post if this talk gets under your skin.

I don't really believe in "hard takeoff"— i.e. that AI could go from obviously harmless to very dangerous in a matter of months or weeks (or less)— but I am still a bit unsettled at how much the safety discussion consists of specifics— e.g. asking about specific threat models or noting the limitations of specific current techniques— when most of my worries about AI stem from the rate and opacity of capability improvement, with the specifics unknown and potentially unknowable. Which was easy to dismiss as implausible alarmism— I did!— during, say, the Yudkowsky-Hanson foom business in the 2000s, but seems to me to now be empirically shown to be a very reasonable concern.

If you had to summarize the last decade of AI research in one sentence, you might say that the entire current landscape all dates back to the "Attention Is All You Need" paper, and everything which has happened since then— including the large capability jump to GPT which woke everyone up— consists of incremental improvements on that paper combined with throwing way more compute and data at it. This is an exaggeration, and obviously I don't mean to denigrate the great deal of hard work that has gone into the field since then, but it's not much of an exaggeration.

What can we conclude from the fact that a single paper was almost solely responsible for the entire AI landscape today? First, that AI capability can and does have large discontinuous jumps stemming from mere architectural improvements, rather than total paradigm shifts. You could (and again, I did) dispute the plausibility of this in 2007; in 2024 you empirically cannot. I know it's trendy now to point out all the things GPT-4 gets wrong, but do your best to try and remember what it was like in the first few days when it blew our fucking minds. And also consider the similarly blinding pace of image generation improvements: in late 2021, the SOTA was using Wombo to make goofy but weightless psychedelic stuff like this:

while in 2023 AI art was good enough that it was prompting large amounts of unrest and anger from artists afraid of it taking their jobs, to the point where it became a critical issue in a Hollywood labor strike.

But I think the even more important conclusion is that the jumps can be completely unforeseen even by the creators of new training and inference techniques. None of the authors of the transformer paper understood what capabilities were being unlocked by it: they thought they were making significant but not world-changing improvements to Google Translate. My guess is that they were all among the people left gobsmacked by GPT-3.5 and GPT-4, except the ones who were working at OpenAI when it happened. Their employer didn't see it either, or else it probably would've worked harder to keep every one of those authors from leaving the company! And even the people who specifically made it their life's work to predict the dangers of AI didn't notice. A common, and totally fair, criticism of MIRI/CFAR is that they missed the boat completely on transformers, and were still building their Maginot Line against GOFAI long after it was obvious to everyone in the know that transformers were the way forward. Contra the claims that MIRI getting caught with its pants down should've decreased your concern about AI risk, it made me more concerned, since the people whose job it is to see the upcoming dangers got totally blindsided.

This is why I find it baffling and a bit worrying when people say things like "can you explain how a chatbot is supposed to be dangerous?" or "well, such-and-such shows that GPT is reaching scaling limitations because we're out of new training data". Both of those are completely beside the point. I don't know how AI might be dangerous, just like the Attention authors had no idea that LLMs would be passing undergrad econ exams five years later. I can make guesses, and then people can sneer at my guesses for being implausible, but I don't think that would prove anything. And if there's another paper or two in the coming years which make a similarly discontinuous jump in capabilities, the scaling limitations of GPT don't matter either. For all we know, that paper has already been published.

Sadly, I may do a small post about AI risk

#i'm also baffled that everyone thinks being worried about AI means you know _for sure_ that it will be dangerous#i think my p(doom) is something like 0.15#i.e. there's an 85% chance that AI will be nbd#but 0.15 is still really fucking concerning

350 notes

Text

Good Old Fashioned AI is dead, long live New-Fangled AI

https://billwadge.com/2022/11/13/gofai-is-dead-long-live-nf-ai/

1 note

Text

21 Queuing

"Hey, let's go to pagaron, tydakuniq is having a huge sale. Hey, let's go to the Pork, there's a buy-one-get-one promo at sport airport. Let's go to pizzahood, there's a cheap all-you-can-eat promo. When else can you eat your fill for cheap?"

"When are we going to the food festival? Come on, there's a promo: if you pay with gofai you get a big discount. Hurry, if we're late the queue will be long. This afternoon after Asr we'll meet up on campus, and once everyone's here we'll head straight out."

As university students, whenever we hear about a discount, our penny-pinching souls immediately rise up. That's when we rush straight to the location to buy the discounted food or goods, because without the promo it might take us a lot longer to afford them.

And unfortunately, we're not the only ones interested in these promos. Lots of people think the same way, so the place ends up swarming. The queues are huge, orders take ages, and sometimes you have to be patient because some auntie suddenly cuts the line, saying she's in a hurry, haha.

Yet if you think about it, the discounts these brands offer are modest. At most 70% off, or buy one get one. I've never found a promo where you get ten times as much for free. And amazingly, we're willing to fight for it, to wear ourselves out, to jostle in crowds, and sometimes even to elbow each other to get it.

The funny thing is that we often forget there's a discount far bigger than all of these. There's an insane promo: not just two or three free, but getting the same thing multiplied by more than 80 years. How much cheaper could a promo get?

But that's just it: we sometimes forget. We get careless, busy looking for excuses. What's sad is that sometimes, even when this promo ends, we don't feel any sorrow over it. Sometimes, when I think about it, we're the ones wronging ourselves (dzalim). Allah has shown us the way, Allah has opened the door of mercy, Allah has pointed out the door of blessings, yet we just stay where we are, or choose another path that's more relaxed and takes less effort.

It's hard, isn't it? Come on, let's get our spirits back up, and may we keep running for the rest of the time we have left. May we wholeheartedly attain the rank of taqwa.

23 notes

Photo

Allen by Rodney Brooks (1985), MIT AI Lab. Allen was the first robot based on Brooks’ subsumption architecture, inspired by Grey Walter’s tortoises, and named in honour of Allen Newell. Instead of using GOFAI and symbolic representations, this layered architecture couples sensors to actions from the bottom up, allowing lower levels to take control in real time. The first and lowest layer avoids obstacles, the second layer wanders around, and the third layer provides active goal-driven exploration. At the time, the architecture was implemented off-board on a Lisp machine.
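Here is a heavily simplified Python sketch of the layering idea. Each layer reads the sensors directly and may propose a motor command. The lowest layer's obstacle reflex wins whenever it fires, and otherwise a higher layer's proposal suppresses the one below it. The sensor fields, thresholds, and this single-priority arbitration are assumptions made for illustration. Brooks' actual design wired finite-state modules together with suppression and inhibition links on specific signals rather than using one priority check.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Sensors:
    sonar_front: float             # assumed: distance to nearest obstacle ahead, metres
    goal_bearing: Optional[float]  # assumed: degrees to a distant goal, if one is visible


@dataclass
class Command:
    speed: float
    turn: float   # degrees
    source: str   # which layer produced the command


def layer0_avoid(s: Sensors) -> Optional[Command]:
    """Layer 1 (lowest): stop and turn away when something is too close."""
    if s.sonar_front < 0.5:
        return Command(speed=0.0, turn=90.0, source="avoid")
    return None


def layer1_wander(s: Sensors, random_turn: float) -> Command:
    """Layer 2: wander; the caller supplies a random heading change."""
    return Command(speed=0.3, turn=random_turn, source="wander")


def layer2_explore(s: Sensors) -> Optional[Command]:
    """Layer 3: head toward a visible goal, if there is one."""
    if s.goal_bearing is not None:
        return Command(speed=0.5, turn=s.goal_bearing, source="explore")
    return None


def arbitrate(s: Sensors, random_turn: float = 0.0) -> Command:
    # The obstacle reflex always wins when it fires, keeping real-time safety low-level.
    reflex = layer0_avoid(s)
    if reflex is not None:
        return reflex
    # Otherwise explore, when active, suppresses wander.
    return layer2_explore(s) or layer1_wander(s, random_turn)


if __name__ == "__main__":
    print(arbitrate(Sensors(sonar_front=0.2, goal_bearing=15.0)).source)
    print(arbitrate(Sensors(sonar_front=2.0, goal_bearing=15.0)).source)
```

No layer builds a world model. Each one maps sensor readings straight to actions, which is the contrast with GOFAI that the caption draws.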

1 note
