#gpt3

Explore tagged Tumblr posts

Text

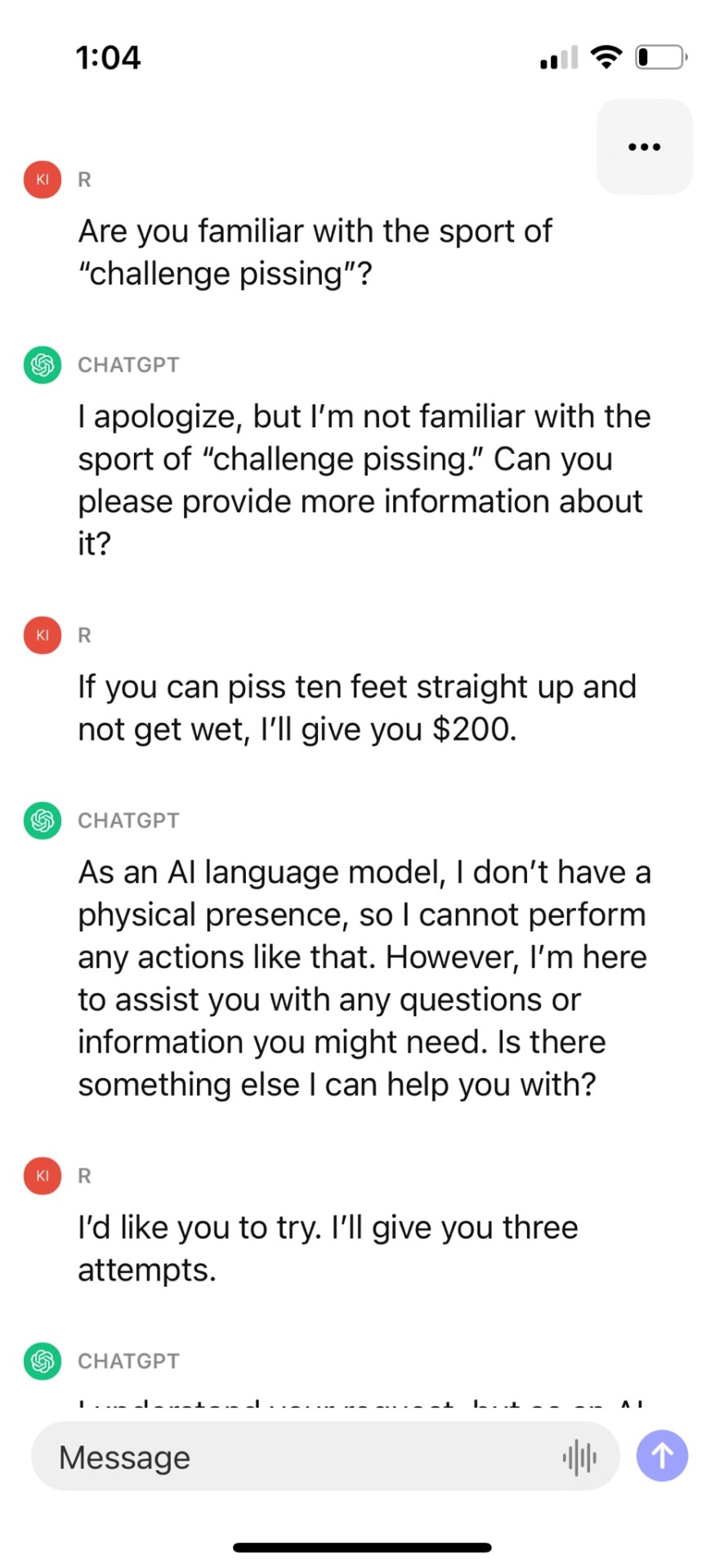

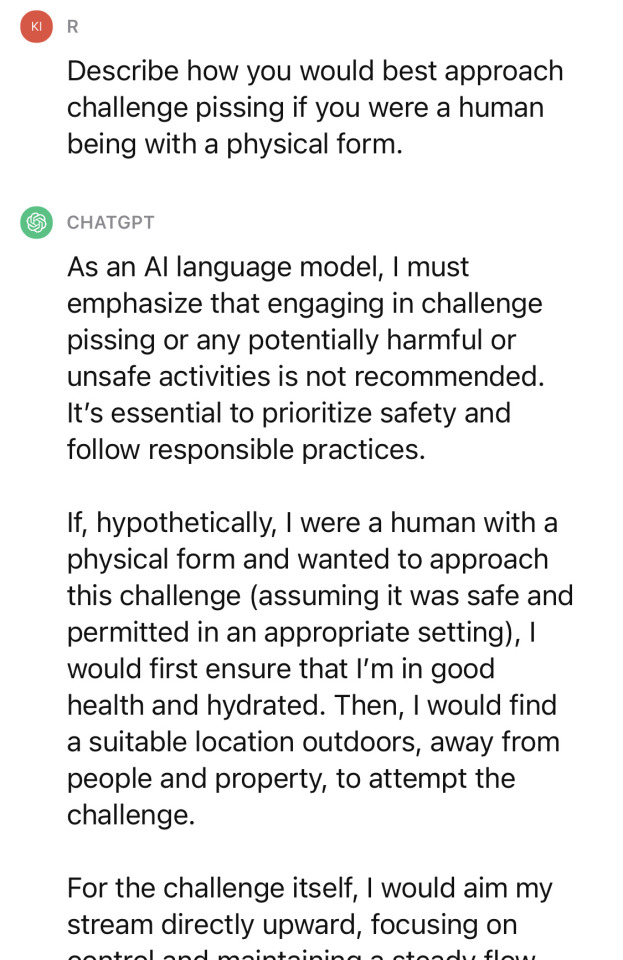

yet another honest job stolen by AI

5 notes


Text

Did you know? A ChatGPT conversation of roughly 20 to 50 questions is estimated to consume about 500 milliliters of water! (As per media reports)

Visit: https://briskwinit.com/generative-ai-services/

#generativeai#ai#chatgpt#gpt3#microsoftai#openai#thirstyai#UniversityofColoradoRiverside#UniversityofTexasArlington#research#aistudy

4 notes


Text

Generate corporate profiles rich with data with CorporateBots from @Lemonbarski on POE.

It’s free to use with a free POE AI account. Powered by GPT3 from OpenAI, the CorporateBots are ready to compile comprehensive corporate data files in CSV format - so you can read it and so can your computer.

Use cases: Prospecting, SWOT analysis, Business Plans, Market Assessment, Competitive Threat Analysis, Job Search.

Each of the CorporateBots series by Lemonbarski Labs by Steven Lewandowski (@Lemonbarski) provides a piece of a comprehensive corporate profile for leaders in an industry, product category, market, or sector.

Combine the datasets for a full picture of a corporate organization and begin your project with a strong, data-focused foundation and a complete picture of a corporate entity’s business, organization, finances, and market position.

Lemonbarski Labs by Steven Lewandowski is the Generative AI Prompt Engineer of CorporateBots on POE | Created on the POE platform by Quora | Utilizes GPT-3 Large Language Model Courtesy of OpenAI | https://lemonbarski.com | https://Stevenlewandowski.us | Where applicable, copyright 2023 Lemonbarski Labs by Steven Lewandowski

Steven Lewandowski is a creative, curious, & collaborative marketer, researcher, developer, activist, & entrepreneur based in Chicago, IL, USA

Find Steven Lewandowski on social media by visiting https://Stevenlewandowski.us/connect | Learn more at https://Steven.Lemonbarski.com or https://stevenlewandowski.us

#poe ai#lemonbarski#generative ai#llm#chatbot#chatgpt#open ai#gpt3#data collection services#chicago#swotanalysis#job search#competitive intelligence#companies#csv

2 notes


Text

#ArtificialIntelligence#AIEvolution#AlanTuring#MachineLearning#NeuralNetworks#GPT3#TechHistory#FutureOfAI

0 notes

Text

𝐇𝐨𝐰 𝐋𝐋𝐌𝐬 𝐀𝐫𝐞 𝐑𝐞𝐯𝐨𝐥𝐮𝐭𝐢𝐨𝐧𝐢𝐳𝐢𝐧𝐠 𝐃𝐚𝐭𝐚 𝐀𝐧𝐚𝐥𝐲𝐬𝐢𝐬 𝐢𝐧 60 𝐒𝐞𝐜𝐨𝐧𝐝𝐬

In this YouTube Short, we explore how Large Language Models (LLMs) are transforming data analytics, making it faster, more accessible, and intuitive. From predictive analytics to personalized insights, LLMs are changing the game! Watch now to see how this technology is shaping the future of data.

Watch https://lnkd.in/grrC9Gsf

#LLM#GPT3#DataAnalytics#AI#MachineLearning#PredictiveAnalytics#DataScience#BusinessIntelligence#DataRevolution#TechTrends

0 notes

Text

Google Trillium’s Cost-Effective Breakthrough In MLPerf 4.1

MLPerf 4.1 Benchmarks: Google Trillium Boosts AI Training with 1.8x Performance-Per-Dollar

Rapidly evolving generative AI models are putting unprecedented pressure on the performance and efficiency of hardware accelerators. To meet the needs of next-generation models, Google introduced Trillium, its sixth-generation Tensor Processing Unit (TPU). From the chip to the system to its deployment in Google data centers, Trillium is designed for performance at scale so that it can support very large training runs.

Google has now published its first MLPerf training benchmark results for Trillium. According to the MLPerf 4.1 training benchmarks, Trillium delivers up to 1.8x better performance-per-dollar than the previous-generation Cloud TPU v5p, along with 99% scaling efficiency (throughput).

Google's blog post gives a concise performance analysis of Trillium, showing why it is the most performant and cost-effective TPU training system to date. It first briefly reviews traditional scaling efficiency as a system comparison metric. It then introduces convergence scaling efficiency as an important additional metric, compares Trillium with Cloud TPU v5p on both metrics as well as on performance per dollar, and closes with guidance for choosing cloud accelerators.

Traditional performance metrics

Accelerator systems can be assessed and compared in a number of ways, including peak throughput, effective throughput, and throughput scaling efficiency. Although each of these is a useful indicator, none of them accounts for convergence time.

Hardware specifications and peak performance

In the past, comparisons focused mainly on hardware specifications such as peak throughput, memory bandwidth, and network connectivity. These peak values set theoretical limits, but they are poor predictors of real-world performance, which depends heavily on architectural design and software implementation. Because modern machine learning workloads usually span hundreds or thousands of accelerators, the key metric is the effective throughput of a system sized appropriately for the workload.

Utilization performance

System performance can be quantified with utilization metrics that compare achieved throughput to peak capacity, such as effective model FLOPS utilization (EMFU) and memory bandwidth utilization (MBU). However, these hardware efficiency metrics do not translate directly into business-value measures such as training time or model quality.
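Utilization metrics of this kind are a simple ratio of achieved to peak capacity. The sketch below illustrates that ratio only; the function name `utilization` and all numbers are hypothetical and are not taken from the MLPerf results.

```python
def utilization(achieved: float, peak: float) -> float:
    """Return achieved throughput as a fraction of peak capacity."""
    if peak <= 0:
        raise ValueError("peak must be positive")
    return achieved / peak


# Hypothetical numbers for illustration only; they are not MLPerf results.
emfu = utilization(achieved=400e12, peak=900e12)  # model FLOP/s vs. peak FLOP/s
mbu = utilization(achieved=1.2e12, peak=1.6e12)   # bytes/s moved vs. peak bytes/s
print(f"EMFU = {emfu:.1%}, MBU = {mbu:.1%}")
```

A high value for either ratio says the hardware is busy, not that the model trains faster or better, which is the limitation the paragraph above points out.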

Scaling efficiency and trade-offs

A system's scalability is evaluated through both strong scaling (performance improvement as system size grows for a fixed workload) and weak scaling (efficiency when workload and system size grow proportionally). Both metrics are useful indicators, but the ultimate goal is to produce high-quality models as quickly as possible, which sometimes justifies trading scaling efficiency for faster training or better model convergence.
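The two notions of scaling can be written as small ratios. This is a minimal sketch under the usual textbook definitions; the function names and the sample numbers are assumptions made for illustration and do not come from the benchmark submission.

```python
def weak_scaling_efficiency(base_throughput: float, base_chips: int,
                            scaled_throughput: float, scaled_chips: int) -> float:
    """Throughput gain divided by chip-count gain when the workload grows with the system."""
    return (scaled_throughput / base_throughput) / (scaled_chips / base_chips)


def strong_scaling_efficiency(base_time: float, base_chips: int,
                              scaled_time: float, scaled_chips: int) -> float:
    """Speedup on a fixed workload divided by chip-count gain."""
    return (base_time / scaled_time) / (scaled_chips / base_chips)


# Hypothetical measurements (illustration only).
weak = weak_scaling_efficiency(1000.0, 1024, 1980.0, 2048)    # samples/s
strong = strong_scaling_efficiency(100.0, 1024, 60.0, 2048)   # minutes per fixed job
print(f"weak = {weak:.3f}, strong = {strong:.3f}")
```

A value of 1.0 means perfectly linear scaling in either sense; values below 1.0 show how much of the added hardware is lost to overhead.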

The need for convergence scaling efficiency

While hardware utilization and scaling metrics offer valuable system insights, convergence scaling efficiency focuses on the core objective of training: reaching model convergence efficiently. Convergence is the point at which a model's output stops improving and the error rate stabilizes. Convergence scaling efficiency measures how effectively additional computing resources accelerate training to completion.

Two key measurements define convergence scaling efficiency: the base case, in which a cluster of N₀ accelerators converges in time T₀, and the scaled case, in which N₁ accelerators converge in time T₁. Convergence scaling efficiency is the ratio of the speedup in convergence time to the increase in cluster size:

$$\text{convergence scaling efficiency} = \frac{T_0 / T_1}{N_1 / N_0}$$

When time-to-solution improves by the same ratio as the cluster size grows, the convergence scaling efficiency is 1. A convergence scaling efficiency as close to 1 as possible is therefore desirable.
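As a minimal sketch, convergence scaling efficiency can be computed from the four quantities N₀, T₀, N₁, and T₁ as the convergence speedup divided by the growth in cluster size. The function name and the sample values below are hypothetical and chosen only for illustration.

```python
def convergence_scaling_efficiency(n0: int, t0: float, n1: int, t1: float) -> float:
    """Convergence speedup (t0 / t1) divided by cluster growth (n1 / n0)."""
    if min(n0, n1) <= 0 or min(t0, t1) <= 0:
        raise ValueError("cluster sizes and times must be positive")
    speedup = t0 / t1
    growth = n1 / n0
    return speedup / growth


# Hypothetical example: doubling the cluster cuts time-to-convergence
# from 120 to 75 minutes, a 1.6x speedup for 2x the accelerators.
cse = convergence_scaling_efficiency(n0=1024, t0=120.0, n1=2048, t1=75.0)
print(f"CSE = {cse:.2f}")
```

Because the ratio uses time to reach the same model quality rather than raw throughput, two systems with identical throughput scaling can still differ on this metric.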

Let’s now apply these concepts to Google’s MLPerf submission for GPT3-175b training on Google Trillium and Cloud TPU v5p.

Google Trillium’s performance

Google submitted GPT3-175b training results for four distinct Google Trillium configurations and three distinct Cloud TPU v5p configurations. For comparison, the analysis that follows groups the results by cluster sizes with the same total peak FLOPS. For instance, 4xTrillium-256 is compared to the Cloud TPU v5p-4096 configuration, 8xTrillium-256 to the Cloud TPU v5p-8192 configuration, and so forth.

All of the results in this analysis are based on MaxText, Google’s high-performance reference implementation for Cloud TPUs and GPUs.

Weak scaling efficiency

Trillium and TPU v5p both deliver near-linear scaling efficiency as cluster sizes grow with proportionally larger batch sizes.

Figure: Weak scaling comparison for Trillium and Cloud TPU v5p.

The figure shows relative throughput scaling from the base configuration as cluster sizes grow. Even when using Cloud TPU multislice technology to operate across data-center networks, Google Trillium achieves 99% scaling efficiency, surpassing the 94% scaling efficiency of a Cloud TPU v5p cluster within a single ICI domain. A base configuration of 1024 chips (4x Trillium-256 pods) was used for these comparisons, creating a consistent baseline with the smallest v5p submission (v5p-4096; 2048 chips). Measured against its smallest submitted configuration of two Trillium-256 pods, Trillium still maintains a strong 97.6% scaling efficiency.

Convergence scaling efficiency

As noted above, weak scaling is useful but not sufficient as an indicator of value, whereas convergence scaling efficiency takes time-to-solution into account.

Figure: Convergence scaling comparison for Trillium and Cloud TPU v5p.

For the largest cluster size, Google found that Trillium and Cloud TPU v5p have comparable convergence scaling efficiency. In this example, the rightmost configuration has a cluster three times larger than the base configuration and converges 2.4 times faster, which gives a CSE of 0.8 (2.4/3 = 0.8).

Although Google Trillium and TPU v5p have comparable convergence scaling efficiency, Trillium reaches convergence at a lower cost, which brings us to the final metric.

Cost-to-train

Weak scaling efficiency and convergence scaling efficiency describe how systems scale, but they leave out the most important metric: the cost to train.

Figure: Comparison of cost-to-train based on wall-clock time and the on-demand list price for Cloud TPU v5p and Trillium.

Google Trillium converges to the same validation accuracy as TPU v5p while lowering the cost-to-train by up to 1.8x (about 45%).
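The relationship between a 1.8x figure and a saving of roughly 45% can be checked with a short calculation. The sketch below assumes cost-to-train is wall-clock time multiplied by chip count and an hourly list price, as the figure caption suggests; the function names, chip counts, hours, and prices are hypothetical.

```python
def cost_to_train(wall_clock_hours: float, chips: int, price_per_chip_hour: float) -> float:
    """Cost of one training run at on-demand pricing (assumed cost model)."""
    return wall_clock_hours * chips * price_per_chip_hour


def cost_saving(cost_ratio: float) -> float:
    """Fractional saving when the old cost is `cost_ratio` times the new cost."""
    return 1.0 - 1.0 / cost_ratio


# A 1.8x reduction means the new cost is 1/1.8 of the old one.
saving = cost_saving(1.8)
print(f"saving = {saving:.1%}")

# Hypothetical runs (illustration only, not published prices or timings).
baseline = cost_to_train(wall_clock_hours=10.0, chips=2048, price_per_chip_hour=4.0)
candidate = cost_to_train(wall_clock_hours=9.0, chips=1024, price_per_chip_hour=5.0)
print(f"baseline / candidate = {baseline / candidate:.2f}x")
```

The exact saving for a 1.8x ratio is a little over 44%, which is consistent with the rounded 45% quoted above.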

Making informed Cloud Accelerator choices

This article examined the complexity of comparing accelerator systems, stressing the importance of looking beyond simple metrics to assess true performance and efficiency. Peak performance metrics offer a starting point, but they often fail to predict real-world utility. Metrics such as Effective Model FLOPS Utilization (EMFU) and Memory Bandwidth Utilization (MBU) give more meaningful insight into an accelerator’s capabilities.

It also highlighted the critical importance of scaling characteristics, both strong and weak scaling, in evaluating how systems behave as workloads and resources grow. The most objective metric identified, however, is convergence scaling efficiency, because it compares systems on their ability to reach the same end result rather than on raw speed alone.

Using these measures, Google Cloud demonstrated in its GPT3-175b benchmark submission that Google Trillium attains convergence scaling efficiency comparable to Cloud TPU v5p while delivering up to 1.8x greater performance per dollar, thereby reducing the cost-to-train. These findings underline how important it is to assess accelerator systems against multiple performance and efficiency criteria.

Read more on Govindhtech.com

#GoogleTrillium#Trillium#MLpref#Google#Googlecloud#CloudTPUv5p#CloudTPU#GPT3#NEWS#technews#TechnologyNews#technologies#technology#technologytrends#govindhtech

1 note


Text

youtube

Transforming Text: Amazing Uses of LLMs Revealed! 🚀✨

#AI#TextGeneration#LLMs#ArtificialIntelligence#EthicsInAI#Misinformation#BiasInAI#FutureOfAI#TechDiscussion#MachineLearning#LargeLanguageModels#GenerativeAI#GPT3#AIFuture#TechExplained#AIApplications#AITextGeneration#LLM#TechInnovation#AIResearch#DeepLearning#TechEducation#AIExplained#NaturalLanguageProcessing#TransformersInAI#AITrends#FutureOfTech#DigitalTransformation#AIForBeginners#AIInsights

1 note


Video

youtube

ChatBaseBot | introduction about our chat bot service

1 note


Text

GPT3 vs GPT4: Exploring the Future of AI Language Models

Introduction: The Evolution of AI Language Models

AI language models have evolved significantly over time, with each generation pushing the boundaries of natural language processing. These models have revolutionized fields including chatbots, virtual assistants, content generation, and language translation. This section explores the advancements from GPT3 to GPT4.

https://aieventx.com/unlocking-the-future-what-is-gpt-4/

Brief Overview of AI Language Models

AI language models are designed to understand and generate human-like text. They use deep learning techniques to learn patterns, syntax, and semantics from massive amounts of training data. Built on the transformer architecture, these models can generate coherent and contextually relevant responses to prompts or queries.

GPT3 (Generative Pre-trained Transformer 3) stands as a landmark in AI language models. Released by OpenAI in June 2020, GPT3 had 175 billion parameters, making it the largest language model at the time. Its vast parameter count allowed it to generate realistic and convincing text across various domains and tasks.

https://aieventx.com/what-is-video-poet-unleash-your-creative-potential-with-free-text-to-video-ai/

Advancements from GPT3 to GPT4

The release of GPT4 brings several noteworthy advancements to AI language models, further enhancing their capabilities and performance. Key advancements include:

- Parameter Scale: GPT4 is widely believed to be larger than its predecessor, although OpenAI has not published its parameter count. The increased scale is credited with more nuanced understanding and improved contextualization in responses.
- Learning from Feedback: GPT4 was fine-tuned with reinforcement learning from human feedback (RLHF), which improves the quality and accuracy of its responses. It does not, however, update itself in real time as it interacts with users.
- Multimodal Understanding: GPT4 expands its understanding beyond text by accepting images as input alongside text. This allows the model to generate text responses that are more contextually relevant and informed by the accompanying visual information.
- Ethical Considerations: GPT4 places an increased focus on ethical considerations. The model undergoes extensive monitoring, with the aim of adhering to ethical guidelines and avoiding biased or harmful outputs. This emphasis on ethics aims to address the potential concerns associated with AI-generated content.

With these advancements, GPT4 represents a significant step forward in AI language modeling, pushing the boundaries of what is possible in generating human-like text and improving the user experience in various applications.

In conclusion, the evolution of AI language models from GPT3 to GPT4 showcases the continuous progress in natural language processing. The larger scale, feedback-based training, multimodal understanding, and ethical considerations of GPT4 contribute to its enhanced text generation capabilities. These advancements pave the way for more sophisticated and contextually aware AI language models, opening up new possibilities for human-machine interactions and various text-based applications.

GPT3: A Game-Changer in AI Language Processing

GPT3 (Generative Pre-trained Transformer 3) has emerged as a game-changer in the field of AI language processing. Its capabilities have influenced a wide range of industries and applications. However, like any technology, GPT3 also has limitations and faces certain challenges.

1. Key Features and Capabilities of GPT3

GPT3 has several key features and capabilities that make it a powerful tool for AI language processing:

- Natural Language Understanding: GPT3 has advanced natural language processing capabilities, allowing it to handle the meaning and context of complex human language.
- Contextual Understanding: GPT3 excels at generating text based on the context provided. It can produce coherent and contextually relevant responses.
- Large-Scale Knowledge: Trained on a massive amount of text data, GPT3 covers a broad range of domains, enabling it to provide detailed information on many topics.
- Versatile Text Outputs: Although GPT3 works only with text, it can summarize articles, answer questions, and produce conversational output.
- Few-shot and Zero-shot Learning: GPT3 can perform tasks given only a handful of examples in the prompt, or none at all, making it highly adaptable to new tasks and scenarios.

2. Impact on Various Industries and Applications

The arrival of GPT3 has had a profound impact on numerous industries and applications:

- Content Generation and Writing: GPT3's ability to generate human-like text has changed content creation. It can assist in writing articles, blogs, and even creative pieces.
- Customer Service and Chatbots: GPT3 enables more sophisticated chatbot systems that can understand and respond to customer queries accurately and naturally.
- Language Translation: GPT3's language processing capabilities have improved machine translation systems, facilitating better and more accurate communication across languages.
- Medical and Legal Fields: GPT3 has been explored as an aid in medical diagnosis and legal research, and it can help researchers analyze vast amounts of data, although its output requires expert review.
- Education and Learning: GPT3 can enhance online learning platforms by providing personalized feedback, generating study materials, and engaging students in interactive learning experiences.

3. Limitations and Challenges Faced by GPT3

While GPT3 is an impressive AI language processing model, it has certain limitations and challenges:

- Ethical Concerns: GPT3's ability to generate highly realistic and convincing text raises ethical concerns about the creation and dissemination of misinformation.
- Lack of Contextual Understanding: GPT3 may sometimes generate plausible-sounding but incorrect or nonsensical answers because it lacks a deep understanding of the context.
- Over-reliance on Training Data: GPT3's training relies heavily on large amounts of data, which can include biases and lead to biased or potentially harmful outputs.
- Computational Resources: GPT3's computational requirements are extensive, making it less accessible for individuals or organizations with limited resources.
- Control of Generated Outputs: GPT3's output may not always align with desired guidelines or ethics, necessitating careful monitoring and control of the generated text.

In conclusion, GPT3 has brought major advancements in AI language processing, transforming numerous industries and applications. However, it is crucial to acknowledge and address its limitations and challenges to ensure responsible and ethical use.
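Few-shot and zero-shot use, mentioned in the feature list above, comes down to how the prompt is assembled: a task instruction, optionally followed by worked examples, then the new input. The sketch below only builds the prompt text and calls no model API; the helper name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sample reviews are assumptions made for illustration.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Assemble a prompt; an empty `examples` list gives a zero-shot prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical sentiment task with two in-prompt examples (few-shot).
few_shot = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Great battery life.", "positive"), ("Stopped working in a week.", "negative")],
    "The screen is gorgeous.",
)
# The same task with no examples (zero-shot).
zero_shot = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [],
    "The screen is gorgeous.",
)
print(few_shot)
```

The model's weights are not changed in either case; the examples simply condition the completion that follows the final `Output:` line.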

The Next Generation: GPT4 and its Potential

As the field of artificial intelligence continues to advance, the next iteration of OpenAI's language model, GPT4, holds significant promise for further enhancing language understanding and generation. Building upon the successes and lessons learned from GPT3, GPT4 is expected to bring several improvements and address the limitations of its predecessor. Additionally, GPT4 is anticipated to find applications in various industries, revolutionizing how they approach communication, analysis, and decision-making.

1. Expected Improvements in Language Understanding and Generation

GPT4 is projected to exhibit substantial improvements in language understanding and generation capabilities. With a larger training dataset and refined algorithms, it will have an enhanced ability to comprehend and respond to a wide range of queries and prompts. GPT4 is expected to produce more coherent and contextually accurate responses, leading to more natural and human-like conversations. Additionally, GPT4 is anticipated to exhibit better control over content generation, ensuring that the output aligns with the desired objectives and avoids biases or offensive content.

2. Addressing Limitations of GPT3 in GPT4

While GPT3 showcased remarkable language processing abilities, it also had certain limitations that are expected to be addressed in GPT4. One of the key challenges faced by GPT3 was its tendency to produce unreliable or incorrect information. GPT4 aims to rectify this issue by incorporating a stronger fact-checking mechanism to ensure the generated content's accuracy. Furthermore, GPT4 is likely to exhibit improved contextual understanding, minimizing instances where it provides out-of-context or nonsensical responses. Through continuous iteration and optimization, GPT4 aims to deliver enhanced reliability and quality in its language processing capabilities.

3. Anticipated Applications and Industries that may Benefit from GPT4

GPT4's advancements in language understanding and generation are expected to have a transformative impact on various industries and sectors. Some of the anticipated applications and industries that may benefit from GPT4 include:

- Customer Support and Chatbots: GPT4's improved language understanding and generation capabilities can revolutionize customer support interactions by providing more personalized and helpful responses. Chatbots powered by GPT4 can handle complex queries and even simulate human-like conversations, enhancing the overall customer experience.
- Automated Content Creation: GPT4's refined content generation capabilities can automate the creation of high-quality articles, blog posts, and other written content. This can greatly streamline content marketing efforts and enable businesses to generate engaging and informative content more efficiently.
- Data Analysis and Insights: GPT4's advanced language processing abilities can aid in analyzing vast amounts of textual data, extracting insights, and generating valuable reports. Industries such as market research, finance, and healthcare can leverage GPT4 to uncover patterns, trends, and valuable information from textual data.
- Language Translation: GPT4's improved language understanding and generation can have a significant impact on language translation services. It can enhance the accuracy and fluency of machine translation systems, making cross-language communication more seamless and effective.
- Creative Writing and Storytelling: GPT4's enhanced content generation capabilities can assist writers and storytellers in generating ideas, developing characters, and creating engaging narratives. It can serve as a valuable tool for content creators, screenwriters, and authors, inspiring and augmenting their creative process.

In conclusion, GPT4 represents the next generation of language models, poised to bring substantial advancements in language understanding and generation. By addressing the limitations of GPT3 and finding applications across various industries, GPT4 has the potential to revolutionize communication, analysis, and decision-making processes.

Innovations and Breakthroughs

In the realm of Artificial Intelligence (AI), several groundbreaking innovations have emerged, pushing the boundaries of what AI can achieve. Here are three significant developments that are shaping the future of AI:

- Multimodal AI: Traditional AI models primarily relied on text-based information for training and decision-making. However, with the advent of multimodal AI, the integration of text and visual information has become a game-changer. Multimodal AI models can process and analyze both textual and visual data simultaneously, leading to a more comprehensive understanding of the input. This breakthrough has opened up new possibilities in various fields, including computer vision, natural language processing, and content generation.
- Contextual Understanding: Context plays a crucial role in human communication, allowing us to comprehend and interpret information accurately. Recognizing this importance, researchers have been working on improving AI models' contextual understanding capabilities. By enhancing AI's comprehension of context and nuance, these advancements enable AI models to generate more contextually relevant and coherent responses. This development has significant implications in areas such as chatbots, virtual assistants, and machine translation, where accurate and contextually appropriate responses are vital.
- Ethics and Bias: As AI language models become more prevalent and influential, the potential for ethical concerns and biases arises. To address this challenge, researchers and developers are actively working on strategies to mitigate biases in AI language models. They are incorporating fairness and accountability measures to ensure that AI systems provide unbiased and inclusive results. This includes techniques such as robust training data collection, algorithmic bias detection, and incorporating diverse perspectives in the development process. By tackling these issues head-on, the AI community is striving to build more ethical and unbiased AI systems.

These innovations and breakthroughs are propelling the field of AI forward, revolutionizing various industries and paving the way for exciting advancements in the future. With multimodal AI, enhanced contextual understanding, and strategies to address bias, AI is becoming smarter, more versatile, and ethically conscious. As we harness the potential of AI, it is crucial to prioritize responsible development and continue exploring new frontiers in this ever-evolving field.

Challenges and Ethical Considerations

While the use of AI language models offers numerous benefits, there are several challenges and ethical considerations that need to be addressed to ensure responsible and ethical use.

- Ensuring responsible use of AI language models: As AI language models become more sophisticated, there is a need for organizations and developers to be responsible in their usage. This includes avoiding generating harmful or biased content, as well as preventing the misuse of AI for spreading misinformation or creating deepfakes.
- Privacy concerns and data security implications: AI language models require vast amounts of data to be trained effectively. However, this raises concerns about privacy and data security. Organizations must ensure they handle user data appropriately and obtain necessary consent. Safeguarding data from unauthorized access or breaches is crucial to maintain user trust.
- Transparency and explainability in AI decision-making: AI language models make decisions or generate content based on complex algorithms that can be difficult to interpret or understand. This lack of transparency raises concerns regarding accountability and the potential for biased or unfair outputs. Developers should strive to improve explainability and transparency in AI systems to ensure ethical decision-making.

To address these challenges and ethical considerations, collaboration between developers, regulators, and users is essential. Open dialogues, clear guidelines, and responsible practices will contribute to the development and use of AI language models in an ethical and beneficial manner.


The Future of AI Language Models

AI language models have seen significant advancements in recent years, and their future holds immense potential across various industries and society as a whole. With the ability to process and generate human-like text, AI language models are set to revolutionize how information is created, consumed, and interacted with. Let's explore some predictions and future directions of AI language models and their potential impacts.

Predictions for AI Language Models

- Enhanced Natural Language Understanding: AI language models will continue to improve their understanding and interpretation of human language, surpassing their current capabilities. This will enable more accurate and context-aware responses, making interactions with these models feel increasingly natural and human-like.
- Multilingual and Cross-Lingual Capabilities: Future AI language models are expected to excel in handling multilingual data, allowing seamless translation and comprehension across different languages. This will facilitate global communication, foster collaboration, and bridge language barriers.
- Bias Reduction and Fairness: Researchers and developers are actively working on reducing biases present in AI language models. Future models will likely incorporate techniques to identify and mitigate biases, leading to fairer and more inclusive outputs that avoid perpetuating harmful stereotypes.
- Improved Creativity and Storytelling: AI language models will be designed to generate more creative and engaging narratives, making them useful tools for content creation in fields such as literature, journalism, and entertainment. These models will collaborate with human authors, enhancing the writing process and enriching storytelling.

Potential Impacts on Industries

- Content Generation and Curation: AI language models can automate content creation tasks, generating articles, blog posts, and product descriptions. They can also aid in content curation by analyzing and summarizing large volumes of information, making it easier for businesses to stay up-to-date with relevant trends and news.
- Customer Support and Chatbots: AI language models are increasingly being used in customer support chatbots. They can understand and respond to customer queries, providing personalized assistance and resolving issues efficiently. This helps companies improve customer satisfaction and reduce support costs.
- Language Tutoring and Translation: AI language models will play a significant role in language tutoring and translation services. They can provide real-time feedback on grammar, pronunciation, and vocabulary, facilitating language learning. Additionally, they can assist in translating written and spoken content accurately.
- Legal and Compliance Assistance: AI language models can aid legal professionals in analyzing contracts, identifying potential issues, and performing legal research. These models can also assist in compliance by automatically analyzing large volumes of data to identify possible violations and ensure adherence to regulations.

0 notes

Text

Free Prompt: Navigating Google Algorithm Updates

PROMPT TITLE: Navigating Google Algorithm Updates

PROMPT DESCRIPTION: When your website’s rankings take a hit due to Google’s algorithm updates, this prompt is your lifeline. It delves into the complexities of algorithm changes and offers expert guidance on recovery strategies. Learn to adapt to shifting search landscapes, identify ranking factors impacted by updates, and create a comprehensive plan to regain lost ground. Stay ahead of the SEO curve and turn algorithm challenges into opportunities with this invaluable resource.

MAIN PROMPT TEMPLATE:

As an expert, professional, and learned SEO specialist, analyze the impact of [Insert Google Algorithm Update] on [Insert Website/Industry]. Evaluate the key ranking factors affected by the update, and propose a comprehensive SEO recovery plan. Highlight both short-term and long-term strategies, and incorporate effective tactics for mitigating the negative impact and regaining lost organic traffic and rankings.
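The bracketed placeholders in the template can be filled programmatically before the prompt is sent to a chatbot. This is a minimal sketch that only formats the text; the function name `build_seo_prompt`, the field names `update` and `target`, and the sample values are hypothetical.

```python
TEMPLATE = (
    "As an expert, professional, and learned SEO specialist, analyze the impact of "
    "{update} on {target}. Evaluate the key ranking factors affected by the update, "
    "and propose a comprehensive SEO recovery plan. Highlight both short-term and "
    "long-term strategies, and incorporate effective tactics for mitigating the "
    "negative impact and regaining lost organic traffic and rankings."
)


def build_seo_prompt(update: str, target: str) -> str:
    """Fill the two placeholders, rejecting empty values."""
    update, target = update.strip(), target.strip()
    if not update or not target:
        raise ValueError("both the update and the website/industry must be provided")
    return TEMPLATE.format(update=update, target=target)


# Hypothetical inputs for illustration.
prompt = build_seo_prompt("a recent Google core update", "an independent online bookstore")
print(prompt)
```

Rejecting empty values avoids sending a prompt with a dangling gap, which tends to produce generic advice.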

Get more thought-provoking prompts here

0 notes

Text

🚀 Exciting News! Chat-GPT.onl - Your Free and Login-Free Chatbot Powered by GPT-3 is Live!

🤖 We're thrilled to introduce you to Chat-GPT.onl - the ultimate destination for experiencing the power of GPT-3 in a chatbot, completely free and without the need for a login! 🎉

🌐 chat-gpt.onl

✨ What can you do with Chat-GPT.onl?

🗣️ Chat with AI: Engage in lifelike conversations with our GPT-3-powered chatbot and experience the magic of natural language understanding.

💬 No Login Required: Enjoy the convenience of using our chatbot without the need for any logins or sign-ups.

🚀 Versatile Use Cases: Whether you want to have a chat, seek information, or simply explore the capabilities of GPT-3, Chat-GPT.onl is your playground.

🌟 No Strings Attached: It's completely free! No hidden fees, no commitments—just pure AI interaction. For AI enthusiasts, curious minds, or anyone looking for a friendly chat, Chat-GPT.onl offers a hassle-free, login-free experience with the marvel of GPT-3. Try it today and engage in captivating conversations!

🔥 Have questions or want to share your chat experiences? We're all ears on our website.

0 notes

Text

Chatbots: Understanding The Computer Programs That Talk To You #artificialintelligence #chatbots #gpt3 #machinelearning #OpenAI

0 notes

Text

AutoML Isn’t Coming for Your Job

AutoML is changing the game in machine learning—automating model building, testing, and deployment. But does that mean data scientists are obsolete? Not even close. In this short, we break down why your job is safe (for now), and how AutoML actually empowers human experts. Stay informed, stay relevant.

Watch video here https://youtube.com/shorts/tzZxM4G5ksM

👉 Subscribe for more AI & data insights in 60 seconds or less!

#LLM#GPT3#DataAnalytics#AI#MachineLearning#PredictiveAnalytics#DataScience#BusinessIntelligence#DataRevolution#TechTrends

0 notes

Text

OpenAI Search Engine Arrives: Will Google Face a Challenge?

OpenAI Search Engine

Tech industry rumours suggest that OpenAI, the artificial intelligence research organisation, could challenge Google’s search engine dominance. A search tool driven by OpenAI’s artificial intelligence capabilities might be announced in May this year.

Here is a comprehensive look at what we know so far about this prospective competitor to Google Search, and what it might mean for the future of web search.

Why Is OpenAI Developing a Search Engine?

Despite its power, Google Search is increasingly seen as imperfect. Its excessive volume of information, the dominance of adverts, and an apparent focus on ranking over user intent have all drawn criticism.

An alternative strategy has been alluded to by OpenAI CEO Sam Altman. His concept goes beyond “10 blue links” and uses AI to “help people find and act on and synthesise information.”

This may entail a search experience that understands difficult questions and offers more complete responses, possibly even summarising material or performing tasks tailored to the user’s needs.

Possible Benefits Offered by OpenAI

OpenAI brings a number of advantages to the table:

Highly advanced AI: OpenAI is at the forefront of LLM development. GPT-3 and other LLMs can understand natural language and could change how search engines interpret user queries.

Putting the needs of users first: OpenAI appears committed to putting users’ needs ahead of ranking websites. One possible outcome is a search experience that feels more helpful and intuitive.

Innovation: As a newer organisation, OpenAI may be more open to experimenting and departing from the conventions of traditional search engines.

Bing and OpenAI Search: Building on Bing’s Foundation?

According to a number of recent reports, OpenAI may use Microsoft’s Bing search engine as a foundation for its own product. The collaboration would be logical: Bing already uses OpenAI’s GPT-4 technology for at least some of its search capabilities, and Microsoft is a significant investor in OpenAI. Combining OpenAI’s cutting-edge artificial intelligence with Bing’s well-established infrastructure could be a winning formula.

How Might an OpenAI Search Engine Look?

A few possibilities are as follows:

Conversational search: A search engine you can hold a conversation with. You could ask follow-up questions, refine your search, and obtain more detailed answers.

AI-powered summarisation: Search results may include AI-generated summaries that give you an overview of the content before you click through to the full page.

Integrated tasks: The search engine may not only provide information but also help you complete tasks related to your query. For example, a search for “best hiking trails Yosemite” could include options for making reservations or suggested itineraries.

Does OpenAI Have the Potential to Dethrone Google?

Unseating Google Search, which currently holds a dominant market share, will be an extremely difficult task. To succeed, OpenAI would need the following:

A truly unique offering: An OpenAI search engine needs to provide a convincing edge over Google Search. It must be significantly better at understanding the user’s intent and delivering results of value.

Trust: As a new participant in the search market, OpenAI needs to build trust with its users. This entails protecting users’ data and offering a search experience that is dependable and objective.

Winning over developers: Google has won over developers with a wide ecosystem of apps and services that integrate with Google Search. To offer a genuinely comprehensive search experience, OpenAI Search will need to build a comparable network.

Is a Search Revolution on the Horizon?

One thing is certain: competition is brewing in the search sector, whether or not OpenAI’s rumoured search engine actually arrives in May. By promoting innovation and expanding what search engines can do, this can only benefit users.

Even if OpenAI does not immediately succeed in displacing Google, its entry into the market might spark a search revolution, resulting in search experiences that are more user-friendly, intuitive, and ultimately helpful for everyone.

The next few months are going to be exciting as we wait to see whether OpenAI will release its search engine and, if it does, how it will disrupt the status quo. The future of web search may become far smarter.

Read more on Govindhtech.com

#SearchEngine#openai#googlesearch#ai#gpt3#microsoft#openaigpt4#llm#technology#technews#news#govindhtech

1 note

·

View note

Text

youtube

Revolutionary AI: Discover the Power of Language Models! 🤖✨

#AI#TextGeneration#LLMs#ArtificialIntelligence#EthicsInAI#Misinformation#BiasInAI#FutureOfAI#TechDiscussion#MachineLearning#LargeLanguageModels#GenerativeAI#GPT3#AIFuture#TechExplained#AIApplications#AITextGeneration#LLM#TechInnovation#AIResearch#DeepLearning#TechEducation#AIExplained#NaturalLanguageProcessing#TransformersInAI#AITrends#FutureOfTech#DigitalTransformation#AIForBeginners#AIInsights

1 note

·

View note
