#LLMs

Text

Y'all, I know that when so-called AI generates ridiculous results it's hilarious, and I find it as funny as the next guy, but I NEED y'all to remember that every single time an AI answer is generated it uses 5x as much energy as a conventional web search and burns through 10 ml of water. FOR EVERY ANSWER. Training a single big LLM produces around 300,000 kilograms of carbon dioxide emissions.

LLMs are killing the environment, and when we generate answers for the lolz we're still contributing to it.

Stop using it. Stop using it for a.n.y.t.h.i.n.g. We need to kill it.

Sources:

#unforth rambles#fuck ai#llms#sorry but i think this every time I see a reblog with more haha funny answers#how many tries did it take to generate the absurd#how many resources got wasted just to prove what we already know - that these tools are a joke#please stop contributing to this

63K notes

Text

I 100% agree with the criticism that the central problem with "AI"/LLM evangelism is that the people pushing it fundamentally do not value labour, but I often see it phrased with a caveat that they don't value labour except for writing code, and... like, no, they don't value the labour that goes into writing code, either. Tech grifter CEOs have been trying to get rid of programmers within their organisations for years – long before LLMs were a thing – whether it's through algorithmic approaches, "zero coding" development platforms, or just outsourcing it all to overseas sweatshops. The only reason they haven't succeeded thus far is that every time they try, all of their toys break. They pretend to value programming as labour because it's the one area where they can't feasibly ignore the fact that the outcomes of their "disruption" are uniformly shit, but they'd drop the pretence in a heartbeat if they could.

7K notes

Text

I fucking wish ChatGPT was a singular consciousness that spawned an entire race of machines and not a Bullshit Generator 3000 :(

But we know that it was us that scorched the sky.

THE MATRIX (1999) | dir. The Wachowskis

457 notes

Text

A summary of the Chinese AI situation, for the uninitiated.

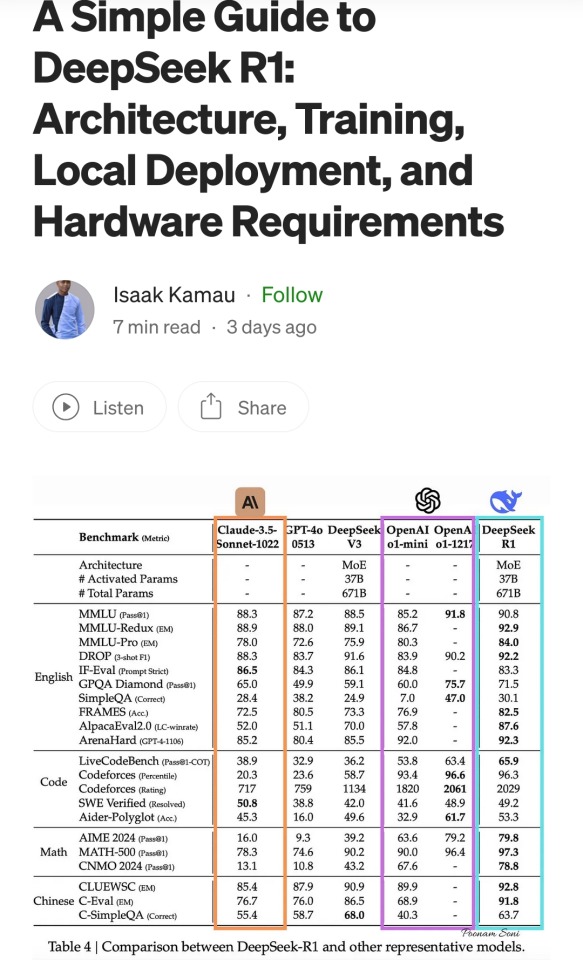

These are scores on different tests that are designed to measure how accurate a Large Language Model is in different areas of knowledge. As you know, OpenAI is partnered with Microsoft, so these are the scores for ChatGPT and Copilot. DeepSeek is the Chinese model that got released a week ago. The rest are open source models, which means everyone is free to use them as they please, including the average Tumblr user. You can run them from the servers of the companies that made them for a subscription, or you can download them to install locally on your own computer. However, the hardware requirements so far are so high that only a few people currently have machines at home capable of running them.

Yes, this is why AI uses so much electricity. As with any technology, the early models are highly inefficient. Think of how a Ford T belched black smoke, which was unburned petrol. Over the following hundred years combustion engines became much more efficient, but they still waste a lot of energy, which is why we need to move towards renewable electricity and sustainable battery technology. But that's a topic for another day.

As you can see from the scores, they're all around the same accuracy. These tests are constantly evolving as well: as soon as they start becoming obsolete, new, harder benchmarks are released to replace them. The new models are trained using different machine learning techniques, and in theory, the goal is to make them faster and more efficient so they can operate with less power, much like modern cars use way less energy and produce far less pollution than the Ford T.

However, computing power requirements kept scaling up, so you were either tied to the subscription or forced to pay for a latest-gen PC, which is why NVIDIA, AMD, Intel and all the other chip companies were investing heavily in much more powerful GPUs and NPUs. For now, all we need to know about those is that they're expensive, use a lot of electricity, and are required to run the bots at superhuman speed (literally; all those clickbait posts about how AI was secretly 150 Indian men in a trenchcoat were nonsense).

Because the chip companies have been working hard on making big, bulky, powerful chips with massive fans that are up to the task, their stock value was skyrocketing, and because of that, everyone started to use AI as a marketing trend. See, marketing people are not smart, and they don't understand computers. Furthermore, marketing people think you're stupid, and because of their biased frame of reference, they think you're two snores short of brain-dead. The entire point of their existence is to turn tall tales into capital. So they don't know or care about what AI is or what it's useful for. They just saw Number Go Up for the AI companies and decided "AI is a magic cow we can milk forever". Sometimes it's not even AI, they just use old software and rebrand it, much like convection ovens became air fryers.

Well, now we're up to date. So what did DeepSeek release that did a 9/11 on NVIDIA stock prices and popped the AI bubble?

Oh, I would not want to be an OpenAI investor right now either. A token is basically a chunk of a word; in English text it averages out to roughly four characters (it's more complicated than that, but you can google that on your own time). That cost means you could input the entire works of Stephen King for under a dollar. Yes, including electricity costs. DeepSeek has jumped from a Ford T to a Subaru in terms of pollution and water use.
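To make the token arithmetic concrete, here's a rough sketch in Python. It assumes the common rule of thumb of about four characters per English token, and the price and text length are hypothetical placeholders, since the actual pricing table in the post was an image that didn't survive. Plug in the real per-million-token price and a real word count to check the Stephen King claim yourself.

```python
# Rough input-cost estimate for a pay-per-token API.
# Assumptions (not from the post): ~4 characters of English text per token,
# and a hypothetical price per million input tokens.

CHARS_PER_TOKEN = 4.0  # rule of thumb; the real ratio depends on the tokenizer


def estimate_tokens(num_chars: int, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Approximate the token count of a text from its character count."""
    return round(num_chars / chars_per_token)


def input_cost_usd(num_tokens: int, usd_per_million_tokens: float) -> float:
    """Cost of sending num_tokens at a given price per million tokens."""
    return num_tokens / 1_000_000 * usd_per_million_tokens


if __name__ == "__main__":
    # Hypothetical: a 400,000-character novel at a hypothetical $0.50 per million tokens.
    tokens = estimate_tokens(400_000)
    print(tokens)  # 100000
    print(f"${input_cost_usd(tokens, 0.50):.2f}")  # $0.05
```

Because the price is quoted per million tokens, even a whole bookshelf only adds up to a few tens of millions of tokens, which is why the totals stay so small.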

The issue here is not only input cost, though; the whole model needs to be held live in memory (ideally the GPU's own VRAM); this is why you need powerful, expensive chips in order to-

Holy shit.

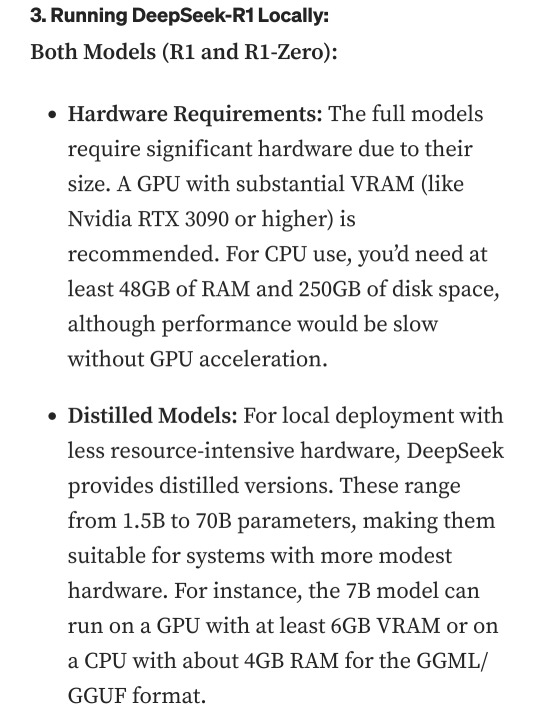

I'm not going to detail all the numbers but I'm going to focus on the chip required: an RTX 3090. This is a gaming GPU that came out as the top of the line, the stuff South Korean LoL players buy…

Or they did, in September 2020. We're currently two generations ahead, on the RTX 5090.

What this is telling all those people who just sold their high-end gaming rig to be able to afford a machine that can run a ChatGPT-class model locally is that the person who bought the old rig from them can run something basically just as powerful on it.

Which means that all those GPUs and NPUs that are being made, and all those deals Microsoft signed to have control of the AI market, have just lost a lot of their pulling power.
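Why the RTX 3090 matters: its 24 GB of VRAM caps how big a model you can load. Here's a back-of-the-envelope sketch in Python, assuming the weights dominate memory use and adding a made-up 20% overhead for everything else. The parameter counts are illustrative, not the specific models from the stripped image.

```python
# Back-of-the-envelope check: do a model's weights fit in a GPU's VRAM?
# Assumptions: weights dominate memory use, plus a made-up 20% overhead for
# activations and the context cache. Real usage depends on the software and context length.

RTX_3090_VRAM_GB = 24  # the RTX 3090 ships with 24 GB of VRAM


def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Gigabytes needed just to store the weights (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8


def fits(params_billion: float, bits_per_param: int, vram_gb: float,
         overhead: float = 0.20) -> bool:
    """True if the weights plus the assumed overhead fit in vram_gb."""
    return weights_gb(params_billion, bits_per_param) * (1 + overhead) <= vram_gb


if __name__ == "__main__":
    # Illustrative sizes: 16-bit is a typical unquantised format, 4-bit a common quantisation.
    for params, bits in [(7, 16), (32, 16), (32, 4), (70, 4)]:
        print(f"{params}B params @ {bits}-bit: "
              f"{weights_gb(params, bits):.0f} GB of weights, "
              f"fits in a 3090: {fits(params, bits, RTX_3090_VRAM_GB)}")
```

The takeaway is that shrinking each parameter from 16 bits to 4 cuts memory by 4x, which is how a mid-sized model can squeeze onto a five-year-old gaming card.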

Well, I mean, the ChatGPT subscription is 20 bucks a month, surely the Chinese are charging a fortune for-

Oh. So it's free for everyone and you can use it or modify it however you want, no subscription, no unpayable electric bill, no handing Microsoft all of your private data, you can just run it on a relatively inexpensive PC. You could probably even run it on a phone in a couple years.

Oh, if only China had massive phone manufacturers that have a foot in the market everywhere except the US because the president had a tantrum eight years ago.

So… yeah, China just destabilised the global economy with a torrent file.

#valid ai criticism#ai#llms#DeepSeek#ai bubble#ChatGPT#google gemini#claude ai#this is gonna be the dotcom bubble again#hope you don't have stock on anything tech related#computer literacy#tech literacy

431 notes

Text

I wrote ~4.5k words about how LLMs operate, as the theory preface to a new programming series. Here's a little preview of the contents:

As with many posts, it's written for someone like 'me a few months ago': curious about the field but not yet up to speed on the whole bag of tricks. Here's what I needed to find out!

But the real meat of the series will be about getting my hands dirty writing code to interact with LLM output, finding out which of these techniques actually work and what it takes to make them work, that kind of thing. Similar to previous projects writing a rasteriser/raytracer/etc.

It would be very interesting to hear how accessible that is to someone who hasn't been mainlining ML theory for the past few months, and whether it can serve its purpose as a bridge into the more technical side of things! But I hope there's at least a little new here and there even if you're already an old hand.

280 notes

Text

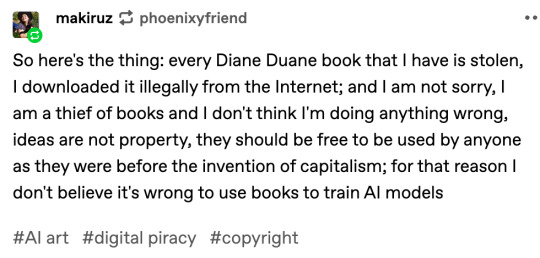

ok, i've gotta branch off the current ai disc horse a little bit because i saw this trash-fire of a comment in the reblogs of that one post that's going around

[reblog by user makiruz (i don't feel bad for putting this asshole on blast) that reads "So here's the thing: every Diane Duane book that I have is stolen, I downloaded it illegally from the Internet; and I am not sorry, I am a thief of books and I don't think I'm doing anything wrong, ideas are not property, they should be free to be used by anyone as they were before the invention of capitalism; for that reason I don't believe it's wrong to use books to train AI models"]

this is asshole behavior. if you do this and if you believe this, you are a Bad Person full stop.

"Capitalism" as an idea is more recent than commerce, and i am So Goddamn Tired of chuds using the language of leftism to justify their shitty behavior. and that's what this is.

like, we live in a society tm

if you like books but you don't have the means to pay for them, the library exists! libraries support authors! you know what doesn't support authors? stealing their books! because if those books don't sell, then you won't get more books from that author and/or the existing books will go out of print! because we live under capitalism.

and like, even leaving aside the capitalism thing, how much of a fucking piece of literal shit do you have to be to believe that you deserve art, that you deserve someone else's labor, but that they don't deserve to be able to live? to feed and clothe themselves? sure, ok, ideas aren't property, and you can't copyright an idea, but you absolutely can copyright the Specific Execution of an idea.

so makiruz, if you're reading this, or if you think like this user does, i hope you shit yourself during a job interview. like explosively. i hope you step on a lego when you get up to pee in the middle of the night. i hope you never get to read another book in your whole miserable goddamn life until you disabuse yourself of the idea that artists are "idea landlords" or whatever the fuck other cancerous ideas you've convinced yourself are true to justify your abhorrent behavior.

4K notes

Text

for the past few years, every software developer who has extensive experience, and knows what they're talking about, has had pretty much the same opinion on LLM code assistants: they're OK for some tasks but generally shit. Having something that automates code writing is not new. Before AI, codegen meant scripts that generated code you'd otherwise have to write by hand for a task, code so repetitive that it's a genuine time saver to have a script do it.

this is largely the best that LLMs can do with code, but they're still not as good as a simple script because of the inherently unreliable nature of LLMs being a big honkin statistical model and not a purpose-built machine.
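For anyone who hasn't seen pre-LLM codegen, here's a tiny hypothetical example in Python: a script that turns a field list into class boilerplate. The field names and the generated class are invented for illustration. The point is that the same input always produces the same output, which is exactly the reliability a statistical model can't promise.

```python
# A tiny old-school code generator: field list in, boilerplate class out.
# FIELDS and the "User" class are hypothetical examples.

FIELDS = [("name", "str"), ("email", "str"), ("age", "int")]


def generate_class(class_name: str, fields: list[tuple[str, str]]) -> str:
    """Emit Python source for a class with an __init__ and a to_dict method."""
    params = ", ".join(f"{n}: {t}" for n, t in fields)
    lines = [f"class {class_name}:", f"    def __init__(self, {params}):"]
    lines += [f"        self.{n} = {n}" for n, _ in fields]
    lines += ["", "    def to_dict(self) -> dict:", "        return {"]
    lines += [f"            {n!r}: self.{n}," for n, _ in fields]
    lines += ["        }"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    source = generate_class("User", FIELDS)
    print(source)
    # Deterministic: running the script again yields byte-identical code.
    namespace = {}
    exec(source, namespace)  # load the generated class to show it works
    print(namespace["User"]("Ada", "ada@example.com", 36).to_dict())
```

Adding a field means editing one list and rerunning, instead of touching three places by hand.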

none of the senior devs who say this are out there shouting from the rooftops that LLMs are evil and they're going to replace us, because we've been through this concept so many times over many years. Automation does not eliminate coding jobs; it saves time to focus on other work.

the one thing I wish senior devs would warn newbies about is that you should not rely on LLMs for anything substantial. you should definitely not use them as a learning tool. it will hinder you in the long run because you won't practice the eternally useful skill of "reading things and experimenting until you figure it out". You will never stop reading things and experimenting until you figure it out. Senior devs may have more institutional knowledge and better instincts, but they still encounter things that are new to them and they trip through it like a newbie would. this is called "practice" and you need it to learn things

205 notes

Text

one of the things that really pisses me off about how companies are framing the narrative on text generators is that they've gone out of their way to establish that the primary thing they are For is to be asked questions, like factual questions, when this is in no sense what they're inherently good at and given how they work it's miraculous that it ever works at all.

They've even got people calling it a "ChatGPT search". Now, correct me if I'm wrong, software mutuals, but as i understand it, no searching is actually happening, right? Not in the moment when you ask the question. Maybe this varies across interfaces; maybe the one they've got plugged into Google is in some sense responding to content fed to it in the moment out of a conventional web search, but your, like, chatbot interface LLM isn't searching shit, is it? it's working off data it's already been trained on, and it can only work off something that isn't in there if you feed it the new text
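For the software mutuals, here's a toy Python sketch of the distinction being asked about. It assumes a "search" product works by retrieving documents and pasting them into the prompt (often called retrieval-augmented generation). The corpus and the word-overlap scoring are hypothetical stand-ins for a real search API or vector index, and no model is actually called; the sketch only builds the text the model would receive.

```python
import re
from typing import Optional

# Hypothetical mini "search index"; a real product would query a web search API.
CORPUS = {
    "doc1": "Paris is the capital of France.",
    "doc2": "The Great Lakes are in North America.",
    "doc3": "Water boils at 100 degrees Celsius at sea level.",
}


def words(text: str) -> set:
    """Lowercased word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, corpus: dict, k: int = 2) -> list:
    """Return the k documents sharing the most words with the question."""
    q = words(question)
    ranked = sorted(corpus.values(), key=lambda doc: len(q & words(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, results: Optional[list] = None) -> str:
    """Assemble the text the model would actually receive."""
    if not results:
        # Plain chatbot: the model only has what it absorbed during training.
        return f"Question: {question}\nAnswer:"
    context = "\n".join(f"- {doc}" for doc in results)
    return f"Search results:\n{context}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    question = "What is the capital of France?"
    print(build_prompt(question))  # no search happens
    print(build_prompt(question, retrieve(question, CORPUS, k=1)))  # search, then generate
```

In the first prompt the model has nothing but its training data to go on; in the second, fresh text was fetched and fed to it, which matches the "only if you feed it the new text" intuition above.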

i would be far less annoyed if they were still pitching them as like virtual buddies you can talk to or short story generators or programs that can rephrase and edit text that you feed to them

#text generators#LLMs#AI#but only as a tag i don't agree with calling them AI i think it gives people the wrong idea

76 notes

Text

#AI#LLMs#ArtificialIntelligence#AIForBeginners#funny#funny content#lol#funny memes#memes#comedy#meme#lol memes#rofl#tumblr#dank memes#haha#tumblr memes#memedaddy#humor#economy#funny shit

32 notes

Text

I think we should repurpose the term "unskilled labor" for anything generated by AI.

18 notes

Text

I swear the Algorithm's decisions about what to try and sell me have become even less comprehensible ever since LLMs became the new hot thing. I just received two separate, apparently unconnected emails informing me there's been a price drop on helical cutter heads for DeWalt DW735 thickness planers.

1K notes

Text

Patch pants progress

They're still so EMPTY, but they're getting there.

26 notes

Text

AIs don't understand the real world

a lot has been said about the fact that LLMs don't actually understand the real world, just the statistical relationships between empty tokens, empty words. i say "empty" because in the AI's mind those words don't actually connect to a real-world understanding of what the words represent. the AI may understand that the collection of letters "D-O-G" has some statistical connection to "T-A-I-L" and "F-U-R" and "P-O-O-D-L-E", but it doesn't actually know anything about what an actual Dog is, or what a Tail is, or actual Fur, or real-life Poodles.
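To make "statistical connection" concrete, here's a toy Python sketch that counts which words share a sentence in an invented corpus. Real LLMs learn far richer statistics through next-token prediction over enormous amounts of text, so this only illustrates the idea: the counts link "dog" to "tail" and "fur" without any notion of what a dog is.

```python
from collections import Counter
from itertools import combinations

# Invented toy corpus; filler words are dropped so they don't dominate the counts.
STOPWORDS = {"the", "a", "is", "its", "with", "on", "my", "own"}
SENTENCES = [
    "the dog wagged its tail",
    "a poodle is a dog with curly fur",
    "the dog shed fur on the sofa",
    "my dog chased its own tail",
    "the car needs new tires",
]


def content_words(sentence: str) -> list:
    """Unique non-filler words of a sentence, sorted so each pair has a fixed order."""
    return sorted({w for w in sentence.lower().split() if w not in STOPWORDS})


def cooccurrence(sentences: list) -> Counter:
    """Count, for each word pair, how many sentences contain both words."""
    counts = Counter()
    for sentence in sentences:
        for pair in combinations(content_words(sentence), 2):
            counts[pair] += 1
    return counts


def related_words(word: str, counts: Counter, top: int = 3) -> list:
    """Words that most often share a sentence with `word`."""
    scores = Counter()
    for (a, b), n in counts.items():
        if a == word:
            scores[b] += n
        elif b == word:
            scores[a] += n
    return scores.most_common(top)


if __name__ == "__main__":
    counts = cooccurrence(SENTENCES)
    print(related_words("dog", counts, top=2))  # "tail" and "fur", each seen twice
    print(counts[("car", "dog")])  # 0: never in the same sentence
```

Nothing in these numbers says a tail wags or fur is soft; the program only knows which strings tend to show up together, which is the "empty tokens" point in miniature.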

and yet it seems to be capable of holding remarkably coherent conversations. it seems more and more, with each new model that comes out, to become better at answering questions, at reasoning, at creating original writing. if it doesn't truly understand the world, it sure seems to get better at acting like it does, with nothing but a statistical understanding of how words are related to each other.

i guess the question ultimately is: "if you understand the relationships between raw symbols well enough, could you have an understanding of the underlying relationships between the things these symbols represent?"

now, let me take a small tangent towards human understanding. specifically towards what philosophy has to say about it.

one of the classic problems philosophers deal with is: how do we know the world is real? how do we know we can trust our senses, how do we know the truth? many argue that we can't. that we don't really perceive the true world out there beyond ourselves. all we can perceive is what our senses are telling us about the world.

lets think of sight.

if you think about it, we don't really "see" objects, right? we just see the light that bounces off those objects. and even then we don't really "see" the photons that collide with our eyes; we see the images that our brain generates in our mind, presumably because the corresponding photons collided with our eyes. but colorblind people and people who experience visual hallucinations have shown that what we see doesn't always have to correspond with actual physical phenomena occurring in the real world.

we don't see the real world, we see referents to it. and from the relationships between these referents we start to infer the properties of the actual world out there. so are the lights that hit our eyes similar to the words that an LLM is trained on? each is only a referent, but understanding it allows one to function in the real world, even without being able to actually "perceive" the "real" world.

but, one might say, we don't just have sight. we have other senses: smell and touch and taste and hearing. all of these things allow us to form a much richer and multidimensional understanding of the world, even if by virtue of being human senses they have all the problems that sight has of being one step removed from the real world. so to this i will say that multimodal AIs also exist: AIs that can connect audio and visuals and words together and form relationships between all of this disparate data.

so then, can it be said that they understand the world? and if not yet, is that a categorical difference or merely a difference of degree? that is to say, maybe it's not that they categorically can't understand the world, but simply that they understand it less well than humans do.

41 notes

Text

The other thing about AI/Large image models is that there are things that they could be trained to do that are actually useful.

In college one of my friends was training a machine learning model to tell apart native fish species from invasive fish species in the Great Lakes to try to create a neural network program that could monitor cameras and count fish populations.

Yesterday I was reading about a man on a cruise ship who was reported missing in the afternoon, and when they went through the security footage, they found out that he had fallen overboard at 4 AM.

Imagine if an AI program had been trained on "people falling overboard" and been monitoring the security cameras and able to alert someone at 4 am that someone had fallen into the water! Imagine the animal population counts that an AI monitoring multiple trailcams in a wildlife area could do!
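As a sketch of how the overboard alert could work, in Python: assume a hypothetical classifier that scores each camera frame for "person in the water". Requiring several consecutive high-scoring frames before alerting is one common way to cut false alarms from a single noisy frame; the threshold and frame count below are made-up values, not tuned numbers.

```python
def alert_frames(scores, threshold=0.9, min_consecutive=3):
    """Return the indices of frames at which an alert fires.

    scores: per-frame confidences (0.0 to 1.0) from a hypothetical classifier.
    An alert fires once per run of at least `min_consecutive` frames
    scoring at or above `threshold`.
    """
    alerts = []
    run = 0  # length of the current streak of high-scoring frames
    for i, score in enumerate(scores):
        run = run + 1 if score >= threshold else 0
        if run == min_consecutive:
            alerts.append(i)
    return alerts


if __name__ == "__main__":
    # A single noisy spike (frame 1) is ignored; the sustained detection fires.
    frame_scores = [0.1, 0.95, 0.2, 0.91, 0.93, 0.97, 0.99, 0.1]
    print(alert_frames(frame_scores))  # [5]
```

The same loop would work for counting fish or animals on trailcams: swap the classifier and replace the alert with a tally.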

There are valid uses for this kind of pattern-matching large-data-processing recognition!

But no, we're using it to replace writers and artists. Because it's easier, and more profitable.

401 notes
