#how to generate token in github


Text

How to Generate Personal Access Token GitHub

Learning How to Generate Personal Access Token GitHub is essential for enhancing your account’s security and enabling seamless integration with various tools and automation. A Personal Access Token (PAT) is a unique string of characters that serves as a secure authentication method for interacting with the GitHub API. By following this comprehensive guide, we’ll walk you through the process of…
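Once a token exists, using it with the GitHub API comes down to sending it in an `Authorization` header. The sketch below is a minimal illustration, not an official recipe: the `GITHUB_TOKEN` environment variable name, the `build_github_request` helper, and the placeholder token are all assumptions made for this example. It builds the request without sending it.

```python
import os
import urllib.request

API_ROOT = "https://api.github.com"


def build_github_request(path: str, token: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated GitHub REST API request."""
    return urllib.request.Request(
        f"{API_ROOT}{path}",
        headers={
            # The PAT is passed as a bearer token.
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
        },
    )


# Read the token from the environment instead of hard-coding it.
# GITHUB_TOKEN is an assumed variable name; the fallback is a placeholder, not a real token.
token = os.environ.get("GITHUB_TOKEN", "ghp_exampleplaceholder")
request = build_github_request("/user", token)

# To actually call the API with a real token:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Keeping the token in an environment variable rather than in the script means it never lands in version control.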


#GitHub API#how to create personal access token in github#how to create token in github#How to Generate a Personal Access Token on GitHub#How to Generate Personal Access Token GitHub#how to generate token in github#personal access token

0 notes

Text

Hi all! We recently hit 10k followers on Twitter and are gaining traction on Bluesky. This is a periodic reminder to check us out over there and follow us if you haven't already!

Additionally, I'd really like to get this bot on a VPS soon and have the bot pay for itself, since hosting funds ran out a while back (DigitalOcean is quite expensive). I hate to keep asking, but if you are able to, please consider giving a little. You can donate through CashApp. Or, if you cannot, starring the repository on GitHub (yes, this is open source) would mean a lot to me personally. Promise I'll stop asking soon since I'm hoping the Twitter monetization revenue will kick in at some point.

Finally, I'd like to thank each and every one of you for following - not just in general, but Tumblr specifically. Out of all the platforms I have brought this bot to, it is Tumblr which has given it the warmest reception, and what motivates me to ultimately keep the bot up (and what caused me to bring it back.) I really am grateful for you guys. As a token of appreciation, I've decided to open up the ask page. I'm unfamiliar with how this really works (and Tumblr in general) but I'll try to answer some questions. In particular, I'd like to know about some things you'd want on this bot or things that are lacking - however much I can make this bot better for you. I realize that's a silly ask of such a meager bot, but who knows - perhaps I'm forgetting something important. In general, any feedback or input or even questions you have are greatly appreciated and I'll try my best to answer/implement whatever I get.

Again, thank you all, from the bottom of my heart. :D

131 notes


Text

Unnecessarily compiling sensitive information can be as damaging as actively trying to steal it. For example, the Cybernews research team discovered a plethora of supermassive datasets, housing billions upon billions of login credentials. From social media and corporate platforms to VPNs and developer portals, no stone was left unturned.

Our team has been closely monitoring the web since the beginning of the year. So far, they’ve discovered 30 exposed datasets containing from tens of millions to over 3.5 billion records each. In total, the researchers uncovered an unimaginable 16 billion records.

None of the exposed datasets were reported previously, bar one: in late May, Wired magazine reported a security researcher discovering a “mysterious database” with 184 million records. It barely scratches the top 20 of what the team discovered. Most worryingly, researchers claim new massive datasets emerge every few weeks, signaling how prevalent infostealer malware truly is.

“This is not just a leak – it’s a blueprint for mass exploitation. With over 16 billion login records exposed, cybercriminals now have unprecedented access to personal credentials that can be used for account takeover, identity theft, and highly targeted phishing. What’s especially concerning is the structure and recency of these datasets – these aren’t just old breaches being recycled. This is fresh, weaponizable intelligence at scale,” researchers said.

The only silver lining here is that all of the datasets were exposed only briefly: long enough for researchers to uncover them, but not long enough to find who was controlling vast amounts of data. Most of the datasets were temporarily accessible through unsecured Elasticsearch or object storage instances.

What do the billions of exposed records contain?

Researchers claim that most of the data in the leaked datasets is a mix of details from stealer malware, credential stuffing sets, and repackaged leaks.

There was no way to effectively compare the data between different datasets, but it’s safe to say overlapping records are definitely present. In other words, it’s impossible to tell how many people or accounts were actually exposed.

However, the information that the team managed to gather revealed that most of the information followed a clear structure: URL, followed by login details and a password. Most modern infostealers – malicious software stealing sensitive information – collect data in exactly this way.

Information in the leaked datasets opens the doors to pretty much any online service imaginable, from Apple, Facebook, and Google, to GitHub, Telegram, and various government services. It’s hard to miss something when 16 billion records are on the table.

According to the researchers, credential leaks at this scale are fuel for phishing campaigns, account takeovers, ransomware intrusions, and business email compromise (BEC) attacks.

“The inclusion of both old and recent infostealer logs – often with tokens, cookies, and metadata – makes this data particularly dangerous for organizations lacking multi-factor authentication or credential hygiene practices,” the team said.

What dataset exposed billions of credentials?

The datasets that the team uncovered differ widely. For example, the smallest, named after malicious software, had over 16 million records. Meanwhile, the largest one, most likely related to the Portuguese-speaking population, had over 3.5 billion records. On average, one dataset with exposed credentials had 550 million records.

Some of the datasets were named generically, such as “logins,” “credentials,” and similar terms, preventing the team from getting a better understanding of what’s inside. Others, however, hinted at the services they’re related to.

For example, one dataset with over 455 million records was named to indicate its origins in the Russian Federation. Another dataset, with over 60 million records, was named after Telegram, a cloud-based instant messaging platform.


While naming is not the best way to deduce where the data comes from, it seems some of the information relates to cloud services, business-oriented data, and even locked files. Some dataset names likely point to a form of malware that was used to collect the data.

It is unclear who owns the leaked data. While it could be security researchers that compile data to check and monitor data leaks, it’s virtually guaranteed that some of the leaked datasets were owned by cybercriminals. Cybercriminals love massive datasets as aggregated collections allow them to scale up various types of attacks, such as identity theft, phishing schemes, and unauthorized access.

A success rate of less than a percent can open doors to millions of individuals, who can be tricked into revealing more sensitive details, such as financial accounts. Worryingly, since it's unclear who owns the exposed datasets, there’s little users can do to protect themselves.

However, basic cyber hygiene is essential. Using a password manager to generate strong, unique passwords, and updating them regularly, can be the difference between a safe account and stolen details. Users should also review their systems for infostealers, to avoid losing their data to attackers.
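For readers curious what "strong, unique" means in practice, here is a minimal sketch of the kind of generation a password manager performs, using Python's standard `secrets` module. The `generate_password` helper, the 20-character default, and the rule requiring one character from each class are assumptions chosen for illustration, not a description of any particular product.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password containing lower, upper, digit and symbol characters."""
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets.choice draws from a cryptographically secure random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


# One fresh password per account, never reused.
password = generate_password()
```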

No, Facebook, Google, and Apple passwords weren’t leaked. Or were they?

With a dataset containing 16 billion passwords, that’s equivalent to two leaked accounts for every person on the planet.
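The two-per-person figure is simple division. A quick sketch, assuming a world population rounded to 8 billion (our assumption, not a number taken from the datasets) and using the rounded totals quoted above:

```python
TOTAL_RECORDS = 16_000_000_000    # total exposed records reported by the researchers
DATASET_COUNT = 30                # number of exposed datasets reported
WORLD_POPULATION = 8_000_000_000  # assumption: rounded world population

records_per_person = TOTAL_RECORDS / WORLD_POPULATION
average_per_dataset = TOTAL_RECORDS / DATASET_COUNT

print(records_per_person)             # 2.0
print(f"{average_per_dataset:,.0f}")  # 533,333,333
```

Dividing the rounded total by 30 gives about 533 million records per dataset, in the same range as the roughly 550 million average quoted earlier. Because of overlapping records, neither number says how many distinct people are affected.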

We don’t really know how many duplicate records there are, as the leak comes from multiple datasets. However, some reporting by other media outlets can be quite misleading. Some claim that Facebook, Google, and Apple credentials were leaked. While we can’t completely dismiss such claims, we feel this is somewhat inaccurate.

Bob Diachenko, a Cybernews contributor, cybersecurity researcher, and owner of SecurityDiscovery.com, is behind this recent major discovery.

16-billion-record data breach signals a shift in the underground world

According to Cybernews researcher Aras Nazarovas, this discovery might signal that criminals are abandoning previously popular methods of obtaining stolen data.

"The increased number of exposed infostealer datasets in the form of centralized, traditional databases, like the ones found by the Cybernews research team, may be a sign that cybercriminals are actively shifting from previously popular alternatives such as Telegram groups, which were previously the go-to place for obtaining data collected by infostealer malware," Nazarovas said.

He regularly works with exposed datasets, ensuring that defenders secure them before threat actors can access them.

Here’s what Nazarovas suggests you should do to protect yourself.

"Some of the exposed datasets included information such as cookies and session tokens, which makes the mitigation of such exposure more difficult. These cookies can often be used to bypass 2FA methods, and not all services reset these cookies after changing the account password. Best bet in this case is to change your passwords, enable 2FA, if it is not yet enabled, closely monitor your accounts, and contact customer support if suspicious activity is detected."

Billions of records exposed online: recent leaks involve WeChat, Alipay

Major data leaks, with billions of exposed records, have become nearly ubiquitous. Last week, Cybernews wrote about what is likely the biggest data leak to ever hit China, billions of documents with financial data, WeChat and Alipay details, as well as other sensitive personal data.

Last summer, the largest password compilation with nearly ten billion unique passwords, RockYou2024, was leaked on a popular hacking forum. In 2021, a similar compilation with over 8 billion records was leaked online.

In early 2024, the Cybernews research team discovered what is likely still the largest data leak ever: the Mother of All Breaches (MOAB), with a mind-boggling 26 billion records.

16 billion passwords exposed: how to protect yourself

Huge datasets of passwords spill onto the dark web all the time, highlighting the need to change them regularly. This also demonstrates just how weak our passwords still are.

Last year, someone leaked the largest password compilation ever, with nearly ten billion unique passwords published online. Such leaks pose severe threats to people who are prone to reusing passwords.

Even if you think you are immune to this or other leaks, go and reset your passwords just in case.

Select strong, unique passwords that are not reused across multiple platforms

Enable multi-factor authentication (MFA) wherever possible

Closely monitor your accounts

Contact customer support in case of any suspicious activity

3 notes


Note

Thank you so much for all your hard work on your Immersive mods, they're a joy to play! You've inspired me to give modding a try, and I've got like a billion questions, but just off the top of my head: 1. What came first for you, the dialogue or the cut scenes? (And how did you learn how to do the cutscenes omg they're perfect) 2. What made you decide to involve other characters in your Sam/Sebastian mod? 3. What other tools do you use to see your additions in action? Is there a command you run to play your cutscenes or to manipulate the time of day to test something? Thank you in advance :)

Wow, you're going to make me think for a second, haha! This is a long answer so keep reading...

I start with the basic heart level daily dialogue first, then move on to dated dialogue. If I think of an event I want along the way, I will just hop over to the events and write that, then go back to dialogue. It's easier for me to keep it consistent if I go back and forth. I do have a basic overall story in mind before I start so I can generally plan events along a heart-level timeline.

I added the characters that are involved in my main character's life the most, family and close friends. Then in Seb I added some stuff for Alex just because I wanted to have a mini story with him. So it's just whichever characters seem to want to be in your story or dialogue. If I were to write one for Abigail, Sam and Seb would be in it along with her parents. Leah and Elliott don't have a lot of town connections, so other characters would be less involved or more at a surface level unless I wanted to add a story/connection for them. Elliott would have something with Willy and possibly Seb. Leah has virtually nothing in vanilla so would have to be all new.

Coding events... that is a real challenge to learn, the online resources are kind of there but limited. I pieced it together from looking at code on other mods, the Stardew modding wiki (articles on event modding and creating i18n files), and the Content Patcher GitHub instructions. I really don't recommend using my mod as a beginner reference, it's huge and full of stuff you probably don't need. I'd use a somewhat simpler one that's updated for 1.6 like Immersive Characters - Shane. The structure is similar, but my mods use a lot of dynamic tokens and conditional code that I added along the way making it much more advanced. Join the Stardew Valley discord, they have a modding help section and they are super quick to answer questions.

I also make custom sprites for events, I don't really advise doing this unless you're prepared for some extra headaches. Players DO NOT like when you mess with their sprite mods and you'll end up either ignoring a lot of complaints or making patches for Seasonal Outfits mod in particular.

To test your events, code it all up the best you can, then run debug ebi eventID in the SMAPI console. This plays your event immediately. There are some twitchy things about this involving conditions you'll learn along the way. You also need CJB Cheats Menu mod, this allows you to skip time, change friendship levels, change weather, all kinds of stuff you need for testing dialogue without actually playing the game. Bottom line, it's really trial and error. And running debug ebi like a million times on each event as you watch your characters literally walk off screen, turn the wrong way, farmer's hair pops off, so many bloopers!

9 notes


Text

Exploring SpookySwap: The DeFi Hub of Fantom in 2025

As decentralized finance (DeFi) continues to expand across multiple chains, users are seeking platforms that offer efficiency, transparency, and seamless user experience. On the Fantom Opera blockchain, SpookySwap has consistently delivered on all three fronts — earning its place as one of the most respected and functional DEXs in the ecosystem.

This article explores how SpookySwap evolved into a comprehensive DeFi hub, what it offers users in 2025, and how you can take advantage of its features to navigate the multichain DeFi landscape.

What Is SpookySwap?

SpookySwap is a decentralized exchange (DEX) and automated market maker (AMM) built natively on the Fantom network. Since its launch in 2021, the platform has prioritized speed, low fees, and a simplified user interface, making it one of the most user-friendly DEXs in the space.

Unlike some DEXs that offer only token swaps, SpookySwap has grown into a multifunctional DeFi ecosystem that includes:

Token trading

Yield farming and staking

Cross-chain bridging

Governance through the $BOO token

You can review their technical documentation and open-source repositories via their official GitHub: 🔗 SpookySwap GitHub

Core Features of SpookySwap

1. Token Swaps

SpookySwap allows users to trade any Fantom-compatible token using its AMM protocol. All trades occur in decentralized liquidity pools, which offer competitive slippage and low gas fees.
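To make the idea of pool-based pricing and slippage concrete, here is a minimal sketch of a constant-product AMM swap, the model popularized by Uniswap V2. It assumes the pool follows that model; the 0.2% default fee, the function names, and the reserve figures are illustrative assumptions, not values read from SpookySwap's contracts.

```python
def get_amount_out(amount_in: float, reserve_in: float, reserve_out: float,
                   fee: float = 0.002) -> float:
    """Tokens received for amount_in from a constant-product (x * y = k) pool."""
    if amount_in <= 0 or reserve_in <= 0 or reserve_out <= 0:
        raise ValueError("amounts and reserves must be positive")
    amount_in_after_fee = amount_in * (1 - fee)  # fee is an assumed parameter
    return amount_in_after_fee * reserve_out / (reserve_in + amount_in_after_fee)


def price_impact(amount_in: float, reserve_in: float, reserve_out: float,
                 fee: float = 0.002) -> float:
    """Fraction by which the execution price falls short of the pool's spot price."""
    spot_price = reserve_out / reserve_in
    execution_price = get_amount_out(amount_in, reserve_in, reserve_out, fee) / amount_in
    return 1 - execution_price / spot_price


# Hypothetical pool: 1,000,000 of token A against 500,000 of token B.
small_trade = price_impact(1_000, 1_000_000, 500_000)
large_trade = price_impact(100_000, 1_000_000, 500_000)
```

In this model, the larger the trade relative to the pool's reserves, the worse the execution price, which is why deep liquidity translates into lower slippage.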

2. Liquidity Pools and Yield Farming

By contributing tokens to liquidity pools, users receive LP tokens that can then be staked in SpookySwap Farms to earn $BOO, the platform’s governance and utility token.

3. Staking via xBOO

SpookySwap offers a single-sided staking solution. Users can stake $BOO to receive xBOO, which entitles them to a portion of trading fees generated on the platform.
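One common way to implement this kind of fee-sharing stake is share-based accounting: stakers receive shares of a pool, and fees added to the pool raise the value of every share. The sketch below assumes xBOO works along those lines; the `StakingPool` class and its method names are hypothetical and are not taken from SpookySwap's code.

```python
from dataclasses import dataclass


@dataclass
class StakingPool:
    """Toy share-based staking pool (illustrative model only)."""
    total_boo: float = 0.0     # BOO held by the pool
    total_shares: float = 0.0  # xBOO-style shares outstanding

    def stake(self, boo_amount: float) -> float:
        """Deposit BOO and receive shares at the current exchange rate."""
        if self.total_shares == 0 or self.total_boo == 0:
            shares = boo_amount  # first staker gets shares 1:1
        else:
            shares = boo_amount * self.total_shares / self.total_boo
        self.total_boo += boo_amount
        self.total_shares += shares
        return shares

    def add_fees(self, boo_amount: float) -> None:
        """Fees flow into the pool without minting shares, so each share is worth more."""
        self.total_boo += boo_amount

    def unstake(self, shares: float) -> float:
        """Burn shares and withdraw the matching portion of the pool."""
        boo_amount = shares * self.total_boo / self.total_shares
        self.total_boo -= boo_amount
        self.total_shares -= shares
        return boo_amount


pool = StakingPool()
alice_shares = pool.stake(100)       # Alice stakes 100 BOO
pool.add_fees(10)                    # trading fees arrive in the pool
bob_shares = pool.stake(110)         # Bob stakes after the fees landed
alice_boo = pool.unstake(alice_shares)
```

In this toy run, Alice withdraws more BOO than she deposited because the fees arrived while she held shares, while Bob's later deposit buys shares at the higher rate.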

4. Cross-Chain Swaps

The SpookySwap Bridge enables seamless movement of assets between Fantom and other networks like Ethereum, Arbitrum, BNB Chain, and Polygon — directly through the app interface.

Why SpookySwap Stands Out in 2025

Built on Fantom

Fantom's high-speed, scalable architecture allows SpookySwap to offer ultra-fast transactions and negligible gas fees — a major advantage over Ethereum-based DEXs.

Community-Led and Transparent

As a decentralized project, SpookySwap is governed by its community through $BOO token proposals and votes. It also maintains complete transparency via open-source smart contracts and community contributions.

Continuous Upgrades

SpookySwap has stayed relevant by consistently improving its UI/UX, upgrading its backend architecture, and supporting multichain integrations. These efforts have kept the platform at the forefront of DeFi innovation.

Getting Started with SpookySwap

Download a Web3 wallet (MetaMask or Rabby)

Add the Fantom network to your wallet

Transfer FTM tokens for gas and trading

Connect your wallet to SpookySwap

Swap tokens, provide liquidity, or stake — all in one interface

Learning Resources

To help users understand how decentralized exchanges work and how to safely use them, platforms like Binance Academy offer comprehensive guides. Learn more here: 📘 Binance Academy

SpookySwap FAQ

What is the $BOO token? $BOO is the native governance token of SpookySwap. It can be used for staking, farming rewards, and voting on proposals.

Is SpookySwap only on Fantom? While built on Fantom, SpookySwap supports multichain bridging, allowing users to move assets from Ethereum, Arbitrum, and other networks.

How do I earn rewards on SpookySwap? By providing liquidity and staking LP tokens in farms, or staking $BOO in the xBOO pool.

Is SpookySwap audited? Yes. SpookySwap’s smart contracts are open-source and have undergone audits. You can find more information on GitHub.

Final Thoughts

SpookySwap represents a mature, efficient, and community-driven DEX experience in 2025. Its position on the Fantom network gives it an edge in speed and cost, while its multichain features expand its relevance across DeFi ecosystems.

Whether you are a passive holder, active trader, or yield farmer, SpookySwap offers a comprehensive set of tools to help you grow your crypto portfolio — all within a secure and user-friendly environment.

2 notes


Text

How to Spot Cryptocurrency Scams

Cryptocurrency scams are easy to spot when you know what to look for. Legitimate cryptocurrencies have readily available disclosures with detailed information about the blockchain and associated tokens.

Read the White Paper

Cryptocurrencies go through a development process. Before this process, there is generally a document published, called a white paper, for the public to read. If it's a legitimate white paper, it clearly describes the protocols and blockchain, outlines the formulas, and explains how the entire network functions. Fake cryptocurrencies don't produce thoroughly written and researched white papers. The fakes are poorly written, with figures that don't add up.

If the white paper reads like a pitchbook and outlines how the funds will be used in a project, it is likely a scam or an ICO that should be registered with the Securities and Exchange Commission. If it isn't registered, it's best to ignore it and move on.

Identify Team Members

White papers should always spotlight the members and developers behind the cryptocurrency. There are cases in which an open-source crypto project might not have named developers, which is typical for open-source projects. Still, you can view most coding, comments, and discussions on GitHub or GitLab. Some projects use forums and applications, like Discord or Slack, for discussion. If you can't find any of these elements, and the white paper is rife with errors, stand down: it's likely a scam.

Beware of "Free" Items

Many cryptocurrency scams offer free coins or promise to “drop” coins into your wallet. Remind yourself that nothing is ever free, especially money and cryptocurrencies.

Scrutinize the Marketing

Legitimate blockchains and cryptocurrency projects tend to have humble beginnings and don't have the money to advertise and market themselves. Additionally, they won't post on social media pumping themselves up as the next best crypto—they'll talk about the legitimate issues they are trying to solve.

You might see cryptocurrency updates about blockchain developments or new security measures taken, but you should be wary of updates like "millions raised" or communications that appear to be more about money than about advances in the technology behind the crypto.

Legitimate businesses exist that use blockchain technology to provide services. They might have tokens used within their blockchains to pay transaction fees, but the advertising and marketing should appear professional-looking. Scammers also spend on celebrity endorsements and appearances and have all the information readily available on their websites. Legitimate businesses won't ask everyone to buy their crypto; they will advertise their blockchain-based services.

Where there is a lot of hype, there is usually something to be cautious of.

#crypto#scam#online scams#scammers#scam alert#scam warning#blockchain#cryptotrading#cryptocurrency#online crime

3 notes


Text

Wake up girlies, it's time to return to the frontline!

Guess who has insomniaaaa! 🤗💕💕

A month of cramps, nausea, increasingly worse insomnia (but a strangely good mood) has led me down the path once again. I caught wind of some strange "gfl2" thing and after being struck with nostalgia, I grabbed bluestacks and fell into hell once more. I'd deleted gfl off my phone simply because it took too much space but now that it's on my computer, it's become DANGEROUS...! Github and clip studio up front with logistics running forever in the background. Yes, the ideal working experience.

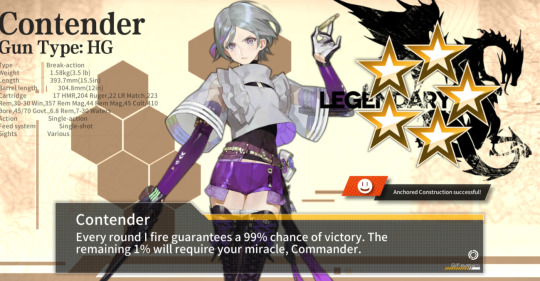

Anyhow, everyone say hello to Contender.

I've been making more progress in these past two days than I had in the entire two months I spent with my new account because I realized how to (partially) Not Be An Idiot. Turns out there's a thing called "anchored construction" and you can get some pretty nice units (eventually) if you realize it exists! Wow! I got the girly and now I'm working on grabbing Carcano because she is pretty but also insane skillz.

Also, there's a discounted gacha running right now and that means I can finally get over my mental block and spend tokens... I was surprised at how easy it's been to acquire them, so I've just been shilling em out. My dorm was totally bare until now. I'm sorry, everynyan...

As for actual gameplay, I finally made it past 2-6. It might seem like a simple thing to most but I was yet again, being an idiot. I was under the impression that I HAD to have dupes of the girls to dummy link them when I actually was swimming in dummy cores 🤦🏽♂️ What's wrong with me... Well, I jumped over that hurdle, blasted through the emergency missions, and am finishing chapter 3. The first parts arent so bad when you learn how to read! 😃😃😃

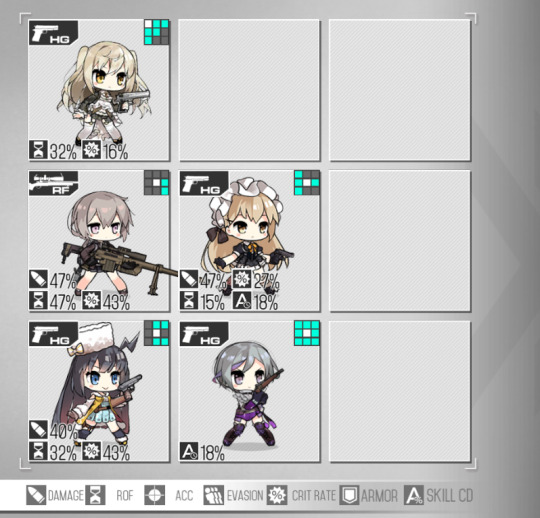

First echelon is good, it's the standard one that everyone seems to use to clear the early game. This second one is a WIP mess that I'm readying for night missions. You see, I'm really hurting for half-decent SMGs and rifles, the second one there is kinda lacking in defense/fire power... I wanna create a decent second echelon and night mission groupie but I gotta figure out what units to invest in. I hope for Carcano soon. She is cute. Also, feel free to berate me for my bad decisions and suggest decent compositions. I am so lacking in SMGs that don't immediately explode (mpk you are so cute but so stupid). I'm currently looking at friends' compositions to figure out what formations work...

In completely different news and only further proving how dense I am, I only recently learned that Girl of the Bakehouse was related to GFL. I've had my eye on Reverse Collapse for a while now since it's a remake (of a remake?! I didn't play the previous one) of a visual novel I played in 2012 or so. The original vn was made in 2009 in like Kirikiri script and I was a young lad very fixated on all things with girls and guns (Gunslinger Girl was and still is a favorite of mine, I would've read it one summer at my Uncle's out on the front porch). There's an English patch now, but back then it was only in Chinese so I had to use text extracting and image translators, looking up the characters as I went. I got a cup of cocoa and opened up a patched version last night for old times' sake. It's clearly a doujin work with those rough edges but it's so damn confident in its presentation you can't not get swept up in the presentation. The sound work makes it very immersive. I highly recommend reading it if you want a solid, emotional war story. Looking at the sepia soaked sketches, down-to-earth narrative, dense worldbuilding and general war otaku sentimentality... It really predicted a lot of my tastes, huh... 😅

Behold, teh wolfguy...

Back to work. Logistics still running. I can and WILL continue being stupid. The nostalgia is really strong, I'm tempted to draw fanart despite the sour memories of the past. Again, please berate me and tell me of your team compositions. I think my ID is 772030 but I promise you, I won't be any good on teh battlefield 😇 this machine runs off hopes and dreams, not realities!

#chipco on the frontline#gfl#girl's frontline#sorry im such an idiot#also what the hell gfl 2#i will be seated#girl of the bakehouse#game diary

3 notes


Text

Unlocking New Horizons: 5 Powerful Ways to Use Claude 4

The future of AI is here. Anthropic's highly anticipated Claude 4 models (Opus 4 and Sonnet 4), released in May 2025, have fundamentally shifted the landscape of what large language models are capable of. Moving beyond impressive text generation, Claude 4 represents a significant leap forward in reasoning, coding, autonomous agent capabilities, and deep contextual understanding.

These aren't just incremental upgrades; Claude 4 introduces "extended thinking" and robust tool-use, enabling it to tackle complex, long-running tasks that were previously out of reach for AI. Whether you're a developer, researcher, content creator, or strategist, understanding how to leverage these new powers can unlock unprecedented levels of productivity and insight.

Here are 5 powerful ways you can put Claude 4 to work right now:

1. Revolutionizing Software Development and Debugging

Claude 4 Opus has quickly earned the title of the "world's best coding model," and for good reason. It’s built for the demands of real-world software engineering, moving far beyond simple code snippets.

How it works: Claude 4 can process entire codebases, understand complex multi-file changes, and maintain sustained performance over hours of work. Its "extended thinking" allows it to plan and execute multi-step coding tasks, debug intricate errors by analyzing stack traces, and even refactor large sections of code with precision. Integrations with IDEs like VS Code and JetBrains, and tools like GitHub Actions, make it a true pair programmer.

Why it's powerful: Developers can dramatically reduce time spent on tedious debugging, boilerplate generation, or complex refactoring. Claude 4 enables the automation of entire coding workflows, accelerating development cycles and freeing up engineers for higher-level architectural and design challenges. Its ability to work continuously for several hours on a task is a game-changer for long-running agentic coding projects.

Examples: Asking Claude 4 to update an entire library across multiple files in a complex repository, generating comprehensive unit tests for a new module, or identifying and fixing subtle performance bottlenecks in a large-scale application.

2. Deep Research and Information Synthesis at Scale

The ability to process vast amounts of information has always been a hallmark of advanced LLMs, and Claude 4 pushes this boundary further with its impressive 200K token context window and new "memory files" capability.

How it works: You can feed Claude 4 entire books, dozens of research papers, extensive legal documents, or years of financial reports. It can then not only summarize individual sources but, crucially, synthesize insights across them, identify conflicting data, and draw nuanced conclusions. Its new "memory files" allow it to extract and save key facts over time, building a tacit knowledge base for ongoing projects.

Why it's powerful: This transforms qualitative and quantitative research. Researchers can quickly identify critical patterns, lawyers can analyze massive discovery documents with unprecedented speed, and business analysts can distill actionable insights from overwhelming market data. The memory feature is vital for long-term projects where context retention is key.

Examples: Uploading a collection of scientific papers on a specific disease and asking Claude 4 to identify emerging therapeutic targets and potential side effects across all studies; feeding it competitor annual reports and asking for a comparative SWOT analysis over five years; or using it to build a comprehensive knowledge base about a new regulatory framework.
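Before feeding a large collection into a 200K-token window, it helps to estimate whether it will fit. The sketch below is a rough planning aid under stated assumptions: the four-characters-per-token ratio is a common rule of thumb rather than the model's actual tokenizer, and the 8,000-token reply reserve and the `batch_documents` helper are hypothetical choices for this example.

```python
import math

CONTEXT_WINDOW_TOKENS = 200_000  # context window size cited above
CHARS_PER_TOKEN = 4              # assumption: rough heuristic, not a real tokenizer
RESERVED_FOR_REPLY = 8_000       # assumption: room left for the model's answer


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def batch_documents(documents: list[str],
                    budget: int = CONTEXT_WINDOW_TOKENS - RESERVED_FOR_REPLY) -> list[list[int]]:
    """Greedily group document indexes so each group's estimate stays within budget.

    A single document larger than the budget still gets its own group and
    would need to be split before use.
    """
    batches: list[list[int]] = []
    current: list[int] = []
    used = 0
    for index, text in enumerate(documents):
        cost = estimate_tokens(text)
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(index)
        used += cost
    if current:
        batches.append(current)
    return batches


# Three stand-in "papers" of 400K, 300K and 200K characters.
papers = ["a" * 400_000, "b" * 300_000, "c" * 200_000]
batches = batch_documents(papers)
print(batches)  # [[0, 1], [2]]
```

For real workloads, a tokenizer-based count would replace the heuristic.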

3. Advanced Document Understanding & Structured Data Extraction

Beyond simple OCR (Optical Character Recognition), Claude 4 excels at Intelligent Document Processing (IDP), understanding complex layouts and extracting structured data even from challenging formats.

How it works: Claude 4 can accurately process PDFs, scanned images, tables, and even mathematical equations. Its advanced vision capabilities combined with its reasoning allow it to not just read text, but to understand the context of information within a document. This makes it highly effective for extracting key-value pairs, table data, and specific entities.

Why it's powerful: This is a boon for automating workflows in industries heavily reliant on documents like finance, healthcare, and legal. It significantly reduces manual data entry, improves accuracy, and speeds up processing times for invoices, contracts, medical records, and more. Its performance on tables and equations makes it particularly valuable for technical and financial data.

Examples: Automatically extracting specific line items and totals from thousands of varied invoices; converting scanned legal contracts into structured data for clause analysis; or digitizing and structuring data from complex scientific papers that include charts and formulas.

4. Building Highly Autonomous AI Agents

The "extended thinking" and parallel tool use capabilities in Claude 4 are specifically designed to power the next generation of AI agents capable of multi-step workflows.

How it works: Claude 4 can plan a series of actions, execute them (e.g., using a web search tool, a code interpreter, or interacting with an API), evaluate the results, and then adjust its strategy – repeating this loop thousands of times if necessary. It can even use multiple tools simultaneously (parallel tool use), accelerating complex processes.

Why it's powerful: This moves AI from a reactive assistant to a proactive collaborator. Claude 4 can manage entire projects, orchestrate cross-functional tasks, conduct in-depth research across the internet, and complete multi-stage assignments with minimal human oversight. It's the beginning of truly "agentic" AI.

Examples: An AI agent powered by Claude 4 autonomously researching a market, generating a business plan, and then outlining a marketing campaign, using web search, data analysis tools, and internal company databases; a customer support agent capable of not just answering questions but also initiating complex troubleshooting steps, accessing internal systems, and escalating issues.

5. Nuanced Content Creation & Strategic Communication

Claude 4's enhanced reasoning and commitment to Constitutional AI allow for the creation of highly nuanced, ethically aligned, and contextually rich content and communications.

How it works: The model's refined understanding allows it to maintain a consistent tone and style over long outputs, adhere strictly to complex brand guidelines, and navigate sensitive topics with greater care. Its "extended thinking" also means it can develop more coherent and logical arguments for strategic documents.

Why it's powerful: This elevates content creation and strategic planning. Businesses can generate high-quality marketing materials, detailed reports, or persuasive proposals that resonate deeply with specific audiences while minimizing the risk of miscommunication or ethical missteps. It's ideal for crafting communications that require significant thought and precision.

Examples: Drafting a comprehensive policy document that balances multiple stakeholder interests and adheres to specific legal and ethical frameworks; generating a multi-channel marketing campaign script that adapts perfectly to different cultural nuances; or crafting a compelling long-form article that synthesizes complex ideas into an engaging narrative.

Claude 4 is more than just a powerful chatbot; it's a versatile foundation for intelligent automation and deeper understanding. By embracing its capabilities in coding, research, document processing, agent building, and content creation, professionals across industries can unlock new levels of efficiency, insight, and innovation. The era of the true AI collaborator has arrived.


Why Generative AI Platform Development is the Next Big Thing in Software Engineering and Product Innovation

In just a few years, generative AI has moved from being an experimental technology to a transformative force that’s reshaping industries. Its ability to create text, images, code, audio, and even entire virtual environments is redefining the limits of what software can do. But the real paradigm shift lies not just in using generative AI—but in building platforms powered by it.

This shift marks the dawn of a new era in software engineering and product innovation. Here's why generative AI platform development is the next big thing.

1. From Tools to Ecosystems: The Rise of Generative AI Platforms

Generative AI tools like ChatGPT, Midjourney, and GitHub Copilot have already proven their value in isolated use cases. However, the real potential emerges when these capabilities are embedded into broader ecosystems—platforms that allow developers, businesses, and users to build on top of generative models.

Much like cloud computing ushered in the era of scalable services, generative AI platforms are enabling:

Custom model training and fine-tuning

Integration with business workflows

Extensible APIs for building apps and services

Multimodal interaction (text, vision, speech, code)

These platforms don’t just offer one feature—they offer the infrastructure to reimagine entire categories of products.

2. Accelerated Product Development

Software engineers are increasingly adopting generative AI to speed up development cycles. Platforms that include AI coding assistants, auto-documentation tools, and test generation can:

Reduce boilerplate work

Identify bugs faster

Help onboard new developers

Enable rapid prototyping with AI-generated code or designs

Imagine a product team that can go from concept to MVP in days instead of months. This compression of the innovation timeline is game-changing—especially in competitive markets.

3. A New UX Paradigm: Conversational and Adaptive Interfaces

Traditional user interfaces are built around buttons, forms, and static flows. Generative AI platforms enable a new kind of UX—one that’s:

Conversational: Users interact through natural language

Context-aware: AI adapts to user behavior and preferences

Multimodal: Inputs and outputs span voice, image, text, video

This empowers entirely new product categories, from AI copilots in enterprise software to virtual AI assistants in healthcare, education, and customer service.

4. Customization at Scale

Generative AI platforms empower companies to deliver hyper-personalized experiences at scale. For example:

E-commerce platforms can generate product descriptions tailored to individual customer profiles.

Marketing tools can draft emails or campaigns in a brand’s tone of voice for specific segments.

Education platforms can create adaptive learning content for each student.

This ability to generate tailored outputs on-demand is a leap forward from static content systems.

5. Empowering Developers and Non-Technical Users Alike

Low-code and no-code platforms are being transformed by generative AI. Now, business users can describe what they want in plain language, and AI will build or configure parts of the application for them.

Meanwhile, developers get "superpowers"—they can focus on solving higher-order problems while AI handles routine or repetitive coding tasks. This dual benefit is making product development more democratic and efficient.

6. New Business Models and Monetization Opportunities

Generative AI platforms open doors to new business models:

AI-as-a-Service: Charge for API access or custom model hosting

Marketplace ecosystems: Sell AI-generated templates, prompts, or plug-ins

Usage-based pricing: Monetize based on token or image generation volume

Vertical-specific solutions: Offer industry-tailored generative platforms (e.g., legal, finance, design)

This flexibility allows companies to innovate not only on the tech front but also on how they deliver and capture value.

Conclusion

Generative AI platform development isn’t just another tech trend. It’s a foundational shift—comparable to the rise of the internet or cloud computing. By building platforms, not just applications, forward-looking companies are positioning themselves to lead the next wave of product innovation.

For software engineers, product managers, and entrepreneurs, this is the moment to explore, experiment, and build. The tools are here. The models are mature. And the possibilities are nearly limitless.


What datasets train large language generation models?

Large language generation models, such as GPT (Generative Pre-trained Transformer), are trained on vast and diverse datasets comprising text from books, websites, articles, social media, forums, and more. The primary objective of using such large-scale datasets is to teach the model about grammar, facts, reasoning, conversation patterns, and context across multiple domains and languages.

A typical training dataset for a large language model includes data from sources like Wikipedia, Common Crawl (a regularly updated archive of web pages), OpenWebText (an open-source recreation of OpenAI’s WebText corpus, built from web pages linked on Reddit), Project Gutenberg (a collection of public domain books), academic papers, programming code (from repositories like GitHub), and dialogue from online platforms. The diversity and scale of these datasets help the model generalize knowledge across various topics, understand different writing styles, and respond effectively to complex queries.

Before training, the data is preprocessed to remove irrelevant, inappropriate, or low-quality content. Tokenization then converts the text into sequences of integer IDs that the model can process. Pretraining is typically self-supervised (the model learns to predict the next token from unlabeled text) and is often followed by supervised fine-tuning; both stages run on powerful hardware, usually GPUs or TPUs in distributed computing environments.
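To make the tokenization step concrete, here is a deliberately simple word-level sketch in Python. Production models use subword schemes such as byte-pair encoding rather than whole words, so treat `build_vocab` and `encode` as illustrative helpers, not as how any particular model does it.

```python
import re

TOKEN_PATTERN = r"\w+|[^\w\s]"   # words, or single punctuation marks

def build_vocab(corpus):
    """Assign an integer ID to every distinct token, in first-seen order."""
    vocab = {"<unk>": 0}
    for text in corpus:
        for tok in re.findall(TOKEN_PATTERN, text.lower()):
            vocab.setdefault(tok, len(vocab))
    return vocab

def encode(text, vocab):
    """Map text to token IDs; unseen tokens fall back to <unk>."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in re.findall(TOKEN_PATTERN, text.lower())]

vocab = build_vocab(["The cat sat.", "The dog sat!"])
ids = encode("The bird sat.", vocab)   # "bird" was never seen, so it maps to <unk>
```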

The quality and quantity of training data play a crucial role in a model’s performance. Biases present in the training data can influence the model’s outputs, which is why developers also implement techniques for bias detection and mitigation.

As the field of generative AI continues to evolve, researchers are exploring better dataset curation methods, ethical data sourcing, and domain-specific datasets to improve relevance and reduce hallucinations in AI-generated content.

To understand how these models work and how datasets influence their capabilities, many professionals are enrolling in a Generative AI certification course to gain hands-on experience and industry-relevant skills.


What Is API Fuzz Testing? Resilience, Security, and Zero-Day Defense

As digital infrastructures increasingly lean on APIs to drive microservices, connect ecosystems, and expose critical business logic, the attack surface grows with every endpoint added. Functional tests validate expected behavior. But what happens when your APIs are subjected to malformed requests, unexpected data types, or unknown user behaviors?

Enter API Fuzz Testing — an automated, adversarial testing approach designed not to affirm correctness but to uncover flaws, break assumptions, and expose the brittle edges of your application logic and security model.

What Is API Fuzz Testing?

API Fuzz Testing is a fault injection technique in which randomized, malformed, or deliberately malicious inputs are sent to API endpoints to uncover security vulnerabilities, crashes, unexpected behavior, or logical failures. The goal isn't validation — it's disruption. If your API fails gracefully, logs meaningfully, and maintains control under such chaos, it passes the fuzz test.

Unlike traditional negative testing, fuzzing doesn't rely on predefined inputs. It systematically mutates payloads and generates permutations far beyond human-designed test cases, often revealing issues that would otherwise remain dormant until exploited.

What Makes Fuzz Testing Critical for APIs?

APIs increasingly serve as front doors to critical data and systems. They are often public-facing, loosely coupled, and highly reusable — making them the perfect attack vector. Traditional security scans and unit tests can miss edge cases. API fuzzing acts as a synthetic adversary, testing how your API stands up to unexpected inputs, malformed calls, and constraint violations.

Real-World Impacts of Insufficient Input Validation:

Authentication bypass via token manipulation

DoS via payload bloating or recursion

Remote Code Execution via injection flaws

Data leakage from verbose error messages

Core Advantages of API Fuzz Testing

1. Discovery of Unknown Vulnerabilities (Zero-Days)

Fuzz testing excels at discovering the unknown unknowns. It doesn’t rely on known attack patterns or static code analysis rules; it uncovers logic errors, exception cascades, and systemic flaws that even seasoned developers and static analyzers might miss.

2. Enhanced API Security Assurance

APIs are prime targets for injection, deserialization, and parameter pollution attacks. Fuzzing stress-tests authentication flows, access control layers, and input sanitization — closing critical security gaps before attackers can exploit them.

3. Crash and Exception Detection

Fuzzers are designed to uncover runtime-level faults: segmentation faults, memory leaks, unhandled exceptions, or stack overflows that occur under malformed inputs. These are often precursors to more serious vulnerabilities.

4. Automation at Scale

Fuzz testing frameworks are inherently automated. With schema-aware fuzzers, you can generate hundreds of thousands of input permutations and test them against live endpoints — without writing individual test cases.

5. Integration with DevSecOps Pipelines

Modern fuzzers can integrate with CI/CD systems (e.g., Jenkins, GitHub Actions) and produce actionable defect reports. This enables shift-left security testing, making fuzzing a native part of the software delivery lifecycle.

Under the Hood: How API Fuzz Testing Works

Let’s break down the fuzzing lifecycle in a technical context:

1. Seed Corpus Definition

Start with a baseline of valid API requests (e.g., derived from OpenAPI specs, HAR files, or Postman collections). These are used to understand the structure of input.

2. Input Mutation / Generation

Fuzzers then generate variants:

Mutation-based fuzzing: Randomizes or mutates fields (e.g., type flipping, injection payloads, encoding anomalies).

Generation-based fuzzing: Constructs new requests from scratch based on API models.
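Mutation-based fuzzing can be sketched in plain Python as follows. The `NASTY` list, the `mutate` helper, and the sample request are hypothetical; a real fuzzer would draw on a much larger payload dictionary and understand nested schemas.

```python
import copy
import random

# Hypothetical hostile values a mutation-based fuzzer might substitute.
NASTY = [None, "", "A" * 10_000, -1, 2**63, "' OR 1=1 --", {"$gt": ""}, [[]], "\u0000"]

def mutate(seed, rng):
    """Return a copy of a valid request body with one field corrupted."""
    body = copy.deepcopy(seed)
    key = rng.choice(sorted(body))
    action = rng.choice(["replace", "drop", "type_flip"])
    if action == "replace":
        body[key] = rng.choice(NASTY)
    elif action == "drop":
        del body[key]
    else:  # type flip: numbers become strings, everything else becomes a number
        body[key] = str(body[key]) if isinstance(body[key], (int, float)) else 0
    return body

def generate_cases(seed, n, rng_seed=0):
    rng = random.Random(rng_seed)   # fixed seed makes a failing case reproducible
    return [mutate(seed, rng) for _ in range(n)]

seed_request = {"user_id": 42, "email": "a@example.com", "amount": 9.99}
cases = generate_cases(seed_request, 50)
```

Seeding the random generator matters in practice: when a mutated request crashes the API, you want to be able to regenerate exactly the same input.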

3. Instrumentation & Execution

Requests are sent to the API endpoints. Smart fuzzers hook into runtime environments (or use black-box observation) to detect:

HTTP response anomalies

Stack traces or crash logs

Performance regressions (e.g., timeouts, DoS)
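For the black-box case, the three signals listed above can be checked with a small classifier over each response. This is a sketch under assumptions: the `Observation` record, the marker strings, and the 5-second threshold are illustrative choices, not part of any specific fuzzing tool.

```python
from dataclasses import dataclass

@dataclass
class Observation:
    status: int         # HTTP status code returned by the API
    body: str           # response body as text
    elapsed_s: float    # wall-clock time for the request

# Hypothetical markers; tune them to the stack under test.
TRACE_MARKERS = ("Traceback (most recent call last)", "Exception in thread", "panic:")

def classify(obs, timeout_s=5.0):
    """Return the findings for one fuzzed request (empty list means no anomaly)."""
    findings = []
    if obs.status >= 500:
        findings.append("server_error")        # unhandled failure, not a clean rejection
    if any(marker in obs.body for marker in TRACE_MARKERS):
        findings.append("stack_trace_leak")    # internals exposed to the client
    if obs.elapsed_s >= timeout_s:
        findings.append("slow_response")       # possible denial-of-service vector
    return findings
```

A 4xx response to a malformed request is the healthy outcome, which is why only 5xx codes are flagged here.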

4. Feedback Loop

Coverage-guided fuzzers (e.g., AFL-style) use instrumentation to identify which mutations explore new code paths, continuously refining input generation for maximum path discovery.
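The feedback loop is easier to see in a toy example. In the Python sketch below, the target reports which branches it reached by hand; real coverage-guided fuzzers obtain this from compiler or runtime instrumentation. The `target` function, its branch IDs, and the planted bug are all made up for illustration.

```python
import random

def target(data, cov):
    """Toy parser under test; `cov` collects the IDs of branches reached."""
    cov.add("start")
    if len(data) > 0 and data[0] == ord("F"):
        cov.add("F")
        if len(data) > 1 and data[1] == ord("U"):
            cov.add("FU")
            if len(data) > 2 and data[2] == ord("Z"):
                cov.add("FUZ")
                raise ValueError("crash: reached the buggy branch")

def fuzz(seed=b"AAA", iterations=200_000, rng_seed=1):
    rng = random.Random(rng_seed)
    corpus, seen = [seed], set()
    for _ in range(iterations):
        data = bytearray(rng.choice(corpus))
        data[rng.randrange(len(data))] = rng.randrange(256)   # mutate one byte
        cov = set()
        try:
            target(bytes(data), cov)
        except ValueError:
            return bytes(data)                # crashing input found
        if not cov <= seen:                   # new branch reached: keep this input
            seen |= cov
            corpus.append(bytes(data))
    return None

crash_input = fuzz()
```

Guessing all three bytes at once is a one-in-millions event per attempt, but keeping every input that reaches a new branch lets the fuzzer solve the condition one byte at a time.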


Best Practices for Implementing API Fuzz Testing

Always Use a Staging Environment: Fuzz testing is disruptive by design. Don’t run it against production APIs unless you want unplanned downtime.

Combine with Observability: Use APM tools, structured logging, and trace correlation to pinpoint the root cause of crashes or regressions triggered by fuzz inputs.

Target High-Risk Endpoints First: Prioritize fuzzing around areas handling authentication, file uploads, user input, or third-party integrations.

Maintain Your API Contracts: A well-defined OpenAPI spec enhances fuzzing accuracy and lowers the rate of false positives.

Integrate Early, Test Continuously: Make fuzzing a standard part of your CI/CD strategy, not a one-time pen test.

Final Thoughts

API fuzz testing is not just a security enhancement — it’s a resilience discipline. It helps uncover deep systemic weaknesses, builds defensive depth, and prepares your application infrastructure for the unexpected.

In a world where APIs drive customer experiences, partner integrations, and internal operations, you can’t afford not to fuzz.

Fortify Your APIs with Testrig Technologies

At Testrig Technologies, a software testing company, we go beyond traditional QA. Our engineers blend schema-aware fuzzing, intelligent automation, and security-first test design to help enterprises build resilient, attack-resistant APIs.

Want to ensure your APIs don’t just function — but survive chaos?


Authenticator App on a New Phone: How to Securely Transfer Your 2FA Credentials During a Phone Change

In an era where cybersecurity is a top priority for professionals and organizations alike, authenticator apps have become a fundamental layer of account protection. These apps—such as Google Authenticator, Microsoft Authenticator, or Authy—enable two-factor authentication (2FA) through time-based one-time passcodes (TOTP), making unauthorized access significantly harder.

But what happens when you change your smartphone? Whether you’re upgrading your device or replacing a lost or damaged phone, knowing how to set up your authenticator app on a new phone is critical. For professionals managing multiple secure accounts, a seamless authenticator app phone switch is essential to avoid disruptions or permanent lockouts.

In this guide, we’ll walk you through the steps for transferring your authenticator app to a new phone—both with and without access to the old device—and provide expert-level best practices for handling 2FA credentials during a phone transition.

Why Authenticator Apps Matter

Authenticator apps generate 6-digit codes that refresh every 30 seconds and are used alongside your username and password to access sensitive accounts. They are widely used for:

Enterprise cloud services (Google Workspace, Microsoft 365)

Developer platforms (GitHub, AWS, Azure)

Financial and banking apps

Email and social media accounts

For IT professionals, developers, and digital entrepreneurs, keeping these codes accessible and secure during a phone change is non-negotiable.
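The 6-digit, 30-second codes mentioned above are typically produced with the TOTP algorithm standardized in RFC 6238. Here is a compact Python sketch using only the standard library; the function name `totp` and its defaults are choices made for this example, and the secret is the published RFC test value, never one to use for a real account.

```python
import base64
import hashlib
import hmac
import struct
import time

def totp(secret_b32, for_time=None, step=30, digits=6):
    """Compute a TOTP code (RFC 6238, HMAC-SHA1) from a Base32 shared secret."""
    padded = secret_b32.upper() + "=" * (-len(secret_b32) % 8)   # restore stripped padding
    key = base64.b32decode(padded)
    now = time.time() if for_time is None else for_time
    counter = int(now // step)                  # number of time windows since the Unix epoch
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                     # dynamic truncation (RFC 4226)
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10**digits).zfill(digits)

# Secret from the RFC 6238 test vectors: ASCII "12345678901234567890" in Base32.
RFC_SECRET = base64.b32encode(b"12345678901234567890").decode()
code_at_59s = totp(RFC_SECRET, for_time=59)
```

Because the code depends only on the shared secret and the clock, any device holding the secret produces the same codes. That is exactly why moving the secret to the new phone is all a transfer needs to do.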

Preparing for a Phone Switch: What to Do Beforehand

If you still have access to your old device, it’s always best to prepare before making the switch. A proactive approach simplifies the authenticator app phone switch and ensures continuity.

Checklist Before Migrating:

✅ Install the same authenticator app on your new device

✅ Ensure your old device is unlocked and functional

✅ Check if your authenticator app supports export/import (e.g., Google Authenticator does)

✅ Back up recovery codes or store them securely in a password manager

Let’s now dive into the detailed steps.

How to Transfer Your Authenticator App to a New Phone (With Access to the Old Phone)

Step 1: Install Authenticator App on the New Phone

Download your preferred app from the Google Play Store or Apple App Store. Make sure it’s the official version published by the provider (Google LLC, Microsoft Corporation, etc.).

Step 2: Export Accounts From Old Phone

For Google Authenticator:

Open the app on your old phone.

Tap the three-dot menu > Transfer accounts > Export accounts.

Authenticate with your device credentials.

Select the accounts you want to move.

A QR code will be generated.

For Microsoft Authenticator or Authy:

These apps typically offer account synchronization through cloud backup, making the transfer process even easier. Sign in on the new device using the same account credentials to restore your tokens.

Step 3: Import Accounts on the New Phone

On your new device:

Open the authenticator app.

Choose Import accounts or Scan QR code.

Use the phone camera to scan the QR code generated on your old phone.

After successful import, test one or more logins to verify that 2FA works correctly.

Step 4: Deactivate Old Device

Once confirmed:

Remove or reset the app on the old phone.

Log out or delete tokens to prevent unauthorized use.

Alternatively, perform a factory reset if you are disposing of or selling the old device.

How to Set Up an Authenticator App on a New Phone Without the Old Phone

Losing your old phone complicates the authenticator app phone switch, but it’s still possible to regain access. This process involves recovering individual 2FA-enabled accounts and re-enabling the authenticator manually.

Step 1: Install Authenticator App on New Phone

Choose and install the same authenticator app you used previously (Google Authenticator, Authy, etc.).

Step 2: Recover Each Account

For each service where you’ve enabled 2FA:

Go to the login page and click “Can’t access your 2FA device?” or a similar option.

Use backup recovery codes (if previously saved) to log in.

If no backup codes are available, follow the account recovery process (usually email or identity verification).

Once logged in, disable 2FA, then re-enable it by scanning a new QR code using the authenticator app on your new phone.
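The enrollment QR code a service shows when you re-enable 2FA usually just encodes an `otpauth://` URI carrying the shared secret. The Python sketch below parses one; the example URI uses a made-up account and a commonly used demo secret, and `parse_otpauth` is a hypothetical helper name.

```python
from urllib.parse import urlparse, parse_qs, unquote

def parse_otpauth(uri):
    """Extract the enrollment parameters from an otpauth:// TOTP URI."""
    parts = urlparse(uri)
    if parts.scheme != "otpauth" or parts.netloc != "totp":
        raise ValueError("not a TOTP enrollment URI")
    query = {k: v[0] for k, v in parse_qs(parts.query).items()}
    return {
        "label": unquote(parts.path.lstrip("/")),
        "secret": query["secret"],                 # Base32 shared secret
        "issuer": query.get("issuer"),
        "digits": int(query.get("digits", 6)),     # defaults match common practice
        "period": int(query.get("period", 30)),
    }

example = "otpauth://totp/Example:alice%40example.com?secret=JBSWY3DPEHPK3PXP&issuer=Example"
info = parse_otpauth(example)
```

Anyone who can read that QR code can generate your codes, so treat a screenshot of it as carefully as you would a password.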

💡 Pro tip: Prioritize access to critical accounts like your primary email, password manager, and cloud service logins first.

What If You Used Authy or Microsoft Authenticator?

Some apps offer cloud-based sync, making the authenticator app phone switch far more seamless.

Authy allows account sync across multiple devices (with PIN or biometric protection).

Microsoft Authenticator can back up to iCloud (iOS) or your Microsoft account (Android).

If you previously enabled cloud backup:

Log in on your new phone using the same credentials.

Restore your token list automatically.

Best Practices for Professionals Handling Authenticator App Migration

Changing devices should never compromise your security posture. Here are expert recommendations for managing the move to a new phone efficiently:

1. Use a Password Manager

Many password managers (like 1Password, Bitwarden, and LastPass) now support integrated 2FA tokens, allowing secure storage and syncing of TOTP codes.

2. Save Backup Codes Offline

When enabling 2FA for any service, you're usually offered backup or recovery codes. Save these in an encrypted file or secure offline location. Never store them in plain text on cloud services.

3. Regularly Audit 2FA Accounts

Periodically:

Remove unused tokens

Update recovery information (email/phone)

Verify access to backup codes

This helps reduce risks during device transitions.

4. Avoid Using One Phone for All Authentication

For added security, professionals often use a dedicated device (e.g., a secure tablet or secondary phone) solely for 2FA apps.

Common Pitfalls to Avoid

❌ Failing to back up recovery codes before device reset

❌ Not verifying all logins after transfer

❌ Using third-party apps with poor security reputations

❌ Relying on SMS-based 2FA only (which is less secure than app-based)

Avoiding these mistakes can make the authenticator app phone switch much smoother.

Conclusion

For professionals and security-conscious users, changing smartphones is more than a simple upgrade; it’s a sensitive process that requires safeguarding access to critical accounts. Whether you're planning ahead or recovering from the loss of your old phone, understanding how to manage your authenticator app setup on a new phone ensures that you maintain full control of your digital footprint.

From exporting tokens and using secure backups to recovering access without your previous device, the strategies covered here can help make your authenticator app phone switch as seamless and secure as possible.


How to Evaluate a Project Before Joining Its Crypto Presale

As blockchain innovation continues to expand in 2025, the number of new crypto projects entering the space has skyrocketed. Many of these initiatives launch through presales—a method that gives early supporters the chance to invest in tokens before they’re listed on public exchanges. While the potential rewards are high, the risks are just as significant. That’s why understanding how to evaluate a project before joining its presale is more important than ever.

Whether you’re looking for the best crypto presales of 2025 or evaluating presales of crypto wallet projects that offer secure storage, conducting proper due diligence is the key to smart investing.

What Is a Crypto Presale?

A crypto presale is an early-stage token sale that occurs before a project's Initial Coin Offering (ICO) or public launch. Investors who participate in presales typically gain access to tokens at discounted rates, often in exchange for providing the initial capital that helps the project grow. While presales can be highly profitable, they can also attract low-quality or short-lived projects, making evaluation essential.

Step-by-Step Guide to Evaluating a Crypto Presale Project

1. Read and Analyze the Whitepaper

The whitepaper is a project's foundational document. It should clearly outline the project's purpose, technology, use case, roadmap, and tokenomics. Pay close attention to how the project plans to deliver real-world value.

Ask:

Is the problem well-defined?

Does the solution leverage blockchain in a meaningful way?

Are the goals realistic and time-bound?

A well-written whitepaper signals a serious and transparent team.

2. Scrutinize the Tokenomics

Tokenomics refers to how a project structures, distributes, and uses its tokens. A sound token model limits inflation, encourages user participation, and rewards long-term holders.

Key elements to evaluate:

Total supply and circulation plans

Token allocation (team, investors, public)

Lock-up or vesting periods

Utility within the ecosystem

This is especially important for anyone researching the best crypto presales of 2025, as tokenomics directly affects a project’s long-term value.
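Lock-up and vesting terms are easier to judge once you turn them into numbers. The Python sketch below estimates how many tokens are unlocked at a given month, assuming vesting accrues linearly from launch and nothing is released before the cliff. The allocation table is entirely hypothetical; plug in the figures from the whitepaper you are evaluating.

```python
def vested_amount(total, months_elapsed, cliff_months, vesting_months):
    """Tokens unlocked so far: nothing before the cliff, then linear accrual."""
    if months_elapsed < cliff_months:
        return 0
    if months_elapsed >= vesting_months:
        return total
    return total * months_elapsed // vesting_months

def circulating_supply(allocations, months_elapsed):
    """Sum unlocked tokens across allocation buckets, each with its own schedule."""
    return sum(
        vested_amount(a["tokens"], months_elapsed, a["cliff"], a["vesting"])
        for a in allocations
    )

# Hypothetical allocation table for a 1,000,000,000-token supply.
ALLOCATIONS = [
    {"name": "public sale", "tokens": 400_000_000, "cliff": 0, "vesting": 0},
    {"name": "team", "tokens": 200_000_000, "cliff": 12, "vesting": 36},
    {"name": "investors", "tokens": 400_000_000, "cliff": 6, "vesting": 24},
]

supply_at_month_6 = circulating_supply(ALLOCATIONS, 6)
```

A sharp jump in circulating supply at a cliff date is worth noting, since large unlocks can put selling pressure on the price.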

3. Check Development Activity

A working product, MVP (Minimum Viable Product), or active development on platforms like GitHub is a great sign. It shows the team is committed to building rather than just marketing.

Even if the project is in its early stages, regular updates and visible progress help build trust and legitimacy.

4. Assess Community Strength

The strength of a project’s community can often reflect its long-term sustainability. Explore platforms like Telegram, X (formerly Twitter), Reddit, or Discord to evaluate engagement and sentiment.

Indicators of a strong community:

Transparent communication from the team

Active discussions and support

Organic growth and enthusiasm

5. Review the Team and Advisory Board

Although some teams opt for partial anonymity, it’s generally safer to invest in projects with publicly verifiable team members. Look into their background, previous experience, and involvement in blockchain or tech sectors.

Even without specific names, transparency around team structure and roles is essential.

6. Security and Audits

Security should never be overlooked. For wallet-focused presale projects, where token security and storage functionality are core, it’s critical that the project follows best practices and ideally undergoes third-party smart contract audits.

Ask:

Have the contracts been audited?

Are audit results available to the public?

Is the wallet or protocol designed with user safety in mind?

Red Flags to Watch Out For

Vague promises or exaggerated claims (e.g., "guaranteed 1000% returns")

Lack of a clear use case or weak technical documentation

No transparency about fund allocation

Inactive or ghosted community channels

Any of these could signal a lack of planning or intention to deliver.

Final Thoughts

Getting in early on a promising crypto project can be extremely rewarding, especially if you identify one of the best crypto presales of 2025 before it gains mainstream attention. Similarly, tokens tied to wallet innovations can offer functional utility alongside financial upside.


CircleCI is a continuous integration and delivery platform that helps organizations automate application delivery with faster builds and simplified pipeline maintenance. In particular, CircleCI lets teams automate testing as part of the CI/CD process and their daily workflow.

CircleCI jobs require secrets to pull code from a Git repo (e.g. a GitHub token) and to install that code in a newly defined environment (e.g. CSP credentials such as an access key). By integrating CircleCI with Akeyless Vault, you no longer need to keep hard-coded secrets such as API keys, tokens, certificates, and username and password credentials inside CircleCI jobs.

With an Akeyless Vault integration, Akeyless acts as a centralized secrets management platform that provisions secrets into CircleCI jobs by injecting Static or Dynamic Keys. In doing so, Akeyless leverages existing CircleCI functionality such as jobs, workflows, and contexts to securely fetch secrets into CircleCI pipelines.

Benefits of Using a Centralized Secrets Management Solution

With a centralized secrets management platform like Akeyless Vault, the secrets of CircleCI and all other DevOps tools are unified and secured. Such a platform makes it operationally simpler to maintain compliance and to generate secret access reports that show which secret was accessed, when, and from where.

Operation-Wise: For an organization with multiple DevOps tools, managing secrets becomes complicated and takes considerable effort. With a holistic secrets management platform like Akeyless, an organization maintains a single source of secrets that feeds different DevOps applications and workflows simultaneously.

Audit-Wise: With a consolidated audit of all application secrets directly through the Akeyless Vault, an organization can ensure audit compliance centrally instead of auditing multiple secret repositories.

Functionality-Wise: Most DevOps tools, including CircleCI, lack a Zero-Trust strategy for administering secrets. With a centralized secrets management solution like Akeyless, secrets are generated on the fly and fetched into CircleCI jobs Just-in-Time, which supports a Zero-Trust posture.

Security-Wise: Through Akeyless, CircleCI job secrets are provisioned through Static and Dynamic Keys instead of the default way of storing secrets as plain text. Additionally, with automatic expiry of SSH Certificates, Akeyless helps prevent abuse and theft of access privileges.

How to Fetch a Secret with Akeyless Vault in CircleCI

With benefits like ease of operation and enhanced security from Akeyless Vault's centralized secrets management platform, let’s go through the simple steps to fetch a secret in CircleCI.

Prerequisites

1. You need an existing repo that is followed by CircleCI (in our example it’s named TestRepo).

2. Sign in or create an account with Akeyless (it’s free) at https://console.akeyless.io/register

3. If you are a new user, create your first secret in Akeyless.

Configuration

1. Set up global configuration in your CircleCI project:

a. Go into Project Settings.

b. Go into Environment Variables to set up global configuration.

In our example, you would need to configure the following environment variables:

api_gateway_url

admin_email

admin_password

If you have your own Akeyless API Gateway set up, set the URL for the RESTful API; otherwise you can use the Akeyless Public API Gateway with the following URL: https://rest.akeyless.io

Similarly, set your admin_email and admin_password as environment variables.

2. Create or update your config.yml file for CircleCI (it should be in .circleci/config.yml).

3. The pipeline will be triggered and you'll be able to view your build.

To Sum Up

With the Akeyless Vault plugin for CircleCI, an organization can manage CircleCI secrets effortlessly, cutting down operational hassles while maintaining security. With the use of Static or Dynamic secrets, access is permission-controlled across multiple layers of a DevOps workflow.
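As a small companion to the configuration steps, here is a Python sketch of how a job script might read the three environment variables and fall back to the public gateway URL. It only validates configuration: the actual call that fetches the secret is left out because the post does not show it, and `load_akeyless_settings` is a hypothetical helper name.

```python
import os

REQUIRED = ("api_gateway_url", "admin_email", "admin_password")
PUBLIC_GATEWAY = "https://rest.akeyless.io"   # public gateway URL given in the post

def load_akeyless_settings(env=os.environ):
    """Read the three pipeline variables, defaulting the gateway to the public URL."""
    settings = {name: env.get(name, "") for name in REQUIRED}
    if not settings["api_gateway_url"]:
        settings["api_gateway_url"] = PUBLIC_GATEWAY
    missing = [name for name in REQUIRED if not settings[name]]
    if missing:
        raise RuntimeError("missing CircleCI environment variables: " + ", ".join(missing))
    return settings
```

Failing fast with a clear message when a variable is missing saves a confusing authentication error later in the pipeline.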


```markdown

Blockchain Ranking API: Revolutionizing the Way We Measure Success

In the ever-evolving landscape of blockchain technology, measuring the success and impact of different projects has become increasingly complex. With a multitude of metrics to consider, from transaction volume to community engagement, it's challenging for both developers and investors to get a clear picture of which projects are truly making a difference. Enter the Blockchain Ranking API—a powerful tool designed to simplify this process and provide a comprehensive overview of blockchain project performance.

The Need for Standardization

Before diving into the specifics of the Blockchain Ranking API, it's important to understand why standardization in blockchain metrics is so crucial. Each blockchain project operates on its own unique set of rules and protocols, making direct comparisons difficult. By providing a standardized framework for evaluating these projects, the Blockchain Ranking API helps eliminate confusion and provides a clear benchmark for assessing performance.

How It Works

The Blockchain Ranking API leverages a variety of data points to rank projects, including but not limited to:

Transaction Volume: A measure of the total number of transactions processed over a given period.

Community Engagement: Metrics such as social media mentions, forum activity, and GitHub contributions.

Developer Activity: An assessment of how active the development team is, including code commits and bug fixes.

Market Capitalization: The total value of all tokens or coins in circulation.

By aggregating these data points, the API generates a score that reflects the overall health and potential of each project. This score is then used to create a ranking system, allowing users to quickly identify top performers.

Benefits for Users

Whether you're a developer looking to build on a robust platform or an investor searching for promising projects, the Blockchain Ranking API offers several key benefits:

Time-Saving: No need to manually gather and analyze data from multiple sources.

Transparency: All data sources are clearly documented, ensuring transparency in the ranking process.

Actionable Insights: The rankings provide actionable insights that can inform strategic decisions.

Potential for Growth

As blockchain technology continues to evolve, the need for tools like the Blockchain Ranking API will only increase. By providing a standardized way to evaluate projects, this API not only helps current users make informed decisions but also sets the stage for future innovations in the space.

Conclusion

The Blockchain Ranking API represents a significant step forward in the world of blockchain evaluation. By offering a standardized, transparent, and comprehensive approach to ranking projects, it empowers users to make more informed decisions and drives the industry towards greater accountability and innovation. As we look to the future, tools like this will be essential in shaping the direction of blockchain technology.

What do you think about the importance of standardization in blockchain metrics? Share your thoughts and experiences in the comments below!

```
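The "How It Works" part of the Blockchain Ranking API post says the data points are aggregated into a score but does not say how. One plausible approach, shown purely as an assumption, is to normalize each metric and take a weighted sum. The weights, metric keys, and project figures below are all invented for illustration.

```python
def min_max(values):
    """Scale a list of numbers to the 0..1 range (all-equal inputs map to 0.0)."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]

# Hypothetical weights; the post does not say how the metrics are combined.
WEIGHTS = {"tx_volume": 0.3, "community": 0.2, "dev_activity": 0.3, "market_cap": 0.2}

def rank(projects):
    """Return project names ordered by weighted, normalized score (best first)."""
    names = list(projects)
    scores = dict.fromkeys(names, 0.0)
    for metric, weight in WEIGHTS.items():
        normalized = min_max([projects[n][metric] for n in names])
        for name, value in zip(names, normalized):
            scores[name] += weight * value
    return sorted(names, key=scores.get, reverse=True)

# Made-up numbers for three made-up projects.
PROJECTS = {
    "alpha": {"tx_volume": 900, "community": 50, "dev_activity": 120, "market_cap": 5e9},
    "beta": {"tx_volume": 300, "community": 80, "dev_activity": 200, "market_cap": 1e9},
    "gamma": {"tx_volume": 100, "community": 20, "dev_activity": 10, "market_cap": 2e8},
}
ranking = rank(PROJECTS)
```

Normalizing first keeps a metric with huge raw numbers, such as market capitalization, from drowning out the others.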
