#how to set up kubernetes


Session 5 Kubernetes 3 Node Cluster and Dashboard Installation and Confi...

In this exciting video tutorial, we dive into the world of Kubernetes, exploring how to set up a robust 3-node cluster and configure the Kuber


What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a continuous delivery (CD) tool that has become popular for deploying applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available after Intuit acquired Applatix in 2018. Applatix's founding developers, Hong Wang, Jesse Suen, and Alexander Matyushentsev, had open-sourced the Argo project in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version-controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Additional user-oriented documentation covers further features. If you want to upgrade Argo CD, refer to the upgrade guide. Developer-oriented documentation is available for anyone interested in building third-party integrations.

How it works

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

Kustomize applications

Helm charts

Jsonnet files

Plain directory of YAML/JSON manifests

Any custom configuration management tool that is set up as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of manifests at a Git commit.
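To make the Git-source idea concrete, here is a minimal sketch that builds an Argo CD `Application` resource as a Python dict and prints it as JSON, which `kubectl apply -f` also accepts. The field names follow the `argoproj.io/v1alpha1` Application resource; the application name, repository URL, and path are hypothetical placeholders. Setting `revision` to a branch or tag tracks changes, while a full commit SHA pins the manifests to that commit.

```python
import json


def make_application(name: str, repo_url: str, revision: str, path: str,
                     dest_namespace: str, auto_sync: bool = True) -> dict:
    """Build an Argo CD Application manifest as a plain dict.

    `revision` may be a branch, a tag, or a commit SHA.
    """
    app = {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "targetRevision": revision,
                "path": path,
            },
            "destination": {
                # In-cluster API server address.
                "server": "https://kubernetes.default.svc",
                "namespace": dest_namespace,
            },
        },
    }
    if auto_sync:
        # Automated sync: prune resources removed from Git, revert manual drift.
        app["spec"]["syncPolicy"] = {"automated": {"prune": True, "selfHeal": True}}
    return app


if __name__ == "__main__":
    manifest = make_application(
        name="guestbook",  # hypothetical example values
        repo_url="https://github.com/example-org/example-repo.git",
        revision="main",
        path="guestbook",
        dest_namespace="default",
    )
    print(json.dumps(manifest, indent=2))
```

Saving the output to a file and applying it in the `argocd` namespace would register the application. Treat it as a starting point, not a complete production configuration.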

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences and can sync the live state back to the desired target state, either manually or automatically. Any modifications made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
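The OutOfSync/sync cycle can be illustrated with a toy reconciliation loop. This is a simplified sketch, not Argo CD's implementation. Live state is a plain dict that maps hypothetical resource names to specs. Extra live resources are removed only when pruning is enabled, which loosely mirrors Argo CD's opt-in prune option.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for live cluster state: resource name -> spec dict."""
    resources: dict = field(default_factory=dict)


def diff_states(target: dict, live: dict) -> dict:
    """Return the resources that must be created, updated, or deleted."""
    return {
        "create": sorted(k for k in target if k not in live),
        "update": sorted(k for k in target if k in live and live[k] != target[k]),
        "delete": sorted(k for k in live if k not in target),
    }


def sync_status(target: dict, live: dict) -> str:
    """'Synced' when live matches target exactly, otherwise 'OutOfSync'."""
    changes = diff_states(target, live)
    return "OutOfSync" if any(changes.values()) else "Synced"


def sync(target: dict, cluster: Cluster, prune: bool = False) -> None:
    """Apply the target state; delete extra live resources only if prune=True."""
    changes = diff_states(target, cluster.resources)
    for name in changes["create"] + changes["update"]:
        cluster.resources[name] = dict(target[name])
    if prune:
        for name in changes["delete"]:
            del cluster.resources[name]


if __name__ == "__main__":
    target = {"deployment/web": {"replicas": 3}, "service/web": {"port": 80}}
    cluster = Cluster({"deployment/web": {"replicas": 1}, "configmap/old": {}})
    print(sync_status(target, cluster.resources))  # OutOfSync
    sync(target, cluster, prune=True)
    print(sync_status(target, cluster.resources))  # Synced
```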

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its responsibilities include the following:

Status reporting and application management

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication and auth delegation to external identity providers

Git webhook event listener/forwarder
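The sketch below illustrates the RBAC enforcement duty. It evaluates policy lines written in the CSV style Argo CD uses for its RBAC configuration: `p, <subject>, <resource>, <action>, <object>, <effect>` and `g, <user>, <role>`. It is a simplified, hypothetical evaluator that uses glob matching, not Argo CD's own enforcer. The roles, users, and project names are made up.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: developers may view every app and sync team-a apps,
# except team-a apps whose names start with "prod-".
POLICY = """
p, role:dev, applications, get, */*, allow
p, role:dev, applications, sync, team-a/*, allow
p, role:dev, applications, sync, team-a/prod-*, deny
g, alice, role:dev
"""


def parse_policy(csv_text: str):
    """Split policy CSV lines into permission rules and group mappings."""
    rules, groups = [], {}
    for line in csv_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        parts = [p.strip() for p in line.split(",")]
        if parts[0] == "p" and len(parts) == 6:
            # (subject, resource, action, object pattern, effect)
            rules.append(tuple(parts[1:]))
        elif parts[0] == "g" and len(parts) == 3:
            groups.setdefault(parts[1], set()).add(parts[2])
    return rules, groups


def enforce(rules, groups, user, resource, action, obj) -> bool:
    """Return True if `user` may perform `action` on `obj` of `resource`."""
    subjects = {user} | groups.get(user, set())  # no nested roles here
    matched = [
        effect for subj, res, act, pattern, effect in rules
        if subj in subjects and res == resource
        and fnmatchcase(action, act) and fnmatchcase(obj, pattern)
    ]
    # In this sketch an explicit deny beats any allow; no match means no access.
    return "allow" in matched and "deny" not in matched


if __name__ == "__main__":
    rules, groups = parse_policy(POLICY)
    print(enforce(rules, groups, "alice", "applications", "sync", "team-a/web"))
```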

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters
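The caching idea can be sketched as memoization keyed on exactly the inputs listed above. This is a hypothetical illustration, not the repository server's code. The `generate` argument stands in for whatever renders the manifests (Helm, Kustomize, and so on). The cache key is a hash of the repository URL, revision, path, and parameters.

```python
import hashlib
import json
from typing import Callable


class ManifestCache:
    """Toy memoizing layer in the spirit of the repository server's cache."""

    def __init__(self, generate: Callable[[str, str, str, dict], list]):
        self._generate = generate  # expensive templating step (hypothetical)
        self._cache: dict = {}
        self.misses = 0

    @staticmethod
    def key(repo_url: str, revision: str, path: str, params: dict) -> str:
        """Stable key over all inputs; sort_keys makes param order irrelevant."""
        payload = json.dumps([repo_url, revision, path, params], sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def get(self, repo_url: str, revision: str, path: str, params: dict) -> list:
        """Return cached manifests, rendering them only on a cache miss."""
        k = self.key(repo_url, revision, path, params)
        if k not in self._cache:
            self.misses += 1
            self._cache[k] = self._generate(repo_url, revision, path, params)
        return self._cache[k]
```

Caching like this is only safe when `revision` is an immutable commit SHA. A branch or tag name would first have to be resolved to the commit it currently points to; otherwise new commits would be served stale manifests.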

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the repository). When it detects an OutOfSync application state, it can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).
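The hook phases can be pictured as a simple ordering problem. In the sketch below, resources carry the `argocd.argoproj.io/hook` annotation that Argo CD uses to mark hooks. Unannotated resources fall into the Sync phase, and a failure stops all later steps. The sketch deliberately ignores sync waves, `SyncFail` hooks, and hook deletion policies, so treat it as a model of the ordering only. The resource names are hypothetical.

```python
PHASES = ("PreSync", "Sync", "PostSync")
HOOK_ANNOTATION = "argocd.argoproj.io/hook"


def plan_phases(resources: list) -> list:
    """Group resource names into PreSync -> Sync -> PostSync order.

    Resources without a hook annotation are ordinary Sync-phase resources;
    other hook values (e.g. SyncFail, Skip) are ignored in this sketch.
    """
    plan = {phase: [] for phase in PHASES}
    for res in resources:
        meta = res.get("metadata", {})
        hook = meta.get("annotations", {}).get(HOOK_ANNOTATION, "Sync")
        if hook in plan:
            plan[hook].append(meta["name"])
    return [(phase, plan[phase]) for phase in PHASES if plan[phase]]


def run_sync(resources: list, apply) -> bool:
    """Apply each phase in order; stop at the first failed apply."""
    for phase, names in plan_phases(resources):
        for name in names:
            if not apply(phase, name):
                return False
    return True


if __name__ == "__main__":
    demo = [
        {"metadata": {"name": "web"}},
        {"metadata": {"name": "db-migrate",
                      "annotations": {HOOK_ANNOTATION: "PreSync"}}},
        {"metadata": {"name": "notify",
                      "annotations": {HOOK_ANNOTATION: "PostSync"}}},
    ]
    print(plan_phases(demo))
```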

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

SSO integration (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

RBAC and multi-tenancy authorization policies

Rollback/roll-anywhere to any application configuration committed in the Git repository

Health status analysis of application resources

Automated visualization and detection of configuration drift

Applications can be synced manually or automatically to their desired state.

Web UI that provides a real-time view of application activity

CLI for CI integration and automation

Webhook integration (GitHub, Bitbucket, GitLab)

Access tokens for automation

PreSync, Sync, and PostSync hooks to support complex application rollouts (such as blue/green and canary upgrades)

Application event and API call audit trails

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com

#ArgoCD#CD#GitOps#API#Kubernetes#Git#Argoproject#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


Ansible Collections: Extending Ansible’s Capabilities

Ansible is a powerful automation tool used for configuration management, application deployment, and task automation. One of the key features that enhances its flexibility and extensibility is the concept of Ansible Collections. In this blog post, we'll explore what Ansible Collections are, how to create and use them, and look at some popular collections and their use cases.

Introduction to Ansible Collections

Ansible Collections are a way to package and distribute Ansible content. This content can include playbooks, roles, modules, plugins, and more. Collections allow users to organize their Ansible content and share it more easily, making it simpler to maintain and reuse.

Key Features of Ansible Collections:

Modularity: Collections break down Ansible content into modular components that can be independently developed, tested, and maintained.

Distribution: Collections can be distributed via Ansible Galaxy or private repositories, enabling easy sharing within teams or the wider Ansible community.

Versioning: Collections support versioning, allowing users to specify and depend on specific versions of a collection.

How to Create and Use Collections in Your Projects

Creating and using Ansible Collections involves a few key steps. Here’s a guide to get you started:

1. Setting Up Your Collection

To create a new collection, you can use the ansible-galaxy command-line tool:

ansible-galaxy collection init my_namespace.my_collection

This command sets up a basic directory structure for your collection:

my_namespace/
└── my_collection/
    ├── docs/
    ├── plugins/
    │   ├── modules/
    │   ├── inventory/
    │   └── ...
    ├── roles/
    ├── playbooks/
    ├── README.md
    └── galaxy.yml

2. Adding Content to Your Collection

Populate your collection with the necessary content. For example, you can add roles, modules, and plugins under the respective directories. Update the galaxy.yml file with metadata about your collection.

3. Building and Publishing Your Collection

Once your collection is ready, you can build it using the following command:

ansible-galaxy collection build

This command creates a tarball of your collection, which you can then publish to Ansible Galaxy or a private repository:

ansible-galaxy collection publish my_namespace-my_collection-1.0.0.tar.gz
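The tarball name in the publish command comes from the metadata in galaxy.yml and follows the pattern `namespace-name-version.tar.gz`. The sketch below checks for the keys that galaxy.yml requires (namespace, name, version, readme, authors, as I understand the format documentation) and derives that file name. To avoid a PyYAML dependency, a plain dict stands in for the parsed file. This is an illustration, not part of the ansible-galaxy tool.

```python
REQUIRED_KEYS = ("namespace", "name", "version", "readme", "authors")


def check_galaxy_metadata(meta: dict) -> list:
    """Return the required galaxy.yml keys that are missing or empty."""
    return [key for key in REQUIRED_KEYS if not meta.get(key)]


def artifact_name(meta: dict) -> str:
    """File name produced by `ansible-galaxy collection build` for this metadata."""
    missing = check_galaxy_metadata(meta)
    if missing:
        raise ValueError(f"galaxy.yml is missing required keys: {missing}")
    return f"{meta['namespace']}-{meta['name']}-{meta['version']}.tar.gz"


if __name__ == "__main__":
    meta = {
        "namespace": "my_namespace",
        "name": "my_collection",
        "version": "1.0.0",
        "readme": "README.md",
        "authors": ["Jane Doe <jane@example.com>"],  # hypothetical author
    }
    print(artifact_name(meta))  # my_namespace-my_collection-1.0.0.tar.gz
```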

4. Using Collections in Your Projects

To use a collection in your Ansible project, specify it in your requirements.yml file:

collections:
  - name: my_namespace.my_collection
    version: 1.0.0

Then, install the collection using:

ansible-galaxy collection install -r requirements.yml
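The `version` field in requirements.yml accepts an exact pin and also range specifiers such as `>=1.0.0,<2.0.0` or `*`. The sketch below shows how such a constraint is evaluated. It is a simplified stand-in, not ansible-galaxy's own resolver, and it assumes plain numeric MAJOR.MINOR.PATCH versions without pre-release suffixes.

```python
def parse_version(text: str) -> tuple:
    """Parse a plain MAJOR.MINOR.PATCH string into a comparable tuple."""
    return tuple(int(part) for part in text.strip().split("."))


OPS = {
    ">=": lambda a, b: a >= b,
    "<=": lambda a, b: a <= b,
    "!=": lambda a, b: a != b,
    "==": lambda a, b: a == b,
    ">": lambda a, b: a > b,
    "<": lambda a, b: a < b,
}


def satisfies(version: str, spec: str) -> bool:
    """Check `version` against a spec such as '1.0.0' or '>=1.0.0,<2.0.0'."""
    v = parse_version(version)
    for clause in spec.split(","):
        clause = clause.strip()
        if clause == "*":
            continue  # wildcard: any version is acceptable
        # Two-character operators are tried before one-character ones.
        for op in (">=", "<=", "!=", "==", ">", "<"):
            if clause.startswith(op):
                if not OPS[op](v, parse_version(clause[len(op):])):
                    return False
                break
        else:
            # A bare version means an exact pin.
            if v != parse_version(clause):
                return False
    return True


if __name__ == "__main__":
    print(satisfies("1.10.0", ">=1.9.0,<2.0.0"))  # True
```

Comparing tuples instead of strings matters here: as strings, "1.10.0" would sort before "1.9.0".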

You can now use the content from the collection in your playbooks:

---
- name: Example Playbook
  hosts: localhost
  tasks:
    - name: Use a module from the collection
      my_namespace.my_collection.my_module:
        param: value
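The playbook calls the module by its fully qualified collection name (FQCN), `namespace.collection.module`. This small sketch splits an FQCN into its parts and derives the collection you would list in requirements.yml. The helper names are hypothetical; `community.general.ufw` is used only as a familiar example.

```python
from typing import NamedTuple


class FQCN(NamedTuple):
    namespace: str
    collection: str
    name: str


def parse_fqcn(fqcn: str) -> FQCN:
    """Split 'namespace.collection.module' into its three parts."""
    parts = fqcn.split(".", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"not a fully qualified collection name: {fqcn!r}")
    return FQCN(*parts)


def requirement_for(fqcn: str) -> str:
    """Collection to list in requirements.yml for a given module FQCN."""
    p = parse_fqcn(fqcn)
    return f"{p.namespace}.{p.collection}"


if __name__ == "__main__":
    print(parse_fqcn("my_namespace.my_collection.my_module"))
    print(requirement_for("community.general.ufw"))  # community.general
```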

Popular Collections and Their Use Cases

Here are some popular Ansible Collections and how they can be used:

1. community.general

Description: A collection of modules, plugins, and roles that are not tied to any specific provider or technology.

Use Cases: General-purpose tasks like file manipulation, network configuration, and user management.

2. amazon.aws

Description: Provides modules and plugins for managing AWS resources.

Use Cases: Automating AWS infrastructure, such as EC2 instances, S3 buckets, and RDS databases.

3. ansible.posix

Description: A collection of modules for managing POSIX systems.

Use Cases: Tasks specific to Unix-like systems, such as managing ACLs, mount points, sysctl settings, SELinux, and SSH authorized keys.

4. cisco.ios

Description: Contains modules and plugins for automating Cisco IOS devices.

Use Cases: Network automation for Cisco routers and switches, including configuration management and backup.

5. kubernetes.core

Description: Provides modules for managing Kubernetes resources.

Use Cases: Deploying and managing Kubernetes applications, services, and configurations.

Conclusion

Ansible Collections significantly enhance the modularity, distribution, and reusability of Ansible content. By understanding how to create and use collections, you can streamline your automation workflows and share your work with others more effectively. Explore popular collections to leverage existing solutions and extend Ansible’s capabilities in your projects.

For more details click www.qcsdclabs.com

#redhatcourses#information technology#linux#containerorchestration#container#kubernetes#containersecurity#docker#dockerswarm#aws


DevOps for Beginners: Navigating the Learning Landscape

DevOps, a revolutionary approach in the software industry, bridges the gap between development and operations by emphasizing collaboration and automation. For beginners, entering the world of DevOps might seem like a daunting task, but it doesn't have to be. In this blog, we'll provide you with a step-by-step guide to learn DevOps, from understanding its core philosophy to gaining hands-on experience with essential tools and cloud platforms. By the end of this journey, you'll be well on your way to mastering the art of DevOps.

The Beginner's Path to DevOps Mastery:

1. Grasp the DevOps Philosophy:

Start with the Basics: DevOps is more than just a set of tools; it's a cultural shift in how software development and IT operations work together. Begin your journey by understanding the fundamental principles of DevOps, which include collaboration, automation, and delivering value to customers.

2. Get to Know Key DevOps Tools:

Version Control: One of the first steps in DevOps is learning about version control systems like Git. These tools help you track changes in code, collaborate with team members, and manage code repositories effectively.

Continuous Integration/Continuous Deployment (CI/CD): Dive into CI/CD tools like Jenkins and GitLab CI. These tools automate the building and deployment of software, ensuring a smooth and efficient development pipeline.

Configuration Management: Gain proficiency in configuration management tools such as Ansible, Puppet, or Chef. These tools automate server provisioning and configuration, allowing for consistent and reliable infrastructure management.

Containerization and Orchestration: Explore containerization using Docker and container orchestration with Kubernetes. These technologies are integral to managing and scaling applications in a DevOps environment.

3. Learn Scripting and Coding:

Scripting Languages: DevOps engineers often use scripting languages such as Python, Ruby, or Bash to automate tasks and configure systems. Learning the basics of one or more of these languages is crucial.

Infrastructure as Code (IaC): Delve into Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation. IaC allows you to define and provision infrastructure using code, streamlining resource management.
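As a small taste of the kind of scripting mentioned above, here is a Python sketch that counts log lines by severity and flags too many errors, a typical chore to automate. The log format (`<date> <time> <LEVEL> <message>`) and the function names are assumptions for illustration.

```python
import re
from collections import Counter

# Assumed log format: "<date> <time> <LEVEL> <message>"
LOG_LINE = re.compile(r"^\S+ \S+ (?P<level>[A-Z]+) ")


def count_levels(lines) -> Counter:
    """Count log lines per severity level (e.g. INFO, WARN, ERROR)."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match:  # lines in other formats are skipped
            counts[match.group("level")] += 1
    return counts


def too_many_errors(lines, max_errors: int = 0) -> bool:
    """True when the number of ERROR lines exceeds `max_errors`."""
    return count_levels(lines)["ERROR"] > max_errors


if __name__ == "__main__":
    sample = [
        "2024-05-01 12:00:00 INFO service started",
        "2024-05-01 12:00:01 ERROR disk full",
    ]
    print(count_levels(sample))
```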

4. Build Skills in Cloud Services:

Cloud Platforms: Learn about the main cloud providers, such as AWS, Azure, or Google Cloud, and how to create, configure, and manage cloud resources. These skills are essential because DevOps often involves deploying and managing applications in the cloud.

DevOps in the Cloud: Explore how DevOps practices can be applied within a cloud environment. Utilize services like AWS Elastic Beanstalk or Azure DevOps for automated application deployments, scaling, and management.

5. Gain Hands-On Experience:

Personal Projects: Put your knowledge to the test by working on personal projects. Create a small web application, set up a CI/CD pipeline for it, or automate server configurations. Hands-on practice is invaluable for gaining real-world experience.

Open Source Contributions: Participate in open source DevOps initiatives. Collaborating with experienced professionals and contributing to real-world projects can accelerate your learning and provide insights into industry best practices.

6. Enroll in DevOps Courses:

Structured Learning: Consider enrolling in DevOps courses or training programs to ensure a structured learning experience. Institutions like ACTE Technologies offer comprehensive DevOps training programs designed to provide hands-on experience and real-world examples. These courses cater to beginners and advanced learners, ensuring you acquire practical skills in DevOps.

In your quest to master the art of DevOps, structured training can be a game-changer. ACTE Technologies, a renowned training institution, offers comprehensive DevOps training programs that cater to learners at all levels. Whether you're starting from scratch or enhancing your existing skills, ACTE Technologies can guide you efficiently and effectively in your DevOps journey. DevOps is a transformative approach in the world of software development, and it's accessible to beginners with the right roadmap. By understanding its core philosophy, exploring key tools, gaining hands-on experience, and considering structured training, you can embark on a rewarding journey to master DevOps and become an invaluable asset in the tech industry.


Full Stack Development: Using DevOps and Agile Practices for Success

In today’s fast-paced and highly competitive tech industry, the demand for Full Stack Developers is steadily on the rise. These versatile professionals possess a unique blend of skills that enable them to handle both the front-end and back-end aspects of software development. However, to excel in this role and meet the ever-evolving demands of modern software development, Full Stack Developers are increasingly turning to DevOps and Agile practices. In this comprehensive guide, we will explore how the combination of Full Stack Development with DevOps and Agile methodologies can lead to unparalleled success in the world of software development.

Full Stack Development: A Brief Overview

Full Stack Development refers to the practice of working on all aspects of a software application, from the user interface (UI) and user experience (UX) on the front end to server-side scripting, databases, and infrastructure on the back end. It requires a broad skill set and the ability to handle various technologies and programming languages.

The Significance of DevOps and Agile Practices

The environment for software development has changed significantly in recent years. The adoption of DevOps and Agile practices has become a cornerstone of modern software development. DevOps focuses on automating and streamlining the development and deployment processes, while Agile methodologies promote collaboration, flexibility, and iterative development. Together, they offer a powerful approach to software development that enhances efficiency, quality, and project success. In this blog, we will delve into the following key areas:

Understanding Full Stack Development

Defining Full Stack Development

We will start by defining Full Stack Development and elucidating its pivotal role in creating end-to-end solutions. Full Stack Developers are akin to the Swiss Army knives of the development world, capable of handling every aspect of a project.

Key Responsibilities of a Full Stack Developer

We will explore the multifaceted responsibilities of Full Stack Developers, from designing user interfaces to managing databases and everything in between. Understanding these responsibilities is crucial to grasping the challenges they face.

DevOps’s Importance in Full Stack Development

Unpacking DevOps

A collection of principles known as DevOps aims to eliminate the divide between development and operations teams. We will delve into what DevOps entails and why it matters in Full Stack Development. The benefits of embracing DevOps principles will also be discussed.

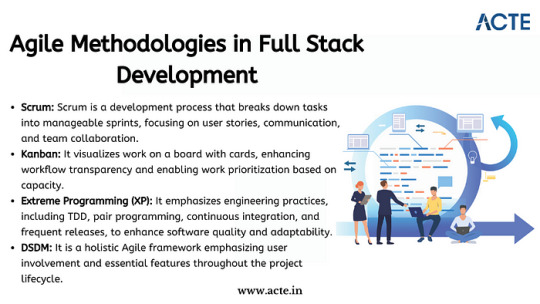

Agile Methodologies in Full Stack Development

Introducing Agile Methodologies

Agile methodologies like Scrum and Kanban have gained immense popularity due to their effectiveness in fostering collaboration and adaptability. We will introduce these methodologies and explain how they enhance project management and teamwork in Full Stack Development.

Synergy Between DevOps and Agile

The Power of Collaboration

We will highlight how DevOps and Agile practices complement each other, creating a synergy that streamlines the entire development process. By aligning development, testing, and deployment, this synergy results in faster delivery and higher-quality software.

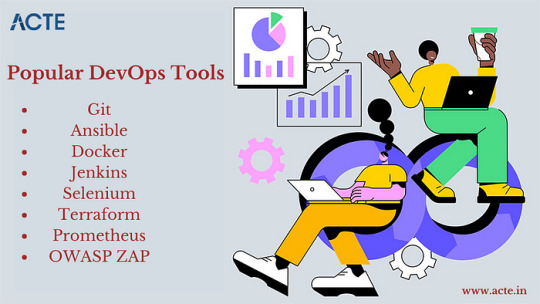

Tools and Technologies for DevOps in Full Stack Development

Essential DevOps Tools

DevOps relies on a suite of tools and technologies, such as Jenkins, Docker, and Kubernetes, to automate and manage various aspects of the development pipeline. We will provide an overview of these tools and explain how they can be harnessed in Full Stack Development projects.

Implementing Agile in Full Stack Projects

Agile Implementation Strategies

We will delve into practical strategies for implementing Agile methodologies in Full Stack projects. Topics will include sprint planning, backlog management, and conducting effective stand-up meetings.

Best Practices for Agile Integration

We will share best practices for incorporating Agile principles into Full Stack Development, ensuring that projects are nimble, adaptable, and responsive to changing requirements.

Learning Resources and Real-World Examples

To gain a deeper understanding, ACTE Institute presents case studies and real-world examples of successful Full Stack Development projects that leveraged DevOps and Agile practices. These stories offer valuable insights into best practices and lessons learned. Consider enrolling in an accredited full stack developer training course to increase your full stack proficiency.

Challenges and Solutions

Addressing Common Challenges

No journey is without its obstacles, and Full Stack Developers using DevOps and Agile practices may encounter challenges. We will identify these common roadblocks and provide practical solutions and tips for overcoming them.

Benefits and Outcomes

The Fruits of Collaboration

In this section, we will discuss the tangible benefits and outcomes of integrating DevOps and Agile practices in Full Stack projects. Faster development cycles, improved product quality, and enhanced customer satisfaction are among the rewards.

In conclusion, this blog has explored the dynamic world of Full Stack Development and the pivotal role that DevOps and Agile practices play in achieving success in this field. Full Stack Developers are at the forefront of innovation, and by embracing these methodologies, they can enhance their efficiency, drive project success, and stay ahead in the ever-evolving tech landscape. We emphasize the importance of continuous learning and adaptation, as the tech industry continually evolves. DevOps and Agile practices provide a foundation for success, and we encourage readers to explore further resources, courses, and communities to foster their growth as Full Stack Developers. By doing so, they can contribute to the development of cutting-edge solutions and make a lasting impact in the world of software development.

#web development#full stack developer#devops#agile#education#information#technology#full stack web development#innovation


AEM aaCS aka Adobe Experience Manager as a Cloud Service

Adobe Experience Manager, the industry standard for digital experience management, is being improved further: Adobe is finally moving Adobe Experience Manager (AEM), its last on-premises product, to the cloud.

AEM aaCS is a modern, cloud-native application that accelerates the delivery of omnichannel applications.

The AEM Cloud Service introduces the next generation of the AEM product line, moving away from versioned releases such as AEM 6.4 and AEM 6.5 to a continuous, version-less release model called "AEM as a Cloud Service."

AEM Cloud Service adopts the benefits of modern cloud-based services:

Availability

The ability for all services to be always on, ensuring that our clients do not suffer any downtime, is one of the major advantages of switching to AEM Cloud Service. In the past, there was a requirement to regularly halt the service for various maintenance operations, including updates, patches, upgrades, and certain standard maintenance activities, notably on the author side.

Scalability

The AEM Cloud Service's instances are all created with the same default size. AEM Cloud Service is built on an orchestration engine (Kubernetes) that dynamically scales up and down, both horizontally and vertically, according to our clients' demands and without requiring their involvement. Scaling can be done manually or automatically.
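The post does not describe AEM Cloud Service's internal scaling rules, so the following illustrates only the Kubernetes side of horizontal scaling. Kubernetes' Horizontal Pod Autoscaler computes `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. It skips scaling when the ratio is within a tolerance (0.1 by default) of 1.0. The min/max bounds below are hypothetical, and this is not a claim about how Adobe configures its clusters.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Horizontal scaling decision following the Kubernetes HPA formula.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 pods at 90% average CPU with a 60% target -> scale out to 5 pods.
    print(desired_replicas(3, 90, 60))  # 5
```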

Updated Code Base

This might be the most beneficial and much-anticipated feature that AEM Cloud Service offers to consumers. With the AEM Cloud Service, Adobe will handle upgrading all instances to the most recent code base. No downtime will be experienced throughout the update process.

Self Evolving

AEM Cloud Service continually improves by learning from the projects our clients deploy. We regularly examine and validate content, code, and settings against best practices to help our clients understand how to accomplish their business objectives. Health checks built into AEM Cloud Service components enable them to self-heal.

AEM as a Cloud Service: Changes and Challenges

When you begin working with it, you will notice a lot of changes in the AEM cloud JAR. Here are a few significant changes that might affect how we work with AEM:

1) The most significant performance bottleneck that most large enterprise DAM customers face is bulk uploading of assets on the author instance, after which the DAM Update Asset workflow degrades the performance of the entire author instance. To resolve this, AEM Cloud Service introduces asset microservices for serverless asset processing powered by Adobe I/O. Now, when an author uploads an asset, it goes directly to cloud binary storage, and Adobe I/O is then triggered to handle further processing using the renditions and other properties that have been configured.

2) Because Adobe fully manages AEM as a Cloud Service, developers and operations personnel may not be able to access logs directly. As of now, the only way I know of to obtain access, error, dispatcher, and other logs is via a Cloud Manager download link.

3) The only way for AEM leads to deploy is through Cloud Manager, which enforces stringent quality checks in its CI/CD pipeline. You should therefore concentrate on test-driven development with greater than 50% test coverage. Go to https://docs.adobe.com/content/help/en/experience-manager-cloud-manager/using/how-to-use/understand-your-test-results.html for additional information.

4) AEM as a Cloud Service does not currently support AEM Screens or AEM Adaptive Forms.

5) Continuous updates will be pushed to the cloud-based AEM baseline image to support a version-less solution. Consequently, customizations to the Assets UI console or to /libs/granite are no longer possible. Up until AEM 6.5, modifying these internal nodes could serve as a workaround to meet customer requirements, but any such change would now be overwritten with each baseline image update.

6) The code quality rules that Cloud Manager applies are not available to a local Sonar setup before you push to Git, which I believe will result in increased development time and more Git commits. Once the development code is pushed to the Git repository and the build is started, Cloud Manager runs its Sonar checks and tells you what is wrong. As a precaution, I recommend making sure your code passes the default rules in your local environment and updating your local rules whenever you encounter a new issue while pushing code to the cloud Git repository.
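Point 3 mentions the 50% test-coverage requirement. As I understand Adobe's documentation, the coverage figure is not plain line coverage. It combines line and condition coverage using the SonarQube-style formula (CT + CF + LC) / (2 * B + EL). The sketch below computes that figure locally. Treating exactly 50% as passing is an assumption, and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CoverageData:
    covered_lines: int      # LC: executable lines covered by tests
    executable_lines: int   # EL: lines that could be covered
    conditions_true: int    # CT: conditions evaluated to true at least once
    conditions_false: int   # CF: conditions evaluated to false at least once
    total_conditions: int   # B: total number of conditions


def coverage_percent(d: CoverageData) -> float:
    """Combined coverage: (CT + CF + LC) / (2*B + EL), as a percentage."""
    denominator = 2 * d.total_conditions + d.executable_lines
    if denominator == 0:
        return 100.0  # nothing to cover
    numerator = d.conditions_true + d.conditions_false + d.covered_lines
    return 100.0 * numerator / denominator


def passes_gate(d: CoverageData, threshold: float = 50.0) -> bool:
    """Quality-gate check against the coverage threshold."""
    return coverage_percent(d) >= threshold


if __name__ == "__main__":
    data = CoverageData(60, 100, 10, 10, 20)  # hypothetical project numbers
    print(f"{coverage_percent(data):.1f}%", passes_gate(data))  # 57.1% True
```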

AEM Cloud Service Does Not Support These Features

1. AEM Sites Commerce add-on

2. Screens add-on

3. Communities add-on

4. AEM Forms

5. Access to Classic UI

6. Page Editor in Developer Mode

7. /apps and /libs are read-only in dev/stage/prod environments; changes need to come in via the CI/CD pipeline that builds the code from the Git repo.

8. OSGi bundles and settings: the Web Console is not available in the dev, stage, and production environments.

If you encounter any difficulties or observe any issues, please let me know. It will be useful for the AEM community.


The Role of DevOps in Custom Software Deployment

In today’s fast-paced digital ecosystem, the success of any software product is determined not just by how well it's coded, but how efficiently and reliably it’s delivered to end-users. This is where DevOps—the fusion of development and operations—plays a transformative role.

For businesses working with a custom software development company in New York, DevOps is no longer an optional methodology—it’s a competitive necessity. From speeding up deployment cycles to improving reliability, security, and scalability, DevOps practices streamline the entire software delivery pipeline.

In this blog, we’ll explore the critical role of DevOps in custom software deployment, the advantages it offers, and how software development companies in New York are leveraging DevOps to deliver faster, smarter, and more resilient digital solutions.

What Is DevOps?

DevOps is a set of practices and tools that bridge the gap between software development (Dev) and IT operations (Ops). Its primary goal is to shorten the development lifecycle, increase deployment frequency, and deliver high-quality software in a repeatable and automated way.

This is accomplished through key principles such as:

Continuous Integration (CI)

Continuous Delivery/Deployment (CD)

Infrastructure as Code (IaC)

Automated testing

Monitoring and logging

The best custom software development companies in New York integrate these practices into their workflows to reduce downtime, prevent deployment bottlenecks, and foster innovation.

DevOps in Custom Software: Why It Matters

Unlike off-the-shelf software, custom solutions are tailor-made for specific business needs. They require greater agility, flexibility, and ongoing refinement—all of which are supported by a robust DevOps approach.

Here’s why DevOps is especially vital in custom software projects:

1. Faster Time-to-Market

Speed is crucial in today’s competitive digital space. DevOps enables rapid iterations, automated testing, and continuous deployments—ensuring that new features and bug fixes go live faster.

For example, a software development company in New York using DevOps can deliver weekly or even daily updates, significantly reducing the time it takes to respond to user feedback or market demands.

2. Higher Quality Code

Automated testing and code integration tools allow developers to catch bugs early in the development cycle. This leads to fewer production issues and better software quality overall.

The top software development companies in New York use automated quality gates, unit tests, and peer code reviews integrated into the CI/CD pipeline, ensuring every code release meets strict standards.

3. Scalability and Flexibility

As businesses grow, their software needs to scale accordingly. DevOps supports scalability through containerization (like Docker), orchestration tools (like Kubernetes), and cloud-native deployment models.

Working with a custom software development company in New York that understands cloud and DevOps architecture means your software can grow seamlessly with your business needs.

Key DevOps Practices That Support Deployment

To understand the true value of DevOps in deployment, it’s important to dive deeper into the specific practices that make it effective.

1. Continuous Integration (CI)

CI is the practice of merging all developers' working copies into a shared mainline several times a day, with each merge built and tested automatically. This enables early bug detection, fewer integration issues, and a smoother development workflow.

Custom software development companies in New York often integrate tools like Jenkins, GitLab CI, or CircleCI to automate builds and ensure code is always in a deployable state.
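At its core, a CI build is an ordered list of steps that fails fast. This is a minimal, tool-neutral sketch of that idea, not how Jenkins, GitLab CI, or CircleCI work internally. Each step is a command, and the first non-zero exit code stops the build. The step names and commands are hypothetical placeholders.

```python
import subprocess
import sys


def run_pipeline(steps: list) -> bool:
    """Run (name, argv) CI steps in order and stop at the first failure.

    A non-zero exit code fails the build, so broken code never reaches
    the later (e.g. packaging or deploy) steps.
    """
    for name, argv in steps:
        result = subprocess.run(argv, capture_output=True, text=True)
        if result.returncode != 0:
            print(f"[{name}] FAILED (exit {result.returncode})")
            print(result.stdout + result.stderr)
            return False
        print(f"[{name}] ok")
    return True


if __name__ == "__main__":
    # Hypothetical steps; a real project would call its linter, tests, build.
    demo_steps = [
        ("lint", [sys.executable, "-c", "import ast; ast.parse('x = 1')"]),
        ("test", [sys.executable, "-c", "assert 1 + 1 == 2"]),
    ]
    print("build passed" if run_pipeline(demo_steps) else "build failed")
```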

2. Continuous Deployment (CD)

Continuous Deployment automates the release process so that any code passing all tests is immediately deployed to production. This creates a cycle of fast and reliable releases.

This is particularly useful for industries like fintech or e-commerce, where downtime or bugs can result in serious revenue loss. A software development company in New York using CD can deploy features safely and frequently without compromising performance.

3. Infrastructure as Code (IaC)

IaC allows infrastructure (like servers, load balancers, and databases) to be managed through code, making environments consistent and reproducible. Tools like Terraform and Ansible are widely used.

The best software development company in New York will use IaC to reduce human error, enable version control of infrastructure, and simplify environment replication across dev, test, and production.
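Environment replication with IaC usually means one shared definition plus small per-environment differences. This is a tool-neutral Python sketch of that base-plus-overrides pattern, similar in spirit to per-environment variable files or overlays. It is not Terraform or Ansible syntax, and the resource names and sizes are hypothetical.

```python
from copy import deepcopy

# Shared definition of the infrastructure (hypothetical resources).
BASE = {
    "app_servers": {"count": 1, "size": "small"},
    "load_balancer": {"enabled": False},
    "database": {"engine": "postgres", "backups": False},
}

# Only the differences per environment are written down.
OVERRIDES = {
    "dev": {},
    "test": {"database": {"backups": True}},
    "prod": {
        "app_servers": {"count": 3, "size": "large"},
        "load_balancer": {"enabled": True},
        "database": {"backups": True},
    },
}


def merge(base: dict, override: dict) -> dict:
    """Recursively overlay `override` onto a copy of `base`."""
    result = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


def render_environment(name: str) -> dict:
    """Desired infrastructure for one environment, derived from code."""
    return merge(BASE, OVERRIDES[name])


if __name__ == "__main__":
    for env in OVERRIDES:
        print(env, render_environment(env))
```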

4. Monitoring and Feedback Loops

DevOps emphasizes continuous monitoring of application performance and infrastructure health using tools like Prometheus, Grafana, and ELK Stack.

With these insights, custom software development companies in New York can proactively identify issues, analyze usage trends, and optimize applications to improve user experience.
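A common monitoring rule alerts on tail latency rather than the average. In a Prometheus setup this kind of rule would normally be written in PromQL (for example with `histogram_quantile`). The tool-neutral Python sketch below computes a nearest-rank 95th percentile over raw samples and raises an alert message when a hypothetical limit is exceeded.

```python
import math
from typing import Optional


def percentile(samples: list, pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty sample list."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_latency(samples_ms: list, p95_limit_ms: float) -> Optional[str]:
    """Return an alert message if p95 latency exceeds the limit, else None."""
    p95 = percentile(samples_ms, 95)
    if p95 > p95_limit_ms:
        return f"ALERT: p95 latency {p95:.0f} ms exceeds {p95_limit_ms:.0f} ms"
    return None


if __name__ == "__main__":
    latencies = list(range(1, 101))  # hypothetical samples: 1..100 ms
    print(check_latency(latencies, 90))
```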

DevOps for Custom Software Clients: What to Expect

When working with a DevOps-enabled software development company in New York, clients can expect the following:

1. Transparent Release Processes

Frequent, scheduled releases replace traditional “big-bang” launches. You’re always aware of what’s being deployed and when, with detailed changelogs and documentation.

2. Reduced Downtime

Through canary releases, blue-green deployments, and automated rollback mechanisms, DevOps minimizes the risk of outages during updates.
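The canary-plus-rollback idea boils down to a loop. Traffic is shifted to the new version in steps, and the rollout aborts as soon as a health metric crosses a threshold. This is a minimal sketch under assumed inputs: `error_rate_at` stands in for a real metrics query, and the traffic steps and 1% error budget are hypothetical.

```python
from typing import Callable


def canary_rollout(steps: list, error_rate_at: Callable[[int], float],
                   max_error_rate: float = 0.01) -> tuple:
    """Shift traffic to the new version step by step; roll back on bad metrics.

    `steps` are traffic percentages, e.g. [5, 25, 50, 100].
    Returns ("promoted", last_step) or ("rolled_back", failing_step).
    """
    if not steps:
        raise ValueError("need at least one traffic step")
    for pct in steps:
        if error_rate_at(pct) > max_error_rate:
            # Route all traffic back to the old version.
            return ("rolled_back", pct)
    return ("promoted", steps[-1])


if __name__ == "__main__":
    # Hypothetical metrics: the new version starts failing at 50% traffic.
    print(canary_rollout([5, 25, 50, 100], lambda p: 0.05 if p >= 50 else 0.0))
```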

3. More Reliable Support

DevOps fosters a culture of accountability. Developers monitor how their code performs in production and fix issues promptly—ensuring a more stable product lifecycle.

4. Custom Dashboards and Metrics

Get real-time visibility into your application’s health, user engagement, and server performance with custom dashboards tailored to your KPIs.

The top software development company in New York will customize these tools for your business model, giving you actionable insights at a glance.

Why DevOps Is a Competitive Edge

In industries like finance, healthcare, retail, and logistics, speed and stability aren’t just nice-to-haves—they're vital. DevOps offers:

Accelerated innovation: Quickly test and implement new ideas.

Enhanced security: Apply security policies and patches faster.

Customer satisfaction: Deliver bug-free features continuously.

Operational efficiency: Automate repetitive tasks to focus on high-value work.

For companies looking to stand out, partnering with a custom software development company in New York that champions DevOps is an investment in agility and excellence.

Choosing the Right DevOps-Enabled Software Partner

Not every software firm embraces DevOps, and not every team does it well. Here’s what to look for:

DevOps toolchain expertise (Git, Jenkins, Docker, Kubernetes)

Experience with cloud platforms (AWS, Azure, Google Cloud)

Track record of CI/CD pipelines

Commitment to security and compliance

24/7 monitoring and support

The best software development company in New York will have proven case studies, client testimonials, and a clear DevOps roadmap that aligns with your vision.

Final Thoughts

DevOps has redefined the way custom software is developed, deployed, and maintained. It promotes agility, reliability, and collaboration—three pillars essential for long-term software success.

In a city as competitive and innovation-driven as New York, choosing the right partner is crucial. Whether you’re a startup disrupting your industry or an enterprise modernizing legacy systems, working with a custom software development company in New York that fully embraces DevOps can give you a decisive edge.

If you want continuous improvement, faster delivery cycles, and robust deployment processes, look no further than the top software development company in New York. They don’t just build software—they deliver future-ready solutions that evolve with your business.

Decoding the Full Stack Developer: Beyond Just Coding Skills

When we hear the term Full Stack Developer, the first image that comes to mind is often a coding wizard—someone who can write flawless code in both frontend and backend languages. But in reality, being a Full Stack Developer is much more than juggling JavaScript, databases, and frameworks. It's about adaptability, problem-solving, communication, and having a deep understanding of how digital products come to life.

In today’s fast-paced tech world, decoding the Full Stack Developer means understanding the full spectrum of their abilities—beyond just writing code. It’s about their mindset, their collaboration style, and the unique value they bring to modern development teams.

What is a Full Stack Developer—Really?

At its most basic definition, a Full Stack Developer is someone who can work on both the front end (what users see) and the back end (the server, database, and application logic) of a web application. But what sets a great Full Stack Developer apart isn't just technical fluency—it's their ability to bridge the gap between design, development, and user experience.

Beyond Coding: The Real Skills of a Full Stack Developer

While technical knowledge is essential, companies today are seeking developers who bring more to the table. Here’s what truly defines a well-rounded Full Stack Developer:

1. Holistic Problem Solving

A Full Stack Developer looks at the bigger picture. Instead of focusing only on isolated technical problems, they ask:

How will this feature affect the user experience?

Can this backend architecture scale as traffic increases?

Is there a more efficient way to implement this?

2. Communication and Collaboration

Full Stack Developers often act as the bridge between frontend and backend teams, as well as designers and project managers. This requires:

The ability to translate technical ideas into simple language

Empathy for team members with different skill sets

Openness to feedback and continuous learning

3. Business Mindset

Truly impactful developers understand the "why" behind their work. They think:

How does this feature help the business grow?

Will this implementation improve conversion or retention?

Is this approach cost-effective?

4. Adaptability

Tech is always evolving. A successful Full Stack Developer is a lifelong learner who stays updated with new frameworks, tools, and methodologies.

Technical Proficiency: Still the Foundation

Of course, strong coding skills remain a core part of being a Full Stack Developer. Here are just some of the technical areas they typically master:

Frontend: HTML, CSS, JavaScript, React, Angular, or Vue.js

Backend: Node.js, Express, Python, Ruby on Rails, or PHP

Databases: MySQL, MongoDB, PostgreSQL

Version Control: Git and GitHub

DevOps & Deployment: Docker, Kubernetes, CI/CD pipelines, AWS or Azure

But what distinguishes a standout Full Stack Developer isn’t how many tools they know—it’s how well they apply them to real-world problems.

Why the Role Matters More Than Ever

In startups and agile teams, having someone who understands both ends of the tech stack can:

Speed up development cycles

Reduce communication gaps

Enable rapid prototyping

Make it easier to scale applications over time

This flexibility makes Full Stack Developers extremely valuable. They're often the "glue" holding cross-functional teams together, able to jump into any layer of the product when needed.

A Day in the Life of a Full Stack Developer

To understand this further, imagine this scenario:

It’s 9:00 AM. A Full Stack Developer starts their day reviewing pull requests. At 10:30, they join a stand-up meeting with designers and project managers. By noon, they’re debugging an API endpoint issue. After lunch, they switch to styling a new UI component. And before the day ends, they brainstorm database optimization strategies for an upcoming product feature.

It’s not just a job—it’s a dynamic puzzle, and Full Stack Developers thrive on finding creative, holistic solutions.

Conclusion: Decoding the Full Stack Developer

Decoding the Full Stack Developer, beyond just coding skills, is essential for businesses and aspiring developers alike. It means understanding that this role blends logic, creativity, communication, and empathy.

Today’s Full Stack Developer is more than a technical multitasker—they are strategic thinkers, empathetic teammates, and flexible builders who can shape the entire lifecycle of a digital product.

If you're looking to become one—or hire one—don’t just look at the resume. Look at the mindset, curiosity, and willingness to grow. Because when you decode the Full Stack Developer, you’ll find someone who brings full-spectrum value to the digital world.

The Future of Software Development: Top Technologies to Watch in 2025

Software development is a rapidly growing field, driven by numerous emerging technologies that are revolutionizing industries and user interfaces. The constant pressure to keep up with rivals pushes businesses to embrace innovative solutions and ideas. In today’s blog, we list the top technologies expected to transform software development by 2025.

1. Artificial Intelligence and Machine Learning

Many companies no longer see AI and ML as mere buzzwords but as essential components of software product development. These technologies increasingly automate broad work processes and power predictive analysis. GitHub Copilot, for example, is an AI coding assistant that saves developers time and makes it easier to write good code.

2. Low-Code and No-Code Platforms

Low-code and no-code platforms are becoming the next frontier, enabling more people to build software. These tools let nontechnical individuals and businesses construct applications with little coding. The trend helps custom software development companies deliver solutions significantly faster at the same price, while still allowing customization for intricate requirements.

3. Cloud-Native Development

Technology modernization remains in full swing as more firms adopt cloud-native development. Modern enterprises are building scalable, flexible, and resilient applications with Kubernetes, serverless architectures, and microservices. SaaS product development companies are driving this change, making products easier to integrate and lowering infrastructure overhead.

4. Blockchain Technology

Blockchain is not just a system for creating virtual currency. It is also transforming markets such as supply chain, healthcare, and finance by offering secure and highly trustworthy solutions. Custom software product development firms are now using blockchain to build decentralized applications (dApps) for various sectors.
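Much of a blockchain's trustworthiness comes from hash-linking. Each block stores the hash of its predecessor, so altering any past record breaks every link after it. The minimal Python sketch below shows only that tamper-evidence property. It has no consensus, signatures, or networking, which real blockchains (and the dApps built on them) depend on. The record strings are hypothetical.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """SHA-256 over the block's contents, including the previous hash."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash},
                         sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[str]) -> list[dict]:
    chain, prev = [], GENESIS_PREV
    for i, data in enumerate(records):
        h = block_hash(i, data, prev)
        chain.append({"index": i, "data": data, "prev": prev, "hash": h})
        prev = h
    return chain


def is_valid(chain: list[dict]) -> bool:
    """Recompute every hash and check that each block links to the one before."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev:
            return False
        if block_hash(block["index"], block["data"], block["prev"]) != block["hash"]:
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    ledger = build_chain(["shipment 17 left warehouse",
                          "shipment 17 cleared customs",
                          "shipment 17 delivered"])
    print(is_valid(ledger))                       # untouched chain
    ledger[1]["data"] = "shipment 17 never shipped"
    print(is_valid(ledger))                       # tampering is detected
```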

5. DevSecOps

Security remains an important consideration in 2025, including when implementing DevOps. DevSecOps integrates security into every stage of the development process, reducing risk. Tools such as Jira Software facilitate collaboration among development, security, and operations teams.
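Integrating security into the pipeline often starts with small automated gates that run on every commit. As an illustration, not a description of any specific product, the Python sketch below fails a check when source text contains something that looks like a hard-coded credential. The two patterns are simplified assumptions: the `AKIA` prefix of AWS access key IDs and a PEM private-key header. The sample key is the placeholder that AWS uses in its own documentation.

```python
import re

# Simplified, assumed rules; real scanners ship much larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def scan(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) pairs for suspected secrets."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


def security_gate(files: dict[str, str]) -> bool:
    """Return True (pass) only if no file contains a suspected secret."""
    passed = True
    for path in sorted(files):
        for lineno, rule in scan(files[path]):
            print(f"{path}:{lineno}: possible {rule}")
            passed = False
    return passed


if __name__ == "__main__":
    changed_files = {  # hypothetical files touched by a commit
        "app/config.py": "REGION = 'us-east-1'\nKEY = 'AKIAIOSFODNN7EXAMPLE'\n",
        "app/main.py": "print('hello')\n",
    }
    print("gate passed" if security_gate(changed_files) else "gate failed")
```

In a real CI job, the script would exit with a non-zero status when the gate fails, so the pipeline blocks the merge.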

6. Progressive Web Applications (PWAs)

The key advantage of PWAs is that they combine the benefits of web and native application development to give users fast, reliable, and smooth experiences. They remove the need for app stores, making distribution cheaper and easier. Custom software application developers are using PWAs to meet the demand for lightweight, efficient applications.

7. Quantum Computing

Although quantum computing is still in its early stages, it offers enormous potential for solving large problems in logistics, cryptography, and artificial intelligence. As a result, software product engineering is likely to pay more attention to this emerging technology.

8. Internet of Things (IoT) Integration

IoT is transforming the way devices interact, creating interconnected ecosystems. From smart homes to industrial automation, IoT-driven solutions require robust software. Customized software development companies are focusing on creating secure and scalable IoT applications tailored to diverse needs.

READ MORE- https://www.precisio.tech/the-future-of-software-development-top-technologies-to-watch-in-2025/

Creating and Configuring Production ROSA Clusters (CS220) – A Practical Guide

Introduction

Red Hat OpenShift Service on AWS (ROSA) is a powerful managed Kubernetes solution that blends the scalability of AWS with the developer-centric features of OpenShift. Whether you're modernizing applications or building cloud-native architectures, ROSA provides a production-grade container platform with integrated support from Red Hat and AWS. In this blog post, we’ll walk through the essential steps covered in CS220: Creating and Configuring Production ROSA Clusters, an instructor-led course designed for DevOps professionals and cloud architects.

What is CS220?

CS220 is a hands-on, lab-driven course developed by Red Hat that teaches IT teams how to deploy, configure, and manage ROSA clusters in a production environment. It is tailored for organizations that are serious about leveraging OpenShift at scale with the operational convenience of a fully managed service.

Why ROSA for Production?

Deploying OpenShift through ROSA offers multiple benefits:

Streamlined Deployment: Fully managed clusters provisioned in minutes.

Integrated Security: AWS IAM, STS, and OpenShift RBAC policies combined.

Scalability: Elastic and cost-efficient scaling with built-in monitoring and logging.

Support: Joint support model between AWS and Red Hat.

Key Concepts Covered in CS220

Here’s a breakdown of the main learning outcomes from the CS220 course:

1. Provisioning ROSA Clusters

Participants learn how to:

Set up required AWS permissions and networking prerequisites.

Deploy clusters using Red Hat OpenShift Cluster Manager (OCM) or CLI tools like rosa and oc.

Use AWS STS (Security Token Service) short-term credentials for secure cluster access.

2. Configuring Identity Providers

Learn how to integrate Identity Providers (IdPs) such as:

GitHub, Google, LDAP, or corporate IdPs using OpenID Connect.

Configure secure, role-based access control (RBAC) for teams.

3. Networking and Security Best Practices

Implement private clusters with public or private load balancers.

Enable end-to-end encryption for APIs and services.

Use Security Context Constraints (SCCs) and network policies for workload isolation.

4. Storage and Data Management

Configure dynamic storage provisioning with AWS EBS, EFS, or external CSI drivers.

Learn persistent volume (PV) and persistent volume claim (PVC) lifecycle management.
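The PV/PVC lifecycle is easier to follow as a small state model. The Python sketch below is a simplified, hypothetical model of static binding and of what a reclaim policy does when a claim is deleted. It ignores access modes, selectors, and dynamic provisioning through a StorageClass. The volume and storage class names are made up.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class PVPhase(Enum):
    AVAILABLE = "Available"  # free, not yet bound to a claim
    BOUND = "Bound"          # bound to a PersistentVolumeClaim
    RELEASED = "Released"    # claim deleted, volume not yet reclaimed


@dataclass
class PersistentVolume:
    name: str
    capacity_gi: int
    storage_class: str
    reclaim_policy: str = "Delete"
    phase: PVPhase = PVPhase.AVAILABLE


def bind_claim(request_gi: int, storage_class: str,
               volumes: list[PersistentVolume]) -> Optional[PersistentVolume]:
    """Bind a claim to the smallest Available volume that satisfies it.

    Returns None if nothing fits; the claim would then stay Pending
    (or, with dynamic provisioning, a new volume would be created).
    """
    candidates = [v for v in volumes
                  if v.phase is PVPhase.AVAILABLE
                  and v.storage_class == storage_class
                  and v.capacity_gi >= request_gi]
    if not candidates:
        return None
    chosen = min(candidates, key=lambda v: v.capacity_gi)
    chosen.phase = PVPhase.BOUND
    return chosen


def delete_claim(volume: PersistentVolume,
                 volumes: list[PersistentVolume]) -> None:
    """Model deleting the bound claim according to the reclaim policy."""
    if volume.reclaim_policy == "Retain":
        volume.phase = PVPhase.RELEASED  # data kept for manual cleanup
    elif volume.reclaim_policy == "Delete":
        volumes.remove(volume)           # PV (and its backing disk) removed
    else:
        raise ValueError(f"unsupported reclaim policy: {volume.reclaim_policy}")


if __name__ == "__main__":
    pool = [PersistentVolume("pv-a", 100, "gp3"),
            PersistentVolume("pv-b", 20, "gp3", reclaim_policy="Retain"),
            PersistentVolume("pv-c", 10, "efs")]
    pv = bind_claim(15, "gp3", pool)
    print(pv.name, pv.phase.value)
    delete_claim(pv, pool)
    print(pv.name, pv.phase.value)
```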

5. Cluster Monitoring and Logging

Integrate OpenShift Monitoring Stack for health and performance insights.

Forward logs to Amazon CloudWatch, Elasticsearch, or third-party SIEM tools.

6. Cluster Scaling and Updates

Set up autoscaling for compute nodes.

Perform controlled updates and understand ROSA’s maintenance policies.

Use Cases for ROSA in Production

Modernizing Monoliths to Microservices

CI/CD Platform for Agile Development

Data Science and ML Workflows with OpenShift AI

Edge Computing with OpenShift on AWS Outposts

Getting Started with CS220

The CS220 course is ideal for:

DevOps Engineers

Cloud Architects

Platform Engineers

Prerequisites: Basic knowledge of OpenShift administration (recommended: DO280 or equivalent experience) and a working AWS account.

Course Format: Instructor-led (virtual or on-site), hands-on labs, and guided projects.

Final Thoughts

As more enterprises adopt hybrid and multi-cloud strategies, ROSA emerges as a strategic choice for running OpenShift on AWS with minimal operational overhead. CS220 equips your team with the right skills to confidently deploy, configure, and manage production-grade ROSA clusters — unlocking agility, security, and innovation in your cloud-native journey.

Want to Learn More or Book the CS220 Course? At HawkStack Technologies, we offer certified Red Hat training, including CS220, tailored for teams and enterprises. Contact us today to schedule a session or explore our Red Hat Learning Subscription packages. www.hawkstack.com

0 notes

Text

Google Cloud Service Management

https://tinyurl.com/23rno64l

Google Cloud Services Management

Google Cloud Platform, offered by Google, is a suite of cloud computing services that run on the same infrastructure that Google uses internally for its end-user products, such as Google Search and YouTube. Alongside a set of management tools, it provides a series of modular cloud services, including computing, data storage, data analytics, and machine learning.

Unlock the Full Potential of Your Cloud Infrastructure with Google Cloud Services Management

As businesses transition to the cloud, managing Google Cloud services effectively becomes essential for achieving optimal performance, cost efficiency, and robust security. Google Cloud Platform (GCP) provides a comprehensive suite of cloud services, but without proper management, harnessing their full potential can be challenging. This is where specialized Google Cloud Services Management comes into play. In this guide, we’ll explore the key aspects of Google Cloud Services Management and highlight how 24×7 Server Support’s expertise can streamline your cloud operations.

What is Google Cloud Services Management?

Google Cloud Services Management involves the strategic oversight and optimization of resources and services within Google Cloud Platform (GCP). This includes tasks such as configuring resources, managing costs, ensuring security, and monitoring performance to maintain an efficient and secure cloud environment.

Key Aspects of Google Cloud Services Management

Resource Optimization

Project Organization: Structure your GCP projects to separate environments (development, staging, production) and manage resources effectively. This helps in applying appropriate access controls and organizing billing.

Resource Allocation: Efficiently allocate and manage resources like virtual machines, databases, and storage. Use tags and labels for better organization and cost tracking.

Cost Management

Budgeting and Forecasting: Set up budgets and alerts to monitor spending and avoid unexpected costs. Google Cloud’s cost management tools help you track expenses and forecast future costs.

Cost Optimization Strategies: Use GCP’s pricing calculator and recommendations to find cost-saving opportunities. Consider options like sustained use discounts or committed use contracts for predictable workloads.

Security and Compliance

Identity and Access Management (IAM): Configure IAM roles and permissions to ensure secure access to your resources. Regularly review and adjust permissions to adhere to the principle of least privilege.

Compliance Monitoring: Implement GCP’s security tools to maintain compliance with industry standards and regulations. Use audit logs to track resource access and modifications.

Performance Monitoring

Real-time Monitoring: Use Google Cloud’s monitoring tools to track the performance of your resources and applications. Set up alerts for performance issues and anomalies to ensure a timely response.

Optimization and Scaling: Regularly review performance metrics and adjust resources to meet changing demands. Use autoscaling features to adjust resources automatically based on traffic and load.

Get a quote: https://24x7serversupport.io/contact-us/

Specifications

Compute: From virtual machines with proven price/performance advantages to a fully managed app development platform. Compute Engine, App Engine, Kubernetes Engine, Container Registry, Cloud Functions.

Storage and Databases: Scalable, resilient, high-performance object storage and databases for your applications. Cloud Storage, Cloud SQL, Cloud Bigtable, Cloud Spanner, Cloud Datastore, Persistent Disk.

Networking: State-of-the-art software-defined networking products on Google’s private fiber network. Cloud Virtual Network, Cloud Load Balancing, Cloud CDN, Cloud Interconnect, Cloud DNS, Network Service Tiers.

Big Data: Fully managed data warehousing, batch and stream processing, data exploration, Hadoop/Spark, and reliable messaging. BigQuery, Cloud Dataflow, Cloud Dataproc, Cloud Datalab, Cloud Dataprep, Cloud Pub/Sub, Genomics.

Identity and Security: Control access and visibility to resources running on a platform protected by Google’s security model. Cloud IAM, Cloud Identity-Aware Proxy, Cloud Data Loss Prevention API, Security Key Enforcement, Cloud Key Management Service, Cloud Resource Manager, Cloud Security Scanner.

Data Transfer: Online and offline transfer solutions for moving data quickly and securely. Google Transfer Appliance, Cloud Storage Transfer Service, Google BigQuery Data Transfer.

API Platform & Ecosystems: A cross-cloud API platform enabling businesses to unlock the value of data, deliver modern applications, and power ecosystems. Apigee API Platform, API Monetization, Developer Portal, API Analytics, Apigee Sense, Cloud Endpoints.

Internet of Things: An intelligent IoT platform that unlocks business insights from your global device network. Cloud IoT Core.

Developer Tools: Monitoring, logging, diagnostics, and more, all in an easy-to-use web management console or mobile app. Stackdriver Overview, Monitoring, Logging, Error Reporting, Trace, Debugger, Cloud Deployment Manager, Cloud Console, Cloud Shell, Cloud Mobile App, Cloud Billing API.

Machine Learning: Fast, scalable, easy-to-use ML services. Use Google’s pre-trained models or train custom models on your data. Cloud Machine Learning Engine, Cloud Job Discovery, Cloud Natural Language, Cloud Speech API, Cloud Translation API, Cloud Vision API, Cloud Video Intelligence API.

Why Choose 24×7 Server Support for Google Cloud Services Management?

Effective Google Cloud Services Management requires expertise and continuous oversight. 24×7 Server Support specializes in comprehensive cloud management solutions that keep your GCP infrastructure running smoothly and efficiently. Here’s how their services stand out:

Expertise and Experience: With a team of certified Google Cloud experts, 24×7 Server Support brings extensive knowledge to managing and optimizing your cloud environment. Their experience ensures that your GCP services are configured and maintained according to best practices.

24/7 Support: As the name suggests, 24×7 Server Support offers round-the-clock assistance. Whether you need help with configuration, troubleshooting, or performance issues, their support team is available 24/7 to address your concerns.

Custom Solutions: Recognizing that every business has unique needs, 24×7 Server Support provides tailored management solutions. They work closely with you to understand your specific requirements and implement strategies that align with your business objectives.

Cost Efficiency: Their team helps optimize your cloud expenditure by leveraging Google Cloud’s cost management tools and providing insights into cost-saving opportunities. This ensures you get the best value for your investment.

Enhanced Security: 24×7 Server Support implements robust security measures to protect your data and comply with regulatory requirements. Their proactive approach to security and compliance helps safeguard your cloud infrastructure from potential threats.
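The budgeting and labeling advice above comes down to simple bookkeeping. Labels let you break spend down by environment or team, and budget alerts trigger when spend crosses a percentage of the budget. The Python sketch below shows that logic over a hypothetical list of billing line items. The field names and the 50/90/100% thresholds are assumptions for illustration, not the Cloud Billing export schema or a Google Cloud API.

```python
from collections import defaultdict


def cost_by_label(line_items: list[dict], label_key: str) -> dict[str, float]:
    """Sum costs per value of one label; unlabeled items are grouped together."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        value = item.get("labels", {}).get(label_key, "(unlabeled)")
        totals[value] += item["cost"]
    return {k: round(v, 2) for k, v in totals.items()}


def crossed_thresholds(spend: float, budget: float,
                       thresholds: tuple[float, ...] = (0.5, 0.9, 1.0)) -> list[float]:
    """Return the budget fractions (assumed alert points) that spend has reached."""
    return [t for t in thresholds if spend >= budget * t]


if __name__ == "__main__":
    # Hypothetical billing line items for one month.
    items = [
        {"service": "Compute Engine", "cost": 120.0, "labels": {"env": "production"}},
        {"service": "Cloud SQL", "cost": 80.0, "labels": {"env": "staging"}},
        {"service": "Cloud Storage", "cost": 30.0, "labels": {"env": "production"}},
        {"service": "BigQuery", "cost": 15.0, "labels": {}},
    ]
    per_env = cost_by_label(items, "env")
    print(per_env)
    print(crossed_thresholds(spend=per_env["production"], budget=200.0))
```

A large "(unlabeled)" bucket is itself a useful signal, because it shows which resources still need labels before costs can be attributed.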

How To Use Llama 3.1 405B FP16 LLM On Google Kubernetes

How to set up and use large open models for multi-host generative AI on GKE

Access to open models is more important than ever for developers as generative AI grows rapidly, driven by advances in large language models (LLMs). Open models are pre-trained foundational LLMs that are publicly available. Data scientists, machine learning engineers, and application developers already have easy access to open models through platforms like Hugging Face, Kaggle, and Google Cloud’s Vertex AI.

How to use Llama 3.1 405B

Google is announcing today the ability to deploy and run open models such as Llama 3.1 405B FP16 on GKE (Google Kubernetes Engine), since some of these models demand robust infrastructure and deployment capabilities. With 405 billion parameters, Llama 3.1, published by Meta, shows notable gains in general knowledge, reasoning, and coding ability. To store and compute 405 billion parameters at FP16 (16-bit floating point) precision, the model needs more than 750 GB of GPU memory for inference. The GKE approach discussed in this article makes deploying and serving such large models less difficult.
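The 750 GB figure follows from simple arithmetic. FP16 stores each parameter in 2 bytes, so the weights alone take about 810 GB (roughly 754 GiB), before any KV cache, activations, or runtime overhead. The short Python check below counts weights only and uses the nominal parameter count. For comparison, it also shows the FP8 variant mentioned at the end of the post.

```python
BYTES_PER_PARAM = {"fp16": 2, "bf16": 2, "fp8": 1}
GB = 10**9    # decimal gigabyte
GIB = 2**30   # binary gibibyte


def weight_bytes(num_params: int, dtype: str) -> int:
    """Memory for the model weights only (no KV cache, activations, or overhead)."""
    return num_params * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    params = 405_000_000_000  # nominal parameter count of Llama 3.1 405B
    for dtype in ("fp16", "fp8"):
        b = weight_bytes(params, dtype)
        print(f"{dtype}: {b / GB:.0f} GB = {b / GIB:.1f} GiB")
```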

Customer Experience

As a Google Cloud customer, you can find Llama 3.1 by selecting the Llama 3.1 model tile in Vertex AI Model Garden.

After clicking the deploy button, you can choose the Llama 3.1 405B FP16 model and select GKE.

This page provides the automatically generated Kubernetes YAML along with comprehensive deployment and serving instructions for Llama 3.1 405B FP16.

Multi-host deployment and serving

Llama 3.1 405B FP16 poses significant deployment and serving challenges because it demands over 750 GB of GPU memory. Total memory needs depend on several factors, including the memory used by the model weights, support for longer sequence lengths, and KV (key-value) cache storage. A3 virtual machines, currently the most powerful GPU option on Google Cloud, each have eight NVIDIA H100 GPUs with 80 GB of HBM (High-Bandwidth Memory) apiece, for a total of 640 GB per VM. Because that is less than the model requires, the only practical way to serve LLMs such as the FP16 Llama 3.1 405B model is to deploy them across several hosts. To deploy on GKE, Google uses LeaderWorkerSet with Ray and vLLM.

LeaderWorkerSet

LeaderWorkerSet (LWS) is a deployment API created specifically to meet the workload demands of multi-host inference. It makes it easier to shard the model and run it across many devices on many nodes. Built as a Kubernetes deployment API, LWS works with both GPUs and TPUs and is accelerator- and cloud-agnostic. LWS uses the upstream StatefulSet API as its core building block.

In the LWS architecture, a group of pods is managed as a single unit. Each pod in the group is given a unique index from 0 to n-1, and pod 0 is the group leader. All pods in the group are created simultaneously and share the same lifecycle. LWS simplifies rollouts and rolling upgrades at the group level: for rolling updates, scaling, and topology-based placement, each group is treated as a single unit.

Each group is upgraded as a single, cohesive entity, so every pod in the group is updated at the same time. Topology-aware placement is optional; when it is used, all pods in the same group can be co-located in the same topology. With optional all-or-nothing restart support, the group is also treated as a single entity when handling failures. When this option is enabled, all pods in the group are recreated if one pod fails or if one container in any of the pods restarts.
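To make the group semantics concrete, here is a toy Python model. It is not LWS code and does not talk to Kubernetes, and the class and field names are hypothetical. It shows only three things: index assignment, the leader role of pod 0, and the effect of the all-or-nothing restart option on which pods are recreated.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    index: int
    generation: int = 0  # incremented every time the pod is (re)created

    @property
    def role(self) -> str:
        return "leader" if self.index == 0 else "worker"


@dataclass
class Group:
    size: int
    all_or_nothing_restart: bool = True
    pods: list[Pod] = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        # All pods of a group are created together with indices 0..size-1.
        self.pods = [Pod(i) for i in range(self.size)]

    def handle_failure(self, failed_index: int) -> None:
        if self.all_or_nothing_restart:
            for pod in self.pods:      # recreate every pod in the group
                pod.generation += 1
        else:
            self.pods[failed_index].generation += 1  # only the failed pod


if __name__ == "__main__":
    group = Group(size=4)
    print([p.role for p in group.pods])
    group.handle_failure(2)
    print([p.generation for p in group.pods])
```

Recreating the whole group makes sense for sharded inference. Each pod holds only part of the model, so a single restarted worker would leave the surviving pods with a broken distributed runtime.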

In the LWS framework, a group consisting of a single leader and a set of workers is called a replica. LWS supports two templates: one for the leader and one for the workers. By exposing a scale endpoint for the Horizontal Pod Autoscaler (HPA), LWS makes it possible to scale the number of replicas dynamically.
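The Kubernetes documentation describes the HPA's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Scaling is skipped when the ratio is within a tolerance of 1.0, which is 0.1 by default. The Python sketch below applies that formula. With LWS, each "replica" is a whole leader-plus-workers group, so one scaling step adds or removes an entire multi-node group. The metric values and min/max bounds here are hypothetical, and the real controller also averages per-pod metrics and applies stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """HPA-style scaling rule (sketch).

    desired = ceil(current_replicas * current_metric / target_metric),
    left unchanged when the ratio is within `tolerance` of 1.0, then
    clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no scaling action
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical metric: pending requests per replica, target 60.
    print(desired_replicas(2, current_metric=90, target_metric=60))
    print(desired_replicas(4, current_metric=63, target_metric=60))
    print(desired_replicas(4, current_metric=20, target_metric=60))
```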

Multi-host deployment using vLLM and LWS

vLLM is a popular open source model server that supports multi-node, multi-GPU inference through pipeline and tensor parallelism. vLLM implements distributed tensor parallelism using Megatron-LM’s tensor parallel technique, and it uses Ray to manage the distributed runtime for multi-node pipeline parallelism.

Tensor parallelism splits the model horizontally across several GPUs, and the tensor parallel size is typically equal to the number of GPUs on each node. Note that this approach requires fast network connectivity between the GPUs.

Pipeline parallelism, by contrast, splits the model vertically by layer and does not require continuous communication between GPUs. The pipeline parallel size usually equals the number of nodes used for multi-host serving.

Serving the complete Llama 3.1 405B FP16 model requires combining both parallelism techniques. To meet the model’s 750 GB memory requirement, two A3 nodes with eight H100 GPUs each provide a combined 1,280 GB of GPU memory. Besides supporting long context lengths, this setup supplies the buffer memory needed for the key-value (KV) cache. For this LWS deployment, the pipeline parallel size is set to 2 and the tensor parallel size to 8.
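The sizing above can be reproduced in a few lines. The Python sketch below computes the minimum node count needed just to hold the weights. It then sets tensor parallelism to the GPUs per node and pipeline parallelism to the node count, estimates the per-GPU weight share under an even split, and assembles a `vllm serve` command line using vLLM's `--tensor-parallel-size` and `--pipeline-parallel-size` options. The model ID is a hypothetical placeholder. Running the command on the leader pod once the Ray cluster is up is an assumption about how the pieces fit together, not a copy of the generated GKE manifest.

```python
import math

GB = 10**9  # decimal gigabytes, matching the "80 GB per GPU" figure


def min_nodes_for_weights(model_bytes: int, gpus_per_node: int,
                          gpu_mem_bytes: int) -> int:
    """Lower bound on nodes needed just to hold the weights (no KV cache)."""
    return math.ceil(model_bytes / (gpus_per_node * gpu_mem_bytes))


def weights_per_gpu(model_bytes: int, tensor_parallel: int,
                    pipeline_parallel: int) -> int:
    """Approximate weight bytes per GPU, assuming an even split."""
    return model_bytes // (tensor_parallel * pipeline_parallel)


def vllm_serve_args(model_id: str, tensor_parallel: int,
                    pipeline_parallel: int) -> list[str]:
    """Command line for the vLLM server (sketch)."""
    return ["vllm", "serve", model_id,
            "--tensor-parallel-size", str(tensor_parallel),
            "--pipeline-parallel-size", str(pipeline_parallel)]


if __name__ == "__main__":
    model_bytes = 810 * GB                # 405B parameters x 2 bytes (FP16)
    gpus_per_node, gpu_mem = 8, 80 * GB   # A3 VM: 8 x H100 with 80 GB each

    nodes = min_nodes_for_weights(model_bytes, gpus_per_node, gpu_mem)
    tp, pp = gpus_per_node, nodes         # TP = GPUs per node, PP = nodes
    per_gpu = weights_per_gpu(model_bytes, tp, pp)
    print(f"nodes={nodes}, tp={tp}, pp={pp}")
    print(f"weights per GPU ~{per_gpu / GB:.1f} GB, "
          f"headroom ~{(gpu_mem - per_gpu) / GB:.1f} GB for KV cache and overhead")
    # Hypothetical model ID; substitute the one from your deployment.
    print(" ".join(vllm_serve_args("example-org/llama-3.1-405b", tp, pp)))
```

With two nodes, each GPU holds roughly 50.6 GB of weights and keeps about 29 GB free. That free memory is the buffer the post describes for the KV cache and long contexts.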

In brief

This post discussed how LWS provides the features needed for multi-host serving. The method maximizes price-to-performance and can also be used with lighter variants such as Llama 3.1 405B FP8, whose weights need half the memory of FP16, on more affordable hardware. LWS is open source and has a vibrant community; check out its GitHub repository to learn more and contribute directly.

You can visit Vertex AI Model Garden to deploy and serve open models through managed Vertex AI backends or do-it-yourself (DIY) GKE clusters, as Google Cloud helps customers adopt generative AI workloads. Multi-host deployment and serving is one example of how Google Cloud aims to provide a seamless customer experience.

Read more on Govindhtech.com

Docker Certification Explained: Benefits and Insights

In the realm of software development and DevOps, Docker has become a household name. As organizations increasingly adopt containerization, the demand for skilled professionals proficient in Docker continues to rise. One way to validate your expertise is through Docker certification. This blog will explain what Docker certification is, its benefits, and provide insights into how it can enhance your career.

Advancing your career through a Docker Certification Course calls for a systematic approach: enroll in a suitable course that matches your preferences and will meaningfully expand your learning journey.

What is Docker Certification?

Docker certification is an official credential that verifies your skills and knowledge in using Docker technology. The primary certification offered is the Docker Certified Associate (DCA), which assesses your understanding of essential Docker concepts, tools, and best practices.

Key Areas Covered in the Certification

The DCA exam evaluates your proficiency in several critical areas, including:

Docker Basics: Understanding containers, images, and the Docker architecture.

Networking: Setting up and managing networks for containerized applications.

Security: Implementing security best practices to protect your applications and data.

Orchestration: Using Docker Swarm and Kubernetes for effective container orchestration.

Storage Management: Managing data volumes and persistent storage effectively.

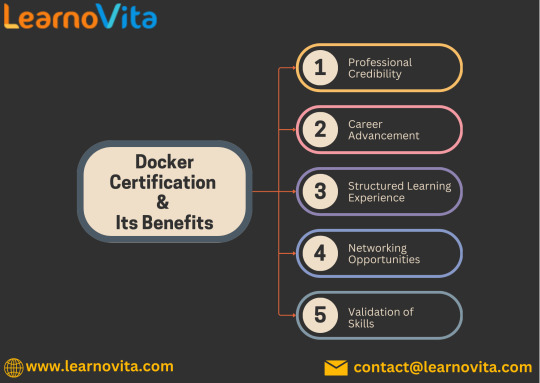

Benefits of Docker Certification

1. Enhanced Professional Credibility

Holding a Docker certification boosts your credibility in the eyes of potential employers. It serves as proof of your expertise and commitment to mastering Docker technology, distinguishing you from non-certified candidates.

2. Career Advancement Opportunities

With the growing adoption of containerization, the demand for Docker-certified professionals is increasing. A Docker certification can open doors to new job opportunities, promotions, and potentially higher salaries. Many organizations actively seek certified individuals for key roles in DevOps and software development.

3. Structured Learning Pathway

Preparing for Docker certification provides a structured approach to learning. The comprehensive curriculum ensures you cover essential topics and gain a solid understanding of Docker, best practices, and advanced techniques, equipping you for real-world challenges.

It is simpler to master this course and advance your career with the help of Best Online Training & Placement programs, which provide thorough instruction and job placement support to anyone seeking to improve their skills.

4. Networking Benefits

Becoming certified can connect you to a community of professionals who are also certified. Engaging with this network can provide valuable opportunities for knowledge sharing, mentorship, and job referrals, enriching your career journey.

5. Validation of Skills

For those transitioning into DevOps roles or looking to solidify their Docker expertise, certification serves as tangible proof of your skills. It demonstrates your commitment to professional growth and your capability to work with modern technologies.

Insights for Success

1. Preparation is Key

To succeed in the Docker certification exam, invest time in studying the relevant topics. Utilize official resources, online courses, and practice exams to reinforce your knowledge.

2. Hands-On Experience

Practical experience is invaluable. Engage in real-world projects or personal experiments with Docker to solidify your understanding and application of the concepts.

3. Stay Updated

The tech landscape is constantly evolving. Stay updated with the latest Docker features, tools, and best practices to ensure your knowledge remains relevant.

Conclusion

Docker certification is a powerful asset for anyone looking to advance their career in software development or DevOps. It enhances your credibility, opens up new opportunities, and provides a structured learning experience. If you’re serious about your professional growth, pursuing Docker certification is a worthwhile endeavor. Embrace the journey, and unlock the doors to your future success.


Docker Migration Services: A Seamless Shift to Containerization

In today’s fast-paced tech world, businesses are continuously looking for ways to boost performance, scalability, and flexibility. One powerful way to achieve this is through Docker migration. Docker helps you containerize applications, making them easier to deploy, manage, and scale. But moving existing apps to Docker can be challenging without the right expertise.

Let’s explore what Docker migration services are, why they matter, and how they can help transform your infrastructure.

What Is Docker Migration?

Docker migration is the process of moving existing applications from traditional environments (like virtual machines or bare-metal servers) to Docker containers. This involves re-architecting applications to work within containers, ensuring compatibility, and streamlining deployments.

Why Migrate to Docker?

Here’s why businesses are choosing Docker migration services:

1. Improved Efficiency

Docker containers are lightweight and use system resources more efficiently than virtual machines.

2. Faster Deployment

Containers can be spun up in seconds, helping your team move faster from development to production.

3. Portability

Docker containers run the same way across different environments – dev, test, and production – minimizing issues.

4. Better Scalability

Easily scale up or down based on demand using container orchestration tools like Kubernetes or Docker Swarm.

5. Cost-Effective

Reduced infrastructure and maintenance costs make Docker a smart choice for businesses of all sizes.
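Point 4 says containers can be scaled "based on demand". Kubernetes' Horizontal Pod Autoscaler, for example, computes a target replica count from the ratio of an observed metric to its target: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). It skips scaling when that ratio is within a tolerance, which is 0.1 by default. The Python sketch below reproduces only that arithmetic. The function name and the min/max bounds are illustrative assumptions. The real controller also applies stabilization windows and pod-readiness handling, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Hypothetical helper mirroring the HPA replica formula (simplified)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(1.0 - ratio) <= tolerance:
        # Close enough to the target: keep the current size to avoid flapping.
        desired = current_replicas
    else:
        # Scale proportionally to load, rounding up so capacity is never short.
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured lower and upper bounds.
    return max(min_replicas, min(max_replicas, desired))
```

In this sketch, `desired_replicas(4, 90, 60)` describes 4 pods averaging 90% CPU against a 60% target and returns 6. `desired_replicas(4, 20, 60)` scales down to 2.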

What Do Docker Migration Services Include?

Professional Docker migration services guide you through every step of the migration journey. Here's what’s typically included:

- Assessment & Planning

Analyzing your current environment to identify what can be containerized and how.

- Application Refactoring

Modifying apps to work efficiently within containers without breaking functionality.

- Containerization

Creating Docker images and defining services using Dockerfiles and docker-compose.

- Testing & Validation

Ensuring that the containerized apps function as expected across environments.

- CI/CD Integration

Setting up pipelines to automate testing, building, and deploying containers.

- Training & Support

Helping your team get up to speed with Docker concepts and tools.
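The Testing & Validation step often begins with a simple smoke test: start the container, then confirm it answers over HTTP before running deeper checks. The Python sketch below uses only the standard library. It polls a URL until it returns HTTP 200 or a timeout expires. The function name, the URL, and the assumption that the migrated app exposes an HTTP endpoint at all are illustrative, not part of any particular migration service.

```python
import time
import urllib.request


def wait_until_healthy(url: str, timeout_s: float = 30.0, interval_s: float = 1.0) -> bool:
    """Hypothetical helper: poll `url` until it returns HTTP 200 or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            # urlopen raises HTTPError (a subclass of URLError, itself an OSError) on 4xx/5xx.
            with urllib.request.urlopen(url, timeout=interval_s) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # Connection refused or reset, timed out, or an error status: retry.
            pass
        time.sleep(interval_s)
    return False
```

Suppose you start a container with `docker run -p 8080:8080 my-app`, where the image name and port are hypothetical. A call such as `wait_until_healthy("http://localhost:8080/health")` would then return True once the app inside the container is serving requests.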

Challenges You Might Face

While Docker migration has many benefits, it also comes with some challenges:

Compatibility issues with legacy applications

Security misconfigurations

Learning curve for teams new to containers

Need for monitoring and orchestration setup

This is why having experienced Docker professionals onboard is critical.

Who Needs Docker Migration Services?

Docker migration is ideal for:

Businesses with legacy applications seeking modernization

Startups looking for scalable and portable solutions

DevOps teams aiming to streamline deployments

Enterprises moving towards a microservices architecture

Final Thoughts

Docker migration isn’t just a trend—it’s a smart move for businesses that want agility, reliability, and speed in their development and deployment processes. With expert Docker migration services, you can transition smoothly, minimize downtime, and unlock the full potential of containerization.


**The Future of Cloud Services: How New York Companies Can Leverage Microsoft and Google Technologies**

Introduction

In today's rapidly evolving digital landscape, cloud services have changed the way organizations operate. For companies in New York in particular, platforms like Microsoft Azure and Google Cloud can improve operational efficiency, foster innovation, and provide robust security features. This article looks at the future of cloud services and offers insights on how New York companies can harness the power of Microsoft and Google technologies to stay competitive in their respective industries.

The Future of Cloud Services: How New York Companies Can Leverage Microsoft and Google Technologies

The future of cloud services is not just about storage; it's about building a flexible environment that supports innovative solutions across sectors. For New York organizations, adopting technologies from giants like Microsoft and Google can lead to greater agility, stronger data management capabilities, and enhanced security protocols. As companies increasingly shift toward digital strategies, understanding these technologies becomes essential for sustained growth.


Understanding Cloud Services

What Are Cloud Services?

Cloud services (https://ameblo.jp/waylongwta678/entry-12895928598.html) refer to a range of computing resources delivered over the internet (the "cloud"). These include:

Infrastructure as a Service (IaaS): Virtualized computing resources over the internet.

Platform as a Service (PaaS): Platforms that let developers build applications without managing the underlying infrastructure.

Software as a Service (SaaS): Software delivered over the internet, removing the need for local installation.

Key Benefits of Cloud Services

Cost Efficiency: Reduces capital expenditure on hardware.

Scalability: Easily scales resources based on demand.

Accessibility: Access data and applications from anywhere.

Security: Advanced security features protect sensitive data.

Microsoft's Role in Cloud Computing

Overview of Microsoft Azure

Microsoft Azure is one of the best-known cloud service providers, offering a range of services such as virtual machines, databases, analytics, and AI capabilities.

Core Features of Microsoft Azure

Virtual Machines: Create scalable VMs with a variety of operating systems.

Azure SQL Database: A managed database service for application development.

AI & Machine Learning: Integrate AI capabilities seamlessly into applications.

Google's Impact on Cloud Technologies

Introduction to Google Cloud Platform (GCP)

Google's cloud offering emphasizes high-performance computing and machine learning capabilities tailored for organizations seeking innovative solutions.

Distinct Features of GCP

BigQuery: An efficient analytics tool for very large datasets.

Cloud Functions: An event-driven serverless compute platform.

Kubernetes Engine: Man


Why Full-Stack Web Development Skills Are in High Demand in 2025

In today’s fast-paced digital world, the way we build, access, and interact with technology is evolving rapidly. Companies are racing to create seamless, scalable, and efficient digital solutions—and at the heart of this movement lies full-stack web development. If you've been exploring career options in tech or wondering what skills will truly pay off in 2025, the answer is clear: full-stack web development is one of the hottest and most valuable skill sets on the market.

But why exactly are full-stack web development skills in high demand in 2025? Let’s break it down in a way that’s easy to understand and relevant, whether you're a student, a working professional looking to upskill, or a business owner trying to stay ahead of the curve.

The Versatility of Full-Stack Developers

Full-stack web developers are often called the "Swiss Army knives" of the tech industry. Why? Because they can work across the entire web development process—from designing user-friendly interfaces (front end) to managing databases and servers (back end).

This versatility is extremely valuable for several reasons:

Cost-efficiency: Companies can hire one skilled developer instead of multiple specialists.

Faster development cycles: Full-stack developers can manage the entire pipeline, resulting in quicker launches.

Better project understanding: With a view of the whole system, these developers can troubleshoot and optimise more effectively.

Strong collaboration: Their ability to bridge front-end and back-end teams enhances communication and project execution.

In 2025, as companies look for more agility and speed, hiring full-stack developers has become a strategic move.

Startups and SMEs Are Driving the Demand

One of the biggest contributors to this rising demand is the startup ecosystem. Small and medium enterprises (SMEs), especially in tech-driven sectors, rely heavily on lean teams and flexible development strategies.

For them, hiring a full-stack developer means:

Getting more done with fewer people

Accelerating time-to-market for their products

Reducing the dependency on multiple external vendors

Even large corporations are adopting this mindset, often building smaller agile squads in which each member is cross-functional. In such setups, full-stack web development skills are a goldmine.

Evolution of Tech Stacks

As technology evolves, so do the tools and frameworks used to build digital applications. In 2025, popular stacks like MERN (MongoDB, Express, React, Node.js), MEAN (MongoDB, Express, Angular, Node.js), and even serverless architecture are becoming industry standards.

Being proficient in full-stack web development means you’re equipped to work with:

Front-end technologies (React, Angular, Vue)

Back-end frameworks (Node.js, Django, Ruby on Rails)

Databases (MySQL, MongoDB, PostgreSQL)

Deployment and DevOps tools (Docker, Kubernetes, AWS)

This knowledge gives developers the ability to build complete, production-ready applications from scratch, which is a massive competitive advantage.

Remote Work and Freelancing Boom

The rise of remote work has opened up global opportunities for developers. Employers no longer prioritise geography—they want skills. And full-stack developers, being multi-skilled and independent, are ideal candidates for remote positions and freelance contracts.

Whether you want to work for a U.S.-based tech startup from your home in India or build your own freelance web development business, having full-stack skills is your passport.

Key benefits include:

Higher freelance rates

Flexible work schedules

Access to global job markets

The ability to manage solo projects end-to-end

AI & Automation: Full-Stack Developers Are Adapting

You might wonder—won’t AI and automation replace developers? The truth is, full-stack developers are adapting to this wave by learning how to integrate AI tools and automation into their projects. They're not being replaced; they're evolving.

Skills in full-stack development now often include:

API integration with AI models

Building intelligent web apps

Automating development processes with CI/CD tools

This adaptability makes full-stack developers even more indispensable in 2025.

Conclusion: The Future Is Full-Stack

The digital transformation era isn't slowing down. Businesses of all sizes need agile, skilled professionals who can wear multiple hats. That’s why full-stack web development skills are not just a nice-to-have—they’re a must-have in 2025.

To summarise, here’s why full-stack web development is in high demand:

It offers cost-effectiveness and faster delivery for companies.

Startups and SMEs rely on full-stack developers to scale quickly.

It covers all essential layers of modern tech stacks.

Freelancers and remote workers benefit immensely from this skill.

Developers can stay relevant by integrating AI and automation.

If you’re considering a career in tech or wondering how to future-proof your skill set, learning full-stack web development could be the smartest move you make this year.
