#how to tag existing docker image


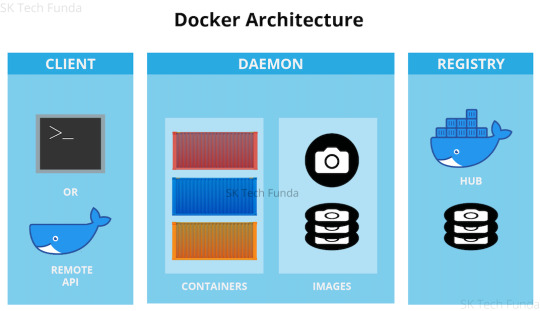

Docker Tag and Push Image to Hub | Docker Tagging Explained and Best Practices

Full video link: https://youtu.be/X-uuxvi10Cw

A new video on Docker image tagging is published on the @codeonedigest YouTube channel. It covers how to tag a Docker image and the different ways to do it.

The next step after building a Docker image is to tag it. Tagging is required before you can upload the image to a registry such as Docker Hub, Azure Container Registry, or Amazon Elastic Container Registry. There are different ways to tag a Docker image. Learn how to tag a Docker image. What are the best practices for Docker image tagging? How do you tag a Docker container image? How to tag and push docker…
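As a minimal sketch of tagging an image that already exists locally: `docker tag SOURCE_IMAGE TARGET_IMAGE` adds a second name to the same image, and `docker push` then uploads it under that name. The helper `image_ref`, the source image `my-app:latest`, and the Docker Hub namespace `myuser` are assumptions for illustration. The commands are printed rather than executed so the snippet runs without a Docker daemon.

```sh
#!/bin/sh
# image_ref is a hypothetical helper (not a Docker command) that builds
# a reference of the form [REGISTRY/]REPOSITORY[:TAG].
image_ref() {
    registry="$1"
    repository="$2"
    tag="${3:-latest}"   # Docker also falls back to "latest" when no tag is given
    if [ -n "$registry" ]; then
        printf '%s/%s:%s\n' "$registry" "$repository" "$tag"
    else
        printf '%s:%s\n' "$repository" "$tag"
    fi
}

source_image="my-app:latest"                            # existing local image (assumed)
target_image="$(image_ref "" "myuser/my-app" "1.0.0")"  # Docker Hub namespace (assumed)

# Print the commands instead of running them.
echo "docker tag $source_image $target_image"
echo "docker push $target_image"
```

Remove the `echo` wrappers to run the commands for real. An empty registry argument targets Docker Hub, and any other registry host goes in the first argument.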


Deploying Containers on AWS ECS with Fargate

Introduction

Amazon Elastic Container Service (ECS) with AWS Fargate enables developers to deploy and manage containers without managing the underlying infrastructure. Fargate eliminates the need to provision or scale EC2 instances, providing a serverless approach to containerized applications.

This guide walks through deploying a containerized application on AWS ECS with Fargate using AWS CLI, Terraform, or the AWS Management Console.

1. Understanding AWS ECS and Fargate

✅ What is AWS ECS?

Amazon ECS (Elastic Container Service) is a fully managed container orchestration service that allows running Docker containers on AWS.

✅ What is AWS Fargate?

AWS Fargate is a serverless compute engine for ECS that removes the need to manage EC2 instances, providing:

Automatic scaling

Per-second billing

Enhanced security (isolation at the task level)

Reduced operational overhead

✅ Why Choose ECS with Fargate?

✔ No need to manage EC2 instances

✔ Pay only for the resources your containers consume

✔ Simplified networking and security

✔ Seamless integration with AWS services (CloudWatch, IAM, ALB)

2. Prerequisites

Before deploying, ensure you have:

AWS Account with permissions for ECS, Fargate, IAM, and VPC

AWS CLI installed and configured

Docker installed to build container images

An existing ECR (Elastic Container Registry) repository

3. Steps to Deploy Containers on AWS ECS with Fargate

Step 1: Create a Dockerized Application

First, create a simple Dockerfile for a Node.js or Python application.

Example: Node.js Dockerfile

```dockerfile
FROM node:16-alpine
WORKDIR /app
COPY package.json .
RUN npm install
COPY . .
EXPOSE 3000
CMD ["node", "server.js"]
```

Build and push the image to AWS ECR:

```sh
# Create the repository (skip this if it already exists)
aws ecr create-repository --repository-name my-app

# Build the image and tag it with the full ECR repository URI
docker build -t my-app .
docker tag my-app:latest <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/my-app:latest

# Authenticate Docker against ECR, then push
aws ecr get-login-password --region <REGION> | docker login --username AWS --password-stdin <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com
docker push <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/my-app:latest
```
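Because the registry host repeats in several of these commands, it can help to build it once from variables. The sketch below assumes a placeholder account ID and Region, and `ecr_registry` is a hypothetical helper name. It prints the tag and push commands rather than running them.

```sh
#!/bin/sh
AWS_ACCOUNT_ID="123456789012"   # placeholder account ID (assumption)
AWS_REGION="us-east-1"          # placeholder Region (assumption)

# Hypothetical helper: build the ECR registry host used in the commands above.
ecr_registry() {
    printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

REGISTRY="$(ecr_registry "$AWS_ACCOUNT_ID" "$AWS_REGION")"
IMAGE="$REGISTRY/my-app:latest"

# Print the commands instead of running them.
echo "docker tag my-app:latest $IMAGE"
echo "docker push $IMAGE"
```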

Step 2: Create an ECS Cluster

Use the AWS CLI to create a cluster:

```sh
aws ecs create-cluster --cluster-name my-cluster
```

Or use Terraform:

```hcl
resource "aws_ecs_cluster" "my_cluster" {
  name = "my-cluster"
}
```

Step 3: Define a Task Definition for Fargate

The task definition specifies how the container runs.

Create a task-definition.json file:

```json
{
  "family": "my-task",
  "networkMode": "awsvpc",
  "executionRoleArn": "arn:aws:iam::<AWS_ACCOUNT_ID>:role/ecsTaskExecutionRole",
  "cpu": "512",
  "memory": "1024",
  "requiresCompatibilities": ["FARGATE"],
  "containerDefinitions": [
    {
      "name": "my-container",
      "image": "<AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/my-app:latest",
      "portMappings": [{"containerPort": 3000, "hostPort": 3000}],
      "essential": true
    }
  ]
}
```

Register the task definition:

```sh
aws ecs register-task-definition --cli-input-json file://task-definition.json
```
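Fargate accepts only certain pairings of the task-level cpu and memory values, so a quick check before registering can save a failed call. The sketch below encodes the pairings for up to 4 vCPU as I understand them from the AWS documentation. Treat the table as an assumption to verify against the current docs; larger sizes are left out. `fargate_size_ok` is a hypothetical helper name.

```sh
#!/bin/sh
# Return 0 when cpu (CPU units) and mem (MiB) form one of the pairings
# assumed below, non-zero otherwise.
fargate_size_ok() {
    cpu="$1"
    mem="$2"
    case "$cpu" in
        256)
            # 0.25 vCPU: only three fixed memory sizes
            case "$mem" in
                512|1024|2048) return 0 ;;
                *) return 1 ;;
            esac
            ;;
        512)  min=1024; max=4096 ;;    # 0.5 vCPU
        1024) min=2048; max=8192 ;;    # 1 vCPU
        2048) min=4096; max=16384 ;;   # 2 vCPU
        4096) min=8192; max=30720 ;;   # 4 vCPU
        *) return 1 ;;
    esac
    # Within a range, memory is assumed to move in 1024 MiB steps.
    [ "$mem" -ge "$min" ] && [ "$mem" -le "$max" ] && [ $((mem % 1024)) -eq 0 ]
}

# The values from the task definition above: cpu 512, memory 1024.
if fargate_size_ok 512 1024; then
    echo "cpu=512 memory=1024: supported pairing"
else
    echo "cpu=512 memory=1024: not a supported pairing"
fi
```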

Step 4: Create an ECS Service

Use AWS CLI:

```sh
aws ecs create-service \
  --cluster my-cluster \
  --service-name my-service \
  --task-definition my-task \
  --desired-count 1 \
  --launch-type FARGATE \
  --network-configuration "awsvpcConfiguration={subnets=[subnet-xyz],securityGroups=[sg-xyz],assignPublicIp=\"ENABLED\"}"
```

Or Terraform:

```hcl
resource "aws_ecs_service" "my_service" {
  name            = "my-service"
  cluster         = aws_ecs_cluster.my_cluster.id
  # aws_ecs_task_definition.my_task must be defined elsewhere in the configuration
  task_definition = aws_ecs_task_definition.my_task.arn
  desired_count   = 1
  launch_type     = "FARGATE"

  network_configuration {
    subnets          = ["subnet-xyz"]
    security_groups  = ["sg-xyz"]
    assign_public_ip = true
  }
}
```

Step 5: Configure a Load Balancer (Optional)

If the service must accept inbound traffic from the internet, put an Application Load Balancer (ALB) in front of it.

Create an ALB in your VPC.

Register the ECS service with a target group.

Configure a listener rule for routing traffic.

4. Monitoring & Scaling

🔹 Monitor ECS Service

Use Amazon CloudWatch to monitor logs and performance. The following command lists the CloudWatch Logs log groups in the current Region:

```sh
aws logs describe-log-groups
```

🔹 Auto Scaling ECS Tasks

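Register the service as a scalable target. This sets the minimum and maximum task count; scaling policies are then attached to the target with put-scaling-policy:

```sh
aws application-autoscaling register-scalable-target \
  --service-namespace ecs \
  --scalable-dimension ecs:service:DesiredCount \
  --resource-id service/my-cluster/my-service \
  --min-capacity 1 \
  --max-capacity 5
```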
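A scalable target does nothing until a policy is attached to it. As a sketch, the snippet below writes a target-tracking configuration that aims for 70% average CPU and prints the matching `put-scaling-policy` command without running it. The policy name, target value, cooldowns, and file location are illustrative assumptions.

```sh
#!/bin/sh
# Write the target-tracking configuration to a temporary file.
policy_file="${TMPDIR:-/tmp}/cpu-target-tracking.json"

cat > "$policy_file" <<'EOF'
{
  "TargetValue": 70.0,
  "PredefinedMetricSpecification": {
    "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
  },
  "ScaleOutCooldown": 60,
  "ScaleInCooldown": 120
}
EOF

# Print the command instead of running it, so no AWS credentials are needed here.
printf '%s\n' \
  "aws application-autoscaling put-scaling-policy \\" \
  "  --policy-name cpu-target-tracking \\" \
  "  --service-namespace ecs \\" \
  "  --scalable-dimension ecs:service:DesiredCount \\" \
  "  --resource-id service/my-cluster/my-service \\" \
  "  --policy-type TargetTrackingScaling \\" \
  "  --target-tracking-scaling-policy-configuration file://$policy_file"
```

With target tracking, Application Auto Scaling adds or removes tasks to keep the metric near the target, within the minimum and maximum registered on the scalable target.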

5. Cleaning Up Resources

After testing, clean up resources to avoid unnecessary charges.

```sh
aws ecs delete-service --cluster my-cluster --service my-service --force
aws ecs delete-cluster --cluster my-cluster
aws ecr delete-repository --repository-name my-app --force
```

Conclusion

AWS ECS with Fargate simplifies container deployment by eliminating the need to manage servers. By following this guide, you can deploy scalable, cost-efficient, and secure applications using serverless containers.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/


Docker and Kubernetes Training | Hyderabad

How to Store Images in Container Registries?

Introduction:

Container registries serve as central repositories for storing and managing container images, facilitating seamless deployment across various environments. However, optimizing image storage within these registries requires careful consideration of factors such as scalability, security, and performance.

Choose the Right Registry:

Selecting the appropriate container registry is the first step towards efficient image storage. Popular options include Docker Hub, Google Container Registry (GCR), Amazon Elastic Container Registry (ECR), and Azure Container Registry (ACR). Evaluate factors such as integration with existing infrastructure, pricing, security features, and geographical distribution to make an informed decision.

Image Tagging Strategy:

Implement a robust tagging strategy to organize and manage container images effectively. Use semantic versioning or timestamp-based tagging to denote image versions and updates clearly. Avoid using generic tags like "latest," as they can lead to ambiguity and inconsistent deployments.
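As an illustration of both tagging schemes, the sketch below derives the usual set of tags from one semantic version (full version, major.minor, and major) and generates a UTC timestamp tag. The helper names, the local image `my-app:build`, and the registry host `registry.example.com` are assumptions. The `docker tag` commands are printed, not run.

```sh
#!/bin/sh
# Print the tags commonly derived from one semantic version,
# e.g. 1.4.2 gives 1.4.2, 1.4 and 1 (one per line).
semver_tags() {
    version="$1"
    major="${version%%.*}"        # text before the first dot
    minor_patch="${version#*.}"   # text after the first dot
    minor="${minor_patch%%.*}"    # text between the first and second dot
    printf '%s\n' "$version" "$major.$minor" "$major"
}

# Print a sortable UTC timestamp tag such as 20240131-235959.
timestamp_tag() {
    date -u +%Y%m%d-%H%M%S
}

# Show the tag commands for one release.
for tag in $(semver_tags 1.4.2); do
    echo "docker tag my-app:build registry.example.com/my-app:$tag"
done
echo "docker tag my-app:build registry.example.com/my-app:$(timestamp_tag)"
```

The full version tag stays fixed once published, while the shorter tags move forward with each compatible release, so consumers can choose how closely to pin.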

Optimize Image Size:

Minimize image size by adhering to best practices such as using lightweight base images, leveraging multi-stage builds, and optimizing Dockerfiles. Removing unnecessary dependencies and layers helps reduce storage requirements and accelerates image pull times during deployment.

Security Considerations:

Prioritize security by implementing access controls, image signing, and vulnerability scanning within the container registry. Restrict image access based on user roles and permissions to prevent unauthorized usage. Regularly scan images for vulnerabilities and apply patches promptly to mitigate potential risks.

Automated Builds and CI/CD Integration:

Integrate container registries with continuous integration/continuous deployment (CI/CD) pipelines to automate image builds, testing, and deployment processes. Leverage tools like Jenkins, GitLab CI/CD, or GitHub Actions to streamline workflows and ensure consistent image updates across environments.

Content Trust and Image Signing:

Enable content trust mechanisms such as Docker Content Trust or Notary to ensure image authenticity and integrity. By digitally signing images and verifying signatures during pull operations, organizations can mitigate the risk of tampering and unauthorized modifications.
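Docker Content Trust is switched on through the `DOCKER_CONTENT_TRUST` environment variable. The sketch below enables it for a single command instead of the whole shell session. `with_content_trust` is a hypothetical wrapper name, and the demo only echoes the variable so it runs without Docker.

```sh
#!/bin/sh
# Hypothetical wrapper: run one command with Docker Content Trust enabled.
with_content_trust() {
    DOCKER_CONTENT_TRUST=1 "$@"
}

# Demo without Docker: show that the wrapped command sees the variable.
with_content_trust sh -c 'echo "DOCKER_CONTENT_TRUST=$DOCKER_CONTENT_TRUST"'

# Typical use, assuming a signed image exists at this hypothetical reference:
#   with_content_trust docker pull registry.example.com/my-app:1.4.2
```

With the variable set to 1, the Docker CLI refuses to pull image tags that have no valid signature.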

Data Backup and Disaster Recovery:

Implement robust backup and disaster recovery strategies to safeguard critical container images against data loss or corruption. Regularly back up registry data to redundant storage locations and establish procedures for swift restoration in the event of failures or disasters.

Performance Optimization:

Optimize registry performance by leveraging caching mechanisms, content delivery networks (CDNs), and geo-replication to reduce latency and improve image retrieval speeds. Distribute registry instances across multiple geographical regions to enhance availability and resilience.

Conclusion:

By following best practices such as selecting the right registry, optimizing image size, enforcing security measures, and integrating with CI/CD pipelines, organizations can streamline image management and make their containerized deployments more reliable.


Visit : https://www.visualpath.in/DevOps-docker-kubernetes-training.html

Blog : https://dockerandkubernetesonlinetraining.blogspot.com/


How to set up command-line access to Amazon Keyspaces (for Apache Cassandra) by using the new developer toolkit Docker image

Amazon Keyspaces (for Apache Cassandra) is a scalable, highly available, and fully managed Cassandra-compatible database service. Amazon Keyspaces helps you run your Cassandra workloads more easily by using a serverless database that can scale up and down automatically in response to your actual application traffic. Because Amazon Keyspaces is serverless, there are no clusters or nodes to provision and manage. You can get started with Amazon Keyspaces with a few clicks in the console or a few changes to your existing Cassandra driver configuration.

In this post, I show you how to set up command-line access to Amazon Keyspaces by using the keyspaces-toolkit Docker image. The keyspaces-toolkit Docker image contains commonly used Cassandra developer tooling. The toolkit comes with the Cassandra Query Language Shell (cqlsh) and is configured with best practices for Amazon Keyspaces. The container image is open source and also compatible with Apache Cassandra 3.x clusters.

A command line interface (CLI) such as cqlsh can be useful when automating database activities. You can use cqlsh to run one-time queries and perform administrative tasks, such as modifying schemas or bulk-loading flat files. You also can use cqlsh to enable Amazon Keyspaces features, such as point-in-time recovery (PITR) backups, and to assign resource tags to keyspaces and tables.

The following screenshot shows a cqlsh session connected to Amazon Keyspaces and the code to run a CQL create table statement.

Build a Docker image

To get started, download and build the Docker image so that you can run the keyspaces-toolkit in a container. A Docker image is the template for the complete and executable version of an application. It’s a way to package applications and preconfigured tools with all their dependencies. To build and run the image for this post, install the latest Docker engine and Git on the host or local environment. The following command builds the image from the source.
```sh
docker build --tag amazon/keyspaces-toolkit --build-arg CLI_VERSION=latest https://github.com/aws-samples/amazon-keyspaces-toolkit.git
```

The preceding command includes the following parameters:

--tag – The name of the image in the name:tag format. Leaving out the tag results in latest.

--build-arg CLI_VERSION – This allows you to specify the version of the base container. Docker images are composed of layers. If you’re using the AWS CLI Docker image, aligning versions significantly reduces the size and build times of the keyspaces-toolkit image.

Connect to Amazon Keyspaces

Now that you have a container image built and available in your local repository, you can use it to connect to Amazon Keyspaces. To use cqlsh with Amazon Keyspaces, create service-specific credentials for an existing AWS Identity and Access Management (IAM) user. The service-specific credentials enable IAM users to access Amazon Keyspaces, but not access other AWS services.

The following command starts a new container running the cqlsh process.

```sh
docker run --rm -ti amazon/keyspaces-toolkit cassandra.us-east-1.amazonaws.com 9142 --ssl -u "SERVICEUSERNAME" -p "SERVICEPASSWORD"
```

The preceding command includes the following parameters:

run – The Docker command to start the container from an image. It’s the equivalent of running create and start.

--rm – Automatically removes the container when it exits and creates a container per session or run.

-ti – Allocates a pseudo TTY (t) and keeps STDIN open (i) even if not attached (remove i when user input is not required).

amazon/keyspaces-toolkit – The image name of the keyspaces-toolkit.

cassandra.us-east-1.amazonaws.com – The Amazon Keyspaces endpoint.

9142 – The default SSL port for Amazon Keyspaces.

After connecting to Amazon Keyspaces, exit the cqlsh session and terminate the process by using the QUIT or EXIT command.

Drop-in replacement

Now, simplify the setup by assigning an alias (or DOSKEY for Windows) to the Docker command.
The alias acts as a shortcut, enabling you to use the alias keyword instead of typing the entire command. You will use cqlsh as the alias keyword so that you can use the alias as a drop-in replacement for your existing Cassandra scripts. The alias contains the parameter -v "$(pwd)":/source, which mounts the current directory of the host. This is useful for importing and exporting data with COPY or using the cqlsh --file command to load external cqlsh scripts.

```sh
alias cqlsh='docker run --rm -ti -v "$(pwd)":/source amazon/keyspaces-toolkit cassandra.us-east-1.amazonaws.com 9142 --ssl'
```

For security reasons, don’t store the user name and password in the alias. After setting up the alias, you can create a new cqlsh session with Amazon Keyspaces by calling the alias and passing in the service-specific credentials.

```sh
cqlsh -u "SERVICEUSERNAME" -p "SERVICEPASSWORD"
```

Later in this post, I show how to use AWS Secrets Manager to avoid using plaintext credentials with cqlsh. You can use Secrets Manager to store, manage, and retrieve secrets.

Create a keyspace

Now that you have the container and alias set up, you can use the keyspaces-toolkit to create a keyspace by using cqlsh to run CQL statements. In Cassandra, a keyspace is the highest-order structure in the CQL schema, which represents a grouping of tables. A keyspace is commonly used to define the domain of a microservice or isolate clients in a multi-tenant strategy. Amazon Keyspaces is serverless, so you don’t have to configure clusters, hosts, or Java virtual machines to create a keyspace or table. When you create a new keyspace or table, it is associated with an AWS Account and Region. Though a traditional Cassandra cluster is limited to 200 to 500 tables, with Amazon Keyspaces the number of keyspaces and tables for an account and Region is virtually unlimited.

The following command creates a new keyspace by using SingleRegionStrategy, which replicates data three times across multiple Availability Zones in a single AWS Region. Storage is billed by the raw size of a single replica, and there is no network transfer cost when replicating data across Availability Zones. Using keyspaces-toolkit, connect to Amazon Keyspaces and run the following command from within the cqlsh session.

```cql
CREATE KEYSPACE amazon
  WITH REPLICATION = {'class': 'SingleRegionStrategy'}
  AND TAGS = {'domain' : 'shoppingcart' , 'app' : 'acme-commerce'};
```

The preceding command includes the following parameters:

REPLICATION – SingleRegionStrategy replicates data three times across multiple Availability Zones.

TAGS – A label that you assign to an AWS resource. For more information about using tags for access control, microservices, cost allocation, and risk management, see Tagging Best Practices.

Create a table

Previously, you created a keyspace without needing to define clusters or infrastructure. Now, you will add a table to your keyspace in a similar way. A Cassandra table definition looks like a traditional SQL create table statement with an additional requirement for a partition key and clustering keys. These keys determine how data in CQL rows are distributed, sorted, and uniquely accessed.

Tables in Amazon Keyspaces have the following unique characteristics:

Virtually no limit to table size or throughput – In Amazon Keyspaces, a table’s capacity scales up and down automatically in response to traffic. You don’t have to manage nodes or consider node density. Performance stays consistent as your tables scale up or down.

Support for “wide” partitions – CQL partitions can contain a virtually unbounded number of rows without the need for additional bucketing and sharding partition keys for size. This allows you to scale partitions “wider” than the traditional Cassandra best practice of 100 MB.

No compaction strategies to consider – Amazon Keyspaces doesn’t require defined compaction strategies. Because you don’t have to manage compaction strategies, you can build powerful data models without having to consider the internals of the compaction process. Performance stays consistent even as write, read, update, and delete requirements change.

No repair process to manage – Amazon Keyspaces doesn’t require you to manage a background repair process for data consistency and quality.

No tombstones to manage – With Amazon Keyspaces, you can delete data without the challenge of managing tombstone removal, table-level grace periods, or zombie data problems.

1 MB row quota – Amazon Keyspaces supports the Cassandra blob type, but storing large blob data greater than 1 MB results in an exception. It’s a best practice to store larger blobs across multiple rows or in Amazon Simple Storage Service (Amazon S3) object storage.

Fully managed backups – PITR helps protect your Amazon Keyspaces tables from accidental write or delete operations by providing continuous backups of your table data.

The following command creates a table in Amazon Keyspaces by using a cqlsh statement with custom properties specifying on-demand capacity mode, PITR enabled, and AWS resource tags. Using keyspaces-toolkit to connect to Amazon Keyspaces, run this command from within the cqlsh session.

```cql
CREATE TABLE amazon.eventstore(
  id text,
  time timeuuid,
  event text,
  PRIMARY KEY(id, time))
  WITH CUSTOM_PROPERTIES = {
    'capacity_mode':{'throughput_mode':'PAY_PER_REQUEST'},
    'point_in_time_recovery':{'status':'enabled'}
  }
  AND TAGS = {'domain' : 'shoppingcart' , 'app' : 'acme-commerce' , 'pii': 'true'};
```

The preceding command includes the following parameters:

capacity_mode – Amazon Keyspaces has two read/write capacity modes for processing reads and writes on your tables. The default for new tables is on-demand capacity mode (the PAY_PER_REQUEST flag).

point_in_time_recovery – When you enable this parameter, you can restore an Amazon Keyspaces table to a point in time within the preceding 35 days. There is no overhead or performance impact from enabling PITR.

TAGS – Allows you to organize resources, define domains, specify environments, allocate cost centers, and label security requirements.

Insert rows

Before inserting data, check if your table was created successfully. Amazon Keyspaces performs data definition language (DDL) operations, such as creating and deleting tables, asynchronously. You also can monitor the creation status of a new resource programmatically by querying the system schema table. Also, you can use a toolkit helper for exponential backoff.

Check for table creation status

Cassandra provides information about the running cluster in its system tables. With Amazon Keyspaces, there are no clusters to manage, but it still provides system tables for the Amazon Keyspaces resources in an account and Region. You can use the system tables to understand the creation status of a table. The system_schema_mcs keyspace is a new system keyspace with additional content related to serverless functionality. Using keyspaces-toolkit, run the following SELECT statement from within the cqlsh session to retrieve the status of the newly created table.

```cql
SELECT keyspace_name, table_name, status
  FROM system_schema_mcs.tables
  WHERE keyspace_name = 'amazon' AND table_name = 'eventstore';
```

The following screenshot shows an example of output for the preceding CQL SELECT statement.

Insert sample data

Now that you have created your table, you can use CQL statements to insert and read sample data. Amazon Keyspaces requires all write operations (insert, update, and delete) to use the LOCAL_QUORUM consistency level for durability. With reads, an application can choose between eventual consistency and strong consistency by using LOCAL_ONE or LOCAL_QUORUM consistency levels.
The benefits of eventual consistency in Amazon Keyspaces are higher availability and reduced cost. See the following code.

```cql
CONSISTENCY LOCAL_QUORUM;

INSERT INTO amazon.eventstore(id, time, event) VALUES ('1', now(), '{eventtype:"click-cart"}');
INSERT INTO amazon.eventstore(id, time, event) VALUES ('2', now(), '{eventtype:"showcart"}');
INSERT INTO amazon.eventstore(id, time, event) VALUES ('3', now(), '{eventtype:"clickitem"}') IF NOT EXISTS;

SELECT * FROM amazon.eventstore;
```

The preceding code uses IF NOT EXISTS or lightweight transactions to perform a conditional write. With Amazon Keyspaces, there is no heavy performance penalty for using lightweight transactions. You get performance characteristics similar to those of standard insert, update, and delete operations.

The following screenshot shows the output from running the preceding statements in a cqlsh session. The three INSERT statements added three unique rows to the table, and the SELECT statement returned all the data within the table.

Export table data to your local host

You now can export the data you just inserted by using the cqlsh COPY TO command. This command exports the data to the source directory, which you mounted earlier to the working directory of the Docker run when creating the alias. The following cqlsh statement exports your table data to the export.csv file located on the host machine.

```cql
CONSISTENCY LOCAL_ONE;

COPY amazon.eventstore(id, time, event) TO '/source/export.csv' WITH HEADER=false;
```

The following screenshot shows the output of the preceding command from the cqlsh session. After the COPY TO command finishes, you should be able to view the export.csv from the current working directory of the host machine.

For more information about tuning export and import processes when using cqlsh COPY TO, see Loading data into Amazon Keyspaces with cqlsh.

Use credentials stored in Secrets Manager

Previously, you used service-specific credentials to connect to Amazon Keyspaces.
In the following example, I show how to use the keyspaces-toolkit helpers to store and access service-specific credentials in Secrets Manager. The helpers are a collection of scripts bundled with keyspaces-toolkit to assist with common tasks.

By overriding the default entry point cqlsh, you can call the aws-sm-cqlsh.sh script, a wrapper around the cqlsh process that retrieves the Amazon Keyspaces service-specific credentials from Secrets Manager and passes them to the cqlsh process. This script allows you to avoid hard-coding the credentials in your scripts. The following diagram illustrates this architecture.

Configure the container to use the host’s AWS CLI credentials

The keyspaces-toolkit extends the AWS CLI Docker image, making keyspaces-toolkit extremely lightweight. Because you may already have the AWS CLI Docker image in your local repository, keyspaces-toolkit adds only an additional 10 MB layer extension to the AWS CLI. This is approximately 15 times smaller than using cqlsh from the full Apache Cassandra 3.11 distribution. The AWS CLI runs in a container and doesn’t have access to the AWS credentials stored on the container’s host. You can share credentials with the container by mounting the ~/.aws directory. Mount the host directory to the container by using the -v parameter. To validate a proper setup, the following command lists current AWS CLI named profiles.

```sh
docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws amazon/keyspaces-toolkit configure list-profiles
```

The ~/.aws directory is a common location for the AWS CLI credentials file. If you configured the container correctly, you should see a list of profiles from the host credentials. For instructions about setting up the AWS CLI, see Step 2: Set Up the AWS CLI and AWS SDKs.

Store credentials in Secrets Manager

Now that you have configured the container to access the host’s AWS CLI credentials, you can use the Secrets Manager API to store the Amazon Keyspaces service-specific credentials in Secrets Manager. The secret name keyspaces-credentials in the following command is also used in subsequent steps.

```sh
docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws amazon/keyspaces-toolkit \
  secretsmanager create-secret --name keyspaces-credentials \
  --description "Store Amazon Keyspaces Generated Service Credentials" \
  --secret-string '{"username":"SERVICEUSERNAME","password":"SERVICEPASSWORD","engine":"cassandra","host":"SERVICEENDPOINT","port":"9142"}'
```

The preceding command includes the following parameters:

--entrypoint – The default entry point is cqlsh, but this command uses this flag to access the AWS CLI.

--name – The name used to identify the key to retrieve the secret in the future.

--secret-string – Stores the service-specific credentials. Replace SERVICEUSERNAME and SERVICEPASSWORD with your credentials. Replace SERVICEENDPOINT with the service endpoint for the AWS Region.

Creating and storing secrets requires CreateSecret and GetSecretValue permissions in your IAM policy. As a best practice, rotate secrets periodically when storing database credentials.

Use the Secrets Manager helper script

Use the Secrets Manager helper script to sign in to Amazon Keyspaces by replacing the user and password fields with the secret key from the preceding keyspaces-credentials command.

```sh
docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws-sm-cqlsh.sh amazon/keyspaces-toolkit keyspaces-credentials --ssl --execute "DESCRIBE Keyspaces"
```

The preceding command includes the following parameters:

-v – Used to mount the directory containing the host’s AWS CLI credentials file.

--entrypoint – Use the helper by overriding the default entry point of cqlsh to access the Secrets Manager helper script, aws-sm-cqlsh.sh.

keyspaces-credentials – The key to access the credentials stored in Secrets Manager.

--execute – Runs a CQL statement.

Update the alias

You now can update the alias so that your scripts don’t contain plaintext passwords. You also can manage users and roles through Secrets Manager. The following code sets up a new alias that uses the keyspaces-toolkit Secrets Manager helper to retrieve the service-specific credentials from Secrets Manager.

```sh
alias cqlsh='docker run --rm -ti -v ~/.aws:/root/.aws -v "$(pwd)":/source --entrypoint aws-sm-cqlsh.sh amazon/keyspaces-toolkit keyspaces-credentials --ssl'
```

To have the alias available in every new terminal session, add the alias definition to your .bashrc file, which is executed on every new terminal window. You can usually find this file in $HOME/.bashrc or $HOME/.bash_aliases (loaded by $HOME/.bashrc).

Validate the alias

Now that you have updated the alias with the Secrets Manager helper, you can use cqlsh without the Docker details or credentials, as shown in the following code.

```sh
cqlsh --execute "DESCRIBE TABLE amazon.eventstore;"
```

The following screenshot shows the running of the cqlsh DESCRIBE TABLE statement by using the alias created in the previous section. In the output, you should see the table definition of the amazon.eventstore table you created in the previous step.

Conclusion

In this post, I showed how to get started with Amazon Keyspaces and the keyspaces-toolkit Docker image. I used Docker to build an image and run a container for a consistent and reproducible experience. I also used an alias to create a drop-in replacement for existing scripts, and used built-in helpers to integrate cqlsh with Secrets Manager to store service-specific credentials. Now you can use the keyspaces-toolkit with your Cassandra workloads.

As a next step, you can store the image in Amazon Elastic Container Registry, which allows you to access the keyspaces-toolkit from CI/CD pipelines and other AWS services such as AWS Batch.
Additionally, you can control the image lifecycle of the container across your organization. You can even attach policies that expire images based on age or download count. For more information, see Pushing an image.

Cheat sheet of useful commands

I did not cover the following commands in this blog post, but they will be helpful when you work with cqlsh, AWS CLI, and Docker.

Docker

```sh
# View the logs from the container. Helpful when debugging
docker logs CONTAINERID

# Exit code of the container. Helpful when debugging
docker inspect createtablec --format='{{.State.ExitCode}}'
```

CQL

```cql
-- Describe keyspace to view keyspace definition
DESCRIBE KEYSPACE keyspace_name;

-- Describe table to view table definition
DESCRIBE TABLE keyspace_name.table_name;

-- Select samples with limit to minimize output
SELECT * FROM keyspace_name.table_name LIMIT 10;
```

Amazon Keyspaces CQL

```cql
-- Change provisioned capacity for tables
ALTER TABLE keyspace_name.table_name WITH custom_properties={'capacity_mode':{'throughput_mode': 'PROVISIONED', 'read_capacity_units': 4000, 'write_capacity_units': 3000}};

-- Describe current capacity mode for tables
SELECT keyspace_name, table_name, custom_properties FROM system_schema_mcs.tables WHERE keyspace_name = 'amazon' AND table_name = 'eventstore';
```

Linux

```sh
# Count the files in the current directory tree
find . -type f | wc -l

# Remove header from csv
sed -i '1d' myData.csv
```

About the Author

Michael Raney is a Solutions Architect with Amazon Web Services.

https://aws.amazon.com/blogs/database/how-to-set-up-command-line-access-to-amazon-keyspaces-for-apache-cassandra-by-using-the-new-developer-toolkit-docker-image/
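One more snippet in the spirit of the cheat sheet: because Amazon Keyspaces creates tables asynchronously, a script that creates a table and then writes to it usually needs to wait and retry. The function below is a generic retry loop with exponential backoff. It is my own sketch, not the helper bundled with keyspaces-toolkit, and `retry_with_backoff` and `table_is_active` are hypothetical names.

```sh
#!/bin/sh
# Usage: retry_with_backoff MAX_ATTEMPTS INITIAL_DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or MAX_ATTEMPTS is reached, doubling the
# delay after every failed attempt.
retry_with_backoff() {
    max_attempts="$1"
    delay="$2"
    shift 2
    attempt=1
    while :; do
        "$@" && return 0
        [ "$attempt" -ge "$max_attempts" ] && return 1
        sleep "$delay"
        delay=$((delay * 2))
        attempt=$((attempt + 1))
    done
}

# Demo with a command that succeeds immediately.
retry_with_backoff 3 1 true && echo "ready"

# In practice the command would be a check of your own, for example a
# function (hypothetical) that runs the system_schema_mcs.tables query
# through cqlsh and succeeds once the status column reads ACTIVE:
#   retry_with_backoff 6 2 table_is_active
```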


hydralisk98's web projects tracker:

Core principles=

Fail faster

‘Learn, Tweak, Make’ loop

This is meant to be a quick reference for tracking progress made over my various projects, organized by their “ultimate target” goal:

(START)

(Website)=

Install Firefox

Install Chrome

Install Microsoft's newest browser

Install Lynx

Learn about contemporary web browsers

Install a very basic text editor

Install Notepad++

Install Nano

Install Powershell

Install Bash

Install Git

Learn HTML

Elements and attributes

Commenting (single line comment, multi-line comment)

Head (title, meta, charset, language, link, style, description, keywords, author, viewport, script, base, url-encode)

Hyperlinks (local, external, link titles, relative filepaths, absolute filepaths)

Headings (h1-h6, horizontal rules)

Paragraphs (pre, line breaks)

Text formatting (bold, italic, deleted, inserted, subscript, superscript, marked)

Quotations (quote, blockquote, abbreviations, address, cite, bidirectional override)

Entities & symbols (&entity_name;, &entity_number;, &nbsp;, useful HTML character entities, diacritical marks, mathematical symbols, greek letters, currency symbols)

Id (bookmarks)

Classes (select elements, multiple classes, different tags can share same class)

Blocks & Inlines (div, span)

Computercode (kbd, samp, code, var)

Lists (ordered, unordered, description lists, control list counting, nesting)

Tables (colspan, rowspan, caption, colgroup, thead, tbody, tfoot, th)

Images (src, alt, width, height, animated, link, map, area, usemap, picture, picture for format support)

old fashioned audio

old fashioned video

Iframes (URL src, name, target)

Forms (input types, action, method, GET, POST, name, fieldset, accept-charset, autocomplete, enctype, novalidate, target, form elements, input attributes)

URL encode (scheme, prefix, domain, port, path, filename, ascii-encodings)

Learn about oldest web browsers onwards

Learn early HTML versions (doctypes & permitted elements for each version)

Make a 90s-like web page compatible with as much early web formats as possible, earliest web browsers’ compatibility is best here

Learn how to teach HTML5 features to most if not all older browsers

Install Adobe XD

Register an account at Figma

Learn Adobe XD basics

Learn Figma basics

Install Microsoft’s VS Code

Install my Microsoft’s VS Code favorite extensions

Learn HTML5

Semantic elements

Layouts

Graphics (SVG, canvas)

Track

Audio

Video

Embed

APIs (geolocation, drag and drop, local storage, application cache, web workers, server-sent events)

HTML5 Shiv for teaching older browsers HTML5

HTML5 style guide and coding conventions (doctype, clean tidy well-formed code, lower case element names, close all html elements, close empty html elements, quote attribute values, image attributes, space and equal signs, avoid long code lines, blank lines, indentation, keep html, keep head, keep body, meta data, viewport, comments, stylesheets, loading JS into html, accessing HTML elements with JS, use lowercase file names, file extensions, index/default)

Learn CSS

Selections

Colors

Fonts

Positioning

Box model

Grid

Flexbox

Custom properties

Transitions

Animate

Make a simple modern static site

Learn responsive design

Viewport

Media queries

Fluid widths

rem units over px

Mobile first

Learn SASS

Variables

Nesting

Conditionals

Functions

Learn about CSS frameworks

Learn Bootstrap

Learn Tailwind CSS

Learn JS

Fundamentals

Document Object Model / DOM

JavaScript Object Notation / JSON

Fetch API

Modern JS (ES6+)

Learn Git

Learn Browser Dev Tools

Learn your VS Code extensions

Learn Emmet

Learn NPM

Learn Yarn

Learn Axios

Learn Webpack

Learn Parcel

Learn basic deployment

Domain registration (Namecheap)

Managed hosting (InMotion, Hostgator, Bluehost)

Static hosting (Netlify, GitHub Pages)

SSL certificate

FTP

SFTP

SSH

CLI

Make a fancy front end website about

Make a few Tumblr themes

===You are now a basic front end developer!

Learn about XML dialects

Learn XML

Learn about JS frameworks

Learn jQuery

Learn React

Context API with Hooks

Next.js

Learn Vue.js

Vuex

NUXT

Learn Svelte

NUXT (Vue)

Learn Gatsby

Learn Gridsome

Learn Typescript

Make an epic front end website about

===You are now a front-end wizard!

Learn Node.js

Express

Nest.js

Koa

Learn Python

Django

Flask

Learn GoLang

Revel

Learn PHP

Laravel

Slim

Symfony

Learn Ruby

Ruby on Rails

Sinatra

Learn SQL

PostgreSQL

MySQL

Learn ORM

Learn ODM

Learn NoSQL

MongoDB

RethinkDB

CouchDB

Learn a cloud database

Firebase, Azure Cloud DB, AWS

Learn a lightweight & cache variant

Redis

SQLite

NeDB

Learn GraphQL

Learn about CMSes

Learn WordPress

Learn Drupal

Learn Keystone

Learn Enduro

Learn Contentful

Learn Sanity

Learn Jekyll

Learn about DevOps

Learn NGINX

Learn Apache

Learn Linode

Learn Heroku

Learn Azure

Learn Docker

Learn testing

Learn load balancing

===You are now a good full stack developer

Learn about mobile development

Learn Dart

Learn Flutter

Learn React Native

Learn Nativescript

Learn Ionic

Learn progressive web apps

Learn Electron

Learn JAMstack

Learn serverless architecture

Learn API-first design

Learn data science

Learn machine learning

Learn deep learning

Learn speech recognition

Learn web assembly

===You are now an epic full stack developer

Make a web browser

Make a web server

===You are now a legendary full stack developer

[...]

(Computer system)=

Learn to execute and test your code in a command line interface

Learn to use breakpoints and debuggers

Learn Bash

Learn fish

Learn Zsh

Learn Vim

Learn nano

Learn Notepad++

Learn VS Code

Learn Brackets

Learn Atom

Learn Geany

Learn Neovim

Learn Python

Learn Java?

Learn R

Learn Swift?

Learn Go-lang?

Learn Common Lisp

Learn Clojure (& ClojureScript)

Learn Scheme

Learn C++

Learn C

Learn B

Learn Mesa

Learn Brainfuck

Learn Assembly

Learn Machine Code

Learn how to manage I/O

Make a keypad

Make a keyboard

Make a mouse

Make a light pen

Make a small LCD display

Make a small LED display

Make a teleprinter terminal

Make a medium raster CRT display

Make a small vector CRT display

Make larger LED displays

Make a few CRT displays

Learn how to manage computer memory

Make datasettes

Make a datasette deck

Make floppy disks

Make a floppy drive

Learn how to control data

Learn binary base

Learn hexadecimal base

Learn octal base

Learn registers

Learn timing information

Learn assembly common mnemonics

Learn arithmetic operations

Learn logic operations (AND, OR, XOR, NOT, NAND, NOR, XNOR, IMPLY)

Learn masking

Learn assembly language basics

Learn stack construct’s operations

Learn calling conventions

Learn to use Application Binary Interface or ABI

Learn to make your own ABIs

Learn to use memory maps

Learn to make memory maps

Make a clock

Make a front panel

Make a calculator

Learn about existing instruction sets (Intel, ARM, RISC-V, PIC, AVR, SPARC, MIPS, Intersil 6120, Z80...)

Design an instruction set

Compose an assembler

Compose a disassembler

Compose an emulator

Write a B-derivative programming language (somewhat similar to C)

Write an IPL-derivative programming language (somewhat similar to Lisp and Scheme)

Write a general markup language (like GML, SGML, HTML, XML...)

Write a Turing tarpit (like Brainfuck)

Write a scripting language (like Bash)

Write a database system (like VisiCalc or SQL)

Write a CLI shell (basic operating system like Unix or CP/M)

Write a single-user GUI operating system (like Xerox Star’s Pilot)

Write a multi-user GUI operating system (like Linux)

Write various software utilities for my various OSes

Write various games for my various OSes

Write various niche applications for my various OSes

Implement an awesome model in very large scale integration, like the Commodore CBM-II

Implement an epic model in integrated circuits, like the DEC PDP-15

Implement a modest model in transistor-transistor logic, similar to the DEC PDP-12

Implement a simple model in diode-transistor logic, like the original DEC PDP-8

Implement a simpler model in later vacuum tubes, like the IBM 700 series

Implement simplest model in early vacuum tubes, like the EDSAC

[...]

(Conlang)=

Choose sounds

Choose phonotactics

[...]

(Animation ‘movie’)=

[...]

(Exploration top-down ‘racing game’)=

[...]

(Video dictionary)=

[...]

(Grand strategy game)=

[...]

(Telex system)=

[...]

(Pen&paper tabletop game)=

[...]

(Search engine)=

[...]

(Microlearning system)=

[...]

(Alternate planet)=

[...]

(END)


Text

How can I install Podman on a CentOS 8 / RHEL 8 Linux machine? RHEL 8 / CentOS 8 has dropped official support for Docker as a container runtime. Instead, Red Hat has been working on libpod (Podman's container management library), which lets applications use the Container Pod concept known from Kubernetes. One of the tools provided as part of the libpod project is podman, used for managing pods, containers, and container images. Podman is a tool for managing containers and pods without requiring a container daemon. All containers and pods are created as child processes of the Podman tool. Podman's CLI is based on the Docker CLI.

It is easy to install Podman on a CentOS 8 or RHEL 8 Linux machine. Most container-related tools on CentOS 8 are available in the module called container-tools. For installation on other systems, please check the guides below:

How To Install Podman on Debian
How To Install Podman on Arch Linux / Manjaro
How To Install Podman on CentOS & Fedora
Install Podman on Ubuntu

Follow the steps below to install Podman on CentOS 8 / RHEL 8.

Step 1: Enable EPEL repository

Make sure the EPEL repository is enabled, as some required Python packages are available in the EPEL/PowerTools repositories.

Enable EPEL repository CentOS 8

Ensure the PowerTools repo is enabled as well (CentOS 8 only):

sudo dnf config-manager --set-enabled powertools

Step 2: Install Podman on CentOS 8 / RHEL 8

First update your system:

sudo dnf -y update

Then run the commands below to install Podman on a CentOS 8 / RHEL 8 Linux machine.

$ sudo dnf module list | grep container-tools
container-tools rhel8 [d][e] common [d] Most recent (rolling) versions of podman, buildah, skopeo, runc, conmon, runc, conmon, CRIU, Udica, etc as well as dependencies such as container-selinux built and tested together, and updated as frequently as every 12 weeks.
$ sudo dnf install -y @container-tools

If the installation was successful, you should be able to check the podman version.

$ podman version
Client: Podman Engine
Version: 4.0.2
API Version: 4.0.2
Go Version: go1.17.7
Built: Sun May 15 19:45:11 2022
OS/Arch: linux/amd64

To check the help page, run the command:

$ podman --help

Step 3: Using Podman on CentOS 8 / RHEL 8

Now that Podman has been installed on our Linux machine, it's time to start playing with it. First, check if you can run a basic container.

$ podman run -it --rm alpine sh
/ # cat /etc/os-release
NAME="Alpine Linux"
ID=alpine
VERSION_ID=3.16.0
PRETTY_NAME="Alpine Linux v3.16"
HOME_URL="https://alpinelinux.org/"
BUG_REPORT_URL="https://bugs.alpinelinux.org/"
/ # exit

Manage Container images

To download, list and delete images, use the commands:

# Pull image
$ podman pull ubuntu
$ podman pull centos

# List existing images
$ podman images
REPOSITORY                TAG     IMAGE ID      CREATED      SIZE
docker.io/library/ubuntu  latest  775349758637  3 weeks ago  66.6 MB
docker.io/library/alpine  latest  965ea09ff2eb  5 weeks ago  5.82 MB
docker.io/library/centos  latest  0f3e07c0138f  7 weeks ago  227 MB

# Delete images
$ podman rmi
$ podman rmi 775349758637
775349758637aff77bf85e2ff0597e86e3e859183ef0baba8b3e8fc8d3cba51c

So as not to repeat our work, I recommend you check our complete guides on how to work with podman:

Running Docker Containers using Podman and Libpod
Setup Docker Container Registry with Podman & Let's Encrypt SSL
How To Publish Docker Image to Docker Hub with Podman
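When cleaning up images from a script, it can help to turn the `podman images` table into structured records before deciding what to pass to `podman rmi`. The following is a minimal Python sketch of that idea, not part of the original guide. It assumes the columns are separated by two or more spaces, and the helper names `parse_images` and `rmi_command` are made up for illustration. It only builds the command list and does not run Podman.

```python
import re

# Sample listing copied from the guide; columns are assumed to be
# separated by two or more spaces.
SAMPLE = """\
REPOSITORY                TAG     IMAGE ID      CREATED      SIZE
docker.io/library/ubuntu  latest  775349758637  3 weeks ago  66.6 MB
docker.io/library/alpine  latest  965ea09ff2eb  5 weeks ago  5.82 MB
docker.io/library/centos  latest  0f3e07c0138f  7 weeks ago  227 MB
"""


def parse_images(listing):
    """Turn a `podman images` table into a list of dicts keyed by column name."""
    lines = [line for line in listing.splitlines() if line.strip()]
    header = re.split(r"\s{2,}", lines[0].strip())
    rows = []
    for line in lines[1:]:
        fields = re.split(r"\s{2,}", line.strip())
        rows.append(dict(zip(header, fields)))
    return rows


def rmi_command(row):
    """Build (but do not run) the argument list that removes one image by ID."""
    return ["podman", "rmi", row["IMAGE ID"]]


images = parse_images(SAMPLE)
ubuntu = next(row for row in images if row["REPOSITORY"].endswith("/ubuntu"))
print(rmi_command(ubuntu))
```

The resulting list could be handed to `subprocess.run` on a machine where Podman is installed. For anything beyond a quick script, `podman images --format json` is probably a sturdier input than splitting on whitespace.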


Text

Docker Hub: The Choice for Better Business Outcome

Docker Store and Docker Cloud are now part of Docker Hub, which provides a single experience for finding, sharing, and storing container images. This means that Docker Certified and Verified Publisher images are now available for discovery and download on Docker Hub. Docker Hub also introduces a new user experience.

Thousands of organizations and millions of individual users use Docker Hub, Cloud, and Store for their container needs. The Docker Hub update is designed to bring together the features that users of each product know and love most, while addressing Docker Hub requests around repository and team management.

Docker removes repetitive, mundane configuration tasks and is used throughout the development lifecycle for fast, easy, and portable application development, on the desktop and in the cloud. Docker's end-to-end platform includes UIs, CLIs, APIs, and security that are engineered to work together across the entire application delivery lifecycle.

Docker Business:

With this announcement, Docker introduced our new subscription tiers, including Docker Business, our offering specifically for organizations that need to scale their use of Docker while maintaining security and compliance with added enterprise-grade management and control. Previously, the minimum number of seats required for a Docker Business subscription was 50, which limited access to larger organizations.

However, Docker has been hearing from customers who want the additional features and benefits that come with Docker Business but do not currently meet the seat minimum. Some customers are ready to make the move but want to avoid lengthy sales and purchase order (PO) processes. Others may be interested in trying out Docker Business at a smaller scale before committing to a larger rollout.

So today we have made it easier and more accessible than ever for our customers to make the move to Docker Business.

Advanced Image Management:

Let's look at the latest feature for Docker Pro and Team users: the new Advanced Image Management dashboard available on Docker Hub. The new dashboard gives developers a new level of access to all the content stored in Docker Hub, with more fine-grained control over removing old content and exploring old versions of pushed images.

Historically in Docker Hub, we have had visibility into the latest version of a tag that a user has pushed, but it has been very hard to see or even understand what happened to the old things that were pushed. When you push an image to Docker Hub, you are pushing a manifest, which is a list of all the layers of your image, and the layers themselves.

When you update an existing tag, only the new layers are pushed, along with the new manifest that references these layers. The new manifest is given the tag you specify when you push, such as bengotch/simplewhale:latest. Remember that this does not remove from the Hub the old manifests or the old layers that made up your previous images.

This means you can have many old versions of images that your systems may still be pulling by hash rather than by tag, and you may be unaware of which old versions are still in use. On top of this, the only way until now to remove these old versions was to delete the entire repository and start again.
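The tag-versus-hash behaviour described above can be illustrated with a toy in-memory model. The Python sketch below is only an illustration under simplifying assumptions. The `ToyRegistry` class and its methods are hypothetical, layers are plain strings, and the digest is a SHA-256 of a JSON dump rather than of the real manifest bytes a registry would hash.

```python
import hashlib
import json


def digest_of(manifest):
    """Content-address a manifest (toy version: hash of its sorted JSON dump)."""
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return "sha256:" + hashlib.sha256(payload).hexdigest()


class ToyRegistry:
    """Hypothetical stand-in for a repository: tags point at manifest digests."""

    def __init__(self):
        self.manifests = {}  # digest -> manifest
        self.tags = {}       # tag -> digest

    def push(self, tag, layers):
        manifest = {"layers": list(layers)}
        digest = digest_of(manifest)
        self.manifests[digest] = manifest
        self.tags[tag] = digest  # moving the tag does not delete the old manifest
        return digest

    def pull(self, ref):
        """Resolve a tag or a digest to its manifest."""
        digest = self.tags.get(ref, ref)
        return self.manifests[digest]

    def inactive(self):
        """Digests that no tag points at any more."""
        active = set(self.tags.values())
        return sorted(d for d in self.manifests if d not in active)


registry = ToyRegistry()
old = registry.push("latest", ["base", "app-v1"])
new = registry.push("latest", ["base", "app-v2"])

print(registry.pull("latest")["layers"])  # the tag now resolves to the second push
print(registry.pull(old)["layers"])       # the first push is still reachable by digest
print(len(registry.inactive()))           # manifests no longer referenced by any tag
```

In this model the first manifest becomes "inactive" the moment the tag moves, yet any system that pinned the old digest can keep pulling it, which is the situation the dashboard is meant to make visible.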

We hope you are excited about this first step toward giving you greater insight into your content on Docker Hub. If you want to start exploring your content, all users can see how many inactive images they have, while Pro and Team users can also see which tags these used to be associated with and what their hashes are, and can start removing them today.

Docker Hub Autobuild:

As many of you know, it has been a difficult period for companies offering free cloud compute. Unfortunately, Docker's Autobuild service has been targeted by the same bad actors, so today we are disappointed to announce that we will be discontinuing Autobuilds on the free tier starting from June 18, 2021.

In the last few months we have seen massive growth in the number of bad actors who take advantage of this service with the goal of abusing it for crypto mining. For the last 7 years we have been proud to offer our Autobuild service to all our users as the easiest way to set up CI for containerized projects. As well as increasing the cost of running the service, this type of abuse occasionally affects performance for paying Autobuild users and leads to many sleepless nights for our team.

Get a head start on your coding by using Docker images to efficiently develop your own applications on Windows and Mac. Create your multi-container application using Docker Compose. Integrate with your favorite tools throughout your development pipeline: Docker works with all the development tools you use, including VS Code, CircleCI, and GitHub. Package applications as portable container images to run in any environment consistently, from on-premises Kubernetes to AWS ECS, Azure ACI, Google GKE, and more.

New Self-Serve Payment Option:

If you are ready to make the move to Docker Business, you can now purchase a minimum of 5 seats by credit or debit card by signing in to or creating a Docker account. Once purchased, you have instant access to all the management and security features exclusive to Docker Business customers: Image Access Management for restricting user access to specific images (e.g., Docker Official Images and Verified Publisher Images), a centralized management console for complete visibility across all your Docker environments, SAML single sign-on for seamless onboarding/offboarding (coming soon), and much more. Of course, your developer teams can continue to collaborate and stay productive with Docker Desktop, our trusted multi-platform developer tool.

You can also easily upgrade from your current subscription to Docker Business. Simply sign in to your Docker Hub account and update your billing plan there. Your current subscription will be credited for the remaining term, and the balance will be applied to the cost of your new Docker Business subscription. A new subscription period will also be set.

Benefits:

Every organization is on a quest to deliver better business outcomes, and top performers are using software innovation to make it happen. Inevitably, while managing many competing priorities, software leaders will face a build-versus-buy decision at some point. When you consider factors such as the cost of time, opportunity cost, time to value, the cost of security risks, and when DIY with OSS makes sense, the data shows that most organizations will be better off buying commercial software than building their own alternative solutions. Offloading the undifferentiated work reduces distractions and lets developers focus on delivering value to customers.

Keep it simple:

Docker removes complexity for developers and helps them achieve greater productivity. We are continuing to invest in creating magically simple experiences for developers while also delivering the scale and security businesses rely on. Docker offers subscriptions for developers and teams of every size, including our newest subscription: Docker Business.

Move Fast:

Install from a single package to get up and running in minutes. Code and test locally while ensuring consistency between development and production.

Collaborate:

Use Certified and community images in your project. Push to a cloud-based application registry and collaborate with team members.

Conclusion

Docker is a containerization platform that packages your application and all of its dependencies together as containers, so that your application works seamlessly in any environment, whether development, test, or production. A Docker container wraps a piece of software in a complete filesystem that contains everything needed to run: code, runtime, system tools, system libraries, and so on. It wraps essentially anything that can be installed on a server. This guarantees that the software will always run the same, regardless of its environment.

Frequently Asked Questions

1. What are Docker Images?

Ans - Docker images are the source of Docker containers. In other words, Docker images are used to create containers. When a user runs a Docker image, an instance of a container is created. These Docker images can be deployed to any Docker environment.

2. What is Docker Hub?

Ans - Docker images create Docker containers. There needs to be a registry where those Docker images live. This registry is Docker Hub. Users can pull images from Docker Hub and use them to create customized images and containers. Currently, Docker Hub is the world's largest public repository of container images.

3. What is a Dockerfile?

Ans - Docker can build images automatically by reading the instructions from a file called a Dockerfile. A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image. Using docker build, users can create an automated build that executes several command-line instructions in succession.

4. What is Docker Machine?

Ans - Docker Machine is a tool that lets you install Docker Engine on virtual hosts. These hosts can then be managed using the docker-machine commands. Docker Machine also lets you provision Docker Swarm clusters.


Text

Version 320

youtube

windows: zip, exe

os x: app, tar.gz

linux: tar.gz

source: tar.gz

I had a great week. The downloader overhaul is in its last act, and I've fixed and added some other neat stuff. There's also a neat hydrus-related project for advanced users to try out.

Late breaking edit: Looks like I have broken e621 queries that include the '/' character this week, like 'male/female'! Hold off on updating if you have these, or pause them and wait a week for me to fix it!

misc

I fixed an issue introduced in last week's new pipeline with new subs sometimes not parsing the first page of results properly. If you missed files you wanted in the first sync, please reset the affected subs' caches.

Due to an oversight, a mappings cache that I now take advantage of to speed up tag searches was missing an index that would speed it up even further. I've now added these indices--and your clients will spend a minute generating them on update--and most tag searches are now superfast! My IRL client was taking 1.6s to do the first step of finding 5000-file tag results, and now it does it in under 5ms! Indices!
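For readers curious why one missing index matters this much, here is a small Python sketch using the standard `sqlite3` module. It is not hydrus code. The `mappings` table, its columns, and the index name are made up for illustration, and it simply assumes a SQLite-style database. It compares the query plan for a tag lookup before and after the index exists.

```python
import sqlite3

# Hypothetical, simplified mappings table: which file has which tag.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE mappings (file_id INTEGER, tag_id INTEGER)")
conn.executemany(
    "INSERT INTO mappings VALUES (?, ?)",
    ((file_id, file_id % 100) for file_id in range(10000)),
)


def tag_search_plan(connection):
    """Return the planner's description of a 'files with this tag' lookup."""
    rows = connection.execute(
        "EXPLAIN QUERY PLAN SELECT file_id FROM mappings WHERE tag_id = ?", (7,)
    ).fetchall()
    # The human-readable detail is the last of the four columns in each row.
    return " ".join(row[3] for row in rows)


before = tag_search_plan(conn)  # without an index the whole table is scanned
conn.execute("CREATE INDEX idx_mappings_tag ON mappings (tag_id)")
after = tag_search_plan(conn)   # with the index the planner can seek directly

print("before:", before)
print("after: ", after)
```

Building the index is a one-off cost paid at update time, which matches the minute of work described above. After that, lookups by tag no longer need to read every row.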

The hyperlinks on the media viewer now use any custom browser launch path in options->files and trash.

downloader overhaul (easy)

I have now added gallery parsers for all the default sites hydrus supports out the box. Any regular download now entirely parses in the new system. With luck, you won't notice any difference, but let me know if you get any searches that terminate early or any other problems.

I have also written the new Gallery URL Generator (GUG) objects for everything, but I have not yet plugged these in. I am now on the precipice of switching this final legacy step over to the new system. This will be a big shift that will finally allow us to have new gallery 'searchers' for all kinds of new sites. I expect to do this next week.

When I do the GUG switch, anything that is supported by default in the client should switch over silently and automatically, but if you have added any new custom boorus, a small amount of additional work will be required on your end to get them working again. I will work with the other parser-creators in the community to make this as painless as possible, and there will be instructions in next week's release post. In any case, I expect to roll out nicer downloaders for the popular desired boorus (derpibooru, FA, etc...) as part of the normal upcoming update process, along with some other new additions like artstation and hopefully twitter username lookup.

In any case, watch this space! It's almost happening!

downloader overhaul (advanced)

So, all the GUGs are in place, and the dialog now saves. If you are interested in making some of your own, check what I've done. I'm going to swap out the legacy 'gallery identifier' object with GUGs this coming week, and fingers-crossed, it will mostly all just swap out no prob. I can update existing gallery identifiers to my new GUGs, which will automatically inherit the url classes and parsers I've already got in place, but custom boorus are too complicated for me to update completely automatically. I will try to auto-generate gallery and post url parsers, but users will need GUGs and url classes to get working again. I think the best solution is if we direct medium-level users to the parser github and have them link things together manually, and then follow-up with whatever 'easy import' object I come up with to bundle downloader-capability into a single object. And as I say above, I'll also fold in the more popular downloaders into some regular updates. I am open to discuss this more if you have ideas!

Furthermore, I've extended url classes this week to allow 'default' values for path components and query parameters. If that component or parameter is missing from a given URL, it will still be recognised as the URL class, but it will gain the default value during import normalisation. e.g. The kind of URL safebooru gives your browser when you type in a query:

https://safebooru.org/index.php?page=post&s=list&tags=contrapposto

Will be automatically populated with an initialising pid=0 parameter:

https://safebooru.org/index.php?page=post&pid=0&s=list&tags=contrapposto

This helps us with several "the site gives a blank page/index value for the first page, which I can't match to a paged URL that will then increment via the url class"-kind of problems. It will particularly help when I add drag-and-drop search--we want it so a user can type in a query in their browser, check it is good, and then DnD the URL the site gave them straight into hydrus and the page stuff will all get sorted behind the scenes without them having to think about it.
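The default-value normalisation described above can be sketched in a few lines of Python with `urllib.parse`. This is an illustration rather than the client's actual implementation. The function name `normalise_with_defaults` is hypothetical, and sorting the parameters alphabetically is an assumption chosen because it reproduces the safebooru example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def normalise_with_defaults(url, defaults):
    """Add any missing query parameters from `defaults`, then sort the query."""
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query, keep_blank_values=True))
    for key, value in defaults.items():
        params.setdefault(key, value)  # parameters already present always win
    query = urlencode(sorted(params.items()))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))


typed = "https://safebooru.org/index.php?page=post&s=list&tags=contrapposto"
print(normalise_with_defaults(typed, {"pid": "0"}))
```

A URL that already carries a `pid` value passes through with that value intact, so the first page and later pages can all be matched by one paged url class.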

I've updated a bunch of the gallery url classes this week with these new defaults, so again, if you are interested, please check them out. The Hentai Foundry ones are interesting.

I've also improved some of the logic behind download sites' 'source url' pre-import file status checking. Now, if URL X at Site A provides a Source URL Y to Site B, and the file Y is mapped to also has a URL Z that fits the same url class as X, Y is now distrusted as a source (wew). This stops false positive source url recognition when the booru gives the same 'original' source url for multiple files (including alternate/edited files). e621 has particularly had several of these issues, and I am sure several others do as well. I've been tracking this issue with several people, so if you have been hit by this, please let me know if this change fixes anything, particularly for new files going forward, which have yet to be 'tainted' by multiple incorrect known url mappings. I'll also be adding some 'just download the damned file' checkboxes to file import options as I have previously discussed.
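The X/Y/Z rule is easier to follow as code. The Python sketch below is a rough model only. The url classes, the example sites, and the `trust_source_url` helper are all hypothetical, and it adds one assumption the text does not spell out: a known URL identical to X itself does not count as a conflicting Z.

```python
import re

# Hypothetical url classes for two made-up sites.
URL_CLASSES = {
    "site_a_post": re.compile(r"^https://site-a\.example/post/\d+$"),
    "site_b_post": re.compile(r"^https://site-b\.example/art/\d+$"),
}


def url_class_of(url):
    """Return the name of the first url class that matches, or None."""
    for name, pattern in URL_CLASSES.items():
        if pattern.match(url):
            return name
    return None


def trust_source_url(import_url, source_url, known_urls_by_file):
    """Return False when the source url points at a file that is already
    known under a different url of the same class as the one being imported."""
    import_class = url_class_of(import_url)
    if import_class is None:
        return True
    for urls in known_urls_by_file.values():
        if source_url not in urls:
            continue
        for other in urls:
            if other != import_url and url_class_of(other) == import_class:
                return False
    return True


source_y = "https://site-b.example/art/99"
known = {"file_1": {source_y, "https://site-a.example/post/1"}}

# Post 2 claims the same source as post 1, so the source is distrusted.
print(trust_source_url("https://site-a.example/post/2", source_y, known))
```

When the check returns False, the importer would fall back to fetching the file instead of assuming it is already in the database.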

A user on the discord helpfully submitted some code that adds an 'import cookies.txt' button to the review session cookies panels. This could be a real neat way to effect fake logins, where you just copy your browser's cookies, so please play with this and let me know how you get on. I had mixed success getting different styles of cookies.txt to import, so I would be interested in more information, and to know which sites work great at logging in this way, and which are bad, and which cookies.txt browser add-ons are best!
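For anyone who wants to check whether a given cookies.txt is in a loadable shape before trying the button, Python's standard library can read the Netscape/Mozilla format. The sketch below is independent of hydrus and says nothing about how the submitted code works. The sample cookie, domain, and `load_cookies` helper are invented for illustration.

```python
import os
import tempfile
from http.cookiejar import MozillaCookieJar

# A made-up cookies.txt in Netscape format. The tab-separated fields are:
# domain, include-subdomains flag, path, secure flag, expiry, name, value.
SAMPLE = (
    "# Netscape HTTP Cookie File\n"
    ".example.com\tTRUE\t/\tFALSE\t4102444800\tsession_id\tabc123\n"
)


def load_cookies(path):
    """Load a cookies.txt file and return {(domain, name): value}."""
    jar = MozillaCookieJar()
    jar.load(path, ignore_discard=True, ignore_expires=True)
    return {(cookie.domain, cookie.name): cookie.value for cookie in jar}


with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as handle:
    handle.write(SAMPLE)
    cookie_path = handle.name

try:
    cookies = load_cookies(cookie_path)
finally:
    os.remove(cookie_path)

print(cookies)
```

Files that fail to load here, for example because the header line is missing or the fields are not tab-separated, are one plausible reason different export add-ons give mixed results.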

a web interface to the server

I have been talking for a bit with a user who has written a web interface to the hydrus server. He is a clever dude who has done some neat work, and his project is now ready for people to try out. If you are fairly experienced in hydrus and would like to experiment with a nice-looking computer- and phone-compatible web interface to the general file/tag mapping system hydrus uses, please check this out:

https://github.com/mserajnik/hydrusrvue

https://github.com/mserajnik/hydrusrv

https://github.com/mserajnik/hydrusrv-docker

In particular, check out the live demo and screenshots here:

https://github.com/mserajnik/hydrusrvue/#demo

Let him know how you like it! I expect to write proper, easier APIs in the coming years, which will allow projects like this to do all sorts of new and neat things.

full list

clients should now have objects for all default downloaders. everything should be prepped for the big switchover:

wrote gallery url generators for all the default downloaders and a couple more as well

wrote a gallery parser for deviant art--it also comes with an update to the DA url class because the meta 'next page' link on DA gallery pages is invalid wew!

wrote a gallery parser for hentai foundry, inkbunny, rule34hentai, moebooru (konachan, sakugabooru, yande.re), artstation, newgrounds, and pixiv artist galleries (static html)

added a gallery parser for sankaku

the artstation post url parser no longer fetches cover images

url classes can now support 'default' values for path components and query parameters! so, if your url might be missing a page=1 initialisation value due to user drag-and-drop, you can auto-add it in the normalisation step!

if the entered default does not match the rules of the component or parameter, it will be cleared back to none!

all appropriate default gallery url classes (which is most) now have these default values. all default gallery url classes will be overwritten on db update

three test 'search initialisation' url classes that attempted to fix this problem a different way will be deleted on update, if present

updated some other url classes

when checking source urls during the pre-download import status check, the client will now distrust parsed source urls if the files they seem to refer to also have other urls of the same url class as the file import object being actioned (basically, this is some logic that tries to detect bad source url attribution, where multiple files on a booru (typically including alternate edits) are all source-url'd back to a single original)

gallery page parsing now discounts parsed 'next page' urls that are the same as the page that fetched them (some gallery end-points link themselves as the next page, wew)

json parsing formulae that are set to parse all 'list' items will now also parse all dictionary entries if faced with a dict instead!

added new stop-gap 'stop checking' logic in subscription syncing for certain low-gallery-count edge-cases

fixed an issue where (typically new) subscriptions were bugging out trying to figure a default stop_reason on certain page results

fixed an unusual listctrl delete item index-tracking error that would sometimes cause exceptions on the 'try to link url stuff together' button press and maybe some other places

thanks to a submission from user prkc on the discord, we now have 'import cookies.txt' buttons on the review sessions panels! if you are interested in 'manual' logins through browser-cookie-copying, please give this a go and let me know which kinds of cookies.txt do and do not work, and how your different site cookie-copy-login tests work in hydrus.

the mappings cache tables now have some new indices that speed up certain kinds of tag search significantly. db update will spend a minute or two generating these indices for existing users

advanced mode users will discover a fun new entry on the help menu

the hyperlinks on the media viewer hover window and a couple of other places are now a custom control that uses any custom browser launch path in options->files and trash

fixed an issue where certain canvas edge-case media clearing events could be caught incorrectly by the manage tags dialog and its subsidiary panels

think I fixed an issue where a client left with a dialog open could sometimes run into trouble later trying to show an idle time maintenance modal popup and give a 'C++ assertion IsRunning()' exception and end up locking the client's ui

manage parsers dialog will now autosort after an add event

the gug panels now normalise example urls

improved some misc service error handling

rewrote some url parsing to stop forcing '+'->' ' in our urls' query texts

fixed some bad error handling for matplotlib import

misc fixes

next week

The big GUG overhaul is the main thing. The button where you select which site to download from will seem only to get some slightly different labels, but in truth a whole big pipeline behind that button needs to be shifted over to the new system. GUGs are actually pretty simple, so I hope this will only take one week, but we'll see!

1 note

Text

What is Docker CE? | Learn how to install Docker

What is docker

Docker is a program that provides a runtime environment for applications, whether they come from Docker Hub or are built locally. It packages an application and all the files it requires into an image, and runs that image as a container. To run a Dockerized application on another system, we only need to run that image, without installing any other requirements separately.

Docker is easy to use on Ubuntu. It also supports the Windows and Mac operating systems. On Windows, it runs on Windows 10 Enterprise only; on Windows 7/8/8.1 or Windows 10 Home, use Docker Toolbox instead.

There are two kinds of Docker software for programmers.

Docker CE :- Free community edition :- This is open source software.

Docker EE :- Docker Enterprise Edition :- This is paid software designed for enterprise development and IT teams who build, ship, and run business-critical applications in production.

Requirements :-

Operating system (ubuntu)

Docker

Steps to install Docker on Ubuntu. 1. Open a terminal and follow these commands to install Docker.

Just type docker to check whether Docker is already on your system. $ docker

2. Check the version of the operating system. To install Docker CE, we need the 64-bit version of one of these Ubuntu versions: 1. Cosmic 18.10 2. Bionic 18.04 (LTS) 3. Xenial 16.04 (LTS) $ lsb_release -a

3. Update the apt package index. $ sudo apt-get update

4. If required, then install. $ sudo apt-get install

5. If Docker is not on your system, install it. $ sudo apt-get install docker.io

6. Now check the status of Docker. $ sudo systemctl status docker
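The release check in step 2 can also be scripted. Below is a minimal Python sketch, assuming the usual `lsb_release -a` output with a `Codename:` line; the helper names `parse_codename` and `is_supported` are made up for illustration and the sample text is hand-written.

```python
# Ubuntu codenames this guide lists as supported for Docker CE.
SUPPORTED = {"cosmic", "bionic", "xenial"}


def parse_codename(lsb_output):
    """Return the value of the 'Codename:' line from `lsb_release -a` output, or None."""
    for line in lsb_output.splitlines():
        key, _, value = line.partition(":")
        if key.strip().lower() == "codename":
            return value.strip().lower()
    return None


def is_supported(lsb_output):
    """True when the reported codename is one of the releases named above."""
    return parse_codename(lsb_output) in SUPPORTED


# Hand-written sample of what `lsb_release -a` prints on Ubuntu 18.04.
sample = (
    "Distributor ID:\tUbuntu\n"
    "Description:\tUbuntu 18.04.2 LTS\n"
    "Release:\t18.04\n"
    "Codename:\tbionic\n"
)
print(is_supported(sample))
```

On a real machine, the text passed in would be the captured output of `lsb_release -a`.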

Steps to add a user to the docker group 1. Why sudo :- We have to use the 'sudo' command to run Docker commands because the Docker daemon runs as the 'root' user. Once your user joins the docker group, you can run Docker commands without sudo.

2. '$USER' is a shell variable that holds the name of the current system user. The commands to add the user are listed below. 1. $ sudo groupadd docker 2. $ sudo gpasswd -a $USER docker 3. $ newgrp docker

3. Second way to add a user to the docker group. 1. $ sudo groupadd docker 2. $ sudo usermod -aG docker $USER

4. After adding a user to the docker group, we have to log out and back in (or restart) so that we can run Docker commands without 'sudo'.

5. Command to uninstall docker. $ sudo apt-get remove docker docker-engine docker.io containerd runc
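To confirm that the group change took effect, the membership can be checked from Python as well. This is a small sketch using the standard `grp` module (Unix only); the function name `in_group` is an assumption for illustration. Note that it only sees users listed as supplementary members of the group, which is what `gpasswd -a` and `usermod -aG` produce.

```python
import grp


def in_group(user, group_name="docker"):
    """Return True if `user` is listed as a member of `group_name`."""
    try:
        members = grp.getgrnam(group_name).gr_mem
    except KeyError:
        # The group does not exist on this machine.
        return False
    return user in members


# Example call; the result depends on the machine it runs on.
print(in_group("root"))
```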

Docker commands 1. To check the Docker version $ docker --version

2. To check Docker and containers info $ docker info

3. Find out which users are in the docker group and who is allowed to start docker containers. 1. $ getent group sudo

2. $ getent group docker

4. The 'pull' command fetches the named image from the Docker registry and saves it to our system. $ docker pull busybox (busybox is the name of the image)

5. You can use the 'docker images' command to see a list of all images on your system. $ docker images

6. To find where the images are stored on the system, run docker info and look for the root directory:- $ docker info shows the path of Docker:- "Docker Root Dir: /var/lib/docker"

Commands to check the images:-

$ cd /var/lib/docker

$ ls

pardise@pardise-MS-7817:/var/lib/docker$ cd image

bash: cd: image: Permission denied

Permission is denied for regular users, so switch to root:

$ sudo su

root@pardise-MS-7817:/var/lib/docker# ls

Now the docker info command will provide all details about images and containers

root@pardise-MS-7817:/var/lib/docker/image/overlay2# docker info

7. Now run a Docker container based on this image. When you call run, the Docker client finds the image (busybox in this case), loads up the container and then runs a command in that container. $ docker run busybox

8. Here the Docker client runs the 'echo' command in our busybox container and then exits. $ docker run busybox echo "hello from busybox"

9. Command to show all containers that are currently running. $ docker ps

10. List all containers, including stopped ones. Notice that the STATUS column shows that these containers exited a few minutes ago. $ docker ps -a

CONTAINER ID – Unique ID given to each container.

IMAGE – Base image from which the container has been started.

COMMAND – Command which was used when the container was started.

CREATED – Time at which the container was created.

STATUS – The current status of the container (Up or Exited).

PORTS – Port numbers, if any, forwarded to the Docker host for communicating with the external world.

NAMES – The container name; you can specify your own name.

11. To start a container $ docker start (container id)

12. To log in to a container $ docker attach (container id)

13. To stop container $ docker stop (container id)
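The container listing can also be read from a script. The sketch below assumes the `--format` option of `docker ps` with the `.ID`, `.Image`, `.Status` and `.Names` template fields; the function names and the sample rows are made up for illustration.

```python
import subprocess

# Go-template format string: one tab-separated row per container.
PS_FORMAT = "{{.ID}}\t{{.Image}}\t{{.Status}}\t{{.Names}}"


def parse_ps(output):
    """Parse tab-separated `docker ps` rows into a list of dicts."""
    containers = []
    for line in output.splitlines():
        if not line.strip():
            continue
        container_id, image, status, name = line.split("\t")
        containers.append(
            {"id": container_id, "image": image, "status": status, "name": name}
        )
    return containers


def list_containers():
    """Run `docker ps -a` and return the parsed rows (needs Docker installed)."""
    result = subprocess.run(
        ["docker", "ps", "-a", "--format", PS_FORMAT],
        stdout=subprocess.PIPE, check=True, universal_newlines=True,
    )
    return parse_ps(result.stdout)


def exited(containers):
    """Keep only containers whose STATUS column starts with 'Exited'."""
    return [c for c in containers if c["status"].startswith("Exited")]


# Hand-written sample rows, so the parsing can be tried without Docker.
sample = (
    "419600f601f9\tbusybox\tExited (0) 5 minutes ago\tfirst\n"
    "0a1b2c3d4e5f\tbusybox\tUp 2 hours\tsecond\n"
)
print([c["id"] for c in exited(parse_ps(sample))])
```

The IDs returned by `exited` are the ones that could be passed on to `docker start` or `docker rm`.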

Difference between images and containers

A Docker image is a set of files which has no state, whereas a Docker container is a running instance of a Docker image. In other words, a Docker container is the run-time instance of an image.

Remove images and containers 1. Docker containers are not removed automatically. First stop them, then use the docker rm command with the container IDs. $ docker rm 419600f601f9 (container_id)

2. Command to delete all containers that have a status of exited. The -q flag returns only the container IDs, and -f filters the output based on the conditions provided. $ docker rm $(docker ps -a -q -f status=exited)

3. Command to delete all stopped containers. $ docker container prune

4. Command to delete images. To remove all images which are not referenced by any existing container, not just dangling ones, use the -a flag: $ docker image prune -a

A dangling image is an image that is not tagged and is not used by any container. To remove dangling images, type:-

$ docker image prune

$ docker rmi image_id image_id......

5. Removing all unused objects. This removes all stopped containers, all dangling images, and all unused networks. To also remove all images which are not referenced by any existing container, use the -a flag: $ docker system prune -a

You can follow us and our code at our GitHub repository: https://github.com/amit-kumar001/

#What is docker#learn how to install Dockers#Steps to install docker#Difference between images and containers

0 notes

Text

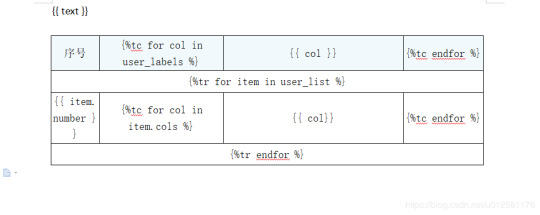

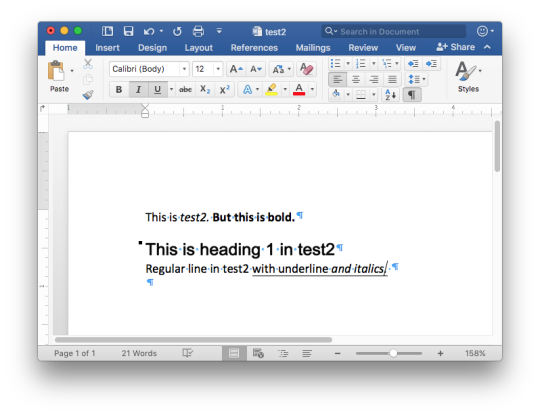

Python Docx

Python Docx4j

Python Docx To Pdf

Python Docx Table

Python Docx2txt

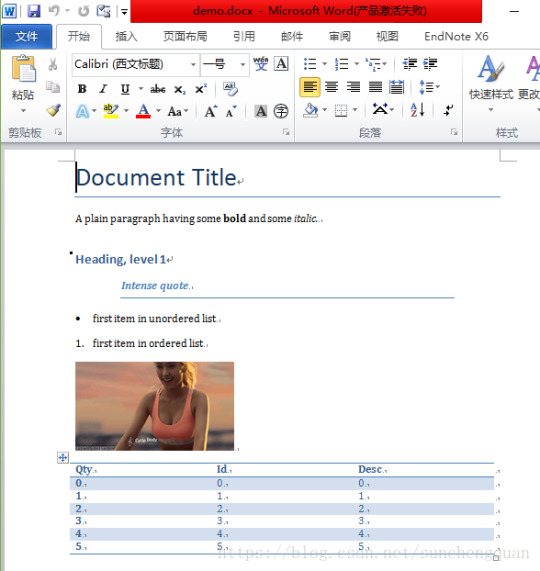

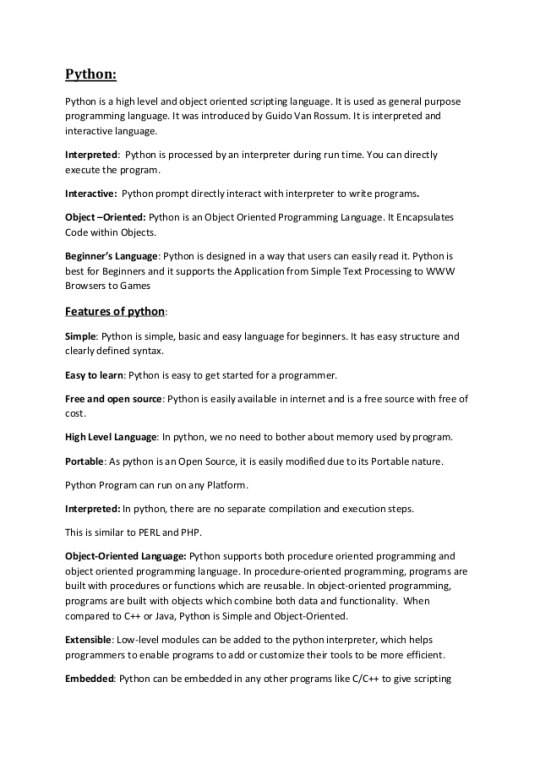

When you ask someone to send you a contract or a report, there is a high probability that you’ll get a DOCX file. Whether you like it or not, it makes sense considering that 1.2 billion people use Microsoft Office, although the definition of “use” is quite vague in this case. DOCX is a binary file which, unlike XLSX, is not famous for being easy to integrate into your application. PDF is much easier when you care more about how a document is displayed than about its ability to be modified further. Let’s focus on that.

Python-docx versions 0.3.0 and later are not API-compatible with prior versions. Python-docx is hosted on PyPI, so installation is relatively simple, and just depends on what installation utilities you have installed. Python-docx may be installed with pip if you have it available.

Installing Python-Docx Library

Several libraries exist that can be used to read and write MS Word files in Python. However, we will be using the python-docx module owing to its ease of use. Execute the following pip command in your terminal to download the python-docx module: $ pip install python-docx

Python has a few great libraries to work with DOCX (python-docx) and PDF files (PyPDF2, pdfrw). Those are good choices and a lot of fun for reading or writing files. That said, I know I'd fail miserably trying to achieve 1:1 conversion.

Release v0.8.10 (Installation)

python-docx is a Python library for creating and updating Microsoft Word (.docx) files.

Looking further, I came across unoconv. Universal Office Converter is a tool that converts between any document formats supported by LibreOffice/OpenOffice. That sounds like a solid solution for my use case, where I care more about quality than anything else. As execution time isn't my problem, my only concern was whether it’s possible to run LibreOffice without an X display. Apparently, LibreOffice can be run in headless mode and supports conversion between various formats, sweet!

I’m grateful to unoconv for the idea and for a great README explaining multiple problems I might come across. At the same time, I’m put off by the number of open issues and abandoned pull requests. If I get the versions right, how hard can it be? Not hard at all, with a few caveats though.

Testing converter

LibreOffice is available on all major platforms and has an active community. It's not new-hot-js-framework active, but there is still plenty of good reading material and support. You can get your copy from the download page. Be a good user and go with an up-to-date version. You can always downgrade in case of any problems, and feedback on the latest release is always appreciated.

On macOS and Windows the executable is called soffice, and on Linux it is called libreoffice. I'm on macOS; the soffice executable isn't available in my PATH after the installation, but you can find it inside LibreOffice.app. To test how LibreOffice deals with your files you can run:
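A sketch of such a test invocation, written in Python so it covers all three platforms. It assumes LibreOffice's `--headless`, `--convert-to` and `--outdir` options and the default install path of LibreOffice.app on macOS; `build_command` and the file names are hypothetical.

```python
import sys


def libreoffice_exec():
    """Return the LibreOffice executable for the current platform."""
    if sys.platform == "darwin":
        # Assumed default location of LibreOffice.app; adjust if installed elsewhere.
        return "/Applications/LibreOffice.app/Contents/MacOS/soffice"
    if sys.platform.startswith("win"):
        return "soffice"
    return "libreoffice"


def build_command(source, outdir):
    """Build the argument list for a headless DOCX-to-PDF conversion."""
    return [
        libreoffice_exec(),
        "--headless",
        "--convert-to", "pdf",
        "--outdir", outdir,
        source,
    ]


# Print the command line so it can be pasted into a terminal.
print(" ".join(build_command("contract.docx", "out")))
```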

In my case the results were more than satisfying. The only problem I saw was a misalignment in a file where the alignment was done with spaces, sad but true. This problem was caused by missing fonts and the different widths of the replacement fonts. No worries, we'll address this problem later.

Setup I

While reading unoconv issues, I've noticed that many problems are caused by version mismatches. I'm going with Docker so I can have a pretty stable setup and be sure that everything works.

Let's start by defining a simple Dockerfile, with just the dependencies and an ADD of one DOCX file for testing:

Let's build an image:

After the image is created, we can run the container and convert the file inside the container:

Running LibreOffice as a subprocess

We want to run LibreOffice converter as a subprocess and provide the same API for all platforms. Let's define a module which can be run as a standalone script or which we can later import on our server.

The required arguments that convert_to accepts are the folder in which we save the PDF and the path to the source file. Optionally, we specify a timeout in seconds. I’m saying optional, but consider it mandatory. We don’t want a process to hang too long in case of any problems, and we want to limit the computation time we are willing to give to each conversion. The LibreOffice executable location and name depend on the platform, so edit libreoffice_exec to support the platform you’re using.

subprocess.run doesn’t capture stdout and stderr by default. We can easily change the default behavior by passing subprocess.PIPE. Unfortunately, in case of failure, LibreOffice will still exit with return code 0 and nothing will be written to stderr. I decided to look for the success message, assuming that it won’t be there in case of an error, and raise LibreOfficeError if it is missing. This approach hasn’t failed me so far.
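The approach described above could look roughly like the following. This is a sketch, not the original module: it assumes LibreOffice prints a success line of the form `convert <source> -> <target> using filter : ...`, and it takes the executable as a parameter (`executable`, a hypothetical name) instead of a platform lookup.

```python
import re
import subprocess


class LibreOfficeError(Exception):
    """Raised when the expected success message is missing from the output."""


def parse_success(stdout):
    """Return the target path from the success line, or raise LibreOfficeError."""
    # Assumed success line: "convert /in/a.docx -> /out/a.pdf using filter : ..."
    match = re.search(r"-> (.*?) using filter", stdout)
    if match is None:
        raise LibreOfficeError(stdout)
    return match.group(1)


def convert_to(folder, source, timeout=None, executable="libreoffice"):
    """Convert `source` to PDF inside `folder`; `timeout` is in seconds."""
    args = [executable, "--headless", "--convert-to", "pdf",
            "--outdir", folder, source]
    # PIPE captures stdout/stderr; TimeoutExpired is raised if the run takes too long.
    process = subprocess.run(
        args, stdout=subprocess.PIPE, stderr=subprocess.PIPE, timeout=timeout
    )
    # The return code is not reliable here, so inspect the output instead.
    return parse_success(process.stdout.decode())
```

A call such as `convert_to("out", "contract.docx", timeout=15)` would return the path of the generated PDF, or raise `LibreOfficeError` with whatever LibreOffice printed.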

Uploading files with Flask

Converting using the command line is ok for testing and development but won't take us far. Let's build a simple server in Flask.

We'll need a few helper functions to work with files and a few custom errors for handling error messages. The upload directory path is defined in config.py. You can also consider using flask-restplus or flask-restful, which make handling errors a little easier.
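One possible shape for those helpers, as a sketch only: the names `RestAPIError`, `BadRequestError` and `save_to` are assumptions, and the upload object is only expected to offer `.filename` and `.save(path)`, which is what Flask's uploaded file objects provide.

```python
import os
import uuid


class RestAPIError(Exception):
    """Base error carrying an HTTP status code and a payload for the response."""

    def __init__(self, status_code=500, payload=None):
        super().__init__(payload)
        self.status_code = status_code
        self.payload = payload


class BadRequestError(RestAPIError):
    """Error for invalid client input, mapped to HTTP 400."""

    def __init__(self, payload=None):
        super().__init__(400, payload)


def save_to(folder, file):
    """Save an uploaded file under a unique name and return the saved path."""
    os.makedirs(folder, exist_ok=True)
    # Keep the original extension so the converter can detect the format.
    extension = os.path.splitext(file.filename)[1]
    target = os.path.join(folder, uuid.uuid4().hex + extension)
    file.save(target)
    return target
```

Generating a fresh name for each upload avoids collisions between files that share the same original name.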