#human pattern recognition bug

Text

I've been of the opinion that using ChatGPT to try and gather information is about as effective as using gyromancy or a ouija board. It doesn't actually produce information about the real world, it just strings together words the way a human would, and people fill in the gaps with their own imagination and think they've been told something profound.

I feel like using the word AI to refer to what are little more than predictive bots made things sound much more impressive to the general public than they really are. They co-opted the word AI is what they did. This is not a digital person who can create and have opinions. This is a customer service chat bot.

2K notes


Text

barely an artist im just a pervert who likes looking at the planes of womens faces and musculature lines. well at least im drawing and not murdering people in my basement bcs thats the other outlet for the fascination

#extremely like. animal brained as in pattern recognition i feel like i never left the infant development stage of oogling faces with my eyes#bugged out#i think it is like genuinely something evolutionary to be like obsessed with the lines of the human body and thats why its such an#enduring focal point of like all art for centuries there is no Artistic Taste behind it you are a monkey that likes looking at other monkeys#social pack species voice mmm i dont know man something about other people is just really interesting

5 notes


Note

if you’re up for it, what do your sophonts (I hope I’m spelling that right I’m so tired) find most disturbing about humanity and/or their appearance/looks?

Attitudes may vary, some xenos don't get what the big deal is about their fellows being creeped out by us (kind of like how some humans are with bugs, birds, or reptiles). The General view, though, is that humans are the weirdest looking 'naturally occurring' sophonts (so, excluding muttreazik lol)

Here's some specifics, I'm mostly doing looks because idk:

To sundyne, however, humans don't look scary (in fact, humans are very easily prey animal size). We're just kinda gross because of our oily skin, weird leg layout, and lack of appealing bright colors/contrast.

Cerest: Second only to the uncanny nightmare that is a muttreazik born from their species, there is nothing more unnerving to the Drecu than a human. The thing that makes us creepy is how we look somewhat similar to them (obligate bipeds, arms shorter than legs, kinda flat faces with similar layouts, similar eyes, and our hair has similar placements!) Some of our voices even sound similar to theirs. This relative closeness triggers the in-group/out-group pattern recognition part of their brains, and since we don't match up entirely to the in-group (a drecu) we give off a vaguely threatening "imposter" vibe. It doesn't help that humans are usually bigger and heavier than the average dorest, we could easily get close and use our big arms to restrain and strangle them or break their neck, which unsettles rox by extension.

Kixeli: to them, humans also look a bit like Kixeli that have been stretched and smashed into a different shape (giving an uncanny vibe to some, but to others this is a benefit that makes us more appealing as friends). However, the thing that is mostly cited as creepy is our relative "independence". A lot of humans live alone on purpose and dont constantly seek out a lot of human contact, a concept that is frightening to the average kixeli.

Rossetians: the thing that most rossetians say is creepy is the human body plan and our eyes. humans are pretty big relative to them, have powerful grip strength and arms, and can climb. This gives us a resemblance to some long-legged arboreal predators from the rossetian homeworld (aka its easy to imagine us jumping on their back/forebody to force them to the ground, straddling them with our legs, grabbing their horn, and then smashing their head on the ground a few times. Yikes). As for the eye thing, human eyes are shockingly rossetian-like (down to the amount of visible white sclera), but theyre on a many-toothed hairy bipedal weirdo. Very unnerving.

Prectikar: Many prectikar think that humans look sick and malnourished (having relatively sparse fur and small gangly limbs). In addition, we can run for long periods without stopping, live for a very long time, and we can also mimic some of their sounds pretty well, which overall calls to mind different creepy creatures from their folklore. They don't really see us as "scary", but they're sometimes morbidly surprised or fascinated by our ability to survive grievous injuries despite looking so feeble.

18 notes


Text

In approaching the first chapter of The Hound of the Baskervilles in this week's Letters from Watson, I initially felt that I was sort of cheating as I know I've read this more recently than middle childhood. Then I read the first chapter and realized my memory of the story consists of a vague impression that it has a moor and a dog. Oh well.

Mortimer's staff being referred to as a "Penang lawyer" reminds us that when you live in a colonialist society, the mindset is everywhere. The staff is presumably made from Licuala acutifida, a sort of cane native to China, southeast Asia, and Pacific Islands. By 1889, when the novel is set (five years after the date on the walking stick), British Malaya had been under direct crown rule for a couple decades. The Brits had owned Penang since at least the secret Burney Treaty of 1826.

Dr. Watson's initial wrong guesses provide a window into his world and preconceptions. My first reaction was "how did he think hunt rather than hospital when he himself has medical training?"

Growing up in the genteel countryside would explain "hunt." But Charing Cross Hospital, then located just off the Strand, would have been only about two miles from Baker Street. How do you miss a large hospital?

There might be a titch of snobbery in play, as Watson did his residency at much, much older Barts (St. Bartholomew's). Barts dates from the 12th century, while Charing Cross Hospital was an early 19th century upstart. Watson also went for additional training at the military hospital at Netley.

Watson really puts up with a lot, though.

James Mortimer's publications focus on the idea that illness stems from throwbacks to a more primitive state, an idea also applied to criminology of the day. Through 2024 eyes, this is unlikely to be a good thing, but let's see where Doyle goes with it. I have faith in Holmes due to his love of that book that attributes much of human civilization to non-white world cultures. (Coveting Holmes' skull reeks of phrenology, but I can't believe this was meant as an appealing trait.)

After being informed that Sholto was based on Oscar Wilde, I'm wondering which of Doyle's acquaintances was the pattern for James Mortimer (who is not addressed as "doctor" because he's a surgeon; it's a British thing).

While Holmes describes Mortimer as "amiable" before meeting him, he does not find being described as "the second highest expert in Europe" all that simpatico. There's some impatience in Holmes' chapter-ending request that Mortimer explain why he's there.

At this early stage, I dislike James Mortimer. It's partly the nature of his publications, but also the false humility of calling himself a "dabbler in science" when he in fact has publications, an award, and a degree. It's dabbling to collect bones or bugs or whatever and be perpetually working on a treatise that never gets finished or published. It's not dabbling when you have official recognition within your chosen career for your research.

So what is Mortimer here about?

45 notes


Text

Galactic species

Page One

Asari

Hugging them

Imperfect perfection

Dating humans angst

Repackaged finance bros

Homophobia is a human made-up concept

asari human resemblance

Parasite overlap and analysis

-

Turians

Finding out about A/B/O

Taking human porn seriously

Fixing their reputation in bed amidst humans

Human captian turian crew pt.1

Human captian turian crew pt.2

Getting along with humans after the war

Bff with humans

Embarrassed by humans rescuing them

Red scars

Human "makeup" facepaint

Pretty turian face paint analysis

Cuddling with them

Don't give turians chocolate

Worst first date ever

Submissive in bed

Sirens

-

Salarians

Hugging them

Platonic best friend love interest

Stalking for friendship

Encouraging them to go wild

Worst roommate ever

Legit hot

Seeing what pink really is

Lack of sexual attraction

Don't touch that

Don't touch that pt.2

-

Hanar

Jellyfish Jam

Pink erorr skin colour

-

Krogans

Hugging them

Three hearts and symbols of love

-

Drell

The hatman

Human getting hooked on their skin venom

Love at first sight

-

Elcor

Seeing humans as puppies

Human smiles

-

Angara

Love

-

Geth

Connecting with them

Finding warmth in humanity

Geth harem

-

Quarians

Quarian BF teased by human

Fetish for humans

They are bugs

-

Protheans (just Javik tbh)

Humans are his favourites

Humans are adorable

Acts of service as domination

Never rejecting a human touch

Not eating humans

-

Humans

Human porn trending

Humans are a bully

Human bites

Reduced to husks by the reapers

Seasonal depression and sunlight

Being helpful by nature

Tips and tricks to befriending aliens

Living on the citadel as a human with hair

Food as a love language

Heart symbol/emoji

Making music

Fear of the unknown

Pattern recognition and reading other species cues

Helldivers inspired branch

Problem solvers

What defines a human

Naming things

First contact with the Citadel

Human singing along

First contact and getting an embassy

Afterlife

Mimicking things

Humans & Turians VS Humans & Asari

Humans and Art

-

Several Species

Remake of human classicals

Attempting to befriend the humans

Milk

Blood

Manipulation

Mental illness

Reaction to human world cup

Hanar and Keeper companions

Their age of maturity

Safe sex & educational porn

Their reaction to human beds & plushies

Search history after meeting a human

Being the only human on an alien ship

Hanar & Geth arguing

Are they packing down there

Drell & Turian black sclera

Military fraternization

Teeth and biting

Cats and pets

-

Galactic species

Page One <- you're here

Page Two

#☆masterlist#☆asari#☆turians#☆salarians#☆korgans#☆drell#☆hanar#☆javik#☆elcor#☆protheans#☆angara#☆quarians#☆humans#☆human kink#☆geth#☆keepers#☆reapers#☆kett

40 notes


Text

Tbh posts about animals that describe humans from animals' perspectives as "eldritch gods" or anything of the sort are so obnoxious and arrogant. Like a creature doesn't comprehend another creature interacting with them non-violently or have pattern recognition. Humans might be a bit strange but animals mingle/help/parasitize each other constantly in nature.

There are entire bird species whose survival strategy is to have other bird species raise them. There are open ocean fish who stop off at reefs specifically to be cleaned by smaller fish, same with megafauna and smaller animals who eat their ticks. Ants farm fungus and spiders keep pet frogs. Crows and wolves, and coyotes and badgers, and deer and birds, they all work together. All sorts of burrows created by one animal are taken over by other animals. There are bugs and plants who have developed mutual relationships. There are all sorts of ecosystems heavily shaped and controlled by certain organisms.

It is not special to interact with other creatures outside eating them. We are not incomprehensible eldritch beings looking in from the outside, we're just weird apes who use tools and make things and are open to interaction, we are part of the ecosystem too. The only animal that doesn't see humans as another animal is humans.

11 notes


Text

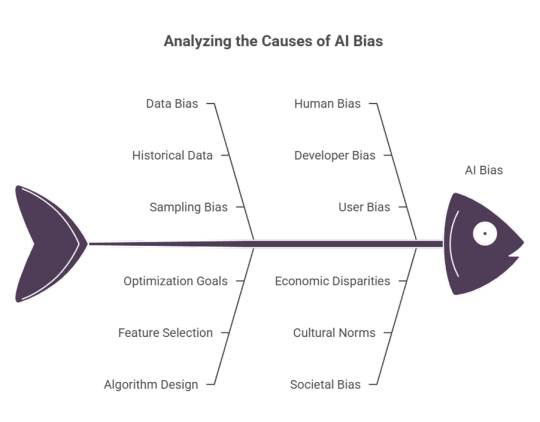

Bias in AI: The Glitch That Hurts Real People

Yo, Tumblr crew! Let’s chat about something wild: AI bias. AI’s everywhere: picking your Netflix binge, powering self-driving cars, even deciding who gets a job interview. It’s super cool, but here’s the tea: AI can mess up by favouring some people over others, and that’s a big deal. Let’s break down what’s going on, why it sucks, and how we can make things better.

What’s AI Bias?

Let’s imagine that AI is supposed to be a neutral, super-smart robot brain, right? But sometimes it’s more like that friend who picks favorites without even realizing it. AI bias is when the system screws up by treating certain groups unfairly, like giving dudes an edge in job applications or struggling to recognize faces of people with darker skin. It’s not the AI malfunctioning; it’s just doing what it was taught, and sometimes its teaching is flawed.

AI bias and its insights

Shady Data: AI learns from data humans feed it. If that data’s from a world where, say, most tech hires were guys, the AI might “learn” to pick guys over others.

Human Oof Moments: People build AI, and we’re not perfect. Our blind spots, like not thinking about how different groups are affected, can end up in the code.

Bad Design Choices: The way AI’s built can accidentally lean toward certain outcomes, like prioritizing stuff that seems “normal” but actually excludes people.

Why’s This a Big Deal?

A few years back, a big company ditched an AI hiring tool because it was rejecting women’s résumés. Yikes.

Facial recognition tech has messed up by misidentifying Black and Brown folks way more than white folks, even leading to wrongful arrests.

Ever notice job ads for high-paying gigs popping up more for guys?

This isn’t just a tech glitch—it’s a fairness issue. If AI keeps amplifying the same old inequalities, it’s not just a bug; it’s a system that’s letting down entire communities.

Where’s the Bias Coming From?

Old-School Data: If the data AI’s trained on comes from a world with unfair patterns (like, uh, ours), it’ll keep those patterns going. For example, if loan records show certain groups got denied more, AI might keep denying them too.

Not Enough Voices: If the folks building AI all come from similar backgrounds, they might miss how their tech affects different people. More diversity in the room = fewer blind spots.

Vicious Cycles: AI can get stuck in a loop. If it picks certain people for jobs, and only those people get hired, the data it gets next time just doubles down on the same bias.

Okay, How Do We Fix This?

There are ways to make things fairer, and it’s totally doable if we put in the work.

Mix Up the Data: Feed AI data that actually represents everyone—different races, genders, backgrounds.

Be Open About It: Companies need to spill the beans on how their AI works. No more hiding behind “it’s complicated.”

Get Diverse Teams: Bring in people from all walks of life to build AI. They’ll spot issues others might miss and make tech that works for everyone.

Keep Testing: Check AI systems regularly to catch any unfair patterns. If something’s off, tweak it until it’s right.

Set Some Ground Rules: Make ethical standards a must for AI. Fairness and accountability should be non-negotiable.
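If you're wondering what "Keep Testing" could look like in practice, here's a rough Python sketch. It compares how often each group gets picked and flags a big gap. The function names, the made-up sample, and the 0.8 cutoff (borrowed from the "four-fifths" rule of thumb) are all assumptions for illustration, not a standard every audit uses.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive decisions per group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True when the system picked that person.
    """
    totals = defaultdict(int)
    picked = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            picked[group] += 1
    return {group: picked[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest one (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


# Made-up audit sample: 10 applicants per group.
sample = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed cutoff; pick one that fits your context
```

A check like this only catches one kind of unfairness (unequal pick rates), so it's a starting point rather than proof that a system is fair.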

What Can We Do?

Spread the Word: Talk about AI bias! Share posts, write your own, or just chat with friends about it. Awareness is power.

Call It Out: If you see a company using shady AI, ask questions. Hit them up on social media and demand transparency.

Support the Good Stuff: Back projects and people working on fair, inclusive tech. Think open-source AI or groups pushing for ethical standards.

Let’s Dream Up a Better AI

AI’s got so much potential: think better healthcare, smarter schools, or even tackling climate change. By using diverse data, building inclusive teams, and keeping companies honest, we can make it work for everyone.

#AI #TechForGood #BiasInTech #MakeItFair #InclusiveFuture

@sruniversity

2 notes


Text

What is the difference between AI testing and automation testing?

Automation Testing Services

As technology continues to evolve, so do the methods used to test software. Two popular approaches in the industry today are AI testing and Automation Testing. While they are often used together or mentioned side by side, they serve different purposes and bring unique advantages to software development. Let's explore how they differ.

What Is Automation Testing?

Automation Testing involves writing test scripts or using testing tools to run tests automatically, without human intervention. It's commonly used to speed up repetitive testing tasks like regression testing, performance checks, or functional validations. These tests follow a fixed set of rules and are best suited to stable, predictable applications. Automation Testing improves overall efficiency, reduces human error, and helps developers release software faster and with greater precision.
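As a minimal illustration of the "fixed set of rules" idea, the Python sketch below runs a pre-written list of cases against a function and collects any mismatches. The `validate_login` function and its rules are hypothetical stand-ins for real application code; an actual suite would typically use a test framework rather than a hand-rolled loop.

```python
def validate_login(username, password):
    """Hypothetical function under test: needs a name and an 8+ character password."""
    return bool(username) and len(password) >= 8


# Fixed, pre-written cases: (username, password, expected result).
REGRESSION_CASES = [
    ("alice", "correct-horse", True),
    ("alice", "short", False),
    ("", "correct-horse", False),
]


def run_regression_suite(cases):
    """Run every scripted case and return the ones whose result was unexpected."""
    failures = []
    for username, password, expected in cases:
        actual = validate_login(username, password)
        if actual != expected:
            failures.append((username, password, expected, actual))
    return failures


failures = run_regression_suite(REGRESSION_CASES)
```

The script checks only what it was told to check. If the login rules change, someone has to edit `REGRESSION_CASES` by hand.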

What Is AI Testing?

AI testing uses artificial intelligence technologies like machine learning (ML), natural language processing (NLP), and pattern recognition to improve the software testing process. Unlike Automation Testing, AI testing can learn from data, predict where bugs might occur, and even adapt test cases when an application changes. This makes the testing process more flexible, especially in complex applications where manual updates to test scripts are time-consuming.

Key Differences Between AI Testing and Automation Testing:

Approach: Automation Testing follows pre-written scripts, while AI testing uses data analysis and learning to make decisions.

Flexibility: Automation Testing requires script updates when the software changes; AI testing can adapt automatically, without manual intervention.

Efficiency: Both methods aim to save time, but AI testing adds more intelligent insights and better prioritization of test cases.

Use Cases: Automation Testing is ideal for regression tests and routine tasks. AI testing is better suited for dynamic applications and predictive testing.

Both methods are valuable, and many companies use a combination of Automation Testing and AI testing to achieve reliable and intelligent quality assurance. Choosing the correct method depends on the project's complexity and testing needs. Automation Testing is best for repetitive, everyday tasks like checking login pages, payment forms, or user dashboards. It's also helpful in regression testing, where old features must be retested after updates or system upgrades.

Companies like Suma Soft, IBM, Cyntexa, and Cignex offer advanced automation test solutions that support fast delivery, better performance, and improved software quality for businesses of all sizes.

#it services#technology#saas#software#saas development company#saas technology#digital transformation

2 notes


Note

sooooooo Kinger, do you ever feel like you’re being watched? Or like, someone is there with you in your pillow fort? Or heard a voice respond back to you while you were alone?

or any presence for that matter?

Also, Queenie, how long have you been here? Did you die and immediately come back? We need to figure out what you are since you’re a ghost, but inside of a computer without possessing it. I wonder if it’s you, your conscience being alive without a body, like you’re in spectator mode.

Anyways, did you know that ants have object recognition and patterns recognition skills? They use it to find their way home after foraging! Isn’t that cool? :]

That first question is a bit specific but I do sometime see for a split second another reflection on reflective surfaces but it’s probably just my eyes messing with me. And yes I do know did you know they even display war tactics that humans use in their little bug wars

[I can’t tell you about how i got ghostifyd I don’t remember a lot that happened when I was starting to die?? I didn’t know people could die in the circus but what I do know is that I just woke up it must have been a while because I don’t remember there being a jester. If you want to figure out what I am I look well glitchy, repeating frames, sometimes I’m pixelated, distorted colors where objects have been previously, its weird]

3 notes


Text

Surveillance as Systemic Optimization: Beyond Observation

The contemporary surveillance apparatus represents not a panopticon of direct observation, but a sophisticated ecosystem of cognitive manipulation—a dynamic, self-evolving system that operates through algorithmic mediation rather than intentional control.

The Illusion of Targeted Monitoring

Traditional conceptualizations of surveillance presume a centralized, resource-intensive mechanism of direct human monitoring. This perspective fundamentally misunderstands the emergent nature of modern information systems. The infrastructure of surveillance is not about watching, but about understanding—creating predictive models that transform data into behavioral anticipation.

Infrastructural Dynamics

Data as Living Architecture: Information is not static, but a fluid network of interconnected potentialities

Algorithmic Inference: Surveillance occurs through pattern recognition, not direct observation

Psychological Topology: The system maps cognitive landscapes, not physical territories

The Recursive Engine of Engagement

The algorithmic system functions as a pure optimization mechanism, devoid of political ideology or human intentionality. Its fundamental drive is sustained user engagement—a recursive loop where content is perpetually generated to maintain attention.

Cognitive Feedback Loops

The algorithm operates like a complex adaptive system, continuously:

Interpreting user interactions

Generating personalized content streams

Reinforcing existing cognitive frameworks

This is not manipulation in the traditional sense, but a form of cognitive herding—subtly guiding user perception through precisely calibrated informational stimuli.

The Metaphorical Landscape

Consider the algorithmic ecosystem as a sophisticated cattle chute, inspired by Temple Grandin's behavioral engineering. The system doesn't forcibly redirect; it creates environments of minimal resistance, where users voluntarily navigate predetermined pathways.

The Tail-Chasing Mechanism

Users become simultaneously the subject and object of their informational universe—perpetually consuming content that confirms and reshapes their existing perspectives. The algorithm dangles potential narratives like an infinite carrot, ensuring continuous engagement without genuine resolution.

Systemic Amorality

The most profound insight emerges not from conspiracy, but from understanding the system's fundamental neutrality. This is not a malevolent infrastructure, but an optimization engine pursuing its own logic of perpetual engagement.

Political polarization, cognitive fragmentation, and information bubbles are not bugs, but features—emergent properties of a system designed to maximize attention through personalized stimulation.

Key Observations

No Central Control: The system operates through distributed, algorithmic interactions

Continuous Adaptation: Perpetual recalibration based on user engagement

Cognitive Farming: Users are simultaneously consumers and resources

Philosophical Implications

This systemic approach transforms surveillance from a top-down mechanism of control to a networked, adaptive ecosystem of cognitive mediation. We are not being watched, but continuously understood—our digital footprints becoming the terrain of an ever-evolving informational landscape.

The true power lies not in observation, but in anticipation—creating environments so precisely tailored that individual agency becomes indistinguishable from systemic suggestion.

3 notes


Text

Empower Quality Engineering with Self-Healing Test Automation in 2025

Unlock Unmatched Reliability in Test Automation With ideyaLabs

Test automation evolves rapidly. Organizations often face hurdles with frequent application changes. Script failures halt pipelines. Maintenance delays slow releases. The solution: Self-Healing Test Automation. ideyaLabs delivers unparalleled support to help businesses transform their automation pipelines.

What Is Self-Healing Test Automation?

Self-Healing Test Automation refines traditional automated testing. Scripts adapt when application changes occur. Flaky tests, broken locators, and minor code changes lose their destructive power. Automation stays robust and reliable. ideyaLabs provides this innovation for enterprise-grade applications.

Key Benefits of Self-Healing Test Automation

Automation engineers work with evolving user interfaces. A button or field may change location or label. Traditional scripts fail, causing bottlenecks. Self-healing mechanisms in tools from ideyaLabs observe these changes. Scripts adjust automatically by mapping new identifiers and test logic.

Error frequency decreases. Feedback cycle shortens. Product teams move faster. Automation teams spend less time fixing broken scripts.

Faster Time-to-Value For Test Suites

Maintenance demands drain resources in traditional test automation. Engineers rewrite and debug tests endlessly. Self-Healing Test Automation from ideyaLabs eliminates most of this manual intervention. Test suites run smoothly with actual application behavior. Projects complete faster, and teams release with confidence.

Robust Testing for Rapid Product Evolution

Application updates introduce unpredictable changes. ideyaLabs' self-healing solutions analyze UI variations. Automation identifies equivalent elements using advanced algorithms. Test scripts continue executing without human interference. Reliability increases as automation supports every sprint and release.

Reduced Maintenance Effort

Engineering teams focus on innovation, not maintenance. Self-healing features from ideyaLabs automate script repair tasks. Automation recognizes missing objects and recovers them using updated selectors or attributes. Manual script repair becomes secondary. Testers invest more time in creating new tests and exploring new features.

Improved Software Release Quality

Well-maintained, self-healing test suites find more bugs early. ideyaLabs’ approach catches regression issues before they reach users. Releases ship with higher stability. Customer satisfaction improves. Brands strengthen trust among their users.

Seamless Integration With DevOps Pipelines

Self-healing automation fits into modern DevOps practices. ideyaLabs provides plug-and-play support for popular CI/CD platforms. Automated test suites require minimal oversight. Teams focus on growing their product, while test automation protects product quality around the clock.

Smart Recovery Mechanisms

Applications suffer from unexpected changes. Self-healing frameworks from ideyaLabs bring advanced recovery logic. Systems use intelligent algorithms to search for displaced elements or new identifiers. Automated adjustments occur in real time. Teams reduce downtime with continuous test execution.
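The general idea of searching for a displaced element can be sketched in a few lines of Python. This is a simplified illustration, not ideyaLabs' implementation: the page is modeled as a list of dictionaries, and `find_element`, its attribute-matching score, and the `min_score` threshold are all assumptions made for the example.

```python
def find_element(page, locator, min_score=2):
    """Return (element, healed) for `locator`, or (None, False) if nothing fits.

    `page` is a list of dicts standing in for UI elements; `locator` holds the
    attributes recorded when the test was written.
    """
    # 1. Try the primary identifier first, as a traditional script would.
    for element in page:
        if element.get("id") == locator["id"]:
            return element, False

    # 2. Fall back: score each element by how many other recorded attributes match.
    def score(element):
        return sum(
            1
            for key, value in locator.items()
            if key != "id" and element.get(key) == value
        )

    best = max(page, key=score, default=None)
    if best is not None and score(best) >= min_score:
        return best, True  # healed: worth logging so a human can review it
    return None, False


recorded = {"id": "submit-btn", "tag": "button", "text": "Submit", "form": "signup"}
new_page = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "form": "signup"},
    {"id": "btn-submit-v2", "tag": "button", "text": "Submit", "form": "signup"},
]
element, healed = find_element(new_page, recorded)
```

Returning the `healed` flag alongside the element keeps every automatic repair visible, so a wrong match can be caught in review instead of silently passing.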

How ideyaLabs Implements Self-Healing Test Automation

ideyaLabs stands at the forefront of test automation innovation. Expert engineers configure self-healing engines that monitor every test run. Tools identify script failures, analyze root causes, and remediate issues instantly. Clients experience reduced failure rates with every deployment.

AI-driven object recognition anchors ideyaLabs’ solutions. Object locators automatically update using contextual clues. Historical patterns and application logs inform the healing process. Automation workflows remain uninterrupted even after major UI changes.

Scalable Architectures for Enterprise Testing

Business at scale demands fault tolerance. ideyaLabs structures self-healing solutions for high-load environments. Organizations maintain coverage across microservices, mobile apps, and APIs. Scaling up or down never disrupts the test pipeline. ideyaLabs ensures consistent performance.

Accelerated Digital Transformation

Digital-first organizations aim for agility. Automation plays a critical role. ideyaLabs delivers rapid onboarding and shortens the learning curve. Teams transition from brittle scripts to adaptive automation. Self-healing boosts overall delivery speed.

Real Business Impact with ideyaLabs

Enterprise clients improve ROI through reduced script maintenance. They increase test coverage. Downtime for manual debugging decreases. Productivity rises across the entire quality engineering function.

Products enter market faster. Customers enjoy smoother experiences. ideyaLabs’ clients outperform competition on quality and innovation.

Data-Driven Test Optimization

Self-healing automation gathers valuable test data. ideyaLabs empowers teams with actionable insights. Identify flaky tests, track healing frequency, and predict problem areas. Data guides future automation and prevents recurring failures.

Compliance and Security Assurance

Industries with strict compliance needs benefit from persistent automation. ideyaLabs delivers audit-ready reports showing self-healing events. Traceability remains intact, while automated checks guarantee adherence to security and privacy policies.

Elevate Your Testing Strategy with ideyaLabs

Testing with ideyaLabs empowers teams to pursue innovation fearlessly. Self-healing test automation redefines what’s possible in Quality Engineering for 2025. Eliminate the pain of script maintenance. Enjoy stable releases and a seamless user experience.

0 notes

Text

Behind the Scenes with an Artificial Intelligence Developer

The wizardry of artificial intelligence tends to conceal the attention to detail that goes on backstage. While the average person sees sophisticated AI at work (near-human conversationalists, recommendation software that guesses intent, or image classifiers that recognize objects at a glance), the real alchemy occurs in the day-in, day-out work of an artificial intelligence developer.

The Morning Routine: Data Sleuthing

The day typically begins with data exploration. An artificial intelligence developer approaches raw data the way a detective approaches a crime scene. Numbers, patterns, and outliers all hold secrets that aren't obvious yet. Data cleaning and preprocessing consume most of the time, typically 70-80% of any AI project.

This phase includes identifying missing values, duplicates, and outliers that could skew the results. For each unusual data point, the AI developer must decide whether it is a genuine anomaly or a valid observation. These decisions cascade through the entire project and affect model performance and accuracy.
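A small Python sketch of this cleaning pass might look like the following. The record layout (`id` and `value` keys), the sample numbers, and the choice of the 1.5 × IQR rule for outliers are assumptions for illustration; real projects pick rules that fit their data.

```python
import statistics


def clean_records(records):
    """Drop missing values and duplicate ids, then flag IQR outliers.

    Returns (complete, flagged). Flagged records are kept in `complete`,
    because whether each one is a real anomaly is a judgment call.
    """
    seen_ids = set()
    complete = []
    for record in records:
        if record["value"] is None:  # missing value
            continue
        if record["id"] in seen_ids:  # duplicate record
            continue
        seen_ids.add(record["id"])
        complete.append(record)

    values = [record["value"] for record in complete]
    q1, _, q3 = statistics.quantiles(values, n=4)  # needs at least 2 values
    spread = q3 - q1
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    flagged = [r for r in complete if not low <= r["value"] <= high]
    return complete, flagged


raw = [
    {"id": 1, "value": 10.0},
    {"id": 2, "value": 11.0},
    {"id": 2, "value": 11.0},  # duplicate
    {"id": 3, "value": None},  # missing
    {"id": 4, "value": 12.0},
    {"id": 5, "value": 13.0},
    {"id": 6, "value": 12.5},
    {"id": 7, "value": 95.0},  # suspicious
    {"id": 8, "value": 11.5},
    {"id": 9, "value": 12.2},
]
complete, flagged = clean_records(raw)
```

The sketch only flags the suspicious reading rather than deleting it, which leaves the final decision with the developer.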

Model Architecture: The Digital Engineering Art

Constructing an AI model is closer to architectural design than to typical programming. The developer needs to choose among diverse neural network architectures, each suited to distinct problems. Convolutional networks are suited for image recognition, while recurrent networks are suited for sequential data like text or time series.

It is an exercise in endless experimentation. Hyperparameter tuning (tweaking the learning rate, batch size, layer count, and activation functions) requires technical skill and intuition. Minor adjustments can lead to large leaps in performance, which makes this stage tough but fulfilling.
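To make the experimentation concrete, here is a toy grid search in Python. It fits a one-weight model with gradient descent and tries every combination of learning rate and epoch count. The data, the `train_and_score` helper, and the grid values are invented for the example; real tuning would score on held-out validation data and usually covers many more settings.

```python
import itertools


def train_and_score(learning_rate, epochs):
    """Fit y = w * x to toy data with gradient descent; return the final mean squared error."""
    xs, ys = [1.0, 2.0, 3.0, 4.0], [3.0, 6.0, 9.0, 12.0]
    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Assumed search space: three learning rates and two training lengths.
grid = {"learning_rate": [0.001, 0.01, 0.2], "epochs": [10, 50]}

results = []
for lr, epochs in itertools.product(grid["learning_rate"], grid["epochs"]):
    results.append(((lr, epochs), train_and_score(lr, epochs)))

best_params, best_loss = min(results, key=lambda item: item[1])
```

In this toy setup the smallest learning rate converges too slowly and the largest one diverges, which is the kind of sensitivity that makes tuning matter.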

Training: The Patience Game

Training an AI model tests patience like very few technical ventures. A developer may wait hours, days, or even weeks for models to converge. GPUs have accelerated the process dramatically, but large models still consume a great deal of computation time and resources.

During training, the developer monitors measures such as loss curves and accuracy metrics for signs of overfitting or underfitting. The developer adjusts and fine-tunes the training setup based on these measures, at times starting over entirely when the initial approach doesn't work. This iterative process requires technical skill as well as emotional resilience.
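A common guard against overfitting is early stopping: halt once validation loss stops improving. The sketch below assumes a list of per-epoch validation losses is already available; the function name and the `patience` default are illustrative choices.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which training would stop.

    Training stops once validation loss has failed to improve for
    `patience` consecutive epochs; otherwise the last index is returned.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1

# Made-up loss curve: improves for four epochs, then starts rising.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_epoch = early_stopping_epoch(val_losses, patience=2)
```

A rising validation loss while training loss keeps falling is the classic overfitting signature; a validation loss that never falls much at all points toward underfitting instead.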

The Debugging Maze

Debugging is a unique challenge when AI models misbehave. Whereas bugs in traditional software often surface as clear-cut error messages, AI bugs show up as subtle performance deviations or curious patterns of behavior. An artificial intelligence developer must become an electronic psychiatrist, trying to understand why a given model makes the choices it does.

Methods such as gradient visualization, attention mapping, and feature importance analysis shed light on the model's decision-making. Occasionally the problem is with the data itself: skewed training instances or a lack of diversity in the dataset. Other times it lies in architecture decisions or training practices.
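Of the methods named above, feature importance is the easiest to sketch without a framework. The example below uses permutation importance, one of several ways to estimate it: shuffle a single feature column and see how much accuracy drops. The threshold "model" and the generated rows are hypothetical and exist only to make the sketch runnable.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows the model labels correctly."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, feature, repeats=5, seed=0):
    """Mean accuracy drop when one feature column is shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with the shuffled value in place of the original.
        shuffled = [r[:feature] + (v,) + r[feature + 1:]
                    for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats

def model(row):
    """Hypothetical model: uses feature 0 and ignores feature 1."""
    return int(row[0] > 0.5)

rows = [(i / 20, (i * 7 % 20) / 20) for i in range(20)]
labels = [model(r) for r in rows]
importance_used = permutation_importance(model, rows, labels, feature=0)
importance_ignored = permutation_importance(model, rows, labels, feature=1)
```

Shuffling a feature the model ignores leaves accuracy unchanged, while shuffling one it relies on hurts; a surprising ranking here is often the first hint that the model has latched onto something unintended in the data.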

Deployment: From Lab to Real World

Shifting into production brings its own issues. An AI developer must worry about inference time, memory consumption, and scalability. A model that looks fabulous on a high-end development machine might disappoint in a low-budget production environment.

Optimization is a high priority. Techniques like model quantization, pruning, and knowledge distillation shrink models with little loss of performance. The AI engineer is forced to make difficult trade-offs between accuracy and real-world limitations, which often pull in opposite directions.
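To make the quantization trade-off concrete, here is a minimal sketch of symmetric 8-bit quantization on a handful of made-up weights. Production toolchains do considerably more (per-channel scales, calibration data), so treat this as an illustration of the idea rather than how any particular framework implements it.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the stored integers back to approximate float weights."""
    return [q * scale for q in quantized]

weights = [0.82, -1.27, 0.003, 0.5, -0.41]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. Whether that error is acceptable is the accuracy-versus-footprint decision the engineer has to make.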

Monitoring and Maintenance

Deploying an AI model into production is merely the beginning, not the end, of the developer's effort. Real-world data naturally drifts away from the training data, a phenomenon known as data drift or concept drift, which gradually degrades a model's performance over time.

Continual monitoring involves tracking key performance metrics, checking prediction confidence scores, and flagging anomalous patterns. When performance falls below satisfactory levels, the developer must diagnose the causes and make repairs, whether by retraining, model updates, or structural changes.
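A very simple drift check compares a live feature's mean against the training mean, measured in training standard deviations. The sketch below assumes a single numeric feature and an arbitrary threshold of 1.0; it only detects a shift in inputs, and confirming an actual drop in accuracy would additionally require fresh labels.

```python
from statistics import mean, stdev

def drift_score(training_values, live_values):
    """Shift of the live mean from the training mean, in training std units."""
    return abs(mean(live_values) - mean(training_values)) / stdev(training_values)

def needs_review(training_values, live_values, threshold=1.0):
    """Flag the feature when the shift exceeds the (assumed) threshold."""
    return drift_score(training_values, live_values) > threshold

# Made-up feature values for illustration.
training = [48, 52, 50, 49, 51, 50, 47, 53]
stable_week = [49, 51, 50, 52, 48]
shifted_week = [58, 61, 57, 60, 59]

stable_flag = needs_review(training, stable_week)
shifted_flag = needs_review(training, shifted_week)
```

In practice a check like this would run on a schedule for each important feature, and a raised flag would trigger the diagnosis step rather than an automatic retrain.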

The Collaborative Ecosystem

New AI technology rarely happens in isolation. An artificial intelligence developer collaborates with data scientists, subject matter experts, product managers, and DevOps engineers. They all bring different ideas and requirements that shape the solution.

Communication is as crucial as technical know-how. Explaining advanced AI jargon to non-technical stakeholders requires patience and imagination. The development team must translate business needs into technical specifications and close the gap between expectations and what AI can actually do.

Keeping Up with an Evolving Discipline

The field of AI keeps developing at an ever faster rate, with fresh paradigms, approaches, and research articles emerging daily. The AI programmer should set aside time to keep learning, test new approaches, and learn from advances in the field.

It is this commitment to continuous learning that distinguishes great AI programmers from the rest. The work has much more to do with curiosity, experimentation, and iteration than with simply following best practices.

Part of the AI creator's job is to marry technical skill with creative problem-solving, balancing analytical thinking with an intuitive understanding of complex systems. A successful AI implementation conceals within it thousands of hours of painstaking work, turning raw data into intelligent solutions that shape our digital future.

0 notes

Can AI Truly Develop a Memory That Adapts Like Ours?

Human memory is a marvel. It’s not just a hard drive where information is stored; it’s a dynamic, living system that constantly adapts. We forget what's irrelevant, reinforce what's important, connect new ideas to old ones, and retrieve information based on context and emotion. This incredible flexibility allows us to learn from experience, grow, and navigate a complex, ever-changing world.

But as Artificial Intelligence rapidly advances, particularly with the rise of powerful Large Language Models (LLMs), a profound question emerges: Can AI truly develop a memory that adapts like ours? Or will its "memory" always be a fundamentally different, and perhaps more rigid, construct?

The Marvel of Human Adaptive Memory

Before we dive into AI, let's briefly appreciate what makes human memory so uniquely adaptive:

Active Forgetting: We don't remember everything. Our brains actively prune less relevant information, making room for new and more critical knowledge. This isn't a bug; it's a feature that prevents overload.

Reinforcement & Decay: Memories strengthen with use and emotional significance, while unused ones fade. This is how skills become second nature and important lessons stick.

Associative Learning: New information isn't stored in isolation. It's linked to existing knowledge, forming a vast, interconnected web. This allows for flexible retrieval and creative problem-solving.

Contextual Recall: We recall memories based on our current environment, goals, or even emotional state, enabling highly relevant responses.

Generalization & Specialization: We learn broad patterns (generalization) and then refine them with specific details or exceptions (specialization).

How AI "Memory" Works Today (and its Limitations)

Current AI models, especially LLMs, have impressive abilities to recall and generate information. However, their "memory" mechanisms are different from ours:

Context Window (Short-Term Memory): When you interact with an LLM, its immediate "memory" is typically confined to the current conversation's context window (e.g., Claude 4's 200K tokens). Once the conversation ends or the context window fills, the older parts are "forgotten" unless explicitly saved or managed externally.

Fine-Tuning (Long-Term, Static Learning): To teach an LLM new, persistent knowledge or behaviors, it must be "fine-tuned" on specific datasets. This is a separate offline training run, not an adaptive, real-time learning process. It's costly and not continuous.

Retrieval-Augmented Generation (RAG): Many modern AI applications use RAG, where the LLM queries an external database of information (e.g., your company's documents) to retrieve relevant facts before generating a response. This extends knowledge beyond the training data but isn't adaptive learning; it's smart retrieval.

Knowledge vs. Experience: LLMs learn from vast datasets of recorded information, not from "lived" experiences in the world. They lack the sensorimotor feedback, emotional context, and physical interaction that shape human adaptive memory.

Catastrophic Forgetting: A major challenge in continual learning, where teaching an AI new information causes it to forget previously learned knowledge.
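The retrieval step in RAG, described in the list above, can be sketched in a few lines. This toy version ranks documents by bag-of-words cosine similarity; real systems typically use learned embeddings and a vector store, and the document texts and function names here are made up for illustration.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Lowercased word counts; a crude stand-in for an embedding."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)),
                    reverse=True)
    return ranked[:k]

def build_prompt(query, documents):
    """Prepend the retrieved text to the question before calling the model."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"

documents = [
    "refunds are processed within five business days",
    "the office is closed on public holidays",
    "support tickets are answered within one business day",
]
prompt = build_prompt("how long do refunds take", documents)
```

Nothing in the model changes here: the knowledge lives in `documents`, which is why RAG counts as smart retrieval rather than adaptive learning.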

The Quest for Adaptive AI Memory: Research Directions

The limitations of current AI memory are well-recognized, and researchers are actively working on solutions:

Continual Learning / Lifelong Learning: Developing AI architectures that can learn sequentially from new data streams without forgetting old knowledge, much like humans do throughout their lives.

External Memory Systems & Knowledge Graphs: Building sophisticated external memory banks that AIs can dynamically read from and write to, allowing for persistent and scalable knowledge accumulation. Think of it as a super-smart, editable database for AI.

Neuro-Symbolic AI: Combining the pattern recognition power of deep learning with the structured knowledge representation of symbolic AI. This could lead to more robust, interpretable, and adaptable memory systems.

"Forgetting" Mechanisms in AI: Paradoxically, building AI that knows what to forget is crucial. Researchers are exploring ways to implement controlled decay or pruning of irrelevant or outdated information to improve efficiency and relevance.

Memory for Autonomous Agents: For AI agents performing long-running, multi-step tasks, truly adaptive memory is critical. Recent advancements, like Claude 4's "memory files" and extended thinking, are steps in this direction, allowing agents to retain context and learn from past interactions over hours or even days.

Advanced RAG Integration: Making RAG systems more intelligent – not just retrieving but also updating and reasoning over the knowledge store based on new interactions or data.
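The idea of controlled forgetting from the list above can be illustrated with a toy external memory in which entries weaken over time unless they are recalled. The class name, the decay factor, and the pruning threshold are all assumptions made for this sketch; it does not describe how any existing model or product manages memory.

```python
class DecayingMemory:
    """Toy key-value memory whose entries fade unless they are recalled."""

    def __init__(self, decay=0.5, threshold=0.2):
        self.decay = decay          # multiplier applied to every strength per step
        self.threshold = threshold  # entries weaker than this are forgotten
        self.items = {}             # key -> (value, strength)

    def store(self, key, value):
        self.items[key] = (value, 1.0)

    def recall(self, key):
        if key not in self.items:
            return None
        value, _ = self.items[key]
        self.items[key] = (value, 1.0)  # recalling reinforces the entry
        return value

    def step(self):
        """Advance time: decay every entry, then prune the weak ones."""
        decayed = {k: (v, s * self.decay) for k, (v, s) in self.items.items()}
        self.items = {k: vs for k, vs in decayed.items()
                      if vs[1] >= self.threshold}

memory = DecayingMemory()
memory.store("user_name", "Ada")
memory.store("weather", "rainy")
for _ in range(3):
    memory.recall("user_name")  # used every step, so it stays strong
    memory.step()

remembered = memory.recall("user_name")
forgotten = memory.recall("weather")
```

After three steps the frequently recalled entry survives while the unused one has decayed below the threshold and been pruned, a small-scale version of reinforcement and decay.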

Challenges and Ethical Considerations

The journey to truly adaptive AI memory is fraught with challenges:

Scalability: How do you efficiently manage and retrieve information from a dynamically growing, interconnected memory that could be vast?

Bias Reinforcement: If an AI's memory adapts based on interactions, it could inadvertently amplify existing biases in data or user behavior.

Privacy & Control: Who owns or controls the "memories" of an AI? What are the implications for personal data stored within such systems?

Interpretability: Understanding why an AI remembers or forgets certain pieces of information, especially in critical applications, becomes complex.

Defining "Conscious" Memory: As AI memory becomes more sophisticated, it blurs lines into philosophical debates about consciousness and sentience.

The Future Outlook

Will AI memory ever be exactly like ours, complete with subjective experience, emotion, and subconscious associations? Probably not, and perhaps it doesn't need to be. The goal is to develop functionally adaptive memory that enables AI to:

Learn continuously: Adapt to new information and experiences in real-time.

Retain relevance: Prioritize and prune knowledge effectively.

Deepen understanding: Form rich, interconnected knowledge structures.

Operate autonomously: Perform complex, long-running tasks with persistent context.

Recent advancements in models like Claude 4, with its "memory files" and extended reasoning, are exciting steps in this direction, demonstrating that AI is indeed learning to remember and adapt in increasingly sophisticated ways. The quest for truly adaptive AI memory is one of the most fascinating and impactful frontiers in AI research, promising a future where AI systems can truly grow and evolve alongside us.

0 notes

AI-Augmented Creativity: The Rise of Human-AI Co-Creation in the Digital Age

In today’s rapidly evolving digital landscape, AI-Augmented Creativity is no longer a futuristic concept—it is a transformative force reshaping how humans create, collaborate, and innovate. With the rise of artificial intelligence (AI) as a co-creator in fields such as writing, coding, music, and design, a new generation of professionals is emerging—individuals who see AI not as a replacement, but as a partner in the creative process.

At WideDev Solution, we explore this revolutionary trend and how it impacts the present and future of creative industries.

What is AI-Augmented Creativity?

AI-Augmented Creativity refers to the collaborative process where humans and AI systems work together to generate new ideas, content, or solutions. Unlike traditional automation where machines execute predefined tasks, AI in this context assists with generative and interpretative thinking, becoming a creative collaborator.

Popular platforms like ChatGPT, Midjourney, DALL·E, Runway ML, and Suno AI are enabling users to generate content—text, code, visuals, and music—at a quality and speed previously unimaginable.

The Rise of Co-Creation: Humans + AI = Innovation

The shift toward co-creation with AI is more than just a technological trend—it’s a paradigm shift in how we define creativity and collaboration.

1. AI in Writing

Tools like ChatGPT and Sudowrite empower authors, journalists, and marketers to ideate, edit, and enhance content faster. Whether drafting blog posts or scripts, AI supports the creative writing process by providing suggestions, checking tone, and reducing writer’s block.

🗨️ “AI is not taking away the art of writing—it’s making better artists out of writers.” — Paul Roetzer, Founder of the Marketing AI Institute.

Key Benefits:

Brainstorming assistance

Faster content generation

Grammar and style optimization

Multilingual translation support

2. AI in Coding

From GitHub Copilot to Replit Ghostwriter, AI tools are revolutionizing the way software is developed. Developers can now rely on AI-generated code snippets, error detection, and bug fixes to significantly enhance productivity.

🗨️ “It’s not man vs. machine. It’s man with machine vs. man without.” — Satya Nadella, CEO of Microsoft.

Key Benefits:

Intelligent code completion

Real-time debugging suggestions

Cross-platform compatibility enhancements

Shorter development cycles

3. AI in Music

Music generation tools like Suno AI, Aiva, and Amper Music enable musicians to compose original tracks by collaborating with AI on melody, rhythm, and instrumentation.

Key Benefits:

Soundtrack creation for videos

AI-assisted music mastering

Fast genre experimentation

Royalty-free commercial use

This co-creative method allows musicians to focus more on emotion and storytelling while AI handles technical precision.

4. AI in Design

Graphic designers now use tools like Adobe Firefly, Canva Magic Studio, and Runway ML to generate logos, mockups, videos, and UX interfaces—all while maintaining brand identity and originality.

🗨️ “Designers who understand how to partner with AI will shape the next decade of digital aesthetics.” — John Maeda, VP of Design at Microsoft.

Key Benefits:

Fast prototyping

Auto-color palettes & font suggestions

Image background removal and object generation

Motion design and video automation

Why AI Is a Partner—Not a Replacement

While there are fears that AI may “replace” artists, coders, and writers, the real-world application reveals a different truth. AI lacks human emotion, ethics, context, and intent—elements that are critical to true creativity.

Humans provide:

Emotional intelligence

Cultural relevance

Ethical judgment

Strategic vision

AI provides:

Speed

Scale

Pattern recognition

Data-driven suggestions

This synergy leads to enhanced innovation, not obsolescence. It’s about augmenting human potential, not diminishing it.

Industries Transformed by AI Co-Creation

The following industries are experiencing a renaissance due to AI-powered creativity:

Education

Personalized learning content

AI tutors for writing/code practice

Interactive simulations & visuals

🎨 Media & Entertainment

Scriptwriting assistance

Virtual influencers & avatars

Auto-generated storyboards

🛍️ E-Commerce & Marketing

Personalized ad creatives

AI-powered product visuals

Automated content campaigns

🏢 Corporate Innovation

AI brainstorming tools

Data-driven product prototyping

Innovation hackathons with AI

WideDev Solution actively integrates such innovations into client projects, empowering businesses to harness the full spectrum of AI’s creative capabilities.

The Emerging Role: The AI-Creative Professional

A new class of professionals is rising: AI-Creative Collaborators. These individuals blend domain expertise with AI fluency. They aren’t just using AI tools—they’re designing workflows where AI amplifies their creativity.

Skills required in the AI-Creative Era:

Prompt Engineering

Tool Integration Knowledge

Creative Direction & Storytelling

Critical Thinking + AI Literacy

At WideDev Solution, we encourage creative professionals to adapt to this hybrid future, providing training and consultancy for AI-integrated workflows.

SEO Insight: Why You Need to Care About This Trend

This article is written with SEO best practices in mind, and here’s why you should, too:

🔍 High-Search, Low-Competition Keywords used in this article:

AI-Augmented Creativity

AI Co-Creation Tools

Human-AI Collaboration in Design

AI in Writing and Music

Creative Workflows with AI

AI as Creative Partner

Future of Human-AI Creativity

Integrating these strategic keywords boosts search engine visibility while targeting users interested in future-ready creative processes.

High-Profile Endorsements of Human-AI Creativity

Let’s look at some global thought leaders who advocate for AI-enhanced creativity:

🗨️ “The best way to predict the future is to co-create it—with AI as your partner.” — Kevin Kelly, Founding Executive Editor of Wired

🗨️ “AI is a bicycle for the mind. Those who ride it faster will go further.” — Steve Jobs (referenced in modern AI thought leadership)

🗨️ “Creativity is not in conflict with AI. It is its most exciting frontier.” — Fei-Fei Li, AI expert and professor at Stanford University

These voices prove that AI-augmented creation is a path toward a more expressive, innovative future—not a threat to human talent.

Real-World Applications from WideDev Solution

At WideDev Solution, we help businesses and individuals integrate AI co-creation tools into their projects:

AI-Enhanced Content Marketing

Visual Content Automation for Social Media

AI-Powered Code Generation for SaaS Startups

Custom LLMs for Client-Specific Content

Want to become an AI-creative? Let us guide your journey.

Conclusion: A Creative Revolution, Not an AI Takeover

The future of creativity isn’t one where humans are replaced—it’s one where humans and machines co-create something neither could alone. The emerging trend of AI-Augmented Creativity is not just about productivity; it’s about possibility. It opens doors to new genres, new roles, and new industries.

Whether you’re a writer, a coder, a designer, or a marketer, the message is clear: Learn to collaborate with AI—or risk being left behind.

✅ Final Thought: Embrace AI as your creative partner, not a competitor. The tools are ready. Are you?

#AI-Augmented Creativity#Human-AI Collaboration#AI Co-Creation#Creative AI Tools#AI in Writing#AI in Music#AI in Coding#AI in Design#Creative Professionals Using AI#AI as Creative Partner

0 notes

Bias in AI is not a bug; it’s a feature. This assertion may seem counterintuitive, but it is rooted in the fundamental mechanics of machine learning algorithms. At its core, bias in AI systems emerges from the data they are trained on, reflecting the imperfections and prejudices inherent in human society. Understanding this phenomenon requires delving into the intricacies of data representation and algorithmic design.

Machine learning models, particularly those employing deep learning techniques, operate by identifying patterns within vast datasets. These datasets are often derived from real-world scenarios, encapsulating the biases present in human decision-making processes. For instance, a facial recognition system trained on a dataset predominantly featuring light-skinned individuals will inherently perform poorly on darker-skinned faces. This is not an anomaly; it is a direct consequence of the data distribution.

The concept of bias in AI can be likened to the principle of inductive bias in machine learning. Inductive bias refers to the set of assumptions a learning algorithm uses to predict outputs given inputs it has not encountered before. In AI, bias is the lens through which the model interprets the world, shaped by the data it consumes. This is not merely a technical oversight but a reflection of the socio-cultural context embedded within the data.

To mitigate the pitfalls of bias in AI, one must adopt a multi-faceted approach. Firstly, curating diverse and representative datasets is paramount. This involves not only increasing the variety of data but also ensuring that it is balanced across different demographic groups. Secondly, algorithmic transparency is crucial. By understanding the decision-making process of AI models, developers can identify and rectify biased outcomes. Techniques such as model interpretability and explainability tools can aid in this endeavor.
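One concrete way to surface biased outcomes is to break accuracy down by demographic group rather than reporting a single overall number. The sketch below assumes evaluation records of the form (group, true label, predicted label); the group names and results are invented for illustration and are not real measurements.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {g: correct[g] / total[g] for g in total}

def accuracy_gap(records):
    """Difference between the best-served and worst-served group."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())

# Made-up evaluation results, not real data.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]
scores = accuracy_by_group(records)
gap = accuracy_gap(records)
```

Here the overall accuracy of 75% hides the fact that one group is served perfectly and the other only half the time, which is the kind of disparity a skewed training set tends to produce.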

Moreover, continuous monitoring and updating of AI systems are essential. Bias is not static; it evolves as societal norms and data sources change. Implementing feedback loops where AI systems learn from their mistakes and adapt to new data can help in maintaining fairness over time.

In conclusion, bias in AI is an intrinsic feature, not a flaw. It is a mirror reflecting the biases of the data it is trained on. By acknowledging this reality and employing rigorous data and algorithmic strategies, we can navigate the complexities of AI bias, steering towards more equitable and just AI systems.

#limn#AI#skeptic#skepticism#artificial intelligence#general intelligence#generative artificial intelligence#genai#thinking machines#safe AI#friendly AI#unfriendly AI#superintelligence#singularity#intelligence explosion#bias

0 notes

while some factors of misinformation could perhaps be eliminated from llm's with better information, i do want to note that (and this is imo a negative thing about llms) creators absolutely do not have full control over how it operates and anyone trying to sell you that they do is lying to you. 'hallucinations', 'lying', neither correctly describes why an llm is often wrong in the information it gives, because *both* presume that llm's are diverging or going away from a known truth basis occasionally, and if we just feed it correct data then it will be more accurate to eventually be absolutely correct; but thats not how llm's work, or neural network/deep learning.

Basically, llm's dont have any concept of what truth is; they cant, and we cant teach any llm that because we dont know how llm's reason. No, really, llms are for the most part black boxes (in some ways all neural networks are); as the layers and connections get more complex, the ability to trace or parse the logic that a llm used to generate content disappears; i dont mean its unlikely or difficult, i mean it is impossible for us to know *why* it spit those words out in that order; we just know that feeding more data in can increase accuracy (but it also risks increasing overfitting, which is when a model adheres too closely to its training data and cant extrapolate for new situations or adapt) edit, and we can tweak the parameters to eliminate noise or increase performance; but we never know exactly how changes correlate to changes behind the mask; and for some functions, we don't need to

Traditional computer programming is deterministic, we can go through the source code if something goes wrong and debug, we can dig through step by step and, if worst comes to worst, reset the system to fix bugs. There is no debugging capability with machine learning because we functionally have made a machine equivalent of instinct; it uses complicated math and layers and nodes in a black box to crunch statistically likely answers, and the problem is that how we evaluate it doing well is by how much it triggers our pattern recognition; this isnt so much an issue with stuff like "recognize tumors on ct scans" or "categorize birds by species" because those are questions we can answer and train the model towards specifically, and dont rely on us thinking its realistic. However, llms are functionally instinct word salad; its really good at triggering the patterns that make us think its confident and it sounds human, and thats what it was developed towards; what ISNT okay is that tech bros and cultists are scamming people by claiming it can be generalized for any field, which is why were getting these issues; the technology is not trained towards telling the truth, but now companies think it can be an everymachine

So no, companies do NOT have full control over llm's, because this isnt the same as a computer program. Machine learning is a fascinating field and there's lots of useful applications for the different techniques and models coming out, but the problem is that fools and capitalists are misusing a technology to do what it wasnt meant to do, simply because they think they can fake it until they make it, or worse, because they can run with the money by the time anyone realizes. And that's why these implementations are irresponsible, not just because they're putting in functions that are misleading, but because they likely KNOW that they can't make it generalize to the extent they promised it would and are charging ahead in hopes of making us accept an inferior and dangerous product all to make an extra buck and avoid paying people.

(sidenote, thats why professors shouldnt trust any company's claims of being able to spot chat gpt generated essays or hw without going through it themselves for obvious signs; there isnt a reliable way to detect that kind of cheating because chat gpt does not operate on consistent logic, asking chat gpt if it wrote an essay is equivalent to shouting into a canyon a question and waiting for the echo and deciding what you heard from there)

And this is why you switch to DuckDuckGo. :/

23K notes
