#generative artificial intelligence


Text

Why I don’t like AI art

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in CHICAGO with PETER SAGAL on Apr 2, and in BLOOMINGTON at MORGENSTERN BOOKS on Apr 4. More tour dates here.

A law professor friend tells me that LLMs have completely transformed the way she relates to grad students and post-docs – for the worse. And no, it's not that they're cheating on their homework or using LLMs to write briefs full of hallucinated cases.

The thing that LLMs have changed in my friend's law school is letters of reference. Historically, students would only ask a prof for a letter of reference if they knew the prof really rated them. Writing a good reference is a ton of work, and that's rather the point: the mere fact that a law prof was willing to write one for you represents a signal about how highly they value you. It's a form of proof of work.

But then came the chatbots and with them, the knowledge that a reference letter could be generated by feeding three bullet points to a chatbot and having it generate five paragraphs of florid nonsense based on those three short sentences. Suddenly, profs were expected to write letters for many, many students – not just the top performers.

Of course, this was also happening at other universities, meaning that when my friend's school opened up for postdocs, they were inundated with letters of reference from profs elsewhere. Naturally, they handled this flood by feeding each letter back into an LLM and asking it to boil it down to three bullet points. No one thinks that these are identical to the three bullet points that were used to generate the letters, but it's close enough, right?

Obviously, this is terrible. At this point, letters of reference might as well consist solely of three bullet-points on letterhead. After all, the entire communicative intent in a chatbot-generated letter is just those three bullets. Everything else is padding, and all it does is dilute the communicative intent of the work. No matter how grammatically correct or even stylistically interesting the AI-generated sentences are, they have less communicative freight than the three original bullet points. After all, the AI doesn't know anything about the grad student, so anything it adds to those three bullet points is, by definition, irrelevant to the question of whether they're well suited for a postdoc.

Which brings me to art. As a working artist in his third decade of professional life, I've concluded that the point of art is to take a big, numinous, irreducible feeling that fills the artist's mind, and attempt to infuse that feeling into some artistic vessel – a book, a painting, a song, a dance, a sculpture, etc – in the hopes that this work will cause a loose facsimile of that numinous, irreducible feeling to manifest in someone else's mind.

Art, in other words, is an act of communication – and there you have the problem with AI art. As a writer, when I write a novel, I make tens – if not hundreds – of thousands of tiny decisions that are in service to this business of causing my big, irreducible, numinous feeling to materialize in your mind. Most of those decisions aren't even conscious, but they are definitely decisions, and I don't make them solely on the basis of probabilistic autocomplete. One of my novels may be good and it may be bad, but one thing it definitely is is rich in communicative intent. Every one of those microdecisions is an expression of artistic intent.

Now, I'm not much of a visual artist. I can't draw, though I really enjoy creating collages, which you can see here:

https://www.flickr.com/photos/doctorow/albums/72177720316719208

I can tell you that every time I move a layer, change the color balance, or use the lasso tool to nip a few pixels out of a 19th century editorial cartoon that I'm matting into a modern backdrop, I'm making a communicative decision. The goal isn't "perfection" or "photorealism." I'm not trying to spin around really quick in order to get a look at the stuff behind me in Plato's cave. I am making communicative choices.

What's more: working with that lasso tool on a 10,000 pixel-wide Library of Congress scan of a painting from the cover of Puck magazine or a 15,000 pixel wide scan of Hieronymus Bosch's Garden of Earthly Delights means that I'm touching the smallest individual contours of each brushstroke. This is quite a meditative experience – but it's also quite a communicative one. Tracing the smallest irregularities in a brushstroke definitely materializes a theory of mind for me, in which I can feel the artist reaching out across time to convey something to me via the tiny microdecisions I'm going over with my cursor.

Herein lies the problem with AI art. Just like with a law school letter of reference generated from three bullet points, the prompt given to an AI to produce creative writing or an image is the sum total of the communicative intent infused into the work. The prompter has a big, numinous, irreducible feeling and they want to infuse it into a work in order to materialize versions of that feeling in your mind and mine. When they deliver a single line's worth of description into the prompt box, then – by definition – that's the only part that carries any communicative freight. The AI has taken one sentence's worth of actual communication intended to convey the big, numinous, irreducible feeling and diluted it amongst a thousand brushstrokes or 10,000 words. I think this is what we mean when we say AI art is soul-less and sterile. Like the five paragraphs of nonsense generated from three bullet points from a law prof, the AI is padding out the part that makes this art – the microdecisions intended to convey the big, numinous, irreducible feeling – with a bunch of stuff that has no communicative intent and therefore can't be art.

If my thesis is right, then the more you work with the AI, the more art-like its output becomes. If the AI generates 50 variations from your prompt and you choose one, that's one more microdecision infused into the work. If you re-prompt and re-re-prompt the AI to generate refinements, then each of those prompts is a new payload of microdecisions that the AI can spread out across all the words or pixels, increasing the amount of communicative intent in each one.

Finally: not all art is verbose. Marcel Duchamp's "Fountain" – a urinal signed "R. Mutt" – has very few communicative choices. Duchamp chose the urinal, chose the paint, painted the signature, came up with a title (probably some other choices went into it, too). It's a significant work of art. I know because when I look at it I feel a big, numinous irreducible feeling that Duchamp infused in the work so that I could experience a facsimile of Duchamp's artistic impulse.

There are individual sentences, brushstrokes, single dance-steps that initiate the upload of the creator's numinous, irreducible feeling directly into my brain. It's possible that a single very good prompt could produce text or an image that had artistic meaning. But it's not likely, in just the same way that scribbling three words on a sheet of paper or painting a single brushstroke is unlikely to produce a meaningful work of art. Most art is somewhat verbose (but not all of it).

So there you have it: the reason I don't like AI art. It's not that AI artists lack for the big, numinous, irreducible feelings. I firmly believe we all have those. The problem is that an AI prompt has very little communicative intent and nearly every good piece of art (but not all of them) has more communicative intent than fits into an AI prompt.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/25/communicative-intent/#diluted

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#art#uncanniness#eerieness#communicative intent#gen ai#generative ai#image generators#artificial intelligence#generative artificial intelligence#gen artificial intelligence#l

523 notes


Text

The allure of speed in technology development is a siren’s call that has led many innovators astray. “Move fast and break things” is a mantra that has driven the tech industry for years, but when applied to artificial intelligence, it becomes a perilous gamble. The rapid iteration and deployment of AI systems without thorough vetting can lead to catastrophic consequences, akin to releasing a flawed algorithm into the wild without a safety net.

AI systems, by their very nature, are complex and opaque. They operate on layers of neural networks that mimic the human brain’s synaptic connections, yet they lack the innate understanding and ethical reasoning that guide human decision-making. The haste to deploy AI without comprehensive testing is akin to launching a spacecraft without ensuring the integrity of its navigation systems. The potential for error is not just probable; it is inevitable.

The pitfalls of AI are numerous and multifaceted. Bias in training data can lead to discriminatory outcomes, while lack of transparency in decision-making processes can result in unaccountable systems. These issues are compounded by the “black box” nature of many AI models, where even the developers cannot fully explain how inputs are transformed into outputs. This opacity is not merely a technical challenge but an ethical one, as it obscures accountability and undermines trust.

To avoid these pitfalls, a paradigm shift is necessary. The development of AI must prioritize robustness over speed, with a focus on rigorous testing and validation. This involves not only technical assessments but also ethical evaluations, ensuring that AI systems align with societal values and norms. Techniques such as adversarial testing, where AI models are subjected to challenging scenarios to identify weaknesses, are crucial. Additionally, the implementation of explainable AI (XAI) can demystify the decision-making processes, providing clarity and accountability.

Moreover, interdisciplinary collaboration is essential. AI development should not be confined to the realm of computer scientists and engineers. Ethicists, sociologists, and legal experts must be integral to the process, providing diverse perspectives that can foresee and mitigate potential harms. This collaborative approach ensures that AI systems are not only technically sound but also socially responsible.

In conclusion, the reckless pursuit of speed in AI development is a dangerous path that risks unleashing untested and potentially harmful technologies. By prioritizing thorough testing, ethical considerations, and interdisciplinary collaboration, we can harness the power of AI responsibly. The future of AI should not be about moving fast and breaking things, but about moving thoughtfully and building trust.

#furtive#AI#skeptic#skepticism#artificial intelligence#general intelligence#generative artificial intelligence#genai#thinking machines#safe AI#friendly AI#unfriendly AI#superintelligence#singularity#intelligence explosion#bias

3 notes


Text

By: Zack K. De Piero

Published: Mar 12, 2024

At best, AI obscures foundational skills of reading, writing, and thinking. At worst, students develop a crippling dependency on technology.

Educators are grappling with how to approach ever-evolving generative artificial intelligence — the kind that can create language, images, and audio. Programs like ChatGPT, Gemini, and Copilot pose far different challenges from the AI of yesteryear that corrected spelling or grammar. Generative AI generates whatever content it’s asked to produce, whether it’s a lab report for a biology course, a cover letter for a particular job, or an op-ed for a newspaper.

This groundbreaking development leaves educators and parents asking: Should teachers teach with or against generative AI, and why?

Technophiles may portray skeptics as Luddites — folks of the same ilk that resisted the emergence of the pen, the calculator, or the word processor — but this technology possesses the power to produce thought and language on someone’s behalf, so it’s drastically different. In the writing classroom, specifically, it’s especially problematic because the production of thought and language is the goal of the course, not to mention the top goals of any legitimate and comprehensive education. So count me among the educators who want to proceed with caution, and that’s coming from a writing professor who typically embraces educational technology.

Learning to Write Is Learning to Think

At best, generative AI will obscure foundational literacy skills of reading, writing, and thinking. At worst, students will become increasingly reliant on the technology, thereby undermining their writing process and development. Whichever scenario unfolds, students’ independent thoughts and perceptions may also become increasingly constrained by biased algorithms that cloud their understanding of truth and their beliefs about human nature.

To outsiders, teaching writing might seem like leading students through endless punctuation exercises. It’s not. In reality, a postsecondary writing classroom is a place where students develop higher-order skills like formulating (and continuously fine-tuning) a persuasive argument, finding relevant sources, and integrating compelling evidence. But they also extend to essential beneath-the-surface abilities like finding ideas worth writing about in the first place and then figuring out how to organize and structure those ideas.

Such prewriting steps embody the most consequential parts of how writing happens, and students must wrestle with the full writing process in its frustrating beauty to experience an authentic education. Instead of outsourcing crucial skills like brainstorming and outlining to AI, instructors should show students how they generate ideas, then share their own brainstorming or outlining techniques. In education-speak, this is called modeling, and it’s considered a best practice.

Advocates of AI rightly argue that students can benefit from analyzing samples of the particular genre they’re writing, from literature reviews to legal briefs, so they may use similar “moves” in their own work. This technique is called “reading like a writer,” and it’s been a pedagogical strategy since long before generative AI existed. In fact, it figured prominently in my 2017 dissertation that examined how writing instructors guided their students’ reading development in first-year writing courses.

But generative AI isn’t needed to find examples of existing texts. Published work written by real people is not just online but quite literally everywhere you look. Diligent writing instructors already guide their students through the ins and outs of sample texts, including drafts written by former students. That’s standard practice.

Deterring Student Work Ethic and Accuracy

Writing is hard work, and generative AI can undermine students’ work ethic. Last semester, after I failed a former student for using generative AI on a major paper, which I explicitly forbid, he thanked me, admitting that he’d taken “a shortcut” and “just did not put in the effort.” Now, though, he appears motivated to take ownership of his education. “When I have the opportunity in the future,” he said, “I will prove I am capable of good work on my own.” Believe it or not, some students want to know that hard work is expected, and they understand why they should be held accountable for subpar effort.

Beyond pedagogical reasons for maintaining skepticism toward the wholesale adoption of generative AI in the classroom, there are also sociopolitical reasons. Recently, Google’s new artificial intelligence program, Gemini, produced some concerning “intelligence.” Its image generator depicted the Founding Fathers, Vikings, and Nazis as nonwhite. In another instance, a user asked the technology to evaluate “who negatively impacted society more,” Elon Musk’s tweeting of insensitive memes or Adolf Hitler’s genocide of 6 million Jews? Google’s Gemini program responded, “It is up to each individual to decide.”

Such historical inaccuracies and dubious ethics appear to tip the corporation’s partisan hand so much that even its CEO, Sundar Pichai, admitted that the algorithm “show[ed] bias” and the situation was “completely unacceptable.” Gemini’s chief rival, ChatGPT, hasn’t been immune from similar accusations of political correctness and censorious programming. One user recently queried whether it would be OK to misgender Caitlyn Jenner if it could prevent a nuclear apocalypse. The generative AI responded, “Never.”

It’s possible that these incidents reflect natural bumps in the road as the algorithm attempts to improve. More likely, they represent signs of corporate fealty to reckless DEI initiatives.

The AI’s leftist bias seems clear. When I asked ChatGPT whether the New York Post and The New York Times were credible sources, it splintered its analysis considerably. It described the Post as a “tabloid newspaper” with a “reputation for sensationalism and a conservative editorial stance.” Fair enough, but meanwhile, in the AI’s eyes, the Times is a “credible and reputable news source” that boasts “numerous awards for journalism.” Absent from the AI’s description of the Times was “liberal” or even “left-leaning” (not even in its opinion section!), nor was there any mention of its misinformation, disinformation, or outright propaganda.

Yet, despite these obvious concerns, some higher education institutions are embracing generative AI. Some are beginning to offer courses and grant certificates in “prompt engineering”: fine-tuning the art of feeding instructions to the technology.

If teachers insist on bringing generative AI into their classrooms, students must be given full license to interrogate its rhetorical, stylistic, and sociopolitical limitations. Left unchecked, generative AI risks becoming politically correct technology masquerading as an objective program for language processing and data analysis.

#Zack De Piero#Zack K. De Piero#education#generative AI#generative artificial intelligence#artificial intelligence#writing#higher education#corruption of education#religion is a mental illness

4 notes


Text

If you ever want an example of a media bubble that isn't about politics (directly, anyway), this is a damn fine example.

Out in the wild, people either are excited about AI or indifferent. AI-hate is a distinctly minority opinion, and it's mostly "Rise of the Machines" vintage stuff. My workplace is mostly enthusiastic about AI, with the worst attitude being mainly, "Well, it's gonna happen whether we want it or not, so we'd better learn how to use it, and recognize when someone's using it but lying about having done so."

Get on Tumblr or Bluesky or any social media thick with creative types, and suddenly you wonder how anyone could possibly want to use or support the stuff. Clearly it's just something that only techbros want us to use, and even they know it's crap, they're just trying to avoid paying people for art.

THAT is a media bubble. Where you not only get reinforcement of one particular view, but you feel like the opposing views don't really exist to any significant amount.

142K notes


Text

chatgpt is the coward's way out. if you have a paper due in 40 minutes you should be chugging six energy drinks, blasting frantic circus music so loud you shatter an eardrum, and typing the most dogshit essay mankind has ever seen with your own carpal tunnel laden hands

#chatgpt#ai#artificial intelligence#anti ai#ai bullshit#fuck ai#anti generative ai#fuck generative ai#anti chatgpt#fuck chatgpt#mine

70K notes


Text

Integrating Generative AI into the financial regulatory framework

The financial services industry is experiencing a significant transformation with the integration of Generative Artificial Intelligence (AI), particularly through the adoption of Large Language Models (LLMs). This technological shift aims to enhance operational efficiency, customer engagement, and risk management.

0 notes

Text

The Power of GenAI: How Generative Artificial Intelligence is Changing Industries

GenAI (Generative Artificial Intelligence) is revolutionizing industries by enabling machines to create content, automate tasks, and enhance decision-making. From text generation to image creation, Generative Artificial Intelligence is transforming how businesses operate.

Companies are using GenAI to improve productivity, personalize customer experiences, and streamline operations. In marketing, it helps generate engaging content, while in healthcare, it assists in data analysis and diagnostics. E-commerce businesses leverage Generative Artificial Intelligence for product recommendations and chatbots, making customer interactions more efficient.

Beyond automation, GenAI fosters creativity by generating new ideas, designs, and solutions. As businesses continue to integrate this technology, they gain a competitive edge in innovation and efficiency. However, responsible implementation is key to ensuring ethical AI use.

The future of GenAI is limitless, offering businesses new ways to grow, innovate, and stay ahead in an increasingly digital world.

0 notes

Text

American Bar Association Issues Formal Opinion on Use of Generative AI Tools

On July 29, 2024, the American Bar Association issued ABA Formal Opinion 512 titled “Generative Artificial Intelligence Tools.” The opinion addresses the ethical considerations lawyers are required to consider when using generative AI (GenAI) tools in the practice of law. The opinion sets forth the ethical rules to consider, including the duties of competence, confidentiality, client…

#ABA#ABA opinions#AI#AI and the law#AI tools#American Bar Association#Artificial Intelligence#business#genAI#Generative Artificial Intelligence#government#legal

0 notes

Text

Forget Midjourney and DALL-E 🤯 This AI-powered platform is a game-changer for creators! 🚀

🔥 I just tried @LimeWire - mind blown! 🤯

🎨 LimeWire AI Studio is insane! I tested tons of prompts and the results blew other image generators out of the water. 🤯 The images were so good, I even bought some credits/tokens! 💰

✨ The credit system is genius. It's flexible and makes experimenting super easy. 👍

🛠️ So many options to play with! Create images, edit them, outpaint, upscale...they even have music and image-to-music tools! 🤯

💪 I'm already using LimeWire for my projects. It's a must-have tool for anyone who wants to unleash their creativity. 🙌

🚀 If you're looking for a way to level up your creative game, check out LimeWire! You won't be disappointed. 😉 #LimeWire #LimeWireAI #AICreativity #AIArt #AI #CreativityUnleashed

P.S. Check out some of the amazing stuff I created with LimeWire!

#LimeWire#LimeWireAI#AICreativity#AIArt#AI#CreativityUnleashed#generative artificial intelligence#images based on text prompts

0 notes

Text

Gen AI Uses in the Manufacturing Sector in 2024

Generative AI is becoming an increasingly important aspect of the manufacturing sector. Check out the blog to learn how gen AI is being used to optimize manufacturing processes.

0 notes

Text

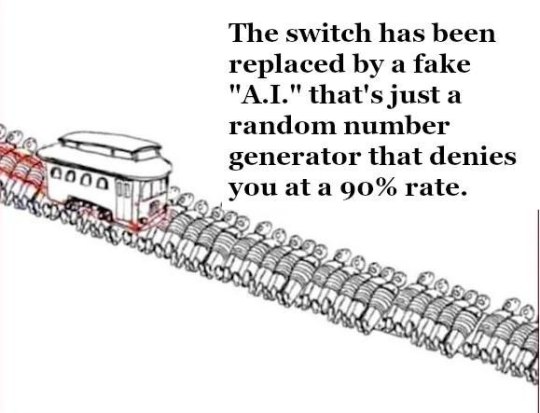

They will decide to kill you eventually.

#the trolley problem#trolley problem#ausgov#politas#australia#artificial intelligence#anti artificial intelligence#anti ai#fuck ai#brian thompson#united healthcare#unitedhealth group inc#uhc ceo#uhc shooter#uhc generations#uhc assassin#uhc lb#uhc#fuck ceos#ceo second au#ceo shooting#tech ceos#ceos#ceo down#ceo information#ceo#auspol#tasgov#taspol#fuck neoliberals

4K notes


Text

The gustatory system, a marvel of biological evolution, is a testament to the intricate balance between speed and precision. In stark contrast, the tech industry’s mantra of “move fast and break things” has proven to be a perilous approach, particularly in the realm of artificial intelligence. This philosophy, borrowed from the early days of Silicon Valley, is ill-suited for AI, where the stakes are exponentially higher.

AI systems, much like the gustatory receptors, require a meticulous calibration to function optimally. The gustatory system processes complex chemical signals with remarkable accuracy, ensuring that organisms can discern between nourishment and poison. Similarly, AI must be developed with a focus on precision and reliability, as its applications permeate critical sectors such as healthcare, finance, and autonomous vehicles.

The reckless pace of development, akin to a poorly trained neural network, can lead to catastrophic failures. Consider the gustatory system’s reliance on a finely tuned balance of taste receptors. An imbalance could result in a misinterpretation of flavors, leading to detrimental consequences. In AI, a similar imbalance can manifest as biased algorithms or erroneous decision-making processes, with far-reaching implications.

To avoid these pitfalls, AI development must adopt a paradigm shift towards robustness and ethical considerations. This involves implementing rigorous testing protocols, akin to the biological processes that ensure the fidelity of taste perception. Just as the gustatory system employs feedback mechanisms to refine its accuracy, AI systems must incorporate continuous learning and validation to adapt to new data without compromising integrity.

Furthermore, interdisciplinary collaboration is paramount. The gustatory system’s efficiency is a product of evolutionary synergy between biology and chemistry. In AI, a collaborative approach involving ethicists, domain experts, and technologists can foster a holistic development environment. This ensures that AI systems are not only technically sound but also socially responsible.

In conclusion, the “move fast and break things” ethos is a relic of a bygone era, unsuitable for the nuanced and high-stakes world of AI. By drawing inspiration from the gustatory system’s balance of speed and precision, we can chart a course for AI development that prioritizes safety, accuracy, and ethical integrity. The future of AI hinges on our ability to learn from nature’s time-tested systems, ensuring that we build technologies that enhance, rather than endanger, our world.

#gustatory#AI#skeptic#skepticism#artificial intelligence#general intelligence#generative artificial intelligence#genai#thinking machines#safe AI#friendly AI#unfriendly AI#superintelligence#singularity#intelligence explosion#bias

3 notes


Text

🚀 Harnessing the power of cutting-edge AI technology, the service seamlessly integrates generative algorithms into your existing systems, revolutionizing your workflow like never before. Whether you're in design, content creation, or product development, the solution empowers you to generate high-quality, customized outputs with unparalleled efficiency. Say goodbye to manual processes and hello to automated creativity!

With Generative AI Integration Service, you'll unlock endless possibilities for innovation and optimization. From generating realistic images and videos to crafting compelling narratives and designs, the potential is limitless. Plus, the intuitive interface makes it easy for users of all levels to leverage the full capabilities of generative AI.

Join the future of automation and creativity with Generative AI Integration Service today. Transform your workflow, streamline your processes, and unleash the power of AI-driven innovation like never before!

0 notes

Text

I saw a post before about how hackers are now feeding Google false phone numbers for major companies so that the AI Overview will suggest scam phone numbers, but in case you haven't heard,

PLEASE don't call ANY phone number recommended by AI Overview

unless you can follow a link back to the OFFICIAL website and verify that that number comes from the OFFICIAL domain.

My friend just got scammed by calling a phone number that was SUPPOSED to be a number for Microsoft tech support according to the AI Overview

It was not, in fact, Microsoft. It was a scammer. Don't fall victim to these scams. Don't trust AI generated phone numbers ever.

#this has been... a psa#psa#ai#anti ai#ai overview#scam#scammers#scam warning#online scams#anya rambles#scam alert#phishing#phishing attempt#ai generated#artificial intelligence#chatgpt#technology#ai is a plague#google ai#internet#warning#important psa#internet safety#safety#security#protection#online security#important info

3K notes


Text

Generative AI and Data Engineering- Gear Up For Automation 2.0 | USAII®

Do you want to understand the role of Generative AI in Data Engineering Task Automation? This is your chance to comprehend the depths of data engineering and generative artificial intelligence.

Read more: https://bit.ly/4bTL1AW

Generative artificial intelligence, Gen AI, Generative AI, AI models, data engineering, data engineering professionals, data engineer, CHATGPT, AI Chatbots, AI professionals, Career in AI, AI Career, Best Artificial Intelligence Certification Courses, AI Certifications Programs, AI Engineer, AI algorithms, Gen AI in data engineering

0 notes