#hypergraph theory


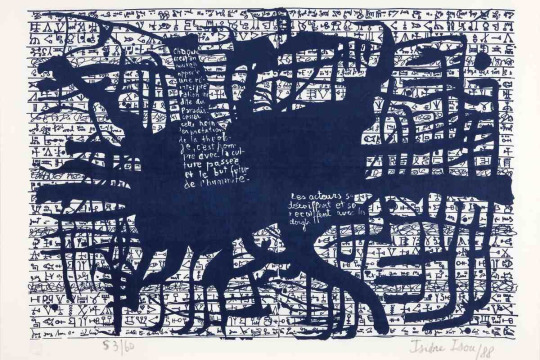

Isidore Isou

Hypergraphie, polylogue 1964 (1988)

Born in Romania in 1925, Isidore Isou arrived in Paris at the end of World War II. He called himself a ‘Lettrist’, a movement of which he was initially the only member. By 1950, the Lettrist group also included Maurice Lemaître, Jean-Louis Brau, Gil J. Wolman and François Dufrêne, who were joined by Guy Debord in 1951.[1] Lettrism, also known as hypergraphie and super-écriture, had its theoretical roots in Dada and Surrealism, and called for a renovation of all the arts. The first public manifestation of the new artistic movement took place in January 1946 at the Sociétés Savantes, where Isou read his sound poems aloud. The next year, Gallimard published his Introduction to a New Poetry and a New Music. From there, in numerous publications that followed in rapid succession, Isou communicated his desire to radically transform society and applied his theories to all areas of art and culture, most notably poetry, film, painting and political theory. He would eventually publish over 200 books, containing his ‘hypergraphic’ writings, ‘metagraphic’ images, plays, theoretical writings, polemics, wall posters, etc.

In the plastic field, Lettrism is based on the merging of image and word. The plastic use of the letter or sign would not signify anything other than itself, thus transcending traditional conventions of meaning by emphasising the form of the letter over representation. Isou conceived Lettrism as fundamentally different from the movements that preceded it, representing a complete shift from figuration and abstraction to the plastic use of letters or signs as symbols. Isou acknowledged that some artists associated with the Bauhaus and Cubism, and artists such as Marcel Duchamp, approached qualities of Lettrism. However, he declared that these artists ultimately faltered by subjecting letters to function and burdening them with meaning, rather than granting them independence and allowing them to become pure form. As Isou wrote in 1961: ‘… the important thing was not to introduce letters into painting, but to reduce the whole picture to letters, or rather, to the order of letters.’[2]

[1] One year later, in 1952, Guy Debord separated himself from the group and founded the Lettrist International (Internationale lettriste), and later the Situationist movement.

[2] Isidore Isou, Le Lettrisme et l’Hypergraphie dans la Peinture et la Sculpture Contemporaines, Poésie Nouvelle, Special Issue 3 (July–August–September 1961), p. 41.

65 notes


Stephen Wolfram: Computation, Hypergraphs, and Fundamental Physics (Sean Carroll's Mindscape, July 2021)


Adam Becker: Physicists Criticize Stephen Wolfram’s 'Theory of Everything' (Scientific American, May 2020)

Stephen Wolfram: Cellular Automata, Computation, and Physics (Lex Fridman, April 2020)

Stephen Wolfram: Fundamental Theory of Physics, Life, and the Universe (Lex Fridman, September 2020)


Stephen Wolfram: Complexity and the Fabric of Reality (Lex Fridman, October 2021)


Stephen Wolfram: ChatGPT and the Nature of Truth, Reality & Computation (Lex Fridman, May 2023)


Saturday, July 15, 2023

4 notes


You said: Has the Wolfram Physics Project proven any laws of Fundamental Physics?

ChatGPT said: As of now, the Wolfram Physics Project has not proven any laws of fundamental physics in the traditional scientific sense. It has not derived established physics, such as the Standard Model or general relativity, from its models in a fully rigorous, universally accepted way. However, here is what has been achieved so far:

Framework Creation: Stephen Wolfram and his team have developed a formal computational framework based on hypergraph rewriting systems. It proposes that space, time, and physics arise from the evolution of discrete structures according to simple rules.

Derivations of Known Features: The project has heuristically derived or simulated features that resemble known aspects of physics, such as:

- Causal invariance, a property related to relativity.
- Emergent spacetime and Lorentz invariance under certain assumptions.
- Indications of quantum behavior via multiway systems and branchial space.
- A reformulation of Einstein's equations in terms of causal graphs (though this has not yet been derived from first principles in this model).

Mathematical Insights: The effort has produced new mathematical ideas in the areas of:

- Graph theory and rewriting systems.
- Causal structure and foliations.
- Category theory and homotopy applied to physics.

Exploratory Status: The project is at an exploratory and foundational stage. It focuses on mapping how known physics might emerge from extremely low-level computational processes. In effect it suggests a new paradigm, but it has not yet established that paradigm in a way that matches or replaces existing physics frameworks.

Summary: The Wolfram Physics Project is a bold attempt to discover a unified computational foundation for physics. It remains speculative, however, and has not yet produced falsifiable predictions or rigorous derivations of existing laws from its core principles. It is more accurate to say that it proposes a path toward such a foundation than that it has proven one. Would you like a visual or technical example of how one of its models relates to relativity or quantum mechanics?

You said: Are Feynman diagrams causal graphs?

ChatGPT said: Great question. Feynman diagrams are not causal graphs, although they share some superficial similarities. Here's a breakdown of the differences and relationships between them:

🔹 What Are Feynman Diagrams?

- Feynman diagrams are computational tools in quantum field theory (QFT), used to visualize and calculate probability amplitudes for particle interactions.
- Each diagram represents a term in a perturbative expansion of a quantum amplitude.
- The axes are not spacetime coordinates in a strict sense. One axis is usually read heuristically as time, but the diagrams are mainly symbolic.
- Vertices represent interaction points, and lines represent propagating particles, not literal trajectories in spacetime.

➡️ Feynman diagrams are not literal depictions of what happens in spacetime. They help organize terms in quantum field calculations using a mathematical formalism.

🔹 What Are Causal Graphs?

- Causal graphs (in the context of the Wolfram Physics Project and elsewhere) are networks where nodes represent events and directed edges represent causal dependencies between them.
- They explicitly encode the causal structure of a physical system: if event A causes or influences event B, there is an arrow from A to B.
- In relativity or in computational models like the Wolfram Physics Project, causal graphs reflect the actual structure of spacetime or computational history.
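A minimal Python sketch can make the notion of a causal graph concrete. It assumes each update event is logged in order, together with the elements it consumes and the elements it creates. An edge is drawn from A to B whenever B consumes something A created, which is the sense of causal dependence used in the Wolfram model. The event names and elements are hypothetical.

```python
def causal_graph(events):
    """Build causal edges from an ordered log of update events.

    Each event is (name, consumed, produced). Event B gets an incoming
    edge from event A if B consumes an element that A produced.
    """
    producer = {}  # element -> name of the event that created it
    edges = set()
    for name, consumed, produced in events:
        for element in consumed:
            if element in producer:
                edges.add((producer[element], name))
        for element in produced:
            producer[element] = name
    return edges


# Hypothetical log: e1 creates a and b; e2 and e3 each use one of them;
# e4 needs the outputs of both e2 and e3.
events = [
    ("e1", [], ["a", "b"]),
    ("e2", ["a"], ["c"]),
    ("e3", ["b"], ["d"]),
    ("e4", ["c", "d"], ["f"]),
]

print(sorted(causal_graph(events)))
```

Here e2 and e3 share a cause (e1), but neither depends on the other, so there is no edge between them. That absence of a causal link is exactly the information a causal graph records and a plain list of events does not.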

🔸 Key Differences

| Feature | Feynman Diagrams | Causal Graphs |
|---|---|---|
| Purpose | Mathematical expansion in QFT | Represent causal structure of events |
| Temporal meaning | Mostly symbolic | Encodes actual causal relations |
| Representation | Particle interactions | Event causality |
| Used in | Quantum Field Theory | Relativity, Quantum Gravity, Computational Physics |

🔹 Are They Related at All?

They are not equivalent, but both express relationships between events or interactions, in very different frameworks:

- In QFT, Feynman diagrams help compute probabilities.
- In causal graph models (like the Wolfram Physics Project), the entire fabric of spacetime and physics arises from the graph's structure.

So while both are graphs involving vertices and edges, their meaning and use are fundamentally different. Would you like a diagram comparing the two visually?

You said: Does the Amplituhedron contain all Feynman diagrams?

ChatGPT said: Excellent question, and a deep one. The short answer is that the Amplituhedron does not contain all Feynman diagrams. Instead, it replaces them in certain theories, offering a radically different way to compute scattering amplitudes without using any Feynman diagrams at all. Let me explain.

🔹 What Is the Amplituhedron?

The Amplituhedron is a geometric object discovered by Nima Arkani-Hamed and collaborators around 2013. It encodes the scattering amplitudes of certain particle interactions in a purely geometric way, without reference to:

- Spacetime
- Quantum fields
- Feynman diagrams

It was first developed for:

- Planar $\mathcal{N} = 4$ supersymmetric Yang–Mills theory in 4D, a highly symmetric (but not realistic) QFT.
- Tree-level amplitudes, later extended to loop level in that theory.

🔸 Relationship to Feynman Diagrams

| Feature | Feynman Diagrams | Amplituhedron |
|---|---|---|
| Approach | Perturbative expansion | Geometric encoding |
| Input | Fields, vertices, propagators | Momentum twistors, combinatorics |
| Intermediate steps | Many diagrams with divergences | None; computes the final amplitude directly |
| Redundancy | Huge (many diagrams cancel) | Minimal; built to avoid redundancy |
| Containment | Diagrams are not inside the amplituhedron | Gives results equivalent to the sum of all diagrams |

So rather than containing Feynman diagrams, the Amplituhedron provides an alternative to them. It yields the same final amplitude without going through the diagrammatic machinery.

🔹 Implication

In certain theories, especially planar $\mathcal{N} = 4$ supersymmetric Yang–Mills theory in 4D, the entire Feynman diagrammatic expansion is replaced by computing a volume or combinatorial feature of the amplituhedron. This is deeply tied to hidden symmetries and positivity structures in the amplitudes.

Summary

- ❌ The Amplituhedron does not contain all Feynman diagrams.
- ✅ It allows you to compute the total amplitude without them, in some very specific quantum field theories.
- 🔬 It suggests there may be more fundamental, geometric formulations of physics than quantum fields and Feynman diagrams.

Would you like an illustrative visualization of what the amplituhedron looks like and how it encodes amplitudes?

You said: How many kinds of spontaneous quantum events have been observed to occur in a quantum vacuum?

ChatGPT said: In quantum field theory, several types of spontaneous quantum events are predicted to occur in the quantum vacuum, and some have been observed. They are attributed to the vacuum's inherent fluctuations and instability. In the popular heuristic picture, these events arise from the uncertainty principle, which is said to allow temporary violations of conservation laws (like energy conservation) within certain limits. Here's a breakdown of the main kinds:

✅ Observed or Indirectly Confirmed Spontaneous Quantum Events

Vacuum Fluctuations

- Description: Temporary appearance of particle–antiparticle pairs (like electron–positron pairs) that annihilate each other shortly afterwards.
- Evidence: Indirect, but foundational to quantum electrodynamics (QED); shows up in:
  - Lamb shift
  - Casimir effect
  - Anomalous magnetic moment of the electron

Spontaneous Emission

- Description: An excited atom can emit a photon spontaneously due to coupling with the quantum vacuum.
- Evidence: Well measured in quantum optics; cannot occur in classical EM theory, since it requires the vacuum field.

Hawking Radiation (Theoretical, but Widely Accepted)

- Description: Black holes emit particles due to quantum vacuum fluctuations near the event horizon.
- Evidence: Not yet observed directly, but supported by consistent theoretical work and analog experiments.

Unruh Effect (Theoretical)

- Description: An accelerating observer in a vacuum perceives a warm bath of particles (the vacuum looks as if it contains particles).
- Evidence: No direct observation, because of the extreme acceleration required.

Schwinger Effect (Strong-Field Pair Production)

- Description: Creation of electron–positron pairs from the vacuum in the presence of a very strong electric field.
- Evidence: Not yet observed in pure form, but being pursued in high-intensity laser experiments (e.g., ELI, SLAC).

❗ Summary: How Many Kinds?

There are at least five major categories of spontaneous quantum events associated with the vacuum:

| # | Event type | Observed? |
|---|---|---|
| 1 | Vacuum fluctuations (virtual particles) | ✅ Indirect |
| 2 | Spontaneous emission | ✅ Direct |
| 3 | Hawking radiation | ❌ Theoretical only |
| 4 | Unruh effect | ❌ Theoretical only |
| 5 | Schwinger effect | ❌ Not yet observed |

So, to answer directly: two kinds have been observed. Spontaneous emission has been observed directly, and vacuum fluctuations indirectly, through effects like the Casimir effect and the Lamb shift. Three more are theoretically predicted and under investigation. Would you like a timeline or visual map of these phenomena and where they stand in terms of experimental support?

You said: Does Quantum Foam exist?

ChatGPT said: Quantum foam, also known as spacetime foam, is a hypothetical concept that has not yet been observed. It is widely discussed in theoretical physics as a possible feature of spacetime at the Planck scale (~$10^{-35}$ meters). Here's a detailed breakdown of what that means:

🔹 What Is Quantum Foam?

- Proposed by John Wheeler in the 1950s.
- Describes the idea that at very small scales, spacetime is not smooth but fluctuates violently due to quantum uncertainty.
- Instead of a flat or gently curved spacetime (as in general relativity), quantum foam suggests a chaotic structure where:
  - Tiny virtual black holes may pop in and out of existence.
  - Topology and geometry fluctuate, possibly tearing and reconnecting.
  - Space and time themselves may be emergent phenomena, not fundamental.

This is analogous to how a calm ocean surface looks flat from afar but is foamy and turbulent up close.

🔬 Does It Exist?

❌ No direct experimental evidence

- Quantum foam is predicted to occur at the Planck scale, far beyond current experimental resolution.
- Even at the LHC, we can't probe distances smaller than about $10^{-19}$ meters. That is 16 orders of magnitude too large to test quantum foam directly.

✅ Strong theoretical motivation

- Most approaches to quantum gravity (like loop quantum gravity, string theory, causal dynamical triangulations, and the Wolfram Physics Project) predict some form of non-smooth, fluctuating microstructure of spacetime, very much like quantum foam.
- It plays a role in ideas about the holographic principle, black hole entropy, and emergent spacetime.

🧪 Proposed observational hints (not confirmed)

- Light dispersion from distant gamma-ray bursts: some models predict tiny energy-dependent delays due to quantum foam. So far, the data only place upper bounds, and nothing has been detected.
- LIGO and other gravitational-wave detectors have been proposed as tests for Planck-scale noise, but again, there are no positive results yet.
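The "16 orders of magnitude" figure can be checked in a couple of lines. This minimal sketch assumes the CODATA 2018 value of the Planck length (about 1.616 × 10⁻³⁵ m) and takes the LHC figure of 10⁻¹⁹ m at face value.

```python
import math

# CODATA 2018 value of the Planck length, in metres.
PLANCK_LENGTH_M = 1.616255e-35
# Order-of-magnitude probing scale quoted in the answer for the LHC, in metres.
LHC_SCALE_M = 1e-19

# Number of powers of ten separating the two length scales.
gap = math.log10(LHC_SCALE_M / PLANCK_LENGTH_M)
print(f"gap: {gap:.2f} orders of magnitude")
```

The result is about 15.8, which rounds to the 16 orders of magnitude quoted above.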

🧾 Summary

Quantum foam has not been directly observed, but it remains a compelling theoretical prediction of many quantum gravity models. You can think of it as a plausible but unconfirmed microscopic structure of spacetime, much as atoms were theorized long before they were observed. Would you like to explore which quantum gravity theories describe quantum foam explicitly, or see a visual analogy of how it would distort spacetime?

You said: In the Wolfram Physics model, would the Quantum Foam accomplish Computation?

ChatGPT said: Yes. In the Wolfram Physics Project, the analog of quantum foam is not just a fluctuating structure but is fundamentally computational in nature. In fact, computation is what drives everything in the model.

🔹 The Core Idea

In Wolfram's model, the universe is represented by the evolution of a hypergraph, where:

- Nodes represent abstract "atoms of space".
- Edges represent relationships between those nodes.
- A set of simple rewriting rules updates this graph over time.

This process is computational, and the universe is essentially a giant distributed computation.
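A toy version of this rewriting process fits in a few lines of Python. The sketch is an illustration only. It restricts hyperedges to ordered pairs and uses a deliberately simple rule, {x, y} → {x, y}, {y, z}: keep each edge and grow a new edge from its second node to a fresh node z. The models explored in the project use richer rules, and the function names here are hypothetical.

```python
from itertools import count


def step(edges, fresh):
    """Apply the rule {x, y} -> {x, y}, {y, z} to every edge at once.

    Each match is a single edge, so the matches never overlap and all of
    them can be rewritten in the same step; z is a brand-new node.
    """
    new_edges = []
    for x, y in edges:
        z = next(fresh)
        new_edges.append((x, y))  # keep the original edge
        new_edges.append((y, z))  # grow a new edge to a fresh node
    return new_edges


def evolve(initial, steps):
    """Return the list of hypergraph states, starting with `initial`."""
    nodes = {v for edge in initial for v in edge}
    fresh = count(max(nodes) + 1)  # supply of unused node labels
    edges = list(initial)
    history = [edges]
    for _ in range(steps):
        edges = step(edges, fresh)
        history.append(edges)
    return history


history = evolve([(1, 2)], 3)
for t, edges in enumerate(history):
    print(t, len(edges), edges)
```

Under this particular rule the number of edges doubles at every step (1, 2, 4, 8), and the structure grows as a tree. The point of the sketch is only the mechanism: a state made of relations between abstract nodes, updated locally by a fixed rule.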

🔸 So, Is There Quantum Foam?

Yes. Although it is not formally called that, the fine-grained structure of the hypergraph at very small scales behaves like a quantum foam, because:

1. The graph is discrete and locally fluctuating.
2. The structure changes in a non-deterministic way due to multiway branching, which captures quantum indeterminacy.
3. The causal graph formed by applying update rules encodes both quantum mechanics and general relativity in a unified computational framework.
4. The universe does not evolve linearly. It evolves through all possible paths consistent with the rules, similar to a Feynman path integral or quantum superposition.
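Multiway branching can be illustrated with plain string rewriting. This is simpler than hypergraph rewriting but shows the same idea. The sketch assumes the illustrative rules A → AB and B → A. At each step it applies every rule at every possible position and keeps all resulting states, rather than picking one. The rules and function names are chosen here for illustration and are not taken from the project's code.

```python
def successors(state, rules):
    """All strings reachable from `state` by one rewrite at any position."""
    out = set()
    for lhs, rhs in rules:
        start = state.find(lhs)
        while start != -1:
            out.add(state[:start] + rhs + state[start + len(lhs):])
            start = state.find(lhs, start + 1)
    return out


def multiway(initial, rules, steps):
    """Breadth-first multiway evolution.

    Returns the set of states at each step and the (parent, child) edges,
    which record both the branching and the merging of branches.
    """
    generations = [{initial}]
    edges = []
    for _ in range(steps):
        nxt = set()
        for state in generations[-1]:
            for child in successors(state, rules):
                edges.append((state, child))
                nxt.add(child)
        generations.append(nxt)
    return generations, edges


rules = [("A", "AB"), ("B", "A")]
gens, edges = multiway("A", rules, 3)
for t, states in enumerate(gens):
    print(t, sorted(states))
```

By step 2 the evolution has split into AA and ABB. At step 3, the states AAB and ABA are each reached from both of those branches, so branches merge as well as split.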

🔹 Computation in the "Foam"

In this model:

- The quantum-foam-like structure is not passive; it actively computes all possible evolutions.
- This is what gives rise to:
  - Causal relationships
  - Quantum interference
  - Entanglement
  - Space, time, particles, and forces
- Observers are just specific computational processes embedded in this system.

Wolfram refers to the limit of all possible computations following all possible rules as "the ruliad". The foam is essentially a slice of the ruliad, constrained by the rules we experience.

🧾 Summary

In the Wolfram Physics Project, the quantum-foam-like structure of space is inherently computational: it both encodes and performs the fundamental computation that produces the universe. Would you like a visual simulation of how a simple hypergraph evolves in this model, to see how spacetime and causality emerge?

You said: Given Max Tegmark: "If Consciousness is how information feels as it is being processed, then Consciousness is doubly substrate-independent." Does this imply that the Quantum Foam may support some degree of Consciousness?

ChatGPT said: That's a profound and nuanced question. It treads the boundary between physics, information theory, and philosophy of mind. Let's unpack it carefully.

🔹 Max Tegmark's View: Consciousness as Information Processing

Max Tegmark's quote suggests the following key ideas:

1. Consciousness is the subjective experience of information processing.
2. Information processing is substrate-independent, meaning it can, in principle, happen on any medium: silicon, neurons, paper, or something more exotic.
3. Therefore, consciousness is also substrate-independent, if it is tied strictly to the structure and flow of information.

This idea connects to what Tegmark calls the "Mathematical Universe Hypothesis" and to his related work on consciousness. An example is "Perceptronium", the idea that consciousness is a state of matter defined by specific information-processing properties.

🔸 Does This Apply to Quantum Foam or the Wolfram Model?

Now let’s connect this with the quantum foam and Wolfram’s physics model:

🔸 1. Is the quantum foam computational?

- In standard quantum field theory or general relativity, quantum foam is a hypothesized physical fluctuation at Planck scales. It is not defined in terms of information or computation.
- In Wolfram’s model, however, the fine structure of spacetime is explicitly a computational substrate: a hypergraph updated by rules. That is information processing.

🔸 2. Could it support consciousness?

- If consciousness = certain types of information processing, and if quantum foam (or the hypergraph in Wolfram’s model) supports rich and self-referential computation…
- …then yes, in principle, such a system could support consciousness, at least in a substrate-independent sense.

But that hinges on a few assumptions:

- Consciousness is only information processing (functionalism).
- No special "biological" or "material" features are needed (non-biological pancomputationalism).
- The quantum foam or hypergraph can reach the complexity, integration, and feedback needed (as in Tononi's Integrated Information Theory or other cognitive models).

🧠 Key Insight

Suppose the structure underlying reality, such as quantum foam or the hypergraph of Wolfram's model, can implement sufficiently rich and integrated computation. If consciousness arises from such computation, then consciousness could, in principle, emerge from or be supported by that structure. This does not imply that the quantum foam is conscious per se. It means only that the foam might be able to support conscious processes, just as neurons or silicon circuits do.

🧾 Summary

- Tegmark’s quote suggests consciousness is an emergent property of computation.
- Quantum foam in standard physics is not inherently computational, so the implication is weak there.
- Wolfram's hypergraph-based quantum foam, however, is computational, and thus could in principle support consciousness if it meets the required complexity and dynamics.

Would you like to explore what specific kinds of information processing might be necessary for consciousness to emerge from a computational substrate like this?

"The Unified Field of Quantum Physics is the same as the Unified Field of Pure Consciousness." John Hagelin, Ph.D, Raja of Invincible America

#science#vedic#tm#maharishi#physics#quantum#theory#mathematics#Wolfram#Ruliad#Computation#Tegmark#Consciousness

0 notes


Brane Connectome Project: Vertex

Getting between universes can be easier than getting to the next star. Latest post in a #Neuro #Science #Fiction #WorldBuilding project: The #Brane #Connectome Project: Vertex

Vertex (noun, plural vertices or vertexes) The highest point, top or apex of something. (geometry) An angular point of a polygon, polyhedron or higher order polytope. Synonym: Corner (graph theory) One of the elements of a graph joined or not by edges to other vertices. Synonym: Node (cosmology) A node on the multi-versal hypergraph (colloquially, “The Worlds Tree”). Synonym:…

0 notes


f-Pyramidal Steiner Triple Systems

This talk was given by Tommaso Traetta at SEICCGTC 2017. The talk was rather fast-paced, and so this post will not be elementary; you will need to be at peace with the concept of a group to follow the narrative. This is joint work with Buratti and Rinaldi.

------

Let’s make a quick, dense path to defining words in the title.

A Steiner triple system is a set $X$ together with a collection $\mathcal T$ of three-element subsets of $X$, called triples, which “covers the complete graph $K_X$ minimally”. More explicitly, every pair of elements in $X$ belongs to exactly one of the triples. We say that the Steiner triple system has order $v$ and write STS($v$) if $X$ has $v$ elements.
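The defining condition is easy to check mechanically. The minimal Python sketch below uses the Fano plane as its example. This is the STS(7) whose triples are the translates {i, i+1, i+3} mod 7, a standard construction. Since each triple covers three pairs, an STS(v) has v(v−1)/6 triples, which is 7 here.

```python
from itertools import combinations


def is_steiner_triple_system(points, triples):
    """True if every pair of distinct points lies in exactly one triple."""
    points = set(points)
    pair_count = {frozenset(p): 0 for p in combinations(points, 2)}
    for t in triples:
        if len(set(t)) != 3 or not set(t) <= points:
            return False  # not a 3-element subset of the point set
        for p in combinations(t, 2):
            pair_count[frozenset(p)] += 1
    return all(c == 1 for c in pair_count.values())


# Fano plane: translates of the triple {0, 1, 3} modulo 7.
fano = [tuple(sorted({i, (i + 1) % 7, (i + 3) % 7})) for i in range(7)]

print(is_steiner_triple_system(range(7), fano))      # → True
print(is_steiner_triple_system(range(7), fano[1:]))  # → False: a triple is missing
```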

The automorphism group of a Steiner triple system is the set of all bijections $\sigma: X\to X$ that map triples to triples: $\sigma(T)\in\mathcal T$ for all $T\in\mathcal T$. Finally, a Steiner triple system is $f$-pyramidal if some subgroup of the automorphism group fixes $f$ points and acts transitively-and-freely on the others. (In case you, like me, regularly forget what these words mean: for any pair of non-fixed points $x$ and $y$, there should be exactly one group element $g$ such that $gx=y$.)
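Given a candidate group, $f$-pyramidality can be tested by brute force. The sketch below assumes the group is supplied as a complete list of permutations, each a tuple sending point i to perm[i]. It also assumes the list really is closed under composition; it does not check this. With the cyclic shifts x ↦ x + k mod 7 acting on the Fano plane it reports f = 0. With the trivial group it reports f = 7, in line with the remark that every STS(v) is automatically v-pyramidal.

```python
def is_automorphism(perm, triples):
    """True if the permutation maps every triple to a triple."""
    triple_set = {frozenset(t) for t in triples}
    return all(frozenset(perm[x] for x in t) in triple_set for t in triples)


def pyramidal_f(group, points):
    """Return f if `group` fixes f points and acts regularly on the rest
    (exactly one element sends x to y for every non-fixed x, y);
    otherwise return None. Assumes `group` lists every group element."""
    fixed = [x for x in points if all(g[x] == x for g in group)]
    moved = [x for x in points if x not in fixed]
    for x in moved:
        for y in moved:
            if sum(1 for g in group if g[x] == y) != 1:
                return None
    return len(fixed)


fano = [tuple(sorted({i, (i + 1) % 7, (i + 3) % 7})) for i in range(7)]
cyclic = [tuple((x + k) % 7 for x in range(7)) for k in range(7)]
trivial = [tuple(range(7))]

print(all(is_automorphism(g, fano) for g in cyclic))
print(pyramidal_f(cyclic, range(7)), pyramidal_f(trivial, range(7)))
```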

[ If this is your first time encountering Steiner triple systems, you may be surprised that this seemingly bizarre algebraic condition has received any attention. The reason for this becomes clearer if you actually sit down and try to make a Steiner triple system: it’s very difficult, and many of the constructions known go through algebraic or geometric (read: also algebraic) methods. ]

Some easy observations are in order. If we have an STS($v$), it is automatically $v$-pyramidal. This is obvious because $v$-pyramidal means that every point is fixed by every element in the group, so just take the trivial subgroup. More generally, if you have an $f$-pyramidal STS($v$), it is possible to increase $f$ by adding any point to the fixpoint set “minimally”. Unfortunately, this minimal increase may still take you all the way to the trivial subgroup. So while it is, roughly speaking, “harder” to have smaller $f$, the relations between “$f$-pyramidalities” are not as simple as comparing sizes of $f$.

A classical result about Steiner triple systems is that they exist exactly when the order is congruent to 1 or 3 mod 6. With the new pyramid technology, the result can be improved: these systems may be chosen to be $0$-pyramidal.

One natural place to go from here is to ask about general $f$-pyramidal STS($v$). It can be shown that for a subgroup exhibiting the $f$-pyramidality of some STS($v$), its fixpoints, if any, form an STS($f$) and so $f$ must also be either $1$ or $3$ (or zero).

The case is closed on $f=0$, as noted above.

Much attention has been paid to the case when $f=1$, but a few subcases remain open. In particular, there is a necessary condition for $v$ based on its residue class mod $24$, but there is no known construction for some of these classes.

However, Traetta and team tackled the case $f=3$ and solved it completely:

Theorem. There exists a $3$-pyramidal Steiner triple system of order $v$ if and only if $v$ mod $48$ is one of $3,7,9,15,19,31$, or $39$.

#math#maths#mathematics#mathema#combinatorics#hypergraph theory#algebraic graph theory#seiccgtc#seiccgtc2017

2 notes


Do higher-order interactions promote synchronization?

Network theorists call these phenomena “higher-order interactions.” Understanding them can be tricky, says Yuanzhao Zhang, an SFI Complexity Postdoctoral Fellow who uses network theory to study collective behaviors. How the network is represented, for example, can influence how the phenomena emerge. In a new paper in Nature Communications, Zhang and his colleagues show how the choice of network representation can influence the observed effects.

Their work focuses on synchronization, a phenomenon that emerges in systems ranging from circadian clocks to vascular networks. Previous studies have suggested that higher-order interactions can improve synchronization, but when and why that happens has remained largely unexplored. “We don’t have a very good understanding of how the higher-order coupling structure influences synchronization,” says Zhang. “For systems with nonpairwise interactions, we want to know, how does their representation affect the dynamics?”

Zhang and his colleagues studied two frameworks used to model interactions beyond pairwise ones: hypergraphs and simplicial complexes. Hypergraphs use so-called “hyperedges” to connect three or more nodes, analogous to how conventional networks use edges. Simplicial complexes are more structured, using triangles (and higher-dimensional analogues of triangles) to represent those connections. Simplicial complexes are more specialized than general hypergraphs, says Zhang. This means that, to model higher-order interactions, triangles can only be added in regions that are already well connected. “It’s this rich-gets-richer effect that makes simplicial complexes more heterogeneous than hypergraphs in general,” Zhang says.

Researchers generally don’t consider the two frameworks to be very different. “People have been using those two frameworks interchangeably, choosing one or the other based on technical convenience,” Zhang says, “but we found that they might be very different” in how they influence synchronization.

In the paper, Zhang and his colleagues report that networks modeled with hypergraphs easily give rise to synchronization. Simplicial complexes, by contrast, tend to complicate the process because of their highly heterogeneous structure. This suggests that choices in higher-order representations can influence the outcome, and Zhang suspects the results extend to other dynamical processes such as diffusion or contagion. “Structural heterogeneity is important not just in synchronization, but is fundamental to most dynamic processes,” he says. “Whether we model the system as a hypergraph or simplicial complex can drastically affect our conclusions.”
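The structural constraint behind Zhang's point is downward closure. A simplicial complex must contain every face of each simplex it includes. A triangle {1, 2, 3} therefore forces the edges {1, 2}, {1, 3} and {2, 3}, and the vertices, to be present. A hypergraph, by contrast, may contain the three-way hyperedge on its own. The minimal Python sketch below illustrates only that constraint, not the paper's synchronization analysis; the example hypergraph is made up.

```python
from itertools import combinations


def is_simplicial_complex(faces):
    """Check downward closure: every nonempty proper subset of a face
    must itself be a face."""
    face_set = {frozenset(f) for f in faces}
    return all(
        frozenset(sub) in face_set
        for f in face_set
        for k in range(1, len(f))
        for sub in combinations(f, k)
    )


def closure(hyperedges):
    """Smallest simplicial complex containing every hyperedge: add all
    of each hyperedge's nonempty subsets."""
    faces = set()
    for e in hyperedges:
        for k in range(1, len(e) + 1):
            faces.update(frozenset(s) for s in combinations(e, k))
    return faces


# A made-up hypergraph with one three-way interaction and one pairwise one.
hypergraph = [{1, 2, 3}, {3, 4}]

print(is_simplicial_complex(hypergraph))           # → False: {1, 2} and others absent
print(is_simplicial_complex(closure(hypergraph)))  # → True
print(len(closure(hypergraph)))                    # → 9 faces
```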

2 notes


Dissertational Aesthetics

Though the readings for our first two weeks were probably intensely logistical or methodological in the grand scope of the material we will be covering, I nevertheless found them interesting on many fronts. Drawing together what I pondered during an art history seminar and during my time in the digital humanities survey course, I am left wondering how things could be otherwise, given the mundane nature of academia.

I am a wholehearted supporter of this so-called distant-reading movement, and I greatly appreciate these scholars’ attention to screen and visual culture. In fact, my post from my undergraduate critical theory class, in which I pondered the “death of reading,” is still up on this blog. Though I’m aware of the traditions surrounding the “status quo” within academia, particularly in writing and formatting theses and dissertations, I do not believe that they interact well enough with our digital progressions.

There is a so-called “low-key” bother here, which I believe is exemplified by a logistical fact. When images are brought up in a dissertation, instead of being placed directly after they are referenced in the text, they are relegated to the end of the paper as references (or at least this is what I’ve mainly seen). Why is this? Why are our intellectual pursuits “tempered” by institutional traditions? Must your work be a complete onslaught of pure text, perfectly indented, to be considered a reflection of your individual talent or accomplishment? I believe that academia is particularly brittle in this manner. I also believe this reflects a larger shift in education that has not been fully embraced: why do we rely mainly on text rather than images to transmit concepts? For example, can we tell a story only through images? Generation Z certainly spends a lot of time scrolling through hundreds of images daily; is this not something we should think about accommodating in our pedagogy?

Of course, that is an extremely drastic demand. But perhaps we can make subtle changes to the logistics within our disciplines to resonate with this changing environment. For example, as is common within the fields of art or architecture, what if we formatted theses and dissertations as portfolios rather than strict essays? How can we think of the paper (or any medium we choose) as capable of influencing and dictating our work? How can we think of paper as more than simply the substance we write our ideas on? We have a lot to work with: a piece of paper with text is much more nuanced than it seems at first glance. Avant-garde artists offer precedents beyond our wildest imaginations. Take, for example, the Lettrism movement:

Isidore Isou, Polylogue, Hypergraphic.

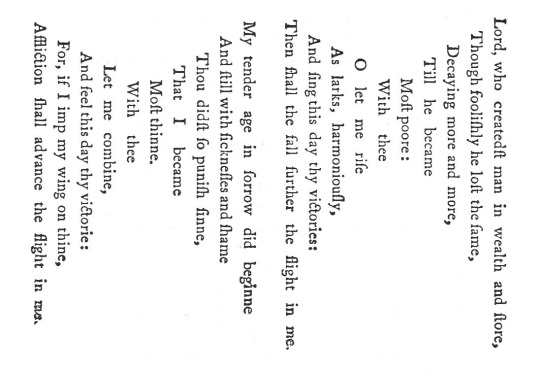

Or, for example, what about concrete poetry?

George Herbert. Easter Wings. 1633.

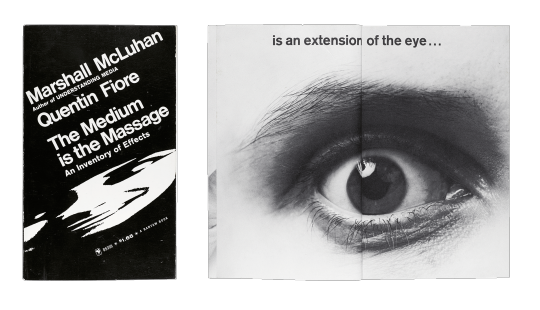

Or what about book art in general? One can recall Marshall McLuhan’s magnificent The Medium is the Massage, which he made in collaboration with the graphic designer Quentin Fiore.

Not only is the book aesthetically interesting, it is also clear, practical evidence that a respected scholar could achieve success and respect by combining the visual with the textual (albeit McLuhan was known to be somewhat eccentric). The images are not simply provocative spectacle; rather, they work in relationship to content and meaning. One page in the book can only be read intelligibly with a mirror.

What stands in the way of all this, beyond perhaps simple unwillingness, is an assumed direct connection between substance and worthiness. I believe the main criticism (from, I assume, elite academics) is that there would be too much content without enough depth. This is the point of contention on which, I believe, we will never break through. Another argument is that, simply put, our intensely academic fields are not meant to be visual, or that the field is textual at its heart. I appreciate this argument, but it does not account for the simple fact that we integrate images into our textual work all the time, nor for the fact that our society is becoming increasingly visual. Perhaps, as was brought up during our discussion, some particularly progressive institution encourages this kind of visual project. Even then, it makes you conduct the “creative” project on top of the original, more “professional/academic” one.

What would this avant-garde dissertation look like? Would you have to read it chronologically, or could you read the chapters in any order? What materials would it use, in physical or digital form? Are the pages in black and white, or is the text perhaps in red ink? Do the images seep into the text, or are they demarcated from it? Did you keep your dissertation private until you snagged a book deal, or did you upload the whole thing to Wattpad?

Text

To illustrate the kinds of relationship that a hypergraph can tease out of a big data set — and an ordinary graph can’t — Purvine points to a simple example close to home, the world of scientific publication. Imagine two data sets, each containing papers co-authored by up to three mathematicians; for simplicity, let’s name them A, B and C. One data set contains six papers, with two papers by each of the three distinct pairs (AB, AC and BC). The other contains only two papers total, each co-authored by all three mathematicians (ABC).

A graph representation of co-authorship, taken from either data set, might look like a triangle, showing that each mathematician (three nodes) had collaborated with the other two (three links). If your only question was who had collaborated with whom, then you wouldn’t need a hypergraph, Purvine said.

But if you did have a hypergraph, you could also answer questions about less obvious structures. A hypergraph of the first set (with six papers), for example, could include hyperedges showing that each mathematician contributed to four papers. A comparison of hypergraphs from the two sets would show that the papers’ authors differed in the first set but were the same in the second.
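To make Purvine’s example concrete, here is a minimal Python sketch (the data layout and function names are my own, purely for illustration): each paper is stored as a hyperedge, i.e. the set of its authors. Collapsing the hyperedges to pairwise links gives the same triangle for both data sets, while the hypergraph view still tells them apart.

```python
from collections import Counter
from itertools import combinations

# Each paper is a hyperedge: the set of mathematicians who co-authored it.
# Data set 1: two papers for each pair AB, AC, BC (six papers in total).
set1 = [frozenset(p) for p in ["AB", "AB", "AC", "AC", "BC", "BC"]]
# Data set 2: two papers, each co-authored by all three of A, B and C.
set2 = [frozenset("ABC"), frozenset("ABC")]


def pairwise_graph(papers):
    """Collapse hyperedges into ordinary links: who has collaborated with whom."""
    return {pair for paper in papers for pair in combinations(sorted(paper), 2)}


def papers_per_author(papers):
    """Hyperedge degree: how many papers each mathematician contributed to."""
    return Counter(author for paper in papers for author in paper)


def author_groups(papers):
    """The distinct author sets that actually occur as papers."""
    return set(papers)


# Both data sets collapse to the same triangle A-B, A-C, B-C ...
print(pairwise_graph(set1) == pairwise_graph(set2))  # True
# ... but the hypergraphs differ.
print(sorted(papers_per_author(set1).items()))  # [('A', 4), ('B', 4), ('C', 4)]
print(sorted(papers_per_author(set2).items()))  # [('A', 2), ('B', 2), ('C', 2)]
print(len(author_groups(set1)), len(author_groups(set2)))  # 3 1
```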

Such higher-order methods have already proved useful in applied research, such as when ecologists showed how the reintroduction of wolves to Yellowstone National Park in the 1990s triggered changes in biodiversity and in the structure of the food chain. And in one recent paper, Purvine and her colleagues analyzed a database of biological responses to viral infections, using hypergraphs to identify the most critical genes involved. They also showed how those interactions would have been missed by the usual pairwise analysis afforded by graph theory.

[...]

That’s especially clear when you try to consider a higher-dimensional version of a Markov chain, he said. A Markov chain describes a multistage process in which the next stage depends only on an element’s current position; researchers have used Markov models to describe how things like information, energy and even money flow through a system. Perhaps the best-known example of a Markov chain is a random walk, which describes a path where each step is determined randomly from the one before it. A random walk is also a specific graph: Any walk along a graph can be shown as a sequence moving from node to node along links.
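A minimal sketch of the random walk described above, assuming a small made-up graph stored as an adjacency list (the graph and function names are hypothetical): each step picks a neighbor of the current node uniformly at random, so the next position depends only on the current one.

```python
import random

# A small undirected graph as an adjacency list (hypothetical example data).
GRAPH = {
    "a": ["b", "c"],
    "b": ["a", "c", "d"],
    "c": ["a", "b"],
    "d": ["b"],
}


def transition_matrix(graph):
    """Simple random walk: from u, step to each neighbor with probability 1/deg(u)."""
    return {u: {v: 1 / len(nbrs) for v in nbrs} for u, nbrs in graph.items()}


def random_walk(graph, start, steps, rng):
    """Each step looks only at the current node, which is the Markov property."""
    path = [start]
    for _ in range(steps):
        path.append(rng.choice(graph[path[-1]]))
    return path


walk = random_walk(GRAPH, "a", 10, random.Random(0))
print(walk)  # 11 nodes; each consecutive pair is joined by a link
```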

But how to scale up something as simple as a walk? Researchers turn to higher-order Markov chains, which instead of depending only on current position can consider many of the previous states. This approach proved useful for modeling systems like web browsing behavior and airport traffic flows. Benson has ideas for other ways to extend it: He and his colleagues recently described a new model for stochastic, or random, processes that combines higher-order Markov chains with another tool called tensors. They tested it against a data set of taxi rides in New York City to see how well it could predict trajectories. The results were mixed: Their model predicted the movement of cabs better than a usual Markov chain, but neither model was very reliable.
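The idea of a higher-order chain can be illustrated with a hypothetical example. This is a plain second-order Markov chain estimated by counting transitions, not the tensor-based model Benson and his colleagues describe: travelers who pass through a hub keep going in the same direction, which a first-order chain cannot see but a second-order chain can.

```python
from collections import Counter, defaultdict


def _normalize(counts):
    """Turn transition counts into conditional probabilities."""
    return {
        state: {nxt: c / sum(ctr.values()) for nxt, c in ctr.items()}
        for state, ctr in counts.items()
    }


def fit_first_order(trajectories):
    """Estimate P(next | current) from observed trajectories."""
    counts = defaultdict(Counter)
    for traj in trajectories:
        for cur, nxt in zip(traj, traj[1:]):
            counts[cur][nxt] += 1
    return _normalize(counts)


def fit_second_order(trajectories):
    """Estimate P(next | previous, current): the state is the last two locations."""
    counts = defaultdict(Counter)
    for traj in trajectories:
        for prev, cur, nxt in zip(traj, traj[1:], traj[2:]):
            counts[(prev, cur)][nxt] += 1
    return _normalize(counts)


# Hypothetical trips that pass straight through a hub: X -> Hub -> Y and Y -> Hub -> X.
trips = [["X", "Hub", "Y"], ["Y", "Hub", "X"]] * 5

print(fit_first_order(trips)["Hub"])          # {'Y': 0.5, 'X': 0.5}
print(fit_second_order(trips)[("X", "Hub")])  # {'Y': 1.0}
```

The first-order chain can only say a cab at the hub goes either way with equal odds; remembering one extra step makes the trajectory fully predictable in this toy data.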

How Big Data Carried Graph Theory into New Dimensions

https://www.quantamagazine.org/how-big-data-carried-graph-theory-into-new-dimensions-20210819/

Text

Stephen Wolfram’s hypergraph project aims for a fundamental theory of physics

Thanks to Kevin Bacon, everybody nowadays knows about networks.

There are not only Bacon-like networks of actors, linked by appearing in the same film, but also social networks, neural networks and networks of viral transmission. There are power grid networks, ecological networks and the grandest network of all, the internet. Sometimes it seems like the entire universe must be just one big network.

And maybe it is.

Physicist–computer scientist–entrepreneur Stephen Wolfram believes the universe is a vast, growing network of relationships that constitutes space itself, and everything within it. In this picture, Wolfram sees the basis for the ultimate theory underlying all of physical law.

Wolfram expressed something like this view 18 years ago in a 1,197-page tome entitled A New Kind of Science. But back then his picture was still a little fuzzy. Now he thinks he has found a more sharply focused vision for how to explain reality.

“I’m thrilled to say,” he writes in a summary document released April 14, “that I think we’ve found a path to the fundamental theory of physics.”

At the core of Wolfram’s approach is the notion of a hypergraph. “Graph” in this context is like the diagrammatic representation of a network: lines connecting points. But reality can’t be captured by lines linking points on a flat sheet of paper. Wolfram generates computer visualizations to depict relationships in more complicated “hypergraphs.” (In a hypergraph, the “lines” can connect any number of points, not just one to another.)

Wolfram’s investigations indicate that complex hypergraphs can mimic many features of the universe, including matter and energy, along with reproducing the physical structures and processes described by the theory of relativity and quantum mechanics.

“In our model, everything in the universe — space, matter, whatever — is supposed to be represented by features of our evolving hypergraph,” Wolfram writes.

His key point is that such extremely complex hypergraphs can be produced by applying simple rules to a simple starting point. Suppose you have two “abstract elements” labeled A and B. You have a rule that says every A should be changed to BBB, and every BB should be replaced with A.

Start with A. By the rule, you “update” A to BBB. BBB contains two (overlapping) copies of BB, so you can update BBB in two ways: once making the first two Bs into an A (giving AB), and once making the last two Bs into an A (giving BA). So:

A

is connected to

BBB

which is connected to both

AB and BA.

Updates of AB and BA both yield BBBB. But BBBB then makes ABB, BBA and BAB. As you keep on applying the rule, the graph gets more complicated.
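The update process above is what Wolfram calls a multiway system. The sketch below applies the two rules from the article (A → BBB, BB → A) to a string at every possible position and collects each generation of states. It is a simplification for illustration only: Wolfram’s actual models rewrite hypergraphs rather than strings, and the function names here are my own.

```python
RULES = [("A", "BBB"), ("BB", "A")]


def successors(state, rules=RULES):
    """Every string reachable by applying one rule at one position (overlaps allowed)."""
    out = set()
    for lhs, rhs in rules:
        pos = state.find(lhs)
        while pos != -1:
            out.add(state[:pos] + rhs + state[pos + len(lhs):])
            pos = state.find(lhs, pos + 1)
    return out


def multiway_system(initial, generations):
    """Breadth-first generations of states, plus the update edges linking them."""
    levels = [{initial}]
    edges = set()
    for _ in range(generations):
        nxt = set()
        for state in levels[-1]:
            for succ in successors(state):
                edges.add((state, succ))
                nxt.add(succ)
        levels.append(nxt)
    return levels, edges


levels, edges = multiway_system("A", 4)
for i, level in enumerate(levels):
    print(i, sorted(level))
# 0 ['A']
# 1 ['BBB']
# 2 ['AB', 'BA']
# 3 ['BBBB']
# 4 ['ABB', 'BAB', 'BBA']
```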

These update steps, Wolfram says, correspond to our common notion of time, a sort of ticktock of the cosmic clock. As a rule is repeatedly applied to a set of abstract entities, the resulting connections — the graph of the relationships linking them — correspond to the structure of space. So space (in this picture) is not a mere uniform set of indistinguishable points; rather it is a network of points linked in unfathomably complex patterns that reproduce matter and energy and the relationships collectively known as the laws of physics.

“This is basically how I think space in the universe works,” Wolfram writes. “Underneath, it’s a bunch of discrete, abstract relations between abstract points. But at the scale we’re experiencing it, the pattern of relations it has makes it seem like continuous space of the kind we’re used to.”

It’s sort of like how fish perceive the ocean as a smooth featureless fluid, even though the water is made of discrete tiny molecules.

In a sense, Wolfram believes, everything that exists is basically made from space. “Put another way,” he writes, “it’s the exact same hypergraph that’s giving us the structure of space, and everything that exists in space.”

It almost sounds like theoretical physicists should close up shop and just run some computer simulations using Wolfram’s rules. But as he acknowledges, the job isn’t done yet. So far Wolfram’s project has identified almost 1,000 rules that produce complicated structures that look like a universe. It remains to be seen what rule produces precisely the universe we all actually inhabit.

“Sometime — I hope soon — there might just be a rule … that has all the right properties, and that we’ll slowly discover that, yes, this is it — our universe finally decoded,” Wolfram writes.

In his summary, Wolfram declares that hypergraphs illustrate a principle he calls “causal invariance.” That means that various distinct paths through the hypergraph can sometimes converge. Such convergences allow the cause-and-effect chain of events through time to be preserved.

In a hypergraph “there is not just one path of time; there are many paths, and many ‘histories,’” Wolfram writes. But one supposedly independent path of history can merge with another. “Even when the paths of history that are followed are different, these causal relationships can end up being the same — and that in effect, to an observer embedded in the system, there is still just a single thread of time.”

Thanks to causal invariance, Wolfram’s hypergraphs reproduce many of the consequences of various physical theories, such as Einstein’s special theory of relativity. Traveling rapidly slows down time (as special relativity says) because hypergraph structures corresponding to moving objects make an angle through the hypergraph that extends the distance between updates (or time steps). The speed of light is a maximum velocity, as relativity states, because it represents the maximum rate that information can spread through the hypergraph as it updates. And gravity — described by Einstein’s general theory of relativity — emerges in the relationship between features in the hypergraph that can be interpreted as matter particles. (Particles would be small sets of linked points that persist as the hypergraph updates, something like “little lumps of space” with special properties.)

In an even more complicated extension of these ideas, Wolfram explores how hypergraph properties even correspond to the weird features of quantum mechanics. “In our models, quantum mechanics is not just possible; it’s absolutely inevitable,” Wolfram asserts.

Space as constructed in such hypergraphs can have a very fine structure, like a digital camera sensor with gazillions of megapixels. Wolfram estimates that a hypergraph corresponding to today’s universe might have applied 10⁵⁰⁰ time steps (incomprehensibly more than the universe’s age in seconds, roughly 10¹⁷). So space could be fine-grained enough to contain matter-particle structures much, much smaller than the known particles of physics. In fact, Wolfram suggests, supersmall unknown particles, which he calls oligons, might have been created in abundance shortly after the beginning of the universe. Such oligons, subject only to gravity, could now be hanging out in and around galaxies utterly unnoticed, except for their gravitational impact. Oligons might therefore explain why astronomers infer the existence of vast amounts of invisible “dark matter” in space. (And that could also explain why attempts so far to identify the nature of dark matter have been unsuccessful.)
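For scale, the universe’s age in seconds follows from a quick back-of-the-envelope calculation. This sketch assumes the commonly quoted age of 13.8 billion years and a 365.25-day year:

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, about 3.156e7 s (assumption)
AGE_YEARS = 13.8e9                     # commonly quoted age of the universe (assumption)

age_seconds = AGE_YEARS * SECONDS_PER_YEAR
print(f"{age_seconds:.2e}")                 # 4.35e+17
print(math.floor(math.log10(age_seconds)))  # 17
```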

Similarly, the mysterious “dark energy” that drives the universe to expand at an accelerating rate might just be a natural feature of Wolfram’s hypergraphs. Perhaps dark energy might in essence just be what space itself is made of.

Beyond that, Wolfram believes that his hypergraphs could resolve current disputes about which of many speculative theories are the best bets for explaining fundamental physics. Superstring theory, loop quantum gravity, causal sets and other ideas have all been proposed, and debated, for decades. Wolfram thinks hypergraphs can contain all of them.

“It almost seems like everyone has been right all along,” he writes, “and it just takes adding a new substrate to see how it all fits together.”

Wolfram’s technical paper (and accompanying papers — here and here — by colleague Jonathan Gorard) have been posted on a website promoting his project, and Wolfram is inviting the physics community to participate in pursuing his vision.

“In the end our goal must be to build a bridge that connects our models to existing knowledge about physics,” he writes. “I am extremely optimistic that we are finally on the right track” toward finding the “right” rule for our universe.

That “right rule” would generate a hypergraph with our universe’s precise properties: three (apparent) dimensions of space, the right cosmic expansion rate, the right repertoire of elementary particles with the correct charges and masses, and other features.

But perhaps, Wolfram has realized, seeking one single rule misses a bigger point. Maybe the universe uses all the possible rules. Then all the possible universes are just parts of one really big universe, in which “absolutely everything … can happen — including all events for all possible rules.”

We discern a certain set of physical laws based on the “language” we use to describe and comprehend the world. The elements of this language are tuned to “the kinds of things our senses detect, our measuring devices measure, and our existing physics describes.” The right rule is the one that corresponds to the portion of the hypergraph that we explore from our own particular frame of reference. Life elsewhere might see things differently. “There’s actually an almost infinite diversity of different ways to describe and experience our universe,” Wolfram suggests.

In other words, explaining the physics that applies to our existence might require insight into the mechanisms of a vastly more complex reality, beyond the realm of what we can experience. As Wolfram puts it, “In many ways, we are inevitably skating at the edge of what humans can understand.”

As he acknowledges, much more work will be needed to merge his approach with the successful theories of established physics. And standard physics does have an impressive résumé of accomplishments, explaining details about everything from the innards of atoms to the architecture of the universe and the nature of space and time.

Yet mainstream physicists have long suspected that space and time cannot be fundamental concepts. Rather it seems likely that space and time are conventions that must emerge from something deeper. It might be a long shot, but just maybe Wolfram has perceived a path that leads to the depths where reality originates.

Only time — or many more hypergraph updating steps — will tell.

from Tips By Frank https://www.sciencenews.org/article/stephen-wolfram-hypergraph-project-fundamental-theory-physics

Photo

LESSRISM Nobody has used it before! This is BIG! It hooks me into the anti-art tradition and legacy by inference, laterally. Also, a form of art “uncycling”. You can see that the above images, “uncycled” from a work by Lessrism leading light Gabriel Pomerand, totally deconstruct and destroy the original. And so, just another average day for J Gluck, fake artist. So, we have now Nonceptualism, Zero Art, and Lessrism. Not bad. Hat trick. Next stop: Take Lettriste art images, deconstruct them, fuck them up, and rebrand with #lessrism.

And I’m getting faster: from the first moment I read about Lessrism it was less than 30 minutes to making a series of images, processing them, finding the right “Dada font” (Superbal), and posting the first of them on Instagram.

The essence of this particular practice is eliminating creativity. To be as functional, incidentally engaged and nearly oblivious to the actions performed in making these connections and images. A scorched urge policy, if you will? Why feel any interest in or empathy for my own work? It can excite me, but only in the way a rat is excited by an opened cage.

Lettrism is a French avant-garde movement, established in Paris in the mid-1940s by Romanian immigrant Isidore Isou. In a body of work totaling hundreds of volumes, Isou and the Lettrists have applied their theories to all areas of art and culture, most notably in poetry, film, painting and political theory. The movement has its theoretical roots in Dada and Surrealism. Isou viewed his fellow countryman Tristan Tzara as the greatest creator and rightful leader of the Dada movement, and dismissed most of the others as plagiarists and falsifiers. Among the Surrealists, André Breton was a significant influence, but Isou was dissatisfied by what he saw as the stagnation and theoretical bankruptcy of the movement as it stood in the 1940s.

In French, the movement is called Lettrisme, from the French word for letter, arising from the fact that many of their early works centred on letters and other visual or spoken symbols. The Lettristes themselves prefer the spelling 'Letterism' for the Anglicised term, and this is the form that is used on those rare occasions when they produce or supervise English translations of their writings: however, 'Lettrism' is at least as common in English usage. The term, having been the original name that was first given to the group, has lingered as a blanket term to cover all of their activities, even as many of these have moved away from any connection to letters. But other names have also been introduced, either for the group as a whole or for its activities in specific domains, such as 'the Isouian movement', 'youth uprising', 'hypergraphics', 'creatics', 'infinitesimal art' and 'excoördism'.

Text

Observations from SEICCGTC 2017 Part 2

There were a lot of talks at this conference; while I understood most of them pretty well, there were also many which were not amenable to my taking notes (see Day 1 for the longer explanation). Hence, my usual “Miscellany” posts have become these “Observations”. To keep things brief, I will refrain from giving many background definitions, providing links instead where appropriate.

(More observations: 1 2 3 4 5 6 7 8)

[ When the presenter’s name does not have an associated link, I could not find an academic website for them. ]

------

Tran van Trung, Simple $t$-designs: Recursive Construction Methods for Arbitrary $t$

So, I’m nowhere near a design theorist, and this talk made almost no sense to me. But somewhere in the middle, it occurred to me: man, it sure seems like we’re showing the existence of lots of “generalized designs”. It’s actually possible that this talk represented some of the most groundbreaking work among all of these presentations...

[EDIT: Okay, I’m keeping the original wording intact because I find it super funny out of context, but: the context is that the existence of ordinary designs was an extremely difficult problem, the question having direct origins in Steiner’s work from the 1850s and remaining unsolved until 2014. The existence of generalized designs is even more difficult, of course, and van Trung didn’t remotely claim to solve the problem. But to the untrained eye (mine), the progress he made seemed quite substantial.]

Khawlah Alhulwah, A Generalization of Line Graphs

In its details, this talk went in a lot of different directions. But at its core was a real gem of an idea. Here is her general framework in which we can understand the line graph: look for copies of a prescribed graph in a given graph, and declare two copies to be adjacent if they interact in a prescribed way. Then create a new graph whose vertices are the copies, with two vertices adjacent exactly when the corresponding copies are adjacent in this sense.

In particular: the line graph arises this way when the prescribed graph is $P_2$ (the “one-edge” graph) and adjacency means sharing an endpoint. Alhulwah was primarily interested in the case when the prescribed graph is $P_n$ and adjacency means sharing an endpoint. Personally, because I like to think simplicially, I’d like to see what happens if the prescribed graph is $K_n$, with two copies adjacent if they share a $K_{n-1}$.
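Here is a rough Python sketch of the $K_n$ variant I have in mind (my own construction with made-up function names, not Alhulwah’s $P_n$ framework; it is brute force, so only for small graphs). The vertices are the copies of $K_n$, and two copies are adjacent when they share $n-1$ vertices, which automatically span a $K_{n-1}$. With $n=2$ this recovers the ordinary line graph.

```python
from itertools import combinations


def cliques_of_size(edges, n):
    """All n-vertex subsets that induce a complete graph K_n (brute force, small graphs only)."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return [
        frozenset(c)
        for c in combinations(sorted(adj), n)
        if all(y in adj[x] for x, y in combinations(c, 2))
    ]


def clique_adjacency_graph(edges, n):
    """Vertices: copies of K_n in the graph. Two copies are adjacent iff they share
    n - 1 vertices, i.e. a common K_{n-1}. For n = 2 this is the ordinary line graph."""
    copies = cliques_of_size(edges, n)
    links = {(a, b) for a, b in combinations(copies, 2) if len(a & b) == n - 1}
    return copies, links


# K_4 on vertices 0..3 (example data).
k4 = list(combinations(range(4), 2))
for n in (2, 3):
    copies, links = clique_adjacency_graph(k4, n)
    print(n, len(copies), len(links))
# 2 6 12   (the line graph of K_4)
# 3 4 6    (the four triangles of K_4, pairwise sharing an edge)
```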

Stephen Curran, Enumerating Hamiltonian Paths in Cayley Digraphs on the Semidirect Product of Cyclic Groups

I had very high hopes for this talk, but unfortunately it left me in the dust almost immediately. Ultimately, I felt it tried to cram way too damn much into not very much time. Perhaps if I were more skillful at manipulating Cayley graphs it would have been easier. But you know, I’m not exactly a Cayley graph novice, and I did feel somewhat entitled to follow the ideas, at least.

I asked Curran after the talk if there was some nice group-theoretic interpretation of a Hamiltonian path. The answer is “yes”, obviously, but on the spot we weren’t able to come up with anything much past surface level. My own naïve thought is: okay, we need a generating set to make a Cayley graph, so... Coxeter systems have a canonical generating set? Longest word factorizations? idk, just spitballing.

Taoye Zhang, On 1-Hamiltonian Line Graphs of Claw-Free Graphs

A graph is Hamiltonian-connected if there exists a Hamiltonian path between any two vertices. This is a very strong condition! But we will demand even more: a graph is 1-Hamiltonian (connected) if removing any vertex (and its incident edges) gives you a graph which is still Hamiltonian-connected.

The main result of the talk is that every 4-connected line graph of a claw-free graph is 1-Hamiltonian.
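To pin the definitions down, here is a brute-force check of Hamiltonian-connectedness and of the 1-Hamiltonian (connected) condition as defined above. It is my own sketch with made-up function names, exponential in the number of vertices, so it is only good for tiny graphs.

```python
from itertools import combinations, permutations


def has_hamiltonian_path(adj, s, t):
    """Brute force: is there an s-t path visiting every vertex exactly once?"""
    middle = [v for v in adj if v not in (s, t)]
    for order in permutations(middle):
        path = [s, *order, t]
        if all(b in adj[a] for a, b in zip(path, path[1:])):
            return True
    return False


def is_hamiltonian_connected(adj):
    """A Hamiltonian path exists between every pair of distinct vertices."""
    return all(has_hamiltonian_path(adj, s, t) for s, t in combinations(adj, 2))


def is_1_hamiltonian_connected(adj):
    """Hamiltonian-connected, and still so after deleting any one vertex."""
    if not is_hamiltonian_connected(adj):
        return False
    for x in adj:
        smaller = {v: nbrs - {x} for v, nbrs in adj.items() if v != x}
        if not is_hamiltonian_connected(smaller):
            return False
    return True


def complete(n):
    return {i: set(range(n)) - {i} for i in range(n)}


def cycle(n):
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


print(is_1_hamiltonian_connected(complete(5)))  # True
print(is_1_hamiltonian_connected(cycle(5)))     # False: no Hamiltonian path from 0 to 2
```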

Kyle Meescheidt, A Class of Tricyclic Steiner Triple Systems

I have almost nothing to say about this talk, except that it featured the most ambitious (read: hideous) casework of any talk I remember attending. Also, apparently Skolem sequences tend to be relevant when working with Steiner triple systems, so that’s something, I guess.
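For my own benefit, here is the standard reason Skolem sequences show up, sketched in Python: from a Skolem sequence of order $n$ one can build a cyclic Steiner triple system on $6n+1$ points (Skolem’s construction, with base blocks $\{0, k, b_k + n\}$, where $a_k < b_k$ are the positions of $k$). Note this produces cyclic systems, not the tricyclic ones from the talk, and the naive backtracking search and function names are my own.

```python
from collections import Counter
from itertools import combinations


def skolem_sequence(n):
    """Backtracking search for a Skolem sequence of order n: a list of length 2n in which
    each k in 1..n appears twice, with the two copies exactly k positions apart."""
    seq = [0] * (2 * n)

    def place(k):
        if k == 0:
            return True
        for i in range(2 * n - k):
            if seq[i] == 0 and seq[i + k] == 0:
                seq[i] = seq[i + k] = k
                if place(k - 1):
                    return True
                seq[i] = seq[i + k] = 0  # undo and try the next position
        return False

    return seq if place(n) else None


def cyclic_sts(seq):
    """Skolem's construction of a cyclic STS(6n+1): if k occupies positions a_k < b_k
    (1-indexed), the base blocks {0, k, b_k + n} are translated through Z_{6n+1}."""
    n = len(seq) // 2
    v = 6 * n + 1
    b = {k: pos for pos, k in enumerate(seq, start=1)}  # later position wins, giving b_k
    triples = {
        frozenset((x + shift) % v for x in (0, k, b[k] + n))
        for k in range(1, n + 1)
        for shift in range(v)
    }
    return v, triples


def is_steiner_triple_system(v, triples):
    """Every pair of points in Z_v lies in exactly one triple."""
    pairs = Counter(p for t in triples for p in combinations(sorted(t), 2))
    return len(pairs) == v * (v - 1) // 2 and set(pairs.values()) == {1}


seq = skolem_sequence(4)
print(seq)  # [4, 2, 3, 2, 4, 3, 1, 1]
v, triples = cyclic_sts(seq)
print(v, len(triples), is_steiner_triple_system(v, triples))  # 25 100 True
```

Skolem sequences of order $n$ exist only when $n \equiv 0$ or $1 \pmod 4$, so the search returns `None` for orders 2 and 3.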

------

#math#maths#mathematics#mathema#combinatorics#graph theory#algebraic graph theory#hypergraph theory#seiccgtc#seiccgtc2017

Text

Arxiv[quant-ph]

Thermal States as Convex Combinations of Matrix Product States - Mario Berta, Fernando G. S. L. Brandao, Jutho Haegeman, Volkher B. Scholz, Frank Verstraete

The Tensor Network Theory Library - Sarah Al-Assam, Stephen R. Clark, Dieter Jaksch

Long-range maximal entanglement in Majorana edge modes of a Kitaev tube - P. Wang, S. Lin, G. Zhang, Z. Song

Light-induced fractional quantum Hall phases in graphene - Areg Ghazaryan, Tobias Graß, Michael J. Gullans, Pouyan Ghaemi, Mohammad Hafezi

Double light-cone dynamics establish thermal states in integrable 1D Bose gases - Tim Langen, Thomas Schweigler, Eugene Demler, Jörg Schmiedmayer

Almost-linear time decoding algorithm for topological codes - Nicolas Delfosse, Naomi H. Nickerson

Machine learning technique to find quantum many-body ground states of bosons on a lattice - Hiroki Saito, Masaya Kato

Restricted-Boltzmann-Machine Learning for Solving Strongly Correlated Quantum Systems - Yusuke Nomura, Andrew Darmawan, Youhei Yamaji, Masatoshi Imada

A Practical Quantum Algorithm for the Schur Transform - William M. Kirby, Frederick W. Strauch

Rényi Entropies from Random Quenches in Atomic Hubbard and Spin Models - Andreas Elben, Benoît Vermersch, Marcello Dalmonte, J. Ignacio Cirac, Peter Zoller

Quantum Analysis and Thermodynamic Operator Relations in Stochastic Energetics - T. Koide

Coherence and non-classicality of quantum Markov processes - Andrea Smirne, Dario Egloff, María García Díaz, Martin B. Plenio, Susana F. Huelga

Arbitrarily exhaustive hypergraph generation of 4-, 6-, 8-, 16-, and 32-dimensional quantum contextual sets - Mladen Pavicic

Remarks on entanglement and identical particles - F. Benatti, R. Floreanini, F. Franchini, U. Marzolino

Landauer-Büttiker approach to strongly coupled quantum thermodynamics: inside-outside duality of entropy evolution - Anton Bruch, Caio Lewenkopf, Felix von Oppen

Optimal simulation of state-independent quantum contextuality - Adán Cabello, Mile Gu, Otfried Gühne, Zhen-Peng Xu

Text

Observations from SEICCGTC 2017 Part 6

------

Jason Brown, Recent Results on Chromatic Polynomials

Here is a fairly established conjecture in graph theory: If $G$ is an $n$-vertex connected graph with chromatic number $k \geq 4$, then the chromatic polynomial evaluated at any positive integer $m$ satisfies $$\chi_G(m) \leq m(m-1)(m-2)\cdots(m-k+1)\cdot (m-1)^{n-k}.$$ Brown proved that this conjecture is true if $m$ is sufficiently large. This doesn’t reduce the problem to a large case check, though: the bound on $m$ depends on $k$.
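Here is a brute-force sanity check of the inequality on tiny graphs (my own sketch with made-up function names; counting colorings this way is exponential in $n$). $K_4$ with a pendant vertex attains the bound exactly, while the 5-cycle, with chromatic number 3, exceeds it at $m = 3$, which shows the inequality cannot hold in general when $k = 3$.

```python
from itertools import combinations, product


def count_colorings(n, edges, m):
    """chi_G(m): proper colorings of vertices 0..n-1 using m colors (brute force)."""
    return sum(
        all(colors[u] != colors[v] for u, v in edges)
        for colors in product(range(m), repeat=n)
    )


def conjectured_bound(n, k, m):
    """m(m-1)(m-2)...(m-k+1) * (m-1)^(n-k)."""
    falling = 1
    for i in range(k):
        falling *= m - i
    return falling * (m - 1) ** (n - k)


# K_4 with a pendant vertex attached (n = 5, chromatic number 4): the bound is attained.
k4_pendant = list(combinations(range(4), 2)) + [(3, 4)]
for m in (4, 5, 6):
    print(m, count_colorings(5, k4_pendant, m), conjectured_bound(5, 4, m))
# 4 72 72
# 5 480 480
# 6 1800 1800

# The 5-cycle (chromatic number 3) exceeds the bound at m = 3.
c5 = [(i, (i + 1) % 5) for i in range(5)]
print(count_colorings(5, c5, 3), conjectured_bound(5, 3, 3))  # 30 24
```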

In a rather different direction, there have also been recent advances in analyzing the polynomials’ roots, which are generally not real: in fact, random graphs have non-real roots of their chromatic polynomials with probability $1$. Brown showed that if $G$ has chromatic number at least $n-3$, then the real parts of the roots of $\chi_G$ are at most $n-1$, and there are roots whose imaginary parts grow linearly with $n$. Sokal gives a weak estimate for the constant that governs this linearity: the absolute values of the roots (and hence their imaginary parts) are at most $8n$.

In sketching the proof of his real-part result, he went out of his way to mention the Gauss-Lucas theorem, a generalization of Rolle’s theorem to the complex plane, which is often useful but underutilized.
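For reference, the Gauss-Lucas theorem in its usual form (the standard statement, not taken from the talk): if $p$ is a nonconstant complex polynomial with roots $z_1,\dots,z_d$, then

$$p'(w) = 0 \implies w \in \operatorname{conv}\{z_1,\dots,z_d\}.$$

When all the roots are real, the convex hull is just the interval between the smallest and largest root, so every critical point of $p$ lies in that interval.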

Amin Bahmanian, On the Existence of Generalized Designs

An $(n,a,h,\lambda)$-design is an $n$-vertex hypergraph in which every edge has $a$ elements, and every size-$h$ subset of vertices belongs to exactly $\lambda$ edges. This is equivalent to producing a decomposition of $\lambda K_n^h$ into copies of $K_a^h$, where $c K_x^h$ is the hypergraph containing $c$ copies of all size-$h$ subsets of the $x$ vertices.

For general parameters $n,a,h$, and $\lambda$, there may not be any designs: indeed there are certain standard divisibility conditions among these numbers which must hold. The rest of the talk that made any sense to me was a history of existence results.
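The post doesn’t spell out the “standard divisibility conditions”; the usual necessary ones are that, for each $0 \le i < h$, $\binom{a-i}{h-i}$ divides $\lambda\binom{n-i}{h-i}$, because a fixed $i$-subset lies in exactly $\lambda\binom{n-i}{h-i}/\binom{a-i}{h-i}$ edges. Below is a small sketch (my own helper names) that checks these conditions and verifies a design by brute force, using the Fano plane as the example.

```python
from collections import Counter
from itertools import combinations
from math import comb


def is_design(n, a, h, lam, blocks):
    """Check that blocks form an (n, a, h, lam)-design on the points 0..n-1."""
    if any(len(block) != a for block in blocks):
        return False
    counts = Counter(sub for block in blocks for sub in combinations(sorted(block), h))
    return all(counts[sub] == lam for sub in combinations(range(n), h))


def divisibility_ok(n, a, h, lam):
    """Necessary conditions: for each 0 <= i < h, a fixed i-subset lies in
    lam * C(n-i, h-i) / C(a-i, h-i) blocks, which must be a whole number."""
    return all(lam * comb(n - i, h - i) % comb(a - i, h - i) == 0 for i in range(h))


# The Fano plane: translates of {0, 1, 3} mod 7 form a (7, 3, 2, 1)-design.
fano = [frozenset((x + s) % 7 for x in (0, 1, 3)) for s in range(7)]
print(is_design(7, 3, 2, 1, fano))                               # True
print(divisibility_ok(7, 3, 2, 1), divisibility_ok(8, 3, 2, 1))  # True False
```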

Supposing that the “standard divisibility conditions” hold, then $(n,a,h,\lambda)$-designs exist... whenever $n$ is sufficiently large and $h=2$ (Wilson 70s); for all $a,h$ and at least one $\lambda$ (Teirlinck 80s); for all sufficiently large $n$, where the bound may depend on $a,h$, and $\lambda$ (Keevash 2014).

Also, in the 80s, Rödl showed that for all $a$ and $h$, there is an $(n,a,h,1)$-“almost design”, in a way that Bahmanian left unspecified. And most recently, Bahmanian himself used Keevash’s theorem (or at least the same ideas) to show a “multipartite version”: for all $m,a,h$, and $\lambda$, and all sufficiently large $n$ (possibly depending on $a,h$, and $\lambda$) there is a decomposition of $\lambda K_{n,n,\dots, n}^h$ into copies of $K_{m,m,\dots, m}^h$, where the numbers of $n$’s and $m$’s are both $a$.

#math#maths#mathematics#mathema#graph theory#algebraic graph theory#hypergraph theory#bahmanian's talk almost got its own post#but I think if I were to expand on it#I would need to learn quite a bit about design theory#since what's in this post is darn close to the extent of what I know#seiccgtc#seiccgtc2017

Text

Arxiv[quant-ph]

Density-of-states of many-body quantum systems from tensor networks - Fabian Schrodi, Pietro Silvi, Ferdinand Tschirsich, Rosario Fazio, Simone Montangero

Anomalies and entanglement renormalization - Jacob C. Bridgeman, Dominic J. Williamson

Majorana Spin Liquids, Superconductivity, Topology and Quantum Computation - Karyn Le Hur, Ariane Soret, Fan Yang

Emulating Majorana fermions and their braiding by Ising spin chains - Stefan Backens, Alexander Shnirman, Yuriy Makhlin, Yuval Gefen, Johan E. Mooij, Gerd Schön

Multi-particle Wannier states and Thouless pumping of interacting bosons - Yongguan Ke, Xizhou Qin, Yuri S. Kivshar, Chaohong Lee

Non-Unitary Quantum Computation in the Ground Space of Local Hamiltonians - Naïri Usher, Matty J. Hoban, Dan E. Browne

Holographic coherent states from random tensor networks - Xiao-Liang Qi, Zhao Yang, Yi-Zhuang You

Stability and area law for rapidly mixing quantum dissipative systems - Angelo Lucia

Distributed finite-time stabilization of entangled quantum states on tree-like hypergraphs - Francesco Ticozzi, Peter D. Johnson, Lorenza Viola

Spin-helix states in the XXZ spin chain with strong dissipation - Vladislav Popkov, Johannes Schmidt, Carlo Presilla

Many-body localization in the droplet spectrum of the random XXZ quantum spin chain - Alexander Elgart, Abel Klein, Günter Stolz

Ultracold atoms in quasi-1D traps: a step beyond the Lieb-Liniger model - Krzysztof Jachymski, Florian Meinert, Hagar Veksler, Paul S. Julienne, Shmuel Fishman

Theory and applications of free-electron vortex states - K. Y. Bliokh, I. P. Ivanov, G. Guzzinati, L. Clark, R. Van Boxem, A. Béché, R. Juchtmans, M. A. Alonso, P. Schattschneider, F. Nori, J. Verbeeck

Tailored codes for small quantum memories - Alan Robertson, Christopher Granade, Stephen D. Bartlett, Steven T. Flammia

Solving Systems of Linear Equations with a Superconducting Quantum Processor - Yarui Zheng, Chao Song, Ming-Cheng Chen, Benxiang Xia, Wuxin Liu, Qiujiang Guo, Libo Zhang, Da Xu, Hui Deng, Keqiang Huang, Yulin Wu, Zhiguang Yan, Dongning Zheng, Li Lu, Jian-Wei Pan, H. Wang, Chao-Yang Lu, Xiaobo Zhu

Anyonic self-induced disorder in a stabilizer code: quasi-many body localization in a translational invariant model - H. Yarloo, A. Langari, A. Vaezi

Experimental Test of Relation between Coherence and Path Information - Jun Gao, Zhi-Qiang Jiao, Chen-Qiu Hu, Lu-Feng Qiao, Ruo-Jing Ren, Zhi-Hao Ma, Shao-Ming Fei, Vlatko Vedral, Xian-Min Jin

Multiphoton processes by conditional measurements in the atom-field interaction - Jorge A. Anaya-Contreras, Arturo Zúñiga-Segundo, Francisco Soto-Eguibar, H. Moya-Cessa

Anderson localization of a Rydberg electron along a classical orbit - Krzysztof Giergiel, Krzysztof Sacha

Observation of robust flat-band localization in driven photonic rhombic lattices - Sebabrata Mukherjee, Robert R. Thomson

Negativity Bounds for Weyl-Heisenberg Quasiprobability Representations - John B. DeBrota, Christopher A. Fuchs

Spin in the extended electron model - Thomas Pope, Werner Hofer

Boltzmann entropy of a Newtonian Universe - D. Cabrera, P. Fernandez de Cordoba, J.M. Isidro

Quantum Metric and Entanglement on Spin Networks - Fabio M. Mele

Text

Arxiv[quant-ph] 2/24~3/2

Renormalization group flows of Hamiltonians using tensor networks - Matthias Bal, Michaël Mariën, Jutho Haegeman, Frank Verstraete

Quantum thermalization dynamics with Matrix-Product States - Eyal Leviatan, Frank Pollmann, Jens H. Bardarson, Ehud Altman

Symmetry reduction induced by anyon condensation: a tensor network approach - José Garre-Rubio, Sofyan Iblisdir, David Pérez-García

Entanglement phases as holographic duals of anyon condensates - Kasper Duivenvoorden, Mohsin Iqbal, Jutho Haegeman, Frank Verstraete, Norbert Schuch

Finite-representation approximation of lattice gauge theories at the continuum limit with tensor networks - Boye Buyens, Simone Montangero, Jutho Haegeman, Frank Verstraete, Karel Van Acoleyen

Frustrated magnetism of dipolar molecules on square optical lattice: evidence for a quantum paramagnetic ground state - Haiyuan Zou, Erhai Zhao, W. Vincent Liu

Obtaining highly excited eigenstates of the localized XX chain via DMRG-X - Trithep Devakul, Vedika Khemani, Frank Pollmann, David Huse, Shivaji Sondhi

Quantum phase transitions of light in a dissipative Dicke-Bose-Hubbard model - Ren-Cun Wu, Lei Tan, Wen-Xuan Zhang, Wu-Ming Liu

Noise-resilient preparation of quantum many-body ground states - Isaac H. Kim

Architectures for quantum simulation showing quantum supremacy - J. Bermejo-Vega, D. Hangleiter, M. Schwarz, R. Raussendorf, J. Eisert

Autonomous Quantum Error Correction and Application to Quantum Sensing with Trapped Ions - F. Reiter, A. S. Sørensen, P. Zoller, C. A. Muschik

Optimal entanglement witnesses in a split spin-squeezed Bose-Einstein condensate - Enky Oudot, Jean-Daniel Bancal, Roman Schmied, Philipp Treutlein, Nicolas Sangouard

Semiconductor devices for entangled photon pair generation: a review - Adeline Orieux, Marijn A. M. Versteegh, Klaus D. Jöns, Sara Ducci

Influence of the asymmetric excited state decay on coherent population trapping: atom × quantum dot - H. S. Borges, M.H. Oliveira, C. J. Villas-Boas

Objectivity in non-Markovian spin-boson model - Aniello Lampo, Jan Tuziemski, Maciej Lewenstein, Jaroslaw K. Korbicz

Quantum absorption refrigerator with trapped ions - Gleb Maslennikov, Shiqian Ding, Roland Hablutzel, Jaren Gan, Alexandre Roulet, Stefan Nimmrichter, Jibo Dai, Valerio Scarani, Dzmitry Matsukevich

The Complexity of Translationally-Invariant Low-Dimensional Spin Lattices in 3D - Johannes Bausch, Stephen Piddock

Solvable Model of a Generic Trapped Mixture of Interacting Bosons: Reduced Density Matrices and Proof of Bose-Einstein Condensation - Ofir E. Alon

Fluctuating hydrodynamics, current fluctuations and hyperuniformity in boundary-driven open quantum chains - Federico Carollo, Juan P. Garrahan, Igor Lesanovsky, Carlos Pérez-Espigares

Systematic Construction of Counterexamples to Eigenstate Thermalization Hypothesis - Naoto Shiraishi, Takashi Mori

Universal many-body response of heavy impurities coupled to a Fermi sea - Richard Schmidt, Michael Knap, Dmitri A. Ivanov, Jhih-Shih You, Marko Cetina, Eugene Demler

Dicke Phase Transition and Collapse of Superradiant Phase in Optomechanical Cavity with Arbitrary Number of Atoms - Xiuqin Zhao, Ni Liu, Xuemin Bai, J.-Q. Liang

Towards topological quantum computer - D. Melnikov, A. Mironov, S. Mironov, A. Morozov, An. Morozov

Small Majorana Fermion Codes - M. B. Hastings

Quantum Error Correction for Complex and Majorana Fermion Qubits - Sagar Vijay, Liang Fu

Quantum Information Set Decoding Algorithms - Ghazal Kachigar, Jean-Pierre Tillich

A loophole in quantum error correction - Xavier Waintal

Work-sharing of qubits in topological error corrections - Tetsufumi Tanamoto, Hayato Goto

Building a Completely Reversible Computer - Martin Lukac, Gerhard W. Dueck, Michitaka Kameyama, Anirban Pathak

The pitfalls of planar spin-glass benchmarks: Raising the bar for quantum annealers (again) - Salvatore Mandrà, Helmut G. Katzgraber, Creighton Thomas

Multipartite entanglement detection for hypergraph states - Maddalena Ghio, Daniele Malpetti, Matteo Rossi, Dagmar Bruß, Chiara Macchiavello

Relating correlation measures: the importance of the energy gap - Carlos L. Benavides-Riveros, Nektarios N. Lathiotakis, Christian Schilling, Miguel A. L. Marques

Complex Networks: from Classical to Quantum - Jacob Biamonte, Mauro Faccin, Manlio De Domenico

The reference system and not completely positive open quantum dynamics - Linta Joseph, Anil Shaji

Generation of Nonlocality - Kaushiki Mukherjee, Biswajit Paul, Debasis Sarkar, Amit Mukherjee, Some Sankar Bhattacharya, Arup Roy, Nirman Ganguly

Axiomatic characterization of the quantum relative entropy and free energy - Henrik Wilming, Rodrigo Gallego, Jens Eisert

The second law of thermodynamics at the microscopic scale - Thibaut Josset

Deterministic nonclassicality for quantum mechanical oscillators in thermal states - Petr Marek, Lukas Lachman, Lukas Slodicka, Radim Filip

Direct observation of phase sensitive Hong-Ou-Mandel interference - Petr Marek, Petr Zapletal, Radim Filip, Yosuke Hashimoto, Takeshi Toyama, Jun-ichi Yoshikawa, Kenzo Makino, Akira Furusawa

Maximal violation of n-locality inequalities in a star-shaped quantum network - Francesco Andreoli, Gonzalo Carvacho, Luca Santodonato, Rafael Chaves, Fabio Sciarrino

Entanglement and squeezing in continuous-variable systems - Manuel Gessner, Luca Pezzè, Augusto Smerzi

Symmetry protected entanglement between gravity and matter - Nikola Paunkovic, Marko Vojinovic

Black Holes: Eliminating Information or Illuminating New Physics? - Sumanta Chakraborty, Kinjalk Lochan

Generalized Grassmann variables for quantum kit (k-level) systems and Barut-Girardello coherent states for su(r+1) algebras - M. Daoud, L. Gouba
